Abstract

In contrast to static sounds, spatially dynamic sounds have received little attention in psychoacoustic research so far. This holds true especially for acoustically complex (reverberant, multisource) conditions and impaired hearing. The current study therefore investigated the influence of reverberation and the number of concurrent sound sources on source movement detection in young normal-hearing (YNH) and elderly hearing-impaired (EHI) listeners. A listening environment based on natural environmental sounds was simulated using virtual acoustics and rendered over headphones. Both near-far (‘radial’) and left-right (‘angular’) movements of a frontal target source were considered. The acoustic complexity was varied by adding static lateral distractor sound sources as well as reverberation. Acoustic analyses confirmed the expected changes in stimulus features that are thought to underlie radial and angular source movements under anechoic conditions and suggested a special role of monaural spectral changes under reverberant conditions. Analyses of the detection thresholds showed that, with the exception of the single-source scenarios, the EHI group was less sensitive to source movements than the YNH group, despite adequate stimulus audibility. Adding static sound sources clearly impaired the detectability of angular source movements for the EHI (but not the YNH) group. Reverberation, on the other hand, clearly impaired radial source movement detection for the EHI (but not the YNH) listeners. These results illustrate the feasibility of studying factors related to auditory movement perception with the help of the developed test setup.

Keywords: auditory movement perception, hearing loss, minimum audible movement angle, distance perception

Introduction

There is a large body of research showing that sensorineural hearing loss can lead to a multitude of hearing deficits, including elevated hearing thresholds, impaired suprathreshold coding, and reduced speech understanding abilities (e.g., Bronkhorst & Plomp, 1989; Moore, 2012). These deficits can be expected to manifest themselves particularly under acoustically complex listening conditions, for example, in situations that are characterized by the presence of background noise or reverberation. Another dimension of complexity relates to the dynamics of the listening environment, for example, when a sound source changes its spatial position over time. Importantly, questionnaire studies have shown that hearing-impaired listeners experience great difficulty not only with listening in noise but also with judging source movements and that problems with perceiving spatially dynamic aspects are related to their experience of handicap (Gatehouse & Noble, 2004). As a consequence, it is important to understand these deficits better so that ways of addressing them (e.g., with hearing devices) can be developed.

In the horizontal plane, source movements can occur along the near-far (N-F; ‘radial’ movements) and left-right (L-R; ‘angular’ movements) dimensions. Concerning previous research related to N-F source movement perception, the available studies generally made use of simplistic stimuli and focused exclusively on normal-hearing listeners. For example, Neuhoff and coworkers presented tonal signals over headphones, to which they applied simple level changes to investigate the perception of ‘looming’ sounds (e.g., Neuhoff, 1998; Seifritz et al., 2002). Similarly, Andreeva and colleagues performed a number of studies concerned with the perception of approaching and receding sounds by simulating such movements through simple level balancing between two loudspeakers positioned directly ahead of the listener at different distances (Altman & Andreeva, 2004; Andreeva & Malinina, 2011; Malinina, 2014; Malinina & Andreeva, 2013). Taken together, the results from these studies indicate that overall level serves as a cue for N-F movement perception. Nevertheless, given that the manipulation of a single acoustic feature results in an impoverished set of movement cues, the applicability of these findings to more realistic scenarios is uncertain.

Compared with N-F movement perception, N-F distance perception has been investigated in much more detail. To summarize, this research has shown that the overall level, the direct-to-reverberant sound ratio (DRR), and monaural spectral cues are the salient acoustical cues for distance perception with static sound sources (see Kolarik, Moore, Zahorik, Cirstea, & Pardhan, 2016 for a review). Furthermore, under reverberant conditions, the DRR is generally assumed to be the dominant cue, whereas the overall level and monaural spectral cues are presumed to play a secondary role (Zahorik, Brungart, & Bronkhorst, 2005). The monaural spectral cues that can occur are due to air absorption at high frequencies for faraway (>∼10 m) sound sources, the Doppler shift, and comb filter effects that arise as a result of the direct sound interfering with any reflections (e.g., Störig & Pörschmann, 2013). With the exception of a single study that found hearing-impaired listeners (who were tested without any form of audibility compensation) to exhibit deficits in the ability to discriminate different source distances based on the DRR but not based on overall level cues (Akeroyd, Gatehouse, & Blaschke, 2007), research into distance perception seems to have been restricted to normal-hearing listeners so far.

Concerning spatial hearing in the L-R dimension, binaural cues are thought to play the dominant role. That is, listeners make use of interaural time differences (ITDs) below ∼1.5 kHz and interaural level differences (ILDs) at higher frequencies to estimate the laterality of a stimulus (e.g., Blauert, 1997; Middlebrooks & Green, 1991). Monaural spectral cues, on the other hand, are generally thought to help with distinguishing between sounds coming from in front of or from behind the listener, but not with determining laterality (Macpherson & Middlebrooks, 2002; Musicant & Butler, 1985). A measure that has been relatively widely used for investigating L-R movement perception is the minimum audible movement angle (MAMA), that is, the smallest azimuthal displacement of a moving sound signal relative to a static one that a listener is able to detect. Psychoacoustic experiments conducted with normal-hearing listeners have shown that the MAMA strongly depends on stimulus bandwidth and movement velocity, decreasing with bandwidth and increasing with velocity (see Carlile & Leung, 2016 for a review). In contrast, reverberation does not seem to affect MAMA thresholds, at least not for velocities between 25°/s and 100°/s (Sankaran, Leung, & Carlile, 2014).

The studies reviewed so far have in common that they were generally based on rather artificial stimuli such as tones or noise signals presented in isolation. To achieve greater ecological validity, Brungart, Cohen, Cord, Zion, and Kalluri (2014) recently presented up to six concurrent environmental sounds (e.g., a ringing phone or soda pouring) to groups of normal-hearing and hearing-impaired listeners over an arc of loudspeakers. The participants had to localize a target sound source or determine the source that was added to, or removed from, a given stimulus. For both groups, performance decreased as the task complexity and number of sound sources increased, particularly so for the hearing-impaired group. A similar approach was adopted by Weller, Best, Buchholz, and Young (2016) who investigated the ability of normal-hearing and hearing-impaired listeners to count, locate, and identify up to six concurrent talkers in a simulated reverberant room. In general, the performance of both groups decreased with the number of talkers. However, whereas the normal-hearing listeners could reliably analyze stimuli with up to four concurrent talkers, the hearing-impaired listeners already made errors with just two talkers. In contrast, Akeroyd and colleagues recently reported on a sound-source enumeration experiment, which indicated that hearing-impaired listeners can identify up to four simultaneous speech sources and that reverberation only has a modest effect on this (Akeroyd, Whitmer, McShefferty, & Naylor, 2016).

In summary, research into auditory movement perception has so far exclusively dealt with normal-hearing listeners and artificial stimuli. Only very recently have researchers begun to investigate spatial perception in realistically complex listening environments, that is, with multiple concurrent sound sources and reverberation, but without any source movements. The aim of the current study was to address this shortage of research. Our focus was on the perception of ecologically valid sounds with angular or radial source movements. In particular, we investigated the influence of the number of concurrent sound sources as well as reverberation on the ability of young normal-hearing (YNH) and elderly hearing-impaired (EHI) listeners to detect changes in angular or radial source position. To that end, we used a toolbox for spatial audio reproduction that allowed us to create complex acoustic stimuli. Our hypotheses were as follows:

YNH listeners will generally outperform EHI listeners in terms of source movement detection;

An increase in the number of concurrent sound sources will generally result in poorer source movement detection;

Reverberation will have no effect on L-R source movement detection but will positively affect N-F movement detection, at least for YNH listeners.

Methods

The current study was approved by the ethics committee of the University of Oldenburg. All participants provided written informed consent, and the EHI participants received financial compensation for their participation.

Participants

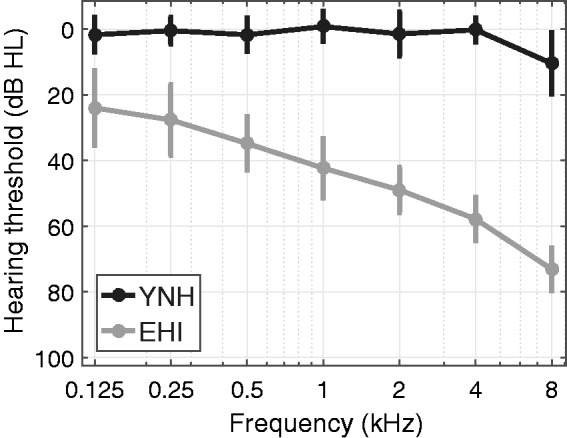

The participants were 10 YNH volunteers (4 male, 6 female) aged 21 to 29 years (mean: 24.4 years, standard deviation [SD] = 3.5 years) and 15 paid EHI listeners (11 male, 4 female) aged 67 to 79 years (mean: 71.8 years, SD = 7.1 years), four of whom had bilateral hearing aid experience of 3 to 8 years. The YNH listeners served as a reference group. The EHI listeners were tested because we aim to extend this research to the effects of hearing aid signal processing on source movement detection in hearing aid users. The YNH listeners had audiometric thresholds ≤25 dB HL at all standard audiometric test frequencies from 0.125 to 8 kHz. The EHI listeners had symmetric, sloping mild-to-moderate sensorineural hearing losses. The average audiograms of both groups are depicted in Figure 1.

Figure 1.

Mean hearing thresholds averaged across left and right ears for the YNH (black) and EHI (gray) groups. Error bars denote ±1 SD.

YNH = young normal hearing; EHI = elderly hearing impaired.

Experimental Setup

To create our stimuli, we used the ‘Toolbox for Acoustic Scene Creation and Rendering’ (TASCAR; Grimm, Ewert, & Hohmann, 2015). TASCAR is a system that allows rendering complex acoustic environments with high perceptual plausibility. Instead of trying to reproduce a given sound field as accurately as possible, TASCAR aims to simulate the dominant acoustical properties to obtain a perceptually similar equivalent that can be rendered in real time. To do so, TASCAR uses an image source model to simulate early reflections. Late reflections can be simulated based on either an Ambisonics recording made in a real room or an artificial reverberation algorithm. For moving sound sources, the Doppler shift is included in the simulation of the direct sound and the early reflections. Air absorption effects are also simulated. For our stimuli, however, Doppler and air absorption effects were negligible. Air absorption effects are confined to relatively large source distances (see Introduction section), while Doppler shifts influence motion perception for velocities >10 m/s (Lutfi & Wang, 1999). Neither of these conditions was fulfilled here.

In the current study, we used a second-order geometric image source model (e.g., Savioja, Huopaniemi, Lokki, & Väänänen, 1999) that included the time-variant simulation of all nonoccluded image sources together with late reverberation. As a consequence, time-variant comb filter effects due to the interference between the direct sound and the early reflections were included in the generated stimuli. The reverberation was a recording made with a TetraMic (Core Sound, Teaneck, NJ, USA) in a real room that was converted into Ambisonics B-format (as described in Adriaensen, 2007). The recorded room was an entrance hall of approximately 10.5 m × 6 m × 2.8 m with solid walls (including various large glass surfaces) and a wooden floor (see Figure 2). To achieve a smooth transition between the early reflections and the late reverberation, the reverberation was faded in with a Hanning window after approximately 20 ms.
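The origin of the time-variant comb filter effects can be illustrated with a minimal sketch of a first-order image source computation for a shoebox room. This is not the TASCAR implementation, and it covers first-order reflections only. The function names, the coordinate system, the listener and source coordinates, and the speed of sound of 343 m/s are assumptions made for illustration.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value (not taken from the study)

def first_order_images(source, room):
    """Mirror a source at each of the six walls of a shoebox room.

    The room spans [0, Lx] x [0, Ly] x [0, Lz]; `source` is (x, y, z).
    """
    images = []
    for axis, length in enumerate(room):
        for wall in (0.0, length):
            image = list(source)
            image[axis] = 2.0 * wall - source[axis]  # reflect across the wall plane
            images.append(tuple(image))
    return images

def extra_delays(source, listener, room):
    """Delay of each first-order reflection relative to the direct sound (s)."""
    direct = math.dist(source, listener)
    return [(math.dist(image, listener) - direct) / SPEED_OF_SOUND
            for image in first_order_images(source, room)]

# Assumed coordinates: listener 1 m from the middle of a short wall,
# target straight ahead at the same height.
room = (10.5, 6.0, 2.8)
listener = (1.0, 3.0, 1.5)
for distance in (1.0, 2.0):
    source = (listener[0] + distance, 3.0, 1.5)
    delays_ms = [round(1000.0 * d, 2) for d in extra_delays(source, listener, room)]
    # A single reflection delayed by tau produces comb-filter notches spaced 1/tau apart.
    print(distance, delays_ms)
```

Because the reflection delays depend on the source position, a moving source shifts the comb-filter notches over time, which is the monaural spectral change referred to in the text.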

Figure 2.

Schematic top-down view of the simulated room, showing the virtual listener and the five sound sources at 0° (S1), ±45° (S2, S3), and ±90° (S4, S5). S1 could move in the left-right/right-left or the near-far direction (see text for details).

To simulate a virtual binaural listener, we used a horizontal third-order Ambisonics receiver (Daniel, 2000). The output signals of this receiver were fed into an Ambisonics decoder (dual band with a crossover frequency of 500 Hz; described in Daniel, 2000) to produce eight virtual loudspeaker signals with a spatial resolution of 45°. The virtual listener was placed at the center of this array. Previous research into higher order Ambisonics has shown that, although the physical sound field is not correctly reproduced with an array of eight loudspeakers, the spatial hearing abilities of normal-hearing listeners are essentially unaffected at the center position of the array (Daniel, 2000; Daniel, Moreau, & Nicol, 2003). Furthermore, in a previous study, the setup used here was shown to produce room acoustical parameters comparable with those of real rooms that were simulated in TASCAR (Grimm, Heeren, & Hohmann, 2015), thereby providing support for the general validity of our simulation approach. To obtain the binaural signals for headphone presentation, the virtual loudspeaker signals were convolved with head-related transfer functions (HRTFs) for each loudspeaker location that were recorded with a Brüel & Kjær (B&K; Nærum, Denmark) head-and-torso simulator (cf., Kayser et al., 2009). In other words, the HRTFs were interpolated using higher order Ambisonics.

In the simulated scenario, the head of the virtual listener was placed 1 m away from the middle of the shorter wall facing along the longer side at a height of 1.5 m (see Figure 2). In the reference condition, the target source was located 1 m away from, and directly in front of, the listener. A change in complexity of the scenario was achieved by adding two or four static distractor sound sources at a distance of 1 m each and with azimuthal angles of ±45° and ±90° relative to the frontal direction. Furthermore, the room could be changed from an echoic environment with a reverberation time of ∼0.8 s to an anechoic environment by omitting the early reflections and late reverberation.

Stimulus presentation was via a 24-bit RME (Haimhausen, Germany) Hammerfall DSP 9632 soundcard, a Tucker-Davis Technologies (Alachua, USA) HB7 headphone buffer and a pair of Sennheiser (Wennebostel, Germany) HDA200 headphones. Calibration was carried out using a B&K 4153 artificial ear, a B&K 4134 1/2" microphone, a B&K 2669 preamplifier, and a B&K 2610 measurement amplifier.

For the EHI listeners, linear amplification was applied to the stimuli in accordance with the ‘National Acoustics Laboratories—Revised-Profound’ (NAL-RP) fitting rule (Byrne, Parkinson, & Newall, 1990) to ensure adequate audibility. The Master Hearing Aid research platform (Grimm, Herzke, Berg, & Hohmann, 2006) was used for that purpose. Figure 3 illustrates the use of NAL-RP amplification for the two target stimuli used here (see later). The gray dotted line corresponds to mean hearing thresholds of the YNH listeners tested here plotted in terms of 1/3-octave band sound pressure levels (SPLs). The gray dashed line corresponds to the mean hearing thresholds (also in dB SPL) of the EHI listeners tested here. The error bars denote ±1 SD. The black and gray solid lines without any symbols depict the long-term average spectrum (LTAS) of the two target signals (fountain and phone, respectively) at the eardrum as calculated with a diffuse field-to-eardrum transformation (ANSI, 2007). The black and gray solid lines with diamonds show the effects of NAL-RP amplification prescribed for the grand average hearing thresholds of the EHI listeners for these two signals. These data show that, on average, both target signals were several decibels above the participants’ hearing thresholds for frequencies between ∼0.4 and ∼3 kHz. Potential audibility issues could have occurred for certain EHI listeners, particularly below 0.4 kHz and above 4 kHz where NAL-RP reduces gain compared with the midfrequency range (e.g., Dillon, 2012).

Figure 3.

Illustration of the effects of NAL-RP amplification. Gray dotted line: mean hearing thresholds (in dB SPL) corresponding to the YNH listeners; gray dashed line: mean hearing thresholds for the EHI group. Error bars denote ±1 SD. Black and gray solid lines without symbols: LTAS of the fountain and phone signals at the eardrum without amplification. Black and gray solid lines with diamonds: LTAS of the fountain and phone signals at the eardrum with NAL-RP amplification for the EHI group.

YNH = young normal hearing; EHI = elderly hearing impaired; NAL-RP = National Acoustics Laboratories—Revised-Profound; SPL = sound pressure level; LTAS = long-term average spectrum.

Stimuli

We made measurements with up to five different environmental sounds similar to those used by Brungart et al. (2014). Except for some complementary measurements (see later), a ringing phone served as the target sound (see Figure 2). The other sound sources (soda poured into a glass, a bleating goat, ringing bells, a splashing fountain) were fixed in location. Based on the results of a pilot study (Lundbeck, Grimm, Hohmann, Laugesen, & Neher, 2016), we presented the target sound (S1) at a nominal SPL of 65 dB and each of the other sounds (S2-S5) at 62 dB SPL (nominal), as measured at the listening position with the receiver absent. Depending on the number of concurrent sound sources, the signal-to-noise ratio was therefore 0 dB (two distractors) or −3 dB (four distractors). The duration of each environmental sound was 2.3 s. In case of the reverberant test scenario, the duration increased by the reverberation time of the room (∼0.8 s).
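The stated signal-to-noise ratios follow from summing the distractor levels on a power basis. The sketch below assumes that the distractors are mutually uncorrelated, so that their powers add; the function names are hypothetical.

```python
import math

def combined_level_db(levels_db):
    """Level of the sum of uncorrelated sources, each given in dB SPL."""
    return 10.0 * math.log10(sum(10.0 ** (level / 10.0) for level in levels_db))

def snr_db(target_db, distractor_levels_db):
    """Target level minus the combined distractor level."""
    return target_db - combined_level_db(distractor_levels_db)

print(snr_db(65.0, [62.0] * 2))  # two distractors
print(snr_db(65.0, [62.0] * 4))  # four distractors
```

Under this assumption, the computed ratios lie within a few hundredths of a decibel of the 0 dB and −3 dB values given above.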

Because the environmental sounds differed considerably in terms of their spectro-temporal properties (see Figure 4), we also addressed the influence of these differences on source movement detection. Specifically, as part of the radial movement detection measurements, we exchanged the phone sound with the fountain sound, as these two sounds differed most from each other.

Figure 4.

Spectrograms of S1 (ringing phone), S2 (soda being poured into a glass), S3 (bleating goat), S4 (ringing bells), and S5 (splashing fountain) without reverberation.

Procedure

Initially, the participants’ hearing thresholds at the standard audiometric frequencies from 0.125 to 8 kHz were measured. They were then seated in a soundproof booth in front of a screen where they could use a graphical user interface to familiarize themselves with some example stimuli with static or moving target sounds. Once the participants felt comfortable with detecting the source movements and changes in the movement direction (from angular to radial or vice versa), a training run was completed. For all (training or test) runs, a single-interval two-alternative-forced-choice paradigm was used. Each interval had a duration of 2.3 s under anechoic conditions and 3.1 s under reverberant conditions. On each trial, the task of the participants was to indicate whether or not they perceived a target source movement by pressing a button on the screen (‘Yes’ or ‘No’). No feedback was provided.

The psychoacoustic measurement procedure was implemented in the ‘psylab’ toolbox (Hansen, 2006). In half of the trials, a moving target source was simulated, whereas in the other trials, the target source remained at the reference position (0°, 1 m). For the angular measurements, the direction of movement (toward the left or right) was randomized, whereas for the radial measurements, a withdrawing (N-F) movement was always simulated. The withdrawing direction was chosen to ensure the same reference position (0°, 1 m) for both movement dimensions. To control the extent of the movement, the velocity (in °/s or m/s) was varied in the adaptive procedure. For the angular source movement measurements, the velocity ranged from 2 to 25°/s. For the radial source movement measurements, it ranged from 0.2 to 3.7 m/s. At the beginning of a run, a 1-up 2-down procedure (Levitt, 1971) was used until the smallest step size (YNH: 2° or 0.15 m; EHI: 2° or 0.25 m) was reached (after three reversals). During the measurement phase, a 1-up 3-down rule was used to track the 79.4% detection threshold. A measurement was terminated after four additional reversals or when the maximum number of trials (i.e., 70) was reached. The detection thresholds were estimated by taking the arithmetic mean of the four reversal points in the measurement phase. In this manner, we quantified the smallest displacement (in ° or m) of the target source that the participants were able to perceive within the 2.3 s over which the movements occurred. In the following, we will refer to these thresholds as the MAMA and minimum audible movement distance (MAMD).
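The adaptive rule can be sketched as follows. This is a simplified illustration rather than the psylab implementation: the function names are hypothetical, each response is reduced to correct or incorrect, and the reduction of the step size during the initial phase is omitted.

```python
def convergence_point(n_down):
    """Proportion of correct responses tracked by a 1-up n-down rule (Levitt, 1971)."""
    return 0.5 ** (1.0 / n_down)

def run_track(responses, start, step, n_down, n_reversals):
    """Run a 1-up n-down track on a fixed sequence of correct/incorrect responses.

    Returns the stimulus values at which the track changed direction.
    """
    value, streak, direction, reversals = start, 0, 0, []
    for correct in responses:
        if correct:
            streak += 1
            if streak < n_down:
                continue          # not enough consecutive correct responses yet
            streak, move = 0, -1  # n_down correct in a row: make the task harder
        else:
            streak, move = 0, +1  # one incorrect response: make the task easier
        if direction != 0 and move != direction:
            reversals.append(value)  # direction changed at the current value
        direction = move
        value += move * step
        if len(reversals) == n_reversals:
            break
    return reversals

def threshold(reversals):
    """Arithmetic mean of the reversal points."""
    return sum(reversals) / len(reversals)

# Hypothetical response pattern: three correct responses followed by one error.
pattern = ([True] * 3 + [False]) * 3
points = run_track(pattern, start=10, step=2, n_down=3, n_reversals=4)
print(points, threshold(points))
```

For a 1-up 3-down rule, `convergence_point(3)` evaluates to approximately 0.794, in line with the 79.4% point tracked in the measurement phase.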

The angular and radial source movement measurements were carried out in separate blocks. Within these blocks, the various test conditions were tested in randomized order. After 1 to 2 weeks, a set of retest measurements was performed to assess test–retest reliability. The whole experiment took about 4 hr to complete. Table 1 provides an overview of the various test conditions.

Table 1.

Overview of the 14 Experimental Conditions per Listener Group.

| Condition | Target signal | Movement dimension | Degree of reverberation | Number of sound sources |
|---|---|---|---|---|
| 1–3 | Phone | Left-right | Anechoic | 1 (target only), 3, 5 |
| 4–6 | Phone | Left-right | Echoic | 1 (target only), 3, 5 |
| 7–9 | Phone | Near-far | Anechoic | 1 (target only), 3, 5 |
| 10–12 | Phone | Near-far | Echoic | 1 (target only), 3, 5 |
| 13 | Fountain | Near-far | Anechoic | 1 (target only) |
| 14 | Fountain | Near-far | Echoic | 1 (target only) |

In total, we measured 12 angular movement detection thresholds and 16 radial movement detection thresholds per listener (and thus a total of, respectively, 300 and 400 thresholds across all listeners) for both the test and the retest measurements. Out of these, we excluded six L-R thresholds (from six different EHI listeners) and four N-F thresholds (from four different EHI listeners) obtained for the five-source scenario because in these cases the listeners could not hear out the target signal from the signal mixture and therefore had to guess. Furthermore, the N-F movement detection thresholds of one EHI listener were excluded altogether because this listener was generally unable to distinguish between static and moving stimuli along the N-F dimension.

Prior to the statistical analyses, we examined the distributions of the various datasets. According to the Kolmogorov–Smirnov test, all but three datasets fulfilled the requirements for normality (p > .05). Exclusion of three outlier thresholds resulted in normally distributed data for all datasets. We therefore used parametric statistical tests to analyze our data. Whenever appropriate, we corrected for violations of sphericity using the Greenhouse–Geisser correction.

Acoustic Analyses

To verify that our test setup is capable of simulating the stimulus changes that are thought to be relevant for L-R and N-F movement perception and to also characterize our stimuli better, we performed a detailed acoustic analysis. That is, we created a set of single-source stimuli with and without reverberation and with either the phone or the fountain as the target signal. These stimuli included source movements with velocities corresponding to the median detection thresholds of our YNH and EHI listeners (see Results section). In this manner, we captured the changes in the acoustic properties of the target signals that our two groups of participants could just detect. To be able to reveal short-time changes in the measures of interest (see later), we used a 100-ms analysis window with 50% overlap. Furthermore, we performed all analyses relative to the corresponding static reference stimulus (0°, 1 m). In other words, we calculated relative changes (i.e., Δ values) in the measures of interest for each spatial movement dimension.
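The windowing and the computation of Δ values can be sketched as follows, here for a simple short-time level measure. This is an illustration of the framing only and not the analysis code used in the study; the function names and the rectangular window are assumptions.

```python
import math

def frame_levels_db(samples, fs, window_s=0.1, overlap=0.5):
    """RMS level (dB re 1) in successive frames of `window_s` seconds."""
    size = int(round(window_s * fs))         # 100-ms window
    hop = int(round(size * (1.0 - overlap)))  # 50% overlap gives a 50-ms hop
    levels = []
    for start in range(0, len(samples) - size + 1, hop):
        frame = samples[start:start + size]
        power = sum(x * x for x in frame) / size
        levels.append(10.0 * math.log10(max(power, 1e-12)))  # floor avoids log(0)
    return levels

def delta(moving, reference):
    """Frame-by-frame change of a measure relative to the static reference."""
    return [m - r for m, r in zip(moving, reference)]

# Hypothetical signals: a constant reference and a copy at half the amplitude.
fs = 1000
reference = [1.0] * fs
moving = [0.5] * fs
print(delta(frame_levels_db(moving, fs), frame_levels_db(reference, fs))[:3])
```

The same framing applies to any of the measures of interest: each is evaluated per frame for the moving stimulus and for the static reference, and the difference is taken.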

Left-right dimension

To analyze the stimuli moving along the L-R dimension, we applied the binaural hearing model of Dietz, Ewert, and Hohmann (2011). This model takes a binaural stimulus as input and estimates the ITDs and ILDs, which are considered the dominant cues for L-R spatial hearing (see Introduction section). The model includes a middle-ear transfer characteristic, band-pass filtering along the basilar membrane, cochlear compression, and the mechano-electrical transduction process at the level of the inner hair cells. Hearing loss is not considered in this model. In the model, the interaural similarity (or coherence) is estimated for each time-frequency segment and used as a weighting function so that only highly similar (and thus reliable) signal components are used for further analysis. For our purpose, we used the ITDs in the low-frequency channels (with center frequencies from 0.24 to 1.1 kHz) and the ILDs in the high-frequency channels (with center frequencies from 1.1 to 4.7 kHz) as estimated by the model to calculate the mean ITD and mean ILD across frequency channels in each time window.

In addition to the above, we also quantified monaural spectral changes in our stimuli. To that end, we always analyzed the left stimulus channel. As explained in the Introduction section, a moving sound source gives rise to time-variant comb filter effects. To quantify these changes in spectral coloration, we applied a measure of Moore and Tan (2004). After a simulation of peripheral auditory processing, this measure computes the internal excitation pattern for a given input stimulus. In doing so, it takes into account both the magnitude of the changes in the excitation pattern and the rapidity with which the excitation pattern changes as a function of frequency and then combines this information into a single dimensionless spectral distance measure. Originally, the spectral distance was further transformed into a prediction of perceived naturalness; here, the distance measure was used directly. In contrast to the binaural model, this measure rates only monaural spectral features, for example, changes in coloration.

Figure 5 illustrates the changes in ITD (left panel), ILD (middle panel), and spectral coloration (right panel) as a function of source azimuth for two different extents of movement (or velocities) for the anechoic (top panels) and reverberant (bottom panels) scenarios. As can be seen, under anechoic conditions, monotonic changes in the ITDs and ILDs with increasing azimuth are apparent, and the magnitude of these changes is consistent with the literature (e.g., Blauert, 1997). Furthermore, the monaural spectral changes also increase monotonically with increasing azimuthal displacement. Under reverberant conditions, however, all three measures show a much more erratic behavior. This holds true especially for the ITDs and ILDs, for which monotonic changes are no longer apparent. In contrast, the monaural spectral changes show a more consistent pattern. Also, in terms of magnitude, they are larger under reverberant than anechoic conditions. These results confirm the changes in the acoustical features that are thought to drive L-R source movement perception, at least under anechoic conditions. For the reverberant measurements, they suggest that monaural spectral changes play a particular role.

Figure 5.

Changes in ITD (left) and ILD (middle) under anechoic (top, solid lines) and reverberant (bottom, dashed lines) conditions for the phone moving either 10° (black) or 28° (gray) to the side over 2.3 s. Monaural spectral changes (right) as estimated using the measure of Moore and Tan (2004) are also shown.

ITD = interaural time difference; ILD = interaural level difference.

Near-far dimension

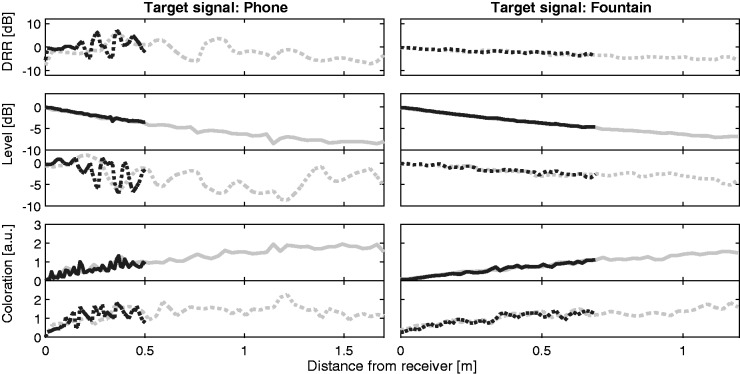

To analyze the stimuli moving along the N-F dimension, we calculated the DRR, which declines monotonically with increasing source distance in reverberant rooms (e.g., Bronkhorst & Houtgast, 1999). In addition, we calculated the overall level decay, which amounts to 6 dB per doubling of source distance under anechoic conditions and to smaller decays in reverberant rooms (e.g., Blauert, 1997). Although the DRR and level decay usually show a frequency-dependent behavior under reverberant conditions, we carried out broadband analyses to quantify the overall changes in these measures due to increasing source distance. Furthermore, we calculated monaural spectral changes based on the measure of Moore and Tan (2004) described earlier. Figure 6 illustrates the changes in these three measures as a function of distance (or time) for the phone sound and additionally for the fountain sound, as this signal was also used as the target in the N-F movement detection measurements.
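The anechoic level decay can be illustrated with the inverse-distance law for a point source in the free field. The sketch below is an idealization under that assumption and does not reproduce the analysis performed here; the function names are hypothetical, and the reference distance of 1 m and the duration of 2.3 s are taken from the Methods.

```python
import math

def level_change_db(distance_m, reference_m=1.0):
    """Free-field change in direct-sound level relative to the reference distance."""
    return -20.0 * math.log10(distance_m / reference_m)

def end_distance(velocity_m_s, duration_s=2.3, start_m=1.0):
    """Distance reached by a source receding at constant velocity."""
    return start_m + velocity_m_s * duration_s

for velocity in (0.2, 3.7):  # limits of the radial velocity range
    distance = end_distance(velocity)
    print(round(distance, 2), round(level_change_db(distance), 1))
```

For a doubling of distance from 1 m to 2 m, `level_change_db(2.0)` returns about −6.02 dB, matching the 6 dB per doubling stated above.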

Figure 6.

Left: changes in DRR (top), overall level (middle), and spectral coloration (bottom) for the phone signal moving either 0.5 m (black) or 1.7 m (gray) away from the listener over 2.3 s. Changes in overall level and spectral coloration are shown for both anechoic (solid lines) and reverberant (dashed lines) conditions. Right: corresponding plots for the fountain signal moving either 0.7 m (black) or 1.3 m (gray) away from the listener.

DRR = direct-to-reverberant sound ratio.

As can be seen, the changes in these measures are generally as expected. That is, the DRR and overall level decrease with increasing source distance, whereas the spectral coloration increases. Furthermore, whereas for the highly modulated and narrowband phone signal these measures are relatively variable, the changes are much more monotonic for the noise-like fountain signal (cf., Figure 4). These results illustrate the expected changes in the acoustical features that are thought to underlie N-F source movements. For the reverberant measurements, they suggest that monaural spectral cues may provide salient information about source movements.

Results

L-R Dimension

Initially, we examined the test–retest reliability of the L-R data. For both groups, we found moderate to large correlations (YNH: r = .58, EHI: r = .53, both p < .001). Two paired t tests revealed a small training effect for the YNH listeners (t55 = 3.3, p < .01) but not for the EHI listeners (p > .05). For the following analyses, we used the mean of the test–retest measurements or, in case one of the two measurements was previously excluded (see Methods and Procedure sections), the single remaining reliable threshold.

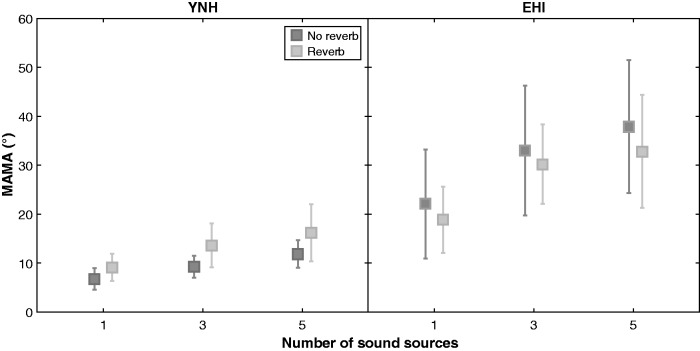

Figure 7 shows means and SDs of the MAMA thresholds of the YNH (left) and EHI (right) listeners for the different numbers of sound sources and levels of reverberation. As can be seen, the thresholds of the YNH group increased only slightly with increasing acoustic complexity, with the highest thresholds and largest spread being observable for the greatest number of sound sources in the presence of reverberation. In general, the thresholds of the EHI group were higher, and an increase in the number of sound sources led to clearly elevated thresholds for most of these participants. In contrast, reverberation did not seem to have much additional impact on their thresholds. It is also worth noting that the ability of the EHI listeners to detect angular source movements varied widely, with some of the EHI participants performing on a par with the YNH listeners, at least for the single-source scenarios, and with others having substantially higher thresholds.

Figure 7.

Means and SDs of the MAMA thresholds of the YNH (left) and EHI (right) participants for the different numbers of sound sources and levels of reverberation (black: without reverb; gray: with reverb).

YNH = young normal hearing; EHI = elderly hearing impaired; MAMA = minimum audible movement angle.

To test the statistical significance of these observations, we conducted an analysis of variance (ANOVA) with between-subject factor group (YNH, EHI) and within-subject factors number of sound sources (1, 3, 5) and reverberation (no reverb, reverb). This revealed significant effects of group, F(1, 18) = 29.9, p < .0001, and number of sound sources, F(2, 36) = 33.6, p < .00001, but no effect of reverberation, F(1, 18) = 3.6, p = .7. The interaction between group and number of sound sources was significant, F(2, 36) = 4.7, p = .017, consistent with a stronger influence of the number of sound sources on the MAMA thresholds of the EHI group. The interaction between group and reverberation was not significant, however, F(1, 18) = 3.8, p = .065, and neither was the three-way interaction, F(2, 36) = 1.1, p > .3.

N-F Dimension

As for the MAMA measurements, we initially assessed the test–retest reliability of the MAMD data. For the EHI group, we found a large positive correlation (r = .61, p < .001), while for the YNH group, the test–retest measurements were not correlated (r = .16, p > .2). Closer inspection revealed that this was due to the small range of MAMD thresholds obtained for the YNH listeners (∼0.3 to 1 m). On average, the absolute difference between the test and retest measurements of these listeners was only 0.06 m, illustrating good repeatability of these data. Two paired t tests revealed no training effect for either group (both p > .05). For the following analyses, we used the mean of the test–retest measurements or, in case one of the two measurements was previously excluded, the single remaining reliable threshold.
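The point that a narrow range of thresholds can yield a low correlation despite good repeatability can be illustrated with a small Python sketch. The values below are hypothetical (not data from the study), and the helper names `pearson_r` and `mean_abs_diff` are introduced here for illustration only.

```python
import math
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def mean_abs_diff(x, y):
    """Mean absolute difference between paired measurements."""
    return mean(abs(a - b) for a, b in zip(x, y))


# Hypothetical thresholds in metres, all within a narrow range.
test_m = [0.4, 0.5, 0.6, 0.5]
retest_m = [0.5, 0.4, 0.5, 0.6]

print(f"r = {pearson_r(test_m, retest_m):.2f}")
print(f"mean |test - retest| = {mean_abs_diff(test_m, retest_m):.2f} m")
```

In this constructed example, the two runs are uncorrelated even though they never differ by more than about 0.1 m, which is why the absolute difference is the more informative measure when the range of thresholds is small.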

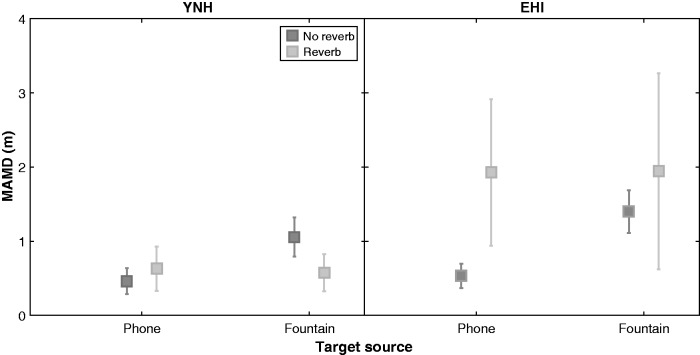

Figure 8 shows means and SDs of the MAMD thresholds. As can be seen, the YNH listeners obtained thresholds of ∼1 m or lower in all conditions. In other words, neither the number of sound sources nor the level of reverberation appeared to affect their performance. Furthermore, the variance across these listeners was very small. In contrast, for the EHI group the level of reverberation appeared to have a strong effect, whereas the number of sound sources seemed to have a smaller effect. Remarkably, a few EHI individuals obtained MAMD thresholds in excess of 5 m, especially under reverberant conditions.

Figure 8.

Means and SDs of the MAMD thresholds of the YNH (left) and EHI (right) participants for the different numbers of sound sources and levels of reverberation (black: without reverb; gray: with reverb).

YNH = young normal hearing; EHI = elderly hearing impaired; MAMD = minimum audible movement distance.

To analyze these data further, we carried out an ANOVA with between-subject factor group (YNH, EHI) and within-subject factors number of sound sources (1, 3, 5) and level of reverberation (no reverb, reverb). Again, the effect of group was significant, F(1, 19) = 25.0, p < .0001, whereas the effect of the number of sound sources was not, F(1.1, 20.1) = 3.4, p = .08. The main effect of reverberation, however, was significant, F(1, 19) = 31.6, p < .0001, as was the interaction between reverberation and group, F(1, 19) = 23.2, p < .001. The latter finding confirms that the EHI listeners faced greater difficulties regarding source movement detection under reverberant conditions than the YNH group. The interaction between group and number of sound sources was not significant, F(1.1, 20.1) = 3.7, p > .06, and neither was the three-way interaction, F(1.2, 23.0) = 0.23, p > .6.
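The fractional degrees of freedom reported for the effects involving the number of sound sources suggest that a sphericity correction was applied. Assuming a correction of the Greenhouse-Geisser type (an assumption, as the correction is not named in this section), the degrees of freedom for a within-subject factor with k levels, N participants, and g groups are scaled by an estimate of the sphericity parameter. Taking N − g = 19 from the F(1, 19) reported for the group effect, the reported values are consistent with an estimate of roughly 0.53:

```latex
\[
df_{\text{effect}} = \hat{\varepsilon}\,(k-1), \qquad
df_{\text{error}} = \hat{\varepsilon}\,(k-1)\,(N-g)
\]
\[
k = 3,\; N-g = 19:\quad
\hat{\varepsilon} \approx \frac{20.1}{2 \times 19} \approx 0.53
\;\Rightarrow\;
df_{\text{effect}} \approx 0.53 \times 2 \approx 1.1, \quad
df_{\text{error}} \approx 0.53 \times 38 \approx 20.1
\]
```

An estimate this far below 1 would indicate a marked violation of sphericity, which is plausible given the much larger spread of thresholds in the more complex conditions.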

Influence of Target Signal on N-F Measurements

Figure 9 shows means and SDs of the MAMD thresholds obtained for the single-source scenario with either the phone or the fountain as the target signal. Under anechoic conditions, the MAMD thresholds of both groups were comparable, with both groups having somewhat elevated thresholds for the fountain signal compared with the phone signal. Under reverberant conditions, the thresholds of the YNH group were only marginally affected, whereas those of the EHI group tended to be higher, regardless of the target signal.

Figure 9.

Means and SDs of the MAMD thresholds of the YNH (left) and EHI (right) participants obtained with the phone or fountain signal in the single-source scenario (black: without reverb; gray: with reverb).

YNH = young normal hearing; EHI = elderly hearing impaired; MAMD = minimum audible movement distance.

Again, we conducted an ANOVA with between-subject factor group (YNH, EHI) and within-subject factors target signal (phone, fountain) and reverberation (anechoic, echoic). This revealed significant effects of group, F(1, 17) = 25.0, p < .0001, target signal, F(1, 17) = 6.9, p = .018, and reverberation, F(1, 17) = 9.2, p < .01. Furthermore, the interactions between reverberation and group, F(1, 17) = 17.1, p < .001, and between reverberation and target signal, F(1, 17) = 11.1, p < .01, were significant, while the three-way interaction was not, F(1, 17) = 0.34, p > .5. The observed interaction between reverberation and group is consistent with the finding reported earlier that for the EHI group N-F source movement detection was much more affected by reverberation than for the YNH group. The interaction between reverberation and target signal confirms that the effects of reflections on N-F source movement detection depend on the spectro-temporal characteristics of the chosen stimulus. Interestingly, the presence of reverberation had a positive influence on source movement sensitivity of the YNH group with the fountain signal (p < .01), whereas for the EHI group, no such influence was found (p > .4), as revealed by post hoc t tests.

Discussion

The aim of the current study was to investigate sensitivity to angular and radial source movements in complex auditory scenes for both YNH listeners and EHI listeners tested with linear amplification. The acoustic complexity was varied by adding static sound sources and reverberation to the stimuli, which were rendered using virtual acoustics and presented binaurally over headphones. The data analyses revealed large differences in the ability to detect dynamic changes in azimuth or distance under complex (but not single-source) conditions, especially among the EHI listeners. Related to these findings, the analysis of the acoustical properties of the target signals suggested that monaural spectral cues may be important for source movement perception under reverberant conditions. In the following, we discuss these results in more detail.

Concerning our first hypothesis that YNH listeners will generally outperform EHI listeners, the results we obtained were consistent with this. That is, our statistical analyses revealed significant effects of listener group on L-R and N-F movement detection, confirming that EHI listeners face greater difficulties with perceiving source movements than YNH listeners. Broadly speaking, our results are also consistent with those of Brungart et al. (2014) who observed a performance difference between their normal-hearing and hearing-impaired listeners in the add and remove tasks and also with those of Weller et al. (2016) who observed significantly poorer speaker localization performance for their hearing-impaired listeners (see Introduction section). Nevertheless, for the single-source conditions without reverberation, our two groups performed equally well, regardless of the spatial movement dimension. This is in line with Best, Carlile, Kopčo, and van Schaik (2011) who found no difference in performance on a single-source sound localization task between normal-hearing and hearing-impaired listeners. It is also in line with Brungart et al. (2014) who reported very similar mean single-source localization performance for their two groups of participants.

In our study, the overall performance of both groups decreased with the number of sound sources, particularly so for the EHI listeners. This supports our second hypothesis that an increase in the number of concurrent sound sources would generally result in poorer source movement detection. This finding is also in line with Brungart et al. (2014), Weller et al. (2016), and Akeroyd et al. (2016) who all found negative effects of the number of sound sources on the performance of their hearing-impaired participants (see Introduction section). Taken together, these results confirm that hearing-impaired listeners experience great difficulties with determining the spatial properties of multiple concurrent sound sources.

Concerning our third hypothesis that reverberation would have no effect on L-R movement perception and a positive effect on N-F movement perception, at least for the YNH listeners, our results were partly consistent with this. Regarding L-R movement detection, the results were unaffected by reverberation, irrespective of listener group. In a recent study conducted with normal-hearing listeners, no effects of reverberation were found for velocities between 25°/s and 100°/s (Sankaran et al., 2014). This is in line with our results, although in our study the velocities were <25°/s (see Procedure section). Interestingly, there was a tendency toward greater intersubject variability in the YNH group (Figure 7), suggesting that these listeners were differentially affected by the reverberation. For the EHI listeners, on the other hand, there was less intersubject variability under reverberant compared with anechoic conditions. Further research would be necessary to follow up on the cause of this. Regarding N-F movement detection, we found no effect of reverberation for the YNH listeners (who obtained very low thresholds throughout), whereas for the EHI listeners, we found a clearly negative effect. This suggests that the reverberation distorted the acoustic cues that are thought to be salient for movement detection in such a way that these listeners were unable to make use of them. Broadly speaking, this is consistent with the finding of Akeroyd et al. (2007) that hearing-impaired listeners are hampered in their ability to use the DRR as a cue for (static) distance perception (see Introduction section). For the multisource conditions, it could also be that the addition of reverberation led to a blurring of the different signals, making source segregation problematic if not impossible for the EHI participants. Reverberation can greatly alter monaural and binaural cues (cf., Figure 5), which are thought to be related to cocktail party listening, that is, the ability to hear out a target signal amid a cacophony of other sounds (e.g., McDermott, 2009).

It is also worth noting that the influence of reverberation on dynamic distance perception seems to be different from that on static distance perception. To recapitulate, the DRR is widely believed to be crucial for static distance perception. For dynamic distance (or movement) perception, on the other hand, our data imply that other cues (or perhaps combinations thereof) may be essential. The precise mechanisms underlying source movement perception are currently unclear. However, our acoustic analyses suggested that monaural spectral changes play a role in source movement perception in reverberant environments. Further research should be devoted to this issue. Ideally, this research should also address the influence of individualized versus nonindividualized HRTFs. In our study, nonindividualized HRTFs were used. Because research has shown that this can adversely affect the perception of externalization in hearing-impaired listeners (Boyd, Whitmer, Soraghan, & Akeroyd, 2012), it could in principle also have an influence on source movement detection.

In our study, we also considered the influence of the acoustical properties of the target stimulus and observed an interaction with reverberation. That is, the N-F movement detection thresholds of the EHI listeners measured with the narrow-band tonal phone signal were elevated in the presence of reverberation, whereas the thresholds measured with the noise-like fountain signal were robust to its influence. For the YNH listeners, the fountain thresholds even improved in reverberation, possibly because they had access to relevant high-frequency (>6 kHz) acoustic information that was inaudible for the EHI listeners (see Figure 3). In accordance with the MAMA literature (e.g., Carlile & Leung, 2016), we expected that movement detection thresholds would generally decrease with greater stimulus bandwidth, including in multisource scenarios and in reverberation. In general, our data were consistent with this. Given the influence of the spectro-temporal stimulus characteristics that we found, follow-up research should ideally investigate this more fully.

Another limitation of the current study was that the participants always knew in advance about the upcoming stimulus movements. In the real world, listeners often do not have such a priori information available. Therefore, to detect such changes, they must remain constantly vigilant. In future work, the task could be made more realistic by randomizing the spatial movement dimension or the starting position of the target signal. Furthermore, our study was limited to one acoustic environment. Even though we used a real room as the basis for our simulation, it would be important to extend the investigation to other types of environments, for example, rooms with different levels of reverberation, different types of noise sources (e.g., diffuse noise), or scenarios that reflect other complex listening tasks such as a traffic situation or a group discussion (cf., Grimm, Kollmeier, & Hohmann, 2016).

Finally, due to the inclusion of a YNH and an EHI group, the effects of age and hearing loss on source movement detection were confounded in our study. Our focus was on the deficits that EHI listeners exhibit, with a view toward investigating ways of addressing them with hearing devices in future research. Ideally, this research would also disentangle the effects of age and hearing loss to allow for more targeted rehabilitative solutions.

In summary, the current study showed that our setup is capable of simulating changes in acoustical features that are thought to underlie source movement perception. Furthermore, the test setup appears to give informative estimates of spatial listening abilities that are sensitive to factors such as acoustic complexity and hearing loss. Altogether, this suggests that our setup can be used for investigating spatial perception in complex scenarios. Nevertheless, the extent to which our setup enables an accurate representation of real-world sound field listening is currently unknown and should therefore ideally be investigated. Furthermore, in view of the fact that our results highlight certain aspects that are particularly difficult for EHI listeners (even with adequate stimulus audibility), it would be of interest to investigate if hearing aid signal processing can ameliorate these deficits.

Acknowledgments

The authors thank Laura Hartog (Oldenburg University) for her help with the measurements and Lars Bramsløw (Oticon A/S) for input to the article.

Declaration of Conflicting Interests

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding

The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This research was funded by the Oticon Foundation and the DFG Cluster of Excellence EXC 1077/1 ‘Hearing4all.’

References

- Adriaensen, F. (2007). A tetrahedral microphone processor for ambisonic recording. In Proceedings of the 5th International Linux Audio Conference (LAC07), Berlin, Germany.

- Akeroyd, M. A., Gatehouse, S., & Blaschke, J. (2007). The detection of differences in the cues to distance by elderly hearing-impaired listeners. The Journal of the Acoustical Society of America, 121(2), 1077–1089.

- Akeroyd, M. A., Whitmer, W. M., McShefferty, D., & Naylor, G. (2016). Sound-source enumeration by hearing-impaired adults. The Journal of the Acoustical Society of America, 139(4), 2210–2210.

- Altman, J. A., & Andreeva, I. G. (2004). Monaural and binaural perception of approaching and withdrawing auditory images in humans. International Journal of Audiology, 43(4), 227–235.

- Andreeva, I., & Malinina, E. (2011). The auditory aftereffects of radial sound source motion with different velocities. Human Physiology, 37(1), 66–74.

- ANSI. (2007). ANSI S3.4-2007: Procedure for the computation of loudness of steady sounds. New York, NY: Author.

- Best, V., Carlile, S., Kopčo, N., & van Schaik, A. (2011). Localization in speech mixtures by listeners with hearing loss. The Journal of the Acoustical Society of America, 129(5), EL210–EL215.

- Blauert, J. (1997). Spatial hearing: The psychophysics of human sound localization (2nd ed.). Cambridge, MA: MIT Press.

- Boyd, A. W., Whitmer, W. M., Soraghan, J. J., & Akeroyd, M. A. (2012). Auditory externalization in hearing-impaired listeners: The effect of pinna cues and number of talkers. The Journal of the Acoustical Society of America, 131(3), EL268–EL274.

- Bronkhorst, A., & Houtgast, T. (1999). Auditory distance perception in rooms. Nature, 397(6719), 517–520.

- Bronkhorst, A. W., & Plomp, R. (1989). Binaural speech intelligibility in noise for hearing-impaired listeners. The Journal of the Acoustical Society of America, 86(4), 1374–1383.

- Brungart, D. S., Cohen, J., Cord, M., Zion, D., & Kalluri, S. (2014). Assessment of auditory spatial awareness in complex listening environments. The Journal of the Acoustical Society of America, 136(4), 1808–1820. Retrieved from https://dx.doi.org/10.1121/1.4893932

- Byrne, D., Parkinson, A., & Newall, P. (1990). Hearing aid gain and frequency response requirements for the severely/profoundly hearing impaired. Ear and Hearing, 11(1), 40–49.

- Carlile, S., & Leung, J. (2016). The perception of auditory motion. Trends in Hearing, 20(1), 1–19.

- Daniel, J. (2000). Représentation de champs acoustiques, application à la transmission et à la reproduction de scènes sonores complexes dans un contexte multimédia (PhD thesis). Université Pierre et Marie Curie, Paris.

- Daniel, J., Moreau, S., & Nicol, R. (2003). Further investigations of high-order ambisonics and wavefield synthesis for holophonic sound imaging. Paper presented at the Audio Engineering Society Convention 114, Amsterdam, The Netherlands.

- Dietz, M., Ewert, S. D., & Hohmann, V. (2011). Auditory model based direction estimation of concurrent speakers from binaural signals. Speech Communication, 53(5), 592–605.

- Dillon, H. (2012). Hearing aids. Sydney, Australia: Boomerang Press; New York, NY: Thieme.

- Gatehouse, S., & Noble, W. (2004). The speech, spatial and qualities of hearing scale (SSQ). International Journal of Audiology, 43(2), 85–99.

- Grimm, G., Ewert, S., & Hohmann, V. (2015). Evaluation of spatial audio reproduction schemes for application in hearing aid research. Acta Acustica United with Acustica, 101(4), 842–854.

- Grimm, G., Heeren, J., & Hohmann, V. (2015). Comparison of distance perception in simulated and real rooms. In Proceedings of the International Conference on Spatial Audio, Graz, Austria.

- Grimm, G., Herzke, T., Berg, D., & Hohmann, V. (2006). The master hearing aid: A PC-based platform for algorithm development and evaluation. Acta Acustica United with Acustica, 92(4), 618–628.

- Grimm, G., Kollmeier, B., & Hohmann, V. (2016). Spatial acoustic scenarios in multichannel loudspeaker systems for hearing aid evaluation. Journal of the American Academy of Audiology, 27(7), 557–566.

- Hansen, M. (2006). Lehre und ausbildung in psychoakustik mit psylab: Freie software für psychoakustische experimente [Teaching and education in psychoacoustics with psylab: Free software for psychoacoustic experiments]. Fortschritte der Akustik, 32(2), 591.

- Kayser, H., Ewert, S. D., Anemüller, J., Rohdenburg, T., Hohmann, V., & Kollmeier, B. (2009). Database of multichannel in-ear and behind-the-ear head-related and binaural room impulse responses. EURASIP Journal on Advances in Signal Processing, 2009, 6.

- Kolarik, A. J., Moore, B. C., Zahorik, P., Cirstea, S., & Pardhan, S. (2016). Auditory distance perception in humans: A review of cues, development, neuronal bases, and effects of sensory loss. Attention, Perception, & Psychophysics, 78(2), 373–395.

- Levitt, H. (1971). Transformed up-down methods in psychoacoustics. The Journal of the Acoustical Society of America, 49(2B), 467–477.

- Lundbeck, M., Grimm, G., Hohmann, V., Laugesen, S., & Neher, T. (2016). Sensitivity to angular and radial source movements as a function of acoustic complexity in normal and impaired hearing. In S. Santurette et al. (Eds.), Proceedings of ISAAR 2015: Individual Hearing Loss – Characterization, Modelling, Compensation Strategies (pp. 469–476). Holbæk, Denmark: Centertryk A/S.

- Lutfi, R. A., & Wang, W. (1999). Correlational analysis of acoustic cues for the discrimination of auditory motion. The Journal of the Acoustical Society of America, 106(2), 919–928.

- Macpherson, E. A., & Middlebrooks, J. C. (2002). Listener weighting of cues for lateral angle: The duplex theory of sound localization revisited. The Journal of the Acoustical Society of America, 111(5), 2219–2236.

- Malinina, E. (2014). Asymmetry and spatial specificity of auditory aftereffects following adaptation to signals simulating approach and withdrawal of sound sources. Journal of Evolutionary Biochemistry and Physiology, 50(5), 421–434.

- Malinina, E., & Andreeva, I. (2013). Auditory aftereffects of approaching and withdrawing sound sources: Dependence on the trajectory and location of adapting stimuli. Journal of Evolutionary Biochemistry and Physiology, 49(3), 316–329.

- McDermott, J. H. (2009). The cocktail party problem. Current Biology, 19(22), R1024–R1027.

- Middlebrooks, J. C., & Green, D. M. (1991). Sound localization by human listeners. Annual Review of Psychology, 42(1), 135–159.

- Moore, B. C. (2012). An introduction to the psychology of hearing. Bingley, England: Emerald.

- Moore, B. C., & Tan, C.-T. (2004). Development and validation of a method for predicting the perceived naturalness of sounds subjected to spectral distortion. Journal of the Audio Engineering Society, 52(9), 900–914.

- Musicant, A. D., & Butler, R. A. (1985). Influence of monaural spectral cues on binaural localization. The Journal of the Acoustical Society of America, 77(1), 202–208.

- Neuhoff, J. G. (1998). Perceptual bias for rising tones. Nature, 395(6698), 123–124.

- Sankaran, N., Leung, J., & Carlile, S. (2014). Effects of virtual speaker density and room reverberation on spatiotemporal thresholds of audio-visual motion coherence. PloS One, 9(9), e108437.

- Savioja, L., Huopaniemi, J., Lokki, T., & Väänänen, R. (1999). Creating interactive virtual acoustic environments. Journal of the Audio Engineering Society, 47(9), 675–705.

- Seifritz, E., Neuhoff, J. G., Bilecen, D., Scheffler, K., Mustovic, H., Schächinger, H., & Di Salle, F. (2002). Neural processing of auditory looming in the human brain. Current Biology, 12(24), 2147–2151.

- Störig, C., & Pörschmann, C. (2013). Investigations into velocity and distance perception based on different types of moving sound sources with respect to auditory virtual environments. Journal of Virtual Reality and Broadcasting, 10(4), 1–22.

- Weller, T., Best, V., Buchholz, J. M., & Young, T. (2016). A method for assessing auditory spatial analysis in reverberant multitalker environments. Journal of the American Academy of Audiology, 27(7), 601–611.

- Zahorik, P., Brungart, D. S., & Bronkhorst, A. W. (2005). Auditory distance perception in humans: A summary of past and present research. Acta Acustica United with Acustica, 91(3), 409–420.