Abstract

Interoception, the sensitivity to visceral sensations, plays an important role in homeostasis and guiding motivated behaviour. It is also considered to be fundamental to self-awareness. Despite its importance, the developmental origins of interoceptive sensitivity remain unexplored. Here, we provide the first evidence for implicit, flexible interoceptive sensitivity in 5-month-old infants using a novel behavioural measure, coupled with an established cortical index of interoceptive processing. These findings have important implications for the understanding of the early developmental stages of self-awareness, self-regulation and socio-emotional abilities.

DOI: http://dx.doi.org/10.7554/eLife.25318.001

Research Organism: Human

eLife digest

From the beginning till the end of a person’s life, parts of the body continuously send signals to the brain. Most of this happens without the person even being aware of it, yet people can become aware of the signals under certain circumstances. For example, we can feel our racing heart rate or the “butterflies in our stomach” when we are anxious or excited. This ability to consciously sense signals from the body is called interoception, and some people are more aware of these signals than others. These differences between people can influence a wide range of psychological processes, including how strongly they feel emotions, how they make decisions, and their mental health.

Despite the crucial role that interoception plays in thought processes in adults, scientists know practically nothing about how it first develops. Progress in this field has been hindered largely because there was no way to measure sensitivity to interoceptive signals in infants.

Now, Maister et al. have developed a new task called iBEAT that can measure how sensitive an infant is to their own heartbeat. During the task, five-month-old infants were shown an animated character that moved either in synchrony or out of synchrony with their own heartbeat. The infants spent longer looking at the character that was moving out of synchrony than the one moving in synchrony, suggesting that even at this early age, infants can sense their own interoceptive signals.

As with adults, some of the infants were more sensitive to their heartbeats than others, and Maister et al. could see these differences played out in the infants’ brain activity via electrodes placed on their heads. Infants who had shown a strong preference in the iBEAT task also showed a larger brain signal known as the Heart-Evoked Potential (or HEP). Furthermore, this brain signal got larger when infants viewed a video clip of an angry or fearful face. This suggests that the infants’ brains were monitoring their hearts more closely when they were confronted with negative emotions.

This study provides a validated measure of interoception for very young participants. Using this task, researchers can now investigate which factors affect how awareness of interoceptive signals develops, including social interactions and the infant’s temperament. Maister et al. also plan to carry out longer-term experiments to learn exactly how interoception may influence the development of emotional abilities, and also what role it might play in disorders such as anxiety and depression. The findings of these future experiments may eventually guide interventions to treat these conditions.

Main text

Our entire experience of the world is perceived against a pervasive backdrop of internal physiological sensations from our bodies. Our sensitivity to these visceral sensations, known as ‘interoception’, is thought to be the fundamental basis for subjective feeling states (Craig, 2009; Damasio, 2010), and important for maintaining homeostasis (Gu and FitzGerald, 2014). Interoceptive sensitivity is a stable trait which varies between individuals, and influences a range of psychological processes from emotion processing to decision-making and various psychological disorders (Critchley and Harrison, 2013). Unsurprisingly, interoception has recently witnessed an exponential rise in interest across the neurosciences, psychology and psychiatry (Khalsa and Lapidus, 2016).

Behaviourally, individual differences in interoception are commonly measured by assessing participants’ ability to count their own heartbeats (Schandry, 1981) or discriminate cardio-auditory synchrony from asynchrony (Brener et al., 1993). Interoceptive processing can also be observed in the electroencephalogram (EEG); the Heartbeat Evoked Potential (HEP) is an electrophysiological index of cortical cardiac processing, and is found between 250–600 ms after the cardiac R wave over frontocentral (Pollatos and Schandry, 2004) and parietal regions (Dirlich et al., 1998; Couto et al., 2015). HEP amplitude is positively correlated with interoception at rest (Pollatos and Schandry, 2004), and is also modulated by state changes in interoception such as during emotional processing (Fukushima et al., 2011).

Beyond its fundamental role in adulthood, interoception has been given prominence in developmental theories, which highlight its importance in the infant’s experience of reward, motivation and arousal (Fogel, 2011; Mundy and Jarrold, 2010; Fotopoulou and Tsakiris, 2017). Interoceptive sensitivity may allow infants to perform crucial self-regulatory behaviours that facilitate homeostasis. By linking perceived internal sensations with events or objects in the environment, infants may be able to develop allostatic seeking or avoiding behaviours depending on the nature of the internal sensation. For example, accurately perceiving sensations of satiety and linking these sensations with feeding behaviour may allow infants to self-regulate their milk intake (Harshaw, 2008). Furthermore, the detection of unpleasant arousal states may initiate attentional disengagement or avoidance of an aversive stimulus. Therefore, individual differences in interoception may represent an important determinant in socio-emotional and physical development. However, despite much speculation regarding the developmental origins of interoception, developmental research in this area has been restricted to older childhood (Koch and Pollatos, 2014) due to methodological limitations. Here, we develop a novel behavioural measure of interoception in infancy, and present the first ever demonstration of interoceptive sensitivity at 5 months of age, coupled with electrophysiological markers, to show that (a) infants display an implicit sensitivity to interoceptive signals, and (b), that this sensitivity is responsive to socio-emotional processing demands, as in adults.

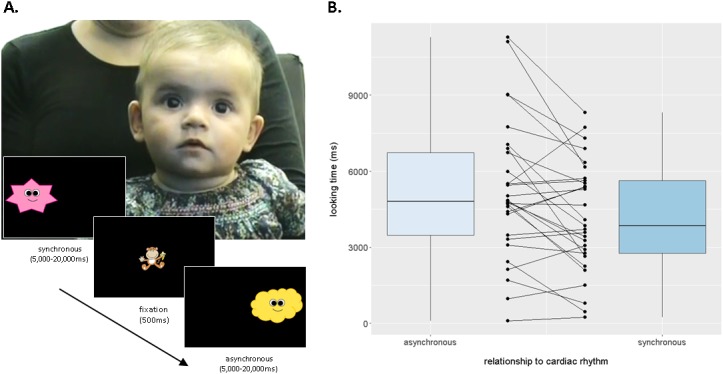

We developed a novel implicit behavioural measure, the Infant Heartbeat Task (iBEAT), which employs a sequential looking paradigm to assess whether infants are able to differentiate synchronous from asynchronous cardiac rhythms. Twenty-nine infants (17 males, mean age = 5.13 months, SD = 0.29) viewed an animated character, moving either in synchrony or asynchrony (±10% speed) with the infant’s own heartbeat, during continuous eye-tracking (Figure 1A). A clear visual preference for the asynchronous stimulus emerged at the group level, M(asynch) = 5194 ms, SD = 2697, M(synch) = 4170 ms, SD = 2167, t(28) = −3.267, p = 0.0029, Cohen’s d = 0.40 (Figure 1B, also see Figure 1—figure supplement 1), indicating that infants displayed an implicit sensitivity to interoceptive signals, and an ability to integrate these interoceptive signals with external visual-auditory stimuli. As with adults, there were individual differences in the direction and magnitude of preference (Figure 1B).

Figure 1. Infants differentiate between synchronous and asynchronous cardiac rhythms in a sequential looking paradigm (iBEAT task).

(A) iBEAT paradigm. Infants viewed trials alternating between synchronous and asynchronous cardiac rhythms, presented either on the left or right of the screen. Stimuli remained on the screen for 5000 ms, after which time their continued presentation depended on infant attention (max. 20,000 ms). Informed consent was obtained from the caregiver of the infant featured in Figure 1A. (B) Boxplot quantification of average looking times (ms) to stimuli that were asynchronous or synchronous with infants’ own cardiac rhythm (N = 29 infants, paired t-test, p=0.0029). Strip-chart points indicate pairs of raw data points from individual infants, reflecting individual differences in looking behaviours. Boxplot whiskers denote ±1.5*interquartile range limits.

DOI: http://dx.doi.org/10.7554/eLife.25318.003

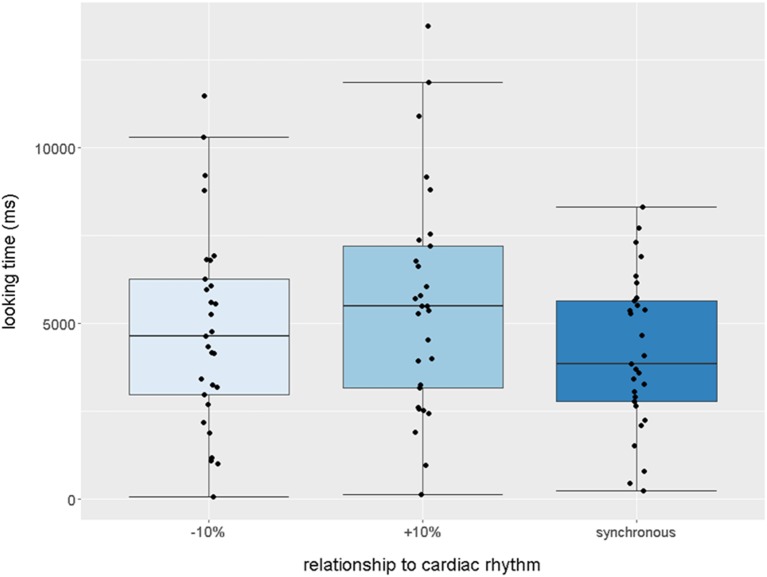

Figure 1—figure supplement 1. Boxplot quantification of average looking times (ms) to stimuli that were −10% (slower),+10% (faster), or synchronous with infants’ own cardiac rhythm (N = 29 infants, paired one-tailed t-tests, synchronous stimulus received less attention than both slower, p=0.029 and faster stimuli, p=0.0025).

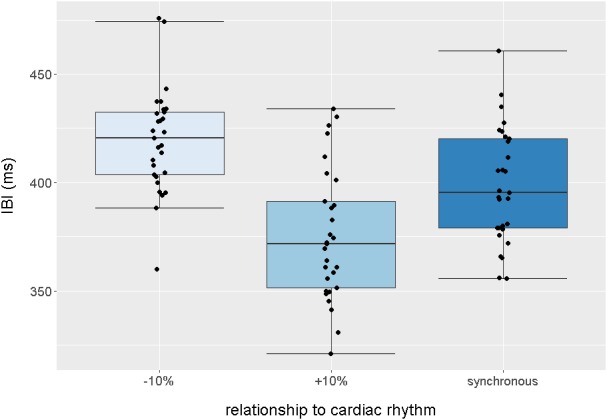

Figure 1—figure supplement 2. Boxplot quantification of average intervals (ms) between each audiovisual beat presented in the −10% (slower),+10% (faster) and synchronous trials, across the entire task.

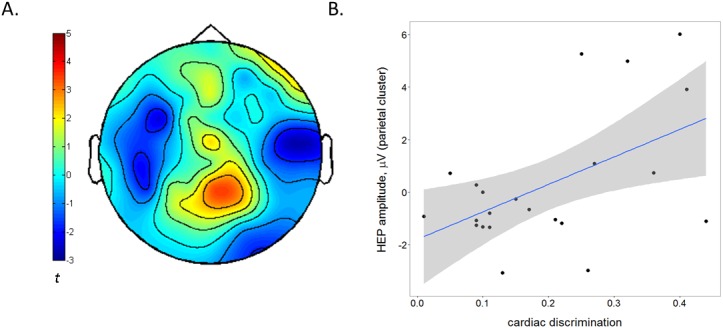

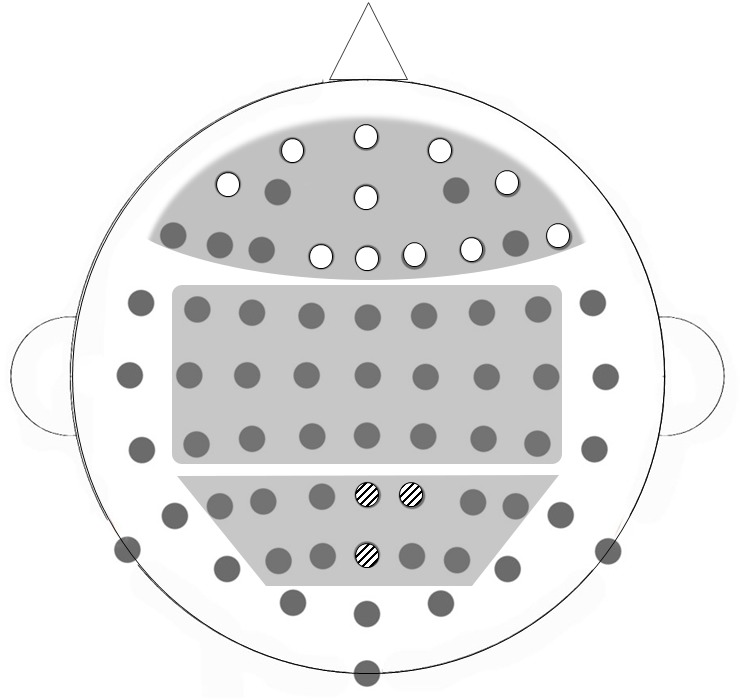

We then measured HEP amplitude in the same infants whilst they viewed short video clips of emotional and non-emotional facial expressions (Missana et al., 2014). First, we investigated the relation between the behavioural cardiac discrimination performance and HEP amplitude collapsed across emotion conditions to obtain a measure of baseline HEP independent of emotion. Given the varied nature of the topography and timing of HEP in adults (Kern et al., 2013), we used a non-parametric Monte-Carlo cluster-based approach (Maris and Oostenveld, 2007) in three broad bilateral regions of interest (ROI: frontal, central and parietal, see Figure 2—figure supplement 1) across a 150–300 ms time window after the R wave. This time window was chosen to account for the infants’ rapid heart rate (mean R-R interval = 422.98 ms, SD = 25.36). We calculated a Cardiac Discrimination score as the absolute proportion difference between looking times to the synchronous and asynchronous stimuli during the iBEAT task. Individual Monte-Carlo cluster-based regression analyses were carried out for each of the three ROIs (Couto et al., 2015; Canales-Johnson et al., 2015). In the parietal ROI, a midline cluster (P2, POz, Pz) between 206–272 ms after the R wave was found to significantly correlate with infant cardiac discrimination, p=0.019, TSUM = 577.0. HEP amplitude was higher for infants who showed a greater discrimination between synchronous and asynchronous cardiac rhythms during the iBEAT task (Figure 2, also see Supplementary Results).

Figure 2. The amplitude of the Heartbeat Evoked Potential is related to individual differences in infants' behavioural cardiac discrimination.

(A) Topographical representation of the significant midline parietal cluster in which HEP positively correlated with cardiac discrimination on the iBEAT task (N = 22 infants, Monte-Carlo cluster regression, p=0.019). Colorbar represents cluster statistic (t). (B) Scatterplot illustrating the positive correlation between cardiac discrimination and HEP amplitude. Shaded area represents 95% confidence interval of fitted regression line, r = 0.52, p=0.013. HEP amplitude is the subject-wise average signal from the midline parietal cluster (P2, POz and Pz) across the 206–272 ms time window of all HEP segments (irrespective of emotion observation).

DOI: http://dx.doi.org/10.7554/eLife.25318.007

Figure 2—figure supplement 1. Broad bilateral ROIs selected for HEP analysis; frontal, central and parietal regions, illustrated on a BioSemi 64-channel 10/20 system.

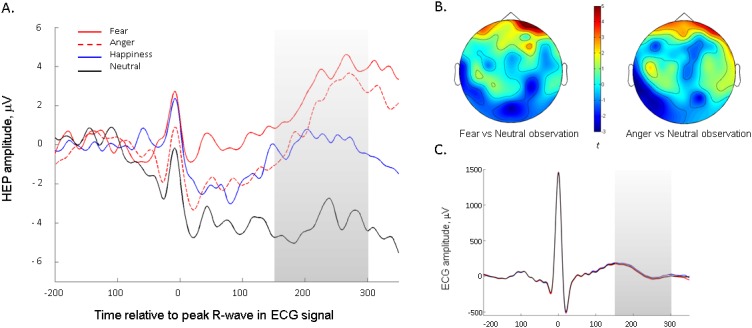

We next investigated emotion-specific modulations in HEP amplitude, by individually comparing HEPs in each emotion condition to the neutral baseline (as in Couto et al., 2015), again using a Monte-Carlo cluster analysis. In the frontal ROI, the cluster-permutation analysis identified a significant cluster for both fear observation, p=0.0015, TSUM = 1842.2, and for anger observation, p=0.002, TSUM = 1994.8. Amplitude was significantly higher during fear and anger observation than during neutral observation (Figure 3A). The effects extended across the entire time window (150–300 ms after the R wave), and the clusters closely overlapped in topography (Figure 3B). There were no significant clusters in parietal or central ROIs, and no significant clusters emerged for the happiness vs. neutral comparison. An identical analysis on the ECG channel did not identify any significant temporal clusters discriminating between emotional conditions, confirming that the effect was specific to the cortical processing of the heartbeat and not due to cardiac field artefacts (Figure 3C). An analysis on the EEG signal time-locked to the emotional stimulus, rather than the heartbeat, did not show any significant differences between emotions in the specific frontal ROI identified in the HEP analysis, suggesting that the emotional modulation of the HEP was not merely an artefact of ERPs to emotional expressions in the same areas (see Supplementary Results).

Figure 3. The Heartbeat Evoked Potential is modulated by infants' observation of negative emotional expressions.

(A) Average HEP amplitude (across representative frontal channels common to fear- and anger-specific clusters) for the four emotion conditions. Shaded region represents the time-window analysed. (B) Topographical representation of positive frontal clusters, showing significantly higher activity during negative emotion (i.e. fear and anger) versus neutral observation (N = 22 infants, Monte-Carlo cluster analysis, p≤0.002). Averaged across 150–300 ms period. Colour bar shows Monte-Carlo cluster statistic (t). (C) Average ECG signal across the four emotion observation conditions.

Here, we report the first evidence of implicit neurobehavioral sensitivity to interoceptive signals in early infancy. Infants’ ability to discriminate cardiac-audiovisual synchrony during the iBEAT task suggests that they are not only sensitive to internal sensations, but are also able to integrate interoceptive information with stimuli in the environment to drive visual preferences. In adults, the relationship between the internal and external experience of the body has been shown to play a critical role in the malleability of body ownership (Tsakiris et al., 2011; Suzuki et al., 2013; Aspell et al., 2013). Therefore, the ability to integrate interoceptive and exteroceptive information may be central to infants’ developing awareness of their own body boundaries. Furthermore, this integration process could be a precursor to mechanisms which allow the attribution of reward value to certain events and objects. The ability to preferentially orient to specific aspects of the environment that elicit positive interoceptive sensations, and to avoid aspects that lead to aversive or over-arousing bodily states, may provide the basis for an emerging homeostatic self-regulatory capacity in the developing infant (Gu and FitzGerald, 2014), via a process of interoceptive inference (Seth, 2013; Allen et al., 2016). This process is likely to be essential for guiding learning towards intrinsically motivating classes of stimuli, for example faces (Quattrocki and Friston, 2014). A difficulty in detecting interoceptive states, or a subsequent disruption in ascribing interoceptive ‘value’ to the environment, could lead to atypical development in emotional, cognitive and social domains (Mundy and Jarrold, 2010; Quattrocki and Friston, 2014). The developmental trajectory of interoception may therefore provide strong clues as to the origins of certain psychopathologies (Quattrocki and Friston, 2014; Murphy et al., 2017).

We also demonstrated that a cortical index of interoceptive processing, the HEP, is sensitive to emotional processing, particularly of negative emotions, in infancy as it is in adulthood. These findings indicate that infants’ state interoceptive processing and its cortical representation are dynamic, flexible and responsive to task demands. These results support a proposed relationship between online cardiac signalling and emotional evaluation in early infancy, which has already received persuasive support in adults (Garfinkel and Critchley, 2013).

Our results also have important bearing on the study of self-awareness, the development of which has long been a topic of fascination in the behavioural sciences. It is now known that self-awareness in adulthood has close links with interoceptive abilities (Tsakiris et al., 2011; Filippetti and Tsakiris, 2017), but how or when this link emerges has not yet been investigated. Our results allow future research to assess the relationship between individual differences in interoceptive sensitivity and the achievement of various milestones in the development of self-awareness, for example mirror self-recognition or the onset of episodic remembering. The implicit, non-verbal nature of the newly developed task also raises the possibility of taking a comparative approach by measuring interoception in non-human animals (Evrard et al., 2014). This will provide a unique opportunity to learn more about the evolutionary trajectory of interoception and embodied self-awareness.

In conclusion, the discovery that infants are sensitive to interoceptive sensations has wide-reaching implications for the study of interoception, self-awareness and cognitive development, and paves the way for understanding how individual differences in interoception can develop, persist and influence the way in which we experience ourselves and the world.

Supplementary results

Supplementary results for iBEAT task

As the rhythm of the asynchronous stimuli could be either faster or slower than the infant’s own heartbeat, two further one-tailed t-tests were carried out comparing each type of asynchronous stimulus to the synchronous stimulus separately, to ensure that the asynchronous preference held in both cases. Infants looked longer at both the slower stimulus, M = 4829, SD = 2829, t(28) = −1.977, p = 0.029 (1-tailed), d = 0.25, and the faster stimulus, M = 5547, SD = 3190, t(28) = 3.038, p = 0.0025 (1-tailed), d = 0.475, as compared to the synchronous stimulus. Looking times for the faster vs. the slower stimulus did not significantly differ, p>0.05. These analyses are illustrated in Figure 1—figure supplement 1.

Interbeat intervals were then calculated for each condition averaged across the group of infants; M(asynch-faster) = 375 ms (SD = 15), range = 361–392 ms, M(asynch-slower) = 417 ms (SD = 18), range = 399–436 ms, M(synch) = 398 ms (SD = 18), range = 376–419 ms. These data are displayed in Figure 1—figure supplement 2.

Supplementary results for HEP

To ensure that the correlation found between cardiac discrimination and HEP amplitude was not due to cardiac field artefacts, we also calculated the correlation coefficient between the cardiac discrimination score and the average ECG amplitude across the time window of significance (206–272 ms). The correlation was non-significant, r = –0.15, p=0.502, suggesting that the observed relationship between cardiac discrimination and HEP amplitude was specific to the cortical processing of the heartbeat rather than cardiac activity per se.

To ensure that the emotional modulation of HEP observed for fear and anger was not instead caused by an ERP to the emotional expressions in the same channels, we also carried out an analysis of the EEG signal time-locked to the onset of emotional expressions, instead of heartbeats. Segmentation was performed surrounding the entire duration of each emotional expression (2000 ms). We employed the same Monte-Carlo random cluster permutation method as used for the HEP analysis, to ensure comparability. First, the entire time-window was interrogated for spatiotemporal clusters that significantly discriminated fear or anger from neutral conditions, within the eleven channels in which we found emotional modulation of HEP (see Figure 2—figure supplement 1). No significant clusters were revealed in these channels, either for the anger-neutral comparison (p≥0.213) or for the fear-neutral comparison (p≥0.395). We repeated this analysis with a more specific temporal focus on 300–600 ms after emotional expression onset, which has been identified as a likely time-window for infant ERP to emotional expressions (Kobiella et al., 2008). Again, no significant clusters were revealed, either for the fear-neutral comparison (no clusters) or the anger-neutral comparison (p=0.279). Finally, we extracted average amplitude of the EEG signal from the critical channels time-locked to the emotional expression for each individual infant, and calculated correlation coefficients between this signal and the average HEP amplitude during observation of that same emotion. If our HEP results were actually caused by ERP signal fluctuations, there should be a significant correlation between these two variables. Importantly, there were no significant correlations between average ERP amplitude and HEP amplitude, either for fear (r = –0.171, p=0.470) or anger (r = 0.175, p=0.436). The results of these analyses suggest that the emotional modulation of the HEP signal in frontal areas is not an artefact of more general ERPs in response to these emotions in the same locations.

Materials and methods

Participants

Forty-one healthy, full-term infants were tested in total, at 5 months of age (19 males, mean age = 5.10 months, SD = 0.29). The expected effect sizes were not known in advance, so sample sizes were selected according to similar adult literature (e.g. Fukushima et al., 2011) assuming an approximate 50% attrition rate, which is usual in infant EEG studies with this age range. Infants were recruited through a marketing company database, which provides information from consenting mothers-to-be. Recruitment leaflets were sent to each household. Parents were able to participate by signing up to our online database or by contacting us via email. The study was completed in one session and conducted according to the Declaration of Helsinki, and all methods were approved by the Royal Holloway University of London Departmental Ethics Committee.

Measures

iBEAT task

For this task, infants were seated in a high-chair approximately 60 cm away from a computer screen integrated into a Tobii T120 eyetracker. Three disposable paediatric AgCl electrodes were attached to the infants’ chest and stomach in a 3-lead ECG setup, to monitor their cardiac activity throughout the task (Powerlab, ADInstruments, www.adinstruments.com). The R-peaks of the ECG were identified online using a hardware-based detection function (‘Fast Output Response’ function) from ADInstruments, and stimulus presentation was managed by a custom-made algorithm implemented in Matlab 2015a (MathWorks, Natick, MA). The onset of each R wave in the ECG trace was defined as the moment the voltage exceeded a predefined threshold, set individually for each infant. Once the function detected an R wave, a transistor-transistor logic (TTL) pulse was sent to the computer presenting the audiovisual stimuli, with a delay of less than 2 ms as confirmed by internal lab reports.

In each trial, infants were shown an animated character, which moved rhythmically up and down, either synchronously with the infant’s own heartbeat, or asynchronously (±10% speed). Each up-down movement was accompanied by an attractive sound to mark the rhythm. For synchronous trials, the custom-made algorithm presented an auditory tone and a change in position of the animated character upon the receipt of every TTL pulse. For asynchronous trials, the algorithm produced a cardiac-like rhythm that was 10% faster or slower than the infant’s average heart rate recorded from the previous trial. Trials alternated between showing asynchronous and synchronous movement, and the character could appear either on the left or the right of the screen (see Phillips-Silver and Trainor, 2005).
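The asynchronous rhythm described above can be illustrated with a minimal Python sketch. It is not the authors' Matlab algorithm: the function names are hypothetical, and it assumes that "±10% speed" scales the beat rate, so the inter-beat interval is divided by 1.1 or 0.9. The text does not state whether the change was applied to the rate or to the interval.

```python
def asynchronous_interval_ms(mean_rr_ms: float, faster: bool,
                             speed_change: float = 0.10) -> float:
    """Inter-beat interval (ms) for a rhythm 10% faster or slower than the heartbeat.

    Assumption: the speed change is applied to the beat rate, so the
    interval scales with the inverse of the speed factor.
    """
    factor = 1.0 + speed_change if faster else 1.0 - speed_change
    return mean_rr_ms / factor


def beat_onsets_ms(interval_ms: float, duration_ms: float) -> list:
    """Onset times (ms) of evenly spaced beats from 0 up to duration_ms."""
    n_intervals = int(duration_ms // interval_ms)
    return [i * interval_ms for i in range(n_intervals + 1)]


# Example: mean R-R interval taken from the previous trial (hypothetical value).
previous_trial_rr_ms = 420.0
faster_interval = asynchronous_interval_ms(previous_trial_rr_ms, faster=True)
slower_interval = asynchronous_interval_ms(previous_trial_rr_ms, faster=False)
onsets = beat_onsets_ms(faster_interval, duration_ms=5000.0)
```

Synchronous trials need no such computation, because each beat is triggered directly by an incoming TTL pulse.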

Once a steady ECG trace had been achieved, and the eyetracker had been calibrated to accurately detect the infant’s fixations, the computer task was started. Infants were shown the character for a minimum of 5 s, after which the continued presentation of the character was contingent on the infant’s attention. If the infant continued to look at the character, it remained on the screen for a maximum of 20 s. If the infant looked away from the character for longer than three consecutive seconds, the trial was automatically terminated by the computer program and the next trial began. Between each trial, an attractive central fixation clip was presented to re-engage the infants’ attention. The task was terminated when four consecutive trials did not receive sufficient fixation to extend the trial time past the five-second minimum, or when the infant became too tired or fussy to continue.
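The gaze-contingent timing rules above can be sketched as follows. This is an illustrative reading, not the authors' program: the function names and the 100 ms sample period are hypothetical, and the sketch assumes that look-away time accumulated before the 5 s minimum can end the trial as soon as the minimum is reached.

```python
def trial_duration_ms(on_target, sample_ms=100, min_ms=5000,
                      max_ms=20000, look_away_ms=3000):
    """Return the trial duration implied by a stream of gaze samples.

    on_target: iterable of booleans, one per eyetracker sample
               (True = infant is looking at the character).
    """
    elapsed = 0
    away = 0  # length of the current uninterrupted look-away period
    for looking in on_target:
        elapsed += sample_ms
        away = 0 if looking else away + sample_ms
        if elapsed >= max_ms:
            return max_ms  # maximum presentation time reached
        if elapsed >= min_ms and away > look_away_ms:
            return elapsed  # looked away for more than three consecutive seconds
    return elapsed


def task_should_stop(durations_ms, min_ms=5000, run_length=4):
    """True when the last four trials all failed to extend past the minimum."""
    recent = durations_ms[-run_length:]
    return len(recent) == run_length and all(d <= min_ms for d in recent)
```

Termination because the infant became tired or fussy was an experimenter judgement and is not modelled here.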

HEP measurement

The EEG recording was prepared whilst the infant was seated on their caregiver’s lap. A BioSemi elastic electrode cap was fitted onto the infant’s head, into which 64 active Ag-AgCl electrodes were fixed according to the 10/20 system. An external electrode was attached to the infant’s upper left abdomen to provide an ECG trace sufficient to detect the R-wave offline. The EEG and ECG signals were recorded using the BioSemi ActiveTwo system (BioSemi, Amsterdam, The Netherlands), with a sampling rate of 1024 Hz and band-pass filtered online at 0.16–100 Hz. The standard BioSemi references Common Mode Sense (CMS) electrode and Driven Right Leg (DRL) electrode were used.

Once clear EEG and ECG signals were obtained, the infant was repositioned 60 cm in front of a 29-inch computer screen. The caregiver was asked to avoid interacting with the infant or looking at the screen during the task. The task comprised short video clips of females making emotional expressions, following the paradigm of Missana et al. (2014). The standardised clips were sourced from the Montréal Pain and Affective Face Clips (MPAFC [Simon et al., 2008], RRID:SCR_015497) database. Happiness, fear, and anger were selected as emotions of interest. A neutral stimulus was also included, in order to provide a ‘no emotion’ control condition for comparison. Each video was modified to last for three seconds in total; this duration comprised a 1 s period showing the face with a neutral expression, then a 1 s dynamic period when the emotional expression manifested, and a final 1 s freeze-frame of the expression at its peak intensity (Missana et al., 2014). The videos featured four different female models, and were cropped around the models’ faces using a soft circular template to remove extraneous features.

Trials were presented in a pseudo-randomized order, ensuring that trials featuring the same model and emotion were not presented consecutively. Between each trial, infants were shown an attention-getter, consisting of a short audio-visual clip of an engaging, non-social object presented centrally on the screen. When infants’ attention failed to return to the screen for two consecutive trials, the experimenter (positioned behind a curtain partition) initiated a longer video clip with music which gave the infant a short break and an incentive to reorient to the screen. Once the infant’s attention was recaptured, the experimenter manually recommenced the next trial. If the infant showed prolonged inattention (defined as having not attended to four consecutive trials) or fussiness, the task was terminated. A maximum of 48 trials were presented. Stimulus presentation was controlled using Presentation software (Neurobehavioural Systems, Inc.).
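One way to produce such a pseudo-randomized order is rejection sampling, sketched below in Python. The study itself used Presentation software, so this is only an illustration. It assumes the constraint means that the same model-emotion clip never appears twice in a row, and that each of the 16 clips (four models by four conditions) is repeated three times to reach 48 trials; the model labels are hypothetical.

```python
import random
from itertools import product

EMOTIONS = ["happiness", "fear", "anger", "neutral"]
MODELS = ["model_1", "model_2", "model_3", "model_4"]  # hypothetical labels


def pseudo_random_order(repeats=3, seed=None, max_attempts=10000):
    """Shuffle trials until no two consecutive trials show the same clip.

    Assumption: 'same model and emotion' refers to the same
    (model, emotion) pair appearing on consecutive trials.
    """
    rng = random.Random(seed)
    trials = list(product(MODELS, EMOTIONS)) * repeats
    for _ in range(max_attempts):
        rng.shuffle(trials)
        if all(a != b for a, b in zip(trials, trials[1:])):
            return list(trials)
    raise RuntimeError("no valid order found; relax the constraint or retry")


order = pseudo_random_order(seed=1)
```

Rejection sampling is simple and unbiased over the valid orders, at the cost of discarding most shuffles; a stricter constraint (for example, no repeated model or emotion at all) would call for a constructive method instead.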

Testing procedure

Mothers and infants familiarised themselves with the testing room. Infants first performed the iBEAT task. After a short break for feeding and changing if needed, they were then placed on their mother’s lap for the EEG cap and electrodes to be fitted. Once the EEG signal was clear and the infant was ready, they were returned to the high-chair and the Emotion Observation Task was run. The cap and ECG electrodes were then removed, and mother and infant were taken to a comfortable rest area to take a break, feed and change if necessary. The mother then completed a brief questionnaire and further behavioural task (the results of which will be reported in a separate publication) before being thanked, debriefed and given a small gift and monetary compensation for travel costs.

Data pre-processing

iBEAT task

Looking time data were processed offline. Only trials in which there were no movement artefacts in the ECG recording were included in the analysis. Infants were excluded if they did not complete a minimum of eight trials in total (four in the synchronous and four in the asynchronous conditions). In total, seven infants were unable to commence the task, due to the eyetracker being unable to detect the infant’s eyes (N = 5) or the ECG signal having a high level of interference (N = 2). Of the remaining 36 infants who took part in the task, seven infants were excluded due to insufficient artefact-free trials (19%), leaving 29 infants remaining in the final analysis (see Table 1).

Table 1.

Mean number of valid, artefact-free trials in the iBEAT task included in the final sample, for each age group and condition. Standard deviation from the mean indicated in brackets. This table relates to the data displayed in Figure 1.

Average number of trials completed (SD):

| Synchronous | Asynch - slower | Asynch - faster | Total |
|---|---|---|---|
| 7.18 (2.96) | 4.04 (1.62) | 3.82 (1.47) | 15.04 (4.87) |

Total looking time in each trial was calculated as the summed duration of all recorded eyetracker samples falling in the region encompassing the location of the animated character during that trial. Outliers in the looking time data, defined as any trial with a looking time more than two standard deviations away from the group mean for that condition, were then removed from the sample. For the purpose of correlating heartbeat discrimination ability with HEP amplitude, a discrimination score was calculated reflecting the absolute proportion difference between looking times to the synchronous and asynchronous stimuli. An absolute preference was considered important as it reflected an ability to detect cardio-visual synchrony, regardless of whether the infant had a preference towards or against it. This is because there are high inter-individual and intra-individual differences in whether an infant will show a familiarity or a novelty preference, particularly in intermodal looking time procedures (Houston-Price and Nakai, 2004).
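The outlier rule and the discrimination score can be sketched in Python as below. The exact formula for the "absolute proportion difference" is not given in the text; this sketch assumes it is the absolute difference in mean looking time divided by the summed looking time, and it assumes a sample standard deviation for the outlier cut-off. Function names are hypothetical.

```python
from statistics import mean, stdev


def remove_outliers(looking_times_ms, n_sd=2.0):
    """Drop trials more than n_sd standard deviations from the condition mean.

    Assumption: the sample (n - 1) standard deviation is used.
    """
    m = mean(looking_times_ms)
    s = stdev(looking_times_ms)
    return [t for t in looking_times_ms if abs(t - m) <= n_sd * s]


def discrimination_score(sync_ms, async_ms):
    """Absolute proportion difference between two mean looking times.

    Assumption: |async - sync| / (async + sync), which ranges from 0
    (no discrimination) to 1 and ignores the direction of the preference.
    """
    total = sync_ms + async_ms
    if total == 0:
        raise ValueError("looking times sum to zero")
    return abs(async_ms - sync_ms) / total
```

Because the score is unsigned, an infant who prefers the synchronous stimulus and one who prefers the asynchronous stimulus by the same margin receive the same value, which matches the rationale given above.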

HEP measure

Out of the full sample, thirty-nine infants completed the EEG recording. Offline EEG pre-processing was performed using BrainVision Analyzer software (Brain Products, Munich, Germany). The continuous EEG data was filtered offline with a bandpass filter of 0.1–30 Hz (24 dB/oct) and a 50 Hz notch filter. An Independent Component Analysis (ICA) was performed on raw EEG data to remove eye-movement artefacts and Cardiac Field Artefacts (CFA) (Terhaar et al., 2012). Using ICA to remove CFAs has been shown to be highly effective in removing cardiac artefacts from the EEG signal (Terhaar et al., 2012; Park et al., 2014; Luft and Bhattacharya, 2015). Bad channels were replaced using topographic interpolation.

The data was then segmented into a 2000 ms epoch (1000 ms to 3000 ms) time-locked to the onset of the presentation of the emotion stimulus. This period was selected as this was when the observed emotional expression was at peak intensity. The data was then re-segmented to extract the HEPs, with a duration of 600 ms (−200 ms to 400 ms) time-locked to the R-wave. The resulting segments were baseline corrected using an interval from −200 ms to −50 ms to avoid artefacts from the R wave rising edge (Canales-Johnson et al., 2015). Semi-automatic artefact rejection was combined with visual inspection for all participants. Epochs exceeding a voltage step of 200 µV/200 ms, a maximal allowed difference of 250 µV/200 ms, amplitudes exceeding ±250 µV, and low activity less than −0.5 µV/50 ms were rejected from analyses. Infants who completed fewer than 25% of the total trials (fewer than five trials) in each emotion condition were automatically excluded. In total, 7 infants (18%) were excluded from the EEG analyses based on these criteria, which is in line with other infant EEG studies. Segments were then re-referenced to the average and grand averaged (see Table 2).

Table 2.

Mean number of trials, heartbeats and valid HEP segments extracted for each emotion condition (standard deviation in brackets), N = 22. This table relates to data displayed in Figure 3.

| Condition | Happiness | Fear | Anger | Neutral |
|---|---|---|---|---|
| Average Number of Trials | 12.5 (3.6) | 12.4 (3.6) | 12.7 (3.7) | 12.7 (3.6) |
| Average Number of Heartbeats | 46.6 (12.6) | 45.8 (12.3) | 46.3 (12.7) | 47.4 (12.8) |
| Average Number of HEP segments | 22.0 (13.9) | 21.3 (13.9) | 23.8 (13.9) | 21.8 (15.0) |

As a central aim was to investigate whether the observed behavioural cardiac discrimination was related to HEP amplitude during the EEG recording, we restricted EEG analysis to those infants who had valid, complete data from both the EEG task and the iBEATs task (N = 22). For this analysis, HEP segments were collapsed across emotion conditions in order to get an estimate of HEP amplitude independent of specific emotional processing demands.

Statistics

All tests were evaluated against a two-tailed p<0.05 level of significance. For the HEP analysis, a Monte-Carlo random cluster-permutation method was implemented in FieldTrip. This method corrects for multiple comparisons in space and time (Maris and Oostenveld, 2007). Using this method, all samples that showed a significant (p<0.05) relationship with our independent variable were clustered according to spatiotemporal adjacencies, and cluster-level statistics were calculated by taking a sum of the t-values for each cluster. A Monte-Carlo permutation method then generated a p-value by calculating the probability that this cluster-level statistic could be achieved by chance, by randomly shuffling and resampling the independent variable structure a large number of times (2000 repetitions) (Maris and Oostenveld, 2007). Spatiotemporal clusters that had a resulting Monte-Carlo corrected p-value of less than the critical alpha level of 0.05 were interpreted as ‘significant’.
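The logic of the cluster-permutation procedure can be illustrated with a simplified sketch. This is not the FieldTrip implementation used in the study: it considers a single channel (clusters in time only), tests only the largest cluster, and takes the critical t-value as an argument supplied by the caller. All function names are hypothetical.

```python
import math
import random


def pearson_t(x, y):
    """t-value of the Pearson correlation between x and y."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    r = sxy / math.sqrt(sxx * syy)
    r = max(min(r, 0.999999), -0.999999)  # avoid division by zero
    return r * math.sqrt((n - 2) / (1 - r * r))


def max_cluster_stat(data, scores, t_crit):
    """Largest absolute sum of t-values over runs of adjacent time points
    whose t-value exceeds t_crit with the same sign.

    data   : one list of amplitudes per subject (subjects x time points)
    scores : one behavioural score per subject
    """
    best, run, sign = 0.0, 0.0, 0
    for i in range(len(data[0])):
        t = pearson_t(scores, [subj[i] for subj in data])
        s = 1 if t > t_crit else -1 if t < -t_crit else 0
        if s != 0 and s == sign:
            run += t                      # extend the current cluster
        else:
            run, sign = (t, s) if s != 0 else (0.0, 0)
        best = max(best, abs(run))
    return best


def cluster_permutation_p(data, scores, t_crit, n_perm=2000, seed=0):
    """Monte-Carlo p-value for the largest observed cluster."""
    rng = random.Random(seed)
    observed = max_cluster_stat(data, scores, t_crit)
    shuffled = list(scores)
    exceed = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)             # break the score-to-subject pairing
        if max_cluster_stat(data, shuffled, t_crit) >= observed:
            exceed += 1
    return observed, exceed / n_perm
```

Comparing the observed cluster against the maximum cluster statistic from each permutation is what controls the family-wise error rate across time points: a single test is performed on the cluster-level statistic rather than one test per sample.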

For both the iBEATs task and the HEP measurement, data collection was not performed blind to the experimental condition to which each trial belonged, due to the requirements for stimulus and cardiac monitoring during the task. However, for HEP analysis, experimental condition was removed from the data after data collection and all EEG pre-processing was performed blind to the conditions of the experiment. Condition was revealed at final statistical analysis so that specific emotions could be compared to the neutral condition. Both reported tasks had within-subjects designs involving no group allocation; therefore, blinding to any between-subject conditions and randomization to such conditions was not applicable.

Acknowledgements

This study was supported by a BIAL Foundation Grant for Research Project 087/2014 to LM and MT and the European Research Council [ERC-2010-StG-262853] under the FP7 to MT.

Funding Statement

The funders had no role in study design, data collection and interpretation, or the decision to submit the work for publication.

Funding Information

This paper was supported by the following grants:

Fundação Bial 87/14 to Lara Maister, Manos Tsakiris.

H2020 European Research Council ERC-2010-StG-262853 to Manos Tsakiris.

Additional information

Competing interests

The authors declare that no competing interests exist.

Author contributions

LM, Conceptualization, Data curation, Formal analysis, Supervision, Funding acquisition, Methodology, Writing—original draft, Writing—review and editing.

TT, Data curation, Investigation, Methodology.

MT, Conceptualization, Supervision, Funding acquisition, Writing—review and editing.

Ethics

Human subjects: Informed consent was obtained from the caregivers of all infants involved in the study. Informed consent was also obtained from the caregiver of the infant featured in Figure 1A. All methods were explicitly approved by the Royal Holloway Department of Psychology Ethics Committee, reference code 2015/050R1.

References

- Allen M, Frank D, Schwarzkopf DS, Fardo F, Winston JS, Hauser TU, Rees G. Unexpected arousal modulates the influence of sensory noise on confidence. eLife. 2016;5:e18103. doi: 10.7554/eLife.18103.

- Aspell JE, Heydrich L, Marillier G, Lavanchy T, Herbelin B, Blanke O. Turning body and self inside out: visualized heartbeats alter bodily self-consciousness and tactile perception. Psychological Science. 2013;24:2445–2453. doi: 10.1177/0956797613498395.

- Brener J, Liu X, Ring C. A method of constant stimuli for examining heartbeat detection: comparison with the Brener-Kluvitse and Whitehead methods. Psychophysiology. 1993;30:657–665. doi: 10.1111/j.1469-8986.1993.tb02091.x.

- Canales-Johnson A, Silva C, Huepe D, Rivera-Rei Á, Noreika V, Garcia MC, Silva W, Ciraolo C, Vaucheret E, Sedeño L, Couto B, Kargieman L, Baglivo F, Sigman M, Chennu S, Ibáñez A, Rodríguez E, Bekinschtein TA. Auditory Feedback differentially modulates behavioral and neural markers of objective and subjective Performance when tapping to your heartbeat. Cerebral Cortex. 2015;25:4490–4503. doi: 10.1093/cercor/bhv076.

- Couto B, Adolfi F, Velasquez M, Mesow M, Feinstein J, Canales-Johnson A, Mikulan E, Martínez-Pernía D, Bekinschtein T, Sigman M, Manes F, Ibanez A. Heart evoked potential triggers brain responses to natural affective scenes: a preliminary study. Autonomic Neuroscience. 2015;193:132–137. doi: 10.1016/j.autneu.2015.06.006.

- Craig AD. How do you feel--now? the anterior insula and human awareness. Nature Reviews Neuroscience. 2009;10:59–70. doi: 10.1038/nrn2555.

- Critchley HD, Harrison NA. Visceral influences on brain and behavior. Neuron. 2013;77:624–638. doi: 10.1016/j.neuron.2013.02.008.

- Damasio AR. Self Comes to Mind: Constructing the Conscious Brain. Heineman; 2010.

- Dirlich G, Dietl T, Vogl L, Strian F. Topography and morphology of heart action-related EEG potentials. Electroencephalography and Clinical Neurophysiology/Evoked Potentials Section. 1998;108:299–305. doi: 10.1016/S0168-5597(98)00003-3.

- Evrard HC, Logothetis NK, Craig AD. Modular architectonic organization of the insula in the macaque monkey. Journal of Comparative Neurology. 2014;522:64–97. doi: 10.1002/cne.23436.

- Filippetti ML, Tsakiris M. Heartfelt embodiment: changes in body-ownership and self-identification produce distinct changes in interoceptive accuracy. Cognition. 2017;159:1–10. doi: 10.1016/j.cognition.2016.11.002.

- Fogel A. Embodied awareness: neither implicit nor explicit, and not necessarily nonverbal. Child Development Perspectives. 2011;5:183–186. doi: 10.1111/j.1750-8606.2011.00177.x.

- Fotopoulou A, Tsakiris M. Mentalizing homeostasis: the social origins of interoceptive inference. Neuropsychoanalysis. 2017;19:3–28. doi: 10.1080/15294145.2017.1294031.

- Fukushima H, Terasawa Y, Umeda S. Association between interoception and empathy: evidence from heartbeat-evoked brain Potential. International Journal of Psychophysiology. 2011;79:259–265. doi: 10.1016/j.ijpsycho.2010.10.015.

- Garfinkel SN, Critchley HD. Interoception, emotion and brain: new insights link internal physiology to social behaviour. Commentary on: "Anterior insular cortex mediates bodily sensibility and social anxiety" by Terasawa et al. (2012). Social Cognitive and Affective Neuroscience. 2013;8:231–234. doi: 10.1093/scan/nss140.

- Gu X, FitzGerald TH. Interoceptive inference: homeostasis and decision-making. Trends in Cognitive Sciences. 2014;18:269–270. doi: 10.1016/j.tics.2014.02.001.

- Harshaw C. Alimentary epigenetics: a Developmental Psychobiological Systems View of the perception of Hunger, Thirst and Satiety. Developmental Review. 2008;28:541–569. doi: 10.1016/j.dr.2008.08.001.

- Houston-Price C, Nakai S. Distinguishing novelty and familiarity effects in infant preference procedures. Infant and Child Development. 2004;13:341–348. doi: 10.1002/icd.364.

- Kern M, Aertsen A, Schulze-Bonhage A, Ball T. Heart cycle-related effects on event-related potentials, spectral power changes, and connectivity patterns in the human ECoG. NeuroImage. 2013;81:178–190. doi: 10.1016/j.neuroimage.2013.05.042.

- Khalsa SS, Lapidus RC. Can Interoception improve the pragmatic search for biomarkers in Psychiatry? Frontiers in Psychiatry. 2016;7:1–19. doi: 10.3389/fpsyt.2016.00121.

- Kobiella A, Grossmann T, Reid V, Striano T. The discrimination of angry and fearful facial expressions in 7-month-old infants: an event-related potential study. Cognition & Emotion. 2008;22:134–146. doi: 10.1080/02699930701394256.

- Koch A, Pollatos O. Cardiac sensitivity in children: sex differences and its relationship to parameters of emotional processing. Psychophysiology. 2014;51:932–941. doi: 10.1111/psyp.12233.

- Luft CD, Bhattacharya J. Aroused with heart: modulation of heartbeat evoked potential by arousal induction and its oscillatory correlates. Scientific Reports. 2015;5:15717. doi: 10.1038/srep15717.

- Maris E, Oostenveld R. Nonparametric statistical testing of EEG- and MEG-data. Journal of Neuroscience Methods. 2007;164:177–190. doi: 10.1016/j.jneumeth.2007.03.024.

- Missana M, Grigutsch M, Grossmann T. Developmental and individual differences in the neural processing of dynamic expressions of pain and anger. PLoS One. 2014;9:e93728. doi: 10.1371/journal.pone.0093728.

- Mundy P, Jarrold W. Infant joint attention, neural networks and social cognition. Neural Networks. 2010;23:985–997. doi: 10.1016/j.neunet.2010.08.009.

- Murphy J, Brewer R, Catmur C, Bird G. Interoception and psychopathology: a developmental neuroscience perspective. Developmental Cognitive Neuroscience. 2017;23:45–56. doi: 10.1016/j.dcn.2016.12.006.

- Park HD, Correia S, Ducorps A, Tallon-Baudry C. Spontaneous fluctuations in neural responses to heartbeats predict visual detection. Nature Neuroscience. 2014;17:612–618. doi: 10.1038/nn.3671.

- Phillips-Silver J, Trainor LJ. Feeling the beat: movement influences infant rhythm perception. Science. 2005;308:1430. doi: 10.1126/science.1110922.

- Pollatos O, Schandry R. Accuracy of heartbeat perception is reflected in the amplitude of the heartbeat-evoked brain potential. Psychophysiology. 2004;41:476–482. doi: 10.1111/1469-8986.2004.00170.x.

- Quattrocki E, Friston K. Autism, oxytocin and interoception. Neuroscience & Biobehavioral Reviews. 2014;47:410–430. doi: 10.1016/j.neubiorev.2014.09.012.

- Schandry R. Heart beat perception and emotional experience. Psychophysiology. 1981;18:483–488. doi: 10.1111/j.1469-8986.1981.tb02486.x.

- Seth AK. Interoceptive inference, emotion, and the embodied self. Trends in Cognitive Sciences. 2013;17:565–573. doi: 10.1016/j.tics.2013.09.007.

- Simon D, Craig KD, Gosselin F, Belin P, Rainville P. Recognition and discrimination of prototypical dynamic expressions of pain and emotions. Pain. 2008;135:55–64. doi: 10.1016/j.pain.2007.05.008.

- Suzuki K, Garfinkel SN, Critchley HD, Seth AK. Multisensory integration across exteroceptive and interoceptive domains modulates self-experience in the rubber-hand illusion. Neuropsychologia. 2013;51:2909–2917. doi: 10.1016/j.neuropsychologia.2013.08.014.

- Terhaar J, Viola FC, Bär KJ, Debener S. Heartbeat evoked potentials mirror altered body perception in depressed patients. Clinical Neurophysiology. 2012;123:1950–1957. doi: 10.1016/j.clinph.2012.02.086.

- Tsakiris M, Tajadura-Jiménez A, Costantini M. Just a heartbeat away from one's body: interoceptive sensitivity predicts malleability of body-representations. Proceedings of the Royal Society B: Biological Sciences. 2011;278:2470–2476. doi: 10.1098/rspb.2010.2547.