Abstract

Brain-computer interfacing (BCI) is a technology that has the potential to improve patient engagement in robot-assisted rehabilitation therapy. For example, movement intention reduces mu (8-13 Hz) oscillation amplitude over the sensorimotor cortex, a phenomenon referred to as event-related desynchronization (ERD). Initial BCI-enhanced robotic therapy studies have used ERD in an ERD-contingent assistance paradigm, in which the robot provides assistance for movement when ERD is detected. Here we investigated how ERD changed as a function of audio-visual stimuli, overt movement from the participant, and robotic assistance. Twelve unimpaired subjects used the FINGER robotic exoskeleton to play, with their fingers, a computer game designed for rehabilitation therapy. In the game, the participant and robot matched movement timing to audio-visual stimuli in the form of notes approaching a target on the screen, set to the consistent beat of popular music. The audio-visual stimulation of the game alone did not cause ERD, before or after training. In contrast, overt movement by the subject caused ERD, whether or not the robot assisted the finger movement. Notably, ERD was also present when the subjects remained passive and the robot moved their fingers to play the game. This ERD occurred in anticipation of the passive finger movement, with onset timing similar to that in the overt movement conditions. These results demonstrate that ERD can be contingent on expectation of robotic assistance; that is, the brain generates an anticipatory ERD in expectation of a robot-imposed but predictable movement. This caveat should be considered in designing BCIs for enhancing patient effort in robotically assisted therapy.

Index Terms: Rehabilitation robotics, brain-computer interface (BCI), man-machine systems

I. Introduction

ROBOTIC devices, such as powered exoskeletons, have demonstrated utility for rehabilitation therapy of the upper extremity for individuals with stroke and other neurologic impairments [1-3]. In the most commonly used paradigm, the robotic therapy device physically assists the patient in completing repetitive desired movements that are pre-specified by a computer game that provides audio-visual cues [1,4]. Physical assistance is thought to enhance proprioceptive input, which may aid neural reorganization [5]. In past studies, robotic therapy has been shown to match or better the results obtainable with conventional rehabilitation movement therapy [1,6,7].

Research suggests that an important factor for ensuring the effectiveness of robotic therapy is active effort by the patient [1-6]. A key study showed that repetitive robotic movement of the upper extremity with a passive stroke patient has little therapeutic effect compared to robotic therapy in which the patient and robot work together [2]. Another study found no improvements in clinical movement scales following continuous passive range of motion therapy of the stroke-impaired arm [7]. It has also been shown that physically assisting movement with a robot can trigger slacking by the motor system, an automatic and subconscious reduction in patient effort [8-11]. Thus, because robotic assistance may in some cases inherently encourage slacking, it is important when designing robotic therapy systems to develop methods that encourage patient engagement and effort during therapy.

Electroencephalography (EEG)-based brain-computer interface (BCI) systems have been proposed for enhancing robot-assisted rehabilitation training [12-15]. It is not yet clear how best to combine the strengths of these two technologies, but one rationale focuses on promoting patient engagement [2, 8]: a BCI system could detect movement intention, and the robotic therapy system could be programmed to provide assistance contingent on the sensed intention. For this purpose, the mu and beta frequency bands (8-12 and 13-35 Hz, respectively) have been suggested for identifying brain states associated with movement intention [12-22]. Mu and beta sensorimotor rhythm (SMR) oscillatory amplitude is known to attenuate during preparation for overt movement or motor imagery, a phenomenon referred to as event-related desynchronization (ERD) [22].

ERD has been used successfully as a control signal for BCI applications, including, recently, robot-assisted therapy [12, 15, 23-26]. However, use of ERD as a contingent control signal has not been shown to decisively improve motor outcomes of robotic therapy after stroke. One study that employed a BCI-contingent orthosis movement paradigm found no significant improvement in clinical scales of hand function at the end of the study [12]. Another, larger study found modest improvements in motor outcome measures compared to a sham control group who received randomly imposed (passive) movements [15].

It is possible that ERD is not tied to movement intention alone, but may instead result from sensory feedback associated with movement, whether active or passive. Indeed, previous research has suggested that ERD occurs during passive movements driven by a robotic orthosis or an experimenter, similar to when the subject performs overt movement or motor imagery [13, 15, 17-20]. For example, Alegre et al. [20] studied beta band desynchronization in six healthy volunteers during passive wrist extensions performed with the help of a pulley system at random intervals. The passive movements were found to induce ERD after movement onset, and the authors concluded that proprioceptive inputs induce ERD similar to that observed during voluntary movements. Another study analyzed beta ERD during passive and attempted foot movements in unimpaired subjects and in subjects with paraplegia after spinal cord injury (SCI) [17]. Passive motions were imposed by a custom foot-release mechanism at eight-second intervals. A significant ERD was found to occur ∼500 ms before movement onset in the unimpaired participants; in this case, ERD anticipated predictable passive movement of the foot. These findings suggest that ERD is not solely related to the intention to move, but is also influenced by proprioceptive input and/or the expectation of imposed movement. They therefore have implications for the use of ERD as a control signal for detecting patient motor engagement during robot-assisted therapy. If expectation of, and preparation for, the somatosensation produced by a predictable passive motion is sufficient to trigger ERD, the user might not need to engage in overt movement at all to produce the ERD trigger signal and the contingent imposed robotic movement.

The purpose of this study was to determine the effect of passive movement and subject effort on sensorimotor ERD within the context of a robot-assisted therapy paradigm we previously developed for retraining finger function and individuation after stroke [27]. Robotic assistance and motor activity were treated as binary categorical design factors in a 2² factorial experiment, resulting in four primary conditions: A) active subject/passive robot, B) active subject/active robot, C) passive subject/passive robot, and D) passive subject/active robot (see Table I). Audio-visual stimulation without subject or robot movement was also tested to identify any changes in ERD that might be elicited by the robotic-therapy computer gaming paradigm itself.

Table I. Factor Combinations corresponding to the four experimental conditions.

| | Robot: passive | Robot: active |
|---|---|---|
| Participant: active | A | B |
| Participant: passive | C | D |

II. Methods

A. Experimental Setup

The Finger INdividuating Grasp Exercise Robot, or “FINGER”, described at length in [27], was used as the robotic therapy device in this study (see Fig. 1). This robot assists naturalistic grasping movements of the index and middle fingers, either together or individually. Robot assistance used a position feedback controller to follow a minimum-jerk trajectory that began 500 ms before the note reached the target. The robot spent 500 ms providing flexion assistance, followed by 500 ms of extension assistance. Finger movements were mapped to corresponding cues on a screen in front of the participant, in a custom video game similar to Guitar Hero© and set to popular music. The gaming environment and user interface software were tailored specifically for this study. We previously found a therapeutic benefit to playing a similar version of the game after stroke [28].

Fig. 1.

Experimental setup. A user is shown using the robot to play the game used in this study. The Finger INdividuating Grasp Exercise Robot (FINGER) appears in the foreground. FINGER uses two stacked, single-degree-of-freedom eight-bar mechanisms designed to assist the user in naturalistic grasping trajectories for either or both of the index and middle fingers. FINGER is backdriveable. Robotic feedback gains, which determine the robotic forces, were held constant in the active robot conditions. FINGER did not provide any assistance in the passive robot conditions.

In the game, a note appears on screen and moves down, reaching a target near the bottom of the screen two seconds later. Notes were timed to reach the target on the beat of the music (“Blackbird” by The Beatles, 94 bpm, 62 notes). Notes occurred at most once every four beats, giving a minimum inter-note period of 2.55 seconds; the maximum note spacing was eight beats (5.11 seconds).
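The note-spacing figures follow directly from the tempo. A minimal sketch of the arithmetic; the function name is illustrative and not part of the game software:

```python
def beats_to_seconds(n_beats: int, bpm: float = 94.0) -> float:
    """Duration of n_beats at the given tempo (beats per minute)."""
    return n_beats * 60.0 / bpm

# Minimum and maximum inter-note periods for "Blackbird" at 94 bpm.
min_period = beats_to_seconds(4)  # notes at most once every four beats
max_period = beats_to_seconds(8)  # notes at least once every eight beats
print(f"{min_period:.2f} s, {max_period:.2f} s")  # prints "2.55 s, 5.11 s"
```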

During the active portions of the experiment, the participant attempted to match the speed and timing of the note, completing the flexion portion of the grasping trajectory just as the note reached the target. An on-screen marker represented the position of the robot. If the participant attained the desired amount of flexion and accurately matched the timing of the note, the game counted a “hit” and provided visual feedback in the form of a fire graphic on the target and an increase in a progress bar (see Fig. 2).

Fig. 2.

The gaming environment used in this experiment. Notes streamed down the screen in synchrony with popular music. The user tried to match a finger flexion to the timing of the note crossing the target at the bottom of the screen. Fire appeared when a note was hit, and a score bar at the top of the screen gave visual performance feedback. Green notes indicated a desired index finger movement, yellow notes a middle finger movement, and blue notes indicated that both fingers should grasp together. Orange and red notes were not used. Red spheres above the three virtual “strings” on the right were mapped to actual robot finger positions in real time, and were intended to be matched to note timing on screen. In this screenshot, the user had just executed a hit with the yellow note/middle finger. A second note has just appeared on screen, and will reach the target 2 seconds later.

In some experimental conditions, the robot assisted the participant in completing the movement with the correct finger at the correct time. More details of the assistive control algorithm can be found in [27]; in brief, a position feedback controller guided the finger along a minimum-jerk trajectory. Flexion assistance followed a 500 ms minimum-jerk trajectory, and extension assistance followed a second 500 ms minimum-jerk trajectory, with no pause in between.
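The reference trajectory described above can be sketched with the standard fifth-order minimum-jerk profile. This is a minimal sketch, assuming positions normalized to a fraction of the finger's range of motion and a 1 kHz update rate; the function names are hypothetical, and the position feedback controller from [27] is not reproduced here:

```python
import numpy as np

def min_jerk(x0, xf, duration, fs=1000.0):
    """Minimum-jerk position profile from x0 to xf over `duration` seconds."""
    n = int(round(duration * fs))
    tau = np.arange(n + 1) / n                  # normalized time, 0..1 inclusive
    s = 10 * tau**3 - 15 * tau**4 + 6 * tau**5  # zero velocity/acceleration at both ends
    return x0 + (xf - x0) * s

def flex_extend_reference(flexion=1.0, t_flex=0.5, t_ext=0.5, fs=1000.0):
    """500 ms flexion (0 -> flexion) immediately followed by 500 ms extension."""
    flex = min_jerk(0.0, flexion, t_flex, fs)
    ext = min_jerk(flexion, 0.0, t_ext, fs)
    # Drop the duplicated sample at the flexion/extension boundary (no pause).
    return np.concatenate([flex, ext[1:]])

ref = flex_extend_reference()
# Time relative to the note reaching the target: assistance starts at -0.5 s,
# so peak flexion coincides with t = 0.
t = np.arange(ref.size) / 1000.0 - 0.5
```

The minimum-jerk form is a common choice because it yields smooth, bell-shaped velocity profiles resembling natural reaching and grasping.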

EEG data were collected from 256 electrodes, sampled at 1 kHz, using the EGI Geodesic EEG System 400. Electrode impedances were kept below 100 kΩ. Raw EEG data were exported for offline analysis in Matlab. Marker timing data were captured from the gaming environment. Robot position, velocity, and controller gains were also recorded at 1 kHz.

B. Participants

Twelve unimpaired participants took part in this study (6 male; 6 female). All participants provided written informed consent, and the study was approved by the Institutional Review Board of UC Irvine. A prerequisite for study inclusion was naivety to the experiment and gaming environment. All participants were considered unimpaired and had no history of neurologic injury. In a study of EEG during shoulder-elbow movements, both unimpaired participants and stroke survivors exhibited significantly greater ERD intensity when using their non-dominant arm than when using their dominant arm [14]. To maximize the ERD signal, all participants used their non-dominant hand in the robot. All participants were right-handed and thus used their left hand.

C. Experimental Design

We used a two-factor, two-level (2²) factorial design (Table I). The two factors were robot assistance (on or off) and overt motor activity (on or off). We use the term “overt” to refer to willed, voluntary finger movement by the subject. In the factorial part of the experiment, each subject experienced each of the four conditions, labeled A through D in Table I. We also tested the effect of audio-visual stimulation alone before and after the four conditions; this condition was identical to condition C of the factorial block (Table I).

The subjects' fingers were first fastened to the robot while they sat comfortably in front of the screen. The gaming environment was loaded and the test song played while the participant watched. Participants were instructed to remain as still as possible during this audio-visual only condition, and the robot did not provide any assistance. At the conclusion of the experiment, the participant completed this same task again.

After the initial audio-visual only session, the participants were allowed to familiarize themselves with the robot and gaming environment during a short training session. Robot assistance was included during the training period, but limited to small forces that could not successfully complete the movement without the overt movement of the subject. Subjects were instructed to actively participate in the motor task to the best of their ability. All participants trained on the same song (“Gold on the Ceiling” by The Black Keys, 104 notes) until both the participant and experimenter felt comfortable in the participant's ability to understand the gaming environment and perform to an acceptable level. All participants exceeded an 80% note “hit” rate in the gaming environment during the training period. All participants gained proficiency within three test songs, and most within two.

The factorial part of the experimental session was divided into four runs, one for each experimental condition. The order of conditions across participants was randomized using a Williams design Latin square to minimize first-order carryover effects. The test proctor explained each factor combination to the participant using standardized scripts. Participants were allowed to ask clarification questions regarding their role in the current factor combination, but were not told why the combination was being tested. In each condition, participants completed one song consisting of 62 notes (trials). The song, “Blackbird” by The Beatles, was the same for all participants and all conditions.
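A Williams design for an even number of conditions can be built from one balanced first row and its cyclic shifts, so that every condition immediately follows every other condition exactly once. The sketch below is an illustration, not the authors' script; in particular, assigning the 12 participants to the four sequences cyclically (three per sequence) is an assumption, since the exact assignment is not reported:

```python
def williams_square(n):
    """Williams Latin square for an even number n of conditions (0..n-1)."""
    if n % 2:
        raise ValueError("a single Williams square requires an even number of conditions")
    # First row: 0, 1, n-1, 2, n-2, ... (alternate from the low and high ends).
    first = [0]
    lo, hi = 1, n - 1
    while len(first) < n:
        if len(first) % 2 == 1:
            first.append(lo)
            lo += 1
        else:
            first.append(hi)
            hi -= 1
    # Each subsequent row is the first row shifted by r (mod n).
    return [[(c + r) % n for c in first] for r in range(n)]

labels = "ABCD"  # conditions from Table I
square = williams_square(4)
orders = ["".join(labels[c] for c in row) for row in square]
# Hypothetical assignment: participant p receives sequence p mod 4.
schedule = [orders[p % 4] for p in range(12)]
print(orders)  # ['ABDC', 'BCAD', 'CDBA', 'DACB']
```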

III. Data Analysis

Raw EEG data were recorded using the EGI Geodesic EEG System 400 with 256 electrodes at 1 kHz and exported for offline analysis in Matlab (7.10.0, MathWorks, Inc., Natick, MA). The continuous EEG signal was detrended and low-pass filtered at 50 Hz. A surface Laplacian filter was then applied to reduce the effects of volume conduction, using 18th-order Legendre polynomials and a smoothing factor of 1 × 10⁻⁶. The data were then manually screened for artifacts such as eye blinks or muscle activity in the neck or face. Trial-channel combinations exhibiting artifacts were marked for removal from later analysis.
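The first two preprocessing steps can be sketched for a single channel as follows. The detrending method and filter design are not specified above, so a least-squares linear detrend and a zero-phase Hamming-windowed-sinc FIR low-pass are assumptions, and the function name is hypothetical; the surface Laplacian step is not shown:

```python
import numpy as np

def detrend_lowpass(x, fs=1000.0, cutoff=50.0, numtaps=201):
    """Remove a linear trend, then apply a zero-phase windowed-sinc low-pass FIR."""
    if numtaps % 2 == 0:
        raise ValueError("numtaps must be odd for a zero-phase 'same' convolution")
    n = np.arange(x.size)
    slope, intercept = np.polyfit(n, x, 1)            # least-squares linear trend
    x = x - (slope * n + intercept)
    m = np.arange(numtaps) - (numtaps - 1) / 2        # symmetric tap indices
    h = 2 * cutoff / fs * np.sinc(2 * cutoff / fs * m)  # ideal low-pass impulse response
    h *= np.hamming(numtaps)                          # taper to reduce stopband ripple
    h /= h.sum()                                      # unity gain at 0 Hz
    return np.convolve(x, h, mode="same")             # odd, symmetric kernel -> no phase shift
```

A zero-phase filter is used here so that filtering does not shift the timing of ERD relative to the task markers.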

For time-frequency decomposition, a Morlet wavelet transformation was applied. The wavelets used 5 cycles at the lowest frequency (5 Hz), increasing to 12 cycles at the highest frequency (40 Hz). Wavelet analysis was applied in 1 Hz steps, resulting in 36 distinct frequencies. The wavelet transformation was performed on the continuous EEG data so that edge effects would not contaminate the trial epochs. Trials were then segmented into 3000 ms epochs surrounding the note-target time, with each epoch starting 1500 ms before the note reached the target. Trial-channel combinations marked for removal during data screening were removed at this point.
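The wavelet step and epoching can be sketched for one channel as follows. This is an illustration under stated assumptions: the number of cycles is assumed to increase linearly from 5 to 12 across frequencies (the spacing is not reported), wavelets are truncated at ±4 standard deviations, and the function names are hypothetical:

```python
import numpy as np

def morlet_magnitude(signal, fs=1000.0, freqs=None, n_cycles=None):
    """Magnitude of complex Morlet wavelet convolution, one row per frequency."""
    if freqs is None:
        freqs = np.arange(5, 41)                   # 5-40 Hz in 1 Hz steps (36 frequencies)
    if n_cycles is None:
        n_cycles = np.linspace(5, 12, len(freqs))  # assumption: linear increase in cycles
    out = np.empty((len(freqs), signal.size))
    for k, (f, nc) in enumerate(zip(freqs, n_cycles)):
        sigma_t = nc / (2 * np.pi * f)             # Gaussian width in seconds
        half = int(np.ceil(4 * sigma_t * fs))      # truncate at +/- 4 standard deviations
        t = np.arange(-half, half + 1) / fs        # odd length keeps 'same' output centred
        envelope = np.exp(-t**2 / (2 * sigma_t**2))
        wavelet = np.exp(2j * np.pi * f * t) * envelope / envelope.sum()
        out[k] = np.abs(np.convolve(signal, wavelet, mode="same"))
    return np.asarray(freqs), out

def epoch(tf, target_samples, pre=1500, length=3000):
    """Cut continuous (n_freqs, n_times) data into (n_trials, n_freqs, length) epochs.

    Each epoch starts `pre` samples (1500 ms at 1 kHz) before the note-target sample.
    """
    return np.stack([tf[:, s - pre:s - pre + length] for s in target_samples])
```

Convolving the whole continuous recording first and cutting epochs afterwards keeps the wavelet edge artifacts at the ends of the recording rather than at the ends of every trial.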

Power within a frequency bin was calculated as the magnitude of the complex coefficients resulting from the wavelet transformation:

$$P_f(t) = \left| \Psi(t) \otimes S(t) \right| \tag{1}$$

where $P_f$ is the power at a given frequency $f$, $\Psi$ is the Morlet wavelet, and $S$ is the EEG signal; all are functions of time $t$. The symbol $|\cdot|$ denotes the complex modulus, and $\otimes$ denotes complex convolution. Power was then normalized using the decibel normalization method outlined in [29], described by:

$$\mathrm{dB}_f(t) = 10 \log_{10}\left( \frac{P_f(t)}{\overline{P}_f} \right) \tag{2}$$

where $\overline{P}_f$ is the scalar mean power at frequency $f$ across the baseline period, defined as the initial 250 ms of each note (trial) epoch, and $t$ and $f$ denote time and frequency points, respectively. The baseline period began 500 ms after the note first appeared on screen, i.e., 1500 ms before the note reached the target, at the start of the epoch. It ended approximately 800 ms before movement onset and 1250 ms before the note reached the target (end of flexion).
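Equation (2) can be sketched as below. With 1 kHz sampling and epochs starting 1500 ms before the note-target time, the first 250 samples span -1500 to -1250 ms, matching the baseline described above. Whether the baseline was computed per trial or on trial-averaged power is an assumption left to the caller: the hypothetical function normalizes along the last (time) axis of whatever array it receives:

```python
import numpy as np

def db_normalize(power, baseline_samples=250):
    """Decibel change of power relative to its mean over the first baseline samples.

    `power` has time on its last axis, e.g. (n_freqs, n_times) for a trial-averaged
    map or (n_trials, n_freqs, n_times) for per-trial baselines; the baseline mean
    is computed separately for each frequency (and trial).
    """
    baseline = power[..., :baseline_samples].mean(axis=-1, keepdims=True)
    return 10.0 * np.log10(power / baseline)
```

A halving of power relative to baseline corresponds to about -3.01 dB, which is the scale on which the ERD and ERS values below are reported.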

In previous studies that explored the effects of active and passive movements on ERD, maximum modulation of sensorimotor rhythm appeared in electrodes overlying the right and left motor cortices, locations C4 and C3, respectively [13, 20, 30]. During overt hand movement, as well as motor imagery and passive movements, SMR modulation appeared primarily over contralateral M1, although bilateral modulation was also seen in some cases. Channel selection for this experiment was guided by these results (i.e., electrodes over C4). We then verified the topography of SMR modulation in the participants of this study. In an intermediate analysis, the topographical location of ERD maxima confirmed SMR modulation in contralateral motor cortex near C4, as well as lesser activity near CPz in several subjects. A combination of electrodes overlying C4 and CPz that exhibited time-locked, movement-related modulation was selected for analysis (see Fig. 3).

Fig. 3.

Topographical selection of channels for inclusion in processing. Participants used their left (non-dominant) hand in the movement task. Channel selection included the contralateral sensorimotor cortex surrounding C4, as well as central areas near Cz and CPz.

A 2×2 ANOVA was conducted on the maximum pre-movement decibel-normalized desynchronization value (in dB) and on the maximum post-movement decibel-normalized synchronization value (in dB), with the significance level set at p < 0.05. Robot assistance and overt movement (i.e., active movement by the subject) were treated as binary categorical design factors.
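Because every subject completed all four conditions, one way to compute this 2×2 ANOVA is as a repeated-measures design. Treating it as within-subject is an assumption, since the ANOVA type is not stated. With two levels per factor, each effect's F(1, n-1) equals the squared one-sample t statistic of a per-subject contrast score, which gives a compact sketch (function name hypothetical):

```python
from statistics import mean, variance

def rm_anova_2x2(A, B, C, D):
    """F statistics for a 2x2 within-subject design via per-subject contrasts.

    A: active subject/passive robot, B: active subject/active robot,
    C: passive subject/passive robot, D: passive subject/active robot
    (one value per subject, e.g. peak ERD in dB).
    """
    n = len(A)
    rows = list(zip(A, B, C, D))
    contrasts = {
        "robot": [((b + d) - (a + c)) / 2 for a, b, c, d in rows],
        "overt": [((a + b) - (c + d)) / 2 for a, b, c, d in rows],
        "interaction": [((b - a) - (d - c)) / 2 for a, b, c, d in rows],
    }
    # F = squared one-sample t of the contrast = n * mean^2 / sample variance.
    return {name: (n * mean(x) ** 2 / variance(x), (1, n - 1))
            for name, x in contrasts.items()}
```

The p-value for each effect is the upper tail of the F(1, n-1) distribution, for example `scipy.stats.f.sf(F, 1, n - 1)`.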

IV. Results

None of the 12 subjects exhibited ERD during the audio-visual only condition presented at the beginning and end of the experiment (see Fig. 6). Consistent with this, no ERD was seen in the audio-visual only condition in the factorial part of the experiment (passive subject/passive robot).

Fig. 6.

Group-level mean power amplitude time-frequency map in the audio-visual only condition. Results are shown at pre-exam and post-exam tests. No significant power modulation was recorded in either test. Time = 0 corresponds to the moment when the moving note crossed the target location.

In contrast, ERD was observed in the three conditions that involved physical movement of the fingers, including the condition in which the subject remained passive and the robot moved the subject's fingers (see Fig. 4). In all, 10 of 12 subjects exhibited ERD during active subject/passive robot movements, 11 of 12 during active subject/active robot movements, and 10 of 12 during passive subject/active robot movements. All 12 subjects exhibited ERD in at least one of the physical movement conditions. There was also evidence of event-related synchronization (ERS) in these three conditions, seen as a rebound in power at the end of the initial finger flexion, approximately 200 ms after movement offset.

Fig. 4.

Mean mu band (8-13 Hz) power across subjects. Time = 0 corresponds to the moment when the moving note crossed the target location. Thin blue traces represent individual participant means. Thick red trace represents group-level mean. Red shading indicates amplitude significance (t-test) for each condition compared to the audio-visual only (passive subject/passive robot) condition. Significant ERD and ERS were seen in all three conditions in which a movement occurred. ERD preceded movement in all cases. Peak ERS values occurred just after finger flexion. Green traces show mean robot trajectory, with flexion being defined downward. Scale refers to percent range of motion. Discrete flex/extend portions of the grasping trajectory, with a no-movement interval at the target time (t=0), is illustrated by the passive subject/active robot condition (movement duration 937ms). A smooth grasping movement consisting of a flexion followed immediately by an extension with no pause between is seen in the active subject/passive robot condition (duration 1637ms). Robot assistance aided a shorter extension period in the active subject/active robot condition (duration 1253ms). There was no movement in the passive subject/passive robot condition.

The timing of ERD and ERS was similar across the three experimental conditions in which they occurred (Fig. 4, Table II), with minor differences. ERD began approximately 600-900 ms before the start of movement in all three conditions, including when the subject remained passive but the robot moved. ERD in the active subject/passive robot condition began earlier than in the other two movement conditions. In the active robot conditions, a secondary ERS was seen following the finger extension period (Fig. 4). These secondary ERS values were not significantly different from those in the active subject/passive robot condition. However, the mean secondary ERS value was largest in the passive subject/active robot condition (2.19 dB), followed by the active subject/active robot condition (1.96 dB). No secondary local maxima were seen in the active subject/passive robot condition. ERS also lasted longest in the active robot conditions, likely because of the secondary ERS feature; in these conditions, ERS extended to approximately 1000 ms after finger extension was complete.

Table II. ERD/ERS Timing.

| Condition | ERD start (ms) | ERD end (ms) | ERS start (ms) | ERS end (ms) |
|---|---|---|---|---|
| A) motor only (active) | -854 | 333 | 405 | 1169 |
| B) robot+motor (active) | -682 | 263 | 323 | 1258 |
| C) audio-visual only | none | none | none | none |
| D) robot only (passive) | -642 | 266 | 333 | 1287 |

Time periods in which event related desynchronization and synchronization achieved statistical significance relative to baseline, via pointwise t-test (p < 0.05) are given in milliseconds relative to mean movement onset.
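Onset and offset times like those in Table II can be extracted from pointwise one-sample t-tests of the across-subject dB values against zero. This is a minimal sketch, not the authors' code: it hard-codes the two-sided critical value t ≈ 2.201 for 11 degrees of freedom (12 subjects), applies no multiple-comparison correction (none is described), and simply reports the first and last significant time points, so any non-significant gaps between them are ignored. For Table II the time axis should be expressed relative to mean movement onset:

```python
import numpy as np

T_CRIT_DF11 = 2.201  # two-sided p < 0.05 critical t for 11 degrees of freedom

def significant_span(db, times_ms, sign=-1, t_crit=T_CRIT_DF11):
    """First and last time at which the across-subject mean differs from 0 dB.

    db: (n_subjects, n_times) decibel-normalized power, e.g. a mu-band average.
    sign=-1 looks for desynchronization (ERD), sign=+1 for synchronization (ERS).
    Returns None if no time point passes the threshold.
    """
    n = db.shape[0]
    with np.errstate(divide="ignore", invalid="ignore"):
        t = db.mean(axis=0) / (db.std(axis=0, ddof=1) / np.sqrt(n))
    idx = np.flatnonzero(sign * t > t_crit)
    if idx.size == 0:
        return None
    return times_ms[idx[0]], times_ms[idx[-1]]
```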

Robotic assistance increased the magnitude of pre-movement ERD, but the effect only approached significance (ANOVA, p = 0.07). It also increased the magnitude of post-movement ERS, again only approaching significance (p = 0.06). Overt movement did not significantly alter ERD amplitude (p = 0.66) or ERS amplitude (p = 0.88). Interaction effects between robotic assistance and overt movement were not significant for ERD (p = 0.59) or ERS (p = 0.99). Pointwise comparisons likewise revealed no significant differences between the passive subject/active robot condition and the active subject conditions (t-test, p > 0.05). The maximum across-subject ERD value in the passive subject/active robot condition reached 3.68 (± 2.96) dB. The maximum across-subject ERD value in the active subject/passive robot condition was larger, at 4.35 (± 3.71) dB, but the difference was not significant (t-test, p = 0.63).

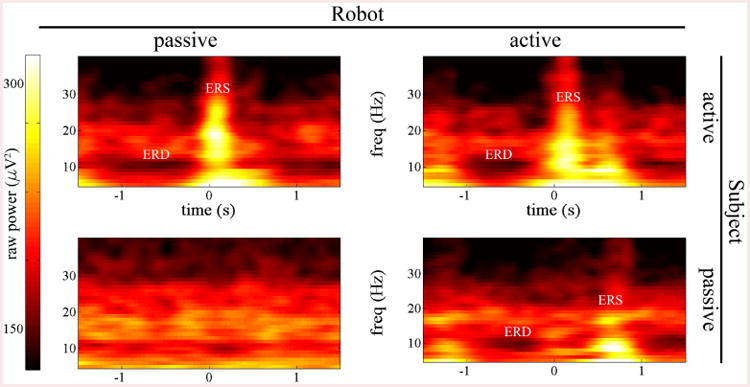

A total of 9 of 12 subjects showed ERD primarily within the mu band (8-13 Hz). The remaining three exhibited primarily beta-focused desynchronization (13-30 Hz): two of these subjects exhibited ERD from 17-22 Hz, and the third showed ERD from 12-18 Hz. An example of broadband power amplitude for one subject is shown in Fig. 5. Again, no significant modulation was seen in the passive subject/passive robot (audio-visual stimulation only) condition. In this subject, mu-rhythm ERD was seen prior to movement onset, peaking at approximately -500 ms and 10 Hz. This subject also exhibited a prominent beta rebound at the end of finger flexion, especially noticeable in the active subject/active robot condition, where beta ERS can be seen after flexion at approximately 12-28 Hz.

Fig. 5.

Time-frequency power maps during the four conditions for an example subject. Time = 0 corresponds to the moment when the moving note crossed the target location. This subject showed mu band (8-12 Hz) ERD and beta band (13-30 Hz) ERS in the three movement cases. Mu ERD always preceded movement. Beta ERS followed the completion of finger flexion, occurring at t=0 in the subject active conditions. A second, smaller ERS was seen in the robot active conditions. ERS appeared only after finger extension in the passive subject/active robot condition.

V. Discussion

In a robotic neurorehabilitation setting, the patient is often exposed to a combination of overt movement, robotic assistance, and external audio-visual stimuli associated with a computer game. The effects of these stimuli, and of their interactions with one another, on ERD have not yet been well defined. The aim of this study was to identify the effects of these factors on ERD using a prototypical robotic therapy paradigm. ERD was found to precede movement in all three movement conditions, notably including the passive subject/active robot condition. No significant power modulation was seen in the audio-visual only condition before or after the factorial conditions. ERS was identified during post-movement periods, with a tendency toward a secondary ERS in the robotically assisted conditions. Next, we discuss the effects of robotic assistance on ERD and ERS and highlight the implications of these effects for patient engagement in therapy and for future BCI-robot therapy paradigms.

A. Effects of Robot Assistance on ERD

This study identified pre-movement ERD during passively imposed movement, suggesting that ERD during passive movement is not tied solely to proprioceptive feedback, but is likely the result of preparation for the impending somatosensory input the movement will produce. In many past studies, ERD has been found to follow imposed movement onset, and has been attributed to proprioceptive feedback [19, 20, 24]. During self-paced movements however, ERD has been observed to precede movement onset [22, 31-33]. Pre-movement ERD has also been observed previously before cued predictable movements [16, 21], including passive movements imposed on the subject [17, 18]. This study built on these findings, showing that ERD appeared in advance of a predictable imposed movement from a robotic orthosis when the subject was instructed to remain passive. Because proprioceptive feedback is not yet affected by the imposed movement during the pre-movement interval, these findings suggest there is a cortical preparation of the somatosensory system in advance of an imposed movement. The existence of ERD before movement onset, comparable to that found preceding overt movement, suggests that ERD can become contingent on the expectation of robotically-imposed movement. That is, ERD is not uniquely tied to active movement but can reflect preparation for movement, whether active or passive.

ERD preceding both active and passive movements may be explained by two physiological mechanisms: the efference copy and anticipatory attention. Unlike studies that used random movement intervals [19, 20], participants in the present experiment were aware of the existence and timing of oncoming notes. Participants had sufficient time to prepare for the movement, whether active or imposed by the robot. Past studies have found that the brain predicts oncoming sensory information related to an intended movement so that the system can learn and adapt to changes [34-36]. When a command is sent to the motor system to generate movement, an internal copy of the command is created to predict the sensory consequences of the movement. This phenomenon is referred to as an efference copy. The efference copy is compared with the sensory input produced by the movement, allowing the expected movement (forward model) to be checked against the actual movement. In one study, subjects performed a self-paced finger-tapping task that alternated hands [37]. ERD was observed up to two seconds before movement over the contralateral hemisphere during dominant hand movements, and bilaterally during non-dominant hand movements. The authors suggested that while ERD of the contralateral sensorimotor cortex is an excitatory process, ERD of the ipsilateral hemisphere may be the result of an efference copy reflecting inhibition of movement. During the passive subject/active robot condition of the current experiment, participants expected movement but suppressed overt intention of that movement. Although the descending motor command was inhibited, the internal network would still require the efference copy to predict the somatosensation of the imposed movement [38]. Therefore, in the current experiment, ERD preceding movement may be the EEG correlate of the efference copy sent in preparation for predictable imposed movement.
Others have suggested that contralateral beta ERD may be a corollary of anticipatory attention to a future motor stimulus [39]. It may be the case that ERD measured here is the result of maintained attention to the oncoming note stimulus. Examination of the effects of predictable and unpredictable cueing would be necessary to further explore the roles of the efference copy and movement inhibition on the magnitude and temporal features of ERD.

B. Implications for Patient Engagement in BCI-Robot Therapy

Although ERD has been shown to be a reliable control signal for BCI applications, the use of BCI contingency in robot therapy has not yet been proven superior to traditional or robotic therapy. One important rationale for using BCIs in robotic therapy is ensuring the active engagement of the patient in the movement task [2, 8]. This study shows that ERD can be contingent on the expectation of passive, imposed robotic movement. Therefore, in a predictable therapy task, the use of ERD as an orthosis control signal does not necessarily require the patient's active motor engagement in the task, but simply the expectation of robotic movement. These findings suggest that ERD is not only an indicator of motor intention, but may also be an indicator of preparation for somatosensation. Producing anticipatory ERD in expectation of an upcoming passive movement could, in theory, allow the patient to slack, which, as described previously, is a subconscious and involuntary phenomenon wherein the patient allows the robot to supersede their own effort in overt movement during the task [10]. As such, ERD may be suboptimal as a signal to ensure patient motor engagement in BCI-contingent robot therapy.

Despite the possibility of reduced patient motor engagement in a BCI-contingent robot therapy paradigm, the patient must also exhibit some amount of expectation for the sensory feedback of the passive movement in order to create an anticipatory ERD as seen in this study. An important question is whether sensory engagement alone, without overt movement intention, is enough to aid motor outcome after therapy. A recent paper found modest improvements in a BCI-contingent robotic therapy group versus a sham control group who received randomized robotic movements not contingent on the BCI [15]. The sham control group was not able to predict the movement, and therefore unable to train ERD. These findings may indicate that the generation of ERD, even if it is generated in preparation for sensory stimuli only, may be beneficial to motor outcome.

C. Absence of Effects of Audio-Visual Stimuli

Power modulation did not appear in any of the audio-visual-only conditions. The final audio-visual-only condition is of particular interest, as it occurred after repeated conditioning of motor activity to the audio-visual stimuli. The gaming environment in this study used highly engaging visual and auditory cues matched to overt movement and haptic feedback. Popular music with a consistent beat was chosen to maximize the influence on the participant; indeed, the gaming paradigm used here is similar to the third most popular video game in history. By repeatedly matching hundreds of individuated finger movements to the audio-visual cues on screen, the participant was placed in a scenario that one might expect to produce classical conditioning. However, the absence of power modulation in the final audio-visual condition suggests that EEG power modulation is not susceptible to conditioning by the audio-visual cueing or gaming environments commonly used in robot therapy. This finding also rules out the possibility that the gaming environment by itself produced the ERD observed in the remaining conditions. This is an important null result for ERD-based BCIs that rely on auditory and/or visual cues, as it suggests that the cueing environment alone is unlikely to falsely trigger a BCI-contingent robot; rather, the imposed movement by the robot plays a key role. An anticipatory ERD is generated only if the user associates an audio-visual cue with an upcoming movement, whether active or passive.

D. Effects of Robotic Assistance on Synchronization after Movement

Event-related synchronization (ERS), or “rebound”, occurred following finger flexion offset in all three movement conditions. These findings agree with Pfurtscheller et al., who characterized the temporal traits of ERS and found that a burst of beta power appeared within one second of movement offset [32]. Post-movement beta ERS has since been shown to follow voluntary hand movements [33, 40-42], as well as passive movements [19, 40]. The ERS observed here thus matches previous findings, with the exception of a second, smaller synchronization that was more prominent in the active robot conditions.
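
For readers less familiar with how ERD/ERS time courses are obtained, the sketch below follows the classic band-power approach [22]: band-pass filter each event-locked epoch, square it, average across trials, smooth, and express the result as percent change from a reference interval. It runs on synthetic data in which a 20 Hz (beta) rhythm doubles in amplitude halfway through each 2 s epoch, mimicking a post-movement rebound. The ideal FFT band-pass filter, the 0.25 s smoothing window, and the function name are assumptions for illustration; this is not the analysis pipeline of the present study.

```python
import numpy as np

def erd_ers_timecourse(trials, fs, band, ref, smooth_s=0.25):
    """Percent band-power change over time relative to a reference interval.

    trials : array (n_trials, n_samples) of event-locked epochs
    band   : (low, high) pass band in Hz
    ref    : (start, stop) sample indices of the reference interval
    Negative output = ERD, positive output = ERS.
    """
    trials = np.asarray(trials, dtype=float)
    n = trials.shape[1]
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    spec = np.fft.rfft(trials, axis=1)
    spec[:, (freqs < band[0]) | (freqs > band[1])] = 0.0    # ideal band-pass
    filtered = np.fft.irfft(spec, n=n, axis=1)
    power = (filtered ** 2).mean(axis=0)                     # square, average over trials
    k = max(1, int(round(smooth_s * fs)))
    power = np.convolve(power, np.ones(k) / k, mode="same")  # moving-average smoothing
    r = power[ref[0]:ref[1]].mean()                          # reference power
    return 100.0 * (power - r) / r

# Synthetic beta "rebound": amplitude 1 for 0-1 s, amplitude 2 for 1-2 s.
fs = 256
t = np.arange(2 * fs) / fs
amplitude = np.where(t < 1.0, 1.0, 2.0)
phases = np.linspace(0, 2 * np.pi, 10, endpoint=False)
trials = np.array([amplitude * np.sin(2 * np.pi * 20 * t + p) for p in phases])

course = erd_ers_timecourse(trials, fs, band=(15, 25), ref=(64, 192))
# Close to 0% inside the reference period, close to +300% (ERS) after the step.
print(round(course[128]), round(course[384]))
```

Because the filter is applied through the FFT, each epoch is treated as circular; real analyses typically use a zero-phase FIR or IIR filter and discard samples near the epoch edges.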

The presence of a secondary synchronization in the two active robot conditions may be a result of the discrete flexion and extension forces applied by the robot. The secondary synchronizations seen in the active robot conditions did not differ significantly from those in the active subject/passive robot condition. However, the group-level mean ERS was larger in the active robot conditions, and the ERS significance periods ended later. The mean secondary synchronization was greatest in the passive subject/active robot condition, followed by the active subject/active robot condition. ERD and ERS in relation to kinematic and kinetic hand movements were recently characterized using a 3×4 factorial experiment in which subjects repeated hand grasping movements at different speeds and forces [16]. The authors found that although grasping force did not affect the magnitude or time course of ERD/ERS, repeated grasping motions caused repeated up-modulation of signal power. This supports our findings: in the present experiment, the robot-assisted flexion and extension movements were separated by a pause of approximately 200 ms in which no movement occurred, thereby creating two distinct motions (flexion, pause, extension) and therefore two distinct synchronization features. In contrast, in the active subject/passive robot condition, finger extension followed flexion immediately without a pause, and therefore no secondary ERS appeared.
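
One simple way to operationalize "distinct synchronization features" and "significance periods ended later" is to find the contiguous runs in which an ERS time course exceeds a significance threshold. The sketch below does this on made-up values; the threshold is simply given (in practice it would come from a statistical procedure such as a bootstrap or permutation test, not shown), and the function name and data are hypothetical.

```python
import numpy as np

def supra_threshold_segments(values, times, threshold):
    """Return (start, end) times of each contiguous run where values > threshold.

    Each run is treated as one distinct synchronization feature; the end time
    of the last run marks where the significance period ends.
    """
    above = np.asarray(values) > threshold
    times = np.asarray(times, dtype=float)
    # Pad with False so every run has both a rising and a falling edge.
    edges = np.diff(np.concatenate(([False], above, [False])).astype(int))
    starts = np.flatnonzero(edges == 1)        # first index inside each run
    stops = np.flatnonzero(edges == -1) - 1    # last index inside each run
    return [(float(times[s]), float(times[e])) for s, e in zip(starts, stops)]

# Hypothetical group-mean ERS (% change) sampled every 100 ms after flexion
# offset: a main rebound followed by a smaller, later one.
times_ms = np.arange(0, 1500, 100)
ers = [0, 5, 20, 40, 30, 5, 0, 0, 15, 25, 10, 0, 0, 0, 0]
print(supra_threshold_segments(ers, times_ms, threshold=10))
# [(200.0, 400.0), (800.0, 900.0)]
```

Under this definition, a condition with a secondary synchronization yields two segments, and its significance period ends at the end time of the later one.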

E. Limitations and Future Research

In this experiment, we studied unimpaired subjects. Excluding the confounding influence of brain lesions on EEG activity allowed us to gain insight into the normative interaction between robotic assistance and brain activity. However, one study found that peak ERD during attempted shoulder-elbow movements was smaller in individuals with stroke than in unimpaired subjects [14]. Although that study did not test passive movements, ERD preceding passive movements may likewise be diminished in people with neurological impairment. A future aim of this research is to replicate the experiment with participants with stroke.

This study used a factorial combination of overt movement and robot assistance, rather than online BCI-contingent control of the robot, to study the potential effects of robotic therapy on event-related EEG features. The observation that pre-movement ERD was contingent on robotic assistance has implications for using BCI contingency to improve patient engagement. However, it will be important to verify these results in an online BCI-contingent robot therapy paradigm in future work.

Fine motor tasks such as finger individuation are important for daily function. Furthermore, it has been suggested that deficits in isolated, individuated movement also contribute to impairment in gross movements, such as elbow extension [43]. A recent study used an EEG-based BCI to decode individuated finger movements and achieved accuracy significantly above chance level [44]. It may therefore be possible to decode EEG signals in real time for online BCI-contingent control of individual fingers in the FINGER robotic orthosis, which may improve therapeutic outcome.

A logical progression of this work would be to identify an event-related brain state that is robust to the effects of robot-contingent triggering. Functional connectivity has been shown to differ between active and passive movements during motor tasks [18], and therefore may be useful as an indicator of active motor engagement in BCI-robot therapy. A second approach might forgo a priori feature selection altogether, using machine learning algorithms to decode movement intention. Past studies have used similar approaches to classify resting state versus active or imagined movements [23, 45]. To our knowledge, no such approach has been applied to classifying passive versus active movements. Such an approach may be able to isolate the spatio-spectral EEG features associated with active motor engagement in the task, and would thereby circumvent the robot contingency observed in the ERD preceding passive movements. If patients are indeed slacking in current BCI-contingent robotic therapy paradigms, a BCI paradigm based on passive/active classification might encourage patient motor engagement in the task and improve motor outcomes after therapy.
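
As a rough illustration of the proposed passive/active classification, the sketch below trains a two-class Fisher linear discriminant (LDA), a common choice in BCI work, on synthetic feature vectors. For brevity it uses hand-chosen features (log mu-band power per channel), so unlike the approach proposed above it does not avoid a priori feature selection; the synthetic "active" trials are simply constructed to have lower power on three of eight channels. The data, channel count, ridge term, and function names are all assumptions, and high accuracy here says nothing about whether real passive and active movements are separable, which is precisely the open question.

```python
import numpy as np

def make_synthetic(n_per_class, n_channels=8, shift=2.0, rng=None):
    """Hypothetical log mu-power features. Label 0 = passive, 1 = active;
    active trials get lower power (stronger ERD) on the first three channels."""
    rng = np.random.default_rng(0) if rng is None else rng
    passive = rng.normal(0.0, 1.0, size=(n_per_class, n_channels))
    active = rng.normal(0.0, 1.0, size=(n_per_class, n_channels))
    active[:, :3] -= shift
    X = np.vstack([passive, active])
    y = np.concatenate([np.zeros(n_per_class, dtype=int),
                        np.ones(n_per_class, dtype=int)])
    return X, y

def fit_lda(X, y):
    """Two-class Fisher LDA with a small ridge term on the summed class covariances."""
    X0, X1 = X[y == 0], X[y == 1]
    m0, m1 = X0.mean(axis=0), X1.mean(axis=0)
    S = np.cov(X0, rowvar=False) + np.cov(X1, rowvar=False)
    S += 1e-3 * np.trace(S) / S.shape[0] * np.eye(S.shape[0])  # regularize
    w = np.linalg.solve(S, m1 - m0)    # discriminant direction
    b = -w @ (m0 + m1) / 2.0           # threshold halfway between class means
    return w, b

def predict_lda(X, w, b):
    """Predict 1 (active) when the projection falls on the class-1 side."""
    return (X @ w + b > 0).astype(int)

rng = np.random.default_rng(0)
X_train, y_train = make_synthetic(100, rng=rng)
X_test, y_test = make_synthetic(200, rng=rng)
w, b = fit_lda(X_train, y_train)
accuracy = (predict_lda(X_test, w, b) == y_test).mean()
print(f"held-out accuracy on synthetic data: {accuracy:.2f}")
```

A linear classifier is used here only because it is simple and transparent; a realistic study would need cross-validation across sessions and subjects, and possibly spatial filtering or nonlinear methods.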

Acknowledgments

We would like to thank Dr. Jonathan R. Wolpaw and Dr. Dennis McFarland of the National Center for Adaptive Neurotechnologies (www.neurotechcenter.org) (NIBIB/NIH Biomedical Technology Resource Center P41EB018783) at the Wadsworth Center of the New York State Department of Health in Albany, NY, who were essential in initiating this research and supported us throughout the study.

This work was supported in part by NIH grant R01HD062744-01 from the National Center for Medical Rehabilitation Research, part of the Eunice Kennedy Shriver National Institute of Child Health and Human Development, and in part by the National Center for Research Resources and the National Center for Advancing Translational Sciences, National Institutes of Health, through Grant UL1 TR000153. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.

Biographies

Sumner L. Norman received the B.S. degree in mechanical engineering from the University of Utah in 2012 and the M.S. degree in mechanical and aerospace engineering from the University of California, Irvine in 2015.

From 2011 to 2012, he worked as a Research Assistant with the University of Utah Haptics and Embedded Mechatronics Laboratory. In 2012, he received a Graduate Research Fellowship from the National Science Foundation (NSF). He is currently working toward the PhD degree in mechanical and aerospace engineering at the University of California, Irvine. His research interests include human-machine interface optimization for robotically assisted neurorehabilitation and learning.

Mark Dennison received the B.A. degree in psychology from the University of California, Irvine in 2013 and the M.S. degree in cognitive neuroscience from the University of California, Irvine in 2015.

From 2012 to 2013, he worked as a Research Assistant in the University of California, Irvine, Human Neuroscience Laboratory. He is currently working toward the PhD degree in Psychology with a concentration in cognitive neuroscience at the University of California, Irvine. His research interests include multisensory integration and brain-computer interfaces.

Ramesh Srinivasan received the B.S. degree in Electrical Engineering from the University of Pennsylvania in 1988 and PhD degree in Biomedical Engineering from Tulane University in 1994.

He worked in industry at Electrical Geodesics, Inc. and did a postdoctoral fellowship in Cognitive Neuroscience at the University of Oregon. He was a Fellow in Theoretical Neurobiology at the Neurosciences Institute in San Diego. He is presently a Professor of Cognitive Sciences and Biomedical Engineering at the University of California, Irvine. His research interests are in the theoretical models of EEG and MEG, statistical signal processing methods for EEG, and applications of EEG in cognitive and clinical neuroscience.

Steven C. Cramer received the B.A. degree in Neurobiology from the University of California, Berkeley, with Highest Honors (1980-1983). He then received an MD from the University of Southern California (1984-1988) in Los Angeles, CA. This was followed by a residency in internal medicine at UCLA (1988-1991), and a residency in neurology (1992-1995) plus a fellowship in cerebrovascular disease (1995-1997) at Massachusetts General Hospital in Boston, MA. He also earned a Master's degree in Clinical Investigation from Harvard Medical School in Boston, MA (1995-1997).

He worked as an Assistant Professor in the department of Neurology at the University of Washington (1997-2002), after which he moved to the University of California, Irvine, where he is now a Professor of Neurology, Anatomy & Neurobiology, and Physical Medicine & Rehabilitation. He is also the Vice Chair for Research in Neurology, the Clinical Director of the Stem Cell Research Center, and the Associate Director of the UC Irvine Institute for Clinical & Translational Science. His research publications have largely been focused on brain repair after central nervous system injury in humans, with an emphasis on stroke and recovery of movement.

Dr. Cramer is a member of the American Neurological Association, American Academy of Neurology, and the American Stroke Association.

Eric Wolbrecht (M'06) received the B.S. degree in mechanical engineering from Valparaiso University, Valparaiso, IN, in 1996, the M.S. degree in mechanical engineering from Oregon State University, Corvallis, in 1998, and the Ph.D. degree in mechanical and aerospace engineering from the University of California, Irvine, in 2007.

He is currently an Associate Professor in the Department of Mechanical Engineering at the University of Idaho, Moscow. His research interests include upper-extremity rehabilitation robotics, compliant actuation, state estimation, nonlinear and adaptive control, motor learning, and assist-as-needed control for neurorehabilitation. Dr. Wolbrecht is also a member of the American Society of Mechanical Engineers.

David J. Reinkensmeyer is a Professor in the Departments of Mechanical and Aerospace Engineering, Anatomy and Neurobiology, Biomedical Engineering, and Physical Medicine and Rehabilitation at the University of California at Irvine. He received the B.S. degree in electrical engineering from the Massachusetts Institute of Technology and the M.S. and Ph.D. degrees in electrical engineering from the University of California at Berkeley studying robotics and the neuroscience of human movement. He carried out postdoctoral studies at the Rehabilitation Institute of Chicago and Northwestern University Medical School from 1994 to 1997, building one of the first robotic devices for rehabilitation therapy after stroke. He became an assistant professor at U.C. Irvine in 1997, establishing a research program that develops robotic and sensor-based systems for movement training and assessment following neurologic injuries and disease.

Contributor Information

Sumner L. Norman, Department of Mechanical and Aerospace Engineering, University of California, Irvine, in Irvine, CA 92617 USA.

Mark Dennison, School of Social Sciences, University of California, Irvine, in Irvine, CA 92617 USA.

Eric Wolbrecht, Department of Mechanical Engineering, University of Idaho, Moscow, ID 83844 USA.

Steven C. Cramer, Department of Neurology, University of California, Irvine, in Irvine, CA 92617 USA.

Ramesh Srinivasan, Cognitive Science Department, University of California, Irvine, in Irvine, CA 92617 USA.

David J. Reinkensmeyer, Department of Mechanical and Aerospace Engineering, University of California, Irvine, in Irvine, CA 92617 USA.

References

1. Lotze M, Braun C, Birbaumer N, Anders S, Cohen LG. Motor learning elicited by voluntary drive. Brain. 2003;126:866–872. doi: 10.1093/brain/awg079.

2. Hu XL, Tong KY, Song R, Zheng XJ, Leung WW. A comparison between electromyography-driven robot and passive motion device on wrist rehabilitation for chronic stroke. Neurorehabilitation and Neural Repair. 2009;23:837–846. doi: 10.1177/1545968309338191.

3. Kaelin-Lang A, Sawaki L, Cohen LG. Role of voluntary drive in encoding an elementary motor memory. Journal of Neurophysiology. 2005;93:1099–1103. doi: 10.1152/jn.00143.2004.

4. Hornby TG, Campbell DD, Zemon DH, Kahn JH. Clinical and quantitative evaluation of robotic-assisted treadmill walking to retrain ambulation after spinal cord injury. Topics in Spinal Cord Injury Rehabilitation. 2005;11:1.

5. Hornby TG, Reinkensmeyer DJ, Chen D. Manually-assisted versus robotic-assisted body weight-supported treadmill training in spinal cord injury: what is the role of each? PM&R. 2010;2:214–221. doi: 10.1016/j.pmrj.2010.02.013.

6. Hidler J, Nichols D, Pelliccio M, Brady K, Campbell DD, Kahn JH, et al. Multicenter randomized clinical trial evaluating the effectiveness of the Lokomat in subacute stroke. Neurorehabilitation and Neural Repair. 2009;23:5–13. doi: 10.1177/1545968308326632.

7. Volpe BT, Ferraro M, Lynch D, Christos P, Krol J, Trudell C, et al. Robotics and other devices in the treatment of patients recovering from stroke. Current Neurology and Neuroscience Reports. 2005;5:465–470. doi: 10.1007/s11910-005-0035-y.

8. Reinkensmeyer DJ, Emken JL, Cramer SC. Robotics, motor learning, and neurologic recovery. Annual Review of Biomedical Engineering. 2004;6:497–525. doi: 10.1146/annurev.bioeng.6.040803.140223.

9. Reinkensmeyer DJ, Wolbrecht ET, Chan V, Chou C, Cramer SC, Bobrow JE. Comparison of 3D, assist-as-needed robotic arm/hand movement training provided with Pneu-WREX to conventional table top therapy following chronic stroke. American Journal of Physical Medicine & Rehabilitation. 2012;91:S232. doi: 10.1097/PHM.0b013e31826bce79.

10. Wolbrecht ET, Chan V, Reinkensmeyer DJ, Bobrow JE. Optimizing compliant, model-based robotic assistance to promote neurorehabilitation. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2008;16:286–297. doi: 10.1109/TNSRE.2008.918389.

11. Israel JF, Campbell DD, Kahn JH, Hornby TG. Metabolic costs and muscle activity patterns during robotic- and therapist-assisted treadmill walking in individuals with incomplete spinal cord injury. Physical Therapy. 2006;86:1466–1478. doi: 10.2522/ptj.20050266.

12. Buch E, Weber C, Cohen LG, Braun C, Dimyan MA, Ard T, et al. Think to move: a neuromagnetic brain-computer interface (BCI) system for chronic stroke. Stroke. 2008;39:910–917. doi: 10.1161/STROKEAHA.107.505313.

13. Formaggio E, Storti SF, Galazzo IB, Gandolfi M, Geroin C, Smania N, et al. Modulation of event-related desynchronization in robot-assisted hand performance: brain oscillatory changes in active, passive and imagined movements. Journal of NeuroEngineering and Rehabilitation. 2013;10:24. doi: 10.1186/1743-0003-10-24.

14. Fu MJ, Daly JJ, Cavusoglu MC. Assessment of EEG event-related desynchronization in stroke survivors performing shoulder-elbow movements. In: Proceedings of the 2006 IEEE International Conference on Robotics and Automation (ICRA); 2006. pp. 3158–3164.

15. Ramos-Murguialday A, Broetz D, Rea M, Läer L, Yilmaz Ö, Brasil FL, et al. Brain–machine interface in chronic stroke rehabilitation: a controlled study. Annals of Neurology. 2013;74:100–108. doi: 10.1002/ana.23879.

16. Nakayashiki K, Saeki M, Takata Y, Hayashi Y, Kondo T. Modulation of event-related desynchronization during kinematic and kinetic hand movements. Journal of NeuroEngineering and Rehabilitation. 2014;11:90. doi: 10.1186/1743-0003-11-90.

17. Müller-Putz GR, Zimmermann D, Graimann B, Nestinger K, Korisek G, Pfurtscheller G. Event-related beta EEG-changes during passive and attempted foot movements in paraplegic patients. Brain Research. 2007;1137:84–91. doi: 10.1016/j.brainres.2006.12.052.

18. Formaggio E, Storti SF, Galazzo IB, Gandolfi M, Geroin C, Smania N, et al. Time–frequency modulation of ERD and EEG coherence in robot-assisted hand performance. Brain Topography. 2014:1–12. doi: 10.1007/s10548-014-0372-8.

19. Cassim F, Monaca C, Szurhaj W, Bourriez JL, Defebvre L, Derambure P, et al. Does post-movement beta synchronization reflect an idling motor cortex? Neuroreport. 2001;12:3859–3863. doi: 10.1097/00001756-200112040-00051.

20. Alegre M, Labarga A, Gurtubay I, Iriarte J, Malanda A, Artieda J. Beta electroencephalograph changes during passive movements: sensory afferences contribute to beta event-related desynchronization in humans. Neuroscience Letters. 2002;331:29–32. doi: 10.1016/s0304-3940(02)00825-x.

21. Pfurtscheller G, Aranibar A. Event-related cortical desynchronization detected by power measurements of scalp EEG. Electroencephalography and Clinical Neurophysiology. 1977;42:817–826. doi: 10.1016/0013-4694(77)90235-8.

22. Pfurtscheller G, Lopes da Silva FH. Event-related EEG/MEG synchronization and desynchronization: basic principles. Clinical Neurophysiology. 1999;110:1842–1857. doi: 10.1016/s1388-2457(99)00141-8.

23. Fabiani GE, McFarland DJ, Wolpaw JR, Pfurtscheller G. Conversion of EEG activity into cursor movement by a brain-computer interface (BCI). IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2004;12:331–338. doi: 10.1109/TNSRE.2004.834627.

24. Ramos-Murguialday A, Schürholz M, Caggiano V, Wildgruber M, Caria A, Hammer EM, et al. Proprioceptive feedback and brain computer interface (BCI) based neuroprostheses. PLoS One. 2012;7:e47048. doi: 10.1371/journal.pone.0047048.

25. Yuan H, He B. Brain-computer interfaces using sensorimotor rhythms: current state and future perspectives. IEEE Transactions on Biomedical Engineering. 2014. doi: 10.1109/TBME.2014.2312397.

26. Fok S, Schwartz R, Wronkiewicz M, Holmes C, Zhang J, Somers T, et al. An EEG-based brain computer interface for rehabilitation and restoration of hand control following stroke using ipsilateral cortical physiology. In: Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); 2011. doi: 10.1109/IEMBS.2011.6091549.

27. Taheri H, Rowe JB, Gardner D, Chan V, Reinkensmeyer DJ, Wolbrecht ET. Robot-assisted Guitar Hero for finger rehabilitation after stroke. In: Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); 2012. pp. 3911–3917. doi: 10.1109/EMBC.2012.6346822.

28. Friedman N, Chan V, Reinkensmeyer AN, Beroukhim A, Zambrano GJ, Bachman M, et al. Retraining and assessing hand movement after stroke using the MusicGlove: comparison with conventional hand therapy and isometric grip training. Journal of NeuroEngineering and Rehabilitation. 2014;11:76. doi: 10.1186/1743-0003-11-76.

29. Cohen MX. Analyzing Neural Time Series Data: Theory and Practice. 2014.

30. Yuan H, Perdoni C, He B. Relationship between speed and EEG activity during imagined and executed hand movements. Journal of Neural Engineering. 2010;7:026001. doi: 10.1088/1741-2560/7/2/026001.

31. Derambure P, Defebvre L, Bourriez J, Cassim F, Guieu J. Event-related desynchronization and synchronization. Reactivity of electrocortical rhythms in relation to the planning and execution of voluntary movement. Neurophysiologie Clinique/Clinical Neurophysiology. 1999;29:53–70. doi: 10.1016/s0987-7053(99)80041-0.

32. Pfurtscheller G, Stancak A Jr, Edlinger G. On the existence of different types of central beta rhythms below 30 Hz. Electroencephalography and Clinical Neurophysiology. 1997;102:316–325. doi: 10.1016/s0013-4694(96)96612-2.

33. Stančák A Jr, Feige B, Lücking CH, Kristeva-Feige R. Oscillatory cortical activity and movement-related potentials in proximal and distal movements. Clinical Neurophysiology. 2000;111:636–650. doi: 10.1016/s1388-2457(99)00310-7.

34. Blakemore SJ, Wolpert D, Frith C. Why can't you tickle yourself? Neuroreport. 2000;11:R11–R16. doi: 10.1097/00001756-200008030-00002.

35. Kawato M. Internal models for motor control and trajectory planning. Current Opinion in Neurobiology. 1999;9:718–727. doi: 10.1016/s0959-4388(99)00028-8.

36. Wolpert DM, Ghahramani Z, Jordan MI. An internal model for sensorimotor integration. Science. 1995;269:1880–1882. doi: 10.1126/science.7569931.

37. Bai O, Mari Z, Vorbach S, Hallett M. Asymmetric spatiotemporal patterns of event-related desynchronization preceding voluntary sequential finger movements: a high-resolution EEG study. Clinical Neurophysiology. 2005;116:1213–1221. doi: 10.1016/j.clinph.2005.01.006.

38. Blakemore SJ, Goodbody SJ, Wolpert DM. Predicting the consequences of our own actions: the role of sensorimotor context estimation. The Journal of Neuroscience. 1998;18:7511–7518. doi: 10.1523/JNEUROSCI.18-18-07511.1998.

39. Pfurtscheller G, Krausz G, Neuper C. Mechanical stimulation of the fingertip can induce bursts of β oscillations in sensorimotor areas. Journal of Clinical Neurophysiology. 2001;18:559–564. doi: 10.1097/00004691-200111000-00006.

40. Müller G, Neuper C, Rupp R, Keinrath C, Gerner H, Pfurtscheller G. Event-related beta EEG changes during wrist movements induced by functional electrical stimulation of forearm muscles in man. Neuroscience Letters. 2003;340:143–147. doi: 10.1016/s0304-3940(03)00019-3.

41. Pfurtscheller G, Zalaudek K, Neuper C. Event-related beta synchronization after wrist, finger and thumb movement. Electroencephalography and Clinical Neurophysiology/Electromyography and Motor Control. 1998;109:154–160. doi: 10.1016/s0924-980x(97)00070-2.

42. Neuper C, Pfurtscheller G. Evidence for distinct beta resonance frequencies in human EEG related to specific sensorimotor cortical areas. Clinical Neurophysiology. 2001;112:2084–2097. doi: 10.1016/s1388-2457(01)00661-7.

43. Zackowski K, Dromerick A, Sahrmann S, Thach W, Bastian A. How do strength, sensation, spasticity and joint individuation relate to the reaching deficits of people with chronic hemiparesis? Brain. 2004;127:1035–1046. doi: 10.1093/brain/awh116.

44. Liao K, Xiao R, Gonzalez J, Ding L. Decoding individual finger movements from one hand using human EEG signals. PLoS One. 2014;9:e85192. doi: 10.1371/journal.pone.0085192.

45. Blankertz B, Dornhege G, Krauledat M, Müller KR, Curio G. The non-invasive Berlin brain–computer interface: fast acquisition of effective performance in untrained subjects. NeuroImage. 2007;37:539–550. doi: 10.1016/j.neuroimage.2007.01.051.