Abstract

IMPORTANCE

In the United States, hospitals receive accreditation through unannounced on-site inspections (ie, surveys) by The Joint Commission (TJC), which are high-pressure periods to demonstrate compliance with best practices. No research has addressed whether the potential changes in behavior and heightened vigilance during a TJC survey are associated with changes in patient outcomes.

OBJECTIVE

To assess whether heightened vigilance during survey weeks is associated with improved patient outcomes compared with nonsurvey weeks, particularly in major teaching hospitals.

DESIGN, SETTING, AND PARTICIPANTS

Quasi-randomized analysis of Medicare admissions at 1984 surveyed hospitals from calendar year 2008 through 2012 in the period from 3 weeks before to 3 weeks after surveys. Outcomes between surveys and surrounding weeks were compared, adjusting for beneficiaries’ sociodemographic and clinical characteristics, with subanalyses for major teaching hospitals. Data analysis was conducted from January 1 to September 1, 2016.

EXPOSURES

Hospitalization during a TJC survey week vs nonsurvey weeks.

MAIN OUTCOMES AND MEASURES

The primary outcome was 30-day mortality. Secondary outcomes were rates of Clostridium difficile infections, in-hospital cardiac arrest mortality, and Patient Safety Indicators (PSI) 90 and PSI 4 measure events.

RESULTS

The study sample included 244 787 and 1 462 339 admissions during survey and nonsurvey weeks with similar patient characteristics, reason for admission, and in-hospital procedures across both groups. There were 811 598 (55.5%) women in the nonsurvey weeks (mean [SD] age, 72.84 [14.5] years) and 135 857 (55.5%) in the survey weeks (age, 72.76 [14.5] years). Overall, there was a significant reversible decrease in 30-day mortality for admissions during survey (7.03%) vs nonsurvey weeks (7.21%) (adjusted difference, −0.12%; 95% CI, −0.22% to −0.01%). This observed decrease was larger than 99.5% of mortality changes among 1000 random permutations of hospital survey date combinations, suggesting that observed mortality changes were not attributable to chance alone. Observed mortality reductions were largest in major teaching hospitals, where mortality fell from 6.41% to 5.93% during survey weeks (adjusted difference, −0.38%; 95% CI, −0.74% to −0.03%), a 5.9% relative decrease. We observed no significant differences in admission volume, length of stay, or secondary outcomes.

CONCLUSIONS AND RELEVANCE

Patients admitted to hospitals during TJC survey weeks have significantly lower mortality than during nonsurvey weeks, particularly in major teaching hospitals. These results suggest that changes in practice occurring during periods of surveyor observation may meaningfully affect patient mortality.

Medical error is a significant cause of preventable mortality in US hospitals.1 To ensure compliance with high standards for patient safety, The Joint Commission (TJC) performs unannounced on-site inspections (ie, surveys) at US hospitals every 18 to 36 months as an integral part of its accreditation process.2 During these week-long inspections, TJC surveyors closely observe a broad range of hospital operations, focusing on high-priority patient safety areas, such as environment of care, documentation, infection control, and medication management.3 The stakes for performance during a TJC survey are high: loss of accreditation or a citation in the review process can adversely affect a hospital’s reputation and presage public censure or closure.4–6 This possibility can be especially important for large academic medical centers, whose reputation provides significant financial leverage in their local market.7 Hospital staff are keenly aware during TJC surveys that their behavior is being observed and reflects on their institution as a whole.8 This pressure has created an entire category of staff training in many hospitals around “survey readiness.”9,10

The visible nature of these surveys puts hospitals into a state of high vigilance and activates survey readiness training, called a “code J” by one observer.8 This phenomenon closely resembles the Hawthorne effect, an observation in the social sciences that research participants change their behavior because of the awareness of being monitored.11 The Hawthorne effect has been well described in various health care settings, including antibiotic prescribing,12,13 hand hygiene,14 and outpatient process quality in low-resource settings,15 and has been cited in a TJC report as a significant barrier to accurate observation of hand hygiene practices.14 In addition to the Hawthorne effect, there is a robust literature in economics describing how audits or monitoring of employees can lead to improved performance.16,17 There is little doubt that, for many hospitals, the monitoring of staff by TJC surveyors motivates changes in staff behavior to reflect expectations of what hospitals want surveyors to see.8–10,18

To our knowledge, no research has addressed whether potential changes in behavior and heightened vigilance during a TJC survey are associated with changes in patient outcomes. Several mechanisms could plausibly create such an effect, including increased attention to infection control leading to reduced hospital-associated infections and heightened awareness of medication management leading to reduced adverse medication events. If the presence of TJC surveyors affected patient outcomes, it would imply that the survey-week scramble to improve staff compliance with surveyor expectations has a significant safety impact worth further exploration.

We examined the association of TJC survey visits with patient safety outcomes via the quasi-random assignment of admissions between unannounced survey weeks and nonsurvey weeks within hospitals. We focused on inpatient safety–related outcomes that could be plausibly affected by attention to survey-relevant aspects of inpatient care and assessed potential mechanisms for changes in these outcomes, such as increased physician staffing or changes in the composition of admissions or inpatient procedures performed. We hypothesized that the presence of TJC surveyors would temporarily improve safety outcomes relative to surrounding nonsurvey weeks, with a larger effect in major teaching hospitals, which may have greater resources and incentives to ensure high compliance with surveys.

Methods

Study Sample and Data Sources

To identify hospital admissions and measure outcomes, we used the 2008–2012 Medicare Provider Analysis and Review (MedPAR) files, which contain records for 100% of Medicare beneficiaries using hospital inpatient services, supplemented by annual Beneficiary Summary Files, which include demographics and chronic illness diagnoses.19 Information on hospital teaching status and geography was obtained from the 2011 American Hospital Association Annual Survey.20 We identified all admissions occurring during the business week of a TJC survey as well as all admissions occurring 3 weeks before and after each survey week, a time interval used in prior similar analyses.21 We included all hospitals surveyed by TJC with available historical survey dates, representing the majority of admissions from 2008–2012 (described below).22 We excluded admissions occurring on weekend days because TJC surveyors are not on site on these days, and we also excluded admissions from hospitals not surveyed by TJC. This study was approved by the institutional review board at Harvard Medical School.

Defining TJC Survey Weeks

We identified survey dates using publicly available data on the TJC Quality Check website, which lists survey dates going back to 2007.23 We extracted survey dates for 1984 general medical-surgical hospitals in the 2008–2012 MedPAR database, corresponding to 3417 TJC survey visits. These hospitals account for 68% of all Medicare admissions in the 2008–2012 MedPAR cohort. To merge survey visit data with the MedPAR database, we used a crosswalk of TJC and Medicare hospital identifiers obtained from another publicly available TJC hospital quality database.24 We defined our primary exposure variable to be the 5 weekdays during the week of a full accreditation TJC survey date (survey weeks), as reported on the Quality Check website. We did not include TJC follow-up on-site surveys, which are smaller, focused assessments that typically occur from 30 to 180 days after the full survey to assess hospital responses to issues marked as problem areas by TJC surveyors.25
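As an illustration of the exposure definition, the following minimal sketch assigns an admission date to a week offset relative to a survey date. It assumes that weeks run Monday through Friday and are anchored on the Monday of the survey week; the function names `week_start` and `classify_admission` are hypothetical and do not come from the study code.

```python
from datetime import date, timedelta


def week_start(d: date) -> date:
    """Return the Monday of the calendar week containing d."""
    return d - timedelta(days=d.weekday())


def classify_admission(admit: date, survey: date, window_weeks: int = 3):
    """Return the week offset of an admission relative to the survey week.

    0 is the survey week; -3..-1 and 1..3 are the surrounding nonsurvey
    weeks. Returns None for weekend admissions and for admissions outside
    the window, both of which fall outside the study sample.
    """
    if admit.weekday() >= 5:  # Saturday is 5, Sunday is 6
        return None
    # Both operands are Mondays, so the day difference is a multiple of 7.
    offset = (week_start(admit) - week_start(survey)).days // 7
    if abs(offset) > window_weeks:
        return None
    return offset
```

An offset of 0 marks the exposed group, and the nonzero offsets form the comparison group used throughout the analysis.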

Study Outcomes and Covariates

Our primary outcome was 30-day mortality, defined as death within 30 days of admission. We chose this outcome because it integrates many aspects of care that might change during TJC observation, such as discharge planning and documentation, which are not relevant for outcomes such as in-hospital mortality. Moreover, this mortality time frame is used by Medicare in its reporting of hospital quality.

We also measured 4 secondary outcomes to capture possible effects of unannounced surveys on patient safety. The first 2 were composite measures of several patient safety indicators (PSIs), which are validated patient safety metrics developed by the Agency for Healthcare Research and Quality and used in public reporting.26 First, we used the PSI 90 composite measure utilized by Medicare in the Hospital Acquired Condition Reduction program, which measures 11 different individual patient safety events, including pressure ulcers and central line–associated blood stream infection. Second, we examined the PSI 4 composite measure for failure to rescue from serious postsurgical complications. This measure rests on the premise that death from a complication can be averted if the complication is recognized early and treated effectively. The PSI 4 measure combines mortality rates for 5 potentially treatable complications in surgical inpatients, including sepsis and pulmonary embolism. The last 2 secondary outcomes were Clostridium difficile infection rates and in-hospital cardiac arrest mortality.27 We hypothesized that TJC surveyor presence could reduce the rates of the 4 abovementioned outcomes through more fastidious attention to infection control and adherence to protocols and best practices for inpatient care.

We ascertained incident cases of C difficile infection by identifying any facility admissions with International Classification of Diseases, Ninth Revision (ICD-9) code 008.45 in any field upon discharge. The sensitivity and specificity of using ICD-9 codes to identify C difficile infections have been reported elsewhere to be adequate for identifying overall C difficile burden for epidemiologic purposes.28,29 Finally, we noted in-hospital cardiac arrest mortality by identifying all admissions with a discharge destination of “expired” with any ICD-9 diagnosis code of 427.5.
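The two claims-based definitions above can be sketched as simple flags on an admission record. This is only an illustration: the record layout (`diagnoses`, `discharge_destination`) is hypothetical, and the normalization step assumes that claims files may store ICD-9 codes with or without the decimal point. Treating all admissions with code 427.5 as the denominator for cardiac arrest mortality is likewise an assumption, although it is consistent with the rates reported in Table 3.

```python
C_DIFF_ICD9 = "008.45"
CARDIAC_ARREST_ICD9 = "427.5"


def normalize(code: str) -> str:
    """Drop whitespace and the decimal point so '008.45' equals '00845'."""
    return code.strip().replace(".", "")


def has_code(diagnoses, target: str) -> bool:
    """True if the target ICD-9 code appears in any diagnosis field."""
    wanted = normalize(target)
    return any(normalize(code) == wanted for code in diagnoses)


def is_c_diff_case(admission: dict) -> bool:
    """C difficile infection: code 008.45 in any diagnosis field."""
    return has_code(admission["diagnoses"], C_DIFF_ICD9)


def is_cardiac_arrest(admission: dict) -> bool:
    """Assumed denominator: any admission carrying code 427.5."""
    return has_code(admission["diagnoses"], CARDIAC_ARREST_ICD9)


def is_cardiac_arrest_death(admission: dict) -> bool:
    """Numerator: code 427.5 plus a discharge destination of 'expired'."""
    return (
        is_cardiac_arrest(admission)
        and admission["discharge_destination"] == "expired"
    )
```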

Patient covariates included age, sex, race/ethnicity, the Elixhauser comorbidity score for each admission (calculated as the number of 29 Elixhauser comorbidities present during a hospital admission),30 and indicators for each of 11 chronic conditions obtained from the Chronic Conditions Data Warehouse research database (Table 1).19 At the admission level, we used the reported diagnosis-related group to categorize each admission into 1 of 25 mutually exclusive major diagnostic categories.31

Table 1.

Admission Characteristics by Date of Admission Relative to TJC Survey Date

| Characteristic | Non-TJC Survey Weeks (n = 1 462 339)<sup>a</sup> | TJC Survey Weeks (n = 244 787) | P Value<sup>b</sup> |
|---|---|---|---|
| Females, No. (%) | 811 598 (55.5) | 135 857 (55.5) | .90 |
| White race, No. (%) | 1 183 032 (80.9) | 197 788 (80.8) | .44 |
| Age, mean (SD), y | 72.84 (14.5) | 72.76 (14.5) | .008 |
| Length of stay, mean (SD), d | 5.37 (8.0) | 5.37 (7.9) | .55 |
| Total Medicare payments, mean (SD), $ | 8306 (19 954) | 8322 (19 360) | .55 |
| Weekly admissions, mean (SD), No. | 123.0 (193) | 123.5 (199) | .86 |
| Elixhauser score, mean (SD)<sup>c</sup> | 3.3 (1.8) | 3.3 (1.8) | .26 |
| Presence of chronic illness, No. (%)<sup>d</sup> | | | |
| AMI/ischemia | 880 328 (60.2) | 146 872 (60.0) | .23 |
| Alzheimer dementia | 283 694 (19.4) | 47 244 (19.3) | .25 |
| Atrial fibrillation | 307 091 (21.0) | 50 916 (20.8) | .16 |
| Chronic kidney disease | 517 668 (35.4) | 86 165 (35.2) | .06 |
| COPD | 563 001 (38.5) | 93 509 (38.2) | .005 |
| Diabetes | 641 967 (43.9) | 107 217 (43.8) | .53 |
| Congestive heart failure | 649 279 (44.4) | 107 951 (44.1) | .001 |
| Hyperlipidemia | 998 778 (68.3) | 166 945 (68.2) | .57 |
| Hypertension | 1 162 560 (79.5) | 194 361 (79.4) | .54 |
| Stroke or TIA | 315 865 (21.6) | 52 874 (21.6) | .89 |
| Cancer<sup>e</sup> | 241 286 (16.5) | 40 635 (16.6) | .42 |

Abbreviations: AMI, acute myocardial infarction; COPD, chronic obstructive pulmonary disease; TIA, transient ischemic attack; TJC, The Joint Commission.

<sup>a</sup> Non-TJC survey weeks were defined as the 6 weeks occurring 3 weeks before and 3 weeks after the week of the TJC survey.

<sup>b</sup> P values estimated using 2-sample t tests or z tests for proportions, as appropriate.

<sup>c</sup> Elixhauser score calculated as the number of 29 Elixhauser comorbidities present during a hospital admission.30

<sup>d</sup> Presence of chronic illness assessed using indicators from the Chronic Conditions Data Warehouse research database.19

<sup>e</sup> Includes breast, endometrial, prostate, or colon cancer.

Statistical Analysis

The identification strategy relied on the assumption that, because TJC visits are unannounced, patients are quasi-randomized within a hospital to being admitted during a TJC survey week vs the surrounding weeks. We assessed the validity of this assumption in several ways. First, we compared counts of hospitalizations between survey weeks and nonsurvey weeks (3 weeks before and after surveys). Second, we compared unadjusted characteristics of admissions occurring during both periods. Third, to assess balance in admission diagnoses and in-hospital procedural mix between survey and nonsurvey weeks, we plotted the cumulative distributions of all admissions in each group by diagnosis related group and by primary ICD-9 procedure code. Finally, we plotted the distribution of survey weeks across the year for calendar years 2008 to 2012.

We next translated data into event time and plotted mean unadjusted mortality rates from 3 weeks before through 3 weeks after the survey week. We calculated unadjusted differences in mortality rates between survey and nonsurvey weeks and then estimated a multivariable logistic model to assess the association of survey weeks with mortality and secondary patient safety outcomes. For each outcome, we fitted the following model:

$$\operatorname{logit}\big(E[Y_{i,j,t,k}]\big) = \beta_0 + \beta_1\,\text{survey\_week} + \beta_2\,\text{covariates} + \beta_3\,\text{MDC}$$

where E denotes the expected value; Yi,j,t,k is the outcome of admission i for patient j on weekday t in hospital k; survey_week is a binary indicator for an admission happening during a survey week vs 3 weeks before or after the survey; covariates denotes age, sex, race/ethnicity, Elixhauser index score,30 and the presence of 11 different chronic conditions; and MDC denotes indicators for admission major diagnostic category. Our key parameter of interest is the estimate of β1, which represents the mean adjusted change in each outcome attributable to the presence of TJC surveyors, compared with combined mean rates 3 weeks before and after the survey week. To present results from this regression, we simulated the absolute change in each outcome attributable to surveyor presence (ie, β1). In all analyses, we used robust variance estimators to account for clustering of admissions within hospitals.32
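The step from a logistic coefficient to an absolute change in an outcome can be illustrated with marginal standardization, the approach named in the Table 2 footnote. The sketch below is an assumption-laden illustration rather than the study code: it takes already-fitted coefficients as inputs (all values passed to it are hypothetical), predicts each admission's risk with the survey indicator set to 1 and then to 0, and averages the difference over the sample.

```python
import math


def expit(x: float) -> float:
    """Inverse logit: map a linear predictor to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def marginal_difference(rows, intercept, beta_survey, betas):
    """Average predicted risk with survey_week = 1 minus survey_week = 0.

    rows: one covariate vector per admission, excluding survey_week.
    betas: fitted coefficients aligned with each covariate vector.
    """
    total = 0.0
    for x in rows:
        linear = intercept + sum(b * v for b, v in zip(betas, x))
        # Same admission, counterfactually exposed vs unexposed.
        total += expit(linear + beta_survey) - expit(linear)
    return total / len(rows)
```

Averaging over the observed covariate distribution is what makes the result an absolute difference on the probability scale rather than an odds ratio.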

We conducted prespecified subgroup analyses to explore whether any observed effect was associated with certain types of hospitals or patients. First, we examined major teaching hospitals (defined as ≥0.6 resident-to-bed ratio) vs all other hospitals.21,33,34 We also divided hospitals into the top or bottom half of overall publicly reported quality using the Centers for Medicare & Medicaid Services Total Performance Score.35 Second, we examined whether there were differential effects among patients in the top and bottom 50th percentiles of predicted mortality, hypothesizing that any potential effect of surveyor presence might be magnified in the higher mortality group (eMethods in the Supplement).

Additional Analyses

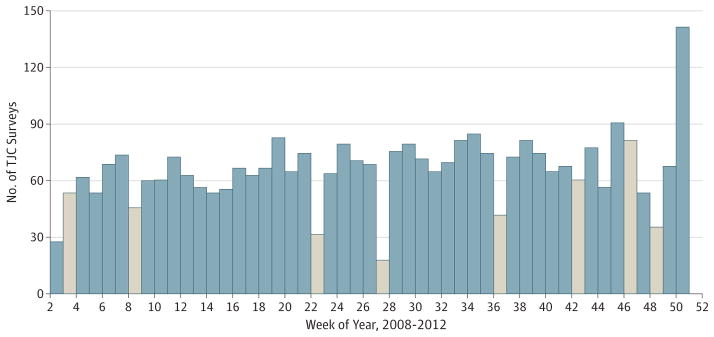

First, to assess whether the findings could be attributable to chance, we performed a random permutation test. We randomly assigned hospitals to different survey weeks according to the empirical distribution of TJC survey dates in Figure 1 (without replacement) and calculated the unadjusted mortality difference between survey and nonsurvey weeks in 1000 replications. We plotted the distribution of all permutation effect sizes and calculated the percentile distribution and P value for the empirically observed mortality effect. This permutation test adds value to the main analysis as a robustly nonparametric approach to examine how the effect size that we observe fits into the distribution of all possible effect sizes given the distribution of TJC survey dates and hospitals in our sample.
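The permutation procedure described above can be sketched as follows. This is a simplified illustration under stated assumptions: the data layout (weekly death and admission counts per hospital, indexed by week) and both function names are hypothetical, every assigned week is assumed to have admissions, and the P value is taken as twice the smaller tail share, which is one common convention and may differ from the exact calculation used in the study.

```python
import random


def mortality_difference(weekly, survey_week, window=3):
    """Unadjusted mortality in survey weeks minus surrounding weeks.

    weekly: {hospital: {week_index: (deaths, admissions)}}
    survey_week: {hospital: week_index of the survey}
    """
    s_deaths = s_n = o_deaths = o_n = 0
    for hospital, week in survey_week.items():
        for offset in range(-window, window + 1):
            deaths, n = weekly[hospital].get(week + offset, (0, 0))
            if offset == 0:
                s_deaths += deaths
                s_n += n
            else:
                o_deaths += deaths
                o_n += n
    return s_deaths / s_n - o_deaths / o_n


def permutation_test(weekly, survey_week, replications=1000, seed=0):
    """Shuffle survey weeks across hospitals and recompute the difference."""
    rng = random.Random(seed)
    observed = mortality_difference(weekly, survey_week)
    hospitals = list(survey_week)
    weeks = [survey_week[h] for h in hospitals]
    null = []
    for _ in range(replications):
        shuffled = weeks[:]
        rng.shuffle(shuffled)  # reassign dates without replacement
        null.append(mortality_difference(weekly, dict(zip(hospitals, shuffled))))
    lower = sum(d <= observed for d in null) / replications
    upper = sum(d >= observed for d in null) / replications
    p_value = min(1.0, 2 * min(lower, upper))
    return observed, null, p_value
```

Shuffling the existing survey weeks, rather than drawing new ones, preserves the empirical distribution of survey dates across the year.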

Figure 1. Distribution of The Joint Commission (TJC) Survey Dates Across the Year.

Distribution of TJC on-site survey dates during the 2008–2012 study period by week of the year. Weeks with US federal holidays are shown in tan. The disproportionately high number of TJC surveys during week 51 likely reflects the shifting of surveys from week 52 (occurring between Christmas and New Year’s Eve) to week 51.

Second, because TJC visits are less common during major holidays (Figure 1), our analysis may be confounded if mortality differs during holidays. We therefore excluded all admissions occurring on Christmas Day, New Year’s Day, Thanksgiving Day, and the Fourth of July. Third, because mortality differences may occur if the distribution of elective hospitalizations or medical vs surgical admissions differs between survey and nonsurvey weeks, we repeated the main analysis restricted to emergency hospitalizations or stratified by medical vs surgical admissions. Fourth, a related concern may be that the arrival of TJC surveyors leads hospitals to avoid admitting sicker patients later in the week in an effort to free staff resources. We therefore conducted a subgroup analysis among patients admitted from Wednesday to Friday in survey vs nonsurvey weeks. Finally, we examined whether hospitals might increase staffing by counting all unique provider identifiers billing in each hospital by week.

Analyses were performed in R, version 3.1.2 (R Foundation) and Stata, version 14 (StataCorp). The 95% CI around reported estimates reflects 0.025 in each tail or P ≤ .05. P values were estimated using 2-sample t tests or z tests for proportions. Data analysis was conducted from January 1 to September 1, 2016.

Results

Patient and Admission Characteristics

Our sample contained 244 787 admissions during 3417 survey weeks and 1 462 339 admissions in the 3 weeks before and after these survey weeks. The average number of weekly admissions was nearly identical between survey and nonsurvey weeks (Table 1). Patient characteristics were similar between survey and nonsurvey weeks for the entire sample and in major teaching hospitals (Table 1; eTable 1 in the Supplement). In the full cohort, the few characteristics that were statistically significantly different between survey and nonsurvey weeks (eg, age and prior chronic obstructive pulmonary disease or congestive heart failure) were clinically trivial. The cumulative distributions of diagnosis related group categories and ICD-9 procedures were also nearly identical between survey and nonsurvey weeks (eFigure 1 and eFigure 2 in the Supplement). We observed no significant difference in the number of unique providers billing for hospital admissions per week in survey vs nonsurvey weeks (eTable 2 in the Supplement). Last, the distribution of survey weeks across the calendar year demonstrated no evidence for seasonal bias in survey dates beyond expected deviations during holidays (Figure 1).

Mortality During Survey Weeks

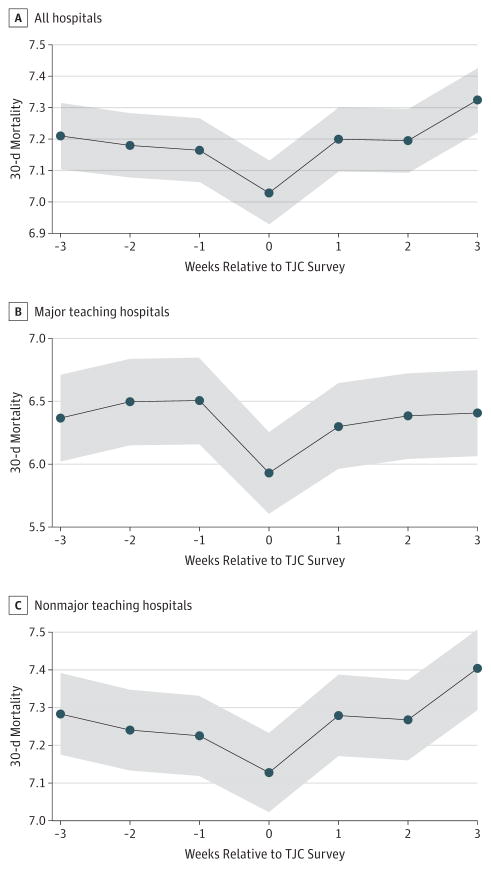

In unadjusted analysis across all hospitals, there was a significant reversible decrease in 30-day mortality for admissions occurring during a survey week vs the surrounding 3 weeks (Figure 2A). Overall, unadjusted 30-day mortality was 7.03% during survey weeks vs 7.21% during nonsurvey weeks (absolute difference, 0.18%). Otherwise, 30-day mortality rates in all other nonsurvey weeks were stable (P > .13 for all pairwise comparisons vs third week before survey visit). After adjustment, there was a statistically significant absolute decrease in mortality of 0.12% (95% CI, −0.22% to −0.01%; P = .03) (Table 2). This finding corresponds to an overall relative decrease of 1.5% in the 30-day mortality rate potentially attributable to TJC surveys.

Figure 2. Unadjusted 30-Day Mortality by Week of Admission Relative to The Joint Commission Survey Visit.

Trends for 30-day mortality in week-long intervals relative to on-site surveys for all hospitals (A), major teaching hospitals alone (B), and nonmajor teaching hospitals (C). Shaded 95% CIs are shown for all unadjusted estimates, assuming a normal distribution of rates given the large sample size of admissions.

Table 2.

Thirty-Day Mortality for Admissions During TJC Survey Weeks vs Other Weeks

| Factor | No. | 30-d Mortality in Non-TJC Survey Weeks, %<sup>b</sup> | 30-d Mortality in TJC Survey Weeks, % | Unadjusted Absolute Difference | Adjusted Absolute Difference (95% CI)<sup>a</sup> | P Value |
|---|---|---|---|---|---|---|
| All admissions | 1 707 126 | 7.21 | 7.03 | −0.18 | −0.12 (−0.22 to −0.01) | .03 |
| Teaching hospital status | | | | | | |
| Nonmajor | 1 568 812 | 7.28 | 7.13 | −0.16 | −0.10 (−0.21 to 0.01) | .08 |
| Major | 136 693 | 6.41 | 5.93 | −0.49 | −0.38 (−0.74 to −0.03) | .04 |
| CMS total performance score halves | | | | | | |
| Lower half | 1 102 021 | 7.28 | 7.12 | −0.16 | −0.10 (−0.23 to 0.03) | .14 |
| Upper half | 561 730 | 7.11 | 6.86 | −0.25 | −0.16 (−0.34 to 0.01) | .07 |
| Patient-expected mortality halves<sup>c</sup> | | | | | | |
| Lower half | 864 241 | 1.19 | 1.20 | 0.01 | 0.02 (−0.08 to 0.11) | .72 |
| Upper half | 842 885 | 13.37 | 13.08 | −0.29 | −0.19 (−0.35 to −0.03) | .02 |

Abbreviations: CMS, Centers for Medicare & Medicaid Services; TJC, The Joint Commission.

<sup>a</sup> Adjusted results were estimated from logistic regression models comparing 30-d mortality outcomes between TJC survey weeks vs nonsurvey weeks, with separate models estimated for each subgroup. All models were adjusted for age, sex, race/ethnicity, Elixhauser comorbidity score, the presence of any of 11 chronic illnesses (reported in Table 1), and major diagnostic category for admission. All analyses used robust variance estimators to account for clustering of admissions within hospitals. Absolute percentage changes in mortality attributable to TJC surveys were estimated using a marginal standardization approach.

<sup>b</sup> Non-TJC survey weeks were defined as the 6 weeks occurring 3 weeks before and 3 weeks after the week of the TJC survey.

<sup>c</sup> Expected 30-d mortality was calculated using a logistic regression model including all patient characteristics in the entire 100% Medicare admission data set (eMethods in the Supplement), and patients were categorized as having mortality above or below the expected median.

In subgroup analyses, major teaching hospitals showed the largest mortality change associated with survey weeks (Figure 2B and Table 2). In these hospitals, unadjusted 30-day mortality fell from a mean of 6.41% during nonsurvey weeks to 5.93% during survey weeks (unadjusted absolute decrease, 0.49%) (Figure 2B), corresponding to an adjusted decrease of 0.38% (95% CI, −0.74% to −0.03%; P = .04) (Table 2). With adjustment, this translates to a 5.9% adjusted relative decrease in 30-day mortality attributable to survey weeks in major teaching hospitals.
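As a check on the arithmetic, and assuming that the relative decrease is the adjusted absolute decrease divided by the unadjusted nonsurvey-week mortality in major teaching hospitals (both taken from Table 2), the figure can be reproduced as:

$$\frac{0.38}{6.41} \approx 0.059 = 5.9\%$$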

We did not observe significant mortality associations between survey and nonsurvey weeks in hospitals according to whether they were in the top or bottom half of total performance scores (Table 2). However, we noted a significant decrease in mortality during survey weeks among patients in the top half of expected mortality, from 13.37% in nonsurvey weeks to 13.08% in survey weeks. After adjustment, this change corresponded to an absolute decrease of 0.19% (95% CI, −0.35% to −0.03%; P = .02).

Among other patient safety outcomes, we did not find significant differences in outcome rates between survey and nonsurvey weeks, either overall or across the prespecified subgroup analyses (Table 3 and eTable 3 in the Supplement). The only effect that was potentially consistent with decreased mortality was in the PSI 4 measure, although this finding did not reach statistical significance (adjusted difference, −0.85%; 95% CI, −1.90% to 0.20%; P = .13) (Table 3).

Table 3.

Safety-Related Secondary Outcomes for Admissions During TJC Survey Weeks vs Other Weeks

| Secondary Outcome Measure<sup>a</sup> | No. | Non-TJC Survey Weeks, Unadjusted %<sup>c</sup> | TJC Survey Weeks, Unadjusted % | Unadjusted Absolute Difference, % | Adjusted Absolute Difference (95% CI), %<sup>b</sup> | P Value |
|---|---|---|---|---|---|---|
| PSI 90 | 1 707 126 | 1.29 | 1.31 | 0.02 | 0.02 (−0.03 to 0.07) | .48 |
| PSI 4 | 37 594 | 19.0 | 18.2 | −0.84 | −0.85 (−1.90 to 0.20) | .13 |
| Clostridium difficile infection | 1 707 126 | 1.47 | 1.48 | 0.00 | 0.01 (−0.04 to 0.06) | .63 |
| In-hospital cardiac arrest mortality | 9794 | 63.00 | 63.61 | 0.61 | 0.86 (−1.86 to 3.57) | .54 |

Abbreviations: PSI, patient safety indicator; TJC, The Joint Commission.

<sup>a</sup> Rate per 100 admissions. Definitions of each secondary outcome are provided in the Methods section.

<sup>b</sup> Adjusted results were estimated from logistic regression models comparing each secondary outcome between TJC survey weeks vs nonsurvey weeks, with separate models estimated for each outcome. All models were adjusted for age, sex, race/ethnicity, Elixhauser comorbidity score, the presence of any of 11 chronic illnesses (reported in Table 1), and major diagnostic category for admission. All analyses used robust variance estimators to account for clustering of admissions within hospitals. Absolute percentage changes in each outcome attributable to TJC surveys were estimated using a marginal standardization approach.

<sup>c</sup> Non-TJC survey weeks were defined as the 6 weeks occurring 3 weeks before and 3 weeks after the week of the TJC survey.

Additional Analyses

In the overall analysis, the observed mortality decrease was larger than 99.5% of effect sizes noted in a permutation test of 1000 replications of randomly assigned hospital survey date pairs, corresponding to a 2-sided P value of .01 (eFigure 3 in the Supplement). The findings were also unaffected by exclusion of major holidays or by restricting the analysis to emergency hospitalizations or to hospitalizations occurring Wednesday through Friday in survey and nonsurvey weeks (eTable 4 in the Supplement), thereby suggesting that the abovementioned findings are not driven by the distribution of TJC survey dates or by a shift toward lower-risk or elective hospitalizations during TJC survey weeks or at the end of a survey week. The mortality effect that we observed was also not dominated by medical vs surgical admissions (eTable 4 in the Supplement).

Discussion

Hospital admissions during TJC survey weeks had significantly lower 30-day mortality than admissions during nonsurvey weeks. The mean weekly number of admissions, patient characteristics, reasons for admission, and in-hospital procedures performed were nearly identical between survey and nonsurvey weeks, consistent with the unannounced nature of these visits and the plausible quasi-randomization of patients between survey and nonsurvey weeks. In subgroup analyses, the decrease in mortality during survey weeks was largely driven by mortality reductions in major teaching hospitals. These mortality changes were reversible because mortality in all hospitals was otherwise stable in the 3 weeks before and after TJC surveys. We did not observe differences between survey and nonsurvey weeks in a broad range of patient safety measures.

These changes suggest that some aspect of predictable behavior change associated with TJC surveys might improve the quality of inpatient care. The effects that we observed were modest in size, ranging from a relative decrease of 1.5% in hospitals overall to 5.9% in major teaching hospitals. However, even changes of this magnitude throughout the year could theoretically have a significant public health impact. At major teaching hospitals, which had the largest relative mortality reduction, given an annual average of more than 900 000 Medicare admissions as defined in this study from 2008 to 2012, an absolute reduction of 0.38% in 30-day mortality (Table 2) would translate to more than 3600 fewer mortality events for Medicare patients annually. We do not propose that the high stress and scrutiny of TJC surveys be replicated across the entire year. Instead, we view TJC surveys as a window into quality improvement that is likely driven by a small number of key changes during surveys that require further research.

The study results are unlikely to be driven by chance or selection bias alone for several reasons. First, patient demographics, chronic illnesses, diagnosis related groups, and in-hospital procedures were similar between survey and nonsurvey weeks. Moreover, if selection were an important issue, one would expect differences in the number of hospitalizations between survey and nonsurvey weeks; however, the numbers were nearly identical during both periods. Finally, we demonstrated the robustness of the findings by excluding major holidays (during which TJC visits are less likely to occur), focusing on emergency hospitalizations (to ensure greater homogeneity in the comparison of hospitalizations across survey and nonsurvey periods), and focusing on hospitalizations occurring from Wednesday to Friday (to address the possibility that hospitals may respond to TJC visits by altering the composition of patients hospitalized later in the week).

Several possible reasons may explain why mortality declined during TJC survey weeks. The most plausible mechanism for these results could be that heightened scrutiny during visits raises awareness of operational deficiencies whose correction could improve patient safety. For example, increased attention to methods of paper documentation could lead to more carefully documented encounters and better communication during survey weeks than other weeks. Surveyor presence on hospital floors and in operating rooms could improve compliance with hand hygiene and infection control protocols, reducing hospital-acquired infection rates. Surveyor presence may also reduce the time spent by hospital staff on other activities with the potential to distract focus from patient care. To the extent that we were able to capture these mechanisms through secondary outcomes, we did not observe any effects associated with survey weeks that can be closely tied to changes in specific patient safety practices. The decrease observed in the PSI 4 failure-to-rescue mortality measure is suggestive of an effect of increased vigilance on the response to patient complications, but this effect did not reach statistical significance. Because the secondary outcomes we can measure in claims data are relatively uncommon, the analysis could be underpowered to detect a small change of the magnitude that was observed for 30-day mortality.

The prominent reduction in mortality in major teaching hospitals was a strong driver of the overall mortality change and a notable finding. This finding was consistent with our a priori hypothesis that the largest teaching hospitals may have a greater mortality change because they are better able to mobilize staff resources due to their size and more motivated to do so because they have a reputation at stake. One strategy for health systems to consider would be to observe which aspects of normal day-to-day operations change most dramatically in their institution to meet survey readiness standards (eg, clean environment and proper documentation). Those changes may be the best opportunities to identify whether more continual attention could improve patient safety.

Limitations

Our study had several limitations. First, the observational nature of the study precludes interpreting the findings as reflecting a causal link between TJC surveyor presence and reduced mortality. However, the unannounced nature of surveys enables a strong quasi-randomized observational design, which is corroborated by similar admission characteristics between survey and nonsurvey weeks. Second, the modest effect size limits our statistical power to detect effects of similar magnitude in the secondary outcomes, which might have suggested mechanisms explaining the primary results. Given the baseline rates that we observed for these outcomes, we likely cannot rule out an effect on PSI 90 safety measures, infection control as measured by C difficile rates, or hospital operational readiness as measured by in-hospital cardiac arrest mortality. More generally, we were unable to identify a specific mechanism by which mortality is reduced during TJC surveys. Future work could consider additional potential explanations, including hospital safety culture or other hospital characteristics.36 Third, the exposure could be measured with error if not all TJC surveys required 5 weekdays during the survey week to complete. However, this potential issue would bias the findings toward the null, most likely leading to underestimation of the true effect. Finally, our analysis was limited to the Medicare population and may not generalize to commercially insured populations hospitalized during TJC survey weeks.

Conclusions

We observed lower 30-day mortality for admissions occurring during TJC survey weeks compared with nonsurvey weeks, particularly among major teaching hospitals. This observation could be explained by heightened attention by hospital staff to multiple aspects of patient care during intense surveyor observation and suggests that differential behavior during survey weeks may have meaningful effects on patient mortality.

Key Points

Question

What is the effect of heightened vigilance during unannounced hospital accreditation surveys on the quality and safety of inpatient care?

Findings

In an observational analysis of 1984 unannounced hospital surveys by The Joint Commission, patients admitted during the week of a survey had significantly lower 30-day mortality than did patients admitted in the 3 weeks before or after the survey. This change was particularly pronounced among major teaching hospitals; no change in secondary safety outcomes was observed.

Meaning

Changes in practice occurring during periods of surveyor observation may meaningfully improve quality of care.

Acknowledgments

Funding/Support: The study was supported by grant 1DP5OD017897-01 from the Office of the Director, National Institutes of Health (NIH) (Dr Jena, NIH Early Independence Award) and grant T32-HP10251 from the Health Resources and Services Administration (HRSA) (Dr Barnett).

Footnotes

Conflict of Interest Disclosures: Dr Jena received consulting fees unrelated to this work from Pfizer, Inc, Hill Rom Services, Inc, Bristol-Myers Squibb, Novartis Pharmaceuticals, Vertex Pharmaceuticals, and Precision Health Economics, a company providing consulting services to the life sciences industry. No other disclosures were reported.

Disclaimer: The contents of this publication are solely the responsibility of the authors and do not necessarily represent the official views of the HRSA.

Author Contributions: Drs Barnett and Jena had full access to all data in the study and take full responsibility for the integrity of the data and accuracy of the data analysis.

Study concept and design: Barnett, Jena.

Acquisition, analysis, or interpretation of data: All authors.

Drafting of the manuscript: Barnett, Jena.

Critical revision of the manuscript for important intellectual content: All authors.

Statistical analysis: All authors.

Obtained funding: Jena.

Administrative, technical, or material support: Barnett, Jena.

Supervision: Barnett, Jena.

Role of the Funder/Sponsor: The funding organizations had no role in the design and conduct of the study; collection, management, analysis, and interpretation of the data; preparation, review, or approval of the manuscript; and decision to submit the manuscript for publication.

References

1. Kohn LT, Corrigan JM, Donaldson MS, editors. To Err Is Human: Building a Safer Health System. Washington, DC: National Academies Press; 2000.

2. Joint Commission. History of The Joint Commission. http://www.jointcommission.org/about_us/history.aspx. Accessed March 11, 2016.

3. Joint Commission. Facts about the on-site survey process. http://www.jointcommission.org/facts_about_the_on-site_survey_process/. Accessed March 11, 2016.

4. Perkes C. Anaheim General Hospital loses national accreditation. Orange County Register. http://www.ocregister.com/articles/hospital-39147-medicare-hospitals.html. Updated August 21, 2013. Accessed March 11, 2016.

5. Reese P, Hubert C. Nevada mental hospital accepts loss of accreditation. Sacramento Bee. http://www.sacbee.com/news/investigations/nevada-patient-busing/article2578259.html. Published July 26, 2013. Accessed March 11, 2016.

6. Los Angeles hospital loses accreditation. HCPro. http://www.hcpro.com/SAF-45563-874/Los-Angeles-hospital-loses-accreditation.html. Published February 3, 2005. Accessed March 11, 2016.

7. A handshake that made healthcare history. Boston Globe. http://www.bostonglobe.com/specials/2008/12/28/handshake-that-made-healthcare-history/QiWbywqb8olJsA3IZ11o1H/story.html. Published December 28, 2008. Accessed March 24, 2016.

8. Sorbello BC. Mission: achieve continual readiness for Joint Commission surveys. American Nurse Today. http://www.americannursetoday.com/mission-achieve-continual-readiness-for-joint-commission-surveys/. Published September 2009. Accessed March 11, 2016.

9. Cutler A, Conley L, Kohlbacher D. Tracing accountability. Nurs Manage. 2013;44(12):16–19. doi: 10.1097/01.NUMA.0000437777.22972.d1.

10. Murray K. Are you ready for The Joint Commission survey? Nurs Manage. 2013;44(9):56. doi: 10.1097/01.NUMA.0000433387.95507.f8.

11. McCambridge J, Witton J, Elbourne DR. Systematic review of the Hawthorne effect: new concepts are needed to study research participation effects. J Clin Epidemiol. 2014;67(3):267–277. doi: 10.1016/j.jclinepi.2013.08.015.

12. Meeker D, Linder JA, Fox CR, et al. Effect of behavioral interventions on inappropriate antibiotic prescribing among primary care practices: a randomized clinical trial. JAMA. 2016;315(6):562–570. doi: 10.1001/jama.2016.0275.

13. Mangione-Smith R, Elliott MN, McDonald L, McGlynn EA. An observational study of antibiotic prescribing behavior and the Hawthorne effect. Health Serv Res. 2002;37(6):1603–1623. doi: 10.1111/1475-6773.10482.

14. Joint Commission. Measuring hand hygiene adherence: overcoming the challenges. http://www.jointcommission.org/measuring_hand_hygiene_adherence_overcoming_the_challenges_/. Published May 4, 2010. Accessed March 11, 2016.

15. Leonard K, Masatu MC. Outpatient process quality evaluation and the Hawthorne Effect. Soc Sci Med. 2006;63(9):2330–2340. doi: 10.1016/j.socscimed.2006.06.003.

16. Nagin D, Rebitzer J, Sanders S, Taylor L. Monitoring, motivation and management: the determinants of opportunistic behavior in a field experiment. National Bureau of Economic Research. http://www.nber.org/papers/w8811. Published February 2002. Accessed December 9, 2016.

17. Olken BA. Monitoring corruption: evidence from a field experiment in Indonesia. National Bureau of Economic Research. http://www.nber.org/papers/w11753. Published November 2005. Accessed December 9, 2016.

18. Devkaran S, O’Farrell PN. The impact of hospital accreditation on clinical documentation compliance: a life cycle explanation using interrupted time series analysis. BMJ Open. 2014;4(8):e005240. doi: 10.1136/bmjopen-2014-005240.

19. Centers for Medicare & Medicaid Services. Chronic Conditions Data Warehouse. https://www.ccwdata.org/. Updated 2017. Accessed March 25, 2015.

20. Health Forum. AHA annual survey database. https://www.ahadataviewer.com/additional-data-products/AHA-Survey/. Accessed February 9, 2017.

21. Jena AB, Prasad V, Goldman DP, Romley J. Mortality and treatment patterns among patients hospitalized with acute cardiovascular conditions during dates of national cardiology meetings. JAMA Intern Med. 2015;175(2):237–244. doi: 10.1001/jamainternmed.2014.6781.

22. Schmaltz SP, Williams SC, Chassin MR, Loeb JM, Wachter RM. Hospital performance trends on national quality measures and the association with Joint Commission accreditation. J Hosp Med. 2011;6(8):454–461. doi: 10.1002/jhm.905.

23. The Joint Commission Quality Check. http://www.qualitycheck.org/Consumer/SearchQCR.aspx. Accessed March 21, 2016.

24. The Joint Commission Quality Check. Quality data download. http://www.healthcarequalitydata.org/. Accessed March 21, 2016.

25. Joint Commission. Accreditation guide for hospitals. http://www.jointcommission.org/accreditation_guide_for_hospitals_hidden.aspx. Accessed March 31, 2016.

26. AHRQ - Quality Indicators. Patient Safety Indicators overview. http://www.qualityindicators.ahrq.gov/modules/psi_overview.aspx. Accessed December 20, 2016.

27. Agency for Healthcare Research and Quality. Patient safety indicators technical specifications updates–version 6.0 (ICD-9). http://www.qualityindicators.ahrq.gov/Modules/PSI_TechSpec_ICD09_v60.aspx. Published October 2016. Accessed December 9, 2016.

28. Scheurer DB, Hicks LS, Cook EF, Schnipper JL. Accuracy of ICD-9 coding for Clostridium difficile infections: a retrospective cohort. Epidemiol Infect. 2007;135(6):1010–1013. doi: 10.1017/S0950268806007655.

29. Schmiedeskamp M, Harpe S, Polk R, Oinonen M, Pakyz A. Use of International Classification of Diseases, Ninth Revision, Clinical Modification codes and medication use data to identify nosocomial Clostridium difficile infection. Infect Control Hosp Epidemiol. 2009;30(11):1070–1076. doi: 10.1086/606164.

30. van Walraven C, Austin PC, Jennings A, Quan H, Forster AJ. A modification of the Elixhauser comorbidity measures into a point system for hospital death using administrative data. Med Care. 2009;47(6):626–633. doi: 10.1097/MLR.0b013e31819432e5.

31. 3M Health Information Systems. All Patient Refined Diagnosis Related Groups (APR-DRGs) version 31.0: methodology overview. https://www.hcup-us.ahrq.gov/db/nation/nis/grp031_aprdrg_meth_ovrview.pdf. Accessed August 4, 2015.

32. White H. A heteroskedasticity-consistent covariance matrix estimator and a direct test for heteroskedasticity. Econometrica. 1980;48(4):817–838.

33. Volpp KG, Rosen AK, Rosenbaum PR, et al. Mortality among hospitalized Medicare beneficiaries in the first 2 years following ACGME resident duty hour reform. JAMA. 2007;298(9):975–983. doi: 10.1001/jama.298.9.975.

34. Taylor DH, Jr, Whellan DJ, Sloan FA. Effects of admission to a teaching hospital on the cost and quality of care for Medicare beneficiaries. N Engl J Med. 1999;340(4):293–299. doi: 10.1056/NEJM199901283400408.

35. Medicare.gov. Total performance scores. https://www.medicare.gov/hospitalcompare/data/total-performance-scores.html. Accessed March 21, 2016.

36. Agency for Healthcare Research and Quality. Hospital survey on patient safety culture. https://www.ahrq.gov/professionals/quality-patient-safety/patientsafetyculture/hospital/index.html. Updated November 2016. Accessed December 10, 2016.
