Abstract

Objective To examine the extent and nature of outcome reporting bias in a broad cohort of published randomised trials.

Design Retrospective review of publications and follow up survey of authors.

Cohort All journal articles of randomised trials indexed in PubMed whose primary publication appeared in December 2000.

Main outcome measures Prevalence of incompletely reported outcomes per trial; reasons for not reporting outcomes; association between completeness of reporting and statistical significance.

Results 519 trials with 553 publications and 10 557 outcomes were identified. Survey responders (response rate 69%) provided information on unreported outcomes but were often unreliable—for 32% of those who denied the existence of such outcomes there was evidence to the contrary in their publications. On average, over 20% of the outcomes measured in a parallel group trial were incompletely reported. Within a trial, such outcomes had a higher odds of being statistically non-significant compared with fully reported outcomes (odds ratio 2.0 (95% confidence interval 1.6 to 2.7) for efficacy outcomes; 1.9 (1.1 to 3.5) for harm outcomes). The most commonly reported reasons for omitting efficacy outcomes included space constraints, lack of clinical importance, and lack of statistical significance.

Conclusions Incomplete reporting of outcomes within published articles of randomised trials is common and is associated with statistical non-significance. The medical literature therefore represents a selective and biased subset of study outcomes, and trial protocols should be made publicly available.

Introduction

Researchers have often commented on the existence of unreported study outcomes.1,2 Even when outcomes are presented in journal publications, they may be reported with inadequate detail.3 Outcome reporting bias refers to the selective reporting of some results but not others in trial publications. Direct evidence of such bias has recently been shown in two cohort studies that compared trial publications with the original protocols.4,5 However, it is unknown whether selective outcome reporting can be identified when protocols are unavailable.

We used a large representative sample of publications of randomised trials indexed on PubMed to determine the prevalence of incomplete outcome reporting; the reasons for omitting outcomes; and the degree of association between completeness of reporting and statistical significance for trial outcomes.

Methods

Study selection

Using an extended version of Phase 1 from the Cochrane search strategy (see box 1),6 we identified primary publications of randomised trials published in December 2000 and included in PubMed by August 2002. A primary publication was the first report of final trial results. We excluded studies of cost effectiveness and diagnostic test properties, and those published in languages other than English and French.

We recorded trial characteristics from the primary publications published in December 2000. We identified extra journal publications for these trials through a survey of contact authors, as well as literature searches of PubMed, Embase, the Cochrane Controlled Trials Register, and PsycINFO using investigator names and keywords (final search January 2003). For each trial, we reviewed the primary and any subsequent publications to extract the number and characteristics of reported outcomes (including statistical significance, level of reporting, and specification as primary, secondary, or unspecified according to the text).

Reporting of outcomes

We identified unreported outcomes if they were described in the methods section but not the results section of any publication. Furthermore, using a pre-piloted questionnaire, we asked the contact authors to list any outcomes that were not reported in the published papers and to indicate for each unreported outcome whether it reached statistical significance (P < 0.05) in any inter-group comparison; whether it was a primary, secondary, or unspecified outcome according to the protocol; whether it was of little, moderate, or high clinical importance; and the reasons for not reporting it. We sent two reminders via email or post to non-responders.

Box 1: Modified Cochrane search strategy for identifying randomised trials on PubMed that were published in December 2000 (reference 6)

#1 randomized controlled trial [pt]

#2 controlled clinical trial [pt]

#3 randomized controlled trials [mh]

#4 random allocation [mh]

#5 double blind method [mh]

#6 single blind method [mh]

#7 cross-over studies [mh]

#8 multicenter study [pt]

#9 #1 OR #2 OR #3 OR #4 OR #5 OR #6 OR #7 OR #8

#10 #9 NOT (animal [mh] NOT human [mh])

#11 limit 00/12/01 - 00/12/31
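The search lines in Box 1 combine into a single query string. The sketch below shows one way to assemble it. The helper name `build_query` is hypothetical, and writing the date limit as a publication date range with the `[dp]` field tag is an assumption; the box itself gives only "limit 00/12/01 - 00/12/31".

```python
# Hypothetical helper: joins the Box 1 search terms into one PubMed query string.
TERMS = [
    "randomized controlled trial [pt]",
    "controlled clinical trial [pt]",
    "randomized controlled trials [mh]",
    "random allocation [mh]",
    "double blind method [mh]",
    "single blind method [mh]",
    "cross-over studies [mh]",
    "multicenter study [pt]",
]


def build_query(terms, start="2000/12/01", end="2000/12/31"):
    """Return the combined search string for the given terms and date range."""
    # The "#1 OR #2 ... OR #8" line: any of the eight terms
    any_term = " OR ".join(terms)
    # The "NOT (animal [mh] NOT human [mh])" line: drop animal-only records
    humans = f"({any_term}) NOT (animal [mh] NOT human [mh])"
    # Assumption: the date limit is expressed as a publication date range
    return f"({humans}) AND ({start}:{end} [dp])"


query = build_query(TERMS)
```

Keeping the terms in a list makes it easy to see that the final string is only the box's three combining steps applied in order.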

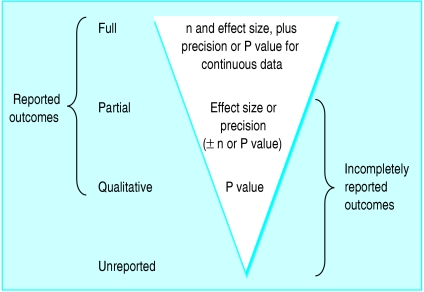

For each identified outcome, we recorded the level of reporting as one of four levels based on the amount of data presented in any of the journal publications (fig 1). If sufficient data were provided for inclusion in a meta-analysis, we recorded the outcome as fully reported (see box 2). We recorded an outcome as partially reported if the publications provided only some of the data necessary for meta-analysis, and as qualitatively reported if the publications presented only a P value or some indication of the presence or absence of statistical significance. Finally, unreported outcomes were those for which no data were provided in any of the publications despite being identified in the methods section or the authors' responses to our survey.

Fig 1.

Hierarchy of levels of outcome reporting (n=number of participants per group)

We used two further terms to describe composite levels of reporting (fig 1). “Reported outcomes” referred to those with some data presented in any of the publications (full, partial, and qualitative). “Incompletely reported outcomes” referred to those with inadequate data for meta-analysis (partial, qualitative, and unreported).
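The four levels and the two composite terms can be written down as a small classification sketch. The function and argument names below are hypothetical; the three boolean inputs stand in for a reviewer's judgment of what the publications contain.

```python
from enum import Enum


class Level(Enum):
    FULL = "fully reported"
    PARTIAL = "partially reported"
    QUALITATIVE = "qualitatively reported"
    UNREPORTED = "unreported"


def classify(enough_for_meta_analysis, some_numeric_data, significance_only):
    """Assign one of the four reporting levels, checking the highest level first."""
    if enough_for_meta_analysis:
        return Level.FULL
    if some_numeric_data:
        return Level.PARTIAL
    if significance_only:  # only a P value or a statement about significance
        return Level.QUALITATIVE
    return Level.UNREPORTED


def is_reported(level):
    """Composite term: some data presented (full, partial, or qualitative)."""
    return level is not Level.UNREPORTED


def is_incompletely_reported(level):
    """Composite term: inadequate for meta-analysis (partial, qualitative, unreported)."""
    return level is not Level.FULL
```

Note that partially and qualitatively reported outcomes count as both "reported" and "incompletely reported", so the two composite terms overlap.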

Box 2: Amount of data required in trial publications for outcomes to be classified as fully reported

Unpaired continuous data

Group numbers and

Size of treatment effect (group means or medians or difference in means or medians) and

Measure of precision or variability (confidence interval, standard deviation, or standard error for means; range for medians) or the precise P value

Unpaired binary data

Group numbers and

Numbers of events or event rates in each group

Paired continuous data

Mean difference between groups and a measure of its precision or exact P value or

Raw data for each participant

Paired binary data

Paired numbers of participants with and without events

Survival data

Kaplan-Meier curve with numbers of patients at risk over time or

Hazard ratio with a measure of precision
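The criteria in Box 2 amount to simple logical rules per data type. The sketch below encodes them under one assumed reading of the box: for unpaired continuous data, group numbers and an effect size are both required, together with either a measure of precision or a precise P value. All function and argument names are hypothetical.

```python
def unpaired_continuous(group_numbers, effect_size, precision=False, exact_p=False):
    """Group numbers AND effect size AND (precision/variability OR precise P value)."""
    return bool(group_numbers and effect_size and (precision or exact_p))


def unpaired_binary(group_numbers, events_per_group):
    """Group numbers AND numbers of events (or event rates) in each group."""
    return bool(group_numbers and events_per_group)


def paired_continuous(mean_difference=False, precision=False, exact_p=False, raw_data=False):
    """Mean difference with precision or exact P value, OR raw data per participant."""
    return bool(raw_data or (mean_difference and (precision or exact_p)))


def paired_binary(paired_counts):
    """Paired numbers of participants with and without events."""
    return bool(paired_counts)


def survival(km_curve_with_numbers_at_risk=False, hazard_ratio=False, precision=False):
    """Kaplan-Meier curve with numbers at risk, OR hazard ratio with precision."""
    return bool(km_curve_with_numbers_at_risk or (hazard_ratio and precision))
```

For example, a publication that gives group means and group sizes but no standard deviation, confidence interval, or exact P value would not count as fully reported under this reading.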

Statistical analyses

Using Stata 7 (Stata Corporation, College Station, TX, USA), we conducted analyses at the trial level stratified by efficacy and harm outcomes. Primary variables of interest included the proportion of incompletely reported outcomes per trial and the reasons given by authors for not reporting outcomes. We also examined the association between the level of outcome reporting and statistical significance.

For each trial, we created a 2×2 table for the outcomes, relating the level of reporting (full v incomplete) to statistical significance at the P < 0.05 level. We calculated odds ratios for each trial and pooled these using a random effects meta-analysis to provide an overall estimate of outcome reporting bias. We excluded trials if entire rows or columns were empty in the 2×2 table, as meaningful odds ratios could not be calculated. If one cell or two diagonal cells were empty we added 0.5 to all four cell frequencies in the table. We conducted sensitivity analyses by excluding trials without survey responses as well as excluding physiological and pharmacokinetic trials. We also assessed the impact of using a different cut-off point for dichotomising the level of reporting (fully or partially reported v qualitatively reported or unreported).
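The per-trial calculation described above can be sketched as follows. The exclusion rule and the 0.5 correction follow the text; the DerSimonian-Laird estimator used for pooling is an assumption, since the text specifies only "a random effects meta-analysis". Function names and cell labels are hypothetical.

```python
import math


def trial_log_or(a, b, c, d):
    """Log odds ratio and its variance for one trial's 2x2 table of outcomes.

    a: fully reported and significant      b: fully reported and non-significant
    c: incompletely reported, significant  d: incompletely reported, non-significant
    Returns None when an entire row or column is empty (trial excluded).
    """
    if a + b == 0 or c + d == 0 or a + c == 0 or b + d == 0:
        return None
    if 0 in (a, b, c, d):
        # One empty cell or two empty diagonal cells: add 0.5 to all four cells
        a, b, c, d = (x + 0.5 for x in (a, b, c, d))
    log_or = math.log((a * d) / (b * c))
    variance = 1 / a + 1 / b + 1 / c + 1 / d
    return log_or, variance


def pool_random_effects(estimates):
    """Pool (log_or, variance) pairs; returns (odds ratio, lower, upper) at 95%.

    Assumption: DerSimonian-Laird estimate of between-trial variance.
    """
    y = [e[0] for e in estimates]
    v = [e[1] for e in estimates]
    w = [1 / vi for vi in v]
    fixed = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, y))
    scale = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (len(y) - 1)) / scale) if scale > 0 else 0.0
    w_star = [1 / (vi + tau2) for vi in v]
    pooled = sum(wi * yi for wi, yi in zip(w_star, y)) / sum(w_star)
    se = math.sqrt(1 / sum(w_star))
    return math.exp(pooled), math.exp(pooled - 1.96 * se), math.exp(pooled + 1.96 * se)
```

With this cell layout, an odds ratio above 1 means that fully reported outcomes have higher odds of being statistically significant than incompletely reported ones.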

We used exploratory meta-regression to evaluate the effect of 13 factors on the size of bias: trial characteristics (study design, intervention type, sample size, blinding, source of funding, number of centres); journal characteristics (specialty v general medical journal, short v full length publication); and the reporting of important methodological details (power calculation, specification of primary outcomes, description of random sequence generation, allocation concealment, and handling of attrition). Definitions for these characteristics are described in detail elsewhere.7 We used a restricted maximum likelihood method to estimate residual heterogeneity for the univariate and multivariate backward stepwise regression analyses.8

Results

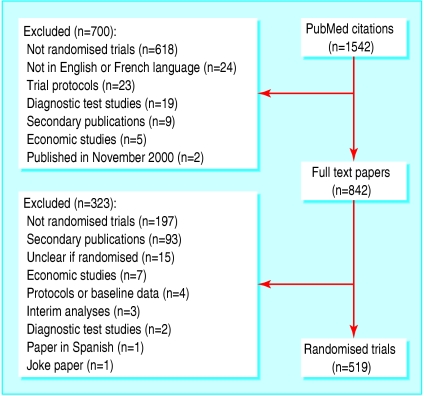

We identified 519 trials with 553 publications and 10 557 outcomes (8325 efficacy and 2232 harm outcomes) (fig 2). Table 1 shows the main trial characteristics. Median sample sizes were 80 (10-90th centile range 25-369) and 15 (8-38) for parallel group and crossover trials respectively. A detailed report of the cohort characteristics has been published separately.7

Fig 2.

Identification of randomised trials from PubMed citations of studies published in December 2000

Table 1.

Overview of characteristics of 519 randomised trials published in December 2000 and cited in PubMed

| Characteristic | No (%) of trials |
|---|---|
| Study design: | |
| Parallel group | 383 (74) |
| Crossover | 116 (22) |
| Other* | 20 (4) |
| Intervention: | |
| Drug | 393 (76) |
| Surgery or procedure | 51 (10) |
| Counselling or lifestyle | 55 (11) |
| Equipment | 20 (4) |
| Study centres: | |
| Single | 376 (72) |
| Multiple | 134 (26) |
| Unclear | 9 (2) |
| Funding: | |
| Full industry | 167 (32) |
| Partial industry | 61 (12) |
| Non-industry | 184 (35) |
| None | 54 (10) |
| Unknown | 53 (10) |
| Journal type: | |
| General† | 37 (7) |
| Specialty | 482 (93) |

*Split body (n=9), cluster (n=6), factorial (n=4), and “n of 1” (n=1) trials.

†Journals publishing studies from any specialty field.

For our survey of contact authors, 69% (356/519) of authors responded to the questionnaire with information. Among the 466 trials with identified funding sources, we obtained lower response rates for those funded solely by industry (65% (108/167)) compared with those with partial industry funding (75% (46/61)), non-industry funding (80% (147/184)), or no funding (100% (54/54)).

Prevalence of incompletely reported outcomes

From publications and survey responses, we identified a median of 11 (10-90th centile range 3-36) efficacy outcomes per trial (n = 505) and 4 (1-17) harm outcomes per trial (n = 308). Of these trials, 75% (380/505) and 64% (196/308) respectively did not fully report all their efficacy and harm outcomes in any journal publications. The median proportion of incompletely reported efficacy outcomes per trial was 42% (table 2). For harm outcomes, the median proportion per trial was 50%. Of the 232 trials (45%) that defined primary outcomes in their publications, 83 (36%) presented at least one that was incompletely reported.
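The per-trial summary used here (a proportion computed within each trial, then a median and 10-90th centile range across trials) can be sketched as below. The counts are hypothetical and are not data from the study. The "inclusive" centile method is an assumption, as the centile definition used is not stated.

```python
from statistics import median, quantiles


def incomplete_proportion(n_outcomes, n_incomplete):
    """Proportion of one trial's outcomes that are incompletely reported."""
    return n_incomplete / n_outcomes


def summarise(trials):
    """trials: list of (n_outcomes, n_incomplete) pairs, one pair per trial.

    Returns (median, 10th centile, 90th centile) of the per-trial proportions.
    """
    props = [incomplete_proportion(n, k) for n, k in trials]
    # n=10 gives nine cut points: the first is the 10th centile, the last the 90th
    deciles = quantiles(props, n=10, method="inclusive")
    return median(props), deciles[0], deciles[-1]


# Hypothetical counts for illustration only
example_trials = [(10, 2), (4, 4), (5, 0), (8, 4), (20, 5)]
med, p10, p90 = summarise(example_trials)
```

Summarising per trial, rather than pooling all outcomes, stops trials with very many outcomes from dominating the estimate.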

Table 2.

Median proportion of incompletely reported efficacy and harm outcomes per trial, stratified by trial characteristics, among 519 randomised trials published in December 2000 and cited in PubMed

| Trial characteristic | Efficacy outcomes: No of trials | Efficacy outcomes: median % of incompletely reported outcomes per trial* | Harm outcomes: No of trials | Harm outcomes: median % of incompletely reported outcomes per trial* |
|---|---|---|---|---|
| All trials | 505 | 42 | 308 | 50 |
| Parallel group trials | 375 | 22 | 237 | 25 |
| Crossover trials | 110 | 100 | 62 | 82 |
| Other study designs | 20 | 59 | 9 | 80 |
| General medical journal | 37 | 40 | 26 | 58 |
| Specialty journal | 468 | 43 | 282 | 47 |
| Full industry funding† | 163 | 46 | 133 | 56 |
| Partial or non-industry funding† | 290 | 42 | 142 | 27 |

*10-90th centile ranges were 0-100% for all median percentages except for efficacy outcomes in crossover trials (48-100%).

†Trials with unknown funding sources were excluded.

When we stratified trials by study design, we found that parallel group trials contained much lower percentages of incompletely reported efficacy and harm outcomes than crossover trials (table 2). We found little difference between specialty and general medical journals, but greater deficiencies for reporting of harm outcomes among trials that were solely funded by industry (median 56% per trial) compared with those that were not (27%) (table 2).

Prevalence of unreported outcomes

Among 356 survey responders, 281 stated that there were no unreported outcomes. However, for 32% (90/281) of these responses, we found evidence of outcomes that were mentioned in the methods section but not the results section of individual publications.

From our survey responses alone, we identified 343 unreported outcomes in 71 trials. We identified another 545 unreported outcomes based solely on discrepancies between the methods and results sections of publications for 174 trials. Finally, we identified 27 unreported outcomes based on both survey responses and publications for eight trials.

Using combined data from survey responses and publications, we identified at least one unreported efficacy outcome in 33% (169/505) of trials that measured efficacy data, and at least one unreported harm outcome in 28% (85/308) of trials that measured harms data. A median of 2 (10-90th centile range 1-7) efficacy and 2 (1-6) harm outcomes were unreported for each of these trials.

Characteristics of unreported outcomes based on survey responses

Fifty three survey responders provided data on the clinical importance of 238 unreported efficacy outcomes (table 3). Of these trials, 26% (14/53) had unreported outcomes that were categorised as having high clinical importance. According to survey responses, the important efficacy outcomes for three of these trials were to be reported in future publications. Sixteen survey responders indicated the clinical importance of 38 unreported harm outcomes, all of which were classified as having low or moderate clinical importance (table 3); 13 authors provided the statistical significance of their unreported harm outcomes, all of which were non-significant.

Table 3.

Randomised trials with at least one unreported outcome for which survey responders provided data on clinical importance and specification

| Parameter and survey rating | Efficacy outcomes: No (%) of trials among responders* | Harm outcomes: No (%) of trials among responders* |
|---|---|---|
| Clinical importance: | | |
| High | 14/53 (26) | 0 |
| Moderate | 26/53 (49) | 5/16 (31) |
| Low | 29/53 (55) | 13/16 (81) |
| Specification: | | |
| Primary | 13/54 (24) | 3/18 (17) |
| Secondary | 28/54 (52) | 8/18 (44) |
| Unspecified | 22/54 (41) | 8/18 (44) |

*Denominator corresponds to number of trials with survey data on clinical importance or specification of unreported outcomes.

Fifty four survey responders indicated the specification of their unreported efficacy outcomes as primary, secondary, or neither (table 3). These responders included the authors who provided clinical importance ratings. Primary efficacy outcomes were unreported for 13 trials; according to authors, the primary outcomes for six of these trials were to be reported in future manuscript submissions. Eighteen responders indicated the specification of their unreported harm outcomes: three trials (17%) had at least one unreported primary harm outcome listed in the survey responses.

Table 4 shows the reasons given by authors for not reporting outcomes. For efficacy outcomes, the most common reasons were journal space restrictions (47%), lack of clinical importance (37%), and lack of statistical significance (24%). For harm outcomes, the commonest reasons were lack of clinical importance (75%) or of statistical significance (50%).

Table 4.

Reasons for omitting one or more outcomes per trial; based on 69 survey responses with unreported efficacy or harm outcomes in randomised trials published in December 2000 and cited in PubMed

| Reason* | Efficacy outcomes (n=59): No (%) of trials | Harm outcomes (n=16): No (%) of trials |
|---|---|---|
| Space constraints: | 28 (47) | 4 (25) |
| Journal imposed | 11 (19) | 1 (6) |
| Author imposed | 21 (36) | 3 (19) |
| Not clinically important | 22 (37) | 12 (75) |
| Not statistically significant† | 14 (24) | 8 (50) |
| Not yet submitted | 13 (22) | 1 (6) |
| Not yet analysed | 10 (17) | 1 (6) |

*Reasons are not mutually exclusive (>1 may have been given per trial).

†P≥0.05 in all inter-group comparisons.

Association between completeness of reporting and statistical significance

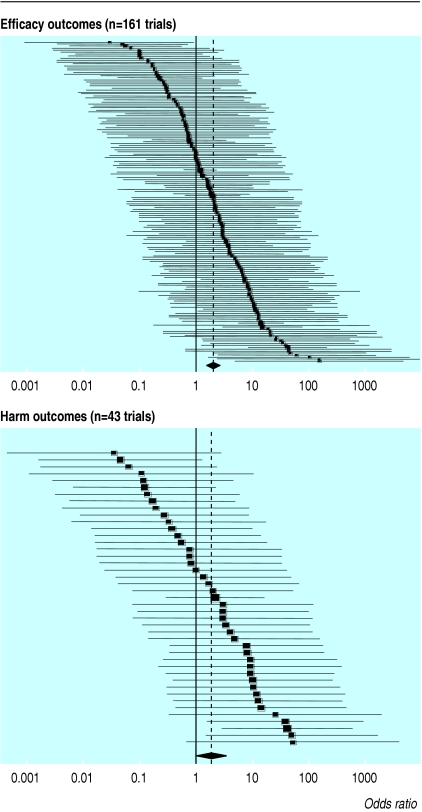

Fig 3 shows the odds ratios for outcome reporting bias in each trial. Statistically significant outcomes had a higher odds of being fully reported than those that were non-significant. The pooled odds ratio for outcome reporting bias in all trials was 2.0 (95% confidence interval 1.6 to 2.7) for efficacy outcomes and 1.9 (1.1 to 3.5) for harms (table 5). Across study designs, the size of bias was similar for efficacy outcomes. We found greater variation between study designs with harm outcomes, although the subset of crossover trials contained few studies.

Fig 3.

Odds ratios (black squares) with 95% confidence intervals for outcome reporting bias in randomised trials published in December 2000 and cited in PubMed. Size of the square reflects the weight of the trial in calculating the pooled odds ratio (diamond and dotted line)

Table 5.

Pooled odds ratio for outcome reporting bias (fully reported v incompletely reported outcomes) in randomised trials published in December 2000 and cited in PubMed, stratified by study design and sensitivity analyses

| Trial population | Efficacy outcomes: No of trials* | Efficacy outcomes: odds ratio (95% CI)† | Harm outcomes: No of trials* | Harm outcomes: odds ratio (95% CI)† |
|---|---|---|---|---|
| All trials | 161 | 2.0 (1.6 to 2.7) | 43 | 1.9 (1.1 to 3.5) |
| Parallel group trials | 135 | 1.9 (1.4 to 2.6) | 33 | 3.1 (1.6 to 5.8) |
| Crossover trials | 22 | 2.7 (1.4 to 5.3) | 9 | 0.32 (0.10 to 1.1) |
| Excluding survey non-responders | 114 | 2.1 (1.6 to 2.9) | 31 | 1.4 (0.66 to 3.0) |
| Excluding physiological or pharmacokinetic trials | 148 | 1.9 (1.4 to 2.5) | 38 | 2.4 (1.3 to 4.4) |
| Fully or partially reported outcomes v qualitatively or unreported outcomes | 134 | 3.2 (2.4 to 4.2) | 38 | 4.2 (2.5 to 7.0) |

*Trials excluded if odds ratio could not be calculated because of empty rows or columns in 2×2 table (see methods for details).

†Except for the bottom row, odds ratio >1 signifies that fully reported outcomes have higher odds of being significant (P<0.05) than incompletely reported outcomes. In bottom row, odds ratio >1 means that fully or partially reported outcomes have higher odds of being significant (P<0.05) than qualitatively or unreported outcomes.

Sensitivity analyses showed that the overall odds ratios were not greatly affected when we excluded non-responders to our survey or physiological or pharmacokinetic trials (table 5). Dichotomising the level of outcome reporting differently (fully or partially reported v qualitatively reported or unreported) produced greater bias (table 5).

In our exploratory multivariate analysis, sample size and reporting of a power calculation were excluded post hoc based on collinearity with other variables. The final exploratory model revealed that multicentre trials were associated with significantly less bias than were single centre trials (odds ratio 0.44 (95% confidence interval 0.24 to 0.80)), while those that defined primary outcomes in their publications were associated with greater bias than those which did not specify any (1.8 (1.0 to 3.2)).

Discussion

We identified deficiencies in outcome reporting in a large sample of randomised trials that was unrestricted by study location or funding source. Two recent cohort studies compared study protocols with publications to show similar sizes of outcome reporting bias in 102 trials approved by a Danish ethics committee4 and 48 trials funded by a government research agency.5 Other evidence of selective outcome reporting is limited to case reports.9-11

Contacting authors for information about outcomes

Our survey results indicate that a response rate of almost 70% is achievable when asking authors for unreported outcomes. Overall, a fifth of responders provided us with a list of unreported trial outcomes, many of which were primary outcomes that would not have been identified based on the publications alone. Thus there is potential benefit in reviewers contacting authors for information about study outcomes.

However, we were writing to researchers whose studies were published recently, which is often not the case for systematic reviews. In addition, many authors provided responses that contradicted evidence within their publications. Response rates were lower for industry funded trials, and it is unclear whether authors would have been willing to provide actual data.

How common are incompletely reported outcomes?

We have shown that trial outcomes are often reported inadequately for inclusion in meta-analysis. Even among primary outcomes, over a third of trials had at least one that was incompletely reported. Publications for crossover trials were particularly deficient, as they often did not provide the necessary paired outcome data.12 The revised CONSORT statement and its extension for harms recommend full reporting of data for all primary and secondary outcomes.13,14

Why are outcomes unreported?

Unreported outcomes were common, with over a third of trials omitting an average of two or more outcomes each. The decision to omit outcomes from publications seems to be made by investigators based on a combination of journal space restrictions, the importance of the outcome, and the statistical results. However, the omission of outcomes because of space constraints and a lack of clinical importance may well be directly associated with a lack of statistical significance. Outcomes could be deemed post hoc to have little clinical relevance if they fail to show significant findings and may thus be omitted when accommodating space limitations.

Is outcome reporting associated with statistical significance?

On average, the completeness of outcome reporting was biased to favour statistically significant outcomes. The sizes of bias for efficacy and harm outcomes (pooled odds ratios 2.0 and 1.9 respectively) were robust or conservative in various sensitivity analyses, and are similar to those reported in other studies (efficacy odds ratios 2.4 and 2.7).4,5 However, we identified fewer unreported outcomes because we did not review trial protocols. Accordingly, we found 44% of trials to have one or more unreported outcomes, compared with 76% and 98% of trials in the earlier studies.4,5 We may therefore have underestimated the size of reporting deficiencies in our cohort.

Limitations of study

Response bias was expected in our study, as we relied on self reported data from questionnaires and publications. We observed that for 32% of authors who denied the existence of unreported outcomes there was evidence to the contrary in their publications. In addition, we found lower response rates for trials funded solely by industry. This finding is consistent with previous observations that industry funded researchers may be less willing or able to offer data from their studies.15-18 Regardless of the funding source, a non-response or inaccurate response may arise from a reluctance to reveal biased practices, and we may therefore have underestimated the deficiencies in outcome reporting.

Implications for health care and research

Inadequate reporting of outcomes can have a detrimental effect on the critical interpretation of individual trial publications and reviews by limiting the availability of all existing data. Outcome reporting bias acts in addition to and in the same direction as publication bias of entire studies to produce inflated estimates of treatment effect.19 At its worst, the suppression of non-significant findings could lead to the use of harmful interventions. Perhaps more commonly, a treatment may be considered to be of more value than it merits, depriving patients of more effective or cheaper alternatives.

To limit outcome reporting bias, researchers and journal editors should ensure that complete data are provided for all pre-specified trial outcomes, independent of their results. Discrepancies between outcomes in the methods and results sections of publications should also be addressed during peer review. The increasing use of journal internet sites should help to alleviate concerns over space restrictions.20

Given the difficulties in identifying unreported outcomes and in contacting investigators for further information, trials should be registered and protocols should be made available in the public domain before trial completion. At the least, they should be submitted with manuscripts and reviewed when being considered for publication by journals.21-24 The choice of outcomes and analysis plans would then be transparent, serving as a deterrent to selective reporting.

What is already known on this topic

Selective reporting of some measured outcomes but not others within published trials has been shown in cohorts restricted by geography and funding source

Outcome reporting bias limits the critical interpretation of individual trials as well as the conclusions of literature reviews

What this study adds

Outcome reporting bias exists in published trials indexed on PubMed

Contacting authors for a list of unreported outcomes has the potential to identify important omissions from publications, although responses are often unreliable

Clinically important trial outcomes are often inadequately reported

Trials should be registered, and protocols should be made publicly available

Contributors: AWC is the guarantor of the study, had full access to all the data in the study, and takes responsibility for the integrity of the data and the accuracy of the data analysis. AWC contributed to the study conception and design, acquisition of data, analysis and interpretation of data, and drafting the article. DGA contributed to the study conception and design, analysis and interpretation of data, and drafting the article.

Funding: AWC was funded by the Rhodes Trust; DGA is funded by Cancer Research UK. The funding sources had no role in any aspect of the study.

Competing interests: None declared.

Ethical approval: None required.

References

- 1. Hahn S, Williamson PR, Hutton JL. Investigation of within-study selective reporting in clinical research: follow-up of applications submitted to a local research ethics committee. J Eval Clin Pract 2002;8:353-9.

- 2. Tannock IF. False-positive results in clinical trials: multiple significance tests and the problem of unreported comparisons. J Natl Cancer Inst 1996;88:206-7.

- 3. Streiner DL, Joffe R. The adequacy of reporting randomized, controlled trials in the evaluation of antidepressants. Can J Psychiatry 1998;43:1026-30.

- 4. Chan AW, Hróbjartsson A, Haahr MT, Gøtzsche PC, Altman DG. Empirical evidence for selective reporting of outcomes in randomized trials: comparison of protocols to published articles. JAMA 2004;291:2457-65.

- 5. Chan AW, Krleža-Jerić K, Schmid I, Altman DG. Outcome reporting bias in randomized trials funded by the Canadian Institutes of Health Research. CMAJ 2004;171:735-40.

- 6. Robinson KA, Dickersin K. Development of a highly sensitive search strategy for the retrieval of reports of controlled trials using PubMed. Int J Epidemiol 2002;31:150-3.

- 7. Chan AW, Altman DG. Epidemiology and reporting of randomised trials published in PubMed journals. Lancet (in press).

- 8. Thompson SG, Sharp SJ. Explaining heterogeneity in meta-analysis: a comparison of methods. Stat Med 1999;18:2693-708.

- 9. West RR, Jones DA. Publication bias in statistical overview of trials: example of psychological rehabilitation following myocardial infarction [abstract]. In: Proceedings of the 2nd International Conference on the Scientific Basis of Health Services and 5th Annual Cochrane Colloquium; 1997. October 8-12, Amsterdam: 82.

- 10. McCormack K, Scott NW, Grant AM. Outcome reporting bias and individual patient data meta-analysis: a case study in surgery [abstract]. In: Abstracts for workshops and scientific sessions, 9th International Cochrane Colloquium; 2001. October 9-13, Lyons: 34-5.

- 11. Felson DT. Bias in meta-analytic research. J Clin Epidemiol 1992;45:885-92.

- 12. Elbourne DR, Altman DG, Higgins JP, Curtin F, Worthington HV, Vail A. Meta-analyses involving cross-over trials: methodological issues. Int J Epidemiol 2002;31:140-9.

- 13. Moher D, Schulz KF, Altman DG. The CONSORT statement: revised recommendations for improving the quality of reports of parallel-group randomised trials. Lancet 2001;357:1191-4.

- 14. Ioannidis JP, Evans SJ, Gøtzsche PC, O'Neill RT, Altman DG, Schulz K, et al. Better reporting of harms in randomized trials: an extension of the CONSORT statement. Ann Intern Med 2004;141:781-8.

- 15. Johansen HK, Gøtzsche PC. Problems in the design and reporting of trials of antifungal agents encountered during meta-analysis. JAMA 1999;282:1752-9.

- 16. Hampton JR, Julian DG. Role of the pharmaceutical industry in major clinical trials. Lancet 1987;ii:1258-9.

- 17. Roberts I, Schierhout G. The private life of systematic reviews. BMJ 1997;315:686-7.

- 18. Blumenthal D, Campbell EG, Anderson MS, Causino N, Louis KS. Withholding research results in academic life science. Evidence from a national survey of faculty. JAMA 1997;277:1224-8.

- 19. Song F, Eastwood AJ, Gilbody S, Duley L, Sutton AJ. Publication and related biases. Health Technol Assess 2000;4(10):1-115.

- 20. Chalmers I, Altman DG. How can medical journals help prevent poor medical research? Some opportunities presented by electronic publishing. Lancet 1999;353:490-3.

- 21. Staessen JA, Bianchi G. Registration of trials and protocols. Lancet 2003;362:1009-10.

- 22. Godlee F. Publishing study protocols: making them more visible will improve registration, reporting and recruitment. BMC News Views 2001;2:4.

- 23. Goldbeck-Wood S. Changes between protocol and manuscript should be declared at submission. BMJ 2001;322:1460-1.

- 24. Murray GD. Research governance must focus on research training. BMJ 2001;322:1461-2.