Abstract

Previous studies have shown that giant pandas (Ailuropoda melanoleuca) can discriminate face-like shapes, but little is known about their cognitive ability with respect to the emotional expressions of humans. We tested whether adult giant pandas can discriminate expressions from pictures of half of a face and found that pandas can learn to discriminate between angry and happy expressions based on global information from the whole face. Young adult pandas (5–7 years old) learned to discriminate expressions more quickly than older individuals (8–16 years old), but no significant differences were found between females and males. These results suggest that young adult giant pandas are better at discriminating emotional expressions of humans. We showed for the first time that the giant panda can discriminate the facial expressions of humans. Our results can also be valuable for the daily care and management of captive giant pandas.

Introduction

Animals adjust their behaviour based on their information-recognition ability1–3. Some types of recognition are innate, but others are acquired through learning (acquisition)4,5. Emotional facial expressions serve critical adaptive purposes6. They evolved for physiological functions7,8 and conspecific interactions9–11, and they are also involved in interspecific interactions12. Recent research has shown that domestic animals can discriminate human facial expressions13,14. Some research has also demonstrated that captive wildlife can recognize human faces15, but it is still unknown whether non-primate wildlife can discriminate the emotional facial expressions of humans.

Giant pandas have been shown to maintain their social relationships through visual as well as chemical (e.g., glandular secretions or urine) communication16–19. Giant pandas exhibit marking behaviour by peeling bark or disturbing the soil with their claws and directly transfer visual information through body language20,21. In addition, pandas can discriminate between black-and-white objects with only subtle differences in shape, implying they can perceive face-like stimuli22. However, previous research has shown that giant pandas cannot recognize themselves in a mirror but instead consider the image to be a separate conspecific individual, indicating they do not have the capacity for self-recognition23. Therefore, many questions remain about the cognitive abilities of giant pandas.

Giant pandas have been bred in captivity for only 64 years and have never been intentionally selected and bred for panda–human interactions24. Understanding the ability of the giant panda as a wild animal to discriminate human facial expressions can provide valuable information for their daily care. In addition to their close interaction with keepers due to their daily care24, nearly three million tourists visit the Chengdu Research Base of Giant Panda Breeding to see the approximately one hundred and fifty giant pandas and ten new cubs at the facility each year. Thus, these giant pandas are exposed to the various expressions of a large number of visitors each day. Since giant pandas have visual and cognitive abilities, their response to human contact may lead to changes in behaviour and recognition, as has been found in domestic dogs (Canis familiaris)25 and cats (Felis catus)26 as well as birds27.

The captive breeding of giant pandas has been so successful that the captive population of giant pandas reached 471 in 201628. Subsequently, a program was launched to release captive giant pandas into the wild to reinforce wild populations. For captive-bred giant pandas, constant contact with humans is inevitable during pre-release training, which is essential for their survival in the wild29.

In this paper, following the methods of Müller et al.30, we aimed to examine the ability of pandas to discriminate different emotional expressions of human faces. A previous study showed that giant pandas can recognize face-like geometric patterns22. To rule out the possibility that giant pandas can discriminate among facial expressions simply based on the geometric relationships among facial features, we presented giant pandas with pictures of halves of faces with happy or angry expressions and tested whether they could choose the correct stimuli.

Results

Eighteen adult giant pandas, nine females and nine males, were assigned to 4 groups based on the rewarded stimulus (happy or angry faces) and horizontal facial part (upper or lower half of the face) prior to the experiment (Table 1). To familiarize the subjects with the facial discrimination tasks and to select cooperative individuals for follow-up tests, pictures of faces with neutral expressions and the back of the head were simultaneously presented to the giant pandas in the pre-training phase. Ten giant pandas (6 females and 4 males) showed the ability to discriminate pictures of the face from those of the back of the head and entered the first stage trial (Table 1). There was no significant difference in age between the giant pandas that passed the pre-training and those that failed (Fig. S1, t-test, t(16) = 0.170, P = 0.887), and there was also no difference in the number of males and females (Table S1, chi-square test: χ²(1) = 0.900, P = 0.343).

Table 1.

Subjects, experimental groups and training performances.

| Name | Studbook no. | Sex | Age (years) | Pre-training: no. sessions (a) | First stage set | Rewarded expression | First stage: no. sessions (a) |
|---|---|---|---|---|---|---|---|
| A Bao | 801 | Female | 5 | 27 | Upper | Happy | 10 |
| Qi Fu | 709 | Female | 7 | 10 | Lower | Happy | 30 |
| Xing Rong | 680 | Female | 8 | 5 | Lower | Happy | NA |
| Qi Zhen | 490 | Female | 16 | 15 | Lower | Happy | 24 |
| Shu Qing | 480 | Female | 16 | NA | Upper | Happy | NA |
| Yong Bing | 738 | Male | 7 | 15 | Lower | Happy | 20 |
| Xi Lan | 731 | Male | 7 | 30 | Lower | Happy | NA |
| Mei Lan | 649 | Male | 9 | 10 | Upper | Happy | NA |
| Bing Dian | 520 | Male | 15 | 28 | Upper | Happy | 33 |
| Mei Bing | 737 | Female | 7 | 16 | Upper | Angry | 15 |
| Bei Chuan | 785 | Female | 7 | 6 | Upper | Angry | 8 |
| Da Jiao | 845 | Female | 7 | 30 | Upper | Angry | NA |
| Xing Ya | 881 | Female | 8 | 13 | Lower | Angry | 35 |
| Wu Yi | 830 | Male | 7 | 5 | Upper | Angry | NA |
| Xing Bing | 814 | Male | 10 | 12 | Upper | Angry | NA |
| Qiao | 824 | Male | 11 | NA | Lower | Angry | NA |
| Xiong Bing | 540 | Male | 14 | 24 | Lower | Angry | 31 |
| Qiu Bing | 574 | Male | 12 | 10 | Lower | Angry | 30 |

NA: not applicable. a: Bold type indicates that the learning criterion was reached.

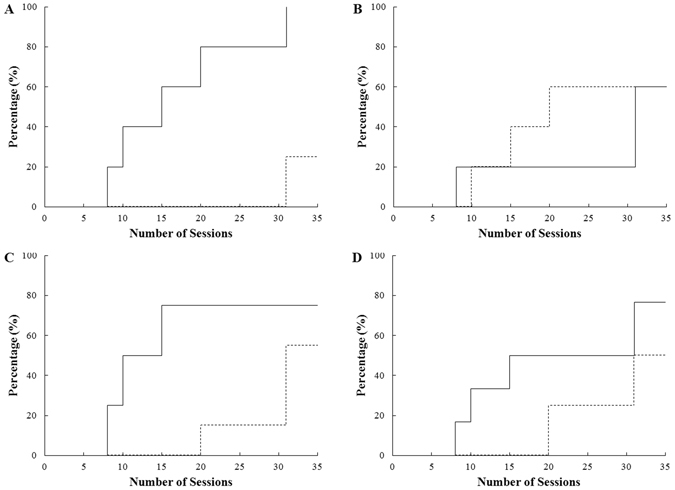

All giant pandas that entered the first stage were simultaneously presented with two horizontal half-face pictures from the same person, one happy and one angry. The stimulus showing the happy expression was the rewarded stimulus for the happy group (5 individuals), whereas for the other five in the angry group, the stimulus with the angry expression was rewarded (Table S2). A total of 5 young adult giant pandas (5–7 years old) and 1 older individual (older than or equal to 8 years) met the requirement (≥70% correct choices) within 30 sessions in the first stage (similar to Nagasawa et al.25). Another 2 male and 2 female individuals, with ages ranging from 8 to 16 years old, failed in this stage (Table 1). Analysis of the Cox proportional hazards model showed that the young adult pandas reached the learning requirement at a significantly faster rate than the older ones (Fig. 1A; proportional hazards model: n = 10, z = 2.285, P = 0.02).

Figure 1.

Survival curves of the giant pandas meeting the first-stage criterion. (A) Cumulative proportion of young giant pandas (solid line) and older giant pandas (dashed line) that reached the criterion. (B) Cumulative proportion of giant pandas that reached the criterion in the angry group (solid line) and the happy group (dashed line). (C) Cumulative proportion of giant pandas that reached the criterion when shown the upper face (solid line) and the lower face (dashed line). (D) Cumulative proportion of female giant pandas (solid line) and male giant pandas (dashed line) that reached the criterion.

Three giant pandas from the happy group and three from the angry group achieved the learning criterion of the first stage. Analysis of the Cox proportional hazards model showed that there was no significant difference in the speed of achieving the criterion between the happy and angry groups (Fig. 1B; proportional hazards model: n = 10, z = 0.49, P = 0.63). This indicated that the emotion associated with the human expression did not affect the learning speed of the giant pandas. For the upper and lower face groups, three giant pandas that were shown the upper face and three that were shown the lower face met the requirement, and there was no significant difference in the learning rate between the two groups (Fig. 1C; proportional hazards model: n = 10, z = 1.29, P = 0.20). Additionally, there was no significant difference in the rate of learning between females and males (Fig. 1D; proportional hazards model: n = 10, z = 0.92, P = 0.36).

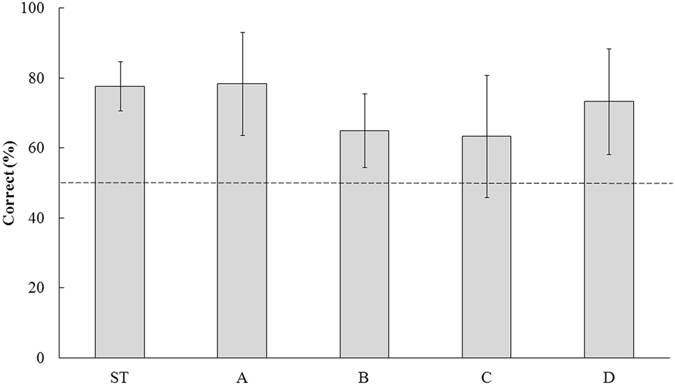

In the second stage, standard trials and probe trials were carried out on the 6 giant pandas that passed the first stage (Table S3). The standard trials were used to reinforce the learned expression-selection behaviour. Four types of probe trials were carried out to test whether giant pandas discriminate facial expressions based on facial features rather than simply their memory of the pictures, and whether they do so based on the features of the entire face rather than local features. The other halves of the faces of the same persons as in the first stage were used as stimuli in the probe trials, as were pictures of the same halves and the other halves of novel faces. We expected that, if giant pandas use global information from the whole face by linking the information from one horizontal half of a face to the other half, they would choose the same emotion as in the first stage30. All six giant pandas performed significantly better than chance in all of the probe trials (Table 2, Fig. 2). However, there were no significant differences among the probe trials in terms of the proportion of correct choices (Fig. 2; generalized linear mixed model: χ²(3) = 3.225, P = 0.358), and we found that the proportion of correct choices did not differ between females (n = 4) and males (n = 2) (Fig. S2; generalized linear mixed model: F(1, 223) = 0.191, P = 0.663) or between the angry (n = 3) and happy (n = 3) groups (Table S3; generalized linear mixed model: F(1, 223) = 0.152, P = 0.697) in the second stage.

Table 2.

Binomial models comparing performance in the four types of probe trials and the level of significance.

| Probe trial | Estimate* | Standard error | z (df = 59) | P |
|---|---|---|---|---|
| a | 1.285 | 0.313 | 4.101 | <0.001 |
| b | 0.619 | 0.271 | 2.287 | 0.022 |
| c | 0.547 | 0.268 | 2.040 | 0.041 |
| d | 1.012 | 0.292 | 3.465 | 0.001 |

a: the same horizontal half of the face as in the standard trials but with novel faces; b: the other horizontal half of the same faces used in the standard trials; c: the other horizontal half of the novel faces; d: the left half (vertical) of the faces of the same persons used in the standard trials.

*Coefficient estimate of binomial models with logit link, value of zero indicates a choice probability of 50%.
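Because the estimates in Table 2 are on the logit scale, they can be converted back to proportions of correct choices with the inverse logit. The following is a minimal sketch of that conversion; the variable and function names are illustrative and are not part of the original analysis, which was carried out in R.

```python
import math

# Coefficient estimates (logit scale) copied from Table 2.
estimates = {"a": 1.285, "b": 0.619, "c": 0.547, "d": 1.012}


def inverse_logit(x: float) -> float:
    """Map a logit-scale value to a probability; 0 maps to 0.5 (chance)."""
    return 1.0 / (1.0 + math.exp(-x))


# Estimated proportion of correct choices for each probe trial type.
proportions = {probe: inverse_logit(b) for probe, b in estimates.items()}

for probe, p in proportions.items():
    print(f"probe {probe}: estimated proportion correct = {p:.3f}")
```

The resulting proportions are roughly 0.78, 0.65, 0.63 and 0.73 for probe types a to d. If each of the six subjects completed ten probe trials of each type (60 trials per type), these values would correspond to about 47, 39, 38 and 44 correct choices; those counts are an inference from the estimates, not figures reported in the text.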

Figure 2.

Ratios of correct responses in the standard trials (ST) and the four types of probe trials in the second stage. Proportion of conditioned correct choices for the 6 subjects in the second stage in standard trials (150 trials per subject) and in each type of probe trial (10 trials per subject): (A) the same horizontal portion of the face as shown in the standard trials but with novel faces; (B) the other horizontal half of the same faces used in the standard trials; (C) the other horizontal half of the novel faces; (D) the left half (vertical) of the same faces used in the standard trials.

Discussion

This study revealed that giant pandas can discriminate human emotional expressions based on information from the entire face rather than local features when they are presented with pictures of half of a face. This ability to discriminate human emotional expressions has been identified in various species, especially domestic animals31–34. Dogs30 and pigeons (Columba livia)31,33,35 can do this based on information from the entire face. Note that the experimenter holding the pictures was not blind to the stimuli in this study. We cannot, therefore, rule out the possibility that the giant pandas received unintentional hints from the experimenter (i.e., the Clever Hans effect), although we tried to avoid this.

Our results showed that a negative stimulus (angry expression) did not affect the learning ability of the giant panda. An angry expression, which is generally accompanied by a threat or punishment, can have strong effects on captive or domestic animals32–35. A previous study found that dogs show better learning ability when rewarded for touching a happy stimulus than when rewarded for touching an angry stimulus30. A physiological reaction was also recorded in horses when they were exposed to angry facial expressions36. Our results did not show an effect of the valence of the stimuli (happy versus angry) on the speed of learning. This was possibly because the giant pandas used in this study have never experienced any negative treatment, such as threats or punishment, due to the rules and regulations for feeding and management24, and they therefore cannot associate negative experiences with angry expressions. However, without detailed and extensive experiments on the effects of angry expressions, we cannot rule out the possibility that angry expressions have other behavioural or physiological impacts on giant pandas. Further studies are also needed to explore whether the emotions of keepers and visitors can affect giant pandas.

In this study, young adult giant pandas (5–7 years old) exhibited a better learning ability than older individuals (8–16 years old). The learning abilities of animals change over their lifetime, increasing rapidly from infancy to the young adult stage; then, depending on the specific ability, learning ability either improves, is maintained, or declines in old age37,38. Studies have revealed that wild giant pandas learn most of their survival skills before 1.5 years of age, when they live with their mothers as cubs20. Wild subadult giant pandas have been reported to learn mating behaviours by watching adults during the mating season39.

As captive-bred giant pandas are now being released into the wild to supplement the small wild populations, enhancing the survival ability of captive-bred giant pandas before release is essential, especially in the early stage29,40. Our results suggest that young giant pandas are more suitable for pre-release training than old ones. We also suggest selecting young individuals for release because learning is necessary to adapt to the wild environment; for example, it allows potential predators to be identified or suitable habitat to be found.

Gender differences in learning ability vary among species25,41–43, but females tend to show superior learning abilities over males44–47. Female dogs respond more obviously than males do to human emotions48,49. In this study, however, we did not find a significant difference in the ability to discriminate human facial expressions between female and male giant pandas. Note that our results are limited, since “negative” findings from the trials may be a result of small sample sizes50.

Only pictures of male humans were used as stimuli to avoid introducing an extra factor of stimulus gender, given the small sample size of giant pandas in this study. Stimulus gender can affect the learning behaviour of animals43. Nagasawa et al.25 found that the ability of dogs to discriminate facial expressions increased when they were shown faces of the same gender as their owner rather than those of the opposite gender. It will be interesting to test whether giant pandas can discriminate the facial expressions of female humans as well as those of males.

Methods

Animals and treatments

A total of 18 adult giant pandas at the Chengdu Research Base of Giant Panda Breeding were selected as subjects; information about the pandas is presented in Table 1. The 9 females and 9 males were in good health during the research phase, which began in September 2015 and ended in February 2016. All experimental procedures were approved by the Animal Care and Use Committee of the Chengdu Research Base of Giant Panda Breeding and were performed in accordance with its guidelines.

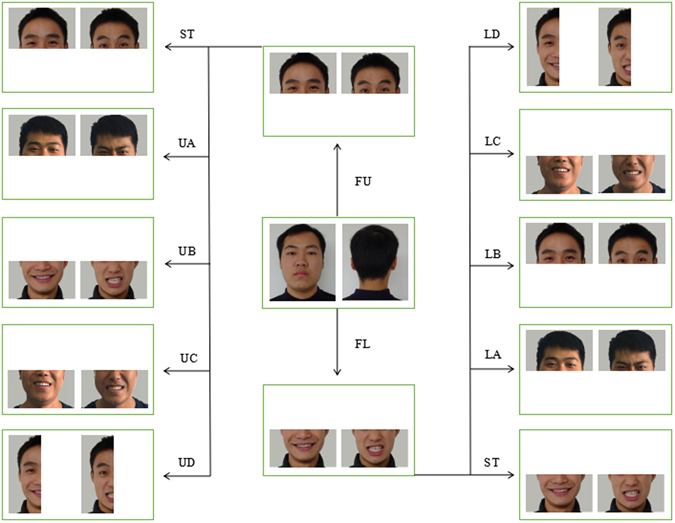

All individuals were divided into groups by horizontal half-face class and expression class in both the first stage and second stage. For the horizontal half-face class, we had upper- and lower-face groups; the images of the faces were divided at the horizontal midline of the nose (Fig. 4). There were 9 subjects each in the upper-face and lower-face groups. The facial expression class consisted of 2 groups, the happy group and the angry group, and each group had 9 individuals (Table 1). In the happy group, pictures of happy human faces were the correct stimuli, and in the angry group, pictures of humans with angry expressions were the correct stimuli.

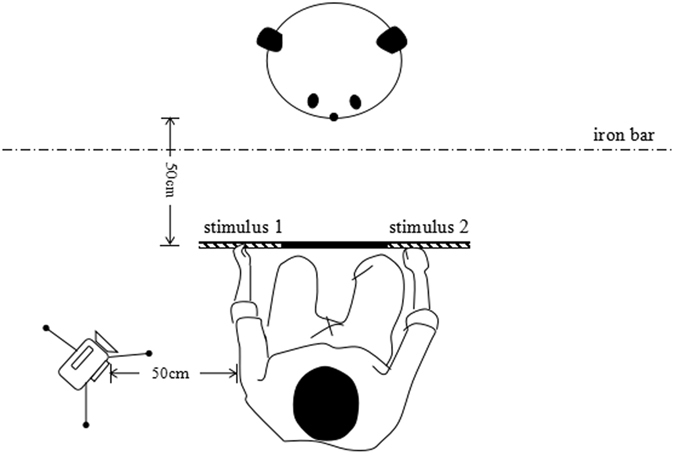

Figure 3.

Overhead sketch of the experimental setup (see text for details). This diagram was created using Microsoft Paint, https://support.microsoft.com.

Experimental apparatus

The experiment was conducted in different pens at the Chengdu Research Base of Giant Panda Breeding, generally from 8:00 to 11:00 and from 14:00 to 16:00; only one panda was tested at a time. The pens were approximately 4 × 5 m, and the lighting was similar to that under natural conditions. The giant pandas were attracted to the iron bars by calling their names, and they tended to sit near or hold the iron bars. The response of captive giant pandas to calling is established during the subadult stage to facilitate their daily care. The experimenter squatted and held a transparent plastic sheet (65 × 25 cm) facing the panda within a distance of 50 cm; the sheet was placed at a height that allowed the subject to visually perceive the stimuli and indicate a choice with its nose. The experimenter kept their line of sight on the giant panda to avoid giving unintentional hints about the choices. A SONY FDR-AXP35 camera (Sony Corporation, Konan Minato-ku, Tokyo, Japan) was placed 50 cm behind the experimenter and to the left to record the tests (Fig. 3).

Two pictures (stimuli) were placed directly on the transparent plastic sheet and positioned 20 cm away from each other (Fig. 4). Seventeen pairs of pictures were presented as A4-size pictures (25.4 cm × 20.3 cm). The faces used in the pictures were of young men with short black hair, without any accessories (such as glasses or a hat), against a gray background. Posed expressions were captured following a guide from Ekman and Friesen51. Every expression was photographed 5 times, and the most typical pictures were chosen for the experiment based on the agreement of three authors of this manuscript. The pictures of faces were split at the middle of the nose horizontally or vertically depending on the design of the trial.

Figure 4.

Some pictures used as stimulus pairs in this study. All pictures were of the faces of adult Chinese men, and the pre-training stimuli and test stimuli were pictures of members of our laboratory. Shown are example stimulus pairs in the pre-training set and the two first-stage sets as well as example stimulus pairs in the probe trials for a subject trained with the upper or lower halves of the faces (left and right columns, respectively). All picture pairs have been reproduced with the permission of the depicted person. FU: first stage trial in upper group; FL: first stage trial in lower group; ST: standard trial; UA: same portion, novel face in upper group; UB: other horizontal half, same face in upper group; UC: other horizontal half, novel face in upper group; UD: left half (vertical), same face in upper group; LA: same horizontal portion, novel face in lower group; LB: other horizontal half, same face in lower group; LC: other horizontal half, novel face in lower group; LD: left half (vertical), same face in lower group.

Experimental procedure

We conducted a two-way discrimination experiment in which the selection of one of the two stimuli was consistently rewarded with food (apple pieces) and conditioned with a whistle. The subjects were trained to indicate one stimulus with their noses in two ways. 1) The subject shifted its head and touched the iron bars with the tip of the nose pointing in the direction of the target stimulus. 2) The subject’s face was pressed to the iron bars in the direction of the target stimulus or the target stimulus was touched with the tip of the nose. Either of these two behaviours indicated that the subject had completed one trial. The subjects underwent one session of 30 trials each day. One trial lasted no more than 50 s, with breaks of between 5 and 30 s separating the trials, and each session lasted between 2 and 15 min. If a subject chose neither of the stimuli after a break, the experiment continued on a different day. To eliminate any confounding effects of human gender, only pictures of men were used in this study.

The experiment consisted of pre-training and two stages of discrimination training that differed only in the stimuli presented. In each trial, the two pictures shown to the giant panda were of the same person, and the rewarded stimulus was placed on either the left or the right side of the sheet. In each session, the frequencies of left and right placement of the stimuli were equal, but they were presented in a random sequence. If the subject touched the correct stimulus based on the conditions defined above, the experimenter immediately provided a small slice of apple and whistled for 1 s. If the subject indicated the incorrect stimulus, no food reward was given and no sound was played until the subject chose the correct stimulus.
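The balanced but randomly ordered left/right placement described above can be sketched as follows. This is only an illustration of the counterbalancing idea; the function name `placement_sequence` and its parameters are hypothetical, and the text does not state how the random sequences were actually generated.

```python
import random


def placement_sequence(n_trials=30, rng=None):
    """Return a shuffled list of 'left'/'right' placements with equal counts.

    n_trials must be even so that both sides appear equally often.
    rng is an optional random.Random instance, useful for reproducibility.
    """
    if n_trials % 2 != 0:
        raise ValueError("n_trials must be even to balance left and right")
    rng = rng or random.Random()
    sides = ["left"] * (n_trials // 2) + ["right"] * (n_trials // 2)
    rng.shuffle(sides)  # random order, but the counts stay fixed
    return sides


# Example: one 30-trial session with a fixed seed for reproducibility.
session = placement_sequence(30, random.Random(0))
print(session.count("left"), session.count("right"))
```

Fixing the counts before shuffling guarantees that a side preference alone yields exactly 50% correct choices within a session, which is why this design is preferred over drawing each side independently at random.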

Pre-training

The aim of pre-training was to familiarize the pandas with the discrimination tasks and to select cooperative individuals for follow-up tests. Pictures of two keepers were used as stimuli. A pair of pictures of the same person, one of the face and one of the back of the head, was shown to subjects in each trial, and subjects were rewarded for touching the face stimulus. The placement (left or right) of the face stimulus was randomly chosen before the trials. In total, 30 sessions of trials were carried out, and each session included 30 trials. Pictures of each keeper were used 15 times in each session in a random sequence.

A subject passed a session if it selected the correct stimulus at least 24 times in 30 trials, and 3 consecutive passed sessions were required to achieve our success criteria (similar to Müller et al.30). The subjects that met the criteria advanced to the next stage, and the others were removed from the experiment.

First stage

The aim of the first stage was to test the different learning rates of the pandas and their reactions to emotion. Eighteen subjects were assigned to groups based on the upper/lower half (horizontal) of faces and happy/angry expression (Table S2). One group (n = 9) was shown only the upper halves of faces (upper group), and the other group (n = 9) was shown only the lower halves of faces (lower group). A pair of pictures, one happy and one angry, was shown to each giant panda in a trial. The subjects were rewarded for touching the happy stimulus or angry stimulus depending on the group to which they were assigned. The pictures of 10 strangers were used in this stage. Each session included 30 trials, and each stranger’s pictures were used in 3 trials. The position of the rewarded stimulus and the sequence of strangers’ pictures were randomized.

A giant panda passed a session if it selected the correct stimulus at least 21 times in 30 trials (corresponding to p < 0.05, binomial test), and 4 passed sessions out of any 5 consecutive sessions were required to achieve our success criteria. The subjects that met the criteria advanced to the next stage, and those that failed within 30 sessions were removed from the experiment.
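The first-stage criterion can be checked with a short calculation. The sketch below computes an exact binomial p-value against chance (0.5) and scans a list of per-session scores for the first point at which 4 of 5 consecutive sessions were passed. Two things are assumptions here: the text does not say whether the binomial test was one- or two-sided (a two-sided test is used), and the function names and the example scores are hypothetical.

```python
from math import comb


def binomial_two_sided_p(successes, trials):
    """Exact two-sided binomial p-value against a chance level of 0.5."""
    k = max(successes, trials - successes)
    upper_tail = sum(comb(trials, i) for i in range(k, trials + 1)) / 2**trials
    # The distribution is symmetric at p = 0.5, so doubling one tail is exact.
    return min(1.0, 2 * upper_tail)


def sessions_to_criterion(correct_counts, pass_mark=21, window=5, needed=4):
    """Return the 1-based session at which the criterion is first met, else None.

    The criterion is `needed` passed sessions within any `window`
    consecutive sessions, where a session is passed at >= pass_mark correct.
    """
    passed = [count >= pass_mark for count in correct_counts]
    for end in range(1, len(passed) + 1):
        start = max(0, end - window)
        if sum(passed[start:end]) >= needed:
            return end
    return None


print(binomial_two_sided_p(21, 30))  # below 0.05
print(binomial_two_sided_p(20, 30))  # above 0.05

# Hypothetical scores (correct choices out of 30) for six sessions.
example_scores = [18, 22, 21, 19, 23, 24]
print(sessions_to_criterion(example_scores))
```

Under the two-sided assumption, 21 of 30 is the smallest score that reaches p < 0.05, which matches the 70% threshold quoted in the Results. The same function covers the pre-training rule when called with `pass_mark=24, window=3, needed=3`.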

Second stage

The aim of the second stage was to explore whether the pandas could recognize the emotional expressions of human faces or simply used local cues for discrimination. The same procedure used for group assignment in the first stage was used (Table S3). Two trial types were carried out in this stage: standard trials and probe trials. The standard trials followed the same procedure as in the first stage, using the same pictures (old pictures) as stimuli and rewards depending on the group assignment. Four types of probe trials (Fig. 4) were designed: a. pictures of novel faces, same horizontal half; b. the other horizontal half of the old pictures; c. the other horizontal half of the novel faces; and d. the left half (vertical) of the old pictures. A total of 5 pairs of novel pictures of unfamiliar people (different from those in the first stage) were used in the probe trials. Only the left halves of the faces were used in probe trial d to rule out the possibility of lateral gaze bias52,53.

In this stage, the four probe trials were interspersed among the standard trials, one after every third standard trial, in alphabetical order (a to d). Each session comprised 15 standard trials and 4 probe trials (19 trials), and each subject underwent a total of 10 sessions (190 trials). In the probe trials, the subjects were rewarded for selecting either happy or angry faces, but whether the selection was correct according to the group assignment was recorded.
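One plausible reading of this session layout is that a probe trial followed the 3rd, 6th, 9th and 12th standard trial, in the order a, b, c, d. The sketch below builds a session under that assumption; the exact positions are not stated in the text, and the function name `session_schedule` is hypothetical.

```python
def session_schedule(n_standard=15, probes=("a", "b", "c", "d"), gap=3):
    """Build one session: a probe after every `gap`-th standard trial.

    'ST' marks a standard trial; probes are used in the given order
    until none remain, so any trailing standard trials run without probes.
    """
    schedule = []
    remaining = list(probes)
    for i in range(1, n_standard + 1):
        schedule.append("ST")
        if i % gap == 0 and remaining:
            schedule.append(remaining.pop(0))
    return schedule


session = session_schedule()
print(session)
print(len(session), "trials per session")

# Totals per subject over 10 sessions.
n_sessions = 10
print(session.count("ST") * n_sessions, "standard trials")
print(n_sessions, "trials of each probe type")
```

Under this layout each session has 19 trials, and 10 sessions give 150 standard trials and 10 trials of each probe type per subject, which agrees with the trial counts given in the Figure 2 legend.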

Statistical analyses

We compared the rate at which the giant pandas met the success requirement between groups using Cox proportional hazards models in the “survival” package54. Performance in the four types of probe trials was compared using a generalized linear mixed effects model (GLMM) in the package “lme4”55, assuming a binomial distribution with a logit-link function. The IDs of the giant pandas were included as random factors to account for the repeated measures structure of the dataset. The proportions of correct choices under the four experimental conditions were compared to the 50% chance level using binomial generalized linear models (GLMs). All analyses were performed in the R 3.3.2 environment56.

Acknowledgements

This research was supported by the National Natural Science Foundation of China (31372223, 31670530, 31101649), the Sichuan Youth Science and Technology Foundation (2017JQ0026), the Chengdu Giant Panda Breeding Research Foundation (CPF Research 2013–17, 2014-11, 2015–19), and Chengdu Science and Technology Bureau (2015-HM01-00265-SF). We thank Xiangming Huang, Kongju Wu, Tao Deng, Kuixing Yang, and our colleagues for their assistance during this study.

Author Contributions

D.Q. and Z.Z. conceived, designed and supervised the experiments. Y.L., R.X., F.F., P.C. and C.C. performed the experiments and collected the data. Y.L. and Q.D. analyzed the data and created the figures and tables. Y.L., Q.D., D.Q. and Z.Z. wrote the paper. R.H., Z.Z., J.L. and X.G. revised the paper.

Competing Interests

The authors declare that they have no competing interests.

Footnotes

Youxu Li and Qiang Dai contributed equally to this work.

A correction to this article is available online at https://doi.org/10.1038/s41598-018-21907-8.

Electronic supplementary material

Supplementary information accompanies this paper at doi:10.1038/s41598-017-08789-y.

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Zejun Zhang, Email: zhangzj@ioz.ac.cn.

Dunwu Qi, Email: qidunwu@163.com.

References

- 1.Burghardt GM, Greene HW. Predator simulation and duration of death feigning in neonate hognosesnakes. Anim. Behav. 1988;36:1842–1844. doi: 10.1016/S0003-3472(88)80127-1. [DOI] [Google Scholar]

- 2.Carter J, Lyons NJ, Cole HL, Goldsmith AR. Subtle cues of predation risk: starlings respond to a predator’s direction of eye-gaze. P. Roy. Soc. B-Biol. Sci. 2008;275:1709. doi: 10.1098/rspb.2008.0095. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Krueger K, Flauger B, Farmer K, Maros K. Horses (Equus caballus) use human local enhancement cues and adjust to human attention. Anim. Cogn. 2011;14:187–201. doi: 10.1007/s10071-010-0352-7. [DOI] [PubMed] [Google Scholar]

- 4.Sackett GP. Monkeys reared in isolation with pictures as visual input: evidence for an innate releasing mechanism. Science. 1966;154:1468–1473. doi: 10.1126/science.154.3755.1468. [DOI] [PubMed] [Google Scholar]

- 5.Myowa-Yamakoshi M, Yamaguchi MK, Tomonaga M, Tanaka M, Matsuzawa T. Development of face recognition in infant chimpanzees (Pan troglodytes) Cogn. Dev. 2005;20:49–63. doi: 10.1016/j.cogdev.2004.12.002. [DOI] [Google Scholar]

- 6.Shariff AF, Tracy JL. “What Are Emotion Expressions For?”. Curr. Dir. Psychol. Sci. 2011;20:395. doi: 10.1177/0963721411424739. [DOI] [Google Scholar]

- 7.Susskind JM, Anderson AK. Facial expression form and function. Communicative Integr. Biol. 2008;1:148–149. doi: 10.4161/cib.1.2.6999. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Chapman HA, Kim DA, Susskind JM, Anderson AK. In bad taste: Evidence for the oral origins of moral disgust. Science. 2009;323:1222–1226. doi: 10.1126/science.1165565. [DOI] [PubMed] [Google Scholar]

- 9.Huber, E. Evolution of facial musculature and facial expresasion. (Oxford University Press, London, 1931).

- 10.Andrew RJ. Evolution of facial expression. Science. 1963;142:1034–1041. doi: 10.1126/science.142.3595.1034. [DOI] [PubMed] [Google Scholar]

- 11.Burrows AM. The facial expression musculature in primates and its evolutionary significance. Bioessays. 2008;30:212. doi: 10.1002/bies.20719. [DOI] [PubMed] [Google Scholar]

- 12.Anderson DJ, Adolphs R. A framework for studying emotions across species. Cell. 2014;157:187–200. doi: 10.1016/j.cell.2014.03.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Hare B, Tomasello M. Human-like social skills in dogs? Trends. Cogn. Sci. 2005;9:439–444. doi: 10.1016/j.tics.2005.07.003. [DOI] [PubMed] [Google Scholar]

- 14.Albuquerque N, et al. Dogs recognize dog and human emotions. Biol. Lett. 2016;12:20150883. doi: 10.1098/rsbl.2015.0883. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Martin-Malivel J, Okada K. Human and chimpanzee face recognition in chimpanzees (Pan troglodytes): Role of exposure and impact on categorical perception. Behav. Neurosci. 2007;121:1145–1155. doi: 10.1037/0735-7044.121.6.1145. [DOI] [PubMed] [Google Scholar]

- 16.Schaller, G. B., Hu, J., Pan, W. & Zhu, J. The giant pandas of Wolong. (University of Chicago Press, Chicago, Illinois, 1985).

- 17.Nie Y, et al. Giant panda scent-marking strategies in the wild: role of season, sex and marking surface. Anim. Behav. 2012;84:39–44. doi: 10.1016/j.anbehav.2012.03.026. [DOI] [Google Scholar]

- 18.Liu D, et al. Do anogenital gland secretions of giant panda code for their sexual ability? Chinese Sci. Bull. 2006;51:1986–1995. doi: 10.1007/s11434-006-2088-y. [DOI] [Google Scholar]

- 19.Liu Y, Zhang J, Liu D, Zhang J. Vomeronasal organ lesion disrupts social odor recognition, behaviors and fitness in golden hamsters. Integr. Zool. 2014;9:255–264. doi: 10.1111/1749-4877.12057. [DOI] [PubMed] [Google Scholar]

- 20.Hu, J., Schaller, G. B., Pan, W. & Zhu J. The giant pandas of Wolong. (Sichuan Science and Technology Press, Chengdu, Sichuan, in Chinese) (1985).

- 21.Zhang Z, et al. What determines selection and abandonment of a foraging patch by wild giant pandas (Ailuropoda melanoleuca) in winter? Environ. Sci. Pollut. R. 2009;16:79–84. doi: 10.1007/s11356-008-0066-4.

- 22.Dungl E, Schratter D, Huber L. Discrimination of face-like patterns in the giant panda (Ailuropoda melanoleuca). J. Comp. Psychol. 2008;122:335–343. doi: 10.1037/0735-7036.122.4.335.

- 23.Ma X, et al. Giant pandas failed to show mirror self-recognition. Anim. Cogn. 2015;18:713–721. doi: 10.1007/s10071-015-0838-4.

- 24.Zhang, Z. & Wei, F. Giant panda ex-situ conservation: theory and practice. (Science Press, Beijing, 2006).

- 25.Nagasawa M, et al. Dogs can discriminate human smiling faces from blank expressions. Anim. Cogn. 2011;14:525–533. doi: 10.1007/s10071-011-0386-5.

- 26.Saito A, Shinozuka K. Vocal recognition of owners by domestic cats (Felis catus). Anim. Cogn. 2013;16:685–690. doi: 10.1007/s10071-013-0620-4.

- 27.Levey DJ, et al. Urban mockingbirds quickly learn to identify individual humans. PNAS. 2009;106:8959. doi: 10.1073/pnas.0811422106.

- 28.Chengdu Research Base of Giant Panda Breeding. The 2016 annual conference of Chinese committee of breeding techniques for Giant Pandas. http://www.panda.org.cn/english/news/news/2016-12-01/5881.html (2016).

- 29.Zhang Z, et al. Translocation and discussion on reintroduction of captive giant panda. Acta Theriol. Sinica. 2006;26:292–299.

- 30.Müller CA, Schmitt K, Barber AL, Huber L. Dogs can discriminate emotional expressions of human faces. Curr. Biol. 2015;25:601–605. doi: 10.1016/j.cub.2014.12.055.

- 31.Leopold DA, Rhodes G. A comparative view of face perception. J. Comp. Psychol. 2010;124:233–251. doi: 10.1037/a0019460.

- 32.Carere C, Locurto C. Interaction between animal personality and animal cognition. Curr. Zool. 2011;57:491–498. doi: 10.1093/czoolo/57.4.491.

- 33.Jitsumori M, Yoshihara M. Categorical discrimination of human facial expressions by pigeons: A test of the linear feature model. Q. J. Exp. Psychol.–B. 1997;50:253–268.

- 34.Wobber V, Hare B, Koler-Matznick J, Wrangham R, Tomasello M. Breed differences in domestic dogs’ (Canis familiaris) comprehension of human communicative signals. Interaction Studies. 2009;10:206–224. doi: 10.1075/is.10.2.06wob.

- 35.Smith AV, Proops L, Grounds K, Wathan J, McComb K. Functionally relevant responses to human facial expressions of emotion in the domestic horse (Equus caballus). Biol. Lett. 2016;12:20150907. doi: 10.1098/rsbl.2015.0907.

- 36.Niemann J, Wisse B, Rus D, Van Yperen NW, Sassenberg K. Anger and attitudinal reactions to negative feedback: The effects of emotional instability and power. Motiv. Emot. 2014;38:687–699.

- 37.Wallis LJ, et al. Aging effects on discrimination learning, logical reasoning and memory in pet dogs. AGE. 2016;38:6. doi: 10.1007/s11357-015-9866-x.

- 38.Chan AD, et al. Visuospatial impairments in aged canines (Canis familiaris): the role of cognitive-behavioral flexibility. Behav. Neurosci. 2002;116:443–454. doi: 10.1037/0735-7044.116.3.443.

- 39.Hu, J. & Wei, F. Comparative ecology of giant pandas in the five mountain ranges of their distribution in China. In Giant pandas: biology and conservation (eds Lindburg, D. & Baragona, K.) 137–148 (University of California Press, Berkeley, California, 2004).

- 40.Jiang, Z. Animal Behavioral Principles and Species Conservation Methods. (Science Press, Beijing, 2004).

- 41.Range F, Bugnyar T, Schlögl C, Kotrschal K. Individual and sex differences in learning abilities of ravens. Behav. Process. 2006;73:100–106. doi: 10.1016/j.beproc.2006.04.002.

- 42.Titulaer M, van Oers K, Naguib M. Personality affects learning performance in difficult tasks in a sex-dependent way. Anim. Behav. 2012;83:723–730. doi: 10.1016/j.anbehav.2011.12.020.

- 43.Cellerino A, Borghetti D, Sartucci F. Sex differences in face gender recognition in humans. Brain Res. Bull. 2004;63:443–449. doi: 10.1016/j.brainresbull.2004.03.010.

- 44.Astié AA, Kacelnik A, Reboreda JC. Sexual differences in memory in shiny cowbirds. Anim. Cogn. 1998;1:77–82. doi: 10.1007/s100710050011.

- 45.Halpern, D. F. Sex differences in cognitive abilities. Fourth edition. (Psychology Press, Taylor & Francis Group, New York, 2013).

- 46.Bisazza A, Agrillo C, Lucon-Xiccato T. Extensive training extends numerical abilities of guppies. Anim. Cogn. 2014;17:1413–1419. doi: 10.1007/s10071-014-0759-7.

- 47.Lucon-Xiccato T, Bisazza A. Male and female guppies differ in speed but not in accuracy in visual discrimination learning. Anim. Cogn. 2016;19:733–744. doi: 10.1007/s10071-016-0969-2.

- 48.Udell MA, Dorey NR, Wynne CD. What did domestication do to dogs? A new account of dogs’ sensitivity to human actions. Biol. Rev. 2010;85:327–345. doi: 10.1111/j.1469-185X.2009.00104.x.

- 49.Kiddie J, Collins L. Identifying environmental and management factors that may be associated with the quality of life of kennelled dogs (Canis familiaris). Appl. Anim. Behav. Sci. 2015;167:43–55. doi: 10.1016/j.applanim.2015.03.007.

- 50.Freiman JA, Chalmers TC, Smith H Jr, Kuebler RR. The importance of beta, the type II error and sample size in the design and interpretation of the randomized control trial. Survey of 71 “negative” trials. N. Engl. J. Med. 1978;299:690–694. doi: 10.1056/NEJM197809282991304.

- 51.Ekman, P. & Friesen, W. V. Unmasking the Face: A guide to recognizing emotions from facial clues. (Prentice Hall, New York, 2003).

- 52.Guo K, Meints K, Hall C, Hall S, Mills D. Left gaze bias in humans, rhesus monkeys and domestic dogs. Anim. Cogn. 2009;12:409–418. doi: 10.1007/s10071-008-0199-3.

- 53.Racca A, Guo K, Meints K, Mills DS. Reading faces: differential lateral gaze bias in processing canine and human facial expressions in dogs and 4-year-old children. PLoS One. 2012;7:e36076. doi: 10.1371/journal.pone.0036076.

- 54.Therneau, T. A package for survival analysis in S. R package version 2.37–7. http://CRAN.R-project.org/package=survival (2014).

- 55.Bates, D. & Maechler, M. Linear Mixed Effects Models Using S4 Classes, Ver 0.999375-27. University of Wisconsin, Madison. http://lme4.r-forge.r-project.org (2008).

- 56.R Core Team. R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/ (2016).
