Abstract

Objective

Nonadherence reduces glaucoma treatment efficacy. Motivational interviewing (MI) is a well-studied adherence intervention, but has not been tested in glaucoma. Reminder interventions also may improve adherence.

Design

A total of 201 patients with glaucoma or ocular hypertension were urn-randomized to receive MI delivered by an ophthalmic technician (OT), usual care, or a minimal behavioral intervention (reminder calls).

Main Outcome Measures

Outcomes included electronic monitoring with Medication Event Monitoring System (MEMS) bottles, two self-report adherence measures, patient satisfaction, and clinical outcomes. Multilevel modeling was used to test differences in MEMS results by group over time; ANCOVA was used to compare groups on other measures.

Results

Reminder calls increased adherence compared to usual care based on MEMS, p = .005, and self-report, p = .04. MI had a nonsignificant effect but produced higher satisfaction than reminder calls, p = .007. Treatment fidelity was high on most measures, with observable differences in behavior between groups. All groups had high baseline adherence that limited opportunities for change.

Conclusion

Reminder calls, but not MI, led to better adherence than usual care. Although a large literature supports MI, reminder calls might be a cost-effective intervention for patients with high baseline adherence. Replication is needed with less adherent participants.

Keywords: adherence, glaucoma, motivational interviewing, reminder, treatment satisfaction

Glaucoma is a condition in which treatment is often suboptimal, and nonadherence is a major barrier to treatment. Estimated 12-month adherence is too low for therapeutic effects in 30%–50% of patients based on self-report (Gordon & Kass, 1991), pharmacy fills (Friedman et al., 2007; Schmier, Covert, & Robin, 2009), and electronic monitoring (Cook, Schmiege, McClean, Aagaard, & Kahook, 2011; Okeke et al., 2009a). Nonadherence is common in glaucoma despite serious consequences that include disease progression and vision loss (Rossi, Pasinetti, Scudeller, Radaelli, & Bianchi, 2010).

Motivational Interviewing to Improve Treatment Adherence

Many of the barriers to adherence in glaucoma treatment are linked to motivation (Cook et al., 2014): For instance, initial vision loss is not noticeable to patients and benefits of treatment only accrue long-term, but medication side effects are immediate. Interventions to improve adherence must address these motivational barriers (Cook, 2006). Motivational interviewing (MI) is a patient-centered psychological counseling method with a strong evidence base that is growing in use across diverse health care settings (Cook et al., 2016). Key components of MI include a recognition that all people are ambivalent about change, a guiding and egalitarian style rather than a directive “expert” role, and the use of strategies like reflective listening and open-ended questions to draw out patients’ own statements about their motivators, challenges, and decisions related to health behaviors (Miller & Rollnick, 2013). MI can be successfully delivered by a broad range of professionals and has proven efficacious in promoting many different types of health behavior change (Lundahl, Kunz, Brownell, Tollefson, & Burke, 2010), including adherence (Cook, 2006). MI functions by increasing counselors’ empathic attitudes and listening behaviors, which help to build patients’ motivation for change (Miller & Rose, 2009; Markland, Ryan, Tobin, & Rollnick, 2005). A small-scale pilot study showed that MI delivered by an ophthalmic technician (OT) was feasible and acceptable to patients and providers (Cook, Bremer, Ayala, & Kahook, 2010). Meta-analyses of MI have shown effects superior to treatment as usual or wait list, and similar to those of active interventions like cognitive-behavioral therapy (Burke, Arkowitz, & Menchola, 2003; Lundahl et al., 2010). However, MI has not been tested as a strategy to improve glaucoma adherence in a full-scale randomized controlled trial (RCT).

Reminders as an Alternate Adherence Intervention

Despite the strong evidence base for MI, many practitioners find this patient counseling approach to be quite different from their usual approach to care, and therefore more challenging to implement (Cook et al., 2016). Reminder telephone calls from clinic staff are a much simpler intervention that has been studied as a way to improve glaucoma treatment adherence. Although reminder calls contain none of the methods that MI uses to elicit and strengthen motivation (Miller & Rose, 2009), they nevertheless may include therapeutic elements of hope and caring (Lambert & Ogles, 2004), may increase patients’ accountability through a monitoring effect (Cook, Schmiege, McClean, Aagaard, & Kahook, 2011), or might serve as a prompt that re-engages clients’ own self-regulatory abilities (Sitzmann & Ely, 2010). Reminder calls as implemented in routine clinical practice – a single call before or after each appointment – are too infrequent to serve as prompts in the classical conditioning sense but might work through behavioral mechanisms like social reinforcement (Cook, 2006). There is some experimental evidence that reminders improve glaucoma adherence specifically (Okeke et al., 2009b; Boland, Chang, Frazier, Plyler, & Friedman, 2014), and one study found that reminders had comparable effects to MI for mammography screening (Taplin et al., 2000). Nevertheless, a Cochrane review concluded that reminders generally have the strongest effects when included as one element of a multi-component adherence intervention (Haynes, Ackloo, Sahota, McDonald, & Yao, 2008).

Purpose of the Current Study

Building on previous pilot work, we designed a full-scale RCT to test the hypothesis that MI counseling would result in greater medication adherence than usual glaucoma care. To provide a strong test of this hypothesis, the current study included important methodological features such as multiple sites and interventionists to improve generalizability, a larger sample that was stratified to increase comparability of the experimental and control groups, a well-validated behavioral measure of adherence as the primary dependent variable, and additional secondary measures to confirm the adherence results. In addition to MI and usual care, we included a third group in the study that provided reminder calls. This group was intended as a minimal behavioral intervention that could provide some potential benefit with little time expenditure or staff training required. Based on prior research we did expect some benefit of reminder calls, but based on the meta-analytic findings and Cochrane review cited above, we hypothesized that MI would be superior to reminder calls that did not contain these elements.

Methods

Participants

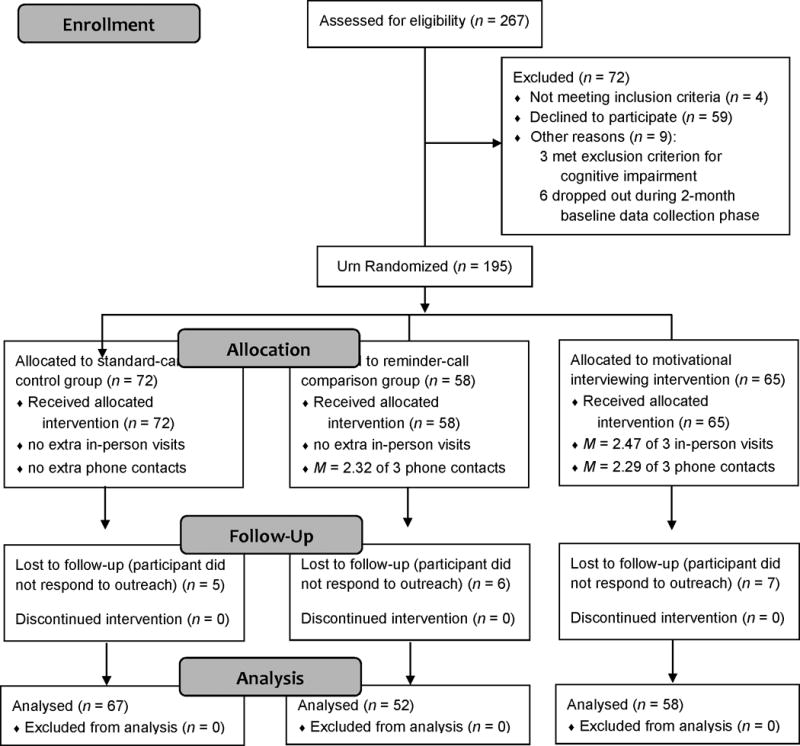

Participants were recruited into this RCT from 3 specialty glaucoma clinics in Denver, Colorado; Portland, Oregon; and Nashville, Tennessee. Two clinics were university-affiliated and the third was independent. Human subjects approval was obtained at each site, confidentiality was protected, and participants gave written informed consent. Based on inclusion criteria, all participants were adults (minimum age of 18, no upper limit) with either open-angle glaucoma or ocular hypertension, were prescribed a single eye drop (monotherapy or combination drop), and had completed a visual field test in the past 9 months. Exclusion criteria were any plan for surgery in the next 6 months or any serious condition that in the judgment of the treating clinician precluded participation. During recruitment, we included patients with either ocular hypertension (n = 36, 18%) or open-angle glaucoma (n = 165, 82%) because both have similar treatment and follow-up. There were no other changes from the original protocol on clinicaltrials.gov (protocol #NCT01409421), and the trial ended as planned. Flow of patients through the study is shown in the CONSORT diagram (Consolidated Standards for Reporting Trials: Figure 1).

Figure 1.

CONSORT diagram showing study enrollment, random assignment to conditions, follow-up, attrition, and analysis sample.

A total of 260 eligible patients were approached for the study and 201 (77.3%) agreed to participate. Participants were more often female (63.7% versus 49.3%), χ2(1) = 4.44, p = .04, ϕ = .13, African American (19.9% versus 6.0%), χ2(1) = 4.71, p = .03, ϕ = .13, and slightly younger (M = 65.0 years versus 69.9), t(251) = 2.69, p = .008, d = 0.43, than those who refused participation. There were no differences (ps > .65) between participants and nonparticipants in the percentage who were Latino/Latina or the percentage with insurance. Participants had an average of 3.5 comorbid conditions, most commonly heart disease (64.2%), chronic pain (37.8%), non-glaucoma eye conditions (29.9%), diabetes (21.9%), respiratory conditions (16.4%), and cancer (13.4%). Similarities between participants and nonparticipants, and participants’ high level of comorbidities, each suggest high external validity in this study.
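The ϕ effect sizes reported above can be checked against the usual conversion for a 2 × 2 table, ϕ = √(χ²/N). The sketch below is only a reader's check, not the authors' analysis code. It assumes N = 260 (all eligible patients approached), which the text does not state explicitly for these tests, and the function name `phi_from_chi2` is hypothetical.

```python
import math


def phi_from_chi2(chi2, n):
    """Phi coefficient for a 2 x 2 table: sqrt(chi-square / N)."""
    return math.sqrt(chi2 / n)


# Chi-square values are taken from the text; N = 260 is an assumption.
phi_gender = phi_from_chi2(4.44, 260)
phi_race = phi_from_chi2(4.71, 260)
print(round(phi_gender, 2), round(phi_race, 2))
```

Under that assumption, both values round to the reported ϕ = .13.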

Procedure

Design

We randomly assigned participants to usual care, an intervention group with usual care plus MI, or a minimal behavioral intervention with usual care plus reminder calls.

Study Visits

Ophthalmologists identified eligible patients from clinic schedules and contacted them in person during a routine appointment. After the patient’s ophthalmologist described the study, a coordinator reviewed procedures and obtained written informed consent. Recruitment occurred from July 2011 to January 2013, with follow-up through June 2013.

After enrollment, all participants received a Medication Event Monitoring System (MEMS) bottle for adherence data collection, as described below. At a first visit, participants completed questionnaires and the study coordinator trained the participant to use the MEMS bottle. MEMS have a reactive measurement effect that at least temporarily increases glaucoma treatment adherence because patients know they are being monitored (Cook et al., 2011; Richardson et al., 2013). To reduce this potential source of bias, all participants completed a 2-month run-in monitoring period with MEMS prior to randomization. This procedure has resulted in a return to baseline adherence in other studies (Cook et al., 2011; Richardson et al., 2013). After 2 months participants returned to download MEMS data, complete questionnaires, and be randomly assigned to one of the study conditions. After a 3-month intervention, participants returned for a third visit to download MEMS data and complete final questionnaires.

Randomization

At the second visit, the study coordinator used an urn randomization program (Matthews, Cook, Terada, & Aloia, 2010) to stratify participants across groups within each recruitment site. Urn randomization is an adaptive allocation method in which the probability of a particular group assignment changes based on the characteristics of the participants already assigned to each group. Stratification criteria were participants’ age (<65 years, 65–80 years, or >80 years), glaucoma severity (mean deviation <−6 or >−6), and baseline MEMS adherence (<80% or >80%). Participants were not excluded based on initial adherence, which we expected to be high due to reactivity (Cook et al., 2011). The urn procedure was expected to equalize groups on the stratification criteria as well as other variables; because it contained a random element, it produced slightly unequal group sizes.
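The adaptive logic of urn randomization can be illustrated with a minimal sketch. This is a simplified, single-urn version of the general technique, not the program cited above: the starting ball count `alpha`, the increment `beta`, the group labels, and all function names are assumptions made for illustration. A stratified version would typically keep a separate urn for each level of each stratification variable.

```python
import random

GROUPS = ("usual_care", "reminder_calls", "mi")


def make_urn(alpha=1):
    """Start with `alpha` balls per group (hypothetical default)."""
    return {group: alpha for group in GROUPS}


def assignment_probabilities(urn):
    """Current probability of each group, proportional to its ball count."""
    total = sum(urn.values())
    return {group: count / total for group, count in urn.items()}


def draw_assignment(urn, rng, beta=1):
    """Draw a group, then add `beta` balls to every OTHER group.

    Adding balls to the groups that were not chosen makes the chosen
    group less likely on the next draw, which pushes group sizes toward
    balance while keeping each assignment random.
    """
    groups = list(urn)
    weights = [urn[group] for group in groups]
    chosen = rng.choices(groups, weights=weights, k=1)[0]
    for group in groups:
        if group != chosen:
            urn[group] += beta
    return chosen


rng = random.Random(0)  # fixed seed so the sketch is reproducible
urn = make_urn()
first = draw_assignment(urn, rng)
probs = assignment_probabilities(urn)
print(first, probs)
```

After the first assignment, the chosen group's probability falls from 1/3 to 1/5 and each other group's rises to 2/5. Because every draw remains random, final group sizes can still differ slightly, as they did in this study.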

Masking

We masked ophthalmologists but not patients, OTs, or study coordinators to participants’ group assignment, resulting in a single-blind study. Participants were given a schedule of follow-up visits by the study coordinator who completed the urn randomization, which did reveal their group assignment based on the frequency of contacts described for each study condition on the consent form. However, participants were not informed of the investigators’ hypotheses about which group was expected to be more effective. Allocation information and study data were stored in a secure database separate from routine clinical records. Separate research team members provided the MI and non-MI intervention calls.

Retention

Participants received an incentive of $25 for completing each study visit ($75 total payment). Participants were not offered incentives for intervention sessions, so all three groups received the same overall compensation. Retention was high: 90% of patients randomized (177/196) completed the intervention phase. Participants who dropped out generally reported reasons related to convenience, such as going on vacation or living far away.

Interventions

Usual Care

All sites provided routine outpatient care by a glaucoma-subspecialty-trained ophthalmologist, plus written education materials approved by the American Glaucoma Society and/or ad hoc education and support. Formal approaches to patient counseling such as MI or routine telephone follow-up (other than scheduling) were not part of usual care at any study site.

Reminder Calls

Participants in the reminder-call comparison group received 3 scripted telephone calls from a clinic staff member with a reminder of their next appointment. They were asked about their level of adherence, the reasons for any missed doses, and their use of the MEMS bottle. Participants were instructed to contact their ophthalmologist if they had any medical questions. This condition provided increased attention and support compared to usual care, and might also have improved adherence via monitoring or operant conditioning effects.

Motivational Interviewing

Participants randomly assigned to the MI intervention received 3 one-to-one in-person meetings with an OT trained in MI (at weeks 1, 4, and 8 after randomization) plus 3 follow-up telephone calls from the same OT (at weeks 2, 6, and 12 after randomization). Each in-person or telephone contact began with a review of the participant’s use of eye drops, barriers to taking medication, any adverse events, questions about treatment, and level of readiness for behavior change. OTs then used MI strategies as described below.

Measures

Treatment Fidelity

We evaluated the fidelity of MI based on published expert consensus criteria (Bellg et al., 2004; Borrelli, 2011). First, to enhance study design fidelity we obtained a pre-study review of all intervention and training materials by 3 independent behavior-change experts, who were asked to consider whether the MI training appropriately emphasized patient-centered counseling techniques and avoided education or persuasion (Miller & Rose, 2009). The short protocol for reminder calls was also reviewed to ensure that it did not contain any MI techniques. We measured training fidelity based on the amount and type of MI training completed by OTs, and with a validated pre/post-training questionnaire on knowledge, abilities, and behaviors related to MI (Cook, Richardson, & Wilson, 2012). We evaluated treatment delivery via an outside observer’s ratings of a 10% random sample of MI and reminder call session recordings using the observational MI skills code (MISC: Moyers, Martin, Catley, Karris, & Ahluwalia, 2003), which counts the frequency of specific counselor and client behaviors and provides global ratings of counselors’ empathy and clients’ engagement on a scale from 1 = low to 7 = high. MISC coding was done by an experienced mental health professional and MI trainer, who was blind to study condition and not involved in intervention delivery, and who attended a coding group to maintain reliability. We evaluated treatment receipt based on enrollment and attrition, and on the number and length of contacts in each condition. Finally, we evaluated treatment enactment with a checklist completed by the OT or study coordinator after each session (Cook et al., 2010) to evaluate participants’ adherence and readiness for change. These treatment fidelity measures mirror those in other studies of MI (Resnick et al., 2005).

Primary Outcome: MEMS

We tested differences in adherence between the intervention and control groups using MEMS, electronic devices that record the date and time a pill bottle is opened. Reliability of MEMS is supported by a low rate of technical errors (Cook et al., 2011) and MEMS data are considered valid based on their ability to predict adherence-linked outcomes (Liu et al., 2001). Bottles with MEMS caps accommodate all currently used glaucoma eye drops and have been used in other glaucoma studies (Cook et al., 2011; Richardson et al., 2013). Participants were considered adherent if they took once-daily medication within 22 to 26 hours after the previous dose, based on a commonly used ±2-hour window for correct timing (Cook et al., 2011; Richardson et al., 2013). Patients taking 2 and 3 times daily medications were considered adherent based on the total number of doses taken, without any timing rules applied.
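The once-daily timing rule can be sketched as follows. This is an illustrative reading of the 22-to-26-hour window, not the study's scoring software. The timestamps are invented, the function name `on_time_flags` is hypothetical, and the text does not describe how doses were aggregated into weekly percentages. The first opening has no previous dose to compare against, so the sketch leaves it unscored.

```python
from datetime import datetime, timedelta


def on_time_flags(openings, low_hours=22, high_hours=26):
    """Flag each opening after the first as on time or not.

    An opening counts as on time when it falls 22 to 26 hours after
    the previous opening (a +/- 2-hour window around 24 hours).
    """
    ordered = sorted(openings)
    flags = []
    for previous, current in zip(ordered, ordered[1:]):
        gap_hours = (current - previous) / timedelta(hours=1)
        flags.append(low_hours <= gap_hours <= high_hours)
    return flags


# Hypothetical bottle-opening timestamps for one participant.
openings = [
    datetime(2012, 1, 1, 21, 0),
    datetime(2012, 1, 2, 21, 30),  # 24.5 hours after previous: on time
    datetime(2012, 1, 3, 8, 0),    # 10.5 hours after previous: too early
    datetime(2012, 1, 4, 7, 0),    # 23 hours after previous: on time
]
flags = on_time_flags(openings)
print(flags)
```

For medications taken 2 or 3 times daily, the study counted total doses without a timing rule, so a window check of this kind would not apply.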

Secondary Outcomes: Self-Reported Adherence and Patient Satisfaction

Although use of MEMS is considered a best practice for research, MEMS measure bottle openings rather than actual use of medication and do not always correlate with other adherence metrics (Cook et al., 2011). The use of multiple adherence measures with different sources of error is therefore recommended (Chesney, 2006). We supplemented MEMS with two self-report scales. The Adherence Attitude Inventory (AAI: Lewis & Abell, 2002) is a 31-item measure of cognitive and behavioral items related to medication adherence in chronic disease states. The last 3 items ask whether the respondent took a targeted medication correctly today, yesterday, and the day before yesterday. Studies in other chronic diseases have found 3-day recall to be valid based on detection of nonadherence and correlations with known adherence predictors, physiological measures, and electronic monitoring (Chesney et al., 2000; Mathews et al., 2002). Additionally, the Morisky scale (Krousel-Wood et al., 2009), an 8-item self-report questionnaire, is the most widely used self-report measure of adherence across many chronic disease states. Items ask about respondents’ attitudes toward medication, barriers such as forgetting, and adherence behavior. Internal consistency was α = .79, so items were averaged into a single score.

At the end of the intervention phase, participants also completed a satisfaction survey (Battaglia, Benson, Cook, & Prochazka, 2013) on the level of support they received, including support from the OT or study coordinator in the two intervention conditions. Questions addressed satisfaction with relationship factors that are emphasized in MI, such as “the support person took time to listen to me,” “I felt comfortable talking with the support person,” and “my freedom to choose was respected whether I used my medication or decided not to.” The survey’s 15 items, rated on a 1–5 Likert-type scale from Never to Always, evaluate patients’ perceptions that they are receiving individualized, caring, and patient-centered treatment. All items loaded on a single factor with internal consistency reliability of α = .73, and were averaged together.
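The internal consistency values reported above for the self-report scales are Cronbach's α coefficients. The sketch below shows the standard formula, α = k/(k − 1) × (1 − Σ item variances / variance of total scores). The data are toy values chosen so that the result is easy to verify; they are not study data, and the function name is hypothetical.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents' item scores.

    `rows` holds one list per respondent, with one score per item.
    """
    k = len(rows[0])
    item_variances = [variance([row[i] for row in rows]) for i in range(k)]
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Toy data: three items that agree perfectly, so alpha should equal 1.
toy_scores = [[1, 1, 1], [2, 2, 2], [4, 4, 4], [5, 5, 5]]
alpha_identical = cronbach_alpha(toy_scores)
print(round(alpha_identical, 2))
```

Real questionnaire items agree only partly, which is why observed values such as .73 and .79 fall below 1.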

Exploratory Measures

Participants also completed surveys on satisfaction with medication and the doctor-patient relationship (Cook et al., 2014), motivation and barriers to adherence (Cook, McElwain, & Bradley-Springer, 2010), and other measures. Participants used the VFQ-25 (Visual Functioning Questionnaire: Mangione et al., 2001) to rate their perceived health, perceived eyesight, worry about eyesight, eye symptoms, and overall quality of life. Finally, we extracted medical chart data on two key clinical outcomes: intra-ocular pressure and visual field. We tested each variable for between-group differences in exploratory analyses.

Data Analysis

General Approach

Participants’ demographic and clinical characteristics and treatment fidelity data were examined descriptively. For the primary outcome, participants’ MEMS-based adherence was compared across groups over time using multilevel modeling. Week since the start of the intervention was entered as a within-person factor in random-effects regression models, with the percent of doses taken each week as the dependent variable. In this analysis, a significant group-by-time interaction effect would reflect differences between groups in the trajectory of adherence, while main effects would indicate overall differences by group or an overall trend of change over time. Baseline demographic and clinical characteristics were screened to identify any pretreatment differences between groups. Any variables that did significantly differ, as well as those used for urn randomization, were entered as between-person covariates. Study site was included as a between-person covariate to account for any differences based on patients’ geographic location, and site-by-treatment-group interactions were also considered. The secondary outcomes and exploratory variables were tested using analysis of covariance to examine differences in post-intervention scores. All analyses controlled for pre-intervention scores on the relevant outcome, plus the other demographic and clinical covariates.

Missing Data

Data were analyzed using an intent-to-treat method, based on participants’ group assignment as randomized regardless of whether they actually completed any intervention components. No interim analyses were conducted. Because the rate of missing data was low (4%–11% per variable) and values appeared to be missing at random, missing data points were handled using multiple imputation. The only consistent pattern of missingness involved MEMS data not being recorded for 21 out of 201 participants during the baseline phase (10%) due to equipment failure (e.g., damaged or inactivated MEMS devices). This is a level of missing observations that can be imputed without bias (Collins, Schafer, & Kam, 2001).

Power Analysis

This study was powered based on the number of participants needed to test treatment efficacy on the primary outcome measure within each study site. Projected effect sizes were based on prior adherence studies using MI (Cook, 2006; Cook et al., 2010), with an expected effect size of d = .65 for the difference between MI and usual care. Although pilot study effects may be either over- or under-estimates (Leon, Davis, & Kraemer, 2011), they are widely recommended as a basis for power analysis (Lenth, 2001) and were used to set a lower limit for power under the most restrictive assumptions about the data. The significance level for all tests was set at α = .05; we did not apply an alpha correction across measures because scores on each outcome variable were relatively independent. Power in multilevel models was based on the number of data points (60 participants per group times 12 weeks) after correction for the intra-class correlation (ICC) of data from the same participant (Hox, 2002), estimated at ICC = .70 based on prior research (Cook et al., 2010). This analysis revealed that 20 participants were required in each of 3 groups to achieve 80% power within each study site at the initially expected effect size. However, because there were few between-site differences we were able to calculate final results using a pooled sample, yielding power = .80 for effects as small as d = 0.15.
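The ICC correction described above can be illustrated with the common design-effect formula, in which the effective number of independent observations is n × m / (1 + (m − 1) × ICC) for n participants with m repeated measures each. This is a sketch under that assumption: the text cites Hox (2002) for the correction but does not give the exact formula used, and the function names are hypothetical.

```python
def design_effect(measures_per_person, icc):
    """Variance inflation from clustering of repeated measures."""
    return 1 + (measures_per_person - 1) * icc


def effective_sample_size(participants, measures_per_person, icc):
    """Number of independent observations the clustered data are worth."""
    total_points = participants * measures_per_person
    return total_points / design_effect(measures_per_person, icc)


# Values from the text: 60 participants per group, 12 weeks, ICC = .70.
deff = design_effect(12, 0.70)
n_eff = effective_sample_size(60, 12, 0.70)
print(round(deff, 1), round(n_eff, 1))
```

With these inputs, the 720 weekly data points per group are worth roughly 83 independent observations, because weekly scores from the same participant are highly correlated.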

Results

Demographic Differences by Experimental Group

Demographics by group are given in Table 1; there were no between-group differences other than marital status, which we controlled for in subsequent analyses. As shown in Figure 1, groups were approximately equal in size and had similar attrition. Patients who left the study were no different (ps > .05) from those who remained based on age, gender, race/ethnicity, education, time since diagnosis, number of medications, comorbidities, doses per day, intra-ocular pressure, visual field, or treatment motivation. They were also no different at baseline on either recall-based adherence, t(25) = 1.22, p = .24, d = 0.23, or Morisky scale score, t(29) = 1.45, p = .16, d = 0.27. However, MEMS-based adherence was different at baseline, with those who dropped out having significantly higher adherence, M = 78.8%, than those who remained in the study, M = 66.3%, t(27) = 2.49, p = .02, d = 0.48. This suggests that the intervention reached those who most needed it, which we regard as a non-harmful selection bias. However, we still controlled for baseline MEMS based on its use as a stratification variable.

Table 1.

Participant Demographics (N = 195 Patients Randomized)

Values are Mean (SD) or Frequency (%).

| Characteristic | Usual Care | Reminder Calls | MI | Difference |
|---|---|---|---|---|
| N | 72 | 58 | 65 | |
| Age (years) | M = 64.4 (11.3) | M = 66.1 (11.8) | M = 65.4 (10.5) | ns |
| Gender – women | 46 (63.8%) | 39 (67.2%) | 41 (63.1%) | ns |
| men | 26 (36.2%) | 19 (32.8%) | 24 (36.9%) | |
| Race/Ethnicity – White | 46 (63.9%) | 43 (74.1%) | 47 (72.3%) | ns |
| African-American | 16 (22.2%)† | 11 (19.0%) | 9 (13.8%) | |
| Latino/Latina | 3 (4.1%)† | 1 (1.7%) | 3 (4.6%) | |
| Asian | 4 (5.6%) | 2 (3.4%) | 0 (0%) | |
| Native American | 2 (2.7%) | 0 (0%) | 0 (0%) | |
| unknown | 2 (2.8%) | 1 (1.7%) | 6 (9.2%) | |
| Education – no diploma | 3 (4.1%) | 3 (5.2%) | 6 (9.2%) | ns |
| high school degree | 6 (8.3%) | 14 (24.1%) | 10 (15.4%) | |
| college degree | 47 (65.3%) | 24 (41.4%) | 33 (50.8%) | |
| master’s degree | 11 (15.3%) | 11 (19.0%) | 13 (20.0%) | |
| doctorate | 2 (2.8%) | 2 (3.4%) | 3 (4.6%) | |
| unknown | 3 (4.1%) | 4 (6.9%) | 0 (0%) | |
| Employment – retired | 28 (41.8%) | 27 (50.9%) | 35 (58.3%) | ns |
| employed | 28 (41.8%) | 20 (37.7%) | 17 (28.3%) | |
| unemployed | 8 (11.9%) | 5 (9.4%) | 7 (11.7%) | |
| disabled | 3 (4.5%) | 1 (1.9%) | 1 (1.7%) | |
| unknown | 5 | 5 | 5 | |
| Marital Status – married | 42 (58.3%) | 29 (51.8%) | 45 (71.4%) | χ2 = 16.4, p = .04, ϕ = .29 |
| single | 9 (12.5%) | 15 (26.8%) | 8 (12.7%) | |
| widowed | 8 (11.1%) | 8 (14.3%) | 8 (12.7%) | |
| divorced | 12 (16.7%) | 4 (7.1%) | 2 (3.2%) | |
| unknown | 0 | 2 | 2 | |
| Number of Comorbidities | 3.5 (2.4) | 3.4 (2.2) | 3.4 (2.3) | ns |
| Number of Other Medications | 6.4 (5.1) | 6.7 (4.8) | 6.4 (4.7) | ns |
| Visual Field (log units) | −2.9 (3.8) | −3.5 (3.4) | −3.4 (3.9) | ns |
| Intra-Ocular Pressure (mm Hg) | 15.0 (4.2) | 15.3 (4.5) | 14.9 (3.5) | ns |
| Number of Doses per Day | 1.2 (0.5) | 1.1 (0.3) | 1.2 (0.5) | ns |
| Treatment Duration (months) | 35.1 (53.2) | 38.5 (54.0) | 54.3 (61.3) | ns |
| Baseline Motivation (1–5) | 4.37 (0.29) | 4.27 (0.51) | 4.40 (0.35) | ns |

† One participant was both African-American and Latina, and is counted twice.

Note. MI = motivational interviewing; ns = not statistically significant

Demographic Differences by Recruitment Site

Participants at all three recruitment sites were similar in terms of age, F(2, 190) = 0.89, p = .41, η2 = .009, gender, χ2(2) = 3.52, p = .17, Cramer’s V = .09, and marital status, χ2(8) = 9.30, p = .32, Cramer’s V = .08. Compared to the other two sites, the Oregon site had participants who were significantly more likely to be White, χ2(2) = 22.1, p < .001, Cramer’s V = .23, and who were more highly educated, F(2, 189) = 5.22, p = .006, η2 = .052. To account for these differences, we controlled for recruitment site as a covariate in all subsequent analyses.

Treatment Fidelity

For the MI condition, 5 OTs (1 in Colorado, 2 in Oregon, and 2 in Tennessee) received an average of 16 hours of training (range: 14 – 23), including in-person instruction, one-to-one coaching, group audio-conference calls, review of session recordings, and discussion of best practices and challenges in using MI. This combination of strategies has demonstrated efficacy for MI training (Miller, Yahne, Moyers, Martinez, & Pirritano, 2004) and was supplemented by a written manual and decision support tools. Training included role-played cases, but OTs were not required to reach a particular standard before beginning work with patients. Pre/post self-report data showed greater knowledge after training, t(5) = 4.41, p = .007, d = 1.47, and a nonsignificant change in willingness to use MI techniques, t(5) = 2.08, p = .09, d = 0.69. OTs also reported high perceived value of training, M = 4.50 (SD = 0.55) on a 5-point scale. Training for the reminder call condition was minimal: 6 study coordinators (2 in Colorado, 3 in Oregon, and 1 in Tennessee) received 30 minutes of training as part of a half-day orientation to study design and procedures, with a simple 2-page call script and no role-play exercises.

Observational coding data on treatment delivery for both conditions are shown in Table 2. The overall percentage of MI-consistent counselor statements was 70% in the MI condition, but only 26% in the reminder call condition where staff were not expected to use MI. This greater-than-zero result for the reminder call condition was due to some use of open-ended questions and did not represent treatment contamination; other MI techniques like reflection and elicitation were not used at all. Data from behavior checklists completed by OTs showed a similar pattern, with a low but non-zero level of MI behaviors in the reminder calls. Counts of individual MI-consistent behaviors such as reflection (expected to be higher in MI) and direct instruction (expected to be lower in MI), as well as the observer’s overall rating of MI skills, all showed expected differences between groups. Finally, OTs’ behavior checklists showed more MI-consistent techniques in the MI group, M = 93%, than in the reminder call group, M = 38%, t(48) = 7.07, p < .001, d = 1.03, based on their own self-assessment. All treatment delivery variables improved over time in the MI group (ps < .01 in ANOVAs of MISC scores with time as a random factor), indicating that counselors’ MI skills gradually increased.

Table 2.

MISC Observational Coding Data – Treatment Delivery and Enactment

| MISC Variable | Reminder Calls | Motivational Interviewing | Benchmark Level for MI |
|---|---|---|---|
| Treatment Delivery: Counselor Behaviors | | | |
| Total % MI-consistent statements | 26% | 70% | 50%†–95%*,** |
| % reflection | 0% | 23% | 40%†–56%* |
| % open-ended questions | 14% | 49% | 18%†–23%* |
| % direct instruction | 13% | 3% | 4%* |
| Rating of counselor’s MI skills | M = 2.56 (SD = 0.86) | M = 5.77 (SD = 0.47) | 5.07* |
| Treatment Enactment: Participant Behaviors | | | |
| % of time patient spoke | 25% | 59% | 62%†,** |
| # of change statements/minute | M = 0.21 (SD = 0.19) | M = 0.29 (SD = 0.26) | — |
| Rating of patient engagement | M = 2.60 (SD = 0.35) | M = 4.60 (SD = 0.92) | 5.78* |

Note. Benchmark levels for the MI condition are drawn from:

Baer, et al. (2004) study of MI training results for mental health professionals, using post-training means for the counselors who were considered to be ‘MI-proficient’;

Moyers, et al. (2005) study showing linkages between counselors’ level of proficiency on the global rating scales and clients’ outcomes in MI;

Miller, et al. (2004) randomized trial of methods for learning MI, using average post-training means across the 4 training methods.

For the number of client change statements, we averaged by minutes of conversation to account for the fact that MI calls were longer and therefore clients had more opportunity to make change statements. The average number of total change statements in 15-minute MI calls was M = 4.55, which is similar to the benchmark level of 5 per session reported post-training in another MI training study by Schoener, Madeja, Henderson, Ondersma, and Janisse (2006).

Treatment receipt is supported by the finding of <10% attrition after randomization, and by the analyses above showing few differences between participants and nonparticipants. This pattern of results suggests that MI was acceptable to a broad range of patients with glaucoma. The number of contacts in each condition is shown in Figure 1 above. As expected, reminder calls were shorter, M = 3.0 minutes (SD = 1.99), than MI calls, M = 7.2 minutes (SD = 6.67), t(10) = 6.68, p < .001, d = 3.52. In-person MI sessions lasted M = 15.7 minutes (SD = 9.16). OTs anecdotally reported that they found telephone sessions just as effective as in-person sessions and easier to schedule; however, they preferred to have at least one in-person meeting.

Finally, in the analysis of treatment enactment, there was no significant group-by-time interaction in checklist-based adherence ratings, F(2, 67) = 1.98, p = .14, η² = .06. Patients’ rated readiness for change was also high in both groups and remained that way over time. However, as shown in Table 2, patient engagement was rated substantially higher in the MI group, M = 4.60 (SD = 0.92), than in the reminder call group, M = 2.60 (SD = 0.35), t(9) = 5.65, p < .001, d = 2.98, based on MISC ratings by an outside observer. Patients in the MI group also spoke more and made more statements indicating their potential readiness for change. All of these patterns are in line with what has been reported in the MI literature (Moyers, Miller, & Hendrickson, 2005; Amrhein, Miller, Yahne, Palmer, & Fulcher, 2003), suggesting that there were clear differences between the MI and reminder call conditions, and that the MI condition included a substantial dose of the ingredients that are thought to be therapeutically relevant in MI.

Adverse Events

No adverse events related to either MI or reminder calls were reported over the 2-year study duration. A few participant complaints related to timely payment of incentives were reported and resolved, and one complaint related to the MI counselor’s attitude or mannerisms was investigated, found to be non-serious, and addressed as part of training.

Intervention Effects on Adherence

Baseline adherence was 78.2% based on MEMS, 91.3% on 3-day recall, and 94.8% on the Morisky scale. These three scales were only moderately inter-correlated (bivariate rs = .38 – .53), supporting the decision to analyze them separately. The groups were similar at baseline on MEMS, F(2, 192) = 1.14, p = .32, η² = .01, 3-day recall, F(2, 192) = 0.52, p = .59, η² = .01, and the Morisky scale, F(2, 192) = 0.52, p = .60, η² = .01.
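The effect sizes that accompany these F tests can be related to the test statistics through a standard identity for partial eta-squared, F × df1 / (F × df1 + df2). The sketch below assumes the reported values are partial eta-squared, which the text does not state explicitly; the function name is hypothetical.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta-squared recovered from an F statistic and its degrees of freedom."""
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# Baseline comparisons reported in the text: (label, F, df_effect, df_error)
baseline_tests = [
    ("MEMS", 1.14, 2, 192),
    ("3-day recall", 0.52, 2, 192),
    ("Morisky scale", 0.52, 2, 192),
]

for label, f_value, df1, df2 in baseline_tests:
    print(f"{label}: {partial_eta_squared(f_value, df1, df2):.2f}")
```

Each of the three baseline tests rounds to .01 under this identity, consistent with the values reported above.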

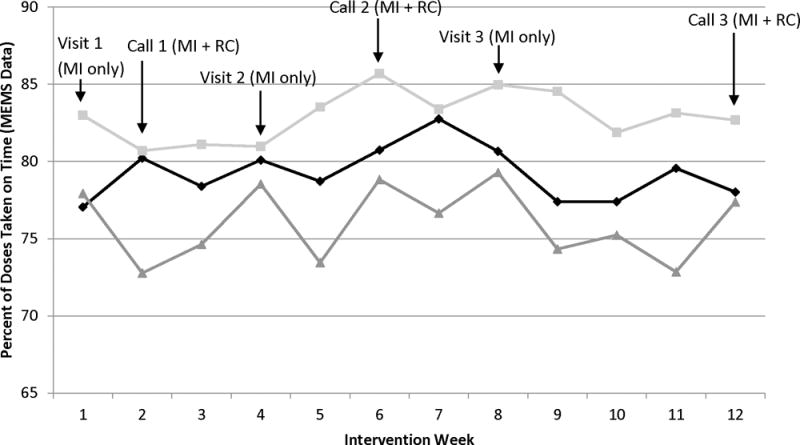

Figure 2 shows a pattern of higher MEMS-based adherence in the MI and reminder call groups relative to usual care, but the group-by-time interaction was non-significant in the multilevel modeling framework, F(22, 1911) = 0.68, p = .68, η² = .01. However, there was a significant main effect of group as shown in Table 3, F(2,162) = 4.14, p = .02, η² = .05, indicating differences between groups independent of study week. Post hoc comparisons among individual groups showed that reminder calls were superior to usual care based on the MEMS measure, t(162) = 2.88, p = .005, d = 0.45, but that MI was not, t(162) = 1.37, p = .17, d = 0.22. Contrary to prediction, MI and reminder calls did not differ, t(162) = 1.46, p = .15, d = 0.23.
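The d values for the MEMS post hoc comparisons are consistent with a common approximation that converts a t statistic into Cohen's d, d ≈ 2t / √df. This is offered only as a sketch: the approximation assumes two independent groups of roughly equal size, the text does not say how d was actually computed, and the function name is hypothetical.

```python
import math


def cohens_d_from_t(t_value: float, df: int) -> float:
    """Approximate Cohen's d from a t statistic (assumes two roughly equal groups)."""
    return 2.0 * t_value / math.sqrt(df)


# MEMS post hoc comparisons reported in the text: (label, t, df)
comparisons = [
    ("Reminder calls vs. usual care", 2.88, 162),
    ("MI vs. usual care", 1.37, 162),
    ("MI vs. reminder calls", 1.46, 162),
]

for label, t_value, df in comparisons:
    print(f"{label}: d = {cohens_d_from_t(t_value, df):.2f}")
```

The three results round to 0.45, 0.22, and 0.23, matching the reported effect sizes.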

Figure 2.

MEMS-based adherence by experimental group, showing weekly means after adjustment for all relevant covariates. MI = Motivational Interviewing, RC = Reminder Call intervention, MEMS = Medication Event Monitoring System (electronic pill bottle monitoring devices).

Table 3.

Mean and Standard Deviation on Adherence Measures by Group

| Adherence Metric | Time | Usual Care | Reminder Calls | Motivational Interviewing | Omnibus Test Between Groups |

|---|---|---|---|---|---|

| MEMS | Pre | 78.7% (19.5%) | 75.7% (21.4%) | 79.7% (18.2%) | F(2, 162) = 4.14, |

| (% of days) | Post | 76.0% (20.4%) | 83.0% (23.5%) | 79.3% (22.1%) | p = .02, η² = .05 |

| 3-Day Recall | Pre | 92.4% (19.1%) | 91.4% (21.9%) | 92.0% (19.3%) | F(2, 176) = 3.66, |

| (% of days) | Post | 94.2% (10.4%) | 98.2% (8.2%) | 97.3% (7.7%) | p = .03, η² = .04 |

| Morisky Scale | Pre | 93.0% (9.4%) | 93.0% (10.7%) | 91.0% (13.5%) | F(2, 176) = 4.89, |

| (% calculated from 1–7 scale) | Post | 94.9% (13.7%) | 97.4% (9.9%) | 96.9% (12.4%) | p = .009, η² = .05 |

Note. Average adherence was calculated for MEMS as the overall percentage of doses taken as prescribed during the baseline phase (2 months) and during the intervention phase (3 months); for recall as the percentage out of 3 days that the participant reported taking their medication correctly; and for the Morisky scale as a transformation of the raw average scale score (on a 1–7 Likert-type scale) into a percentage by this formula: (average - 1)/6. The transformed Morisky scale score was computed to aid interpretation in descriptive analyses by converting all variables to the same 0% – 100% range, with no effect on the variable’s underlying distribution. Adjusted post-intervention means are presented. Each analysis (multilevel modeling for MEMS or analysis of covariance for the other outcomes) controlled for the following covariates: pretest scores on the same measure; recruitment site; the urn randomization stratification variables of age, visual field, and MEMS-based baseline adherence; plus marital status which differed by experimental group at baseline, and number of doses per day, number of comorbidities, number of other medications, treatment duration, and intra-ocular pressure, all of which differed by site at baseline.
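The Morisky transformation described in the note can be written out directly. The sketch below applies the stated formula, (average − 1)/6, and expresses the result as a percentage; the function name and the example raw score are hypothetical and are not study data.

```python
def morisky_to_percent(mean_item_score: float) -> float:
    """Rescale a mean Morisky score on the 1-7 scale to the 0-100% range."""
    if not 1.0 <= mean_item_score <= 7.0:
        raise ValueError("mean item score must lie between 1 and 7")
    return (mean_item_score - 1.0) / 6.0 * 100.0


# Hypothetical raw scale means, chosen only to illustrate the rescaling.
for raw in (1.0, 4.0, 6.4, 7.0):
    print(f"raw {raw:.1f} -> {morisky_to_percent(raw):.1f}%")
```

Because the rescaling is linear, it changes only the units of the score and leaves the shape of its distribution intact, which is the property the note relies on.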

Table 3 also shows pre- and post-intervention means for the two self-report adherence measures, with a significant main effect of group on each self-report measure. Post hoc tests revealed that the post-treatment difference between reminder calls and usual care was also significant for both recall, t(113) = 2.10, p = .04, d = 0.40, and the Morisky scale, t(113) = 4.54, p = .006, d = 0.85. MI and usual care were not significantly different on recall, t(121) = 1.83, p = .07, d = 0.33, although there was a significant difference on the Morisky scale, t(121) = 2.11, p = .04, d = 0.38. There was no significant difference between MI and reminder calls on either recall, t(108) = 0.64, p = .52, d = 0.12, or the Morisky scale, t(108) = 0.69, p = .49, d = 0.13.

Patient Satisfaction

Post-intervention satisfaction was higher in MI (M = 4.01, SD = 0.53) than reminder calls (M = 3.66, SD = 0.83), t(116) = 2.73, p = .007, d = 0.49, or usual care (M = 3.40, SD = 0.94), t(99) = 4.53, p < .001, d = 0.92. Reminder calls and usual care had similar satisfaction, p = .10.

Exploratory Analyses

We tested other between-group differences in exploratory analyses (Appendix). There were no differences in satisfaction with medication or with the doctor-patient relationship. Both MI and reminder calls were associated with better self-reported vision than usual care, but two objective measures of vision (visual field and intra-ocular pressure) showed no differences. Because many tests were run, the one apparent difference is likely a Type I error.
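The concern about Type I error can be made concrete with the familywise error rate, the probability of at least one false positive across a set of tests. The sketch below assumes independent tests at α = .05. The number of exploratory tests is not stated in this section, so the counts in the loop are hypothetical, as are the function names.

```python
def familywise_error_rate(n_tests: int, alpha: float = 0.05) -> float:
    """Probability of at least one false positive across independent tests."""
    return 1.0 - (1.0 - alpha) ** n_tests


def bonferroni_alpha(n_tests: int, alpha: float = 0.05) -> float:
    """Per-test threshold that holds the familywise rate at or below alpha."""
    return alpha / n_tests


# Hypothetical numbers of exploratory tests; the actual count is not reported here.
for k in (1, 5, 10, 20):
    print(f"{k:>2} tests: familywise rate = {familywise_error_rate(k):.2f}, "
          f"Bonferroni threshold = {bonferroni_alpha(k):.4f}")
```

With even a modest number of uncorrected tests, the chance of at least one spurious finding rises well above the nominal 5%, which is why a single isolated difference deserves caution.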

Discussion

In this multi-site RCT, reminder calls led to slightly but significantly better MEMS-based glaucoma medication adherence than usual care. Despite strong research support for MI in other studies of health behavior change, including studies of adherence, in this study MI had a smaller effect size than reminder calls and was not significantly different from usual care. A self-report recall measure of adherence showed a similar pattern of results, with participants having higher adherence in the reminder call group than in usual care, but no significant difference between MI and usual care. However, patients were significantly more satisfied with the interpersonal support they received in the MI group than in either the reminder call group or usual care, suggesting that MI did enhance the level of patient-centered care.

MI’s lack of efficacy in this study is surprising in light of extensive literature supporting MI as a method for improving health behaviors (Lundahl et al., 2010), including medication adherence (Cook, 2006), as well as positive pilot study results in glaucoma (Cook et al., 2010). MI’s smaller effect size in this study suggests that it was simply less efficacious than reminder calls in this specific setting and patient population. This is especially surprising given that MI interactions were longer in duration and included 3 additional in-person visits. Furthermore, although OTs had no prior MI training they did have patient care experience while the staff making reminder calls generally did not, and treatment fidelity measures showed clear differences between conditions that were all in the expected direction. All of these differences would have been expected to favor MI. The only area where MI did show an advantage was patients’ satisfaction with relational aspects of care, which fits with MI’s patient-centered focus. However, this advantage may not be enough to justify the additional training and time required for OTs to learn MI, especially given that reminder calls produced greater adherence benefits.

The most surprising finding of the current study is that a relatively simple reminder call protocol, originally designed as a minimal behavioral intervention condition and with no claim to investigator allegiance, produced significant improvement in adherence based on three separate measures. Brief reminders have been studied in the adherence literature and are generally most effective when delivered as part of a multi-component intervention strategy (Haynes et al., 2008). However, two other recent studies have shown reminders to be effective in improving glaucoma medication adherence (Okeke et al., 2009b; Boland et al., 2014). Reactive measurement effects of adherence monitoring in prior glaucoma studies (Cook et al., 2011; Richardson et al., 2013) also suggest that reminders can be efficacious for this population. Although changes in adherence were small, they were potentially clinically meaningful. For instance, Rossi and colleagues (2010) found that each percentage point improvement in adherence (up to 90%) translates to about a 5% increase in the odds of preserving existing vision.

The small effect sizes for both MI and reminder calls in this study can be explained in part by a high overall level of adherence across all groups, a ceiling effect that resulted in limited variability for most statistical tests. All participants had much higher baseline adherence than has been previously documented in the glaucoma literature, which suggests that their motivation for treatment was already relatively high. Prior studies have shown adherence rates of 71% after just 2 months of treatment based on electronic eye dropper monitoring (Okeke et al., 2009a) and 59% after 12 months based on pharmacy data (Friedman et al., 2007). Prior studies using MEMS have shown slightly higher adherence rates around 80% after 2–3 months (Robin et al., 2007; Cook et al., 2011). Data shown above in Table 1 confirm that baseline motivation was high for all 3 study groups. The fact that baseline adherence in this study was high compared to those prior findings means that patients might have been more receptive to a minimal behavioral intervention that helped them to maintain their existing high adherence levels. By contrast, MI may be more effective in patients with lower current levels of motivation for adherence. In that context, reminder calls might be viewed within a self-regulation model (Carver & Scheier, 2001) as a prompt to re-engage effective self-regulatory processes (Sitzmann & Ely, 2010) by patients who already have those skills and want to apply them in maintaining eye drop use.

Lack of treatment fidelity is not likely to explain MI’s lack of efficacy. Data from multiple sources suggest that MI was delivered with appropriate fidelity by staff at three different sites, was well received by patients, differed from a comparison condition based on observational coding, and provided the active ingredients of empathy and client engagement at levels likely to be therapeutic (Moyers et al., 2005). Delivery of MI was not perfect – as seen, for example, in only 23% use of reflections – but improved over time as OTs gained experience. In addition, rated levels of empathy were high throughout, which has been shown to be more important than behavior counts in MI (Lundahl et al., 2010; Moyers et al., 2005). Furthermore, the conditions were clearly distinct in their use of MI techniques. It is possible that if OTs had more practice or had been required to reach a particular fidelity standard before the start of data collection, these results would have been stronger. Variability in the OTs’ delivery of MI might in turn have obscured potential intervention effects. However, it seems unlikely that even a higher-fidelity MI intervention would have produced better results in the context of the ceiling effect noted above and the prior meta-analytic finding that fidelity does not predict outcomes (Lundahl et al., 2010).

Limitations and Directions for Future Research

Despite a number of methodological strengths, this multi-site RCT had some important limitations. As noted above, our ability to detect group differences was hindered by a sample of patients with high baseline levels of adherence. There are at least two plausible causes of this unexpected finding: One is reactivity of the MEMS measure. Although MEMS are widely used to measure adherence, they may have a greater impact on adherence in glaucoma because patients are not used to storing their eye dropper in a large pill bottle. In prior research we found a return to baseline adherence 6–8 weeks after glaucoma patients started using MEMS (Cook et al., 2011), and in this study we specifically included a run-in period to reduce reactivity, in which patients used the MEMS device to monitor their adherence for 2 months before being randomized to one of the experimental groups. If reactive measurement is the explanation, this effect was stronger and longer-lasting in the current study than in past glaucoma research using MEMS (Cook et al., 2011; Richardson et al., 2013).

Another working hypothesis for the high baseline adherence level, which we did not initially consider, is that patients seen in specialty glaucoma clinics or taking monotherapy medications may differ from typical patients with glaucoma in general ophthalmology practice. These differences might favor higher adherence among specialty-care patients (Quigley, 2014). For instance, patients who see a glaucoma specialist may have been identified as having particularly severe glaucoma, may have experienced failure of other treatment options, may work more closely with their ophthalmologist, may have especially complex care needs, or may be particularly motivated to seek out the best available care. Our participants did have a higher than expected educational level, consistent with this self-selection hypothesis. Alternately, patients taking monotherapy glaucoma medication may have less severe disease or less difficulty due to less demanding medication regimens, so our selection criteria might have produced a relatively adherent sample. Future studies could address these limitations by pre-screening patients for nonadherence, or by recruiting participants in settings that have poorer baseline adherence.

Consistent with pilot research (Cook et al., 2010), this study showed high patient acceptability based on enrollment results and limited attrition over time. This was true even among African-American and Latino/Latina patients, two groups at higher risk for glaucoma, suggesting that the interventions were acceptable to diverse groups. Participants were older and had multiple comorbidities, reflective of real-world practice. Participants also had diverse medication regimens, with 14% taking medication more than once per day. These participants might face additional barriers to adherence. Our analyses controlled for the number of doses per day and comorbidities to reduce heterogeneity in the data.

Additional limitations were identified by OTs, who reported barriers to implementing MI because the method differed so much from their usual approach to care. It took time and practice before trainees were able to routinely (a) ask open-ended questions rather than closed questions, (b) reflect back what the participant said about barriers rather than providing new factual information in response, and (c) use their already-strong people skills while providing the MI intervention. It is important to note that neither of the interventions in this study involved patient education, which is more typical of OTs’ usual work. Many of our trainees had strong interpersonal abilities, but initially seemed less relaxed with patients when using MI; they tended to read MI scripts as though the scripts were a research protocol instead of applying MI strategies flexibly in the context of a friendly conversation. Although there were too few OTs to test person-level differences in MI implementation, our impression is that some OTs felt comfortable using MI sooner than others. Over time, these trends diminished and all OTs appeared more fluent in their use of MI. We also could have potentially enhanced the efficacy of MI in other ways, such as offering a greater number of patient contacts or following patients for a longer period of time. It is possible that augmenting MI with these features would lead to larger effects.

Finally, the three adherence metrics in this study were only weakly correlated, consistent with other studies (Cook et al., 2011; Richardson et al., 2013). Although MEMS were the study’s primary outcome, no measure of adherence is error-free (Chesney, 2006). Other measures such as pharmacy fill data or electronic eye dropper monitoring might have shown different results.

Conclusions

Treatment fidelity results in this study expand on previous pilot data (Cook et al., 2010) to demonstrate that MI can be delivered by OTs. However, MI in this study did not improve adherence significantly and had a smaller effect size than a minimal behavioral reminder-call intervention. MI training required time and practice, and staff delivering MI outside a research study would need to be redirected from other responsibilities. Furthermore, not all ophthalmology practices have OTs in place to deliver this type of intervention, while most do have office staff who could deliver scripted reminder calls. Reminder calls are therefore recommended as a simple and potentially cost-effective intervention to improve glaucoma medication adherence among ophthalmology specialty clinic patients who already have high adherence. Despite MI’s efficacy in other settings, specialty clinics with high adherence may represent a boundary condition in which MI adds complexity without offering a significant clinical advantage. This might be due to differential effects for the two interventions, with MI being more useful only when patients are less motivated, and behavioral strategies such as reminder calls being more useful for highly motivated patients. Even though MI is widely recommended and implemented as a patient-centered communication technique, additional research is needed to determine when it should or should not be used.

Acknowledgments

This study was funded by a grant from Merck & Co., Inc., with additional support from the Colorado Clinical and Translational Science Institute, NIH grant #1UL1RR025780-01. The authors wish to acknowledge Laurra Aagaard, MS, MA, for MISC coding and MI training, and Jason Weiss for editorial assistance.

Footnotes

Disclosure Statement

Dr. Fitzgerald is an employee of Merck & Co., the study sponsor. In the past 12 months Dr. Kahook has also served as a speaker for Merck & Co; Dr. Cook has served as a consultant for Takeda Inc. and Academic Impressions Inc., and has received other grant support from several U.S. Federal government agencies, University of Colorado Hospital, Children’s Hospital of Colorado, the Mayo Clinic, the University of Denver, and Academic Impressions Inc. No other conflicting relationship exists for any author.

Contributor Information

Paul F. Cook, University of Colorado College of Nursing, Campus Box C288-04, Aurora CO 80045, USA. Phone: 303-724-8537.

Sarah J. Schmiege, University of Colorado College of Nursing, Campus Box C288-04, Aurora CO 80045, USA. Phone: 303-724-8080.

Steven L. Mansberger, Devers Eye Institute, 1040 NW 22nd Ave., Suite 200, Portland OR 97210, USA. Phone: 503-413-6492.

Christina Sheppler, Devers Eye Institute, 1040 NW 22nd Ave., Suite 200, Portland OR 97210, USA. Phone: 503-413-6492.

Jeffrey Kammer, Vanderbilt University Eye Institute, 2311 Pierce Ave., Nashville TN 37232, USA. Phone: 615-936-7190.

Timothy Fitzgerald, Merck & Co. Inc. Global Health Outcomes, WP97A-243, White Horse Station NJ 08889, USA. Phone: 215-652-2800.

Malik Y. Kahook, University of Colorado School of Medicine, Mailstop F-731, Aurora CO 80045, USA. Phone: 303-848-2500.

References

- Amrhein PC, Miller WR, Yahne CE, Palmer M, Fulcher L. Client commitment language during motivational interviewing predicts drug use outcomes. Journal of Consulting and Clinical Psychology. 2003;71:862–878. doi: 10.1037/0022-006X.71.5.862.

- Baer JS, Rosengren DB, Dunn CW, Wells EA, Ogle RL, Hartzler B. An evaluation of workshop training in motivational interviewing for addiction and mental health clinicians. Drug and Alcohol Dependence. 2004;73:99–106. doi: 10.1007/s12160-014-9595-x.

- Battaglia C, Benson SL, Cook PF, Prochazka A. Building a tobacco cessation telehealth care management program for veterans with posttraumatic stress disorder. Journal of the American Psychiatric Nurses Association. 2013;19:78–91. doi: 10.1177/1078390313482214.

- Bellg AJ, Borrelli B, Resnick B, Hecht J, Minicucci DS, Ory M. Enhancing treatment fidelity in health behavior change studies: Best practices and recommendations from the NIH Behavior Change Consortium. Health Psychology. 2004;23:443–451. doi: 10.1037/0278-6133.23.5.443.

- Boland MV, Chang DS, Frazier T, Plyler R, Friedman DS. Electronic monitoring to assess adherence with once-daily glaucoma medications and risk factors for nonadherence: The Automated Dosing Reminder Study. JAMA Ophthalmology. 2014;132:838–844. doi: 10.1001/jamaophthalmol.2014.856.

- Borrelli B. The assessment, monitoring, and enhancement of treatment fidelity in public health clinical trials. Journal of Public Health Dentistry. 2011;71:S52–S63. doi: 10.1111/j.1752-7325.2011.00233.x.

- Burke BL, Arkowitz H, Menchola M. The efficacy of motivational interviewing: a meta-analysis of controlled clinical trials. Journal of Consulting and Clinical Psychology. 2003;71:843–861. doi: 10.1037/0022-006X.71.5.843.

- Carver CS, Scheier MF. On the Self-Regulation of Behavior. New York: Cambridge University Press; 2001.

- Chesney MA. The elusive gold standard: future perspectives for HIV adherence assessment and intervention. Journal of Acquired Immune Deficiency Syndromes. 2006;43:S149–S155. doi: 10.1080/09540120050042891.

- Collins LM, Schafer JL, Kam CM. A comparison of inclusive and restrictive strategies in modern missing data procedures. Psychological Methods. 2001;6:330–351. doi: 10.1037/1082-989X.6.4.330.

- Cook PF. Adherence to medications. In: O’Donohue WT, Levensky ER, editors. Promoting Treatment Adherence: A Practical Handbook for Health Care Providers. Thousand Oaks, CA: Sage; 2006. pp. 183–202.

- Cook PF, Bremer RW, Ayala AJ, Kahook MY. Feasibility of motivational interviewing delivered by a glaucoma educator to improve medication adherence. Clinical Ophthalmology. 2010;4:1091–1101. doi: 10.2147/OPTH.S12765.

- Cook PF, Manzouri S, Aagaard L, O’Connell L, Corwin M, Gance-Cleveland B. Results from 10 years of interprofessional training on motivational interviewing. Evaluation and the Health Professions, Online First. 2016. doi: 10.1177/0163278716656229.

- Cook PF, McElwain CJ, Bradley-Springer L. Feasibility of a daily electronic survey to study prevention behavior with HIV-infected individuals. Research in Nursing and Health. 2010;33:221–234. doi: 10.1002/nur.20381.

- Cook PF, Richardson G, Wilson A. Evaluating the delivery of motivational interviewing to promote children’s oral health at a multi-site urban Head Start program. Journal of Public Health Dentistry. 2012;73:147–150. doi: 10.1111/j.1752-7325.2012.00357.x.

- Cook PF, Schmiege SJ, Mansberger SL, Kammer J, Fitzgerald T, Kahook MY. Predictors of adherence to glaucoma treatment in a multi-site study. Annals of Behavioral Medicine. 2014;49:29–39. doi: 10.1007/s12160-014a-96.

- Cook PF, Schmiege SJ, McClean M, Aagaard L, Kahook MY. Practical and analytic issues in the electronic assessment of adherence. Western Journal of Nursing Research. 2011;34:598–620. doi: 10.1177/0193945911427153.

- Friedman DS, Quigley HA, Gelb L, Tan J, Margolis J, Shah SN, Hahn S. Using pharmacy claims data to study adherence to glaucoma medications: Methodology and findings of the glaucoma adherence and persistency study (GAPS). Investigative Ophthalmology & Visual Science. 2007;48:5052–5057. doi: 10.1167/iovs.07-0290.

- Gordon ME, Kass MA. Validity of standard compliance measures in glaucoma compared with an electronic eyedrop monitor. In: Cramer JA, Spilker B, editors. Patient Compliance in Medical Practice and Clinical Trials. New York: Raven; 1991. pp. 163–173.

- Haynes RB, Ackloo E, Sahota N, McDonald HP, Yao X. Interventions for enhancing medication adherence. Cochrane Database of Systematic Reviews. 2008:CD000011. doi: 10.1002/14651858.CD000011.pub3.

- Hox J. Multilevel analysis: Techniques and applications. Mahwah, NJ: Erlbaum; 2002.

- Krousel-Wood M, Islam T, Webber LS, Re RN, Morisky DE, Munter P. New medication adherence scale versus pharmacy fill rates in seniors with hypertension. American Journal of Managed Care. 2009;15:59–66.

- Lambert MJ, Ogles BM. The efficacy and effectiveness of psychotherapy. In: Lambert MJ, editor. Bergin and Garfield’s Handbook of Psychotherapy and Behavior Change. 5th ed. New York: Wiley; 2004. pp. 139–193.

- Lenth RV. Some practical guidelines for effective sample size determination. The American Statistician. 2001;55:187–193.

- Leon AC, Davis LL, Kraemer HC. The role and interpretation of pilot studies in clinical research. Journal of Psychiatry Research. 2011;45:626–629. doi: 10.1016/j.psychires.2010.10.008.

- Lewis SJ, Abell N. Development and evaluation of the Adherence Attitude Inventory. Research on Social Work Practice. 2002;12:107–123. doi: 10.1177/104973150201200108.

- Liu H, Golin CE, Miller JG, Hays RD, Beck CK, Sanandaji S, Wenger NS. A comparison study of multiple measures of adherence to HIV protease inhibitors. Annals of Internal Medicine. 2001;134:968–977. doi: 10.7326/0003-4819-134-10-200105150-00011.

- Lundahl BW, Kunz C, Brownell C, Tollefson D, Burke BL. A meta-analysis of motivational interviewing: Twenty-five years of empirical studies. Research on Social Work Practice. 2010;20:137–160. doi: 10.1177/1049731509347850.

- Mangione CM, Lee PP, Gutierrez PR, Spritzer K, Berry S, Hays RD. Development of the 25-item National Eye Institute Visual Function Questionnaire (VFQ-25). Archives of Ophthalmology. 2001;119:1050–1058. doi: 10.1001/archopth.121.4.531.

- Markland D, Ryan RM, Tobin VJ, Rollnick S. Motivational interviewing and self-determination theory. Journal of Social and Clinical Psychology. 2005;24:811–831. doi: 10.1521/jscp.2005.24.6.811.

- Matthews EE, Cook PF, Terada M, Aloia M. Randomizing research participants: Promoting balance and concealment in small samples. Research in Nursing and Health. 2010;33:243–253. doi: 10.1002/nur.20375.

- Miller WR, Rollnick S. Motivational Interviewing: Helping People Change. 3rd ed. New York: Guilford Press; 2013.

- Miller WR, Rose GS. Toward a theory of motivational interviewing. American Psychologist. 2009;64:527–537. doi: 10.1037/a0016830.

- Miller WR, Yahne CE, Moyers TB, Martinez J, Pirritano M. A randomized trial of methods to help clinicians learn motivational interviewing. Journal of Consulting and Clinical Psychology. 2004;72:1050–1062. doi: 10.1037/0022-006X.72.6.1050.

- Moyers T, Martin T, Catley D, Karris KJ, Ahluwalia JS. Assessing the integrity of motivational interviewing interventions. Behavioral and Cognitive Psychotherapy. 2003;31:177–184. doi: 10.1017/S1352465803002054.

- Moyers TB, Miller WR, Hendrickson SML. How does motivational interviewing work? Therapist interpersonal skill predicts client involvement within motivational interviewing sessions. Journal of Consulting and Clinical Psychology. 2005;73:590–598. doi: 10.1037/0022-006X.73.4.590.

- Okeke CO, Quigley HA, Jampel HD, Ying G, Plyler RJ, Jiang Y, Friedman DS. Adherence with topical glaucoma medication monitored electronically: The Travatan Dosing Aid Study. Ophthalmology. 2009a;116:191–199. doi: 10.1016/j.ophtha.2008.09.004.

- Okeke CO, Quigley HA, Jampel HD, Ying G, Plyler RJ, Jiang Y, Friedman DS. Interventions improve poor adherence with once daily glaucoma medications in electronically monitored glaucoma patients. Ophthalmology. 2009b;116:2286–2293. doi: 10.1016/j.ophtha.2009.05.026.

- Quigley HA. Reminder systems, not education, improve glaucoma adherence: A comment on Cook et al. Annals of Behavioral Medicine. 2014;49:5–6. doi: 10.1007/s12160-014-9644-5.

- Resnick B, Bellg AJ, Borrelli B, DeFrancesco C, Breger R, Hecht J, et al. Examples of implementation and evaluation of treatment fidelity in the BCC studies: where we are and where we need to go. Annals of Behavioral Medicine. 2005;29(Suppl):46–54. doi: 10.1207/s15324796abm2902s_8.

- Richardson C, Brunton L, Olleveant N, Henson DB, Pilling M, Mottershead J, Waterman H. A study to assess the feasibility of undertaking a randomized controlled trial of adherence with eye drops in glaucoma patients. Patient Preference and Adherence. 2013;7:1025–1039. doi: 10.2147/PPA.S47785.

- Rossi GC, Pasinetti GM, Scudeller L, Radaelli R, Bianchi PE. Do adherence rates and glaucomatous visual field progression correlate? European Journal of Ophthalmology. 2010;21:410–414. doi: 10.5301/ejo.5000176.

- Schoener EP, Madeja CL, Henderson MJ, Ondersma SJ, Janisse JJ. Effects of motivational interviewing training on mental health therapist behavior. Drug and Alcohol Dependence. 2006;82:269–275. doi: 10.1016/j.drugalcdep.2005.10.1003.

- Sitzmann T, Ely K. Sometimes you need a reminder: The effects of prompting self-regulation on regulatory processes, learning, and attrition. Journal of Applied Psychology. 2010;95:132–144. doi: 10.1037/a0018080.

- Taplin SH, Barlow WE, Ludman E, MacLehos R, Meyer DM, Seger D, Curry S. Testing reminder and motivational telephone calls to increase screening mammography: A randomized study. Journal of the National Cancer Institute. 2000;92:233–242. doi: 10.1093/jnci/92.3.233.
