Abstract

During spoken language comprehension, listeners transform continuous acoustic cues into categories (e.g. /b/ and /p/). While longstanding research suggests that phonetic categories are activated in a gradient way, there are also clear individual differences: more gradient categorization has been linked to various communication impairments such as dyslexia and specific language impairment (Joanisse, Manis, Keating, & Seidenberg, 2000; López-Zamora, Luque, Álvarez, & Cobos, 2012; Serniclaes, Van Heghe, Mousty, Carré, & Sprenger-Charolles, 2004; Werker & Tees, 1987). Crucially, most studies have used two-alternative forced choice (2AFC) tasks to measure the sharpness of between-category boundaries. Here we propose an alternative paradigm that allows us to measure categorization gradiency in a more direct way. Furthermore, we follow an individual differences approach to: (a) link this measure of gradiency to multiple cue integration, (b) explore its relationship to a set of other cognitive processes, and (c) evaluate its role in individuals’ ability to perceive speech in noise. Our results provide validation for this new method of assessing phoneme categorization gradiency and offer preliminary insights into how different aspects of speech perception may be linked to each other and to more general cognitive processes.

Keywords: phoneme categorization, individual differences, categorical perception, speech perception, multiple cue integration, visual analogue scaling, two-alternative forced choice, executive function, speech-in-noise perception

Introduction

Speech varies along multiple acoustic dimensions (e.g. formant frequencies, durations of various events) that are continuous and highly variable. From this signal, listeners extract linguistically relevant information that serves as the basis for recognizing words. This process represents a transformation from a continuous input that is both ambiguous and redundant into relatively discrete categories, such as features, phonemes, and words.

During this process, listeners face a critical problem: stimuli with the same acoustic cue values may correspond to different categories depending on the context (e.g., speech rate or talker’s sex). For example, Voice Onset Time (VOT) is the time between the onset of the release of the articulators and the onset of laryngeal vibration. VOT is the primary cue distinguishing voiced from unvoiced stop consonants, with VOTs below 20 ms typical for /b/, /d/, /g/, and VOTs over 20 ms typical for /p/, /t/, /k/. However, contextual factors can make VOT more ambiguous. For example, a stimulus with a VOT of 20 ms could be a /b/ in slow speech or a /p/ in fast speech. Despite over 40 years of research, speech scientists have identified few (if any) acoustic cues that unambiguously identify a phoneme across different contexts (e.g., McMurray & Jongman, 2015; Ohala, 1996).

Traditional approaches suggest that this problem is solved via specialized mechanisms that discard irrelevant information, leading to the perception of distinct phonemic categories (Liberman, Harris, Hoffman, & Griffith, 1957). However, recent studies show that typical listeners maintain information that is seemingly irrelevant for discriminating between phonemic categories (i.e. within-category information; Massaro & Cohen, 1983; McMurray, Tanenhaus, & Aslin, 2002; Toscano, McMurray, Dennhardt, & Luck, 2010).

Recent theoretical approaches suggest that such gradient representations may be useful for coping with ambiguity and integrating different pieces of information (Clayards, Tanenhaus, Aslin, & Jacobs, 2008; Kleinschmidt & Jaeger, 2015; McMurray & Farris-Trimble, 2012; Oden & Massaro, 1978). However, there is little empirical data that speaks to the functional role of maintaining within-category information (though see McMurray, Tanenhaus, & Aslin, 2009).

The present study addresses this issue using an individual differences approach. Work by Kong and Edwards (2011, 2016) suggests listeners vary in the degree to which they maintain within-category information (i.e. how gradiently they categorize speech sounds). We examined these individual differences and their role in speech perception by (1) linking them to a different aspect of speech perception (the use of secondary acoustic cues), (2) investigating their potential sources in executive function, and (3) examining how they relate to speech perception in noise.

The problem of lack of invariance and Categorical Perception

Variability in the acoustic signal is commonly described in terms of acoustic/phonetic cues like VOT. Critically, while acoustic cues are continuous, our percept (as well as most linguistic analyses) reflects more or less discrete categories (/b/ and /p/). Mapping continuous cues onto discrete categories is complex because the same cue values can map onto different categories, depending on many factors like the talker’s gender (Hillenbrand, Getty, Wheeler, & Clark, 1995), neighboring speech sounds (coarticulation, Hillenbrand, Clark, & Nearey, 2001), and speaking rate (Miller, Green, & Reeves, 1986). This is the problem of lack of invariance: speech sounds do not have invariant acoustic attributes, and a single acoustic cue cannot be reliably mapped to a single speech sound.

One solution to the lack of invariance problem was suggested by Categorical Perception (CP; Liberman et al., 1957). CP describes the well-established behavioral phenomenon that discrimination within a category (e.g. between two instances of a /b/) is poor, but discrimination of an equivalent acoustic difference that spans a category boundary is quite good (e.g., Liberman & Harris, 1961; Pisoni & Tash, 1974; Schouten & Hessen, 1992; see Repp, 1984, for a review; see also Dehaene-Lambertz, 1997; Phillips et al., 2000; Sams, Aulanko, Aaltonen, & Näätänen, 1990; Chang et al., 2010, for related neural evidence).

One hypothesis is that CP derives from some form of warping of the perceptual space that amplifies the influence of categories. Under this view, a [b] with a VOT of 15 ms is encoded more similarly to one with a VOT of 0 ms than to a [p] with a VOT of 30 ms. Such warping is often attributed to specialized processes that discard within-category variation in favor of discrete encoding at both the auditory/cue level and at the level of phoneme categories. This view—perhaps best exemplified by motor theory (Liberman & Whalen, 2000)—suggests that auditory encoding is aligned to the discrete goals of the system (phoneme categorization). As a result, acoustic variation, arising from talker differences and/or co-articulation, does not pose a challenge for speech perception, because the underlying representations (gestures or phonological units) can be rapidly extracted by such specialized mechanisms.

The gradient alternative

According to CP, encoding of acoustic cues is somewhat discrete, and this enables cues to be easily mapped to fairly discrete categories. However, the claim of discreteness at both levels has not held up to scrutiny. Serious concerns have been raised about the discrimination tasks used to establish CP, and the degree to which discrimination is categorical (i.e. better discrimination across a boundary) depends on the specific task (Carney, Widin, & Viemeister, 1977; Gerrits & Schouten, 2004; Pisoni & Lazarus, 1974; Schouten, Gerrits, & Hessen, 2003). Gerrits and Schouten (2004) and Schouten et al. (2003) found that working memory demands associated with different tasks can lead listeners to rely on subjective labels (rather than auditory codes, which may decay more rapidly). A reliance on labels could lead to a more categorical pattern of responses, even if the pre-categorized perceptual representation is continuous (see also Carney et al., 1977; Gerrits & Schouten, 2004; Pisoni & Tash, 1974). Therefore, CP may in fact reflect the influence of categories on memory and decision processes, not on perceptual processes per se. Indeed, when less biased discrimination measures are employed, CP-like effects disappear (Gerrits & Schouten, 2004; Massaro & Cohen, 1983; Pisoni & Lazarus, 1974).

This dependence of CP on the discrimination task implies that encoding of speech cues may not be warped at all, but rather may reflect the input monotonically. Consistent with this idea, ERP and MEG responses to isolated words from VOT continua reflect a linear pattern of response to changes along the continuum with no evidence of warping (Frye, Fisher, & Coty, 2007; Toscano et al., 2010) (and see Myers & Blumstein, 2009 for MRI evidence). Moreover, beyond auditory encoding, there is substantial evidence that fine-grained detail is preserved at higher levels of the pathway, affecting even lexical processing (Andruski, Blumstein, & Burton, 1994; McMurray et al., 2002; Utman, Blumstein, & Burton, 2000).

The functional role of gradiency

The usefulness of maintaining within-category information throughout levels of processing is a key idea of several theoretical approaches (Goldinger, 1998; Kleinschmidt & Jaeger, 2015; Kronrod, Coppess, & Feldman, 2016; McMurray & Jongman, 2011; Oden & Massaro, 1978). It is hypothesized to allow for more flexible and efficient speech processing via at least three mechanisms. First, processes like coarticulation and assimilation leave fine-grained, sub-categorical traces in the signal (e.g., Gow, 2001), which can be used to anticipate upcoming input, speeding up processing. Multiple studies suggest that listeners take advantage of anticipatory coarticulatory information in this way (Gow, 2001; Mahr, McMillan, Saffran, Ellis Weismer, & Edwards, 2015; McMurray & Jongman, 2015; Salverda, Kleinschmidt, & Tanenhaus, 2014; Yeni–Komshian, 1981). As these modifications are largely within-category, such anticipation is only possible if listeners are sensitive to this fine-grained detail.

Second, gradient encoding may offer greater flexibility in how cues map onto categories (e.g., Massaro & Cohen, 1983; Toscano et al., 2010). Continuous encoding of cues may, for example, permit the values of one cue (e.g., VOT) to be interpreted in light of the values of another cue (e.g., F0). Such processes may underlie the well-known trading relations that have been documented in speech perception (Repp, 1982; Summerfield & Haggard, 1977; Winn, Chatterjee, & Idsardi, 2013). This kind of combinatory process would also be necessary for accurately compensating for higher level contextual expectations, for example by recoding pitch relative to the talker’s mean pitch (McMurray & Jongman, 2011, 2015).

Third, gradient responding at higher levels, at the level of phonemes (McMurray, Aslin, Tanenhaus, Spivey, & Subik, 2008; Miller, 1997) or words (Andruski et al., 1994; McMurray et al., 2008), may help cope with uncertainty. With a gradient encoding, the degree to which the perceptual system commits to one representation over another (e.g., /b/ vs. /p/) is monotonically related to the degree of support in the signal. For example, a labial stop with a VOT of 5 ms activates /b/-onset words more than a labial stop with a VOT of 15 ms, even though both map onto the same category. Superficially, this may appear disadvantageous as it could slow an efficient decision. However, given the variability and noise in the signal, gradiency may allow listeners to “hedge” their bets in the face of ambiguity. It is precisely when cue values are more ambiguous that a listener should not commit too strongly and should keep their options open until more information arrives (Clayards et al., 2008; McMurray et al., 2009).

In sum, gradiency may allow the system to (a) harness fine-grained (within-category) differences that may be helpful; (b) integrate information from multiple sources more flexibly; and (c) delay commitment when insufficient information is available. Thus, while the somewhat empirical question of the gradient versus discrete nature of speech representations has been hotly debated (Chang et al., 2010; Gerrits & Schouten, 2004; Liberman & Whalen, 2000; Massaro & Cohen, 1983; McMurray et al., 2002; Myers & Blumstein, 2009; Toscano et al., 2010), it has important theoretical ramifications for how listeners solve a fundamental perceptual problem.

Individual differences in phoneme categorization

Despite the evidence for gradiency in typical listeners, it is less clear whether there are individual differences. There is now mounting evidence in neuroscience for multiple pathways of speech processing (Blumstein, Myers, & Rissman, 2005; Hickok & Poeppel, 2007; Myers & Blumstein, 2009) that can be flexibly deployed under different conditions (Du, Buchsbaum, Grady, & Alain, 2014). Given this, different listeners may adopt different solutions to this problem, perhaps providing more weight to either dorsal or ventral pathways (see Ojemann, Ojemann, Lettich, & Berger, 1989 for analogous evidence in word production). Similarly, the Pisoni and Tash (1974) model of CP suggests that listeners have simultaneous access to both continuous acoustic cues and discrete categories. Again, this raises the possibility that listeners may weight these two sources of information differently during speech perception.

Considering the function of gradiency in speech perception, the possibility of individual differences raises three questions: 1) Are listeners gradient to varying degrees? 2) What are the sources of these differences? 3) Do such differences impact speech perception as a whole?

Much of the debate around categorical versus gradient perception in typical listeners concerns the degree to which gradiency might be adaptive (or maladaptive). In this regard, a consideration of listeners with communication disorders may be useful. Work on language-related disorders like specific language impairment (SLI) and dyslexia suggests significant differences in the gradiency of speech perception between impaired and typical listeners (Coady, Evans, Mainela-Arnold, & Kluender, 2007; Robertson, Joanisse, Desroches, & Ng, 2009; Serniclaes, 2006; Sussman, 1993; Werker & Tees, 1987, but see Coady, Kluender, & Evans, 2005; McMurray, Munson, & Tomblin, 2014). Much of this work has examined phoneme categorization in a 2AFC task. In this task, participants hear a word (or phoneme sequence; e.g. ba or pa) from a continuum ranging in small steps from one endpoint to the other and assign one of two labels. Listeners typically show a sigmoidal response function with a sharp transition from one phoneme category to the other. Critically, the steepness of the slope of the response function is used as a measure of category discreteness.

Using this measure, impaired listeners generally show shallower transitions between categories (but see Blomert & Mitterer, 2004; Coady, Kluender, & Evans, 2005; McMurray et al., 2014). For example, Werker and Tees (1987) found that children with reading difficulties had shallower slopes on a /b/-to-/d/ continuum than typical children (see also Godfrey & Syrdal-Lasky, 1981; Serniclaes & Sprenger-Charolles, 2001). Joanisse, Manis, Keating, and Seidenberg (2000) found a similar pattern for language impaired (LI) children. More recently, López-Zamora et al. (2012) found that shallower slopes in a phoneme identification task predict atypical syllable frequency effects in visual word recognition, suggesting some kind of atypical pattern of sublexical processing. Lastly, Serniclaes, Ventura, Morais, and Kolinski (2005) found that illiterate adults have shallower identification slopes than literate ones.

These findings are typically attributed to non-optimal CP; if impaired learners encode cues inaccurately (e.g., they hear a VOT of 10 ms occasionally as 5 or 15 ms), then tokens near the boundary are likely to be encoded with cue values on the other side, flattening the function. This assumes a sharp, discrete category boundary as the optimum response function, which is corrupted by internal noise (in the encoding of acoustic cues) for disordered listeners (Moberly, Lowenstein, & Nittrouer; Winn & Litovsky, 2015). Thus, impaired listeners may have equally sharp underlying categorization functions as non-impaired listeners, but the categorization output is corrupted due to noisier auditory encoding.
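The flattening argument can be made concrete with a small sketch. It assumes, purely for illustration, a step-like category boundary at 20 ms and Gaussian noise in the encoding of VOT; under those assumptions the probability of a /p/ response is a cumulative normal in VOT, so larger encoding noise produces a shallower identification function. The function names and parameter values are hypothetical and are not taken from the studies cited above.

```python
import math

def p_voiceless(vot_ms, boundary_ms=20.0, sigma_ms=5.0):
    """P(/p/ response) for a step-like boundary applied to a VOT that is
    encoded with Gaussian noise (SD = sigma_ms). Illustrative values only."""
    z = (vot_ms - boundary_ms) / sigma_ms
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def boundary_slope(sigma_ms, boundary_ms=20.0, delta=0.5):
    """Numerical slope of the identification function at the boundary."""
    hi = p_voiceless(boundary_ms + delta, boundary_ms, sigma_ms)
    lo = p_voiceless(boundary_ms - delta, boundary_ms, sigma_ms)
    return (hi - lo) / (2 * delta)

# More encoding noise -> shallower slope, although the underlying
# categorization rule (a sharp step at the boundary) never changes.
for sigma in (2.0, 5.0, 10.0):
    print(f"sigma = {sigma:4.1f} ms -> slope = {boundary_slope(sigma):.3f} per ms")
```

On this account, the slope reflects only the amount of encoding noise, which is why a shallow 2AFC slope alone cannot establish that the underlying categories are graded.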

This account offers a clear explanation for listeners with obvious sensory impairments (e.g., hearing impairment). However, it may be less compelling in the case of listeners with dyslexia or SLI, who may have impairments at higher levels than cue encoding. One alternative explanation is that children with dyslexia have heightened within-category discrimination (Werker & Tees, 1987). This links dyslexia to a difficulty in discarding acoustic detail that is linguistically irrelevant (Bogliotti, Serniclaes, Messaoud-Galusi, & Sprenger-Charolles, 2008; Serniclaes et al., 2004), a failure of a functional goal of categorization. Even in this case, however, the assumption is that discrete categorization and a reduction of within-category sensitivity are to be desired, and that a failure of any aspect of this process drives the shallower response slope (but see Messaoud-Galusi, Hazan, & Rosen, 2011).

Few studies have examined individual differences from the perspective that gradient perception may be beneficial (though see McMurray et al., 2014). An exception is Clayards et al. (2008), who manipulated within-category variability of VOT across trials. When VOTs were more variable, listeners’ response patterns followed shallower 2AFC slopes. This suggests that a shallower identification slope may reflect a different (and more useful) way of mapping cue values onto phoneme categories in that it reflects uncertainty in the input.

It is not clear how to reconcile the classic (categorical) view, which argues for the utility of more categorical labeling functions, with the more recent view that gradiency may be beneficial. Both views may hold some truth; shallower functions could derive from both noisier cue encoding and a more graded mapping of cues to categories. What is clear from the work on disordered language is that group differences in categorization relate to differences in language processing. More importantly, our review suggests that measures like 2AFC phoneme identification may not do a good job of measuring these differences, because it is difficult to distinguish noisy cue encoding from more gradient categorization.

Towards a new measure of phoneme perception gradiency

The foregoing review reveals a fundamental limitation of 2AFC tasks: the systematicity with which listeners identify acoustic cues and map them to phoneme categories (noise) may be orthogonal to the degree to which they maintain within-category information (López-Zamora et al., 2012; Messaoud-Galusi et al., 2011). This is partly because the 2AFC task only allows binary responses. When a listener reports a stimulus as /b/ 30% of the time and as /p/ 70%, it could be because they discretely thought the stimulus was a /b/ on 30% of trials, or because they thought it had some likelihood of being either or both (on every trial) and the responses reflect the probability distributions of cues-to-categories mappings. A continuous measure may be more precise; if listeners hear the stimulus categorically as /b/ on 30% of trials, the trial-by-trial data should reflect a fully /b/-like response on those trials. In contrast, if listeners’ representations reflect partial ambiguity, they should respond in between with variance clustered around the mean rating. As Massaro and Cohen (1983) argue: “relative to discrete judgment, continuous judgments may provide a more direct measure of the listener’s perceptual experience”.

One such task is a visual analogue scaling (VAS) task. In this task, participants hear an auditory stimulus and select a point on a line to indicate how close the stimulus was to the word on each side (Figure 1; Massaro & Cohen, 1983, for an analogous task in discrimination). This continuous response (instead of a binary choice) permits a more direct measure of gradiency. For example, if we assume a step-like categorization function plus noise in the cue encoding, listeners’ responses should cluster close to the extremes of the scale, though for stimuli near the boundary, participants might choose the wrong extreme because noise would lead to misclassifications (e.g., they may choose the left end of the continuum for ambiguous /p/-initial stimuli). On the other hand, if listeners respond gradiently, we should observe a more linear relationship between the cue value (e.g. the VOT) and the VAS response, with participants using the whole range of the line and variance across trials clustering around the line. However, under either model, a 2AFC task would yield the same response function: a shallower slope.
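The contrast between the two models can be illustrated with a short simulation. This is a minimal sketch under stated assumptions, not a model fitted to any data: the boundary (20 ms), the encoding noise (8 ms), the response noise (0.05 on a 0 to 1 scale), and both listener functions are hypothetical. The mean rating stands in for the proportion of /p/ responses that a 2AFC task would record, while the trial-by-trial spread is what only the VAS task reveals.

```python
import math
import random
import statistics

def p_voiceless(vot, boundary=20.0, sigma=8.0):
    """Cumulative-normal identification function (assumed form)."""
    z = (vot - boundary) / sigma
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def categorical_listener(vot, rng, boundary=20.0, sigma=8.0):
    """Step-like categorization of a noisily encoded VOT: rating is 0 or 1."""
    encoded = vot + rng.gauss(0.0, sigma)
    return 1.0 if encoded > boundary else 0.0

def gradient_listener(vot, rng, boundary=20.0, sigma=8.0, rating_sd=0.05):
    """Graded mapping: the rating tracks the cue value, plus small response noise."""
    rating = p_voiceless(vot, boundary, sigma) + rng.gauss(0.0, rating_sd)
    return min(1.0, max(0.0, rating))

def simulate(listener, vot, n_trials=2000, seed=1):
    """Return (mean rating, SD of ratings) over repeated trials at one VOT."""
    rng = random.Random(seed)
    ratings = [listener(vot, rng) for _ in range(n_trials)]
    return statistics.mean(ratings), statistics.pstdev(ratings)

vot = 23.0  # an ambiguous token near the assumed boundary
cat_mean, cat_sd = simulate(categorical_listener, vot)
grad_mean, grad_sd = simulate(gradient_listener, vot)
print(f"categorical: mean = {cat_mean:.2f}, trial SD = {cat_sd:.2f}")
print(f"gradient:    mean = {grad_mean:.2f}, trial SD = {grad_sd:.2f}")
```

The two simulated listeners have nearly the same mean response, yet the categorical listener's ratings sit at the endpoints of the scale while the gradient listener's ratings cluster around the mean.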

Figure 1.

Visual analogue scaling task used by Kong and Edwards (2011, 2016)

VAS tasks have been used in speech, generally supporting the gradient perspective. Massaro and Cohen (1983) used a VAS task to show that discrimination continuously related to acoustic distance without warping by categories. Many studies by Miller and colleagues (e.g., Allen & Miller, 1999; Miller & Volaitis, 1989) used a VAS goodness scale task (e.g. asking “How good of a /p/ was this?”) to characterize the graded prototype structure of phonetic categories. However, none of these lines of work examined individual differences nor related such measures to variation in 2AFC categorization.

Kong and Edwards (2011, 2016), building on related work by Schellinger, Edwards, Munson, and Beckman (2008) and Urberg-Carlson, Kaiser, and Munson (2008), offer evidence for individual differences (see also Schellinger, Munson, & Edwards, 2016). They tested adults on a /da/-/ta/ continuum, asking them to rate each token on a continuous scale. Participants varied in their ratings; some exhibited a more categorical pattern, preferring the endpoints of the line, while others were more gradient, using the entire scale. Further, more gradient responders showed a stronger reliance on a secondary acoustic cue in a separate categorization task, a pattern that was consistent across two separate testing sessions. Lastly, there was a correlation between gradiency and cognitive flexibility (assessed by the switch version of the Trail Making task), suggesting a link between speech perception and executive function.

These findings speak to the potential strengths of an individual differences approach for studying fundamental aspects of speech perception. Kong and Edwards (2011, 2016) demonstrate the reliability of VAS measures, and provide preliminary support for a link between gradiency and the use of secondary cues (a key prediction of accounts suggesting gradiency could be beneficial to speech perception). However, some important methodological refinements and experimental extensions are necessary to fully address the key questions we ask here.

First, to assess secondary cue use, Kong and Edwards used the anticipatory eye movement (AEM) task (McMurray & Aslin, 2004). This is a somewhat non-traditional measure of phoneme categorization which makes it difficult to evaluate their results in relation to studies using more traditional (e.g., 2AFC) measures of phoneme categorization. It is, therefore, unclear how the same individual may perform the more traditional 2AFC task versus a task like the VAS, and the differences between the two patterns of performance would inform our understanding of the speech perception processes these two tasks tap into.

The previous point is particularly important given the discrepancy between studies of language disorders that have found shallower 2AFC slopes (e.g., Werker & Tees, 1987), and the newer view from basic research showing that gradiency is the typical pattern in non-impaired listeners and may be adaptive (Clayards et al., 2008). The VAS task may offer unique insight into the relationship between the 2AFC task and these contrasting theoretical views of gradiency.

The second motivation for the current study is arguably the most important: Kong and Edwards’ (2011) statistical measure of gradiency captured the overall distributions of ratings (e.g., how often participants use the VAS endpoints) independently of the stimulus characteristics. While this documents individual differences, it may also be limited for two reasons. First, it leaves open the possibility that the measure may also be sensitive to other aspects of speech perception (e.g. multiple cue integration or noise). For example, a flatter distribution could be obtained if listeners matched their VAS ratings to the VOT, or if they showed a large effect of F0 (which would spread out their responses), or even if they simply guessed. In contrast, by taking into account the stimulus (e.g., the VOT) we can estimate categorization gradiency independently of potentially confounding factors. Second, by developing a stimulus-dependent measure we can also compute an estimate of trial-by-trial noise in the encoding of stimuli, addressing a main critique of the 2AFC task.
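To illustrate what a stimulus-dependent measure can offer, the following sketch fits a two-parameter logistic to simulated VAS ratings as a function of VOT and reports both the fitted slope (shallower means more gradient) and the residual standard deviation (an index of trial-by-trial noise). This is only one simple possibility and should not be read as the technique developed in this study. The grid-search fit, the 0 to 1 rating scale, the equally spaced VOT steps, and the simulated listeners are all assumptions made for the example.

```python
import math
import random

def logistic(vot, boundary, slope):
    return 1.0 / (1.0 + math.exp(-slope * (vot - boundary)))

def fit_vas(vots, ratings):
    """Least-squares grid search for a logistic relating VOT to VAS rating.
    Returns (boundary_ms, slope_per_ms, residual_sd)."""
    best = None
    for boundary in range(10, 36):            # candidate boundaries: 10..35 ms
        for s100 in range(2, 201, 2):         # candidate slopes: 0.02..2.00 per ms
            slope = s100 / 100.0
            sse = sum((r - logistic(v, boundary, slope)) ** 2
                      for v, r in zip(vots, ratings))
            if best is None or sse < best[0]:
                best = (sse, float(boundary), slope)
    sse, boundary, slope = best
    return boundary, slope, math.sqrt(sse / len(ratings))

def simulate_listener(true_slope, seed, boundary=20.0, rating_sd=0.05, reps=20):
    """Hypothetical listener: ratings follow a logistic in VOT plus Gaussian noise."""
    rng = random.Random(seed)
    steps = [1 + i * 44 / 6 for i in range(7)]  # assumes equal spacing, 1 to 45 ms
    vots, ratings = [], []
    for vot in steps:
        for _ in range(reps):
            r = logistic(vot, boundary, true_slope) + rng.gauss(0.0, rating_sd)
            vots.append(vot)
            ratings.append(min(1.0, max(0.0, r)))  # keep ratings on the scale
    return vots, ratings

for label, true_slope in (("gradient", 0.1), ("categorical", 1.0)):
    b, s, sd = fit_vas(*simulate_listener(true_slope, seed=7))
    print(f"{label:11s} boundary = {b:.0f} ms, slope = {s:.2f}, residual SD = {sd:.3f}")
```

Because the slope and the residual spread are estimated separately, the two simulated listeners differ in fitted slope while showing similar residual noise, a separation that a stimulus-independent summary of the rating distribution cannot provide.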

Finally, executive function (EF) is a multi-faceted construct. Kong and Edwards used two measures (Trail Making and color-word Stroop), which possibly load on different aspects of EF, but only found a correlation between the former and gradiency (though this should be qualified by their moderate sample size of 30). One goal here was to employ additional measures of EF, along with a much larger sample size to obtain a more definitive answer to this question.

Thus, the present study built on the Kong and Edwards VAS paradigm, but addressed the aforementioned issues with a number of changes and refinements of the methodology, including the use of a novel technique specifically developed to help us disentangle categorization gradiency from other aspects of speech perception.

Present study

We sought to examine individual differences in speech perception by: (1) establishing a precise and theoretically-grounded measure of gradiency from the VAS task; (2) exploring the role of several factors that may be linked to these differences; and (3) assessing the role of gradiency in the perception of speech in noise (an issue not addressed by prior studies).

We collected VAS responses from a large sample (N=131), so that we could better evaluate individual differences in phoneme categorization gradiency. Listeners heard tokens from a two-dimensional voicing continuum (matrix) that simultaneously varied in VOT and F0 (a secondary cue) and rated each token (how b-like versus p-like it sounded) using the VAS. Critically, we developed and validated a new set of statistical tools for assessing an individual subject’s gradiency that captured gradiency in responding in the same model that captured the relationship between stimulus-related factors and VAS responses.

Secondarily, we used a variety of continua (word and nonword, labial- and alveolar-initial) to assess the effects of lexical status and place of articulation respectively. While these manipulations were exploratory, prior results suggest that listeners may be more sensitive to subphonemic detail in real words (McMurray et al., 2008). This raises the possibility that the individual differences reported by Kong and Edwards are only seen with nonwords, while most listeners show a gradient response pattern with words.

Next, we related our gradiency measure to the more standard 2AFC measure of categorization. As described, the 2AFC slope may reflect both categorization gradiency and internal noise in cue encoding. Thus, an explicit comparison between the VAS and 2AFC tasks may help disentangle what the 2AFC task is primarily measuring. Since both tasks are thought to reflect, at least to some degree, categorization gradiency, we expected a positive correlation between the VAS and 2AFC slopes. However, it was not clear how strong a correlation should be expected, given the ambiguity as to what affects the 2AFC task.

We also related gradiency (in the VAS task) to cue integration (from the 2AFC task), indexed by the influence of a secondary cue on categorization. As described above, we predicted that gradient listeners would be more sensitive to fine-grained information and should, therefore, be better at taking advantage of multiple cues (see Kong & Edwards, 2016).

Next, we extended earlier investigations by addressing whether these speech measures (gradiency and multiple cue integration) were related to non-linguistic cognitive abilities. We collected a set of individual differences measures tapping different aspects of executive function to evaluate these higher cognitive processes as possible (direct or indirect) sources of gradiency. Our hypothesis was that, to the extent that speech categorization may draw on domain-general skills like EF or working memory, individual differences in these skills may be reflected in the gradiency or discreteness of categorization.

Finally, we performed a preliminary assessment of the functional role of gradiency (i.e. whether it is beneficial for speech perception) using a speech-in-noise recognition task.

Method

Participants

Participants were 131 adult monolingual speakers of American English, all of whom completed a hearing screening at four octave-spaced audiometric test frequencies for each ear; one participant was excluded on this basis because of thresholds greater than 25 dB HL. Participants received course credit and provided informed consent in accord with University of Iowa IRB policies. Technical problems with several tasks meant that results were unavailable for one or more participants. Consequently, between two and 11 participants were excluded from the analyses of the specific tasks for which data were missing.

Overview of design

Participants performed six tasks (Table 1). To explore stimulus-driven effects on gradiency, we included voicing continua for labials and alveolars (within subject) in words, nonwords and phonotactically impermissible nonwords (between subjects). VAS stimuli varied on seven VOT steps and five F0 steps (secondary cue).

Table 1.

Order and description of tasks

| Order | Task | Domain | Primary measure of… |
|---|---|---|---|
| 1 | VAS | Speech categorization | Phoneme categorization gradiency |
| 2 | Flanker | Cognitive | Executive Function: Inhibitory Control |
| 3 | N-Back | Cognitive | Executive Function: Working memory |
| 4 | 2AFC | Speech categorization | Secondary cue use |
| 5 | Trail Making | Cognitive | Executive Function: General |
| 6 | AzBio | Speech perception in noise | Speech perception accuracy |

A conventional 2AFC task was compared to the more continuous VAS task. The VAS task was always performed before the 2AFC task to avoid inducing any step-like bias on the former by the latter. The 2AFC task was conducted on continua that varied on seven steps of VOT and only two steps of F0; this allowed an independent estimate of secondary cue use, measured as the difference in the category boundary between the two VOT continua (one at each F0 level).
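As a rough sketch of this boundary-difference measure, the example below locates the 50% crossover of each identification function by linear interpolation and subtracts the two boundaries. The interpolation approach, the VOT step values, and the response proportions are hypothetical stand-ins chosen for illustration (they are not data from this study); a logistic fit would be a common alternative way to estimate the boundary. The direction of the example effect follows the general finding that higher onset F0 favors voiceless responses.

```python
def boundary_by_interpolation(vots, prop_voiceless):
    """VOT at which the proportion of voiceless responses first crosses 0.5,
    found by linear interpolation between adjacent continuum steps."""
    points = list(zip(vots, prop_voiceless))
    for (v0, p0), (v1, p1) in zip(points, points[1:]):
        if p0 < 0.5 <= p1:
            return v0 + (0.5 - p0) * (v1 - v0) / (p1 - p0)
    raise ValueError("response function never crosses 0.5")

# Hypothetical listener (NOT data from the study): seven VOT steps and the
# proportion of /p/ responses at each of the two F0 levels.
vots = [1, 8, 15, 22, 29, 36, 45]                      # assumed step values (ms)
low_f0 = [0.00, 0.02, 0.10, 0.40, 0.85, 0.98, 1.00]
high_f0 = [0.00, 0.05, 0.30, 0.70, 0.95, 1.00, 1.00]

boundary_low = boundary_by_interpolation(vots, low_f0)
boundary_high = boundary_by_interpolation(vots, high_f0)

# Secondary cue use: how far the category boundary moves between F0 levels.
f0_effect = boundary_low - boundary_high
print(f"boundary shift attributable to F0: {f0_effect:.2f} ms")
```

A listener who ignores F0 would show a shift near zero, whereas a larger shift indicates heavier reliance on the secondary cue.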

We used three measures of non-language cognitive function, tapping different aspects of executive function (EF). We used the Flanker task to assess inhibition, the N-Back task, which taps primarily working memory, and the Trail Making task as a measure of planning and executive performance. Finally, as a measure of speech perception accuracy, we administered a computerized version of the AzBio sentences (Spahr et al., 2012), a speech-in-noise measure.

Measuring phoneme categorization gradiency

To measure individual differences in phoneme categorization gradiency we used the VAS task with three types of continua (stimulus-types): 1) CVC real words (RW); 2) CVC nonwords (NW); and 3) phonotactically impermissible nonword CVs that violated an English phonotactic constraint that lax vowels cannot appear in open syllables. Each participant was only tested on one stimulus-type (randomly selected). Within that, each participant was tested on two places of articulation (PoA), labial (e.g. bull-pull) and alveolar (e.g. den-ten; see Table 2).

Table 2.

Stimuli used in the VAS and the 2AFC tasks

| | Stimulus type: Real word | Stimulus type: Nonword | Stimulus type: CV |
|---|---|---|---|
| Labial | bull – pull | buv – puv | buh – puh |
| Alveolar | den – ten | dev – tev | deh – teh |

VAS stimuli and design

For each of the six pairs (Table 2) we created a two-dimensional continuum by orthogonally manipulating VOT and F0 in Praat (Boersma & Weenink, 2016; [version 5.3.23]). VOT was manipulated in natural speech using progressive cross-splicing (Andruski et al., 1994; McMurray et al., 2008). Progressively longer portions of the onset of a voiced sound (/b/ or /d/) were replaced with equivalent amounts from the aspiration of the voiceless sound (/p/ or /t/). Prior to splicing, voicoids were multiplied by a 3-ms onset ramp, and cross-spliced with the consonant burst/aspiration segment using a symmetrical 2-ms cross-fading envelope, to remove any waveform discontinuities at the splice point.

At each VOT step, the pitch contour was extracted and modified using the pitch-synchronous overlap-add (PSOLA) algorithm in Praat. Pitch onset varied in five steps spaced equally from 90 Hz to 125 Hz. Pitch was kept steady over the first two pitch periods of the vowel and fell (or rose) linearly until returning to the original contour 80 ms into the vowel. Final stimuli varied along seven VOT steps (1 to 45 ms) and five F0 steps (90 to 125 Hz). During the VAS task, each participant was presented with all 35 stimuli from each of the two PoA series with three repetitions of each stimulus, resulting in 210 trials. Stimulus presentation was blocked by PoA, with the block order counterbalanced between participants.
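The trial count follows directly from the design and can be checked with a short enumeration. This is only an illustrative sketch: the step indices and the names `VOT_STEPS`, `F0_STEPS`, and `build_vas_trials` are placeholders introduced here, not part of the experiment software.

```python
from itertools import product

# Step counts are taken from the text; the step labels are placeholder indices.
VOT_STEPS = range(1, 8)          # 7 VOT steps
F0_STEPS = range(1, 6)           # 5 F0 steps
POA = ("labial", "alveolar")     # two places of articulation
REPETITIONS = 3                  # repetitions of each stimulus


def build_vas_trials():
    """Return one (poa, vot_step, f0_step, repetition) tuple per VAS trial."""
    return list(product(POA, VOT_STEPS, F0_STEPS, range(REPETITIONS)))


trials = build_vas_trials()
stimuli_per_poa = len(VOT_STEPS) * len(F0_STEPS)
```

Here `stimuli_per_poa` is 35 and `len(trials)` is 210, matching the counts given above. Randomization within blocks and counterbalancing of block order are not modeled.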

VAS procedure

On each trial, participants saw a line with a printed word at each end (e.g. bull and pull, Figure 1). Voiced-initial stimuli were always on the left side. Participants used a computer mouse to click on a vertical bar and drag it from the center to a point on the line to indicate where they thought the sound fell in between the two words. Before starting, participants performed a few practice trials. Unless the participant had clarifying questions, no further instructions were given. The VAS task took approximately 15 minutes.

Pre-processing of VAS data

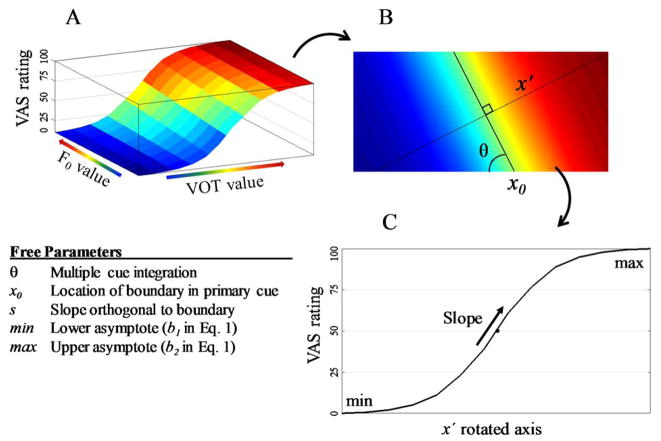

One obvious analytic strategy would be to fit a logistic to each participant’s VAS data and use the slope as a measure of gradiency. However, since stimuli also varied along a secondary cue, this method is problematic; if a listener has a discrete boundary in VOT space, but the location of this boundary varies with F0, the average boundary (across F0s) would look gradient. Instead what is needed is a two-dimensional estimate of the slope. While logistic regression can handle this by weighting and summing the two independent factors, there is no single term separating the contribution of each cue from the overall slope.
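The averaging problem can be made concrete with a toy example. The sketch below assumes a hypothetical, fully categorical listener whose VOT boundary moves one step earlier with each F0 step; the boundary values are invented for illustration only.

```python
def step_response(vot, boundary):
    """Fully categorical listener: 0 (voiced) below the boundary, 1 (voiceless) at or above it."""
    return 1.0 if vot >= boundary else 0.0


VOT_STEPS = [1, 2, 3, 4, 5, 6, 7]
# Hypothetical boundaries: F0 step -> VOT step at which the response flips.
BOUNDARY_BY_F0 = {1: 6, 2: 5, 3: 4, 4: 3, 5: 2}


def mean_across_f0(vot):
    """Average the categorical responses over the five F0 steps at one VOT step."""
    responses = [step_response(vot, b) for b in BOUNDARY_BY_F0.values()]
    return sum(responses) / len(responses)


averaged = [mean_across_f0(v) for v in VOT_STEPS]
# averaged is [0.0, 0.2, 0.4, 0.6, 0.8, 1.0, 1.0]
```

Every individual response is 0 or 1, yet the average over F0 rises in even increments across VOT, which a one-dimensional logistic fit would read as a shallow (gradient) slope.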

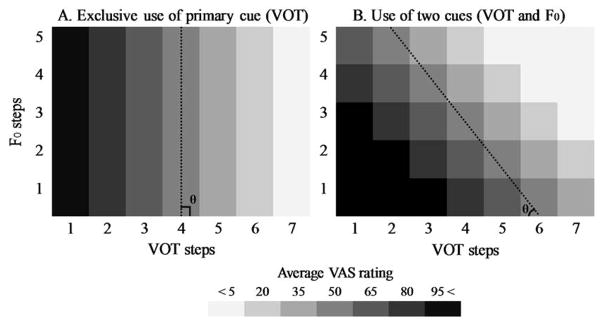

To solve this problem, we developed a new function (Eq.1), the rotated logistic. This assumes a diagonal boundary in a two-dimensional space described by a line with some cross-over point (along the primary cue) and an angle, θ (Figure 2). A 90° θ indicates exclusive use of the primary cue, while a 45° θ indicates roughly equal use of both cues. Once θ is identified, we rotate the coordinate space to be orthogonal to this boundary and estimate the slope of the response function perpendicular to the boundary.

Figure 2.

Hypothetical response patterns based on mono-dimensional (left) and bi-dimensional (right) category boundaries

This allows us to model gradiency with a single parameter that reflects the derivative of the function orthogonal to the diagonal boundary; shallower slopes indicate more gradient responses, independently of cue use (see Figure 3).

Figure 3.

Measuring phoneme categorization gradiency using the rotated logistic; Panel A: 3D depiction of voiced/unvoiced stop categorization as a function of VOT and F0 information (blue/lower front edge → more voiced VAS rating; red/high back edge → more unvoiced VAS rating); Panel B: 2D depiction of the same categorization function; θ marks the theta angle that we use to rotate the x axis so that it is orthogonal to the categorization boundary; Panel C: depiction of categorization slope using the rotated x axis (x′)

| (1) |

Here, b1 and b2 are the lower and upper asymptotes, and s is the slope (as in the four-parameter logistic). The new parameters are θ, the angle of the boundary (in radians), and x0, the boundary’s x-intercept. The independent variables are VOT and F0. υ(θ) (in the denominator, seen in [2]) switches the slope direction if θ is less than 90° to keep the function continuous.

| (2) |

For each participant, we calculated the average of the three responses for each of the 70 stimuli they heard during the VAS task (separately for each PoA). The equation in (1, 2) was then fit to each subject’s averaged VAS data using a constrained gradient descent method implemented in Matlab (using FMINCON) that minimized the least squared error (see S.1 for details about the curve-fitting procedure).
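To make the geometry of a rotated logistic easier to follow, the sketch below evaluates a logistic along the axis perpendicular to a diagonal boundary. It is not a reproduction of Eq. 1: it expresses the rotation with sine and cosine instead of a υ(θ) term, it assumes both cues are coded in comparable units (e.g. step indices), and the sign convention for F0 and the `4 / (b2 - b1)` scaling are assumptions made here. Fitting (done with FMINCON in the study) is not shown.

```python
import math


def rotated_logistic(vot, f0, b1, b2, s, theta, x0):
    """Logistic evaluated perpendicular to a diagonal category boundary.

    The boundary is a line through (x0, 0) at angle theta (radians) to the VOT axis.
    """
    # Signed perpendicular distance from the stimulus to the boundary line.
    distance = (vot - x0) * math.sin(theta) - f0 * math.cos(theta)
    # With this scaling, s is the derivative of the function at the boundary
    # (an assumed convention, not taken from the source).
    return b1 + (b2 - b1) / (1.0 + math.exp(-4.0 * s / (b2 - b1) * distance))


# With theta = 90 degrees the boundary is vertical and F0 has no influence.
on_boundary = rotated_logistic(4, 0, 0.0, 100.0, 20.0, math.pi / 2, 4)
```

At θ = 90° this reduces to an ordinary logistic along VOT, and a stimulus on the boundary is rated at the midpoint between the asymptotes. At other angles, F0 shifts the rating without changing the slope parameter s, which is the property the rotated coordinate system is meant to provide.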

To assess the validity of this procedure we conducted extensive Monte Carlo analyses. These tested both the ability of this procedure to estimate the true values of the data, and looked for any spurious correlations imposed on the data by the function or the curve fitting (e.g., if parameters were confounded with each other). These are reported in Supplement S.2 and show very high validity, and no evidence of spurious correlation between the estimated parameters.

Measuring multiple cue integration

We used a 2AFC task for two purposes. First it offered a measure of multiple cue integration that is independent of the VAS. Second, by relating VAS slope to categorization slope we hoped to determine what drives changes in categorization slope.

2AFC stimuli and design

The 2AFC task was performed immediately after the N-Back task for all participants. A subset of the VAS stimuli was used in the 2AFC task: all 7 VOT steps, but only the two extreme F0 values. This simplified quantification of listeners’ use of F0 as the difference between boundaries for each F0. Each of the 28 (7 VOTs × 2 F0s × 2 PoA) stimuli was presented 10 times (280 total trials). Similarly to the VAS task, trials were presented in two separate blocks, one for each PoA, and block order was counter-balanced between participants.

2AFC procedure

On each trial participants saw two squares, one on each side of the screen, each containing one of two printed words (e.g. bull / pull). The voiced-initial word was always in the left square. Participants were prompted to listen carefully to each stimulus and click in the box with the word that best matched what they heard. At the beginning of the task participants performed a few practice trials. The 2AFC task took approximately 11 minutes.

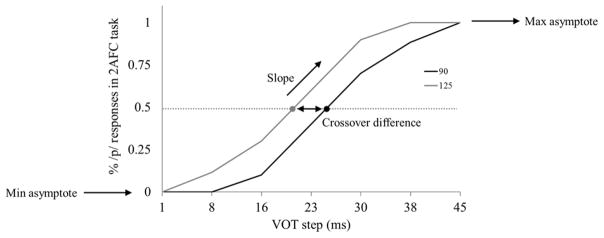

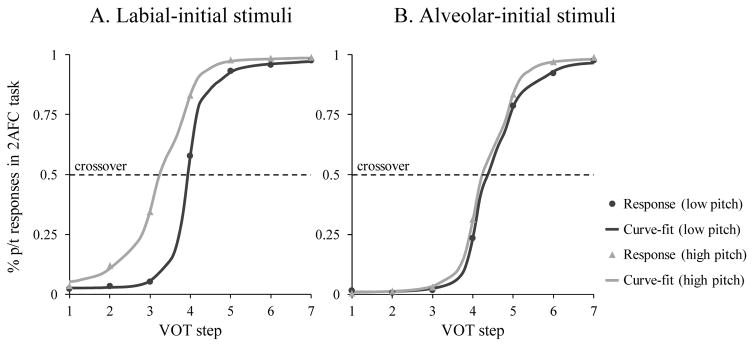

Pre-processing of 2AFC data

To quantify F0 use, we fitted each participant’s response curve using a four-parameter logistic function (see McMurray et al., 2010), which provides estimates for minimum and maximum asymptotes, slope, and crossover (see Eq. 3).

| (3) |

In this equation, b1 is the lower asymptote, b2 is the upper asymptote, s is the slope, and co is the x-intercept (see hypothetical data in Figure 4). This function was fit to each participant’s responses separately for each F0 and for each PoA. Curves were fit using a constrained gradient descent method implemented with FMINCON in Matlab.
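As a rough illustration of how these four parameters shape the curve and how F0 use can be read off two fitted curves, consider the sketch below. The parameterization (including the `4 / (b2 - b1)` scaling that makes s the slope at the crossover) is one common form and is assumed here rather than taken from Eq. 3. The function name `f0_use` and the low-minus-high sign convention are likewise illustrative.

```python
import math


def four_param_logistic(x, b1, b2, s, co):
    """Four-parameter logistic: asymptotes b1 and b2, slope s at the crossover co."""
    return b1 + (b2 - b1) / (1.0 + math.exp(4.0 * s / (b2 - b1) * (co - x)))


def f0_use(crossover_low_f0, crossover_high_f0):
    """Boundary shift (in VOT steps) between the low-F0 and high-F0 curves."""
    return crossover_low_f0 - crossover_high_f0


# Hypothetical crossovers for one listener and one PoA.
midpoint = four_param_logistic(4.0, 0.0, 1.0, 0.5, 4.0)
shift = f0_use(4.5, 3.5)
```

With these hypothetical values the curve passes through 0.5 at its crossover, and the listener's boundary sits one VOT step earlier for high-F0 stimuli than for low-F0 stimuli. A listener who ignores F0 would show a shift near zero.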

Figure 4.

Hypothetical response curves in the 2AFC; (dark: low pitch; light: high pitch)

Measures of executive function

To investigate whether individual differences in cognitive function are related to gradiency in phoneme categorization, we used three tasks measuring aspects of executive function: 1) the Flanker task (available through the NIH Toolbox; Gershon, Wagster, & Hendrie, 2013), 2) the N-Back task, and 3) the switch version of the Trail Making task (TMT-B).

Flanker task (inhibitory control)

The Flanker task is commonly considered a measure of inhibitory control (Eriksen & Eriksen, 1974). Participants saw five arrows at the center of the screen and reported the direction of the middle arrow by pressing a key. The direction of the other four surrounding arrows (flankers) was either consistent or inconsistent with the target. On inconsistent trials, the degree to which participants inhibit the flanking stimuli predicts response speed. The Flanker task had 20 trials (approximately 3 minutes). Inhibition measures were a composite of both speed and accuracy, following NIH toolbox guidelines³.

N-Back task (working memory)

The N-Back task was used to measure complex working memory (Kirchner, 1958). Participants viewed a series of numbers (each presented for 2000 ms) and indicated whether the current number matched the previous one (1-back), the one two numbers before (2-back), or three before (3-back). The three levels of difficulty were presented in this order for all participants. There were 41, 42, and 43 trials for each difficulty level (respectively), yielding 40 responses to be scored in each level. The N-Back task took approximately 9 minutes. Average accuracy across the three difficulty levels was used as an indicator of working memory capacity.
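The scoring arithmetic can be sketched as follows. The explanation that the first n trials of an n-back block have no earlier item to compare against (hence 41, 42, and 43 trials giving 40 scored responses each) is an inference made here, and the example counts of correct responses are invented.

```python
TRIALS_BY_LEVEL = {1: 41, 2: 42, 3: 43}   # n-back level -> number of trials


def scored_responses(level, n_trials):
    """Trials that have an item n positions back to compare against (assumed scoring rule)."""
    return n_trials - level


def nback_accuracy(correct_by_level, scored_per_level=40):
    """Mean proportion correct across difficulty levels."""
    proportions = [c / scored_per_level for c in correct_by_level]
    return sum(proportions) / len(proportions)


scored = [scored_responses(n, t) for n, t in TRIALS_BY_LEVEL.items()]
example_score = nback_accuracy([40, 30, 20])   # hypothetical counts for 1-, 2-, 3-back
```

For the hypothetical counts above the score is 0.75, the mean of 1.00, 0.75, and 0.50.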

Trail Making task (cognitive control)

Part B of the Trail Making task assesses cognitive control (Tombaugh, 2004). During this task participants were given a sheet of paper with circles containing numbers 1 through 16 and letters A through P. They used a pencil to connect the circles in order, alternating between numbers and letters, starting at number 1 and ending at letter P. The time it took to complete this task was recorded by a trained examiner and used as a measure of cognitive control. On average, the Trail Making task took 2.5 mins to administer.

Speech recognition in noise: the AzBio sentences

To measure how well participants perceive speech in noise, we administered the AzBio sentences (Spahr et al., 2012), which consist of 10 sentences masked with multi-talker babble (0 dB SNR). Sentences were delivered over high-quality headphones and participants repeated each sentence with no time constraint. An examiner recorded the number of correctly identified words on a computer display by clicking on each word of the sentence that was correctly produced. The logit-transformed percentage of correctly identified words was used as a measure of overall performance. The AzBio task took approximately 7 minutes.
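For readers less familiar with the logit transform, a minimal sketch is given below. It assumes the transform is the natural-log odds of the proportion of words correct; the function name and the word counts are illustrative, and any correction applied to perfect or zero scores (where the logit is undefined) is not specified in the text and is not handled here.

```python
import math


def logit_score(words_correct, words_total):
    """Natural-log odds of the proportion of correctly repeated words."""
    p = words_correct / words_total
    return math.log(p / (1.0 - p))


half_correct = logit_score(50, 100)    # 50% accuracy
mostly_correct = logit_score(75, 100)  # 75% accuracy
```

A score of 0 corresponds to 50% accuracy, positive scores to better than 50%, and the transform stretches differences near the floor and ceiling relative to raw percentages.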

Results

We start with a brief descriptive overview of the VAS and the 2AFC data to validate the tasks and examine stimulus factors such as the role of word/non-word status. We then proceed to our theoretical questions.

Descriptive overview of VAS data

Participants performed the VAS task as instructed except three who responded with random points on the line and were excluded from analyses. In addition, technical problems led to missing data for five participants, leaving 123 participants with data for this task.

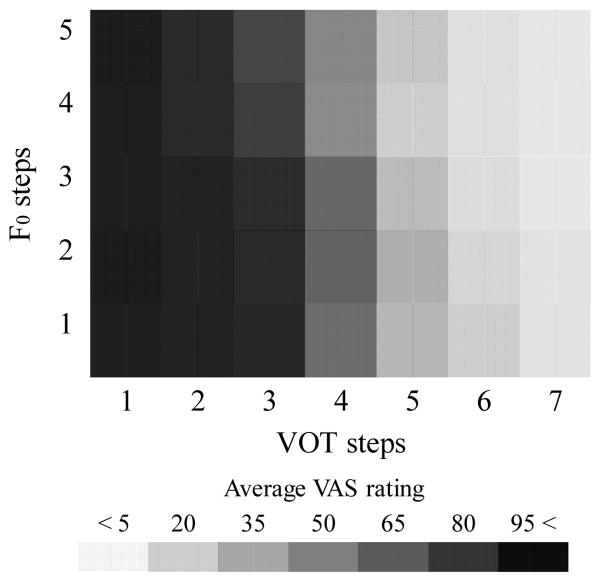

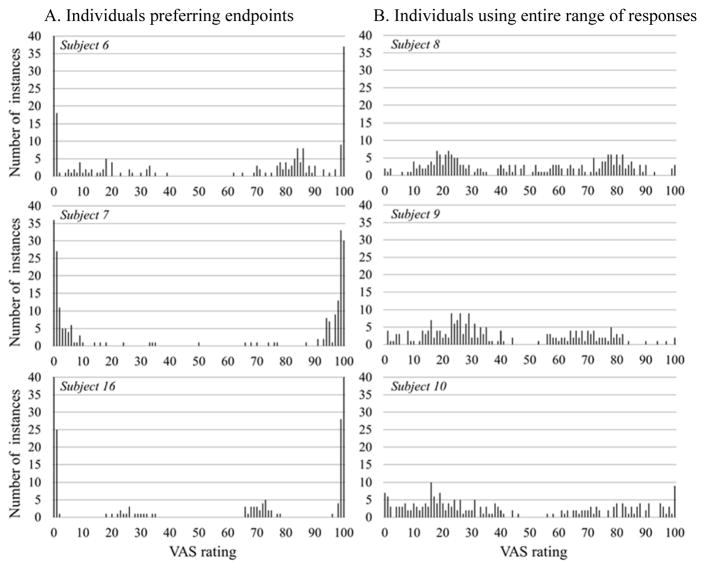

Participants used both VOT and F0 to categorize stimuli. As expected, participants rated stimuli with higher VOT and higher F0 values as more /p/ (or /t/) like (Figure 5). Replicating Kong and Edwards (2011), participants differed substantially in how they performed the VAS task. This can be clearly seen by computing simple histograms of the points that were used along the scale. As Figure 6 shows, some participants primarily responded using the endpoints of the line (Figure 6A), suggesting a more categorical mode of responding, while others used the entire line (Figure 6B), suggesting a more gradient pattern.

Figure 5.

VAS responses by VOT and F0 steps

Figure 6.

Histograms of sample individual VAS responses

While histograms like Figure 6 show individual differences, this approach cannot address our primary questions because it ignores the stimulus. For example, Subject 9 could show a flat distribution because they guessed or because they aligned VAS ratings with the stimulus characteristics. A better approach must consider the relationship between stimulus and response.

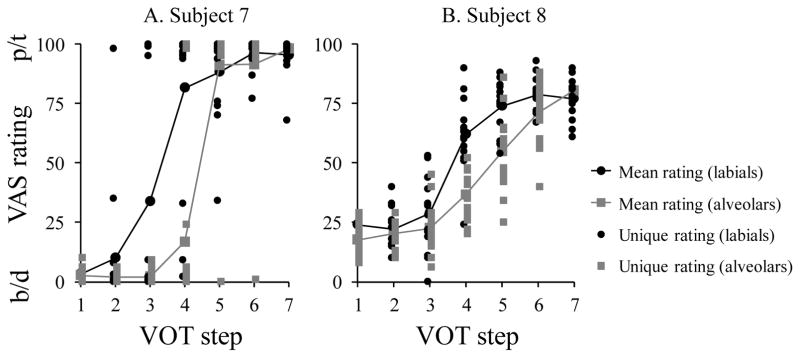

Figure 7 shows results for two participants, plotting the individual (trial-by-trial) VAS responses as a function of VOT and F0. Subject 7 gives mostly binary responses, with VAS scores near 0 or 100. What differs as a function of VOT is the likelihood of giving a 0 or 100 rating. In this case, at intermediate VOTs we see random fluctuations between the two endpoints, rather than responses clustered around an intermediate VAS value. Thus, this participant appears to have adopted a categorical approach. In contrast, Subject 8’s responses closely follow the cue values of each stimulus, and variation is tightly clustered around the mean. Thus, Subject 8’s responses seem to reflect the gradient nature of the input.

Figure 7.

Sample VAS ratings per VOT and F0 value exhibiting highly dissimilar patterns of noise; Subject 7 (left) responds categorically (close to the endpoints), but sometimes picks the wrong endpoint, whereas Subject 8 (right) closely maps his ratings to the VOT steps

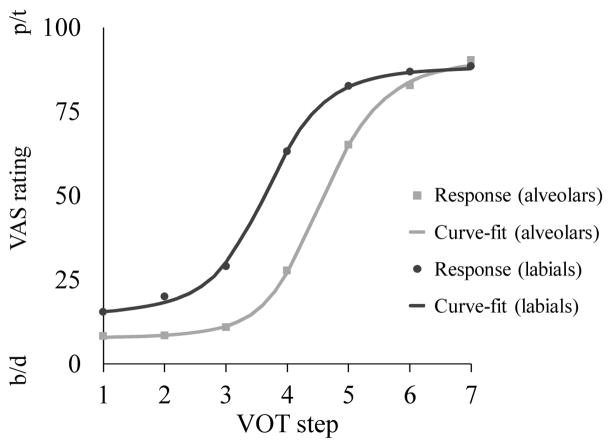

To quantify individual differences, we fitted participants’ VAS ratings using the rotated logistic function provided in Eq.1. Figure 8 shows the actual and fitted response functions for the two types of stimuli (labial and alveolar) across participants. Because the distribution of raw VAS slopes was positively skewed, we log-transformed values for analysis.

Figure 8.

Actual and fitted VAS ratings (dark: labial; light: alveolar)

We conducted an analysis of VAS scores as a function of stimulus type and place of articulation (PoA; see Supplement S.3 for details). In brief, we found no significant effects of stimulus type or PoA on VAS slope. We also found evidence for higher use of F0 for labial compared to alveolar stimuli.

Descriptive overview of 2AFC data

The three participants that were excluded from the VAS analyses were also excluded from the 2AFC analyses. In addition, two additional participants were excluded due to technical issues, leaving 126 participants with data for this task.

Participants used both VOT and F0 in the 2AFC task. They were more likely to categorize stimuli as /p/ (or /t/) when they had higher VOTs and higher F0 values (Figure 9). We fitted 2AFC data using Eq. 3. The distribution of 2AFC slopes was positively skewed, so these were log-transformed for analyses. Similarly, the distribution of raw crossover differences (our measure of F0 use) was moderately positively skewed, so these were square-root transformed.

Figure 9.

Actual and fitted 2AFC responses (dark: low pitch; light: high pitch)

Note: Figure depicts averages of fitted logistics - not fitted logistics of averages

We analyzed 2AFC results by stimulus type and PoA (see Supplement S.4 for details). In brief, we found no main effects of stimulus type or PoA on 2AFC slope. In addition, as in the VAS task, listeners used F0 more for labial stimuli and hardly at all for alveolars (Figure 9B).

Descriptive analyses: Summary

Listeners were highly consistent across tasks in how they categorized stimuli (e.g. there was no main effect of stimulus type or PoA on slope in either task; and there was greater use of pitch information for labials in both tasks - see Supplement S.5). Based on these results, we averaged slopes across PoA to compute a single slope estimate for each participant in each task. In addition, given the importance of multiple cue integration for our questions, only labial-initial stimuli were included in the analyses of F0 use. More broadly, this close similarity in the pattern of effects between the VAS and 2AFC results validates the VAS task and is in line with a pattern of categorization that is relatively stable within individuals.

Individual differences in speech perception

We next addressed our primary theoretical questions by examining how our speech perception measures were related to each other and to other measures.

Noise and gradiency in phoneme categorization

We first examined the relationship between VAS slope (categorization gradiency) and 2AFC slope (which may reflect categorization gradiency and/or internal noise in cue encoding). As slope was averaged across the two PoA, there were no repeated measurements, enabling us to use hierarchical regression to evaluate VAS slope as a predictor of 2AFC slope.

On the first level of the model (see Table 3), stimulus type was entered, contrast-coded into two variables, one comparing CVs to the other two (CV = 2; RW = −1; NW = −1), and the other comparing RWs to the other two (RW = 2; NW = −1; CV = −1). This explained 1.78% of the variance, F(2,117) = 1.06, p = .35. On the second step, VAS slope was added to the model, which did not account for significant additional variance (R2change = .002, Fchange < 1). On the last step we entered the VAS slope × stimulus type interaction, which accounted for marginally significant additional variance (R2change = .048, Fchange(2,114) = 2.96, p = .056). To examine this interaction, we split the data by stimulus type; however, VAS slope did not account for a significant portion of the 2AFC slope variance in any of the subsets⁴.
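The F test for each step of a hierarchical regression can be computed from the R2 values alone. The sketch below uses the standard R2-change formula with the rounded R2 values from Table 3 (0.020 before and 0.068 after the two interaction terms enter) and 114 residual degrees of freedom; the function name `f_change` is introduced here for illustration.

```python
def f_change(r2_reduced, r2_full, n_added, df_residual):
    """F statistic for the R-squared gain when n_added predictors enter the model."""
    numerator = (r2_full - r2_reduced) / n_added
    denominator = (1.0 - r2_full) / df_residual
    return numerator / denominator


# Interaction step: two interaction terms added, 114 residual degrees of freedom.
f_step3 = f_change(0.020, 0.068, n_added=2, df_residual=114)
```

With these rounded inputs the result is approximately 2.94, close to the reported value of 2.96; the small difference is what one would expect from rounding the R2 values to three decimals.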

Table 3.

Hierarchical regression steps: predicting 2AFC slope from VAS slope

| Step | Predictor | B | SE | β | R2 |
|---|---|---|---|---|---|
| Step 1 | RW vs others | 0.051 | 0.036 | 0.150 | 0.018 |
| | CV vs others | 0.035 | 0.036 | 0.105 | |
| Step 2 | VAS slope | 0.088 | 0.156 | 0.052 | 0.020 |
| Step 3 | VAS slope × RW vs others | −0.215 | 0.119 | −1.113• | 0.068 |
| | VAS slope × CV vs others | 0.092 | 0.129 | 0.484 | |

• p < .1, * p < .05, ** p < .01, *** p < .001.

This lack of correlation between 2AFC and VAS slope implies the two measures may reflect different aspects of speech categorization. As described, this may be because the 2AFC task is more sensitive to noise (in the encoding of cues), while the VAS reflects categorization gradiency. This is in line with Figure 7, which suggests that two subjects may have similar mean slopes in the VAS task despite large differences in the trial-to-trial noise around that mean. While the 2AFC task cannot assess this, the VAS task may be able to do so.

To test this hypothesis, we extracted a measure of noise in cue encoding from the VAS task using residuals. We first computed the difference between each VAS rating (on a trial-by-trial basis) and the predicted value from that subject’s rotated logistic. We then computed the standard deviation of these residuals. This was done separately for each PoA and averaged to estimate the noise for each subject. The SD of the residuals in the VAS task was marginally correlated with 2AFC slope in the expected direction (negatively), r = −.168, p = .063. Listeners with shallower 2AFC slopes showed more noise in the VAS task. Interestingly, noise was weakly positively, though not significantly, correlated with VAS slope, r = .120, p = .185, suggesting that, if anything, listeners with higher gradiency (shallower VAS slope) are less noisy in their VAS ratings. This seems to agree with the sample results presented in Figure 7, as more gradient listeners tend to give ratings that more systematically reflect the stimulus characteristics.
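The noise measure can be sketched in a few lines. The ratings and fitted values below are invented, and the use of the sample (n − 1) standard deviation is an assumption, since the text does not say which estimator was used.

```python
from statistics import mean, stdev


def residual_sd(ratings, predictions):
    """Standard deviation of trial-by-trial residuals (rating minus fitted value)."""
    residuals = [r - p for r, p in zip(ratings, predictions)]
    return stdev(residuals)   # sample SD; the estimator is an assumption


def noise_estimate(labial, alveolar):
    """Average the residual SD over the two places of articulation.

    Each argument is a (ratings, predictions) pair for one PoA.
    """
    return mean([residual_sd(*labial), residual_sd(*alveolar)])


# Hypothetical listener who flips between endpoints where the fit predicts 50.
endpoint_flipper = ([0, 100, 0, 100], [50, 50, 50, 50])
# Hypothetical listener whose ratings track the fitted values closely.
close_tracker = ([48, 52, 49, 51], [50, 50, 50, 50])

noisy = noise_estimate(endpoint_flipper, endpoint_flipper)
quiet = noise_estimate(close_tracker, close_tracker)
```

The two hypothetical listeners have the same mean rating (50) at this stimulus, but the first yields a much larger residual SD, which is the distinction the measure is intended to capture.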

Secondary cue use as a predictor of gradiency

Next, we examined whether gradiency in phoneme categorization was linked to multiple cue integration. As above, we used hierarchical regression with VAS slope as the dependent variable. Independent variables were stimulus type (coded as before) and F0 use (the difference in 2AFC crossover points between low and high F0). Only labial-initial stimuli were included. In the first level (see Table 4), stimulus type did not significantly account for variance in VAS slope, R2=.014, F < 1. In the second level, F0 explained significant new variance, β = −.296; R2change = .077, Fchange(1,116) = 9.87, p < .01. On the last level, the F0 use × stimulus type interaction did not significantly account for additional variance (R2change = .024, Fchange(2,114) = 1.53, p = .220). These results corroborate Kong and Edwards (2016): listeners with more phoneme categorization gradiency (shallower VAS slope) showed greater use of F0, suggesting a link between these aspects of speech perception.

Table 4.

Hierarchical regression steps: predicting VAS slope from F0 use

| Step | Predictor | B | SE | β | R2 |
|---|---|---|---|---|---|
| Step 1 | RW vs others | 0.012 | 0.027 | 0.047 | 0.014 |
| | CV vs others | 0.034 | 0.027 | 0.133 | |
| Step 2 | F0 use | −0.341 | 0.108 | −0.296** | 0.091 |
| Step 3 | F0 use × RW vs others | 0.077 | 0.090 | 0.098 | 0.115 |
| | F0 use × CV vs others | −0.092 | 0.085 | −0.105 | |

* p < .05, ** p < .01, *** p < .001.

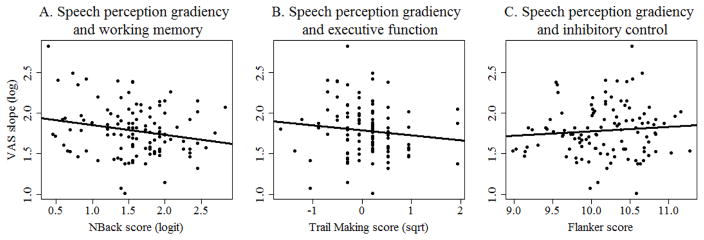

Executive function and gradiency

Next we examined the relationship between executive function (EF) and categorization gradiency. Because the distribution of N-Back scores was positively skewed, while the distribution of the Trail Making task was moderately positively skewed, we used the log-transformed and square-root-transformed values, respectively, in all analyses. We first estimated the correlations between EF measures. Flanker (inhibition) was not significantly correlated with either N-Back (working memory; r = .01) or Trail Making (executive function; r = .12). However, N-Back performance was weakly, but significantly, correlated with Trail Making (r = .19, p < .05).

We then conducted a series of regressions examining the relationship between phoneme categorization gradiency and EF (see Table 5). Three regressions were run, one for each EF measure, with VAS slope (averaged across PoA) as the dependent variable. In the first level of each model we entered stimulus type, and each EF measure was added in the second level.

Table 5.

Hierarchical regression steps: predicting VAS slope from executive function measures

| Step | Predictor | B | SE | β | R2 |
|---|---|---|---|---|---|
| Step 1 | RW vs others | 0.011 | 0.025 | 0.051 | 0.003 |
| | CV vs others | −0.001 | 0.024 | −0.006 | |
| Step 2a | N-Back | −0.127 | 0.057 | −0.215* | 0.048 |
| Step 2b | Trail Making | −0.062 | 0.051 | −0.117 | 0.017 |
| Step 2c | Flanker | 0.052 | 0.061 | 0.082 | 0.010 |

As in prior analyses, stimulus type did not correlate with VAS slope. N-Back, however, explained a significant portion of the VAS slope variance, with higher N-Back scores significantly predicting shallower VAS slopes, β = −.22; R2change = .045, Fchange(1,108) = 5.09, p < .05 (Figure 10A). Trail Making did not predict VAS slope, R2change = .014, Fchange(1,108) = 1.49, p = .23 (Figure 10B), nor did Flanker, R2change = .007, Fchange < 1, p = .40 (Figure 10C).

Figure 10.

Scatter plots showing VAS slope as a function of EF. A) N-Back (working memory); B) Trail Making (cognitive control); C) Flanker (inhibition)

Executive function and multiple cue integration

The two prior analyses showed a relationship (1) between gradiency and cue integration and (2) between gradiency and N-Back performance (i.e. working memory). Given this, we next tested the possibility that the first correlation (gradiency and multiple cue integration) may be driven by a third factor, possibly EF. For example, greater working memory span may allow listeners to better maintain within-category information and better combine cues. We thus conducted hierarchical regressions with secondary cue use as the dependent variable. As above, three regression models were fitted, one for each EF measure, with stimulus type at the first level and an EF measure on the second.

Stimulus type had a significant effect on secondary cue use (see Table 6; see also Supplement S.4), with significantly lower crossover differences (weaker use of F0 as a secondary cue) for CV stimuli. On the second level, none of the EF measures was correlated with secondary cue use (N-Back: R2change = .003, Fchange < 1, p = .50; Trail Making: R2change = .006, Fchange < 1; Flanker: R2change = .006, Fchange < 1). These results suggest that whatever the nature of the relationship between gradiency and multiple cue integration, it is unlikely to be driven by EF.

Table 6.

Hierarchical regression predicting secondary cue use from EF measures

| Step | Predictor | B | SE | β | R2 |
|---|---|---|---|---|---|
| Step 1 | RW vs others | 0.004 | 0.022 | 0.017 | 0.120 |
| | CV vs others | −0.071 | 0.022 | −0.337** | |
| Step 2a | N-Back | 0.036 | 0.053 | 0.062 | 0.123 |
| Step 2b | Trail Making | −0.043 | 0.047 | −0.083 | 0.126 |
| Step 2c | Flanker | −0.051 | 0.055 | −0.084 | 0.126 |

* p < .05, ** p < .01, *** p < .001.

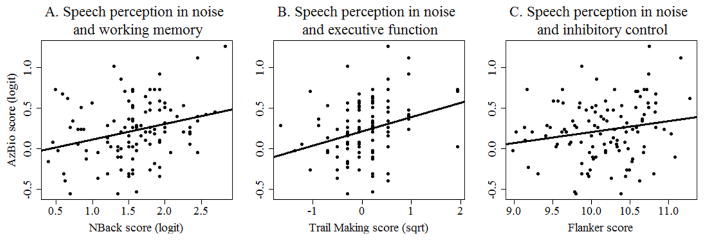

Speech-in-noise perception

Finally, we tested the hypothesis that maintaining within-category information may be beneficial for speech perception more generally. Speech recognition in noise (AzBio) was weakly negatively correlated with VAS slope (r = −.16), though this was only marginally significant (p = .09), suggesting more gradient VAS slopes might be beneficial for perceiving speech in noise. However, perception of speech in noise was significantly correlated with both N-Back performance (r = .29, p < .01) and Trail Making (r =.29, p < .01), and marginally correlated with Flanker (r = .18, p = .055). Thus, we assessed the relationship between gradiency and speech perception in noise after accounting for EF.

We again fitted hierarchical linear regressions with AzBio as the dependent variable. This time, in the first level, the three EF measures were entered simultaneously (see Step 1a in Table 7). These significantly explained 15.8% of the variance in AzBio performance, F(3,108) = 6.76, p < .001. Within this level, N-Back, β = .24, p < .01, and Trail Making, β = .22, p < .05, were significant, while Flanker was marginal, β = .15, p = .08. As indicated by the beta coefficients (and Figure 11), higher scores in each EF measure predicted better AzBio performance.

Table 7.

Hierarchical regression predicting AzBio score from EF measures and VAS slope

| Step | Predictor | B | SE | β | R2 |
|---|---|---|---|---|---|
| Step 1a | N-Back | 0.164 | 0.062 | 0.238* | 0.158 |
| | Trail Making | 0.138 | 0.056 | 0.223* | |
| | Flanker | 0.113 | 0.065 | 0.155• | |
| Step 1b | VAS slope | −0.184 | 0.109 | −0.159 | 0.025 |
| Step 2 | N-Back | 0.150 | 0.063 | 0.218* | 0.168 |
| | Trail Making | 0.132 | 0.056 | 0.214* | |
| | Flanker | 0.120 | 0.065 | 0.165• | |
| | VAS slope | −0.121 | 0.105 | −0.105 | |
| Step 3 | VAS slope × N-Back | 0.140 | 0.191 | 0.073 | 0.175 |
| | VAS slope × Trail Making | −0.025 | 0.195 | −0.012 | |
| | VAS slope × Flanker | −0.109 | 0.263 | −0.039 | |

* p < .05, ** p < .01, *** p < .001.

Figure 11.

Scatter plots showing speech in noise perception (AzBio) as a function of EF. A) N-Back (working memory); B) Trail Making (cognitive control); C) Flanker (inhibition). Note that an AzBio logit score of 0 (zero) corresponds to 50% accuracy

In the second level, we added VAS slope, which did not account for significant new variance, R2change = 0.01, Fchange(1,107) = 1.33, p = .24. Finally, in the third level, we added two-way interactions between VAS slope and the three EF measures. None of the interaction terms accounted for significant new variance, R2change = .007, Fchange < 1, p = .84. Thus, even though there was a marginally significant correlation between VAS slope and AzBio performance, when the three EF measures were included, this was no longer significant.

Next, we followed the reverse procedure, entering VAS slope in the first step (see Step 1b in Table 7). This was marginally significant, β = −0.16; F(1,110) = 2.85, p = .094, explaining 2.5% of the variance. In the second level, we added the EF measures, which accounted for significant variance over and above VAS slope, R2change = .14, Fchange(3,107) = 6.14, p < .001. Thus, the relationship between gradiency and speech perception in noise may be largely due to individual differences in EF, with little unique variance attributable to gradiency.

Discussion

This study developed a novel way of assessing individual differences in speech categorization. The VAS task offers a unique approach to assessing gradiency of phoneme categorization, and contains a level of granularity that can robustly identify individual differences. While our most important finding was the correlation between phoneme categorization gradiency and cue integration, our correlational approach offers additional insights that are worth discussing before we turn to the implications of our primary finding.

Methodological implications: the VAS and 2AFC tasks

This study provides strong support for using VAS measures for assessing phoneme categorization. Monte Carlo simulations (Supplement S.2) demonstrated that (1) the curve-fitting procedure was unbiased and generated independent fits of gradiency and multiple cue integration, and (2) the fits accurately represented the underlying structure of the data even with as few as three repetitions per stimulus step.

In addition, when relating the pattern of effects obtained in the VAS and 2AFC task, we found the same stimulus-driven effects in both measures (Supplement S.5), and that category boundaries and estimates of multiple cue use were correlated in the two tasks (Supplement S.6). These findings (Supplement S.3–6) suggested that our estimates of various effects are relatively stable across tasks. Therefore, these effects seem to reflect underlying aspects of the processing system that are somewhat stable for any given individual, validating our individual differences approach. Furthermore, the similarity between the VAS and the 2AFC results provides strong validation of the VAS task as an accurate and precise measure of phoneme categorization.

Given this, the lack of correlation between the VAS and 2AFC slopes was striking. We expected to find some correlation between the two, since both are thought to reflect at least partly the degree of gradiency in speech categorization. However, VAS slope did not predict 2AFC slope. This could mean that these two measures assess different aspects of speech perception, perhaps more so than initially thought. That is, the 2AFC slope may largely reflect internal noise, rather than the gradiency of the response function (as does the VAS slope).

This study cannot speak to the exact locus of the noise that the 2AFC is tapping; it could be noise at a processing stage as early as the perception of acoustic cues, or it could be that cues are perceived accurately, but noise is introduced when they are maintained or as they are mapped to categories. In all these cases, the likely result would be greater inconsistency in participants’ responses particularly near the boundary.

A number of arguments support the claim that the 2AFC task may reflect noise in how cues are encoded and used. For example, even if listeners make underlying probabilistic judgements about phonemes, when it comes to mapping this judgement to a response, the optimal strategy is to always choose the most likely response (rather than attempting to match the distribution of responses to the internal probability structure; Nearey & Hogan, 1986). Though it is unclear if some (or all) listeners do this, it suggests that the 2AFC slope may not necessarily reflect the underlying probabilistic mapping from cues to categories. In contrast to the 2AFC task, the VAS task may offer a unique window into this mapping, allowing us to extract information that is not accessible with other tasks. This is supported by our own analyses of trial-by-trial variation (i.e. noise) showing a markedly different relationship between noise and slopes from the 2AFC and VAS tasks. Interpreting these results cautiously, they suggest that variation in 2AFC slope may be more closely tied to noise in the system (higher noise = shallower slope), whereas VAS slope reflects the gradiency of speech categories.
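The claim that always choosing the more likely category is the optimal response strategy can be checked with a small calculation. The sketch below assumes a listener whose internal probability that a token is /p/ equals p, and compares expected accuracy under the two strategies; the function names are introduced here for illustration.

```python
def accuracy_maximizing(p):
    """Expected accuracy when always choosing the more probable category."""
    return max(p, 1.0 - p)


def accuracy_matching(p):
    """Expected accuracy when choosing each category in proportion to its probability."""
    return p * p + (1.0 - p) * (1.0 - p)


probabilities = [0.5, 0.6, 0.7, 0.8, 0.9, 1.0]
comparison = [(p, accuracy_maximizing(p), accuracy_matching(p)) for p in probabilities]
```

For an internal probability of 0.7, maximizing gives an expected accuracy of 0.70 while matching gives 0.49 + 0.09 = 0.58. A maximizing listener would therefore produce a step-like 2AFC function even if the underlying mapping from cues to categories were fully gradient, which is why the 2AFC slope need not mirror that mapping.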

This has a number of implications when we consider the use of phoneme categorization measures to assess populations with communication impairments. First, our findings seem to explain why gradient 2AFC responding is often associated with SLI and dyslexia, even as theoretical models and work with typical populations suggest a more gradient mode of responding is beneficial. In the former case, the 2AFC task is not tapping gradiency at all, but rather is tapping internal noise (which is likely increased in impaired listeners). As we show here, the VAS simultaneously taps both, with the slope of the average responding reflecting categorization gradiency and the SD of the residuals reflecting noise. A combination of measures may thus offer more insight into the locus of perceptual impairments than traditional 2AFC measures, particularly when combined with online measures like eye-tracking (cf. McMurray et al., 2014) that overcome other limitations of phoneme judgment tasks.

It is also helpful to consider how the 2AFC and VAS measures relate to other measures of gradiency. Many of the seminal studies supporting an underlying gradient form of speech categorization used a variant of the Visual World Paradigm (Clayards et al., 2008; McMurray et al., 2002; McMurray, Aslin, et al., 2008). Here, gradiency is usually measured as the proportion of looks to a competitor (e.g. bear when the target is pear) as a function of step along a continuum like VOT. Typically, as the step nears the boundary, competitor fixations increase linearly, suggesting sensitivity to within-category changes. This measure is computed relative to each participant’s boundary, and only for trials on which participants click on the target. This allows us to extract a measure that may be less susceptible to the noise issues that appear with the 2AFC task. However, it is an open question whether this measure of gradiency taps into the same underlying processes as those tapped by the VAS task.
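The competitor-fixation measure described above can be sketched in a few lines of Python. This is only a schematic of the general logic (keep target-click trials, re-express each step relative to the participant's own boundary, then average competitor looks), not the procedure of any of the cited studies. The trial format and the names `competitor_looks_by_distance`, `clicked_target`, and `competitor_looks` are hypothetical.

```python
from collections import defaultdict


def competitor_looks_by_distance(trials, boundaries):
    """Average competitor looks as a function of distance from the boundary.

    trials: list of dicts with hypothetical keys
        "participant", "step", "clicked_target" (bool),
        "competitor_looks" (proportion of looks to the competitor).
    boundaries: dict mapping participant -> that participant's boundary,
        expressed in continuum steps.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for trial in trials:
        # Keep only trials on which the participant clicked the target.
        if not trial["clicked_target"]:
            continue
        # Distance is relative to this participant's own boundary.
        distance = abs(trial["step"] - boundaries[trial["participant"]])
        sums[distance] += trial["competitor_looks"]
        counts[distance] += 1
    return {d: sums[d] / counts[d] for d in sorted(sums)}
```

With data of this shape, a gradient listener would be expected to show mean competitor looks that rise as the distance from the boundary shrinks.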

Primary finding: gradiency and cue integration

A critical result was that phoneme categorization gradiency was linked to multiple cue integration, such that greater gradiency predicted higher use of pitch-related information (see also Kong & Edwards, 2016). This finding is correlational, and therefore consistent with a number of possible causal accounts. First, multiple cue integration may allow for more gradient categorization. Under this view, the ability to integrate multiple cues may help listeners form a more precise graded estimate of speech categories. Second, as we proposed, the causality may be reversed, with more gradiency helping listeners to be more sensitive to small differences in each cue, permitting better integration. Third, a gradient representation could help listeners avoid making a strong commitment on the basis of a single cue, allowing them to use both cues more effectively. Lastly, there could be a third factor that links the two. In this regard, we examined EF measures and found a relationship with gradiency for only the N-Back task, but no relationship between any EF measures and multiple cue integration. Additional factors of this sort, such as auditory acuity, should be considered in future research. Even though our study was not designed to distinguish between these mechanisms, it offers strong evidence for a link between these two aspects of speech perception, the nature of which remains to be clarified.

Links to other cognitive processes

Our findings show a potential link between working memory (N-Back) and participants’ response pattern in the VAS task. One possible explanation for this correlation is that working memory mediates the relationship between gradiency and individuals’ responses; there may be individuals who have gradient speech categories, but this gradient activation is not maintained all the way to the response stage due to working memory limitations. That is, the degree to which gradiency at the cue/phoneme level is reflected in an individual’s response pattern may depend on their working memory span. Furthermore, measures that tap earlier stages of processing (e.g., ERPs, see Toscano et al., 2010), or earlier times in processing (e.g., eye movements in the visual world paradigm: McMurray et al., 2002) may be less susceptible to working memory constraints, possibly explaining why these measures offer some of the strongest evidence for gradiency as a characterization of the modal listener.

Speech perception in noise

We predicted that higher gradiency would allow listeners to be more flexible in their interpretation of the signal and, thus, outperform listeners with lower levels of gradiency in a speech-in-noise task (AzBio sentences). Our results did not support this: gradiency was not a significant predictor of AzBio performance, which was, however, significantly predicted by our three EF measures (N-Back, Trail Making, and Flanker task).

The lack of correlation between gradiency and AzBio performance may reflect difficulties in linking laboratory measures of underlying speech perception processes (and cognitive processes more generally) to simple outcome measures. Such difficulty could arise from at least two sources. First, speech-in-noise perception may be more dependent on participants’ level of motivation and effort than laboratory measures. This is supported by recent work on listening effort (Wu, Stangl, Zhang, Perkins, & Eilers, 2016; Zekveld & Kramer, 2014), which suggests that listeners put forth very little effort at low signal-to-noise ratios and often appear to give up. Even though it is unlikely that participants in our study gave up in the AzBio task, the point is that motivation may be a significant source of unwanted variability in these measures. Indeed, the significant correlations between our speech-in-noise measure and scores on the three executive function tasks may derive from a similar source. If so, this correlation may have little to do with speech perception.

Furthermore, while speech-in-noise perception is a standard assessment of speech perception accuracy, performance in such tasks may not be strongly affected by differences in categorization gradiency. As we describe in the Introduction, theoretical arguments for gradiency are not typically framed in terms of speech-in-noise perception; rather the motivation seems to derive from the demands of interpreting ambiguous acoustic cues, such as those related to anticipatory coarticulation, speaking rate, or speaker differences. Noise does not necessarily alter the cue values; rather it masks the listeners’ ability to detect them. Thus, this task may not properly target the functional problems that categorization gradiency is attempting to solve.

In a related vein, it may be the case that both gradient and categorical modes of responding are equally adaptive for solving the problem of speech perception in noise. That is, to the extent that differences in listeners’ mode of categorization reflect a different weighting of different sorts of information (e.g., between acoustic or phonological representations in the Pisoni and Tash [1974] model; or between dorsal and ventral stream processing in the Hickok and Poeppel [2007] model), both sources of information may be equally useful for solving this problem (even as there are advantages of gradiency for other problems).

Gradiency and non-gradiency in the categorization of speech sounds can both be advantageous in different ways. Therefore, in order to find the link that connects the underlying cognitive processes to a performance estimate, we need to use different measures of performance that are more closely tied to the theoretical view of speech perception that is being evaluated. Similar concerns may suggest the need to reconsider the way we evaluate speech perception tests used in a variety of different settings, including for clinical evaluations, so that they tap more into the underlying processes linked to our predictions.

Conclusions

We evaluated individual differences in phoneme categorization gradiency using a VAS task. This task, coupled with a novel set of statistical tools and substantial experimental extensions, allowed us for the first time to extract independent measures of speech categorization gradiency, multiple cue integration, and noise, at the individual level.

Our main results can be summarized as follows: First, we found substantial individual differences in how listeners categorize speech sounds, thus verifying the results by Kong and Edwards (2016) with a significantly larger sample. Second, we showed that differences in phoneme categorization gradiency seem to be theoretically independent from differences in the degree of internal noise in the encoding of acoustic cues and/or cue-to-phoneme mappings, and thus should not be confused with the traditional interpretation of shallow slopes as indicating noisier categorization of phonemes. Both categorization gradiency and such forms of noise contribute to speech perception, but may be tapped by different tasks. Third, differences in categorization gradiency are not epiphenomenal to other aspects of speech perception and appear to be linked to differences in multiple cue integration. The functional role of gradiency, however, is not yet clear, as the causal direction of this relationship remains to be defined. Fourth, we found only a limited relationship between executive function and gradiency, suggesting that differences in categorization sharpness may derive from lower-level sources. Lastly, gradiency may be weakly related to speech perception in noise, but this seems to be modulated by executive function-related processes.

These results provide useful insights as to the mechanisms that subserve speech perception. Most importantly, they seem to stand in opposition to the commonly held assumption (see Individual differences in phoneme categorization section) that a sharp category boundary (and poor within-category discrimination) is the desired strategy for categorizing speech sounds efficiently and accurately. Overall, speech categorization is gradient, although to different degrees among listeners, and further work is necessary to reveal the sources of these differences and the consequences they have for spoken language comprehension.

Supplementary Material

Statement of the public significance of the work.

Labeling sounds and images is an essential part of many cognitive processes that allow us to function efficiently in our everyday lives. One such example is phoneme categorization, which refers to listeners’ ability to correctly identify speech sounds (e.g. /b/) and is required for understanding spoken language. The present study presents a novel method for studying differences among listeners in how they categorize speech sounds. Our results show that: 1) there is substantial variability among individuals in how they categorize speech sounds and 2) this variability likely reflects fundamental differences in how listeners use the speech signal. The study of such differences will lead to a more comprehensive understanding of both typical and atypical patterns of language processing. Therefore, in addition to its theoretical significance, this study can also help us advance the ways in which we remediate behaviors linked to atypical perception of speech.

Acknowledgments

The authors thank Jamie Klein, Dan McEchron, and the students of the MACLab for assistance with data collection. This project was supported by NIH Grant DC008089 awarded to BM.

Footnotes

Even though we use the term “cues” here, we do not make a strong theoretical commitment as to the kind of auditory information this term entails.

Similar to those used by Kong and Edwards (2011, 2016).

Flanker task accuracy score = 0.125 * Number of Correct Responses; Reaction Time (RT) Score = 5 - (5 * ((log(RT) - log(500)) / (log(3000) - log(500)))). If accuracy levels are ≤ 80%, the final “total” computed score is the accuracy score. If accuracy levels are > 80%, the reaction time score and accuracy score are combined.
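As an illustration only, the scoring rule in this footnote can be written as a short Python function. Several details are assumptions of the sketch rather than statements from the text: that there are 40 trials (so that the accuracy score peaks at 5), that RTs are clamped to the 500 to 3000 ms range, and that "combined" means summed. The function name `flanker_score` and its parameters are hypothetical.

```python
import math


def flanker_score(n_correct, rt_ms, n_trials=40):
    """Sketch of the Flanker computed score described in the footnote.

    n_trials=40 is an assumption (it makes the accuracy score peak at 5).
    """
    accuracy_score = 0.125 * n_correct

    # Accuracy at or below 80%: the total score is the accuracy score alone.
    # Integer arithmetic avoids floating-point issues at exactly 80%.
    if n_correct * 100 <= 80 * n_trials:
        return accuracy_score

    # Assumption: RTs outside 500-3000 ms are clamped to that range.
    rt = min(max(rt_ms, 500.0), 3000.0)
    rt_score = 5 - 5 * ((math.log(rt) - math.log(500))
                        / (math.log(3000) - math.log(500)))

    # Assumption: "combined" is read here as a simple sum.
    return accuracy_score + rt_score
```

Because the RT term is a ratio of log differences, the base of the logarithm does not affect the result.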

The lack of a significant relationship between the slopes for the two tasks raised the possibility that perhaps the VAS task is not related to more standard speech categorization measures. To confirm that the VAS task could provide good measures of basic aspects of speech perception (such as category boundary and secondary cue use), we also examined correlations between the crossover and F0 use extracted from the two tasks (see Supplement S.6). These show a robust relationship, supporting the validity of the VAS task.

Contributor Information

Efthymia C. Kapnoula, Dept. of Psychological and Brain Sciences, DeLTA Center, University of Iowa

Matthew B. Winn, Dept. of Speech & Hearing Sciences, University of Washington

Eun Jong Kong, Dept. of English, Korea Aerospace University.

Jan Edwards, Dept. of Communication Sciences and Disorders, Waisman Center, University of Wisconsin-Madison.

Bob McMurray, Dept. of Psychological and Brain Sciences, Dept. of Communication Sciences and Disorders, Dept. of Linguistics, DeLTA Center, University of Iowa.

References

- Allen JS, Miller JL. Effects Of Syllable-initial Voicing And Speaking Rate On The Temporal Characteristics Of Monosyllabic Words. The Journal of the Acoustical Society of America. 1999;106(4 Pt 1):2031–9. doi: 10.1121/1.427949.

- Andruski JE, Blumstein SE, Burton M. The Effect Of Subphonetic Differences On Lexical Access. Cognition. 1994;52(3):163–87. doi: 10.1016/0010-0277(94)90042-6.

- Blomert L, Mitterer H. The Fragile Nature Of The Speech-perception Deficit In Dyslexia: Natural Vs Synthetic Speech. Brain and Language. 2004;89(1):21–6. doi: 10.1016/S0093-934X(03)00305-5.

- Blumstein SE, Myers EB, Rissman J. The Perception Of Voice Onset Time: An fMRI Investigation Of Phonetic Category Structure. Journal of Cognitive Neuroscience. 2005;17(9):1353–66. doi: 10.1162/0898929054985473.

- Boersma P, Weenink D. Praat: Doing Phonetics By Computer [Computer Program] 2016.

- Bogliotti C, Serniclaes W, Messaoud-Galusi S, Sprenger-Charolles L. Discrimination Of Speech Sounds By Children With Dyslexia: Comparisons With Chronological Age And Reading Level Controls. Journal of Experimental Child Psychology. 2008;101(2):137–55. doi: 10.1016/j.jecp.2008.03.006.