Abstract

Purpose

During medical needle placement using image-guided navigation systems, the clinician must concentrate on a screen. To reduce the clinician’s visual reliance on the screen, this work proposes an auditory feedback method as a stand-alone method or to support visual feedback for placing the navigated medical instrument, in this case a needle.

Methods

An auditory synthesis model using pitch comparison and stereo panning parameter mapping was developed to augment or replace visual feedback for navigated needle placement. In contrast to existing approaches which augment but still require a visual display, this method allows view-free needle placement. An evaluation with 12 novice participants compared both auditory and combined audiovisual feedback against existing visual methods.

Results

Using combined audiovisual display, participants show similar task completion times and report similar subjective workload and accuracy while viewing the screen less compared to using the conventional visual method. The auditory feedback leads to higher task completion times and subjective workload compared to both combined and visual feedback.

Conclusion

Audiovisual feedback shows promising results and establishes a basis for applying auditory feedback as a supplement to visual information in other navigated interventions, especially those for which viewing a patient is beneficial or necessary.

Keywords: Auditory Display, Image-Guided Therapy, Needle Placement, Human-Computer Interfaces

1 Introduction

Image-guided needle navigation is a growing field in which an operator inserts an applicator into a patient’s body to target a tumor or perform a biopsy. For image-guided needle insertion, this can be accomplished using a 2D or 3D visualization on a screen showing the position of a tracked needle in relation to the patient’s anatomy. In some cases, a screen is either unavailable, or the clinician must assume an uncomfortable body position to view a screen [11]. Auditory display may aid the clinician during needle placement using sound cues in addition to or instead of the standard visual display. By doing so, the operator receives immediate guidance information to help focus attention on the situs or ameliorate situations in which a screen is unavailable or uncomfortable to view. In contrast to other attempts which solely augment visual feedback, we have developed an auditory display that reaches beyond augmentation and is also capable of screen-free guidance. Our system supports placing the tip and handle of a navigated needle during guidance by means of a parameter-mapping auditory display that employs pitch comparison and stereo placement.

To assess the general applicability of this auditory feedback method, we performed a first evaluation with 12 participants in a laboratory study. This study with novice participants was performed to provide insight into the potential of auditory display and to provide a basis for refined follow-up studies with clinical end users. We compare auditory, visual, and combined feedback for placing a needle on a gelatin phantom under standardized conditions. We investigate the differences with regard to placement accuracy, task completion time, subjective workload, and viewing behavior. In particular, this work investigates to what extent auditory feedback differs from the two feedback types that include visual information (combined and visual). Moreover, we investigate how the combined feedback may improve performance and reduce screen viewing time compared to visual feedback.

Because of the challenge of providing complex spatial information with audio and its novelty for most participants, we hypothesize that auditory-only feedback will exhibit higher task completion time, higher subjective workload, and slightly impaired placement accuracy compared to both feedback methods providing visual information. Based on results of previous studies described in section 2.1, for combined feedback, we expect a prolongation of task completion time, higher accuracy, and reduced subjective workload compared to visual feedback. Moreover, for combined feedback, we expect a reduction in screen viewing time compared to visual feedback.

To the authors’ knowledge, this work demonstrates the first investigation of the impact of auditory-only and combined audiovisual feedback on participant performance for navigated needle placement compared to typical visual-only feedback.

2 State of the Art

Modern sound synthesis methods can deliver information in realtime as an alternative or addition to visual systems. To harness this, auditory display can be succinctly defined as a display that uses sound to communicate information. Sonification is the “transformation of data relations into perceived relations in an acoustic signal for the purposes of facilitating communication or interpretation.” [25]. Hermann [17] proposed that sonification is “[a] technique that uses data as input, and generates sound signals (eventually in response to optional additional excitation or triggering).”

Parameter mapping is a form of auditory display that links continuous changes in one set of data to continuous changes in audio parameters. In essence, the underlying data are used to ‘play’ a realtime software instrument according to those changes [17]. Because audio has various parameters that may be altered (such as frequency, intensity, and timbre), continuous parameter mapping is also suitable for displaying multivariate data. This technique often makes the listener an active participant in the listening process by browsing the data set using the auditory display or by interactively changing the mappings that relate data to audio. The listener can navigate through a set of data to perform a task. This method is useful for smoothly representing continuous changes in underlying data.

2.1 Auditory Display in Image-Guided Therapy

Auditory display has been employed in image-guided medicine, although its primary use has been to supplement visual displays and not to function as a stand-alone means of navigation. These auditory displays warn the clinician when certain structures have been approached [7,10,19,24,27,28]. Compared to no navigation or visual navigation, these works demonstrate benefits including fewer possible complications [7,19], better safe margin maintenance [7], higher surgery speed, improved subjective assessment of proximity to critical structures [8,10,12], lower subjective workload [8,10], higher resection precision [27], improved risk structure avoidance and surgical orientation [24], and higher subjective resection quality [28].

Hansen et al. [13] present the first auditory display for image-guided medicine to extend beyond simple warning sounds to support liver resection by guiding a surgical aspirator along a planned resection line on the liver surface. Combining auditory and visual displays led to longer task completion times but increased accuracy and significantly reduced time viewing the screen compared to visual feedback. Purely audio feedback for navigated needle placement has been suggested since 1998 [26], although no extensive evaluations of these systems have been provided.

3 Methods

3.1 Visual Navigation System for Needle Guidance

Different image-guided navigation systems for liver surgery that track surgical tools in relation to the patient and visualize the patient’s image data have been presented by several groups [2, 5, 22]. In this work, we adapt the commercially available CAS-ONE IR (CAScination AG, Bern, Switzerland) stereotactic needle navigation system. Arnolli et al. [1] provide a generic workflow for percutaneous needle placement that consists of planning a path in image space, retrieving the skin entry point, estimating the insertion angle, inserting the needle, and performing biopsy or ablation. For the developed method, the system supports the steps of placing the needle at the skin entry point and finding the insertion angle of the handle. Depending on the surgical procedure and procedure step, specific visualization alternatives are utilized (e.g., a 3D volume rendering or a multiplanar reconstruction). For the needle placement considered here, a cross-hair plot displays the relative position of the needle tip and the needle’s orientation for a defined trajectory to a target structure, see Fig. 1.

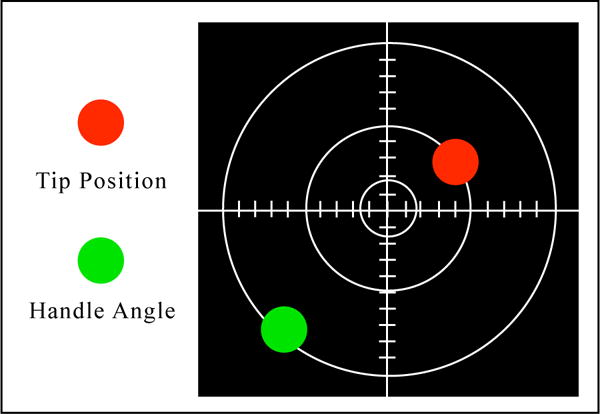

Fig. 1.

On-screen cross-hair visualization, in which the tip and handle of the needle are shown as red and green circles, respectively

To find the correct insertion point of that trajectory, the needle tip position, shown as a red circle, is to be brought to the center of the cross-hair. The correct needle angle to the surface is found when the green circle is in the center of the cross-hair as well. The orientation of the coordinate system is aligned to the needle, such that the positive y direction aligns with the part of the tracked needle facing toward the infrared stereo tracking camera and away from the operator, see Fig. 2.

Fig. 2.

Test setup featuring navigation system with on-screen visualization and phantom with outline of typical user

3.2 Auditory Navigation System for Needle Guidance

The developed auditory display for needle guidance is based on a parameter mapping of the visual feedback described above and maps changes in needle placement onto auditory parameters that change in realtime, see Fig. 3. The auditory display system receives both tip and handle position data in two dimensions, sent using Open Sound Control messages across a local network [29]. In fundamental terms, for both tip and handle placement, changes in the y axis (up and down) are mapped to the moving pitch of a tone that the user compares to the static pitch of another tone. These are brought together such that the pitches are the same and y = 0. Changes in the x axis are mapped to the stereo position of the tones, such that the tones are brought to the center so that x = 0. To minimize possible distraction, synthesized tones for the auditory display method were developed which would not interfere with typical existing operating room sounds, such as ECG or pulse readings from anesthesia monitoring equipment.

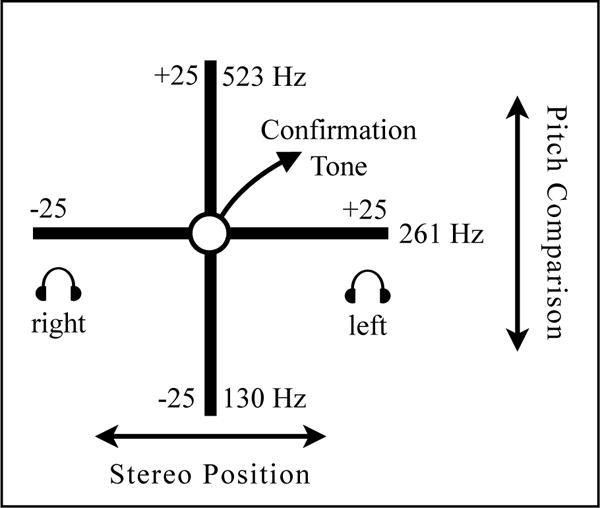

Fig. 3.

Auditory display method: The x axis provides navigation in the left-right direction from the planned position, and the y axis provides navigation in the up-down direction (forward/backward from the user)

These correspond to the visualized tip placement and handle orientation circles shown in the cross-hair of Fig. 1. In pilot studies during the iterative design phase of this auditory display method, participants had difficulty perceiving auditory navigation for both tip and handle simultaneously. To reduce cognitive load, the placement sequence was divided into separate tip and handle placement, so that after correct tip placement, the auditory display is switched to feedback for handle alignment. The unitless x and y values, each ranging from −25 to 25 and corresponding to the width and height of the given visualization, are mapped to auditory synthesis parameters including pitch and stereo position. The PureData audio programming environment [23] is used for realtime synthesis. Movement along the y-axis is mapped to the pitch of a triangle wave oscillator, which the user compares to a reference oscillator. The pitches of the moving oscillator range from Musical Instrument Digital Interface (MIDI) note numbers 48 to 72, quantized to equal-temperament semitones, and the reference pitch has a constant frequency of 261 Hz (MIDI note 60). Thus, for y, the frequency f of the moving tone is given as:
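The formula is not reproduced in this version of the text. As a minimal sketch consistent with the ranges stated above, the mapping can be written as follows, assuming a linear map from y to MIDI note numbers, rounding to the nearest semitone, positive y mapped to higher pitch, and standard equal temperament with A4 = 440 Hz. All function names are hypothetical, and the authors' exact expression may differ.

```python
def y_to_midi(y: float, y_max: float = 25.0) -> int:
    """Map a unitless y offset in [-y_max, y_max] to a MIDI note in [48, 72].

    Assumption: linear mapping, one octave (12 semitones) per y_max,
    centered on the reference note 60 at y = 0.
    """
    y = max(-y_max, min(y_max, y))       # clamp to the display range
    return 60 + round(12.0 * y / y_max)  # quantize to whole semitones


def midi_to_hz(note: int) -> float:
    """Equal-temperament frequency of a MIDI note (A4 = note 69 = 440 Hz)."""
    return 440.0 * 2.0 ** ((note - 69) / 12.0)


def moving_tone_hz(y: float) -> float:
    """Frequency of the moving oscillator for a given y offset."""
    return midi_to_hz(y_to_midi(y))
```

Under these assumptions, the moving tone matches the reference tone (MIDI note 60, about 261.6 Hz) exactly when y rounds to the center of the cross-hair.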

Following discussions with partner radiologists, playback with headphones was found to be beneficial. This allows the additional employment of stereo-based parameter mapping, which was found in preliminary experiments to be more effective in conveying right-left navigation than a single monaural channel. For both moving and reference oscillators, phase-offset amplitude modulation at a frequency of 1.8 Hz allows the operator to hear both oscillators alternately to compare pitch. Movement along the x-axis is mapped to the linear stereo position of the oscillators. For x, the gain factors for left and right output channels, gl and gr, are:
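The gain equations are not reproduced in this version of the text. A minimal sketch of linear stereo panning over the stated range of x is given below. It assumes that negative x means the needle is left of the planned position and that the two gains sum to one (linear rather than equal-power panning); the function name is hypothetical.

```python
from typing import Tuple


def stereo_gains(x: float, x_max: float = 25.0) -> Tuple[float, float]:
    """Return (g_l, g_r) for a unitless x offset in [-x_max, x_max].

    Assumption: linear panning. A needle left of the plan (x < 0) moves
    the sound toward the right ear, so the user steers toward the sound.
    """
    x = max(-x_max, min(x_max, x))     # clamp to the display range
    g_r = (x_max - x) / (2.0 * x_max)  # louder on the right when x < 0
    g_l = (x_max + x) / (2.0 * x_max)  # louder on the left when x > 0
    return g_l, g_r
```

With this choice, both channels receive a gain of 0.5 when x = 0, so the tones are heard in the center at the planned position.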

Thus, when the tip or handle is located left of the planned position, sound output occurs in the right ear, and when located right of planned position, output occurs in the left ear. Using this mapping metaphor, the user moves a ‘listening object,’ in this case the tip or handle of the needle, towards the ‘sounding object,’ in this case the target position. This frame of reference for stereo mapping means that the user navigates towards the target. When distance of the needle tip or handle to the origin is less than 1, a confirmation tone of a C-major chord plays in addition to the moving and reference triangle wave oscillators.
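The confirmation condition and the tip-then-handle sequence can be sketched as a small state machine. The distance threshold of 1 comes from the text; how the switch from tip to handle feedback is triggered is not specified, so triggering it by the confirmation condition is an assumption here, and all names are hypothetical.

```python
import math

CONFIRM_RADIUS = 1.0  # from the text: confirmation when distance to origin < 1


def on_target(x: float, y: float, radius: float = CONFIRM_RADIUS) -> bool:
    """True when the tracked point is close enough to play the confirmation chord."""
    return math.hypot(x, y) < radius


def next_stage(stage: str, x: float, y: float) -> str:
    """Advance 'tip' -> 'handle' -> 'done' once the current point is on target.

    Assumption: the display switches automatically on confirmation.
    """
    if stage == "tip" and on_target(x, y):
        return "handle"
    if stage == "handle" and on_target(x, y):
        return "done"
    return stage
```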

3.3 Experimental Design

We conducted a user study to evaluate auditory (A), visual (V), and combined (C) feedback methods with respect to accuracy, task completion time, time spent viewing the screen, and subjective workload during a typical needle placement task. Twelve participants with an average age of 26.25 years took part in the evaluation (five female, seven male, all right-handed). Six participants were medical students in advanced semesters, one was a resident physician, and five were students or employed in other fields. Participants had limited to no navigated needle placement experience.

The task consisted of placing and orienting a navigated needle on the surface of a gelatin phantom (20.0 cm length × 15.0 cm width × 9.6 cm height). This task was divided into two subtasks: placing the tip of the needle at the correct insertion point, and orienting the handle to the correct angle, corresponding to a typical needle insertion workflow [1]. For each placement, the surface of the gelatin phantom was covered in aluminum foil to obscure previous markings. The navigation screen was placed to the left of a table with the phantom, located approximately two meters from the participant’s standing position, see Fig. 2. Circumaural headphones (Sony MDR-7506) were provided, and participants chose a listening volume that they felt was comfortable.

A within-subject design was used, in which each participant performed the task nine times, three times under each condition (audio, visual, and combined). Three manually predefined trajectories with varying surface insertion points and handle alignments were used for all participants. The sequence of the three conditions was balanced across participants. After greeting and instruction, participants completed placements for the three conditions (audio, visual, combined), each consisting of instruction on the method, one unrecorded training placement, and two recorded test placements. For each placement, participants placed the tip of the needle, then the handle, declaring ‘start’ and ‘stop’ when beginning and ending each tip and handle placement.
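The text states only that the condition sequence was balanced. One common way to achieve this with three conditions and twelve participants is full counterbalancing, in which each of the six possible orders is used twice. The sketch below illustrates that scheme; it is an assumption about the assignment, not the documented procedure, and the function name is hypothetical.

```python
from itertools import permutations

CONDITIONS = ("A", "V", "C")  # auditory, visual, combined


def balanced_orders(n_participants: int):
    """Assign condition orders by cycling through all 3! = 6 permutations."""
    orders = list(permutations(CONDITIONS))
    return [orders[i % len(orders)] for i in range(n_participants)]


if __name__ == "__main__":
    for participant, order in enumerate(balanced_orders(12), start=1):
        print(participant, "-".join(order))
```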

3.4 Dependent measures

Four dependent measures were used to assess participants’ performance. First, accuracy of the placement of the needle tip (surface position) and handle (angle) was defined as the 2-dimensional Euclidean distance of the actual needle position to the planned position. Second, task completion time was determined for both subtasks, measured as the time in seconds between the ‘start’ and ‘stop’ commands declared by participants. Third, subjective workload was assessed for each feedback method with the modified NASA-Task Load Index, the so-called Raw TLX (RTLX), for which the ratings of the different dimensions are averaged to obtain an overall workload value [16]. NASA-TLX is a simple method to estimate subjective workload using a multi-dimensional approach that provides information about perceived task demands and subjective reactions to them, although drawbacks to this method include memory effects, response bias, and correlation with task performance. Finally, the fraction of the task completion time spent viewing the screen and phantom was calculated. Participants’ faces were recorded with video, which was manually analyzed by two researchers, whose results were averaged. The correlation between the values of the two researchers was > 0.91.
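As a minimal sketch of how three of these measures can be computed from raw data, the helpers below implement the 2-dimensional Euclidean error, the Raw TLX average, and the viewing fraction averaged over two raters. Function names, argument layouts, and units of the inputs are hypothetical.

```python
import math
from statistics import mean


def placement_error(actual, planned):
    """2-D Euclidean distance between actual and planned position."""
    return math.dist(actual, planned)


def raw_tlx(ratings):
    """Raw TLX: unweighted mean of the subscale ratings."""
    return mean(ratings)


def viewing_fraction(rater_a_seconds, rater_b_seconds, task_seconds):
    """Fraction of task time spent viewing the screen, averaged over two raters."""
    return mean((rater_a_seconds, rater_b_seconds)) / task_seconds
```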

3.5 Statistical analysis

For accuracy and task completion time, the results of both placements were averaged and analyzed by MANOVA with both subtasks as dependent variables and the three experimental conditions (audio, visual, and combined feedback) as an independent factor. Subjective workload was analyzed by ANOVA with repeated measures with one factor representing the three experimental conditions. We used a common significance level of α = .05. Furthermore, the data was analyzed by two independent a priori planned contrasts of means. The first contrast addressed effects of audio compared to both feedback types with visual information (averaged across visual and combined). The second contrast compared visual and combined feedback. For accuracy and task completion time, the contrast analyses were performed for each subtask separately by two-sided t-tests for paired samples. Because of this, we corrected the significance level to α = .025 for these tests. Relative viewing time was analyzed by a non-parametric Wilcoxon signed-rank test due to a ceiling effect. A significance level of α = .05 was used for this test as well.
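The first planned contrast can be sketched as a paired t-test between the audio condition and the per-participant mean of the visual and combined conditions. This is an illustrative standard-library implementation with hypothetical function names, not the authors' analysis script; the p-value would be obtained from a t distribution with the returned degrees of freedom.

```python
import math
from statistics import mean, stdev


def paired_t(a, b):
    """Paired-samples t statistic and degrees of freedom for two equal-length lists."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


def contrast_audio_vs_visual(audio, visual, combined):
    """Contrast 1: audio vs. the average of the two conditions with visual information."""
    with_visual = [(v + c) / 2.0 for v, c in zip(visual, combined)]
    return paired_t(audio, with_visual)
```

The second contrast (visual vs. combined) corresponds to calling `paired_t(visual, combined)` directly.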

4 Results

The summary of the statistical analysis is presented in Table 1.

Table 1.

Summary of statistical analysis: three feedback methods include A (audio-only feedback), V (visual feedback) and C (combined audiovisual feedback)

| Measure | Contrast | df | F | t | z | p | sig | d | Effect |
|---|---|---|---|---|---|---|---|---|---|
| Accuracy (MANOVA) | | 2,10 | 2.23 | | | 0.16 | | 1.34 | large |
| Contrast Tip | A vs. V and C | 11 | | 1.29 | | 0.22 | | 0.39 | small |
| | V vs. C | 11 | | 2.00 | | 0.07 | | 0.60 | medium |
| Contrast Handle | A vs. V and C | 11 | | 1.18 | | 0.26 | | 0.36 | small |
| | V vs. C | 11 | | 0.42 | | 0.68 | | 0.13 | no effect |
| Task Completion Time (MANOVA) | | 2,10 | 46.18 | | | <0.01 | * | 6.00 | large |
| Contrast Tip | A vs. V and C | 11 | | 3.85 | | <0.01 | * | 1.16 | large |
| | V vs. C | 11 | | 1.07 | | 0.31 | | 0.32 | small |
| Contrast Handle | A vs. V and C | 11 | | 4.71 | | <0.01 | * | 1.42 | large |
| | V vs. C | 11 | | 0.21 | | 0.84 | | 0.06 | no effect |
| Subjective Workload (ANOVA) | | 2,22 | 21.73 | | | <0.01 | * | 2.79 | large |
| | A vs. V and C | 11 | | 5.61 | | <0.01 | * | 1.69 | large |
| | V vs. C | 11 | | 0.54 | | 0.60 | | 0.16 | no effect |
| Viewing Duration (Wilcoxon signed-rank test) | V vs. C | | | | 2.52 | 0.01 | * | 1.20 | large |

4.1 Accuracy

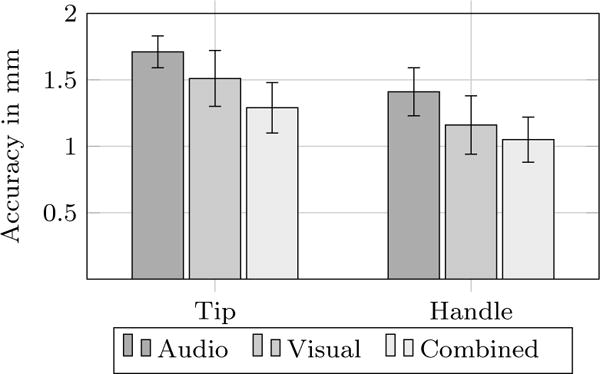

Effects of the three experimental conditions on accuracy in the two subtasks are illustrated in Fig. 5. The highest accuracy was achieved with the combined feedback, with deviations averaging 1.29 mm (tip) and 1.05 mm (handle), followed by visual feedback, with average deviations of 1.51 mm (tip) and 1.16 mm (handle). Using auditory feedback, the accuracy was slightly lower, with average deviations of 1.71 mm (tip) and 1.41 mm (handle). However, the statistical analyses by MANOVA do not show any significant effects, and the differences to the combined and visual feedback are smaller than the tracking accuracy of the provided system. The subsequent contrast analyses also do not show any significant effects.

Fig. 5.

Accuracy per task in millimeters to planned tip and handle position

4.2 Task Completion Time

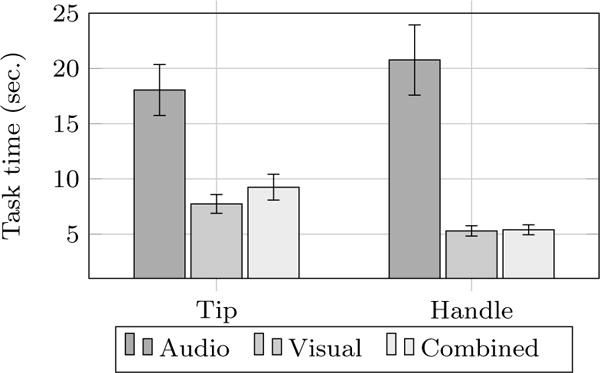

A significant difference was found with respect to the time needed to complete the task, see Fig. 6. Participants needed significantly less time to place a needle using visual (7.74 s tip, 5.29 s handle) and combined feedback (9.25 s tip, 5.39 s handle) compared to auditory feedback (18.05 s tip, 20.77 s handle), which was reflected in the significant results of contrast 1 (auditory vs. visual and combined) for both subtasks. However, between visual and combined conditions (contrast 2), no significant difference was found.

Fig. 6.

Time per task in seconds for tip and handle placement

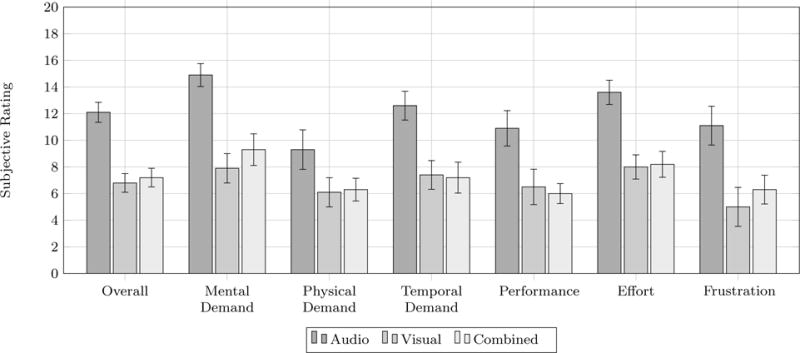

4.3 Subjective Workload

As seen in Fig. 7, a similar pattern was found for subjective workload. Participants reported relatively high subjective workload for audio feedback compared to visual and combined feedback. This pattern could be observed on all dimensions of NASA-TLX, with average values across the individual dimensions of 12.1 for auditory feedback, 6.8 for visual feedback, and 7.2 for combined feedback. This overall result was reflected in the significant effect in the one-way ANOVA which compares these three conditions and in the significant contrast 1 comparing audio with visual and combined feedback. No significant differences appeared between visual and combined feedback (contrast 2).

Fig. 7.

Subjective workload as NASA-Task Load Index (TLX) scores from 0 to 20

4.4 Viewing Duration

Participants viewed the navigation screen significantly less with the combined feedback, during which 82% of the total time was spent viewing the screen. For the visual-only condition, 99% of the time was spent viewing the screen.

5 Discussion

This work demonstrates the first investigation of the impact of auditory-only and combined audiovisual feedback on navigated needle placement accuracy, task completion time, and subjective workload compared to a typical visual-only feedback method. A parameter-mapping auditory synthesis model was developed to use sound to encode the tip and handle placement of a needle on a gelatin phantom for a medical needle placement task. We investigated whether augmenting existing visual feedback with auditory display offers benefits in increasing accuracy, reducing task completion time, reducing subjective workload, or reducing screen viewing time.

In line with our hypothesis and previous work on path-following in image-guided navigation using a combined auditory display [13], the combined feedback significantly reduced the time viewing the navigation screen. For accuracy of tip and handle placement, no significant differences between the three feedback methods emerged. The accuracy of audio-only feedback was comparable to that of visual or combined feedback and was within an acceptable error of under 2 mm. In contrast to Hansen et al. [13], who reported increased accuracy for combined feedback, the current study did not reach a level of significance for accuracy, possibly due to the increased complexity of the task (2-dimensional as opposed to 1-dimensional navigation) and, accordingly, increased complexity of the auditory display.

Task completion time and subjective workload provided a different picture: as hypothesized, ‘blind’ auditory-only feedback led to higher task completion times and increased subjective workload compared to both methods which incorporated the visual display (visual only and combined audiovisual feedback). These deficiencies can be traced to the fact that complex static information can often be processed better visually than with audio. Sounds in nature are linked to status change and motion, whereas visualization can better portray static objects and can be perceived at greater distances. Thus, visual information may give a more complete overview of an environment. In addition, placing the needle using visual feedback mimicked the typical task of following an object such as a mouse cursor on a screen, whereas using solely auditory display for such a two-dimensional placement task was a new challenge for all participants. Needle placement is a highly complicated task which requires assessing the position and orientation of two elements of the object in three-dimensional space and witnessing their change over time. Transmitting this information with audio understandably demands higher temporal and cognitive effort, which is reflected in the results of this study. An advantage with respect to accuracy for combined feedback may become apparent under conditions in which the clinician assumes a body position that would make viewing a screen uncomfortable or impossible, such as guidance of a needle inside an MRI scanner.

Improved auditory feedback mechanisms and increased participant familiarity and training with auditory display may decrease task completion time and further increase accuracy. To our knowledge, the described method presents the first evaluation of auditory-only display for image-guided medical instrument placement. The novelty of the presented method invites a new direction for research in auditory display for navigated interventions. Existing approaches all demand that the clinician devote a large amount of time to viewing a screen, providing audio mainly as a warning system to inform the clinician of upcoming risk areas. Our method differs by attempting to provide active navigation by audio. Although the results of this first study were promising, they show that future designs should exhibit decreased subjective workload. Optimized auditory display methods could then be applied to other applications in medical navigation and reduce screen viewing time substantially. Auditory display for navigation could also encode distance-based risk maps [15] to provide the clinician with a comprehensive solution for both reaching the target and avoiding risk structures. In addition, auditory display for image-guided instrument placement could include an encoding of both navigation information and the uncertainty present therein during targeting tasks [3]. By mapping estimated uncertainty onto the amount of modulation applied to certain auditory parameters, such as those described above, a clinician could be informed of the reliability of the underlying navigation data.

Apart from auditory displays, several methods have been described to encode information for navigated interventions using visual augmented reality (AR) [18], e.g., using video see-through displays, optical see-through displays, or projectors to augment the operation with navigation information directly on the patient. However, AR may be accompanied by drawbacks including attention tunneling [9, 20], which may jeopardize patient safety. Future research could investigate how such advanced medical augmented reality visualization techniques could be beneficially combined with auditory feedback, for example, to improve intraoperative assessment of depth and distances, especially for cases in which such novel video displays could compromise patient safety.

6 Conclusion

This study evaluated auditory, visual, and combined feedback methods in comparable standardized conditions to determine the differences in their effects on performance in a typical image-guided medical needle placement task.

By adding auditory display to existing visual display, time spent viewing the screen was significantly decreased while maintaining accuracy and task completion time.

After this auditory display method has been refined, further evaluation of the concept will focus on both clinical trials and laboratory studies in which the navigation system display may be difficult to see due to operator body position.

We have shown that auditory feedback can be employed in laboratory conditions as an augmentation to visual feedback or as the sole feedback for situations in which a screen is unavailable or uncomfortable to view, or in which a high value is placed on being able to view the operating situs. Currently, however, the results suggest that auditory displays that reach the performance of visual-only displays are still to be developed, emphasizing the current need for visualization during interventions. Future research should give greater consideration to auditory display as navigation feedback in a field where visual-only guidance is dominant.

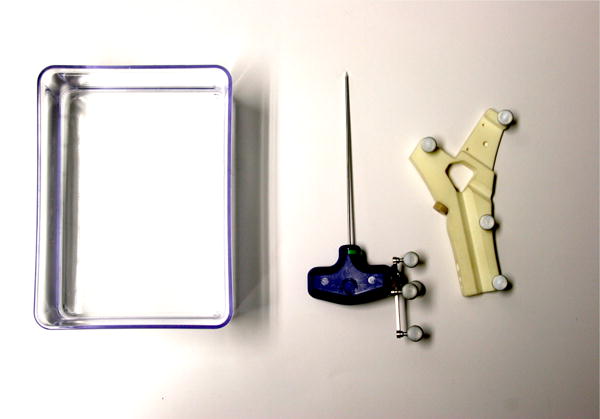

Fig. 4.

Phantom box, tracked needle, and registration device

Acknowledgments

Funding

The work of this paper is partly funded by the German Research Foundation (DFG) under grant number HA 7819/1-1, and the Federal Ministry of Education and Research within the Forschungscampus STIMULATE under grant number 13GW0095A.

Footnotes

Conflict of Interest

The authors declare that they have no conflict of interest.

Informed consent

Informed consent was obtained from all individual participants included in the study.

Contributor Information

David Black, Jacobs University, Bremen, Medical Image Computing, University of Bremen and Fraunhofer MEVIS, Bremen, Germany.

Julian Hettig, Faculty of Computer Science, Otto-von-Guericke University Magdeburg, Germany.

Maria Luz, Faculty of Computer Science, Otto-von-Guericke University Magdeburg, Germany.

Christian Hansen, Faculty of Computer Science, Otto-von-Guericke University Magdeburg, Germany.

Ron Kikinis, Medical Image Computing, University of Bremen, Fraunhofer MEVIS, Bremen, Germany; Surgical Planning Laboratory, Brigham and Women’s Hospital, Boston, USA.

Horst Hahn, Jacobs University and Fraunhofer MEVIS, Bremen, Germany.

References

- 1.Arnolli MM, Hanumara NC, Franken M, Brouwer DM, Broeders IA. An overview of systems for CT– and MRI–guided percutaneous needle placement in the thorax and abdomen. Medical Robotics and Computer Assisted Surgery. 2015;11(4):458–475. doi: 10.1002/rcs.1630. [DOI] [PubMed] [Google Scholar]

- 2.Beller S, Hünerbein M, Eulenstein S, Lange T, Schlag PM. Feasibility of navigated resection of liver tumors using multiplanar visualization of intraoperative 3-dimensional ultrasound data. Annals of surgery. 2007;246:288–294. doi: 10.1097/01.sla.0000264233.48306.99. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Black D, Kocev B, Meine H, Nabavi A, Kikinis R. Towards Uncertainty-Aware Auditory Display for Surgical Navigation. Computer Assisted Radiology and Surgery (in print) 2016 [Google Scholar]

- 4.Buxton B, Gaver W, Bly S. The Use of Non-Speech Audio at the Interface. 1994 Unpublished manuscript. [Google Scholar]

- 5.Cash DM, Miga MI, Glasgow SC, Dawant BM, Clements LW, Cao Z, Galloway RL, Chapman WC. Concepts and Preliminary Data Toward the Realization of Image-guided Liver Surgery. Gastrointestinal Surgery. 2007;11:844–859. doi: 10.1007/s11605-007-0090-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Chen X, Wang L, Fallavollita P, Navab N. Precise X-ray and video overlay for augmented reality fluoroscopy. Int J Comput Assist Radiol Surg. 2013;8(1):2928. doi: 10.1007/s11548-012-0746-x. [DOI] [PubMed] [Google Scholar]

- 7.Cho B, Oka M, Matsumoto N, Ouchida R, Hong J, Hashizume M. Warning navigation system using realtime safe region monitoring for otologic surgery. Computer Assisted Radiology and Surgery. 2013;8:395–405. doi: 10.1007/s11548-012-0797-z. [DOI] [PubMed] [Google Scholar]

- 8.Dixon BJ, Chan H, Daly MJ, Vescan AD, Witterick IJ, Irish JC. The effect of augmented real-time image guidance on task workload during endoscopic sinus surgery. International Forum of Allergy and Rhinology. 2012;2:405–410. doi: 10.1002/alr.21049. [DOI] [PubMed] [Google Scholar]

- 9.Dixon BJ, Daly MJ, Chan H, Vescan AD, Witterick IJ, Irish JC. Surgeons blinded by enhanced navigation: the effect of augmented reality on attention. Surgical Endoscopy. 2013;27(2):454–461. doi: 10.1007/s00464-012-2457-3. [DOI] [PubMed] [Google Scholar]

- 10.Dixon BJ, Daly MJ, Chan H, Vescan AD, Witterick IJ, Irish JC. Augmented real-time navigation with critical structure proximity alerts for endoscopic skull base surgery. Laryngoscope. 2014;124:853–859. doi: 10.1002/lary.24385. [DOI] [PubMed] [Google Scholar]

- 11.Fischbach F, Bunke J, Thormann M, Gafke G, Jung-nickel K, Smink J, Ricke J. MR-Guided Freehand Biopsy of Liver Lesions With Fast Continuous Imaging Using a 1.0-T Open MRI Scanner: Experience in 50 Patients. CardioVascular and Interventional Radiology. 2011;34:188–192. doi: 10.1007/s00270-010-9836-8. [DOI] [PubMed] [Google Scholar]

- 12. Haerle SK, Daly MJ, Chan H, Vescan AD, Witterick I, Gentili F, Zadeh G, Kucharczyk W, Irish JC. Localized Intraoperative Virtual Endoscopy (LIVE) for Surgical Guidance in 16 Skull Base Patients. Otolaryngology–Head and Neck Surgery. 2015;152:165–17. doi: 10.1177/0194599814557469.

- 13. Hansen C, Black D, Lange C, Rieber F, Lamadé W, Donati M, Oldhafer K, Hahn H. Auditory support for resection guidance in navigated liver surgery. International Journal of Medical Robotics and Computer Assisted Surgery. 2013;9(1):36–43. doi: 10.1002/rcs.1466.

- 14. Hansen C, Wieferich J, Ritter F, Rieder C, Peitgen HO. Illustrative Visualization of 3D Planning Models for Augmented Reality in Liver Surgery. Int J Comput Assist Radiol Surg. 2010;5(2):133–141. doi: 10.1007/s11548-009-0365-3.

- 15. Hansen C, Zidowitz S, Ritter F, Lange C, Oldhafer K, Hahn HK. Risk maps for liver surgery. Int J Comput Assist Radiol Surg. 2013;8(3):419–428. doi: 10.1007/s11548-012-0790-6.

- 16. Hart SG. NASA-Task Load Index (NASA-TLX); 20 Years Later. Human Factors and Ergonomics Society 50th Annual Meeting. Santa Monica: HFES; 2006. pp. 904–908.

- 17. Hermann T. Taxonomy and definitions for sonification and auditory display. 14th International Conference on Auditory Display; Paris, France. 2008.

- 18. Kersten-Oertel C, Jannin P, Collins DL. DVV: A Taxonomy for Mixed Reality Visualization in Image Guided Surgery. IEEE TVCG. 2012;18(2):332–352. doi: 10.1109/TVCG.2011.50.

- 19. Luz M, Manzey D, Modemann S, Strauss G. Less is sometimes more: a comparison of distance-control and navigated-control concepts of image-guided navigation support for surgeons. Ergonomics. 2015;58:383–393. doi: 10.1080/00140139.2014.970588.

- 20. Marcus HJ, Pratt P, Hughes-Hallett A, Cundy TP, Marcus AP, Yang GZ, Darzi A, Nandi D. Comparative effectiveness and safety of image guidance systems in neurosurgery: a preclinical randomized study. Journal of Neurosurgery. 2015;123(2):307–313. doi: 10.3171/2014.10.JNS141662.

- 21. Wright M, Freed A. Open sound control: A new protocol for communicating with sound synthesizers. Proceedings of the 1997 International Computer Music Conference. 1997.

- 22. Peterhans M, Oliveira T, Banz V, Candinas D, Weber S. Computer-assisted liver surgery: clinical applications and technological trends. Critical Reviews in Biomedical Engineering. 2012;40. doi: 10.1615/critrevbiomedeng.v40.i3.40.

- 23. Puckette M. Pure Data: another integrated computer music environment. Second Intercollege Computer Music Concerts. 1996:37–41.

- 24. Voormolen EH, Woerdeman PA, van Stralen M, Noordmans HJ, Viergever MA, Regli L, van der Sprenkel JW. Validation of exposure visualization and audible distance emission for navigated temporal bone drilling in phantoms. PLoS One. 2012;7:e41262. doi: 10.1371/journal.pone.0041262.

- 25. Walker BN, Nees MA. Theory of Sonification. In: Hermann T, Hunt A, Neuhoff J, editors. Handbook of Sonification. Academic Press; New York: 2011.

- 26. Wegner K, Karron D. Audio-guided blind biopsy needle placement. Studies in Health Technology and Informatics. 1998;50:90–95.

- 27. Willems PW, Noordmans HJ, van Overbeeke JJ, Viergever MA, Tulleken CA, van der Sprenkel JW. The impact of auditory feedback on neuronavigation. Acta Neurochirurgica. 2005;147:167–173. doi: 10.1007/s00701-004-0412-3.

- 28. Woerdeman PA, Willems PW, Noordmans HJ, van der Sprenkel JW. Auditory feedback during frameless image-guided surgery in a phantom model and initial clinical experience. Journal of Neurosurgery. 2009;110:257–262. doi: 10.3171/2008.3.17431.

- 29. Wright M, Freed A, Momeni A. OpenSound Control: state of the art 2003. Proceedings of the 2003 conference on New interfaces for musical expression; 2003. pp. 153–160.