Abstract

Reinforcement learning involves decision making in dynamic and uncertain environments and constitutes an important element of artificial intelligence (AI). In this work, we experimentally demonstrate that the ultrafast chaotic oscillatory dynamics of lasers efficiently solve the multi-armed bandit problem (MAB), which requires decision making concerning a class of difficult trade-offs called the exploration–exploitation dilemma. To solve the MAB, a certain degree of randomness is required for exploration purposes. However, pseudorandom numbers generated using conventional electronic circuitry encounter severe limitations in terms of their data rate and the quality of randomness due to their algorithmic foundations. We generate laser chaos signals using a semiconductor laser sampled at a maximum rate of 100 GSample/s, and combine it with a simple decision-making principle called tug of war with a variable threshold, to ensure ultrafast, adaptive, and accurate decision making at a maximum adaptation speed of 1 GHz. We found that decision-making performance was maximized with an optimal sampling interval, and we highlight the exact coincidence between the negative autocorrelation inherent in laser chaos and decision-making performance. This study paves the way for a new realm of ultrafast photonics in the age of AI, where the ultrahigh bandwidth of light wave can provide new value.

Introduction

Unique physical attributes of photons have been utilized in information processing in the literature on optical computing1. New photonic processing principles have recently emerged to solve complex time-series prediction problems2–4, and issues in spatiotemporal dynamics5 and combinatorial optimization6, which coincide with the rapid shift to the age of artificial intelligence (AI). These novel approaches exploit the ultrahigh bandwidth attributes of light wave and their enabling device technologies2,3,6. This paper experimentally demonstrates the usefulness of ultrafast chaotic oscillatory dynamics in semiconductor lasers for reinforcement learning, which is among the most important elements in machine learning.

Reinforcement learning involves decision making in dynamic and uncertain environments7. It forms the foundation of a variety of applications, such as information infrastructures8, online advertisements9, robotics10, transportation11, and Monte Carlo tree search12, which is used in computer gaming13. A fundamental of reinforcement learning is known as the multi-armed bandit problem (MAB), where the goal is to maximize the total reward from multiple slot machines, the reward probabilities of which are unknown7,14,15. To solve the MAB, one needs to explore better slot machines. However, too much exploration may result in excessive loss, whereas too quick a decision, or insufficient exploration, may lead to the neglect of the best machine. There is a trade-off, referred to as the exploration–exploitation dilemma7. A variety of algorithms for solving the MAB have been proposed in the literature, such as ε-greedy14, softmax16, and upper confidence bound17.

These approaches typically involve probabilistic attributes, especially for exploration purposes. While the implementation and improvements of such algorithms on conventional digital computing systems are important for various practical applications, understanding the limitations of the algorithms and investigating novel approaches are also important based on perspectives from postsilicon computing. For example, pseudorandom number generation (RNG) used in conventional algorithmic approaches has severe limitations, such as its data rate, owing to the operating frequencies of digital processors (~gigahertz (GHz) range). Moreover, the quality of randomness in RNG has serious limitations18. The usefulness of photonic random processes for machine learning is also discussed by utilizing multiple optical scattering19.

We consider that directly utilizing physical irregular processes in nature is an exciting approach with the goal of realizing artificially constructed, physical decision-making machines20. Indeed, the intelligence of slime moulds or amoebae, single-cell natural organisms, has been used in solution searches, whereby complex intercellular spatiotemporal dynamics play a key role21. This has stimulated the subsequent discovery of a new principle of decision-making strategy called tug of war (TOW), invented by Kim et al.22,23. The principle of the TOW method originated from the observation of the slime mould: its body dynamically expands and shrinks while maintaining a constant intracellular-resource volume, allowing the mould to collect environmental information; this conservation of the volume entails a nonlocal correlation within the body. The fluctuation, or probabilistic behaviour, in the body of amoeba is important for the exploration of better solutions. The name TOW is a metaphor used to represent such a nonlocal correlation while accommodating fluctuation, which enhances decision-making performance23.

This principle can be adapted to photonic processes. In past research, we experimentally showed physical decision making based on near-field-mediated optical excitation transfer at the nanoscale20,24 and with single photons25. These former studies pursued the ultimate physical attributes of photons in terms of diffraction limit-free spatial resolutions and energy efficiency by considering near-field photons26,27, and the quantum attributes of single-light quanta28. The nonlocal aspect of TOW is directly physically represented by the wave nature of a single photon or an exciton-polariton, whereas fluctuation is also directly represented by the intrinsic probabilistic attributes. However, fluctuations are limited by the practical limitations on the measurements and control systems (second order in the worst case) as well as the single-photon generation rate (kHz range).

The ultrafast, high-bandwidth aspect of light wave, for instance beyond THz order if the wavelength is around 1.5 μm in optical communications, is another promising physical platform for TOW-based decision making to complement diffraction-limit-free and low-energy near-field photon approaches as well as quantum-level single-photon strategies. As demonstrated below, chaotic oscillatory dynamics of lasers that contains negative autocorrelation experimentally demonstrates 1-GHz decision making. In addition to the resultant speed merit, it should be emphasized that the technological maturity of ultrafast photonic devices allows for relatively easy and scalable system implementation through commercially available photonic devices. Furthermore, the applications of the proposed ultrafast photonics-based, and the former near-field/single-photon-based, decision making are complementary: the former targets high-end, data centre scenarios by highlighting ultrafast performance, whereas the latter appeals to low-energy, Internet-of-Things-related29, and security30 applications.

In this study, we demonstrate ultrafast reinforcement learning based on chaotic oscillatory dynamics in semiconductor lasers31–34 that yields adaptation from zero-prior knowledge at a GHz range. The randomness is based on complex dynamics in lasers32–34, and its resulting speed is unachievable in other mechanisms, at least through technologically reasonable means. We experimentally show that ultrafast photonics has significant potential for reinforcement learning. The proposed principles using ultrafast temporal dynamics can be matched to applications including an arbitration of resources at data centres35, high-frequency trading36, where decision making is required at least within milliseconds, and other such high-end utilities. Scientifically, this study paves a way toward the understanding of the physical origin of the enhancement of intelligent abilities (which is reinforcement learning herein) when natural processes (laser chaos herein) are coupled with external systems; this is what we call natural intelligence.

Chaotic dynamics in lasers has been examined in the literature32–34, and its applications have exploited the ultrafast attributes of photonics for secure communication37–39, RNG31,40,41, remote sensing42, and reservoir computing2–4. Reservoir computing is a type of neural network similar to deep learning13 that has been intensively studied to provide recognition- and prediction-related functionalities. Reinforcement learning described in this study differs completely from reservoir computing from the perspective that neither a virtual network nor machine learning for output weights is required. However, it should be noted that reinforcement learning is important in complementing the capabilities of neural networks, indicating the potential for the fusion of photonic reservoir computing with photonic reinforcement learning in future work.

Principle of reinforcement learning

For the simplest case that preserves the essence of solving the MAB, we consider a player who selects one of two slot machines, called slot machines 1 and 2 hereafter, with the goal of maximizing reward (known as the two-armed bandit problem). Denoting the reward probabilities of the slot machines by Pi (i = 1, 2), the problem is to select the machine with the highest reward probability. The amount of reward dispensed by each slot machine for a play is assumed to be the same in this study. That is, the probability of ‘win’ by playing the slot machine i is Pi and the probability of ‘lose’ by playing the slot machine i is 1 − Pi. The sum of ‘win’ and ‘lose’ is unity in playing a particular slot machine, whereas the sum of Pi among all slot machines may not be unity.

The measured chaotic signal s(t) is subjected to the threshold adjuster (TA), according to the TOW principle. The output of the TA is immediately the decision concerning the slot machine to choose. If s(t) is equal to or greater than the threshold value T(t), the decision is made to select slot machine 1. Otherwise, the decision is made to select slot machine 2. The reward—the win/lose information of a slot machine play—is fed back to the TA.

The chaotic signal level s(t) is compared with the threshold value T(t) denoted by

| 1 |

where TA(t) is the threshold adjuster value at cycle t, is the nearest integer to TA(t) rounded to zero, and k is a constant determining the range of the resultant T(t). In this study, we assumed that takes the values , where N is a natural number. Hence the number of the thresholds is 2N + 1, referred to as TA’s resolution. The range of TA(t) is limited between −kN and kN by setting T(t) = kN when is greater than N, as well as T(t) = −kN when is smaller than −N.

If the selected slot machine yields a reward at cycle t (in other words, wins the slot machine play), the TA value is updated at cycle t + 1 based on

| 2 |

where α is referred to as the forgetting (memory) parameter20, and Δ is the constant increment (in this experiment, Δ = 1 and α = 0.999). In this study, the initial TA value was zero. If the selected machine does not yield a reward (or loses in the slot machine play), the TA value is updated as follows:

| 3 |

where Ω is the increment parameter defined below. Intuitively speaking, the TA takes a smaller value if slot machine 1 is considered more likely to win, and a greater value if slot machine 2 is considered more likely to earn the reward. This is as if the TA value is being pulled by the two slot machines at both ends, coinciding with the notion of a tug of war.

The fluctuation, necessary for exploration, is realized by associating the TA value with the threshold of digitization of the chaotic signal train. If the chaotic signal level s(t) is equal to or greater than the assumed threshold T(t), the decision is immediately made to choose slot machine 1; otherwise, the decision is made to select slot machine 2. Initially, the threshold is zero; hence, the probability of choosing either slot machine 1 or 2 is 0.5. As time elapses, the TA value shifts (becomes positive or negative) towards the slot machine with the higher reward probability based on the dynamics shown in Eqs (2) and (3). We should note that due to the irregular nature of the incoming chaotic signal, the possibility of choosing the opposite machine is not zero, and this is a critical feature of exploration in reinforcement learning. For example, even when the TA value is sufficiently small (meaning that slot machine 1 seems highly likely to be the better machine), the probability of the decision to choose slot machine 2 is not zero.

In TOW-based decision making, the increment parameter Ω in Eq. (3) is determined based on the history of betting results. Let the number of times when slot machine i is selected in cycle t be Si and the number of wins in selecting slot machine i be Li. The estimated reward probabilities of slot machines 1 and 2 are given by

| 4 |

Ω is then given by

| 5 |

The initial Ω is assumed to be unity, and a constant value is assumed when the denominator of Eq. (5) is zero. The detailed derivation of Eq. (5) is shown in ref.23.

Results

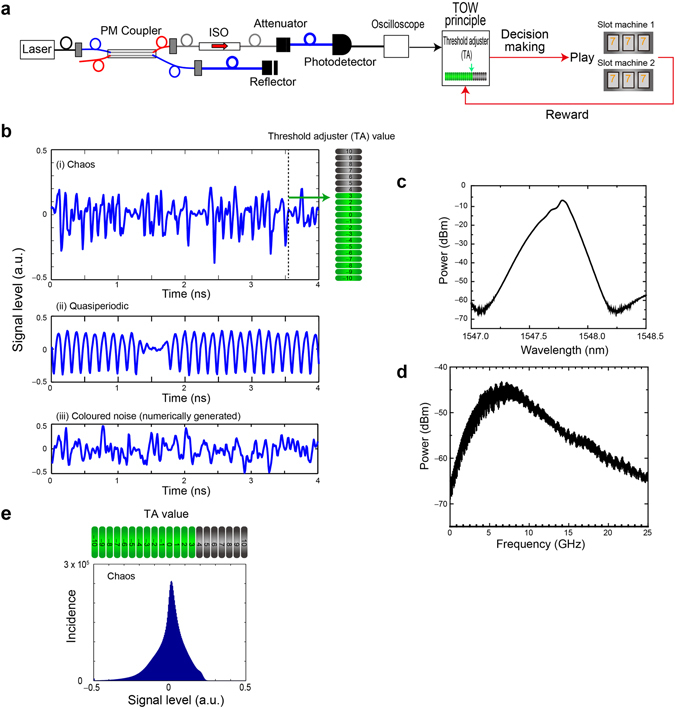

The architecture of laser chaos-based reinforcement learning is schematically shown in Fig. 1a. A semiconductor laser is coupled with a polarization-maintaining (PM) coupler. The emitted light is incident on a variable fibre reflector, by which a delayed optical feedback is supplied to the laser, leading to laser chaos40. The output light at the other end of the PM coupler is detected by a high-speed, AC-coupled photodetector through an optical isolator (ISO) and an attenuator, and is sampled by a high-speed digital oscilloscope at a rate of 100 GSample/s (a 10-ps sampling interval). The detailed specifications of the experimental apparatus are described in the Methods section.

Figure 1.

Architecture of photonic reinforcement learning based on laser chaos. (a) Architecture and experimental configuration of laser chaos-based reinforcement learning. Ultrafast chaotic optical signal is subjected to the tug-of-war (TOW) principle that determines the selection of slot machines. ISO: optical isolator. (b) (i) A snapshot of chaotic signal trains sampled at 100 GSample/s. The signal level is subjected to a threshold adjustment (TA) for decision making. (ii) A snapshot of quasiperiodic signal trains (also sampled at 100 GSample/s). (iii) A snapshot of pseudorandom signals (coloured noise containing negative autocorrelation) numerically generated by a computer. (c) Optical spectrum and (d) RF spectrum of the laser chaos used in the experiment. (e) Incidence statistics (histogram) of the signal level of the laser chaos signal.

Figure 1b(i) shows an example of the chaotic signal train. Figure 1c and d show the optical and radio frequency (RF) spectra of laser chaos measured by optical and RF spectrum analysers, respectively. The semiconductor laser was operated at a centre wavelength of 1547.785 nm. The standard bandwidth31 of the RF spectrum was estimated as 10.8 GHz. Figure 1e summarizes the histogram of the signal levels of the chaotic trains spanning from −0.5 to 0.5, with the level zero at its maximum incidence, and slightly skewed between the positive and negative sides. A remark is that the small incidence peak at the lowest measured amplitude is due to our experimental apparatus (not the laser), and it does not critically affect the present study. Meanwhile, Fig. 1b(ii) exhibits an example of a quasiperiodic signal train generated from the same laser by changing the optical feedback power from the external reflector (see the Methods section for details). Besides, Fig. 1b(iii) shows an example of a coloured noise signal train containing negative autocorrelation calculated in a computer on the basis of the Ornstein–Uhlenbeck process using white Gaussian noise and a low-pass filter43 with the cut-off frequency of 10 GHz. Relevant details and performance comparisons with respect to decision making will be discussed later.

The rounded TA values, , are also schematically illustrated at the right-hand side of Fig. 1b and the upper side of Fig. 1e, assuming N = 10. This means that ranges from −10 to 10. Signal value s(t) spans between −0.5 and 0.5. The particular example of TA values shown in Fig. 1b and e shows the case when the constant k in Eq. (1) is given by 0.05, so that the actual threshold T(t) spans from −0.5 to 0.5. In the experimental demonstration in this study, the TA and the slot machines were emulated in offline processing, whereas online processing was technologically feasible owing to the simple procedure of the TA (Remarks are given in the Discussion section).

[EXPERIMENT-1] Adaptation to sudden environmental changes

We first solved the two-armed bandit problems given by the following two cases, where the reward probabilities {P1, P2} were given by {0.8, 0.2} and {0.6, 0.4}. We assumed that the sum of the reward probabilities P1 + P2 was known prior to slot machine plays. With this knowledge, Ω is unity by Eq. (5).

The slot machine was consecutively played 4,000 times, and this play was repeated 100 times. The red and blue curves in Fig. 2a show the evolution of the correct decision rate (CDR), defined by the ratio of the number of selections of the machines that yielded a higher reward probability at cycle t in 100 trials, with respect to the probability combination of {0.8, 0.2} and {0.6, 0.4}. The chaotic signal was sampled every 10 ps; hence, the total duration of the 4,000 plays of the slot machine was 40 ns. To represent sudden environmental changes (or uncertainty), the reward probability was forcibly interchanged every 10 ns, or every 1,000 plays (For example, {P1, P2} = {0.8, 0.2} was reconfigured to {P1, P2} = {0.2, 0.8}). The resolution of the TA was set to 9 (or N = 4).

Figure 2.

Reinforcement learning in dynamically changing environments. (a) Evolution of the correct decision rate (CDR) when the reward probabilities of the two slot machines are {0.8, 0.2} and {0.6, 0.4}. The knowledge regarding the sum of the reward probability, which is unity in these cases, is supposed to be given. The reward probability is intentionally swapped every 10 ns to represent sudden environmental changes or uncertainty. Rapid and adequate adaptation is observed in both cases. (b) Evolution of the threshold adjuster (TA) value underlying correct decision making. (c–e) CDR performance dependency on the setting of TA. (c) TA resolution dependency. (d) TA range dependency. (e) TA centre value dependency.

We observed that the CDR curves quickly approached unity, even after sudden changes in the reward probabilities, showing successful decision making. The adaptation was steeper in the case of a reward probability combination of {0.8, 0.2} than that of {0.6, 0.4}, since the difference in the reward probability was greater in the former case (0.8 − 0.2 = 0.6) than the latter (0.6 − 0.4 = 0.2). This meant that decision making was easier. The red and blue curves in Fig. 2b represent the evolution of TA values (TA(t)) in the case of {0.8, 0.2} and {0.6, 0.4}, respectively, where the TA value became greater than 4 or −4; hence, it took a long time for the TA values to be inverted to the other polarity following environmental change. This is the origin of some delay in the CDR in responding to the environmental changes observed in Fig. 2a. A simple method of improving adaptation is to limit the maximum and minimum values of TA(t). The forgetting parameter α is also crucial to improving adaptation speed.

Figure 2c,d and e characterize decision-making performance dependencies with respect to the configuration of TA. Figure 2c concerns TA resolutions demonstrated by the eight curves therein, with corresponding TA resolutions of 3 to 257 (or ), where the adaptation was quicker in the case of lower resolutions than in the case of higher resolutions. Figure 2d considers TA range dependencies: while keeping the centre of the TA value at zero and the TA resolution at 8, the three curves in Fig. 2d compare CDRs in the TA ranges of 1, 0.25, and 0.125. We observed that a full coverage (TA range of 1) of the chaotic signal yields the best performance. Figure 2e shows the TA’s centre value dependencies while maintaining a TA range of 1 and a resolution of 8, and we can clearly observe the deterioration of CDRs with the shift in the centre of the TA from zero.

[EXPERIMENT-2] Adaptation from zero prior knowledge

Here we considered decision-making problems without any prior knowledge of the slot machines. Hence, parameter Ω needed to be updated. The reward probabilities of the slot machines were set to {P1, P2} = {0.5, 0.1}, and 100 consecutive plays were executed. Figure 3a shows the time evolution of the CDR until the 75th slot play cycle to enlarge the early phase of the adaptation. The red and blue curves in Fig. 3a depict CDRs on the basis of chaotic signal trains with sampling intervals of 10 and 50 ps, respectively. Hence, the time needed to complete 100 consecutive slot machine plays differed among these; it ranges from to . Meanwhile, the green curve in Fig. 3a represents the CDR with quasiperiodic signal trains sampled at 50-ps intervals. We observed that the CDR based on chaotic signals sampled at 50-ps intervals exhibited more rapid adaptation than quasiperiodic signals. The time evolution of the CDR for the coloured noise signal is shown by the magenta curve in Fig. 3a, which stays lower than the experimentally observed chaotic signal trains.

Figure 3.

Reinforcement learning from zero prior knowledge. (a) Evolution of the CDR with laser chaos signals (sampling interval: 10 and 50 ps), quasiperiodic signals (sampling interval: 50 ps), coloured noise (sampling interval: 50 ps), and uniformly and normally distributed pseudorandom numbers. The CDR exhibits prompt adaptation with laser chaos when the sampling interval is 50 ps. (b) The CDR is evaluated as a function of the sampling interval from 10 ps to 400 ps, where the maximum performance is obtained at 50 ps with laser chaos. CDR with chaotic lasers yields superior performances compared to quasiperiodic and coloured noise signals. (c) Autocorrelation of the experimentally observed laser chaos, the quasiperiodic signal trains, and numerically generated coloured noises. The chaotic signals exhibit the negative maximum when the time lag is 5 or −5, exactly coinciding with the fact that the optimal adaptation is realized at 50-ps (10 ps × 5) sampling intervals.

Moreover, the black curve shows the CDR obtained by uniformly distributed pseudorandom numbers generated with the Mersenne Twister as the random signal source, instead of the experimentally observed chaotic signals. In addition, we characterized CDRs with normally distributed random numbers (referred to as RANDN) to ensure that the statistical incidence patterns of the laser chaos, which were similar to a normal distribution shown in Fig. 1e, were not the origins of the fast adaptation of decision making. The solid, dotted, and dashed curves in Fig. 3a exhibit the CDR obtained by RANDN of which standard deviation (σ) was configured as 0.2, 0.1, and 0.01 while keeping the mean value at zero. We observed that the CDRs based on chaotic signals exhibited more rapid adaptation than the uniformly and normally distributed pseudorandom numbers.

The most prompt adaptation, or the optimal performance, was obtained at a particular sampling interval. The blue square marks in Fig. 3b compare CDRs at the cycle t = 5 as a function of the sampling interval ranging from 10 ps to 4000 ps. The sampling interval of 50 ps yielded the best performance, indicating that the original chaotic dynamics of the laser could physically be optimized such that the most prompt decision-making is realized. Indeed, the autocorrelation of the laser chaos signal trains was evaluated as shown by the blue square marks in Fig. 3c, and its negative maximum value is taken when the time lag is given by 5 or −5, corresponding exactly to the sampling interval of 50 ps (5 × 10 ps). In other words, the negative correlation of chaotic dynamics affects the exploration ability for decision making. Furthermore, this finding suggests that optimal performance is obtained at a particular sampling rate (or data rate) by physically tuning the dynamics of the original laser chaos, which will be an important and exciting topic for future investigation. The adaptation speed of decision making was estimated as 1 GHz in this optimal case, where CDR was larger than 0.95 at 20 cycles with a 50-ps sampling interval (20 GSamples/s) in Fig. 3a (1 GHz = (50 ps × 20 cycles)−1). In other words, the latency of decision making is approximately 1 ns, and the throughput is 20 GDecision/s.

Meanwhile, the autocorrelation of the quasiperiodic signal train also yields negative values, as shown by the green circular marks in Fig. 3c. In fact, the absolute value of the negative autocorrelation at the time lag of 5 (and −5) is larger than that of the chaotic signal train. However, the adaptation performance of the chaotic signal trains is superior (see Fig. 3b). At the same time, the best adaptation performance of the quasiperiodic signal train is obtained when the sampling interval is 70 ps (see Fig. 3b), which coincides with the peak of the negative maximum value of autocorrelation with the time lag of 7 (=70 ps) (see Fig. 3c).

Another indication of the observation is that the coloured noise containing negative autocorrelation might contribute to performance improvement. We generated the coloured noise signal by computer simulations of which autocorrelation is shown by the diamond marks in Fig. 3c whereby the negative maximum is obtained at the time lag of 7 (sampling interval: 70 ps). The sampling-interval-dependence of the CDR is summarized by the diamond marks in Fig. 3b, where a peak is observed at the sampling interval of 7, and also coincides with the peak of negative autocorrelation. These results imply the necessity of gaining deeper insights in future studies as discussed in the Discussion section.

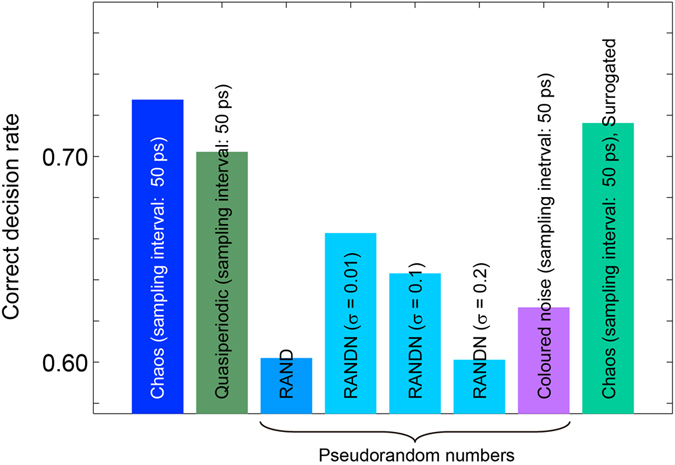

Figure 4 also presents the CDR at the cycle t = 5 obtained by the chaotic signal, quasiperiodic time series, coloured noise signal, and pseudorandom numbers. Moreover, the CDR at the cycle t = 5 was evaluated by surrogating, or randomly permutating, the chaotic signal time series sampled at 50 ps intervals, and this resulted in poorer performance compared with the original chaotic signal, as shown in Fig. 4. These evaluations support the claim that laser chaos is beneficial to the performance of reinforcement learning, in addition to its ultrafast data rate for random signals.

Figure 4.

Comparison of learning performance (CDR at the cycle t = 5) by laser chaos, quasiperiodic signals, coloured noise signals, uniformly and normally distributed pseudorandom numbers, and surrogate laser chaos signals. The laser chaos signal sampled at 50-ps intervals exhibits the best performance compared with other cases, showing that the dynamics of laser chaos positively influences reinforcement learning ability.

Discussion

The performance enhancement of decision making by chaotic laser dynamics is demonstrated and the impacts of negative autocorrelation are clearly suggested. Further understanding between chaotic oscillatory dynamics and decision making is a part of important future research.

The first consideration involves physical insights. Toomey et al. recently showed that the complexity of laser chaos varies within the coherence collapse region in the given system44. The level of the optical feedback, injection current of the laser becomes an important parameter in determining the complexity of chaos, and leads to thorough insights. We also consider the use of the bandwidth enhancement technique31 with optically injected lasers to improve the adaptation speed of decision higher than tens of GHz.

We showed the exact coincidence between the time lag that yielded the negative maximum of the autocorrelation and the sampling interval that provided the highest adaptation performance with the use of laser chaos signals (Fig. 3b). The absolute value of the negative autocorrelation itself is, however, larger with the quasiperiodic signals rather than with chaotic signals (Fig. 3c). Nevertheless, superior adaptation performance is realized with the chaotic signals (Figs 3b and 4). These observations indicate that, besides the negative autocorrelation inherent in chaotic and quasiperiodic oscillatory dynamics of lasers as well as the coloured noise generated by computers, other perspectives could explain the underlying mechanism, such as diffusivity45 and Hurst exponents46.

The decision-making latency of 1 ns demonstrated by chaotic lasers in this study may overachieve the expected performance demanded by practical applications today. However, high-end applications require even shorter latency; for example, microseconds and nanoseconds applications are discussed in the literature47,48. Meanwhile, fully electrical online processing using multiple processors presents difficulties in reducing latency while having comparable throughput; such an architectural standpoint continues to be an important aspect in future studies.

Online processing can become feasible through electric circuitry, as already demonstrated for a random-bit generator40,49 since the required processing is very simple as described in Eqs (1) to (5). More specifically, combining a demultiplexer and multiple data processing circuits (such as field-programmable gate array chips) could provide the requisite online processing capability. In this study, in contrast, the prime interest is to present the principle and the usefulness of laser chaos for reinforcement learning, and we do not experimentally adapt the electrical circuits for online processing that need substantial development costs. In addition, the performance comparison between laser chaos signals and numerically generated coloured noise signals shows the usefulness of direct usage of physical signal sources besides the advantages in terms of latency and technological implementations in the high-speed domain.

In the experimental demonstration, the nonlocal aspect of the TOW principle was not directly, physically relevant to the given chaotic oscillatory time series of the lasers whereas our former single photon-based decision maker directly utilizes the physical property therein (the wave–particle duality of single photons) for decision making as already mentioned in the introduction. By combining the chaotic dynamics with the threshold adjustor, the nonlocal and fluctuation properties of the TOW principle emerged which have not been completely reported in the literature nor realized in our past experimental studies24,25. Such a hybrid realization of nonlocality in TOW leads to higher likelihood of technological implementability and better scalability and extension to higher-grade problems.

All optical realization is an interesting issue to explore, and has already been implied by the analysis where the time-domain correlation of laser chaos strongly influences decision-making performances. The mode dynamics of multi-mode lasers50 are very promising for the implementation of nonlocal properties of fully photonic systems required for decision making. Synchronization and its clustering properties in coupled laser networks51,52 are also interesting approaches to physically realizing the nonlocality of the TOW. These systems can automatically tune the optimal settings for decision making (e.g., negative autocorrelation properties), which can lead to autonomous photonic intelligence.

For scalability, a variety of approaches can be considered, such as time-domain multiplication, which exploits the ultrafast attributes of chaotic lasers and is a frequently used strategy in ultrafast photonic systems. Introducing multi-threshold values31,41 is another simple extension of our proposed scheme. Furthermore, the relation between the difficulty level of the given MAB problem and the necessary irregularity of chaotic signal trains would be an interesting future study topic. In this respect, Naruse et al. experimentally examined a hierarchical method and successfully solved four-armed bandit problems using single photons53; the time-domain realization of such a hierarchical approach based on laser chaos may highlight the uniqueness of chaotic oscillatory dynamics of lasers.

We also make note of the extension of the principle demonstrated in this paper to higher-grade machine learning problems. This paper studied the MAB where the problem is decision making to maximize the income for a single player. The higher-grade problem in this context is competitive MAB54 where the issue is decision making to maximize the total reward for multiple players as a whole. The competitive MAB involves the so-called Nash equilibrium where the decisions based on an individual’s reward maximization do not yield maximum reward as a whole. This is a foundation of such important applications as resource allocation and social optimization. Investigating the possibilities of extending the present method and utilizing ultrafast laser dynamics for competitive MAB is highly interesting.

Conclusion

We experimentally established that laser chaos provides ultrafast reinforcement learning and decision making. The adaptation speed of decision making reached 1 GHz in the optimal case with the sampling rate of 20 GSample/s (50-ps decision-making intervals) using the ultrafast dynamics inherent in laser chaos. The maximum adaptation performance coincided with the negative maximum of the autocorrelation of the original time-domain laser chaos sequences, demonstrating the strong impact of chaotic lasers on decision making. The origin of superior performance was also validated by comparing with experimentally observed quasiperiodic signals, computer-generated coloured noises, uniformly and normally distributed pseudorandom numbers, and surrogated arrangements of original chaotic signal trains. This study is the first demonstration of ultrafast photonic reinforcement learning or decision making, to the best of our knowledge, and paves the way for research on photonic intelligence and new applications of chaotic lasers in the realm of artificial intelligence.

Methods

Optical system

The laser used in the experiment was a distributed feedback semiconductor laser mounted on a butterfly package with optical fibre pigtails (NTT Electronics, KELD1C5GAAA). The injection current of the semiconductor laser was set to 58.5 mA (5.37 Ith), where the lasing threshold Ith was 10.9 mA. The relaxation oscillation frequency of the laser was 6.5 GHz. The temperature of the semiconductor laser was set to 294.83 K. The laser output power was 13.2 mW. The laser was connected to a variable fibre reflector which reflected a fraction of light back into the laser, inducing high-frequency chaotic oscillations of optical intensity32–34. The values of the optical feedback power (ratio) from the external reflector to the laser were 211 μW (1.6%) and 15 μW (0.11%) in generating chaotic and quasiperiodic signals, respectively. The fibre length between the laser and the reflector was 4.55 m, corresponding to the feedback delay time (round trip) of 43.8 ns. Polarization-maintaining fibres were used for all optical fibre components. The optical output was converted to an electronic signal by a photodetector (New Focus, 1474-A, 38 GHz bandwidth) and sampled by a digital oscilloscope (Tektronics, DPO73304D, 33 GHz bandwidth, 100 GSample/s, eight-bit vertical resolution). The RF spectrum of the laser was measured by an RF spectrum analyser (Agilent, N9010A-544, 44 GHz bandwidth). The optical wavelength of the laser was measured by an optical spectrum analyser (Yokogawa, AQ6370C-20).

Data analysis

[EXPERIMENT-1]

A chaotically oscillated signal train was sampled at a rate of 100 GSample/s by 10,000,000 points, and lasted approximately 10 μs. As described in the main text, 4,000 consecutive plays were repeated 100 times; hence, the total number of slot machine plays was 400,000. With a 10-ps interval sampling, the initial 400,000 points of the chaotic signal were used for the decision-making experiments. The processing time required for 400,000 iterations of slot machine plays was approximately 0.93 s (or 2.3 μs/decision), on a personal computer (Hewlett-Packard, Z-800, Intel Xeon CPU, 3.33 GHZ, 48 GB RAM, Windows 7, MATLAB R2011b).

[EXPERIMENT-2]

Sampling methods: A chaotic signal train was sampled at 10-ps intervals with 10,000,000 sampling points. Such a train was measured 120 times. Each chaotic signal train was referred to as chaosi, and there were 120 kinds of such trains: . In demonstrating 10 × M ps sampling intervals, where M was a natural number ranging from 1 to 400 (that is, the sampling intervals were 10 ps, 20 ps, … and 4000 ps), we chose one of every M samples from the original sequence.

Evaluation of CDR regarding a specific chaos sequence: For every chaotic signal train chaosi, 100 consecutive plays were repeated 100 times. Consequently, 10,000 points were used from chaosi. Such evaluations were repeated 100 times. Hence, 1,000,000 slot machine plays were conducted in total. These CDRs were calculated for all signal trains chaosi ().

Evaluation of CDR of all chaotic sequences: We evaluated the average CDR of all chaotic signal trains () derived in (2) above, which were the results discussed in the main text. The methods of the performance evaluation of CDR with respect to the experimentally observed quasiperiodic signals, RAND, and RANDN were the same as that described in (2) and (3).

Autocorrelation of chaotic signals: The autocorrelation was computed based on all 10,000,000 sampling points of chaosi, and was evaluated for all chaosi (). The autocorrelation demonstrated in Fig. 3c was evaluated as the average of these 120 kinds of autocorrelations. The autocorrelation of quasiperiodic signals was evaluated in the same manner.

Coloured noise: Coloured noise was calculated on the basis of the Ornstein–Uhlenbeck process using white Gaussian noise and a low-pass filter in numerical simulations43. We assumed that the coloured noise was generated at the sampling rate of 100 GHz, and the cut-off frequency of the low-pass filter was set to 10 GHz (the correlation time was 100 ps). Forty sequences of 10,000,000 points were generated. The methods of calculating CDR and autocorrelation were the same as that with (2), (3), and (4) whereas the number of sequences was 40 (not 120). The reduction in the number of sequences was due to the excessive computational costs in our computing environment.

Surrogate methods: The surrogate time series of original chaotic sequences were generated by the randperm function in MATLAB which is based on the sorting of pseudorandom numbers generated by the Mersenne Twister.

Data availability

The data sets generated during the current study are available from the corresponding author on reasonable request.

Acknowledgements

The authors thank Mr. Takatomo Mihana of Saitama University for his help in numerically generating coloured noise signals. This work was supported in part by the Core-to-Core Program A. Advanced Research Networks and the Grants-in-Aid for Scientific Research (A) from the Japan Society for the Promotion of Science.

Author Contributions

M.N., A.U., and S.-J.K. directed the project. M.N. designed the system architecture. Y.T. and A.U. designed and implemented the laser chaos. Y.T., A.U., and M.N. conducted the optical experiments and the data processing. M.N., A.U., and S.-J.K. analysed the data, and M.N., A.U., and S.-J.K. wrote the paper.

Competing Interests

The authors declare that they have no competing interests.

Footnotes

A correction to this article is available online at https://doi.org/10.1038/s41598-018-23476-2.

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Jahns J, Lee SH. Optical Computing Hardware. San Diego: Academic Press; 1994. [Google Scholar]

- 2.Larger L, et al. Photonic information processing beyond Turing: an optoelectronic 3 implementation of reservoir computing. Opt. Express. 2012;20:3241–3249. doi: 10.1364/OE.20.003241. [DOI] [PubMed] [Google Scholar]

- 3.Brunner D, Soriano MC, Mirasso CR, Fischer I. Parallel photonic information processing at gigabyte per second data rates using transient states. Nat. Commun. 2013;4:1364. doi: 10.1038/ncomms2368. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Vandoorne K, et al. Experimental demonstration of reservoir computing on a silicon photonics chip. Nat. Commun. 2014;5:3541. doi: 10.1038/ncomms4541. [DOI] [PubMed] [Google Scholar]

- 5.Tsang, M. & Psaltis, D. Metaphoric optical computing of fluid dynamics. arXiv:physics/0604149v1 (2006).

- 6.Inagaki, T. et al. A coherent Ising machine for 2000-node optimization problems. Science, doi:10.1126/science.aah4243 (2016). [DOI] [PubMed]

- 7.Sutton RS, Barto AG. Reinforcement Learning: An Introduction. Massachusetts: The MIT Press; 1998. [Google Scholar]

- 8.Awerbuch B, Kleinberg R. Online linear optimization and adaptive routing. J. Comput. Syst. Sci. 2008;74:97–114. doi: 10.1016/j.jcss.2007.04.016. [DOI] [Google Scholar]

- 9.Agarwal, D., Chen, B. -C. & Elango, P. Explore/exploit schemes for web content optimization. Proc. of ICDM 1–10, doi:10.1109/ICDM.2009.52 (2009).

- 10.Kroemer OB, Detry R, Piater J, Peters J. Combining active learning and reactive control for robot grasping. Robot. Auton. Syst. 2010;58:1105–1116. doi: 10.1016/j.robot.2010.06.001. [DOI] [Google Scholar]

- 11.Cheung, M. Y., Leighton, J. & Hover, F. S. Multi-armed bandit formulation for autonomous mobile acoustic relay adaptive positioning. In 2013 IEEE Intl. Conf. Robot. Auto. 4165–4170 (2013).

- 12.Kocsis L, Szepesvári C. Bandit based Monte Carlo planning. Machine Learning: ECML (2006), LNCS. 2006;4212:282–293. [Google Scholar]

- 13.Silver D, et al. Mastering the game of Go with deep neural networks and tree search. Nature. 2016;529:484–489. doi: 10.1038/nature16961. [DOI] [PubMed] [Google Scholar]

- 14.Robbins H. Some aspects of the sequential design of experiments. B. Am. Math. Soc. 1952;58:527–535. doi: 10.1090/S0002-9904-1952-09620-8. [DOI] [Google Scholar]

- 15.Lai TL, Robbins H. Asymptotically efficient adaptive allocation rules. Adv. Appl. Math. 1985;6:4–22. doi: 10.1016/0196-8858(85)90002-8. [DOI] [Google Scholar]

- 16.Daw N, O’Doherty J, Dayan P, Seymour B, Dolan R. Cortical substrates for exploratory decisions in humans. Nature. 2006;441:876–879. doi: 10.1038/nature04766. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Auer P, Cesa-Bianchi N, Fischer P. Finite-time analysis of the multi-armed bandit problem. Machine Learning. 2002;47:235–256. doi: 10.1023/A:1013689704352. [DOI] [Google Scholar]

- 18.Murphy TE, Roy R. The world’s fastest dice. Nat. Photon. 2008;2:714–715. doi: 10.1038/nphoton.2008.239. [DOI] [Google Scholar]

- 19.Saade, A., et al. Random projections through multiple optical scattering: Approximating Kernels at the speed of light. In IEEE International Conference on Acoustics, Speech and Signal Processing, March 20–25, 2016, Shanghai, China 6215–6219 (IEEE, 2016).

- 20.Kim S-J, Naruse M, Aono M, Ohtsu M, Hara M. Decision Maker Based on Nanoscale Photo-Excitation Transfer. Sci Rep. 2013;3:2370. doi: 10.1038/srep02370. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Nakagaki T, Yamada H, Tóth Á. Intelligence: Maze-solving by an amoeboid organism. Nature. 2000;407:470–470. doi: 10.1038/35035159. [DOI] [PubMed] [Google Scholar]

- 22.Kim S-J, Aono M, Hara M. Tug-of-war model for the two-bandit problem: Nonlocally correlated parallel exploration via resource conservation. BioSystems. 2010;101:29–36. doi: 10.1016/j.biosystems.2010.04.002. [DOI] [PubMed] [Google Scholar]

- 23.Kim S-J, Aono M, Nameda E. Efficient decision-making by volume-conserving physical object. New J. Phys. 2015;17:083023. doi: 10.1088/1367-2630/17/8/083023. [DOI] [Google Scholar]

- 24.Naruse M, et al. Decision making based on optical excitation transfer via near-field interactions between quantum dots. J. Appl. Phys. 2014;116:154303. doi: 10.1063/1.4898570. [DOI] [Google Scholar]

- 25.Naruse M, et al. Single-photon decision maker. Sci. Rep. 2015;5:13253. doi: 10.1038/srep13253. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Pohl DW, Courjon D. Near Field Optics. The Netherlands: Kluwer; 1993. [Google Scholar]

- 27.Naruse M, Tate N, Aono M, Ohtsu M. Information physics fundamentals of nanophotonics. Rep. Prog. Phys. 2013;76:056401. doi: 10.1088/0034-4885/76/5/056401. [DOI] [PubMed] [Google Scholar]

- 28.Eisaman MD, Fan J, Migdall A, Polyakov SV. Single-photon sources and detectors. Rev. Sci. Instrum. 2011;82:071101. doi: 10.1063/1.3610677. [DOI] [PubMed] [Google Scholar]

- 29.Kato, H., Kim, S.-J., Kuroda, K., Naruse, M. & Hasegawa, M. The Design and Implementation of a Throughput Improvement Scheme based on TOW Algorithm for Wireless LAN. In Proc. 4th Korea-Japan Joint Workshop on Complex Communication Sciences, January 12–13, Nagano, Japan J13 (IEICE, 2016).

- 30.Naruse M, Tate N, Ohtsu M. Optical security based on near-field processes at the nanoscale. J. Optics. 2012;14:094002. doi: 10.1088/2040-8978/14/9/094002. [DOI] [Google Scholar]

- 31.Sakuraba R, Iwakawa K, Kanno K, Uchida A. Tb/s physical random bit generation with bandwidth-enhanced chaos in three-cascaded semiconductor lasers. Opt. Express. 2015;23:1470–1490. doi: 10.1364/OE.23.001470. [DOI] [PubMed] [Google Scholar]

- 32.Soriano MC, García-Ojalvo J, Mirasso CR, Fischer I. Complex photonics: Dynamics and applications of delay-coupled semiconductors lasers. Rev. Mod. Phys. 2013;85:421–470. doi: 10.1103/RevModPhys.85.421. [DOI] [Google Scholar]

- 33.Ohtsubo, J. Semiconductor lasers: stability, instability and chaos (Springer, Berlin, 2012).

- 34.Uchida, A. Optical communication with chaotic lasers: applications of nonlinear dynamics and synchronization (Wiley-VCH, Weinheim, 2012).

- 35.Bilal K, Malik SUR, Khan SU, Zomaya AY. Trends and challenges in cloud datacenters. IEEE Cloud Computing. 2014;1:10–20. doi: 10.1109/MCC.2014.26. [DOI] [Google Scholar]

- 36.Brogaard J, Hendershott T, Riordan R. High-frequency trading and price discovery. Rev. Financ. Stud. 2014;27:2267–2306. doi: 10.1093/rfs/hhu032. [DOI] [Google Scholar]

- 37.Colet P, Roy R. Digital communication with synchronized chaotic lasers. Opt. Lett. 1994;19:2056–2058. doi: 10.1364/OL.19.002056. [DOI] [PubMed] [Google Scholar]

- 38.Argyris A, et al. Chaos-based communications at high bit rates using commercial fibre-optic links. Nature. 2005;438:343–346. doi: 10.1038/nature04275. [DOI] [PubMed] [Google Scholar]

- 39.Annovazzi-Lodi V, Donati S, Scire A. Synchronization of chaotic injected-laser systems and its application to optical cryptography. IEEE J Quantum Electron. 1996;32:953–959. doi: 10.1109/3.502371. [DOI] [Google Scholar]

- 40.Uchida A, et al. Fast physical random bit generation with chaotic semiconductor lasers. Nat. Photon. 2008;2:728–732. doi: 10.1038/nphoton.2008.227. [DOI] [Google Scholar]

- 41.Kanter I, Aviad Y, Reidler I, Cohen E, Rosenbluh M. An optical ultrafast random bit generator. Nat. Photon. 2010;4:58–61. doi: 10.1038/nphoton.2009.235. [DOI] [Google Scholar]

- 42.Lin F-Y, Liu J-M. Chaotic lidar. IEEE J. Sel. Top. Quantum Electron. 2004;10:991–997. doi: 10.1109/JSTQE.2004.835296. [DOI] [Google Scholar]

- 43.Fox RF, Gatland IR, Roy R, Vemuri G. Fast, accurate algorithm for numerical simulation of exponentially correlated colored noise. Phys. Rev. A. 1988;38:5938–5940. doi: 10.1103/PhysRevA.38.5938. [DOI] [PubMed] [Google Scholar]

- 44.Toomey JP, Kane DM. Mapping the dynamic complexity of a semiconductor laser with optical feedback using permutation entropy. Opt. Express. 2014;22:1713–1725. doi: 10.1364/OE.22.001713. [DOI] [PubMed] [Google Scholar]

- 45.Kim S-J, Naruse M, Aono M, Hori H, Akimoto T. Random walk with chaotically driven bias. Sci. Rep. 2016;6:38634. doi: 10.1038/srep38634. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Lam WS, Ray W, Guzdar PN, Roy R. Measurement of Hurst exponents for semiconductor laser phase dynamics. Phys. Rev. Lett. 2005;94:010602. doi: 10.1103/PhysRevLett.94.010602. [DOI] [PubMed] [Google Scholar]

- 47.High-Frequency Trading Is Nearing the Ultimate Speed Limit, MIT Technology Review. https://www.technologyreview.com/s/602135/high-frequency-trading-is-nearing-the-ultimate-speed-limit/ (Last access: 05/07/2017).

- 48.High Frequency Trading Turns to High Frequency Technology to Reduce Latency. http://www.rec-usa.com/press/High%20Frequency%20Trading%20Turns%20to%20High%20Frequency.pdf (Last access: 05/07/2017).

- 49.Ugajin K, et al. Real-time fast physical random number generator with a photonic integrated circuit. Opt. Express. 2017;25:6511–6523. doi: 10.1364/OE.25.006511. [DOI] [PubMed] [Google Scholar]

- 50.Aida T, Davis P. Oscillation mode selection using bifurcation of chaotic mode transitions in a nonlinear ring resonator. IEEE J. Quantum Electron. 1994;30:2986–2997. doi: 10.1109/3.362706. [DOI] [Google Scholar]

- 51.Nixon M, et al. Controlling synchronization in large laser networks. Phys. Rev. Lett. 2012;108:214101. doi: 10.1103/PhysRevLett.108.214101. [DOI] [PubMed] [Google Scholar]

- 52.Williams CRS, et al. Experimental observations of group synchrony in a system of chaotic optoelectronic oscillators. Phys. Rev. Lett. 2013;110:064104. doi: 10.1103/PhysRevLett.110.064104. [DOI] [PubMed] [Google Scholar]

- 53.Naruse M, et al. Single Photon in Hierarchical Architecture for Physical Decision Making: Photon Intelligence. ACS Photonics. 2016;3:2505–2514. doi: 10.1021/acsphotonics.6b00742. [DOI] [Google Scholar]

- 54.Kim S-J, Naruse M, Aono M. Harnessing the Computational Power of Fluids for Optimization of Collective Decision Making. Philosophies Special Issue ‘Natural Computation: Attempts in Reconciliation of Dialectic Oppositions’. 2016;1:245–260. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

The data sets generated during the current study are available from the corresponding author on reasonable request.