Abstract

Objective

Direct electrophysiological recordings in epilepsy patients offer an opportunity to study human auditory cortical processing with unprecedented spatiotemporal resolution. This review highlights recent intracranial studies of human auditory cortex and focuses on its basic response properties as well as modulation of cortical activity during the performance of active behavioral tasks.

Data Sources: Literature review.

Review Methods: A review of the literature was conducted to summarize the functional organization of human auditory and auditory‐related cortex as revealed using intracranial recordings.

Results

The tonotopically organized core auditory cortex within the posteromedial portion of Heschl's gyrus represents spectrotemporal features of sounds with high temporal precision and short response latencies. At this level of processing, high gamma (70–150 Hz) activity is minimally modulated by task demands. Non‐core cortex on the lateral surface of the superior temporal gyrus also maintains representation of stimulus acoustic features and, for speech, subserves transformation of acoustic inputs into phonemic representations. High gamma responses in this region are modulated by task requirements. Prefrontal cortex exhibits complex response patterns related to stimulus intelligibility and task relevance. At this level of auditory processing, activity is strongly modulated by task requirements and reflects behavioral performance.

Conclusions

Direct recordings from the human brain reveal hierarchical organization of sound processing within auditory and auditory‐related cortex.

Level of Evidence

Level V

Keywords: Electrocorticography, Heschl's gyrus, high gamma, superior temporal gyrus

INTRODUCTION

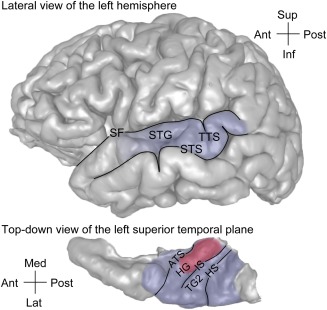

Auditory cortex in humans occupies the dorsal and lateral surfaces of the superior temporal gyrus (STG) (Fig. 1). The dorsal surface, termed the superior temporal plane, is buried deep within the Sylvian fissure. Heschl's gyrus (HG) is a major anatomical landmark within the superior temporal plane, oriented obliquely in a posteromedial‐to‐anterolateral direction. HG is bounded anteromedially by the anterior transverse sulcus extending from the circular sulcus, and posterolaterally by Heschl's sulcus, which extends onto the lateral surface of the STG as the transverse temporal sulcus (TTS).

Figure 1.

Approximate location of the auditory cortex on the lateral surface and the superior temporal plane in the human brain (top and bottom panels, respectively). The core region is shaded in red; non‐core areas (putative belt and parabelt areas) are shaded in blue. ATS = anterior transverse sulcus; HG = Heschl's gyrus; HS = Heschl's sulcus; IS = intermediate sulcus; SF = Sylvian fissure; STG = superior temporal gyrus; STS = superior temporal sulcus; TG2 = second transverse gyrus; TTS = transverse temporal sulcus. Modified from Nourski & Brugge.39

On a gross anatomical level, the superior temporal plane exhibits considerable complexity. It is one of the most highly folded regions in the human brain.1 There is a great deal of anatomical variability across individuals and between hemispheres in the same individual.2, 3, 4, 5, 6, 7 While a single HG per hemisphere is observed most frequently, there may be a partially duplicated HG, two completely duplicated gyri, or even three gyri within any single superior temporal plane.3, 4, 5, 6, 8 Further, gross anatomical landmarks are not entirely predictive of underlying cytoarchitecture and functional organization.2, 4, 9, 10 All these factors make human auditory cortex challenging to study.

Experimental animal models have proven extremely valuable in delineating basic organizational principles of auditory cortex (reviewed by Hackett11). In non‐human primates, including marmosets and macaques, auditory cortex has been hierarchically delineated into core (primary and primary‐like), belt, and parabelt fields. It is currently not known exactly how this core–belt–parabelt model is reflected in the organization of human auditory cortex.12 Multiple studies have localized human core auditory cortex to the posteromedial portion (approximately two thirds) of HG.1, 9, 10, 13, 14 In cases of HG duplication, core auditory cortex is identified in the most anterior gyrus within the superior temporal plane according to cytoarchitectonic criteria.2, 3, 9, 10 However, functional identification with neuroimaging methods shows that core auditory cortex can span both divisions of HG.6 While these studies provide a definition of core auditory cortex in the human, the specific organization of non‐core auditory fields, including homologs of auditory belt and parabelt areas, is at present unclear.

Non‐invasive neuroimaging methods (electro‐ and magnetoencephalography, functional magnetic resonance imaging) have contributed greatly to the current understanding of human auditory cortex function. However, the ability of these methods to elucidate the detailed organization of auditory cortex is limited by their spatiotemporal resolution. Moreover, sources of activity inside the brain cannot be unambiguously localized from surface recordings with currently available neuroelectric and neuromagnetic methods. Recording electrophysiological activity directly from the human brain, including auditory and auditory‐related cortex, is possible in neurosurgical patients. Direct intracranial recordings (electrocorticography, ECoG) are made using electrodes implanted in patients’ brains for clinical reasons, usually to localize a potentially resectable seizure focus in medically intractable epilepsy. This provides a unique research opportunity to study the brain with high resolution in time (milliseconds) and space (millimeters).15, 16, 17

METHODS

ECoG allows for simultaneous recording from multiple regions of human auditory cortex. Implanted multicontact electrodes come in a variety of form factors and include penetrating depth electrodes and surface arrays. Penetrating depth electrodes can target HG.18, 19 This provides clinically important coverage of the superior temporal plane and allows for investigation of core auditory cortex and surrounding non‐core areas. Surface arrays that are placed subdurally provide coverage of auditory cortex on the lateral surface of the STG.20, 21

Anatomical reconstruction of electrode locations following implantation is critically important for interpretation of recorded electrophysiological data. Accurate anatomical reconstruction is carried out by co‐registration of pre‐ and post‐implantation structural imaging data using local anatomical landmarks and is aided by intraoperative photography.17, 19 Data from multiple subjects can be pooled by projecting the electrode locations into a common brain coordinate space (e.g., Montreal Neurological Institute MNI305) and mapping the locations onto a standard brain. This process is complicated by the relatively small number of subjects in any given study, the limited coverage of auditory cortex provided by electrode arrays, and the aforementioned structural complexity and inter‐individual variability. To address these issues, statistical techniques such as linear mixed effects models have been developed to account for subject differences and anatomical variables.22
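In its simplest (linear) form, projecting electrode coordinates into a common space such as MNI305 amounts to multiplying each contact's homogeneous coordinates by a 4×4 affine matrix estimated during registration. The sketch below assumes that matrix is already available. `apply_affine`, `project_electrodes`, the matrix values, and the contact coordinates are all hypothetical, and real pipelines may add nonlinear warping and surface-based projection on top of this step.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]

def apply_affine(affine: Sequence[Sequence[float]], xyz: Point) -> Point:
    """Map one point to template space with a 4x4 affine in homogeneous coordinates."""
    homog = (xyz[0], xyz[1], xyz[2], 1.0)
    x, y, z = (sum(affine[row][col] * homog[col] for col in range(4)) for row in range(3))
    return (x, y, z)

def project_electrodes(affine: Sequence[Sequence[float]], contacts: List[Point]) -> List[Point]:
    """Project every electrode contact from native (subject) space into template space."""
    return [apply_affine(affine, p) for p in contacts]

# Hypothetical subject-to-template affine: 10% isotropic scaling plus a translation (mm).
SUBJECT_TO_TEMPLATE = [
    [1.1, 0.0, 0.0, -2.0],
    [0.0, 1.1, 0.0, 3.0],
    [0.0, 0.0, 1.1, 1.0],
    [0.0, 0.0, 0.0, 1.0],
]

# Made-up native-space coordinates (mm) of two contacts.
contacts_native = [(50.0, -20.0, 10.0), (60.0, -25.0, 5.0)]
for native, template in zip(contacts_native, project_electrodes(SUBJECT_TO_TEMPLATE, contacts_native)):
    print(native, "->", tuple(round(c, 2) for c in template))
```

Keeping the transform as an explicit matrix makes it easy to apply the same registration result to every contact from one subject before pooling across subjects.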

Cortical activity spans a broad range of frequencies. This includes relatively low‐frequency activity evoked by the stimulus that is phase‐locked to its temporal features. Such phase‐locked activity can be visualized and measured as the averaged evoked potential (AEP). At the other end of the spectrum, higher‐frequency activity, including that in the high gamma frequency range (>70 Hz), has been shown to be crucial for auditory cortical processing.21, 23, 24, 25 Studies in non‐human primates26, 27 and humans28 have established the high gamma band as a surrogate for unit activity. In contrast to low‐frequency cortical activity, activity in the gamma (30–70 Hz) and high gamma bands that is modulated (“induced”) by the stimulus is often not phase‐locked to it.21, 23, 29, 30
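The distinction between phase-locked and induced activity can be made concrete with a common decomposition. This is an illustrative choice, not necessarily the pipeline of the cited studies: evoked power is the power of the across-trial average, total power is the mean of single-trial powers, and induced power is their difference. The sketch computes these quantities at one frequency from a single discrete Fourier coefficient. The synthetic 80 Hz trials and all function names are hypothetical.

```python
import cmath
import math
import random
from typing import List, Sequence, Tuple

def fourier_coef(x: Sequence[float], freq_hz: float, fs: float) -> complex:
    """Length-normalized discrete Fourier coefficient of x at a single frequency."""
    return sum(v * cmath.exp(-2j * math.pi * freq_hz * k / fs) for k, v in enumerate(x)) / len(x)

def evoked_and_induced(trials: List[List[float]], freq_hz: float, fs: float) -> Tuple[float, float]:
    """Evoked = power of the trial average; induced = mean single-trial power minus evoked."""
    n_trials, n = len(trials), len(trials[0])
    average = [sum(trial[k] for trial in trials) / n_trials for k in range(n)]
    evoked = abs(fourier_coef(average, freq_hz, fs)) ** 2
    total = sum(abs(fourier_coef(trial, freq_hz, fs)) ** 2 for trial in trials) / n_trials
    return evoked, total - evoked

def simulate_trials(phase_locked: bool, n_trials: int = 50, fs: float = 1000.0,
                    freq_hz: float = 80.0, n_samples: int = 500, seed: int = 0) -> List[List[float]]:
    """Synthetic 80 Hz bursts whose phase is either fixed or random across trials."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        phase = 0.0 if phase_locked else rng.uniform(0.0, 2.0 * math.pi)
        trials.append([math.sin(2.0 * math.pi * freq_hz * k / fs + phase) for k in range(n_samples)])
    return trials

for locked in (True, False):
    ev, ind = evoked_and_induced(simulate_trials(locked), 80.0, 1000.0)
    print(f"phase-locked={locked}: evoked={ev:.3f}, induced={ind:.3f}")
```

With a fixed phase, averaging preserves the oscillation and induced power is essentially zero. With a random phase, averaging cancels the oscillation, so nearly all of the power is classified as induced. For the same reason, induced high gamma activity is largely invisible in the AEP.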

It has been shown that the absolute magnitude of cortical activity decreases with frequency, following a power law, regardless of whether a stimulus is presented or not.31, 32 Because stimulus‐related changes in high‐frequency bands are therefore small in absolute terms, their measurement requires specialized analysis techniques. These typically include filtering and rectification of the recorded ECoG signal to extract analytic amplitude or power within the specified ECoG frequency band, followed by normalization to a pre‐stimulus baseline.17, 21 The resultant event‐related band power (ERBP) reflects changes in ECoG power within a particular frequency band (such as high gamma) that occur upon stimulus presentation relative to power in a pre‐stimulus reference interval.
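The filter–rectify–normalize sequence can be sketched in a few lines. This version, an assumption for illustration, band-limits the signal and extracts its analytic amplitude in a single frequency-domain step. It zeroes the bins outside the band and at negative frequencies, then inverse-transforms. The amplitude is squared to give power, which is expressed in decibels relative to the mean power of a pre-stimulus baseline. The sketch uses a naive O(N²) DFT to stay dependency-free; practical analyses use FFT libraries and properly designed filters. The band edges, baseline length, and dB convention are hypothetical choices.

```python
import cmath
import math
from typing import List, Sequence

def dft(x: Sequence[complex]) -> List[complex]:
    """Naive O(N^2) discrete Fourier transform (fine for short illustrative signals)."""
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * math.pi * m * k / n) for k in range(n)) for m in range(n)]

def idft(spectrum: Sequence[complex]) -> List[complex]:
    """Inverse of dft()."""
    n = len(spectrum)
    return [sum(spectrum[m] * cmath.exp(2j * math.pi * m * k / n) for m in range(n)) / n
            for k in range(n)]

def band_amplitude(x: Sequence[float], fs: float, lo_hz: float, hi_hz: float) -> List[float]:
    """Analytic amplitude in [lo_hz, hi_hz]: ideal band-pass and Hilbert transform in one step."""
    n = len(x)
    spectrum = dft(x)
    analytic = [0j] * n
    for m in range(1, (n + 1) // 2):          # positive-frequency bins only
        if lo_hz <= m * fs / n <= hi_hz:
            analytic[m] = 2.0 * spectrum[m]   # doubling preserves the amplitude of real sinusoids
    return [abs(v) for v in idft(analytic)]   # "rectification": magnitude of the analytic signal

def erbp_db(x: Sequence[float], fs: float, lo_hz: float, hi_hz: float,
            n_baseline: int) -> List[float]:
    """Band power in dB relative to the mean power of the first n_baseline samples."""
    power = [a * a for a in band_amplitude(x, fs, lo_hz, hi_hz)]
    baseline = sum(power[:n_baseline]) / n_baseline
    return [10.0 * math.log10(max(p, 1e-20) / baseline) for p in power]

# Synthetic trace: a 100 Hz oscillation whose amplitude triples after "stimulus onset".
FS, N = 500.0, 250
signal = [(1.0 if k < N // 2 else 3.0) * math.sin(2 * math.pi * 100.0 * k / FS) for k in range(N)]
erbp = erbp_db(signal, FS, 70.0, 150.0, n_baseline=N // 2)
print(f"mean high gamma ERBP after onset: {sum(erbp[155:220]) / 65:.1f} dB")
```

Tripling the amplitude multiplies power by nine, so post-onset samples away from the transition sit roughly 9 dB above baseline. The ideal (brick-wall) filter causes some ringing near the amplitude step, which is one reason practical pipelines prefer smoother filters.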

Understanding basic electrophysiological properties of human auditory cortex is achieved through a systematic study of responses to simple acoustic stimuli (e.g., pure tones and click trains) and complex sounds including speech. Characterization of onset response latencies makes it possible to test hypotheses about the flow of information across core and non‐core regions within auditory cortex. Differential contributions of these regions to higher auditory functions (“making sense of sound”33) are studied using active listening experimental paradigms. The present review highlights recent intracranial studies of basic response properties of human auditory cortex as well as modulation of auditory cortical activity during the performance of active behavioral tasks.

Basic response properties of human auditory cortex

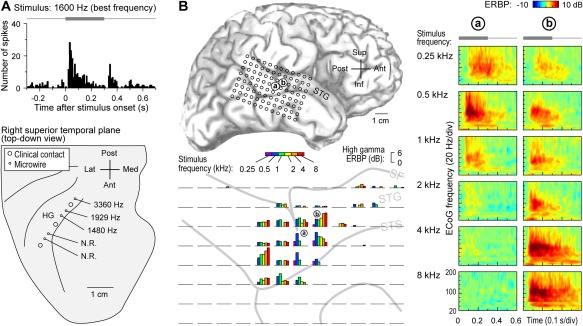

Tonotopy, the orderly spatial arrangement of neurons tuned to different sound frequencies, is one of the fundamental organizational principles of the auditory system34. Hybrid depth electrodes implanted into HG allow for recording of local field potentials that reflect activity of local neural populations, as well as single‐unit activity35 (Fig. 2A, top panel). Using this approach, a high‐to‐low frequency tuning gradient has been described at the single‐cell level within posteromedial HG36 (Fig. 2A, bottom panel). Further, the tuning of these HG neurons is exquisitely fine (narrow), so that they distinguish sound frequencies differing by just ∼1/6‐1/18 octave37. This fine, orderly tuning for sound frequency is consistent with the interpretation of posteromedial HG as core auditory cortex.

Figure 2.

Core and non‐core auditory cortical regions exhibit spectral organization. A: Single unit best frequency data recorded from Heschl's gyrus (HG). Top: peristimulus time histogram for an exemplary unit depicting responses to a best‐frequency pure tone stimulus. Stimulus is schematically shown in gray. Bottom: Tracing of superior temporal plane showing the locations of clinical and microwire hybrid depth electrode contacts implanted in HG (large and small circles, respectively). Mean best frequencies for units recorded at three sites within core auditory cortex are indicated (N.R. = no response to pure tone stimuli). Modified from Howard et al.36 B: High gamma responses to pure tones recorded from superior temporal gyrus (STG). Top left: location of the 96‐contact subdural electrode grid implanted over perisylvian cortex, including STG (a and b denote the locations of two sites whose responses are detailed in the right panel). Bottom left: high gamma event‐related band power (ERBP) in response to tone stimuli presented at different frequencies (color bars), simultaneously recorded from the 96‐contact grid (individual plots). High gamma ERBP was averaged within the time window of 100–150 ms after stimulus onset. Right: time‐frequency analysis of cortical activity elicited by pure‐tone stimuli between 0.25 and 8 kHz (top to bottom), recorded from two sites on the STG (left and right columns). Stimulus is schematically shown in gray above time‐frequency plots. Modified from Nourski et al.38

Pure tones also elicit robust frequency‐selective high gamma responses on lateral STG (non‐core auditory cortical areas)38. In contrast to posteromedial HG, lateral STG does not exhibit a clear topographic selectivity gradient for sound frequency. For example, in Figure 2B, site ‘a’, located on the lateral surface of the STG immediately adjacent to the TTS, exhibits the strongest high gamma responses to the 500 Hz pure tones. An adjacent site ‘b’ responds best to 4‐8 kHz stimuli. In this case, sites that respond best to high frequencies surround a low‐frequency responsive region in a “mirror‐image” pattern (see Fig. 2B, bottom left panel). While such spectral organization is seen in some subjects, lateral STG is more often characterized by complex, clustered response patterns, wherein low‐ and high‐frequency tones maximally activate different sites. Classification analysis, however, consistently reveals high accuracy for pure tone discrimination based on patterns of high gamma activity recorded from this region38. These findings indicate that high gamma activity on the lateral STG contains sufficient information to differentiate pure tone stimuli, and that this region, though often considered tertiary (parabelt) auditory cortex, possibly includes a relatively early non‐core area (e.g., lateral belt) that maintains a topographic representation of sound frequency.
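The classification result can be illustrated with a deliberately simple decoder. The sketch below applies a nearest-centroid classifier with leave-one-out cross-validation to synthetic single-trial high gamma patterns. The classifier, the four hypothetical sites, and their response patterns are assumptions, not the method or data of the cited study. Accuracy well above chance (1/3 here) indicates that the spatial pattern carries tone-frequency information, even without a smooth tonotopic gradient.

```python
import math
import random
from typing import Dict, List, Sequence, Tuple

Trial = Tuple[str, List[float]]  # (tone label, high gamma ERBP at each recording site)

def centroid(vectors: Sequence[Sequence[float]]) -> List[float]:
    """Element-wise mean of equal-length feature vectors."""
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(len(vectors[0]))]

def nearest_centroid_loo(trials: List[Trial]) -> float:
    """Leave-one-out accuracy: each trial is classified by centroids built from all other trials."""
    correct = 0
    for i, (label, features) in enumerate(trials):
        by_label: Dict[str, List[List[float]]] = {}
        for j, (other_label, other_features) in enumerate(trials):
            if j != i:
                by_label.setdefault(other_label, []).append(other_features)
        centroids = {lab: centroid(vs) for lab, vs in by_label.items()}
        predicted = min(centroids, key=lambda lab: math.dist(features, centroids[lab]))
        correct += predicted == label
    return correct / len(trials)

# Hypothetical mean high gamma (dB) at four STG sites for three tone frequencies.
PATTERNS = {
    "0.5 kHz": [3.0, 0.5, 0.0, 1.0],
    "2 kHz": [0.5, 3.0, 1.0, 0.0],
    "8 kHz": [0.0, 1.0, 3.0, 0.5],
}
rng = random.Random(1)
trials = [(tone, [m + rng.gauss(0.0, 0.7) for m in means])
          for tone, means in PATTERNS.items() for _ in range(20)]
accuracy = nearest_centroid_loo(trials)
print(f"leave-one-out accuracy {accuracy:.2f}, chance {1 / len(PATTERNS):.2f}")
```

Because each held-out trial is excluded from the centroids it is compared against, the accuracy estimate is not inflated by testing on training data.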

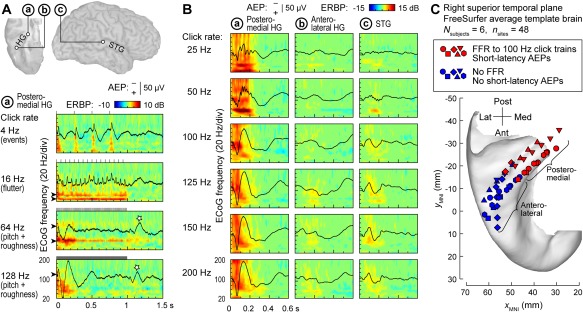

Cortical processing of temporal sound features plays a major role in perception of environmental sounds, including speech. Repeated or periodic non‐speech stimuli, such as bursts of sinusoidal amplitude‐modulated noise or sequences (trains) of clicks, offer convenient tools to study the representation of temporal sound features in the human auditory cortex39. Depending on click repetition rate within a sequence, click trains elicit distinct percepts, from “events” (at rates below 8‐10 Hz), to “flutter” (between ∼10‐40 Hz), to “pitch” (above ∼30‐40 Hz)40, 41. Additionally, repetitive stimuli presented at rates between ∼20‐300 Hz have a buzzing perceptual quality, which is referred to as “roughness”42, 43. These distinct perceptual classes are reflected in patterns of activity within core auditory cortex, as illustrated in Figure 3A. At low presentation rates, each click elicits a distinct multi‐phasic evoked potential and a burst of high gamma activity, essentially representing the clicks as separate events. As the rate increases, and the train is perceived as having a fluctuation‐like or “flutter” quality, the evoked potentials overlap, giving rise to increases in phase‐locked power at the frequency corresponding to the repetition rate: the frequency‐following response (FFR). At even higher rates, when the percept of pitch emerges, an induced (non‐phase‐locked) response in gamma and high gamma bands becomes evident in addition to the sustained FFR. This emergence of induced high gamma activity in response to temporally regular stimuli has been considered a physiological correlate of pitch44. On the other hand, the presence of the FFR in core auditory cortical activity and its dissipation at higher repetition rates parallel the perceptual boundaries of roughness24, 39 (see also Fishman et al.45).

Figure 3.

Auditory cortical regions differ in their capacity to track temporal modulations. A: Responses to 1 s click trains from core auditory cortex. Top: location of three exemplary recording sites: a core cortex site in medial Heschl's gyrus (HG), a site in a non‐core field on lateral HG and a superior temporal gyrus (STG) site (marked by a, b, and c, respectively). Bottom: averaged evoked potentials (AEPs) and event‐related band power (ERBP) obtained from site a in response to click trains presented at rates between 4 and 128 Hz (top to bottom rows). Stars indicate off‐response complexes. Arrowheads indicate driving frequencies and their harmonics at which increases in phase‐locked ERBP are seen. Stimulus schematics are shown above each ERBP plot. Modified from Brugge et al.24 B: AEPs and ERBP obtained from the three sites in response to 160 ms click trains presented at rates between 25 and 200 Hz (top to bottom rows). Modified from Nourski et al.49 C: Locations of physiologically defined posteromedial and anterolateral HG sites (red and blue symbols, respectively) in six subjects (different symbol shapes), plotted in Montreal Neurological Institute (MNI) coordinate space and projected onto the FreeSurfer average template brain. Recording sites were assigned to the posteromedial HG region of interest based on the presence of a frequency‐following response (FFR) to 100 Hz click trains and short‐latency (<20 ms) average evoked potential components. Modified from Nourski et al.50

The FFR, which represents neural phase locking to the temporal regularity of the stimulus, provides a useful physiological marker for cortical field delineation46, 47, 48. A comparison between response profiles of three auditory cortical sites is shown in Figure 3B. Intracranial electrophysiology studies using click train stimuli indicate that human auditory core cortex (site ‘a’ in Fig. 3B) can phase‐lock up to at least 200 Hz, non‐core cortex on STG (site ‘c’) has a more limited phase‐locking capacity, while anterolateral HG (site ‘b’) exhibits little‐to‐no phase locking24, 49. Pooling anatomical data across subjects (Fig. 3C) demonstrates that field delineation within HG based on physiological response properties in individual subjects translates into anatomically distinct regions on the population (across‐subject) level50. This supports the reliability of the physiology‐based operational definitions of posteromedial (core) and anterolateral (non‐core) HG cortex24, 47, 50. On the lateral STG, spatial distribution of phase‐locked responses to click trains is more variable, although a common organizational feature in individual subjects is that sites that feature FFRs typically cluster around the TTS49.
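The physiological site assignment described for Figure 3C can be expressed as a simple rule. In this sketch, phase locking at 100 Hz is quantified by inter-trial phase coherence (ITC), one common measure of an FFR, and combined with the <20 ms AEP latency criterion from the figure caption. The ITC threshold of 0.5, the synthetic data, and the function names are hypothetical. Published analyses would assess phase locking statistically rather than with a fixed cutoff.

```python
import cmath
import math
import random
from typing import List, Sequence

def phase_at(x: Sequence[float], freq_hz: float, fs: float) -> float:
    """Phase (radians) of the discrete Fourier coefficient of x at freq_hz."""
    coef = sum(v * cmath.exp(-2j * math.pi * freq_hz * k / fs) for k, v in enumerate(x))
    return cmath.phase(coef)

def inter_trial_coherence(trials: List[List[float]], freq_hz: float, fs: float) -> float:
    """Length of the mean unit phasor across trials: 1 = identical phase, ~0 = random phase."""
    phasors = [cmath.exp(1j * phase_at(trial, freq_hz, fs)) for trial in trials]
    return abs(sum(phasors) / len(phasors))

def looks_like_posteromedial_hg(trials: List[List[float]], fs: float, aep_latency_ms: float,
                                itc_threshold: float = 0.5) -> bool:
    """Hypothetical rule: FFR at 100 Hz (ITC above threshold) plus an AEP component < 20 ms."""
    return inter_trial_coherence(trials, 100.0, fs) > itc_threshold and aep_latency_ms < 20.0

def simulate_site(phase_locked: bool, n_trials: int = 40, fs: float = 2000.0,
                  n_samples: int = 400, seed: int = 2) -> List[List[float]]:
    """Noisy 100 Hz responses to a click train, either phase-locked or with random phase."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        phase = 0.0 if phase_locked else rng.uniform(0.0, 2.0 * math.pi)
        trials.append([math.sin(2.0 * math.pi * 100.0 * k / fs + phase) + rng.gauss(0.0, 1.0)
                       for k in range(n_samples)])
    return trials

FS = 2000.0
print(looks_like_posteromedial_hg(simulate_site(True), FS, aep_latency_ms=15.0))   # FFR, short latency
print(looks_like_posteromedial_hg(simulate_site(False), FS, aep_latency_ms=15.0))  # no FFR
```

ITC ignores response amplitude and measures only phase consistency across trials, which is the property that distinguishes an FFR from a comparably large but non-phase-locked response.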

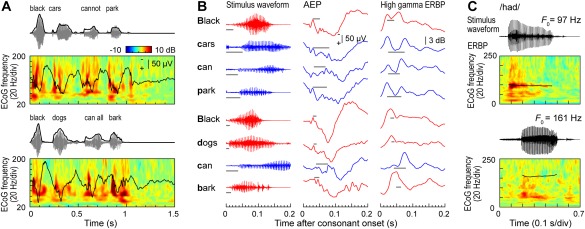

Simple non‐speech sounds such as pure tones or click trains also help to better understand patterns of neural activity elicited by speech. Auditory core cortex simultaneously represents temporal features of speech over multiple time scales, including its syllabic structure, voicing of stop consonants, and voice fundamental frequency (F0) (Fig. 4) (see also Arnal et al.51). Figure 4A shows examples of responses to two speech sentences recorded from an electrode implanted in posteromedial HG. The temporal speech envelope is dominated by its syllabic structure (∼200‐250 ms long segments that correspond to individual syllables). Auditory core cortex represents this relatively slowly time‐varying signal both in the temporal cadence of the evoked activity (black plots) and in the modulation of high gamma activity (color plots). The latter pattern, “tracking” of the speech envelope by high gamma activity, is preserved even when speech is time‐compressed (accelerated) up to five times its normal rate, rendering it unintelligible52.
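Envelope tracking is often summarized by correlating the stimulus envelope with the high gamma envelope over a range of response lags. The sketch below assumes that both signals are already sampled on a common grid of 10 ms bins. The 4 Hz "syllabic" envelope, the 80 ms delay, and the noise level are made up for illustration, and cross-correlation is only one of several ways to quantify tracking.

```python
import math
import random
from typing import Sequence, Tuple

def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

def best_lag(envelope: Sequence[float], response: Sequence[float], max_lag: int) -> Tuple[int, float]:
    """Lag (in samples) at which response[t + lag] correlates best with envelope[t]."""
    best = (0, -1.0)
    for lag in range(max_lag + 1):
        r = pearson(envelope[: len(envelope) - lag], response[lag:])
        if r > best[1]:
            best = (lag, r)
    return best

# Hypothetical 10 ms bins: a 4 Hz "syllabic" envelope and a noisy response delayed by 8 bins (80 ms).
rng = random.Random(5)
DELAY, N = 8, 300
source = [1.0 + math.sin(2.0 * math.pi * 4.0 * t / 100.0) for t in range(N + DELAY)]
envelope = source[DELAY:]                                  # stimulus envelope
response = [s + rng.gauss(0.0, 0.2) for s in source[:N]]   # response[t] = envelope[t - DELAY] + noise
lag, r = best_lag(envelope, response, max_lag=20)
print(f"peak correlation r = {r:.2f} at {lag * 10} ms")
```

The maximum lag is kept shorter than one envelope period so that the correlation peak is unambiguous. Real speech envelopes are less periodic, which makes the peak easier to identify.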

Figure 4.

Temporal sound features of speech are represented in core auditory cortex. A: Representation of speech temporal envelope. Stimulus waveforms (gray) and temporal envelopes (black) of the two speech sentences are shown above averaged evoked potential (AEP) waveforms and event‐related band power (ERBP) time‐frequency plots. B: Representation of stop consonant voicing. Left column: waveforms corresponding to the first 200 ms following each initial stop consonant (top to bottom) in the two sentences shown in (A). Middle and right columns: AEP and high gamma (70–150 Hz) ERBP responses, respectively, that correspond to each initial stop consonant. Red and blue plots correspond to voiced and unvoiced consonants, respectively. Gray horizontal lines represent the voice‐onset time. C: Phase locking to voice F0. Stimulus waveforms (black) of two tokens of the syllable /had/, spoken by a male and a female talker (upper and lower waveforms, respectively), are shown above ERBP time‐frequency plots with superimposed pitch contours (black curves). Modified from Steinschneider et al.54

Finer‐grain temporal features, such as voicing onset of stop consonants (tens of milliseconds for unvoiced stops), are also represented in both the AEP and high gamma activity at the level of core cortex53, 54. This is illustrated in Figure 4B, where the same two sentences (see Fig. 4A) are segmented into individual words. Here, words beginning with a voiced stop consonant (red waveforms) tend to elicit initial responses with a single peak, while words beginning with an unvoiced stop (blue waveforms) elicit double‐peaked responses. Finally, consistent with results of click train studies, auditory core cortex can exhibit FFRs to the voice F0. While FFRs are reliably observed in response to male talkers with relatively low F0, this is not typically the case for female talkers with higher F0s (Fig. 4C). Intracranial electrophysiology studies demonstrate that neural representations of basic spectrotemporal features of speech in auditory core cortex are remarkably similar in non‐human primates and humans53, 54 (see also Reser & Rosa57). This similarity is present despite the vastly different experience with language of humans and non‐human primates, indicating that activity within auditory core cortex is likely dominated by general auditory, rather than language‐specific, processing.

Non‐core auditory areas on the lateral STG exhibit a somewhat more limited capacity for isomorphic representation of speech temporal acoustic features. Speech envelope‐following responses have been described at this processing stage58, 59, 60, paralleling non‐invasive studies (reviewed by Peelle & Davis61). Voicing of stop consonants is reflected in the temporal patterns of the AEP recorded from the lateral STG62. There is, however, no evidence for reliable FFRs to the voice F0 at this level of cortical processing62, 63. Focal electrical stimulation of STG via subdural electrodes differentially affects the discrimination of vowels and consonants, disrupting the former, but not the latter64. This suggests that consonants and vowels at the level of STG are represented as distinct perceptual phenomena.

Stimulus sets that are sufficiently rich in their spectral content and temporal modulations (such as spoken words or sentences) can be used to define spectrotemporal receptive fields (STRFs) of individual cortical sites. STRF‐based analysis65, 66 can describe the tuning properties of individual cortical sites and characterize spectrotemporal organization of human auditory cortex, predict responses to novel stimuli and, conversely, reconstruct the presented stimuli from patterns of cortical activity28, 67, 68, 69. Speech‐derived STRFs have been used by Hullett et al.69 to characterize sensitivity of the STG to spectrotemporal modulations. A posterior‐to‐anterior gradient was identified along the length of the STG, wherein posterior STG was tuned for fast temporal and low spectral modulation, while anterior STG represented slow temporal and high spectral modulation. Examination of spectral organization using this approach yielded similar results to those obtained using pure‐tone stimuli38, wherein spectral tuning maps in some subjects were reminiscent of a high‐low‐high mirror‐image pattern, but more often lacked clear frequency gradients69.
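One common way to estimate an STRF is ridge-regularized linear regression of a site's response onto a time-lagged spectrogram. Reversing the roles of stimulus and response gives the kind of linear decoder used for stimulus reconstruction. The dependency-free sketch below is not necessarily the estimator used in the cited studies. It builds the lagged design matrix, solves the ridge normal equations by Gaussian elimination, and recovers a made-up three-band, three-lag STRF from synthetic data. The ridge value and all names are hypothetical.

```python
import random
from typing import List, Sequence

def lagged_design(spec: Sequence[Sequence[float]], n_lags: int) -> List[List[float]]:
    """Row t holds spec[t - lag][band] for each lag and band (zeros before the first frame)."""
    n_bands = len(spec[0])
    rows = []
    for t in range(len(spec)):
        row: List[float] = []
        for lag in range(n_lags):
            row.extend(spec[t - lag] if t - lag >= 0 else [0.0] * n_bands)
        rows.append(row)
    return rows

def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting (modifies a and b)."""
    n = len(b)
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
            b[r] -= factor * b[col]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (b[r] - sum(a[r][c] * x[c] for c in range(r + 1, n))) / a[r][r]
    return x

def fit_strf(spec, response, n_lags: int, ridge: float = 1.0) -> List[float]:
    """Ridge estimate w = (X'X + ridge*I)^-1 X'y; w[lag * n_bands + band] is one STRF weight."""
    x = lagged_design(spec, n_lags)
    p = len(x[0])
    xtx = [[sum(row[i] * row[j] for row in x) + (ridge if i == j else 0.0) for j in range(p)]
           for i in range(p)]
    xty = [sum(row[i] * y for row, y in zip(x, response)) for i in range(p)]
    return solve(xtx, xty)

# Synthetic check: 3 bands x 3 lags, with a made-up "true" STRF ordered lag 0, lag 1, lag 2.
rng = random.Random(6)
TRUE_W = [0.0, 1.0, 0.0, 0.5, 0.0, -0.5, 0.0, 0.2, 0.0]
spec = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(400)]
design = lagged_design(spec, 3)
response = [sum(w * v for w, v in zip(TRUE_W, row)) + rng.gauss(0.0, 0.1) for row in design]
estimate = fit_strf(spec, response, n_lags=3)
print([round(w, 2) for w in estimate])
```

The ridge term matters little with this clean white-noise stimulus. With natural speech, whose spectrogram channels are strongly correlated, it becomes important for stabilizing the estimate.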

Using STRF‐based reconstruction models, Pasley et al.67 were able to decode speech spectrograms from patterns of cortical high gamma activity. Cortical sites that contributed the most to reconstruction were localized to the posterolateral portion of STG. Speech synthesis based on reconstruction of speech spectrograms from auditory cortical high gamma activity using linear models is feasible in real time70. Mesgarani et al.68 characterized distributed spatiotemporal patterns of high gamma activity on the STG during listening to speech. Speech‐responsive sites on the STG were found to be selective to specific phoneme groups. Phonemic feature selectivity at this level of cortical processing was proposed to result from neural tuning to signature spectrotemporal cues.

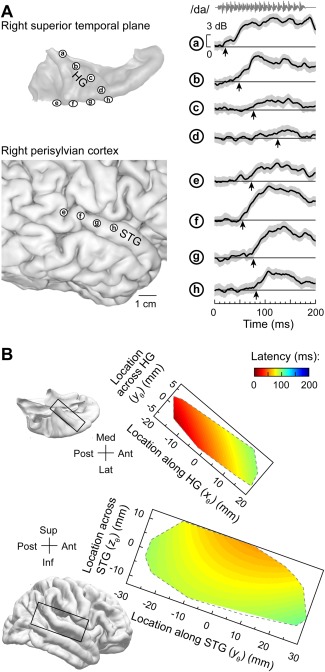

The latency of response onset is a basic electrophysiological property that permits inferences regarding the relationships among auditory cortical areas within the core‐belt‐parabelt processing hierarchy13, 71. Results obtained in non‐human primates predict that core areas would exhibit the shortest onset latencies, while non‐core areas would be characterized by progressively longer latencies72, 73, 74. Simultaneous recording from HG and lateral STG allows for systematic analysis of response latencies22 (Fig. 5). Onset latencies of high gamma responses to the speech syllable /da/ increase systematically along HG (sites ‘a’‐‘d’ in Fig. 5A) and are longest in its anterolateral portion. However, latencies on lateral STG (sites ‘e’‐‘h’) are shorter than those in anterolateral HG. Warping electrode locations from individual subjects into a common brain space reveals increases in latency along and across HG, a U‐shaped latency distribution along STG, and longer latencies in the anterolateral third of HG compared with the middle portion of the STG (Fig. 5B). The latter two findings provide further evidence for the hypothesis that a portion of STG represents a non‐core field that is relatively early in the processing hierarchy.
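Onset latency depends on the detection criterion used. The minimal sketch below assumes a hypothetical rule: onset is the first run of at least five consecutive samples that exceed the baseline mean plus three standard deviations. The cited studies may define onset differently, and the synthetic trace, sampling rate, and parameter defaults are assumptions.

```python
import random
import statistics
from typing import Optional, Sequence

def onset_latency_ms(erbp: Sequence[float], fs: float, onset_index: int,
                     k_sd: float = 3.0, min_run: int = 5) -> Optional[float]:
    """Latency (ms) of the first post-onset run of min_run samples above baseline mean + k_sd * SD."""
    baseline = erbp[:onset_index]
    threshold = statistics.fmean(baseline) + k_sd * statistics.stdev(baseline)
    run = 0
    for i in range(onset_index, len(erbp)):
        run = run + 1 if erbp[i] > threshold else 0
        if run == min_run:
            first_index = i - min_run + 1
            return (first_index - onset_index) * 1000.0 / fs
    return None  # no response detected

rng = random.Random(7)
FS = 1000.0
trace = [rng.gauss(0.0, 0.3) for _ in range(140)]          # 100 ms baseline + 40 ms before response
trace += [5.0 + rng.gauss(0.0, 0.3) for _ in range(160)]   # high gamma response begins at 40 ms
print(onset_latency_ms(trace, FS, onset_index=100))
```

Requiring a run of suprathreshold samples, rather than a single crossing, keeps isolated noise excursions from being reported as early onsets.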

Figure 5.

Onset response latencies on lateral superior temporal gyrus (STG) are shorter than in anterolateral Heschl's gyrus (HG). A: Data from a representative subject. Left: location of recording contacts in the superior temporal plane (top) and over perisylvian cortex (bottom). Right: High gamma event‐related band power (ERBP) recorded from eight representative sites (a through h) in response to speech syllable /da/. Thick lines and gray shading correspond to cross‐trial mean ERBP and its 95% confidence interval, respectively. Arrows indicate measured high gamma onset response latency. B: Model predictions of onset response latencies to the syllable /da/ in HG (top) and lateral STG (bottom). FreeSurfer template brain is shown on the left. Predictions are based on data from 11 subjects and are bounded by the convex envelopes of locations of responsive cortical sites. Modified from Nourski et al.22

Studies that simultaneously examined both relatively low (<20 Hz) and higher frequency auditory cortical activity revealed differences in the ways different ECoG bands contribute to representation of stimulus acoustic attributes and sound perception52, 75, 76, 77. Speech processing within the human auditory cortex is subserved by oscillatory activity on multiple time scales75, 76. The relative roles of slow and fast cortical oscillations have been shown to exhibit hemispheric asymmetry and hierarchical organization75. In that study, high gamma activity showed increasing left lateralization along the auditory cortical hierarchy (from core auditory cortex in posteromedial HG to non‐core cortex on the lateral STG). The transition from evoked (phase‐locked to the stimulus) to induced (non‐phase‐locked) activity along the auditory cortical hierarchy was also more prominent in the left hemisphere. Phase‐amplitude coupling at the level of STG, that is, amplitude modulation of faster (25‐45 Hz) oscillatory activity by the phase of slower (4‐8 Hz) activity, was interpreted as a transition from acoustic feature tracking to abstract phonological processing. The study of Morillon et al.75 supports a model wherein the ongoing speech signal is simultaneously parsed by left‐lateralized low gamma and right‐lateralized high theta oscillations, with left auditory cortex extracting faster phonemic features and right auditory cortex parsing speech information at a syllabic rate.
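Phase-amplitude coupling can be quantified in several ways. The sketch below uses a mean-vector-length index, here normalized by mean amplitude (an assumption; other normalizations and surrogate-based statistics are common). It is applied to a synthetic 6 Hz phase series and a 25-45 Hz amplitude envelope. In real data, phase and amplitude would first be extracted by band-pass filtering and a Hilbert transform. The signals and parameters here are hypothetical.

```python
import cmath
import math
import random
from typing import Sequence

def mean_vector_length(phase: Sequence[float], amplitude: Sequence[float]) -> float:
    """|mean(A * exp(i*phase))| / mean(A): about 0 without coupling, larger when A depends on phase."""
    z = sum(a * cmath.exp(1j * p) for p, a in zip(phase, amplitude)) / len(phase)
    return abs(z) / (sum(amplitude) / len(amplitude))

FS = 1000.0
n = 5000                                                         # 5 s of hypothetical data
theta_phase = [2.0 * math.pi * 6.0 * k / FS for k in range(n)]   # phase of a 6 Hz (4-8 Hz band) rhythm
coupled = [1.0 + 0.8 * math.cos(p) for p in theta_phase]         # low gamma amplitude follows theta phase
rng = random.Random(8)
uncoupled = [1.0 + 0.3 * abs(rng.gauss(0.0, 1.0)) for _ in range(n)]  # amplitude ignores theta phase
print(f"coupled: {mean_vector_length(theta_phase, coupled):.3f}")
print(f"uncoupled: {mean_vector_length(theta_phase, uncoupled):.3f}")
```

Because the coupled envelope is 1 + 0.8·cos(phase), the index equals half the modulation depth (0.4) over whole cycles. The uncoupled envelope gives a value near zero.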

Fontolan et al.76 analyzed cross‐frequency regional coupling and showed that reciprocal functional connectivity between core auditory cortex and STG is dominated by activity in distinct ECoG frequency bands, with top‐down and bottom‐up information transfer subserved by relatively low (<40 Hz) and high (>40 Hz) ECoG bands, respectively. Modulation of local gamma activity by the phase of distant delta (1‐3 Hz) activity in the top‐down direction exhibited left hemisphere dominance. Further, directional information transfer was not continuous, but alternated between bottom‐up and top‐down directions at a rate of 1‐3 Hz. Taken together, the studies of Morillon et al.75 and Fontolan et al.76 indicate that distinct ECoG frequency bands and temporal windows are used for directional information transfer in the human auditory cortex, and that this process is asymmetric between the two hemispheres.

Task‐related modulation of auditory cortical activity

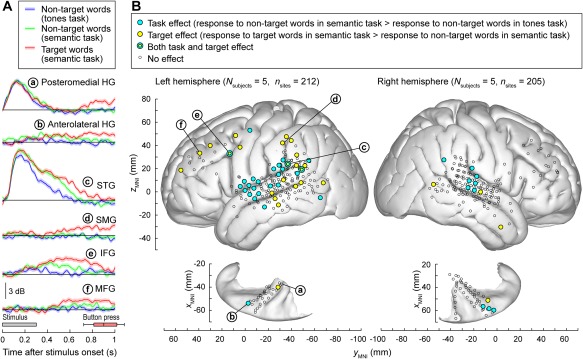

Using active listening paradigms such as auditory target detection, it is possible to characterize the extent to which auditory processing across different cortical areas is affected by task demands.78, 79, 80, 81, 82 In the study of Chang et al.,78 modest enhancement of high gamma activity was observed on the lateral surface of the superior temporal gyrus (STG) in response to target syllable stimuli in a phonemic categorization task. In the studies of Steinschneider et al.79 and Nourski et al.,80 subjects listened to words and tones presented in random order in a number of target detection tasks that required acoustic, phonemic, and semantic processing. The tasks included tone detection (“press the button whenever you hear a tone”) and semantic categorization (e.g., “press the button whenever you hear an animal word”). The experimental design allowed for comparisons of responses to the same stimuli (e.g., all instances of the words /cat/ and /dog/) presented in different experimental contexts (Fig. 6A). Early activity in posteromedial HG (site ‘a’) is minimally affected by the task, yet can feature target‐specific increases in late activity that follow the behavioral response (button press). Anterolateral HG (site ‘b’), in contrast, can exhibit target‐specific responses that precede the behavioral response. Activity on STG (site ‘c’) is strongly modulated by the task: responses to non‐target words are greater during semantic categorization than during tone detection and are further enhanced to target words. Auditory‐related cortex on the supramarginal gyrus (site ‘d’) and prefrontal cortex in the inferior and middle frontal gyri (IFG and MFG; sites ‘e’ and ‘f’, respectively) exhibit the most complex patterns and often respond selectively to target stimuli prior to the behavioral response.
When responses to target stimuli are divided into trials that yielded fast behavioral responses, slow responses, or misses, the magnitude of STG high gamma responses and the timing and magnitude of prefrontal high gamma activity reflect behavioral performance (i.e., reaction times).79, 80 These findings are corroborated by a related study that examined responses to pure tone stimuli in a target detection task.81

Figure 6.

Task demands have differential effects on auditory and auditory‐related cortical areas. A: Responses to speech stimuli /cat/ and /dog/ are shown for representative left hemisphere recording sites (a through f) in posteromedial and anterolateral Heschl's gyrus (HG), superior temporal gyrus (STG), supramarginal gyrus (SMG), and inferior and middle frontal gyri (IFG, MFG). Colors (blue, green, and red) represent different task conditions. Lines and shaded areas represent mean high gamma event‐related band power (ERBP) and its standard error, respectively. Gray box denotes stimulus duration (300 ms). Horizontal box plot denotes the timing of behavioral responses to the target stimuli (median, 10th, 25th, 75th, and 90th percentiles). Modified from Steinschneider et al.79 B: Spatial distribution of task and target effects (blue and yellow symbols, respectively). Data from 10 cerebral hemispheres in 9 subjects are plotted in Montreal Neurological Institute (MNI) coordinate space and projected onto FreeSurfer average template brain. Left and right panels depict 212 sites in the left hemisphere and 205 sites in the right hemisphere, respectively. Open symbols indicate sites that exhibited significant high gamma responses to the word stimuli, but did not exhibit either task or target effect. Letters denote projections of the recording sites presented in panel A onto the average template brain. Modified from Nourski et al.81

Analysis of data from multiple subjects demonstrates that the task effect (responses to non‐target words in a control tone detection task vs. semantic categorization tasks) is most commonly localized to the STG81 (Fig. 6B). The target effect (target vs. non‐target words in a semantic task), on the other hand, is more prominent in surrounding auditory‐related areas, including the middle temporal and supramarginal gyri, as well as IFG and MFG. Both task and target effects are more prominent in the left hemisphere than in the right. Taken together, these findings support a left‐lateralized hierarchical organization of speech processing at the cortical level, wherein acoustic, phonemic, and semantic processing are primarily subserved by core, non‐core, and auditory‐related cortex, respectively.
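The two contrasts defined above can be illustrated with a simple per‐site test. This sketch assumes that each trial is summarized by one number (e.g., mean high gamma ERBP in a post‐stimulus window) and uses an uncorrected two‐sided permutation test at alpha = 0.05. The function names are hypothetical, and the published analyses used their own statistics and corrections for multiple comparisons.

```python
import numpy as np


def permutation_p(a, b, n_perm=5000, seed=0):
    """Two-sided permutation test on the difference of means of a and b."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    observed = abs(a.mean() - b.mean())
    pooled = np.concatenate([a, b])
    rng = np.random.default_rng(seed)
    hits = 0
    for _ in range(n_perm):
        shuffled = rng.permutation(pooled)
        if abs(shuffled[:a.size].mean() - shuffled[a.size:].mean()) >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)  # add-one keeps p strictly positive


def classify_site(words_tone_task, nontarget_semantic, target_semantic, alpha=0.05):
    """Flag task and target effects at one recording site (hypothetical helper).

    Each argument holds one value per trial. All words are non-targets in the
    tone detection task, so the task effect compares them with non-target
    words in the semantic task; the target effect compares target with
    non-target words within the semantic task. No multiple-comparison
    correction is applied here.
    """
    return {
        "task_effect": permutation_p(nontarget_semantic, words_tone_task) < alpha,
        "target_effect": permutation_p(target_semantic, nontarget_semantic) < alpha,
    }
```

A site labeled with only `task_effect` would correspond to a blue symbol in Fig. 6B, and a site with only `target_effect` to a yellow one.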

Tracking of the speech temporal envelope by non‐core auditory cortex, introduced in the previous section, is also affected by task and attention. When the speech signal consists of two concurrent talkers, high gamma activity on the STG emphasizes the spectrotemporal features of the attended talker's speech.83, 84, 85 Activity on the STG thus does not merely reflect the acoustic properties of speech; it also relates strongly to perceived aspects of speech, including the listener's attentional focus. This suggests that non‐core auditory cortex plays a key role in complex listening situations, such as a “cocktail party” environment.
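One simple way to quantify which talker a recording site follows is to correlate the site's high gamma envelope with each talker's speech envelope over a range of neural lags, then pick the talker with the higher peak correlation. The sketch below illustrates this correlation‐based approach, assuming the envelopes have already been extracted and resampled to a common rate. It is not the stimulus‐reconstruction or decoding method of the cited studies, and the function names are hypothetical.

```python
import numpy as np


def lagged_corr(stim_env, neural_env, lag):
    """Pearson r between a stimulus envelope and a neural envelope that
    trails it by `lag` samples (lag >= 0)."""
    if lag > 0:
        stim_env, neural_env = stim_env[:-lag], neural_env[lag:]
    return float(np.corrcoef(stim_env, neural_env)[0, 1])


def identify_attended(neural_env, talker_envs, max_lag):
    """Return (index of best-matching talker, peak correlation per talker)."""
    neural_env = np.asarray(neural_env, dtype=float)
    scores = []
    for env in talker_envs:
        env = np.asarray(env, dtype=float)
        # Cortical activity follows the sound, so only non-negative lags
        # (neural response after the stimulus) are searched.
        scores.append(max(lagged_corr(env, neural_env, lag)
                          for lag in range(max_lag + 1)))
    return int(np.argmax(scores)), scores
```

Restricting the search to non‐negative lags encodes the assumption that cortical activity follows, rather than anticipates, the acoustic envelope.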

Activity in anterolateral HG and STG is attenuated when hearing self‐generated speech, compared with listening to recorded speech56, 63, 86 or hearing an interlocutor's speech during a conversation.50 These observations support the idea that suppression of cortical activity to self‐initiated speech is an emergent property of human non‐core auditory cortex. Auditory‐related cortex within the anterior temporal lobe exhibits further specialization during verbal communication. Low‐frequency oscillatory activity (3–5 and 8–12 Hz bands) within the anterior temporal lobe exhibits differential response patterns during conversations with different interlocutors (life partners vs. attending physicians). This differential activity represents a neural signature of communication that emerges at the level of auditory‐related cortex.87

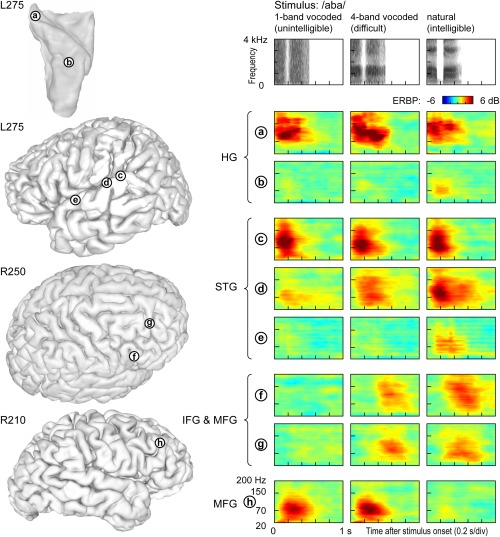

Differential involvement of cortical regions in processing spectrally impoverished auditory stimuli (as encountered by cochlear implant users) can be studied in normal‐hearing subjects using noise‐vocoded speech.88, 89 Ongoing research points to hierarchical cortical processing of spectrally impoverished speech.90 The intelligibility of noise‐vocoded speech increases with the amount of spectral information available to the listener, that is, with the number of frequency bands used to synthesize it.91, 92 When noise‐vocoded speech is presented in a two‐alternative forced‐choice task, activity in core auditory cortex is comparable across stimulus conditions (site ‘a’ in Fig. 7). In contrast, anterolateral HG responds selectively to the natural (unprocessed) sounds (site ‘b’ in Fig. 7). STG exhibits a variety of response patterns, with responses becoming progressively more selective for clear speech in more anterior regions (sites ‘c’–‘e’ in Fig. 7). In the frontal lobe, activity in IFG and MFG is strongly affected by stimulus condition. Some sites respond selectively to all intelligible stimuli rather than just clear ones (sites ‘f’ and ‘g’ in Fig. 7). Others appear to respond preferentially to vocoded rather than natural speech, perhaps reflecting increased listening difficulty and effort (site ‘h’ in Fig. 7). Taken together, these findings further illustrate the tiered organization of auditory processing within and beyond human auditory cortex.
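Noise vocoding can be sketched in a few steps:

1. Split the signal into frequency bands.
2. Extract each band's temporal envelope.
3. Use that envelope to modulate noise limited to the same band.
4. Sum the bands.

With fewer bands, less spectral detail survives, while the temporal envelope is preserved. The sketch below makes several illustrative assumptions:

- band edges are log‐spaced between 100 and 8000 Hz;
- envelopes are obtained by full‐wave rectification followed by a 50 Hz low‐pass filter;
- all filters are brick‐wall FFT filters.

These parameters are not those used to generate the stimuli in Fig. 7, and `noise_vocode` is a hypothetical name.

```python
import numpy as np


def noise_vocode(signal, fs, n_bands, f_lo=100.0, f_hi=8000.0,
                 env_cutoff=50.0, seed=0):
    """Return a noise-vocoded copy of `signal` (1-D array sampled at fs Hz).

    Illustrative assumptions: log-spaced bands between f_lo and f_hi,
    envelopes from full-wave rectification plus a low-pass at env_cutoff Hz,
    and brick-wall FFT filters throughout.
    """
    signal = np.asarray(signal, dtype=float)
    if f_hi > fs / 2:
        raise ValueError("f_hi must not exceed the Nyquist frequency")
    n = signal.size
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    edges = np.geomspace(f_lo, f_hi, n_bands + 1)
    sig_spec = np.fft.rfft(signal)
    noise_spec = np.fft.rfft(np.random.default_rng(seed).standard_normal(n))
    lowpass = freqs <= env_cutoff
    out = np.zeros(n)
    for lo, hi in zip(edges[:-1], edges[1:]):
        band = (freqs >= lo) & (freqs < hi)
        # 1) Band-pass the input and extract its slow temporal envelope.
        band_sig = np.fft.irfft(sig_spec * band, n)
        env = np.fft.irfft(np.fft.rfft(np.abs(band_sig)) * lowpass, n)
        env = np.maximum(env, 0.0)  # low-pass ringing can dip below zero
        # 2) Modulate noise limited to the same band, then re-filter so the
        #    modulation sidebands stay inside the band.
        carrier = np.fft.irfft(noise_spec * band, n)
        out += np.fft.irfft(np.fft.rfft(env * carrier) * band, n)
    # 3) Match the overall RMS level of the input.
    return out * np.sqrt(np.mean(signal ** 2) / np.mean(out ** 2))
```

Calling this with `n_bands=1` and `n_bands=4` would produce conditions analogous to the 1‐ and 4‐band stimuli in Fig. 7.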

Figure 7.

Responses to spectrally degraded speech are affected by intelligibility and task difficulty. Left: Locations of representative recording sites in Heschl's gyrus (HG), on superior temporal gyrus (STG), inferior and middle frontal gyrus (IFG, MFG) in three subjects. Right: Event‐related band power (ERBP) time‐frequency plots depicting responses to noise‐vocoded (1 and 4 bands) and natural speech stimuli /aba/, recorded from sites a through h (top to bottom). Stimulus spectrograms are shown on top.

SUMMARY

Direct recordings from the human brain reveal hierarchical organization of sound processing within auditory and auditory‐related cortex. The tonotopically organized core auditory cortex (posteromedial HG) represents spectrotemporal features of sounds with high temporal precision and short response latencies. At this level of processing, activity is minimally modulated by task, context, or attentional state. While non‐core cortex on lateral STG also represents stimulus acoustic features, its activity is modulated by task requirements. Finally, auditory‐related prefrontal areas (IFG and MFG) exhibit complex response patterns related to stimulus intelligibility and task relevance. Responses in these cortical regions are strongly modulated by task requirements and correlate with behavioral performance.

ACKNOWLEDGMENTS

The author is grateful to Drs. Phillip Gander, Andrew Liu, Araceli Ramírez Cárdenas and Ariane Rhone for their constructive comments on the manuscript.

BIBLIOGRAPHY

- 1. Galaburda A, Sanides F. Cytoarchitectonic organization of the human auditory cortex. J Comp Neurol 1980;190:597–610.

- 2. Rademacher J, Caviness VS Jr, Steinmetz H, Galaburda AM. Topographical variation of the human primary cortices: implications for neuroimaging, brain mapping, and neurobiology. Cereb Cortex 1993;3:313–329.

- 3. Rademacher J, Morosan P, Schormann T, et al. Probabilistic mapping and volume measurement of human primary auditory cortex. Neuroimage 2001;13:669–683.

- 4. Leonard CM, Puranik C, Kuldau JM, Lombardino LJ. Normal variation in the frequency and location of human auditory cortex landmarks. Heschl's gyrus: where is it? Cereb Cortex 1998;8:397–406.

- 5. Abdul‐Kareem IA, Sluming V. Heschl gyrus and its included primary auditory cortex: structural MRI studies in healthy and diseased subjects. J Magn Reson Imaging 2008;28:287–299.

- 6. Da Costa S, van der Zwaag W, Marques JP, Frackowiak RS, Clarke S, Saenz M. Human primary auditory cortex follows the shape of Heschl's gyrus. J Neurosci 2011;31:14067–14075.

- 7. Marie D, Jobard G, Crivello F, et al. Descriptive anatomy of Heschl's gyri in 430 healthy volunteers, including 198 left‐handers. Brain Struct Funct 2015;220:729–743.

- 8. Destrieux C, Fischl B, Dale A, Halgren E. Automatic parcellation of human cortical gyri and sulci using standard anatomical nomenclature. Neuroimage 2010;53:1–15.

- 9. Hackett TA, Preuss TM, Kaas JH. Architectonic identification of the core region in auditory cortex of macaques, chimpanzees, and humans. J Comp Neurol 2001;441:197–222.

- 10. Morosan P, Rademacher J, Schleicher A, Amunts K, Schormann T, Zilles K. Human primary auditory cortex: cytoarchitectonic subdivisions and mapping into a spatial reference system. Neuroimage 2001;13:684–701.

- 11. Hackett TA. Anatomic organization of the auditory cortex. Handb Clin Neurol 2015;129:27–53.

- 12. Hackett TA. Organization and correspondence of the auditory cortex of humans and nonhuman primates. In: Kaas JH, ed. Evolution of Nervous Systems Vol. 4: Primates. New York: Academic Press; 2007:109–119.

- 13. Liegeois‐Chauvel C, Musolino A, Chauvel P. Localization of the primary auditory area in man. Brain 1991;114:139–151.

- 14. Talavage TM, Ledden PJ, Benson RR, Rosen BR, Melcher JR. Frequency‐dependent responses exhibited by multiple regions in human auditory cortex. Hear Res 2000;150:225–244.

- 15. Lachaux JP, Axmacher N, Mormann F, Halgren E, Crone NE. High‐frequency neural activity and human cognition: past, present and possible future of intracranial EEG research. Prog Neurobiol 2012;98:279–301.

- 16. Mukamel R, Fried I. Human intracranial recordings and cognitive neuroscience. Annu Rev Psychol 2012;63:511–537.

- 17. Nourski KV, Howard MA. Invasive recordings in the human auditory cortex. Handb Clin Neurol 2015;129:225–244.

- 18. Bancaud J, Talairach J, Bonis A, et al. La stéréo‐électroencéphalographie dans l'épilepsie. Informations neurophysiopathologiques apportées par l'investigation fonctionnelle stéréotaxique. Paris: Masson; 1965.

- 19. Reddy CG, Dahdaleh NS, Albert G, et al. A method for placing Heschl gyrus depth electrodes. J Neurosurg 2010;112:1301–1307.

- 20. Howard MA, Volkov IO, Mirsky R, et al. Auditory cortex on the human posterior superior temporal gyrus. J Comp Neurol 2000;416:79–92.

- 21. Crone NE, Boatman D, Gordon B, Hao L. Induced electrocorticographic gamma activity during auditory perception. Clin Neurophysiol 2001;112:565–582.

- 22. Nourski KV, Steinschneider M, McMurray B, et al. Functional organization of human auditory cortex: investigation of response latencies through direct recordings. Neuroimage 2014;101:598–609.

- 23. Crone NE, Sinai A, Korzeniewska A. High‐frequency gamma oscillations and human brain mapping with electrocorticography. Prog Brain Res 2006;159:275–295.

- 24. Brugge JF, Nourski KV, Oya H, et al. Coding of repetitive transients by auditory cortex on Heschl's gyrus. J Neurophysiol 2009;102:2358–2374.

- 25. Edwards E, Soltani M, Kim W, et al. Comparison of time‐frequency responses and the event‐related potential to auditory speech stimuli in human cortex. J Neurophysiol 2009;102:377–386.

- 26. Ray S, Crone NE, Niebur E, Franaszczuk PJ, Hsiao SS. Neural correlates of high‐gamma oscillations (60‐200 Hz) in macaque local field potentials and their potential implications in electrocorticography. J Neurosci 2008;28:11526–11536.

- 27. Steinschneider M, Fishman YI, Arezzo JC. Spectrotemporal analysis of evoked and induced electroencephalographic responses in primary auditory cortex (A1) of the awake monkey. Cereb Cortex 2008;18:610–625.

- 28. Jenison RL, Reale RA, Armstrong AL, Oya H, Kawasaki H, Howard MA 3rd. Sparse spectro‐temporal receptive fields based on multi‐unit and high‐gamma responses in human auditory cortex. PLoS One 2015;10:e0137915.

- 29. Kalcher J, Pfurtscheller G. Discrimination between phase‐locked and non‐phase‐locked event‐related EEG activity. Electroencephalogr Clin Neurophysiol 1995;94:381–384.

- 30. Pantev C. Evoked and induced gamma‐band activity of the human cortex. Brain Topogr 1995;7:321–330.

- 31. Buzsáki G. Rhythms of the Brain. Oxford: Oxford University Press; 2006.

- 32. Buzsáki G, Mizuseki K. The log‐dynamic brain: how skewed distributions affect network operations. Nat Rev Neurosci 2014;15:264–278.

- 33. Schnupp J, Nelken I, King A. Auditory Neuroscience: Making Sense of Sound. Cambridge, MA: MIT Press; 2010.

- 34. Clopton BM, Winfield JA, Flammino FJ. Tonotopic organization: review and analysis. Brain Res 1974;76:1–20.

- 35. Howard MA, Volkov IO, Granner MA, Damasio HM, Ollendieck MC, Bakken HE. A hybrid clinical‐research depth electrode for acute and chronic in vivo microelectrode recording of human brain neurons. Technical note. J Neurosurg 1996;84:129–132.

- 36. Howard MA, Volkov IO, Abbas PJ, Damasio H, Ollendieck MC, Granner MA. A chronic microelectrode investigation of the tonotopic organization of human auditory cortex. Brain Res 1996;724:260–264.

- 37. Bitterman Y, Mukamel R, Malach R, Fried I, Nelken I. Ultra‐fine frequency tuning revealed in single neurons of human auditory cortex. Nature 2008;451:197–201.

- 38. Nourski KV, Steinschneider M, Oya H, Kawasaki H, Jones RD, Howard MA. Spectral organization of the human lateral superior temporal gyrus revealed by intracranial recordings. Cereb Cortex 2014;24:340–352.

- 39. Nourski KV, Brugge JF. Representation of temporal sound features in the human auditory cortex. Rev Neurosci 2011;22:187–203.

- 40. Miller GA, Taylor WG. The perception of repeated bursts of noise. J Acoust Soc Am 1948;20:171–182.

- 41. Krumbholz K, Patterson RD, Pressnitzer D. The lower limit of pitch as determined by rate discrimination. J Acoust Soc Am 2000;108:1170–1180.

- 42. Plomp R, Levelt WJ. Tonal consonance and critical bandwidth. J Acoust Soc Am 1965;38:548–560.

- 43. Terhardt E. Pitch, consonance, and harmony. J Acoust Soc Am 1974;55:1061–1069.

- 44. Griffiths TD, Kumar S, Sedley W, et al. Direct recordings of pitch responses from human auditory cortex. Curr Biol 2010;20:1128–1132.

- 45. Fishman YI, Reser DH, Arezzo JC, Steinschneider M. Complex tone processing in primary auditory cortex of the awake monkey. I. Neural ensemble correlates of roughness. J Acoust Soc Am 2000;108:235–246.

- 46. Liégeois‐Chauvel C, Lorenzi C, Trébuchon A, Régis J, Chauvel P. Temporal envelope processing in the human left and right auditory cortices. Cereb Cortex 2004;14:731–740.

- 47. Brugge JF, Volkov IO, Oya H, et al. Functional localization of auditory cortical fields of human: click‐train stimulation. Hear Res 2008;238:12–24.

- 48. Gourévitch B, Le Bouquin Jeannès R, Faucon G, Liégeois‐Chauvel C. Temporal envelope processing in the human auditory cortex: response and interconnections of auditory cortical areas. Hear Res 2008;237:1–18.

- 49. Nourski KV, Brugge JF, Reale RA, et al. Coding of repetitive transients by auditory cortex on posterolateral superior temporal gyrus in humans: an intracranial electrophysiology study. J Neurophysiol 2013;109:1283–1295.

- 50. Nourski KV, Steinschneider M, Rhone AE. Electrocorticographic activation within human auditory cortex during dialog‐based language and cognitive testing. Front Hum Neurosci 2016;10:202.

- 51. Arnal LH, Poeppel D, Giraud AL. Temporal coding in the auditory cortex. Handb Clin Neurol 2015;129:85–98.

- 52. Nourski KV, Reale RA, Oya H, et al. Temporal envelope of time‐compressed speech represented in the human auditory cortex. J Neurosci 2009;29:15564–15574.

- 53. Steinschneider M, Volkov IO, Fishman YI, Oya H, Arezzo JC, Howard MA. Intracortical responses in human and monkey primary auditory cortex support a temporal processing mechanism for encoding of the voice onset time phonetic parameter. Cereb Cortex 2005;15:170–186.

- 54. Steinschneider M, Nourski KV, Fishman YI. Representation of speech in human auditory cortex: is it special? Hear Res 2013;305:57–73.

- 55. Greenlee JD, Behroozmand R, Nourski KV, Oya H, Kawasaki H, Howard MA. Using speech and electrocorticography to map human auditory cortex. Conf Proc IEEE Eng Med Biol Soc 2014;2014:6798–6801.

- 56. Behroozmand R, Oya H, Nourski KV, et al. Neural Correlates of Vocal Production and Motor Control in Human Heschl's Gyrus. J Neurosci 2016;36:2302–2315.

- 57. Reser DH, Rosa M. Perceptual elements in brain mechanisms of acoustic communication in humans and nonhuman primates. Behav Brain Sci 2014;37:571–572.

- 58. Pei X, Leuthardt EC, Gaona CM, Brunner P, Wolpaw JR, Schalk G. Spatiotemporal dynamics of electrocorticographic high gamma activity during overt and covert word repetition. Neuroimage 2011;54:2960–2972.

- 59. Kubanek J, Brunner P, Gunduz A, Poeppel D, Schalk G. The tracking of speech envelope in the human cortex. PLoS One 2013;8:e53398.

- 60. Ding N, Melloni L, Zhang H, Tian X, Poeppel D. Cortical tracking of hierarchical linguistic structures in connected speech. Nat Neurosci 2016;19:158–164.

- 61. Peelle JE, Davis MH. Neural Oscillations Carry Speech Rhythm through to Comprehension. Front Psychol 2012;3:320.

- 62. Steinschneider M, Nourski KV, Kawasaki H, Oya H, Brugge JF, Howard MA. Intracranial study of speech‐elicited activity on the human posterolateral superior temporal gyrus. Cereb Cortex 2011;21:2332–2347.

- 63. Greenlee JD, Jackson AW, Chen F, et al. Human auditory cortical activation during self‐vocalization. PLoS One 2011;6:e14744.

- 64. Boatman D, Hall C, Goldstein MH, Lesser R, Gordon B. Neuroperceptual differences in consonant and vowel discrimination: as revealed by direct cortical electrical interference. Cortex 1997;33:83–98.

- 65. Mesgarani N, David SV, Fritz JB, Shamma SA. Phoneme representation and classification in primary auditory cortex. J Acoust Soc Am 2008;123:899–909.

- 66. Zhao L, Zhaoping L. Understanding auditory spectro‐temporal receptive fields and their changes with input statistics by efficient coding principles. PLoS Comput Biol 2011;7:e1002123.

- 67. Pasley BN, David SV, Mesgarani N, et al. Reconstructing speech from human auditory cortex. PLoS Biol 2012;10:e1001251.

- 68. Mesgarani N, Cheung C, Johnson K, Chang EF. Phonetic feature encoding in human superior temporal gyrus. Science 2014;343:1006–1010.

- 69. Hullett PW, Hamilton LS, Mesgarani N, Schreiner CE, Chang EF. Human superior temporal gyrus organization of spectrotemporal modulation tuning derived from speech stimuli. J Neurosci 2016;36:2014–2026.

- 70. Herff C, Johnson G, Diener L, Shih J, Krusienski D, Schultz D. Towards direct speech synthesis from ECoG: A pilot study. Conf Proc IEEE Eng Med Biol Soc 2016;2016:1540–1543.

- 71. Liégeois‐Chauvel C, Musolino A, Badier JM, Marquis P, Chauvel P. Evoked potentials recorded from the auditory cortex in man: evaluation and topography of the middle latency components. Electroencephalogr Clin Neurophysiol 1994;92:204–214.

- 72. Recanzone GH. Response profiles of auditory cortical neurons to tones and noise in behaving macaque monkeys. Hear Res 2000;150:104–118.

- 73. Lakatos P, Pincze Z, Fu KM, Javitt DC, Karmos G, Schroeder CE. Timing of pure tone and noise‐evoked responses in macaque auditory cortex. Neuroreport 2005;16:933–937.

- 74. Camalier CR, D'Angelo WR, Sterbing‐D'Angelo SJ, de la Mothe LA, Hackett TA. Neural latencies across auditory cortex of macaque support a dorsal stream supramodal timing advantage in primates. Proc Natl Acad Sci U S A 2012;109:18168–18173.

- 75. Morillon B, Liégeois‐Chauvel C, Arnal LH, Bénar CG, Giraud AL. Asymmetric function of theta and gamma activity in syllable processing: an intra‐cortical study. Front Psychol 2012;3:248.

- 76. Fontolan L, Morillon B, Liegeois‐Chauvel C, Giraud AL. The contribution of frequency‐specific activity to hierarchical information processing in the human auditory cortex. Nat Commun 2014;5:4694.

- 77. Nourski KV, Steinschneider M, Rhone AE, et al. Sound identification in human auditory cortex: Differential contribution of local field potentials and high gamma power as revealed by direct intracranial recordings. Brain Lang 2015;148:37–50.

- 78. Chang EF, Edwards E, Nagarajan SS, et al. Cortical spatio‐temporal dynamics underlying phonological target detection in humans. J Cogn Neurosci 2011;23:1437–1446.

- 79. Steinschneider M, Nourski KV, Rhone AE, Kawasaki H, Oya H, Howard MA. Differential activation of human core, non‐core and auditory‐related cortex during speech categorization tasks as revealed by intracranial recordings. Front Neurosci 2014;8:240.

- 80. Nourski KV, Steinschneider M, Oya H, Kawasaki H, Howard MA. Modulation of response patterns in human auditory cortex during a target detection task: an intracranial electrophysiology study. Int J Psychophysiol 2015;95:191–201.

- 81. Nourski KV, Steinschneider M, Rhone AE, Howard MA. Intracranial electrophysiology of auditory selective attention associated with speech classification tasks. Front Hum Neurosci 2017;10:691.

- 82. Kam JWY, Szczepanski SM, Canolty RT, et al. Differential sources for 2 neural signatures of target detection: an electrocorticography study. Cereb Cortex (in press). doi:10.1093/cercor/bhw343

- 83. Mesgarani N, Chang EF. Selective cortical representation of attended speaker in multi‐talker speech perception. Nature 2012;485:233–236.

- 84. Zion Golumbic EM, Ding N, Bickel S, et al. Mechanisms underlying selective neuronal tracking of attended speech at a “cocktail party”. Neuron 2013;77:980–991.

- 85. Dijkstra K, Brunner P, Gunduz A, et al. Identifying the attended speaker using electrocorticographic (ECoG) signals. Brain Comput Interfaces (Abingdon) 2015;2:161–173.

- 86. Chan AM, Dykstra AR, Jayaram V, et al. Speech‐specific tuning of neurons in human superior temporal gyrus. Cereb Cortex 2014;24:2679–2693.

- 87. Derix J, Iljina O, Schulze‐Bonhage A, Aertsen A, Ball T. “Doctor” or “darling”? Decoding the communication partner from ECoG of the anterior temporal lobe during non‐experimental, real‐life social interaction. Front Hum Neurosci 2012;6:251.

- 88. Merzenich MM. Coding of sound in a cochlear prosthesis: some theoretical and practical considerations. Ann N Y Acad Sci 1983;405:502–508.

- 89. Turner CW, Souza PE, Forget LN. Use of temporal envelope cues in speech recognition by normal and hearing‐impaired listeners. J Acoust Soc Am 1995;97:2568–2576.

- 90. Nourski KV, Rhone AE, Steinschneider M, Kawasaki H, Oya H, Howard MA III. Cortical processing of spectrally degraded speech as revealed by intracranial recordings. Proc 5th Int Conf Auditory Cortex – Towards Synthesis Human Animal Res, 2014;42.

- 91. Shannon RV, Zeng FG, Kamath V, Wygonski J, Ekelid M. Speech recognition with primarily temporal cues. Science 1995;270:303–304.

- 92. Dorman MF, Loizou PC, Rainey D. Speech intelligibility as a function of the number of channels of stimulation for signal processors using sine‐wave and noise‐band outputs. J Acoust Soc Am 1997;102:2403–2411.