Abstract

Background

Many recipients of bilateral cochlear implants (CIs) may have differences in electrode insertion depth. Previous reports indicate that when a bilateral mismatch is imposed, performance on tests of speech understanding or sound localization becomes worse. If recipients of bilateral CIs cannot adjust to a difference in insertion depth, adjustments to the frequency table may be necessary to maximize bilateral performance.

Purpose

The purpose of this study was to examine the feasibility of using real-time manipulations of the frequency table to offset any decrements in performance resulting from a bilateral mismatch.

Research Design

A simulation of a CI was used because it allows for explicit control of the size of a bilateral mismatch. Such control is not available with users of CIs.

Study Sample

A total of 31 normal-hearing young adults participated in this study.

Data Collection and Analysis

Using a CI simulation, four bilateral mismatch conditions (0, 0.75, 1.5, and 3 mm) were created. In the left ear, the analysis filters and noise bands of the CI simulation were the same. In the right ear, the noise bands were shifted higher in frequency to simulate a bilateral mismatch. Then, listeners selected a frequency table in the right ear that was perceived as maximizing bilateral speech intelligibility. Word-recognition scores were then assessed for each bilateral mismatch condition. Listeners were tested with both a standard frequency table, which preserved a bilateral mismatch, and their self-selected frequency table.

Results

Consistent with previous reports, bilateral mismatches of 1.5 and 3 mm yielded decrements in word recognition when the standard table was used in both ears. However, when listeners used the self-selected frequency table, performance was the same regardless of the size of the bilateral mismatch.

Conclusions

Self-selection of a frequency table appears to be a feasible method for ameliorating the negative effects of a bilateral mismatch. These data may have implications for recipients of bilateral CIs who cannot adapt to a bilateral mismatch, because they suggest that (1) such individuals may benefit from modification of the frequency table in one ear and (2) self-selection of a “most intelligible” frequency table may be a useful tool for determining how the frequency table should be altered to optimize speech recognition.

Keywords: bilateral mismatch, cochlear implant, self-selected, simulation

INTRODUCTION

The benefits of binaural over unilateral hearing are well known, and include improved sound localization and better speech understanding in the presence of background noise. However, for the benefits of binaural hearing to be fully realized, there is evidence indicating that the signal provided to each ear should stimulate neurons tuned to the same characteristic frequency in each ear. In normal-hearing individuals, poorer performance on sound localization or lateralization tasks has been consistently observed when there is a bilateral mismatch in the frequencies of the signals delivered to each ear. For example, the ability to discriminate interaural timing differences of amplitude-modulated tones is poorer when the signal delivered to each ear is mismatched in frequency (Henning, 1974; Nuetzel and Hafter, 1981; van de Par and Kohlrausch, 1997; Blanks et al, 2008). Similar patterns of deficits are observed when listeners discriminate interaural level differences of signals with mismatched frequency in each ear (Francart and Wouters, 2007). Finally, the ability of normal-hearing individuals to fuse signals with different spectral characteristics into a single auditory image, and to discriminate a change in location of those signals, deteriorates significantly with increasing bilateral mismatch (Goupell et al, 2013).

In normal-hearing individuals, bilateral mismatches of this sort are unlikely to exist outside of a laboratory environment. However, in some patient populations, such as recipients of bilateral cochlear implants (CIs), there is a likelihood that some individuals will experience a bilateral mismatch in the site of stimulation. This situation could arise when there is a between-ear difference in electrode insertion depth or insertion angle. Such mismatches are likely to elicit interaural mismatches in the site of stimulation when the same signal is delivered to each ear. The likelihood of this occurrence may have some clinical relevance, because interaural mismatches in site of stimulation reduce the ability of bilateral CI users to (a) discriminate cues to sound location based on interaural time differences (van Hoesel and Clark, 1997; Long et al, 2003; Wilson et al, 2003; van Hoesel, 2004; Poon et al, 2009; Kan et al, 2013; Kan et al, 2015), (b) discriminate cues to sound location based on interaural level differences (Kan et al, 2015), and (c) bilaterally fuse independent stimuli from each ear into a single, fused percept (Kan et al, 2015).

Less is known about the effects of bilateral mismatches on speech understanding. This is due in part to the difficulty in identifying how insertion depths differ between ears in recipients of bilateral CIs. Acoustic models of CIs offer an opportunity to explore this issue by offering precise control of the stimulus and its presentation. Studies using these models generally attempt to simulate the reduced spectral and fine structure cues available to CI recipients with the goal of better understanding the mechanisms used by these patients to understand speech. Frequency-to-place mismatches can be simulated within an ear by imposing a mismatch between the analysis filters, which separate the incoming signal into different frequency channels, and the noise bands or pure tones that carry the information extracted in the analysis filters. In bilateral simulations, mismatched information can be provided to each ear, allowing researchers to simulate instances in which the insertion depth or angle varies between ears. Although CI simulations do not directly replicate the issues faced by recipients of CIs (Svirsky et al, 2013), these simulations do allow for precise control with regard to the stimulus and its presentation; the same amount of control is not available with actual CI users.

Previous studies have used acoustic CI models to evaluate within-ear frequency mismatches in monaural and diotic listening conditions. Although these mismatches are in the frequency domain, they are customarily expressed in mm of displacement along the cochlea, using Greenwood’s equation (Greenwood, 1990) to convert frequency values to locations in the cochlea (and equivalently, to convert frequency shifts to distances). On the whole, these studies show that with naïve listeners, speech-perception abilities are hindered when there is a within-ear frequency mismatch between the analysis filters and noise bands of the CI simulation that is ≥3 mm in displacement along the basilar membrane (Dorman et al, 1997; Shannon et al, 1998; Fu and Shannon, 1999). The negative effect of these mismatches can be ameliorated with practice (Rosen et al, 1999; Fu and Galvin, 2003; Faulkner et al, 2006; Svirsky, Talavage, et al, 2015), although some deficits in performance may remain if the mismatches are sufficiently large.

Bilateral mismatches can be emulated in a CI simulation by imposing an interaural mismatch between noise bands presented to each ear while using the same analysis filters in both ears. This is analogous to the case of a bilateral CI recipient whose implant is programmed with the same frequency table in each ear despite a difference in electrode insertion depth. Although data are limited with regard to the effect of bilateral mismatches on speech perception, the available data suggest that speech understanding can be hindered if a bilateral mismatch is sufficiently large, and that such deficits may be resistant to training. In one investigation (Yoon et al, 2011), sentence recognition was measured in quiet and in a +5 dB signal-to-noise ratio (SNR) with a six-channel sine-wave vocoder. The left ear attempted to simulate an insertion depth of 24 mm, and in the right ear, the analysis filters were shifted to simulate insertion depths ranging from 21 to 27 mm. Here, performance was better in the bilateral than unilateral conditions when the bilateral mismatch was ≤1 mm. Moreover, bilateral interference (poorer performance in the bilateral condition) was observed with a bilateral mismatch of 3 mm. This pattern of results was essentially the same both in quiet and in noise, suggesting that even small bilateral mismatches can inhibit bilateral benefit, and may in some instances result in bilateral interference (Yoon et al, 2011).

In a different investigation, a simulated bilateral mismatch was employed in an effort to observe whether the benefits normally observed with the head shadow effect, bilateral squelch, and bilateral redundancy were seen in cases of simulated bilateral mismatch. Sentence recognition scores were obtained both unilaterally and bilaterally with an eight-channel sine-wave vocoder at SNRs of +5 and +10 dB. For each of these SNRs, listeners were tested in a condition with no bilateral mismatch, and with a bilateral mismatch equivalent to 3 mm in cochlear space. As with the previous study from the same authors (Yoon et al, 2011), bilateral interference was noted when a bilateral mismatch of 3 mm was present. In the mismatch conditions, the benefits from the head shadow effect were still observed, but benefits associated with bilateral redundancy or squelch were not, again suggesting that bilateral mismatches can inhibit bilateral benefit in a CI simulation (Yoon et al, 2013).

A separate investigation also used a CI simulation to measure speech understanding in noise, and self-reported ease of listening (van Besouw et al, 2013). Here, listeners were tested unilaterally, bilaterally with the same simulated insertion depth in each ear, and bilaterally with simulated mismatches in insertion depth. Consistent with the previous reports, decrements in speech understanding were reported with bilateral mismatches ≥3 mm compared to both matched-bilateral and unilateral listening conditions. Moreover, perceived ease of listening also decreased for the mismatched-bilateral conditions.

In an additional report (Siciliano et al, 2010), the speech-understanding abilities of normal-hearing individuals were tested with a sine-wave vocoder CI simulation in which three channels were presented to one ear, and the remaining three channels were presented to the contralateral ear. In the first ear, analysis filters and synthesis sine waves were equal, but in the contralateral ear they were mismatched in frequency by an amount equivalent to 6 mm of displacement along the basilar membrane. Listeners were unable to integrate the bilateral-mismatched information, and the deficits associated with bilateral mismatches persisted after several hours of training, raising the possibility that CI users may have difficulty adapting to bilateral-mismatched information.

Taken together, these results suggest that bilateral mismatches may have a negative effect on speech perception if they become sufficiently large, and that these deficits may be resistant to multihour training. If listeners cannot adapt to a bilateral mismatch, then it is possible that the frequency table(s) of a CI speech processor may need to be modified to offset any deleterious effects of bilateral mismatch. One possible method for doing so would be to allow the listener to select a preferred frequency table with one CI. To date, this approach has been used in only two recipients of bilateral CIs. In one instance, an individual with a sizable mismatch in electrode insertion depth selected a preferred frequency table that differed from the standard in the ear with the shorter insertion depth. Perhaps more important, the unilateral speech-understanding score in the self-selected table was higher than with the standard table. Thus, in this instance, self-selection of a frequency table in one ear appeared to have benefitted that individual (Svirsky, Fitzgerald, et al, 2015). In a second report involving a different participant, self-selection of a preferred frequency table increased the likelihood that a single, fused percept was heard when a single electrode was stimulated simultaneously in both ears (Fitzgerald et al, 2015). Although promising, these reports include data from only two individuals, and do not offer any guidelines as to when frequency-table self-selection might be appropriate for recipients of bilateral CIs. To address this question would require precise and explicit control over the size of the bilateral mismatch; this cannot be done in recipients of bilateral CIs because it is impossible to measure noninvasively the characteristic frequency of the neurons stimulated by a given intracochlear electrode. 
Thus, to investigate the feasibility of frequency-table self-selection for recipients of bilateral CIs, we used an acoustic simulation of a CI to examine (a) the effects of varying degrees of bilateral mismatch on speech perception and (b) whether self-selection of a frequency table in one ear can ameliorate any deleterious effects stemming from a bilateral mismatch. We used a CI simulation because it allows us to control the degree of frequency mismatch in each ear with great precision. Moreover, self-selection of a preferred frequency table has been previously shown to be a feasible method for identifying cases in which listeners had not adapted to a unilateral frequency mismatch, and for improving speech-recognition scores in listeners who had not fully adapted (Fitzgerald et al, 2013).

METHODS

Listeners

A total of 31 listeners (21 females) ranging in age from 20 to 37 yr (mean = 26.9 yr) participated in this experiment. None of these listeners had any previous experience listening to speech processed through an acoustic CI simulation. All listeners reported having normal hearing bilaterally with no history of learning or other cognitive disorder. Listeners were paid an hourly wage for their participation. The study was approved by the New York University School of Medicine Institutional Review Board.

Acoustic Model

In the present experiment, we used an acoustic model of a CI to simulate the percepts elicited by stimulation of electrodes located in different parts of the cochlea. The acoustic model was an eight-channel noise vocoder (based on Kaiser and Svirsky, 2000) in which the input signal was digitized at 48,000 samples per second, low-pass filtered with a cutoff frequency of 24,000 Hz, and divided into frequency channels using sixth-order Butterworth band-pass filters. For each of these analysis filters, the temporal envelope was extracted and used to modulate the corresponding noise band (Table 1). The eight noise bands were created by filtering white noise with sixth-order Butterworth filters, which is the most common procedure in published studies that use acoustic models of cochlear implantation (Dorman et al, 1997; Loizou et al, 2000; Garadat et al, 2010).

Table 1.

Noise Band Allocations for Cochlear Implant Simulation

| Condition (mm) | Band 1 (Hz) | Band 2 (Hz) | Band 3 (Hz) | Band 4 (Hz) | Band 5 (Hz) | Band 6 (Hz) | Band 7 (Hz) | Band 8 (Hz) |
|---|---|---|---|---|---|---|---|---|
| 0 | 125–251 | 251–376 | 376–502 | 502–737 | 737–1082 | 1082–1589 | 1589–2333 | 2333–3426 |
| 0.75 | 173–347 | 347–520 | 520–694 | 694–966 | 966–1430 | 1430–2054 | 2054–2950 | 2950–4235 |
| 1.5 | 232–464 | 464–696 | 696–928 | 928–1312 | 1312–1853 | 1853–2619 | 2619–3700 | 3700–5229 |
| 3 | 394–788 | 788–1182 | 1182–1576 | 1576–2179 | 2179–3012 | 3012–4164 | 4164–5756 | 5756–7957 |

Note: For each of the four mismatch conditions, the frequency ranges for the eight noise bands used in the right ear are shown. The noise bands were always fixed in the left ear, with the frequency range always corresponding to the 0-mm condition.

The frequency settings of the noise band filters and analysis filters were adjusted in each ear to simulate different degrees of bilateral mismatch. Two computers were used to process the input speech signal, one computer for the left ear and a second computer for the right ear.

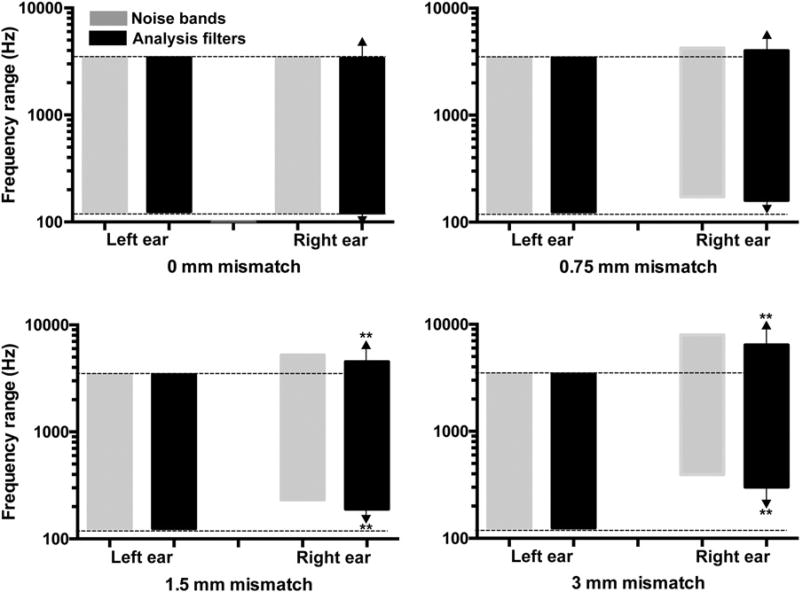

In the left ear, the noise bands were fixed to a frequency range of 125–3426 Hz. The analysis filters were set to the same range to match the noise bands. In the right ear, the noise bands were fixed to one of four different frequency ranges based on Greenwood’s frequency-to-place function (Greenwood, 1990). Each condition simulated a different insertion depth, and therefore introduced a different degree of bilateral mismatch between ears. For the 0-mm condition, a range of 125–3426 Hz was used to provide matched information to the left and right ears. The other three conditions, 0.75, 1.5, and 3 mm, created bilateral mismatches by simulating a shift to more basal cochlear locations. This is analogous to the right ear having a shallower insertion depth. The 0.75-, 1.5-, and 3-mm conditions used noise bands fixed to 173–4235, 232–5229, and 394–7957 Hz, respectively. In the right ear, listeners could adjust the analysis filter bank while listening to running speech in real time by pressing keys on a computer keyboard (see also Fitzgerald et al, 2006; 2013). These adjustments of the analysis filters were made in steps that corresponded to a 0.25-mm shift along the basilar membrane (according to Greenwood, 1990; see Figure 1 for a visual depiction of the analysis filters and noise bands for each testing condition in both ears). For each adjustment step, all filter bank boundaries were shifted by the same amount when measured in those units (mm along the cochlea). The total frequency span of the filter bank therefore remained constant when measured in mm of cochlear distance.
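The filter-bank adjustment described above can be illustrated with a minimal Python sketch that converts band edges to cochlear place with Greenwood's function, shifts every edge by the same distance, and converts back to frequency. The constants (A = 165.4, a = 0.06 per mm, k = 0.88) are commonly cited values for the human cochlea and are assumptions here, as are all function names; the text does not state the parameters used in the experiment, so the output should not be expected to reproduce the band edges in Table 1.

```python
import math

# Assumed Greenwood (1990) constants for the human cochlea, with place x
# measured in mm from the apex. These values are NOT given in the text.
A = 165.4
ALPHA = 0.06  # per mm
K = 0.88

STEP_MM = 0.25  # size of one adjustment step, as stated in the text


def freq_to_place_mm(freq_hz):
    """Cochlear place (mm from apex) for a frequency in Hz."""
    return math.log10(freq_hz / A + K) / ALPHA


def place_mm_to_freq(place_mm):
    """Frequency in Hz for a cochlear place (mm from apex)."""
    return A * (10 ** (ALPHA * place_mm) - K)


def shift_edges(edges_hz, shift_mm):
    """Shift every filter-bank edge by the same cochlear distance.

    Positive shifts move the bank toward the base (higher frequencies).
    """
    return [place_mm_to_freq(freq_to_place_mm(f) + shift_mm) for f in edges_hz]


# Outer edges of the standard table, shifted basally by three key presses.
standard_edges = [125.0, 3426.0]
shifted_edges = shift_edges(standard_edges, 3 * STEP_MM)
```

Because each edge moves by the same distance in mm, the span of the bank in cochlear distance is unchanged by the shift, whereas its span in Hz grows as the bank moves toward the base.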

Figure 1.

For each of the four bilateral mismatch conditions, the noise bands (gray rectangles) and analysis filter bank (black rectangles) are shown for the left and right ear (left and right pairs of columns, respectively). Arrows above and below the rightmost shaded rectangle indicate that this is the self-selected filter bank, which was obtained solely in the right ear. The analysis filter banks shown here are the group means for the 31 listeners who were tested. Asterisks indicate that the edges of that self-selected analysis filter bank differ significantly from the noise bands in the right ear as determined by a 99% confidence interval.

Procedure

In each of the four test conditions, listeners performed two tasks: first, they obtained a “self-selected frequency table,” and then they completed a word-recognition task. The stimulus used in selecting a frequency table was running speech presented to both ears (consonant-nucleus-consonant [CNC] list 1; Peterson and Lehiste, 1962), which was looped repeatedly and was not used in any subsequent testing. The listeners were instructed that they would hear distorted speech in both ears, and that the speech might differ in sound quality between ears. The sound quality in the left ear would always remain fixed, and their task was to adjust the sound quality of the speech in the right ear such that the combined (bilateral) speech input was most intelligible. Listeners adjusted the frequency table in the right ear by pressing keys on a keyboard, which enabled them to shift the filter bank in the right ear higher or lower in frequency. Only the filter bank in the right ear was changed. The noise bands in both ears, as well as the filter bank in the left ear, remained constant. To aid the listeners in this frequency-table selection process, a visual representation of the frequency range of the analysis filters in the right ear was shown on a computer screen. In this representation, a dark gray rectangle represented the active filter bank. This dark gray rectangle was embedded within a larger light gray rectangle, which represented the entire audible frequency range of human hearing. As the listener shifted the analysis filter bank higher or lower in frequency, the position of the dark gray rectangle moved up and down within the light gray rectangle. As noted previously, listeners adjusted the active filter bank in an effort to maximize bilateral speech intelligibility. Listeners repeated this process three times with different starting points for the analysis filters. One starting point was a filter bank that extended from 60 to 1340 Hz, the second starting point was a filter bank from 864 to 10,000 Hz, and the final starting point was a filter bank from 124 to 3426 Hz. The order of the three starting positions was randomized for each listener. For an individual listener, we defined the “self-selected table” as the one that was closest to the average of the three frequency tables selected from each starting point. To obtain this average selection, listeners required 3–15 min per condition, with most individuals doing so in ~5–6 min (Fitzgerald et al, 2013).
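The definition of the self-selected table can be sketched in a few lines of Python. This sketch assumes that each selection is recorded as a shift of the filter bank in mm relative to the standard table, on the 0.25-mm adjustment grid, and that "closest to the average" means the grid position nearest the arithmetic mean of the three selections. The function name and the example values are hypothetical.

```python
import statistics

STEP_MM = 0.25  # adjustment step stated in the text


def self_selected_shift(selections_mm):
    """Return the grid position (in mm) nearest the mean of the selections.

    Assumes selections are filter-bank shifts in mm relative to the
    standard table. Python's round() resolves exact ties toward the even
    grid index; how ties were handled in the study is not stated.
    """
    mean_shift = statistics.fmean(selections_mm)
    return round(mean_shift / STEP_MM) * STEP_MM


# Hypothetical selections from the low, high, and mid starting points.
example_selections = [0.5, 1.0, 1.25]
chosen = self_selected_shift(example_selections)
```

Averaging over the three starting points is intended to reduce any dependence of the final table on where the adjustment began.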

In the CNC word–recognition task, listeners completed two CNC word lists for each one of the four frequency mismatch conditions. One of these word lists was completed using the “self-selected table” in the right ear, and the other list was completed using what we will call the “standard table” in the right ear. The standard table was used in the left ear for all conditions. Use of the standard table in both ears simulates current clinical practice, as patients are generally assigned the same frequency table in each ear regardless of the insertion depth. Use of the self-selected table in the right ear represents an experimental condition. Note that this CNC word–recognition task reflects an acute listening situation, as no training or experience was provided beyond that gained while the listener obtained a self-selected frequency table (an average of 5–6 min for each condition, as indicated above). The first bilateral mismatch condition to be tested was assigned in a balanced manner, such that Listener 1 was assigned to the 0-mm condition first, Listener 2 to 0.75 mm, Listener 3 to 1.5 mm, and Listener 4 to 3 mm; this order was repeated for each successive group of four listeners. The order of the remaining conditions was determined by the initial one. If Condition 3 was first, then 4 was second, then 1, and finally Condition 2. A similar pattern was employed when Condition 1, 2, or 4 was first. The testing order for the “self-selected” and “standard” tables was also balanced for the first bilateral mismatch condition to be tested, with even-numbered listeners beginning with the self-selected table, and odd-numbered listeners starting with the standard table. After the first mismatch condition was completed, presentation of the “self-selected” and “standard” tables alternated in an A-B-B-A pattern, in which A corresponded either to the “self-selected” table or the “standard” table. 
In all lists and all conditions, we used a computer program to present the words to the listener. Individuals were instructed to repeat the target word, and their responses were recorded by the experimenter before beginning the next trial. Listeners could choose to hear a given word as many times as they wanted before responding. However, no feedback was provided as to whether a particular word was repeated correctly. All word lists were presented at 70 dB SPL over circumaural headphones while the listener was seated in a sound-treated booth. CNC words were scored by visually comparing the recorded response with the word presented. This scoring process was completed independently by two authors of this manuscript, and compared to ensure reliability.
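The counterbalancing scheme described above can be sketched as follows. The cyclic rotation of conditions follows the example given for Condition 3 and is assumed to hold for the other starting conditions. The reading of the A-B-B-A pattern, in which the table tested first within each successive mismatch condition follows the sequence A, B, B, A, is an assumption, as are the function names.

```python
# Conditions 1-4 in the text, expressed as bilateral mismatch in mm.
CONDITIONS_MM = [0.0, 0.75, 1.5, 3.0]


def condition_order(listener):
    """Mismatch conditions in test order for a 1-based listener number."""
    start = (listener - 1) % 4
    return [CONDITIONS_MM[(start + i) % 4] for i in range(4)]


def first_table(listener):
    """Table tested first in the listener's first mismatch condition."""
    return "self-selected" if listener % 2 == 0 else "standard"


def table_order(listener):
    """(first, second) table for each of the four conditions in test order.

    Assumed reading of A-B-B-A: the table that leads each condition
    follows the sequence A, B, B, A, where A is first_table(listener).
    """
    a = first_table(listener)
    b = "standard" if a == "self-selected" else "self-selected"
    return [(lead, b if lead == a else a) for lead in (a, b, b, a)]


# Listener 3 starts with Condition 3 (1.5 mm), then 4, 1, and 2.
order_listener_3 = condition_order(3)
```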

Statistical Analyses

Our first step was to determine whether, for each condition, the average “self-selected table” across listeners differed significantly from the “standard table.” We did so by first computing 99% confidence intervals on both the low- and high-frequency edges of the self-selected table, and then determining whether the standard table edges fell outside of that confidence interval. If so, we concluded that the self-selected table was significantly different from the standard table. Our second step was to identify whether, for each condition, the CNC word–recognition scores obtained with the self-selected table and standard table differed significantly. We did so by conducting a 2 (table, standard or self-selected) × 4 (mismatch condition) analysis of variance with repeated measures across the four conditions. Significant interactions were followed up with post hoc Holm–Sidak tests.
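The confidence-interval comparison in the first step can be sketched as below. This is a minimal illustration under stated assumptions: the interval uses a normal approximation (a t-based interval would be slightly wider for small samples), the function names are hypothetical, and the data are invented solely to exercise the code and do not come from the study.

```python
import math
import statistics


def ci99(values):
    """Two-sided 99% confidence interval for the mean (normal approximation)."""
    mean = statistics.fmean(values)
    sem = statistics.stdev(values) / math.sqrt(len(values))
    z = statistics.NormalDist().inv_cdf(0.995)  # about 2.576
    return mean - z * sem, mean + z * sem


def differs_from_reference(values, reference):
    """True when the reference edge falls outside the 99% interval."""
    low, high = ci99(values)
    return not (low <= reference <= high)


# Invented low-frequency edge positions (mm of shift) for eight imaginary
# listeners; these are NOT data from the experiment.
hypothetical_low_edges_mm = [1.0, 1.25, 1.5, 1.25, 1.0, 1.5, 1.25, 1.25]

# A reference edge at 0 mm lies outside the interval for these values.
is_different = differs_from_reference(hypothetical_low_edges_mm, 0.0)
```

The same check would be applied separately to the low- and high-frequency edges in each mismatch condition.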

RESULTS

Self-Selected Frequency Tables

The current data show that when inexperienced listeners are exposed to bilateral vocoded speech that simulates a bilateral mismatch (with the right ear being stimulated by higher frequency noise bands), and they are allowed to self-select a frequency table that maximizes bilateral speech intelligibility, they select tables that fall between the frequencies of the standard table and the right-ear noise bands. Figure 1 shows, for each of the four bilateral mismatch conditions (different panels), the frequency range of the noise bands (gray rectangles) and analysis filters (black rectangles) for both the left and right ears. In the 0- and 0.75-mm mismatch conditions, the self-selected table (rightmost black rectangle within each panel) did not differ significantly from the noise bands in the right ear. In these two conditions, the low-frequency and high-frequency edges of the right-ear noise bands fell within the 99% confidence interval for the low- and high-frequency end of the self-selected table, respectively, in the right ear. In contrast, for the 1.5- and 3-mm mismatch conditions, the self-selected tables were lower in frequency than the right-ear noise bands. For these conditions, the low-frequency edge of the noise bands fell outside of the 99% confidence interval of the self-selected tables. This discrepancy between the self-selected table and the right-ear noise bands was larger for the 3-mm condition than for the 1.5-mm condition. Thus, as the frequency of the right-ear noise bands was increased, resulting in mismatches of 1.5 and 3 mm with respect to the left ear, listeners selected right-ear tables that were also higher in frequency, but not as high as the right-ear noise bands.

An analysis of the three frequency-table selections made by each listener for a given mismatch condition suggests that there may be a small effect of the starting position on the self-selected table. Due to a data-recording error, we only had access to the individual selections made by 15 of the 31 listeners tested here. These data were then subjected to a 3 × 4 repeated measures analysis of variance on the starting position (lowest, highest, and mid frequency tables) and mismatch condition. Results of this analysis indicate a significant main effect of starting point [F(2,84) = 3.39, p = 0.048] and mismatch condition [F(3,84) = 76.318, p < 0.001], but no significant interaction between starting point and mismatch condition [F(6,84) = 0.91, p = 0.492]. The significant main effect for mismatch condition is consistent with the data in Figure 1 depicting selection of different frequency tables for the 1.5- and 3-mm mismatch conditions. Regarding the main effect of starting point, post hoc Holm–Sidak tests indicated no significant difference between any of the three starting points, although the difference between the lowest and highest frequency starting points approached statistical significance (p = 0.062). The other two comparisons were nonsignificant (low versus mid, p = 0.668; high versus mid, p = 0.106). These results suggest there may be a small effect of the starting position on the frequency table in which extremely high-frequency starting points are associated with frequency-table selections that are higher in frequency than those observed when the starting point is very low in frequency. However, because the self-selected table used in the word-recognition testing was an average of all three starting points (low, mid, and high), any small effect of starting point on frequency-table self-selection should not have influenced the word-recognition results.

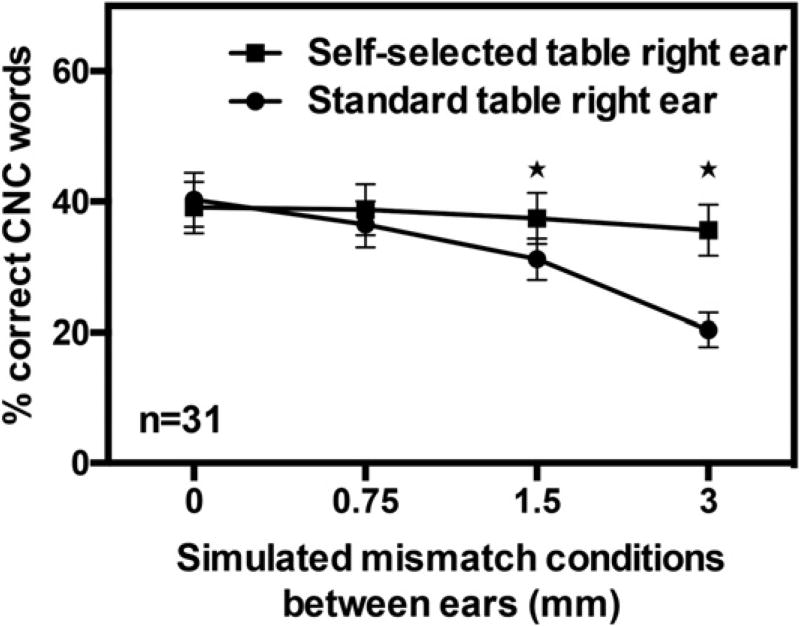

Speech Understanding

Figure 2 depicts the mean word-recognition scores for the four levels of simulated mismatch between ears for both the “standard” and “self-selected” frequency tables. The present data indicate that the ability to understand speech deteriorates significantly for simulated bilateral mismatches of 1.5 and 3 mm when a standard frequency table is used in an acute experiment. However, these deleterious effects associated with bilateral mismatches can be ameliorated when listeners are allowed to use a self-selected frequency table that was chosen to maximize bilateral intelligibility. Results of a 2 × 4 repeated measures analysis of variance on the word-recognition scores indicate a significant interaction between the simulated mismatch and the type of table [F(3,85) = 17.802, p < 0.001]. For simulated mismatches of 0 and 0.75 mm, there were no differences in the word-recognition scores when either the standard or the self-selected table was used in the right ear (Holm–Sidak test; p > 0.14 in each case). However, when the simulated mismatch was 1.5 or 3 mm, listeners performed significantly better when the “self-selected” table rather than the “standard” table was used in the right ear (Holm–Sidak test; p < 0.001 in each case). Similar results were observed for the phoneme-recognition scores [mismatch × table interaction; F(3,85) = 33.96, p < 0.001; Holm–Sidak post hoc tests p < 0.001 for simulated mismatches of 1.5 and 3 mm].

Figure 2.

Depicted here are the CNC word–recognition scores for the standard (squares) and self-selected (triangles) frequency tables. Error bars indicate ±1 standard error of the mean across listeners. Stars indicate mismatch conditions in which listeners performed significantly better with the self-selected table than with the standard table.

DISCUSSION

The present data are consistent with previous results indicating that sizable bilateral mismatches can yield speech-perception scores that are lower than those observed when listening unilaterally. This occurs when a standard filter bank is used for both ears, but the noise bands are significantly mismatched in such a way that one ear has a large discrepancy between the analysis filters and noise bands, whereas the second ear has a good correspondence between the analysis filters and noise bands. These decrements in speech perception are likely due to interference from the signal in the second ear, and are consistent with those observed in other investigations (Siciliano et al, 2010; Yoon et al, 2011; 2013; van Besouw et al, 2013). However, the detrimental effects of bilateral mismatches can be ameliorated when listeners adjust the analysis filters in one ear with the goal of maximizing bilateral speech intelligibility. This is notable, because speech-perception deficits associated with bilateral mismatches can be resistant to several hours of training (Siciliano et al, 2010).

It is possible that selection of frequency tables may have some clinical utility for recipients of bilateral CIs. For example, some bilateral CI recipients may have electrodes with differing insertion depths. It is also possible that electrodes with the same insertion depths in both ears may stimulate neural populations with different characteristic frequencies, due to differences in neural survival patterns across the two ears. In either instance, the same signal is likely to elicit a mismatch in the site of stimulation in each ear. If this occurs, recipients of bilateral CIs must learn to adapt to such bilateral mismatches to reach their best possible level of performance. The simulation data from Siciliano et al (2010) suggest that this may be a difficult process, although it should be noted that bilateral CI recipients use their devices daily for months or years; this additional experience may allow them to adapt at least in part to a bilateral mismatch. It should be kept in mind, however, that both the present study and the Siciliano et al study used acoustic models of CIs. Such models have received some validation (Svirsky et al, 2013) but studies using them must ultimately be confirmed in the target population of bilateral CI users.

Evidence for adaptation to bilateral frequency mismatch has been observed in two separate investigations of individual cases in which bilateral mismatches in electrode insertion depth were documented (Reiss et al, 2011; Svirsky, Fitzgerald, et al, 2015). Although some adaptation was noted in both instances, none of the participants in either study were able to adapt completely. The present data suggest that if a user of bilateral CIs cannot fully adapt to a bilateral mismatch, then allowing that individual to select a preferred frequency table in one ear might help to offset the negative effects of that mismatch. Evidence supporting that premise is limited to date, although data from two individuals show promise in this regard.

In one example (Svirsky, Fitzgerald, et al, 2015), one of the participants with a sizable mismatch in insertion depth was allowed to select a preferred frequency table in the ear with the shorter electrode insertion depth. This individual selected a table that differed considerably from the standard in a way that was consistent with the extremely shallow insertion of the electrode array. More important, the speech-understanding scores with the self-selected frequency table were higher than with the standard frequency table. Thus, in this instance, self-selection of a frequency table in one ear appeared to have benefitted that individual.

In a second report (Fitzgerald et al, 2015), a recipient of bilateral CIs was again allowed to select a preferred frequency table in one ear. This individual, when using the standard frequency table in each ear, reported hearing a single, fused percept on ~75% of trials when electrode 20 was stimulated simultaneously in both ears. On the remaining ~25% of trials, this individual reported hearing two percepts, one in each ear. These reports differ considerably from those of individuals with normal hearing, who almost exclusively report hearing a single, fused image when the same signal is simultaneously presented to both ears. When allowed to select a preferred frequency table in one ear that was perceived as maximizing speech intelligibility and “making the two ears blend together,” this individual selected a table (63–5188 Hz) that differed from the standard table (188–7938 Hz). After using the self-selected frequency table in that ear for one month, this individual was retested on the fusion task and perceived all signals as fused regardless of which electrode was stimulated. Moreover, fusion was achieved with no change in speech-understanding ability. Thus, self-selection of a preferred frequency table appears to have the potential to facilitate bilateral fusion in recipients of bilateral CIs. Although such results are promising, it remains to be seen whether self-selection of frequency tables could be used to facilitate the ability of CI recipients to localize sound by minimizing bilateral mismatch, or to maximize the likelihood of bilateral benefits to speech understanding. This approach merits further study given the well-documented decrements in the ability of bilateral CI recipients to use cues for sound-source location when sizable bilateral mismatches are imposed (van Hoesel and Clark, 1997; Long et al, 2003; Wilson et al, 2003; van Hoesel, 2004; Poon et al, 2009; Kan et al, 2013; 2015).

If self-selection of a preferred frequency table were to have clinical utility, the present data suggest that several runs should be taken with different starting points before identifying a self-selected table. A previous implementation of this approach using diotic listening revealed that within a single session, the frequency-table selections made by a given listener were largely consistent across different selections made with the same low-, mid-, and high-frequency starting points (largest difference between trials was equivalent to a distance of 3 mm along the cochlea; Fitzgerald et al, 2013). In addition, these data were no more variable than those obtained with a genetic algorithm (Başkent et al, 2007). With regard to the present data, we observed a similar degree of within-listener variability across the four mismatch conditions for low-, mid-, and high-frequency starting points. Specifically, 95% of all measurements (57 of 60) had between-trial variability of <1.5 mm in cochlear space, a value that is largely consistent with our previous observations. Moreover, two of the three instances with between-trial variability ≥1.5 mm came from the same listener, and in the other instance the largest between-trial variability was 1.75 mm in cochlear space. Given that each self-selection measurement requires only a few minutes (Fitzgerald et al, 2013), completing several runs and taking the average self-selected table seems feasible. One point to note, however, is that most listeners selected a table that was lower in frequency than the noise bands. This suggests that real-time frequency-table selections may be used to approximate the size of a mismatch within a given individual, but they may not yield the exact magnitude of a bilateral mismatch. For this procedure to identify the size of a bilateral mismatch, listeners would have needed to select a frequency table in the right ear that matched the noise bands in the right ear. Should a procedure akin to this prove to have clinical value, the inability to identify the magnitude of a mismatch is probably less relevant than the ability to help listeners overcome any deficits associated with such mismatches.
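As an illustration of how several runs might be summarized, the sketch below converts the frequency tables selected on repeated runs into cochlear place using the human constants of the Greenwood (1990) frequency-position function, then reports the between-trial spread in millimeters and an average table edge. It is a minimal sketch under stated assumptions, not the procedure used in this study: each selected table is represented only by its lower edge frequency, the averaging is done in cochlear millimeters rather than in hertz, and the function names and the three example selections are hypothetical.

```python
import math
from statistics import mean

# Human constants of the Greenwood (1990) function, with place in mm from the apex.
A, ALPHA, K = 165.4, 0.06, 0.88


def place_mm(f_hz):
    """Cochlear place (mm from the apex) for a frequency in Hz (inverse Greenwood)."""
    return math.log10(f_hz / A + K) / ALPHA


def freq_hz(place):
    """Frequency in Hz for a cochlear place in mm from the apex (Greenwood)."""
    return A * (10 ** (ALPHA * place) - K)


def summarize_runs(selected_low_edges_hz):
    """Summarize repeated self-selection runs for one listener and condition.

    Assumption: each run is represented by the lower edge (Hz) of the
    selected frequency table. Returns the between-trial spread in mm and
    the table edge corresponding to the mean cochlear place.
    """
    places = [place_mm(f) for f in selected_low_edges_hz]
    spread = max(places) - min(places)
    return {"spread_mm": spread, "mean_edge_hz": freq_hz(mean(places))}


# Hypothetical selections from low-, mid-, and high-frequency starting points.
runs = [250.0, 300.0, 350.0]
summary = summarize_runs(runs)
print(f"between-trial spread: {summary['spread_mm']:.2f} mm")
print(f"average lower edge:   {summary['mean_edge_hz']:.0f} Hz")
```

Expressing the spread in millimeters allows a direct comparison with the between-trial variability values discussed above, whereas a spread expressed in hertz would depend on where along the cochlea the selections fall.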

Other tools exist that could be used to recommend changes in the frequency table to account for bilateral mismatches, but these tools may not account for the behavioral percepts elicited by electrical stimulation over time. For example, use of an X-ray or computed tomography scan to estimate the location of the electrode array within the cochlea (Skinner et al, 1994) is the most direct way to identify a physical mismatch in between-ear electrode position. However, it fails to account for the possibility that a listener could partially, but not completely, adapt to a bilateral mismatch (Reiss et al, 2011; Svirsky, Fitzgerald, et al, 2015). In this instance, the self-selected frequency table may differ from one assigned on the basis of the electrode location and its position in relation to the frequency-to-place function of the cochlea (Greenwood, 1990; Stakhovskaya et al, 2007). A second approach is to use the binaural interaction component (BIC) of the evoked auditory brainstem response (Smith and Delgutte, 2007). This method is still experimental, but preliminary data thus far indicate that it can be recorded with large interaural offsets at high stimulation levels. This suggests that at high stimulation levels, there is a very broad pattern of neural activation (He et al, 2010). Perhaps more important, the BIC appears to be poorly correlated with pitch percepts elicited in each ear (He et al, 2012), suggesting that it may not be directly related to the behavioral percepts elicited by electrical stimulation. At present, there are few, if any, data suggesting that the BIC is associated with improved performance on behavioral measures in recipients of CIs. Taken together, these data indicate that additional work needs to be completed before the BIC could be used to recommend adjustments to the frequency table.
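To make the imaging-based alternative concrete, the sketch below shows how a place-based frequency assignment could be derived from an estimated electrode position using the Greenwood (1990) function. It is an illustrative sketch only. The nominal cochlear length of 35 mm, the insertion depths, the electrode count, and the electrode spacing are assumptions chosen for the example, and the function names are hypothetical. The Greenwood function describes the organ of Corti, so a spiral ganglion map (Stakhovskaya et al, 2007) would give different values.

```python
COCHLEA_LENGTH_MM = 35.0  # assumed nominal length, consistent with the constants below


def greenwood_hz(mm_from_apex):
    """Greenwood (1990) human frequency-position function; place in mm from the apex."""
    return 165.4 * (10 ** (0.06 * mm_from_apex) - 0.88)


def place_based_frequencies(insertion_depth_mm, n_electrodes, spacing_mm):
    """Characteristic frequency (Hz) at each electrode, most apical electrode first.

    insertion_depth_mm is the assumed distance from the base of the cochlea
    to the most apical electrode, as might be estimated from imaging.
    """
    freqs = []
    for i in range(n_electrodes):
        depth_from_base = insertion_depth_mm - i * spacing_mm
        freqs.append(greenwood_hz(COCHLEA_LENGTH_MM - depth_from_base))
    return freqs


# Hypothetical arrays: the right array is inserted 3 mm less deeply than the left.
left = place_based_frequencies(insertion_depth_mm=25.0, n_electrodes=8, spacing_mm=2.0)
right = place_based_frequencies(insertion_depth_mm=22.0, n_electrodes=8, spacing_mm=2.0)

for l_hz, r_hz in zip(left, right):
    print(f"left {l_hz:7.0f} Hz   right {r_hz:7.0f} Hz")
```

A table assigned in this way reflects only the physical position of the array. It carries no information about any partial adaptation by the listener, which is the limitation noted above.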

SUMMARY

In summary, speech perception in a bilateral acoustic model of cochlear implantation decreases as the mismatch in modeled electrode location reaches 1.5–3 mm. The negative effect of this mismatch can be substantially reduced by allowing listeners to select an alternative frequency table in one ear. In the present study, the selection was done while using a real-time speech processing system that listeners manipulated while hearing running speech and aiming to improve the intelligibility of the bilateral input. It is likely that this selection could be done in a number of different ways (such as with the use of a genetic algorithm) with similar results. The present results, considered in conjunction with studies conducted in recipients of bilateral CIs, suggest that the use of alternate frequency tables may be a promising approach to improving speech quality, intelligibility, or sound localization abilities in some users of bilateral CIs. This approach is likely to be particularly relevant for the subset of patients who are unable to completely adapt to bilateral frequency mismatch even after long-term exposure.

Acknowledgments

This research was supported by NIH/NIDCD grants K99/R00 DC009459 (awarded to MBF) and R01 DC003937 (awarded to MAS).

We thank Tasnim Morbiwala for her efforts in developing an earlier version of the real-time self-selection system used in this investigation.

Abbreviations

- BIC: binaural interaction component

- CI: cochlear implant

- CNC: consonant nucleus consonant

- SNR: signal-to-noise ratio

Footnotes

Portions of this work were presented at the 2009 Conference on Implantable Auditory Prostheses in Lake Tahoe, CA.

References

- Başkent D, Eiler CL, Edwards B. Using genetic algorithms with subjective input from human subjects: implications for fitting hearing aids and cochlear implants. Ear Hear. 2007;28(3):370–380. doi: 10.1097/AUD.0b013e318047935e.

- Blanks DA, Buss E, Grose JH, Fitzpatrick DC, Hall JW III. Interaural time discrimination of envelopes carried on high-frequency tones as a function of level and interaural carrier mismatch. Ear Hear. 2008;29(5):674–683. doi: 10.1097/AUD.0b013e3181775e03.

- Dorman MF, Loizou PC, Rainey D. Simulating the effect of cochlear-implant electrode insertion depth on speech understanding. J Acoust Soc Am. 1997;102(5 Pt 1):2993–2996. doi: 10.1121/1.420354.

- Faulkner A, Rosen S, Norman C. The right information may matter more than frequency-place alignment: simulations of frequency-aligned and upward shifting cochlear implant processors for a shallow electrode array insertion. Ear Hear. 2006;27(2):139–152. doi: 10.1097/01.aud.0000202357.40662.85.

- Fitzgerald MB, Kan A, Goupell MJ. Bilateral loudness balancing and distorted spatial perception in recipients of bilateral cochlear implants. Ear Hear. 2015;36(5):e225–e236. doi: 10.1097/AUD.0000000000000174.

- Fitzgerald MB, Morbiwala TA, Svirsky MA. Customized selection of frequency maps in an acoustic simulation of a cochlear implant. Conf Proc IEEE Eng Med Biol Soc. 2006;1:3596–3599. doi: 10.1109/IEMBS.2006.260462.

- Fitzgerald MB, Sagi E, Morbiwala TA, Tan CT, Svirsky MA. Feasibility of real-time selection of frequency tables in an acoustic simulation of a cochlear implant. Ear Hear. 2013;34(6):763–772. doi: 10.1097/AUD.0b013e3182967534.

- Francart T, Wouters J. Perception of across-frequency interaural level differences. J Acoust Soc Am. 2007;122(5):2826–2831. doi: 10.1121/1.2783130.

- Fu Q-J, Galvin JJ III. The effects of short-term training for spectrally mismatched noise-band speech. J Acoust Soc Am. 2003;113(2):1065–1072. doi: 10.1121/1.1537708.

- Fu Q-J, Shannon RV. Recognition of spectrally degraded and frequency-shifted vowels in acoustic and electric hearing. J Acoust Soc Am. 1999;105(3):1889–1900. doi: 10.1121/1.426725.

- Garadat SN, Litovsky RY, Yu G, Zeng FG. Effects of simulated spectral holes on speech intelligibility and spatial release from masking under binaural and monaural listening. J Acoust Soc Am. 2010;127(2):977–989. doi: 10.1121/1.3273897.

- Goupell MJ, Stoelb C, Kan A, Litovsky RY. Effect of mismatched place-of-stimulation on the salience of binaural cues in conditions that simulate bilateral cochlear-implant listening. J Acoust Soc Am. 2013;133(4):2272–2287. doi: 10.1121/1.4792936.

- Greenwood DD. A cochlear frequency-position function for several species—29 years later. J Acoust Soc Am. 1990;87(6):2592–2605. doi: 10.1121/1.399052.

- He S, Brown CJ, Abbas PJ. Effects of stimulation level and electrode pairing on the binaural interaction component of the electrically evoked auditory brain stem response. Ear Hear. 2010;31(4):457–470. doi: 10.1097/AUD.0b013e3181d5d9bf.

- He S, Brown CJ, Abbas PJ. Preliminary results of the relationship between the binaural interaction component of the electrically evoked auditory brainstem response and interaural pitch comparisons in bilateral cochlear implant recipients. Ear Hear. 2012;33(1):57–68. doi: 10.1097/AUD.0b013e31822519ef.

- Henning GB. Detectability of interaural delay in high-frequency complex waveforms. J Acoust Soc Am. 1974;55(1):84–90. doi: 10.1121/1.1928135.

- Kaiser AR, Svirsky MA. Using a personal computer to perform real-time signal processing in cochlear implant research. Proceedings of the IXth IEEE-DSP Workshop; Hunt, TX. October 15–18, 2000.

- Kan A, Litovsky RY, Goupell MJ. Effects of interaural pitch matching and auditory image centering on binaural sensitivity in cochlear implant users. Ear Hear. 2015;36(3):e62–e68. doi: 10.1097/AUD.0000000000000135.

- Kan A, Stoelb C, Litovsky RY, Goupell MJ. Effect of mismatched place-of-stimulation on binaural fusion and lateralization in bilateral cochlear-implant users. J Acoust Soc Am. 2013;134(4):2923–2936. doi: 10.1121/1.4820889.

- Loizou PC, Dorman M, Poroy O, Spahr T. Speech recognition by normal-hearing and cochlear implant listeners as a function of intensity resolution. J Acoust Soc Am. 2000;108(5 Pt 1):2377–2387. doi: 10.1121/1.1317557.

- Long CJ, Eddington DK, Colburn HS, Rabinowitz WM. Binaural sensitivity as a function of interaural electrode position with a bilateral cochlear implant user. J Acoust Soc Am. 2003;114(3):1565–1574. doi: 10.1121/1.1603765.

- Nuetzel JM, Hafter ER. Discrimination of interaural delays in complex waveforms: spectral effects. J Acoust Soc Am. 1981;69:1112.

- Peterson GE, Lehiste I. Revised CNC lists for auditory tests. J Speech Hear Disord. 1962;27:62–70. doi: 10.1044/jshd.2701.62.

- Poon BB, Eddington DK, Noel V, Colburn HS. Sensitivity to interaural time difference with bilateral cochlear implants: development over time and effect of interaural electrode spacing. J Acoust Soc Am. 2009;126(2):806–815. doi: 10.1121/1.3158821.

- Reiss LAJ, Lowder MW, Karsten SA, Turner CW, Gantz BJ. Effects of extreme tonotopic mismatches between bilateral cochlear implants on electric pitch perception: a case study. Ear Hear. 2011;32(4):536–540. doi: 10.1097/AUD.0b013e31820c81b0.

- Rosen S, Faulkner A, Wilkinson L. Adaptation by normal listeners to upward spectral shifts of speech: implications for cochlear implants. J Acoust Soc Am. 1999;106(6):3629–3636. doi: 10.1121/1.428215.

- Shannon RV, Zeng F-G, Wygonski J. Speech recognition with altered spectral distribution of envelope cues. J Acoust Soc Am. 1998;104(4):2467–2476. doi: 10.1121/1.423774.

- Siciliano CM, Faulkner A, Rosen S, Mair K. Resistance to learning binaurally mismatched frequency-to-place maps: implications for bilateral stimulation with cochlear implants. J Acoust Soc Am. 2010;127(3):1645–1660. doi: 10.1121/1.3293002.

- Skinner MW, Ketten DR, Vannier MW, Gates GA, Yoffie RL, Kalender WA. Determination of the position of nucleus cochlear implant electrodes in the inner ear. Am J Otol. 1994;15(5):644–651.

- Smith ZM, Delgutte B. Using evoked potentials to match interaural electrode pairs with bilateral cochlear implants. J Assoc Res Otolaryngol. 2007;8(1):134–151. doi: 10.1007/s10162-006-0069-0.

- Stakhovskaya O, Sridhar D, Bonham BH, Leake PA. Frequency map for the human cochlear spiral ganglion: implications for cochlear implants. J Assoc Res Otolaryngol. 2007;8(2):220–233. doi: 10.1007/s10162-007-0076-9.

- Svirsky MA, Ding N, Sagi E, Tan CT, Fitzgerald M, Glassman EK, Seward K, Neuman AC. Validation of acoustic models of auditory neural prostheses. Proc IEEE Int Conf Acoust Speech Signal Process. 2013:8629–8633. doi: 10.1109/ICASSP.2013.6639350.

- Svirsky MA, Fitzgerald MB, Sagi E, Glassman EK. Bilateral cochlear implants with large asymmetries in electrode insertion depth: implications for the study of auditory plasticity. Acta Otolaryngol. 2015;135(4):354–363. doi: 10.3109/00016489.2014.1002052.

- Svirsky MA, Talavage TM, Sinha S, Neuburger H, Azadpour M. Gradual adaptation to auditory frequency mismatch. Hear Res. 2015;322:163–170. doi: 10.1016/j.heares.2014.10.008.

- van Besouw RM, Forrester L, Crowe ND, Rowan D. Simulating the effect of interaural mismatch in the insertion depth of bilateral cochlear implants on speech perception. J Acoust Soc Am. 2013;134(2):1348–1357. doi: 10.1121/1.4812272.

- van Hoesel RJ. Exploring the benefits of bilateral cochlear implants. Audiol Neurootol. 2004;9(4):234–246. doi: 10.1159/000078393.

- van Hoesel RJ, Clark GM. Psychophysical studies with two binaural cochlear implant subjects. J Acoust Soc Am. 1997;102(1):495–507. doi: 10.1121/1.419611.

- van de Par S, Kohlrausch A. A new approach to comparing binaural masking level differences at low and high frequencies. J Acoust Soc Am. 1997;101(3):1671–1680. doi: 10.1121/1.418151.

- Wilson BS, Lawson DT, Müller JM, Tyler RS, Kiefer J. Cochlear implants: some likely next steps. Annu Rev Biomed Eng. 2003;5:207–249. doi: 10.1146/annurev.bioeng.5.040202.121645.

- Yoon YS, Liu A, Fu Q-J. Binaural benefit for speech recognition with spectral mismatch across ears in simulated electric hearing. J Acoust Soc Am. 2011;130(2):EL94–EL100. doi: 10.1121/1.3606460.

- Yoon YS, Shin Y-R, Fu Q-J. Binaural benefit with and without a bilateral spectral mismatch in acoustic simulations of cochlear implant processing. Ear Hear. 2013;34(3):273–279. doi: 10.1097/AUD.0b013e31826709e8.