Abstract

We derive the asymptotic distributions of the spiked eigenvalues and eigenvectors under a generalized and unified asymptotic regime, which takes into account the magnitude of spiked eigenvalues, sample size, and dimensionality. This regime allows high dimensionality and diverging eigenvalues and provides new insights into the roles that the leading eigenvalues, sample size, and dimensionality play in principal component analysis. Our results are a natural extension of those in Paul (2007) to a more general setting and solve the rates of convergence problems in Shen et al. (2013). They also reveal the biases of estimating leading eigenvalues and eigenvectors by using principal component analysis, and lead to a new covariance estimator for the approximate factor model, called shrinkage principal orthogonal complement thresholding (S-POET), that corrects the biases. Our results are successfully applied to outstanding problems in estimation of risks of large portfolios and false discovery proportions for dependent test statistics and are illustrated by simulation studies.

Keywords and phrases: Asymptotic distributions, Principal component analysis, Spiked covariance model, Diverging eigenvalues, Approximate factor model, Relative risk management, False discovery proportion

1. Introduction

Principal Component Analysis (PCA) is a powerful tool for dimensionality reduction and data visualization. Its theoretical properties, such as the consistency and asymptotic distributions of the empirical eigenvalues and eigenvectors, are challenging to establish, especially in the high dimensional regime. Over the past half century, a substantial amount of effort has been devoted to understanding the empirical eigen-structure. An early effort came from Anderson (1963), who established the asymptotic normality of sample eigenvalues and eigenvectors under the classical regime with large sample size n and fixed dimension p. However, when the dimensionality diverges at the same rate as the sample size, the sample covariance matrix is a notoriously bad estimator, with an eigen-structure dramatically different from the population one. Much recent literature seeks to understand the behavior of the empirical eigenvalues and eigenvectors under the high dimensional regime where both n and p go to infinity. See for example Baik, Ben Arous and Péché (2005); Bai (1999); Paul (2007); Johnstone and Lu (2009); Onatski (2012); Shen et al. (2013) and many related papers. For additional developments and references, see Bai and Silverstein (2009).

Among different structures of population covariance, the spiked covariance model is of great interest. It typically assumes that several eigenvalues are larger than the rest, and focuses on recovering only these leading eigenvalues and their associated eigenvectors. The spiked part is important, as we are usually interested in the directions that explain the most variation in the data. In this paper, we consider a high dimensional spiked covariance model with the leading eigenvalues larger than the rest. We provide a new understanding of how the spiked empirical eigenvalues and eigenvectors fluctuate around their theoretical counterparts and what their asymptotic biases are. Three quantities play an essential role in determining the asymptotic behavior of the empirical eigen-structure: the sample size n, the dimension p, and the magnitude of the leading eigenvalues λj. The natural question to ask is how the asymptotics of the empirical eigen-structure depend on the interplay of those quantities. We will give a unified answer to this important question in principal component analysis. Theoretical properties of PCA have been investigated from three different perspectives: (i) random matrix theories, (ii) sparse PCA and (iii) approximate factor model.

The first angle to analyze PCA is through random matrix theories, where it is typically assumed p/n → γ ∈ (0, ∞) with bounded spike sizes. It is well known that if the true covariance matrix is the identity, the empirical spectral distribution converges almost surely to the Marčenko-Pastur distribution (Bai, 1999) and when γ < 1 the largest and smallest eigenvalues converge almost surely to (1 + √γ)² and (1 − √γ)² respectively (Bai and Yin, 1993; Johnstone, 2001). If the true covariance structure takes the form of a spiked matrix, Baik, Ben Arous and Péché (2005) showed that the asymptotic distribution of the top empirical eigenvalue exhibits an n^{2/3} scaling when the eigenvalue lies below the threshold 1 + √γ, and an n^{1/2} scaling when it is above the threshold (named the BBP phase transition after the authors). The phase transition is further studied by Benaych-Georges and Nadakuditi (2011) and Bai and Yao (2012) under more general assumptions. For the case where we have the regular scaling, Paul (2007) investigated the asymptotic behavior of the corresponding empirical eigenvectors and showed that the major part of an eigenvector is normally distributed with a regular scaling n^{1/2}. The convergence of principal component scores under this regime was considered by Lee, Zou and Wright (2010). The same random matrix regime has also been considered by Onatski (2012) in studying the principal component estimator for high-dimensional factor models. More recently, Koltchinskii and Lounici (2014a,b) revealed a profound link of concentration bounds of empirical eigen-structure with the effective rank defined as r̄ = tr(Σ)/λ1 (Vershynin, 2010). Their results extend the regime of bounded eigenvalues to a more general setting, although the asymptotic results in most cases still rely on the assumption r̄ = o(n), which essentially requires a low dimensionality, i.e. p/n → 0, if λ1 is bounded. In this paper, we consider the general regime of bounded p/(nλ1), which implies r̄ = O(n) and allows diverging λ1. More discussions will be given in Section 3.

A second line of efforts is through sparse PCA. According to Johnstone and Lu (2009), PCA does not generate consistent estimators for leading eigenvectors if p/n → γ ∈ (0, 1) with bounded eigenvalues. This motivates the development of sparse PCA, which leverages the extra assumption on the sparsity of eigenvectors. A large literature has contributed to the topic of sparse PCA, for example Amini and Wainwright (2008); Vu and Lei (2012); Birnbaum et al. (2013); Berthet and Rigollet (2013); Ma (2013). Specifically, Vu and Lei (2012) derived the optimal bound for the minimax estimation error of the first sparse leading eigenvector, while Cai, Ma and Wu (2015) conducted a more thorough study of the minimax optimal rates for estimating the top eigenvalues and eigenvectors of spiked covariance matrices with jointly k-sparse eigenvectors. This type of work assumes bounded eigenvalues, which ignores the contributions of the strong signals present in the data in many real applications. To make the problem solvable, sparsity assumptions on the eigenvectors are imposed. In contrast, driven by applications such as genomics, economics, and finance, this paper studies the contributions of the diverging eigenvalues (signals) to the estimation of their associated eigenvectors, without relying on sparsity assumptions on the eigenvectors.

In order to illustrate the third perspective, let us briefly review the approximate factor model (Bai, 2003; Fan, Liao and Mincheva, 2013) and see how the spiked eigenvalues arise naturally from the model. Consider the following data generating model:

$$y_t = B f_t + \varepsilon_t,$$

where yt is a p-dimensional vector observed at time t, ft ∈ ℝ^m is the vector of latent factors that drive the cross-sectional dependence at time t, B is the matrix of the corresponding loading coefficients, and εt is the idiosyncratic part that cannot be explained by the factors. Assume without loss of generality that var(ft) = Im, the m × m identity matrix. Then, the model implies Σ = var(yt) = BB′ + Σε, where Σε = var(εt). It admits a low-rank plus sparse structure when Σε is assumed to be sparse (Fan, Fan and Lv, 2008; Fan, Liao and Mincheva, 2013). The recovery of the low-rank and sparse matrices was considered thoroughly by Candès et al. (2011) and Chandrasekaran et al. (2011) under the incoherence condition in the noiseless setting and by Agarwal, Negahban and Wainwright (2012) in the noisy case. If the factor loadings {bi}i≤p (the transpose of rows of B) are i.i.d. samples from a population with mean zero and covariance Σb [this is a pervasive assumption commonly used in the factor models (Fan, Liao and Mincheva, 2013)], then by the law of large numbers, p^{−1}B′B = p^{−1}∑i≤p bibi′ → Σb as p → ∞. In other words, the eigenvalues of BB′ are approximately

$$p\,\lambda_1(\Sigma_b),\ \ldots,\ p\,\lambda_m(\Sigma_b),\ 0,\ \ldots,\ 0,$$

where λj(Σb) is the jth eigenvalue of Σb. Then, by Weyl’s theorem, we conclude that the eigenvalues of Σ satisfy

$$\lambda_j = p\,\lambda_j(\Sigma_b)\,(1 + o(1)), \quad j = 1, \ldots, m, \tag{1.1}$$

and the remaining eigenvalues are bounded if ‖Σε‖ is bounded. Therefore, the factor model implies a spiked covariance with diverging leading eigenvalues. Fan, Liao and Mincheva (2013) showed that if the leading eigenvalues grow linearly with the dimension, then the corresponding eigenvectors can be consistently estimated as long as the sample size goes to infinity. See Section 4 for more details.
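The argument above can be checked numerically. The following is a minimal sketch, not part of the original analysis: it assumes Gaussian loadings, a hypothetical diagonal Σb with eigenvalues 4 and 1, and Σε = I. It computes the eigenvalues of Σ = BB′ + Σε for growing p and compares the leading ones, divided by p, with the eigenvalues of Σb.

```python
import numpy as np

def factor_cov_eigs(p, Sigma_b, rng):
    """Return (top-m eigenvalues, (m+1)th eigenvalue) of Sigma = B B' + I_p."""
    m = Sigma_b.shape[0]
    chol = np.linalg.cholesky(Sigma_b)
    B = rng.standard_normal((p, m)) @ chol.T   # rows b_i are i.i.d. N(0, Sigma_b)
    Sigma = B @ B.T + np.eye(p)                # Sigma_eps = I_p (illustrative assumption)
    eigs = np.linalg.eigvalsh(Sigma)[::-1]     # eigenvalues in descending order
    return eigs[:m], eigs[m]

rng = np.random.default_rng(0)
Sigma_b = np.diag([4.0, 1.0])                  # hypothetical loading covariance, m = 2
scaled_spikes = {}
next_eigs = {}
for p in (100, 400, 800):
    top, nxt = factor_cov_eigs(p, Sigma_b, rng)
    scaled_spikes[p] = top / p                 # should approach the eigenvalues of Sigma_b
    next_eigs[p] = nxt                         # remains bounded (equals 1 in this setup)
    print(p, scaled_spikes[p], nxt)
```

In this setup the first m eigenvalues scale linearly in p while the (m+1)th stays at 1, because BB′ has rank m.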

Deviating from the classical random matrix and sparse PCA literature, we consider the high dimensional regime, allowing p/n → ∞. To take into account the contributions of the signals for PCA, we allow λj → ∞ for the first m leading eigenvalues. This leads to the third perspective for understanding PCA from this high dimensional setting. Shen et al. (2013) adopted this point of view and considered the regime of p/(nλj) → γj where 0 ≤ γj < ∞ for the leading eigenvalues. This is more general than the bounded eigenvalue condition. Specifically, if the eigenvalues are bounded, we require the ratio p/n to converge to a bounded constant, as in the random matrix regime. On the other hand, if the dimension is much larger than the sample size, we offset the dimensionality by assuming increased signals or sample size, without the additional sparse eigenvector assumption of the sparse PCA regime. In particular, as shown in (1.1), the strong (or pervasive) factors considered in financial applications correspond to γj = 0 with the leading eigenvalues λj ≍ p; see for example Stock and Watson (2002); Bai (2003); Bai and Ng (2002); Fan, Liao and Mincheva (2013); Fan, Liao and Wang (2016). The weak or semi-strong factors considered by De Mol, Giannone and Reichlin (2008) and Onatski (2012) also imply bounded p/(nλ1), with p/n bounded and λj ≍ p^θ for some θ ∈ [0, 1).

Hall, Marron and Neeman (2005) and Jung and Marron (2009) initiated research on the high dimension, low sample size (HDLSS) regime. With n fixed, Jung and Marron (2009) concluded that consistency of the leading eigenvalues and eigenvectors is guaranteed if λj ≍ p^θ for θ > 1, which also corresponds to γj = 0. Shen et al. (2013) revealed the interesting fact that when γj ≠ 0, the spiked sample eigenvalues converge almost surely to a biased version of the true eigenvalues; furthermore, the corresponding sample eigenvectors show an asymptotic conical structure. However, their work focuses only on the consistency problem. In this study, we consider the same regime as theirs, but focus on the rates of convergence and the asymptotic distributions of the empirical eigen-structure, under more relaxed conditions. Our results can be viewed as a natural extension of Paul (2007) to the high dimensional setting.

We now emphasize the scope and importance of our contributions. Firstly, the regime we consider in this paper is p/(nλj) → γj ∈ [0, ∞) for j ≤ m, which permits high dimensionality p/n → ∞ and diverging eigenvalues without specifying their divergence rates. As we have argued, this encompasses many situations considered in the existing literature. It places the typical random matrix regime with bounded eigenvalues and the HDLSS analysis with fixed sample size in the same framework. Secondly, the contributions of diverging eigenvalues are recognized and accounted for in our theoretical developments. This avoids the restrictive assumptions on sparse eigenvectors. PCA without sparsity assumptions has been widely used in diverse fields such as population association study (Yamaguchi-Kabata et al., 2008), genome-wide association study (Ringnér, 2008), microarray data (Landgrebe, Wurst and Welzl, 2002; Price et al., 2006), fMRI data (Thomas, Harshman and Menon, 2002), and financial returns (Chen and Shimerda, 1981; Chamberlain and Rothschild, 1983). Our efforts contribute to a theoretical understanding of why such a plain PCA works in these diverse fields. Finally, by allowing certain generality, we gain theoretical insights into how n, p and the signal strength λj interplay.

The results are useful in two ways. On the one hand, they help quantify the biases of the empirical eigen-structure and explain where they come from. Specifically, in Theorem 3.1, the bias of the jth sample eigenvalue (j ≤ m) is quantified by p/(nλj), which is also shown by Yata and Aoshima (2012, 2013) under different assumptions on the spiked covariance model. Our novel contribution lies in Theorem 3.2, which reveals the bias of the jth sample eigenvector (j ≤ m). In (3.7), we provide a decomposition of each empirical eigenvector into a spiked part, which converges to the true eigenvector with a deflation factor also quantified by p/(nλj), and a non-spiked part, which creates a random bias distributed uniformly on an ellipse. More details will be presented in Section 3. On the other hand, the theoretical results provide new technical tools for analyzing factor models, which motivate the study. As we have seen, although it is natural to assume that the eigenvalues grow linearly with the dimension, the assumption imposes a strong signal. Note that when p/(nλj) → 0, no biases will occur. So in Section 4, we consider relaxing the order of spikes to slightly faster than . By correcting the biases, we propose a new method called Shrinkage Principal Orthogonal complEment Thresholding (S-POET) and apply it to two problems: risk assessment of large portfolios (Pesaran and Zaffaroni, 2008; Fan, Liao and Shi, 2015) and false discovery proportion estimation for dependent test statistics (Leek and Storey, 2008; Fan, Han and Gu, 2012). Existing methodologies for those two problems rely on a rather strong signal level, but we are able to relax it with the help of S-POET.

The paper is organized as follows. Section 2 introduces the notations, assumptions, and an important fact which serves as the basis of our proofs. Sections 3.1 and 3.2 are devoted to the theoretical results for the sample eigenvalues and eigenvectors of the spiked covariance matrix. In Section 4, we discuss several applications of the theories in the previous section. Simulations are conducted in Section 5 to demonstrate the theoretical results in finite samples and the performance of S-POET. Section 6 provides concluding remarks. The proofs for Section 3 are provided in the appendix and those for Section 4 are relegated to the supplementary material.

2. Assumptions and a simple fact

Assume that Y1, …, Yn is a sequence of i.i.d. p-dimensional random vectors with zero mean and covariance matrix Σp×p. Let λ1, …, λp be the eigenvalues of Σ in descending order. We consider the spiked covariance model as follows.

Assumption 2.1

λ1 > λ2 > ⋯ > λm > λm+1 ≥ ⋯ ≥ λp > 0, where the non-spiked eigenvalues are bounded, i.e. c0 ≤ λj ≤ C0, j > m for constants c0, C0 > 0 and the spiked eigenvalues are well separated, i.e. ∃ δ0 > 0 such that minj≤m(λj − λj+1)/λj ≥ δ0.

The eigenvalues are divided into the spiked ones and bounded non-spiked ones. We do not need to specify the order of the leading eigenvalues nor require them to diverge. Thus, our results in Section 3 are applicable to both bounded and diverging leading eigenvalues. For simplicity, we only consider distinguishable eigenvalues (multiplicity 1) for the largest m eigenvalues and a fixed number m, independent of n and p.

The factor model y = Bf + ε with pervasive factors considered in Fan, Liao and Mincheva (2013) implies a spiked covariance model with λj ≍ p in (1.1) and satisfies the above assumption. For the interplay of the sample size n, the dimension p and the spikes λj’s, the following relationship is assumed as in Shen et al. (2013).

Assumption 2.2

Assume p > n. For the spiked part 1 ≤ j ≤ m, cj = p/(nλj) is bounded, and for the non-spiked part, (p − m)^{−1} ∑j>m λj = c̄ + o(n^{−1/2}).

We allow p/n → ∞ in any manner, though λj also needs to grow fast enough to ensure bounded cj. In particular, cj = o(1) is allowed, as in the factor model. Unlike most of the spiked covariance literature (e.g. Paul (2007); Johnstone and Lu (2009)), we do not assume that the non-spiked eigenvalues are identical.

By spectral decomposition, Σ = ΓΛΓ′, where the orthonormal matrix Γ is constructed by the eigenvectors of Σ and Λ = diag(λ1, …, λp). Let Xi = Γ′Yi. Since the empirical eigenvalues are invariant and the empirical eigenvectors are equivariant under an orthonormal transformation, we focus the analysis on the transformed domain of Xi and then translate the results into those of the original data. Note that var(Xi) = Λ. Let Zi = Λ^{−1/2}Xi be the elementwise standardized random vector.

Assumption 2.3

Z1, …, Zn are i.i.d. copies of Z. The standardized random vector Z = (Z1, …, Zp) is sub-Gaussian with independent entries of mean zero and variance one. The sub-Gaussian norms of all components are uniformly bounded: maxj ‖Zj‖ψ2 ≤ C0, where ‖Zj‖ψ2 = supq≥1 q^{−1/2}(E|Zj|^q)^{1/q}.

Since Var(Xi) = diag(λ1, λ2, …, λp), the first m population eigenvectors are simply unit vectors e1, e2, …, em with only one nonvanishing element. Denote the n by p transformed data matrix by X = (X1, X2, …, Xn)′. Then the sample covariance matrix is

$$\hat\Sigma = \frac{1}{n} X'X = \frac{1}{n}\sum_{i=1}^{n} X_i X_i',$$

whose eigenvalues are denoted as λ̂1, λ̂2, …, λ̂p (λ̂j = 0 for j > n) with corresponding eigenvectors ξ̂1, ξ̂2, …, ξ̂p. Note that the empirical eigenvectors of the data Yi’s are Γξ̂j, j = 1, …, p.

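Let Zj be the jth column of the standardized X. Then each Zj has i.i.d. sub-Gaussian entries with zero mean and unit variance. Exchanging the roles of rows and columns, we get the n by n Gram matrix

$$\tilde\Sigma = \frac{1}{n} X X' = \frac{1}{n}\sum_{j=1}^{p} \lambda_j Z_j Z_j',$$

with the same nonzero eigenvalues λ̂1, λ̂2, …, λ̂n as Σ̂ and the corresponding eigenvectors u1, u2, …, un. It is well known that for i = 1, 2, …, n

$$\hat\xi_i = (n\hat\lambda_i)^{-1/2} X' u_i \quad\text{and}\quad u_i = (n\hat\lambda_i)^{-1/2} X \hat\xi_i, \tag{2.1}$$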

while the other eigenvectors of Σ̂ constitute a (p−n)-dimensional orthogonal complement of ξ̂1, …, ξ̂n.
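The duality between the p × p sample covariance and the n × n Gram matrix is easy to verify numerically. The sketch below assumes the standard relation ξ̂i = (nλ̂i)^{−1/2}X′ui; the dimensions, the two spike values and the Gaussian data are illustrative choices, not taken from the paper.

```python
import numpy as np

rng = np.random.default_rng(1)
n, p = 20, 200
# Illustrative diagonal covariance: two spikes (50 and 20), unit non-spiked eigenvalues.
scale = np.sqrt(np.r_[50.0, 20.0, np.ones(p - 2)])
X = rng.standard_normal((n, p)) * scale        # rows X_i with var(X_i) = Lambda

S_hat = X.T @ X / n                            # p x p sample covariance
G = X @ X.T / n                                # n x n Gram matrix

lam_G, U = np.linalg.eigh(G)                   # ascending order
lam_G, U = lam_G[::-1], U[:, ::-1]             # reorder to descending
lam_S = np.linalg.eigvalsh(S_hat)[::-1]

# Map Gram eigenvectors u_i to covariance eigenvectors xi_i = (n lam_i)^{-1/2} X' u_i.
Xi = X.T @ U / np.sqrt(n * lam_G)

eig_gap = np.max(np.abs(lam_S[:n] - lam_G))            # nonzero eigenvalues coincide
residual = np.max(np.abs(S_hat @ Xi - Xi * lam_G))     # S_hat xi_i = lam_i xi_i
orth_err = np.max(np.abs(Xi.T @ Xi - np.eye(n)))       # the xi_i are orthonormal
print(eig_gap, residual, orth_err)
```

When p is much larger than n, working with the n × n matrix is also much cheaper than decomposing the p × p one.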

By using this simple fact, for the specific case with c0 = C0 = 1 in Assumption 2.1, λj = 1 for j > m in Assumption 2.2, and Gaussian data in Assumption 2.3, Shen et al. (2013) showed that

$$\frac{\hat\lambda_j}{\lambda_j} \xrightarrow{\text{a.s.}} 1 + c_j, \quad j = 1, \ldots, m,$$

and

$$|\langle \hat\xi_j, e_j \rangle| \xrightarrow{\text{a.s.}} (1 + c_j)^{-1/2}, \quad j = 1, \ldots, m,$$

where 〈a, b〉 denotes the inner product of two vectors. However, they did not establish any results on convergence rates or asymptotic distributions of the empirical eigen-structure. This motivates the current paper.

The aim of this paper is to establish the asymptotic normality of the empirical eigenvalues and eigenvectors under more relaxed conditions. Our results are a natural extension, to a more general setting and with new insights, of those of Paul (2007), who derived the asymptotic normality of sample eigenvectors using complicated random matrix techniques for Gaussian data under the regime of p/n → γ ∈ [0, 1). In comparison, our proof, based on the relationship (2.1), is much simpler and more insightful for understanding the behavior of high dimensional PCA.

Here are some notations that we will use in the paper. For a general matrix M, we denote its entry-wise max norm as ‖M‖max = maxi,j{|Mi,j|} and define the quantities ‖M‖ = λmax^{1/2}(M′M), ‖M‖F = (∑i,j Mi,j²)^{1/2} and ‖M‖∞ = maxi ∑j |Mi,j| to be its spectral, Frobenius and induced ℓ∞ norms. If M is symmetric, we define λj(M) to be the jth largest eigenvalue of M and λmax(M), λmin(M) to be the maximal and minimal eigenvalues respectively. We denote tr(M) as the trace of M. For any vector v, its ℓ2 norm is represented by ‖v‖ while its ℓ1 norm is written as ‖v‖1. We use diag(v) to denote the diagonal matrix with the same diagonal entries as v. For two random vectors a, b of the same length, we say a = b + OP(δ) if ‖a − b‖ = OP(δ) and a = b + oP(δ) if ‖a − b‖ = oP(δ). We write a ⇒ ℒ for some distribution ℒ if there exists b ~ ℒ such that a = b + oP(1). Throughout the paper, C is a generic constant that may differ from line to line.

3. Asymptotic behavior of empirical eigen-structure

3.1. Asymptotic normality of empirical eigenvalues

Let us first study the behavior of the leading m empirical eigenvalues of Σ̂. Denote by λj(A) the jth largest eigenvalue of matrix A and recall that λ̂j = λj(Σ̂). We have the following asymptotic normality of λ̂j.

Theorem 3.1

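Under Assumptions 2.1 – 2.3, λ̂1, …, λ̂m have independent limiting distributions. In addition,

$$\sqrt{n}\left\{\frac{\hat\lambda_j}{\lambda_j} - \left(1 + \bar c\, c_j + O_P\!\left(\lambda_j^{-1}\sqrt{p/n}\right)\right)\right\} \Rightarrow N(0, \kappa_j - 1), \tag{3.1}$$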

where κj is the kurtosis of Xj.

The theorem shows that the bias of λ̂j/λj is c̄cj + OP(λj^{−1}√(p/n)). The second term is dominated by the first term since p > n, and it is of order oP(n^{−1/2}) if √p = o(λj). The latter assumption is satisfied by the strong factor model in Fan, Liao and Mincheva (2013) and a part of the weak or semi-strong factor model in Onatski (2012). The theorem reveals that the bias is controlled by a term of rate p/(nλj). To get an asymptotically unbiased estimate, it requires cj = p/(nλj) → 0 for j ≤ m. This result is more general than that of Shen et al. (2013) and sheds a similar light to that of Koltchinskii and Lounici (2014a,b), i.e. ‖Σ̂ − Σ‖/‖Σ‖ → 0 almost surely if and only if the effective rank r̄ = tr(Σ)/λ1 is of order o(n), which is true when c1 = o(1). Our result here holds for each individual spike. Yata and Aoshima (2012, 2013) employed a similar technical trick and gave a comprehensive study of the asymptotic consistency and distributions of the eigenvalues. They obtained similar results under conditions different from ours. Our framework is more general here. If cj ↛ 0, bias reduction can also be made; see Section 4.2, where an estimator for c̄ is proposed. Under the bounded spiked covariance model considered in Baik, Ben Arous and Péché (2005), Johnstone and Lu (2009) and Paul (2007), it is assumed λj = c0 = C0, j > m so that c̄ = c0, the minimum eigenvalue of the population covariance matrix. Our result is also consistent with Anderson (1963)’s result that

$$\sqrt{n}\,(\hat\lambda_j - \lambda_j) \Rightarrow N(0, 2\lambda_j^2)$$

for Gaussian data and fixed p and λj’s, where the non-spiked part does not exist and thus the bias disappears. The proof is relegated to the appendix.
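The eigenvalue bias can be illustrated with a small Monte Carlo experiment. This is only a sketch under stated assumptions: Gaussian data, a single spike (m = 1), unit non-spiked eigenvalues so that c̄ = 1, and hypothetical values n = 100, p = 1000, λ1 = 50. It assumes the centering of λ̂1/λ1 is 1 + c̄c1 with c1 = p/(nλ1).

```python
import numpy as np

def mean_eigen_ratio(n, p, lam1, reps, rng):
    """Monte Carlo mean of lam_hat_1 / lam_1 for one spike and unit noise eigenvalues."""
    scale = np.ones(p)
    scale[0] = np.sqrt(lam1)
    ratios = np.empty(reps)
    for r in range(reps):
        X = rng.standard_normal((n, p)) * scale
        gram = X @ X.T / n                     # shares its nonzero eigenvalues with X'X / n
        ratios[r] = np.linalg.eigvalsh(gram)[-1] / lam1
    return ratios.mean()

rng = np.random.default_rng(2)
n, p, lam1 = 100, 1000, 50.0                   # hypothetical sizes
c1 = p / (n * lam1)                            # c_1 = p / (n * lambda_1) = 0.2
ratio = mean_eigen_ratio(n, p, lam1, reps=200, rng=rng)
print("simulated mean ratio:", ratio, " centering 1 + c1:", 1 + c1)
```

The simulated ratio sits near 1 + c1 rather than 1, which is the upward bias that a shrinkage correction aims to remove.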

3.2. Behavior of empirical eigenvectors

Let us consider the asymptotic distribution of the empirical eigenvectors ξ̂j’s corresponding to λ̂j, j = 1, 2, …, m. As in Paul (2007), each ξ̂j is divided into two parts corresponding to the spiked and non-spiked components, i.e. ξ̂j = (ξ̂jA′, ξ̂jB′)′, where ξ̂jA is of length m.

Theorem 3.2

Under Assumptions 2.1 – 2.3, we have

(i) For the spiked part, if m = 1,

while if m > 1,

| (3.2) |

for j = 1, 2, …, m, with

| (3.3) |

where [m] = {1, ⋯, m}, ekA is the first m elements of the unit vector ek, and , which is assumed to exist.

(ii) For the non-spiked part, if we further assume the data is Gaussian, there exists a (p − m)-dimensional vector h0 ~ Unif (Bp−m(1)) such that

| (3.4) |

where is a diagonal matrix and Unif (Bk(r)) denotes the uniform distribution over the centered sphere of radius r. In addition, the max norm of ξ̂jB satisfies

| (3.5) |

(iii) Furthermore, and . Together with (i), this implies the inner product between the empirical eigenvector and the population one converges to (1 + c̄cj)^{−1/2} in probability and

| (3.6) |

In the above theory, we assume exists. This is not restrictive if eigenvalues are well separated i.e. minj≠k≤m |λj − λk|/λj ≥ δ0 from assumption 2.1. The assumption obviously holds for the pervasive factor model, in which .

Theorem 3.2 is an extension of random matrix results into high dimensional regime. Its proof sheds light on how to use the smaller n × n matrix Σ̃ as a tool to understand the behavior of the larger p × p covariance matrix Σ̂. Specifically, we start from Σ̃uj = λ̂juj or identity (A.3) and then use the simple fact (2.1) to get a relationship (A.4) of eigenvector ξ̂j. Then (A.4) is rearranged as (A.6) which gives a clear separation of the dominating term, that is asymptotically normal, and the error term. This makes the whole proof much simpler in comparison with Paul (2007) who showed a similar type of result through a complicated representation of ξ̂j and λ̂j under more restricted assumptions. From this simple trick, we can understand deeply how some important high and low dimensional quantities link together and differ from each other.

Several remarks are in order. Firstly, since Γξ̂j is the jth empirical eigenvector based on the observed data Y, we have the decomposition

$$\Gamma\hat\xi_j = \Gamma_A\hat\xi_{jA} + \Gamma_B\hat\xi_{jB}, \tag{3.7}$$

where Γ = (ΓA, ΓB). Note that ΓAξ̂jA converges to the true eigenvector deflated by a factor of with the convergence rate while ΓBξ̂jB creates a random bias, which is distributed uniformly on an ellipse of dimension p − m and projected into the p dimensional space spanned by ΓB. The two parts intertwine in such a way that correcting the biases of the estimated eigenvectors is almost impossible. More details are discussed in Section 4 for the factor models. Secondly, it is clear that, as in the eigenvalue case, the bias term in Theorem 3.2 (i) disappears when . In particular, for the stronger factor given by (1.1), is a consistent estimator. Thirdly, the situations m = 1 and m > 1 differ slightly in that multiple spikes could interact with each other. This is reflected especially in the convergence of the angle between the empirical eigenvector and its population counterpart: the cosine of the angle converges to (1 + c̄cj)^{−1/2} with an extra rate OP(1/n), which stems from estimating ξ̂jk for j ≠ k ≤ m (see the proof of Theorem 3.2 (iii)). The difference will only be seen when the spike magnitude is higher than the order . We will verify this by a simple simulation in Section 5. Finally, this is the first time that the max norm bound of the non-spiked part has been derived. This bound will be useful for analyzing factor models in Section 4.

Theorem 3.2 again implies the results of Shen et al. (2013). It also generalizes the asymptotic distribution of the non-spiked part from the purely orthogonally invariant case of Paul (2007) to a more general setting. In particular, when p/n → ∞, the asymptotic distribution of the normalized non-spiked component is no longer uniform over a sphere, but over an ellipse. In addition, our result can be compared with the low dimensional case, where Anderson (1963) showed that

$$\sqrt{n}\,(\hat\xi_j - e_j) \Rightarrow N_p\Big(0,\ \sum_{k \neq j} \frac{\lambda_j\lambda_k}{(\lambda_j - \lambda_k)^2}\, e_k e_k'\Big) \tag{3.8}$$

for fixed p and λj’s. Under our assumptions, since the spiked eigenvalues may go to infinity, the constants in the asymptotic covariance matrix are replaced by the limits ajk’s. Similar to the behavior of eigenvalues, the spiked part ξ̂jA preserves the normality property except for a bias factor 1/(1 + c̄cj) caused by the high dimensionality. Also, recent work of Koltchinskii and Lounici (2014b) provides general asymptotic results for the empirical eigenvectors from a spectral projector point of view, but they mainly focus on the regime of p/(nλj) → 0 or r̄ = o(n). Last but not least, it has been shown by Johnstone and Lu (2009) that PCA generates consistent eigenvector estimation if and only if p/n → 0 when the spike sizes are fixed. This motivates the study of sparse PCA. We take the spike magnitude into account and provide additional insights by showing that PCA consistently estimates eigenvalues and eigenvectors if and only if p/(nλj) → 0. This explains why Fan, Liao and Mincheva (2013) can consistently estimate the eigenvalues and eigenvectors while Johnstone and Lu (2009) cannot.
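The role of p/(nλj) in eigenvector consistency can be seen in a short simulation. The sketch below is illustrative only: it assumes Gaussian data, one spike, unit non-spiked eigenvalues (c̄ = 1), and hypothetical values of n, p and λ1. It compares the average of |⟨ξ̂1, e1⟩| with (1 + c̄c1)^{−1/2}, and obtains ξ̂1 from the Gram matrix through the duality ξ̂1 = (nλ̂1)^{−1/2}X′u1.

```python
import numpy as np

def mean_abs_cosine(n, p, lam1, reps, rng):
    """Monte Carlo mean of |<xi_hat_1, e_1>| for one spike and unit noise eigenvalues."""
    scale = np.ones(p)
    scale[0] = np.sqrt(lam1)
    cosines = np.empty(reps)
    for r in range(reps):
        X = rng.standard_normal((n, p)) * scale
        lam_hat, U = np.linalg.eigh(X @ X.T / n)   # ascending order
        u1, l1 = U[:, -1], lam_hat[-1]
        xi1 = X.T @ u1 / np.sqrt(n * l1)           # leading eigenvector of X'X / n
        cosines[r] = abs(xi1[0])                   # population eigenvector is e_1
    return cosines.mean()

rng = np.random.default_rng(3)
n, p = 100, 1000                                   # hypothetical sizes
summary = {}
for lam1 in (50.0, 200.0, 1000.0):
    c1 = p / (n * lam1)
    simulated = mean_abs_cosine(n, p, lam1, reps=50, rng=rng)
    summary[lam1] = (simulated, (1 + c1) ** -0.5)
    print(lam1, summary[lam1])
```

As λ1 grows with n and p held fixed, c1 shrinks and the cosine approaches one, in line with consistency holding only when p/(nλj) → 0.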

4. Applications to factor models

In this section, we propose a method named Shrinkage Principal Orthogonal complEment Thresholding (S-POET) for estimating large covariance matrices induced by the approximate factor models. The estimator is based on correction of the bias of the empirical eigenvalues as specified in (3.1). We derive for the first time the bound for the relative estimation errors of covariance matrices under the spectral norm. The results are then applied to assessing large portfolio risks and estimating false discovery proportions, where the conditions in existing literature are significantly relaxed.

4.1. Approximate factor models

Factor models have been widely used in various disciplines. For example, they are used to extract information from financial markets for sufficient forecasting of other time series (Stock and Watson, 2002; Fan, Xue and Yao, 2015) and to adjust heterogeneity for biological data aggregation of multiple sources (Leek et al., 2010; Fan et al., 2016). Consider the approximate factor model

$$y_{it} = b_i' f_t + u_{it}, \tag{4.1}$$

where yit is the observed data for the ith (i = 1, …, p) individual (e.g. returns of stocks) or component (e.g. expressions of genes) at time t = 1, …, T; ft is an m × 1 vector of latent common factors and bi is the factor loadings for the ith individual or component; uit is the idiosyncratic error, uncorrelated with the common factors. In genomics application, t can also index repeated experiments. For simplicity we assume there is no time dependency.

The factor model can be written into a matrix form as follows:

$$Y = BF' + U, \tag{4.2}$$

where Yp×T, Bp×m, FT×m, Up×T are respectively the matrix form of the observed data, the factor loading matrix, the factor matrix, and the error matrix. For identifiability, we impose the condition that cov(ft) = I. Thus, the covariance matrix is given by

$$\Sigma = BB' + \Sigma_u, \tag{4.3}$$

where Σu is the covariance matrix of the idiosyncratic error at any time t.

Under the assumption that Σu = (σu,ij)i,j≤p is sparse with its eigenvalues bounded away from zero and infinity, the population covariance exhibits a “low-rank plus sparse” structure. The sparsity is measured by the following quantity

$$m_p = \max_{i \le p} \sum_{j \le p} |\sigma_{u,ij}|^q$$

for some q ∈ [0, 1] (Bickel and Levina, 2008). In particular, with q = 0, mp equals the maximum number of nonzero elements in each row of Σu.
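As a small illustration, the sparsity measure can be computed directly. The sketch assumes the usual Bickel and Levina form mp = maxi ∑j |σu,ij|^q with the convention 0^0 = 0 for q = 0; the function name `sparsity_measure` and the tridiagonal example matrix are hypothetical.

```python
import numpy as np

def sparsity_measure(Sigma_u, q):
    """m_p = max_i sum_j |sigma_ij|^q, with the convention 0^0 = 0 when q = 0."""
    A = np.abs(np.asarray(Sigma_u, dtype=float))
    if q == 0:
        # q = 0 counts the nonzero entries in each row
        return float(np.count_nonzero(A, axis=1).max())
    return float((A ** q).sum(axis=1).max())

p = 6
# Hypothetical tridiagonal idiosyncratic covariance: 1 on the diagonal, 0.5 next to it.
Sigma_u = np.eye(p) + 0.5 * (np.eye(p, k=1) + np.eye(p, k=-1))
m_p0 = sparsity_measure(Sigma_u, 0)    # maximum number of nonzeros per row
m_p1 = sparsity_measure(Sigma_u, 1)    # maximum absolute row sum
print(m_p0, m_p1)
```

The q = 0 case needs separate handling because NumPy evaluates 0.0 ** 0 as 1, which would count zero entries as nonzero.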

In order to estimate the true covariance matrix with the above factor structure, Fan, Liao and Mincheva (2013) proposed a method called “POET” to recover the unknown factor matrix as well as the factor loadings. The idea is simply to first decompose the sample covariance matrix into the spiked and non-spiked parts and estimate them separately. Specifically, recall Σ̂ = T^{−1}YY′ and let {λ̂j} and {ξ̂j} be its corresponding eigenvalues and eigenvectors. They define

$$\hat\Sigma^{\tau} = \sum_{j=1}^{m} \hat\lambda_j \hat\xi_j \hat\xi_j' + \hat\Sigma_u^{\tau}, \tag{4.4}$$

where Σ̂u^τ is the matrix after applying the thresholding method (Bickel and Levina, 2008) to Σ̂u = ∑j>m λ̂jξ̂jξ̂j′.

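They showed that the above estimation procedure is equivalent to the least squares approach that solves

$$(\hat B, \hat F) = \arg\min_{B, F} \|Y - BF'\|_F^2 \quad \text{s.t.} \quad T^{-1}F'F = I_m,\ B'B \text{ is diagonal}. \tag{4.5}$$

The columns of F̂/√T are the eigenvectors corresponding to the m largest eigenvalues of the T × T matrix T^{−1}Y′Y and B̂ = T^{−1}YF̂. After B and F are estimated, the sample covariance of Û = Y − B̂F̂′ can be formed: Σ̂u = T^{−1}ÛÛ′. Finally thresholding is applied to Σ̂u to generate Σ̂u^τ = (σ̂^τu,ij)p×p, where

$$\hat\sigma^{\tau}_{u,ij} = \begin{cases} \hat\sigma_{u,ij}, & i = j, \\ s_{ij}(\hat\sigma_{u,ij})\, I(|\hat\sigma_{u,ij}| \ge \tau_{ij}), & i \ne j. \end{cases} \tag{4.6}$$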

Here sij(·) is the generalized shrinkage function (Antoniadis and Fan, 2001; Rothman, Levina and Zhu, 2009) and τij = τ(σ̂u,iiσ̂u,jj)^{1/2} is the entry-dependent threshold. The above adaptive threshold corresponds to applying thresholding with parameter τ to the correlation matrix of Σ̂u. The positive parameter τ will be determined later.
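The POET construction described above can be sketched in a few lines. This is a hedged illustration rather than the authors' implementation: it uses soft thresholding as one particular choice of generalized shrinkage function, assumes mean-zero data stored as a p × T matrix, and the names `poet` and `soft_threshold` as well as the simulated factor model are hypothetical.

```python
import numpy as np

def soft_threshold(x, t):
    """Soft thresholding, one member of the generalized shrinkage family."""
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)

def poet(Y, m, tau):
    """POET-style estimate from a p x T matrix Y whose columns are mean-zero observations."""
    p, T = Y.shape
    S = Y @ Y.T / T                               # sample covariance
    lam, xi = np.linalg.eigh(S)                   # ascending order
    lam, xi = lam[::-1][:m], xi[:, ::-1][:, :m]   # leading m eigenpairs
    low_rank = (xi * lam) @ xi.T                  # sum_j lam_j xi_j xi_j'
    Sigma_u = S - low_rank                        # principal orthogonal complement
    d = np.sqrt(np.clip(np.diag(Sigma_u), 0.0, None))
    thresholds = tau * np.outer(d, d)             # tau_ij = tau * sqrt(sigma_ii * sigma_jj)
    Sigma_u_tau = soft_threshold(Sigma_u, thresholds)
    np.fill_diagonal(Sigma_u_tau, np.diag(Sigma_u))   # diagonal entries are not thresholded
    return low_rank, Sigma_u_tau

rng = np.random.default_rng(4)
p, T, m = 40, 200, 2
B = 3.0 * rng.standard_normal((p, m))             # hypothetical loadings
F = rng.standard_normal((T, m))                   # latent factors
U = rng.standard_normal((p, T))                   # idiosyncratic errors with Sigma_u = I
Y = B @ F.T + U
low_rank, Sigma_u_tau = poet(Y, m, tau=0.3)
estimate = low_rank + Sigma_u_tau
print(estimate.shape, np.count_nonzero(Sigma_u_tau))
```

With τ = 0 the estimate reduces to the sample covariance, while any τ above 1 leaves only the diagonal of the residual covariance, because each off-diagonal entry is bounded by the geometric mean of the corresponding diagonal entries.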

Fan, Liao and Mincheva (2013) showed that under Assumptions ?? - ?? listed in Appendix ?? in the supplementary material (Wang and Fan, 2015),

| (4.7) |

where ‖A‖Σ,F = p^{−1/2}‖Σ^{−1/2}AΣ^{−1/2}‖F and ‖ · ‖F is the Frobenius norm. Note that, for any estimator Σ̌ of Σ,

$$\|\check\Sigma - \Sigma\|_{\Sigma,F} = p^{-1/2}\,\|\Sigma^{-1/2}\check\Sigma\,\Sigma^{-1/2} - I_p\|_F,$$

which measures the relative error in Frobenius norm. A more natural metric is the relative error under the operator norm ‖A‖Σ = ‖Σ^{−1/2}AΣ^{−1/2}‖, which cannot be obtained by using the technical device of Fan, Liao and Mincheva (2013). Note ‖A‖Σ,F ≤ ‖A‖Σ. Via our new results in the last section, we will establish a result under those two relative norms, under weaker conditions than their pervasiveness assumption. Note that the relative error convergence is particularly meaningful for a spiked covariance matrix, as its eigenvalues are on different scales.

4.2. Shrinkage POET under relative spectral norm

The discussion above reveals several drawbacks of POET. First, the spike size has to be of order p which rules out relatively weak factors. Second, it is well known that the empirical eigenvalues are inconsistent if the spiked eigenvalues do not significantly dominate the non-spiked part. Therefore, a proper correction or shrinkage is needed. See a recent paper by Donoho, Gavish and Johnstone (2014) for optimal shrinkage of eigenvalues.

Regarding the first drawback, we relax the assumption $\|p^{-1}B'B - \Omega_0\| = o(1)$ in Assumption ?? to the following weaker assumption.

Assumption 4.1

for some Ω0 with eigenvalues bounded from above and below, where ΛA = diag(λ1, …, λm). In addition, we assume λm → ∞, λ1/λm is bounded from above and below.

This assumption does not require the first m eigenvalues of Σ to take on any specific rate. They can still be much smaller than p, although for simplicity we require them to diverge and share the same diverging rate. Since ‖Σu‖ is assumed to be bounded, the assumption λm → ∞ is also imposed to avoid the issue of identifiability. When λm does not diverge, a more sophisticated condition is needed for identifiability (Chandrasekaran et al., 2011).

In order to handle the second drawback, we propose the Shrinkage POET (S-POET) method. Inspired by (3.1), the shrinkage POET modifies the first part in POET estimator (4.4) as follows:

| (4.8) |

where , a simple soft thresholding correction. Obviously if λ̂j is sufficiently large, . Since c̄ is unknown, a natural estimator ĉ is such that the total of the eigenvalues remains unchanged:

or . It has been shown by Lemma 7 of Yata and Aoshima (2012) that

Thus, replacing c̄ by ĉ, we have , i.e. the estimation error in ĉ is negligible. From Lemma 3.1, we can easily obtain the asymptotic normality, if .
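The shrinkage step can be sketched as follows. The displayed formulas are not reproduced here, so the code rests on two explicitly assumed forms: the soft-thresholding correction is taken to be $\max\{\hat\lambda_j - c\,p/T,\ 0\}$, and the trace-matching estimate is taken to solve $\mathrm{tr}(\hat\Sigma) = \sum_{j\le m}(\hat\lambda_j - \hat c\,p/T) + (p-m)\hat c$. The function name `spoet_shrink` is hypothetical.

```python
import numpy as np

def spoet_shrink(eigvals, m, T):
    """Shrink the top-m sample eigenvalues; `eigvals` holds all p sample eigenvalues."""
    lam = np.sort(np.asarray(eigvals, dtype=float))[::-1]
    p = lam.size
    # Assumed trace-matching estimate: the total of the eigenvalues stays unchanged
    # when the top m are shrunk by c*p/T and the other p - m are set to c.
    c_hat = (lam.sum() - lam[:m].sum()) / (p - m - p * m / T)
    # Assumed soft-thresholding form of the correction.
    lam_shrunk = np.maximum(lam[:m] - c_hat * p / T, 0.0)
    return lam_shrunk, c_hat
```

When no eigenvalue is clipped at zero, the shrunken spikes plus (p − m) copies of ĉ add up to the trace of the sample covariance, which is the sense in which the total of the eigenvalues is preserved.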

To get the convergence of relative errors under the operator norm, we also need the following additional assumptions:

Assumption 4.2

{ut, ft}t≥1 are independently and identically distributed with 𝔼[uit] = 𝔼[uitfjt] = 0 for all i ≤ p, j ≤ m and t ≤ T.

There exist positive constants c1 and c2 such that λmin(Σu) > c1, ‖Σu‖∞ < c2, and mini,j Var(uitujt) > c1.

- There exist positive constants r1, r2, b1 and b2 such that for s > 0, i ≤ p, j ≤ m,

There exists M > 0 such that for all i ≤ p, j ≤ m, .

.

The first three conditions are common in the factor model literature. If we write $B = (\tilde b_1, \dots, \tilde b_m)$, by Weyl's inequality we have $\max_{1\le j\le m} \|\tilde b_j\|^2/\lambda_j \le 1 + \|\Sigma_u\|/\lambda_j = 1 + o(1)$. Thus it is reasonable to assume that the magnitude $|b_{ij}|$ of the factor loadings is of order $(\lambda_j/p)^{1/2}$ in the fourth condition. The last condition is imposed to ease technical presentation.

Now we are ready to investigate ‖Σ̂S −Σ‖Σ. Suppose the SVD decomposition of Σ,

Then obviously

| (4.9) |

and

| (4.10) |

It can be shown

| (4.11) |

where and . Thus in order to find the convergence rate of relative spectral norm, we need to consider the terms ΔL1, ΔL2 and ΔS separately. Notice that ΔL1 measures the relative error of the estimated spiked eigenvalues, ΔL2 reflects the goodness of the estimated eigenvectors, and ΔS controls the error of estimating the sparse idiosyncratic covariance matrix. To bound the relative Frobenius norm ‖Σ̂S − Σ‖Σ,F, we define similar quantities Δ̃L1, Δ̃L2, Δ̃S which replace the spectral norm by Frobenius norm multiplied by p−1/2. Note that (4.9) – (4.11) also hold for relative Frobenius norm with Δ̃L1, Δ̃L2, Δ̃S. The following theorem reveals the rate of each term. Its proof will be provided in Appendix ?? of the supplementary material (Wang and Fan, 2015).

Theorem 4.1

Under Assumptions 2.1, 2.2, 2.3, 4.1 and 4.2, if $p\log p > \max\{T(\log T)^{4/r_2},\ T(\log(pT))^{2/r_1}\}$, we have

and by applying the adaptive thresholding estimator (4.6) with

we have

Combining the three terms, and .

The relative error convergence characterizes the accuracy of estimation for spiked covariance matrix. In contrast with the result on relative Frobenius norm, this is the first time that the relative rate under spectral norm is derived. As long as λm is slightly above , we reach the same rates of convergence. Therefore, we can conclude S-POET is effective even under a much weaker signal level. Comparing the rate with (4.7), under relative Frobenius norm, we achieve a better rate without the artificial log term, thanks to the new asymptotic results.

4.3. Portfolio risk management

The risk of a given portfolio with allocation weight w is conventionally measured by its variance $w'\Sigma w$, where Σ is the volatility (covariance) matrix of the returns of the underlying assets. To estimate the risk of a large portfolio, one needs to estimate a large covariance matrix Σ, and factor models are frequently used to reduce the dimensionality. This was the idea of Fan, Liao and Shi (2015), who used the POET estimator to estimate Σ. However, the basic method for bounding the risk error $|w'\hat\Sigma w - w'\Sigma w|$ in their paper is

$$|w'\hat\Sigma w - w'\Sigma w| \le \|\hat\Sigma - \Sigma\|_{\max}\,\|w\|_1^2.$$

They assumed that the gross exposure of the portfolio is bounded, i.e. $\|w\|_1 = O(1)$. Technically, when p is large, $w'\Sigma w$ can be small. What an investor cares about most is the relative risk error $\mathrm{RE}(w) = |w'\hat\Sigma w / w'\Sigma w - 1|$. Often w is a data-driven investment strategy, which depends on the past data. Regardless of what w is,

which does not converge by Theorem 4.1 for p > T. Thus the question of interest is what kind of portfolio w will make the relative error converge. Decompose w as a linear combination of the eigenvectors of Σ, namely w = (Γ, Ω)η and . We have the following useful result for risk management.

Theorem 4.2

Under Assumptions 2.1, 2.2, 4.1, 4.2 and the factor model (4.1) with Gaussian noises and factors, if there exists $C_1 > 0$ such that $\|\eta_B\|_1 \le C_1$, and if $\lambda_j \propto p^{\alpha}$ for j = 1, …, m and $T \ge Cp^{\beta}$ for α > 1/2, 0 < β < 1, α + β > 1, then the relative risk error is of order

for α < 1. If α ≥ 1 or ‖ηA‖ ≥ C2, .

The condition $\|\eta_B\|_1 \le C_1$ is generally weaker than $\|w\|_1 = O(1)$. It does not limit the total exposure of the investor's position, but only constrains the investment in the non-spiked part. Note that under the conditions of Theorem 4.2, p/(Tλj) → 0, and S-POET and POET are approximately the same. So the stated result is valid for POET too.
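To see why the relative risk error is tied to the relative spectral norm, the following sketch evaluates $|w'\hat\Sigma w / w'\Sigma w - 1|$ and compares it with $\|\Sigma^{-1/2}(\hat\Sigma - \Sigma)\Sigma^{-1/2}\|$. It relies only on the substitution $v = \Sigma^{1/2}w$, which turns the relative error into a Rayleigh quotient; the function names are hypothetical and the code is an illustration, not the procedure used in the paper.

```python
import numpy as np

def relative_risk_error(w, Sigma_hat, Sigma):
    """Relative risk error |w' Sigma_hat w / w' Sigma w - 1| of a portfolio w."""
    return abs((w @ Sigma_hat @ w) / (w @ Sigma @ w) - 1.0)

def worst_case_portfolio(Sigma_hat, Sigma):
    """Return the portfolio maximizing the relative risk error, and that maximum."""
    vals, vecs = np.linalg.eigh(Sigma)
    inv_sqrt = (vecs / np.sqrt(vals)) @ vecs.T            # Sigma^{-1/2}
    M = inv_sqrt @ (Sigma_hat - Sigma) @ inv_sqrt
    ev, V = np.linalg.eigh(M)
    k = np.argmax(np.abs(ev))                             # eigenvalue of largest magnitude
    # With v = Sigma^{1/2} w, the relative error equals |v'Mv| / v'v.
    return inv_sqrt @ V[:, k], abs(ev[k])
```

The second return value is the relative spectral norm of $\hat\Sigma - \Sigma$, so no portfolio can have a relative risk error larger than it, and the returned portfolio attains it.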

4.4. Estimation of false discovery proportion

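Another important application of the factor model is the estimation of the false discovery proportion. For simplicity, we assume Gaussian data $X_i \sim N(\mu, \Sigma)$ with an unknown correlation matrix Σ and wish to test which coordinates of μ are nonvanishing. Consider the test statistic $Z = \sqrt{n}\,\bar X$, where n is the sample size and $\bar X$ is the sample mean of all data. Then $Z \sim N(\mu^*, \Sigma)$ with $\mu^* = \sqrt{n}\,\mu$. The problem is to test

$$H_{0j}: \mu_j = 0 \quad \text{versus} \quad H_{1j}: \mu_j \ne 0, \qquad j = 1, \dots, p.$$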

Define the number of discoveries R(t) = #{j : Pj ≤ t} and the number of false discoveries V(t) = #{true null : Pj ≤ t}, where Pj is the p-value associated with the jth test. Note that R(t) is observable while V(t) needs to be estimated. The false discovery proportion (FDP) is defined as FDP(t) = V(t)/R(t).
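The definitions of R(t), V(t) and FDP(t) translate directly into code. The sketch below assumes the p-values and the indicator of true nulls are available as arrays (the latter is known only in simulations), and adopts the convention FDP(t) = 0 when nothing is rejected; that convention and the function name `fdp` are assumptions made for illustration.

```python
import numpy as np

def fdp(pvalues, is_null, t):
    """Return (FDP(t), R(t), V(t)) for a threshold t on the p-values."""
    pvalues = np.asarray(pvalues, dtype=float)
    is_null = np.asarray(is_null, dtype=bool)
    rejected = pvalues <= t
    R = int(rejected.sum())                 # number of discoveries
    V = int((rejected & is_null).sum())     # number of false discoveries
    return (V / R if R > 0 else 0.0), R, V  # assumed convention: FDP = 0 when R = 0
```

For instance, with p-values (0.001, 0.02, 0.5, 0.005), true nulls in the last two positions and t = 0.01, two hypotheses are rejected and one of them is a true null.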

Fan and Han (2013) proposed to employ the factor structure

| (4.12) |

where . λj and ξj are respectively the jth eigenvalue and eigenvector of Σ as before. Then Z can be stochastically decomposed as

where W ~ N(0, Im) are m common factors and K ~ N(0, A), independent of W, are the idiosyncratic errors. For simplicity, assume the maximal number of nonzero elements of each row of A is bounded. In Fan and Han (2013), they argued that the asymptotic upper bound

| (4.13) |

of FDP(t) should be a realistic target to estimate for dependent tests, where $z_{t/2}$ is the t/2-quantile of the standard normal distribution, $a_i = (1 - \|b_i\|^2)^{-1/2}$, and $b_i'$ is the ith row of B.

Realized factors W and the loading matrix B are typically unknown. If a generic estimator Σ̂ is provided, then we are able to estimate B and thus bi from its empirical eigenvalues and eigenvectors λ̂j’s and ξ̂j’s. W can be estimated by the least-squares estimate Ŵ = (B̂′B̂)−1B̂′Z. Fan and Han (2013) proposed the following estimator for FDPA(t):

| (4.14) |

where âi = (1 − ‖b̂i‖2)−1/2 and . The following assumptions are in their paper.

Assumption 4.3

There exists a constant h > 0 such that (i) $R(t)/p > hp^{-\theta}$ for some θ ≥ 0 as p → ∞ and (ii) $\hat a_i \le h$, $a_i \le h$ for all i = 1, …, p.

They showed that if Σ̂ is based on the POET estimator with a spike size λm ≍ p, under Assumptions ?? - ??, on the event that Assumption 4.3 holds,

| (4.15) |

Again we can relax the assumption on the spike magnitude from order p to the much weaker Assumption 4.1. Since Σ is a correlation matrix, λ1 ≤ tr(Σ) = p. This, together with Assumption 4.1, leads us to consider leading eigenvalues of order $p^{\alpha}$ for 1/2 < α ≤ 1.
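The least-squares step $\hat W = (\hat B'\hat B)^{-1}\hat B'Z$ used to recover the realized factors can be sketched as below. Solving the least-squares problem with `numpy.linalg.lstsq` is a numerical choice made here and is equivalent to the normal-equations formula when $\hat B$ has full column rank; the function name `estimate_factors` is hypothetical.

```python
import numpy as np

def estimate_factors(B_hat, Z):
    """Least-squares estimate of the realized factors W in Z = B W + noise."""
    # lstsq minimizes ||Z - B_hat w||_2, i.e. w = (B'B)^{-1} B'Z for full-rank B_hat.
    W_hat, *_ = np.linalg.lstsq(B_hat, Z, rcond=None)
    return W_hat
```

When Z lies exactly in the column space of the loading matrix, the factors are recovered without error, which gives a quick sanity check of the step.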

Now we apply the proposed S-POET method to obtain Σ̂S and use it for FDP estimation. The following theorem shows the estimation error.

Theorem 4.3

If Assumptions 2.1, 2.2, 4.1, and 4.2 hold for independent Gaussian data $X_i \sim N(\mu, \Sigma)$, with $\lambda_j \propto p^{\alpha}$ for j = 1, …, m and $T \ge Cp^{\beta}$ for 1/2 < α ≤ 1, 0 < β < 1, α + β > 1, then on the event that Assumption 4.3 holds, we have

Compared with (4.15), the convergence rate attained by S-POET is more general than the rate achieved before. The only difference is the second term, which is $O(T^{-1/2})$ if and $T^{-(\alpha+\beta-1)/\beta}$ otherwise. So we relax the condition from α = 1 in Fan and Han (2013) to α ∈ (1/2, 1]. This means that a signal weaker than order p is actually allowed to obtain a consistent estimate of the false discovery proportion.

5. Simulations

We conducted some simulations to demonstrate the finite sample behavior of empirical eigen-structure, the performance of S-POET, and validity of applying it to estimate false discovery proportion.

5.1. Eigen-structure

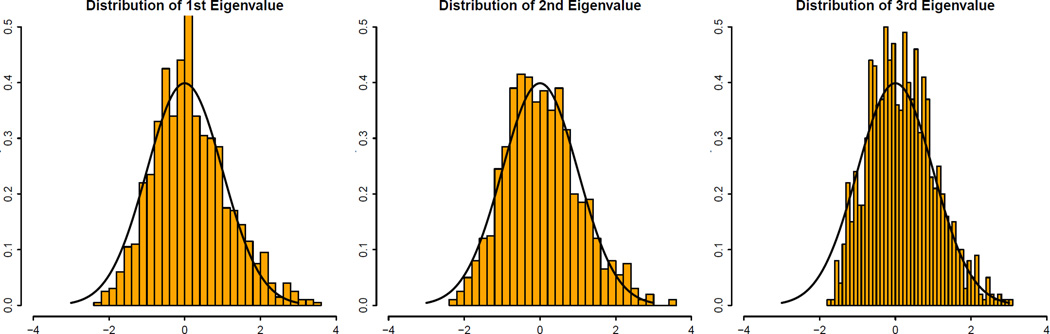

In this simulation, we set n = 50, p = 500 and Σ = diag(50, 20, 10, 1, …, 1), which has three spike eigenvalues (m = 3) λ1 = 50, λ2 = 20, λ3 = 10 and correspondingly c1 = 0.2, c2 = 0.5, c3 = 1. Data were generated from a multivariate Gaussian distribution. The number of simulations is 1000. The histograms of the standardized empirical eigenvalues , and their associated asymptotic distributions (standard normal) are plotted in Figure 1. The approximations are very good even for this small sample size n = 50.

Fig 1.

Behavior of empirical eigenvalues. The empirical distributions of for j = 1, 2, 3 are compared with their asymptotic distributions N(0, 1).
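The setting of this simulation is easy to reproduce in a few lines. The sketch below uses 200 repetitions instead of 1000 to keep it light, and compares the average of $\hat\lambda_j/\lambda_j$ with $1 + c_j$, where $c_j = p/(n\lambda_j)$; treating $1 + c_j$ as the centering (that is, taking the average non-spiked eigenvalue to be 1) is an assumption of this illustration, and the function name is hypothetical.

```python
import numpy as np

def top_sample_eigenvalues(n, p, spikes, rng):
    """Top len(spikes) eigenvalues of the sample covariance X'X/n for Gaussian data."""
    sd = np.sqrt(np.r_[spikes, np.ones(p - len(spikes))])
    X = rng.standard_normal((n, p)) * sd     # rows ~ N(0, diag(spikes, 1, ..., 1))
    # The nonzero eigenvalues of X'X/n equal those of the n x n matrix XX'/n.
    vals = np.linalg.eigvalsh(X @ X.T / n)
    return vals[::-1][: len(spikes)]

rng = np.random.default_rng(0)
n, p = 50, 500
spikes = np.array([50.0, 20.0, 10.0])
c = p / (n * spikes)                          # c_j = p / (n * lambda_j)
ratios = np.array([top_sample_eigenvalues(n, p, spikes, rng) / spikes
                   for _ in range(200)])
print("c_j:", c)
print("mean of lambda_hat_j / lambda_j:", ratios.mean(axis=0))
print("1 + c_j:", 1 + c)
```

The upward bias of the empirical eigenvalues is visible directly in the averaged ratios, which is the bias that the shrinkage in S-POET is designed to remove.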

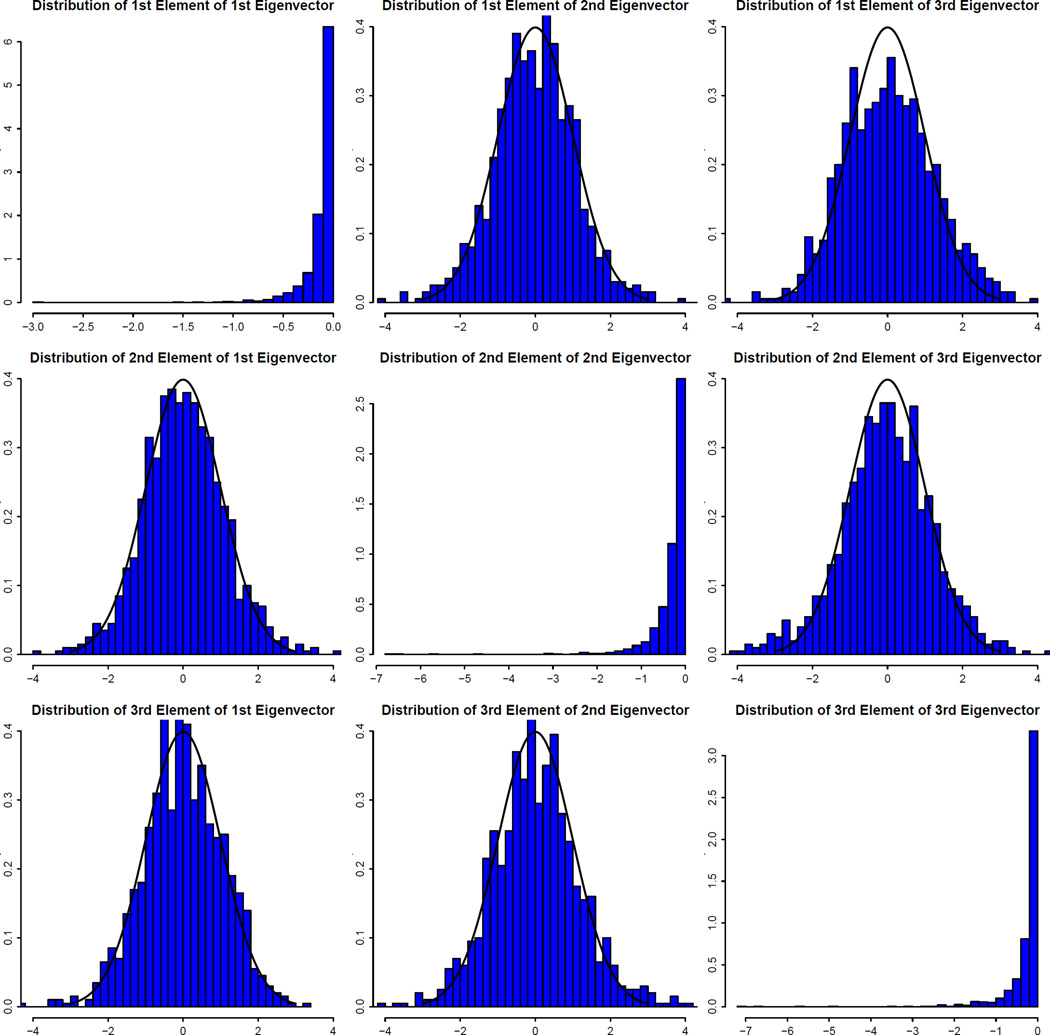

Figure 2 shows the histograms of for the first three elements (the spiked part) of the first three eigenvectors. On the one hand, according to the asymptotic results, the values in the diagonal position should stochastically converge to 0 as observed. On the other hand, plots in the off-diagonal positions should converge in distribution to N(0, 1) for k ≠ j after standardization, which is indeed the case. We also report the correlations between the first three elements for the three eigenvectors based on those 1000 repetitions in Table 1. The correlations are all quite close to 0, which is consistent with the theory.

Fig 2.

Behavior of empirical eigenvectors. The histogram of the kth element of the jth empirical vector is depicted in the location (k, j) for k, j ≤ 3. Off-diagonal plots of values are compared to their asymptotic distributions N(0, 1) for k ≠ j while diagonal plots of values are compared to stochastically 0.

Table 1.

The correlations between the first three elements for each of the three empirical eigenvectors based on 1000 repetitions

| | 1st & 2nd elements | 1st & 3rd elements | 2nd & 3rd elements |
|---|---|---|---|
| 1st Eigenvector | 0.00156 | −0.00192 | −0.04112 |
| 2nd Eigenvector | −0.02318 | −0.00403 | 0.01483 |
| 3rd Eigenvector | −0.02529 | −0.04004 | 0.12524 |

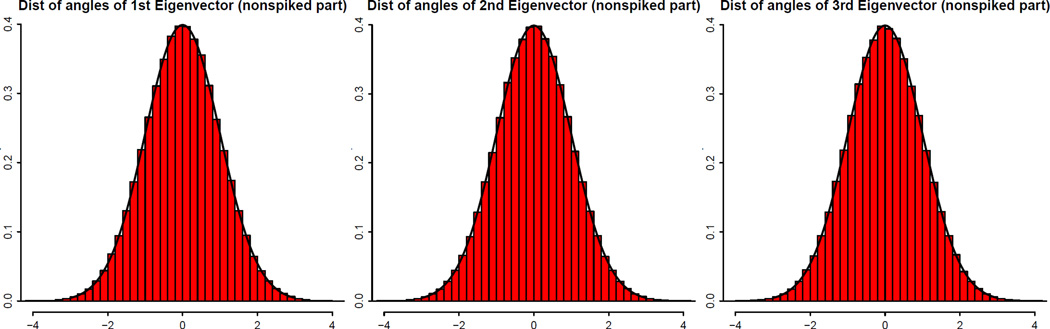

For the normalized non-spiked part ξ̂jB/‖ξ̂jB‖, it should be distributed uniformly over the unit sphere. This can be tested by the results of Cai, Fan and Jiang (2013). For any n data points X1, …, Xn on a p-dimensional sphere, define the normalized empirical distribution of angles of each pair of vectors as

where $\Theta_{ij} \in [0, \pi]$ is the angle between vectors $X_i$ and $X_j$. When the data are generated uniformly from a sphere, $\mu_{n,p}$ converges to the standard normal distribution with probability 1. Figure 3 shows the empirical distributions of all pairwise angles of the realized $\hat\xi_{jB}/\|\hat\xi_{jB}\|$ (j = 1, 2, 3) in 1000 simulations. Since the number of such pairwise angles is $\binom{1000}{2} = 499{,}500$, the empirical distributions and the asymptotic distributions N(0, 1) are almost identical. The normality holds even for a small subset of the angles.

Fig 3.

The empirical distributions of all pairwise angles of the 1000 realized ξ̂jB/‖ξ̂jB‖ (j = 1, 2, 3) compared with their asymptotic distributions N(0, 1).
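The uniformity check via pairwise angles can be illustrated on synthetic points. The sketch below draws points uniformly on a sphere by normalizing Gaussian vectors and normalizes each angle as $\sqrt{p-2}\,(\pi/2 - \Theta_{ij})$; this normalization is an assumption of the illustration, since the displayed definition is not reproduced here, and the function name is hypothetical.

```python
import numpy as np

def normalized_pairwise_angles(X):
    """Normalized angles sqrt(p - 2) * (pi/2 - Theta_ij) for all pairs of rows of X."""
    X = X / np.linalg.norm(X, axis=1, keepdims=True)   # project rows onto the unit sphere
    n, p = X.shape
    cos = np.clip(X @ X.T, -1.0, 1.0)                  # guard against rounding outside [-1, 1]
    iu = np.triu_indices(n, k=1)                       # each unordered pair once
    theta = np.arccos(cos[iu])
    return np.sqrt(p - 2) * (np.pi / 2 - theta)        # assumed normalization

rng = np.random.default_rng(0)
points = rng.standard_normal((100, 200))   # normalized Gaussian vectors are uniform on the sphere
z = normalized_pairwise_angles(points)
print(z.size, z.mean(), z.std())
```

With 100 points there are 4950 pairs, and the sample mean and standard deviation of the normalized angles should sit close to 0 and 1.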

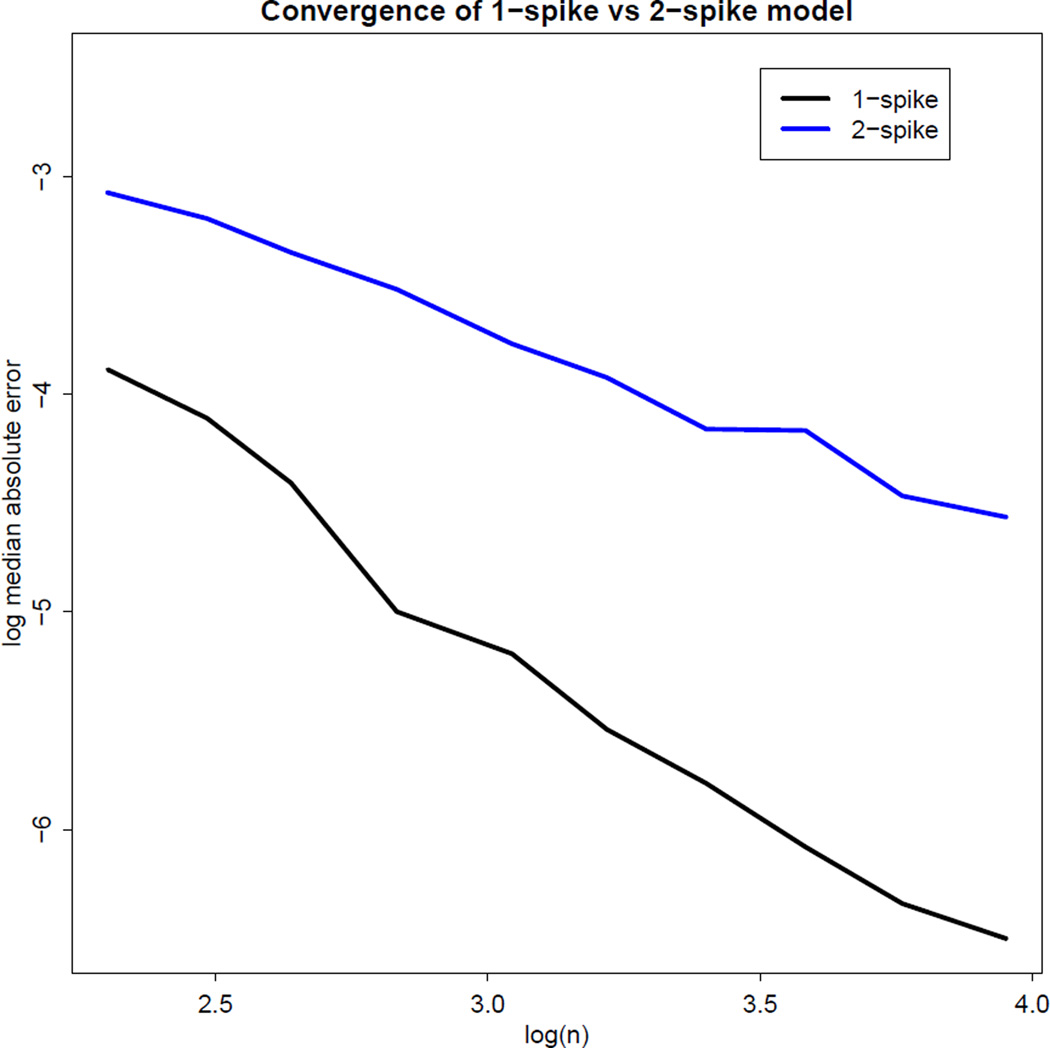

Lastly, we ran simulations to verify the rate difference of $\langle\hat\xi_j, e_j\rangle$ for m = 1 and m > 1, revealed in Theorem 3.2 (iii). We choose $n = [10 \times 1.2^{l}]$ for l = 0, …, 9 and $p = [n^3/100]$, where [·] represents rounding. We set λj = 1 for j ≥ 3 and consider two situations: (1) λ1 = p, λ2 = 1; (2) λ1 = 2λ2 = p. In both cases, simulations were carried out 500 times and the angle between the empirical eigenvector and its truth was calculated for each simulation. The logarithm of the median absolute error of was plotted against log(n). Under the two situations, the rates of convergence are $O_P(n^{-3/2})$ and $O_P(n^{-1})$ respectively. Thus the slope of the curves should be −3/2 for a single spike and −1 for two spikes, which is indeed the case as shown in Figure 4.

Fig 4.

Difference of convergence rate of for models with a single spike and two spikes. The error should be expected to decrease at the rate of $O_P(n^{-3/2})$ and $O_P(n^{-1})$ respectively.
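The design grid of this experiment and the slope read off a log-log plot can be sketched as follows. Only the grid comes from the text; the helper `loglog_slope` is a hypothetical name, and the check at the end uses a synthetic error curve purely to show how a slope is recovered, not simulated eigenvector errors.

```python
import numpy as np

l = np.arange(10)
n_grid = np.rint(10 * 1.2 ** l).astype(int)        # n = [10 * 1.2^l], l = 0, ..., 9
p_grid = np.rint(n_grid ** 3 / 100).astype(int)    # p = [n^3 / 100]

def loglog_slope(n, err):
    """Least-squares slope of log(err) against log(n)."""
    slope, _ = np.polyfit(np.log(n), np.log(err), 1)
    return slope

# Synthetic illustration only: an error curve decaying exactly like n^{-3/2}.
synthetic_err = 3.0 * n_grid.astype(float) ** -1.5
print(n_grid, p_grid, loglog_slope(n_grid, synthetic_err))
```

Applied to the median absolute errors from the simulation, the same fit would give the empirical slopes to compare with −3/2 and −1.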

In short, all the simulation results match well with the theoretical results for the high dimensional regime.

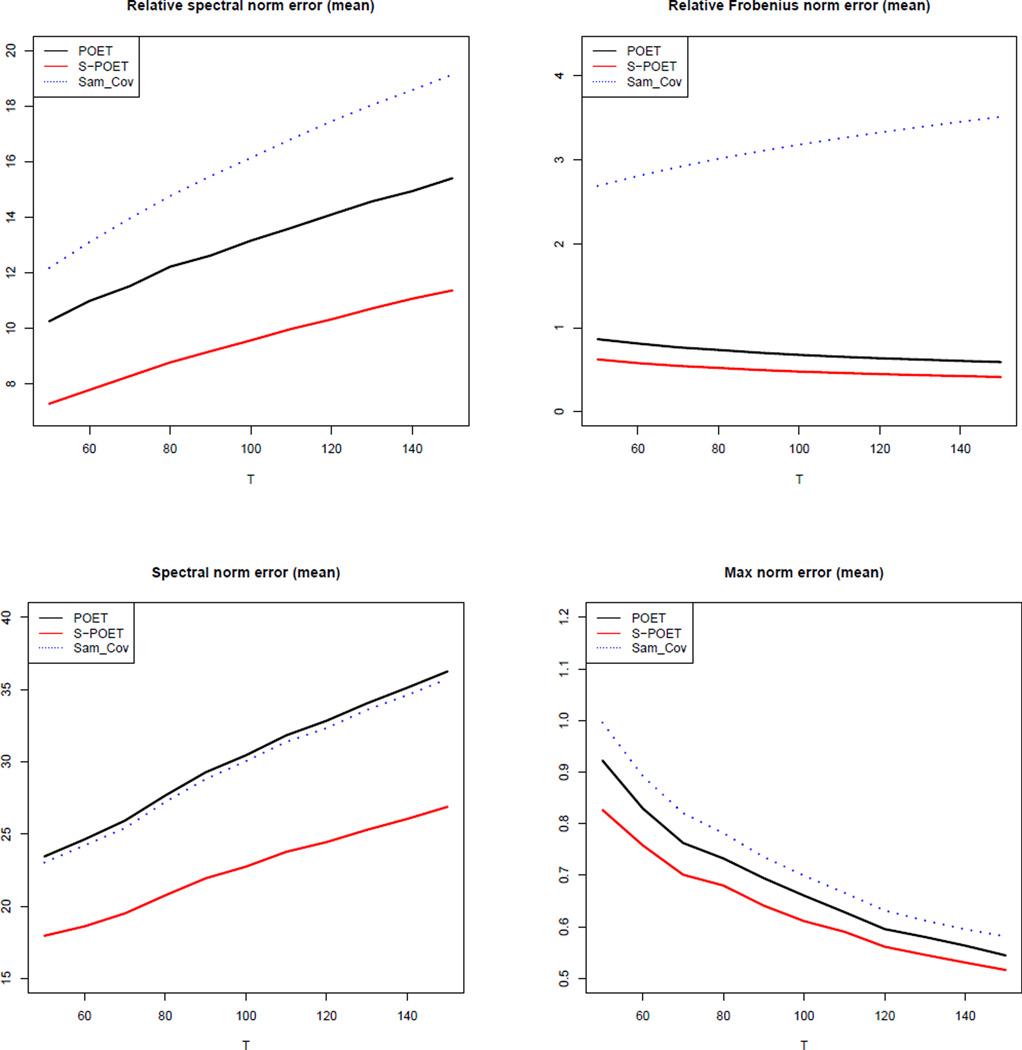

5.2. Performance of S-POET

We demonstrate the effectiveness of S-POET in comparison with POET. A setting similar to that of the last section is used, i.e. m = 3 and c1 = 0.2, c2 = 0.5, c3 = 1. The sample size T ranges from 50 to 150 and $p = [T^{3/2}]$. Note that when T = 150, p ≈ 1800. The spiked eigenvalues are determined from $p/(T\lambda_j) = c_j$ so that $\lambda_j$ is of order $T^{1/2}$, which is much smaller than p. For each pair of T and p, the following steps are used to generate observed data from the factor model 200 times.

(1) Each row of B is simulated from the standard multivariate normal distribution and the jth column is normalized to have norm λj for j = 1, 2, 3.

(2) Each row of F is simulated from the standard multivariate normal distribution.

(3) Set $\Sigma_u = \mathrm{diag}(\sigma_1^2, \dots, \sigma_p^2)$, where the σi's are generated from Gamma(α, β) with α = β = 100 (mean 1, standard deviation 0.1). The idiosyncratic error U is simulated from N(0, Σu).

(4) Compute the observed data Y = BF′ + U.
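The data-generating steps above can be sketched as one function. Several details are assumptions made for this illustration: the columns of B are scaled so that their squared norms equal λj (so that BB′ has eigenvalues of order λj), the idiosyncratic covariance is taken to be diagonal with entries σi², and Gamma(α, β) is read as shape α and rate β. The function name `simulate_factor_data` is hypothetical.

```python
import numpy as np

def simulate_factor_data(T, c=(0.2, 0.5, 1.0), rng=None):
    """Generate Y = B F' + U with p = [T^{3/2}] and spikes set by p / (T * lambda_j) = c_j."""
    rng = np.random.default_rng() if rng is None else rng
    p = int(round(T ** 1.5))
    lam = p / (T * np.asarray(c, dtype=float))        # lambda_j = p / (T * c_j)
    m = lam.size
    B = rng.standard_normal((p, m))
    B *= np.sqrt(lam) / np.linalg.norm(B, axis=0)     # assumption: squared column norm = lambda_j
    F = rng.standard_normal((T, m))
    # Gamma with shape 100 and rate 100 (scale 1/100): mean 1, standard deviation 0.1.
    sigma = rng.gamma(shape=100.0, scale=1.0 / 100.0, size=p)
    U = sigma[:, None] * rng.standard_normal((p, T))  # assumption: Sigma_u = diag(sigma_i^2)
    Y = B @ F.T + U
    Sigma = B @ B.T + np.diag(sigma ** 2)             # population covariance being estimated
    return Y, B, F, Sigma
```

For T = 50 this gives p = 354 and spikes of about 35.4, 14.2 and 7.1, far below p, which is the weak-signal regime the comparison is meant to probe.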

Both S-POET and POET are applied to estimate the covariance matrix $\Sigma = BB' + \Sigma_u$. Their mean estimation errors over 200 simulations, measured in relative spectral norm $\|\hat\Sigma - \Sigma\|_{\Sigma}$, relative Frobenius norm $\|\hat\Sigma - \Sigma\|_{\Sigma,F}$, spectral norm $\|\hat\Sigma - \Sigma\|$ and max norm $\|\hat\Sigma - \Sigma\|_{\max}$, are reported in Figure 5. The errors for the sample covariance matrix are also depicted for comparison. First notice that, regardless of the norm, S-POET uniformly outperforms POET and the sample covariance. This affirms the claim that shrinkage of spiked eigenvalues is necessary to maintain good performance when the spikes are not sufficiently large. Since the low rank part is not shrunk for POET, its error under the spectral norm is comparable to, and even slightly larger than, that of the sample covariance matrix. The errors under max norm and relative Frobenius norm decrease, as expected, as T and p increase. However, the error under the relative spectral norm does not converge: our theory shows it should increase in the order .

Fig 5.

Estimation errors of covariance matrix under relative spectral, relative Frobenius, spectral and max norms using S-POET (red), POET (black) and sample covariance (blue).

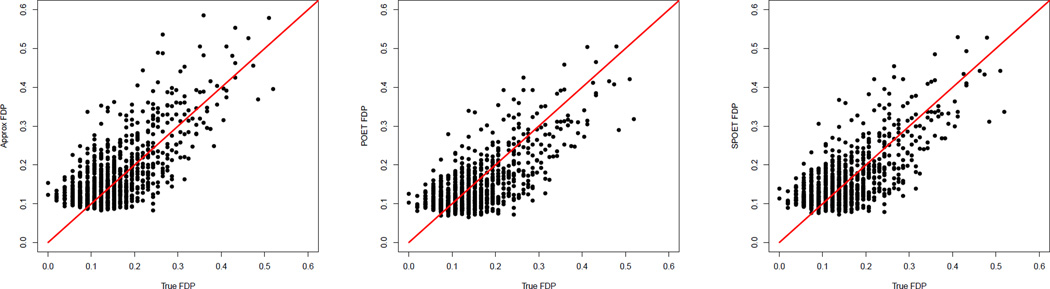

5.3. FDP estimation

In this section, we report simulation results on FDP estimation by using both POET and S-POET. The data are simulated in a similar way as in Section 5.2 with p = 1000 and n = 100. The first m = 3 eigenvalues have spike size proportional to $p^{2/3}$, which corresponds to α = β = 2/3 in Theorem 4.3. The true FDP is calculated by using FDP(t) = V(t)/R(t) with t = 0.01. The approximate FDP, $\mathrm{FDP}_A(t)$, is calculated as in (4.13) with known B but estimated W given by $\hat W = (B'B)^{-1}B'Z$. This $\mathrm{FDP}_A(t)$ based on a known covariance matrix serves as a benchmark for our estimated covariance matrix to compare with. We employed POET and S-POET to get and .

In Figure 6, three scatter plots are drawn to compare FDPA(t), and with the true FDP(t). The points are basically aligned along the 45 degree line, meaning that all of them are quite close to the true FDP. With the semi-strong signal , although much weaker than order p, POET accomplishes the task as well as S-POET. Both estimators perform as well as if we know the covariance matrix Σ, the benchmark.

Fig 6.

Comparison of estimated FDP’s with true values. The left plot assumes knowledge of B; the middle and right ones correspond to the POET and S-POET methods respectively. The results are aligned along the 45-degree line, indicating the accuracy of the estimated FDP.

6. Conclusions

In this paper, we studied two closely related problems: the asymptotic behavior of empirical eigenvalues and eigenvectors under a general regime of bounded p/(nλj) and the large covariance estimation for factor models with relaxed signal level of .

The first study provides new technical tools for the derivation of error bounds for large covariance estimation under relative Frobenius norm (with better rate) and relative spectral norm (for the first time). The results motivate the newly proposed covariance estimator S-POET for the second problem by correcting biases of the estimated leading eigenvalues. S-POET is demonstrated to have better sampling properties than POET, and this is convincingly verified in the simulation study. In addition, we are able to apply S-POET to two important applications, risk management and false discovery control, and relax the required signal to . Those conclusions shed new light on applications of factor models.

On the other hand, the second problem is a key motivation for us to study the empirical eigen-structure in a more general high dimensional regime. We aim to understand why PCA works for pervasive factor models but fails in classical random matrix problems, without sparsity assumptions. What is the fundamental limit for PCA in high dimension? We clearly showed that for both empirical eigenvalues and eigenvectors, consistency is granted once p/(nλj) → 0. Further, our theories give a fine-grained characterization of the asymptotic behavior under the generalized and unified regime, which includes the situation of bounded eigenvalues, HDLSS, and pervasive factor models, especially for empirical eigenvectors. The asymptotic rate of convergence is obtained as long as p/(nλj) is bounded, while the asymptotic distribution is fully described when . Some interesting phenomena, such as interaction between multiple spikes, are also revealed in our results. Our proofs are novel in that we clearly identify terms that keep the low-dimensional asymptotic normality and terms that generate the random biases. In sum, our results serve as a necessary complement of the random matrix literature when the signal diverges with dimensionality.

Acknowledgments

The research was partially supported by NSF grants DMS-1206464 and DMS-1406266 and NIH grants R01-GM072611-11 and R01GM100474-04. We would like to thank the Editor, associate editor, and anonymous referees for constructive comments that led to substantial improvement of the presentation and results of the paper.

APPENDIX A

PROOFS FOR SECTION 3

A.1. Proof of Theorem 3.1

We first provide three useful lemmas for the proof. Lemma A.1 provides non-asymptotic upper and lower bounds for the eigenvalues of a weighted Wishart matrix for sub-Gaussian distributions.

Lemma A.1

Let $A_1, \dots, A_n$ be n independent p-dimensional sub-Gaussian random vectors with zero mean and identity variance, and with sub-Gaussian norms bounded by a constant $C_0$. Then for every t ≥ 0, with probability at least $1 - 2\exp(-ct^2)$, one has

where for constants C, c > 0, depending on C0. Here the |wi| are bounded for all i and .

The above lemma is an extension of the classical Davidson-Szarek bound [Theorem II.7 of Davidson and Szarek (2001)] to the weighted sample covariance with sub-Gaussian distribution. It was shown by Vershynin (2010) that the conclusion holds with wi = 1 for all i. With techniques similar to those developed in Vershynin (2010), we can obtain the above lemma for general bounded weights. The details are omitted.

Now in order to prove the theorem, let us define two quantities and treat them separately in the following two lemmas. Let

where Zj is columns of . Then,

| (A.1) |

Lemma A.2

Under Assumptions 2.1 – 2.3, as n → ∞,

In addition, they are asymptotically independent.

Lemma A.3

Under Assumptions 2.1 – 2.3, for j = 1, ⋯, m, we have

The proofs of the above two lemmas will be given in Appendix ?? in the supplementary material (Wang and Fan, 2015).

Proof of Theorem 3.1

By Weyl's theorem, $\lambda_j(A) + \lambda_n(B) \le \hat\lambda_j \le \lambda_j(A) + \lambda_1(B)$. Therefore, from Lemma A.3,

By Lemma A.2 and Slutsky’s theorem, we conclude that converges in distribution to N(0, κj − 1) and the limiting distributions of the first m eigenvalues are independent.
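The eigenvalue inequality used at the start of this proof holds for any pair of symmetric matrices and is easy to check numerically. The sketch below does so for generic random positive semi-definite matrices; the matrices `A` and `B` here are arbitrary stand-ins for illustration, not the specific matrices defined in the proof.

```python
import numpy as np

def eig_desc(M):
    """Eigenvalues of a symmetric matrix in decreasing order."""
    return np.linalg.eigvalsh(M)[::-1]

rng = np.random.default_rng(0)
n = 8
G1 = rng.standard_normal((n, n))
G2 = rng.standard_normal((n, n))
A, B = G1 @ G1.T, G2 @ G2.T                  # arbitrary symmetric test matrices

lam_A, lam_B, lam_sum = eig_desc(A), eig_desc(B), eig_desc(A + B)
lower = lam_A + lam_B[-1]                    # lambda_j(A) + lambda_n(B)
upper = lam_A + lam_B[0]                     # lambda_j(A) + lambda_1(B)
print(np.all(lower <= lam_sum + 1e-10), np.all(lam_sum <= upper + 1e-10))
```

In the proof, the width of this sandwich is controlled by the spread of the eigenvalues of the second matrix, which is why a bound on its extreme eigenvalues suffices.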

A.2. Proofs of Theorem 3.2

The proof of Theorem 3.2 is mathematically involved. The basic idea for proving part (i) is outlined in Section 2. We relegate less important technical lemmas A.4 – A.6 to Appendix ?? in the supplementary material (Wang and Fan, 2015) in order not to distract the readers. The proof of part (ii) utilizes the invariance of standard Gaussian distribution under orthogonal transformations.

Proof of Theorem 3.2

(i) Let us start by proving the asymptotic normality of ξ̂jA for the case m > 1. Write

where each Zj follows a sub-Gaussian distribution with mean 0 and identity variance In. Then by the eigenvalue relationship of equation (2.1), we have

| (A.2) |

Recall uj is the eigenvector of the matrix Σ̃, that is, . Using , we obtain

| (A.3) |

where we denote , Δ = λ̂j/λj − (1 + c̄cj). We then left-multiply equation (A.3) by and employ relationship (A.2) to replace uj by ξ̂jA and ξ̂jB as follows:

| (A.4) |

Further define

Then we have . Note that R is only well defined if m > 1. Therefore, by left multiplying R to equation (A.4),

| (A.5) |

where . Dividing both sides by ‖ξ̂jA‖, we are able to write

| (A.6) |

where

| (A.7) |

Lemma A.4

As n → ∞, .

By Lemma A.4, rn is a smaller order term. Since ,

| (A.8) |

Now let us derive normality of the right hand side of (A.8). According to the definition of R,

| (A.9) |

Let and W(−j) be the (m − 1)-dimensional vector without the jth element in W. Since the jth diagonal element of R is zero, depends only on W(−j).

Lemma A.5

.

Therefore, by Lemma A.5 and Slutsky’s theorem,

Together with (A.8), we conclude (3.3) for the case m > 1.

Now let us turn to the case of m = 1. Since R is not defined for m = 1, we need to find a different derivation. Equivalently, (A.3) can be written as

Left-multiplying and using relationship (A.2), we obtain easily

where D is defined as before and according to the proof of Lemma A.4. Expanding at the point of (1 + c̄c1), we have

Note that from Lemmas A.2 and A.3, . Therefore due to the fact is asymptotically N(0, κ1 − 1), we conclude

This completes the first part of the proof.

(ii) We now prove the conclusion for the non-spiked part ξ̂jB. Recall that Xi follows N(0, Λ). Consider where as defined in the theorem . Here the superscript R indicates rescaled data by diag(Im, D0). After rescaling, we have . Correspondingly, the n × p data matrix XR = X diag(Im, D0) = (XA, XBD0) where and as the notations before. Assume and are eigenvectors given by Σ̂R and Σ̃R of the rescaled data XR and . It has been proved by Paul (2007) that is distributed uniformly over the unit sphere and is independent of due to the orthogonal invariance of the non-spiked part of . Hence it only remains to link ξ̂jB/‖ξ̂jB‖ with h0.

Note that Σ̃ = n−1XX′ and Σ̃R = n−1XRXR′, so

where the last term is of order by Lemma A.1. Thus by the sin θ theorem of Davis and Kahan (1970), . Next we convert from uj to ξ̂jB using the basic relationship (2.1). We have,

First it is not hard to see since and . The last result is due to the following lemma.

Lemma A.6

and .

We claim . Actually from the proof of Lemma A.6, we have

Then some elementary calculation gives the rate of I. Therefore, . The conclusion (3.4) follows.

To prove the max norm bound (3.5) of ‖ξ̂jB‖max, we first show . Recall that h0 is uniformly distributed on the unit sphere of dimension p − m. This follows easily from its normal representation. Let G be a (p − m)-dimensional standard normal vector; then $h_0 \overset{d}{=} G/\|G\|$. It then follows

From the derivation above,

which gives , given the fact that by Lemma A.6. Thus we are done with the second part of the proof.
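The normal representation of a uniform point on the sphere also gives a quick feel for the size of its largest coordinate. The sketch below draws one such point in dimension d = 10,000 (a hypothetical value chosen for illustration) and compares its max norm with $\sqrt{2\log d/d}$; using this familiar scale as the benchmark is an assumption of the illustration and is not a restatement of (3.5).

```python
import numpy as np

rng = np.random.default_rng(0)
d = 10_000                                   # stands in for p - m
G = rng.standard_normal(d)
h = G / np.linalg.norm(G)                    # uniform on the unit sphere in R^d

scale = np.sqrt(2 * np.log(d) / d)           # assumed benchmark for the max norm
ratio = np.abs(h).max() / scale
print(np.linalg.norm(h), np.abs(h).max(), scale, ratio)
```

The maximum of d standard normal coordinates grows like $\sqrt{2\log d}$ while the norm of G concentrates around $\sqrt{d}$, which is where the benchmark comes from.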

(iii) The proofs of the convergence of ‖ξ̂jA‖ and ‖ξ̂jB‖ are given in Lemma A.6. If m = 1, the result for ‖ξ̂jA‖ directly gives (3.6) with the same rate. For m > 1, from Lemma A.6 we have

On the other hand, from Theorem 3.2 (i), for k ≠ j ≤ m. So , which implies (3.6).

SUPPLEMENTARY MATERIAL

Supplement: Technical proofs (Wang and Fan, 2015). This document contains technical lemmas for Section 3 and the comparison of assumptions and theoretical proofs for Section 4.

REFERENCES

- Agarwal A, Negahban S, Wainwright MJ. Noisy matrix decomposition via convex relaxation: Optimal rates in high dimensions. The Annals of Statistics. 2012;40:1171–1197. [Google Scholar]

- Amini AA, Wainwright MJ. Information Theory 2008. ISIT 2008. IEEE International Symposium on. IEEE; 2008. High-dimensional analysis of semidefinite relaxations for sparse principal components; pp. 2454–2458. [Google Scholar]

- Anderson TW. Asymptotic theory for principal component analysis. The Annals of Mathematical Statistics. 1963;34:122–148. [Google Scholar]

- Antoniadis A, Fan J. Regularization of wavelet approximations. Journal of the American Statistical Association. 2001;96 [Google Scholar]

- Bai Z. Methodologies in spectral analysis of large-dimensional random matrices, a review. Statist. Sinica. 1999;9:611–677. [Google Scholar]

- Bai J. Inferential theory for factor models of large dimensions. Econometrica. 2003;71:135–171. [Google Scholar]

- Bai J, Ng S. Determining the number of factors in approximate factor models. Econometrica. 2002;70:191–221. [Google Scholar]

- Bai Z, Silverstein JW. Spectral analysis of large dimensional random matrices. 2nd. Springer; 2009. [Google Scholar]

- Bai Z, Yao J. on sample eigenvalues in a generalized spiked population model. Journal of Multivariate Analysis. 2012;106:167–177. [Google Scholar]

- Bai Z, Yin Y. Limit of the smallest eigenvalue of a large dimensional sample covariance matrix. The Annals of Probability. 1993:1275–1294. [Google Scholar]

- Baik J, Ben Arous G, Péché S. Phase transition of the largest eigenvalue for nonnull complex sample covariance matrices. The Annals of Probability. 2005:1643–1697. [Google Scholar]

- Benaych-Georges F, Nadakuditi RR. The eigenvalues and eigenvectors of finite, low rank perturbations of large random matrices. Advances in Mathematics. 2011;227:494–521. [Google Scholar]

- Berthet Q, Rigollet P. Optimal detection of sparse principal components in high dimension. The Annals of Statistics. 2013;41:1780–1815. [Google Scholar]

- Bickel PJ, Levina E. Covariance regularization by thresholding. The Annals of Statistics. 2008:2577–2604. [Google Scholar]

- Birnbaum A, Johnstone IM, Nadler B, Paul D. Minimax bounds for sparse PCA with noisy high-dimensional data. The Annals of Statistics. 2013;41:1055. doi: 10.1214/12-AOS1014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cai T, Fan J, Jiang T. Distributions of angles in random packing on spheres. The Journal of Machine Learning Research. 2013;14:1837–1864. [PMC free article] [PubMed] [Google Scholar]

- Cai T, Ma Z, Wu Y. Optimal estimation and rank detection for sparse spiked covariance matrices. Probability Theory and Related Fields. 2015;161:781–815. doi: 10.1007/s00440-014-0562-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Candès EJ, Li X, Ma Y, Wright J. Robust principal component analysis? Journal of the ACM (JACM) 2011;58:11. [Google Scholar]

- Chamberlain G, Rothschild M. Arbitrage, factor structure, and mean-variance analysis on large asset markets. Econometrica. 1983;51:1305–1324. [Google Scholar]

- Chandrasekaran V, Sanghavi S, Parrilo PA, Willsky AS. Rank-sparsity incoherence for matrix decomposition. SIAM Journal on Optimization. 2011;21:572–596. [Google Scholar]

- Chen KH, Shimerda TA. An empirical analysis of useful financial ratios. Financial Management. 1981:51–60. [Google Scholar]

- Davidson KR, Szarek SJ. Local operator theory, random matrices and Banach spaces. In: Johnson WB, Lindenstrauss J, editors. Handbook of the Geometry of Banach Spaces. Vol. 1. Elsevier Science BV; 2001. pp. 317–366. [Google Scholar]

- Davis C, Kahan WM. The rotation of eigenvectors by a perturbation. III. SIAM Journal on Numerical Analysis. 1970;7:1–46. [Google Scholar]

- De Mol C, Giannone D, Reichlin L. Forecasting using a large number of predictors: Is Bayesian shrinkage a valid alternative to principal components? Journal of Econometrics. 2008;146:318–328. [Google Scholar]

- Donoho DL, Gavish M, Johnstone IM. Optimal shrinkage of eigenvalues in the Spiked Covariance Model. arXiv preprint arXiv:1311.0851. 2014 doi: 10.1214/17-AOS1601. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fan J, Fan Y, Lu J. High dimensional covariance matrix estimation using a factor model. Journal of Econometrics. 2008;147:186–197. [Google Scholar]

- Fan J, Han X, Gu W. Estimating false discovery proportion under arbitrary covariance dependence. Journal of the American Statistical Association. 2012;107:1019–1035. doi: 10.1080/01621459.2012.720478. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fan J, Han X. Estimation of false discovery proportion with unknown dependence. arXiv preprint arXiv:1305.7007. 2013 doi: 10.1111/rssb.12204. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fan J, Liao Y, Mincheva M. Large covariance estimation by thresholding principal orthogonal complements. Journal of the Royal Statistical Society: Series B. 2013;75:1–44. doi: 10.1111/rssb.12016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fan J, Liao Y, Shi X. Risks of large portfolios. Journal of Econometrics. 2015;186:367–387. doi: 10.1016/j.jeconom.2015.02.015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fan J, Liao Y, Wang W. Projected Principal Component Analysis in Factor Models. The Annals of Statistics. 2016;44:219–254. doi: 10.1214/15-AOS1364. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fan J, Xue L, Yao J. Sufficient Forecasting Using Factor Models. arXiv preprint arXiv:1505.07414. 2015 [Google Scholar]

- Fan J, Liu H, Wang W, Zhu Z. Heterogeneity Adjustment with Applications to Graphical Model Inference. arXiv preprint arXiv:1602.05455. 2016 doi: 10.1214/18-EJS1466. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hall P, Marron J, Neeman A. Geometric representation of high dimension, low sample size data. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 2005;67:427–444. [Google Scholar]

- Johnstone IM. On the distribution of the largest eigenvalue in principal components analysis. The Annals of Statistics. 2001:295–327. [Google Scholar]

- Johnstone IM, Lu AY. On Consistency and Sparsity for Principal Components Analysis in High Dimensions. Journal of the American Statistical Association. 2009;104:682–693. doi: 10.1198/jasa.2009.0121. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jung S, Marron J. PCA consistency in high dimension, low sample size context. The Annals of Statistics. 2009;37:4104–4130. [Google Scholar]

- Koltchinskii V, Lounici K. Concentration Inequalities and Moment Bounds for Sample Covariance Operators. arXiv preprint arXiv:1405.2468. 2014a [Google Scholar]

- Koltchinskii V, Lounici K. Asymptotics and Concentration Bounds for Bilinear Forms of Spectral Projectors of Sample Covariance. arXiv preprint arXiv:1408.4643. 2014b [Google Scholar]

- Landgrebe J, Wurst W, Welzl G. Permutation-validated principal components analysis of microarray data. Genome Biol. 2002;3:1–11. doi: 10.1186/gb-2002-3-4-research0019.

- Lee S, Zou F, Wright FA. Convergence and prediction of principal component scores in high-dimensional settings. The Annals of Statistics. 2010;38:3605. doi: 10.1214/10-AOS821.

- Leek JT, Storey JD. A general framework for multiple testing dependence. Proceedings of the National Academy of Sciences. 2008;105:18718–18723. doi: 10.1073/pnas.0808709105.

- Leek JT, Scharpf RB, Bravo HC, Simcha D, Langmead B, Johnson WE, Geman D, Baggerly K, Irizarry RA. Tackling the widespread and critical impact of batch effects in high-throughput data. Nature Reviews Genetics. 2010;11:733–739. doi: 10.1038/nrg2825.

- Ma Z. Sparse principal component analysis and iterative thresholding. The Annals of Statistics. 2013;41:772–801.

- Onatski A. Asymptotics of the principal components estimator of large factor models with weakly influential factors. Journal of Econometrics. 2012;168:244–258.

- Paul D. Asymptotics of sample eigenstructure for a large dimensional spiked covariance model. Statistica Sinica. 2007;17:1617–1642.

- Pesaran MH, Zaffaroni P. Optimal asset allocation with factor models for large portfolios. 2008.

- Price AL, Patterson NJ, Plenge RM, Weinblatt ME, Shadick NA, Reich D. Principal components analysis corrects for stratification in genome-wide association studies. Nature Genetics. 2006;38:904–909. doi: 10.1038/ng1847.

- Ringnér M. What is principal component analysis? Nature Biotechnology. 2008;26:303–304. doi: 10.1038/nbt0308-303.

- Rothman AJ, Levina E, Zhu J. Generalized thresholding of large covariance matrices. Journal of the American Statistical Association. 2009;104:177–186.

- Shen D, Shen H, Zhu H, Marron J. Surprising asymptotic conical structure in critical sample eigen-directions. arXiv preprint arXiv:1303.6171. 2013.

- Stock JH, Watson MW. Forecasting using principal components from a large number of predictors. Journal of the American Statistical Association. 2002;97:1167–1179.

- Thomas CG, Harshman RA, Menon RS. Noise reduction in BOLD-based fMRI using component analysis. Neuroimage. 2002;17:1521–1537. doi: 10.1006/nimg.2002.1200.

- Vershynin R. Introduction to the non-asymptotic analysis of random matrices. arXiv preprint arXiv:1011.3027. 2010.

- Vu VQ, Lei J. Minimax rates of estimation for sparse PCA in high dimensions. arXiv preprint arXiv:1202.0786. 2012.

- Wang W, Fan J. Supplementary appendix to the paper “Asymptotics of empirical eigen-structure for high dimensional spiked covariance”. 2015.

- Yamaguchi-Kabata Y, Nakazono K, Takahashi A, Saito S, Hosono N, Kubo M, Nakamura Y, Kamatani N. Japanese population structure, based on SNP genotypes from 7003 individuals compared to other ethnic groups: effects on population-based association studies. The American Journal of Human Genetics. 2008;83:445–456. doi: 10.1016/j.ajhg.2008.08.019.

- Yata K, Aoshima M. Effective PCA for high-dimension, low-sample-size data with noise reduction via geometric representations. Journal of Multivariate Analysis. 2012;105:193–215.

- Yata K, Aoshima M. PCA consistency for the power spiked model in high-dimensional settings. Journal of Multivariate Analysis. 2013;122:334–354.
