Abstract

The naïve Bayes classifier (NBC) is one of the most popular classifiers for class prediction or pattern recognition from microarray gene expression data (MGED). However, when the location and scale parameters are estimated by the classical methods, it is highly sensitive to outliers. This is a major drawback of the classical NBC for gene expression data analysis, because gene expression datasets are often contaminated by outliers. These outliers arise from the many steps of the data generating process, from hybridization of DNA samples to image analysis. In this paper, we therefore attempt to robustify the Gaussian NBC by the minimum β-divergence method. The method has two roles here: it produces robust estimators of the location and scale parameters from the training dataset, and it detects and modifies outliers in the test dataset. The performance of the proposed method depends on the tuning parameter β, and the method reduces to the traditional naïve Bayes classifier when β → 0. We compared the proposed β-naïve Bayes classifier (β-NBC) with some popular existing classifiers (NBC, KNN, SVM, and AdaBoost) on both simulated and real gene expression datasets. The proposed method outperformed the others in the presence of outliers. Otherwise, its performance was almost the same as theirs.

1. Introduction

Classification is a supervised learning approach that assigns multivariate observations to one of several populations. It plays a significant role in bioinformatics through class prediction and pattern recognition from molecular OMICS datasets. Microarray gene expression data analysis is one of the most important branches of OMICS research in bioinformatics [1]. Several classification and clustering approaches have been proposed for analyzing MGED [2–11]. The Gaussian linear Bayes classifier (LBC) is one of the most popular classifiers for class prediction and pattern recognition. However, it is seldom used for microarray gene expression data analysis. The reason is that it requires the inverse of the covariance matrix, which is singular when the number of genes (p) exceeds the number of patients/samples (n) in the training dataset. The Gaussian naïve Bayes classifier (NBC) overcomes this difficulty by assuming that the variables are normally distributed and independent. If these two assumptions are violated, the nonparametric version of the NBC is suggested [12]. Nonparametric classifiers work well in that case, but they perform poorly for small sample sizes or in the presence of outliers. Small samples are the norm in MGED studies because of cost and limited specimen availability [13]. There are also some other versions of the NBC [14, 15], but none of them is robust against outliers. This is a major drawback of the existing NBCs for gene expression data analysis, since gene expression datasets are often contaminated by outliers. These outliers arise from the many steps of the data generating process, from hybridization of DNA samples to image analysis. Therefore, in this paper, we attempt to robustify the Gaussian NBC by the minimum β-divergence method in two steps.
In step 1, the minimum β-divergence method [16–18] estimates the parameters of the Gaussian NBC from the training dataset. In step 2, outlying data vectors in the test dataset are detected using the β-weight function. We then propose criteria to detect the outlying components of such a test vector and to replace them with reasonable values. The performance of the proposed method depends on the tuning parameter β, and the method reduces to the traditional Gaussian NBC when β → 0. We therefore call the proposed classifier β-NBC.

We investigate the robustness of the proposed β-NBC by comparing it with several robust linear classifiers. These are based on the M-estimator [19, 20], the MCD (Minimum Covariance Determinant) and MVE (Minimum Volume Ellipsoid) estimators [21, 22], the Orthogonalized Gnanadesikan-Kettenring (OGK) estimator, MCD-A, MCD-B, and MCD-C [23], and the Feasible Solution Algorithm (FSA) [24–26]. We observed that the proposed β-NBC outperforms these robust linear classifiers. We then compare the proposed method with some popular classifiers that are widely used in gene expression data analysis: the Support Vector Machine (SVM), k-nearest neighbors (KNN), and AdaBoost [27–29]. The proposed method improves on the others in the presence of outliers. Otherwise, its performance is almost the same.

2. Methodology

2.1. Naïve Bayes Classifier

The naïve Bayes classifiers (NBCs) [30] are a family of probabilistic classifiers based on Bayes' theorem. They make the (naïve) assumption that the variables are independent within each class, and the Gaussian NBC additionally assumes that each variable is normally distributed. An NBC picks the most probable hypothesis, which is known as the maximum a posteriori (MAP) decision rule. Assume that we have a training sample of vectors {xjk = (x1jk, x2jk,…,xpjk)T; j = 1, 2,…, Nk} of size Nk for k = 1, 2,…, K. Here xijk denotes the jth observation of the ith variable in the kth population/class (Ck). The NBC then assigns an unlabeled vector x = (x1, x2,…, xp)T to a class label k as follows:

$$\hat{k} = \arg\max_{k \in \{1, \ldots, K\}} \; p(C_k)\, f_k(\mathbf{x} \mid \theta_k, C_k) \tag{1}$$

For the Gaussian NBC, the density function fk(x∣θk, Ck) of the kth population/class (Ck) can be written as

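$$f_k(\mathbf{x} \mid \theta_k, C_k) = \prod_{i=1}^{p} \frac{1}{\sqrt{2\pi\sigma_{ik}^2}} \exp\left\{-\frac{(x_i - \mu_{ik})^2}{2\sigma_{ik}^2}\right\} = (2\pi)^{-p/2}\,|\Lambda_k|^{-1/2} \exp\left\{-\frac{1}{2}(\mathbf{x} - \mu_k)^T \Lambda_k^{-1} (\mathbf{x} - \mu_k)\right\} \tag{2}$$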

where θk = {μk, Λk}, μk = (μ1k, μ2k,…,μpk)T is the mean vector, and the diagonal covariance matrix is

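$$\Lambda_k = \operatorname{diag}\left(\sigma_{1k}^2, \sigma_{2k}^2, \ldots, \sigma_{pk}^2\right) \tag{3}$$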
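The MAP rule (1) with the diagonal Gaussian density (2)–(3) can be sketched in Python as shown below. This is a minimal illustration, not the authors' code. The function name `gaussian_nbc_predict` and the array layout (one row of means and one row of variances per class) are our own assumptions. The sketch works with log densities. This gives the same arg max as (1) but avoids numerical underflow when p is large.

```python
import numpy as np

def gaussian_nbc_predict(x, means, variances, priors):
    """MAP rule (1) for a Gaussian NBC with diagonal covariances (2)-(3).

    means, variances: arrays of shape (K, p) holding mu_ik and sigma^2_ik.
    priors: array of shape (K,) holding p(C_k).
    Returns the 0-based index of the class with the largest log posterior.
    """
    x = np.asarray(x, dtype=float)
    means = np.asarray(means, dtype=float)
    variances = np.asarray(variances, dtype=float)
    # log f_k(x | theta_k) = sum_i log N(x_i; mu_ik, sigma^2_ik) under independence
    log_lik = -0.5 * np.sum(
        np.log(2.0 * np.pi * variances) + (x - means) ** 2 / variances, axis=1
    )
    log_post = np.log(np.asarray(priors, dtype=float)) + log_lik
    return int(np.argmax(log_post))

means = np.array([[0.0, 0.0], [3.0, 3.0]])
variances = np.ones((2, 2))
priors = np.array([0.5, 0.5])
print(gaussian_nbc_predict([2.5, 2.9], means, variances, priors))  # → 1
```

Dropping the constant term −(p/2) log 2π would not change the decision. It is kept so that `log_lik` is the actual log density.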

2.2. Maximum Likelihood Estimators (MLEs) for the Gaussian NBC

We assume that the prior probabilities p(Ck) are known. The maximum likelihood estimators (MLEs) μ̂k and Λ̂k of μk and Λk are obtained from the training dataset as follows:

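$$\hat{\mu}_k = (\hat{\mu}_{1k}, \hat{\mu}_{2k}, \ldots, \hat{\mu}_{pk})^T = \frac{1}{N_k}\sum_{j=1}^{N_k} \mathbf{x}_{jk}, \tag{4}$$

$$\hat{\sigma}_{ik}^2 = \frac{1}{N_k}\sum_{j=1}^{N_k} (x_{ijk} - \hat{\mu}_{ik})^2, \tag{5}$$

$$\hat{\Lambda}_k = \operatorname{diag}\left(\hat{\sigma}_{1k}^2, \hat{\sigma}_{2k}^2, \ldots, \hat{\sigma}_{pk}^2\right), \tag{6}$$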

where μ̂ik is the ith component of μ̂k, $N = \sum_{k=1}^{K} N_k$ is the total size of the training dataset, and i = 1, 2,…, p.
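The following is a minimal sketch of the MLEs (4)–(6). It assumes a NumPy feature matrix `X` with one row per sample and a label vector `y`; the helper name `fit_gaussian_nbc_mle` is hypothetical. Note the MLE divisor Nk. A variable with zero within-class variance would make (2) undefined, and this sketch does not guard against that.

```python
import numpy as np

def fit_gaussian_nbc_mle(X, y):
    """MLEs (4)-(6): per-class mean vector and diagonal variances (divisor N_k)."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    classes = np.unique(y)
    means = np.array([X[y == k].mean(axis=0) for k in classes])
    # np.var uses ddof=0 by default, i.e. the MLE divisor N_k rather than N_k - 1
    variances = np.array([X[y == k].var(axis=0) for k in classes])
    return classes, means, variances

X = np.array([[1.0, 2.0], [3.0, 2.0], [10.0, 0.0], [12.0, 4.0]])
y = np.array([0, 0, 1, 1])
classes, means, variances = fit_gaussian_nbc_mle(X, y)
print(means)      # class means: (2, 2) and (11, 2)
print(variances)  # class variances: (1, 0) and (1, 4)
```

The second class-0 variable has zero variance in this toy example, which illustrates the degenerate case mentioned above.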

It is clear from (1)–(2) that the Gaussian NBC depends on the mean vectors (μk) and the diagonal covariance matrices (Λk). These are estimated from the training dataset by the MLEs given in (4)–(6). Because these estimators are sensitive to outliers, the MLE-based Gaussian NBC can produce misleading results when the datasets contain outliers. To overcome this problem, we robustify the Gaussian NBC by the minimum β-divergence method [16–18].

2.3. Robustification of Gaussian NBC by the Minimum β-Divergence Method (Proposed)

2.3.1. Minimum β-Divergence Estimators for the Gaussian NBC

Let g(xk) be the true density and f(xk∣θk) the model density of the kth population. The β-divergence between the two densities is then defined by

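$$D_\beta\big(g(\mathbf{x}_k), f(\mathbf{x}_k \mid \theta_k)\big) = \int \left[\frac{1}{\beta}\left\{g^{\beta}(\mathbf{x}_k) - f^{\beta}(\mathbf{x}_k \mid \theta_k)\right\} g(\mathbf{x}_k) - \frac{1}{1+\beta}\left\{g^{1+\beta}(\mathbf{x}_k) - f^{1+\beta}(\mathbf{x}_k \mid \theta_k)\right\}\right] d\mathbf{x}_k \tag{7}$$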

for β > 0. Moreover, Dβ(g(xk), f(xk∣θk)) ≥ 0, with equality if and only if g(xk) = f(xk∣θk) for all xk. As β tends to zero, the β-divergence reduces to the Kullback–Leibler (K-L) divergence; that is,

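$$\lim_{\beta \to 0} D_\beta\big(g(\mathbf{x}_k), f(\mathbf{x}_k \mid \theta_k)\big) = \int g(\mathbf{x}_k) \log\frac{g(\mathbf{x}_k)}{f(\mathbf{x}_k \mid \theta_k)}\, d\mathbf{x}_k = D_{KL}\big(g(\mathbf{x}_k), f(\mathbf{x}_k \mid \theta_k)\big) \tag{8}$$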
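The limit in (8) can be checked numerically. The sketch below is an illustration under our own choices. It evaluates the β-divergence (7) between two one-dimensional normal densities, g = N(0, 1) and f = N(1, 1.5²), by a Riemann sum on a fine grid. It then compares the result with the closed-form K-L divergence log(σf/σg) + (σg² + (μg − μf)²)/(2σf²) − 1/2. The grid range and the two densities are arbitrary choices.

```python
import numpy as np

def normal_pdf(x, mu, sigma):
    return np.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * np.sqrt(2.0 * np.pi))

def beta_divergence(g, f, dx, beta):
    """Riemann-sum approximation of D_beta(g, f) in (7) on a uniform grid."""
    integrand = (g**beta - f**beta) * g / beta - (g**(1 + beta) - f**(1 + beta)) / (1 + beta)
    return float(np.sum(integrand) * dx)

x = np.linspace(-12.0, 12.0, 200001)
dx = x[1] - x[0]
g = normal_pdf(x, 0.0, 1.0)   # "true" density
f = normal_pdf(x, 1.0, 1.5)   # model density
kl = float(np.sum(g * np.log(g / f)) * dx)                     # numerical K-L divergence
kl_exact = np.log(1.5) + (1.0 + 1.0**2) / (2 * 1.5**2) - 0.5   # closed form, about 0.35
for beta in (0.5, 0.1, 0.01, 0.001):
    print(beta, beta_divergence(g, f, dx, beta))   # approaches kl_exact as beta -> 0
print("KL:", kl, "closed form:", kl_exact)
```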

The minimum β-divergence estimator is defined by

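$$\hat{\theta}_{k,\beta} = \arg\min_{\theta_k} D_\beta\big(g(\mathbf{x}_k), f(\mathbf{x}_k \mid \theta_k)\big) \tag{9}$$

where, in practice, g(xk) is replaced by the empirical distribution of the training data of the kth class.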

For the Gaussian density, θk = {μk, Λk}. The minimum β-divergence estimators μ̂k,β and Λ̂k,β of the mean vector μk and the diagonal covariance matrix Λk, respectively, are obtained iteratively as follows:

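$$\mu_{k,t+1} = \frac{\sum_{j=1}^{N_k} W_\beta(\mathbf{x}_{jk} \mid \theta_{k,t})\, \mathbf{x}_{jk}}{\sum_{j=1}^{N_k} W_\beta(\mathbf{x}_{jk} \mid \theta_{k,t})}, \qquad \Lambda_{k,t+1} = \operatorname{diag}\left(\sigma_{1k,t+1}^2, \ldots, \sigma_{pk,t+1}^2\right), \tag{10}$$

where

$$\sigma_{ik,t+1}^2 = \frac{(1+\beta) \sum_{j=1}^{N_k} W_\beta(\mathbf{x}_{jk} \mid \theta_{k,t})\, (x_{ijk} - \mu_{ik,t+1})^2}{\sum_{j=1}^{N_k} W_\beta(\mathbf{x}_{jk} \mid \theta_{k,t})}, \tag{11}$$

$$W_\beta(\mathbf{x}_{jk} \mid \theta_{k,t}) = \exp\left\{-\frac{\beta}{2}(\mathbf{x}_{jk} - \mu_{k,t})^T \Lambda_{k,t}^{-1} (\mathbf{x}_{jk} - \mu_{k,t})\right\} \tag{12}$$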

The derivation of (10)–(12) is straightforward, as described in previous works [17, 18]. The function Wβ(xjk∣θk) in (12) is called the β-weight function, and it plays the key role in the robust estimation of the parameters. As β tends to 0, the weights tend to 1, and (10) reduces to the classical noniterative estimates of the mean vector and diagonal covariance matrix given in (4) and (6), respectively. The performance of the proposed method depends on the value of the tuning parameter β and on the initialization of the Gaussian parameters θk = {μk, Λk}.
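The fixed-point iteration (10)–(12) for a single class can be sketched as follows. This is a minimal illustration that stores the diagonal covariance as a vector of variances. The names `beta_weights` and `min_beta_div_fit`, the stopping tolerance, and the iteration cap are our own choices rather than the authors' settings. Setting β = 0 makes every weight equal to 1, so the first iteration returns the MLEs (4)–(6).

```python
import numpy as np

def beta_weights(X, mu, var, beta):
    """beta-weight function (12) for every row of X (diagonal covariance)."""
    d2 = np.sum((X - mu) ** 2 / var, axis=1)   # squared Mahalanobis distances
    return np.exp(-0.5 * beta * d2)

def min_beta_div_fit(X, beta, mu0, var0, max_iter=500, tol=1e-8):
    """Fixed-point iteration of (10)-(11) for one class; returns (mu, var)."""
    X = np.asarray(X, dtype=float)
    mu = np.asarray(mu0, dtype=float).copy()
    var = np.asarray(var0, dtype=float).copy()
    for _ in range(max_iter):
        w = beta_weights(X, mu, var, beta)          # weights at theta_{k,t}
        mu_new = w @ X / w.sum()                    # mean update in (10)
        # variance update (11); the factor (1 + beta) offsets the shrinkage
        # that Gaussian-shaped weights impose on the weighted variance
        var_new = (1.0 + beta) * (w @ (X - mu_new) ** 2) / w.sum()
        done = (np.max(np.abs(mu_new - mu)) < tol
                and np.max(np.abs(var_new - var)) < tol)
        mu, var = mu_new, var_new
        if done:
            break
    return mu, var

# Usage: 200 clean points plus 20 gross outliers at (40, 40)
rng = np.random.default_rng(0)
clean = rng.normal(loc=[0.0, 5.0], scale=[1.0, 2.0], size=(200, 2))
X = np.vstack([clean, np.full((20, 2), 40.0)])
mu_b, var_b = min_beta_div_fit(X, beta=0.2, mu0=np.median(X, axis=0), var0=np.ones(2))
print("sample mean:", X.mean(axis=0))    # pulled towards the outliers
print("beta estimate:", mu_b, var_b)     # near the clean mean (0, 5) and variances (1, 4)
```

The outliers receive weights of essentially zero, so they barely affect the estimates. The sample mean, by contrast, moves by more than two units in each coordinate.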

2.3.2. Parameters Initialization and Breakdown Points of the Estimates

The mean vector μk is initialized by the median vector. The mean and median coincide for a normal distribution, and the median (Me) is a highly robust estimator of the center of a distribution, with a 50% breakdown point. The median vector of the kth class/population is defined as

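$$\mathbf{Me}_k = \left(\operatorname{Me}_j\{x_{1jk}\}, \operatorname{Me}_j\{x_{2jk}\}, \ldots, \operatorname{Me}_j\{x_{pjk}\}\right)^T, \quad j = 1, 2, \ldots, N_k \tag{13}$$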

The diagonal covariance matrix Λk is initialized by the identity matrix (I). The iterative procedure is expected to converge to the optimal point of the parameters, because the initial (median) mean vector lies near the center of the dataset even when up to 50% of the data are outliers. The proposed estimators can resist more than 50% contamination if μk is initialized by a vector that belongs to the good part of the dataset and Λk by the identity matrix (I). Further discussion of the high breakdown points of the minimum β-divergence estimators can be found in [18].
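The following small illustration, using data of our own choosing, shows why the median initialization (13) is safe. When 40% of a class is replaced by gross outliers, the mean vector is dragged towards them, but the componentwise median still falls inside the clean part of the data. Together with the identity matrix for Λk, such a vector gives the kind of starting point described above for the iteration (10)–(12).

```python
import numpy as np

rng = np.random.default_rng(1)
clean = rng.normal(0.0, 1.0, size=(60, 3))    # good part of class k
bad = rng.normal(25.0, 1.0, size=(40, 3))     # 40% gross outliers
X = np.vstack([clean, bad])

mu_init = np.median(X, axis=0)     # median vector (13), one median per variable
Lambda_init = np.eye(X.shape[1])   # identity initialization of Lambda_k

print("mean:  ", X.mean(axis=0))   # roughly 10 in every coordinate
print("median:", mu_init)          # stays among the clean values (below 2)
```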

2.3.3. β-Selection Using T-Fold Cross Validation (CV) for Parameter Estimation

To select an appropriate β by CV, we fix the tuning parameter of the cross-validation criterion at β0. The computational steps for selecting β by T-fold cross validation are given below.

Step 1. Split the dataset Dk = {xjk; j = 1, 2,…, Nk} into T subsets Dk(1), Dk(2),…, Dk(T), where Dk(t) = {xsk; s = 1, 2,…, Ntk} and $\sum_{t=1}^{T} N_{tk} = N_k$.

Step 2. For t = 1, 2,…, T, let Dkc(t) = {xsk ∣ xsk ∉ Dk(t)} be the complement of Dk(t), of size Ntkc = Nk − Ntk.

Step 3. Estimate μ̂k,β and Λ̂k,β iteratively by (10) from the dataset Dkc(t).

Step 4. Compute CVk(t) on the dataset Dk(t) for each candidate value of β.

Step 5. End.

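The suitable β is then selected by

$$\hat{\beta} = \arg\min_{\beta} \mathfrak{D}_{k,\beta_0}(\beta) \tag{14}$$

where $\mathfrak{D}_{k,\beta_0}(\beta) = \frac{1}{N_k}\sum_{t=1}^{T} CV_k(t)$.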

If the sample size Nk is so small that Ntkc = Nk − Ntk < p, leave-one-out CV (T = Nk) can be used to select β. Further discussion of β selection can be found in [16–18].
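The CV procedure (Steps 1–5 and (14)) can be sketched as follows. The definition of CVk(t) is not reproduced in this section, so the sketch makes a labeled assumption: CVk(t) is taken to be the held-out empirical β0-cross-entropy. That is the θ-dependent part of the β0-divergence (7), evaluated on Dk(t) with g replaced by the held-out points. The choices β0 = 0.1 and T = 5, the candidate grid, and all function names are also illustrative. For a diagonal Gaussian, the integral of f^(1+β0) has the closed form (1 + β0)^(−p/2) (2π)^(−pβ0/2) |Λ|^(−β0/2).

```python
import numpy as np

def fit_beta(X, beta, n_iter=200):
    """Minimum beta-divergence estimates (10)-(12); median/identity start, fixed iteration count."""
    mu, var = np.median(X, axis=0), np.ones(X.shape[1])
    for _ in range(n_iter):
        w = np.exp(-0.5 * beta * np.sum((X - mu) ** 2 / var, axis=1))
        mu = w @ X / w.sum()
        var = (1.0 + beta) * (w @ (X - mu) ** 2) / w.sum()
    return mu, var

def cv_score(X_out, mu, var, beta0):
    """ASSUMED CV_k(t): held-out beta0-cross-entropy summed over the points of D_k(t)."""
    p = X_out.shape[1]
    log_f = -0.5 * np.sum(np.log(2 * np.pi * var) + (X_out - mu) ** 2 / var, axis=1)
    # closed form of the integral of f^(1 + beta0) for a diagonal Gaussian
    int_f = (1 + beta0) ** (-p / 2) * np.exp(-0.5 * beta0 * np.sum(np.log(2 * np.pi * var)))
    return float(np.sum(-np.exp(beta0 * log_f) / beta0 + int_f / (1 + beta0)))

def select_beta(X, betas, beta0=0.1, T=5, seed=0):
    """Steps 1-5 and (14): return the beta minimizing D_{k,beta0}(beta), plus all scores."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(X)), T)     # Step 1: T subsets D_k(t)
    scores = []
    for beta in betas:
        total = 0.0
        for out_idx in folds:
            X_in = np.delete(X, out_idx, axis=0)             # Step 2: complement D_k^c(t)
            mu, var = fit_beta(X_in, beta)                   # Step 3
            total += cv_score(X[out_idx], mu, var, beta0)    # Step 4
        scores.append(total / len(X))                        # (1/N_k) * sum_t CV_k(t)
    return betas[int(np.argmin(scores))], scores

rng = np.random.default_rng(2)
X = np.vstack([rng.normal(0.0, 1.0, (90, 3)), rng.normal(8.0, 1.0, (10, 3))])  # 10% outliers
betas = np.array([0.0, 0.05, 0.1, 0.2, 0.3, 0.5])
best, scores = select_beta(X, betas)
print("selected beta:", best)
```

With 10% contamination, the non-robust fit (β = 0) receives a visibly worse score than the robust candidates. This is the behavior the selection rule (14) relies on.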

2.3.4. Outlier Identification Using β-Weight Function

The performance of the NBC in classifying an unlabeled data vector x using (1) depends not only on robust estimation of the parameters but also on whether x itself is contaminated. The data vector x = (x1, x2,…, xp)T is said to be contaminated if at least one of its components is an outlier. To derive a criterion for deciding whether x is contaminated, we consider the β-weight function (12) and rewrite it as follows:

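$$W_\beta(\mathbf{x} \mid \hat{\theta}_{k,\beta}) = \exp\left\{-\frac{\beta}{2}(\mathbf{x} - \hat{\mu}_{k,\beta})^T \hat{\Lambda}_{k,\beta}^{-1} (\mathbf{x} - \hat{\mu}_{k,\beta})\right\}, \quad k = 1, 2, \ldots, K \tag{15}$$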

The values of this weight function lie in (0, 1]. It produces larger weights (close to 1) if x ∈ Ck, and smaller weights (close to 0) if x ∉ Ck or x is contaminated by outliers. Therefore, the β-weight function (15) can be characterized as

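$$W_\beta(\mathbf{x} \mid \hat{\theta}_{k,\beta}) \begin{cases} \ge \psi_k, & \text{if } \mathbf{x} \in C_k, \\ < \psi_k, & \text{if } \mathbf{x} \notin C_k \text{ or } \mathbf{x} \text{ is contaminated by outliers} \end{cases} \tag{16}$$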

The threshold value ψk can be determined from the empirical distribution of the β-weight function, as discussed in [31]. Specifically, it is taken as the quantile of Wβ(xjk∣θ̂k,β), j = 1, 2,…, Nk, with probability

$$\Pr\left\{W_\beta(\mathbf{x}_{jk} \mid \hat{\theta}_{k,\beta}) \le \psi_k\right\} = \vartheta \tag{17}$$

where ϑ is the probability used to select the cut-off value ψk, and ϑ should lie between 0.00 and 0.05. In this paper, we heuristically choose ϑ = 0.03 to fix the cut-off value ψk for detecting outlying data vectors using (18). This idea was first introduced in [31].

The criterion for deciding whether the unlabeled data vector x is contaminated is then defined as follows:

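$$\mathbf{x} \text{ is } \begin{cases} \text{usual}, & \text{if } \sum_{k=1}^{K} W_\beta(\mathbf{x} \mid \hat{\theta}_{k,\beta}) \ge \psi, \\ \text{unusual (contaminated)}, & \text{otherwise} \end{cases} \tag{18}$$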

where $\psi = \sum_{k=1}^{K} \psi_k$.
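The thresholding in (17) and the contamination check can be sketched as follows. The sketch assumes that (18) flags x as unusual when the summed weights Σk Wβ(x∣θ̂k,β) fall below ψ = Σk ψk. That reading of (18), the function names, and the use of known parameters in place of the robust estimates from (10)–(12) are our own assumptions. Each ψk is the empirical ϑ-quantile (ϑ = 0.03) of the training weights of class k, computed with `numpy.quantile`.

```python
import numpy as np

def beta_weight(x, mu, var, beta):
    """beta-weight (15) of one or more vectors x under a diagonal Gaussian."""
    x = np.atleast_2d(x)
    return np.exp(-0.5 * beta * np.sum((x - mu) ** 2 / var, axis=1))

def class_thresholds(train_sets, params, beta, theta=0.03):
    """psi_k = empirical theta-quantile of the training weights of class k, as in (17)."""
    return np.array([
        np.quantile(beta_weight(Xk, mu, var, beta), theta)
        for Xk, (mu, var) in zip(train_sets, params)
    ])

def is_contaminated(x, params, psi_k, beta):
    """ASSUMED form of (18): flag x when its summed weights fall below psi = sum_k psi_k."""
    total = sum(beta_weight(x, mu, var, beta)[0] for mu, var in params)
    return bool(total < psi_k.sum())

rng = np.random.default_rng(3)
X1 = rng.normal(0.0, 1.0, (50, 4))
X2 = rng.normal(3.0, 1.0, (50, 4))
# the true parameters stand in for the robust estimates from (10)-(12)
params = [(np.zeros(4), np.ones(4)), (np.full(4, 3.0), np.ones(4))]
psi_k = class_thresholds([X1, X2], params, beta=0.2)
print(is_contaminated(np.array([0.1, -0.3, 0.2, 0.0]), params, psi_k, beta=0.2))   # usual vector
print(is_contaminated(np.array([0.1, -0.3, 15.0, 0.0]), params, psi_k, beta=0.2))  # one gross outlier
```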

However, in this paper, we directly choose the threshold value of ψ as follows:

| (19) |

where 𝔇 is the training dataset including the unclassified data vector x, and η = 0.10 is chosen heuristically. The rule (19) was also used in previous works [16, 18] to choose the threshold value for outlier detection.

2.3.5. Classification by the Proposed β-NBC

When the unlabeled data vector x is usual, its label/class can be determined by plugging the minimum β-divergence estimators θ̂k,β = {μ̂k,β, Λ̂k,β} into the prediction rule (1). If x is unusual (contaminated by outliers), we propose the following classification rule. We first compute the absolute differences between the outlying vector and each of the mean vectors as

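$$\mathbf{d}_k = |\mathbf{x} - \hat{\mu}_{k,\beta}| = \left(|x_1 - \hat{\mu}_{1k}|, |x_2 - \hat{\mu}_{2k}|, \ldots, |x_p - \hat{\mu}_{pk}|\right)^T = (d_{k1}, d_{k2}, \ldots, d_{kp})^T, \quad k = 1, 2, \ldots, K \tag{20}$$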

We then compute the sum of the r smallest components of dk, Skr = dk(1) + dk(2) + ⋯ + dk(r). Here dk(1) ≤ dk(2) ≤ ⋯ ≤ dk(p) are the ordered components of dk, and r = round(p/2). The unlabeled test data vector x is then classified as

$$\hat{k} = \arg\min_{k \in \{1, \ldots, K\}} S_{kr} \tag{21}$$

If the outlying test vector x is classified into class k, its ith component is declared outlying if dki > Skr (i = 1, 2,…, p). We then update x by replacing its outlying components with the corresponding components of the mean vector of the kth population. Let x∗ denote the updated vector. We then use x∗ instead of x to confirm the label/class of x using (1).
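The rule (20)–(21) and the replacement of outlying components can be sketched as follows. `classify_outlying` is a hypothetical helper. In practice, the mean vectors passed to it would be the minimum β-divergence estimates μ̂k,β. Note that Python's built-in `round` rounds halves to even (round(2.5) == 2), which determines r = round(p/2) when p is odd.

```python
import numpy as np

def classify_outlying(x, means):
    """Rule (20)-(21) for a test vector flagged as contaminated.

    Returns the chosen class index and a copy of x whose outlying components
    (d_ki > S_kr) are replaced by the corresponding mean components.
    """
    x = np.asarray(x, dtype=float)
    means = np.asarray(means, dtype=float)          # shape (K, p)
    r = int(round(x.size / 2))                      # r = round(p/2)
    d = np.abs(x - means)                           # d_k of (20) for every class, shape (K, p)
    S = np.sort(d, axis=1)[:, :r].sum(axis=1)       # S_kr: sum of the r smallest components
    k = int(np.argmin(S))                           # (21)
    x_star = x.copy()
    outlying = d[k] > S[k]                          # outlying components of x
    x_star[outlying] = means[k, outlying]
    return k, x_star

means = np.array([[0.0] * 6, [10.0] * 6])
x = np.array([11.0, 9.0, 10.5, 9.5, 60.0, 10.8])   # close to class 1 except one gross outlier
k, x_star = classify_outlying(x, means)
print(k, x_star)   # class 1; the fifth component is replaced by 10
```

The updated vector `x_star` would then be passed to the MAP rule (1) to confirm the label.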

3. Simulation Study

3.1. Simulated Dataset 1

To investigate the performance of the proposed β-NBC in comparison with four popular classifiers (KNN, NBC, SVM, and AdaBoost), we generated training and test datasets from m = 2 multivariate normal distributions. The distributions have different mean vectors μk (k = 1, 2) of length p = 10 and a common covariance matrix (Λk = Λ; k = 1, 2). We generated N1 = 40 samples from the first population and N2 = 42 samples from the second population for both the training and test datasets. We computed the training and test error rates of all five classifiers on both the original and contaminated datasets. We used different pairs of mean vectors {(μ1, μ2 = μ1 + t); t = 0,…, 9} and kept the other parameters the same for each dataset. For convenience of presentation, the second mean vector is obtained by adding t to each component of the first mean vector.

3.2. Simulated Dataset 2

To compare the proposed β-NBC with the classical NBC for classifying objects into two groups, we consider the gene expression data generating model displayed in Table 1. This model was also used by Nowak and Tibshirani [32]. In Table 1, the Normal column gives the gene expressions of normal individuals and the Patient column those of patients. Row A corresponds to the genes of group A and row B to the genes of group B. To randomize the gene expressions, Gaussian noise from N(0, σ²) is added. We first generate a training gene-set from the model in Table 1 with parameters d = 5 and σ² = 1. It contains p1 = 30 genes {A1, A2,…, A30} for group A and p2 = 30 genes {B1, B2,…, B30} for group B, measured on n1 = 30 normal individuals and n2 = 30 patients (e.g., with cancer or any other disease). We then generate a test gene-set from the same model with the same parameters (d = 5, σ² = 1). It contains p11 = 30 genes {A31, A32,…, A60} for group A and p22 = 30 genes {B31, B32,…, B60} for group B, measured on n11 = 25 normal individuals and n22 = 25 patients.

Table 1.

Gene expression data generating model.

| Gene group | Normal | Patient |
|---|---|---|
| A | d + N(0, σ²) | −d + N(0, σ²) |
| B | −d + N(0, σ²) | d + N(0, σ²) |
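The following sketch implements the data generating model in Table 1 and produces a genes × individuals matrix. The function name and argument layout are illustrative assumptions. The values d = 5 and σ² = 1 and the gene and sample counts follow Section 3.2, and the seeds are arbitrary.

```python
import numpy as np

def simulate_table1(pA, pB, n_normal, n_patient, d=5.0, sigma2=1.0, seed=None):
    """Genes x individuals matrix from the model in Table 1.

    Rows: pA genes of group A followed by pB genes of group B.
    Columns: n_normal normal individuals followed by n_patient patients.
    """
    rng = np.random.default_rng(seed)
    mean = np.zeros((pA + pB, n_normal + n_patient))
    mean[:pA, :n_normal] = d          # group A, normal:   d
    mean[:pA, n_normal:] = -d         # group A, patient: -d
    mean[pA:, :n_normal] = -d         # group B, normal:  -d
    mean[pA:, n_normal:] = d          # group B, patient:  d
    return mean + rng.normal(0.0, np.sqrt(sigma2), size=mean.shape)

train = simulate_table1(30, 30, 30, 30, seed=0)   # training gene-set of Section 3.2
test = simulate_table1(30, 30, 25, 25, seed=1)    # test gene-set
print(train.shape, test.shape)                    # (60, 60) (60, 50)
```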

3.3. Simulated Dataset 3

To demonstrate the performance of the proposed β-NBC, we compare it with the robust linear classifiers based on the robust estimators mentioned earlier (MCD, MVE, OGK, MCD-A, MCD-B, MCD-C, and FSA) for classifying objects into different groups. We generated training and test datasets from m = 2 and m = 3 multivariate normal distributions with p = 10 and p = 5 variables, respectively. For m = 2, we draw n1 = 40 and n2 = 35 (n = n1 + n2) samples from the multivariate normal populations Np(μ1, Λ1) and Np(μ2, Λ2). Here μ2 = μ1 + Ω with Ω = 0, 1,…, 10, so that μ1 = μ2 for Ω = 0 and μ1 ≠ μ2 otherwise, where the scalar Ω is the common difference between corresponding components of μ1 and μ2. Similarly, for m = 3, we draw n1 = 30, n2 = 30, and n3 = 30 (n = n1 + n2 + n3) samples from the multivariate normal populations Np(μ1, Λ1), Np(μ2, Λ2), and Np(μ3, Λ3), which have different means and a common covariance matrix. In this case, μk+1 = μk + Ω for k = 1, 2 with Ω = 0, 1,…, 10, so that μ1 = μ2 = μ3 for Ω = 0 and the three mean vectors differ otherwise. Here Ω is the common difference among corresponding components of μ1, μ2, and μ3.

3.4. Head and Neck Cancer Gene Expression Dataset

To demonstrate the performance of the proposed β-NBC on real gene expression data, we compare it with four popular classifiers (KNN, NBC, SVM, and AdaBoost) on the head and neck cancer (HNC) gene expression dataset from previous work [33]. The term head and neck cancer denotes a group of biologically similar cancers originating in the upper aerodigestive tract. This region includes the lip, oral cavity (mouth), nasal cavity, pharynx, larynx, and paranasal sinuses. This microarray gene expression dataset contains 12,626 genes, of which 594 are differentially expressed and the rest are equally expressed.

4. Simulation and Real Data Analysis Results

4.1. Simulation Results of Dataset 1

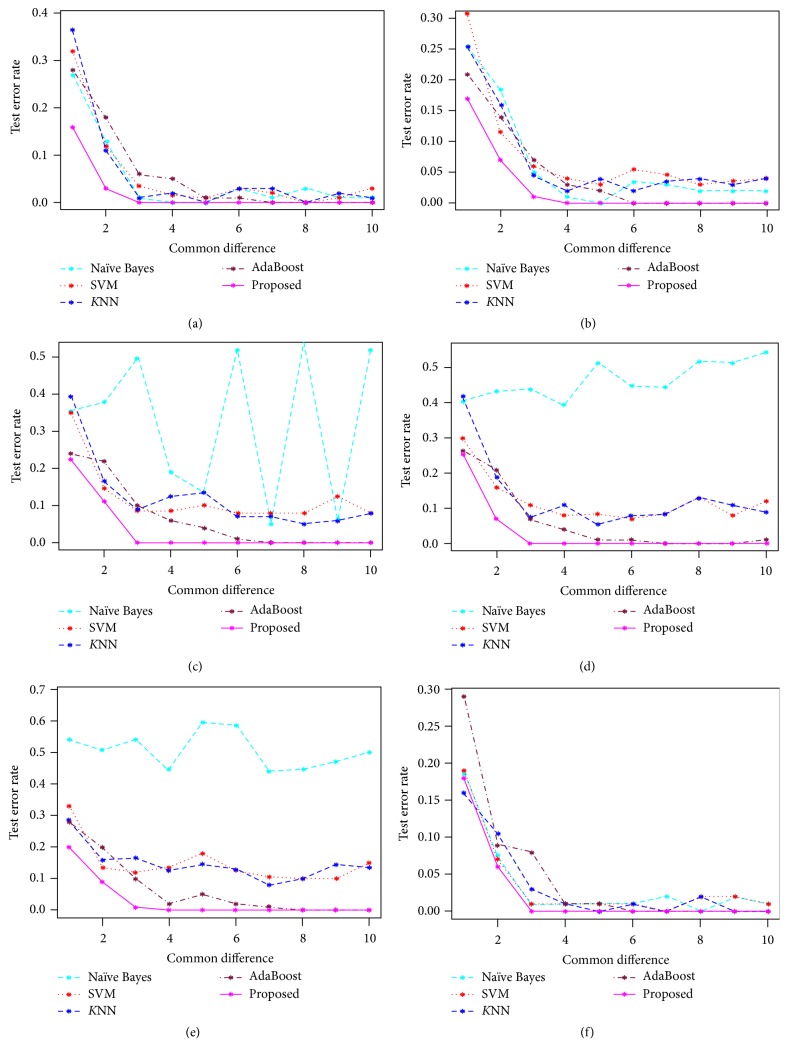

We used simulated dataset 1 to compare the performance of the proposed method with that of other popular classifiers: the classical NBC, SVM, KNN, and AdaBoost. Figures 1(a)–1(f) show the test error rates of these five classifiers against the common mean difference. Panels (a)–(e) correspond to 5%, 10%, 15%, 20%, and 25% outliers in the test dataset, respectively, and panel (f) to the absence of outliers (original dataset). Figure 1(f) shows that in the absence of outliers every method produces almost the same result. In the presence of outliers (Figures 1(a)–1(e)), the proposed method outperforms the other methods by producing lower test error rates. Table 2 summarizes several performance measures for the five methods (NBC, KNN, SVM, AdaBoost, and proposed): accuracy, sensitivity, specificity, positive predictive value (PPV), negative predictive value (NPV), prevalence, detection rate, detection prevalence, Matthews correlation coefficient (MCC), and misclassification error rate (MER).
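The measures listed above can be computed from the 2 × 2 confusion matrix. The sketch below uses the standard definitions, treating class 1 as the positive class. Whether Table 2 was computed exactly this way is our assumption, and the counts in the usage lines are hypothetical.

```python
import math

def performance_measures(tp, fp, fn, tn):
    """Binary performance measures of Table 2 from confusion-matrix counts."""
    n = tp + fp + fn + tn
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "accuracy": (tp + tn) / n,
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "PPV": tp / (tp + fp),
        "NPV": tn / (tn + fn),
        "prevalence": (tp + fn) / n,
        "detection rate": tp / n,
        "detection prevalence": (tp + fp) / n,
        "MCC": (tp * tn - fp * fn) / mcc_den if mcc_den > 0 else 0.0,
        "MER": (fp + fn) / n,
    }

# hypothetical counts for a test set of 82 samples
m = performance_measures(tp=38, fp=3, fn=2, tn=39)
for name, value in m.items():
    print(f"{name}: {value:.2f}")
```

Accuracy and MER always sum to 1, which is a quick consistency check for any reported pair of these two measures.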

Figure 1.

Misclassification error rate at different outlier levels: (a) 5% contamination, (b) 10% contamination, (c) 15% contamination, (d) 20% contamination, (e) 25% contamination, and (f) no contamination of the test dataset, for simulated dataset 1.

Table 2.

Performance evaluation by different methods based on simulated dataset 1.

| Performance measure | NBC | SVM | KNN | AdaBoost | Proposed | p value |
|---|---|---|---|---|---|---|
| Accuracy | 0.55 | 0.84 | 0.86 | 0.82 | 0.97 | 0.00 |
| 95% CI of accuracy | (0.45, 0.65) | (0.75, 0.90) | (0.77, 0.92) | (0.73, 0.89) | (0.91, 0.99) | — |
| Sensitivity | 0.54 | 0.78 | 0.79 | 0.90 | 0.95 | 0.00 |
| Specificity | 0.62 | 0.94 | 0.97 | 0.76 | 0.94 | 0.00 |
| PPV | 0.88 | 0.96 | 0.98 | 0.73 | 0.94 | 0.00 |
| NPV | 0.20 | 0.71 | 0.73 | 0.91 | 0.94 | 0.00 |
| Prevalence | 0.84 | 0.63 | 0.63 | 0.41 | 0.40 | 0.00 |
| Detection rate | 0.45 | 0.49 | 0.50 | 0.37 | 0.48 | 0.00 |
| Detection prevalence | 0.51 | 0.51 | 0.51 | 0.51 | 0.51 | — |
| MCC | 0.12 | 0.70 | 0.74 | 0.65 | 0.94 | 0.00 |
| MER | 0.49 | 0.18 | 0.17 | 0.08 | 0.03 | 0.03 |

Table 2 shows that, overall, the proposed method performs better than the other classifiers (NBC, SVM, KNN, and AdaBoost). It has the highest accuracy (0.97), sensitivity (0.95), NPV (0.94), and MCC (0.94) and the lowest MER (0.03). Its specificity (0.94) and PPV (0.94) are close to the highest values (0.97 and 0.98, both obtained by KNN). The proportion test statistic [34] was used to test the equality of the proportions produced by the five classifiers for each performance measure, and column 7 of Table 2 gives the resulting p values. All p values except that of MER are less than 0.01, so these differences are highly statistically significant. The MER difference (p value < 0.05) is significant at the 5% level. We therefore conclude from simulated dataset 1 that the proposed method performs better than the other methods on the contaminated dataset. In the absence of outliers (original dataset), its performance is about the same as theirs.

4.2. Simulation Results of Dataset 2

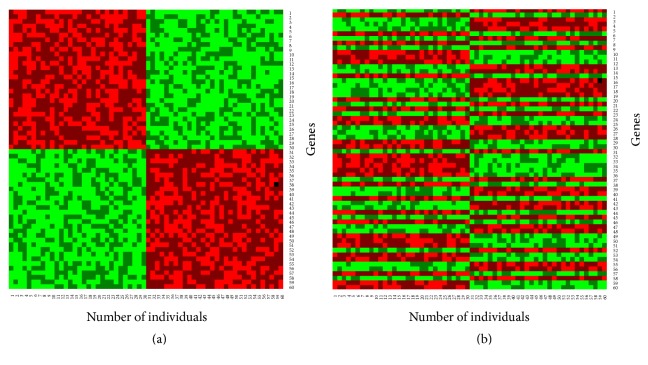

To compare the proposed β-NBC with the classical NBC for classifying objects into two groups, we used simulated dataset 2. Figures 2(a) and 2(b) show the training and test datasets, respectively, in the absence of outliers, and the genes are randomly allocated in the test dataset. Figures 3(a) and 3(b) show the classification results for the test dataset obtained by the classical NBC and the proposed β-NBC, respectively.

Figure 2.

Simulation dataset 2 using data generating model (given in Table 1): (a) training gene-set and (b) test gene-set, without contamination.

Figure 3.

Classification results using (a) classical NBC and (b) proposed (β-NBC) method for the case without contamination based on the simulated dataset 2.

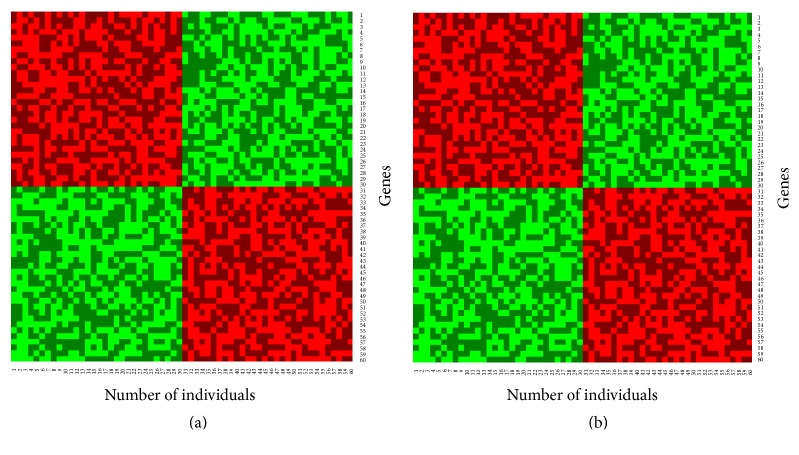

In the absence of outliers, the classical NBC and the proposed method produce almost the same results, with low misclassification error rates. To investigate the robustness of the proposed method in comparison with the conventional NBC, we randomly contaminated 30% of the genes in the test gene-set with outliers (Figures 4(a)–4(c)).

Figure 4.

Classification results for the contaminated data: (a) contaminated test gene-set, (b) classified test gene-set by NBC, and (c) classified test gene-set by proposed method.

We then classified the samples in the contaminated test gene-set (Figure 4(a)) by the classical NBC and by the proposed method and calculated their misclassification error rates. The classical NBC fails to classify the samples correctly (Figure 4(b)), with a misclassification error rate of 34%. The proposed method (Figure 4(c)) classifies them well, with a misclassification error rate of approximately 5% on the test gene-set.

4.3. Simulation Results of Dataset 3

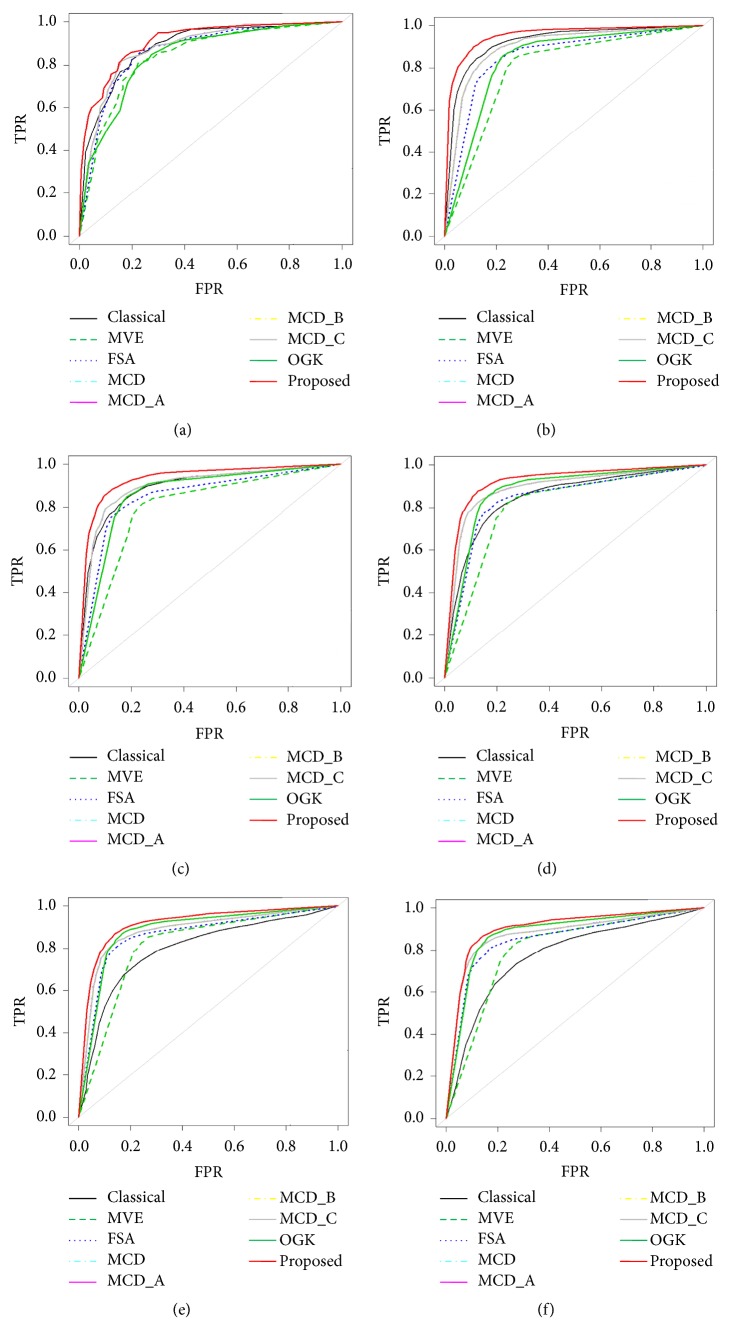

We also compared the proposed robust NBC with the classical NBC and with robust linear classifiers based on the MVE, FSA, MCD, MCD-A, MCD-B, MCD-C, and OGK estimators of the mean vectors and covariance matrices. To measure the performance of all classifiers, we computed the averages of the true positive rate (TPR), false positive rate (FPR), area under the ROC curve (AUC), and partial AUC (pAUC) over 50 replications of the dataset. A method is considered better than the others if it produces larger values of TPR, AUC, and pAUC and smaller values of FPR and MER.
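AUC and pAUC can be computed from an empirical ROC curve, as in the following sketch. It is a minimal illustration with hypothetical scores, not the authors' code. The pAUC here is the raw area over FPR ∈ [0, 0.2], so it is at most 0.2, which is consistent with the pAUC magnitudes in Tables 3 and 4. By contrast, scikit-learn's `roc_auc_score(..., max_fpr=0.2)` returns the McClish-standardized pAUC, which lies on a different scale.

```python
import numpy as np

def roc_points(scores, labels):
    """Empirical ROC curve: (FPR, TPR) at every distinct score threshold, from (0, 0) to (1, 1)."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    n_pos, n_neg = labels.sum(), (~labels).sum()
    fpr, tpr = [0.0], [0.0]
    for t in np.unique(scores)[::-1]:            # thresholds from high to low
        predicted = scores >= t
        tpr.append(np.sum(predicted & labels) / n_pos)
        fpr.append(np.sum(predicted & ~labels) / n_neg)
    return np.array(fpr), np.array(tpr)

def auc_and_pauc(scores, labels, max_fpr=0.2):
    """Trapezoidal AUC and raw partial AUC over FPR in [0, max_fpr]."""
    fpr, tpr = roc_points(scores, labels)
    auc = float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2))
    keep = fpr <= max_fpr                         # a prefix, since fpr is non-decreasing
    f, t = fpr[keep], tpr[keep]
    if f[-1] < max_fpr:                           # cut the next segment at max_fpr
        j = int(np.argmax(fpr > max_fpr))
        i = j - 1
        slope = (tpr[j] - tpr[i]) / (fpr[j] - fpr[i])
        f = np.append(f, max_fpr)
        t = np.append(t, tpr[i] + slope * (max_fpr - fpr[i]))
    pauc = float(np.sum(np.diff(f) * (t[1:] + t[:-1]) / 2))
    return auc, pauc

rng = np.random.default_rng(5)
labels = np.r_[np.ones(50, bool), np.zeros(50, bool)]
scores = np.r_[rng.normal(1.0, 1.0, 50), rng.normal(0.0, 1.0, 50)]   # hypothetical classifier scores
print(auc_and_pauc(scores, labels))
```

Averaging these two quantities over 50 replications would give entries of the kind reported in Tables 3 and 4.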

Table 3 shows the average values of AUC and pAUC (at FPR = 0.2) over 50 replications of simulated dataset 3 with p = 15 for two-class classification. These values were estimated for nine classifiers (classical, FSA, MCD, MVE, MCD-A, MCD-B, MCD-C, OGK, and proposed) in the absence and presence of outliers. In the absence of outliers, all classifiers produce similar results. In the presence of outliers at every level (5%, 10%, 15%, 20%, and 25%), the proposed classifier produces larger average AUC and pAUC than the classical NBC and the classifiers based on the other robust estimators. The MCD, MCD-A, MCD-B, and MCD-C classifiers give identical results at each contamination level, and their results change only across contamination levels. The ROC curves in Figures 5(a)–5(f) support these results, so we conclude that the proposed method outperforms the others.

Table 3.

Performance evaluation of different methods using average values and standard errors of AUC and pAUC for two-class classification based on simulated dataset 3.

| Estimator | Average AUC (test) | SE of AUC (test) | Average pAUC (test) | SE of pAUC (test) |
|---|---|---|---|---|
| Without outliers | | | | |
| Classical | 0.88 | 0.01 | 0.11 | 0.01 |
| MVE | 0.84 | 0.04 | 0.10 | 0.03 |
| FSA | 0.86 | 0.04 | 0.11 | 0.03 |
| MCD | 0.88 | 0.04 | 0.12 | 0.02 |
| MCD-A | 0.88 | 0.04 | 0.12 | 0.02 |
| MCD-B | 0.88 | 0.04 | 0.12 | 0.02 |
| MCD-C | 0.88 | 0.04 | 0.12 | 0.02 |
| OGK | 0.85 | 0.05 | 0.10 | 0.02 |
| Proposed | 0.91 | 0.01 | 0.13 | 0.02 |
| 5% outliers | | | | |
| Classical | 0.92 | 0.03 | 0.14 | 0.01 |
| MVE | 0.79 | 0.07 | 0.04 | 0.04 |
| FSA | 0.85 | 0.06 | 0.10 | 0.05 |
| MCD | 0.90 | 0.06 | 0.13 | 0.04 |
| MCD-A | 0.90 | 0.06 | 0.13 | 0.04 |
| MCD-B | 0.90 | 0.06 | 0.13 | 0.04 |
| MCD-C | 0.90 | 0.06 | 0.13 | 0.04 |
| OGK | 0.84 | 0.04 | 0.07 | 0.04 |
| Proposed | 0.95 | 0.02 | 0.16 | 0.01 |
| 10% outliers | | | | |
| Classical | 0.89 | 0.03 | 0.13 | 0.02 |
| MVE | 0.80 | 0.05 | 0.05 | 0.05 |
| FSA | 0.85 | 0.06 | 0.11 | 0.04 |
| MCD | 0.90 | 0.04 | 0.13 | 0.02 |
| MCD-A | 0.90 | 0.04 | 0.13 | 0.02 |
| MCD-B | 0.90 | 0.04 | 0.13 | 0.02 |
| MCD-C | 0.90 | 0.04 | 0.13 | 0.02 |
| OGK | 0.86 | 0.05 | 0.10 | 0.05 |
| Proposed | 0.93 | 0.02 | 0.15 | 0.01 |
| 15% outliers | | | | |
| Classical | 0.85 | 0.05 | 0.11 | 0.03 |
| MVE | 0.81 | 0.05 | 0.06 | 0.05 |
| FSA | 0.84 | 0.06 | 0.10 | 0.04 |
| MCD | 0.89 | 0.05 | 0.13 | 0.03 |
| MCD-A | 0.89 | 0.05 | 0.13 | 0.03 |
| MCD-B | 0.89 | 0.05 | 0.13 | 0.03 |
| MCD-C | 0.89 | 0.05 | 0.13 | 0.03 |
| OGK | 0.88 | 0.05 | 0.10 | 0.05 |
| Proposed | 0.92 | 0.03 | 0.14 | 0.02 |
| 20% outliers | | | | |
| Classical | 0.92 | 0.02 | 0.14 | 0.01 |
| MVE | 0.75 | 0.05 | 0.016 | 0.03 |
| FSA | 0.81 | 0.06 | 0.06 | 0.06 |
| MCD | 0.87 | 0.04 | 0.12 | 0.02 |
| MCD-A | 0.87 | 0.04 | 0.12 | 0.02 |
| MCD-B | 0.87 | 0.04 | 0.12 | 0.02 |
| MCD-C | 0.87 | 0.04 | 0.12 | 0.02 |
| OGK | 0.78 | 0.05 | 0.02 | 0.05 |
| Proposed | 0.95 | 0.01 | 0.17 | 0.00 |
| 25% outliers | | | | |
| Classical | 0.81 | 0.07 | 0.09 | 0.03 |
| MVE | 0.72 | 0.08 | 0.04 | 0.04 |
| FSA | 0.83 | 0.07 | 0.08 | 0.05 |
| MCD | 0.86 | 0.07 | 0.11 | 0.05 |
| MCD-A | 0.86 | 0.07 | 0.11 | 0.05 |
| MCD-B | 0.86 | 0.07 | 0.11 | 0.05 |
| MCD-C | 0.86 | 0.07 | 0.11 | 0.05 |
| OGK | 0.87 | 0.04 | 0.11 | 0.043 |
| Proposed | 0.92 | 0.03 | 0.14 | 0.03 |

Figure 5.

ROC curves for two-class classification by the different estimators at different percentages of outliers: (a) absence of outliers, (b) 5% outliers, (c) 10% outliers, (d) 15% outliers, (e) 20% outliers, and (f) 25% outliers.

We also compared the proposed method with the other methods (classical, FSA, MCD, MVE, MCD-A, MCD-B, MCD-C, and OGK) on a three-class classification problem. For this purpose, we generated 50 replications of simulated dataset 3 with p = 5 variables and estimated the performance measures for each method. Table 4 shows the averages and standard errors of AUC and pAUC (at FPR = 0.2) for three-class classification. In the presence of outliers, the proposed robust NBC gives the largest average AUC at the 5%, 10%, 15%, and 20% contamination levels, and its average pAUC is equal or close to the best competing value. At 25% contamination, the OGK-based classifier attains a larger average AUC than the proposed method (0.85 versus 0.82). The proposed method also has among the smallest standard errors of AUC and pAUC and shows lower MER values. The classifiers based on the different MCD estimators give identical results at each contamination level, and their results change only across contamination levels.

Table 4.

Performance evaluation of different methods using average values and standard errors of AUC and pAUC on simulated dataset 3 for three-class classification.

| Estimator | Average AUC (test) | SE of AUC (test) | Average pAUC (test) | SE of pAUC (test) |
|---|---|---|---|---|
| No outliers | | | | |
| Classical | 0.89 | 0.03 | 0.13 | 0.02 |
| MVE | 0.84 | 0.05 | 0.10 | 0.02 |
| FSA | 0.88 | 0.04 | 0.12 | 0.02 |
| MCD | 0.89 | 0.04 | 0.13 | 0.02 |
| MCD-A | 0.89 | 0.04 | 0.13 | 0.02 |
| MCD-B | 0.89 | 0.04 | 0.13 | 0.02 |
| MCD-C | 0.89 | 0.04 | 0.13 | 0.02 |
| OGK | 0.86 | 0.05 | 0.11 | 0.02 |
| Proposed | 0.90 | 0.03 | 0.13 | 0.02 |
| 5% outliers | | | | |
| Classical | 0.84 | 0.05 | 0.10 | 0.02 |
| MVE | 0.82 | 0.05 | 0.08 | 0.03 |
| FSA | 0.86 | 0.05 | 0.11 | 0.02 |
| MCD | 0.87 | 0.04 | 0.12 | 0.02 |
| MCD-A | 0.87 | 0.04 | 0.12 | 0.02 |
| MCD-B | 0.87 | 0.04 | 0.12 | 0.02 |
| MCD-C | 0.87 | 0.04 | 0.12 | 0.02 |
| OGK | 0.85 | 0.05 | 0.10 | 0.03 |
| Proposed | 0.88 | 0.03 | 0.12 | 0.01 |
| 10% outliers | | | | |
| Classical | 0.77 | 0.07 | 0.07 | 0.02 |
| MVE | 0.82 | 0.05 | 0.09 | 0.03 |
| FSA | 0.85 | 0.04 | 0.11 | 0.02 |
| MCD | 0.86 | 0.04 | 0.12 | 0.02 |
| MCD-A | 0.86 | 0.04 | 0.12 | 0.02 |
| MCD-B | 0.86 | 0.04 | 0.12 | 0.02 |
| MCD-C | 0.86 | 0.04 | 0.12 | 0.02 |
| OGK | 0.84 | 0.05 | 0.10 | 0.03 |
| Proposed | 0.87 | 0.04 | 0.12 | 0.02 |
| 15% outliers | | | | |
| Classical | 0.76 | 0.07 | 0.07 | 0.03 |
| MVE | 0.82 | 0.05 | 0.09 | 0.03 |
| FSA | 0.83 | 0.05 | 0.11 | 0.02 |
| MCD | 0.85 | 0.05 | 0.12 | 0.02 |
| MCD-A | 0.85 | 0.05 | 0.12 | 0.02 |
| MCD-B | 0.85 | 0.05 | 0.12 | 0.02 |
| MCD-C | 0.85 | 0.05 | 0.12 | 0.02 |
| OGK | 0.85 | 0.05 | 0.11 | 0.03 |
| Proposed | 0.86 | 0.04 | 0.11 | 0.02 |
| 20% outliers | | | | |
| Classical | 0.67 | 0.10 | 0.05 | 0.03 |
| MVE | 0.80 | 0.05 | 0.08 | 0.03 |
| FSA | 0.79 | 0.03 | 0.10 | 0.01 |
| MCD | 0.79 | 0.03 | 0.09 | 0.01 |
| MCD-A | 0.79 | 0.03 | 0.09 | 0.01 |
| MCD-B | 0.79 | 0.03 | 0.09 | 0.01 |
| MCD-C | 0.79 | 0.03 | 0.09 | 0.01 |
| OGK | 0.82 | 0.05 | 0.09 | 0.02 |
| Proposed | 0.84 | 0.03 | 0.10 | 0.01 |
| 25% outliers | | | | |
| Classical | 0.72 | 0.08 | 0.05 | 0.03 |
| MVE | 0.82 | 0.06 | 0.08 | 0.04 |
| FSA | 0.81 | 0.07 | 0.10 | 0.03 |
| MCD | 0.81 | 0.07 | 0.10 | 0.03 |
| MCD-A | 0.81 | 0.07 | 0.10 | 0.03 |
| MCD-B | 0.81 | 0.07 | 0.10 | 0.03 |
| MCD-C | 0.81 | 0.07 | 0.10 | 0.03 |
| OGK | 0.85 | 0.05 | 0.10 | 0.03 |
| Proposed | 0.82 | 0.05 | 0.10 | 0.02 |

4.4. Head and Neck Cancer Gene Expression Data Analysis

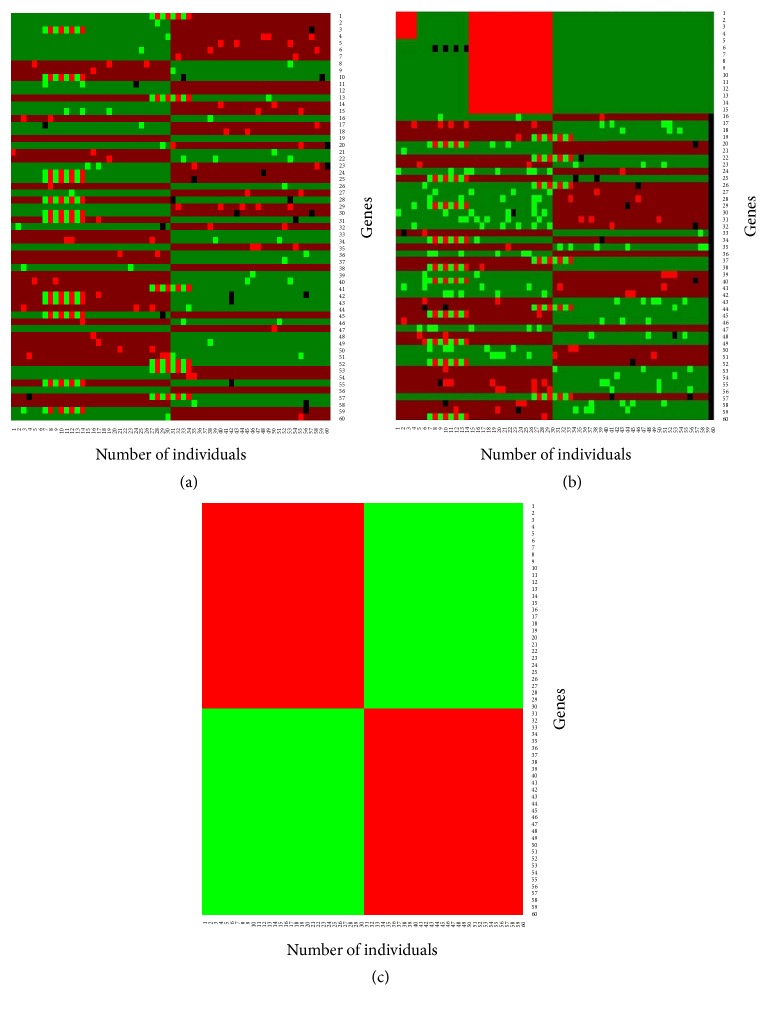

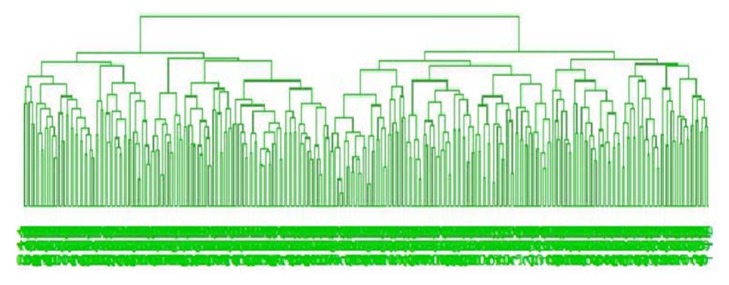

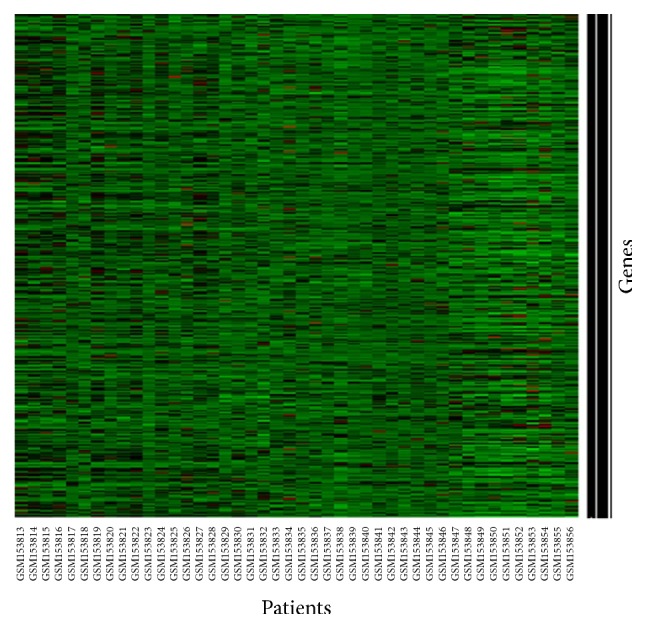

We also investigated the performance of the proposed method on a real microarray gene expression dataset, the normalized head and neck cancer (HNC) dataset [33]. RNA samples were extracted from 22 normal and 22 cancer tissues and processed on Affymetrix GeneChips, yielding quantified CEL files. The CEL files were preprocessed by Robust Multi-array Average (RMA) and quantile normalization. The HNC dataset contains 12,642 probe sets, 44 samples, and 42 significantly differentially expressed probe sets, and its preprocessing is discussed in detail in [33]. Using the bridge R package [35], we first selected as differentially expressed (DE) the genes whose posterior probability exceeds 0.9. The remaining genes were treated as equally expressed (EE). This gives 594 DE genes out of 12,626 genes (Figure 6). The Anderson-Darling (A-D) normality test [36, 37] shows that only a small fraction (5%) of the DE genes violate the normality assumption at the 1% level of significance in the normal and cancer groups. We also checked the independence assumption for the DE genes using mutual information [38]. The mutual information for the HNC dataset is 0.044 for both the normal and cancer groups, which is close to zero, so the DE genes approximately satisfy the independence assumption. We may therefore assume that the HNC dataset approximately satisfies the normality and independence assumptions of the NBC within each class/group.
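The independence check above relies on mutual information [38]. The estimator used for the HNC data is not specified here, so the sketch below assumes a simple plug-in estimate from a two-dimensional histogram. The result is in nats, and the choice of 5 bins per axis is arbitrary. This estimator is biased slightly upward, so independent genes give small positive values rather than exactly zero.

```python
import numpy as np

def mutual_information(x, y, bins=5):
    """Plug-in mutual information (nats) between two expression profiles."""
    joint, _, _ = np.histogram2d(x, y, bins=bins)
    pxy = joint / joint.sum()                 # joint cell probabilities
    px = pxy.sum(axis=1, keepdims=True)       # marginal of x, shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)       # marginal of y, shape (1, bins)
    nz = pxy > 0                              # empty cells contribute 0
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / (px * py)[nz])))

rng = np.random.default_rng(4)
g1, g2 = rng.normal(size=2000), rng.normal(size=2000)
print(mutual_information(g1, g2))                                  # near 0 (independent genes)
print(mutual_information(g1, g1 + 0.3 * rng.normal(size=2000)))    # clearly positive (dependent genes)
```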

Figure 6.

Differentially expressed genes in the head and neck cancer dataset, identified using bridge.

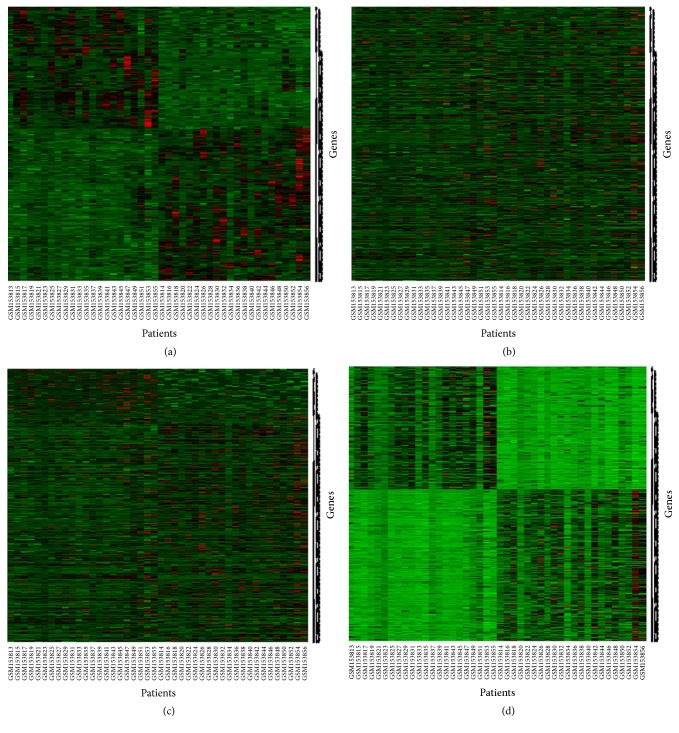

For the classification problem, we used half of the DE genes (594/2 = 297) as the training gene-set and identified their groups by hierarchical clustering (HC). Figure 7 shows the HC dendrogram of these training genes. The remaining 297 DE genes were used as the test gene-set. We then applied both the classical NBC and the robust β-NBC to this dataset to classify the cancer genes (Figures 8(a)–8(d)). Figure 8 shows that the traditional naïve Bayes procedure cannot recover the gene groups properly, whereas the proposed β-NBC identifies them better: the classification produced by the proposed classifier (Figure 8(d)) is more accurate than that of the classical method (Figure 8(c)).
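The classification step can be sketched as below. This is a hedged Python illustration, not the supplementary R implementation. It assumes the fixed-point form of the minimum β-divergence Gaussian estimators from the β-divergence literature [16–18] (a β-weighted mean and a β-weighted variance inflated by 1 + β), a median/MAD initialization, and a hypothetical test-data rule that flags a component lying more than `cutoff` standard deviations from every class mean and replaces it with the average of the class means. Thresholding a component's β-weight exp(−βz²/2) is equivalent to thresholding |z|, so the sketch uses |z| directly. All names (`beta_gaussian_fit`, `BetaNaiveBayes`, `modify_test`, `cutoff`) are illustrative; Section 2 of the paper gives the exact procedure.

```python
import numpy as np


def beta_gaussian_fit(x, beta, n_iter=200, tol=1e-10):
    """Robust mean and variance of one feature by beta-weighted fixed-point
    updates (assumed form). With beta = 0 every weight is 1, giving the
    sample mean and the maximum-likelihood variance."""
    mu = float(np.median(x))                                  # robust start
    var = max((1.4826 * np.median(np.abs(x - mu))) ** 2, 1e-12)
    for _ in range(n_iter):
        w = np.exp(-0.5 * beta * (x - mu) ** 2 / var)         # beta-weights
        mu_new = float(np.sum(w * x) / np.sum(w))
        var_new = (1.0 + beta) * float(np.sum(w * (x - mu_new) ** 2) / np.sum(w))
        var_new = max(var_new, 1e-12)
        converged = abs(mu_new - mu) < tol and abs(var_new - var) < tol
        mu, var = mu_new, var_new
        if converged:
            break
    return mu, var


class BetaNaiveBayes:
    """Gaussian naive Bayes with beta-weighted class means and variances."""

    def __init__(self, beta=0.2):
        self.beta = beta

    def fit(self, X, y):
        self.classes_ = np.unique(y)
        K, p = len(self.classes_), X.shape[1]
        self.mu_, self.var_ = np.zeros((K, p)), np.zeros((K, p))
        self.log_prior_ = np.zeros(K)
        for k, c in enumerate(self.classes_):
            Xc = X[y == c]
            self.log_prior_[k] = np.log(len(Xc) / len(X))
            for j in range(p):
                self.mu_[k, j], self.var_[k, j] = beta_gaussian_fit(Xc[:, j], self.beta)
        return self

    def modify_test(self, X, cutoff=3.0):
        """Hypothetical outlier step: flag a component lying more than `cutoff`
        SDs from every class mean; replace it by the average class mean."""
        z = np.abs(X[:, None, :] - self.mu_[None]) / np.sqrt(self.var_[None])
        flagged = np.all(z > cutoff, axis=1)                  # shape (n, p)
        fill = np.broadcast_to(self.mu_.mean(axis=0), X.shape)
        X_mod = X.astype(float).copy()
        X_mod[flagged] = fill[flagged]
        return X_mod, flagged

    def predict(self, X):
        # log prior + sum of independent univariate Gaussian log-densities
        d2 = (X[:, None, :] - self.mu_[None]) ** 2 / self.var_[None]
        loglik = -0.5 * (np.log(2 * np.pi * self.var_)[None] + d2).sum(axis=2)
        return self.classes_[np.argmax(loglik + self.log_prior_, axis=1)]


# Simulated two-class data (20 features) with outliers in training and test sets.
rng = np.random.default_rng(1)
p = 20
X_train = np.vstack([rng.normal(0.0, 1.0, (30, p)), rng.normal(1.0, 1.0, (30, p))])
y_train = np.repeat([0, 1], 30)
X_train[:6] += 15.0                      # contaminate 6 of the 30 class-0 rows
X_test = np.vstack([rng.normal(0.0, 1.0, (50, p)), rng.normal(1.0, 1.0, (50, p))])
y_test = np.repeat([0, 1], 50)
X_test[::10, :3] = 25.0                  # corrupt a few test components

nb = BetaNaiveBayes(beta=0.0).fit(X_train, y_train)     # classical NBC
bnb = BetaNaiveBayes(beta=0.2).fit(X_train, y_train)
X_mod, _ = bnb.modify_test(X_test)
acc_nb = np.mean(nb.predict(X_test) == y_test)
acc_beta = np.mean(bnb.predict(X_mod) == y_test)
print(acc_nb, acc_beta)
```

With `beta=0.0` the fitted parameters are the ordinary class sample means and maximum-likelihood variances, so the model is the classical Gaussian NBC. The median/MAD start is a design choice that keeps the fixed-point iteration near the bulk of the data; starting from the sample mean can let heavy contamination pull the iteration toward the outliers.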

Figure 7.

HC dendrogram of the first half of the DE genes (training gene-set).

Figure 8.

(a) Training gene data set; (b) test gene data set; (c) classification of gene data set by classical naïve Bayes procedure; (d) classification of gene data set by proposed (β-naïve Bayes) method.

We also computed several performance measures, namely accuracy, sensitivity, specificity, positive predictive value (PPV), negative predictive value (NPV), prevalence, detection rate, detection prevalence, balanced accuracy, Matthews correlation coefficient (MCC), and misclassification error rate (MER), for the five classification methods (NBC, KNN, SVM, AdaBoost, and the proposed β-NBC) on the HNC dataset (Table 5). Table 5 shows that the proposed classifier gives better results overall than the other classifiers (NBC, SVM, KNN, and AdaBoost). The proportion test [34] gives p values < 0.01 for the tested performance measures other than MCC and MER, so these differences are highly statistically significant; for MCC and MER the p values are 0.04 and 0.05, respectively, i.e., significant at about the 5% level. Hence, the proposed method performs better than the classical NBC and the other classifiers on the real HNC data. This dataset is also known to be contaminated by outliers [31], which is why we chose it to compare the proposed method with popular existing classifiers; the proposed method outperformed the others on it.
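The measures listed above follow standard confusion-matrix definitions. The Python sketch below computes them for a hypothetical 2 × 2 table (the counts are made up for illustration, with "positive" meaning the cancer class). The paper does not state how the 95% CI of accuracy was obtained, so the Clopper-Pearson exact binomial interval used here is an assumption; `binary_metrics` is an illustrative name.

```python
import math
from scipy import stats


def binary_metrics(tp, fn, tn, fp, conf=0.95):
    """Performance measures from a 2x2 confusion matrix."""
    n = tp + fn + tn + fp
    acc = (tp + tn) / n
    sens = tp / (tp + fn)                    # true positive rate
    spec = tn / (tn + fp)                    # true negative rate
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # Clopper-Pearson (exact binomial) interval for accuracy (assumed choice)
    k, a = tp + tn, 1.0 - conf
    lo = 0.0 if k == 0 else stats.beta.ppf(a / 2, k, n - k + 1)
    hi = 1.0 if k == n else stats.beta.ppf(1 - a / 2, k + 1, n - k)
    return {
        "accuracy": acc,
        "accuracy_ci": (float(lo), float(hi)),
        "sensitivity": sens,
        "specificity": spec,
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "prevalence": (tp + fn) / n,
        "detection_rate": tp / n,
        "detection_prevalence": (tp + fp) / n,
        "balanced_accuracy": (sens + spec) / 2,
        "mcc": (tp * tn - fp * fn) / mcc_den if mcc_den > 0 else 0.0,
        "mer": 1.0 - acc,                    # misclassification error rate
    }


m = binary_metrics(tp=40, fn=10, tn=30, fp=20)   # hypothetical counts
for name, value in m.items():
    print(name, value)
```

Note that MER is the complement of accuracy and balanced accuracy is the mean of sensitivity and specificity, so these identities provide a quick consistency check on any reported table of measures.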

Table 5.

Performance investigation using head and neck cancer data.

| Performance measure | NBC | SVM | KNN | AdaBoost | Proposed | p value |
|---|---|---|---|---|---|---|
| Accuracy | 0.46 | 0.74 | 0.73 | 0.73 | 0.76 | 0.00 |
| 95% CI of accuracy | (0.35, 0.56) | (0.63, 0.81) | (0.63, 0.81) | (0.63, 0.81) | (0.75, 0.79) | — |
| Sensitivity | 0.46 | 0.79 | 0.84 | 0.79 | 0.83 | 0.00 |
| Specificity | 0.44 | 0.61 | 0.67 | 0.68 | 0.77 | 0.00 |
| PPV | 0.62 | 0.59 | 0.56 | 0.62 | 0.86 | 0.00 |
| NPV | 0.30 | 0.79 | 0.90 | 0.84 | 0.87 | 0.00 |
| Prevalence | 0.66 | 0.36 | 0.33 | 0.39 | 0.43 | 0.00 |
| Detection rate | 0.31 | 0.28 | 0.28 | 0.31 | 0.43 | 0.00 |
| Detection prevalence | 0.50 | 0.50 | 0.50 | 0.50 | 0.50 | — |
| Balanced accuracy | 0.45 | 0.71 | 0.76 | 0.74 | 0.93 | 0.00 |
| MCC | −0.08 | 0.48 | 0.47 | 0.86 | 0.90 | 0.04 |
| MER | 0.50 | 0.27 | 0.25 | 0.08 | 0.00 | 0.05 |
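The text does not spell out the form of the proportion test used for the p values in Table 5. One common form, assumed in the Python sketch below, is the Pearson chi-square test that k classifiers share a common proportion (for example, accuracy). The success counts and the denominator of 100 test cases per method are hypothetical, chosen only to exercise the code; `equal_proportions_test` is an illustrative name.

```python
import numpy as np
from scipy import stats


def equal_proportions_test(successes, totals):
    """Chi-square test that k groups share one success proportion
    (no continuity correction). Returns (statistic, df, p_value)."""
    x = np.asarray(successes, dtype=float)
    n = np.asarray(totals, dtype=float)
    p_pool = x.sum() / n.sum()                   # pooled proportion under H0
    expected_var = n * p_pool * (1.0 - p_pool)
    stat = float(np.sum((x - n * p_pool) ** 2 / expected_var))
    df = len(x) - 1
    return stat, df, float(stats.chi2.sf(stat, df))


# Hypothetical correct-classification counts for five methods, 100 cases each.
x = np.array([45, 70, 72, 71, 80])
n = np.array([100, 100, 100, 100, 100])
print(equal_proportions_test(x, n))
```

The statistic equals Pearson's chi-square on the 2 × k table of successes and failures, so `scipy.stats.chi2_contingency(table, correction=False)` returns the same value and can serve as a cross-check.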

5. Discussion

In this paper, we robustified the Gaussian NBC using the minimum β-divergence method in two steps. In both the simulated and real data analyses, we first computed the mean vectors and diagonal covariance matrices of the Gaussian NBC by minimum β-divergence estimators from the training dataset. We then detected outlying test data vectors using the β-weight function and replaced the outlying components of each such vector by the corresponding components of the estimated mean vectors. The modified test vectors, together with the remaining (non-outlying) test vectors, were used as inputs to the proposed β-NBC for class prediction or pattern recognition. The performance of the proposed method depends on the tuning parameter β and on the initialization of the Gaussian parameters; we therefore described the initialization procedure and the cross-validation procedure for selecting β in Sections 2.3.2 and 2.3.3, respectively. The classifier reduces to the traditional Gaussian NBC when β → 0, hence the name β-NBC. We compared the robustness of β-NBC with several robust linear classifiers based on the MCD, MVE, and OGK estimators. For this comparison we used a small number of variables/genes (p) relative to the number of patients/samples (n) in the training dataset, because these robust classifiers also require inverting a covariance matrix, which is not possible when p is large and n is small. The proposed β-NBC outperformed these robust linear classifiers in the presence of outliers and performed about equally well otherwise.

We then compared the proposed method with popular classifiers that are widely used for gene expression data analysis, including the Support Vector Machine (SVM), K-Nearest Neighbors (KNN), and AdaBoost [27–29], using both simulated and real gene expression datasets. Again, the proposed method improved on the others in the presence of outliers and performed about equally well otherwise. The main advantages of the proposed classifier are that it works in both the (i) p < n and (ii) p > n settings and that it can resist up to 50% contamination (a 50% breakdown point). If the dataset does not satisfy the normality assumption, the proposed method may perform worse than the others in the absence of outliers. However, non-normal data can be transformed toward normality by a suitable transformation such as the Box-Cox transformation [39], after which the proposed method can again be used to handle outliers. The proposed method may also suffer when observations are correlated. In that case, the correlated observations can be transformed into uncorrelated ones using standard principal component analysis (PCA) or singular value decomposition (SVD) based PCA, after which the proposed method can be applied as before. In the present study, we compared the proposed classifier (β-NBC) with popular existing classifiers (NBC, KNN, SVM, and AdaBoost) and with robust linear classifiers (MCD, MVE, OGK, MCD-A, MCD-B, MCD-C, and FSA) using both simulated and real gene expression datasets, where the simulated datasets satisfied the normality and independence assumptions.

Gene expression datasets are often contaminated by outliers because of the many steps involved in data generation, from hybridization to image analysis. The proposed method is therefore well suited to gene expression data analysis.
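The two preprocessing remedies mentioned above, a Box-Cox transformation for non-normal variables and PCA for correlated variables, can be sketched with `scipy.stats.boxcox` and NumPy's SVD. This is an illustrative Python sketch, not part of the proposed method. It assumes strictly positive values for Box-Cox and an observations × variables layout, and the function names are hypothetical. PCA fitted on the pooled training data removes the pooled correlation; correlation within each class may remain.

```python
import numpy as np
from scipy import stats


def boxcox_columns(X):
    """Box-Cox transform each column of a strictly positive matrix.
    Returns the transformed matrix and the fitted lambda per column."""
    Xt = np.empty(X.shape, dtype=float)
    lambdas = np.empty(X.shape[1])
    for j in range(X.shape[1]):
        Xt[:, j], lambdas[j] = stats.boxcox(X[:, j])   # MLE of lambda
    return Xt, lambdas


def fit_pca(X_train):
    """SVD-based PCA: return the training mean and principal axes (rows)."""
    mean = X_train.mean(axis=0)
    _, _, Vt = np.linalg.svd(X_train - mean, full_matrices=False)
    return mean, Vt


def pca_scores(X, mean, Vt):
    """Project data (training or test) onto the training principal axes."""
    return (X - mean) @ Vt.T


rng = np.random.default_rng(2)
X_pos = rng.lognormal(mean=0.0, sigma=1.0, size=(500, 3))
X_bc, lam = boxcox_columns(X_pos)            # lambdas near 0 (a log transform)

cov = np.array([[1.0, 0.8, 0.3], [0.8, 1.0, 0.5], [0.3, 0.5, 1.0]])
X_corr = rng.multivariate_normal(np.zeros(3), cov, size=500)
mean, Vt = fit_pca(X_corr)
S = pca_scores(X_corr, mean, Vt)
R = np.corrcoef(S, rowvar=False)             # off-diagonal entries ~ 0
print(lam)
print(np.round(R, 6))
```

In a classification pipeline, the Box-Cox lambdas and the PCA mean and axes would be estimated on the training set only and then applied unchanged to the test set.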

6. Conclusion

Accurate sample class prediction or pattern recognition is one of the most important issues in MGED analysis. The naïve Bayes classifier is an important and widely used method for class prediction in bioinformatics. However, its classical estimates of the location and scale parameters are sensitive to outliers in MGED. To overcome this, we proposed β-NBC, which uses robust estimates of the location and scale parameters. In simulation studies 1 and 2, where the datasets were generated from multivariate and univariate normal distributions, respectively, β-NBC outperformed other popular classifiers in the presence of outliers and performed as well as the other classifiers in their absence. In simulation study 3, we compared the robustness of β-NBC with linear classifiers based on several popular robust estimators and observed that β-NBC outperformed these robust linear classifiers. Finally, on the real HNC dataset, β-NBC performed better than the other traditional classifiers. We therefore conclude that, in the presence of outliers, the proposed β-NBC outperforms the other methods on both simulated and real datasets.

Supplementary Material

The R source code for the robust naïve Bayes classifier (β-NBC) for robust classification of microarray gene expression data.

Acknowledgments

This study was supported by HEQEP Subproject (CP-3603, W2, R3), Department of Statistics, University of Rajshahi, Rajshahi-6205, Bangladesh.

Additional Points

Supplementary Materials. The source code, written in R, is available in the Supplementary Material online at https://doi.org/10.1155/2017/3020627.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

1. van 't Veer L. J., Dai H., van de Vijver M. J., et al. Gene expression profiling predicts clinical outcome of breast cancer. Nature. 2002;415(6871):530–536. doi: 10.1038/415530a.
2. Dam S., Võsa U., van der Graaf A., Franke L., de Magalhães J. P. Gene co-expression analysis for functional classification and gene–disease predictions. Briefings in Bioinformatics. 2017:bbw139. doi: 10.1093/bib/bbw139.
3. Yu X., Yu G., Wang J., Brock G. N. Clustering cancer gene expression data by projective clustering ensemble. PLOS ONE. 2017;12(2):e0171429. doi: 10.1371/journal.pone.0171429.
4. Singh R. K., Sivabalakrishnan M. Feature Selection of Gene Expression Data for Cancer Classification: A Review. Procedia Computer Science. 2015;50:52–57.
5. Novianti P. W., Jong V. L., Roes K. C., Eijkemans M. J. Meta-analysis approach as a gene selection method in class prediction: does it improve model performance? A case study in acute myeloid leukemia. BMC Bioinformatics. 2017;18(1). doi: 10.1186/s12859-017-1619-7.
6. Chen M., Li K., Li H., Song C., Miao Y. The Glutathione Peroxidase Gene Family in Gossypium hirsutum: Genome-Wide Identification, Classification, Gene Expression and Functional Analysis. Scientific Reports. 2017;7:44743. doi: 10.1038/srep44743.
7. Jong V. L., Novianti P. W., Roes K. C. B., Eijkemans M. J. C. Selecting a classification function for class prediction with gene expression data. Bioinformatics. 2016;32(12):1814–1822. doi: 10.1093/bioinformatics/btw034.
8. Buza K. Classification of gene expression data: a hubness-aware semi-supervised approach. Computer Methods and Programs in Biomedicine. 2016;127:105–113. doi: 10.1016/j.cmpb.2016.01.016.
9. Wang L., Oh W. K., Zhu J. Disease-specific classification using deconvoluted whole blood gene expression. Scientific Reports. 2016;6:32976. doi: 10.1038/srep32976.
10. Jiang L., Chen H., Pinello L., Yuan G.-C. GiniClust: Detecting rare cell types from single-cell gene expression data with Gini index. Genome Biology. 2016;17(1):144. doi: 10.1186/s13059-016-1010-4.
11. Golub T. R., Slonim D. K., Tamayo P., et al. Molecular classification of cancer: class discovery and class prediction by gene expression monitoring. Science. 1999;286(5439):531–537. doi: 10.1126/science.286.5439.531.

12. Soria D., Garibaldi J. M., Ambrogi F., Biganzoli E. M., Ellis I. O. A 'non-parametric' version of the naive Bayes classifier. Knowledge-Based Systems. 2011;24(6):775–784. doi: 10.1016/j.knosys.2011.02.014.
13. Wright G. W., Simon R. M. A random variance model for detection of differential gene expression in small microarray experiments. Bioinformatics. 2003;19(18):2448–2455. doi: 10.1093/bioinformatics/btg345.
14. Jiang L., Cai Z., Wang D., Zhang H. Improving Tree augmented Naive Bayes for class probability estimation. Knowledge-Based Systems. 2012;26:239–245. doi: 10.1016/j.knosys.2011.08.010.
15. Balamurugan A. A., Rajaram R., Pramala S., Rajalakshmi S., Jeyendran C., Dinesh Surya Prakash J. NB+: An improved Naïve Bayesian algorithm. Knowledge-Based Systems. 2011;24(5):563–569. doi: 10.1016/j.knosys.2010.09.007.
16. Mollah M. N. H., Minami M., Eguchi S. Exploring latent structure of mixture ICA models by the minimum β-divergence method. Neural Computation. 2006;18(1):166–190. doi: 10.1162/089976606774841549.
17. Mollah M. N. H., Eguchi S., Minami M. Robust prewhitening for ICA by minimizing β-divergence and its application to FastICA. Neural Processing Letters. 2007;25(2):91–110. doi: 10.1007/s11063-006-9023-8.
18. Mollah M. N. H., Sultana N., Minami M., Eguchi S. Robust extraction of local structures by the minimum β-divergence method. Neural Networks. 2010;23(2):226–238. doi: 10.1016/j.neunet.2009.11.011.
19. Randles R. H., Broffitt J. D., Ramberg J. S., Hogg R. V. Generalized linear and quadratic discriminant functions using robust estimates. Journal of the American Statistical Association. 1978;73(363):564–568. doi: 10.1080/01621459.1978.10480055.
20. Maronna R. A. Robust M-estimators of multivariate location and scatter. The Annals of Statistics. 1976;4(1):51–67. doi: 10.1214/aos/1176343347.
21. Todorov V., Neykov P. Robust selection of variables in the discriminant analysis based on the MVE and MCD estimators. Proceedings of Computational Statistics, COMPSTAT 1990; Heidelberg, Germany: Physica-Verlag.

22. Todorov V., Neykov N., Neytchev P. Robust two-group discrimination by bounded influence regression. A Monte Carlo simulation. Computational Statistics and Data Analysis. 1994;17(3):289–302. doi: 10.1016/0167-9473(94)90122-8.
23. Todorov V., Pires A. M. Comparative performance of several robust linear discriminant analysis methods. REVSTAT Statistical Journal. 2007;5(1):63–83.
24. He X., Fung W. K. High breakdown estimation for multiple populations with applications to discriminant analysis. Journal of Multivariate Analysis. 2000;72(2):151–162. doi: 10.1006/jmva.1999.1857.
25. Hubert M., Van Driessen K. Fast and robust discriminant analysis. Computational Statistics & Data Analysis. 2004;45(2):301–320. doi: 10.1016/S0167-9473(02)00299-2.
26. Hawkins D. M., McLachlan G. J. High-breakdown linear discriminant analysis. Journal of the American Statistical Association. 1997;92(437):136–143. doi: 10.2307/2291457.
27. Devi Arockia Vanitha C., Devaraj D., Venkatesulu M. Gene expression data classification using Support Vector Machine and mutual information-based gene selection. Procedia Computer Science. 2015;47:13–21. doi: 10.1016/j.procs.2015.03.178.
28. Parry R. M., Jones W., Stokes T. H., et al. K-Nearest neighbor models for microarray gene expression analysis and clinical outcome prediction. Pharmacogenomics Journal. 2010;10(4):292–309. doi: 10.1038/tpj.2010.56.
29. Long P. M., Vega V. B. Boosting and microarray data. Machine Learning. 2003;52(1-2):31–44. doi: 10.1023/A:1023937123600.
30. Friedman N., Geiger D., Goldszmidt M. Bayesian network classifiers. Machine Learning. 1997;29(2-3):131–163. doi: 10.1023/A:1007465528199.
31. Mollah M. M. H., Mollah N. H., Kishino H. β-empirical Bayes inference and model diagnosis of microarray data. BMC Bioinformatics. 2012;13(1):135. doi: 10.1186/1471-2105-13-135.
32. Nowak G., Tibshirani R. Complementary hierarchical clustering. Biostatistics. 2008;9(3):467–483. doi: 10.1093/biostatistics/kxm046.
33. Kuriakose M. A., Chen W. T., He Z. M., et al. Selection and validation of differentially expressed genes in head and neck cancer. Cellular and Molecular Life Sciences. 2004;61(11):1372–1383. doi: 10.1007/s00018-004-4069-0.
34. Bergemann T. L., Wilson J. Proportion statistics to detect differentially expressed genes: A comparison with log-ratio statistics. BMC Bioinformatics. 2011;12:228. doi: 10.1186/1471-2105-12-228.
35. Gottardo R. Bridge: Bayesian Robust Inference for Differential Gene Expression. R package version 1.40.0; 2017.
36. Anderson T. W., Darling D. A. Asymptotic theory of certain goodness of fit criteria based on stochastic processes. Annals of Mathematical Statistics. 1952;23:193–212. doi: 10.1214/aoms/1177729437.
37. Thomas R., de la Torre L., Chang X., Mehrotra S. Validation and characterization of DNA microarray gene expression data distribution and associated moments. BMC Bioinformatics. 2010;11:576. doi: 10.1186/1471-2105-11-576.
38. Hausser J., Strimmer K. Entropy inference and the James-Stein estimator, with application to nonlinear gene association networks. Journal of Machine Learning Research. 2009;10:1469–1484.
39. Box G. E. P., Cox D. R. An analysis of transformations. Journal of the Royal Statistical Society, Series B. 1964;26:211–252.
