Abstract

Purpose

The authors investigated how clear speech instructions influence sentence intelligibility.

Method

Twelve speakers produced sentences in habitual, clear, hearing impaired, and overenunciate conditions. Stimuli were amplitude normalized and mixed with multitalker babble for orthographic transcription by 40 listeners. The main analysis investigated percentage-correct intelligibility scores as a function of the 4 conditions and speaker sex. Additional analyses included listener response variability, individual speaker trends, and an alternate intelligibility measure: proportion of content words correct.

Results

Relative to the habitual condition, the overenunciate condition was associated with the greatest intelligibility benefit, followed by the hearing impaired and clear conditions. Ten speakers followed this trend. The results indicated different patterns of clear speech benefit for male and female speakers. Greater listener variability was observed for speakers with inherently low habitual intelligibility compared to speakers with inherently high habitual intelligibility. Stable proportions of content words were observed across conditions.

Conclusions

Clear speech instructions affected the magnitude of the intelligibility benefit. The instruction to overenunciate may be most effective in clear speech training programs. The findings may help explain the range of clear speech intelligibility benefit previously reported. Listener variability analyses suggested the importance of obtaining multiple listener judgments of intelligibility, especially for speakers with inherently low habitual intelligibility.

Keywords: clear speech, instructions, intelligibility

Clear speech is a speaking strategy used in people’s day-to-day lives when they want to ensure they are understood. It also is a common therapeutic technique for maximizing intelligibility in dysarthria (Beukelman, Fager, Ullman, Hanson, & Logemann, 2002; Dromey, 2000; Hustad & Weismer, 2007) as well as in the context of aural rehabilitation programs (Schum, 1997). In addition, clear speech is a central construct in certain speech production theories. Lindblom’s (1990) hypo–hyperarticulate theory, or H & H, suggests that talkers maximize effort for hyperarticulate speech or clear speech to ensure they are understood by the listener. In addition, the construct of economy of effort, wherein speakers adjust articulatory effort to vary speaking style, is discussed in Perkell and colleagues’ theory of segmental speech motor control (Perkell, 2012; Perkell, Zandipour, Matthies, & Lane, 2002). For example, relative to conversational speech, clear speech is associated with increased articulatory effort, as inferred from increases in movement distances, durations, and peak speeds. In this manner, clear speech research is of practical and theoretical relevance.

The Clear Speech Benefit

The perceptual benefits of clear speech, collectively referred to as the clear speech benefit, are suggested by research that has reported greater intelligibility for clear speech compared to conversational speech. Furthermore, the clear speech benefit has been studied with various listener and speaker populations. Listeners with hearing impairment, those with learning disabilities, nonnative speakers of English, and listeners with normal hearing all benefit from clear speech spoken by healthy talkers (Bradlow & Bent, 2002; Bradlow, Kraus, & Hayes, 2003; Picheny, Durlach, & Braida, 1985; Uchanski, Choi, Braida, Reed, & Durlach, 1996). In addition, listeners with normal hearing also show a clear speech benefit when listening to speakers with dysarthria (Beukelman et al., 2002). In general, studies have reported a clear speech benefit ranging from 12 to 34 percentage points (see reviews by Smiljanić & Bradlow, 2009, and Uchanski, 2005). However, in at least some studies, clear speech has been reported to be associated with a decrease in intelligibility from 4 to 12 percentage points relative to conversational speech (Ferguson, 2004; Maniwa, Jongman, & Wade, 2008).

As Smiljanić and Bradlow (2009) noted, there is substantial cross-study variation in the magnitude of the clear speech benefit. For example, seminal work by Picheny and colleagues (Picheny, Durlach, & Braida, 1985) elicited clear and conversational productions from three male speakers and collected sentence intelligibility scores from listeners with hearing loss. Sentence intelligibility revealed, on average, a 17% clear speech benefit. More recently, Ferguson and Kewley-Port (2002) studied the clear speech benefit for one male speaker. Listeners with normal hearing and those with hearing impairment were instructed to identify vowels produced in clear and conversational conditions. Similar to previous studies, listeners with normal hearing had an average clear speech benefit of 8% (Gagné, Masterson, Munhall, Bilida, & Querengesser, 1994; Gagné, Querengesser, Folkeard, Munhall, & Zandipour, 1995; Helfer, 1997; Payton, Uchanski, & Braida, 1994). However, contrary to results reported by Picheny et al., listeners with hearing impairment from Ferguson and Kewley-Port’s study did not show a significant clear speech benefit. As part of a larger study by Beukelman et al. (2002), speakers with dysarthria secondary to traumatic brain injury produced sentences using clear speech after a training session. Listeners with normal hearing judged intelligibility using an orthographic transcription task. On average, speakers with dysarthria showed an 8% clear speech benefit. Although the increase in intelligibility was not statistically significant, other studies suggest that this magnitude of improvement is clinically important (e.g., VanNuffelen, De Bodt, Vanderwegen, Van de Heyning, & Wuyts, 2010). More recently, a clear speech study of fricatives conducted by Maniwa et al. (2008) reported that, on average, listeners were 4.6% better at identifying clear fricatives relative to conversational productions.

Clear Speech Instructions

A variety of instructions have been used in clear speech research. For example, studies have instructed participants to “hyperarticulate” (Dromey, 2000; Feijoo, Fernandez, & Balsa, 1999; Moon & Lindblom, 1994), “speak to someone with a hearing impairment or someone from a different language background” (Bradlow & Bent, 2002; Bradlow et al., 2003), or “speak clearly” (Ferguson, 2004; Ferguson & Kewley-Port, 2007; Harnsberger, Wright, & Pisoni, 2008). Picheny et al. (1985) elicited clear speech using a combination of the definitions listed above. Other studies have elicited clear speech through an imitation task or training paradigm (Beukelman et al., 2002; Krause & Braida, 2004), providing a grade or reward system (Perkell et al., 2002), or by asking for a repetition (Maniwa et al., 2008; Oviatt, MacEachern, & Levow, 1998). Maniwa and colleagues (2008) elicited clear fricatives through an interactive program in which speakers attempted to disambiguate fricatives after receiving feedback containing errors in voicing or place of articulation.

As previously noted, a wide range of intelligibility benefit has been reported in the clear speech literature (see reviews in Smiljanić & Bradlow, 2009, and Uchanski, 2005). It seems reasonable to speculate that this may be explained, in part, by the fact that studies have used a variety of clear speech instructions. Knowing whether certain instructions for eliciting clear speech maximize intelligibility would help optimize clear speech training programs. For example, knowledge about effective instruction paradigms could help researchers design therapeutic programs, such as those used with speakers who have dysarthria or with communication partners of people with a hearing impairment. Furthermore, knowledge about effective clear speech instruction paradigms could have implications for programs related to aviation training (Huttunen, Keränen, Väyrynen, Pääkkönen, & Leino, 2011). Huttunen et al. (2011) studied speech produced during various cognitive tasks associated with military aviation training and found that speech measures such as articulation rate and vowel formant frequencies were affected by varying levels of cognitive load. Huttunen et al. further proposed the importance of clear speech training to minimize communication breakdowns during aviation. Finally, improved understanding of factors affecting intelligibility of clear speech has relevance for the development of speech enhancement algorithms for hearing aid technology (Krause & Braida, 2009; Picheny, Durlach, & Braida, 1985, 1986; Uchanski et al., 1996; Zeng & Liu, 2006).

To our knowledge, only Lam, Tjaden, and Wilding (2012) have directly compared different clear speech instructions. In their acoustic study, sentences produced by 12 speakers were elicited in four speaking conditions, including habitual, clear, hearing impaired, and overenunciate. The latter three conditions were variants of clear speech instructions used in previously published studies (Bradlow & Bent, 2002; Bradlow et al., 2003; Dromey, 2000; Feijoo et al., 1999; Ferguson, 2004; Ferguson & Kewley-Port, 2007; Harnsberger et al., 2008; Moon & Lindblom, 1994). For the habitual condition, speakers were instructed to “say the following sentences.” For the clear, hearing impaired, and overenunciate conditions, speakers were specifically instructed to “speak clearly,” “talk to someone with a hearing impairment,” or “overenunciate/hyperarticulate,” respectively. With the exception of the clear condition, in which speakers freely interpreted the general instruction to “speak clearly,” definitions of the overenunciate and hearing impaired conditions were chosen to be as distinct from one another as possible. Thus, the instruction to “overenunciate each word” (overenunciate condition) might be interpreted as hyperarticulated speech or exaggerated speech, whereas the instruction to “speak to someone with a hearing impairment” (hearing impaired condition) might be interpreted as increased vocal intensity.

The results indicated that the different clear speech instructions were associated with similar types of acoustic adjustments. In agreement with previous studies (Ferguson & Kewley-Port, 2007; Picheny et al., 1985), acoustic adjustments for the various clear speech conditions included tense and lax vowel space expansion, increased vowel spectral change, lengthened segment durations, and decreased articulation rate. Furthermore, relative to typical speech (habitual condition), speakers produced the greatest magnitude of acoustic change when instructed to “overenunciate each word” (overenunciate condition) followed by the instruction to speak “as if speaking to someone with a hearing impairment” (hearing impaired condition) and “speak clearly” (clear condition). One exception, however, was mean sound pressure level (SPL): SPL for the hearing impaired condition was, on average, slightly higher (+1 dB) than for the overenunciate condition.

As Ferguson and Kewley-Port (2002) noted, whether different clear speech instructions affect intelligibility is unknown. Thus, the current perceptual study was a follow-up to Lam et al.’s (2012) acoustic study. More specifically, we sought to determine whether the different instructions for eliciting clear speech reported by Lam et al. affected judgments of intelligibility. Given that the types of acoustic adjustments reported by Lam et al. (i.e., vowel space area expansion, lengthened speech durations, and increased vowel spectral change) may be explanatory variables for intelligibility (Kent et al., 1989; Turner, Tjaden, & Weismer, 1995; Weismer, Jeng, Laures, Kent, & Kent, 2001), we hypothesized that perceptual judgments of intelligibility would parallel the acoustic adjustments reported by Lam et al. More specifically, we hypothesized that the greatest clear speech benefit would be observed in the overenunciate condition, followed by smaller gains in intelligibility for the hearing impaired and the clear condition relative to the habitual condition.

Method

Speakers

Twelve neurologically healthy participants (six males and six females), ranging from 18 to 36 years of age (M = 24, SD = 6 years), were recruited from the student population at the University at Buffalo to serve as speakers. Speakers were judged to speak Standard American English as a first language and reported no history of hearing, speech, or language pathology. All speakers passed a pure-tone hearing screening, administered bilaterally at 20 dB HL at 500, 1000, 2000, 4000, and 8000 Hz (American National Standards Institute, 2004). In addition, speakers had no university training in linguistics or communicative disorders and sciences.

Speech Sample

For each of the 12 speakers, 18 different sentences, varying in length from five to 11 words, were selected from the Assessment of Intelligibility of Dysarthric Speech (Yorkston & Beukelman, 1984). Sentences were produced in the context of a broader speech sample, which also consisted of a reading passage and /hVd/ carrier phrases. Each speaker produced all sentences in the habitual, clear, hearing impaired, and overenunciate conditions. Throughout this article, the latter three conditions are also referred to as the nonhabitual conditions. As previously reviewed, speaking conditions were selected on the basis of previous clear speech research (Bradlow et al., 2003; Ferguson, 2004; Johnson, Flemming, & Wright, 1993; Moon & Lindblom, 1994; Smiljanić & Bradlow, 2009). The habitual condition, wherein speakers were instructed to read the sentences, was elicited first. All speakers were then instructed to read the sentences “while speaking clearly” (clear condition; Ferguson, 2004; Ferguson & Kewley-Port, 2007; Harnsberger et al., 2008), allowing participants to determine what this meant (Ferguson, 2004). The clear condition was always elicited after the habitual condition to reduce any influence of instruction from the following hearing impaired and overenunciate conditions. Speakers were then instructed to “speak as if speaking to someone who has a hearing impairment” (hearing impaired condition; Bradlow & Bent, 2002; Bradlow et al., 2003) and to “overenunciate each word” (overenunciate condition; Dromey, 2000; Feijoo et al., 1999; Moon & Lindblom, 1994). The order of the last two conditions was alternated between participants. Because of the ordering of conditions, the cumulative effect of the clear condition on the following nonhabitual conditions is unknown. However, speakers were engaged in conversation between conditions for a few minutes to reduce potential carryover effects.

Speakers were seated in a sound-attenuated booth and recorded using a head-mounted CountryMan E6IOP5L2 Isomax Condenser Microphone. A mouth-to-microphone distance of 6 cm was maintained throughout the recording for each speaker. Audio recordings were preamplified using a Professional Tube MIC Preamp, low-pass filtered at 9.8 kHz and digitized to a computer at a sampling rate of 22 kHz using TF32 (Milenkovic, 2002).

Listeners

Forty listeners with normal hearing (15 males and 25 females), ranging from 19 to 42 years of age (M = 22, SD = 5 years), were recruited from the student population at the University at Buffalo to judge intelligibility. All listeners passed a hearing screening, administered bilaterally at 20 dB HL at 500, 1000, 2000, 4000, and 8000 Hz (American National Standards Institute, 2004). Listeners were judged to speak Standard American English as a first language; reported no history of hearing, speech, or language pathology; and were required to achieve 95% or better accuracy on a sample orthographic transcription task. For the sample transcription task, participants transcribed six Harvard sentences (IEEE, 1969) produced in a habitual condition by two additional speakers (one female and one male) who were not part of the original 12 speakers.

Procedure

All speakers who provided the speech stimuli were neurologically healthy. Therefore, to prevent ceiling effects, all stimuli were mixed with multitalker babble, as in previous studies of clear speech (Bradlow & Bent, 2002; Ferguson & Kewley-Port, 2007; Maniwa et al., 2008). Twenty-person multitalker babble was sampled at 22 kHz and low-pass filtered at 5000 Hz (Bochner, Garrison, Sussman, & Burkard, 2003; Frank & Craig, 1984). Similar to the procedures used in previous studies of clear speech (Bradlow & Bent, 2002; Ferguson & Kewley-Port, 2007; Krause & Braida, 2002; Maniwa et al., 2008), amplitude differences between speakers were eliminated by equating all stimuli for average root-mean-square (RMS) sentence intensity in GoldWave version 5.58 (GoldWave, Inc., 2010). Sentences were mixed with multitalker babble using MMScript (Johnson, 2010) and saved using GoldWave. On the basis of pilot testing for various signal-to-noise ratios (SNRs), an SNR of –3 dB was applied to each sentence using GoldWave. This SNR also was used by Ferguson and Kewley-Port (2002) and Maniwa et al. (2008).
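The RMS equalization and SNR mixing described above can be illustrated with a short sketch. This is not the authors' processing chain, which used GoldWave and MMScript; the function names, the toy sine-wave signals, and the choice to scale the babble rather than the speech are all assumptions made for illustration.

```python
import math

def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def scale_to_rms(samples, target_rms):
    """Rescale a signal so that its RMS equals target_rms."""
    gain = target_rms / rms(samples)
    return [s * gain for s in samples]

def mix_at_snr(speech, babble, snr_db):
    """Scale the babble so that 20*log10(rms(speech)/rms(babble)) equals
    snr_db, then add it to the speech sample by sample.
    Assumes the babble is at least as long as the speech."""
    babble = babble[:len(speech)]
    target_babble_rms = rms(speech) / (10 ** (snr_db / 20))
    scaled = scale_to_rms(babble, target_babble_rms)
    mixed = [s + n for s, n in zip(speech, scaled)]
    return mixed, scaled

# Toy signals standing in for a sentence and a babble segment (hypothetical).
speech = [math.sin(2 * math.pi * 5 * i / 200) for i in range(200)]
babble = [0.2 * math.sin(2 * math.pi * 13 * i / 200 + 1) for i in range(200)]

speech = scale_to_rms(speech, 0.1)            # equate sentences to a common RMS
mixed, noise = mix_at_snr(speech, babble, -3)  # babble ends up 3 dB above speech
```

At an SNR of –3 dB the babble RMS is about 1.41 times the speech RMS, which is why the masking is strong enough to pull healthy talkers off the intelligibility ceiling.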

Listeners were seated in a sound-attenuated booth in front of a computer monitor and keyboard. Participants were instructed to listen to a sound file one time and type their response using the computerized Sentence Intelligibility Test (SIT) software program (Yorkston & Beukelman, 1996). To prevent listener fatigue, testing took place over two sessions, with each session approximately 1 hr long. Sessions 1 and 2 were scheduled at least 1 hr apart and no longer than 5 days apart. Listeners heard stimuli via Sony Dynamic Stereo Headphones (MDR-V300) at 70 dB. The dB level over the headphones was calibrated at the beginning of each listening session using an earphone coupler and a Quest Electronics 1700 sound level meter. Two sentences produced by different speakers in each condition were randomly selected for use in headphone calibration.

To familiarize participants with the computer program and multitalker babble, listeners first transcribed six Harvard sentences (IEEE, 1969) mixed with multitalker babble at an SNR of –3 dB. After the familiarization task, experimental testing began. Stimuli presentation was blocked by speaker, and a different random ordering of speakers and conditions was created for every listener. To prevent listeners from becoming familiar with the stimuli, all listeners orthographically transcribed one set of 18 sentences from one condition for each of the 12 speakers. For example, Listener 1 might have transcribed the habitual conditions for Speakers 1, 2, and 3; the clear condition for Speakers 4, 5, and 6; the hearing impaired condition for Speakers 7, 8, and 9; and the overenunciate condition for Speakers 10, 11, and 12. Because every speaker produced different sentences, Listener 1 thus heard only a single production of each sentence. Groupings of speakers and listeners were carefully controlled so that every speaker and condition was judged by 10 different listeners.
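One way to satisfy the constraints in this paragraph (each listener hears every speaker once, three speakers per condition, and every speaker-condition pair is heard by 10 listeners) is a simple rotation. The sketch below is a hypothetical scheme for illustration only; the source does not describe how its groupings were actually constructed, and the randomized presentation order is omitted.

```python
from collections import Counter

CONDITIONS = ["habitual", "clear", "hearing impaired", "overenunciate"]

def assign_conditions(n_listeners=40, n_speakers=12):
    """Return {listener: {speaker: condition}} using a rotation:
    speakers are taken in blocks of three, and the condition assigned to
    each block shifts by one for each successive listener."""
    block = n_speakers // len(CONDITIONS)  # speakers per condition per listener
    design = {}
    for listener in range(n_listeners):
        design[listener] = {
            speaker: CONDITIONS[(listener + speaker // block) % len(CONDITIONS)]
            for speaker in range(n_speakers)
        }
    return design

design = assign_conditions()

# Number of listeners judging each (speaker, condition) cell.
per_cell = Counter(
    (speaker, cond) for row in design.values() for speaker, cond in row.items()
)
```

With 40 listeners and four conditions, each of the 48 speaker-condition cells receives exactly 10 listeners, matching the counts reported in the text.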

Data Reduction and Analyses

Before scoring the SIT, an experimenter manually corrected each sentence for homonyms and spelling errors. The computerized SIT (Yorkston & Beukelman, 1996) then was used to score each test, and data were compiled into a database using Microsoft Excel. As discussed in more detail in the following paragraphs, both listener analyses and speaker analyses were performed using percentage-correct scores from the SIT. In the listener analyses, the purpose was to examine the intelligibility benefit of different clear speech instructions for listeners and the variability of listener responses to each condition. Therefore, individual listener SIT scores were calculated for each condition, yielding 480 SIT scores; that is, a given listener judged each condition from three randomly selected speakers (40 listeners × [4 conditions × 3 speakers] = 480 SIT scores). In the speaker analyses, the purpose was to examine individual speaker trends of the clear speech benefit in the three nonhabitual conditions; therefore, SIT scores were calculated for each individual speaker as a function of condition (12 speaker SIT scores × 4 conditions = 48 SIT scores).

Listener Analyses

Percentage-correct SIT scores for individual listeners

We calculated SIT scores by dividing the number of correctly transcribed words by all possible words. Each listener provided a total of 12 SIT scores, one for each speaker in a given condition. Across all 40 listeners this yielded 480 percentage-correct SIT scores. We then arcsine transformed the SIT scores for statistical analysis (Winer, 1981). We used a two-way repeated measures analysis of variance to investigate differences in listener SIT scores as a function of condition and speaker sex. Previous studies have reported that the magnitude of the clear speech benefit is greater for female speakers compared to male speakers (Bradlow & Bent, 2002; Ferguson, 2004). Bradlow, Torretta, and Pisoni (1996) further suggested that sex might be an influencing factor on overall intelligibility. Therefore, we included sex as an independent variable in the data analysis. Post hoc pairwise comparisons were performed using a Bonferroni correction for multiple comparisons. A nominal significance level of p < .05 was used in all hypothesis testing. We used IBM SPSS Statistics 19 (for Windows) for all statistical analyses.
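The scoring and transformation steps can be sketched as follows. The source does not state which form of the arcsine transformation was applied; the sketch assumes the common variance-stabilizing form 2·arcsin(√p), and the function names are hypothetical.

```python
import math

def sit_score(words_correct, words_possible):
    """Percentage-correct score: correctly transcribed words / all possible words."""
    return 100.0 * words_correct / words_possible

def arcsine_transform(percent):
    """Arcsine transform of a percentage, assuming the form 2 * asin(sqrt(p)).
    Returns a value in radians between 0 and pi."""
    p = percent / 100.0
    return 2.0 * math.asin(math.sqrt(p))

# Hypothetical listener who transcribed 90 of 180 words correctly.
score = sit_score(90, 180)
transformed = arcsine_transform(score)
```

The transformation stretches scores near 0% and 100%, where the variance of a proportion is compressed, so that the analysis of variance is less affected by unequal variances across conditions.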

Listener variability

Listeners are also known to vary in their ability to correctly orthographically transcribe speech, even speech produced by healthy talkers (Choe, 2008; Hustad, 2008; Tjaden & Liss, 1995). However, whether a speaker’s inherent, overall intelligibility (i.e., inherently more vs. less intelligible) contributes to or is related to this type of listener variability is not well established. Thus, we undertook an additional analysis to explore the variability of individual listener SIT scores within conditions for two groups of speakers with operationally defined high and low habitual intelligibility. We chose two speaker groups to be as different from one another as possible in order to maximize any potential group differences. Speaker groups were determined by first ranking speakers on the basis of their intelligibility scores for the habitual condition. Habitual intelligibility was calculated for each of the 12 speakers by pooling responses for the 10 listeners and dividing the total number of correctly transcribed words by all possible words in the habitual condition. The four speakers with the highest habitual intelligibility scores (F1, M4, F9, and F10; M = male; F = female) comprised the high habitual intelligibility speaker group, and the four speakers with the lowest habitual intelligibility scores (F2, M12, M8, and M3) comprised the low habitual intelligibility speaker group.

For each low/high speaker group and condition, SIT scores for individual listeners were arcsine transformed for the statistical analysis (4 speakers per group × 10 listeners = 40 SIT scores per group). We used Levene’s test of homogeneity to investigate homogeneity of variance for low- and high-intelligibility speaker groups. A significant finding for Levene’s test would indicate that the variability of listener responses was different for speakers with low and high habitual intelligibility, whereas a nonsignificant result would indicate that listener responses were equally as variable in the low- and high-intelligibility speaker groups. Similar to procedures in McHenry (2011), we conducted a one-way analysis of variance to obtain results for Levene’s test. Therefore, a total of four Levene’s tests were performed: one for each condition. We used a significance level of p < .05 for hypothesis testing.
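For readers unfamiliar with Levene's test, the statistic is a one-way analysis of variance computed on absolute deviations from each group's center. The sketch below assumes the mean-centered variant and returns only the W statistic; the data are hypothetical and the function name is an assumption, not the authors' SPSS procedure.

```python
from statistics import mean

def levene_w(*groups):
    """Levene's W statistic using absolute deviations from each group mean.
    Under the null hypothesis of equal variances, W is referred to an
    F distribution with (k - 1, N - k) degrees of freedom."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    # Absolute deviation of every score from its own group mean.
    z = [[abs(y - mean(g)) for y in g] for g in groups]
    z_means = [mean(zi) for zi in z]
    z_grand = mean([v for zi in z for v in zi])
    between = sum(len(zi) * (zm - z_grand) ** 2 for zi, zm in zip(z, z_means))
    within = sum((v - zm) ** 2 for zi, zm in zip(z, z_means) for v in zi)
    return (n_total - k) / (k - 1) * between / within

# Hypothetical scores: a tightly clustered group and a widely spread group.
narrow = [1, 2, 3, 4, 5]
wide = [0, 5, 10, 15, 20]
w = levene_w(narrow, wide)
```

A p value would additionally require the F distribution; in practice a statistics package would normally be used for that step, for example `scipy.stats.levene` with `center='mean'`.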

We also used the coefficient of variation (CoV; [SD/M] × 100) to describe the relative variability of scores within the low- and high-intelligibility speaker groups. Although Levene’s test provides an overall parametric indication of listener variability, we used the CoV measure as a follow-up descriptive statistic to compare differences between groups. For each group and condition, means and standard deviations were calculated from 40 individual listeners’ SIT scores (4 speakers per group × 10 listener SIT scores).
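As a brief illustration of the CoV computation, the sketch below uses the sample standard deviation (n − 1 denominator), which is an assumption because the source does not specify the denominator. The scores are hypothetical.

```python
from statistics import mean, stdev

def coefficient_of_variation(scores):
    """Coefficient of variation in percent: (SD / M) * 100."""
    return stdev(scores) / mean(scores) * 100.0

# Hypothetical listener SIT scores for one speaker group in one condition.
cov = coefficient_of_variation([40.0, 50.0, 60.0])
```

Because the CoV scales the standard deviation by the mean, it allows the spread of listener scores to be compared between groups whose average intelligibility differs.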

Speaker Analyses

Speaker SIT score trends

Because previous studies have reported a wide range of clear speech benefit for individual speakers, we also calculated descriptive statistics to investigate speaker trends across conditions. For each speaker and condition, we calculated SIT scores by totaling the number of correctly transcribed words, pooled across responses from 10 listeners, and dividing by all possible words, yielding a speaker percentage-correct score. For every speaker, a clear speech benefit was calculated by subtracting the speaker’s habitual SIT score from the SIT score of each nonhabitual condition, yielding a clear speech benefit score for the clear, hearing impaired, and overenunciate conditions.
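The pooled speaker score and the benefit score can be sketched as below. The sketch follows the sign convention of Table 2, in which positive values indicate higher intelligibility than in the habitual condition; all counts, scores, and function names are hypothetical.

```python
def pooled_percent_correct(correct_by_listener, possible_by_listener):
    """Speaker score: words correct pooled over listeners / all possible words."""
    return 100.0 * sum(correct_by_listener) / sum(possible_by_listener)

def clear_speech_benefit(scores, baseline="habitual"):
    """Difference in percentage points between each nonhabitual condition
    and the baseline condition (positive = more intelligible than baseline)."""
    return {
        cond: score - scores[baseline]
        for cond, score in scores.items()
        if cond != baseline
    }

# Hypothetical pooled scores for a single speaker.
scores = {
    "habitual": 40.0,
    "clear": 55.0,
    "hearing impaired": 61.0,
    "overenunciate": 66.0,
}
benefit = clear_speech_benefit(scores)
```

Pooling word counts before dividing weights each listener by the number of words heard, which differs slightly from averaging the listeners' individual percentages when sentence sets differ in length.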

Function versus content words

The scoring paradigm used by the SIT program to calculate intelligibility weights content and function words equally. However, because content words carry more information than function words, and some studies have reported function words to be transcribed with greater accuracy than content words, Hustad (2006) suggested an alternate scoring paradigm to account for the greater informational weight of content words. Therefore, we included an additional measure derived from Hustad’s scoring paradigm, labeled proportion of content words correct, to determine whether increases in intelligibility for nonhabitual conditions were due to a disproportionate number of function (or less meaningful) words transcribed. We calculated the measure proportion of content words correct for each condition by dividing all correctly transcribed content words (nouns, verbs, adjectives, and adverbs) by all possible content words. This measure was intended as an exploratory analysis, and therefore only a subset of speakers was selected, ranging from low (F2, M12) to middle (F5, M7) and high (F9, M4) habitual intelligibility.
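The measure as defined above reduces to a simple ratio once each target word has been classified and scored. The sketch below assumes that classification into content and function words and the correct/incorrect judgment have already been made by hand; the data structure and function name are hypothetical.

```python
def proportion_content_correct(scored_words):
    """scored_words: iterable of (is_content, is_correct) pairs, one per
    target word. Returns correct content words / all possible content words."""
    content_hits = [correct for is_content, correct in scored_words if is_content]
    if not content_hits:
        raise ValueError("no content words in the target sentences")
    return sum(content_hits) / len(content_hits)

# Toy target "the dog chased a red ball": four content words, one missed.
scored = [
    (False, True),   # the
    (True, True),    # dog
    (True, False),   # chased (transcribed incorrectly)
    (False, True),   # a
    (True, True),    # red
    (True, True),    # ball
]
proportion = proportion_content_correct(scored)  # 3 of 4 content words
```

Function words are ignored by this ratio, so a gain in overall percentage correct that came only from function words would leave the measure unchanged.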

Results

Listener Analyses

Percentage-correct SIT scores for individual listeners

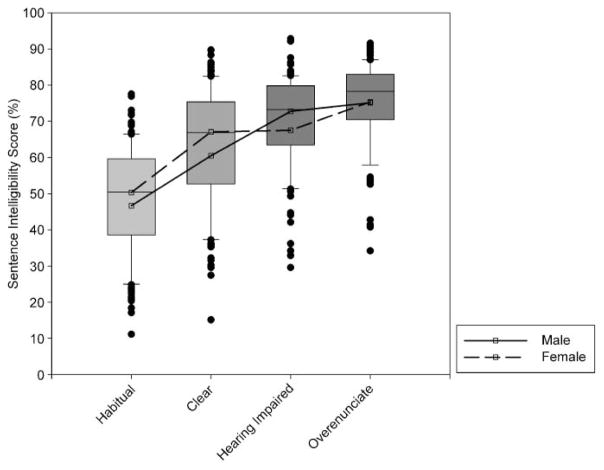

All statistical analyses were performed using arcsine-transformed SIT scores; however, raw percentage-correct SIT scores are reported in tables and figures for comprehensibility. Box plots in Figure 1 show the distributions of sentence intelligibility scores for individual listener scores as a function of condition. The square symbols connected by a solid or dashed line illustrate average intelligibility scores for male and female speakers, respectively. The statistical analysis indicated a significant main effect of condition, F(3, 472) = 85.992, p < .001. Post hoc comparisons indicated that all possible comparisons were significantly different from one another (p < .05). As suggested in Figure 1, increased intelligibility scores for the overenunciate, hearing impaired, and clear conditions were observed when compared to the habitual condition (p < .001). In addition, intelligibility scores in the overenunciate condition were significantly greater than in the hearing impaired (p = .01) and clear (p < .001) conditions. Furthermore, intelligibility scores in the hearing impaired condition were significantly greater than in the clear condition (p = .002). There was also a significant Sex × Condition interaction, F(3, 472) = 4.159, p = .006. As shown in Figure 1, female speakers (dashed line) had higher intelligibility scores than male speakers (solid line) in the habitual, clear, and the overenunciate conditions; however, for the hearing impaired condition, male speakers had higher intelligibility scores compared to female speakers. Post hoc comparisons indicated that intelligibility scores for female speakers did not differ significantly for the clear and hearing impaired conditions, and intelligibility scores for male speakers did not differ significantly for the hearing impaired and overenunciate conditions. All other pairwise comparisons within each group were significant (p < .001).

Figure 1.

Distributions of individual listener Sentence Intelligibility Test (SIT) scores as a function of condition. Square symbols connected by a solid or dashed line represent average intelligibility scores for male and female speakers, respectively. Error bars represent the 10th and 90th percentiles.

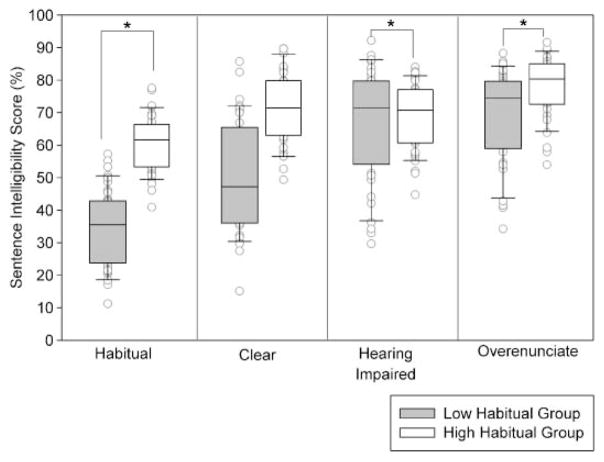

Listener variability

Figure 2 shows box plots of listener responses for the four speakers with operationally defined high habitual intelligibility (shown in white) and four speakers with operationally defined low habitual intelligibility (shown in gray). Conditions are indicated on the x-axis. Levene’s test indicated unequal variance of listener responses for the high habitual intelligibility speaker group when compared with the low habitual intelligibility speaker group for the habitual (p = .01), hearing impaired (p < .001), and overenunciate conditions (p = .02). Visual inspection of Figure 2 further suggests a smaller spread of scores for the high habitual intelligibility speaker group when compared to the low habitual intelligibility speaker group in the habitual, hearing impaired, and overenunciate conditions. The nonsignificant Levene’s test in the clear condition indicates that listener responses for the low and high habitual intelligibility speaker groups were equally variable.

Figure 2.

Distributions of individual listener SIT scores as a function of condition and group. Data for the high habitual intelligibility speaker group are shown in white, and data for the low habitual intelligibility speaker group are shown in gray. Error bars represent the 10th and 90th percentiles. *p < .05.

CoV values are reported in Table 1. In all conditions, the low habitual intelligibility group had greater CoV values when compared to the high habitual intelligibility group. Both groups had the smallest variability around the mean, as indicated by smaller CoV values in the overenunciate condition. Furthermore, CoV values for the low habitual intelligibility group were equally as large in the habitual and clear conditions, and the high habitual intelligibility group produced the largest CoV values in the clear condition.

Table 1.

Coefficients of variation for the low and high habitual intelligibility groups

| Group | Habitual (%) | Clear (%) | Hearing impaired (%) | Overenunciate (%) |
|---|---|---|---|---|
| Low habitual intelligibility | 34 | 34 | 25 | 20 |
| High habitual intelligibility | 14 | 16 | 14 | 12 |

Speaker Analyses

Speaker SIT score trends

Intelligibility scores from each speaker’s habitual condition and the clear speech benefit for each nonhabitual condition are reported in Table 2. Inspection of the data in Table 2 indicates an average clear speech benefit of 26% for the overenunciate condition, 21% for the hearing impaired condition, and 15% for the clear condition across the 12 speakers. However, as reported in previous studies (Ferguson, 2004; Gagné et al., 1994; Picheny et al., 1985), inspection of individual speaker data revealed a wide range of clear speech benefit across the three nonhabitual conditions, ranging from 2% (Speaker 4, clear condition) to 47% (Speaker 12, hearing impaired condition).

Table 2.

Intelligibility scores from each speaker’s habitual condition and the clear speech benefit for each nonhabitual condition

| Speaker | Sex | Habitual intelligibility (%) | Clear: % difference relative to habitual | Hearing impaired: % difference relative to habitual | Overenunciate: % difference relative to habitual |
|---|---|---|---|---|---|
| 2 | F | 20.1 | +15 | +21 | +29 |
| 12 | M | 35.8 | +8 | +47 | +43 |
| 8 | M | 40.8 | +32 | +31 | +37 |
| 3 | M | 41.6 | +6 | +30 | +31 |
| 5 | F | 45.0 | +24 | +31 | +38 |
| 11 | M | 51.8 | +15 | +18 | +21 |
| 7 | M | 51.8 | +20 | +27 | +29 |
| 6 | F | 53.8 | +18 | +19 | +21 |
| 1 | F | 58.5 | +18 | +7 | +19 |
| 4 | M | 58.6 | +2 | +3 | +9 |
| 10 | F | 60.6 | +14 | +14 | +23 |
| 9 | F | 64.2 | +12 | +10 | +21 |
| M (SD) | | 48.5 (12) | +15 (8) | +21 (12) | +26 (9) |

Note. F = female; M = male.
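As a rough check on the summary row and on the range of benefit across speakers, the per-condition means and the smallest and largest individual benefits can be recomputed from the rounded entries in Table 2. This is only a sketch: because the table entries are rounded, the recomputed means approximate the reported values rather than reproduce them exactly, and the variable names are illustrative.

```python
from statistics import mean

# (speaker, sex, habitual %, clear, hearing impaired, overenunciate),
# transcribed from the rounded values in Table 2.
TABLE_2 = [
    (2, "F", 20.1, 15, 21, 29),
    (12, "M", 35.8, 8, 47, 43),
    (8, "M", 40.8, 32, 31, 37),
    (3, "M", 41.6, 6, 30, 31),
    (5, "F", 45.0, 24, 31, 38),
    (11, "M", 51.8, 15, 18, 21),
    (7, "M", 51.8, 20, 27, 29),
    (6, "F", 53.8, 18, 19, 21),
    (1, "F", 58.5, 18, 7, 19),
    (4, "M", 58.6, 2, 3, 9),
    (10, "F", 60.6, 14, 14, 23),
    (9, "F", 64.2, 12, 10, 21),
]
CONDITIONS = ("clear", "hearing impaired", "overenunciate")

# Mean benefit per nonhabitual condition across the 12 speakers.
mean_benefits = {
    name: mean(row[3 + i] for row in TABLE_2)
    for i, name in enumerate(CONDITIONS)
}

# Every (benefit, speaker, condition) cell, used to find the extremes.
cells = [
    (row[3 + i], row[0], name)
    for row in TABLE_2
    for i, name in enumerate(CONDITIONS)
]
smallest = min(cells)
largest = max(cells)

for name, value in mean_benefits.items():
    print(f"Mean benefit, {name}: {value:.1f}%")
print("Smallest benefit (%, speaker, condition):", smallest)
print("Largest benefit (%, speaker, condition):", largest)
```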

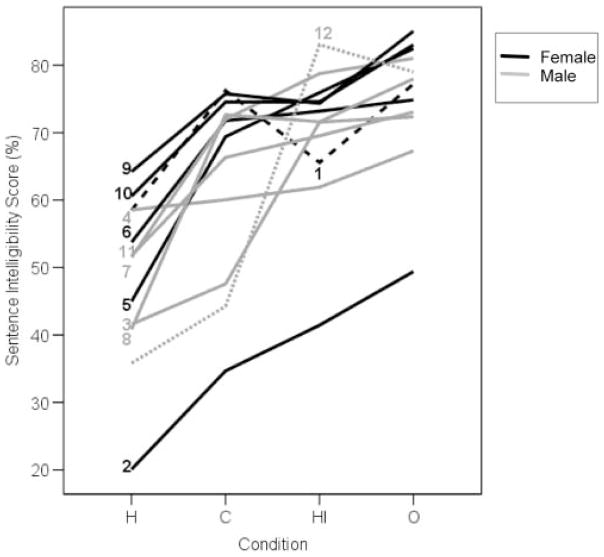

Figure 3 illustrates SIT scores labeled by speaker and condition. Data for females are depicted by black lines, and data for males are depicted by gray lines. Consistent with the group averages in Table 2, all speakers had the highest SIT score in the overenunciate condition with the exception of Speaker 12, who had the greatest SIT score in the hearing impaired condition. As shown in the figure, most speakers followed the trend of increasingly greater intelligibility scores from the habitual to the clear to the hearing impaired and then the overenunciate condition. Two speakers, however, did not follow this trend. Speaker 12 (represented by the dotted gray line in Figure 3) had a small increase in intelligibility for the clear condition relative to the habitual condition, a large increase in intelligibility for the hearing impaired condition, and a lesser increase in intelligibility in the overenunciate condition. Speaker 1 (represented by the black dashed line in Figure 3) had a moderate intelligibility benefit in the clear and overenunciate conditions but little to no intelligibility benefit in the hearing impaired condition relative to the habitual condition. For these two speakers, the hearing impaired condition affected intelligibility differently, maximizing the clear speech benefit for Speaker 12 and having little to no benefit for Speaker 1.

Figure 3.

Individual speaker SIT scores are reported as a function of condition. Female speakers are represented by black lines, and male speakers are represented by gray lines. H = habitual; C = clear; HI = hearing impaired; O = overenunciate.

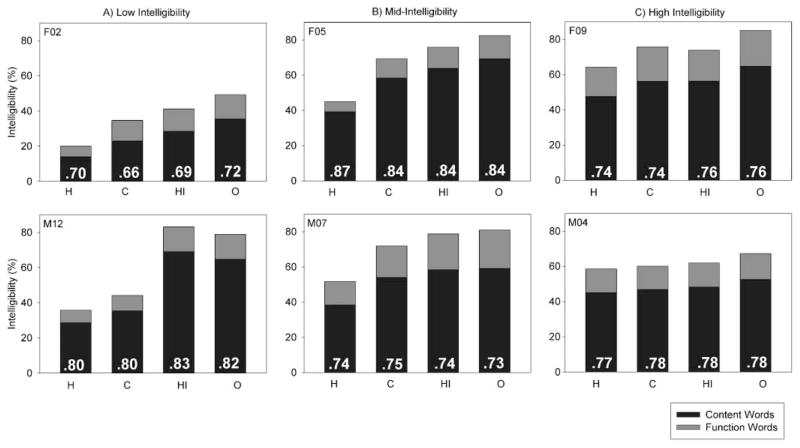

Function versus content words

The comparison of function and content words was completed as an exploratory analysis to investigate the proportion of content words correct at varying levels of habitual intelligibility. We completed this analysis for a subset of speakers whose habitual intelligibility was low (F2, M12), middle (F5, M7), or high (F9, M4). Habitual intelligibility for each speaker is reported in Table 2. In Figure 4, the black portion of each bar indicates the percentage of content words transcribed correctly, and the gray portion represents the percentage of function words transcribed correctly. The text in each bar indicates the corresponding proportion of content words correct. For all speakers, Figure 4 indicates that the proportion of content words transcribed correctly was relatively stable across conditions.

Figure 4.

The proportions of content words correct for speakers with low, middle, and high habitual intelligibility are shown in Panels A, B, and C, respectively. The black portion of each bar represents the percentage of content words transcribed correctly, and the gray portion represents the percentage of function words transcribed correctly. The numbers in each bar indicate the corresponding proportion of content words correct.

Discussion

The results of this study showed that variations of clear speech instruction were all associated with improved intelligibility, but the amount of benefit varied depending on the type of instruction. Relative to the habitual condition, on average, the instruction to “speak clearly” produced only a 15% clear speech benefit, whereas the instructions to “over-enunciate each word” and “speak to someone with a hearing impairment” resulted in larger clear speech benefits of 26% and 21%, respectively. The measure of proportion of content words correct further indicated that the intelligibility benefits observed in the nonhabitual conditions were not inflated due to an increase in correctly transcribed function words and that proportions did not vary considerably across speakers with inherently different habitual intelligibility. Although most (10 of 12) of the speakers followed the overall group trend, individual speakers varied in the amount of clear speech benefit (2%–47%) across the nonhabitual conditions. The implication is that, in addition to needing more detailed instructions, as Ferguson and Kewley-Port (2002) suggested, some speakers may prefer particular instructions and may be more successful at achieving a larger clear speech benefit for preferred instruction paradigms.

In general, both male and female speakers followed the overall trend, with the greatest intelligibility scores in the overenunciate condition, followed by the hearing impaired condition and then the clear condition. Contrary to previous clear speech studies (Bradlow & Bent, 2002; Ferguson, 2004), female speakers did not have a significantly larger clear speech benefit compared to male speakers. On average, however, female speakers produced a greater difference in intelligibility between the hearing impaired and overenunciate conditions compared to male speakers. In addition, male speakers produced a greater difference in intelligibility between the clear and hearing impaired conditions compared to female speakers. Because both speaker and listener factors contribute to judgments of intelligibility (Borrie, McAuliffe, & Liss, 2012; Hustad, 2008), the different results for male and female speakers might reflect different speech production strategies used by men and women to increase intelligibility in the nonhabitual conditions. Although men and women produced similar overall clear speech intelligibility benefits, listeners might be sensitive to particular speech production changes and in turn were able to perceive differences between some nonhabitual conditions for women and not men, and vice versa.
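The direction of these sex-related differences can be illustrated descriptively from the rounded benefits in Table 2. The sketch below only averages the table entries by speaker sex; it is not the statistical analysis used in the study, and the function and variable names are illustrative.

```python
from statistics import mean

# (sex, clear, hearing impaired, overenunciate) benefits in % relative to
# habitual, transcribed from the rounded values in Table 2 (table order).
BENEFITS = [
    ("F", 15, 21, 29), ("M", 8, 47, 43), ("M", 32, 31, 37), ("M", 6, 30, 31),
    ("F", 24, 31, 38), ("M", 15, 18, 21), ("M", 20, 27, 29), ("F", 18, 19, 21),
    ("F", 18, 7, 19), ("M", 2, 3, 9), ("F", 14, 14, 23), ("F", 12, 10, 21),
]


def condition_means(sex):
    """Mean benefit in the clear, hearing impaired, and overenunciate conditions."""
    rows = [row[1:] for row in BENEFITS if row[0] == sex]
    return [mean(column) for column in zip(*rows)]


female = condition_means("F")
male = condition_means("M")

# Step size between adjacent nonhabitual conditions, per sex.
female_clear_to_hi = female[1] - female[0]
male_clear_to_hi = male[1] - male[0]
female_hi_to_over = female[2] - female[1]
male_hi_to_over = male[2] - male[1]

print(f"Clear to hearing impaired: F {female_clear_to_hi:.1f}, M {male_clear_to_hi:.1f}")
print(f"Hearing impaired to overenunciate: F {female_hi_to_over:.1f}, M {male_hi_to_over:.1f}")
```

On these rounded values, the step from the hearing impaired to the overenunciate condition is larger for the female speakers, and the step from the clear to the hearing impaired condition is larger for the male speakers, in line with the pattern described above.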

Listener Variability

To determine whether the spread of listener responses differed as a function of a speaker’s habitual intelligibility, we analyzed listener response variability for each condition using Levene’s test and CoV values for the low- and high-intelligibility speaker groups. For the habitual, hearing impaired, and overenunciate conditions, Levene’s test indicated unequal variance in listener responses between low- and high-intelligibility speaker groups, with greater CoV values in the low-intelligibility speaker group compared to the high-intelligibility speaker group. McHenry (2011) also studied listener response variability in three speakers with dysarthria. The results were comparable to those of the current study, wherein lower SIT scores elicited a wider range of listener variability, as indicated by greater CoV values. Thus, listener responses were more variable for speakers with low versus high habitual intelligibility. Because both speaker and listener factors contribute to intelligibility (Borrie et al., 2012; Hustad, 2008), one could predict that, for successful communication, a trade-off might occur between speaker and listener contributions. For example, if a speaker with low habitual intelligibility produced insufficient acoustic information, the burden might fall on the listener to use a variety of perceptual strategies to decipher the intended message. Across listeners, the success of the perceptual strategies might differ, and this might be reflected as across-listener variability, as in the current study. Southwood (1990) further suggested that increased listener demands, such as for a speaker who is relatively less intelligible, may be reflected as increased listener effort. More recently, Whitehall and Wong (2006) reported a strong negative correlation between sentence intelligibility and scaled listener effort, such that low intelligibility was associated with greater ratings of listener effort and high intelligibility was associated with lower ratings of listener effort. Results from the current study suggest that listener variability might correlate with listener effort; however, further research is warranted to determine the relationship between listener effort and listener variability.
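For readers unfamiliar with Levene’s test, the statistic compares how far scores fall from their own group center across groups. The following is a minimal sketch of the W statistic, assuming absolute deviations from each group mean (the variant actually used in this study is not stated here, and a median-centered version is also common). The listener scores are hypothetical, and no p value is computed; in practice W is referred to an F distribution with k − 1 and N − k degrees of freedom.

```python
from statistics import mean


def levene_w(*groups):
    """Levene's W statistic using absolute deviations from each group mean."""
    k = len(groups)
    n_total = sum(len(group) for group in groups)

    # Z_ij = |Y_ij - mean of group i|
    deviations = []
    for group in groups:
        center = mean(group)
        deviations.append([abs(score - center) for score in group])

    group_dev_means = [mean(devs) for devs in deviations]
    grand_dev_mean = mean([d for devs in deviations for d in devs])

    # Between-group and within-group sums of squares of the deviations.
    between = sum(
        len(devs) * (dev_mean - grand_dev_mean) ** 2
        for devs, dev_mean in zip(deviations, group_dev_means)
    )
    within = sum(
        (d - dev_mean) ** 2
        for devs, dev_mean in zip(deviations, group_dev_means)
        for d in devs
    )
    return (n_total - k) / (k - 1) * between / within


# Hypothetical listener SIT scores (% correct); NOT data from the study.
low_group_scores = [22, 35, 41, 18, 30, 27]
high_group_scores = [58, 64, 61, 55, 66, 60]

w_statistic = levene_w(low_group_scores, high_group_scores)
print(f"Levene's W = {w_statistic:.2f}")
```

A larger W indicates a greater difference in spread between the groups; two groups with identical spread around their own means give W = 0.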

The clear condition showed the same overall CoV trend, with greater response variability in the low-intelligibility speaker group compared to the high-intelligibility speaker group. It is interesting that both groups had the largest CoV values in the clear condition relative to the other nonhabitual conditions. However, Levene’s test indicated that listener responses in the clear condition were equally variable across groups. One explanation may lie in the instructions used to elicit the clear condition. In the current study, the clear condition was elicited by instructing speakers to “speak clearly” without further instruction. Ferguson and Kewley-Port (2002) suggested that such clear speech instructions may be too vague for some speakers. As discussed above, if a trade-off occurs between speaker and listener contributions, the analysis of listener variability in the clear condition could suggest that the instructions to “speak clearly” elicited a larger variety of speaker strategies and, in turn, greater variability in listener responses. Objective measures of intelligibility are frequently used in the clinical setting. Therefore, measures such as listener variability are clinically relevant. Results from the current study suggest the importance of having multiple listeners judge intelligibility for a given speaker. Sampling multiple listeners, especially for speakers who are hard to understand, may help provide a more accurate judgment of intelligibility.

Limitations and Future Research

A few factors should be taken into consideration when interpreting the current results. Young, healthy adult speakers and listeners from the western New York area participated. The ability to generalize findings to other age groups, populations, and/or languages is unknown. Furthermore, all participants were neurologically healthy individuals with normal hearing; therefore, intelligibility benefits reported in the current study might not be representative of other populations, such as speakers with dysarthria or listeners with a hearing impairment. Generalization of findings to clinical populations therefore should be made cautiously. Future studies that examine the effects of clear speech instruction for various clinical populations are needed.

Other factors to consider include the speech stimuli and the type of intelligibility measure reported. In this study, speech stimuli consisted of a set of 18 sentences read from a computer screen by each speaker. Therefore, the results might not be representative of real-life situations or clear speech training programs. Furthermore, transcription tasks may not reflect listener comprehension or functional communication of naturally produced speech (Hustad, 2008; Hustad & Weismer, 2007). Although previous studies have often used similar orthographic transcription tasks (Beukelman et al., 2002; Bradlow & Bent, 2002; Bradlow et al., 1996; Caissie et al., 2005; Chen, 1980; Gagné et al., 1994; Picheny et al., 1985; Uchanski et al., 1996), alternate perceptual measures of intelligibility have also been reported in the literature, such as forced-choice tasks (Ferguson, 2004; Ferguson & Kewley-Port, 2002; Maniwa et al., 2008) and scaling tasks (Julien & Munson, 2012; Sussman & Tjaden, 2012). Thus, intelligibility scores reported in the current study might not be replicated with alternative measures of intelligibility. Future research is needed to determine whether results from the current study can be reproduced using a variety of intelligibility/comprehension measures.

Consistent with our hypothesis, perceptual findings paralleled the acoustic findings reported by Lam et al. (2012). In Lam et al.’s study, nonhabitual conditions elicited varying magnitudes of acoustic adjustment. For all but one acoustic measure, the greatest changes were observed in the overenunciate condition, followed by the hearing impaired and clear conditions. SPL, however, increased most in the hearing impaired condition, followed by the overenunciate condition and the clear condition. The current perceptual study provides evidence that listeners are sensitive to the speech production adjustments elicited by different clear speech instructions. On the basis of the acoustic data reported by Lam et al. and the current perceptual results, one might predict that increased vowel space area, increased segment durations, greater vowel spectral change, and decreased articulation rate are driving the clear speech benefit observed in the overenunciate condition, whereas an increased SPL might contribute more to the clear speech benefit in the hearing impaired condition. Further research is warranted, however, to determine whether specific acoustic changes can account for the intelligibility benefit.

Summary

Different clear speech instructions elicited varying levels of clear speech benefit for the listener. On average, the greatest intelligibility benefit for listeners was observed in the overenunciate condition, followed by the hearing impaired condition and then the clear condition, relative to the habitual condition. The results support the idea that some cross-study variation of clear speech benefit may be due to differences in instruction. As discussed above, the variability of listener responses was greater for speakers with low habitual intelligibility compared to speakers with high habitual intelligibility. This finding suggests the importance of having a variety of listeners provide judgments of intelligibility, especially when speakers are difficult to understand. Furthermore, the overall trend of the greatest benefits in the overenunciate condition, followed by the hearing impaired condition and then the clear condition, was observed for all but two speakers. These two speakers showed a different trend across the nonhabitual conditions, in support of the idea that some speakers might require additional clear speech instruction (Ferguson & Kewley-Port, 2002). In addition, the same two speakers produced opposite intelligibility effects in the hearing impaired condition, further suggesting that some speakers might prefer one instruction set over another. Thus, the results are clinically relevant, such that determining a client’s instructional preference might be vital in maximizing intelligibility benefits from clear speech training programs.

Although the purpose of this study was to investigate intelligibility benefits from the listener’s standpoint, we know that these speakers were able to produce a variety of acoustic changes for different clear speech instructions (Lam et al., 2012), and in turn these production changes as a whole affected a listener’s perception of intelligibility. With the knowledge that instructions matter to the listener, further research is needed to address the magnitude of change for particular speech production measures and its relationship to the magnitude of clear speech benefit heard by the listener.

Acknowledgments

This research was supported by National Institute on Deafness and Other Communication Disorders Grant R01DC004689. Portions of this study were presented at the June 2011 Conference on Speech Motor Control, Groningen, the Netherlands. We thank Joan Sussman and Elaine Stathopoulos for their valuable comments on a previous version of this article.

References

- American National Standards Institute. Specifications for audiometers. New York: Author; 2004. ANSI S3.6-2004.

- Beukelman DR, Fager S, Ullman C, Hanson E, Logemann J. The impact of speech supplementation and clear speech on the intelligibility and speaking rate of people with traumatic brain injury. Journal of Medical Speech-Language Pathology. 2002;10:237–242.

- Bochner JH, Garrison WM, Sussman JE, Burkard RF. Development of materials for the clinical assessment of speech recognition: The Speech Sound Pattern Discrimination Test. Journal of Speech, Language, and Hearing Research. 2003;46:889–900. doi: 10.1044/1092-4388(2003/069).

- Borrie SA, McAuliffe MJ, Liss JM. Perceptual learning of dysarthric speech: A review of experimental studies. Journal of Speech, Language, and Hearing Research. 2012;55:290–305. doi: 10.1044/1092-4388(2011/10-0349).

- Bradlow AR, Bent T. The clear speech effect for non-native listeners. The Journal of the Acoustical Society of America. 2002;112:272–284. doi: 10.1121/1.1487837.

- Bradlow AR, Kraus N, Hayes E. Speaking clearly for children with learning disabilities: Sentence perception in noise. Journal of Speech, Language, and Hearing Research. 2003;46:80–97. doi: 10.1044/1092-4388(2003/007).

- Bradlow AR, Torretta GM, Pisoni DB. Intelligibility of normal speech: I. Global and fine-grained acoustic-phonetic talker characteristics. Speech Communication. 1996;20:255–272. doi: 10.1016/S0167-6393(96)00063-5.

- Caissie R, Campbell MM, Frenette WL, Scott L, Howell I, Roy A. Clear speech for adults with a hearing loss: Does intervention with communication partners make a difference? Journal of the American Academy of Audiology. 2005;16:157–171. doi: 10.3766/jaaa.16.3.4.

- Chen FR. Unpublished master’s thesis. Massachusetts Institute of Technology; Cambridge, MA: 1980. Acoustic characteristics and intelligibility of clear and conversational speech.

- Choe Y. Doctoral dissertation. 2008. Individual differences in understanding speech. Retrieved from ProQuest Dissertations and Theses. (Accession/Order No. 3319473)

- Dromey C. Articulatory kinematics in patients with Parkinson disease using different speech treatment approaches. Journal of Medical Speech-Language Pathology. 2000;8:155–161.

- Feijoo S, Fernandez S, Balsa R. Acoustic and perceptual study of phonetic integration in Spanish voiceless stops. Speech Communication. 1999;27:1–18.

- Ferguson SH. Talker differences in clear and conversational speech: Vowel intelligibility for normal-hearing listeners. The Journal of the Acoustical Society of America. 2004;116(4 Pt 1):2365–2373. doi: 10.1121/1.1788730.

- Ferguson SH, Kewley-Port D. Vowel intelligibility in clear and conversational speech for normal-hearing and hearing-impaired listeners. The Journal of the Acoustical Society of America. 2002;112:259–271. doi: 10.1121/1.1482078.

- Ferguson SH, Kewley-Port D. Talker differences in clear and conversational speech: Acoustic characteristics of vowels. Journal of Speech, Language, and Hearing Research. 2007;50:1241–1255. doi: 10.1044/1092-4388(2007/087).

- Frank T, Craig CH. Comparison of the Auditec and Rintelmann recordings of the NU-6. Journal of Speech and Hearing Disorders. 1984;49:267–271. doi: 10.1044/jshd.4903.267.

- Gagné J, Masterson V, Munhall KG, Bilida N, Querengesser C. Across talker variability in auditory, visual, and audiovisual speech intelligibility for conversational and clear speech. Journal of the Academy of Rehabilitative Audiology. 1994;27:135–158.

- Gagné JP, Querengesser C, Folkeard P, Munhall KG, Zandipour M. Auditory, visual, and audiovisual speech intelligibility for sentence-length stimuli: An investigation of conversational and clear speech. The Volta Review. 1995;97:33–51.

- GoldWave, Inc. GoldWave (Version 5.58) [Computer software] St. John’s, Newfoundland, Canada: Author; 2010.

- Harnsberger JD, Wright R, Pisoni DB. A new method for eliciting three speaking styles in the laboratory. Speech Communication. 2008;50:323–336. doi: 10.1016/j.specom.2007.11.001.

- Helfer KS. Auditory and auditory–visual perception of clear and conversational speech. Journal of Speech, Language, and Hearing Research. 1997;40:432–443. doi: 10.1044/jslhr.4002.432.

- Hustad KC. A closer look at transcription intelligibility for speakers with dysarthria: Evaluation of scoring paradigms and linguistic errors made by listeners. American Journal of Speech-Language Pathology. 2006;15:268–277. doi: 10.1044/1058-0360(2006/025).

- Hustad KC. The relationship between listener comprehension and intelligibility scores for speakers with dysarthria. Journal of Speech, Language, and Hearing Research. 2008;51:562–573. doi: 10.1044/1092-4388(2008/040).

- Hustad KC, Weismer G. Interventions to improve intelligibility and communicative success for speakers with dysarthria. In: Weismer G, editor. Motor speech disorders. San Diego, CA: Plural Publishing; 2007. pp. 217–228.

- Huttunen K, Keränen H, Väyrynen E, Pääkkönen R, Leino T. Effect of cognitive load on speech prosody in aviation: Evidence from military simulator flights. Applied Ergonomics. 2011;42:348–357. doi: 10.1016/j.apergo.2010.08.005.

- IEEE. IEEE Recommended Practice for Speech Quality Measurements. IEEE Transactions on Audio and Electroacoustics. 1969;17:225–246.

- Johnson K. MMScript [Computer software] Buffalo, NY: Department of Communicative Disorders and Sciences, University at Buffalo; 2010.

- Johnson K, Flemming E, Wright R. The hyperspace effect: Phonetic targets are hyperarticulated. Language. 1993;69:505–528.

- Julien HM, Munson B. Modifying speech to children based on their perceived phonetic accuracy. Journal of Speech, Language, and Hearing Research. 2012;55:1836–1849. doi: 10.1044/1092-4388(2012/11-0131).

- Kent RD, Kent JF, Weismer G, Martin RE, Sufit RL, Brooks BR, Rosenbek JC. Relationships between speech intelligibility and the slope of the second-formant transitions in dysarthric subjects. Clinical Linguistics & Phonetics. 1989;3:347–358.

- Krause JC, Braida LD. Investigating alternative forms of clear speech: The effects of speaking rate and speaking mode on intelligibility. The Journal of the Acoustical Society of America. 2002;112:2165–2172. doi: 10.1121/1.1509432.

- Krause J, Braida L. Acoustic properties of naturally produced clear speech at normal speaking rates. The Journal of the Acoustical Society of America. 2004;115:362–378. doi: 10.1121/1.1635842.

- Krause JC, Braida LD. Evaluating the role of spectral and envelope characteristics in the intelligibility advantage of clear speech. The Journal of the Acoustical Society of America. 2009;125:3346–3357. doi: 10.1121/1.3097491.

- Lam J, Tjaden K, Wilding G. Acoustics of clear speech: Effect of instruction. Journal of Speech, Language, and Hearing Research. 2012;55:1807–1821. doi: 10.1044/1092-4388(2012/11-0154).

- Lindblom B. Explaining phonetic variation: A sketch of the H & H theory. In: Hardcastle WJ, Marchal A, editors. Speech production and speech modeling. Dordrecht, the Netherlands: Kluwer Academic; 1990. pp. 403–439.

- Maniwa K, Jongman A, Wade T. Perception of clear fricatives by normal-hearing and simulated hearing-impaired listeners. The Journal of the Acoustical Society of America. 2008;123:1114–1125. doi: 10.1121/1.2821966.

- McHenry M. An exploration of listener variability in intelligibility judgments. American Journal of Speech-Language Pathology. 2011;20:119–123. doi: 10.1044/1058-0360(2010/10-0059).

- Milenkovic P. TF32 [Computer software] Madison: Department of Electrical and Computer Engineering, University of Wisconsin—Madison; 2002.

- Moon S, Lindblom B. Interactions between duration, context, and speaking style in English stressed vowels. The Journal of the Acoustical Society of America. 1994;96:40–55.

- Oviatt S, MacEachern M, Levow GA. Predicting hyperarticulate speech during human–computer error resolution. Speech Communication. 1998;24:87–110.

- Payton KL, Uchanski RM, Braida LD. Intelligibility of conversational and clear speech in noise and reverberation for listeners with normal and impaired hearing. The Journal of the Acoustical Society of America. 1994;95:1581–1592. doi: 10.1121/1.408545.

- Perkell JS. Movement goals and feedback and feedforward control mechanisms in speech production. Journal of Neurolinguistics. 2012;25:382–407. doi: 10.1016/j.jneuroling.2010.02.011.

- Perkell JS, Zandipour M, Matthies ML, Lane H. Economy of effort in different speaking conditions: I. A preliminary study of intersubject differences and modeling issues. The Journal of the Acoustical Society of America. 2002;112:1627–1641. doi: 10.1121/1.1506369.

- Picheny MA, Durlach NI, Braida LD. Speaking clearly for the hard of hearing: I. Intelligibility differences between clear and conversational speech. Journal of Speech and Hearing Research. 1985;28:96–103. doi: 10.1044/jshr.2801.96.

- Picheny MA, Durlach NI, Braida LD. Speaking clearly for the hard of hearing: II. Acoustic characteristics of clear and conversational speech. Journal of Speech and Hearing Research. 1986;29:434–445. doi: 10.1044/jshr.2904.434.

- Schum DJ. Beyond hearing aids: Clear speech training as an intervention strategy. The Hearing Journal. 1997;50:36–38.

- Smiljanić R, Bradlow AR. Speaking and hearing clearly: Talker and listener factors in speaking style changes. Language & Linguistics Compass. 2009;3:236–264. doi: 10.1111/j.1749-818X.2008.00112.x.

- Southwood MH. Unpublished doctoral dissertation. University of Wisconsin—Madison; 1990. A term by any other name: Bizarreness, acceptability, naturalness and normalcy judgments of speakers with amyotrophic lateral sclerosis.

- Sussman JE, Tjaden K. Perceptual measures of speech from individuals with Parkinson’s disease and multiple sclerosis: Intelligibility and beyond. Journal of Speech, Language, and Hearing Research. 2012;55:1208–1219. doi: 10.1044/1092-4388(2011/11-0048).

- Tjaden KK, Liss JM. The role of listener familiarity in the perception of dysarthric speech. Clinical Linguistics & Phonetics. 1995;9:139–154.

- Turner GS, Tjaden K, Weismer G. The influence of speaking rate on vowel space and speech intelligibility for individuals with amyotrophic lateral sclerosis. Journal of Speech and Hearing Research. 1995;38:1001–1013. doi: 10.1044/jshr.3805.1001.

- Uchanski RM. Clear speech. In: Pisoni DB, Remez RE, editors. The handbook of speech perception. Malden, MA: Blackwell; 2005. pp. 207–235.

- Uchanski RM, Choi SS, Braida LD, Reed CM, Durlach NI. Speaking clearly for the hard of hearing: IV. Further studies of the role of speaking rate. Journal of Speech and Hearing Research. 1996;39:494–509. doi: 10.1044/jshr.3903.494.

- VanNuffelen G, De Bodt M, Vanderwegen J, Van de Heyning P, Wuyts F. Effect of rate control on speech production and intelligibility in dysarthria. Folia Phoniatrica et Logopaedica. 2010;62:110–119. doi: 10.1159/000287209.

- Weismer G, Jeng JY, Laures JS, Kent RD, Kent JF. Acoustic and intelligibility characteristics of sentence production in neurogenic speech disorders. Folia Phoniatrica et Logopaedica. 2001;53:1–18. doi: 10.1159/000052649.

- Whitehall TL, Wong CCY. Contributing factors to listener effort for dysarthric speech. Journal of Medical Speech-Language Pathology. 2006;14:335–341.

- Winer B. Statistical principles of experimental design. New York, NY: McGraw-Hill; 1981.

- Yorkston KM, Beukelman DR. Assessment of Intelligibility of Dysarthric Speech. Austin, TX: Pro-Ed; 1984.

- Yorkston KM, Beukelman DR. Sentence Intelligibility Test. Lincoln, NE: Tice Technologies; 1996.

- Zeng FG, Liu S. Speech perception in individuals with auditory neuropathy. Journal of Speech, Language, and Hearing Research. 2006;49:367–380. doi: 10.1044/1092-4388(2006/029).