Abstract

Traditionally, physical features in musical chords have been proposed to be at the root of consonance perception. Alternatively, recent studies suggest that different types of experience modulate some perceptual foundations for musical sounds. The present study tested whether the mechanisms involved in the perception of consonance are present in an animal with no extensive experience with harmonic stimuli and a relatively limited vocal repertoire. In Experiment 1, rats were trained to discriminate consonant from dissonant chords and tested to explore whether they could generalize such discrimination to novel chords. In Experiment 2, we tested if rats could discriminate between chords differing only in their interval ratios and generalize them to different octaves. To contrast the observed pattern of results, human adults were tested with the same stimuli in Experiment 3. Rats successfully discriminated across chords in both experiments, but they did not generalize to novel items in either Experiment 1 or Experiment 2. On the contrary, humans not only discriminated among both consonance – dissonance categories, and among sets of interval ratios, they also generalized their responses to novel items. These results suggest that experience with harmonic sounds may be required for the construction of categories among stimuli varying in frequency ratios. However, the discriminative capacity observed in rats suggests that at least some components of auditory processing needed to distinguish chords based on their interval ratios are shared across species.

Keywords: consonance, interval ratio, auditory discrimination, comparative cognition, rats

Introduction

Music has been claimed to be a general ability unique to our species. Music production has been found in every known culture (Patel, 2008), and similarities in the perception of musical traits have been identified in listeners with very diverse backgrounds (Harwood, 1976). Among these traits, consonance, which is considered an aesthetically pleasing sensation produced by some musical chords, has emerged as a common theme in music perception. Several studies have reported concurring judgments across different populations about the consonance ordering of tone combinations (Cousineau, McDermott, & Peretz, 2012; Malmberg, 1918; Roberts, 1986), with some combinations of musical notes being consistently ranked as consonant (pleasant) and other combinations as dissonant (unpleasant). Thus, musical chords can be ordered according to aesthetic judgments of their degree of consonance. In fact, this ordering, and thus the perception of consonance, is so pervasive that it has been considered one of the musical universals (Krumhansl, 1990; Malmberg, 1918).

The study of the basis of consonance processing has deep historical roots. Pythagoras proposed that musical intervals with small integer ratios (the ratio of the frequencies of the pitches in a musical interval) tend to be more consonant (e.g. the ratio of frequencies in a perfect fourth is 4:3), while intervals with larger integer ratios are dissonant (e.g. the ratio of frequencies in a major seventh is 15:8). Helmholtz (1877) claimed that dissonance is perceived as unpleasant due to a sensation of roughness that comes from rapid amplitude fluctuations called “beats” that are produced when some notes are combined. More recent studies suggest that harmonicity, the pattern of component frequencies of the notes that compose a chord, also accounts for the degree of consonance or dissonance that is perceived by a listener (Cousineau et al., 2012; McDermott, Lehr, & Oxenham, 2010). A common idea underlying these conceptualizations is that the key to the perception of consonance is to be found in the physical properties of the sound. That is, certain acoustic properties that are produced when two or more musical notes are combined trigger the perception of consonance and dissonance.

However, other studies have taken complementary approaches, suggesting that the physical properties of the sound by themselves may not completely explain the perception of musical universals (Gordon, Webb, & Wolpert, 1992; Hogden, Loqvist, Gracco, Zlokarnik, Rubin, & Saltzman, 1996). Instead, researchers have focused on the role that experience may play in consonance perception. McLachlan, Marco, Light and Wilson (2013) have recently provided compelling evidence that adaptation of the sensory system through experience plays a central role in the development of harmony. The authors propose that recognition mechanisms are to be found at the basis of consonance judgments. In their experiments, McLachlan and collaborators showed that the listeners' familiarity with chords facilitated pitch processing, leading to an enhanced perception of a sound as consonant. Failure in the recognition mechanisms, in terms of incongruence between pitch processing and melodic priming, would lead to an increase in dissonance perception. Another type of experience that has been proposed to be important for the perception of musical universals, including consonance, is that provided by the statistical structure of human vocalizations (the periodic acoustic stimuli to which humans are most exposed; Schwartz, Howe, & Purves, 2003). For example, in Schwartz et al. (2003), an analysis of the speech signal revealed that the probability distribution of amplitude-frequency combinations in human utterances predicts consonance ordering. From this point of view, at least some musical universals reflect a statistical structure that is present in the speech signal. The common idea among these proposals is that perceptual differences between consonant and dissonant chords do not arise from purely physical attributes of the chords, like differences in interval ratios. Rather, experience with harmonic stimuli plays a fundamental role in how such differences arise.

Given how pervasive consonance is in humans, several comparative studies have tackled the question of how general the perception of consonance and dissonance of musical chords is across species. Most of these studies have used primates and birds as their models. Research has shown that Japanese monkeys (Macaca fuscata, Izumi, 2000), Java sparrows (Padda oryzivora, Watanabe, Uozumi, & Tanaka, 2005), European starlings (Sturnus vulgaris, Hulse, Bernard, & Braaten, 1995), black-capped chickadees (Poecile atricapillus, Hoeschele, Cook, Guillette, Brooks, & Sturdy, 2012), and pigeons (Columba livia, Brooks & Cook, 2009) are able to discriminate across chords, and this capacity may be based on sensory consonance. In parallel, different neural responses have been observed for consonant and dissonant stimuli in macaque monkeys (Macaca fascicularis, Fishman et al., 2001). So, the ability to discriminate consonant from dissonant chords is certainly not uniquely human.

A question complementary to whether other animals can discriminate between consonant and dissonant chords is whether they also prefer one over the other (as has been observed in human infants; Trainor & Heinmiller, 1998; Trainor, Tsang, & Cheung, 2002; Zentner & Kagan, 1996). There is evidence of spontaneous preference for consonant over dissonant melodies in an infant chimpanzee (Pan troglodytes, Sugimoto et al., 2009) and newly hatched domestic chicks (Gallus gallus, Chiandetti & Vallortigara, 2011). In contrast, cotton-top tamarins (Saguinus oedipus, McDermott & Hauser, 2004) and Campbell's monkeys (Cercopithecus campbelli, Koda et al., 2013) did not show a preference for consonant over dissonant stimuli. One difference across these experiments is that the authors of the latter studies (Koda et al., 2013; McDermott & Hauser, 2004) used two-note chords as stimuli, and not complete melodies like the ones used in the former preference experiments (Chiandetti & Vallortigara, 2011; Sugimoto et al., 2009). So it might be the case that animals need a large amount of contrasting information (such as that present in entire melodies, but probably lacking in two-note chords) to display a preference for consonance. It is still an open question what minimal amount of information, and which specific features of the signal, other species need before they exhibit a preference for consonant stimuli.

Furthermore, results on consonance processing have primarily been observed in avian species with a complex vocal system that may have converging mechanisms with those of human speech (Bolhuis, Okanoya, & Scharff, 2010). Research on consonance perception in species with a more limited vocal repertoire has been scarce. Rats produce a limited number of vocalizations, mostly comprising ultrasonic calls (that can reach up to 50 kHz) and a few audible squeaks. To produce these squeaks, rats use vibrating structures of the larynx that give them some harmonic structure. However, they produce the more frequent ultrasonic vocalizations using a "whistle-like" laryngeal mechanism that produces pure tones (Knutson, Burgdorf & Panksepp, 2002). More importantly, there are no clear indications that such vocalizations reach the complexity, in terms of concatenation and combination of different units, that may be found in birdcalls. Fannin and Braud (1971) reported that albino rats (Rattus norvegicus) showed a preference for consonant over dissonant tones. Similarly, Zentner and Kagan (1998) mentioned an experiment from 1976 by Borchgrevink that also observed such a preference in rats. However, Fannin and Braud (1971) used only two chords (one consonant and one dissonant) in their experiment, and a description of the stimuli and experimental procedure in Borchgrevink's study is missing. So, in addition to the question of which features of the stimuli other species use while processing consonant and dissonant stimuli, it is still unknown to what extent such findings can be generalized to other species such as rodents.

In the present study we explored the extent to which an organism with no extensive experience with harmonic stimuli properly perceives and differentiates consonance from dissonance. We thus conducted three experiments. In Experiment 1, we tested whether rats have the capacity to discriminate consonant from dissonant chords and to generalize this discrimination to novel chords they have never heard before. In Experiment 2, we tested new rats to see whether they can discriminate between two sets of dissonant stimuli differing only in their interval ratios. Finally, in Experiment 3 we presented the same stimuli used in the two previous experiments to human adults. Our aim was to assess how such stimuli may be processed across different species. If consonance processing is based solely on the physical properties of the sound, species with no experience with harmonic stimuli may exhibit proper discrimination and generalization of consonant and dissonant chords. On the contrary, if experience plays a role in consonance perception, we could find difficulties in consonance and dissonance processing in an animal such as the rat.

Experiment 1. Discrimination between consonant and dissonant stimuli

The present experiment was designed to test whether rats have the capacity to discriminate consonant from dissonant chords. We also tested whether rats can generalize such discrimination to novel chords.

Subjects

Subjects were 21 Long-Evans rats (Rattus norvegicus), 6 males and 15 females of five months of age. Rats were caged in pairs of the same sex and were exposed to a 12h/12h light-dark cycle. Rats had water ad libitum and were food-deprived, maintained at 80-85% of their free-feeding weights. Food was delivered after each training session.

Apparatus

Rats were placed in Letica L830-C Skinner boxes (Panlab S. L., Barcelona, Spain) while custom-made PC software (RatBoxCBC) controlled the stimulus presentations, recorded the lever-press responses and provided reinforcement. A Pioneer Stereo Amplifier A-445 and two E. V. (S-40) loudspeakers (with a response range from 85 Hz to 20 kHz), located beside the boxes, were used to present the stimuli at 68 dB.

Stimuli

During discrimination training, consonant and dissonant stimuli were used. Stimuli were the same ones used by McDermott and Hauser (2004). Each stimulus was composed of a sequence of three two-note chords (see Table 1). Consonant chords were the octave (C-C'), the fifth (C-G), and the fourth (C-F), while dissonant chords were the minor second (C-C#), the tritone (C-F#) and the minor ninth (C-C'#). Each chord was 1 second long. To increase variability during training, the stimuli were implemented at different octaves, so their absolute pitch changed while their relative pitch remained constant. Pitch can be categorized in two ways: relative and absolute. Relative pitch refers to the relation between two or more notes, while absolute pitch concerns the ability to determine note pitch in the absence of any external reference. Thus, by implementing the stimuli at different octaves, we ensured that the principal cue for the discrimination task was the relative pitch, that is, the frequency intervals between the notes, and not the absolute pitch level. Also, by using whole-octave transpositions across the chromatic scale, the contour, intervals and chroma of the chords were preserved, while only the fundamental frequency changed. Three versions of each stimulus were made, each one produced in a different octave (C3, C4 and C5). For each octave, there were 6 different orderings of the three chords in each category (e.g. consonant: octave-fifth-fourth, fifth-octave-fourth; dissonant: minor second-tritone-minor ninth, tritone-minor second-minor ninth). There were thus 36 stimulus sequences in total, 18 consonant and 18 dissonant.

For the generalization test we made 8 new sequences, 4 consonant and 4 dissonant (see Table 1). Test sequences were made by concatenating 3 chords, just like the training sequences. However, we used 3 new consonant and 3 new dissonant chords different from those used during training. Consonant test sequences were composed of the major third (C-E), the minor third (C-E♭) and the major sixth (C-A). Dissonant test sequences were composed of the major seventh (C-B), the minor seventh (C-B♭) and the major second (C-D). Four of the test sequences (2 consonant and 2 dissonant) were implemented in the C6 octave, while the remaining 4 test sequences were implemented in the C7 octave. Thus, the generalization test presented stimuli built, like the training stimuli, from two-note intervals on C, but with higher absolute pitch levels. All chords fell within the hearing range of the animals (Heffner, Heffner, Contos & Ott, 1994). Stimuli were made with GarageBand software for Mac OS X (Apple Inc.). The grand piano setting was used in the key of C major. Each stimulus (sequence of 3 two-note chords) was 3 seconds long, with a tempo of 60 beats per minute.
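The count of 36 training sequences follows directly from the design described above (2 categories, 3 octaves, and every ordering of 3 chords). The following minimal sketch enumerates them; the chord labels and the `build_training_set` helper are illustrative names, not part of the original materials.

```python
from itertools import permutations

# Chords named in the Stimuli section; the strings are illustrative labels only.
TRAINING_CHORDS = {
    "consonant": ["octave", "fifth", "fourth"],
    "dissonant": ["minor second", "tritone", "minor ninth"],
}
TRAINING_OCTAVES = ["C3", "C4", "C5"]


def build_training_set(chords=TRAINING_CHORDS, octaves=TRAINING_OCTAVES):
    """Enumerate every (category, octave, chord order) training sequence."""
    stimuli = []
    for category, chord_names in chords.items():
        for octave in octaves:
            # 3 chords can be ordered in 3! = 6 ways.
            for order in permutations(chord_names):
                stimuli.append(
                    {"category": category, "octave": octave, "chords": order}
                )
    return stimuli


training_set = build_training_set()
n_consonant = sum(s["category"] == "consonant" for s in training_set)
print(len(training_set), n_consonant)
```

Each category contributes 3 octaves × 6 orderings = 18 sequences, which gives the 18 consonant and 18 dissonant stimuli reported in the text.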

Table 1. Stimuli Used During Training and Test in Experiments 1 and 3.

| Phase | Consonant interval | Ratio | Dissonant interval | Ratio |
|---|---|---|---|---|
| Training | 8th (C-C') | 2:1 | Tritone (C-F#) | 45:32 |
| Training | 5th (C-G) | 3:2 | Minor 2nd (C-C#) | 16:15 |
| Training | 4th (C-F) | 4:3 | Minor 9th (C-C'#) | 32:15 |
| Test | Major 3rd (C-E) | 5:4 | Major 7th (C-B) | 15:8 |
| Test | Minor 3rd (C-E♭) | 6:5 | Minor 7th (C-B♭) | 16:9 |
| Test | Major 6th (C-A) | 5:3 | Major 2nd (C-D) | 9:8 |

Note. Chords, with notes in parentheses and frequency ratios, used in Experiments 1 and 3. Rats and human participants were trained to discriminate consonant from dissonant chords. During the test, subjects were required to discriminate among new chords implemented at different octaves.

Procedure

Before discrimination training, rats were trained to press a lever in order to obtain food. This continued until rats reached a stable response rate at a variable ratio of 10 (+/- 5), meaning they obtained reinforcement each time they pressed the lever between 5 and 15 times. Discrimination training consisted of 30 sessions, 1 session per day. In each training session, rats were placed individually in a Skinner box. Due to time constraints, only 30 stimuli were presented in each training session, 15 consonant and 15 dissonant. However, all 36 stimuli were presented in a balanced manner across the thirty training sessions. The stimuli were played with an inter-stimulus interval of 1 minute. Stimulus presentation was balanced so that no more than two stimuli of the same type (consonant or dissonant) would follow each other. Rats received food reinforcement for lever-pressing responses after the presentation of each consonant stimulus. No food was delivered after the presentation of dissonant stimuli, regardless of lever presses. After the 30 sessions of the training phase, 1 generalization test session was run. It consisted of the presentation of 4 new consonant and 4 new dissonant stimuli played in different octaves (C6 and C7) from those used in discrimination training. The generalization block was 30 trials long. The 8 test items replaced 8 stimuli from the training list. The stimuli were replaced in such a way that, as in the training phase, no more than 2 stimuli of the same type followed each other. Importantly, rats did not receive any food reward when the test stimuli were played.
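The ordering constraint described above (15 stimuli of each type per session, with no more than two of the same type in a row) can be illustrated with a short sketch. The original RatBoxCBC software is not described in detail, so the rejection-sampling approach and the function names below are assumptions made purely for illustration.

```python
import random


def max_run_length(labels):
    """Length of the longest run of identical consecutive labels (non-empty list)."""
    longest = current = 1
    for previous, label in zip(labels, labels[1:]):
        current = current + 1 if label == previous else 1
        longest = max(longest, current)
    return longest


def balanced_order(n_per_type=15, max_run=2, rng=None, max_attempts=100_000):
    """Shuffle trial types until no run of one type exceeds max_run.

    Hypothetical helper: plain rejection sampling, not the authors' method.
    """
    rng = rng or random.Random()
    labels = ["consonant"] * n_per_type + ["dissonant"] * n_per_type
    for _ in range(max_attempts):
        rng.shuffle(labels)
        if max_run_length(labels) <= max_run:
            return list(labels)
    raise RuntimeError("no valid order found within max_attempts")


session_order = balanced_order(rng=random.Random(0))
print(session_order[:6])
```

Rejection sampling is simple but wasteful: only a small fraction of random shuffles satisfies the constraint, so a constructive method would be preferable if many sessions had to be generated.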

Results and Discussion

To analyze the data, the discrimination ratio (DR) between the reinforced (consonant) and non-reinforced (dissonant) stimuli was calculated. The DRs were obtained by dividing the number of correct lever-pressing responses (responses to reinforced stimuli) by the total number of responses (sum of responses to reinforced and non-reinforced stimuli) of each rat. A DR higher than 0.5 indicates more lever presses to reinforced stimuli. Interestingly, one pattern that has been observed using similar procedures (e.g. De la Mora & Toro, 2013) is that during the first training sessions, the mean proportions of responses to reinforced stimuli are below 0.5. This means rats were pressing the lever more often after non-reinforced stimuli than after reinforced stimuli during the initial training sessions. This pattern is likely a result of the lever-press training that takes place before the discrimination phase begins. As we explained above, rats were trained to press the lever to obtain food without sound stimuli for more than a month before the discrimination phase. Rats obtained food each time they pressed the lever between 5 and 15 times. Thus, rats were used to obtaining food for lever pressing without any acoustic stimuli distinguishing reinforced from non-reinforced trials. Once discrimination training begins, rats get food for lever-pressing responses on reinforced trials only. During non-reinforced trials, however, rats keep pressing the lever in an attempt to obtain reinforcement, which leads to higher response rates on those trials. It is only when rats begin to use acoustic stimuli as reliable cues to food delivery that responses to non-reinforced stimuli decrease.
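As a minimal sketch of the discrimination ratio defined above, the function below divides responses to reinforced stimuli by all responses. The function name and the press counts in the usage line are hypothetical and are not data from the study.

```python
def discrimination_ratio(reinforced_presses, nonreinforced_presses):
    """Proportion of lever presses that followed reinforced stimuli.

    A value of 0.5 means equal responding to both stimulus types.
    """
    total = reinforced_presses + nonreinforced_presses
    if total == 0:
        raise ValueError("no lever presses recorded for this rat")
    return reinforced_presses / total


# Hypothetical counts for one rat in one session (illustration only).
example_dr = discrimination_ratio(reinforced_presses=30, nonreinforced_presses=10)
print(example_dr)  # 0.75
```

A rat that presses more after non-reinforced stimuli, as described for the first sessions, would yield a value below 0.5.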

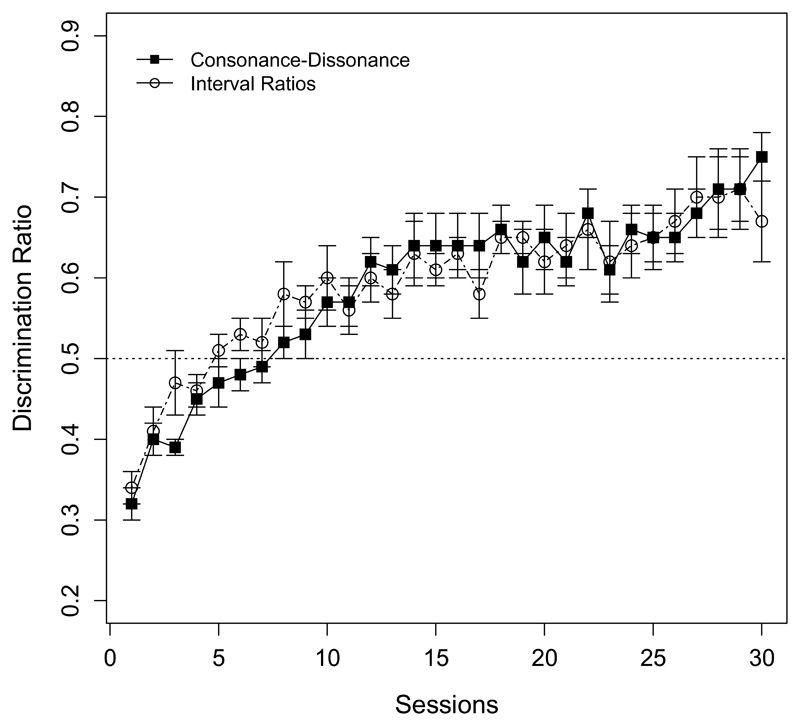

For the training phase, a repeated measures ANOVA over the discrimination ratio values was run with Session (1-30) as within-subject factor. The analysis showed a significant difference between sessions with an increase in responses to consonant stimuli (F (29, 580) = 15.27, p < 0.001; see Figure 1). For the test session, only data from test stimuli were analyzed. A one-sample t test showed that the mean proportion of responses to novel consonant stimuli was not significantly above what is expected by chance (M = 0.52, SD = 0.13; t (20) = 1.01, p = 0.32, 95% CI [0.47, 0.58], d = 0.15). We also compared the DR of the test items to the DR of the final training block (M = 0.75, SD = 0.10). The analysis revealed significant differences between them (t (20) = 5.65, p < 0.01, 95% CI [0.14, 0.31], d = 1.92). These results suggest that, during the training phase, rats successfully learned to discriminate consonant from dissonant chords. Nevertheless, performance during the test indicates that rats did not generalize this discrimination to novel consonant and dissonant chords to which they had not been exposed.

Figure 1.

Mean proportion (and standard error bars) of rats' responses during 30 training sessions to target stimuli in Experiment 1 (consonant and dissonant chords; black squares) and Experiment 2 (different sets of dissonant stimuli; white circles). A performance of 0.5 suggests no discrimination between stimuli. Rats successfully learned to discriminate consonant from dissonant chords, as well as between two sets of different dissonant stimuli.

It remains unclear which specific features rats use to tell consonant from dissonant stimuli during training. The fact that rats do not generalize to novel stimuli casts some doubt on whether they grouped consonant and dissonant stimuli into categories. Instead, animals may be using the specific frequency intervals between tones as their only cue for discrimination, so rats could be learning specific intervals rather than the general notion of consonance or dissonance. If so, the degree to which a given chord may be classified as consonant or dissonant may not be discriminative at all in the present experiment. To test this alternative, Experiment 2 was run using stimuli that differed in their intervals between tones but not in terms of consonance and dissonance.

Experiment 2. Discrimination between interval ratios

A second experiment was carried out to test whether rats are able to discriminate between two sets of dissonant stimuli (set A and set B) that differed in their interval ratios between tones. We also tested whether rats can generalize such discrimination to the same set of chords played at different octaves (that is, whole-octave transpositions). The test thus explored whether rats generalized the discrimination to stimuli with different absolute frequencies but the same frequency ratios. The aim of this experiment was to study whether rats are able to process the stimuli based on interval ratios. Using two sets of dissonant stimuli allowed us to explore whether rats could still discriminate among chords even when no clearly defined consonant and dissonant categories differentiate the stimuli.

Subjects

Subjects were 18 Long-Evans rats, 7 males and 11 females of 5 months of age.

Stimuli

As in Experiment 1, each stimulus was composed of a sequence of three two-note chords. Unlike Experiment 1, which included both consonant and dissonant chords, stimuli in Experiment 2 comprised only dissonant chords divided into two sets (A and B). Set A included the major seventh (C-B), minor ninth (C-C'#) and major second (C-D). Set B included the tritone (C-F#), minor second (C-C#) and minor seventh (C-B♭; see Table 2). Each set was implemented in three different octaves (C3, C4 and C5). For each octave there were 6 different orderings of the two-note chords in each set. For the generalization test, the same chords used during training were implemented but at different octaves (C6 and C7). The aim of the present experiment was to test whether rats could discriminate across chords using only their interval ratios, and not their absolute frequencies; by shifting octaves we kept the former constant while changing the latter. We thus created 8 new test sequences at these octaves. Four sequences were from set A, and 4 from set B. In each set, 2 sequences were in C6 and the other 2 in C7.

Table 2. Stimuli Used During Training and Test in Experiments 2 and 3.

| Phase | Set A interval | Ratio | Set B interval | Ratio |
|---|---|---|---|---|
| Training and Test | Major 7th (C-B) | 15:8 | Tritone (C-F#) | 45:32 |
| Training and Test | Minor 9th (C-C'#) | 32:15 | Minor 2nd (C-C#) | 16:15 |
| Training and Test | Major 2nd (C-D) | 9:8 | Minor 7th (C-B♭) | 16:9 |

Note. Chords, with notes in parentheses and frequency ratios, used in Experiments 2 and 3. Rats and human participants were trained to discriminate between two sets of dissonant chords. In the test phase, subjects were required to discriminate between the same two sets of dissonant chords but implemented at different octaves.

Apparatus and Procedure

The apparatus and procedure were the same as in Experiment 1.

Results and Discussion

As in Experiment 1, we calculated the mean DRs of lever-pressing responses between the reinforced (Set A) and non-reinforced (Set B) stimuli for each training session. Throughout the training phase, rats learned to discriminate between the two sets of different dissonant stimuli, progressively pressing the lever more often after target stimuli. A repeated measures ANOVA was run with Session (1-30) as a within-subject factor. The analysis yielded a significant difference between sessions (F (29, 493) = 6.93, p < 0.001; see Figure 1). A one-sample t test over the test data showed that the mean proportion of responses to reinforced stimuli was not significantly above chance (M = 0.53, SD = 0.16; t (17) = 0.81, p = 0.43, 95% CI [0.45, 0.60], d = 0.19), and was significantly different from the DR of the last training block (M = 0.67, SD = 0.14; t (17) = 4.37, p < 0.01, 95% CI [0.08, 0.22], d = 0.93). So, as observed in Experiment 1, rats learned to discriminate among chords contrasting in frequency ratios, but did not generalize this discrimination to novel stimuli.

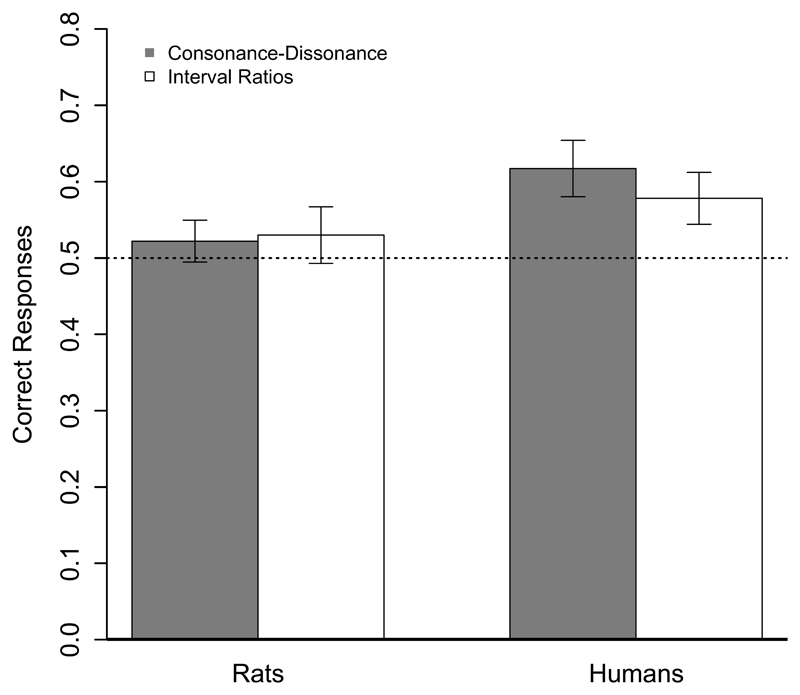

Experiments 1 and 2 yielded a very similar pattern of results. A comparison of the mean proportions of lever-pressing responses to reinforced stimuli (consonant in Exp. 1 and set A in Exp. 2) during the training phase, with Experiment (1 and 2) as between-subject factor and Session (1-30) as within-subject factor, yielded a significant difference between sessions (F (29, 1073) = 20.67, p < 0.001), no significant difference between experiments (F (1, 37) = 0.36, p = 0.55), and no interaction between the factors (F (29, 1073) = 0.98, p = 0.48), suggesting that rats learn to discriminate over both consonance category and interval ratios at the same rate. An independent-samples t test comparing the rats' performance during the tests in Experiments 1 and 2 showed no significant differences between them (t (37) = -0.03, p = 0.96, 95% CI [-0.09, 0.09], d = 0.07; see Figure 2). Therefore, similar to Experiment 1, results from the training phase in Experiment 2 show that rats can learn to discriminate among sets of chords that differ only in their interval ratios. Complementarily, results from the test suggest that rats are not able to generalize this discrimination to novel stimuli when absolute pitch levels are different. Thus, Experiments 1 and 2 show that rats could learn to discriminate between sets of chords, but failed to generalize their responses to novel stimuli. This suggests the animals might be memorizing the specific items used during discrimination training, and points towards a difficulty in establishing token-independent relations between interval ratios that could be distinguished when the absolute pitch of the tones changes, as is the case in the generalization test of both experiments. Humans, on the contrary, do not report problems performing whole-octave transpositions (e.g. Patel, 2008). Thus, in the following experiment, we tested whether human participants display good generalization performance when presented with the stimuli used in Experiments 1 and 2.
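The degrees of freedom reported for the Session effects can be checked against the sample sizes of the two rat experiments. The sketch below uses the textbook formulas for a balanced design with complete data and no sphericity correction; this is an assumption, since the analysis software and any corrections applied are not stated, and `session_effect_df` is a hypothetical helper name.

```python
def session_effect_df(n_subjects, n_sessions, n_groups=1):
    """Degrees of freedom for a within-subject Session effect.

    Assumes complete data and no sphericity correction (textbook formulas).
    Returns (df_effect, df_error).
    """
    df_effect = n_sessions - 1
    df_error = (n_subjects - n_groups) * (n_sessions - 1)
    return df_effect, df_error


def between_groups_df(n_subjects, n_groups):
    """Degrees of freedom for the between-subject Experiment effect."""
    return n_groups - 1, n_subjects - n_groups


print(session_effect_df(21, 30))              # Experiment 1: 21 rats
print(session_effect_df(18, 30))              # Experiment 2: 18 rats
print(session_effect_df(39, 30, n_groups=2))  # combined analysis: 39 rats
print(between_groups_df(39, 2))
```

Under these assumptions the results are (29, 580), (29, 493), (29, 1073) and (1, 37), matching the values reported in the text.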

Figure 2.

Mean proportion (and standard error bars) of responses to target stimuli during generalization tests using a Go/No-Go task from both rats (Experiments 1 and 2) and human participants (Experiment 3c). The mean proportion of correct responses was significantly above chance only for human participants.

Experiment 3. Discrimination over consonance categories and interval ratios by humans (Homo sapiens)

Experiment 3a

Experiment 3a explored the extent to which results from the two previous experiments can also be observed in humans. Results from the test in Experiments 1 and 2 showed that rats did not generalize to novel stimuli the discrimination patterns they learned during training. This could be due to the rats memorizing the specific items that were presented during training, while lacking the ability to extrapolate the cues used during discrimination to novel sequences implemented at different frequency octaves. Alternatively, it could be possible that stimuli used during the test, with whole octave transpositions, may make generalization impossible independently of the species tested. To test this possibility, the same stimuli were presented to humans.

Participants

Participants were 32 undergraduate students from the Universitat Pompeu Fabra. None of them had musical training or played a musical instrument. They received monetary compensation for their participation.

Stimuli

Stimuli for the training phase were exactly the same as in Experiments 1 (consonant and dissonant) and 2 (set A and set B). Stimuli for the test phase differed from those used in the previous experiments only in that chords were implemented in the C1 and C2 octaves (with fundamental frequencies of 32.703 Hz and 65.406 Hz respectively), instead of C6 and C7 (with fundamental frequencies of 1046.50 Hz and 2093.00 Hz respectively). This modification was necessary because, although humans' and rats' hearing ranges overlap for low frequencies, rats comfortably hear sounds at high frequencies (up to 80 kHz; Heffner et al., 1994) that may be more difficult to perceive for humans (whose hearing range goes from 20 Hz to 20 kHz; see Table 3 for fundamental frequencies of the notes composing the stimuli). In fact, studies have shown that higher frequencies are processed well by rats (e.g. Akiyama & Sutoo, 2011), while stimuli with lower frequencies might better fit the human auditory system (Fay, 1988). Importantly, while the fundamental frequency of the test items differed between humans and animals, the frequency ratios composing the chords remained the same.
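The point that octave transposition changes absolute frequency but not the frequency ratio can be made concrete with a small sketch. It assumes the standard A4 = 440 Hz tuning reference, which reproduces the C fundamentals quoted above, and it applies the nominal ratios from the tables to obtain the upper note; the actual GarageBand piano samples may deviate slightly from these nominal ratios. The function names are illustrative.

```python
A4_HZ = 440.0  # assumed tuning reference; not stated in the original text


def c_fundamental(octave):
    """Frequency in Hz of the note C in the given octave (C4 is middle C)."""
    c4 = A4_HZ * 2 ** (-9 / 12)  # C4 lies 9 semitones below A4
    return c4 * 2 ** (octave - 4)


def dyad(octave, ratio):
    """Lower and upper frequencies of a two-note chord built on C.

    ratio is the nominal upper-to-lower frequency ratio, e.g. 3 / 2 for a fifth.
    """
    low = c_fundamental(octave)
    return low, low * ratio


for octave in (1, 2, 6, 7):
    print(f"C{octave}: {c_fundamental(octave):.3f} Hz")

low_3, high_3 = dyad(3, 3 / 2)  # fifth in a training octave
low_6, high_6 = dyad(6, 3 / 2)  # same interval in a test octave
print(high_3 / low_3, high_6 / low_6)
```

The printed fundamentals agree with the values given for C1, C2, C6 and C7 to within rounding, and the fifth keeps its 3:2 ratio in both octaves even though its absolute frequencies differ by a factor of 8.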

Procedure

The experiment took place in a sound-attenuating room. Participants were tested using a Macintosh OS X-based laptop running Psyscope XB57 experimental software and Sennheiser HD 515 headphones. Half of the participants (n = 16) were presented with the stimuli from Experiment 1 (Consonant versus Dissonant), and half were presented with the stimuli from Experiment 2 (Set A versus Set B). The experiment consisted of a training phase followed by a test phase. During training, participants were presented with stimuli in a balanced manner so that no more than two stimuli of the same type followed each other. There was an inter-stimulus interval of 2000 ms. Participants were instructed to find out which stimuli were "correct" (Exp. 1: consonant; Exp. 2: Set A) and which stimuli were "incorrect" (Exp. 1: dissonant; Exp. 2: Set B). They had to press a button on the keyboard every time a "correct" stimulus was presented. Participants received feedback on their performance after each response. The entire stimulus list was played at least once, and participants had to reach a criterion of 3 consecutive correct responses. Once this criterion was reached, the test phase began. For the test phase, we used a two-alternative forced-choice task. It consisted of eight trials. In each trial, participants were presented with two stimuli and had to choose which one was more similar to the “correct” stimuli in the training phase. No feedback was provided during the test.

Results and Discussion

First, we analyzed the mean number of trials participants needed to reach the criterion of 3 consecutive correct responses. Participants presented with the consonant and dissonant stimuli needed fewer trials (M = 45.06, SD = 8.07) than participants presented with sets A and B (M = 56.06, SD = 20.34) to reach the learning criterion before beginning the test phase (t (30) = -2.01, p = 0.05, 95% CI [-22.17, 0.17], d = 0.77). This suggests it was easier for participants to learn the discrimination between consonant and dissonant stimuli than to learn the discrimination between different sets of interval ratios. Thus, the learning pattern observed for humans contrasts with that observed for rats, which displayed almost identical performance in the training phases of Experiments 1 and 2. However, although there were no large differences between the sets of dissonant interval ratios used in Experiment 2, humans, like rats, still learned to discriminate between them.
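For readers who wish to check the between-group comparison from the summary statistics alone, the following sketch computes a pooled-variance independent-samples t statistic and a 95% confidence interval for the mean difference. It assumes a standard Student's t test with n = 16 per group, which the reported degrees of freedom suggest but the text does not state explicitly; the function name is hypothetical, and the critical value is hard-coded rather than taken from a statistics library.

```python
import math


def independent_t(m1, sd1, n1, m2, sd2, n2):
    """Pooled-variance t statistic for two independent groups,
    computed from means, standard deviations and group sizes."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df, se


T_CRIT_DF30 = 2.0423  # two-tailed 95% critical value of t for df = 30

# Trials to criterion: consonant/dissonant group vs. set A/set B group.
t, df, se = independent_t(45.06, 8.07, 16, 56.06, 20.34, 16)
diff = 45.06 - 56.06
ci = (diff - T_CRIT_DF30 * se, diff + T_CRIT_DF30 * se)
print(f"t({df}) = {t:.2f}, 95% CI [{ci[0]:.2f}, {ci[1]:.2f}]")
```

Under these assumptions the sketch recovers the reported t value and confidence interval to two decimal places. The effect size is left out because the text does not say which formula for d was used.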

Next, we analyzed performance during the test. One-sample t tests showed that the percentage of correct responses was significantly above chance in both conditions. Participants generalized to novel stimuli both consonance category (M = 58.59, SD = 14.23; t (15) = 2.41, p < 0.05, 95% CI [51.01, 66.17], d = 0.60), and interval ratios across different octaves (M = 61.72, SD = 14.05; t (15) = 3.34, p < 0.05, 95% CI [54.23, 69.20], d = 0.83). An independent-samples t test comparing the percentage of correct responses across conditions revealed no significant difference between them (t (30) = -0.63, p = 0.53, 95% CI [-13.33, 7.08], d = 0.22). These results show that, unlike rats, humans perform whole octave transpositions without apparent difficulty. That is, human participants generalize to novel stimuli based on both consonance and interval ratios across octaves. Such generalization does not seem to be numerically much superior to the performance observed in rats, suggesting that differences across species in this task might not be all-or-none but might rather reflect different degrees of sensitivity. However, as is often the case in studies comparing human and non-human animals' performance over the same set of stimuli but with slightly different experimental procedures (e.g. Fitch & Hauser, 2004; Hoeschele, Cook, Guillette, Brooks, & Sturdy, 2012; Lipkind et al., 2013; Ohms, Escudero, Lammers, & ten Cate, 2012), we cannot directly compare test performance across the two species. The discrimination ratio of the rats is obtained by comparing the total lever-pressing responses after different stimuli, while the percentage of correct responses in humans is the result of a two-alternative forced-choice test. It is nevertheless the case that comparisons within each species show a different pattern of results for each.
Rats learned to discriminate stimuli differing in terms of consonance and dissonance (Experiment 1) and stimuli differing only in their interval ratios (Experiment 2) equally well. Nevertheless, we observed no indication that they can generalize such discrimination to new stimuli. In contrast, humans found it easier to learn to discriminate consonant from dissonant stimuli (as reflected by fewer training trials to reach the learning criterion). More importantly, human participants generalized their discrimination to novel stimuli implemented at different octaves.
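The comparisons of test performance against chance reported above can likewise be checked from the summary statistics. The sketch below assumes a chance level of 50% (as implied by the two-alternative forced choice test), n = 16 per condition, and an effect size computed as the mean difference from chance divided by the sample standard deviation; these choices and the function name are assumptions, and the critical value is hard-coded.

```python
import math


def one_sample_t(mean, sd, n, mu0=50.0):
    """One-sample t statistic against mu0, plus Cohen's d,
    computed from the sample mean, SD and size."""
    se = sd / math.sqrt(n)
    t_value = (mean - mu0) / se
    d = (mean - mu0) / sd
    return t_value, n - 1, se, d


T_CRIT_DF15 = 2.1314  # two-tailed 95% critical value of t for df = 15

# Consonance condition: percentage of correct test responses.
t, df, se, d = one_sample_t(58.59, 14.23, 16)
ci = (58.59 - T_CRIT_DF15 * se, 58.59 + T_CRIT_DF15 * se)
print(f"t({df}) = {t:.2f}, d = {d:.2f}, 95% CI [{ci[0]:.2f}, {ci[1]:.2f}]")
```

With eight test trials per participant, individual scores can only take values in steps of 12.5%, so group means in the range of 58% to 62% correspond to roughly five correct choices out of eight on average.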

Experiment 3b

Results from the previous experiment show that humans generalize the discrimination learned during training to novel stimuli. However, human participants received fairly short discrimination training in that experiment, and one possible explanation for the lack of generalization in rats is that they over-learned the training items owing to their greater exposure to the stimuli. In Experiment 3b we explored whether generalization performance in human participants would decrease with longer training. Thus, in the present experiment, we gave participants 80 training trials before the test.

Participants

Participants were 32 undergraduate students from the Universitat Pompeu Fabra. None of them had formal musical training. As in Experiment 3a, half of the participants (n=16) were presented with the stimuli from Experiment 1 (Consonant versus Dissonant), and half were presented with the stimuli from Experiment 2 (Set A versus Set B). They received monetary compensation for their participation in the experiment.

Stimuli

The stimuli were the same as in Experiment 3a.

Procedure

The procedure was similar to that of Experiment 3a. The only difference was that during the training phase all participants were presented with 80 trials. The test phase began after participants had been exposed to all trials, rather than after they reached a criterion of 3 consecutive correct responses.

Results and discussion

We analyzed performance during the test. One-sample t tests showed that the percentage of correct responses was significantly above chance in both conditions. Participants generalized to novel stimuli both consonance category (M = 57.81, SD = 14.34; t (15) = 2.17, p < 0.05, 95% CI [50.16, 65.45], d = 0.57), and interval ratios across different octaves (M = 59.37, SD = 16.77; t (15) = 2.23, p < 0.05, 95% CI [50.43, 68.31], d = 0.56). An independent-samples t test comparing the percentage of correct responses across conditions revealed no significant difference between them (t (30) = -0.28, p = 0.77, 95% CI [-12.82, 9.70], d = 0.10). We also compared the participants' performance at the end of training with their generalization performance during the test. We did not observe any differences between them (t (15) = 1.14, p = 0.27, 95% CI [-4.57, 15.20], d = 0.31, for the first condition; t (15) = 0.60, p = 0.55, 95% CI [-7.94, 14.19], d = 0.26 for the second condition). Independent-samples t tests comparing test performance in Experiments 3a and 3b for both consonance category (t (30) = 0.15, p = 0.87, 95% CI [-9.53, 11.09], d = 0.05) and interval ratios (t (30) = 0.43, p = 0.67, 95% CI [-8.82, 13.51], d = 0.15) revealed no differences between them. These results suggest that longer training does not necessarily lead to worse generalization performance in human participants.

Experiment 3c

In the previous experiments, human participants were tested in a two-alternative forced choice task while rats were tested with a go/no-go task. In the present experiment we tested human participants with a go/no-go task as well, to make the test phase more similar between the species.

Participants

Participants were 32 undergraduate students from the Universitat Pompeu Fabra. None of them had formal musical training. Half of the participants (n=16) were presented with the stimuli from Experiment 1 (Consonant versus Dissonant), and half were presented with the stimuli from Experiment 2 (Set A versus Set B). They received monetary compensation for their participation in the experiment.

Stimuli

The stimuli were the same as in Experiments 3a and 3b.

Procedure

Procedure was the same as in Experiment 3a. The only difference was in the test phase. We used a go/no-go task instead of a two-alternative forced choice test. Participants were asked to respond with the same criterion as during training. There were 8 test trials played on C6 and C7 octaves.

Results and discussion

First, we analyzed the mean number of training trials participants needed to reach the criterion of 3 consecutive correct responses. Participants presented with the consonant and dissonant stimuli needed fewer trials (M = 37.12, SD = 17.15) than participants presented with sets A and B (M = 48.75, SD = 16.19) to reach the learning criterion before beginning the test phase (t (30) = -1.96, p = 0.06, 95% CI [-23.70, 0.45], d = 0.7). As in Experiment 3a, there was a trend suggesting it was easier for participants to learn the discrimination between consonant and dissonant stimuli than to learn the discrimination between different sets of interval ratios.

Next, we analyzed performance during the test. One-sample t tests showed that the percentage of correct responses was significantly above chance in both conditions. Participants generalized to novel stimuli both consonance category (M = 61.72, SD = 14.76; t (15) = 3.17, p < 0.05, 95% CI [53.84, 69.58], d = 0.79), and interval ratios across different octaves (M = 57.81, SD = 13.59; t (15) = 2.29, p < 0.05, 95% CI [50.56, 65.05], d = 0.57; see Figure 2). An independent-samples t test comparing the percentage of correct responses across conditions revealed no significant difference between them (t (30) = 0.77, p = 0.44, 95% CI [-6.34, 14.16], d = 0.27). Independent-samples t tests comparing test data from Experiments 3a and 3c for both consonance category (t (30) = -0.61, p = 0.54, 95% CI [-13.59, 7.35], d = 0.21) and interval ratios (t (30) = 0.79, p = 0.43, 95% CI [-6.07, 13.88], d = 0.28) showed no differences between experiments. Thus, the present results suggest that human participants generalize to novel stimuli based on both consonance and interval ratios independently of the type of test used (2AFC or go/no-go task).

General Discussion

The present study aimed to test consonance perception in a nonhuman animal that has no extensive experience with harmonic stimuli and that mainly produces brief ultrasonic vocalizations. In Experiment 1, rats discriminated consonant from dissonant chords. In Experiment 2, we used two sets of dissonant stimuli. Rats could discriminate between these two sets, suggesting that the discrimination may be based on the differences in interval ratios among chords, as this was the only differentiating feature between the two sets. However, rats did not show generalization to novel stimuli for either the consonance – dissonance categories (Experiment 1) or the two sets of chords differing only in interval ratios (Experiment 2). In contrast, humans could discriminate and generalize among both consonance – dissonance categories, and among sets of interval ratios (Experiment 3). Human participants' generalization performance was far from perfect, but it was consistent across the three experiments. That is, even though their performance in the different tests did not reflect near-perfect generalization, it consistently provided evidence that humans effectively discriminated between novel stimuli they had not heard during training. This, together with previous reports of whole octave transpositions in humans (e.g. Patel, 2008), supports the idea that humans can correctly respond to novel chords based on both consonance and interval ratios. Such evidence was not observed in rats. Rats' discriminative capacity among musical chords observed here suggests that at least some components of complex auditory processing needed to tell apart chords based on their interval ratios are shared across species. Their failure to generalize across octaves points towards differences in the processing of chords between humans and other animals.

Previous studies tackling the extent to which consonance perception could be observed in other species have mainly tested avian and primate species. Here, we broadened the species spectrum by testing an animal that has scarcely been used in this field. Results from Experiment 1 show that rats can discriminate consonant from dissonant chords. However, the fact that rats were able to discriminate stimuli even when all chords fell within the dissonant category (as in Experiment 2) suggests that the rats may be focusing on the differences in interval ratios rather than on the perception of sensory consonance. Moreover, the failure to perform whole octave transpositions, such as those necessary to successfully display generalization behavior, suggests rats were focusing on the specific features (like pitch levels) defining the chords presented during training. So, it could be that rats were learning either specific intervals or specific pitch ranges that cannot be used to successfully discriminate among novel test items featuring different intervals implemented at different octaves. This opens the possibility that rats perceive the stimuli in terms of absolute pitch and do not generalize the frequency ratios that are common across training and test stimuli. However, this possibility should be considered in light of studies reporting a general difficulty in mammals, compared to avian species, in processing absolute pitch (e.g. Weisman, Williams, Cohen, Njegovan, & Sturdy, 2006), and studies on the perception of specific pitch ranges by starlings (Cynx, 1993; Hulse, Page, & Braaten, 1990). There are thus relevant differences across avian and mammal species in their abilities to process absolute pitch and pitch intervals defining musical chords. Together with the present work, such studies provide the experimental background necessary to understand how different species perceive sounds varying in frequency.

The present results show that rats did not generalize the learned behavior to novel chord stimuli. Previous studies testing songbirds and monkeys have reported successful generalization to novel stimuli (Hoeschele et al., 2012; Hulse et al., 1995; Izumi, 2000; Watanabe et al., 2005). However, no generalization was observed in a study testing pigeons (Brooks & Cook, 2009), a species that is also not an avid vocal learner. Interestingly, Wright, Rivera, Hulse, Shyan and Neiworth (2000) reported that rhesus monkeys performed successful octave generalization over tonal melodies, but not over individual notes, in a same-different judgment task. The authors suggest that the task is simplified when notes are configured together as gestalts (melodies) and that this might allow other species to perform such a task. Thus, two aspects of the present study could account for the lack of generalization we observe in rats. First, we used simple sequences of chords in which the absolute frequencies (octave scales; Exp. 1 and 2) and frequency ratios (Exp. 1) of the test stimuli differed from those of training. This could have led to a rather stringent generalization test (requiring in part whole octave transpositions) that could not be passed by non-human animals. This leaves open questions that could be tackled in further studies. For example, it would be interesting to explore whether rats would generalize across octaves if the stimuli were implemented over tonal melodies, as Wright et al. (2000) suggest. Further work could also systematically explore whether rats are able to readily process consonance in restricted pitch ranges, rather than across wide ranges such as those tested here, or whether rats are focusing on specific frequencies defining the stimuli and processing the chords presented in our study in terms of absolute frequencies.
Additionally, it could be tested whether rats find it easier to generalize across chords if they are transposed to different keys instead of different octaves, which would leave the test stimuli within the same range as the training stimuli. Note, however, that human participants displayed such generalization abilities, highlighting possible processing differences across species. Second, as we mentioned before, rats, like pigeons, are not avid vocal learners and do not have an extended vocal repertoire composed of the complex concatenation of elements that characterizes other species, such as starlings and humans (Doupe & Kuhl, 1999). It is thus possible that a lack of extensive experience in the production and perception of complex inter-specific vocalizations could lead to a greater difficulty in constructing categories around interval ratios, which would be reflected in poor generalization to novel stimuli.

Thus, two aspects of the present study provide insights regarding the role of experience during consonance processing. First, our results suggest that at least some perceptual aspects needed for the discrimination of consonant chords may not be modulated by experience. If the perception of musical universals emerged uniquely as a result of extensive exposure to harmonic stimuli, it would be difficult to explain the ability of rats and other animals to perceive and discriminate among consonant and dissonant chords. Second, the fact that rats did not generalize to novel stimuli suggests they were focusing on specific items presented during discrimination training, and failing to establish token-independent relations among musical notes. This points towards important differences from humans in terms of consonance processing. As mentioned above, the observed lack of generalization opens the door to the possibility that experience allowing the creation of memory templates for harmonic stimuli (e.g. McLachlan et al., 2013) might be important for the creation of categories around stimuli that vary in frequency ratios. Species lacking such experience could find it difficult to perform whole octave transpositions and to generalize to novel chords.

There is growing evidence that humans share with other animals processing abilities in the acoustic domain that may be fundamental for the emergence of complex systems such as harmony in music, or phonology in language. For example, recent studies demonstrate that the grouping principles described by the Iambic-Trochaic Law that are used by humans during the early stages of language processing may be built on general perceptual abilities that are already present in other species (De la Mora, Nespor, & Toro, 2013). Research showing the extent to which basic abilities tied to rhythm perception are already present in distant animals, such as rats, could lay the groundwork for establishing how they are related to the processing of another uniquely human complex system such as music. It has been argued that language and music are systems that present many parallels. For instance, both systems involve temporally unfolding sequences of sounds with a salient rhythmic and melodic structure (Handel, 1989; Patel, 2008). Comparative research could help to establish both the extent to which the two systems share a common set of underlying general principles, and which aspects are not shared across species and modalities.

Acknowledgements

This research was supported by grants PSI2010-20029 from the Spanish Ministerio de Ciencia, and ERC Starting Grant agreement n.312519.

Footnotes

Conflict of interest.

We declare that we have no conflict of interest.

Ethical standards.

All experiments were conducted following the current laws of the Spanish and Catalan governments regarding animal care and welfare, and in accordance with guidelines from FELASA.

References

- Akiyama K, Sutoo D. Effects of different frequencies of music on blood pressure regulation in spontaneously hypertensive rats. Neuroscience Letters. 2011;487:58–60. doi: 10.1016/j.neulet.2010.09.073.

- Bolhuis JJ, Okanoya K, Scharff C. Twitter evolution: converging mechanisms in birdsong and human speech. Nature Reviews Neuroscience. 2010;11:747–759. doi: 10.1038/nrn2931.

- Brooks DI, Cook RG. Chord discrimination by pigeons. Music Perception. 2009;27:183–196.

- Chiandetti C, Vallortigara G. Chicks like consonant music. Psychological Science. 2011;22:1270–1273. doi: 10.1177/0956797611418244.

- Cousineau M, McDermott J, Peretz I. The basis of musical consonance as revealed by congenital amusia. Proceedings of the National Academy of Sciences of the United States of America. 2012;109:19858–19863. doi: 10.1073/pnas.1207989109.

- Cynx J. Auditory frequency generalization and a failure to find octave generalization in a songbird, the European starling (Sturnus vulgaris). Journal of Comparative Psychology. 1993;107:140–146. doi: 10.1037/0735-7036.107.2.140.

- De la Mora D, Toro JM. Rule learning over consonants and vowels in a non-human animal. Cognition. 2013;126:307–312. doi: 10.1016/j.cognition.2012.09.015.

- De la Mora D, Nespor M, Toro JM. Do humans and nonhuman animals share the grouping principles of the iambic-trochaic law? Attention, Perception, & Psychophysics. 2013;75:92–100. doi: 10.3758/s13414-012-0371-3.

- Doupe AJ, Kuhl PK. Birdsong and human speech: common themes and mechanisms. Annual Review of Neuroscience. 1999;22:567–631. doi: 10.1146/annurev.neuro.22.1.567.

- Fannin HA, Braud WG. Preference for consonant over dissonant tones in the albino rat. Perceptual and Motor Skills. 1971;32:191–193. doi: 10.2466/pms.1971.32.1.191.

- Fay RR. Hearing in vertebrates: a psychophysics databook. Hill-Fay Associates; Winnetka IL: 1988.

- Fishman YI, Volkov IO, Noh MD, Garell PC, Bakken H, Arezzo JC, Howard MA, Steinschneider M. Consonance and dissonance of musical chords: neural correlates in auditory cortex of monkeys and humans. Journal of Neurophysiology. 2001;86:2761–2788. doi: 10.1152/jn.2001.86.6.2761.

- Fitch T, Hauser M. Computational constraints on syntactic processing in a nonhuman primate. Science. 2004;303:377–380. doi: 10.1126/science.1089401.

- Gordon C, Webb D, Wolpert S. One cannot hear the shape of a drum. Bulletin of the American Mathematical Society. 1992;27:134–138.

- Handel S. Listening: An introduction to the perception of auditory events. Cambridge, MA: MIT Press; 1989.

- Harwood D. Universals in music: a perspective from cognitive psychology. Ethnomusicology. 1976;20:521–533.

- Heffner H, Heffner R, Contos C, Ott T. Audiogram of the hooded Norway rat. Hearing Research. 1994;73:244–247. doi: 10.1016/0378-5955(94)90240-2.

- Helmholtz HLF. In: On the sensations of tone as a physiological basis for the theory of music. Ellis A, translator. Dover Publications; New York: 1877/1954.

- Hoeschele M, Cook R, Guillette L, Brooks D, Sturdy C. Black-capped chickadee (Poecile atricapillus) and human (Homo sapiens) chord discrimination. Journal of Comparative Psychology. 2012;126:57–67. doi: 10.1037/a0024627.

- Hogden J, Loqvist A, Gracco V, Zlokarnik I, Rubin P, Saltzman E. Accurate recovery of articulator positions from acoustics: new conclusions based upon human data. Journal of the Acoustical Society of America. 1996;100:1819–1834. doi: 10.1121/1.416001.

- Hulse SH, Bernard DJ, Braaten RF. Auditory discrimination of chord-based spectral structures by European starlings. Journal of Experimental Psychology: General. 1995;124:409–423.

- Hulse SH, Page SC, Braaten RF. Frequency range size and the frequency range constraint in auditory perception by European starlings (Sturnus vulgaris). Animal Learning & Behavior. 1990;18:238–245.

- Izumi A. Japanese monkeys perceive sensory consonance of chords. Journal of the Acoustical Society of America. 2000;108:3073–3078. doi: 10.1121/1.1323461.

- Knutson B, Burgdorf J, Panksepp J. Ultrasonic vocalizations as indices of affective states in rats. Psychological Bulletin. 2002;128:961–977. doi: 10.1037/0033-2909.128.6.961.

- Koda H, Basile M, Oliver M, Remeuf K, Nagumo S, Bolis-Heulin C, Lemasson A. Validation of an auditory sensory reinforcement paradigm: Campbell's monkeys (Cercopithecus campbelli) do not prefer consonant over dissonant sounds. Journal of Comparative Psychology. 2013;127:265–271. doi: 10.1037/a0031237.

- Krumhansl C. Cognitive foundations of musical pitch. New York: Oxford UP; 1990.

- Lipkind D, Marcus G, Bemis D, Sasahara K, Jacoby N, Takahasi M, Susuki K, Feher O, Ravbar P, Okanoya K, Tchernichovski O. Stepwise acquisition of vocal combinatorial capacity in songbirds and human infants. Nature. 2013;498:104–108. doi: 10.1038/nature12173.

- Malmberg C. The perception of consonance and dissonance. Psychological Monographs. 1918;25:93–133.

- McDermott J, Hauser MD. Are consonant intervals music to their ears? Spontaneous acoustic preferences in a nonhuman primate. Cognition. 2004;94:B1–B21. doi: 10.1016/j.cognition.2004.04.004.

- McDermott J, Lehr A, Oxenham A. Individual differences reveal the basis of consonance. Current Biology. 2010;20:1035–1041. doi: 10.1016/j.cub.2010.04.019.

- McLachlan N, Marco D, Light M, Wilson S. Consonance and Pitch. Journal of Experimental Psychology: General. 2013;142:1142–1158. doi: 10.1037/a0030830.

- Ohms V, Escudero P, Lammers K, ten Cate C. Zebra finches and Dutch adults exhibit the same cue weighting bias in vowel perception. Animal Cognition. 2012;15:155–161. doi: 10.1007/s10071-011-0441-2.

- Patel AD. Music, language and the brain. Oxford, England: Oxford University Press; 2008.

- Roberts L. Consonance judgments of musical chords by musicians and untrained listeners. Acta Acustica United with Acustica. 1986;62:163–171.

- Schwartz DA, Howe CQ, Purves D. The statistical structure of human speech sounds predicts musical universals. The Journal of Neuroscience. 2003;23:7160–7168. doi: 10.1523/JNEUROSCI.23-18-07160.2003.

- Sugimoto T, Kobayashi H, Nobuyoshi N, Kiriyama Y, Takeshita H, Nakamura T, Hashiya K. Preference for consonant music over dissonant music by an infant chimpanzee. Primates. 2010;51:7–12. doi: 10.1007/s10329-009-0160-3.

- Trainor LJ, Heinmiller BM. The development of evaluative responses to music: Infants prefer to listen to consonance over dissonance. Infant Behavior and Development. 1998;21:77–88.

- Trainor LJ, Tsang CD, Cheung VHW. Preference for sensory consonance in 2- and 4-month-old infants. Music Perception. 2002;20:187–194.

- Watanabe S, Uozumi M, Tanaka N. Discrimination of consonance and dissonance in Java sparrows. Behavioral Processes. 2005;70:203–208. doi: 10.1016/j.beproc.2005.06.001.

- Weisman R, Williams M, Cohen J, Njegovan M, Sturdy C. The Comparative Psychology of absolute pitch. In: Wasserman E, Zentall T, editors. Comparative cognition: Experimental explorations of animal intelligence. New York: Oxford University Press; 2006. pp. 71–86.

- Wright AA, Rivera JJ, Hulse SH, Shyan M, Neiworth JJ. Music perception and octave generalization in rhesus monkeys. Journal of Experimental Psychology: General. 2000;129:291–307. doi: 10.1037//0096-3445.129.3.291.

- Zentner M, Kagan J. Infants' perception of consonance and dissonance in music. Infant Behavior and Development. 1998;21:483–492.

- Zentner M, Kagan J. Perception of music by infants. Nature. 1996;383:29. doi: 10.1038/383029a0.