Abstract

Many techniques have been developed to visualize how an image would appear to an individual with a different visual sensitivity: e.g., because of optical or age differences, or a color deficiency or disease. This protocol describes a technique for incorporating sensory adaptation into the simulations. The protocol is illustrated with the example of color vision, but is generally applicable to any form of visual adaptation. The protocol uses a simple model of human color vision based on standard and plausible assumptions about the retinal and cortical mechanisms encoding color and how these adjust their sensitivity to both the average color and range of color in the prevailing stimulus. The gains of the mechanisms are adapted so that their mean response under one context is equated for a different context. The simulations help reveal the theoretical limits of adaptation and generate "adapted images" that are optimally matched to a specific environment or observer. They also provide a common metric for exploring the effects of adaptation within different observers or different environments. Characterizing visual perception and performance with these images provides a novel tool for studying the functions and consequences of long-term adaptation in vision or other sensory systems.

Keywords: Behavior, Issue 122, Neuroscience, Vision, Perception, Adaptation, Color, Image processing, Psychophysics, Modeling, Simulations

Introduction

What might the world look like to others, or to ourselves as we change? Answers to these questions are fundamentally important for understanding the nature and mechanisms of perception and the consequences of both normal and clinical variations in sensory coding. A wide variety of techniques and approaches have been developed to simulate how images might appear to individuals with different visual sensitivities. For example, these include simulations of the colors that can be discriminated by different types of color deficiencies1,2,3,4, the spatial and chromatic differences that can be resolved by infants or older observers5,6,7,8,9, how images appear in peripheral vision10, and the consequences of optical errors or disease11,12,13,14. They have also been applied to visualize the discriminations that are possible for other species15,16,17. Typically, such simulations use measurements of the sensitivity losses in different populations to filter an image and thus reduce or remove the structure they have difficulty seeing. For instance, common forms of color blindness reflect a loss of one of the two photoreceptors sensitive to medium or long wavelengths, and images filtered to remove their signals typically appear devoid of "reddish-greenish" hues1. Similarly, infants have poorer acuity, and thus the images processed for their reduced spatial sensitivity appear blurry5. These techniques provide invaluable illustrations of what one person can see that another may not. However, they do not — and often are not intended to — portray the actual perceptual experience of the observer, and in some cases may misrepresent the amount and types of information available to the observer.

This article describes a novel technique developed to simulate differences in visual experience which incorporates a fundamental characteristic of visual coding — adaptation18,19. All sensory and motor systems continuously adjust to the context they are exposed to. A pungent odor in a room quickly fades, while vision accommodates to how bright or dim the room is. Importantly, these adjustments occur for almost any stimulus attribute, including "high-level" perceptions such as the characteristics of someone's face20,21 or their voice22,23, as well as calibrating the motor commands made when moving the eyes or reaching for an object24,25. In fact, adaptation is likely an essential property of almost all neural processing. This paper illustrates how to incorporate these adaptation effects into simulations of the appearance of images, by basically "adapting the image" to predict how it would appear to a specific observer under a specific state of adaptation26,27,28,29. Many factors can alter the sensitivity of an observer, but adaptation can often compensate for important aspects of these changes, so that the sensitivity losses are less conspicuous than would be predicted without assuming that the system adapts. Conversely, because adaptation adjusts sensitivity according to the current stimulus context, these adjustments are also important to incorporate for predicting how much perception might vary when the environment varies.

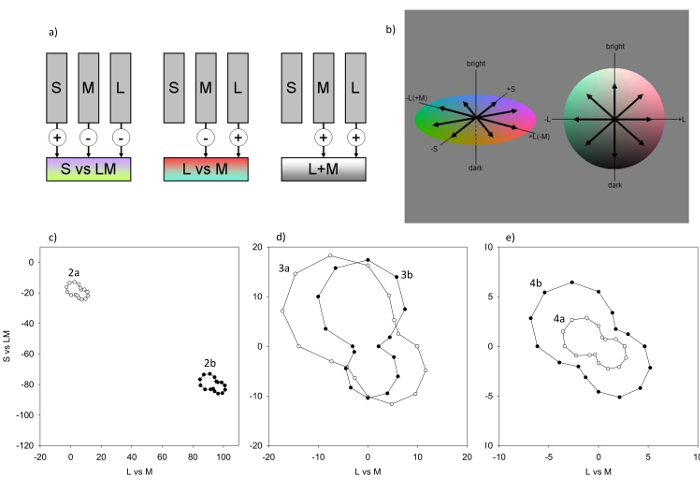

The following protocol illustrates the technique by adapting the color content of images. Color vision has the advantage that the initial neural stages of color coding are relatively well understood, as are the patterns of adaptation30. The actual mechanisms and adjustments are complex and varied, but the main consequences of adaptation can be captured using a simple and conventional two-stage model (Figure 1a). In the first stage, color signals are initially encoded by three types of cone photoreceptors that are maximally sensitive to short, medium or long wavelengths (S, M, and L cones). In the second stage, the signals from different cones are combined within post-receptoral cells to form "color-opponent" channels that receive antagonistic inputs from the different cones (and thus convey "color" information), and "non-opponent" channels that sum together the cone inputs (thus coding "brightness" information). Adaptation occurs at both stages, and adjusts to two different aspects of the color — the mean (in the cones) and the variance (in post-receptoral channels)30,31. The goal of the simulations is to apply these adjustments to the model mechanisms and then render the image from their adapted outputs.

The process of adapting images involves six primary components. These are 1) choosing the images; 2) choosing the format for the image spectra; 3) defining the change in color of the environment; 4) defining the change in the sensitivity of the observer; 5) using the program to create the adapted images; and 6) using the images to evaluate the consequences of the adaptation. The following considers each of these steps in detail. The basic model and mechanism responses are illustrated in Figure 1, while Figures 2 - 5 show examples of images rendered with the model.

Protocol

NOTE: The protocol illustrated uses a program that allows one to select images and then adapt them using options selected by different drop-down menus.

1. Select the Image to Adapt

Click on the image and browse for the filename of the image to work with. Observe the original image in the upper left pane.

2. Specify the Stimulus and the Observer

Click the "format" menu to choose how to represent the image and the observer.

Click on the "standard observer" option to model a standard or average observer adapting to a specific color distribution. In this case, use standard equations to convert the RGB values of the image to cone responses32.

Click on the "individual observer" option to model the spectral sensitivities of a specific observer. Because these sensitivities are wavelength-dependent, the program converts the RGB values of the image into gun spectra by using the standard or measured emission spectra for the display.

Click on the "natural spectra" option to approximate actual spectra in the world. This option converts the RGB values to spectra, for example by using standard basis functions33 or Gaussian spectra34 to approximate the corresponding spectrum for the image color.
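As a rough illustration of the "standard observer" conversion in step 2, the sketch below maps an 8-bit RGB pixel to approximate L, M, and S cone signals. It is not the program's own code. It assumes an sRGB-encoded image, the sRGB-to-XYZ matrix for a D65 white point, and the Hunt-Pointer-Estevez XYZ-to-cone matrix; the equations cited in the protocol may differ, and the function names are hypothetical.

```python
# Sketch: 8-bit RGB pixel -> approximate (L, M, S) cone signals.
# Assumptions (not taken from the protocol): sRGB encoding, D65 white point,
# and the Hunt-Pointer-Estevez cone matrix.

SRGB_TO_XYZ = [
    [0.4124564, 0.3575761, 0.1804375],
    [0.2126729, 0.7151522, 0.0721750],
    [0.0193339, 0.1191920, 0.9503041],
]

XYZ_TO_LMS = [
    [0.4002, 0.7076, -0.0808],
    [-0.2263, 1.1653, 0.0457],
    [0.0, 0.0, 0.9182],
]


def srgb_to_linear(value):
    """Undo the sRGB transfer curve for one channel value in [0, 1]."""
    if value <= 0.04045:
        return value / 12.92
    return ((value + 0.055) / 1.055) ** 2.4


def mat_vec(matrix, vector):
    """Multiply a 3x3 matrix by a length-3 vector."""
    return [sum(matrix[i][j] * vector[j] for j in range(3)) for i in range(3)]


def rgb_to_lms(rgb8):
    """Convert an 8-bit (R, G, B) triple to approximate (L, M, S)."""
    linear = [srgb_to_linear(channel / 255.0) for channel in rgb8]
    xyz = mat_vec(SRGB_TO_XYZ, linear)
    return mat_vec(XYZ_TO_LMS, xyz)


white_lms = rgb_to_lms((255, 255, 255))
red_lms = rgb_to_lms((255, 0, 0))
```

With these matrices the display white gives roughly equal L, M, and S signals, and a pure red pixel gives a larger L than M signal. The "individual observer" and "natural spectra" options would instead require wavelength-by-wavelength spectra, which this sketch does not attempt.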

3. Select the Adaptation Condition

- Adapt either the same observer to different environments (e.g., to the colors of a forest vs. urban landscape), or different observers to the same environment (e.g., a normal vs. color deficient observer).

- In the former case, use the menus to select the environments. In the latter, use the menus to define the sensitivity of the observer.

- To set the environments, select the "reference" and "test" environments from the dropdown menus. These control the two different states of adaptation by loading the mechanism responses for different environments.

- Choose the "reference" menu to control the starting environment. This is the environment the subject is adapted to while viewing the original image. NOTE: The choices shown have been precalculated for different environments. These were derived from measurements of the color gamuts for different collections of images. For example, one application examined how color perception might vary with changes in the seasons, by using calibrated images taken from the same location at different times27. Another study, exploring how adaptation might affect color percepts across different locations, represented the locations by sampling images of different scene categories29.

- Select the "user defined" environment to load the values for a custom environment. Observe a window to browse and select a particular file. To create these files for independent images, display each image to be included (as in step 1) and then click the "save image responses" button. NOTE: This will display a window where one can create or append to an Excel file storing the responses to each image. To create a new file, enter the filename, or browse for an existing file. For existing files, the responses to the current image are added and the responses to all images are automatically averaged. These averages are input for the reference environment when the file with the "user defined" option is selected.

- Select the "test" menu to access a list of environments for the image to be adjusted for. Select the "current image" option to use the mechanism responses for the displayed image. NOTE: This option assumes the subjects are adapting to the colors in the image that is currently being viewed. Otherwise select one of the precalculated environments or the "user defined" option to load the test environment.
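The averaging behind a "user defined" environment can be pictured with the short sketch below. It assumes that each saved image contributes one vector of mean mechanism responses and that all images are weighted equally; the function name and the numbers are hypothetical, and the program's spreadsheet format is not reproduced.

```python
# Sketch: combine per-image mechanism responses into one environment average.
# Assumption: every image contributes one response vector with equal weight.

def environment_average(image_responses):
    """Average a list of per-image response vectors, element by element."""
    count = len(image_responses)
    width = len(image_responses[0])
    return [sum(r[i] for r in image_responses) / count for i in range(width)]


# Hypothetical mean responses of three mechanisms to two saved images.
saved_responses = [[1.0, 2.0, 3.0], [3.0, 4.0, 5.0]]
environment = environment_average(saved_responses)
```

In this toy case the environment average is the element-wise mean of the two rows, which is the kind of value that would be loaded as the reference or test state of adaptation.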

4. Select the Spectral Sensitivity of the Observer

NOTE: For the adaptation effects of different environments, the observer will usually remain constant, and is set to the default "standard observer" with average spectral sensitivity. There are 3 menus for setting an individual spectral sensitivity, which control the amount of screening pigment or the spectral sensitivities of the observer.

Click on the "lens" menu to select the density of the lens pigment. The different options allow one to choose the density characteristic of different ages.

Click on the "macular" menu to similarly select the density of the macular pigment. Observe these options in terms of the peak density of the pigment.

Click on the "cones" menu to choose between observers with normal trichromacy or different types of anomalous trichromacy. NOTE: Based on the choices the program defines the cone spectral sensitivities of the observer and a set of 26 postreceptoral channels that linearly combine the cone signals to roughly uniformly sample different color and luminance combinations.
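One way to picture a set of 26 channels that sample color and luminance combinations at 45° intervals is as unit vectors on a sphere: eight azimuths at each of three elevations, plus the two poles. The sketch below builds such a set. The axis order (L vs. M, S vs. LM, luminance), the use of unit vectors, and the function name are assumptions for illustration, and the program's own channel definitions may be scaled differently.

```python
import math

# Sketch: 26 directions sampling a 3D color-luminance space in 45-degree steps.
# Assumed axes: x = L vs. M, y = S vs. LM, z = luminance.

def channel_directions(step_deg=45):
    """Return unit vectors at fixed azimuth and elevation steps, plus both poles."""
    directions = []
    for elevation in range(-90 + step_deg, 90, step_deg):
        for azimuth in range(0, 360, step_deg):
            e = math.radians(elevation)
            a = math.radians(azimuth)
            directions.append(
                (math.cos(e) * math.cos(a), math.cos(e) * math.sin(a), math.sin(e))
            )
    # The two poles correspond to pure luminance increments and decrements.
    directions.append((0.0, 0.0, 1.0))
    directions.append((0.0, 0.0, -1.0))
    return directions


channels = channel_directions()
```

With the default step this gives 3 elevations × 8 azimuths + 2 poles = 26 directions. A channel's linear response to a contrast vector could then be taken as the dot product of the two, although that choice is again an assumption here.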

5. Adapt the Image

Click the "adapt" button. NOTE: This executes the code for calculating the responses of the cones and post-receptoral mechanisms to each pixel in the image. The response is scaled so that the mean response to the adapting color distribution equals the mean response to the reference distribution, or so that the average response is the same for an individual or reference observer. The scaling is multiplicative to simulate von Kries adaptation35. The new image is then rendered by summing the mechanism responses and converting back to RGB values for display. Details of the algorithm are given elsewhere26,27,28,29.

Observe three new images on the screen. These are labeled as 1) "unadapted": how the test image should appear to someone fully adapted to the reference environment; 2) "cone adaptation": the image adjusted only for adaptation in the receptors; and 3) "full adaptation": the image predicted by complete adaptation to the change in the environment or the observer.

Click the "save images" button to save the three calculated images. Observe a new window on the screen to browse for the folder and select the filename.
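The multiplicative scaling applied in step 5 can be sketched as follows: each mechanism receives a single gain, chosen so that its mean response to the test distribution equals its mean response to the reference distribution. This is a minimal illustration with made-up numbers and hypothetical function names, not the published algorithm. It shows the gain rule for three cone signals; the same rule could in principle be applied to post-receptoral channel responses.

```python
# Sketch of multiplicative (von Kries-style) gain control.
# All response values below are invented for illustration.

def mean_response(responses):
    """Average each mechanism's response over all pixels."""
    count = len(responses)
    width = len(responses[0])
    return [sum(r[i] for r in responses) / count for i in range(width)]


def adaptation_gains(test_mean, reference_mean):
    """Gain per mechanism that maps the test mean onto the reference mean.

    Assumes every test mean is non-zero.
    """
    return [ref / test for ref, test in zip(reference_mean, test_mean)]


def apply_gains(responses, gains):
    """Scale every pixel's responses by the per-mechanism gains."""
    return [[g * v for g, v in zip(gains, r)] for r in responses]


# Hypothetical (L, M, S) responses to three pixels of a test image.
test_pixels = [[2.0, 1.0, 1.0], [4.0, 3.0, 1.0], [3.0, 2.0, 1.0]]
# Hypothetical mean responses stored for the reference environment.
reference_mean = [1.5, 2.0, 2.0]

gains = adaptation_gains(mean_response(test_pixels), reference_mean)
adapted_pixels = apply_gains(test_pixels, gains)
```

After scaling, the mean of `adapted_pixels` matches `reference_mean` for every mechanism, which is the equality described in the NOTE for the "adapt" button. Rendering the result back to RGB is left out of this sketch.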

6. Evaluate the Consequences of the Adaptation

NOTE: The original reference and adapted images simulate how the same image should appear under the two states of modeled adaptation, and importantly, differ only because of the adaptation state. The differences in the images thus provide insight into consequences of the adaptation.

Visually inspect the differences between the images. NOTE: Simple inspection of the images can help show how much color vision might vary when living in different color environments, or how much adaptation might compensate for a sensitivity change in the observer.

- Quantify these adaptation effects by using analyses or behavioral measurements with the images to empirically evaluate the consequences of the adaptation29.

- Measure how color appearance changes. For example, compare the colors in the two images to measure how color categories or perceptual salience shift across different environments or observers. Analyses of the changes in color with adaptation can also be used to calculate how much the unique hues (e.g., pure yellow or blue) could theoretically vary because of variations in the observer's color environment29.

- Ask how the adaptation affects visual sensitivity or performance. For example, use the adapted images to compare whether visual search for a novel color is faster when observers are first adapted to the colors of the background. Conduct the experiment by superimposing on the images an array of targets and differently-colored distractors that were adapted along with the images, with the reaction times measured for locating the odd target29.

Representative Results

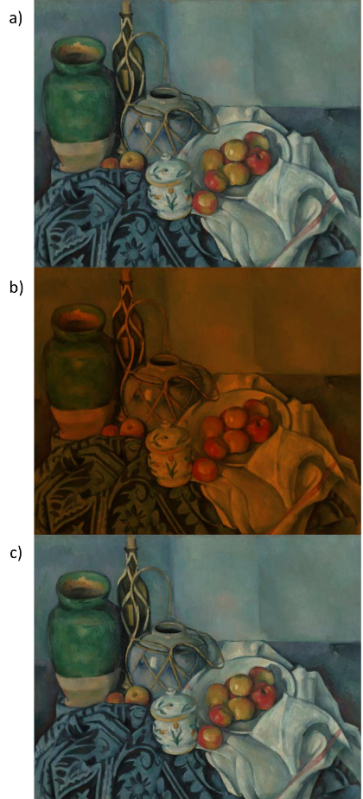

Figures 2 - 4 illustrate the adaptation simulations for changes in the observer or the environment. Figure 2 compares the predicted appearance of Cezanne's Still Life with Apples for a younger and older observer who differ only in the density of the lens pigment28. The original image as seen through the younger eye (Figure 2a) appears much yellower and dimmer through the more densely pigmented lens (Figure 2b). (The corresponding shifts in the mean color and chromatic responses are illustrated in Figure 1c.) However, adaptation to the average spectral change discounts almost all of the color appearance change (Figure 2c). The original color response is almost completely recovered by the adaptation in the cones, so that subsequent contrast changes have negligible effect.

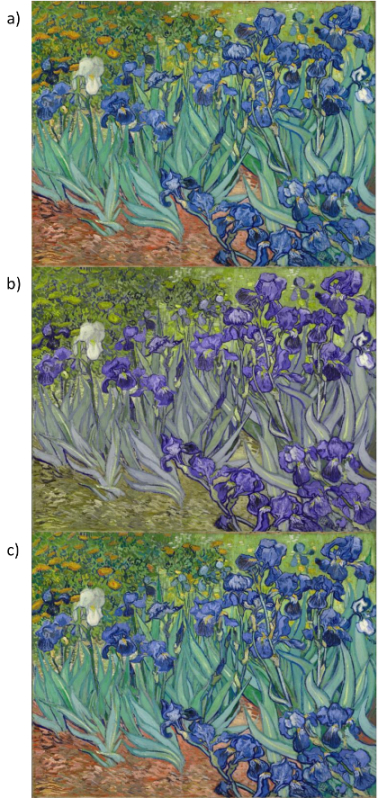

Figure 3 shows van Gogh's Irises filtered to simulate color appearance in a deuteranomalous observer, whose normal M photopigment is shifted in peak sensitivity to within 6 nm of the L photopigment28. Adaptation in the cones again adjusts for the mean stimulus chromaticity, but the L vs. M contrasts from the anomalous pigments are weak (Figure 3b), compressing the mechanism responses along this axis (Figure 1d). It has been suggested that van Gogh might have exaggerated the use of color to compensate for a color deficiency, since the colors he portrayed may appear more natural when filtered for a deficiency. However, contrast adaptation to the reduced contrasts predicts that the image should again "appear" very similar to the normal and anomalous trichromat (Figure 3c), even if the latter has much weaker intrinsic sensitivity to the L vs. M dimension. Many anomalous trichromats in fact report reddish-greenish contrasts as more conspicuous than would be predicted by their photopigment sensitivities36,37.

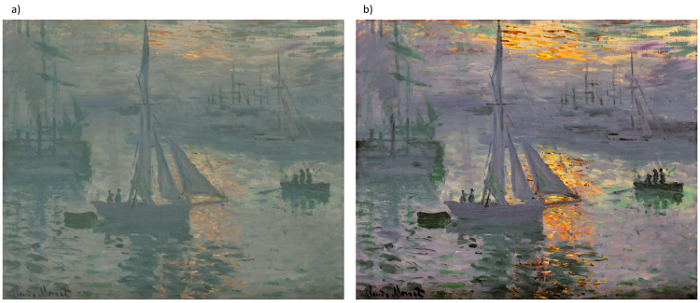

Figure 4 shows the simulations for an environmental change, by simulating how the hazy image portrayed by Monet's Sunrise (Marine) might appear to an observer fully adapted to the haze (or to an artist fully adapted to his painting). Before adaptation the image appears murky and largely monochrome (Figure 4a), and correspondingly the mechanism responses to the image contrast are weak (Figure 1e). However, adaptation to both the mean chromatic bias and the reduced chromatic contrast (in this case to match the mechanism responses for typical outdoor scenes) normalizes and expands the perceived color gamut so that it is comparable to the range of color percepts experienced for well-lit outdoor scenes (Figure 4b).

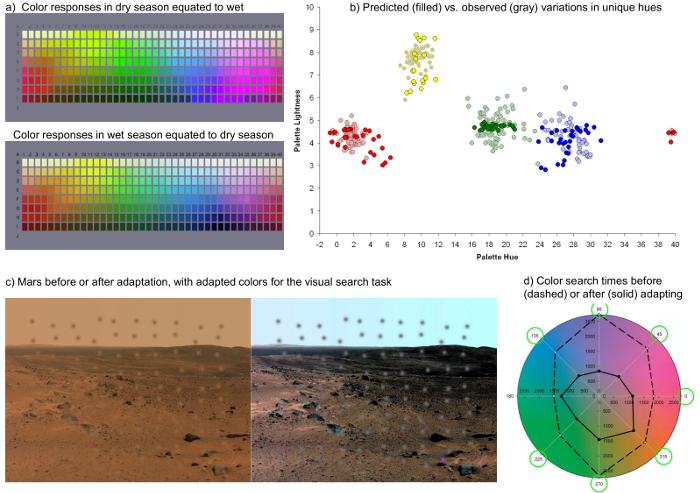

Finally, Figure 5 illustrates the two examples noted in section 6.2 of the protocol for using the model to study color vision. Figure 5a shows the Munsell Palette under adaptation to a lush or arid environment, while Figure 5b plots the shifts in the palette stimuli required to appear pure red, green, blue, or yellow, when the same observer is adapted to a range of different simulated environments. This range is comparable to measurements of the actual stimulus range of these focal colors as measured empirically in the World Color Survey29. Figure 5c instead shows how a set of embedded colors appear before or after adaptation to a Martian landscape. Adapting the set for the image led to significantly shorter reaction times for finding the unique colors in a visual search task29.

Figure 1: The Model. a) Responses are modeled for mechanisms with the sensitivities of the cones (which adapt to the stimulus mean) or postreceptoral combinations of the cones (which adapt to the stimulus variance). b) Each postreceptoral mechanism is tuned to a different direction in the color-luminance space, as indicated by the vectors. For the simulations 26 mechanisms are computed, which sample the space in 45° intervals (shown for the L vs. M and S vs. LM plane, and the L vs. M and luminance plane). c) Responses of the mechanisms in the equiluminant (L vs. M and S vs. LM) plane to the images in the top and middle panel of Figure 2. Mean contrast responses are shown at 22.5° intervals to more fully portray the response distribution, though the model is based on channels at 45° intervals. In the original image (Figure 2a) the mean chromaticity is close to gray (0,0) and colors are biased along a bluish-yellowish axis. Increasing the lens density of the observer produces a large shift in the mean toward yellow (Figure 2b). d) Contrast responses for the images shown in Figure 3a & 3b. The cone contrasts in the original (Figure 3a) are compressed along the L vs. M axis for the color deficient observer (Figure 3b). e) Contrast responses for the images shown in Figure 4a & 4b. The low contrast responses for the original image (Figure 4a) are expanded following adaptation, which matches the mean responses to the painting to the responses for a color distribution typical of outdoor natural scenes (Figure 4b).

Figure 2: Simulating the Consequences of Lens Aging. Cezanne's Still Life with Apples (a) processed to simulate an aging lens (b) and adaptation to the lens (c). Digital image courtesy of the Getty's Open Content Program.

Figure 3: Simulating Anomalous Trichromacy. van Gogh's Irises (a) simulating the reduced color contrasts in a color-deficient observer (b), and the predicted appearance in observers fully adapted to the reduced contrast (c). Digital image courtesy of the Getty's Open Content Program.

Figure 4: Simulating Adaptation to a Low Contrast Environment. Monet's Sunrise (Marine). The original image (a) is processed to simulate the color appearance for an observer adapted to the low contrasts in the scene (b). This was done by adjusting each mechanism's sensitivity so that the average response to the colors in the painting is equal to the average response to colors measured for a collection of natural outdoor scenes. Digital image courtesy of the Getty's Open Content Program.

Figure 5: Using the Model to Examine Visual Performance. a) The Munsell palette rendered under adaptation to the colors of a lush or arid environment. b) Chips in the palette that should appear pure red, green, blue, or yellow after adaptation to a range of different color environments. Light-shaded symbols plot the range of average chip selections from the languages of the World Color Survey. c) Images of the surface of Mars as they might appear to an observer adapted to Earth or to Mars. Superimposed patches show examples of the stimuli added for the visual search task, and include a set of uniformly colored distractors and one differently-colored target. d) In the experiment, search times were measured for locating the odd target, and were substantially shorter within the Mars-adapted images.

Discussion

The illustrated protocol demonstrates how the effects of adaptation to a change in the environment or the observer can be portrayed in images. The form this portrayal takes will depend on the assumptions made for the model — for example, how color is encoded, and how the encoding mechanisms respond and adapt. Thus the most important step is deciding on the model for color vision — for example what the properties of the hypothesized channels are, and how they are assumed to adapt. The other important steps are to set appropriate parameters for the properties of the two environments, or two observer sensitivities, that you are adapting between.

The model illustrated is very simple, and there are many ways in which it is incomplete and could be expanded depending on the application. For example, color information is not encoded independently of form, and the illustrated simulations take no account of the spatial structure of the images or of neural receptive fields, or of known interactions across mechanisms such as contrast normalization38. Similarly, all pixels in the images are given equal weight, and thus the simulations do not incorporate spatial factors such as how scenes are sampled with eye movements. Adaptation in the model is also assumed to represent simple multiplicative scaling. This is appropriate for some forms of chromatic adaptation but may not correctly describe the response changes at post-receptoral levels. Similarly, the contrast response functions in the model are linear and thus do not simulate the actual response functions of neurons. A further important limitation is that the illustrated simulations do not incorporate noise. If this noise occurs at or prior to the sites of the adaptation, then adaptation may adjust both signal and noise and consequently may have very different effects on appearance and visual performance39. One way to simulate the effects of noise is to introduce random perturbations in the stimulus28. However, this will not mimic what this noise "looks like" to an observer.

As suggested by the illustrated examples, the simulations can capture many properties of color experience that are not evident when considering only the spectral and contrast sensitivity of the observer. In particular, they highlight the importance of adaptation in normalizing color perception and compensating for the sensitivity limits of the observer. In this regard, the technique provides a number of advantages and applications for visualizing or predicting visual percepts. These include the following:

Better Simulations of Variant Vision

As noted, filtering an image for a different sensitivity reveals what one experiences when information in the image is altered, but does less well at predicting what an observer with that sensitivity would experience. As an example, a gray patch filtered to simulate the yellowing lens of an older observer's eye looks yellower9. But older observers who are accustomed to their aged lenses instead describe and probably literally see the stimulus as gray40. As shown here, this is a natural consequence of adaptation in the visual system28, and thus incorporating this adaptation is important for better visualizing an individual's percepts.

A Common Mechanism Predicting Differences Between Observers and Between Environments

Most simulation techniques are focused on predicting changes in the observer. Yet adaptation is also routinely driven by changes in the world18,19. Individuals immersed in different visual environments (e.g., urban vs. rural, or arid vs. lush) are exposed to very different patterns of stimulation which may lead to very different states of adaptation41,42. Moreover, these differences are accentuated among individuals occupying different niches in an increasingly specialized and technical society (e.g., an artist, radiologist, video game player, or scuba diver). Perceptual learning and expertise have been widely studied and depend on many factors43,44,45. But one of these may be simple exposure46,47. For example, one account of the "other race" effect, in which observers are better at distinguishing faces of their own ethnicity, is that they are adapted to the faces they commonly encounter48,49. Adaptation provides a common metric for evaluating the impact of a sensitivity change vs. a stimulus change on perception, and thus for predicting how two different observers might experience the same world vs. how the same observer might experience two different worlds.

Evaluating the Long-term Consequences of Adaptation

Actually adapting observers and then measuring how their sensitivity and perception change is a well-established and extensively investigated psychophysical technique. However, these measurements are typically restricted to short term exposures lasting minutes or hours. Increasing evidence suggests that adaptation also operates over much longer timescales that are much more difficult to test empirically50,51,52,53,54. Simulating adaptation has the advantage of pushing adaptation states to their theoretical long-term limits and thus exploring timescales that are not practical experimentally. It also allows for testing the perceptual consequences of gradual changes such as aging or a progressive disease.

Evaluating the Potential Benefits of Adaptation

A related problem is that while many functions have been proposed for adaptation, performance improvements are often not evident in studies of short-term adaptation, and this may in part be because these improvements arise only over longer timescales. Testing how well observers can perform different visual tasks with images adapted to simulate these timescales provides a novel method for exploring the perceptual benefits and costs of adaptation29.

Testing Mechanisms of Visual Coding and Adaptation

The simulations can help to visualize and compare both different models of visual mechanisms and different models of how these mechanisms adjust their sensitivity. Such comparisons can help reveal the relative importance of different aspects of visual coding for visual performance and perception.

Adapting Images to Observers

To the extent that adaptation helps one to see better, such simulations provide a potentially powerful tool for developing models of image processing that can better highlight information for observers. Such image enhancement techniques are widespread, but the present approach is designed to adjust an image in ways in which the actual brain adjusts, and thus to simulate the actual coding strategies that the visual system evolved to exploit. Preprocessing images in this way could in principle remove the need for observers to visually acclimate to a novel environment, by instead adjusting images to match the adaptation states that observers are currently in26,29.

It may seem unrealistic to suggest that adaptation could in practice discount nearly fully a sensitivity change from our percepts, yet there are many examples where percepts do appear unaffected by dramatic sensitivity differences55, and it is an empirical question how complete the adaptation is for any given case — one that adapted images could also be used to address. In any case, if the goal is to visualize the perceptual experience of an observer, then these simulations arguably come much closer to characterizing that experience than traditional simulations based only on filtering the image. Moreover, they provide a novel tool for predicting and testing the consequences and functions of sensory adaptation29. Again this adaptation is ubiquitous in sensory processing, and similar models could be exploited to explore the impact of adaptation on other visual attributes and other senses.

Disclosures

The authors have nothing to disclose.

Acknowledgments

Supported by National Institutes of Health (NIH) grant EY-10834.

References

- Vienot F, Brettel H, Ott L, Ben M'Barek A, Mollon JD. What do colour-blind people see? Nature. 1995;376:127–128. doi: 10.1038/376127a0.

- Brettel H, Vienot F, Mollon JD. Computerized simulation of color appearance for dichromats. J Opt Soc Am A Opt Image Sci Vis. 1997;14:2647–2655. doi: 10.1364/josaa.14.002647.

- Flatla DR, Gutwin C. So that's what you see: building understanding with personalized simulations of colour vision deficiency. Proceedings of the 14th international ACM SIGACCESS conference on Computers and accessibility. 2012. pp. 167–174.

- Machado GM, Oliveira MM, Fernandes LA. A physiologically-based model for simulation of color vision deficiency. IEEE Trans. Vis. Comput. Graphics. 2009;15:1291–1298. doi: 10.1109/TVCG.2009.113.

- Teller DY. First glances: the vision of infants. the Friedenwald lecture. Invest. Ophthalmol. Vis. Sci. 1997;38:2183–2203.

- Wade A, Dougherty R, Jagoe I. Tiny eyes. 2016. Available from: http://www.tinyeyes.com/

- Ball LJ, Pollack RH. Simulated aged performance on the embedded figures test. Exp. Aging Res. 1989;15:27–32. doi: 10.1080/03610738908259755.

- Sjostrom KP, Pollack RH. The effect of simulated receptor aging on two types of visual illusions. Psychon Sci. 1971;23:147–148.

- Lindsey DT, Brown AM. Color naming and the phototoxic effects of sunlight on the eye. Psychol Sci. 2002;13:506–512. doi: 10.1111/1467-9280.00489.

- Raj A, Rosenholtz R. What your design looks like to peripheral vision. Proceedings of the 7th Symposium on Applied Perception in Graphics and Visualization. 2010. pp. 88–92.

- Perry JS, Geisler WS. Gaze-contingent real-time simulation of arbitrary visual fields. International Society for Optics and Photonics: Electronic Imaging. 2002. pp. 57–69.

- Vinnikov M, Allison RS, Swierad D. Real-time simulation of visual defects with gaze-contingent display. Proceedings of the 2008 symposium on Eye tracking research. 2008. pp. 127–130.

- Hogervorst MA, van Damme WJM. Visualizing visual impairments. Gerontechnol. 2006;5:208–221.

- Aguilar C, Castet E. Gaze-contingent simulation of retinopathy: some potential pitfalls and remedies. Vision res. 2011;51:997–1012. doi: 10.1016/j.visres.2011.02.010.

- Rowe MP, Jacobs GH. Cone pigment polymorphism in New World monkeys: are all pigments created equal? Visual neurosci. 2004;21:217–222. doi: 10.1017/s0952523804213104.

- Rowe MP, Baube CL, Loew ER, Phillips JB. Optimal mechanisms for finding and selecting mates: how threespine stickleback (Gasterosteus aculeatus) should encode male throat colors. J. Comp. Physiol. A Neuroethol. Sens. Neural. Behav. Physiol. 2004;190:241–256. doi: 10.1007/s00359-004-0493-8.

- Melin AD, Kline DW, Hickey CM, Fedigan LM. Food search through the eyes of a monkey: a functional substitution approach for assessing the ecology of primate color vision. Vision Res. 2013;86:87–96. doi: 10.1016/j.visres.2013.04.013.

- Webster MA. Adaptation and visual coding. J vision. 2011;11(5):1–23. doi: 10.1167/11.5.3.

- Webster MA. Visual adaptation. Annu Rev Vision Sci. 2015;1:547–567. doi: 10.1146/annurev-vision-082114-035509.

- Webster MA, Kaping D, Mizokami Y, Duhamel P. Adaptation to natural facial categories. Nature. 2004;428:557–561. doi: 10.1038/nature02420.

- Webster MA, MacLeod DIA. Visual adaptation and face perception. Philos. Trans. R. Soc. Lond., B, Biol. Sci. 2011;366:1702–1725. doi: 10.1098/rstb.2010.0360.

- Schweinberger SR, et al. Auditory adaptation in voice perception. Curr Biol. 2008;18:684–688. doi: 10.1016/j.cub.2008.04.015.

- Yovel G, Belin P. A unified coding strategy for processing faces and voices. Trends cognit sci. 2013;17:263–271. doi: 10.1016/j.tics.2013.04.004.

- Shadmehr R, Smith MA, Krakauer JW. Error correction, sensory prediction, and adaptation in motor control. Annu rev neurosci. 2010;33:89–108. doi: 10.1146/annurev-neuro-060909-153135.

- Wolpert DM, Diedrichsen J, Flanagan JR. Principles of sensorimotor learning. Nat rev Neurosci. 2011;12:739–751. doi: 10.1038/nrn3112.

- McDermott K, Juricevic I, Bebis G, Webster MA. Rogowitz BE, Pappas TN, editors. Human Vision and Electronic Imaging. SPIE. 2008. V-1-10.

- Juricevic I, Webster MA. Variations in normal color vision. V. Simulations of adaptation to natural color environments. Visual neurosci. 2009;26:133–145. doi: 10.1017/S0952523808080942.

- Webster MA, Juricevic I, McDermott KC. Simulations of adaptation and color appearance in observers with varying spectral sensitivity. Ophthalmic Physiol Opt. 2010;30:602–610. doi: 10.1111/j.1475-1313.2010.00759.x.

- Webster MA. Probing the functions of contextual modulation by adapting images rather than observers. Vision res. 2014.

- Webster MA. Human colour perception and its adaptation. Network: Computation in Neural Systems. 1996;7:587–634.

- Webster MA, Mollon JD. Colour constancy influenced by contrast adaptation. Nature. 1995;373:694–698. doi: 10.1038/373694a0.

- Brainard DH, Stockman A. Bass M, editor. OSA Handbook of Optics. 2010. pp. 10–11.

- Maloney LT. Evaluation of linear models of surface spectral reflectance with small numbers of parameters. J Opt Soc Am A Opt Image Sci Vis. 1986;3:1673–1683. doi: 10.1364/josaa.3.001673.

- Mizokami Y, Webster MA. Are Gaussian spectra a viable perceptual assumption in color appearance? J Opt Soc Am A Opt Image Sci Vis. 2012;29:A10–A18. doi: 10.1364/JOSAA.29.000A10.

- Chichilnisky EJ, Wandell BA. Photoreceptor sensitivity changes explain color appearance shifts induced by large uniform backgrounds in dichoptic matching. Vision res. 1995;35:239–254. doi: 10.1016/0042-6989(94)00122-3.

- Boehm AE, MacLeod DI, Bosten JM. Compensation for red-green contrast loss in anomalous trichromats. J vision. 2014;14 doi: 10.1167/14.13.19.

- Regan BC, Mollon JD. In: Colour Vision Deficiencies. Vol. XIII. Cavonius CR, editor. Dordrecht: Springer; 1997. pp. 261–270.

- Carandini M, Heeger DJ. Normalization as a canonical neural computation. Nature reviews. Neurosci. 2011;13:51–62. doi: 10.1038/nrn3136.

- Rieke F, Rudd ME. The challenges natural images pose for visual adaptation. Neuron. 2009;64:605–616. doi: 10.1016/j.neuron.2009.11.028.

- Hardy JL, Frederick CM, Kay P, Werner JS. Color naming, lens aging, and grue: what the optics of the aging eye can teach us about color language. Psychol sci. 2005;16:321–327. doi: 10.1111/j.0956-7976.2005.01534.x.

- Webster MA, Mollon JD. Adaptation and the color statistics of natural images. Vision res. 1997;37:3283–3298. doi: 10.1016/s0042-6989(97)00125-9.

- Webster MA, Mizokami Y, Webster SM. Seasonal variations in the color statistics of natural images. Network. 2007;18:213–233. doi: 10.1080/09548980701654405.

- Sagi D. Perceptual learning in Vision Research. Vision res. 2011.

- Lu ZL, Yu C, Watanabe T, Sagi D, Levi D. Perceptual learning: functions, mechanisms, and applications. Vision res. 2009;50:365–367. doi: 10.1016/j.visres.2010.01.010.

- Bavelier D, Green CS, Pouget A, Schrater P. Brain plasticity through the life span: learning to learn and action video games. Annu rev neurosci. 2012;35:391–416. doi: 10.1146/annurev-neuro-060909-152832.

- Kompaniez E, Abbey CK, Boone JM, Webster MA. Adaptation aftereffects in the perception of radiological images. PloS one. 2013;8:e76175. doi: 10.1371/journal.pone.0076175.

- Ross H. Behavior and Perception in Strange Environments. George Allen & Unwin; 1974.

- Armann R, Jeffery L, Calder AJ, Rhodes G. Race-specific norms for coding face identity and a functional role for norms. J vision. 2011;11:9. doi: 10.1167/11.13.9.

- Oruc I, Barton JJ. Adaptation improves discrimination of face identity. Proc. R. Soc. A. 2011;278:2591–2597. doi: 10.1098/rspb.2010.2480.

- Kording KP, Tenenbaum JB, Shadmehr R. The dynamics of memory as a consequence of optimal adaptation to a changing body. Nature neurosci. 2007;10:779–786. doi: 10.1038/nn1901.

- Neitz J, Carroll J, Yamauchi Y, Neitz M, Williams DR. Color perception is mediated by a plastic neural mechanism that is adjustable in adults. Neuron. 2002;35:783–792. doi: 10.1016/s0896-6273(02)00818-8.

- Delahunt PB, Webster MA, Ma L, Werner JS. Long-term renormalization of chromatic mechanisms following cataract surgery. Visual neurosci. 2004;21:301–307. doi: 10.1017/S0952523804213025.

- Bao M, Engel SA. Distinct mechanism for long-term contrast adaptation. Proc Natl Acad Sci USA. 2012;109:5898–5903. doi: 10.1073/pnas.1113503109.

- Kwon M, Legge GE, Fang F, Cheong AM, He S. Adaptive changes in visual cortex following prolonged contrast reduction. J vision. 2009;9(2):1–16. doi: 10.1167/9.2.20.

- Webster MA. In: Handbook of Color Psychology. Elliott A, Fairchild MD, Franklin A, editors. Cambridge University Press; 2015. pp. 197–215.