Abstract

Background/Aims

Clinicians are increasingly being asked to provide their opinion on the decision-making capacity of older adults, while validated and widely available tools are lacking. We sought to identify an online cognitive screening tool for assessing mental capacity through the measurement of executive function.

Methods

A mixed elderly sample of 45 individuals, aged 65 years and older, was screened with the Montreal Cognitive Assessment (MoCA) and the modified Cambridge Brain Sciences Battery.

Results

Two computerized tests from the Cambridge Brain Sciences Battery were shown to provide information over and above that obtained with a standard cognitive screening tool, correctly sorting the majority of individuals with borderline MoCA scores.

Conclusions

The brief computerized battery should be used in conjunction with standard tests such as the MoCA in order to differentiate cognitively intact from cognitively impaired older adults.

Keywords: Cambridge Brain Sciences Battery, Montreal Cognitive Assessment, Executive function, Capacity assessment

Introduction

As our society ages, it has become increasingly important to assess complex mental capacities. In capacity assessment, there is strong interest in discriminating cognitively intact from cognitively impaired functioning [1]. The Mini-Mental State Examination (MMSE) [2] and the Montreal Cognitive Assessment (MoCA) [3] are the two cognitive screening tests encountered most often in probate cases [4]. Although the MMSE is currently the test most commonly used in clinical practice and in contentious probate, the MoCA is increasingly replacing it. One reason is that the MoCA is freely available for noncommercial, clinical, and academic purposes. Furthermore, studies comparing the two instruments favor the MoCA because of its greater sensitivity for detecting patients with cognitive impairment [5, 6]. The MoCA also measures a wider range of abilities, including executive functions [4]. Executive functions, which depend largely on the integrity of the frontal lobe and associated structures, are high-level cognitive abilities required for goal-directed behavior and for formulating and executing plans for the future [7]. It is generally agreed that intact executive functioning is important for effective decision-making [8, 9]. For these reasons, the MoCA was chosen as the comparator assessment of cognition in this study.

The original MoCA cutoff score of 26/30 for normal cognition has been contested. This cutoff may be too high, producing false positives; that is, it may misclassify cognitively intact individuals as cognitively impaired [10, 11, 12, 13]. Furthermore, existing research has not converged on an optimal cutoff score for elderly populations, in which the incidence of cognitive impairment is high [11, 12]. Therefore, a primary aim of this study was to determine the feasibility of using a brief computerized battery alongside the MoCA to classify borderline individuals more accurately. In this study, individuals with a MoCA score between 23 and 26 are described as having borderline cognitive impairment; that is, they cannot be classified definitively as impaired or unimpaired.

Although paper-and-pencil assessment has been the standard for decades, computerized cognitive testing has many advantages. Because less trained administration is required, it reduces costs and offers greater scheduling flexibility. Computerized tests can also accommodate the cognitive strengths and weaknesses of the individual participant. For example, many computerized tests, such as those in the Cambridge Brain Sciences (CBS) Battery [14], adapt to the participant's performance, so that each subsequent level increases or decreases in complexity. By matching the level of difficulty to the participant's cognitive abilities, such tests minimize floor and ceiling effects. They can also record response accuracy and speed with a precision not possible in paper-and-pencil assessments. For these reasons, computerized testing is emerging as an attractive alternative to traditional instruments [15].

In this study, the MoCA and a modified CBS (mCBS) Battery were used for testing. The full CBS Battery consists of twelve tasks [14]. Five tests from the CBS Battery were chosen based on five aspects of cognition considered most relevant to the assessment of complex mental capacities. The five tests chosen were Paired Associates, Feature Match, Odd One Out, Double Trouble, and the Hampshire Tree Task. Between them, these tests assess aspects of short-term memory, attention, reasoning, and planning. The objective of this study was to identify a short screening tool from the mCBS Battery to accompany the MoCA in a capacity assessment. The acceptability of computerized testing among the elderly was also recorded.

Subjects and Methods

Study Design

This prospective study was conducted at Sunnybrook Health Sciences Centre in Toronto, ON, Canada, where each participant underwent approximately 30 min of cognitive screening in a single study session. At this session, informed consent was obtained by one of the assessors, and participants reported their age, gender, and highest level of education. Where applicable, a geriatric psychiatrist diagnosed a mood or neurocognitive disorder based on DSM-5 criteria. For approximately half of the participants, computerized testing was presented before the MoCA; for the remaining participants, the MoCA was administered first.

Subjects and Recruitment

Participants were recruited from a geriatric psychiatry outpatient clinic and from the general community. The general inclusion criteria were an age greater than 65 years and the ability and willingness to provide informed consent. Three groups of participants were recruited: (1) a community sample of older adults without any psychiatric history or cognitive impairment; (2) a group with a diagnosis of a mood disorder, with or without mild neurocognitive disorder; and (3) a group with a major neurocognitive disorder and an MMSE score of 20 or greater. The exclusion criteria were: (1) a physical handicap or condition preventing effective use of a computer mouse with buttons; (2) unwillingness to undergo computerized testing; and (3) insufficient English language skills to complete the tests. The study design was approved by the Research Ethics Board at Sunnybrook Health Sciences Centre, and all participants gave written informed consent.

Apparatus

The computerized cognitive battery (mCBS Battery) was presented on a laptop and the MoCA was presented on paper. Each computerized task from the mCBS Battery began with text and video instructions. The assessors were on hand to answer any additional questions during testing. The five tests that compose the mCBS Battery include (in the order that they appear in the battery): (1) Paired Associates; (2) Feature Match; (3) Odd One Out; (4) Double Trouble; and (5) Hampshire Tree Task.

mCBS Battery

Paired Associates. Paired Associates is based on a paradigm that is commonly used to assess memory impairments in aging clinical populations [16]. Boxes are displayed at random locations on an invisible 5 × 5 grid. The boxes open one after another to reveal an enclosed object. The objects are then displayed in random order in the center of the grid, and the participant must click on the boxes that contained them. If the participant recalls all of the object-location paired associates correctly, the difficulty level on the next trial increases by 1 object-box pair; otherwise, it decreases by 1. After 3 errors, the test ends. The test starts with just 2 boxes (max. level = 24; min. level = 2). There are two outcome measures: maximum level achieved and mean level achieved.
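The adaptive rule for Paired Associates can be illustrated by replaying a sequence of trial outcomes. This is a minimal sketch, not the CBS implementation: it assumes the 3-error limit counts errors cumulatively, and it defines "level achieved" (which the text does not define precisely) as the level of each correctly completed trial. The function and field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List

MIN_LEVEL = 2    # the test starts with 2 object-box pairs
MAX_LEVEL = 24   # stated maximum level
MAX_ERRORS = 3   # the test ends after 3 errors (assumed cumulative)


@dataclass
class PairedAssociatesResult:
    max_level: int               # highest level completed correctly (0 if none)
    mean_level: float            # mean level over correct trials (0.0 if none)
    levels_presented: List[int]  # level shown on every trial, in order


def score_paired_associates(outcomes: Iterable[bool]) -> PairedAssociatesResult:
    """Replay trial outcomes (True = every pair recalled correctly)."""
    level = MIN_LEVEL
    errors = 0
    presented: List[int] = []
    achieved: List[int] = []
    for correct in outcomes:
        presented.append(level)
        if correct:
            achieved.append(level)
            level = min(level + 1, MAX_LEVEL)   # one more pair on the next trial
        else:
            errors += 1
            if errors >= MAX_ERRORS:
                break                           # the third error ends the test
            level = max(level - 1, MIN_LEVEL)   # one fewer pair on the next trial
    if not achieved:
        return PairedAssociatesResult(0, 0.0, presented)
    return PairedAssociatesResult(max(achieved), sum(achieved) / len(achieved), presented)


result = score_paired_associates([True, True, False, True, False, False])
print(result.levels_presented)                       # [2, 3, 4, 3, 4, 3]
print(result.max_level, round(result.mean_level, 2)) # 3 2.67
```

Under these assumptions, the two outcome measures can diverge: a participant who briefly reaches a high level but then fails repeatedly has a high maximum but a lower mean.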

Feature Match. Feature Match is based on the classic feature search tasks that have historically been used to measure attentional processing [17]. Two grids are displayed on the screen, each containing a set of abstract shapes. In half of the trials, the grids differ by just one shape. The participant must indicate whether or not the contents of the two grids are identical, solving as many problems as possible within 90 s to maximize the score. If the response is correct, the total score increases by the number of shapes in the grid and subsequent trials contain more shapes. If the response is incorrect, the total score decreases by the number of shapes in the grid and subsequent trials contain fewer shapes. The first grids contain 2 abstract shapes each. The outcome measure is the total score.
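The Feature Match scoring rule can be sketched as follows. The text does not say how many shapes are added or removed after each response, or whether there is a minimum grid size, so this sketch assumes a step of one shape and a floor of one shape; the 90 s limit is applied to cumulative response time. All names are hypothetical.

```python
from typing import Iterable, Tuple

TIME_LIMIT_S = 90.0  # stated time limit
START_SHAPES = 2     # the first grids contain 2 shapes each
STEP = 1             # assumption: grid size changes by one shape per trial
MIN_SHAPES = 1       # assumption: grids never drop below one shape


def score_feature_match(trials: Iterable[Tuple[bool, float]]) -> int:
    """trials: (response_correct, response_time_s) pairs in presentation order."""
    shapes = START_SHAPES
    score = 0
    elapsed = 0.0
    for correct, response_time_s in trials:
        elapsed += response_time_s
        if elapsed > TIME_LIMIT_S:
            break  # responses after the 90 s window are not scored
        if correct:
            score += shapes   # reward equals the current grid size
            shapes += STEP
        else:
            score -= shapes   # penalty equals the current grid size
            shapes = max(shapes - STEP, MIN_SHAPES)
    return score


print(score_feature_match([(True, 5.0), (True, 5.0), (False, 5.0), (True, 5.0)]))  # 4
```

Because reward and penalty are both scaled by grid size, an error on a large grid costs as much as a correct response on that grid would have earned.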

Odd One Out. Odd One Out (also called Deductive Reasoning) is based on a subset of problems from the Cattell Culture Fair Intelligence Test [18]. A 3 × 3 grid of cells is displayed on the screen. Each cell contains a variable number of copies of a colored shape. The features that make up the objects in each cell (color, shape, and number of copies) are related to each other according to a set of rules. The participant must deduce the rules that relate the object features and select the one cell whose contents do not correspond to those rules. To gain the maximum number of points, the participant must solve as many problems as possible within 90 s. If the response is correct, the total score increases by 1 point and the next problem is more complex. If the response is incorrect, the total score decreases by 1 point. The outcome measure is the total number of correct responses.

Double Trouble. Double Trouble (also called Color-Word Remapping) is a more challenging variant of the Stroop test [19]. A color word is displayed at the top of the screen, e.g., the word RED drawn in blue ink. The participant must indicate which of 2 color words at the bottom of the screen names the color in which the top word is drawn. The color-word mappings may be congruent, incongruent, or doubly incongruent, depending on whether the color that a given word names matches the color in which it is drawn. To maximize the score, the participant must solve as many problems as possible within 90 s. The total score increases or decreases by 1 after each trial, depending on whether the participant responded correctly. The outcome measure is the total score.
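The stimulus logic can be made concrete with a small data model. This is a hypothetical sketch: the text does not define the three mapping conditions precisely, so `condition` assumes that a trial is incongruent when either the top word or the response words mismatch their ink, and doubly incongruent when both do. The class and function names are illustrative only.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ColorWord:
    meaning: str  # the color the word names, e.g. "red"
    ink: str      # the color the word is drawn in

    @property
    def congruent(self) -> bool:
        return self.meaning == self.ink


def correct_option(top: ColorWord, options: Sequence[ColorWord]) -> int:
    """Index of the option whose meaning names the ink color of the top word."""
    for index, option in enumerate(options):
        if option.meaning == top.ink:
            return index
    raise ValueError("no option names the ink color of the top word")


def condition(top: ColorWord, options: Sequence[ColorWord]) -> str:
    """Assumed labelling: mismatch in the top word, the options, or both."""
    top_mismatch = not top.congruent
    options_mismatch = any(not option.congruent for option in options)
    if top_mismatch and options_mismatch:
        return "doubly incongruent"
    if top_mismatch or options_mismatch:
        return "incongruent"
    return "congruent"


# The example from the text: RED drawn in blue ink, with two response words.
top = ColorWord("red", "blue")
options = [ColorWord("blue", "red"), ColorWord("red", "blue")]
print(correct_option(top, options), condition(top, options))  # 0 doubly incongruent
```

In this example the correct response is the word BLUE, even though it is drawn in red, which is what makes the remapping harder than the classic Stroop test.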

Hampshire Tree Task. The Hampshire Tree Task (also called Spatial Planning) is based on the Tower of London Task [20], which is widely used to measure executive function. Numbered beads are positioned on a tree-shaped frame. The participant repositions the beads so that they are arranged in ascending numerical order, running from left to right and top to bottom on the tree. To maximize the score, the participant must solve as many problems as possible, in as few moves as possible, within 3 min. The problems become progressively harder, with the total number of moves required and the planning complexity increasing in steps. A trial is aborted if the participant makes more than twice the number of moves required to solve the problem. After each trial, the total score is increased by twice the minimum number of moves required minus the number of moves actually made, thereby rewarding efficient planning. The first problem can be solved in just 3 moves. The outcome measure is the total score [14].
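The per-trial scoring rule for the Hampshire Tree Task can be written out directly. This sketch follows the rule stated above; the text does not say what an aborted trial contributes, so it assumes such a trial adds nothing. The function names are hypothetical.

```python
from typing import Iterable, Tuple


def trial_points(min_moves: int, moves_made: int) -> int:
    """Points for one trial: 2 x minimum moves required minus moves actually made."""
    if moves_made > 2 * min_moves:
        return 0  # assumption: an aborted trial adds nothing to the total
    return 2 * min_moves - moves_made


def score_tree_task(trials: Iterable[Tuple[int, int]]) -> int:
    """trials: (minimum_moves_required, moves_made) for each attempted problem."""
    return sum(trial_points(min_moves, made) for min_moves, made in trials)


# A perfect 3-move solution earns 3 points; 5 moves on a 3-move problem earn 1;
# 9 moves on a 4-move problem exceed the 8-move limit, so the trial is aborted.
print(score_tree_task([(3, 3), (3, 5), (4, 9)]))  # 4
```

Under this rule, a completed trial is worth between 0 points (twice the minimum moves) and the minimum number of moves (an optimal solution), so harder problems solved efficiently carry more weight.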

Montreal Cognitive Assessment

The MoCA was developed as a brief cognitive screening instrument to detect mild cognitive impairment [3]. This paper-and-pencil assessment takes approximately 10 min to administer and is scored out of 30 points. The cognitive domains assessed include visuospatial skills, executive functions, memory, attention, concentration, calculation, language, abstraction, and orientation. The three versions of the MoCA and their instructions are available on the official MoCA website (http://www.mocatest.org/). To ensure consistent administration, both assessors used the standard English version 1 (original) of the MoCA with its associated instructions.

Data Analysis

Raw scores for each participant were recorded for both the mCBS Battery and the MoCA. The additional MoCA point normally given to participants with 12 or fewer years of education was not awarded: because mCBS Battery scores are not adjusted for educational level, the raw MoCA score was used to ensure accurate comparisons between the two tests. Significant outliers were winsorized [21]. High outliers were reduced to the upper limit for non-outliers (quartile 3 + 1.5 × the interquartile range [IQR]), and low outliers were increased to the lower limit (quartile 1 − 1.5 × IQR). Only 4 of the 269 total scores were treated this way. Participants were then divided into three groups according to their MoCA score. Group 1 comprised all individuals with a MoCA score below 23. Group 2 comprised the middle two quartiles, all of whom fell into the borderline cognitive impairment range (MoCA scores 23–26). Group 3 comprised all individuals with a MoCA score above 26. The three groups are described in Table 1.
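The winsorizing step (clamping values beyond quartile 1 − 1.5 × IQR or quartile 3 + 1.5 × IQR to those limits) can be sketched with the Python standard library. The text does not state which quartile definition was used; this sketch assumes `statistics.quantiles` with `method="inclusive"` (Python 3.8+), and other quartile methods will shift the limits slightly. The function name is hypothetical.

```python
import statistics
from typing import List, Sequence


def winsorize_iqr(values: Sequence[float], k: float = 1.5) -> List[float]:
    """Clamp values lying outside Q1 - k*IQR or Q3 + k*IQR to those limits."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [min(max(v, lower), upper) for v in values]


print(winsorize_iqr([1, 2, 3, 4, 5, 6, 7, 8, 100]))  # [1, 2, 3, 4, 5, 6, 7, 8, 13.0]
```

Here Q1 = 3 and Q3 = 7, so the upper limit is 7 + 1.5 × 4 = 13 and the single extreme value is pulled in to 13 rather than being discarded.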

Table 1.

Description of the three participant groups

| Group | Cognitive classification | MoCA score range | n | Mean (Z-score) | SD (Z-score) |
|---|---|---|---|---|---|
| 1 | Impaired | 22 and below | 11 | −1.13 | 0.49 |
| 2 | Borderline | 23–26 | 22 | −0.011 | 0.38 |
| 3 | Unimpaired | 27–30 | 12 | 1.23 | 0.37 |

After the data were sorted into the three groups, the mean score on each of the five mCBS Battery tests was calculated for group 1 (impaired) and group 3 (unimpaired). Each group 2 (borderline) individual's score on each test was then compared with these two means and classified in one of three ways:

- If the score was greater than or equal to the group 3 mean, it was sorted into group 3 (unimpaired).

- If the score was less than or equal to the group 1 mean, it was sorted into group 1 (impaired).

- If the score fell between the group 1 and group 3 means, it was labelled as being in the “gray zone.”
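The grouping and sorting procedure can be sketched in a few lines. This illustration assumes that higher test scores indicate better performance, as for the mCBS tests described above. The function names and the participant data are hypothetical and do not come from the study.

```python
from statistics import mean
from typing import Dict, List


def moca_group(moca_score: int) -> int:
    """Group 1: below 23; group 2: 23-26 (borderline); group 3: above 26."""
    if moca_score < 23:
        return 1
    if moca_score <= 26:
        return 2
    return 3


def sort_borderline(score: float, group1_mean: float, group3_mean: float) -> str:
    """Classify one borderline individual's score on one test (higher = better)."""
    if group1_mean > group3_mean:
        raise ValueError("expected the impaired-group mean to be the lower one")
    if score >= group3_mean:
        return "group 3"
    if score <= group1_mean:
        return "group 1"
    return "gray zone"


# Hypothetical (MoCA score, test score) pairs, for illustration only.
participants = [(20, 4.0), (21, 6.0), (24, 9.0), (25, 5.0), (26, 7.0), (28, 8.0), (29, 10.0)]
by_group: Dict[int, List[float]] = {1: [], 2: [], 3: []}
for moca, test_score in participants:
    by_group[moca_group(moca)].append(test_score)
g1, g3 = mean(by_group[1]), mean(by_group[3])  # 5.0 and 9.0
print([sort_borderline(s, g1, g3) for s in by_group[2]])  # ['group 3', 'group 1', 'gray zone']
```

Note that the group 1 and group 3 means act as the two thresholds, so the width of the gray zone depends on how far apart the reference groups score on each test.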

Results

The sample consisted of 45 individuals (20 men and 25 women) aged 65 years and older, with an average age of 78 years. Education levels varied: 33.3% of participants had 12 or fewer years of education, and 66.7% had more than 12 years. Two individuals withdrew from the study, and complete data were available for the remaining 45 participants.

When the sorting of group 2 (individuals with borderline MoCA scores) was analyzed, certain mCBS Battery tests could not reliably distinguish between impaired and unimpaired individuals. This was evident for Paired Associates maximum level (test 1 max.) and Feature Match (test 2), which placed too many scores in the “gray zone”: for each of these measures, 50% (11/22) of the scores were sorted into the “gray zone,” and both were therefore excluded. Paired Associates mean level was also excluded, as it placed 4 scores in the “gray zone” and the group 1 and group 3 means were extremely close (group 1 mean: 2.50; group 3 mean: 3.03).

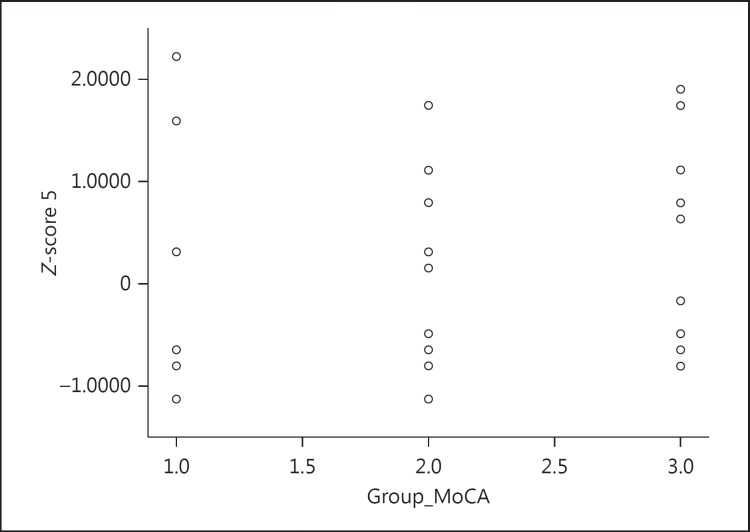

An independent-samples t test was conducted to compare the group 1 and group 3 mean scores on the remaining tests, i.e., Odd One Out, Double Trouble, and the Hampshire Tree Task (tests 3, 4, and 5, respectively). Because each test had a different range of scores (e.g., the Paired Associates maximum was 5, whereas the Feature Match maximum was 125), the test scores were converted to Z-scores to allow comparisons between tests. In this analysis, there was a significant difference in mean scores between group 1 (M = −0.12, SD = 0.80) and group 3 (M = 0.62, SD = 0.79) for Odd One Out (t(21) = −2.235, p = 0.036). There was also a significant difference in mean scores between group 1 (M = −0.040, SD = 0.62) and group 3 (M = 0.52, SD = 1.03) for Double Trouble (t(21) = −2.57, p = 0.018). However, there was no significant difference in mean scores between group 1 (M = −0.37, SD = 1.21) and group 3 (M = 0.32, SD = 0.94) for the Hampshire Tree Task (t(21) = −1.54, p = 0.14). The Hampshire Tree Task (test 5), on the other hand, appeared too difficult for an elderly population, as any given score on the test was equally likely to come from any of the three groups. This was supported by two additional independent-samples t tests for the Hampshire Tree Task, comparing groups 1 and 2 and groups 2 and 3. There was no significant difference in mean scores between group 1 (M = −0.37, SD = 1.21) and group 2 (M = 0.01, SD = 0.89) (t(31) = −1.01, p = 0.32), nor between group 2 (M = 0.01, SD = 0.89) and group 3 (M = 0.32, SD = 0.95) (t(32) = −0.97, p = 0.34). Figure 1 illustrates this: the scatterplot shows that the same Hampshire Tree Task score could be obtained by individuals in group 1, 2, or 3 (labelled Group_MoCA). The Hampshire Tree Task was therefore not useful in a screening battery, because it could not distinguish between impaired, borderline, and unimpaired individuals.
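The t statistics reported above can be checked from the published summary statistics with a pooled-variance (Student's) independent-samples t test. This is an illustrative sketch, not the authors' analysis code. It assumes equal variances, which is consistent with the reported degrees of freedom (n1 + n2 − 2), and it computes the two-sided p value by integrating the t density with Simpson's rule so that only the standard library is needed. Because the published means and SDs are rounded, recomputed values differ slightly from those reported.

```python
import math
from typing import Tuple


def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm) * (1 + x * x / df) ** (-(df + 1) / 2)


def two_sided_p(t: float, df: int, steps: int = 2000) -> float:
    """P(|T| >= |t|) = 1 - 2 * integral of the density over [0, |t|] (Simpson's rule)."""
    b = abs(t)
    h = b / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 1 - 2 * total * h / 3


def pooled_t_test(m1: float, s1: float, n1: int,
                  m2: float, s2: float, n2: int) -> Tuple[float, int, float]:
    """Student's independent-samples t test computed from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    t = (m1 - m2) / se
    return t, df, two_sided_p(t, df)


# Odd One Out, group 1 (n = 11) vs group 3 (n = 12), Z-score summaries from the text
t, df, p = pooled_t_test(-0.12, 0.80, 11, 0.62, 0.79, 12)
print(f"t({df}) = {t:.2f}, p = {p:.3f}")
```

For Odd One Out this yields t of about −2.23 on 21 degrees of freedom, with a p value close to the reported 0.036. In practice, a statistics library routine that tests from summary statistics would normally be preferred over hand-rolled integration.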

Fig. 1.

Group_MoCA versus test 5 (Hampshire Tree Task). Test scores were converted to Z-scores so that the tests could be compared on an equivalent scale.

Both remaining tests, Odd One Out and Double Trouble, placed fewer scores in the “gray zone” and could differentiate between group 1 (impaired) and group 3 (unimpaired). Although there were 22 individuals in group 2, one set of scores was excluded from this analysis because the participant was color-blind, a condition that impairs performance on test 4, Double Trouble. The analysis therefore includes 21 individuals. In the first analysis, 57% of these individuals were clearly sorted into group 1 or 3 by both tests 3 and 4 (Odd One Out and Double Trouble). In a supplementary analysis, in which classification by only one of the two tests was also accepted, 81% of the individuals were clearly sorted. The remaining 19% (4/21) could not be sorted, as Odd One Out and Double Trouble reached opposite conclusions (i.e., one test sorted them into group 1 and the other into group 3). Three of these four individuals were sorted into the unimpaired group by Odd One Out and into the impaired group by Double Trouble. A possible explanation is that Odd One Out increases in complexity as each level is passed, whereas all Double Trouble trials are of equal difficulty.
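The two sorting analyses can be expressed as a rule that merges the per-test labels. This is a sketch of our reading of the text: the first analysis counts an individual as sorted only when both tests agree, and the supplementary analysis also accepts a classification when one test assigns a group and the other leaves the individual in the gray zone. The function names are hypothetical.

```python
from typing import Iterable, Tuple

GRAY = "gray zone"


def combine(odd_one_out: str, double_trouble: str) -> Tuple[str, str]:
    """Merge two per-test labels ("group 1", "group 3" or "gray zone")."""
    if odd_one_out == double_trouble:
        if odd_one_out == GRAY:
            return "unsorted", "both gray"
        return odd_one_out, "both tests agree"
    if GRAY in (odd_one_out, double_trouble):
        label = double_trouble if odd_one_out == GRAY else odd_one_out
        return label, "one test only"
    return "unsorted", "tests conflict"


def sorted_proportions(pairs: Iterable[Tuple[str, str]]) -> Tuple[float, float]:
    """Return the (first-analysis, supplementary) proportions of individuals sorted."""
    reasons = [combine(a, b)[1] for a, b in pairs]
    agree = reasons.count("both tests agree")
    one_test = reasons.count("one test only")
    return agree / len(reasons), (agree + one_test) / len(reasons)


print(combine("group 3", "group 1"))  # ('unsorted', 'tests conflict')
print(combine(GRAY, "group 1"))       # ('group 1', 'one test only')
```

With this rule, every individual who is not sorted in the supplementary analysis is either left in the gray zone by both tests or receives conflicting labels.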

In this study, 98% of the participants who began computerized testing completed it. Three participants who completed the study reported that they used computers rarely or not at all, which suggests that the ability to use a computer mouse with buttons should not be assumed in an elderly sample.

Discussion

We found that using two brief computerized tests, Odd One Out and Double Trouble, in addition to the MoCA was a valid cognitive screening method for complex mental capacity assessment. These tests provide useful additional information about an individual's cognitive state because they can classify individuals with borderline cognitive impairment as unimpaired or impaired [22, 23]. These results suggest that tests 3 and 4, Odd One Out and Double Trouble, can effectively distinguish between individuals in groups 1 and 3, i.e., those classified as impaired or unimpaired by the MoCA. These tests may be used in the assessment of complex capacities such as testamentary capacity, the capacity to make a gift, the capacity to manage property, and the capacity to execute powers of attorney.

It is important to note that these two tests provide classifications that would not be available if individuals with borderline impairment were sorted strictly on the basis of raw MoCA scores. If sorting were based on MoCA scores alone, scores of 23 and 24 would place individuals in the impaired group, and scores of 25 and 26 would place them in the unimpaired group. For the latter, this would lead to distinctly different conclusions: only 23% of the individuals who scored 25 or 26 on the MoCA would be classified the same way by both sorting methods (the MoCA and the two computerized tests), whereas 77% would either be classified in contrast to Odd One Out and Double Trouble or be classified as unimpaired by the MoCA although the two tests had left them unclassified (“gray zone”). For individuals with MoCA scores of 23 and 24, MoCA-based sorting into group 1 (the impaired group) agreed more closely with the computerized tests, with 88% of classifications matching those of Odd One Out and Double Trouble. These results suggest that the two computerized tests provide additional detail, especially for sorting individuals with MoCA scores of 25 and 26, at the upper end of the borderline range.
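The comparison above, between a MoCA-only split of the borderline range and the labels from the two computerized tests, reduces to a percent-agreement calculation. This sketch assumes that a “gray zone” label never counts as agreement, consistent with the description above. The function names and example data are hypothetical.

```python
from typing import Iterable, Tuple


def moca_only_label(moca_score: int) -> str:
    """Split of the borderline range described in the text: 23-24 vs 25-26."""
    if moca_score in (23, 24):
        return "group 1"
    if moca_score in (25, 26):
        return "group 3"
    raise ValueError("not a borderline MoCA score (23-26)")


def percent_agreement(pairs: Iterable[Tuple[int, str]]) -> float:
    """pairs: (MoCA score, label from Odd One Out and Double Trouble)."""
    items = list(pairs)
    matches = sum(moca_only_label(score) == label for score, label in items)
    return 100.0 * matches / len(items)


# Hypothetical example: a "gray zone" label never counts as agreement.
print(percent_agreement([(25, "group 3"), (26, "gray zone"), (24, "group 1"), (23, "group 3")]))  # 50.0
```

Computing agreement separately for scores of 23–24 and 25–26, as in the text, shows where a single MoCA cutoff and the computerized tests diverge most.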

In addition to our aim to supplement the MoCA with a brief battery, we wanted to evaluate the feasibility of using a computerized battery in an elderly population. The CBS Battery has been used effectively in other studies with older adults to measure global or domain-specific cognitive functions [24, 25, 26]. In this study, the computerized tests had excellent acceptability, as demonstrated by the very high percentage (98%) of subjects who completed computerized testing. Since not all elderly individuals use a computer and/or mouse on a regular basis, touch screen versions of these tests may be an added benefit for some. This change could increase sample sizes in the future, as the skill of being able to use a computer mouse with buttons would not be a limiting factor.

In this study, Odd One Out and Double Trouble were the two computerized tests of executive function that proved acceptable in an elderly population. Odd One Out is a reasoning task that assesses one's ability to think about ideas, analyze situations, and solve problems. Double Trouble is a measure of sustained attention that assesses one's ability to resist interference from prepotent word-processing rules. In this pilot study (n = 45), 81% of the borderline individuals analyzed could be clearly classified as unimpaired (group 3) or impaired (group 1) based on at least one computerized test, Double Trouble or Odd One Out. The use of these two tests to accompany the MoCA is especially relevant in an elderly population: because studies suggest that the standard MoCA cutoff score for normal cognition is too strict, an additional tool to classify borderline individuals as unimpaired or impaired is needed.

Disclosure Statement

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors. A.M.O. is supported by the Canada Excellence Research Chairs Program (CERC) and the Canada First Research Excellence Fund (CFREF). N.H. has received research support from Lundbeck, Roche, and Axovant Sciences, and consultation fees from Lilly, Merck, and Astellas. A.M.O. holds shares in Cambridge Brain Sciences Inc. and has received consultation fees from Pfizer.

References

- 1. Marson DC, Sawrie SM, Snyder S, McInturff B, Stalvey T, Boothe A, Aldridge T, Chatterjee A, Harrell LE. Assessing financial capacity in patients with Alzheimer disease: a conceptual model and prototype instrument. Arch Neurol. 2000;57:877–884. doi: 10.1001/archneur.57.6.877.

- 2. Folstein MF, Folstein SE, McHugh PR. “Mini-mental state.” A practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res. 1975;12:189–198. doi: 10.1016/0022-3956(75)90026-6.

- 3. Nasreddine ZS, Phillips NA, Bédirian V, Charbonneau S, Whitehead V, Collin I, Cummings JL, Chertkow H. The Montreal Cognitive Assessment, MoCA: a brief screening tool for mild cognitive impairment. J Am Geriatr Soc. 2005;53:695–699. doi: 10.1111/j.1532-5415.2005.53221.x.

- 4. Frost M, Lawson S, Jacoby R. Testamentary Capacity: Law, Practice, and Medicine. Oxford: Oxford University Press; 2015.

- 5. Smith T, Gildeh N, Holmes C. The Montreal Cognitive Assessment: validity and utility in a memory clinic setting. Can J Psychiatry. 2007;52:329–332. doi: 10.1177/070674370705200508.

- 6. Zadikoff C, Fox SH, Tang-Wai DF, Thomsen T, de Bie R, Wadia P, Miyasaki J, Duff-Canning S, Lang AE, Marras C. A comparison of the mini mental state exam to the Montreal cognitive assessment in identifying cognitive deficits in Parkinson's disease. Mov Disord. 2008;23:297–299. doi: 10.1002/mds.21837.

- 7. Kennedy KM. Testamentary capacity: a practical guide to assessment of ability to make a valid will. J Forensic Leg Med. 2012;19:191–195. doi: 10.1016/j.jflm.2011.12.029.

- 8. Sorger BM, Rosenfeld B, Pessin H, Timm AK, Cimino J. Decision-making capacity in elderly, terminally ill patients with cancer. Behav Sci Law. 2007;25:393–404. doi: 10.1002/bsl.764.

- 9. Loewenstein D, Acevedo A. The relationship between instrumental activities of daily living and neuropsychological performance. In: Marcotte TD, Grant I, editors. Neuropsychology of Everyday Functioning. New York: Guilford; 2009. pp. 93–112.

- 10. Coen RF, Cahill R, Lawlor BA. Things to watch out for when using the Montreal cognitive assessment (MoCA). Int J Geriatr Psychiatry. 2011;26:107–108. doi: 10.1002/gps.2471.

- 11. Damian AM, Jacobson SA, Hentz JG, Belden CM, Shill HA, Sabbagh MN, Caviness JN, Adler CH. The Montreal Cognitive Assessment and the Mini-Mental State Examination as screening instruments for cognitive impairment: item analyses and threshold scores. Dement Geriatr Cogn Disord. 2011;31:126–131. doi: 10.1159/000323867.

- 12. Luis CA, Keegan AP, Mullan M. Cross validation of the Montreal Cognitive Assessment in community dwelling older adults residing in the Southeastern US. Int J Geriatr Psychiatry. 2009;24:197–201. doi: 10.1002/gps.2101.

- 13. Rossetti HC, Lacritz LH, Cullum CM, Weiner MF. Normative data for the Montreal Cognitive Assessment (MoCA) in a population-based sample. Neurology. 2011;77:1272–1275. doi: 10.1212/WNL.0b013e318230208a.

- 14. Hampshire A, Highfield RR, Parkin BL, Owen AM. Fractionating human intelligence. Neuron. 2012;76:1225–1237. doi: 10.1016/j.neuron.2012.06.022.

- 15. Wild K, Howieson D, Webbe F, Seelye A, Kaye J. Status of computerized cognitive testing in aging: a systematic review. Alzheimers Dement. 2008;4:428–437. doi: 10.1016/j.jalz.2008.07.003.

- 16. Gould RL, Brown RG, Owen AM, Bullmore ET, Williams SC, Howard RJ. Functional neuroanatomy of successful paired associate learning in Alzheimer's disease. Am J Psychiatry. 2005;162:2049–2060. doi: 10.1176/appi.ajp.162.11.2049.

- 17. Treisman AM, Gelade G. A feature-integration theory of attention. Cogn Psychol. 1980;12:97–136. doi: 10.1016/0010-0285(80)90005-5.

- 18. Cattell RB. A culture-free intelligence test. I. J Educ Psychol. 1940;31:161.

- 19. Stroop JR. Studies of interference in serial verbal reactions. J Exp Psychol. 1935;18:643.

- 20. Shallice T. Specific impairments of planning. Philos Trans R Soc Lond B Biol Sci. 1982;298:199–209. doi: 10.1098/rstb.1982.0082.

- 21. Ghosh D, Vogt A. Outliers: an evaluation of methodologies. In: Joint Statistical Meetings. San Diego: American Statistical Association; 2012. pp. 3455–3460.

- 22. Moye J. Theoretical frameworks for competency in cognitively impaired elderly adults. J Aging Stud. 1996;10:27–42.

- 23. Moberg PJ, Rick JH. Decision-making capacity and competency in the elderly: a clinical and neuropsychological perspective. NeuroRehabilitation. 2008;23:403–413.

- 24. Gregory MA, Gill DP, Shellington EM, Liu-Ambrose T, Shigematsu R, Zou G, Shoemaker K, Owen AM, Hachinski V, Stuckey M, Petrella RJ. Group-based exercise and cognitive-physical training in older adults with self-reported cognitive complaints: the Multiple-Modality, Mind-Motor (M4) study protocol. BMC Geriatr. 2016;16:17. doi: 10.1186/s12877-016-0190-9.

- 25. Corbett A, Owen A, Hampshire A, Grahn J, Stenton R, Dajani S, Burns A, Howard R, Williams N, Williams G, Ballard C. The effect of an online cognitive training package in healthy older adults: an online randomized controlled trial. J Am Med Dir Assoc. 2015;16:990–997. doi: 10.1016/j.jamda.2015.06.014.

- 26. Ferreira N, Owen A, Mohan A, Corbett A, Ballard C. Associations between cognitively stimulating leisure activities, cognitive function and age-related cognitive decline. Int J Geriatr Psychiatry. 2015;30:422–430. doi: 10.1002/gps.4155.