Abstract

We consider the task of learning the structure of the graph underlying a mutually-exciting multivariate Hawkes process in the high-dimensional setting. We propose a simple and computationally inexpensive edge screening approach. Under a subset of the assumptions required for penalized estimation approaches to recover the graph, this edge screening approach has the sure screening property: with high probability, the screened edge set is a superset of the true edge set. Furthermore, the screened edge set is relatively small. We illustrate the performance of this new edge screening approach in simulation studies.

Keywords and phrases: Hawkes process, screening, high-dimensionality

MSC 2010 subject classifications: Primary 60G55, secondary 62M10, 62H12

1. Introduction

1.1. Overview of the multivariate Hawkes process

In a seminal paper, Hawkes (1971) proposed the multivariate Hawkes process, a multivariate point process model in which a past event may trigger the occurrence of future events. The Hawkes process and its variants have been widely applied to model recurrent events, with notable applications in modeling earthquakes (Ogata, 1988), crime rates (Mohler et al., 2011), interactions in social networks (Simma and Jordan, 2012; Perry and Wolfe, 2013; Zhou, Zha and Song, 2013a,b), financial events (Chavez-Demoulin, Davison and McNeil, 2005; Bowsher, 2007; Aït-Sahalia, Cacho-Diaz and Laeven, 2015), and spiking histories of neurons (see e.g., Brillinger, 1988; Okatan, Wilson and Brown, 2005; Paninski, Pillow and Lewi, 2007; Pillow et al., 2008).

In this section, we provide a very brief review of the multivariate Hawkes process. A more comprehensive discussion can be found in Liniger (2009) and Zhu (2013).

Following Brémaud and Massoulié (1996), we define a simple point process N on ℝ+ as a family {N(A) : A ∈ ℬ(ℝ+)} taking integer values (including positive infinity), where ℬ(ℝ+) denotes the Borel σ-algebra of the positive half of the real line. Further, let t1, t2, … ∈ ℝ+ be the event times of N. In this notation, N(A) = Σi 𝟙[ti ∈ A] for A ∈ ℬ(ℝ+). We write N([t, t + dt)) as dN(t), where dt denotes an arbitrarily small increment of t. Let ℋt be the history of N up to time t. Then the ℋt-predictable intensity process of N is defined as

$$\lambda(t)\,dt = \mathbb{P}\big(dN(t) = 1 \mid \mathcal{H}_t\big). \tag{1}$$

Now suppose that N is a marked point process, in which each event time ti is associated with a mark mi ∈ {1, …, p} (see e.g., Definition 6.4.I. in Daley and Vere-Jones, 2003). We can then view N as a multivariate point process (Nj)j=1,…,p, of which the jth component process is given by Nj(A) = Σi 𝟙[ti∈A,mi=j] for A ∈ ℬ(ℝ+). To simplify the notation, we let tj,1, tj,2, … ∈ ℝ+ denote the event times of Nj.

The intensity of the jth component process is

$$\lambda_j(t)\,dt = \mathbb{P}\big(dN_j(t) = 1 \mid \mathcal{H}_t\big).$$

In the case of the linear Hawkes process, this function takes the form (Brémaud and Massoulié, 1996; Hansen, Reynaud-Bouret and Rivoirard, 2015)

$$\lambda_j(t) = \mu_j + \sum_{k=1}^{p} \sum_{i:\, t_{k,i} \le t} \omega_{j,k}(t - t_{k,i}). \tag{2}$$

We refer to μj ∈ ℝ as the background intensity, and ωj,k(·): ℝ+ ↦ ℝ as the transfer function.
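As an illustration, the following minimal sketch evaluates a linear Hawkes intensity, assuming that (2) has the standard form λj(t) = μj + Σk Σi ωj,k(t − tk,i), with the inner sum taken over past events of process k. The function names, the box-shaped kernel, and the two-node example are hypothetical and serve only to make the roles of μj and ωj,k concrete.

```python
import numpy as np

def hawkes_intensity(t, j, mu, omega, events):
    """Evaluate mu[j] plus the summed kernel contributions of all past events."""
    total = mu[j]
    for k, times in enumerate(events):
        times = np.asarray(times, dtype=float)
        # Events strictly before t; a kernel supported on (0, b] vanishes at lag 0,
        # so including or excluding an event exactly at t makes no difference.
        past = times[times < t]
        if past.size:
            total += float(np.sum(omega(j, k, t - past)))
    return total

def example_omega(j, k, lag):
    """Hypothetical kernel: node 1 excites node 0 at rate 0.5 for lags in (0, 1]."""
    lag = np.asarray(lag, dtype=float)
    if (j, k) == (0, 1):
        return np.where((lag > 0) & (lag <= 1.0), 0.5, 0.0)
    return np.zeros_like(lag)

mu = [0.2, 0.3]
events = [[], [1.0, 1.4, 3.0]]   # node 0 has no events; node 1 has three
lam0 = hawkes_intensity(2.0, 0, mu, example_omega, events)
lam1 = hawkes_intensity(2.0, 1, mu, example_omega, events)
```

Here the two events of node 1 at times 1.0 and 1.4 fall within the kernel's support at t = 2, so each adds 0.5 to the background rate of node 0, while node 1 itself stays at its background rate.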

For p fixed, Brémaud and Massoulié (1996) established that the linear Hawkes process with intensity function (2) is stationary given the following assumption.

Assumption 1

Let Ω be a p × p matrix whose entries are Ωj,k = ∫_0^∞ |ωj,k(Δ)| dΔ, for j, k = 1, …, p. We assume that the spectral norm of Ω is strictly less than 1, i.e., Γmax(Ω) ≤ γΩ < 1, where γΩ is a generic constant.

We now define a directed graph with node set {1, …, p} and edge set

$$\mathcal{E} \equiv \{(j,k) : \omega_{j,k} \neq 0,\ 1 \le j, k \le p\} \tag{3}$$

for ωj,k given in (2). Let

$$s \equiv \max_{1 \le j \le p} \operatorname{card}\big(\{k : (j,k) \in \mathcal{E}\}\big) \tag{4}$$

denote the maximum in-degree of the nodes in the graph. In this paper, we propose a simple screening procedure that can be used to obtain a small superset of the edge set ℰ.
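To make these objects concrete, the sketch below takes a hypothetical 4 × 4 matrix Ω of integrated transfer functions and reads off the edge set, the maximum in-degree s, and the spectral norm used in Assumption 1. It assumes that an edge (j, k) is present exactly when Ωj,k is non-zero; the numerical values are illustrative only.

```python
import numpy as np

# Hypothetical example: Omega[j, k] is the integral of |omega_{j,k}|,
# so a non-zero entry means that node k excites node j.
Omega = np.array([
    [0.0, 0.3, 0.0, 0.0],
    [0.0, 0.0, 0.3, 0.0],
    [0.0, 0.0, 0.0, 0.3],
    [0.0, 0.0, 0.0, 0.0],
])

# Edge set: index pairs (j, k) with a non-zero transfer function.
edges = {(int(j), int(k)) for j, k in zip(*np.nonzero(Omega))}

# Maximum in-degree: the largest number of non-zero entries in any row.
s = int(np.count_nonzero(Omega, axis=1).max())

# Spectral norm (largest singular value); Assumption 1 requires it to be below 1.
spectral_norm = float(np.linalg.norm(Omega, 2))
satisfies_assumption_1 = spectral_norm < 1.0
```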

1.2. Estimation and theory for the Hawkes process

We first consider the low-dimensional setting, in which the dimension of the process, p, is fixed, and T, the time period during which the point process is observed, is allowed to grow. In this setting, asymptotic properties such as the central limit theorem have been established; for instance, see Bacry et al. (2013) and Zhu (2013). Consequently, estimating the edge set ℰ is straightforward in low dimensions.

In high dimensions, when p might be large, we can fit the Hawkes process model using a penalized estimator of the form

$$\hat{\omega} \equiv \underset{\omega \in \mathcal{F}}{\arg\min}\ \big\{ \mathcal{L}(\omega) + \lambda\, \mathcal{P}(\omega) \big\}, \tag{5}$$

where ℒ(·) is a loss function, based on, e.g., the log-likelihood (Bacry, Gaïffas and Muzy, 2015) or least squares (Hansen, Reynaud-Bouret and Rivoirard, 2015); 𝒫(·) is a penalty function, such as the lasso (Hansen, Reynaud-Bouret and Rivoirard, 2015); λ is a nonnegative tuning parameter; and ℱ is a suitable function class. Then, a natural estimator for ℰ is {(j, k): ω̂j,k ≠ 0}.

Recently, Reynaud-Bouret and Schbath (2010), Bacry, Gaïffas and Muzy (2015), and Hansen, Reynaud-Bouret and Rivoirard (2015) have established that under certain assumptions, penalized estimation approaches of the form (5) are consistent in high dimensions, provided that the edge set ℰ is sparse. For instance, Hansen, Reynaud-Bouret and Rivoirard (2015) establish an oracle inequality for the lasso estimator for the Hawkes process, given that certain conditions hold on the observed event times. However, to show that these conditions hold with high probability for arbitrary samples, these theoretical results require that the point process is mutually-exciting; that is, an event in one component process can increase, but cannot decrease, the probability of an event in another component process. This amounts to assuming that ωj,k(Δ) ≥ 0 for all Δ ≥ 0, for ωj,k defined in (2).

When the dimension p is large, penalized estimation procedures of the form (5) (Bacry, Gaïffas and Muzy, 2015; Hansen, Reynaud-Bouret and Rivoirard, 2015) become computationally expensive: they require 𝒪(Tp^2) operations per iteration in an iterative algorithm. This is problematic in contemporary applications, in which p can be on the order of tens of thousands (Ahrens et al., 2013). These concerns motivate us to propose a simple and computationally-efficient edge screening procedure for estimating the true edge set ℰ in high dimensions. Under very few assumptions, our proposed screening procedure is guaranteed to select a small superset of the true edge set ℰ.

1.3. Organization of paper

The rest of this paper proceeds as follows. In Section 2, we introduce our screening procedure for estimating the edge set ℰ, and establish its theoretical properties. We present simulation results in support of our proposed procedure in Section 3. Proofs of theoretical results are presented in Section 4, and the Discussion is in Section 5.

2. An edge screening procedure

2.1. Approach

For j = 1, …, p, let Λj denote the mean intensity of the jth point process introduced in Section 1. That is,

$$\Lambda_j \equiv \mathbb{E}[dN_j(t)]/dt. \tag{6}$$

Following Equation 5 of Hawkes (1971), for any Δ ∈ ℝ, the (infinitesimal) cross-covariance of the jth and kth processes is defined as

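$$V_{j,k}(\Delta) \equiv \frac{\mathbb{E}[dN_j(t)\, dN_k(t-\Delta)]}{dt\, d(t-\Delta)} - \Lambda_j \Lambda_k - \mathbb{1}_{[j=k]}\, \Lambda_j\, \delta(\Delta), \tag{7}$$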

where δ(·) is the Dirac delta function, which satisfies ∫_{−∞}^{∞} δ(x) dx = 1 and δ(x) = 0 for x ≠ 0.

For a given value of Δ, we can estimate the cross-covariance function Vj,k(Δ) using kernel smoothing:

| (8) |

where K(·) is a kernel function with bandwidth h, and is the Stieltjes integral, defined as

In this paper, we focus on kernel functions that are bounded by 1 and are defined on a bounded support, i.e., 0 ≤ K(x/h) ≤ 1 for x ∈ [−h, h], and K(x/h) = 0 for x ∉ [−h, h] (e.g., the Epanechnikov kernel).

Let B denote a tuning parameter that defines the time range of interest for Vj,k(Δ), i.e. Δ ∈ [−B, B]. For any ζ, we define the set of screened edges as

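$$\hat{\mathcal{E}}(\zeta) \equiv \big\{(j,k) : \|\hat{V}_{j,k}\|_{2,[-B,B]} > \zeta,\ 1 \le j,k \le p\big\}, \tag{9}$$

where ||f||2,[l,u] ≡ (∫_l^u f(t)^2 dt)^{1/2} is the ℓ2-norm of a function f on the interval [l, u].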

The screened edge set ℰ̂(ζ) in (9) can be calculated quickly: ||V̂j,k||2,[−B,B] can be calculated in 𝒪(T) computations, and so ℰ̂(ζ) can be calculated in 𝒪(Tp^2) computations. The procedure can be easily parallelized.
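The screening step can be sketched as follows. This is a minimal illustration rather than the estimator (8) itself: it assumes a kernel estimator of the form (Th)^{−1} Σ K((tj,i − tk,i′ − Δ)/h) − T^{−2}Nj(T)Nk(T), with the Epanechnikov kernel, and approximates the ℓ2-norm on [−B, B] by the trapezoidal rule on a grid. All function names and the grid size are hypothetical, and the all-pairs computation is a naive version chosen for readability, not the 𝒪(T) computation referred to above.

```python
import numpy as np

def epanechnikov(u):
    """Epanechnikov kernel: 0.75 * (1 - u^2) on [-1, 1], zero elsewhere."""
    u = np.asarray(u, dtype=float)
    return np.where(np.abs(u) <= 1.0, 0.75 * (1.0 - u**2), 0.0)

def cross_cov_hat(tj, tk, delta, T, h, same_process=False):
    """Kernel estimate of the cross-covariance at lag delta (assumed form)."""
    tj, tk = np.asarray(tj, dtype=float), np.asarray(tk, dtype=float)
    lags = tj[:, None] - tk[None, :]            # all pairwise differences
    weights = epanechnikov((lags - delta) / h)
    if same_process:
        np.fill_diagonal(weights, 0.0)          # drop pairs of an event with itself
    return weights.sum() / (T * h) - (len(tj) / T) * (len(tk) / T)

def l2_norm_on_grid(tj, tk, T, h, B, n_grid=201, same_process=False):
    """Approximate the l2-norm of the estimate on [-B, B] by the trapezoidal rule."""
    grid = np.linspace(-B, B, n_grid)
    vals = np.array([cross_cov_hat(tj, tk, d, T, h, same_process) for d in grid])
    sq = vals**2
    return float(np.sqrt(np.sum(0.5 * (sq[1:] + sq[:-1]) * np.diff(grid))))

def screen_edges(events, T, h, B, zeta):
    """Return the pairs (j, k) whose estimated norm exceeds the threshold zeta."""
    p = len(events)
    return {
        (j, k)
        for j in range(p)
        for k in range(p)
        if l2_norm_on_grid(events[j], events[k], T, h, B, same_process=(j == k)) > zeta
    }
```

Each pair (j, k) is processed independently of the others, which is why the procedure parallelizes easily across node pairs.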

There are three tuning parameters in the procedure: the bandwidth h in (8), the range B in (9), and the screening threshold ζ in (9). The bandwidth h can be chosen by cross-validation. The range B can be selected based on the problem setting. For instance, when using the multivariate Hawkes process to model a spike train data set in neuroscience, we can set B to equal the maximum time gap between a spike and the spike it can possibly evoke. The choice of screening threshold ζ can be determined based on the sparsity level that we expect based on our prior knowledge. Alternatively, we may wish to use a small value of ζ in order to reduce the chance of false negative edges in ℰ̂(ζ), or a larger value due to limited computational resources in our downstream analysis.
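When ζ is chosen to match an anticipated sparsity level, one simple recipe is to keep the m node pairs with the largest estimated norms. The sketch below assumes that the norms ||V̂j,k||2,[−B,B] have already been collected in a p × p array and that there are no ties; the function name `threshold_for_size` is hypothetical.

```python
import numpy as np

def threshold_for_size(norms, m):
    """Return zeta such that exactly m entries of `norms` exceed zeta (no ties assumed)."""
    norms = np.asarray(norms, dtype=float)
    flat = np.sort(norms.ravel())[::-1]          # norms in descending order
    if not 0 <= m < flat.size:
        raise ValueError("m must satisfy 0 <= m < number of node pairs")
    # The (m + 1)-th largest norm is strictly exceeded by the top m entries only.
    return float(flat[m])

norms = np.array([[0.9, 0.1],
                  [0.4, 0.2]])
zeta = threshold_for_size(norms, 2)
screened = norms > zeta                          # boolean mask of the screened pairs
```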

2.2. Theoretical results

We consider the asymptotics of triangular arrays (Greenshtein and Ritov, 2004), where the dimension p is allowed to grow with T. Without further restrictions, it is possible to construct extreme networks in which, for instance, the mean intensity Λj in (6) diverges to infinity. To avoid such cases, we impose the following regularity assumption.

Assumption 2

There exist positive constants Λmin, Λmax, and Vmax such that 0 < Λmin ≤ Λj ≤ Λmax and maxΔ∈ℝ |Vj,k(Δ)| ≤ Vmax for all 1 ≤ j, k ≤ p, where Λj and Vj,k are defined in (6) and (7), respectively. Furthermore, Λmin, Λmax, and Vmax are generic constants that do not depend on p.

Next, we make some standard assumptions on the transfer functions ωj,k in (2).

Assumption 3

The following hold:

(a) The transfer functions are non-negative: ωj,k(Δ) ≥ 0 for all Δ ≥ 0.

(b) There exists a positive constant βmin such that ||ωj,k||2,[0,b] ≥ βmin for all (j, k) ∈ ℰ, with b as given in (c).

(c) There exist positive constants b, θ0, and C such that, for all 1 ≤ j, k ≤ p, and for any Δ1, Δ2 ∈ ℝ, supp(ωj,k) ⊂ (0, b], maxΔ |ωj,k(Δ)| ≤ C, and |ωj,k(Δ1) − ωj,k(Δ2)| ≤ θ0|Δ1 − Δ2|.

Assumption 3(a) guarantees that the multivariate Hawkes process is mutually-exciting: that is, an event may trigger (but cannot inhibit) future events. This assumption is shared by the original proposal of Hawkes (1971). Furthermore, existing theory for penalized estimators for the Hawkes process requires this assumption (Bacry, Gaïffas and Muzy, 2015; Hansen, Reynaud-Bouret and Rivoirard, 2015).

Assumption 3(b) guarantees that the non-zero transfer functions are nonnegligible. Such an assumption is needed in order to establish variable selection consistency (Bühlmann and van de Geer, 2011; Wainwright, 2009) for the penalized estimator (5).

Assumption 3(c) guarantees that the transfer functions are sufficiently smooth; this guarantees that the cross-covariances are smooth (see Section A.2 in Appendix), and hence can be estimated using a kernel smoother (8). Instead of Assumption 3(c), we could assume that ωj,k is an exponential function (Bacry, Gaïffas and Muzy, 2015) or that it is well-approximated by a set of smooth basis functions (Hansen, Reynaud-Bouret and Rivoirard, 2015).

Recall that s was defined in (4). We now state our main result.

Theorem 1

Suppose that the Hawkes process (2) satisfies Assumptions 1–3. Let h = c1 s^{−1/2} T^{−1/6} in (8) and ζ = 2c2 s^{1/2} T^{−1/6} in (9) for some constants c1 and c2. Then, for some positive constants c3 and c4, with probability at least 1 − c3 T^{7/6} s^{1/2} p^2 exp(−c4 T^{1/6}),

(a) ℰ ⊂ ℰ̂(ζ);

(b) card(ℰ̂(ζ)) = 𝒪(card(ℰ) s^{−1} T^{1/3}).

Theorem 1(a) guarantees that, with high probability, the screened edge set ℰ̂(ζ) contains the true edge set ℰ. Therefore, screening does not result in false negatives. This is referred to as the sure screening property in the literature (Fan and Lv, 2008; Fan, Samworth and Wu, 2009; Fan and Song, 2010; Fan, Feng and Song, 2011; Fan, Ma and Dai, 2014; Liu, Li and Wu, 2014; Song et al., 2014; Luo, Song and Witten, 2014). Typically, establishing the sure screening property requires assuming that the marginal association between a pair of nodes in ℰ is sufficiently large; see e.g. Condition 3 in Fan and Lv (2008) and Condition C in Fan, Feng and Song (2011). In contrast, Theorem 1(a) requires only that the conditional association between a pair of nodes in ℰ is sufficiently large; see Assumption 3(b).

Theorem 1(b) guarantees that ℰ̂(ζ) is a relatively small set, of size 𝒪(card(ℰ) s^{−1} T^{1/3}). Suppose that p^2 ∝ s^{−1/2} exp(c4 T^{1/6−ε}) for some positive constant ε < 1/6; this is the high-dimensional regime, in which the probability statement in Theorem 1 converges to one. Then the size of ℰ̂(ζ), 𝒪(card(ℰ) s^{−1} T^{1/3}), can be much smaller than p^2, the total number of node pairs. We note that the rate of T^{1/3} is comparable to existing results for non-parametric screening in the literature (see e.g., Fan, Feng and Song 2011; Fan, Ma and Dai 2014).
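To see why the probability in Theorem 1 converges to one in this regime, the following short calculation may help. It writes the proportionality as p^2 = a s^{−1/2} exp(c4 T^{1/6−ε}), where the constant a > 0 is introduced here purely for illustration.

$$
c_3\, T^{7/6} s^{1/2} p^2 \exp\!\big(-c_4 T^{1/6}\big)
= a\, c_3\, T^{7/6} \exp\!\big\{-c_4 T^{1/6}\big(1 - T^{-\varepsilon}\big)\big\}
\longrightarrow 0 \quad \text{as } T \to \infty .
$$

The factor s^{1/2} cancels against s^{−1/2}, and the exponent −c4 T^{1/6}(1 − T^{−ε}) decreases polynomially in T, which dominates the polynomial factor T^{7/6}.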

To summarize, Theorem 1 guarantees that under a small subset of the assumptions required for penalized estimation methods to recover the edge set ℰ, the screened edge set ℰ̂(ζ) (9) is small and contains no false negatives. We note that this is not the case for other types of models. For instance, in the case of the Gaussian graphical model, Luo, Song and Witten (2014) considered estimating the conditional dependence graph by screening the marginal covariances. In order for this procedure to have the sure screening property, one must make an assumption on the minimum marginal covariance associated with an edge in the graph, which is not required for variable selection consistency of penalized estimators (Cai, Liu and Luo, 2011; Luo, Song and Witten, 2014; Ravikumar et al., 2011; Saegusa and Shojaie, 2016).

It is important to note that Theorem 1 considers an oracle procedure, where the tuning parameters depend on unknown parameters. The heuristic selection guidelines suggested at the end of Section 2.1 may not satisfy the requirements of Theorem 1. We leave the discussion of optimal tuning parameter selection criteria for future research. Also, note that the bandwidth h ∝ T^{−1/6} is wider than the typical bandwidth for kernel smoothing, which is T^{−1/3} (Tsybakov, 2009). This is because we aim to minimize a concentration bound on V̂j,k − Vj,k (see the proof of Lemma 3 in the Appendix), rather than the usual mean integrated square error as in, e.g., Theorem 1.1 in Tsybakov (2009).

Remark 1

In light of Theorem 1, consider applying a constraint induced by ℰ̂(ζ) to (5):

| (10) |

Theorem 1 can be combined with existing results on consistency of penalized estimators of the Hawkes process (Bacry, Gaïffas and Muzy, 2015; Hansen, Reynaud-Bouret and Rivoirard, 2015) in order to establish that (10) results in consistent estimation of the transfer functions ωj,k. As a concrete example, Hansen, Reynaud-Bouret and Rivoirard (2015) considered an estimator of this form with the loss function taken to be the least-squares loss and the penalty taken to be a lasso-type penalty. Our simulation experiments in Section 3 indicate that in this setting, (10) can actually have better small-sample performance than (5) when p is very large. Furthermore, solving (10) can be much faster than solving (5): the former requires 𝒪(T^{4/3} s^{−1} card(ℰ)) computations per iteration, compared to 𝒪(Tp^2) per iteration for the latter (using e.g. coordinate descent, Friedman, Hastie and Tibshirani, 2010). In the high-dimensional regime when p^2 ∝ s^{−1/2} exp(c4 T^{1/6−ε}) for some positive constant ε < 1/6, we have that T^{4/3} s^{−1} card(ℰ) ≪ Tp^2. We note that in order to solve (10), we must first compute ℰ̂(ζ), which requires an additional one-time computational cost of 𝒪(Tp^2).

3. Simulation

3.1. Simulation set-up

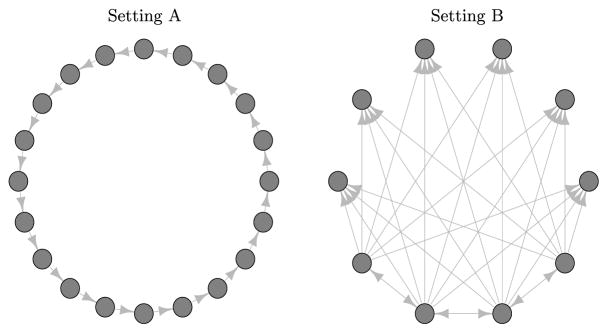

In this section, we investigate the performance of our screening procedure in a simulation study with p = 100 point processes. Intensity functions are given by (2), with μj = 0.75 for j = 1, …, p, and ωj,k(t) = 2t exp(1 − 5t) for (j, k) ∈ ℰ. By definition, ωj,k = 0 for all (j, k) ∉ ℰ. We consider two settings for the edge set ℰ, Setting A and Setting B. These settings are displayed in Figure 1.

Fig. 1.

Left: In Setting A, the edge set ℰ is composed of 5 connected components, each of which is a chain graph containing 20 nodes. Right: In Setting B, ℰ is composed of 10 connected components, each of which contains 10 nodes.
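Data of this kind can be generated in several ways; the sketch below uses the cluster (branching) representation of a mutually-exciting Hawkes process, which is not necessarily the simulation method used for the results reported here. It relies on two facts about the transfer function 2t exp(1 − 5t): its integral over t > 0 equals 2e/25 ≈ 0.217, and its normalized version 25t exp(−5t) is a Gamma density with shape 2 and scale 1/5. The process is started from an empty history, so early observations are not exactly stationary, and the orientation of the chain (node k excites node k + 1) is an assumption.

```python
import numpy as np

MU = 0.75                       # background intensity from the text
BRANCHING = 2 * np.e / 25       # integral of 2 t exp(1 - 5 t) over t > 0

def simulate_hawkes(parents, T, rng):
    """Cluster-based simulation on [0, T], started from an empty history.

    parents[j] lists the nodes k that excite node j, i.e. (j, k) is an edge.
    """
    p = len(parents)
    children = [[j for j in range(p) if k in parents[j]] for k in range(p)]
    events = [[] for _ in range(p)]
    # Generation 0: immigrants arrive as homogeneous Poisson processes with rate MU.
    queue = []
    for j in range(p):
        for t in rng.uniform(0.0, T, size=rng.poisson(MU * T)):
            queue.append((t, j))
    while queue:
        t, k = queue.pop()
        events[k].append(t)
        for j in children[k]:
            # Each event of k spawns Poisson(BRANCHING) offspring in j, with delays
            # drawn from the normalized kernel, a Gamma(shape=2, scale=1/5) density.
            delays = rng.gamma(shape=2.0, scale=0.2, size=rng.poisson(BRANCHING))
            queue.extend((t + d, j) for d in delays if t + d <= T)
    return [np.sort(np.array(ev, dtype=float)) for ev in events]

# Directed chain on 5 nodes (assumed orientation): node k excites node k + 1.
rng = np.random.default_rng(0)
parents = [[]] + [[k] for k in range(4)]
sim = simulate_hawkes(parents, T=200.0, rng=rng)
```

For a directed chain, Ω is 2e/25 times a shift matrix, so its spectral norm is 2e/25 < 1 and Assumption 1 holds under this assumed orientation.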

In what follows, it will be useful to think about the (undirected) node pairs as belonging to three types. (i) We let

| (11) |

(ii) With a slight abuse of notation, we will use ℰ̃c ∩ supp(V) to denote node pairs that are not in ℰ̃ with non-zero population cross-covariance, defined in (7). (iii) Continuing to slightly abuse notation, we will use ℰ̃c\supp(V) to denote node pairs that are not in ℰ̃ and that have zero population cross-covariance.

Throughout the simulation, we set the bandwidth h in (8) to equal T^{−1/6}, and the range of interest B in (9) to equal 5. Thus, h satisfies the requirements of Theorem 1, and [−B, B] covers the majority of the mass of the transfer function ωj,k. However, these simulation results are not sensitive to the particular choices of h or B.

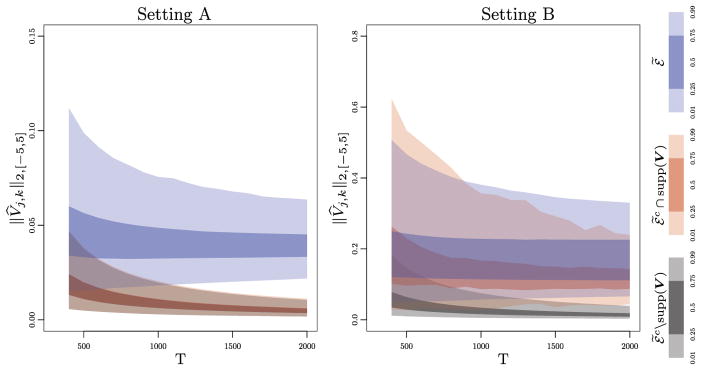

3.2. Investigation of the estimated cross-covariances

In Setting A, within a single connected component, all of the node pairs that are not in ℰ̃ are in ℰ̃c ∩ supp(V). However, for the most part, the population cross-covariances corresponding to node pairs in ℰ̃c ∩ supp(V) are quite small, because they are induced by paths of length two and greater. This can be seen from the left-hand panel of Figure 2. Given the left-hand panel of Figure 2, we expect the proposed screening procedure to work very well in Setting A: for a sufficiently large value of the time period T, there exists a value of ζ such that, with high probability, ℰ̂(ζ) = ℰ̃.

Fig. 2.

The quantiles of ||V̂jk||2,[−5,5] are displayed, for node pairs in ℰ̃ (11), ℰ̃c∩ supp(V), and ℰ̃c\supp(V), as a function of the time period T. Left: Results for Setting A. The estimated cross-covariances of node pairs in ℰ̃c\supp(V) and ℰ̃c ∩ supp(V) overlap. Center: Results for Setting B. The estimated cross-covariances of node pairs in ℰ̃ and ℰ̃c ∩ supp(V) overlap. Right: The color legend is displayed.

In Setting B, six nodes receive directed edges from the same set of four nodes. Therefore, we expect the pairs among these six nodes to be in the set ℰ̃c ∩ supp(V), and to have substantial population cross-covariances. This intuition is supported by the center panel of Figure 2, which indicates that the node pairs in ℰ̃c ∩ supp(V) have relatively large estimated cross-covariances, on the same order as the node pairs in ℰ̃. In light of Figure 2, we anticipate that for a sufficiently large value of the time period T, the screened edge set ℰ̂(ζ) will contain the edges in ℰ̃ as well as many of the edges in ℰ̃c ∩ supp(V).

3.3. Size of smallest screened edge set

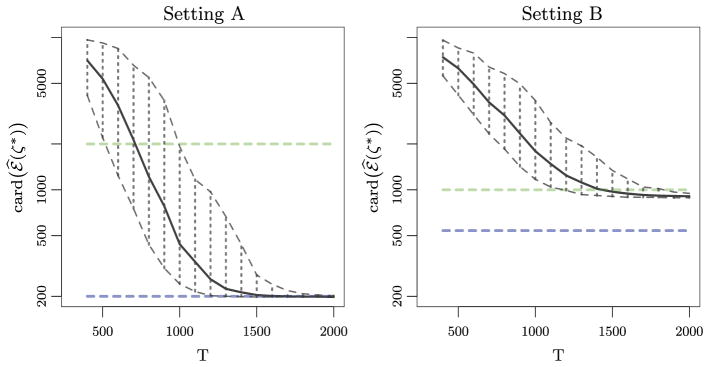

We now define ζ* ≡ max {ζ : ℰ ⊆ ℰ̂(ζ)}, and calculate card(ℰ̂(ζ*)). This represents the size of the smallest screened edge set that contains the true edge set.
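Given a matrix of estimated norms and the true edge set, this quantity can be computed directly. The sketch below assumes that the screened set is defined through a strict inequality, in which case the maximum over ζ is approached but not attained, and the smallest screened superset consists of all pairs whose norm is at least that of the weakest true edge. The function name and the toy values are hypothetical.

```python
import numpy as np

def smallest_superset_size(norms, true_mask):
    """Size of the smallest set {norm >= cutoff} that contains every true edge."""
    norms = np.asarray(norms, dtype=float)
    true_mask = np.asarray(true_mask, dtype=bool)
    cutoff = norms[true_mask].min()          # the weakest true edge sets the cutoff
    return int(np.count_nonzero(norms >= cutoff))

norms = np.array([[0.0, 0.8, 0.3],
                  [0.5, 0.0, 0.1],
                  [0.2, 0.6, 0.0]])
true_mask = np.zeros((3, 3), dtype=bool)
true_mask[0, 1] = True                       # true edges: (0, 1) and (1, 0)
true_mask[1, 0] = True
size = smallest_superset_size(norms, true_mask)
```

In this toy example the pair (2, 1) has a larger norm than the weakest true edge, so the smallest screened superset contains three pairs rather than two.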

Results, averaged over 200 simulated data sets, are shown in Figure 3.

Fig. 3.

For each of 200 simulated data sets, we calculated card(ℰ̂(ζ*)), where ζ* ≡ max {ζ : ℰ ⊆ ℰ̂(ζ)}, as a function of the time period T. The curves represent the mean of card(ℰ̂(ζ*)); the 2.5% and 97.5% quantiles of card(ℰ̂(ζ*)); card(ℰ̃); and card(supp(V)). Left: Data generated under Setting A. Right: Data generated under Setting B.

We see that in Setting A, for sufficiently large T, card(ℰ̂(ζ*)) = card(ℰ̃), which implies that ℰ̂(ζ*) = ℰ̃. In other words, in Setting A, the screening procedure yields perfect recovery of the set ℰ̃ (11). This is in line with our intuition based on the left-hand panel of Figure 2.

In contrast, in Setting B, even when T is very large, card(ℰ̂(ζ*)) > card(ℰ̃), which implies that ℰ̂(ζ*) ⊋ ℰ̃. This was expected based on the center panel of Figure 2.

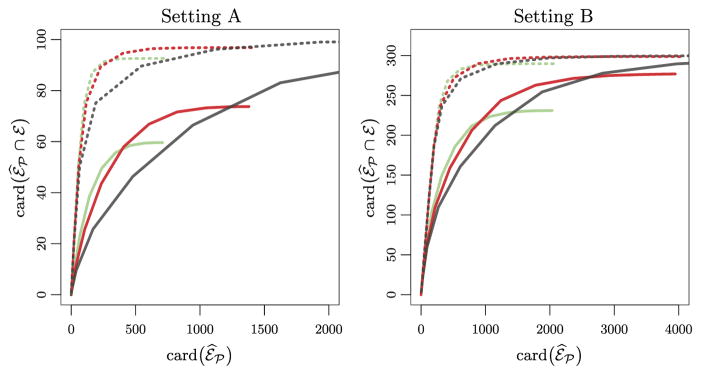

3.4. Performance of constrained penalized estimation

We now consider the performance of the estimator (10), which we obtain by calculating the screened edge set ℰ̂(ζ), and then performing a penalized regression subject to the constraint that ωjk = 0 for (j, k) ∉ ℰ̂(ζ). Note that rather than assuming a specific functional form for ωj,k, Hansen, Reynaud-Bouret and Rivoirard (2015) use a basis expansion to estimate ωj,k. Following their lead, we use a basis of step functions, of the form 𝟙((m−1)/2,m/2](t) for m = 1, …, 6. Instead of applying a lasso penalty to the basis function coefficients (Hansen, Reynaud-Bouret and Rivoirard, 2015), we employ a group lasso penalty for every 1 ≤ j, k ≤ p (Yuan and Lin, 2006; Simon and Tibshirani, 2012). Thus, (10) consists of a squared error loss function and a group lasso penalty. We let

| (12) |

where ω̂j,k solves (10).
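The step-function basis described above can be turned into regression features as follows. With ωj,k written as a linear combination of the indicators 𝟙((m−1)/2, m/2], the contribution of process k to the intensity at time t is a linear combination of the counts of events of k whose lag falls in each interval; the six coefficients for a given pair (j, k) then form one group in the group lasso penalty. The function name is hypothetical and the sketch covers only the feature construction, not the optimization.

```python
import numpy as np

def step_basis_features(t, times_k, n_basis=6, width=0.5):
    """Count events of process k whose lag t - t_i lies in ((m-1)*width, m*width]."""
    lags = t - np.asarray(times_k, dtype=float)
    feats = np.zeros(n_basis)
    for m in range(1, n_basis + 1):
        in_bin = (lags > (m - 1) * width) & (lags <= m * width)
        feats[m - 1] = np.count_nonzero(in_bin)
    return feats

# Lags at t = 3.0 are 0.1, 0.5, 0.6, 2.0, 3.0 and -0.5 (a future event, ignored).
feats = step_basis_features(3.0, [2.9, 2.5, 2.4, 1.0, 0.0, 3.5])
```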

Results are shown in Figure 4. In Setting A, solving the constrained optimization problem (10) leads to substantially better performance than solving the unconstrained problem (5). The improvement is especially noticeable when T is small. In Setting B, solving the constrained optimization problem (10) leads to only a slight improvement in performance relative to solving the unconstrained problem (5), since, as we have learned from Figures 2 and 3, the screened set ℰ̂(ζ) contains many edges in ℰ̃c ∩ supp(V). In both settings, solving the constrained optimization problem leads to substantial computational improvements.

Fig. 4.

The constrained penalized optimization problem (10) was solved for a range of values of the tuning parameter λ. The x-axis displays the size of the estimated edge set ℰ̂℘ (12), and the y-axis displays the number of true positives, averaged over 200 simulated data sets. The curves represent performance when ζ is chosen to yield card(ℰ̂(ζ)) = 4card(ℰ̃) (T = 300 and T = 600), and when ζ is chosen to yield card(ℰ̂(ζ)) = 8card(ℰ̃) (T = 300 and T = 600). We also display performance of the unconstrained penalized optimization problem (5) (T = 300 and T = 600).

4. Proofs of theoretical results

In this section, we prove Theorem 1. In Section 4.1, we review an important property of the Hawkes process, the Wiener-Hopf integral equation. In Section 4.2, we list three technical lemmas used in the proof of Theorem 1. Theorem 1 is proved in Section 4.3. Proofs of the technical lemmas are provided in the Appendix.

4.1. The Wiener-Hopf integral equation

Recall that the transfer functions ω = {ωj,k}1≤j,k≤p were defined in (2), the cross-covariances V = {Vj,k}1≤j,k≤p were defined in (7), and the mean intensities Λ = (Λ1, …, Λp)T were defined in (6). If the Hawkes process defined in (2) is stationary, then for any Δ ∈ ℝ+,

| (13) |

where

and

Equation (13) belongs to a class of integral equations known as the Wiener-Hopf integral equations.

4.2. Technical lemmas

We state three lemmas used to prove Theorem 1, and provide their proofs in the Appendix. The following lemma is a direct consequence of (13) and our assumptions. Recall that [0, b] is a superset of supp(ωj,k) introduced in Assumption 3.

Lemma 1

Under Assumptions 1–3, for sufficiently large B such that B ≥ b, we have that ||Vj,k||2,[−B,B] ≥ βminΛmin for (j, k) ∈ ℰ.

The next lemma shows that the cross-covariance is Lipschitz continuous given the smoothness assumption on ωj,k (Assumption 3(c)). We will use this lemma in the proof of Theorem 1, in order to bound the bias of the kernel smoothing estimator (8). Recall that s, the maximum node in-degree, was defined in (4).

Lemma 2

Under Assumptions 1–3, the cross-covariance function is Lipschitz for 1 ≤ j, k ≤ p. More specifically, there exists some θ1 > 0 such that |Vj,k(x) − Vj,k(y)| ≤ θ1s|x − y| for any x, y ∈ ℝ.

Recall that the bandwidth h was defined in (8). The following concentration inequality holds on the estimated cross-covariance.

Lemma 3

Suppose that Assumptions 1–3 hold, and let h = c1 s^{−1/2} T^{−1/6} for some constant c1. Then

4.3. Proof of Theorem 1

Proof

In what follows, we will consider the event

We will first show that part (b) of Theorem 1 holds. From the Wiener-Hopf equation, (13), for each (j, k), we can write

| (14) |

We thus have

| (15) |

where the last inequality follows from Young’s inequality (see e.g., Theorem 3.9.4 in Bogachev (2007)), which takes the form

$$\|f * g\|_r \le \|f\|_p\, \|g\|_q, \tag{16}$$

with 1/p + 1/q = 1/r + 1. Here, we let r = q = 2, p = 1, f = ωj,l, and g = Vl,k.
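As a numerical sanity check of this special case of Young's inequality, ||f * g||2 ≤ ||f||1 ||g||2, the sketch below discretizes two functions on a grid and compares both sides using Riemann sums. The function f is the transfer function from the simulation study; g is an arbitrary square-integrable function chosen only for illustration. The discrete analogue of the inequality holds exactly for sequences, so the comparison is not affected by discretization error.

```python
import numpy as np

dx = 0.01
x = np.arange(0.0, 5.0, dx)
f = 2 * x * np.exp(1 - 5 * x)          # transfer function used in the simulations
g = np.exp(-x) * np.cos(3 * x)         # hypothetical stand-in for a cross-covariance

conv = np.convolve(f, g) * dx          # Riemann-sum approximation of the convolution
lhs = np.sqrt(np.sum(conv**2) * dx)    # approximates the 2-norm of f * g
rhs = (np.sum(np.abs(f)) * dx) * np.sqrt(np.sum(g**2) * dx)   # 1-norm of f times 2-norm of g
```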

From Assumption 3(c), we know that ωj,k is bounded by C. Therefore, by the Cauchy-Schwarz inequality,

Using (15) and letting V̄j,k ≡ ||Vj,k||2,(−∞,∞), we get

| (17) |

The ℓ2-norm of the vector V̄·,k can then be bounded using the triangle inequality,

Thus, by Assumption 1,

Rearranging the terms, and using the fact that γΩ < 1, gives

| (18) |

Hence,

| (19) |

Now, recall that the number of non-zero elements in Ω is card(ℰ), and Ωj,k ≤ γΩ. Thus, the inequality becomes

| (20) |

Hence, no more than elements of V̄j,k exceed c2s1/2T−1/6. Recalling that V̄j,k = ||Vj,k||2,(−∞,∞), this implies that no more than

elements of ||Vj,k||2,(−B,B) exceed c2s1/2T−1/6.

Given the event ℳ, only edges in the set

can be contained in ℰ̂(ζ) for ζ = 2c2 s^{1/2} T^{−1/6}. This implies that the size of ℰ̂(ζ) is on the order of card(ℰ) s^{−1} T^{1/3}.

We now proceed to prove part (a) of Theorem 1. Lemma 1 states that ||Vj,k||2,[−B,B] ≥ βminΛmin for (j, k) ∈ ℰ. If the event ℳ holds, then for T sufficiently large, ||V̂j,k||2,[−B,B] > 2c2 s^{1/2} T^{−1/6} = ζ for (j, k) ∈ ℰ. Therefore, ℰ ⊂ ℰ̂(ζ).

Finally, Theorem 1 follows from the fact that, by Lemma 3, the event ℳ holds with probability at least 1 − c3 s^{1/2} T^{7/6} p^2 exp(−c4 T^{1/6}).

5. Discussion

In this paper, we have proposed a very simple procedure for screening the edge set in a multivariate Hawkes process. Provided that the process is mutually-exciting, we establish that this screening procedure can lead to a very small screened edge set, without incurring any false negatives. In fact, this result holds under a subset of the conditions required to establish model selection consistency of penalized regression estimators for the Hawkes process (Wainwright, 2009; Hansen, Reynaud-Bouret and Rivoirard, 2015). Therefore, this screening should always be performed whenever estimating the graph for a mutually-exciting Hawkes process.

The proposed screening procedure boils down to screening pairs of nodes by thresholding an estimate of their cross-covariance. In fact, this approach is commonly taken within the neuroscience literature, with a goal of estimating the functional connectivity among a set of p neuronal spike trains (Okatan, Wilson and Brown, 2005; Pillow et al., 2008; Mishchenko, Vogelstein and Paninski, 2011; Berry et al., 2012). Therefore, this paper sheds light on the theoretical foundations for an approach that is often used in practice.

Appendix A: Technical proofs

A.1. Proof of Lemma 1

Proof

First, we observe that, if Vj,k is non-negative for all j and k, then ωj,l*Vl,k is non-negative for any j, l, k. Under Assumption 1, we know that (13) holds. We can see from (13) that

Therefore, we have

| (21) |

where the inequality follows from Assumption 2 and the equality holds since

From Assumption 3(b), we have that ||Vj,k(Δ)||2,[−B,B] ≥ βminΛmin for (j, k) ∈ ℰ.

We now show that the elements of V are non-negative, i.e., Vl,k(Δ) ≥ 0 for 1 ≤ l, k ≤ p, and Δ ∈ ℝ. Recall from the definition (7) in the main paper that

| (22) |

where the second equality follows from

| (23) |

In this proof, we use the Stieltjes integral to rewrite λl(t) in (2) as

| (24) |

Plugging in λl(t) from (24) into (22) gives

where we use the definition Λk = 𝔼[dNk(t − Δ)]/{d(t − Δ)}.

Using the fact that (see e.g., Hawkes and Oakes (1974))

we have

Rearranging the terms gives

| (25) |

Next, we will rewrite (25) by taking the conditional expectation of dNk or dNm as in (23). Note here that, when Δ′ < Δ, we condition dNm on the history up to t − Δ′, i.e., ℋt − Δ′. Given ℋt − Δ′, dNk(t − Δ) is fixed since t − Δ < t − Δ′. When Δ′ > Δ, we condition dNk on the history up to t − Δ. These cases are discussed separately in the following.

When Δ′ < Δ, for each integral of the summation, it holds that

From the definition of λm(t) in (2), we know that λm(t − Δ′) ≥ μm. Hence, in (25), if Δ′ < Δ, it holds that

| (26) |

On the other hand, when Δ′ ≥ Δ, we have

Expanding λk and Λk yields

Now, observe that Λm ≥ μm and 𝔼{dNi(t − Δ − Δ″) dNm(t − Δ′)}/{dΔ′dΔ″} ≥ μiμm by the nature of the mutually-exciting process. Thus, for Δ′ ≥ Δ,

| (27) |

A.2. Proof of Lemma 2

Proof

For any Δ ≥ 0, the integral equation (13) gives

| (28) |

For any x, y ≥ 0, we can write

| (29) |

where the last inequality holds since ωj,l ≡ 0 for l ∉ εj. We then have

| (30) |

For I, we know from Assumptions 2 and 3(c) that

| (31) |

For IIl, we can expand the convolution

Without loss of generality, we consider only the case that x ≥ y. We can decompose the integrals into parts on the intervals [−x, − y), [−y, b–x), and [b–x, b–y] as

where we use Assumption 3(c) in the second inequality, Assumption 2 in the third inequality, and the boundedness of ωj,l from Assumption 3(c) in the last inequality. Recalling that x ≥ y, we have

| (32) |

Finally, plugging (31) and (32) into (30) gives

| (33) |

where we set θ1 ≡ θ0Λmax + bθ0Vmax + 2CVmax. Note that the last inequality holds as long as s ≥ 1. (The result also holds if s = 0: in this case, the second term in (30) is zero for every j and the bound (31) suffices.)

A.3. Proof of Lemma 3

Recall that the estimator of the cross-covariance (8) takes the form

The proof of Lemma 3 uses the following result. Lemma 4 is based on Proposition 3 of Hansen, Reynaud-Bouret and Rivoirard (2015); for completeness, we provide its proof in Section A.4.

Lemma 4

Suppose that Assumption 1 holds. We have

| (34) |

| (35) |

where c4, c5, and c6 are constants.

We are now ready to prove Lemma 3.

Proof

First, note that

| (36) |

where we use the definition of V in the third equality. Using the fact that the kernel K(x/h) is defined on [−h, h], we can write

| (37) |

where the first inequality follows from Lemma 2.

Recall that IIj ≡ T^{−1}Nj(T) and IIk ≡ T^{−1}Nk(T). Applying Lemma 4 and (37), we have, with probability at least 1 − 2c5 p^2 T exp(−c4 T^{1/6}),

| (38) |

Letting h = c1 s^{−1/2} T^{−1/6}, (38) can be written as

| (39) |

Lastly, we need a uniform bound on V̂j,k − Vj,k on the region [−B,B]. We first see that the above probability statement holds for a grid of ⌈s^{1/2}T^{1/6}⌉ points on [−B,B], denoted as {Δi}. The gap between adjacent points on this grid is bounded by 2B s^{−1/2} T^{−1/6}. Furthermore, for any Δ ∈ [−B,B], we can find a point Δi on the grid such that |Δ − Δi| ≤ 2B/⌈s^{1/2}T^{1/6}⌉ ≤ 2B s^{−1/2} T^{−1/6}. From basic calculus we get that, for all Δ ∈ [−B,B],

| (40) |

for some constant c2.

Therefore, with probability at least 1 − c3 s^{1/2} p^2 T^{7/6} exp(−c4 T^{1/6}),

| (41) |
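The probability in this final statement can be traced back to a union bound over the grid points. As a sketch, assuming s ≥ 1 and T ≥ 1 so that ⌈x⌉ ≤ 2x for x = s^{1/2}T^{1/6}, the total failure probability over the grid satisfies

$$
\big\lceil s^{1/2} T^{1/6} \big\rceil \cdot 2 c_5\, p^2\, T \exp\!\big(-c_4 T^{1/6}\big)
\;\le\; 4 c_5\, s^{1/2} p^2\, T^{7/6} \exp\!\big(-c_4 T^{1/6}\big),
$$

so that, under this reading, c3 = 4c5 is one admissible choice of the constant.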

A.4. Proof of Lemma 4

Lemma 4 follows directly from the proof of Proposition 3 in Hansen, Reynaud-Bouret and Rivoirard (2015). The only difference is that we want a polynomial bound on the deviation, while Hansen, Reynaud-Bouret and Rivoirard (2015) consider a logarithmic bound. For completeness, we state the proof of Lemma 4 below, but note that the proof is almost identical to the proof of Proposition 3 in Hansen, Reynaud-Bouret and Rivoirard (2015). We refer the interested readers to the original proof in Section 7.4.3 of Hansen, Reynaud-Bouret and Rivoirard (2015) for more details.

Throughout this section, we assume that N ≡ (N1, …, Np)T is defined on the full real line. We first state some notation that is only used in this section.

Following Hansen, Reynaud-Bouret and Rivoirard (2015), we use to denote a constant that depends only on a1, a2, …; and we use the superscript i to indicate that this is the ith constant appearing in the proof.

Without loss of generality, we assume that supp(ωj,k) ⊂ (0, 1], as in Hansen, Reynaud-Bouret and Rivoirard (2015).

As in Hansen, Reynaud-Bouret and Rivoirard (2015), we introduce a function Z(N) such that Z(N) depends only on {dN(t′), t′∈ [−A, 0)}, and there exist two non-negative constants η and d such that

| (42) |

We also introduce the (time) shift operator St so that Z ○ St(N) depends only on {dN(t′), t′∈ [−A + t, t)}, in the same way as Z(N) depends on the points of N in [−A, 0).

We are now ready to prove the lemma. When proving the bound (34), we only discuss the case when j ≠ k. The proof for the case when j = k follows from the same argument and is thus omitted.

Proof

In this proof, we will consider a probability bound for ∫_0^T [Z ○ S_t(N) − 𝔼(Z)] dt ≥ u, where, for some κ ∈ (0, 1) to be specified later,

| (43) |

Note that, by applying the bound to −Z(·), we can obtain a bound for |∫_0^T [Z ○ S_t(N) − 𝔼(Z)] dt|. To complete the proof, we will verify the statements (34) and (35) by considering some specific choices of Z(·).

For any positive integer k such that x ≡ T/(2k) > A, we have

where the inequality follows from the stationarity of N. As in Reynaud-Bouret and Roy (2006), let be a sequence of independent Hawkes processes, each of which is stationary with intensities λ(t) ≡ (λ1(t), …, λp(t))T. See Section 3 of Reynaud-Bouret and Roy (2006) for more details on the construction of . For each q, let be the truncated process associated with , where truncation means that we only consider the points in [2qx − A, 2qx + x]. Now, if we set

| (44) |

then

| (45) |

where Te,q is the time to extinction of the process . The extinction time Te,q is introduced in Sections 2.2 and 3 in Reynaud-Bouret and Roy (2006). Roughly speaking, it is the last time when there is an event for the Hawkes process with intensity λ(t) of the form (2), with background intensity μ ≡ (μ1, …, μp)T set to 0 for t ≥ 0. Since Te,q is identically distributed for all q, we can focus on one Te,q. Denoting by al the ancestral points with marks l and by the length of the corresponding cluster whose origin is al, we have:

| (46) |

Then by the exact argument on page 48 of Hansen, Reynaud-Bouret and Rivoirard (2015), we have

| (47) |

Thus, there exists a constant depending on A such that if we take , for some κ ∈ (0, 1) to be specified later, then

| (48) |

where c_4 is a constant. Note that x = T/(2k) ≈ T^{1−κ} is larger than A for T large enough (depending on A).
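The role of the condition x > A can be illustrated with a short Python sketch, which is not taken from the original proof. It assumes that the blocks are [2qx, 2qx + x] for q = 0, …, k − 1 (an assumption inferred from the truncation windows [2qx − A, 2qx + x] named above) and checks that consecutive truncation windows are separated by a gap of x − A. The function name `block_windows` and the values T = 100, k = 5, A = 1 are hypothetical.

```python
def block_windows(T, k, A):
    """Return the block length x plus the assumed blocks and truncation windows.

    Hypothetical helper for illustration only: blocks are [2qx, 2qx + x]
    and windows are [2qx - A, 2qx + x] for q = 0, ..., k - 1.
    """
    x = T / (2 * k)
    if x <= A:
        raise ValueError("the construction requires x = T / (2k) > A")
    blocks = [(2 * q * x, 2 * q * x + x) for q in range(k)]
    windows = [(2 * q * x - A, 2 * q * x + x) for q in range(k)]
    return x, blocks, windows


T, k, A = 100.0, 5, 1.0  # illustrative values, not taken from the paper
x, blocks, windows = block_windows(T, k, A)
# Gap between the end of window q and the start of window q + 1.
gaps = [windows[q + 1][0] - windows[q][1] for q in range(k - 1)]
print(x, gaps)
```

Because every gap equals x − A > 0, the windows do not overlap, so the truncated processes attached to different values of q use disjoint sets of points.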

Now, note that the event 𝒯 ≡ {T_{e,q} ≤ T/(2k) − A, for all q = 0, …, k} only depends on the process N. We will first find a probability bound for the first term in (45). In other words, we will show that, given the event 𝒯,

| (49) |

Let

Consider the measurable events

where 𝒩̃ is a constant that will be defined later and represents the number of points of lying in [t − A, t). Let Ω = ∩0≤q≤k–1 Ωq. Then

We have , where each can be easily controlled. Indeed, it is sufficient to split [2qx–A, 2qx+x] into intervals of size A (there are about of these) and require the number of points in each sub-interval to be smaller than 𝒩̃/2. By stationarity, we then obtain

Using Proposition 2 in Hansen, Reynaud-Bouret and Rivoirard (2015) with u = [𝒩̃/2] + 1/2, we obtain:

and, thus,

Note that this control holds for any positive choice of 𝒩̃. Thus, for any 𝒩̃ > 0,

| (50) |

Hence by taking , for large enough, the right-hand side of (50) is smaller than .

It remains to obtain the rate of D ≡ ℙ(Σ_q F_q ≥ u/2 and Ω). For any positive constant ε that will be chosen later, we have:

| (51) |

since the variables are independent. But,

and .

Next note that if for any integer l,

then

Hence, cutting into slices of the type { } and using (50) with for a large enough , we obtain

where in the last inequality, we have used the fact that by (42). Plugging into the above equation gives

In the same way, following Hansen, Reynaud-Bouret and Rivoirard (2015), we can write

| (52) |

where . Then, by stationarity,

where σ^2 ≡ 𝔼{[Z(N) − 𝔼(Z)]^2}. Going back to (51), by (52), we have

using the fact that log(1 + u) ≤ u. Since

one can choose c_6 in the definition (43) of u (not depending on d) such that for some z = c_4 T^{κ−2η(1−κ)}. Hence,

One can choose ε (as in the proof of the Bernstein inequality in Massart (2007), page 25) to obtain a bound on the right-hand side in the form of e^{−z}. We can then choose c_4 large enough, and only depending on η and A, to guarantee that D ≤ e^{−z} ≤ c_5 exp(−c_4 T^{1−κ}).

In summary, we have shown that, given the event 𝒯,

With a slight abuse of notation, letting gives (49).

To complete the proof, we apply the concentration inequality (49) with some specific choices of Z(·).

For each pair (j, k), let

We can check that d = 1 and η = 2 satisfy (42). Then with κ = 5/6 in (49), we get, given the event 𝒯,

Applying a union bound for all pairs (j, k), we have, given the event 𝒯,

| (53) |

Recall from the concentration inequality (48) that the event 𝒯 holds with probability at least 1 − pT^{1/6} exp(−c_4 T^{1/6}). Thus, given that pT^{1/6} exp(−c_4 T^{1/6}) is dominated by the right-hand side of (53), it holds unconditionally that

which is the statement on I_{j,k} in (34).

The statement on II_l, l = j, k, in (35) can be shown in a similar manner by taking Z ○ S_t(N) ≡ dN_j(t)/dt, with η = 1 and κ = 13/18.
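The two choices of (η, κ) used above lead to the same rate. As a quick arithmetic check, and assuming that the exponent of T in z is κ − 2η(1 − κ) (read from the flattened expression for z earlier in the proof), the following Python sketch evaluates that exponent exactly with rational arithmetic. The function name `rate_exponent` is hypothetical.

```python
from fractions import Fraction


def rate_exponent(kappa, eta):
    """Exponent of T in z, assumed here to be kappa - 2 * eta * (1 - kappa)."""
    return kappa - 2 * eta * (1 - kappa)


# Choice used for the statement on I_{j,k}: eta = 2, kappa = 5/6.
exponent_I = rate_exponent(Fraction(5, 6), 2)
# Choice used for the statement on II_l: eta = 1, kappa = 13/18.
exponent_II = rate_exponent(Fraction(13, 18), 1)
print(exponent_I, exponent_II)
```

Both exponents equal 1/6, which matches the factor exp(−c_4 T^{1/6}) appearing in the probability bounds.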

Contributor Information

Shizhe Chen, Department of Statistics, Columbia University, New York, NY 10027.

Daniela Witten, Department of Biostatistics and Statistics, University of Washington, Seattle, WA 98195.

Ali Shojaie, Department of Biostatistics and Statistics, University of Washington, Seattle, WA 98195.

References

- Ahrens MB, Orger MB, Robson DN, Li JM, Keller PJ. Whole-brain functional imaging at cellular resolution using light-sheet microscopy. Nature Methods. 2013;10:413–420. doi: 10.1038/nmeth.2434.

- Aït-Sahalia Y, Cacho-Diaz J, Laeven RJA. Modeling financial contagion using mutually exciting jump processes. Journal of Financial Economics. 2015;117:585–606.

- Bacry E, Gaïffas S, Muzy J-F. A generalization error bound for sparse and low-rank multivariate Hawkes processes. 2015 arXiv preprint arXiv:1501.00725.

- Bacry E, Delattre S, Hoffmann M, Muzy JF. Some limit theorems for Hawkes processes and application to financial statistics. Stochastic Process Appl. 2013;123:2475–2499.

- Berry T, Hamilton F, Peixoto N, Sauer T. Detecting connectivity changes in neuronal networks. Journal of Neuroscience Methods. 2012;209:388–397. doi: 10.1016/j.jneumeth.2012.06.021.

- Bogachev VI. Measure Theory. I, II. Springer-Verlag; Berlin: 2007.

- Bowsher CG. Modelling security market events in continuous time: Intensity based, multivariate point process models. Journal of Econometrics. 2007;141:876–912.

- Brémaud P, Massoulié L. Stability of nonlinear Hawkes processes. Ann Probab. 1996;24:1563–1588.

- Brillinger DR. Maximum likelihood analysis of spike trains of interacting nerve cells. Biological Cybernetics. 1988;59:189–200. doi: 10.1007/BF00318010.

- Bühlmann P, van de Geer S. Statistics for High-Dimensional Data: Methods, Theory and Applications. Springer Series in Statistics. Springer; Heidelberg: 2011.

- Cai T, Liu W, Luo X. A constrained ℓ1 minimization approach to sparse precision matrix estimation. Journal of the American Statistical Association. 2011;106:594–607.

- Chavez-Demoulin V, Davison AC, McNeil AJ. Estimating value-at-risk: a point process approach. Quantitative Finance. 2005;5:227–234.

- Daley D, Vere-Jones D. An Introduction to the Theory of Point Processes, Volume I: Elementary Theory and Methods. Probability and its Applications. Springer; 2003.

- Fan J, Feng Y, Song R. Nonparametric independence screening in sparse ultra-high-dimensional additive models. J Amer Statist Assoc. 2011;106:544–557. doi: 10.1198/jasa.2011.tm09779.

- Fan J, Lv J. Sure independence screening for ultrahigh dimensional feature space. J R Stat Soc Ser B Stat Methodol. 2008;70:849–911. doi: 10.1111/j.1467-9868.2008.00674.x.

- Fan J, Ma Y, Dai W. Nonparametric independence screening in sparse ultra-high-dimensional varying coefficient models. J Amer Statist Assoc. 2014;109:1270–1284. doi: 10.1080/01621459.2013.879828.

- Fan J, Samworth R, Wu Y. Ultrahigh dimensional feature selection: beyond the linear model. J Mach Learn Res. 2009;10:2013–2038.

- Fan J, Song R. Sure independence screening in generalized linear models with NP-dimensionality. Ann Statist. 2010;38:3567–3604.

- Friedman J, Hastie T, Tibshirani R. Regularization paths for generalized linear models via coordinate descent. Journal of Statistical Software. 2010;33:1.

- Greenshtein E, Ritov Y. Persistence in high-dimensional linear predictor selection and the virtue of overparametrization. Bernoulli. 2004;10:971–988.

- Hansen NR, Reynaud-Bouret P, Rivoirard V. Lasso and probabilistic inequalities for multivariate point processes. Bernoulli. 2015;21:83–143.

- Hawkes AG. Spectra of some self-exciting and mutually exciting point processes. Biometrika. 1971;58:83–90.

- Hawkes AG, Oakes D. A cluster process representation of a self-exciting process. J Appl Probability. 1974;11:493–503.

- Liniger TJ. Multivariate Hawkes processes. PhD thesis, Diss. Nr. 18403, Eidgenössische Technische Hochschule ETH Zürich; 2009.

- Liu J, Li R, Wu R. Feature selection for varying coefficient models with ultrahigh-dimensional covariates. J Amer Statist Assoc. 2014;109:266–274. doi: 10.1080/01621459.2013.850086.

- Luo S, Song R, Witten D. Sure Screening for Gaussian Graphical Models. 2014 arXiv preprint arXiv:1407.7819.

- Massart P. Concentration Inequalities and Model Selection. Lecture Notes in Mathematics 1896. Lectures from the 33rd Summer School on Probability Theory, Saint-Flour, July 6–23, 2003. With a foreword by Jean Picard. Springer; Berlin: 2007.

- Mishchenko Y, Vogelstein JT, Paninski L. A Bayesian approach for inferring neuronal connectivity from calcium fluorescent imaging data. Ann Appl Stat. 2011;5:1229–1261.

- Mohler GO, Short MB, Brantingham PJ, Schoenberg FP, Tita GE. Self-exciting point process modeling of crime. J Amer Statist Assoc. 2011;106:100–108.

- Ogata Y. Statistical Models for Earthquake Occurrences and Residual Analysis for Point Processes. Journal of the American Statistical Association. 1988;83:9–27.

- Okatan M, Wilson MA, Brown EN. Analyzing Functional Connectivity Using a Network Likelihood Model of Ensemble Neural Spiking Activity. Neural Comput. 2005;17:1927–1961. doi: 10.1162/0899766054322973.

- Paninski L, Pillow J, Lewi J. Statistical models for neural encoding, decoding, and optimal stimulus design. Progress in Brain Research. 2007;165:493–507. doi: 10.1016/S0079-6123(06)65031-0.

- Perry PO, Wolfe PJ. Point process modelling for directed interaction networks. J R Stat Soc Ser B Stat Methodol. 2013;75:821–849.

- Pillow JW, Shlens J, Paninski L, Sher A, Litke AM, Chichilnisky E, Simoncelli EP. Spatio-temporal correlations and visual signalling in a complete neuronal population. Nature. 2008;454:995–999. doi: 10.1038/nature07140.

- Ravikumar P, Wainwright MJ, Raskutti G, Yu B. High-dimensional covariance estimation by minimizing ℓ1-penalized log-determinant divergence. Electron J Stat. 2011;5:935–980.

- Reynaud-Bouret P, Roy E. Some non asymptotic tail estimates for Hawkes processes. Bull Belg Math Soc Simon Stevin. 2006;13:883–896.

- Reynaud-Bouret P, Schbath S. Adaptive estimation for Hawkes processes; application to genome analysis. Ann Statist. 2010;38:2781–2822.

- Saegusa T, Shojaie A. Joint estimation of precision matrices in heterogeneous populations. Electronic Journal of Statistics. 2016;10:1341–1392. doi: 10.1214/16-EJS1137.

- Simma A, Jordan MI. Modeling events with cascades of Poisson processes. 2012 arXiv preprint arXiv:1203.3516.

- Simon N, Tibshirani RJ. Standardization and the group lasso penalty. Statist Sinica. 2012;22:983–1001. doi: 10.5705/ss.2011.075.

- Song R, Lu W, Ma S, Jeng XJ. Censored rank independence screening for high-dimensional survival data. Biometrika. 2014;101:799–814. doi: 10.1093/biomet/asu047.

- Tsybakov AB. Introduction to Nonparametric Estimation. Springer Series in Statistics. Translated by Vladimir Zaiats. Revised and extended from the 2004 French original. Springer; New York: 2009.

- Wainwright MJ. Sharp thresholds for high-dimensional and noisy sparsity recovery using ℓ1-constrained quadratic programming (Lasso). IEEE Transactions on Information Theory. 2009;55:2183–2202.

- Yuan M, Lin Y. Model selection and estimation in regression with grouped variables. J R Stat Soc Ser B Stat Methodol. 2006;68:49–67.

- Zhou K, Zha H, Song L. Learning social infectivity in sparse low-rank networks using multi-dimensional Hawkes processes. Proceedings of the Sixteenth International Conference on Artificial Intelligence and Statistics; 2013a. pp. 641–649.

- Zhou K, Zha H, Song L. Learning triggering kernels for multi-dimensional Hawkes processes. Proceedings of the 30th International Conference on Machine Learning (ICML-13); 2013b. pp. 1301–1309.

- Zhu L. Nonlinear Hawkes processes. 2013 arXiv preprint arXiv:1304.7531.