Abstract

Objective

The primary objective of this study was to assess the effect of electric and acoustic overlap on speech understanding in typical listening conditions using semi-diffuse noise.

Design

This study used a within-subjects, repeated-measures design including 11 experienced adult implant recipients (13 ears) with functional residual hearing in the implanted and non-implanted ear. The aided acoustic bandwidth was fixed, and the low-frequency cutoff for the cochlear implant (CI) was varied systematically. Assessments were completed in the R-SPACE™ sound-simulation system, which includes a semi-diffuse restaurant noise originating from eight loudspeakers placed circumferentially about the subject’s head. AzBio sentences were presented at 67 dBA, with the signal-to-noise ratio (SNR) set individually between +10 and 0 dB to yield approximately 50–60% correct for the CI-alone condition with full CI bandwidth. Listening conditions for all subjects included CI alone, bimodal (CI + contralateral hearing aid, HA), and bilateral-aided electric and acoustic stimulation (EAS; CI + bilateral HA). Low-frequency cutoffs both below and above the original “clinical software recommendation” frequency were tested for all patients, in all conditions. Subjects rated listening difficulty for all conditions on a visual analog scale.

Results

Three primary findings were that 1) there was statistically significant benefit of preserved acoustic hearing in the implanted ear for most overlap conditions, 2) the default clinical software recommendation rarely yielded the highest level of speech recognition (1 out of 13 ears), and 3) greater EAS overlap than that provided by the clinical recommendation yielded significant improvements in speech understanding.

Conclusions

For standard-electrode CI recipients with preserved hearing, spectral overlap of acoustic and electric stimuli yielded significantly better speech understanding and less listening effort in a laboratory-based, restaurant-noise simulation. EAS patients may therefore derive more benefit from greater acoustic and electric overlap than is provided by current software fitting recommendations, which are based solely on audiometric thresholds. These data also have broader scientific implications: previous studies may not have assessed outcomes with optimized EAS parameters and may therefore have underestimated the benefit afforded by hearing preservation.

INTRODUCTION

Considerable research and clinical attention has been placed on preservation of acoustic hearing with minimally traumatic cochlear implantation. Functional hearing preservation is possible both with short electrodes and their associated shallow insertion depths (Büchner et al. 2009; Gantz et al. 2006, 2009; Gifford et al. 2013; Lenarz et al. 2013; Mowry et al. 2012; Turner et al. 2010) and with longer electrodes and deeper insertions (Arnoldner et al. 2010; Gifford et al. 2013; Gstoettner et al. 2008; Helbig et al. 2011, 2013; Kiefer et al. 2005; Obholzer et al. 2011; Plant and Babic 2016; Roland et al. 2016; Skarzynski et al. 2010, 2012). By adding acoustic hearing to electrical stimulation, cochlear implant (CI) recipients can derive nonlinear, additive gains in speech understanding and basic auditory function (e.g., Ching et al. 2004; Dorman et al. 2010; Dunn et al. 2005, 2010; Gifford et al. 2007, 2014; Morera et al. 2005, 2012; Schafer et al. 2007; Sheffield et al. 2012; Zhang et al. 2013).

Current CI technology combined with acoustic stimulation in the implanted or non-implanted ear yields significant benefit for the vast majority of CI recipients. Mean benefit for speech understanding in noise derived from acoustic hearing in the implanted ear ranges from 10 to 15 percentage points, or from 2 to 3 dB improvement in the signal-to-noise ratio (SNR) (Dorman and Gifford 2010; Dorman et al. 2012, 2013; Dunn et al. 2010; Gifford et al. 2010, 2013, 2014; Rader et al. 2013; Sheffield et al. 2015; Turner et al. 2010). This additive benefit is beyond that derived from monaural acoustic hearing in either the implanted or non-implanted ear alone (Dunn et al. 2010; Gifford et al. 2013; Sheffield et al. 2015). Despite this success with electric and acoustic stimulation (EAS), there is still considerable variability in benefit among patients with hearing preservation in the implanted ear(s) (Gifford et al. 2013, 2014; Lenarz et al. 2009, 2013), with some patients showing little-to-no EAS advantage.

Implant recipients with hearing preservation generally have bilateral acoustic hearing in the low-to-mid frequencies. In theory, this should allow access to interaural time-difference (ITD) cues, which are known to be most prominent for frequencies below 1500 Hz. Research has demonstrated that EAS listeners can access ITD cues via binaural acoustic hearing and that ITD thresholds are significantly correlated with both localization ability (Gifford et al. 2014) and the degree of EAS benefit observed for speech understanding in semi-diffuse noise (Gifford et al. 2013, 2014). Thus, cochlear implantation with acoustic hearing preservation affords significant binaural benefit for both spatial hearing and speech understanding; as described here, however, this benefit is gleaned from the binaural acoustic hearing, not from the CI(s). This ITD-based binaural benefit cannot be provided by either bimodal hearing (CI + contralateral HA) or bilateral cochlear implantation. See van Hoesel (2012) for greater detail regarding the contrasting benefits and limitations of bimodal hearing and bilateral CIs.

All FDA-approved CI manufacturers promote atraumatic electrodes for hearing and structural preservation, though just one implant manufacturer currently has an FDA-approved CI sound processor with integrated hearing-aid (HA) circuitry. The Nucleus 6 (N6) processor system (CP910/920) has an acoustic component that can be fitted to provide acoustic stimulation in combination with electric processing for Nucleus 24M or later implant recipients who have hearing preservation, regardless of electrode type. It is important to note, however, that the N6 system with acoustic component was approved for use with the Hybrid-L24 electrode; thus, use of the acoustic component with other electrodes, while allowed, is considered off-label. Several integrated CI/HA processors are available outside the U.S. market. For example, MED-EL has had the DUET or DUET2 integrated EAS processor available in European and Canadian markets for over a decade, and more recently the MED-EL Sonnet EAS processor has become available outside the U.S. Additionally, Advanced Bionics offers integrated EAS processing on the Naida CI Q90 processor, which is approved for use in Europe, though it is not currently commercially available in the U.S. outside of approved investigational device exemption (IDE) studies.

Optimization of EAS-related variables for HAs and CIs has received considerably less research and clinical attention than the question of EAS efficacy. Studies that have investigated the effects of frequency allocation for the CI, acoustic amplification characteristics for the implanted ear, and/or the associated low-frequency (LF) CI limit (Büchner et al. 2009; Dillon et al. 2014; Fraysse et al. 2006; Karsten et al. 2013; Kiefer et al. 2005; Vermeire et al. 2008) have used relatively small samples that were limited in diversity with respect to devices, insertion depths, aidable bandwidth, and the number of cutoff frequencies assessed, and hence in the range of spectral overlap examined between the acoustic and electric domains. Most studies have attempted to provide the broadest amplified bandwidth for the low-frequency acoustic hearing, with high-frequency acoustic information limited primarily by the degree of high-frequency hearing loss. Hereafter, we refer to the LF cutoff for the CI as the LF CI cutoff. It is important to note that EAS overlap, as used here, refers to the frequencies transmitted in the passbands of the HA and CI, not to the degree of cochlear or neural excitation overlap (i.e., spread of excitation or channel interaction) along the cochlea and/or across the neural populations stimulated by the HA and CI within an ear. A number of studies have documented that the spread-of-excitation function for single-electrode stimulation can easily extend across ⅓ to ½ of the array and thus could create neural overlap regardless of the filter frequencies being transmitted (Boike et al. 2000; Chung et al. 2007; Dorman et al. 1981; Hornsby et al. 2001). Electric stimulation can also mask contralateral acoustic stimulation (James et al. 2001). In addition to electric-on-acoustic masking, acoustic stimulation can mask ipsilateral electric hearing (Hohmann et al. 1995) as well as contralateral electric hearing (James et al. 2001). For the purposes of this paper, however, we refer only to the frequencies transmitted via electric and acoustic filtering.
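Because “overlap” in this paper refers only to the transmitted passbands, it reduces to simple interval arithmetic. The Python sketch below is illustrative only: the `Passband` type and both function names are hypothetical, the overlap/meet/gap labels borrow the terminology of Karsten et al. summarized in Table 1, and the 125–750 Hz HA passband in the usage lines is an invented example (188–7938 Hz is the full CI bandwidth used in this study). It says nothing about spread of excitation, which the paragraph above explicitly sets aside.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Passband:
    """Frequency range (Hz) transmitted by one device (hypothetical helper type)."""
    low_hz: float
    high_hz: float


def passband_overlap_hz(ha: Passband, ci: Passband) -> float:
    """Width (Hz) of the range transmitted by both the HA and the CI; 0 if they are disjoint."""
    return max(0.0, min(ha.high_hz, ci.high_hz) - max(ha.low_hz, ci.low_hz))


def eas_relation(ha_upper_hz: float, ci_lower_hz: float) -> str:
    """Classify the boundary by comparing the HA upper edge with the LF CI cutoff."""
    if ci_lower_hz < ha_upper_hz:
        return "overlap"
    if ci_lower_hz == ha_upper_hz:
        return "meet"
    return "gap"


if __name__ == "__main__":
    ha = Passband(125.0, 750.0)    # hypothetical aided acoustic passband
    ci = Passband(188.0, 7938.0)   # full CI bandwidth used in this study
    print(passband_overlap_hz(ha, ci), eas_relation(ha.high_hz, ci.low_hz))
```

With these inputs the two devices share 562 Hz of passband, so the configuration is classified as “overlap”.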

Table 1 summarizes the findings of eight peer-reviewed studies investigating the effects of CI fitting parameters on EAS benefit. These studies included both perimodiolar and straight electrodes with insertion depths ranging from 6 to 24 mm. In summary, these eight studies reached conflicting conclusions, with some advocating greater spectral overlap and others less. Further, some studies provided little-to-no information on the raw scores obtained with different LF CI cutoff frequencies, and others provided no information on whether the HA settings were verified with ear-canal probe-microphone measurements.

Table 1.

Summary of study outcomes investigating the effects of CI fitting parameters for bilateral-aided EAS patients. Studies primarily investigating the effect of HA parameters on EAS benefit were not included here.

| Study | Devices | Study details | Findings |
|---|---|---|---|
| Gantz and Turner (2003) | • Nucleus Hybrid S8, 6 mm & 10 mm • Straight electrode • Electrode insertion depth: 6 to 10 mm | • n = 6 (3 with 6-mm and 3 with 10-mm electrodes) • HA bandwidth: • LF CI cutoffs: multiple tested, details not provided | • Speech understanding differences were not presented • “most successful maps” had LF CI cutoff of 1000 or 2000 Hz • no difference between 1000 & 2000 Hz |
| Kiefer et al. (2005) | • MED-EL Combi 40+ standard or medium • Straight electrode • Electrode insertion depth: 19 to 24 mm | • n = 13 • HA bandwidth: 125 to 1000 Hz (audibility up to 500 Hz for all listeners) • LF CI cutoffs: 300, 650, and 1000 Hz | • 12 of 13 listeners preferred 300 Hz • speech understanding not assessed across LF CI cutoffs |
| James et al. (2005) | • Nucleus CI24RCA • Perimodiolar electrode • Electrode insertion depth: 17–19 mm | • CI cutoffs: full spectral bandwidth, 188+ Hz, | • results for the different LF CI cutoffs were not reported |
| Fraysse et al. (2006) | • Nucleus CI24RCA • Perimodiolar electrode • Electrode insertion depth: 17 mm | • n = 9 • HA bandwidth: 125 up to ≥ 500 Hz (amplified up to the audiometric frequency reaching 80 dB HL, range not specified) • 2 CI cutoffs: 1) MAP A = full spectral bandwidth, 188+ Hz, 2) MAP C = higher LF CI cutoff, but not specified | • 7 of 9 listeners preferred MAP C (less EAS overlap) • no difference for speech understanding across the cutoffs • trend for higher sentence recognition in noise with MAP C |
| Vermeire et al. (2008) | • MED-EL Combi 40+ standard or medium • Straight electrode • Electrode insertion depth: 18 mm | • n = 4 • HA bandwidth: amplifying frequencies with thresholds 1) ≤ 85 dB HL and 2) ≤ 120 dB HL • 2 CI cutoffs: 1) full spectral bandwidth, 200+ Hz, and 2) “falloff” frequency at which audiogram > 65 dB HL (ranging from 250 to 700 Hz) | • higher LF CI cutoff (audiogram falloff) yielded significantly higher speech understanding in noise |
| Simpson et al. (2009) | • Nucleus CI24RE(CA) • Perimodiolar electrode • Electrode insertion depth: ~18 mm | • n = 5 • HA bandwidth: full audible bandwidth per NAL-NL1 prescriptive fitting formula • 2 CI cutoffs: 1) full spectral bandwidth, 188+ Hz, and 2) acoustic frequency pitch-matched to the most apical electrode (ranging from 579 to 887 Hz) | • no difference for speech understanding across the cutoffs |
| Karsten et al. (2012) | • Nucleus Hybrid S8 & S12 • Straight electrode • Electrode insertion depth: 10 mm | • n = 10 • HA bandwidth: full audible bandwidth per NAL-NL1 prescriptive fitting formula • 3 CI cutoffs: 1) overlap: LF CI cutoff = 50% below the upper limit of acoustic audibility (e.g., if the audibility upper limit is 1000 Hz, the overlap cutoff = 500 Hz), 2) meet: LF CI cutoff = upper limit of acoustic audibility, and 3) gap: LF CI cutoff = 50% above the upper limit of acoustic audibility (e.g., if the audibility upper limit is 1000 Hz, the gap cutoff = 1500 Hz) | • “meet” LF CI cutoff yielded significantly higher speech recognition in noise than “overlap” • “meet” and “gap” were not significantly different |
| Plant and Babic (2016) | • Nucleus Hybrid L24, CI422, CI24RE(CA)/CI512, and modiolar research array (MRA) • Electrode insertion depths: 16 to 20 mm | • n = 16 in total study (n = 11 with comparison of overlapping vs. non-overlapping frequency assignment for EAS) • HA bandwidth: full audible bandwidth per NAL-NL1 [for CI422, CI24RE(CA), and CI512] or NAL-RP (for Hybrid-L24) prescriptive fitting formula • 2 CI cutoffs: 1) overlapping: full spectral bandwidth, 188+ Hz, and 2) non-overlapping: ranging from 688 to 1060 Hz, equivalent to the frequency at which audiogram ≤ 80 dB HL or 125 Hz lower (per subjective report of sound quality) | • Speech understanding differences were not presented • 7 participants expressed preference for non-overlapping • 2 expressed preference for overlapping • 2 expressed no preference |
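The three Karsten et al. (2012) conditions in Table 1 are defined relative to the upper limit of acoustic audibility. A minimal Python sketch of those definitions follows, assuming the upper limit is already known in Hz; the function name is hypothetical, and an actual fitting would additionally be constrained to the filter boundaries the processor offers.

```python
def karsten_cutoffs(upper_audibility_hz: float) -> dict:
    """LF CI cutoffs (Hz) for the overlap, meet, and gap conditions as defined in Table 1."""
    return {
        "overlap": 0.5 * upper_audibility_hz,  # 50% below the upper limit of audibility
        "meet": upper_audibility_hz,           # equal to the upper limit of audibility
        "gap": 1.5 * upper_audibility_hz,      # 50% above the upper limit of audibility
    }


if __name__ == "__main__":
    # Worked example from Table 1: an audibility upper limit of 1000 Hz.
    print(karsten_cutoffs(1000.0))
```

For the 1000-Hz example given in Table 1, this returns cutoffs of 500, 1000, and 1500 Hz.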

Given the conflicting results of previous studies, it should not be surprising that current clinical recommendations for determining the LF CI limit are inconsistent across manufacturers and are not based upon systematic investigation of LF CI cutoff frequency. The data obtained in the clinical trials of Hybrid-L in Europe, Australia, and the U.S. were collected with the LF CI cutoff set to the frequency at which the audiometric threshold reached 90 dB HL. There are, however, a number of reports in the HA literature of diminishing amplification benefit for spectral regions corresponding to audiometric thresholds ≥ 70 dB HL (Amos et al. 2007; Baer et al. 2002; Ching et al. 1998; Hogan et al. 1998; Hornsby et al. 2003, 2006, 2011; Turner 2006; Turner et al. 1999; Vickers et al. 2001). It has been hypothesized that the lack of value-added benefit from amplification in spectral regions with thresholds > 70 dB HL could be due to the presence of cochlear dead regions (Baer et al. 2002; Moore et al. 2000; Vickers et al. 2001; Zhang et al. 2014), spectral distortion and/or broadening of auditory filter shapes at the HA output levels required to provide audibility (Ching et al. 1998, 2004; Dorman and Doherty 1981; Hornsby and Ricketts 2001; Moore et al. 1987; Studebaker et al. 1999), and/or the effects of HA compression (Boike and Souza 2000; Chung et al. 2007; Hohmann and Kollmeier 1995; Hornsby and Ricketts 2001). Further, no previous study has evaluated speech-understanding outcomes, for all participants, with LF CI cutoff frequencies distributed both below and above the manufacturer’s clinical recommendation (i.e., the frequency at which audiometric thresholds reach 90 dB HL).

The goal of this study was to systematically investigate the effects of six different LF CI cutoffs on speech understanding and subjective ratings of listening effort for a group of 11 CI recipients (13 ears) with hearing preservation in the implanted ear. We hypothesized that setting the LF CI cutoff closest to the audiometric frequency at which thresholds exceeded 70 dB HL would yield the highest speech understanding scores as well as the lowest subjective ratings of listening effort. This hypothesis was motivated by the HA literature documenting diminishing returns of amplification for severe or greater hearing losses.
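Because only discrete LF CI cutoffs are available, testing this hypothesis means mapping each ear’s 70-dB-HL frequency onto the nearest available cutoff. The sketch below is a hypothetical illustration, not the study’s procedure: it assumes the 125-Hz-spaced cutoffs examined in this study (188 to 938 Hz) and breaks ties toward the lower cutoff, a choice the text does not specify.

```python
# LF CI cutoffs examined in this study: 188 Hz upward in 125-Hz steps, up to 938 Hz.
AVAILABLE_CUTOFFS_HZ = list(range(188, 939, 125))


def nearest_cutoff(target_hz: float, cutoffs=AVAILABLE_CUTOFFS_HZ) -> int:
    """Return the available cutoff closest to target_hz (ties go to the lower cutoff)."""
    return min(cutoffs, key=lambda c: (abs(c - target_hz), c))


if __name__ == "__main__":
    # 70-dB-HL frequencies (Hz) for three ears listed in Table 2.
    for ear, f70 in [("1_L", 478), ("10", 989), ("11", 163)]:
        print(ear, f70, "->", nearest_cutoff(f70))
```

Under this rule, ear 1_L (70-dB-HL frequency of 478 Hz, Rx of 563 Hz) would be assigned 438 Hz, one step below its Rx.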

EXPERIMENT

Speech understanding and subjective estimates of listening difficulty

Participants

Demographic information for the 11 study participants (13 ears) is shown in Table 2, which includes age at testing, gender, implant type, experience with implant(s), aided Speech Intelligibility Index (SII) at 60 dB SPL as provided by the Audioscan Verifit real-ear measures, and Consonant Nucleus Consonant [CNC; Peterson and Lehiste 1962] monosyllabic word recognition at 60 dBA. All participants had bilateral aidable acoustic hearing in the low-to-mid frequency region. Bimodal hearing refers to the condition of CI plus contralateral HA (with the acoustic hearing ipsilateral to the CI occluded), and bilateral-aided EAS refers to the condition of CI plus bilateral HA. Prior to study enrollment, only two participants were using a hearing aid in the implanted ear (ears 2_R and 3). Both were using the full spectral bandwidth (188+ Hz) in the implanted ear and thus had complete EAS overlap in that ear.

TABLE 2.

Demographic and device information for the 11 participants (13 ears) participating in this study.

| Participant | Age at testing (years) | Gender | Device | CI experience at testing (years) | CI ear | SNR used for testing (dB) | Aided SII for CI ear at test point | Aided SII for contralateral ear at test point | Clinical rec (Rx) for LF CI cutoff (Hz) | Frequency at 70-dB-HL threshold (Hz, via linear regression) | CNC (% correct): CI alone, bimodal, best-aided EAS |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1_L | 52 | Male | CI24RE(CA) | 3.8 | LEFT | 5 | 12 | 19 | 563 | 478 | 70, 86, 84 |
| 1_R | -- | -- | CI512 | 5.4 | RIGHT | 0 | 19 | 12 | 813 | 644 | 88, 100, 98 |
| 2_L | 58 | Female | CI422 | 2.2 | LEFT | 5 | 13 | 14 | 813 | 399 | 50, 70, 68 |
| 2_R | -- | -- | CI24RCA | 9.4 | RIGHT | 5 | 14 | 13 | 438 | 232 | 72, 76, 74 |
| 3 | 75 | Male | CI24RE(CA) | 1.2 | RIGHT | 5 | 11 | 35 | 438 | 284 | 40, 80, 82 |
| 4 | 74 | Male | CI24RE(CA) | 1.4 | RIGHT | 10 | 6 | 36 | 438 | 186 | 18, 44, 50 |
| 5 | 54 | Male | CI24RE(CA) | 1.2 | LEFT | 0 | 24 | 32 | 813 | 739 | 82, 96, 94 |
| 6 | 51 | Female | CI422 | 0.6 | LEFT | 0 | 16 | 44 | 563 | 568 | 58, 88, 92 |
| 7 | 60 | Female | CI24RCA | 9.0 | RIGHT | 5 | 9 | 12 | 563 | 463 | 60, 72, 74 |
| 8 | 75 | Male | CI422 | 1.0 | LEFT | 10 | 22 | 29 | 813 | 797 | 40, 48, 54 |
| 9 | 74 | Male | CI422 | 0.7 | LEFT | 10 | 3 | 26 | 563 | 205 | 42, 60, 58 |
| 10 | 40 | Female | CI422 | 1.8 | RIGHT | 0 | 9 | 21 | 813 | 989 | 82, 90, 92 |
| 11 | 48 | Male | CI422 | 1.5 | LEFT | 5 | 12 | 22 | 563 | 163 | 70, 80, 82 |
| Mean (stdev) | 60.1 (12.5) | -- | -- | 3.0 (3.1) | -- | 4.6 | 13.1 (6.02) | 24.2 (10.4) | 630.3 (158.2) | 472.8 (263.0) | 59.4, 76.2, 77.1 (20.6, 17.2, 15.8) |

Mean age at testing was 60.1 years (range 40 to 75 years). Two of the participants were bilateral CI recipients with bilateral hearing preservation; for these participants, each CI was assessed separately. All were experienced CI users, with an average of 3.0 years of implant experience (range 0.6 to 9.4 years). There was a mix of perimodiolar (n = 7) and straight (n = 6) electrodes, though none were specifically designed for use in a hybrid/EAS configuration (i.e., Hybrid S8, S12, or Hybrid-L24). In other words, all participants were recipients of standard-length electrodes (18+ mm).

Table 2 also shows the clinical software (Custom Sound) recommendation (Rx) for the LF CI cutoff frequency, which is based on the frequency at which audiometric thresholds reach 90 dB HL. Next to the clinical Rx is the frequency at which the audiometric threshold reached 70 dB HL. These values were derived from a quartic (fourth-order) polynomial regression fitted to each participant’s audiogram, beginning with the threshold at the low-frequency audiometric plateau and ending with the threshold at the high-frequency audiometric plateau. The frequency intercept at 70 dB HL was then determined from the values predicted by the regression.
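The 70-dB-HL intercept described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the authors’ analysis code: the text does not say whether frequency was log-transformed before fitting, so the sketch assumes a log2 frequency axis; the caller is assumed to pass only the thresholds between the low- and high-frequency plateaus; and all function names are hypothetical. To stay dependency-free, the quartic is fitted by solving the least-squares normal equations directly rather than with a library routine.

```python
import math


def polyfit(xs, ys, degree):
    """Least-squares polynomial fit via the normal equations.

    Returns coefficients in ascending order of power (c0 + c1*x + ... + cd*x**d).
    """
    n = degree + 1
    a = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
            b[r] -= factor * b[col]
    # Back substitution.
    coeffs = [0.0] * n
    for i in reversed(range(n)):
        coeffs[i] = (b[i] - sum(a[i][j] * coeffs[j] for j in range(i + 1, n))) / a[i][i]
    return coeffs


def poly_eval(coeffs, x):
    return sum(c * x ** i for i, c in enumerate(coeffs))


def frequency_at_threshold(freqs_hz, thresholds_db_hl, target_db_hl=70.0, degree=4, grid=2000):
    """Frequency (Hz) where the fitted audiogram first rises through target_db_hl, or None."""
    xs = [math.log2(f / 1000.0) for f in freqs_hz]  # assumption: fit on a log2 frequency axis
    coeffs = polyfit(xs, thresholds_db_hl, degree)

    def g(x):
        return poly_eval(coeffs, x) - target_db_hl

    lo, hi = min(xs), max(xs)
    step = (hi - lo) / grid
    for k in range(grid):  # scan for the first upward crossing of the target level
        x0, x1 = lo + k * step, lo + (k + 1) * step
        if g(x0) < 0 <= g(x1):
            for _ in range(60):  # refine the bracketed crossing by bisection
                mid = (x0 + x1) / 2
                if g(mid) < 0:
                    x0 = mid
                else:
                    x1 = mid
            return 1000.0 * 2 ** ((x0 + x1) / 2)
    return None


if __name__ == "__main__":
    freqs = [125, 250, 500, 750, 1000, 1500, 2000]
    thresholds = [30, 35, 50, 65, 80, 90, 100]  # hypothetical audiogram (dB HL)
    print(frequency_at_threshold(freqs, thresholds))
```

Passing `target_db_hl=90.0` would give the analogous 90-dB-HL frequency on which the clinical Rx is based, although how Custom Sound rounds that frequency to an available filter boundary is not described here.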

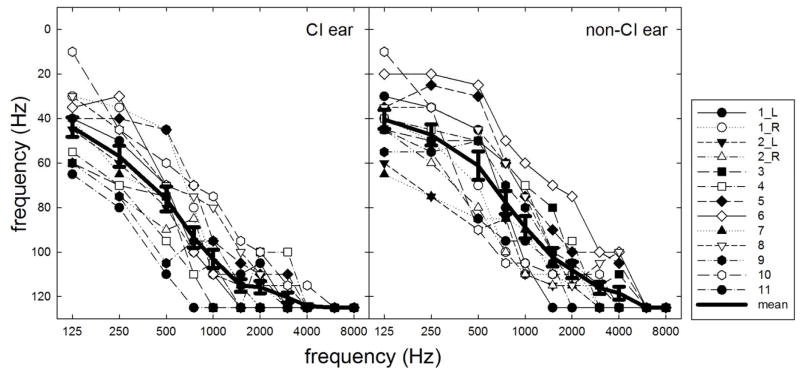

Individual and mean air-conduction audiometric thresholds are shown in Figure 1. Frequencies for which no behavioral threshold could be obtained are indicated as 125 dB HL. Hearing losses in both the implanted and non-implanted ear were sensory in nature (air-bone gaps ≤ 10 dB). To promote transparency and reproducibility in hearing research, individual numerical audiometric thresholds for the implanted and non-implanted ears are provided in Table A1 in the appendix.

FIGURE 1.

Individual and mean audiometric thresholds for the implanted ears (panel A) and non-implanted ears (panel B). Error bars represent +1 SEM.

Methods and stimuli

Acoustic amplification was provided for the low-frequency band encompassing audiometric thresholds up to 90 dB HL—as specified by the Nucleus Custom Sound clinical software as used in the U.S. Nucleus Hybrid Implant System clinical trial. Amplification was provided via the Nucleus 6 sound processor using the integrated acoustic component via a receiver in the canal (RIC) unit attached to the sound processor. Eleven of the thirteen ears were also fitted with custom earmolds—participants 1_R and 5 did not use a custom earmold as NAL-NL2 target audibility was achieved through 500 Hz using a non-custom dome attached to the RIC. All participants were evaluated for the presence of cochlear dead regions for low frequencies (500 & 750 Hz) in the implanted ear by using the Threshold Equalizing Noise (TEN) test (Moore et al. 2000, 2004). Though we were unable to present the TEN stimuli at levels that were within the range of comfort for all listeners at both 500 and 750 Hz (see audiometric thresholds shown in Table A1), the TEN test provided no evidence of cochlear dead regions in the low-frequency region for any of the participants in the current study.

Speech understanding was assessed using both a fixed-SNR and an adaptive procedure. Speech testing was conducted using the R-SPACE™ sound simulation system, which includes a semi-diffuse restaurant noise originating from eight loudspeakers placed circumferentially about the subject’s head. For the fixed-SNR condition, AzBio sentences were presented at 67 dBA from the loudspeaker at 0 degrees azimuth, with the SNR varying between +10 and 0 dB. The SNR was individually determined to ensure that performance did not approach ceiling or floor and was set using the bimodal hearing configuration with the full CI spectral bandwidth (188–7938 Hz). Specifically, all individuals were first tested at +5 dB SNR; if the participant scored below 25% or above 75% in this condition, the SNR was adjusted by 5 dB. The individual SNRs used for testing are shown in Table 2. For the adaptive procedure, the restaurant noise was fixed at 72 dBA. Hearing In Noise Test [HINT; Nilsson et al. 1994] sentences originated from 0 degrees azimuth, and their level was varied adaptively using a 1-down, 1-up tracking procedure. Per the development and validation of the HINT (Nilsson et al. 1994), all words in a given sentence must be repeated correctly for the SNR to decrease. With this rule, the actual percentage of words repeated correctly at a given SNR would be expected to exceed 50%. Although a modified adaptive HINT rule (Chan et al. 2008) allows for minimal errors without changing the stepping rule, the original tracking method was used here for consistency with previously published data obtained with the R-SPACE™ system (Gifford et al. 2013). The 72-dBA level was chosen because it matched the physical level of the restaurant noise from which the stimuli were recorded. A survey of 27 restaurants in the San Francisco area found a mean noise level of 71 dBA and a median of 72 dBA (Lebo et al. 1994); thus, the 72-dBA noise level holds high ecological validity. All CI programs incorporated autosensitivity with the default sensitivity setting of 12. Thus, the level at which infinite output-limiting compression was activated in the presence of background noise was 77 dB SPL (the default CSPL setting of 65 dB SPL plus 12 dB). This means that for speech reception thresholds (SRTs) greater than +5 dB SNR, the CI processor was operating in saturation. Additional information regarding processor operation in these conditions is provided in the description of results for adaptive SRT.
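The adaptive rule and the saturation arithmetic above can be illustrated with a short Python sketch. Only the whole-sentence scoring rule, the 72-dBA noise level, and the 65 + 12 = 77 dB SPL kneepoint come from the text; the starting SNR, the 4-dB/2-dB step sizes, the SRT averaging window, and the simulated listener are assumptions chosen for illustration, not the study’s exact protocol, and all names are hypothetical. Because the SNR drops only after a fully correct sentence, a 1-down, 1-up track converges near the SNR at which about half of the sentences are repeated entirely correctly, which is why word-level scores at that SNR exceed 50%.

```python
NOISE_LEVEL_DBA = 72.0           # fixed restaurant-noise level (from the text)
KNEEPOINT_DB_SPL = 65.0 + 12.0   # default CSPL (65 dB SPL) + sensitivity 12 = 77 dB SPL


def speech_above_kneepoint(snr_db, noise_db=NOISE_LEVEL_DBA, kneepoint_db=KNEEPOINT_DB_SPL):
    """True if the speech level (noise + SNR) exceeds the output-limiting kneepoint.

    Simplification: treats the dBA noise level and the dB SPL kneepoint as comparable.
    """
    return noise_db + snr_db > kneepoint_db


def run_hint_track(sentence_correct, start_snr_db=10.0, n_sentences=20,
                   big_step_db=4.0, small_step_db=2.0, n_big_steps=4):
    """1-down, 1-up track: the SNR drops only when a whole sentence is repeated correctly.

    `sentence_correct(snr_db) -> bool` stands in for the listener (hypothetical).
    Step sizes, starting SNR, and the SRT averaging window are assumptions.
    """
    levels = []
    snr = start_snr_db
    for i in range(n_sentences):
        levels.append(snr)
        step = big_step_db if i < n_big_steps else small_step_db
        snr = snr - step if sentence_correct(snr) else snr + step
    levels.append(snr)  # level at which the next sentence would have been presented
    tail = levels[n_big_steps:]
    return sum(tail) / len(tail), levels


if __name__ == "__main__":
    # Hypothetical listener who repeats every sentence correctly at SNRs of +2 dB or better.
    srt, levels = run_hint_track(lambda snr: snr >= 2.0)
    print(f"SRT = {srt:.1f} dB SNR; speech above kneepoint at SRT: {speech_above_kneepoint(srt)}")
```

With a +5 dB SNR the speech level equals the 77 dB SPL kneepoint exactly, so only SRTs above +5 dB SNR place the processor in saturation, matching the statement in the text.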

The LF CI cutoffs used in the current study are outlined in Table 3. Specifically, the LF CI cutoff was varied in 125-Hz steps from 188 Hz up to 813 Hz for eight ears, and up to 938 Hz for the five ears for which the clinical software-recommended (Rx) cutoff was 813 Hz (1_R, 2_L, 5, 8, and 10). The extra condition was added for these five ears because the goal of the study was to assess outcomes for LF CI cutoffs both below and above the Rx frequency. For the adaptive procedure, only a subset of LF CI cutoffs was tested, given the limited number of HINT sentences available for threshold tracking; for each participant, this subset included the Rx cutoff and at least one frequency above and one below it. Speech recognition was assessed in the bimodal (CI + contralateral HA) and bilateral-aided EAS (CI + bilateral HA) conditions in both the adaptive and fixed-SNR experiments for all participants. In addition, the CI-alone condition was tested for 8 of the 13 ears at all LF CI cutoff frequencies. Acoustic-hearing ears not being assessed (as in the CI-alone and bimodal conditions) were occluded with a foam earplug.

Table 3.

Relative LF CI cutoffs for each of the Custom Sound prescriptive (Rx) settings indicated for the 13 ears in the current study. Each of the tested cutoffs was assessed in the CI only (n = 8), bimodal (n = 13), and bilateral-aided EAS conditions (n = 13).

| Relative cutoffs | 188 Hz | 313 Hz | 438 Hz | 563 Hz | 688 Hz | 813 Hz | 938 Hz |
|---|---|---|---|---|---|---|---|
| Rx: 438 Hz | Full bandwidth | Rx-125 Hz (min overlap) | Rx | Rx+125 Hz (gap) | tested | tested | Did not test |
| Rx: 563 Hz | Full bandwidth | tested | Rx-125 Hz (min overlap) | Rx | Rx+125 Hz (gap) | tested | Did not test |
| Rx: 688 Hz | Full bandwidth | tested | tested | Rx-125 Hz (min overlap) | Rx | Rx+125 Hz (gap) | Did not test |
| Rx: 813 Hz | Full bandwidth | tested | tested | tested | Rx-125 Hz (min overlap) | Rx | Rx+125 Hz (gap) |

The effect of LF CI cutoff was assessed in both an acute and a chronic condition for the first three study participants (five ears) in the fixed-SNR R-SPACE™ condition. At the initial fitting of the N6 processor with acoustic component, the first three participants (five ears) were tested at all LF CI cutoff frequencies in the bilateral-aided EAS condition (CI + bilateral HA). They were then provided with programs incorporating four different boundary frequencies: the clinical software recommendation (Rx), Rx – 125 Hz (minimal overlap), Rx + 125 Hz (gap), and full overlap (188 Hz). In addition to these programs, which were used for all participants, each listener was assessed with cutoffs of 188 Hz (full overlap), 313, 438, 563, 688, and 813 Hz so that we could assess the effect of discrete LF CI cutoff frequencies. All programs incorporated SCAN SmartSound iQ, background-noise reduction (SNR-NR; Mauger et al. 2014; Wolfe et al. 2015), and wind-noise reduction (WNR) (Studebaker et al. 1999). Study participants were asked to use all four programs equally for 3–4 weeks. When participants returned for testing after this 3–4-week chronic phase, their use of all programs was verified using the data-logging feature in Custom Sound 4.2. After the first three participants (five ears) had completed testing following acute and chronic use of the different programs, we observed no effect of listening experience (acute vs. chronic) either at the group level [F(3,4) = 1.1, p = 0.35] or at the individual level for any of the tested conditions, on the basis of the 95% confidence interval for AzBio sentence lists computed using a binomial distribution model for two 20-item lists (Spahr et al. 2012). Thus, for experimental efficiency, we discontinued acute testing for all subsequent participants. Further investigation would be required to thoroughly assess the effect of listening experience on LF CI cutoff outcomes, as data from just three participants (five ears) are not sufficient to draw definitive conclusions about the effect of listening experience on EAS overlap. Because participants used 4 of the 6 LF CI cutoffs during the 3–4 weeks of chronic use, 2 of the cutoffs were tested acutely. We were not concerned about this, given that there was no difference in performance between the acute and chronic test points for any of the cutoffs in any of the listening conditions tested for the first three participants (five ears), though this point should be taken into consideration.

Speech understanding was evaluated in the bimodal and bilateral-aided EAS conditions for all participants. In addition, the last 8 enrolled participants were tested in the CI-alone condition for fixed EAS boundaries ranging from 188 to 813 Hz in 125-Hz steps. The CI-alone condition was assessed only for AzBio sentence recognition in the fixed-SNR R-SPACE™ background.

In addition to speech understanding with fixed SNR, participants were asked to rate how difficult it was to understand speech for each of the LF CI cutoff conditions in the fixed SNR task. Estimates of speech understanding or listening difficulty were obtained using a visual analog scale (VAS) similar to that used by Gatehouse and Noble (2004). The VAS was made up of 10 equidistant ticks ranging from 1 to 10 with 1 corresponding to “no difficulty at all” and 10 equaling the “most difficulty imaginable”. Participants were provided with a laminated 8.5″x11″ VAS prior to testing and were aware that they would be asked to provide a difficulty rating after each block. An example visual of the VAS used for experimentation is provided in the appendix.

RESULTS

AzBio sentence understanding: fixed SNR

Individual speech-understanding scores for each LF CI cutoff tested are shown in Tables 4A and 4B for the bimodal and bilateral-aided EAS conditions, respectively. Each participant’s “preferred” LF CI cutoff, based on the reported preferred sound quality in the chronic condition, is indicated by a shaded cell, and an asterisk marks those “preferred” scores that were significantly different from that individual’s own best speech understanding score based on the upper and lower 95% confidence intervals for AzBio sentence lists (Spahr et al. 2012). Five of the 13 ears (4 of the 11 enrolled participants: 2_L, 2_R, 3, 4, and 8) exhibited significantly poorer speech understanding in the bilateral-aided EAS condition with the individual’s “preferred” LF CI cutoff than with that individual’s own best-performing cutoff. Consistent with previous reports of a disconnect between preference and performance (Svirsky et al. 2015), the current data indicate that we may not be able to rely on patient report for optimizing LF CI cutoff parameters.

Table 4.

Individual speech understanding performance (AzBio sentence recognition at the fixed SNR, % correct) for all LF CI cutoffs tested for each participant in the A) bimodal listening configuration and B) bilateral-aided EAS condition. In each table, the participant’s “preferred” LF CI cutoff for the best-aided EAS condition, as indicated by the participant’s reported preferred sound quality, is indicated by shading. In Table 4B, an asterisk indicates that the participant’s preferred LF CI cutoff, for sound quality purposes, yielded significantly lower speech understanding than that individual’s own best score, based on the upper and lower 95% confidence intervals for AzBio sentences administered as two 20-sentence lists. Rx marks the clinical software-recommended cutoff; DNT = did not test.

A) LF CI cutoff (in Hz): bimodal configuration

| Participant | 188 Hz | 313 Hz | 438 Hz | 563 Hz | 688 Hz | 813 Hz | 938 Hz |
|---|---|---|---|---|---|---|---|
| 1_L | 82.6 | 77.2 | 68.3 | Rx: 83.3 | 69.8 | 49.3 | DNT |
| 1_R | 78.2 | 83.5 | 90.0 | 80.0 | 62.4 | Rx: 61.9 | 52.7 |
| 2_L | 28.2 | 36.2 | 56.4 | 40.3 | 43.5 | Rx: 40.7 | 34.2 |
| 2_R | 46.2 | 31.9 | Rx: 14.3 | 4.2 | 3.1 | 7.6 | DNT |
| 3 | 68.2 | 65.4 | Rx: 77.6 | 71.4 | 79.9 | 65.1 | DNT |
| 4 | 19.2 | 36.8 | Rx: 37.7 | 32.5 | 57.2 | 52.3 | DNT |
| 5 | 78.4 | 71.9 | 72.8 | 62.9 | 42.6 | Rx: 26.9 | 23.9 |
| 6 | 66.4 | 67.8 | 75.4 | Rx: 55.3 | 63.5 | 67.7 | DNT |
| 7 | 24.4 | 47.6 | 43.3 | Rx: 29.6 | 28.0 | 18.0 | DNT |
| 8 | 38.6 | 57.1 | 54.3 | 37.4 | 50.0 | Rx: 44.7 | 39.0 |
| 9 | 78.7 | 82.1 | 77.1 | Rx: 71.7 | 44.8 | 45.6 | DNT |
| 10 | 61.5 | 58.8 | 57.3 | 50.2 | 45.4 | Rx: 45.4 | 39.9 |
| 11 | 70.0 | 64.0 | 48.6 | Rx: 26.8 | 27.0 | 26.9 | DNT |
| MEAN | 57.0 | 60.0 | 59.5 | 49.7 | 47.5 | 42.2 | 37.9 |
| MEAN without P4 | 60.1 | 62.0 | 61.3 | 51.1 | 46.7 | 41.3 | 37.9 |

B) LF CI cutoff (in Hz): bilateral-aided EAS condition

| Participant | 188 Hz | 313 Hz | 438 Hz | 563 Hz | 688 Hz | 813 Hz | 938 Hz |
|---|---|---|---|---|---|---|---|
| 1_L | 77.0 | 81.2 | 87.4 | Rx: 78.7 | 79.0 | 80.0 | DNT |
| 1_R | 75.8 | 83.6 | 85.1 | 84.0 | 82.6 | Rx: 57.5 | 54.0 |
| 2_L | 26.5* | 44.0 | 50.3 | 39.1 | 36.3 | Rx: 35.0 | 37.0 |
| 2_R | 48.4* | 79.2 | Rx: 66.9 | 46.4 | 36.2 | 20.0 | DNT |
| 3 | 56.9* | 82.6 | Rx: 80.0 | 82.3 | 83.1 | 78.6 | DNT |
| 4 | 26.9 | 39.0* | Rx: 40.3 | 42.5 | 59.2 | 65.3 | DNT |
| 5 | 80.1 | 72.5 | 71.7 | 52.2 | 60.0 | Rx: 43.0 | 40.4 |
| 6 | 73.1 | 71.5 | 70.4 | Rx: 70.3 | 70.4 | 47.0 | DNT |
| 7 | 73.4 | 70.4 | 77.0 | Rx: 60.5 | 47.0 | 45.6 | DNT |
| 8 | 49.5 | 48.3* | 59.4 | 53.6 | 55.6 | Rx: 55.9 | 46.0 |
| 9 | 84.7 | 79.7 | 82.6 | Rx: 71.3 | 55.7 | 50.0 | DNT |
| 10 | 64.4 | 71.7 | 59.0 | 64.0 | 57.6 | Rx: 46.8 | 38.0 |
| 11 | 77.0 | 77.0 | 68.0 | Rx: 53.0 | 32.0 | 33.2 | DNT |
| MEAN | 68.2 | 73.9 | 69.1 | 61.4 | 58.1 | 50.6 | 43.1 |
| MEAN without P4 | 72.3 | 73.9 | 71.3 | 62.8 | 58.0 | 49.5 | 43.1 |

After data collection was completed for subject 4, he underwent a postoperative CT scan to determine the placement of his electrode array, given his atypical pattern of performance both in this study and in the clinic. The scan revealed considerable tip fold-over: electrode E22, which should be the most distal electrode on the array, was located at the 1800-Hz place according to the spiral ganglion atlas (Stakhovskaya et al. 2007) for its projected insertion depth, and electrode E18 was the most distally located electrode, consistent with the 1500-Hz place. Given this electrode anomaly, all averaged data and statistical analyses from this point forward exclude subject 4’s data. For the two BiBi participants, each ear was treated as an independent observation in the analyses. See Table A1 in the appendix for statistical analyses including only the first implanted ear for BiBi participants 1 and 2.
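The MEAN and MEAN without P4 rows in Table 4 appear to average only the tested cells of each column. The sketch below checks this for two Table 4A columns, assuming that DNT cells are simply dropped (the 938-Hz mean of 37.9 matches the average of its five tested ears); the helper name is hypothetical.

```python
from statistics import mean

# Table 4A (bimodal) participant order; None marks a DNT (did not test) cell.
IDS = ["1_L", "1_R", "2_L", "2_R", "3", "4", "5", "6", "7", "8", "9", "10", "11"]
COL_188 = [82.6, 78.2, 28.2, 46.2, 68.2, 19.2, 78.4, 66.4, 24.4, 38.6, 78.7, 61.5, 70.0]
COL_938 = [None, 52.7, 34.2, None, None, None, 23.9, None, None, 39.0, None, 39.9, None]

def column_mean(scores, ids=IDS, exclude=()):
    """Mean of the tested cells in one column, skipping DNT cells and excluded participants."""
    kept = [s for pid, s in zip(ids, scores) if s is not None and pid not in exclude]
    return round(mean(kept), 1)

print(column_mean(COL_188), column_mean(COL_188, exclude={"4"}))  # 57.0 60.1
print(column_mean(COL_938), column_mean(COL_938, exclude={"4"}))  # 37.9 37.9
```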

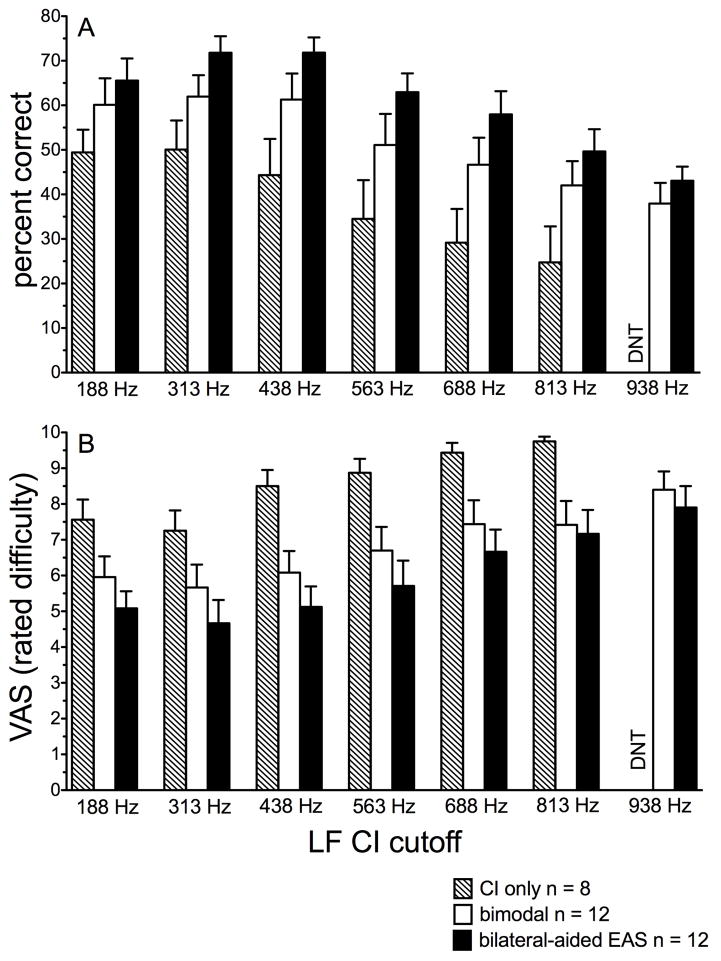

Effect of discrete EAS boundaries (188–813 Hz)

For ease of visual analysis, Figure 2A displays mean AzBio sentence recognition in noise at each discrete EAS boundary assessed for the CI-alone (hatched bars, n = 8), bimodal (unfilled bars, n = 12), and bilateral-aided EAS (filled bars, n = 12) conditions. As a simple first analysis, we compared bimodal and bilateral-aided EAS performance collapsed across LF CI cutoff. A paired t-test revealed significantly higher scores in the bilateral-aided EAS condition than in the bimodal condition (t(1) = −5.6, p < 0.001; mean difference = 10 percentage points), consistent with previous reports showing that hearing preservation in the implanted ear yields significantly better outcomes.

FIGURE 2.

AzBio sentence recognition, in percent correct (panel A), and associated perceived listening difficulty (panel B) as a function of discrete EAS boundary frequency from 188 through 938 Hz. The CI alone, bimodal, and bilateral-aided EAS conditions are shown as hatched, unfilled, and filled bars, respectively. Error bars represent +1 SEM.
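The paired t-test on scores collapsed across LF CI cutoff can be written out directly. This minimal sketch assumes that each bimodal score is paired with the bilateral-aided EAS score from the same ear at the same cutoff; the degrees of freedom of a paired test equal the number of pairs minus one. Only the t statistic is computed; a p-value would come from the t distribution (for example, SciPy’s `scipy.stats.t`), omitted here to keep the example dependency-free. The usage data are toy values, not study data.

```python
import math
from statistics import mean, stdev

def paired_t(a, b):
    """Paired t statistic and degrees of freedom for matched samples.

    a[i] is paired with b[i] (e.g., bimodal vs bilateral-aided EAS score for the
    same ear at the same LF CI cutoff). df = number of pairs - 1.
    """
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [x - y for x, y in zip(a, b)]
    se = stdev(diffs) / math.sqrt(len(diffs))  # standard error of the mean difference
    return mean(diffs) / se, len(diffs) - 1

# Toy data: a negative t means the first sample (e.g., bimodal) is lower on average.
print(paired_t([1, 2, 3], [2, 4, 5]))  # approximately (-5.0, 2)
```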

Table 5 summarizes all one-way repeated-measures analyses of variance (ANOVAs). In each ANOVA, LF CI cutoff was the independent variable and speech understanding, in percent correct, was the dependent variable. Because differences between listening conditions were not the focus of this analysis, we analyzed each condition separately. For all listening conditions tested (CI-alone, bimodal, and bilateral-aided EAS), there was a significant effect of LF CI cutoff on speech understanding (see Table 5 for details).
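The one-way repeated-measures ANOVA run for each listening condition can be sketched as the standard partition of the total sum of squares into condition, subject, and residual (condition × subject) terms. This is a minimal sketch assuming complete data (every ear tested at every cutoff; for example, the six cutoffs from 188 to 813 Hz give k = 6). It returns the conventional degrees of freedom, k − 1 and (k − 1)(n − 1), and does not implement the Holm-Sidak or Tukey post hoc comparisons listed in Table 5. The function name and toy data are hypothetical.

```python
def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA on complete cases.

    data[i][j] is the score of subject (ear) i at condition (cutoff) j.
    Returns (F, df_condition, df_error) from the partition
    SS_total = SS_condition + SS_subject + SS_error.
    """
    n, k = len(data), len(data[0])
    if n < 2 or k < 2 or any(len(row) != k for row in data):
        raise ValueError("need a complete subjects x conditions table, at least 2 x 2")
    grand = sum(map(sum, data)) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_error = ss_total - ss_cond - ss_subj  # condition-by-subject residual
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    f_stat = (ss_cond / df_cond) / (ss_error / df_error)
    return f_stat, df_cond, df_error

# Toy data: 3 subjects x 3 conditions (not study data).
print(rm_anova_oneway([[1, 2, 3], [2, 3, 5], [3, 4, 4]]))  # approximately (9.0, 2, 4)
```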

Table 5.

Summary of statistical analyses for LF CI cutoff in the different listening configurations (bimodal, bilateral-aided EAS) and assessments (fixed SNR, subjective listening difficulty, and adaptive SRT). Data were derived from one-way, repeated measures ANOVA. Post hoc testing was completed using all pairwise multiple comparisons with the Holm-Sidak statistic for parametric analyses and Tukey for the non-parametric analyses. Only bimodal SRT data were analyzed with non-parametric statistics due to lack of normality.

| Assessment | LF CI cutoff definition | Bimodal | Bilateral-aided EAS | CI only (unilateral) |
|---|---|---|---|---|
| Speech understanding (% correct), fixed SNR | Discrete LF CI cutoff | n = 12 ears, 10 participants • Significant effect of cutoff: F(5, 11) = 7.9, p < 0.001 • 813 Hz < 438 Hz, 313 Hz, & 188 Hz • 688 Hz < 438 Hz, 313 Hz, & 188 Hz | n = 12 ears, 10 participants • Significant effect of cutoff: F(5, 11) = 9.1, p < 0.001 • 813 Hz < 563 Hz, 438 Hz, 313 Hz, & 188 Hz • 688 Hz < 438 Hz & 313 Hz | n = 12 ears, 10 participants • Significant effect of cutoff: F(5, 7) = 8.5, p < 0.001 • 813 Hz < 188 Hz, 313 Hz, & 438 Hz • 688 Hz < 188 Hz & 313 Hz |
| | Relative LF CI cutoff | n = 12 ears, 10 participants • Significant effect of cutoff: F(5, 11) = 5.2, p < 0.001 • Gap (Rx+125 Hz) < full (188 Hz), 438 Hz, & 70 dB HL | n = 12 ears, 10 participants • Significant effect of cutoff: F(5, 11) = 5.2, p < 0.001 • Gap (Rx+125 Hz) < 438 Hz & Minimal overlap (Rx-125 Hz) | Did not assess |
| Subjective listening difficulty (VAS), fixed SNR | Relative LF CI cutoff | n = 12 ears, 10 participants • Significant effect of cutoff: F(5, 11) = 4.5, p = 0.002 • Gap (Rx+125 Hz) < full (188 Hz), 438 Hz, 70 dB HL, & Minimal overlap (Rx-125 Hz) | n = 12 ears, 10 participants • Significant effect of cutoff: F(5, 11) = 4.2, p = 0.003 • Gap (Rx+125 Hz) < 438 Hz & Minimal overlap (Rx-125 Hz) | |
| Speech understanding (dB SNR), adaptive SRT | Discrete LF CI cutoff | n = 8 ears, 6 participants • Significant effect of cutoff: χ²(7) = 24.8, p < 0.001 • 813 Hz < 188 Hz, 313 Hz, 438 Hz, & 563 Hz • 688 Hz < 188 Hz, 313 Hz, 438 Hz, & 563 Hz | n = 8 ears, 6 participants • Significant effect of cutoff: F(5, 7) = 3.9, p = 0.019 • 688 Hz < 438 Hz • 188 Hz < 438 Hz | |
| | Relative LF CI cutoff | n = 8 ears, 6 participants • Significant effect of cutoff: χ²(7) = 22.8, p < 0.001 • Gap (Rx+125 Hz) < full (188 Hz), 438 Hz, and 70 dB HL | n = 8 ears, 6 participants • Significant effect of cutoff: F(5, 7) = 3.3, p = 0.015 • Gap (Rx+125 Hz) < 438 Hz | |

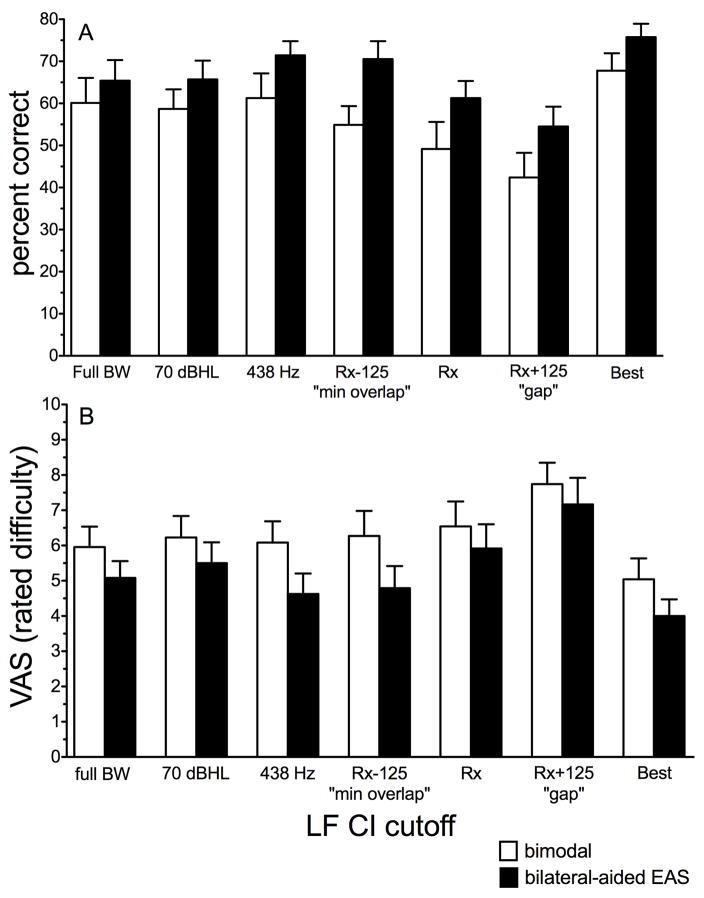

Effect of relative LF CI cutoff

Because participants had different degrees of hearing preservation in the implanted ear, we also examined LF CI cutoffs defined relative to each individual, as these hold greater potential for generalization. Figure 3 displays AzBio sentence recognition in the fixed-SNR conditions for the bimodal (unfilled bars) and bilateral-aided EAS (filled bars) conditions for the following seven LF CI cutoffs: 1) full CI bandwidth (complete overlap), 2) the frequency at which the audiometric threshold reaches 70 dB HL (based on linear regression, shown in Table A1), 3) 438 Hz, 4) Rx – 125 Hz or “minimal overlap”, 5) the clinical software recommendation or “Rx” (Table 2), 6) Rx + 125 Hz or “gap”, and 7) the crossover yielding the individual “best” score. The rationale for most of these conditions is self-evident; condition 3 (438 Hz) was included because it is the highest tested cutoff below the average F1 frequency of English vowels (mean = 490 Hz), so that the CI passband still encompasses average F1 information.

FIGURE 3.

Mean AzBio sentence recognition, in percent correct (panel A), and associated perceived listening difficulty (panel B) as a function of relative LF CI cutoff for the bimodal (unfilled) and bilateral-aided EAS conditions (filled bars). Error bars represent +1 SEM.
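The relative cutoffs listed above can be computed from an individual’s audiogram and Rx. The sketch below assumes that the 70-dB-HL frequency comes from an ordinary least-squares line of threshold (dB HL) against frequency in Hz; the source states only that linear regression was used, so the choice of predictor (Hz rather than, say, log frequency) is an assumption, and the estimate is not rounded to a programmable boundary. The audiogram in the usage lines and all function names are hypothetical.

```python
def fit_line(freqs_hz, thresholds_db):
    """Ordinary least-squares fit: threshold = intercept + slope * frequency."""
    n = len(freqs_hz)
    mx, my = sum(freqs_hz) / n, sum(thresholds_db) / n
    sxx = sum((x - mx) ** 2 for x in freqs_hz)
    sxy = sum((x - mx) * (y - my) for x, y in zip(freqs_hz, thresholds_db))
    slope = sxy / sxx
    return my - slope * mx, slope

def freq_at_threshold(freqs_hz, thresholds_db, target_db=70.0):
    """Frequency (Hz) at which the fitted audiogram line reaches target_db."""
    intercept, slope = fit_line(freqs_hz, thresholds_db)
    if slope <= 0:
        raise ValueError("thresholds must worsen with frequency for this estimate")
    return (target_db - intercept) / slope

def relative_cutoffs(rx_hz, f70_hz, step_hz=125):
    """The six relative LF CI cutoffs analysed (individual 'best' excluded)."""
    return {
        "full": 188,
        "70 dB HL": f70_hz,
        "438 Hz": 438,
        "minimal overlap (Rx-125)": rx_hz - step_hz,
        "Rx": rx_hz,
        "gap (Rx+125)": rx_hz + step_hz,
    }

# Hypothetical audiogram (not a study participant), thresholds in dB HL.
f70 = freq_at_threshold([125, 250, 500, 750], [40, 50, 70, 90])
print(round(f70))  # 500
print(relative_cutoffs(rx_hz=688, f70_hz=round(f70)))
```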

As described above, Figure 3 displays the mean data included in the one-way repeated measures ANOVA using LF CI cutoff as the independent variable and speech understanding score (in percent correct) as the dependent variable. Though we display individual “best” LF CI cutoff in Figure 3, we did not include the “best” condition for statistical analysis purposes. There was a significant effect of LF CI cutoff on speech understanding for both the bimodal and bilateral-aided EAS conditions (see Table 5 for greater detail).

AzBio sentence understanding in fixed SNR: Subjective reports of listening difficulty

Effect of discrete LF CI cutoff frequency (188–813 Hz)

Figure 2B displays mean VAS ratings of listening difficulty for AzBio sentence recognition in noise at each discrete LF CI cutoff frequency for the CI-alone (hatched bars), bimodal (unfilled bars), and bilateral-aided EAS (filled bars) conditions. Mean VAS ratings were 6.4 and 5.4 for the bimodal and bilateral-aided EAS conditions, respectively, and a paired t-test revealed significantly lower perceived listening difficulty in the bilateral-aided EAS condition (t(77) = 4.8, p < 0.001). This parallels both the speech-understanding data in Figures 2A and 3A and the literature showing that hearing preservation in the implanted ear yields significantly better outcomes. The CI-alone data were not included in this analysis because a difference between unilateral CI and either bimodal or bilateral-aided EAS was not a primary research question for the current study. No further statistical analyses were completed for the discrete LF CI cutoff frequencies.

Effect of relative LF CI cutoff

Figure 3B displays mean VAS ratings of listening difficulty for AzBio sentence recognition in noise for the bimodal (unfilled bars) and bilateral-aided EAS conditions (filled bars) for the same 7 relative LF CI cutoff frequencies shown in Figure 3A. We completed a separate one-way, repeated-measures ANOVA for the relative cutoffs for both the bimodal and bilateral-aided EAS conditions. The individual “best” condition was not included as a separate cutoff for the analysis, despite being displayed in Figure 3. We found a significant effect of relative LF CI cutoff on subjective listening difficulty for both the bimodal and bilateral-aided EAS conditions. Please see Table 5 for further detail.

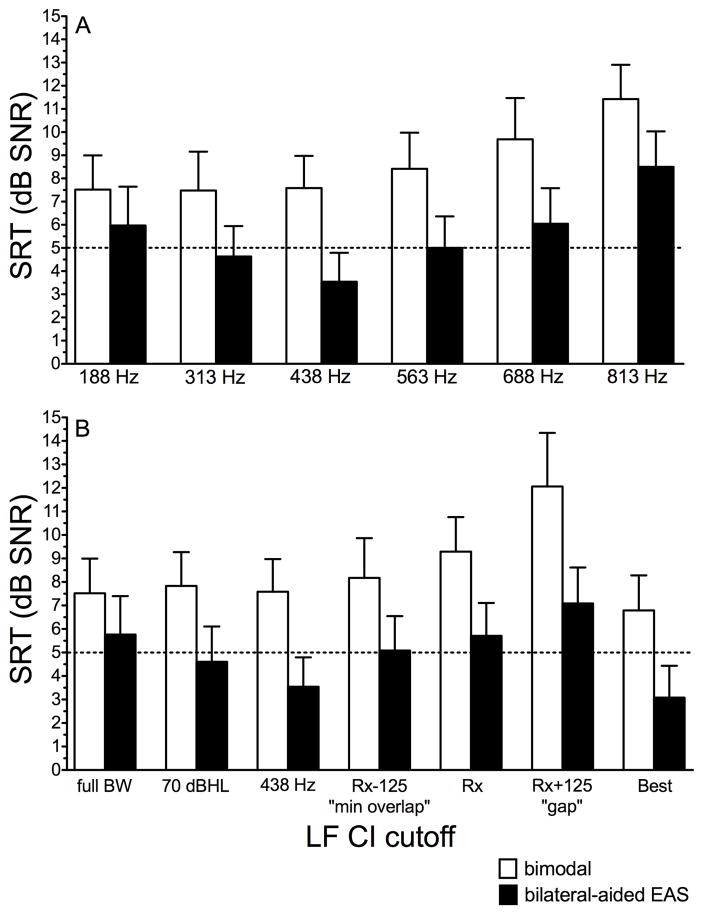

HINT sentence recognition: adaptive speech reception threshold (SRT)

The adaptive SRT was completed for 9 of 13 ears. Time did not allow testing of all LF CI cutoffs for 4 ears (9, 10, 12, and 13); further, subject 4’s data were excluded from this analysis because of electrode tip fold-over. Figures 4A and 4B display mean SRTs, in dB SNR, for HINT sentence recognition in the R-SPACE™ restaurant noise for the discrete and relative EAS boundaries, as in previous figures. The horizontal line in Figures 4A and 4B marks +5 dB SNR, the level above which infinite-ratio output-limiting compression was active for this listening condition. All CI programs incorporated autosensitivity with the default manual sensitivity setting of 12. With a fixed noise level of 72 dB SPL, speech exceeding +5 dB SNR was therefore infinitely compressed. This is because the kneepoint for output-limiting compression (termed “CSPL” in the Cochlear Custom Sound software) is 65 dB SPL, but when autosensitivity is active and background noise exceeds 57 dB SPL, the CSPL kneepoint shifts upward by the manual sensitivity setting. For the participants in the current study, all programmed with the default manual sensitivity setting of 12, the CSPL kneepoint was thus shifted by 12 dB to 77 dB SPL, or 5 dB above the 72-dB SPL noise. Thus, although +5 dB SNR is commonly encountered in real-world listening environments, all SRTs exceeding +5 dB SNR in this listening condition should be interpreted cautiously, as the sound processors were operating in saturation.

FIGURE 4.

Mean speech reception threshold (SRT), in dB SNR, for HINT sentences are shown for the discrete EAS boundaries in panel A and relative EAS boundaries in panel B. Bimodal and bilateral-aided EAS conditions are represented by the unfilled and filled bars, respectively. Error bars represent +1 SEM.
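The +5 dB SNR saturation point described above follows from simple arithmetic. The sketch encodes the rule as stated in the text (a 65-dB SPL kneepoint that shifts upward by the manual sensitivity setting when autosensitivity is on and noise exceeds 57 dB SPL). It is a simplified illustration of that stated rule, not Cochlear’s actual processing, and the function names are hypothetical.

```python
def cspl_kneepoint(noise_db_spl, base_kneepoint_db_spl=65.0, sensitivity=12,
                   autosens_onset_db_spl=57.0, autosensitivity=True):
    """Output-limiting kneepoint (dB SPL) under the rule described in the text.

    With autosensitivity on and noise above the onset level, the kneepoint
    moves up by the manual sensitivity setting, treated here as dB.
    """
    if autosensitivity and noise_db_spl > autosens_onset_db_spl:
        return base_kneepoint_db_spl + sensitivity
    return base_kneepoint_db_spl

def saturation_snr(noise_db_spl, **kwargs):
    """SNR (dB) above which speech exceeds the output-limiting kneepoint."""
    return cspl_kneepoint(noise_db_spl, **kwargs) - noise_db_spl

print(cspl_kneepoint(72.0), saturation_snr(72.0))  # 77.0 5.0
```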

Effect of discrete and relative LF CI cutoff

As shown in Figures 4A and 4B, mean SRTs collapsed across cutoffs were 8.7 and 5.6 dB SNR for the bimodal and bilateral-aided EAS conditions, respectively. A paired t-test revealed a significant difference between SRTs obtained in the two conditions (t(2) = 10.9, p < 0.001). This result is consistent with previous reports of a significant benefit of preserved acoustic hearing in the implanted ear for SRT, though here the magnitude of the benefit (3.1 dB) is somewhat greater than that reported previously (Dunn et al. 2010; Gifford et al. 2013).

Consistent with the previous analyses, we completed a one-way repeated-measures ANOVA with LF CI cutoff frequency as the independent variable and SRT as the dependent variable, separately for the bimodal and bilateral-aided EAS conditions, because differences between these conditions were not the focus of this research question. There was a significant effect of LF CI cutoff on SRT for both the bimodal and bilateral-aided EAS conditions (see Table 5 for details).

DISCUSSION

The primary purpose of this study was to investigate EAS programming parameters that may influence the optimization of outcomes for CI recipients with hearing preservation in the implanted ear. As discussed in the introduction, clinical optimization of EAS-related variables for HAs and CIs has received less attention in the literature than the question of EAS efficacy. Studies that have examined frequency allocation for the CI, the HA, and the associated EAS boundary (Büchner et al. 2009; Fraysse et al. 2006; Karsten et al. 2013; Kiefer et al. 2005; Plant and Babic 2016; Vermeire et al. 2008) have used relatively small samples with limited diversity in device type, insertion depth, aidable bandwidth, and the number of LF CI cutoffs tested (typically 2 to 3). In this study we systematically investigated the effect of six different LF CI cutoffs on speech understanding in a complex listening environment, together with the subjective listening difficulty associated with each tested boundary, for a group of CI recipients with standard-length electrodes in bimodal and bilateral-aided EAS hearing configurations.

Because current and past clinical recommendations for determining the LF CI cutoff were based on the frequency at which audiometric thresholds reach 90 dB HL (Helbig et al. 2011; Lenarz et al. 2009, 2013; Roland et al. 2016), we tested LF CI cutoff frequencies both below and above this clinical recommendation for all patients. On the basis of the speech-understanding and subjective-listening-difficulty data presented here, we conclude the following: 1) the previous clinical software recommendation for the EAS crossover (the frequency at which audiometric thresholds reach 90 dB HL) yielded neither the highest level of speech understanding nor the lowest rating of listening difficulty; 2) the full CI BW did not always yield the highest level of performance in either the bimodal or the bilateral-aided EAS condition; and 3) as demonstrated elsewhere, preserved acoustic hearing in the implanted ear provided significant benefit for speech understanding in complex listening environments as compared to the bimodal hearing configuration (Dorman and Gifford 2010; Dorman et al. 2012; Dunn et al. 2010; Gifford et al. 2008, 2010, 2012, 2013; Rader et al. 2013; Sheffield et al. 2014).

Clinical recommendations did not yield best performance

Starting with the first point, the clinical software Rx CI cutoff yielded neither the highest speech understanding nor the lowest reported listening difficulty. The ideal LF CI cutoff was generally closer to the frequency at which the audiogram reached 70 dB HL than to the frequency at which it reached 90 dB HL, as previously defined by the clinical software Rx; this was the case for 9 of the 13 ears tested, or 69% of the study sample. At the group level (Figure 2), either the 313- or the 438-Hz boundary yielded the highest performance and lowest rated difficulty for the bilateral-aided EAS condition, and LF CI cutoff frequencies from 188 through 438 Hz yielded consistent performance and rated difficulty for the bimodal hearing configuration.

Several hypotheses could account for the higher outcomes obtained with lower LF CI cutoffs. As mentioned in the introduction, there are multiple reports in the HA literature of diminishing amplification benefit for spectral regions in which audiometric thresholds exceed 65–70 dB HL (Amos and Humes 2007; Baer et al. 2002; Ching et al. 1998; Hogan and Turner 1998; Hornsby et al. 2011; Hornsby and Ricketts 2003, 2006; Turner 2006; Turner and Cummings 1999; Vickers et al. 2001). Thus the Rx cutoff frequency, which corresponds to the frequency at which the audiometric threshold reaches 90 dB HL, may place too much weight on acoustic amplification in a spectral region in which amplification is likely to be ineffective. An alternative hypothesis is that lowering the LF CI cutoff to 313 or 438 Hz, irrespective of LF audiometric thresholds, allows the implant to transmit F1 information for the majority of English vowels. Processing F1 information both through the CI passband and via LF acoustic amplification provides spectral redundancy, which may aid speech understanding in adverse listening conditions (Assmann et al. 2004; Warren et al. 1995). This spectral redundancy may be of particular benefit for individuals with more severe hearing loss in the F1 frequency range, for whom acoustic amplification alone will not be sufficient for successful information transmission.

Given these data, a thorough investigation into the ideal LF CI cutoff as a function of hearing preservation and device type is warranted, so that audiologists will be best able to help EAS patients reach their full auditory potential. Clinicians will rarely have time to assess speech understanding at various EAS boundary frequencies; thus, the current dataset suggests two approaches that could prove clinically useful for patients with bilateral acoustic hearing: 1) provide an EAS boundary 125 Hz lower than the previous clinical recommendation (Rx-125 Hz), and/or 2) provide an EAS boundary of 313 or 438 Hz. Either 313 or 438 Hz would provide close to maximum performance in the bilateral-aided EAS condition, and also in the bimodal hearing configuration should the acoustic component become temporarily nonfunctional, leaving the listener without a full CI bandwidth. Future studies should continue this investigation with longer (e.g., MED-EL FLEX 28 or FLEX soft) and shorter electrodes (e.g., Hybrid-L24 or S12), and should examine potential differences between perimodiolar and straight or lateral wall electrodes, to determine whether a generalized clinical recommendation for LF CI cutoff is possible. Figure A1 displays mean sentence recognition scores for the current participants with perimodiolar (n = 7) and lateral wall electrodes (n = 6), and the accompanying Appendix text briefly discusses electrode-specific findings. In the meantime, on the basis of the current dataset, good clinical practice would be to provide patients with several program options allowing for physical overlap in spectral bandwidth between the electric and acoustic hearing modalities.
In fact, based on the individual scores shown in Table 4, only one individual (patient 4) achieved a significantly higher score at an EAS boundary other than 438 Hz, based on 95% confidence intervals for two AzBio sentence lists (Spahr et al. 2012). As described previously, postoperative imaging completed for clinical purposes showed that this individual had considerable tip fold-over. Thus individuals with deviations in electrode insertion may represent special cases requiring further study.
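The two programming approaches suggested above can be expressed as a small helper that returns candidate LF CI cutoffs for a set of alternative programs. This is a hypothetical sketch: the function name is invented, and restricting the options to the 125-Hz-spaced cutoffs tested in this study is an assumption rather than a clinical rule.

```python
# Cutoffs on the 125-Hz-spaced grid tested in this study (Table 4).
TESTED_CUTOFFS_HZ = (188, 313, 438, 563, 688, 813, 938)

def candidate_cutoffs(rx_hz, step_hz=125):
    """Hypothetical helper: LF CI cutoffs for alternative programs.

    Combines the two approaches in the text (Rx - 125 Hz, and 313 or 438 Hz),
    dropping duplicates and anything outside the tested grid.
    """
    options = {rx_hz - step_hz, 313, 438}
    return sorted(f for f in options if f in TESTED_CUTOFFS_HZ)

for rx in (438, 563, 813):
    print(rx, candidate_cutoffs(rx))
```

For an Rx of 563 Hz the minimal-overlap option coincides with 438 Hz, so only two distinct programs result; for higher Rx values a third, Rx-125 Hz program is added.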

Full CI BW did not consistently yield the best performance, even for bimodal or CI-alone conditions

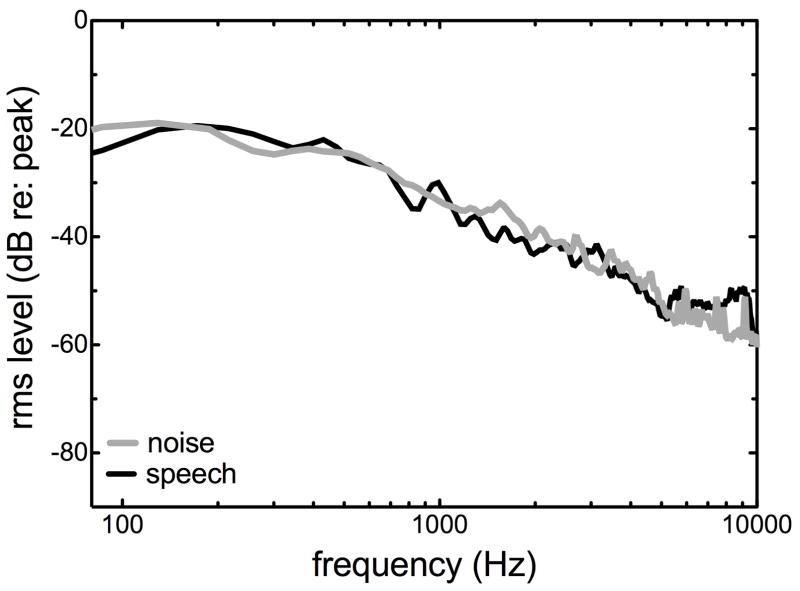

The second primary finding was that the full CI BW did not always yield the highest level of performance in either the bimodal or the bilateral-aided EAS condition. This was not unexpected for the bilateral-aided EAS condition given previous results (Karsten et al. 2013; Vermeire et al. 2008), but it is somewhat counterintuitive for the bimodal and CI-alone hearing configurations, for which full CI BW is the standard of care. Figure 5 displays the long-term average spectra for the R-SPACE™ restaurant noise (black line) and the AzBio sentences (gray line), plotting relative rms amplitude, in dB, as a function of frequency. Prior to analysis, the stimuli were normalized with respect to peak amplitude. As seen in Figure 5, although the speech and noise spectra are similar overall, the noise has higher amplitude for frequencies below 200 Hz. This is consistent with the finding that additional transmission of low frequencies via the CI, on top of the low-frequency transmission via the HAs, may not benefit speech understanding or perceived listening difficulty. With the LF CI cutoff set at 313 or 438 Hz, the higher quality CI stimulus does not transmit a band of frequencies in which the SNR for the target is relatively unfavorable. Because the speech and noise spectra were not perfectly matched, these data may not generalize to noise and talkers with more similar spectral characteristics.

Figure 5.

RMS amplitude, in dB re: peak amplitude, for the R-SPACE™ restaurant noise (black line) and AzBio sentences (gray line).
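One way to compute long-term spectra like those in Figure 5 is to peak-normalize each waveform, average windowed power spectra across frames, and compare levels in the region below 200 Hz. The frame length, the periodic Hann window, and the synthetic tone signals below are assumptions chosen for illustration; the source does not describe its analysis settings, and real use would substitute the recorded noise and concatenated sentences.

```python
import numpy as np

def ltas_db(x, fs, frame_len=1024):
    """Long-term average spectrum, in dB, of a peak-normalized waveform.

    Non-overlapping frames are weighted with a periodic Hann window and their
    power spectra averaged; a tiny floor keeps log10 finite in empty bins.
    """
    x = np.asarray(x, dtype=float)
    x = x / np.max(np.abs(x))  # normalize to unit peak amplitude
    n_frames = len(x) // frame_len
    if n_frames == 0:
        raise ValueError("signal is shorter than one frame")
    frames = x[: n_frames * frame_len].reshape(n_frames, frame_len)
    window = 0.5 - 0.5 * np.cos(2.0 * np.pi * np.arange(frame_len) / frame_len)
    power = np.abs(np.fft.rfft(frames * window, axis=1)) ** 2
    freqs = np.fft.rfftfreq(frame_len, d=1.0 / fs)
    return freqs, 10.0 * np.log10(power.mean(axis=0) + 1e-12)

def low_band_difference_db(freqs, db_a, db_b, f_hi=200.0):
    """Mean level difference (a minus b, in dB) over bins between 0 and f_hi Hz."""
    mask = (freqs > 0) & (freqs < f_hi)
    return float(np.mean(db_a[mask] - db_b[mask]))

# Synthetic stand-ins: the "noise" carries relatively more energy at 125 Hz.
fs = 16000
t = np.arange(2 * fs) / fs
noise_like = np.sin(2 * np.pi * 125 * t) + np.sin(2 * np.pi * 1000 * t)
speech_like = 0.1 * np.sin(2 * np.pi * 125 * t) + np.sin(2 * np.pi * 1000 * t)
f, noise_db = ltas_db(noise_like, fs)
_, speech_db = ltas_db(speech_like, fs)
print(round(low_band_difference_db(f, noise_db, speech_db), 1))  # positive: noise dominates below 200 Hz
```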

Another potential explanation for the better speech understanding in noise with a higher LF CI cutoff (> 188 Hz) is a closer approximation to the underlying spiral ganglion frequency map. On average, Nucleus Contour Advance electrodes (i.e., perimodiolar CI24RCA, CI24RE(CA), or CI512) are inserted to approximately 370 degrees (Boyer et al. 2015; Landsberger et al. 2015; Wanna et al. 2015), and CI422 (or CI522) slim straight electrodes are inserted to approximately 360 to 440 degrees (Franke-Trieger et al. 2015; Mukherjee et al. 2012). Based on the standard spiral ganglion (SG) frequency map, angular insertion depths ranging from 360 to 440 degrees correspond to the 700- to 900-Hz place (Stakhovskaya et al. 2007). Increasing the LF CI cutoff to 313 or 438 Hz (from the default LF CI setting of 188 Hz) therefore brings the CI frequency allocation for the most apical electrode into closer alignment with the underlying SG place map (Fitzgerald et al. 2013; Landsberger et al. 2015). On the other hand, if a closer match between the apical electrode passband and the SG frequency map yielded higher outcomes, we would expect the highest speech-understanding scores for the Rx and/or gap (Rx + 125 Hz) conditions, which was not the case. Nevertheless, this topic deserves further investigation with a larger population.
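The alignment argument can be illustrated numerically. This sketch is only a toy: it linearly interpolates between the two reference points stated in the text, pairing 360 degrees with about 900 Hz and 440 degrees with about 700 Hz because deeper insertion reaches more apical, lower-frequency places, and it treats the lower edge of the apical electrode’s filter as that electrode’s frequency. Neither simplification comes from the source or from the Stakhovskaya et al. (2007) map itself, and the names are hypothetical.

```python
import math

# Two anchors read from the text (360-440 degrees span roughly the 700- to 900-Hz
# SG place). Deeper insertion reaches lower-frequency places, so 360 deg pairs with
# ~900 Hz and 440 deg with ~700 Hz. Linear interpolation between them is an assumption.
ANCHORS = ((360.0, 900.0), (440.0, 700.0))

def sg_place_hz(angle_deg):
    """Approximate SG place frequency (Hz) for an angular insertion depth (degrees)."""
    (a0, f0), (a1, f1) = ANCHORS
    if not a0 <= angle_deg <= a1:
        raise ValueError("only defined between the two anchor angles")
    return f0 + (angle_deg - a0) * (f1 - f0) / (a1 - a0)

def mismatch_octaves(place_hz, lower_edge_hz):
    """Octaves between the apical electrode's place and the lower edge of its filter."""
    return math.log2(place_hz / lower_edge_hz)

place = sg_place_hz(370.0)  # approximate mean Contour Advance insertion depth cited in the text
for cutoff in (188, 313, 438):
    print(cutoff, round(mismatch_octaves(place, cutoff), 2))
```

Under these assumptions the mismatch shrinks from a little over two octaves at 188 Hz to about one octave at 438 Hz, which is the direction of the argument in the text; the absolute values depend entirely on the toy map.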

An alternative, or possibly complementary, explanation for why a higher LF CI cutoff may yield better outcomes relates to the mechanisms driving bimodal and/or bilateral-aided EAS benefit in background noise. Two primary theories of bimodal benefit are segregation and glimpsing. The segregation theory holds that cues in the low-frequency acoustic stimulus, particularly F0 periodicity, provide voice-pitch information that allows the listener to better compare the electric and acoustic stimuli and thereby separate the target speech from the generally aperiodic distracter(s) (Chang et al. 2006; Kong et al. 2005; Qin et al. 2006; Zhang et al. 2010). The glimpsing theory is based on the fact that the spectro-temporal SNR varies over time, so that either acoustic or electric cues from the target signal can be “glimpsed” during spectro-temporal dips (Brown et al. 2009; Kong et al. 2007; Li et al. 2008; Sheffield and Gifford 2014). Studies have yielded results supporting both mechanisms; as such, segregation and glimpsing may contribute differentially to bimodal benefit depending on the listening environment. In the current experiment, raising the lower frequency cutoff of the CI passband may have reduced the perceptual distraction caused by physical overlap of the electric and acoustic stimuli. This may have provided a cleaner signal for the lower frequency acoustic cues that facilitate segregation and/or glimpsing. This may be particularly true for the bilateral-aided EAS condition, in which the listener has access to bilateral low-frequency acoustic stimulation and thus, in theory, may be better able to take advantage of spectro-temporal dips and possibly binaural summation of F0 for segregation benefit.
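The glimpsing account can be made concrete with a common metric: the proportion of time-frequency cells in which the target’s local SNR exceeds a small criterion. This sketch assumes separately available target and masker power spectrograms and a 3-dB criterion (both assumptions, not taken from the source); the optional frequency floor shows how one might count only cells inside a CI passband above a given LF cutoff. The function name and toy arrays are hypothetical.

```python
import numpy as np

def glimpse_proportion(speech_power, noise_power, freqs_hz=None, f_min_hz=None,
                       criterion_db=3.0):
    """Fraction of time-frequency cells whose local SNR exceeds criterion_db.

    speech_power and noise_power are (frequency x time) power spectrograms of the
    target and masker computed separately; both must be strictly positive.
    If freqs_hz and f_min_hz are given, only rows with frequency >= f_min_hz count.
    """
    s = np.asarray(speech_power, dtype=float)
    m = np.asarray(noise_power, dtype=float)
    if s.shape != m.shape:
        raise ValueError("spectrograms must have the same shape")
    local_snr_db = 10.0 * np.log10(s / m)
    if freqs_hz is not None and f_min_hz is not None:
        local_snr_db = local_snr_db[np.asarray(freqs_hz) >= f_min_hz]  # keep passband rows
    return float(np.mean(local_snr_db > criterion_db))

# Toy 2 x 2 spectrograms (rows: 100 Hz and 500 Hz; columns: two time frames).
speech = [[10.0, 1.0], [1.0, 10.0]]
noise = [[1.0, 1.0], [1.0, 1.0]]
print(glimpse_proportion(speech, noise))                                     # 0.5
print(glimpse_proportion(speech, noise, freqs_hz=[100, 500], f_min_hz=313))  # 0.5
```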

Hearing preservation provides significant benefit for speech understanding in noise

The third primary finding, significant benefit from acoustic hearing preservation in the implanted ear, is consistent with previous reports in the literature; however, the magnitude of the benefit, ranging from 3.1 to 3.5 dB for discrete and relative EAS boundaries and 10 percentage points collapsed across EAS boundary frequency, was somewhat greater than previously reported (Dunn et al. 2010; Gifford et al. 2010, 2013). When the individual “best” EAS boundary for the bilateral-aided EAS condition was compared with the full-bandwidth bimodal condition (which would be considered standard of care for a bimodal fitting), the EAS benefit was even greater: 5.6 dB and 17.7 percentage points for the adaptive and fixed SNR conditions, respectively. Thus previous reports may have underestimated the benefit of preserved acoustic hearing in the implanted ear, as it is likely that not all individuals’ EAS fittings had been optimized. This point is of high clinical relevance, as it could influence clinical decision-making regarding candidacy for hybrid/EAS devices and whether to provide acoustic amplification for CI recipients with hearing preservation. It may also motivate CI programs to routinely assess air- and bone-conduction audiometric thresholds in the implanted ear following surgery.

Limitations

There are limitations associated with this study that may preclude generalization of the current dataset. First, as mentioned above, the speech and noise spectra were not perfectly matched (see Figure 5). Though we believe this to be a strength of the experimental design (i.e., using actual restaurant recordings and multiple male and female talkers), it remains a caveat that may preclude generalization of these data to other types of noise and different talker characteristics. This caveat is particularly important for clinical programming and recommendations, given that the particular speech and noise spectra may have driven the observed pattern of results. Thus, when counseling patients, clinicians should note that an LF CI cutoff of 313 Hz, 438 Hz, or closer to the point at which the audiogram reaches 70 dB HL may be useful in noisy restaurant environments in which speech and noise spectra do not perfectly match, but that this may not generalize to other listening environments. Further investigation is needed. Second, SNR-NR was enabled in all programs because it is a default setting in the Custom Sound software; hence, we wanted to assess how most patients would perform in realistic environments with a typical processor setting. It was not our intention to investigate the effect of SNR-NR on speech understanding in semi-diffuse noise, so we cannot generalize these results to patients whose programs do not use SNR-NR. Further, SNR-NR does not affect the signal processing for the acoustic component. Further investigation will therefore be required to examine the differential effects and interactions of the SNR-NR setting on the acoustic component and sound processor for speech understanding in noise and perceived listening difficulty.

In a similar vein, there may also have been an interaction between SNR-NR and SNR, particularly for the adaptive SRT data shown in Figure 4, because the operation of noise reduction technologies changes across SNR levels. We do not have definitive evidence as to whether the SNRs in the tested range (Figure 4) fell within the range in which noise reduction technologies such as SNR-NR are less effective, which is generally true for lower (poorer) SNRs (Mauger et al. 2014). Given the adaptive nature of the SRT procedure (Figure 4), any effect of SNR-NR, particularly for the bilateral best-aided condition, may have been understated, since the bilateral best-aided SRTs were obtained at lower (poorer) SNRs. Though this may complicate our interpretation, we would assume that, if anything, the presence of SNR-NR would only have limited the reported benefit of bilateral acoustic hearing. To date, one study has published preliminary results relevant to this topic (Dawson et al. 2011). In their ‘Party Noise’ condition, which is most similar to the R-SPACE™ restaurant noise used in the current study, they demonstrated a significant inverse correlation between degree of SNR-NR improvement and baseline performance. Because we did not assess performance with programs without SNR-NR, it is not possible to quantify the improvement from SNR-NR or its effect across SNR in the current study; however, based on previous research, it is probable that our poorest performers, with higher baseline SRTs (in dB SNR), received greater benefit from SNR-NR. Further research is warranted to fully understand the interaction between SNR-NR and EAS settings such as those examined here.

Third, all participants in the current study were recipients of a conventional electrode. In other words, none had an electrode specifically designed for use in an EAS configuration, such as the Hybrid-L24, Hybrid S8 or S12, or the MED-EL FLEX 24 (previously known as FLEXeas). Thus the current results may not generalize to recipients of a shorter electrode, for whom the electrode-to-neural frequency mismatch is expected to be larger and who may have lower (i.e., better) audiometric thresholds across a broader range of low frequencies. It will therefore be important to investigate the effect of LF CI cutoff both in larger populations of long-electrode recipients and in recipients of shorter, hybrid electrodes. Finally, the results of the current study may not generalize to patients who have EAS in a single ear without acoustic hearing in the non-implanted ear. It would be of interest to further investigate the effect of LF CI cutoff on EAS overlap in situations in which patients do not have bilateral acoustic hearing.

SUMMARY AND CONCLUSIONS

We are seeing more patients in the clinic who have preserved acoustic hearing following cochlear implantation with both long, conventional electrodes and shorter hybrid/EAS electrodes. Although we have observed significant benefit from hearing preservation with current CI systems, little attention has been paid to optimizing HA and CI parameters to maximize EAS benefit. The current study investigated the effect of the lower frequency CI boundary (LF CI cutoff) on speech understanding and subjective ratings of listening difficulty for recipients of long, conventional electrodes. From the current dataset, we can conclude the following:

Acoustic hearing preservation in the implanted ear provides significant benefit for speech understanding in complex listening environments.

Previous clinical software recommendations for setting the EAS boundary, corresponding to the frequency at which audiometric thresholds reach 90 dB HL, generally yielded neither the highest level of speech understanding nor the least listening difficulty.

The optimum LF CI cutoff was generally closer to the frequency at which the audiogram reached 70 dB HL, a level that previous reports in the HA literature have identified as the point beyond which amplification provides diminishing returns.

Full CI BW yielded neither the highest level of performance nor the lowest ratings of listening difficulty for the CI-alone, bimodal, or bilateral-aided EAS conditions. This may be related to the spectral characteristics of the noise, the frequency mismatch between the apical electrode frequency allocation and the underlying SG frequency map, and/or the mechanisms underlying bimodal and EAS benefit. LF CI cutoffs of 313 or 438 Hz generally provided close to maximum performance in both the bilateral-aided EAS condition and the bimodal hearing configuration.

Some spectral overlap between the electric and acoustic modalities yielded the highest outcomes with respect to both speech understanding and rated listening difficulty.

Previous reports describing EAS benefit may have underestimated its magnitude, because participants may not have had optimized EAS parameters.

This report examined only one manipulation of EAS parameters; further research is needed to examine the effects of acoustic amplification in conjunction with the EAS boundary for electrical stimulation.
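The conclusions above contrast two audiometric criteria for placing the LF CI cutoff: the frequency at which thresholds reach 90 dB HL (the previous software recommendation) and the frequency at which they reach 70 dB HL (closer to the observed optimum). The sketch below computes either crossing frequency from an audiogram. It is a minimal illustration under stated assumptions: the audiogram values are hypothetical rather than study data, thresholds between measured frequencies are interpolated linearly on an octave (log2-frequency) axis, and `crossing_frequency` is an illustrative name, not part of any fitting software.

```python
import math

def crossing_frequency(audiogram, criterion_db_hl):
    """Lowest frequency (Hz) at which the audiogram reaches criterion_db_hl.

    audiogram: (frequency_hz, threshold_db_hl) pairs sorted by frequency.
    Between measured frequencies, thresholds are interpolated linearly on a
    log2-frequency (octave) axis; this interpolation rule is an assumption.
    Returns None if no threshold reaches the criterion.
    """
    f0, t0 = audiogram[0]
    if t0 >= criterion_db_hl:
        return float(f0)
    for f1, t1 in audiogram[1:]:
        if t1 >= criterion_db_hl:
            # t0 < criterion <= t1, so t1 > t0 and the division is safe.
            frac = (criterion_db_hl - t0) / (t1 - t0)
            octaves = frac * (math.log2(f1) - math.log2(f0))
            return f0 * 2.0 ** octaves
        f0, t0 = f1, t1
    return None


# Hypothetical sloping audiogram (not study data).
audiogram = [(125, 30), (250, 45), (500, 65), (1000, 85), (2000, 105)]
f70 = crossing_frequency(audiogram, 70)   # about 595 Hz
f90 = crossing_frequency(audiogram, 90)   # about 1189 Hz
```

For this hypothetical audiogram, the 70-dB HL crossing lies one octave below the 90-dB HL crossing, so a cutoff placed near the 70-dB HL point moves the electric boundary lower and widens the overlap with the aided acoustic range.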

Supplementary Material

Acknowledgments

The research reported here was supported by grant R01 DC009404 from the National Institute on Deafness and Other Communication Disorders (NIDCD) and Cochlear Americas. Portions of this data set were presented at the 14th Symposium on Cochlear Implants in Children in Nashville, TN, December 11–13, 2014, and at the 14th International Conference on Cochlear Implants and Other Implantable Technologies in Toronto, Ontario, Canada, May 11–14, 2016. This study was approved by the Vanderbilt University Institutional Review Board (#101509). René H. Gifford is on the audiology advisory boards for Advanced Bionics and Cochlear Americas and was previously a member of the audiology advisory board for MED-EL. We thank the three anonymous reviewers as well as the associate editor, Dr. Joshua Bernstein, for their thorough and constructive critiques of this manuscript.

Footnotes

Both the Hybrid S8 (Gantz et al., 2009) and S12 (Gantz et al., 2010) are investigational devices not commercially approved for use in the U.S.

The clinician can set the LF CI cutoff, which is the boundary in the frequency range where electric processing begins and, in the usual case, where acoustic processing ends. Custom Sound automatically determines this acoustic-to-electric crossover frequency from the unaided audiogram entered in the acoustics screen, so acoustic bands are disabled when the hearing loss reaches a given threshold. Starting from the highest frequency, the prescription function automatically disables bands with a hearing loss ≥ 90 dB until it reaches a band with a hearing loss < 90 dB, although the audiologist can still re-enable these bands. The software then sets the default CI boundary just below the resulting upper acoustic edge frequency, with as little overlap as possible. As in the present study, the lower CI frequency boundary can be modified.
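The default rule described in this footnote can be sketched as a short procedure. This is a minimal paraphrase of the footnote, not the Custom Sound implementation. It assumes, for simplicity, that each audiogram entry stands in for one acoustic band, that the band frequency serves as the upper acoustic edge, and that "just below" means the highest selectable cutoff strictly below that edge. The cutoff list, audiogram values, and the name `default_crossover` are hypothetical.

```python
def default_crossover(audiogram, candidate_cutoffs_hz, disable_db_hl=90.0):
    """Sketch of the default crossover rule paraphrased in the footnote.

    audiogram: (band_frequency_hz, unaided_threshold_db_hl) pairs sorted by
        frequency; each entry stands in for one acoustic band (a simplification).
    candidate_cutoffs_hz: hypothetical list of selectable LF CI cutoffs.
    Returns (enabled_bands, acoustic_upper_edge_hz, lf_ci_cutoff_hz).
    """
    enabled = list(audiogram)
    # Starting from the highest frequency, disable bands with loss >= criterion
    # until the first band with loss < criterion is found.
    while enabled and enabled[-1][1] >= disable_db_hl:
        enabled.pop()
    if not enabled:
        return [], None, None              # no acoustic bands remain enabled
    upper_edge = enabled[-1][0]            # highest enabled acoustic band
    # "Just below" the upper edge: the highest candidate strictly below it,
    # which keeps electric/acoustic overlap as small as the candidates allow.
    below = [c for c in candidate_cutoffs_hz if c < upper_edge]
    cutoff = max(below) if below else None
    return enabled, upper_edge, cutoff


# Hypothetical audiogram and cutoff list (illustrative values only).
audiogram = [(125, 35), (250, 45), (500, 70), (750, 85),
             (1000, 95), (1500, 100), (2000, 110)]
cutoffs = [188, 313, 438, 563, 688, 813, 938]
bands, edge, cutoff = default_crossover(audiogram, cutoffs)
# edge == 750 and cutoff == 688 with these inputs
```

With these hypothetical inputs, the default places the LF CI cutoff at 688 Hz, just below the 750-Hz acoustic edge. A lower cutoff such as 438 Hz, nearer the frequency where this audiogram reaches 70 dB HL, would widen the region of electric and acoustic overlap.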

References

- Amos NE, Humes LE. Contribution of high frequencies to speech recognition in quiet and noise in listeners with varying degrees of high-frequency sensorineural hearing loss. Journal of Speech Language and Hearing Research. 2007;50:819–834. doi: 10.1044/1092-4388(2007/057).

- Arnolder C, Helbig S, Wagenblast J, et al. Electric acoustic stimulation in patients with postlingual severe high-frequency hearing loss: Clinical experience. Advances in Oto-Rhino-Laryngology. 2010;67:116–124. doi: 10.1159/000262603.

- Assmann P, Summerfield Q. The Perception of Speech Under Adverse Conditions. In: Greenberg S, Ainsworth WA, Fay RR, editors. Speech processing in the auditory system. New York: Springer New York; 2004. pp. 231–308.

- Baer T, Moore BCJ, Kluk K. Effects of low pass filtering on the intelligibility of speech in noise for people with and without dead regions at high frequencies. Journal of the Acoustical Society of America. 2002;112:1133–1144. doi: 10.1121/1.1498853.

- Boike K, Souza P. Effect of compression ratio on speech recognition and speech-quality ratings with wide dynamic range compression amplification. Journal of Speech Language and Hearing Research. 2000;43:456–468. doi: 10.1044/jslhr.4302.456.

- Boyer E, Karkas A, Attye A, et al. Scalar Localization by Cone-Beam Computed Tomography of Cochlear Implant Carriers: A Comparative Study Between Straight and Perimodiolar Precurved Electrode Arrays. Otology & Neurotology. 2015;36:422–429. doi: 10.1097/MAO.0000000000000705.

- Brown CA, Bacon SP. Low-frequency speech cues and simulated electric-acoustic hearing. Journal of the Acoustical Society of America. 2009;125:1658–1665. doi: 10.1121/1.3068441.

- Büchner A, Schüssler M, Battmer RD, et al. Impact of low-frequency hearing. Audiology & Neurotology. 2009;14:8–13. doi: 10.1159/000206490.

- Chan JC, Freed DJ, Vermiglio AJ, et al. Evaluation of binaural functions in bilateral cochlear implant users. International Journal of Audiology. 2008;47:296–310. doi: 10.1080/14992020802075407.

- Chang JE, Bai JY, Zeng FG. Unintelligible low-frequency sound enhances simulated cochlear-implant speech recognition in noise. IEEE Transactions on Biomedical Engineering. 2006;53:2598–2601. doi: 10.1109/TBME.2006.883793.

- Ching TY, Dillon H, Byrne D. Speech recognition of hearing-impaired listeners: predictions from audibility and the limited role of high-frequency amplification. Journal of the Acoustical Society of America. 1998;103:1128–1140. doi: 10.1121/1.421224.

- Ching TY, Incerti P, Hill M. Binaural benefits for adults who use hearing aids and cochlear implants in opposite ears. Ear and Hearing. 2004;25:9–21. doi: 10.1097/01.AUD.0000111261.84611.C8.

- Chung K, Killion MC, Christensen LA. Ranking hearing aid input-output functions for understanding low-, conversational-, and high-level speech in multitalker babble. Journal of Speech Language and Hearing Research. 2007;50:304–322. doi: 10.1044/1092-4388(2007/022).

- Dawson PW, Mauger SJ, Hersbach AA. Clinical evaluation of signal-to-noise ratio-based noise reduction in Nucleus® cochlear implant recipients. Ear and Hearing. 2011;32:382–390. doi: 10.1097/AUD.0b013e318201c200.

- Dillon MT, Buss E, Pillsbury HC, et al. Effects of Hearing Aid Settings for Electric-Acoustic Stimulation. Journal of the American Academy of Audiology. 2014;25:133–140. doi: 10.3766/jaaa.25.2.2.

- Dorman MF, Cook S, Spahr A, et al. Factors constraining the benefit to speech understanding of combining information from low-frequency hearing and a cochlear implant. Hearing Research. 2015;322:107–111. doi: 10.1016/j.heares.2014.09.010.

- Dorman MF, Doherty K. Shifts in phonetic identification with changes in signal presentation level. Journal of the Acoustical Society of America. 1981;69:1439–1440. doi: 10.1121/1.385827.

- Dorman MF, Gifford RH. Combining acoustic and electric stimulation in the service of speech recognition. International Journal of Audiology. 2010;49:912–919. doi: 10.3109/14992027.2010.509113.

- Dorman MF, Spahr AJ, Gifford RH, et al. Current research with cochlear implants at Arizona State University. Journal of the American Academy of Audiology. 2012;23:385–395. doi: 10.3766/jaaa.23.6.2.

- Dorman MF, Spahr AJ, Loiselle L, et al. Localization and speech understanding by a patient with bilateral cochlear implants and bilateral hearing preservation. Ear and Hearing. 2013;34:9–17. doi: 10.1097/AUD.0b013e318269ce70.

- Dunn CC, Perreau A, Gantz BJ, et al. Benefits of localization and speech perception with multiple noise sources in listeners with a short-electrode cochlear implant. Journal of the American Academy of Audiology. 2010;21:44–51. doi: 10.3766/jaaa.21.1.6.

- Dunn CC, Tyler RS, Witt SA. Benefit of wearing a hearing aid on the unimplanted ear in adult users of a cochlear implant. Journal of Speech Language and Hearing Research. 2005;48:668–680. doi: 10.1044/1092-4388(2005/046).

- Fitzgerald MB, Sagi E, Morbiwala TA, et al. Feasibility of Real-Time Selection of Frequency Tables in an Acoustic Simulation of a Cochlear Implant. Ear and Hearing. 2013;34:763–772. doi: 10.1097/AUD.0b013e3182967534.

- Franke-Trieger A, Mürbe D. Estimation of insertion depth angle based on cochlea diameter and linear insertion depth: a prediction tool for the CI422. European Archives of Oto-Rhino-Laryngology. 2015;272:3193–3199. doi: 10.1007/s00405-014-3352-4.

- Fraysse B, Macías AR, Sterkers O, et al. Residual hearing conservation and electroacoustic stimulation with the nucleus 24 contour advance cochlear implant. Otology & Neurotology. 2006;27:624–633. doi: 10.1097/01.mao.0000226289.04048.0f.

- Gantz BJ, Hansen MR, Turner CW, et al. Hybrid 10 clinical trial: preliminary results. Audiology & Neurotology. 2009;14:32–38. doi: 10.1159/000206493.

- Gantz BJ, Dunn CC, Walker EA, et al. Bilateral cochlear implants in infants: a new approach--Nucleus Hybrid S12 project. Otology & Neurotology. 2010;31:1300–1309. doi: 10.1097/MAO.0b013e3181f2eba1.

- Gatehouse S, Noble W. The Speech, Spatial and Qualities of Hearing Scale (SSQ). International Journal of Audiology. 2004;43:85–99. doi: 10.1080/14992020400050014.

- Gifford RH, Dorman MF, Brown C, et al. Hearing, psychophysics, and cochlear implantation: experiences of older individuals with mild sloping to profound sensory hearing loss. Journal of Hearing Science. 2012;2:9–17.

- Gifford RH, Dorman MF, Brown CA. Psychophysical properties of low-frequency hearing: implications for perceiving speech and music via electric and acoustic stimulation. Advances in Oto-Rhino-Laryngology. 2010;67:51–60. doi: 10.1159/000262596.

- Gifford RH, Dorman MF, Sheffield SW, et al. Availability of binaural cues for bilateral implant recipients and bimodal listeners with and without preserved hearing in the implanted ear. Audiology & Neurotology. 2014;19:57–71. doi: 10.1159/000355700.

- Gifford RH, Dorman MF, Skarzynski H, et al. Cochlear implantation with hearing preservation yields significant benefit for speech recognition in complex listening environments. Ear and Hearing. 2013;34:413–425. doi: 10.1097/AUD.0b013e31827e8163.

- Gifford RH, Dorman MF, Spahr AJ, et al. Hearing preservation surgery: psychophysical estimates of cochlear damage in recipients of a short electrode array. Journal of the Acoustical Society of America. 2008;124:2164–2173. doi: 10.1121/1.2967842.

- Gifford RH, Dorman MF, Spahr AJ, et al. Combined electric and contralateral acoustic hearing: word and sentence intelligibility with bimodal hearing. Journal of Speech Language and Hearing Research. 2007;50:835–843. doi: 10.1044/1092-4388(2007/058).

- Gifford RH, Grantham DW, Sheffield SW, et al. Localization and interaural time difference (ITD) thresholds for cochlear implant recipients with preserved acoustic hearing in the implanted ear. Hearing Research. 2014;312:28–37. doi: 10.1016/j.heares.2014.02.007.