Abstract

Lung cancer is a leading cause of death worldwide. Currently, in differential diagnosis of lung cancer, accurate classification of cancer types (adenocarcinoma, squamous cell carcinoma, and small cell carcinoma) is required. However, improving the accuracy and stability of diagnosis is challenging. In this study, we developed an automated classification scheme for lung cancers presented in microscopic images using a deep convolutional neural network (DCNN), which is a major deep learning technique. The DCNN used for classification consists of three convolutional layers, three pooling layers, and two fully connected layers. In the evaluation experiments, the DCNN was trained on our original database using a graphics processing unit. Microscopic images were first cropped and resampled to obtain images with a resolution of 256 × 256 pixels and, to prevent overfitting, the collected images were augmented via rotation, flipping, and filtering. The probabilities of the three types of cancer were estimated using the developed scheme, and its classification accuracy was evaluated using threefold cross validation. Approximately 71% of the images were classified correctly, which is on par with the accuracy of cytotechnologists and pathologists. Thus, the developed scheme is useful for classification of lung cancers from microscopic images.

1. Introduction

Lung cancer is a leading cause of death for both males and females worldwide [1]. Primary lung cancers are divided into two major types: small cell lung cancer and non-small cell lung cancer. Recent improvements in chemotherapy and radiation therapy [2] have resulted in the latter being further classified into adenocarcinoma, squamous cell carcinoma, and large cell carcinoma [3]. It is often difficult to differentiate adenocarcinoma from squamous cell carcinoma precisely on the basis of morphological characteristics alone, so immunohistochemical evaluation is required. For small cell carcinoma, cytodiagnosis has an advantage over histological specimens, in which the small cell cancer cells are often crushed. For a definitive and precise diagnosis, cytological evaluation and histopathological diagnosis, which are independent techniques, must be used together. These cancer cells show many morphological varieties. Computer-aided diagnosis (CAD) can be a useful tool for avoiding misclassification. Among the four major types of carcinoma, large cell carcinoma is the easiest to detect because of its severe atypism. We therefore concentrate on classification of the other three types (adenocarcinoma, squamous cell carcinoma, and small cell carcinoma), which are sometimes confused with each other in cytological specimens.

CAD provides a computerized output as a “second opinion” to support a pathologist's diagnosis and helps clinical technologists and pathologists to evaluate malignancies accurately. In this study, we focused on automated classification of cancer types using microscopic images for cytology.

Various studies that apply CAD methods to pathological images have been conducted [4–7]. Barker et al. [5] proposed an automated classification method for brain tumors in whole-slide digital pathology images. Ojansivu et al. [6] investigated automated classification of breast cancer from histopathological images. Ficsor et al. [7] developed a method for automated classification of inflammation in colon histological sections based on digital microscopy. However, to the best of our knowledge, no method has been developed to classify lung cancer types from cytological images.

Deep learning is well known to give better performance than conventional image classification techniques [8, 9]. For example, Krizhevsky et al. [8] won the 2012 ImageNet Large-Scale Visual Recognition Challenge (ILSVRC) using a deep convolutional neural network (DCNN) to classify high-resolution images. In addition, many research groups have investigated the application of DCNNs to medical images [10–13].

Various CAD methods have been proposed for pathological images using deep learning techniques. For example, Ciresan et al. developed a system that uses convolutional neural networks for mitosis counting in primary breast cancer grading [14]. Wang et al. combined handcrafted features and deep convolutional neural networks for mitosis detection [15]. Ertosun and Rubin proposed an automated system for grading gliomas using deep learning [16]. Xu et al. developed a deep convolutional neural network that segments and classifies epithelial and stromal regions in histopathological images [17]. Litjens et al. investigated the effect of deep learning for histopathological examination and verified that its performance was excellent in prostate cancer identification and breast cancer metastasis detection [18].

To our knowledge, DCNNs have not been applied to cytological images for lung cancer classification. In this study, we developed an automated classification scheme for lung cancers in microscopic images using a DCNN.

2. Materials and Method

2.1. Image Dataset

Seventy-six (76) cases of cancer cells were collected by exfoliative or interventional cytology under bronchoscopy or CT-guided fine needle aspiration cytology. They consisted of 40 cases of adenocarcinoma, 20 cases of squamous cell carcinoma, and 16 cases of small cell carcinoma. Final diagnosis was made in all cases via a combination of histopathological and immunohistochemical diagnosis. Specifically, biopsy tissues, collected simultaneously with the cytology specimens, were fixed in 10% formalin, dehydrated, and embedded in paraffin. For some cases, 3 μm tissue sections were subjected to immunohistochemical analysis. Cancer lesions were judged as adenocarcinoma if TTF-1 and/or napsin A were positive and as squamous cell carcinoma if p40 and/or cytokeratin 5/6 were positive. Positivity of neuroendocrine markers including chromogranin A, synaptophysin, and CD56 was suggestive of small cell carcinoma.

The cytological specimens were prepared with a liquid-based cytology (LBC) system using the BD SurePath liquid-based Pap Test (Becton Dickinson, Durham, NC, USA) and were stained using the Papanicolaou method. Using a digital still camera (DP70, Olympus, Tokyo, Japan) attached to a microscope (BX51, Olympus) with a ×40 objective lens, 82 images of adenocarcinoma, 125 images of squamous cell carcinoma, and 91 images of small cell carcinoma were collected in JPEG format. The initial matrix size of each JPEG image was 2040 × 1536 pixels.

Subsequently, square images of 768 × 768 pixels were cropped from each original image so that the cropped regions did not overlap. Finally, each cropped image was resized to 256 × 256 pixels.
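The cropping and resizing step can be illustrated with a short NumPy sketch. It is only one possible reading of the description: the regular grid anchored at the top-left corner, the block-averaging downsampling, and the names `crop_boxes` and `downsample` are assumptions introduced here, since the text does not state how the crop positions or the interpolation method were chosen.

```python
import numpy as np

PATCH = 768   # crop size in pixels (from the text)
FACTOR = 3    # 768 / 256, the downsampling factor

def crop_boxes(width, height, patch=PATCH):
    """Return (left, top) corners of a grid of non-overlapping patches."""
    return [(x, y)
            for y in range(0, height - patch + 1, patch)
            for x in range(0, width - patch + 1, patch)]

def downsample(patch_img, factor=FACTOR):
    """Shrink an (H, W, C) patch by averaging factor x factor pixel blocks.

    Block averaging is an assumption; the text only says "resized".
    """
    h, w, c = patch_img.shape
    blocks = patch_img.reshape(h // factor, factor, w // factor, factor, c)
    return blocks.mean(axis=(1, 3))

# Stand-in for one 2040 x 1536 colour JPEG (rows, columns, channels).
image = np.zeros((1536, 2040, 3), dtype=np.float32)
boxes = crop_boxes(2040, 1536)
patches = [downsample(image[y:y + PATCH, x:x + PATCH]) for x, y in boxes]
print(len(patches), patches[0].shape)
```

With this grid, at most four non-overlapping 768 × 768 regions fit into a 2040 × 1536 image; the remaining border pixels are discarded.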

This study was approved by an institutional review board, and patient agreements were obtained under the condition that all data were anonymized (number HM16-155).

2.2. Data Augmentation

Training of a DCNN requires that a sufficient amount of training data be available. If only a small amount of training data is used, overfitting may result. To prevent overfitting owing to the limited number of images, training data were augmented by image manipulation [8, 13]. The images obtained by microscope are direction-invariant and the sharpness of the target cell in each image varies according to the position of the focal plane of the microscope. Therefore, we performed data augmentation by rotating, inverting, and filtering the original image.

The rotation pitch for the image was determined such that the number of augmented images was the same for the three cancer classes. In addition, the images were flipped, resulting in the final number of images being twice that of the original. For filtering, a Gaussian filter (standard deviation of the Gaussian kernel = 3 pixels) and a convolutional edge enhancement filter with a center weight of 5.4 and a weight of −0.55 for each of the eight surrounding pixels were applied to the images.
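As an illustration of the edge enhancement and flipping steps, the following NumPy sketch builds the 3 × 3 kernel from the weights given above. The border handling (replicated edge pixels), the left-right flip direction, and the helper names `filter3x3` and `flip_pair` are assumptions for the sake of the example. Note that the weights sum to 5.4 − 8 × 0.55 = 1, so the filter leaves uniform regions unchanged and only amplifies local differences.

```python
import numpy as np

# Edge enhancement kernel from the text: centre 5.4, eight neighbours -0.55.
EDGE_KERNEL = np.full((3, 3), -0.55)
EDGE_KERNEL[1, 1] = 5.4

def filter3x3(channel, kernel):
    """Apply a 3 x 3 kernel to one 2-D channel, replicating border pixels."""
    padded = np.pad(channel, 1, mode="edge")
    h, w = channel.shape
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

def flip_pair(image):
    """Return the image and its left-right mirror (doubles the image count)."""
    return [image, image[:, ::-1]]

flat = np.full((8, 8), 100.0)          # a uniform test patch
enhanced = filter3x3(flat, EDGE_KERNEL)
print(EDGE_KERNEL.sum(), enhanced.min(), enhanced.max())
```

Because the kernel is symmetric, correlation and convolution give the same result here.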

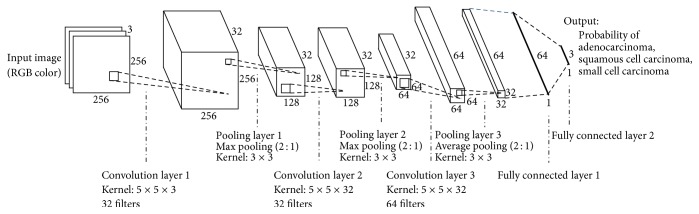

2.3. Network Architecture

The architecture of the DCNN used for cancer-type classification is shown in Figure 1. It consists of three convolution layers, three pooling layers, and two fully connected layers. Color microscopic images are given to the input layer of the DCNN. The filter size, number of filters, and stride for each layer are specified in Figure 1. For example, convolution layer 1 uses 32 filters with a 5 × 5 × 3 kernel, resulting in a feature map of 256 × 256 × 32; pooling layer 1 performs max pooling, outputting the maximum value in a 3 × 3 window at every second pixel (stride of 2), which reduces the feature map to 128 × 128 × 32. Each convolution layer is followed by a rectified linear unit (ReLU). After the three convolution layers and three pooling layers, there are two fully connected layers forming a multilayer perceptron. In the last layer, the probabilities of the cancer types (adenocarcinoma, squamous cell carcinoma, and small cell carcinoma) are obtained using a softmax function. During training, we employed the dropout method (dropout rate = 50% for the fully connected layers) to prevent overfitting.

Figure 1.

Architecture of the deep convolutional neural network used for cancer-type classification.
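The feature-map sizes quoted for layer 1 can be checked with the usual output-size formulas. The sketch below uses floor rounding for convolution and ceil rounding for pooling, which is how Caffe computes layer output sizes; the convolution padding of 2 and stride of 1 are assumptions, chosen because they keep the 256-pixel width reported in the text. The function names are illustrative only.

```python
import math

def conv_out(size, kernel, stride=1, pad=0):
    """Output width of a convolution layer (floor rounding, as in Caffe)."""
    return (size + 2 * pad - kernel) // stride + 1

def pool_out(size, kernel, stride, pad=0):
    """Output width of a pooling layer (ceil rounding, as in Caffe)."""
    return math.ceil((size + 2 * pad - kernel) / stride) + 1

# Layer 1 as described in the text: 5 x 5 kernel, 32 filters, 256 x 256 input.
# pad=2 and stride=1 are assumptions chosen so that the width stays at 256.
conv1 = conv_out(256, kernel=5, stride=1, pad=2)
pool1 = pool_out(conv1, kernel=3, stride=2)
print((conv1, conv1, 32), (pool1, pool1, 32))
```

Under these assumptions the sketch reproduces the 256 × 256 × 32 and 128 × 128 × 32 feature maps stated for convolution layer 1 and pooling layer 1.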

The DCNN was trained using the dedicated training program bundled in the Caffe package [19] on Ubuntu 16.04 and accelerated by a graphics processing unit (NVIDIA GeForce GTX TITAN X with 12 GB of memory). The number of epochs and training time were 60,000 and 8 hours, respectively.

3. Results

For classification of the three cancer types, DCNN was trained and evaluated using augmented data and original data, respectively. Its classification performance was evaluated via threefold cross validation. In this process, 298 images were randomly divided into three groups. However, images taken from the same specimen belonged to the same group.
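The constraint that all images from one specimen stay in the same group can be sketched as a specimen-level split. This is a minimal illustration, not the procedure actually used: the round-robin assignment after shuffling, the fixed seed, and the toy file names and specimen identifiers are all hypothetical.

```python
import random
from collections import defaultdict

def grouped_folds(specimen_of, n_folds=3, seed=0):
    """Assign images to folds so that each specimen lands in exactly one fold.

    specimen_of maps an image name to the specimen it was taken from.
    """
    specimens = sorted(set(specimen_of.values()))
    random.Random(seed).shuffle(specimens)
    # Deal the shuffled specimens out to the folds in turn.
    fold_of_specimen = {s: i % n_folds for i, s in enumerate(specimens)}
    folds = defaultdict(list)
    for image, specimen in specimen_of.items():
        folds[fold_of_specimen[specimen]].append(image)
    return [folds[i] for i in range(n_folds)]

# Hypothetical toy data: 6 specimens with 2 images each.
toy = {f"img_{s}_{k}.jpg": f"case_{s}" for s in range(6) for k in range(2)}
folds = grouped_folds(toy)
print([len(f) for f in folds])
```

Splitting at the specimen level avoids evaluating the network on images that come from the same specimen as images it was trained on.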

The original number of images in each dataset is listed in Table 1. By data augmentation, the number of images for each class was unified to approximately 5000.

Table 1.

Number of images in each dataset for cross validation.

| Cancer type | Set 1 (original) | Set 1 (augmented) | Set 2 (original) | Set 2 (augmented) | Set 3 (original) | Set 3 (augmented) |
|---|---|---|---|---|---|---|
| Adenocarcinoma | 28 | 5280 | 28 | 5184 | 26 | 5040 |
| Squamous cell carcinoma | 42 | 5478 | 37 | 5220 | 46 | 5310 |
| Small cell carcinoma | 26 | 5070 | 33 | 5280 | 32 | 5214 |
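The balancing effect of the augmentation can be read off Table 1 by dividing each augmented count by the corresponding original count. The sketch below does only this arithmetic on the published numbers; it does not attempt to reconstruct the rotation pitch itself, and the function name `expansion_factors` is introduced here for illustration.

```python
# (original, augmented) image counts per cross-validation set, from Table 1.
TABLE_1 = {
    "adenocarcinoma": [(28, 5280), (28, 5184), (26, 5040)],
    "squamous cell carcinoma": [(42, 5478), (37, 5220), (46, 5310)],
    "small cell carcinoma": [(26, 5070), (33, 5280), (32, 5214)],
}

def expansion_factors(table):
    """Ratio of augmented to original images for every class and set."""
    return {name: [aug / orig for orig, aug in sets]
            for name, sets in table.items()}

factors = expansion_factors(TABLE_1)
totals = {name: sum(orig for orig, _ in sets) for name, sets in TABLE_1.items()}
for name, values in factors.items():
    print(name, totals[name], [round(v) for v in values])
```

Within each set, the class with the fewest original images receives the largest expansion factor, which is what brings all classes to roughly 5000 images.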

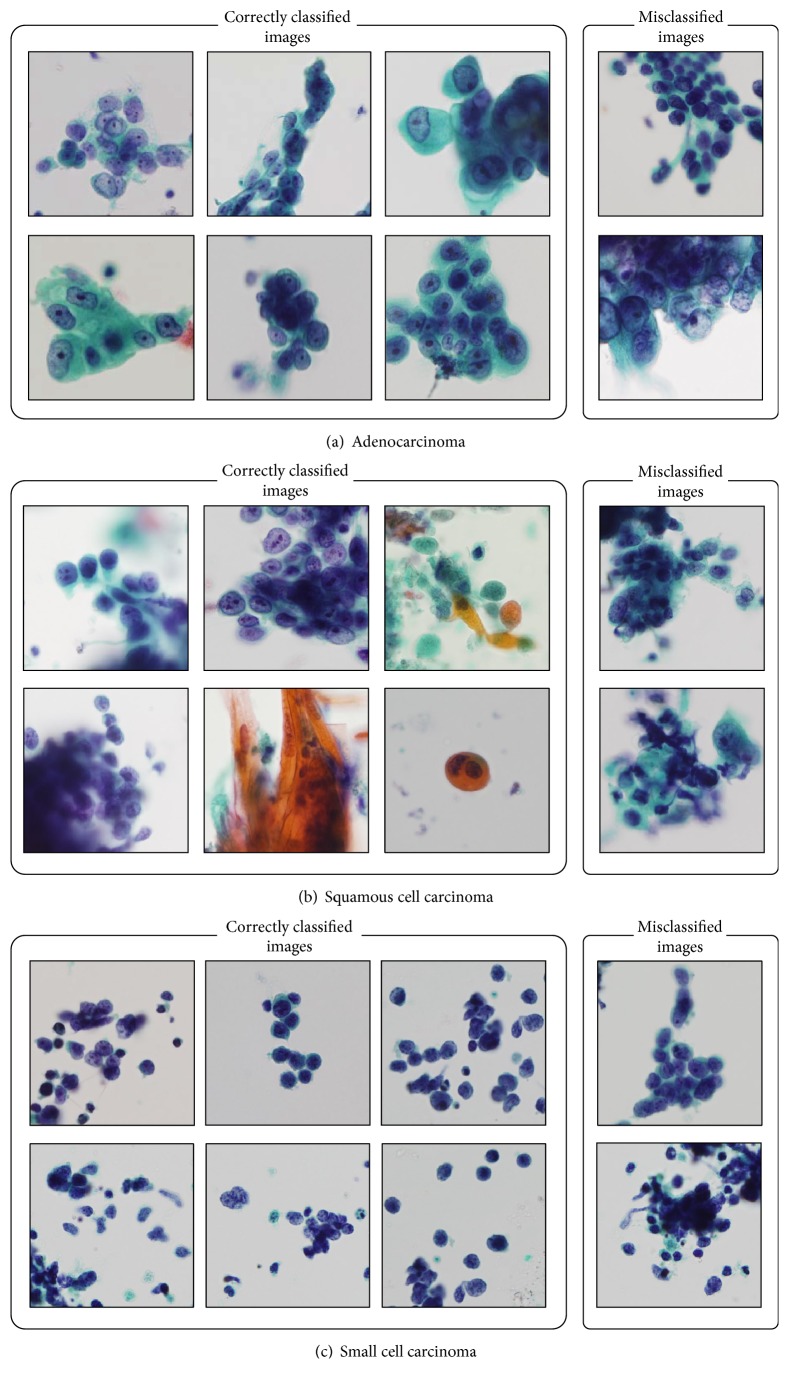

Figure 2 shows sample images of correctly classified and misclassified cancer types obtained using the data augmentation method. The classification confusion matrix is shown in Table 2. It can be seen that squamous cell carcinoma was often mistaken for adenocarcinoma.

Figure 2.

Sample images of correctly classified and misclassified carcinoma.

Table 2.

Confusion matrix of classification results.

| Actual type (rows) / predicted type (columns) | Adenocarcinoma | Squamous cell carcinoma | Small cell carcinoma |
|---|---|---|---|
| Adenocarcinoma | 73 (89.0%) | 8 (9.8%) | 1 (1.2%) |
| Squamous cell carcinoma | 35 (28.0%) | 75 (60.0%) | 15 (12.0%) |
| Small cell carcinoma | 7 (7.7%) | 20 (22.0%) | 64 (70.3%) |
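The per-class and total accuracies follow directly from the counts in Table 2. The short sketch below recomputes them, treating each row as the actual class, which is consistent with the row sums of 82, 125, and 91 images; the function names are illustrative.

```python
# Rows: actual class; columns: predicted class (counts from Table 2).
CLASSES = ["adenocarcinoma", "squamous cell carcinoma", "small cell carcinoma"]
CONFUSION = [
    [73, 8, 1],
    [35, 75, 15],
    [7, 20, 64],
]

def per_class_accuracy(matrix):
    """Percentage of each actual class that was predicted correctly."""
    return [100.0 * row[i] / sum(row) for i, row in enumerate(matrix)]

def overall_accuracy(matrix):
    """Percentage of all images that lie on the diagonal of the matrix."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return 100.0 * correct / total

for name, acc in zip(CLASSES, per_class_accuracy(CONFUSION)):
    print(f"{name}: {acc:.1f}%")
print(f"total: {overall_accuracy(CONFUSION):.1f}%")
```

This gives 89.0%, 60.0%, and 70.3% for the three classes and 212/298 = 71.1% overall.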

Table 3 shows the classification accuracy for the original and the augmented images, respectively. In the results obtained using the augmented images, the classification accuracies of adenocarcinoma, squamous cell carcinoma, and small cell carcinoma were 89.0%, 60.0%, and 70.3%, respectively; the total correct rate was 71.1%. Furthermore, applying augmentation (rotation, flipping, and filtering) raised the total correct rate from 62.1% to 71.1%.

Table 3.

Classification accuracies of the DCNN trained with original and augmented images.

| Cancer type | Accuracy with original images [%] | Accuracy with augmented images [%] |
|---|---|---|
| Adenocarcinoma | 73.2 | 89.0 |
| Squamous cell carcinoma | 44.8 | 60.0 |
| Small cell carcinoma | 75.8 | 70.3 |
| Total | 62.1 | 71.1 |

4. Discussion

Using the DCNN, approximately 71% of the lung cancer images were classified correctly. Most of the correctly classified images have typical cell morphology and arrangement. In traditional cytology, pathologists classify specimens as small cell carcinoma or non-small cell carcinoma. For this two-class task, the classification accuracy is 255/298 = 85.6%, which is considered to be sufficient. Of the three types of lung cancer, classification accuracy was highest for adenocarcinoma and lowest for squamous cell carcinoma. This result may be related to the variation of the images used for training. The number of cases of squamous cell carcinoma (20) was smaller than that of adenocarcinoma (40), and data augmentation improved the classification accuracy of both types by approximately 15 percentage points (Table 3). We plan to increase the number of cases of adenocarcinoma and squamous cell carcinoma used in future studies. For small cell carcinoma, classification accuracy was the highest of the three types without data augmentation (75.8%) and decreased slightly, to 70.3%, with augmentation. Small cell carcinoma has distinctive features: it has scant cytoplasm and a small nucleus. Therefore, its image characteristics could be learned by the DCNN from even a small number of images.
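The 255/298 figure for the small cell versus non-small cell distinction can be reproduced by collapsing the confusion matrix in Table 2 into two classes. The following sketch does this arithmetic; the function name `small_vs_non_small` is introduced here for illustration.

```python
CONFUSION = [
    [73, 8, 1],     # actual adenocarcinoma
    [35, 75, 15],   # actual squamous cell carcinoma
    [7, 20, 64],    # actual small cell carcinoma
]
SMALL = 2  # index of small cell carcinoma in rows and columns

def small_vs_non_small(matrix, small=SMALL):
    """Count images whose small / non-small label is predicted correctly."""
    correct = 0
    for actual, row in enumerate(matrix):
        for predicted, count in enumerate(row):
            # Correct if actual and predicted fall on the same side.
            if (actual == small) == (predicted == small):
                correct += count
    total = sum(sum(row) for row in matrix)
    return correct, total

correct, total = small_vs_non_small(CONFUSION)
print(f"{correct}/{total} = {100.0 * correct / total:.1f}%")
```

Confusions between adenocarcinoma and squamous cell carcinoma count as correct in this two-class view, which is why the rate is higher than the three-class accuracy.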

The classification accuracy of 71.1% is comparable to that of a cytotechnologist or pathologist [20, 21]. It is noteworthy that the DCNN is able to learn cell morphology and the placement of cancer cells solely from images, without prior knowledge or experience of biology and pathology. The methodology using CNNs has already been established. However, to the best of our knowledge, no work has been carried out on automated classification of lung cancer types using cytological images. Our experimental results indicate that our overall accuracy rate is more than 70%. This is a satisfactory result because cytological diagnosis of lung cancer is a difficult task for pathologists. Therefore, our method will be useful in assisting with cytological examination in lung cancer diagnosis.

In future studies, we plan to analyze the image features that DCNN focuses on during classification in order to reveal the classification mechanism in detail. We classified lung cancer types in whole images with multiple cells. However, it is also possible to perform feature analysis and classification by focusing on individual cells. Therefore, we hope to develop a method to comprehensively classify cells and arrays of cells.

5. Conclusion

In this study, we developed an automated classification scheme for lung cancers in microscopic images using a DCNN. Evaluation results showed that approximately 71% of the images were classified correctly. These results indicate that the DCNN is useful for classification of lung cancer in cytodiagnosis.

Acknowledgments

This research was supported in part by a Grant-in-Aid for Scientific Research on Innovative Areas (Multidisciplinary Computational Anatomy, no. 26108005), Ministry of Education, Culture, Sports, Science and Technology, Japan.

Contributor Information

Tetsuya Tsukamoto, Email: ttsukamt@fujita-hu.ac.jp.

Yuka Kiriyama, Email: ykiri@fujita-hu.ac.jp.

Conflicts of Interest

The authors have no conflicts of interest to report.

Authors' Contributions

Atsushi Teramoto and Tetsuya Tsukamoto contributed equally to this work.

References

- 1. American Cancer Society, Cancer Facts and Figures 2015.

- 2. Baas P., Belderbos J. S. A., Senan S., et al. Concurrent chemotherapy (carboplatin, paclitaxel, etoposide) and involved-field radiotherapy in limited stage small cell lung cancer: a Dutch multicenter phase II study. British Journal of Cancer. 2006;94(5):625–630. doi: 10.1038/sj.bjc.6602979.

- 3. Travis W. D., Brambilla E., Burke A., Marx A., Nicholson A. G., editors. WHO Classification of Tumours of the Lung, Pleura, Thymus, and Heart. Lyon, France: IARC; 2015.

- 4. He L., Long L. R., Antani S., Thoma G. R. Histology image analysis for carcinoma detection and grading. Computer Methods and Programs in Biomedicine. 2012;107(3):538–556. doi: 10.1016/j.cmpb.2011.12.007.

- 5. Barker J., Hoogi A., Depeursinge A., Rubin D. L. Automated classification of brain tumor type in whole-slide digital pathology images using local representative tiles. Medical Image Analysis. 2016;30:60–71. doi: 10.1016/j.media.2015.12.002.

- 6. Ojansivu V., Linder N., Rahtu E., et al. Automated classification of breast cancer morphology in histopathological images. Diagnostic Pathology. 2013;8(1):S29.

- 7. Ficsor L., Varga V. S., Tagscherer A., Tulassay Z., Molnar B. Automated classification of inflammation in colon histological sections based on digital microscopy and advanced image analysis. Cytometry Part A. 2008;73A(3):230–237. doi: 10.1002/cyto.a.20527.

- 8. Krizhevsky A., Sutskever I., Hinton G. E. ImageNet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems. 2012;25:1106–1114.

- 9. LeCun Y., Bengio Y., Hinton G. Deep learning. Nature. 2015;521(7553):436–444. doi: 10.1038/nature14539.

- 10. Anthimopoulos M., Christodoulidis S., Ebner L., Christe A., Mougiakakou S. Lung pattern classification for interstitial lung diseases using a deep convolutional neural network. IEEE Transactions on Medical Imaging. 2016;35(5):1207–1216. doi: 10.1109/TMI.2016.2535865.

- 11. Cha K. H., Hadjiiski L., Samala R. K., Chan H.-P., Caoili E. M., Cohan R. H. Urinary bladder segmentation in CT urography using deep-learning convolutional neural network and level sets. Medical Physics. 2016;43(4):1882–1886. doi: 10.1118/1.4944498.

- 12. Teramoto A., Fujita H., Yamamuro O., Tamaki T. Automated detection of pulmonary nodules in PET/CT images: ensemble false-positive reduction using a convolutional neural network technique. Medical Physics. 2016;43(6):2821–2827. doi: 10.1118/1.4948498.

- 13. Miki Y., Muramatsu C., Hayashi T., et al. Classification of teeth in cone-beam CT using deep convolutional neural network. Computers in Biology and Medicine. 2017;80:24–29. doi: 10.1016/j.compbiomed.2016.11.003.

- 14. Ciresan D. C., Giusti A., Gambardella L. M., Schmidhuber J. Mitosis detection in breast cancer histology images with deep neural networks. In: Medical Image Computing and Computer-Assisted Intervention – MICCAI 2013. Lecture Notes in Computer Science, vol. 8150. Berlin, Germany: Springer; 2013. pp. 411–418.

- 15. Wang H., Cruz-Roa A., Basavanhally A., et al. Mitosis detection in breast cancer pathology images by combining handcrafted and convolutional neural network features. Journal of Medical Imaging. 2014;1(3):034003. doi: 10.1117/1.JMI.1.3.034003.

- 16. Ertosun M. G., Rubin D. L. Automated grading of gliomas using deep learning in digital pathology images: a modular approach with ensemble of convolutional neural networks. Proceedings of the AMIA Annual Symposium; 2015; pp. 1899–1908.

- 17. Xu J., Luo X., Wang G., Gilmore H., Madabhushi A. A deep convolutional neural network for segmenting and classifying epithelial and stromal regions in histopathological images. Neurocomputing. 2016;191:214–223. doi: 10.1016/j.neucom.2016.01.034.

- 18. Litjens G., Sánchez C. I., Timofeeva N., et al. Deep learning as a tool for increased accuracy and efficiency of histopathological diagnosis. Scientific Reports. 2016;6:26286. doi: 10.1038/srep26286.

- 19. Jia Y., Shelhamer E., Donahue J., et al. Caffe: convolutional architecture for fast feature embedding. Proceedings of the ACM International Conference on Multimedia; November 2014; Orlando, FL, USA. ACM; pp. 675–678.

- 20. Nizzoli R., Tiseo M., Gelsomino F., et al. Accuracy of fine needle aspiration cytology in the pathological typing of non-small cell lung cancer. Journal of Thoracic Oncology. 2011;6(3):489–493. doi: 10.1097/JTO.0b013e31820b86cb.

- 21. Sigel C. S., Moreira A. L., Travis W. D., et al. Subtyping of non-small cell lung carcinoma: a comparison of small biopsy and cytology specimens. Journal of Thoracic Oncology. 2011;6(11):1849–1856. doi: 10.1097/JTO.0b013e318227142d.