Abstract

There is a need to use selected pictures with pure emotion as stimulation or treatment media for basic and clinical research. Pictures from the widely used International Affective Picture System (IAPS) contain rich emotions, but to date no study has clearly stated that an emotion is exclusively expressed in its putative IAPS picture. We hypothesize that the IAPS images contain at least pure vectors of disgust, erotism (or erotica), fear, happiness, sadness and neutral emotions. Accordingly, we selected 108 IAPS images, each with a specific emotion, and invited 219 male and 274 female university students to rate only the intensity of the emotion conveyed in each picture. Their answers were analyzed using exploratory and confirmatory factor analysis. Four first-order factors manifested as disgust-fear, happiness-sadness, erotism, and neutral. Later, ten second-order sub-factors manifested as mutilation-disgust, vomit-disgust, food-disgust, violence-fear, happiness, sadness, couple-erotism, female-erotism, male-erotism, and neutral. Fifty-nine pictures for the ten sub-factors, which had established good model-fit indices, satisfactory sub-factor internal reliabilities, and prominent gender differences in the picture intensity ratings, were ultimately retained. We have thus selected a series of pure-emotion IAPS pictures, which together displayed satisfactory convergent and discriminant structure validities. We did not intend to evaluate all IAPS items, but instead selected some pictures conveying pure emotions, which might help both basic and clinical research in the future.

Keywords: Psychology

1. Introduction

Emotion is any conscious experience characterized by intense mental activity and a high degree of (dis)pleasure (Schacter, 2011), which consists of interactions among subjective and objective factors, and is mediated by neural and hormonal systems. Additionally, it is also connected with cognitive processes, widespread physiological changes and sequential adaptive-behaviors (Kleinginna and Kleinginna, 1981; Ekman and Cordaro, 2011). Emotion research is also fundamental for the emotional and behavioral disorders and their therapy (Dolan, 2002; Leppänen, 2006; Greenberg, 2014). Several material pools of emotional vectors or elicitors have been put forward for emotion research, for instance, the International Affective Picture System, developed for visual research (IAPS, Lang et al., 2008); the International Affective Digitized Sounds, developed for auditory research (Bradley and Lang, 2007); and the film clips developed for audiovisual presentations (Gross and Levenson, 1995). However, a crucial question is, when regarding processing or recognizing a putative emotion selected from a material pool, can an emotion be specifically elicited by its putative vector (picture, sound, or film clip), or can an emotion be specifically recognized from its putative vector? If the question is answered, reliable results would be obtained when the basic and clinical studies use pure emotions as stimulation- or treatment-media.

The IAPS database was developed nearly 20 years ago as a set of emotional pictures, and is one of the most widely used visual stimulation pools worldwide. When studying discrete or categorical emotions, most investigators have had a tendency to choose the IAPS pictures subjectively according to their positive or negative arousal levels (Lane et al., 1997a, b; Gouizi et al., 2011; Hot and Sequeira, 2013). Therefore, a potential bias might exist due to the mismatch between the selected emotion vector and the expected emotion itself. For example, a cluster of negatively arousing IAPS pictures showing burned victims or blood that were classified as highly threatening, were found to elicit more disgust than fear (Mikels et al., 2005; Libkuman et al., 2007). Previous studies have also shown that when evaluating the Japanese and Caucasian Facial Expressions of Emotion (JACFEE), fear is often mistaken for surprise, and disgust for anger (Ekman et al., 1987; Ekman, 1993).

Nevertheless, there have been some trials on the nominal classifications of emotion exhibited in the IAPS pictures. Mikels et al. (2005) tagged some color slides from IAPS with negative words like disgust, fear, and sadness, as well as with positive words like amusement, contentment, and excitement. They also found that the remaining pictures displayed more than one emotion, which were classified as blended or undifferentiated. Libkuman et al. (2007) tagged some IAPS pictures with terms like happiness, surprise, sadness, anger, disgust, and fear. Recently, a subset of 64 fear-evoking IAPS pictures was identified in a German study (Barke et al., 2012); some pictures that clearly reflected “human or animal threat”, such as those denoted as #1033 (snake), #1070 (snake), #1321 (bear), and #6242 (gang) were not rated as fear-evoking, but instead as anger-evoking images. The nominal classifications suffered from the fatal shortcoming that a picture with expected emotion often contained other emotions. For example, Davis et al. (1995) asked participants to rate the intensity of seven emotions on a given IAPS picture, and found that the mean categorical response profiles over the emotions were multimodal for most pictures. The perplexities of emotion judging were evident especially in distinguishing fear versus anger, fear versus disgust, and fear versus surprise (Barke et al., 2012; van Hooff et al., 2013).

Considering that the nominal (categorical) way of classifying emotions frequently suffers from overlaps between emotions (Ekman and Cordaro, 2011) and that the nominal classification relies on the cultural information embedded in language (Ekman et al., 1987), some scholars have tested the scalar (dimensional) way of delineating emotions. Factor analysis, which is widely used in questionnaire development, might help achieve this endeavor of emotion separation. For example, in personality research, scholars have applied factor analysis to evaluate personality structures (Comrey, 1988; O’Connor, 2002). Similarly, in emotion studies, investigators have used factor analysis to distinguish the JACFEE facial expressions of emotion by using only the intensity perceived by the participants (Huang et al., 2009). The scale of perceived intensity is comparable to the Likert scale, which is often used in psychological assessment, and the intensity rating spares participants the dilemma to which nominal classification sometimes leads due to the overlap between emotions (Ekman et al., 1987; Ekman, 1993; Mikels et al., 2005; Libkuman et al., 2007). Thus, the scaling of the perceived intensity provides a new perspective for delineating emotions. Accordingly, it is reasonable to use similar methods to delineate the IAPS emotional dimensions, and to select pure-emotion images based on their loadings on the target emotion. The aim of the present study was not to evaluate every IAPS picture by defining its emotional characteristics, but rather to find some pictures in the IAPS pool that were highly loaded with a specific (single) and salient emotion.

Regarding previous studies of IAPS (Libkuman et al., 2007; Jacob et al., 2011; Barke et al., 2012; van Hooff et al., 2013) and JACFEE (Ekman, 1992; Ekman and Cordaro, 2011), we thought that the emotions conveyed in the IAPS pictures would at least include disgust, erotism (or erotica), fear, happiness, and sadness. Since contempt, surprise and anger are more social-driven and human related, they are more frequently found in humans, such as those exhibited in the JACFEE pool, rather than in pictures displaying diverse-environmental surroundings as those in the IAPS. In addition, previous emotion studies (Bradley et al., 2001; Biele and Grabowska, 2006) have shown that women responded more intensively to the negative and aversive emotions than men did. Additionally, gender differences might be more pronounced in erotic situations. Therefore, based on the intensity ratings of the emotion conveyed in IAPS pictures, we hypothesize that: a) the emotional dimensions include disgust, erotism, fear, happiness, sadness and neutral; b) the emotional dimensions are different in intensity rating between the two genders. Accordingly, in this study, we will conduct exploratory and confirmatory factor analysis (with a structural equation modeling), to identify pictures specifically conveying single, distinctive emotions.

2. Methods

2.1. Participants

Five hundred university students majoring in education, engineering, mathematics, mechanics, or medicine were invited to participate in our study. Seven of them had depressive moods and did not complete the experiment, thus their data were removed from further analysis. Statistical analysis was performed on data from the remaining 493 participants (219 men, mean age: 19.58 years with 1.89 S.D., age range: 17–30 years; 274 women, mean age: 19.74 ± 2.13, age range: 17–33). No significant age difference between genders (t = -0.86, p = 0.39) was found. All participants were heterosexually oriented, without somatic or psychiatric disorders, and they were asked to abstain from drugs and alcohol for at least 72 h prior to the test. The study was approved by the Ethics Committee of Zhejiang University School of Public Health (ZGL201409-1), and written informed consent was obtained from all participants.

2.2. Materials

Seven judges (1 PhD holder, and 3 PhD’s and 3 Master’s degree candidates) were asked to select pictures conveying a single emotion (including neutral) out of 716 standardized color photographs of the International Affective Picture System (IAPS, Lang et al., 2008). The judges could consult the original valence and arousal data of those pictures as reported in Lang et al. (2008). Each picture was nevertheless rated independently by six of the judges (the 3 PhD’s and 3 Master’s degree candidates) on whether it conveyed a meaningful single emotion. If the picture received four or more “yes” votes, it was retained. If a picture received three “yes” and three “no” votes, the seventh judge (the PhD holder) made the final decision on whether it should be retained or disregarded. Finally, 108 pictures were selected and evaluated more exhaustively.
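
The voting rule described above can be sketched as a small function. This is only an illustration: the function name and the boolean encoding of votes are hypothetical, and the sketch assumes that a picture with two or fewer “yes” votes was discarded, which the text implies but does not state.

```python
def retain_picture(votes, tie_breaker=None):
    """Apply the six-judge rule: >= 4 "yes" keeps a picture, 3-3 goes to judge 7.

    votes: six booleans, one per independent judge (True means "yes").
    tie_breaker: decision of the seventh judge, consulted only on a 3-3 split.
    """
    if len(votes) != 6:
        raise ValueError("expected exactly six votes")
    yes = sum(bool(v) for v in votes)
    if yes >= 4:
        return True
    if yes == 3:
        if tie_breaker is None:
            raise ValueError("a 3-3 split needs the seventh judge's decision")
        return bool(tie_breaker)
    # Assumption: two or fewer "yes" votes means the picture is dropped.
    return False


if __name__ == "__main__":
    print(retain_picture([True, True, True, True, False, False]))
    print(retain_picture([True, True, True, False, False, False], tie_breaker=False))
```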

2.3. Procedure

Pictures in either the landscape or portrait orientation were presented in a random order by the Microsoft PowerPoint software through a computer monitor (1080 × 720 ppi resolution). The presentation was conducted in a quiet room, where participants sat comfortably in front of the screen, with their visual angles set at about 9° × 7°. After entering the room, they were first asked to relax for 5 minutes before rating the intensity of the perceived emotion conveyed in each picture, using a 9-point (0–8) scale, i.e., from neutral (0), weak (1), moderate (4) to strong (8). The curtains of the room were closed in order to avoid disturbance by excessive light. Each picture was presented for 5 seconds, only once. The presentation order of the images was random, and no more than three successive images belonged to the same putative emotion. Participants were told not to name the emotion conveyed in the picture, but to rate (note down) its intensity only.
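
One way to obtain a random order in which no more than three successive images share a putative emotion is rejection sampling: shuffle, check the longest run, and reshuffle if needed. The sketch below is an assumption about how such an order could be produced, not a description of the procedure actually used; the function names and the example labels are hypothetical.

```python
import random


def longest_run(labels):
    """Length of the longest stretch of identical consecutive labels."""
    best = run = 0
    prev = object()  # sentinel that never equals a real label
    for lab in labels:
        run = run + 1 if lab == prev else 1
        prev = lab
        best = max(best, run)
    return best


def constrained_order(pictures, max_run=3, seed=None, max_tries=10_000):
    """Shuffle (picture_id, emotion) pairs until no emotion repeats > max_run times."""
    rng = random.Random(seed)
    order = list(pictures)
    for _ in range(max_tries):
        rng.shuffle(order)
        if longest_run([emotion for _, emotion in order]) <= max_run:
            return order
    raise RuntimeError("no valid order found; relax max_run or raise max_tries")


if __name__ == "__main__":
    # Hypothetical picture identifiers and putative labels, for illustration only.
    demo = [(f"{emo}-{i}", emo) for emo in ("disgust", "fear", "neutral") for i in range(6)]
    for pic_id, emotion in constrained_order(demo, seed=0):
        print(pic_id, emotion)
```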

2.4. Statistical analysis

The intensity ratings of the 108 selected IAPS pictures were subjected to a principal axis factor analysis first, using the Predictive Analytics Software Statistics, Release Version 18.0.0 (SPSS Inc., 2009, Chicago, IL). Factor loadings were rotated orthogonally using the varimax normalized methods, and the factors (emotion clusters) and their items (pictures) were determined. The factors were treated as the first-level ones. Afterwards, based on these first-level ones (as latent factors), the fit of the structural equation modeling was evaluated by the confirmatory factor analysis using the Analysis of Moment Structures (AMOS) software version 17.0 (AMOS Development Corp., 2008, Crawfordville, FL, USA). We used the following parameters to identify the model fit: the χ2/df, the goodness-of-fit statistic, the global fit index, the adjusted goodness-of-fit index, the comparative fit index, the Tucker-Lewis index, and the root mean square error of approximation. Items which were loaded less heavily on a target factor, or cross-loaded heavily on more than one factor were removed from subsequent analysis one-by-one. The procedure was continued until no further picture needed to be removed.
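
The item-removal criterion can be illustrated with a single screening pass over a rotated loading matrix. This is a simplified sketch: the study removed items one by one and re-ran the analysis after each removal, whereas the code below only flags items in one pass. The function name, the cross-loading cut-off of 0.40, and the example loadings are all hypothetical.

```python
def screen_items(loadings, target_min=0.55, cross_max=0.40):
    """Return {picture_id: target_factor_index} for items passing both criteria.

    loadings: dict mapping a picture id to its list of factor loadings.
    target_min: the largest absolute loading must exceed this value.
    cross_max: every other absolute loading must stay below this value
               (an assumed cut-off; the text does not report one).
    """
    kept = {}
    for pic, row in loadings.items():
        abs_row = [abs(x) for x in row]
        target = max(range(len(abs_row)), key=abs_row.__getitem__)
        others = [v for i, v in enumerate(abs_row) if i != target]
        if abs_row[target] > target_min and all(v < cross_max for v in others):
            kept[pic] = target
    return kept


if __name__ == "__main__":
    # Made-up loadings on three factors for four made-up items.
    demo = {
        "pic_a": [0.72, 0.10, 0.05],   # clean target loading: kept
        "pic_b": [0.50, 0.45, 0.02],   # target loading too low: dropped
        "pic_c": [0.20, 0.66, 0.41],   # cross-loads on factor 3: dropped
        "pic_d": [0.05, -0.61, 0.12],  # sign is ignored: kept
    }
    print(screen_items(demo))
```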

Next, for each first-level factor, the retained items were once again analyzed using principal axis factor analysis. In this instance, the sub-factors, which were treated as second-level ones (facets or emotions), and their items were determined. Items which loaded less heavily on a target sub-factor, or cross-loaded heavily on more than one sub-factor, were removed from subsequent analysis one-by-one (the loading/cross-loading criteria might vary across sub-factors). The procedure was continued until no further picture needed to be removed. For each first-level factor, the fit of the structural equation modeling (sub-factors extracted as latent factors) was also calculated. Finally, with all items retained, based on the second-level sub-factors (emotions), the general model fit was assessed. As a further step, the internal reliabilities (the Cronbach alphas) of the extracted sub-factors (emotions) were calculated. The gender differences in the intensity ratings of each sub-factor and of each picture were then submitted to the Student t test. A P value below 0.05 was considered to be significant.
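
For readers unfamiliar with the internal reliability measure, Cronbach's alpha for a sub-factor with k pictures is k/(k − 1) × (1 − Σ item variances / variance of the summed score). The sketch below implements that standard formula with the Python standard library; the function name and the toy ratings are hypothetical, and the study itself computed alpha with statistical software.

```python
from statistics import variance


def cronbach_alpha(ratings):
    """Cronbach's alpha for a participants-by-items table of ratings.

    ratings: list of rows, one per participant, each holding k item ratings.
    """
    k = len(ratings[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item (column) across participants.
    item_vars = [variance([row[j] for row in ratings]) for j in range(k)]
    # Sample variance of the per-participant summed score.
    total_var = variance([sum(row) for row in ratings])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


if __name__ == "__main__":
    # Toy data: four participants rating three pictures on the 0-8 scale.
    toy = [[6, 7, 6], [3, 4, 2], [8, 8, 7], [5, 4, 5]]
    print(round(cronbach_alpha(toy), 3))
```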

3. Results

3.1. First-order factors

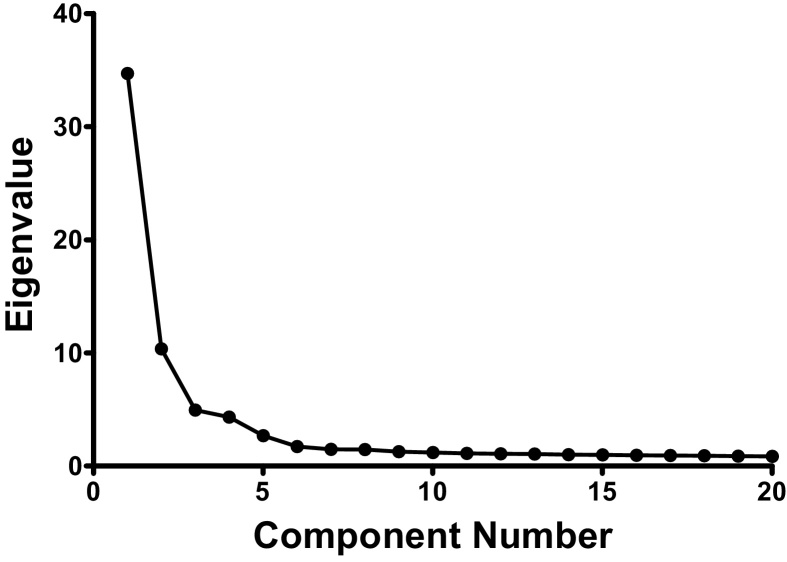

After the principal axis factor analysis of the intensity ratings of the selected 108 IAPS pictures, 14 factors emerged with eigenvalues larger than 1.0, which were 34.70, 10.35, 4.94, 4.32, 2.68, 1.72, 1.49, 1.46, 1.27, 1.20, 1.12, 1.09, 1.06 and 1.00 (Fig. 1). The first three, four, five, six, and seven factors accounted for 46.28%, 50.28%, 52.76%, 54.36% and 55.73% of the total variance, respectively. From the fifth factor onwards, the eigenvalues clearly leveled off; moreover, the structural equation modeling (Table 1) confirmed that a four-factor model was a suitable solution. Pictures with high cross-loadings were later excluded to ensure that each remaining picture had enough emotion specificity. Ultimately, 97 pictures were retained. The first factor with 34 picture items, e.g., #3010, #9320, #2811 and #7359, was a mixture of disgust and fear. The second factor with 28 items, e.g., #2045 and #2700, was a mixture of happiness and sadness. The third factor with 16 items was solely erotism, e.g., #4660, #4008 and #4520. The fourth factor with 19 items was neutral, e.g., #7175. The four-factor model based on the 97 pictures was developed through confirmatory factor analysis, resulting in fitting parameters at an acceptable level (Table 1).
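
The cumulative percentages follow from the eigenvalues: with 108 standardized items the total variance is 108, so each factor explains its eigenvalue divided by 108. The short check below, which assumes the reported values are the initial (unrotated) eigenvalues, reproduces the reported percentages to within rounding of the two-decimal eigenvalues.

```python
from itertools import accumulate

# First seven eigenvalues as reported in the text.
eigenvalues = [34.70, 10.35, 4.94, 4.32, 2.68, 1.72, 1.49]
n_items = 108  # total variance of 108 standardized picture ratings

# Running sum of eigenvalues expressed as a percentage of total variance.
cumulative_pct = [100 * s / n_items for s in accumulate(eigenvalues)]

for k, pct in enumerate(cumulative_pct, start=1):
    print(f"first {k} factor(s): {pct:.2f}% of total variance")
```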

Fig. 1.

Scree plot from the factor analysis of the intensity ratings of the 108 pictures.

Table 1.

Fitting models of first-order factors and second-order sub-factors in 493 participants.

| Factor model | χ2/df | Goodness of fit index | Adjusted goodness of fit index | Tucker-Lewis index | Comparative fit index | Root mean square error of approximation |
|---|---|---|---|---|---|---|
| First-order: all four factors | 2.25 | 0.64 | 0.63 | 0.81 | 0.82 | 0.05 |
| Second-order: four sub-factors of Factor 1 | 1.86 | 0.93 | 0.91 | 0.97 | 0.97 | 0.04 |
| Second-order: two sub-factors of Factor 2 | 2.25 | 0.95 | 0.93 | 0.97 | 0.97 | 0.05 |
| Second-order: three sub-factors of Factor 3 | 1.85 | 0.97 | 0.95 | 0.98 | 0.99 | 0.04 |
| Second-order: all ten sub-factors | 1.69 | 0.85 | 0.83 | 0.93 | 0.94 | 0.04 |
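
As a consistency check on Table 1, the root mean square error of approximation can be recovered from χ2/df and the sample size through the common point-estimate formula RMSEA = sqrt(max(χ2 − df, 0) / (df × (N − 1))), which simplifies to sqrt(max(χ2/df − 1, 0) / (N − 1)). The sketch below assumes this formula (some software divides by N rather than N − 1) and N = 493; the function name is hypothetical.

```python
import math


def rmsea_from_ratio(chi2_over_df, n):
    """RMSEA point estimate from the chi-square/df ratio and sample size n."""
    return math.sqrt(max(chi2_over_df - 1.0, 0.0) / (n - 1))


if __name__ == "__main__":
    n_participants = 493
    # chi-square/df values as listed in Table 1.
    for label, ratio in [
        ("first-order, four factors", 2.25),
        ("sub-factors of Factor 1", 1.86),
        ("sub-factors of Factor 2", 2.25),
        ("sub-factors of Factor 3", 1.85),
        ("all ten sub-factors", 1.69),
    ]:
        print(f"{label}: RMSEA = {rmsea_from_ratio(ratio, n_participants):.2f}")
```

Under these assumptions the computed values round to the RMSEA column of Table 1.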

3.2. Second-order factors

For factor 1, the intensity ratings of all 34 pictures were analyzed again using the principal axis factor analysis; 4 factors emerged with eigenvalues of 16.01, 1.89, 1.64 and 1.03, which accounted for 60.51% of the total variance. From the fifth factor onwards, there was a clear plateau trend. With loading criteria (target loading > 0.55), 23 items remained for the fitting model (Table 1). The first facet with 10 picture items was labeled mutilation-disgust, e.g., #3010. The second facet with 6 picture items became vomit-disgust, e.g., #9320. The third facet with 4 picture items was related to violence-fear (aimed gun), e.g., #2811. The fourth facet with 3 picture items became food-disgust (food with bugs), e.g., #7359.

For factor 2, the intensity ratings of all 28 pictures were analyzed again using the principal axis factor analysis; 2 factors emerged with eigenvalues of 14.22 and 1.53, which accounted for 56.25% of the total variance. From the third factor onwards, there was a clear plateau trend. With loading criteria (target loading > 0.65), 15 items remained for the fitting model (Table 1). The fifth facet with 9 picture items was about happiness, e.g., #2045 (smiling baby). The sixth facet with 6 picture items was about sadness, e.g., #2700 (teary woman).

For factor 3, the intensity ratings of all 16 pictures were analyzed again using the principal axis factor analysis; 3 factors emerged with eigenvalues of 9.14, 1.16, and 0.69, which accounted for 68.65% of the total variance. From the fourth factor onwards, there was a clear plateau trend. With loading criteria (target loading > 0.65), 12 items remained for the fitting model (Table 1). The seventh facet with 6 picture items was about heterosexual couple-erotism (naked heterosexual couple), e.g., #4660. The eighth facet with 3 picture items was about single female-erotism (naked female), e.g., #4008. The ninth facet with 3 picture items was single male-erotism (naked male), e.g., #4520.

For factor 4, the intensity ratings of all 19 pictures were analyzed again using the principal axis factor analysis; 2 factors emerged with eigenvalues of 7.87 and 1.67 respectively, which accounted for 50.18% of the total variance. Despite several kinds of factor-restriction analysis and strict structural equation modeling, no sub-factor model emerged. These neutral pictures therefore occupied a unique position and could continue to be regarded as a single isolated sub-factor. Among these pictures, nine loaded highly (≥ 0.65) on this target, e.g., #7175.

With the 59 pictures distributed among 10 sub-factors (facet level), a second-order fit model was developed. The structural equation modeling confirmed that a ten-facet model was a suitable solution (Table 1). Furthermore, the internal reliabilities of the ten sub-factors were also satisfactory (Table 2).

Table 2.

Intensity ratings (mean ± SD) of the retained 59 IAPS pictures in men (n = 219) and women (n = 274).

| Emotion | Picture identity and description | Men | Women |
|---|---|---|---|
| Mutilation-disgust (.93) | 3010 Mutilation | 6.26 ± 1.99 | 6.77 ± 1.93* |
| | 3060 Mutilation | 6.58 ± 1.74 | 6.86 ± 1.78 |
| | 3000 Mutilation | 6.54 ± 1.97 | 6.83 ± 1.88 |
| | 3266 Injury | 6.42 ± 1.87 | 6.69 ± 1.78 |
| | 3030 Mutilation | 5.65 ± 2.21 | 6.00 ± 2.08 |
| | 3051 Mutilation | 5.95 ± 2.02 | 6.45 ± 1.85* |
| | 3001 Headless Body | 5.99 ± 2.00 | 6.35 ± 2.00* |
| | 2352.2 Bloody Kiss | 6.55 ± 2.82 | 6.52 ± 1.78 |
| | 9183 Hurt Dog | 5.69 ± 1.92 | 5.99 ± 2.00 |
| | 9570 Dog | 5.69 ± 1.99 | 6.13 ± 2.00* |
| Vomit-disgust (.88) | 9320 Vomit | 4.97 ± 2.09 | 5.32 ± 2.18 |
| | 9291 Garbage | 3.48 ± 2.08 | 3.22 ± 2.08 |
| | 9326 Vomit | 5.04 ± 2.04 | 5.32 ± 2.04 |
| | 9301 Toilet | 5.70 ± 1.89 | 6.01 ± 1.92 |
| | 9322 Vomit | 5.29 ± 2.80 | 5.51 ± 2.02 |
| | 9325 Vomit | 5.80 ± 1.98 | 6.01 ± 2.01 |
| Violence-fear (.86) | 2811 Gun | 4.19 ± 2.20 | 4.21 ± 2.30 |
| | 6231 Aimed Gun | 4.06 ± 2.10 | 4.57 ± 2.26* |
| | 6260 Aimed Gun | 4.13 ± 2.27 | 4.38 ± 2.34 |
| | 6510 Attack | 4.28 ± 2.06 | 4.79 ± 2.15* |
| Food-disgust (.70) | 7359 Pie With Bug | 3.89 ± 2.08 | 4.25 ± 2.21 |
| | 1271 Roaches | 4.25 ± 2.25 | 4.60 ± 2.44 |
| | 7360 Flies On Pie | 4.02 ± 2.11 | 4.25 ± 3.46 |
| Happiness (.92) | 2045 Baby | 3.41 ± 2.12 | 3.52 ± 2.17 |
| | 2224 Boys | 2.97 ± 2.11 | 3.13 ± 1.99 |
| | 2274 Children | 2.99 ± 2.08 | 2.93 ± 2.07 |
| | 2165 Father | 3.46 ± 2.04 | 3.54 ± 2.15 |
| | 2347 Children | 3.33 ± 2.17 | 3.66 ± 2.15 |
| | 2040 Baby | 3.11 ± 2.12 | 3.11 ± 2.08 |
| | 2035 Kid | 3.11 ± 2.07 | 3.11 ± 2.03 |
| | 2154 Family | 3.45 ± 2.26 | 3.31 ± 2.10 |
| | 2345 Children | 3.21 ± 2.14 | 3.49 ± 2.11 |
| Sadness (.85) | 2700 Woman | 3.27 ± 1.87 | 3.45 ± 1.93 |
| | 2900 Crying Boy | 3.82 ± 2.03 | 4.04 ± 1.98 |
| | 2456 Crying Family | 3.41 ± 1.80 | 3.76 ± 2.00* |
| | 2310 Mother | 3.33 ± 1.90 | 3.53 ± 1.88 |
| | 2399 Woman | 2.68 ± 1.76 | 2.77 ± 1.92 |
| | 2230 Sad Face | 2.44 ± 1.83 | 3.09 ± 2.09* |
| Couple-erotism (.91) | 4660 Erotic Couple | 4.49 ± 1.91 | 4.35 ± 2.06 |
| | 4698 Erotic Couple | 4.65 ± 1.94 | 4.14 ± 2.13* |
| | 4607 Erotic Couple | 4.41 ± 1.88 | 3.93 ± 2.03* |
| | 4604 Erotic Couple | 4.49 ± 1.94 | 4.12 ± 2.11* |
| | 4670 Erotic Couple | 5.15 ± 1.95 | 4.67 ± 2.05* |
| | 4611 Erotic Couple | 4.34 ± 1.95 | 3.96 ± 2.07* |
| Female-erotism (.86) | 4008 Erotic Female | 4.67 ± 2.02 | 3.26 ± 1.95* |
| | 4085 Erotic Female | 4.84 ± 2.06 | 3.81 ± 1.99* |
| | 4225 Erotic Female | 4.29 ± 1.87 | 3.21 ± 1.99* |
| Male-erotism (.77) | 4520 Erotic Male | 2.93 ± 1.92 | 3.12 ± 1.95 |
| | 4559 Erotic Male | 3.26 ± 1.98 | 3.57 ± 2.07 |
| | 4530 Erotic Male | 3.17 ± 2.02 | 3.54 ± 2.02* |
| Neutral (.88) | 7175 Lamp | 0.80 ± 1.33 | 0.78 ± 1.35 |
| | 7003 Disk | 0.95 ± 1.52 | 0.61 ± 1.20* |
| | 7004 Spoon | 0.80 ± 1.45 | 0.74 ± 1.37 |
| | 7186 Abstract Art | 1.09 ± 1.56 | 0.88 ± 1.40 |
| | 7035 Mug | 0.87 ± 1.62 | 0.75 ± 1.44 |
| | 7010 Basket | 0.96 ± 1.50 | 0.91 ± 1.40 |
| | 7041 Baskets | 1.22 ± 1.69 | 1.01 ± 1.52 |
| | 5390 Boat | 1.42 ± 1.94 | 1.29 ± 1.90 |
| | 7052 Clothespins | 1.25 ± 1.87 | 0.86 ± 1.33* |

An asterisk (*) marks p < .05 vs. men; the emotion scale alphas are given in parentheses immediately after the emotion names.

3.3. Gender difference

Regarding gender differences in the perceived intensities of the 59 pictures, women scored higher on mutilation-disgust (t = 2.31, p = 0.02, 95% confidence interval (CI) = [0.49, 6.07]) and sadness (t = 2.12, p = 0.03, 95% CI = [0.13, 3.24]). Men, on the other hand, scored higher on couple-erotism (t = 2.58, p = 0.01, 95% CI = [0.58, 4.14]) and female-erotism (t = 7.50, p < 0.001, 95% CI = [2.56, 4.14]). Regarding gender differences in the perceived intensities of each individual picture, women scored higher on some pictures of mutilation-disgust (#3001 headless body, #3010 mutilation, #3051 mutilation, and #9570 dog), some of violence-fear (#6231 aimed gun and #6510 attack), some of sadness (#2456 crying family, and #2230 sad face), and one of a naked male (#4530). Men, on the other hand, scored higher on some pictures of a naked heterosexual couple (#4604, #4607, #4611, #4698, and #4670), and some pictures of a naked female (#4008, #4085, and #4225) (Table 2).
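
The per-picture comparisons can be approximated from the summary statistics in Table 2 with the pooled-variance (Student) t statistic. The sketch below is illustrative only: it works from rounded means and SDs rather than the raw ratings, the function name is hypothetical, and the example uses picture #4008.

```python
import math


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Student t statistic and degrees of freedom from two groups' summary stats."""
    df = n1 + n2 - 2
    # Pooled variance weights each group's variance by its degrees of freedom.
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df


if __name__ == "__main__":
    # Picture #4008 (Erotic Female): men 4.67 ± 2.02 (n = 219), women 3.26 ± 1.95 (n = 274).
    t, df = pooled_t(4.67, 2.02, 219, 3.26, 1.95, 274)
    print(f"t({df}) = {t:.2f}")
```

With 491 degrees of freedom the two-sided 5% critical value is close to 1.96, so a statistic of this size is consistent with the asterisk on that row of Table 2.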

4. Discussion

Based on intensity ratings only, through exploratory and confirmatory factor analysis, we have characterized ten domains of emotion (mutilation-disgust, vomit-disgust, food-disgust, violence-fear, happiness, sadness, heterosexual couple-erotism, single male-erotism, single female-erotism, and neutral). The 59 ultimately retained pictures displayed satisfactory convergent and discriminant validities. To the best of our knowledge, this is the first study to delineate IAPS pictures as different vectors for pure emotion.

As a first-order domain, disgust and fear were mixed together in our study, which is in line with previous reports that the two emotions are difficult to distinguish due to their close negative valences (Barrett, 1998; van Overveld et al., 2006), and to similar cerebral areas involved, i.e., the occipital, prefrontal and cingulate cortices, and the nucleus accumbens (Stark et al., 2007; Klucken et al., 2012). Although the activation of the anterior insula and the amygdala has been reported to be involved in disgust and fear, respectively, the specificity of their involvement in these emotions has not been confirmed (Calder et al., 2000; Zaki et al., 2012; reviewed in Cisler et al., 2009 and Del Casale et al., 2011).

In our daily lives, fear prepares us to escape and disgust prepares us to avoid an object or situation. In the current study, we have clearly classified some IAPS pictures into the fear and disgust domains. Indeed, fear can be aroused by a gun aimed at you (Woody and Teachman, 2000; Barke et al., 2012), while disgust can be elicited with contamination (Woody and Teachman, 2000; Teachman, 2006; reviewed in Cisler et al., 2009). Our study has additionally distinguished mutilation-disgust, vomit-disgust and food-disgust domains. As has previously been shown, mutilation elicits disgust, while blood-injection-injury elicits fear or phobia (Tolin et al., 1997; Sawchuk et al., 2002). Vomiting as a defensive behavior can be elicited by disgust to avoid contamination (reviewed in Kreibig, 2010 and Levenson, 2014), and both vomiting and contaminated food fit into the law of contagion and disgust (reviewed in Cisler et al., 2009).

As a general factor, happiness and sadness loaded together in our study, which is consistent with some previous results. For instance, a previous study has shown that sadness was confounded with happiness when people were listening to some sad music pieces (Kawakami et al., 2013). Neuroimaging evidence has also shown activation of similar brain regions, such as the thalamus and the medial prefrontal cortex when processing these two emotions (Lane et al., 1997a, b). However, our second-level analysis revealed that happiness and sadness were clearly separated from each other. Another study found evidence showing that happiness and sadness were not bipolar opposites (Rafaeli and Revelle, 2006).

The erotism-related emotions have seldom been mentioned as a discrete emotional construct, but in the current study, the IAPS erotic pictures were clearly separated into the naked female-, male-, and heterosexual couple-erotism domains. The erotism-elicitors have been used in research concerning attachment (Jacob et al., 2011) and spatial attention (Jiang et al., 2006), and in conjunction with eye-tracking (Rupp and Wallen, 2007) and neuroimaging (Paul et al., 2008) techniques. In our study, we also demonstrated gender differences in the intensity perceived with the naked pictures. As a basic instinct, men would perceive stronger emotion-intensity with naked heterosexual couple and naked female pictures, while women would perceive stronger emotion-intensity with naked male pictures (Costa et al., 2003; Nummenmaa et al., 2012).

Neutral pictures were perfectly sorted out as one factor with lower cross-loadings on other domains. These pictures were more easily recognized than the neutral faces, because the neutral pictures supplied substantial contextual information (reviewed in Wieser and Brosch, 2012). Our study also suggests that the IAPS database is a rich source of neutral pictures which can be used as qualified controls for other emotions in future studies.

However, there are some design flaws in our current study. First, we only asked participants to rate the intensity, not the valence or the dominance, of a putative emotion-loaded picture; thus, whether a valence- or a dominance-centered design could offer different cues for classifying IAPS pictures remains unresolved. Second, our study was conducted only with healthy university students; whether the findings can be generalized to clinical samples remains unclear. Third, the erotic pictures in the IAPS database only include naked males, naked females, and heterosexual couples; whether applying pictures of homosexual couples would influence the scalar-classification results remains unknown. In conclusion, using intensity ratings alone, we have successfully identified ten emotional domains from the IAPS pool. The pictures can be used in emotion-related basic and clinical studies in the future.

Declarations

Author contribution statement

Wei Wang: Conceived and designed the experiments; Wrote the paper.

Bingren Zhang, Qianqian Gao, You Xu: Performed the experiments; Analyzed and interpreted the data.

Zhicha Xu, Rongsheng Zhu, Chanchan Shen: Performed the experiments; Analyzed and interpreted the data; Wrote the paper.

Funding statement

Wei Wang was supported by the Natural Science Foundation of China (No. 91132715). Zhicha Xu was supported by the 2013 Student Research Training Program of Zhejiang University.

Competing interest statement

The authors declare no conflict of interest.

Additional information

No additional information is available for this paper.

Acknowledgements

The authors would like to thank Miss Ranqi Chen for collecting some data described in the report.

References

- Barke A., Stahl J., Kroner-Herwig B. Identifying a subset of fear-evoking pictures from the IAPS on the basis of dimensional and categorical ratings for a German sample. J. Behav. Ther. Exp. Psychiatry. 2012;43(1):565–572. doi: 10.1016/j.jbtep.2011.07.006. [DOI] [PubMed] [Google Scholar]

- Barrett L.F. Discrete emotions or dimensions? The role of valence focus and arousal focus. Cogn. Emot. 1998;12(4):579–599. [Google Scholar]

- Biele C., Grabowska A. Sex differences in perception of emotion intensity in dynamic and static facial expressions. Exp. Brain Res. 2006;171(1):1–6. doi: 10.1007/s00221-005-0254-0. [DOI] [PubMed] [Google Scholar]

- Bradley M.M., Codispoti M., Sabatinelli D., Lang P.J. Emotion and motivation II: sex differences in picture processing. Emotion. 2001;1(3):300–319. [PubMed] [Google Scholar]

- Bradley M.M., Lang P.J. University of Florida; Gainesville, Fl: 2007. The International Affective Digitized Sounds (2nd Edition; IADS-2): Affective ratings of sounds and instruction manual. Technical report B-3. [Google Scholar]

- Calder A.J., Keane J., Manes F., Antoun N., Young A.W. Impaired recognition and experience of disgust following brain injury. Nat. Neurosci. 2000;3(11):1077–1078. doi: 10.1038/80586. [DOI] [PubMed] [Google Scholar]

- Cisler J.M., Olatunji B.O., Lohr J.M. Disgust, fear, and the anxiety disorders: a critical review. Clin. Psychol. Rev. 2009;29(1):34–46. doi: 10.1016/j.cpr.2008.09.007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Comrey A.L. Factor-analytic methods of scale development in personality and clinical psychology. J. Consult Clin. Psychol. 1988;56(5):754. doi: 10.1037//0022-006x.56.5.754. [DOI] [PubMed] [Google Scholar]

- Costa M., Braun C., Birbaumer N. Gender differences in response to pictures of nudes: a magnetoencephalographic study. Biol. Psychol. 2003;63(2):129–147. doi: 10.1016/s0301-0511(03)00054-1. [DOI] [PubMed] [Google Scholar]

- Davis W.J., Rahman M.A., Smith L.J., Burns A., Senecal L., McArthur D., Halpern J.A., Perlmutter A., Sickels W., Wagner W. Properties of human affect induced by static color slides (IAPS): dimensional, categorical and electromyographic analysis. Biol. Psychol. 1995;41(3):229–253. doi: 10.1016/0301-0511(95)05141-4. [DOI] [PubMed] [Google Scholar]

- Del Casale A., Ferracuti S., Kotzalidis G.D., Rapinesi C., Serata D., Ambrosi E., Simonetti A., Serra G., Savoja V. The functional neuroanatomy of the human response to fear: a brief review. S. Afr. J. Psychiatr. 2011;17(1):3. [Google Scholar]

- Dolan R.J. Emotion, cognition, and behavior. Science. 2002;298(5596):1191–1194. doi: 10.1126/science.1076358. [DOI] [PubMed] [Google Scholar]

- Ekman P., Friesen W.V., O’sullivan M., Chan A., Diacoyanni-Tarlatzis I., Heider K., Krause R., LeCompte W.A., Pitcairn T., Ricci-Bitti P.E., Scherer K., Tomita M., Tzavas A. Universals and cultural differences in the judgments of facial expressions of emotion. J. Pers. Soc. Psychol. 1987;53(4):712–717. doi: 10.1037//0022-3514.53.4.712. [DOI] [PubMed] [Google Scholar]

- Ekman P. Facial expression and emotion. Am. Psychol. 1993;48(4):384–392. doi: 10.1037//0003-066x.48.4.384.

- Ekman P. An argument for basic emotions. Cogn. Emot. 1992;6(3-4):169–200.

- Ekman P., Cordaro D. What is meant by calling emotions basic. Emot. Rev. 2011;3(4):364–370.

- Gouizi K., Bereksi R.F., Maaoui C. Emotion recognition from physiological signals. J. Med. Eng. Technol. 2011;35(6-7):300–307. doi: 10.3109/03091902.2011.601784.

- Greenberg L. The therapeutic relationship in emotion-focused therapy. Psychotherapy (Chic) 2014;51(3):350–357. doi: 10.1037/a0037336.

- Gross J.J., Levenson R.W. Emotion elicitation using films. Cogn. Emot. 1995;9(1):87–108.

- Hot P., Sequeira H. Time course of brain activation elicited by basic emotions. Neuroreport. 2013;24(16):898–902. doi: 10.1097/WNR.0000000000000016.

- Huang J., Fan J., He W., Yu S., Yeow C., Sun G., Shen M., Chen W., Wang W. Could intensity ratings of Matsumoto and Ekman’s JACFEE pictures delineate basic emotions? A principal component analysis in Chinese university students. Pers. Individ. Dif. 2009;46(3):331–335.

- Jacob G.A., Arntz A., Domes G., Reiss N., Siep N. Positive erotic picture stimuli for emotion research in heterosexual females. Psychiatry Res. 2011;190(2-3):348–351. doi: 10.1016/j.psychres.2011.05.044.

- Jiang Y., Costello P., Fang F., Huang M., He S. A gender- and sexual orientation-dependent spatial attentional effect of invisible images. Proc. Natl. Acad. Sci. USA. 2006;103(45):17048–17052. doi: 10.1073/pnas.0605678103.

- Kawakami A., Furukawa K., Katahira K., Okanoya K. Sad music induces pleasant emotion. Front Psychol. 2013;4:311. doi: 10.3389/fpsyg.2013.00311.

- Kleinginna P.R., Kleinginna A.M. A categorized list of emotion definitions, with suggestions for a consensual definition. Motiv. Emot. 1981;5(4):345–379.

- Klucken T., Schweckendiek J., Koppe G., Merz C.J., Kagerer S., Walter B., Sammer G., Vaitl D., Stark R. Neural correlates of disgust- and fear-conditioned responses. Neuroscience. 2012;201:209–218. doi: 10.1016/j.neuroscience.2011.11.007.

- Kreibig S.D. Autonomic nervous system activity in emotion: a review. Biol. Psychol. 2010;84(3):394–421. doi: 10.1016/j.biopsycho.2010.03.010.

- Lane R.D., Reiman E.M., Ahern G.L., Schwartz G.E., Davidson R.J. Neuroanatomical correlates of happiness, sadness, and disgust. Am. J. Psychiatry. 1997;154(7):926–933. doi: 10.1176/ajp.154.7.926.

- Lane R.D., Reiman E.M., Bradley M.M., Lang P.J., Ahern G.L., Davidson R.J., Schwartz G.E. Neuroanatomical correlates of pleasant and unpleasant emotion. Neuropsychologia. 1997;35(11):1437–1444. doi: 10.1016/s0028-3932(97)00070-5.

- Lang P.J., Bradley M.M., Cuthbert B.N. University of Florida; Gainesville, FL: 2008. International affective picture system (IAPS): Affective ratings of pictures and instruction manual. Technical Report A-8.

- Leppänen J.M. Emotional information processing in mood disorders: a review of behavioral and neuroimaging findings. Curr. Opin. Psychiatry. 2006;19(1):34–39. doi: 10.1097/01.yco.0000191500.46411.00.

- Levenson R.W. The autonomic nervous system and emotion. Emot. Rev. 2014;6(2):100–112.

- Libkuman T.M., Otani H., Kern R., Viger S.G., Novak N. Multidimensional normative ratings for the International Affective Picture System. Behav. Res. Methods. 2007;39(2):326–334. doi: 10.3758/bf03193164.

- Mikels J.A., Fredrickson B.L., Larkin G.R., Lindberg C.M., Maglio S.J., Reuter-Lorenz P.A. Emotional category data on images from the International Affective Picture System. Behav. Res. Methods. 2005;37(4):626–630. doi: 10.3758/bf03192732.

- Nummenmaa L., Hietanen J.K., Santtila P., Hyönä J. Gender and visibility of sexual cues influence eye movements while viewing faces and bodies. Arch. Sex. Behav. 2012;41(6):1439–1451. doi: 10.1007/s10508-012-9911-0.

- O’Connor B.P. A quantitative review of the comprehensiveness of the five-factor model in relation to popular personality inventories. Assessment. 2002;9(2):188–203. doi: 10.1177/1073191102092010.

- Paul T., Schiffer B., Zwarg T., Krüger T.H.C., Karama S., Schedlowski M., Forsting M., Gizewski E.R. Brain response to visual sexual stimuli in heterosexual and homosexual males. Hum. Brain Mapp. 2008;29(6):726–735. doi: 10.1002/hbm.20435.

- Rafaeli E., Revelle W. A premature consensus: are happiness and sadness truly opposite affects? Motiv. Emot. 2006;30(1):1–12.

- Rupp H.A., Wallen K. Sex differences in viewing sexual stimuli: An eye-tracking study in men and women. Horm. Behav. 2007;51(4):524–533. doi: 10.1016/j.yhbeh.2007.01.008.

- Sawchuk C.N., Lohr J.M., Westendorf D.H., Meunier S.A., Tolin D.F. Emotional responding to fearful and disgusting stimuli in specific phobics. Behav. Res. Ther. 2002;40(9):1031–1046. doi: 10.1016/s0005-7967(01)00093-6.

- Schacter D.L. Second ed. Worth Publishers; 41 Madison Avenue, New York, NY 10010: 2011. Psychology; p. 310.

- Stark R., Zimmermann M., Kagerer S., Schienle A., Walter B., Weygandt M., Vaitl D. Hemodynamic brain correlates of disgust and fear ratings. Neuroimage. 2007;37(2):663–673. doi: 10.1016/j.neuroimage.2007.05.005.

- Teachman B.A. Pathological disgust: In the thoughts, not the eye, of the beholder. Anxiety Stress Coping. 2006;19(4):335–351.

- Tolin D.F., Lohr J.M., Sawchuk C.N., Lee T.C. Disgust and disgust sensitivity in blood-injection-injury and spider phobia. Behav. Res. Ther. 1997;35(10):949–953. doi: 10.1016/s0005-7967(97)00048-x.

- van Hooff J.C., Devue C., Vieweg P.E., Theeuwes J. Disgust- and not fear-evoking images hold our attention. Acta Psychol. (Amst) 2013;143(1):1–6. doi: 10.1016/j.actpsy.2013.02.001.

- van Overveld W.J.M., de Jong P.J., Peters M.L., Cavanagh K., Davey G.C.L. Disgust propensity and disgust sensitivity: Separate constructs that are differentially related to specific fears. Pers. Individ. Dif. 2006;41(7):1241–1252.

- Wieser M.J., Brosch T. Faces in context: a review and systematization of contextual influences on affective face processing. Front Psychol. 2012;3:471. doi: 10.3389/fpsyg.2012.00471.

- Woody S.R., Teachman B.A. Intersection of disgust and fear: Normative and pathological views. Clin. Psychol. Sci. Pr. 2000;7(3):291–311.

- Zaki J., Davis J.I., Ochsner K.N. Overlapping activity in anterior insula during interoception and emotional experience. Neuroimage. 2012;62(1):493–499. doi: 10.1016/j.neuroimage.2012.05.012.