
Keywords: multimodal integration, visual, tactile, simulated self-motion, distance reproduction

Abstract

In the natural world, self-motion always stimulates several different sensory modalities. Here we investigated the interplay between a visual optic flow stimulus simulating self-motion and a tactile stimulus (air flow resulting from self-motion) while human observers were engaged in a distance reproduction task. We found that adding congruent tactile information (i.e., speed of the air flow and speed of visual motion are directly proportional) to the visual information significantly improved the precision of the actively reproduced distances. This improvement, however, was smaller than predicted for an optimal integration of visual and tactile information. In contrast, incongruent tactile information (i.e., speed of the air flow and speed of visual motion are inversely proportional) did not improve subjects’ precision, indicating that incongruent tactile information and visual information were not integrated. One possible interpretation links these results to the properties of neurons in the ventral intraparietal area, which have been shown to have spatially and action-congruent receptive fields for visual and tactile stimuli.

NEW & NOTEWORTHY This study shows that tactile and visual information can be integrated to improve the estimates of the parameters of self-motion. This, however, happens only if the two sources of information are congruent, as they are in a natural environment. In contrast, an incongruent tactile stimulus is still used as a source of information about self-motion, but it is not integrated with visual information.

Estimating the parameters of self-motion is a complex process that integrates information from several different sources (for a review see Britten 2008; Cullen 2011). In the absence of visual landmarks, one important visual cue is the global optic flow, which allows humans and animals to infer the direction as well as the relative speed of self-motion (Andersen et al. 1993; Brandt et al. 1973; Gibson 1950; Lappe 2000; Warren et al. 1988). Under natural conditions, other sensory modalities contribute to the perception of self-motion as well. One obvious source of information is the vestibular system (e.g., Fetsch et al. 2009; Gu et al. 2006, 2008; Hlavacka et al. 1992; for a review see Angelaki and Cullen 2008; Cullen 2012). Another potential source of information is the auditory modality (Väljamäe et al. 2008; for a review see Väljamäe 2009). So far, only a few studies have investigated how a combination of auditory and vestibular (Kapralos et al. 2004) or auditory and visual (Knudsen and Brainard 1995; von Hopffgarten and Bremmer 2011) cues contributes to an accurate perception of the parameters of self-motion.

Another potential source of information about self-motion is the tactile domain. Under natural conditions, self-motion causes tactile stimulation from different sources. For example, self-motion often creates an air flow over the skin with a speed that is directly related to the speed of self-motion. Other potential sources of tactile stimulation are the rubbing of clothing on the body or, when moving through dense vegetation, branches and grass touching the body. The intensity and distribution of these tactile sensations are correlated with the speed and direction of self-motion. It has been shown that the presence of tactile air flow facilitates the impression of self-motion (Seno et al. 2011). While some studies have also investigated the integration of tactile information with information from other modalities (e.g., Chancel et al. 2016; Harris et al. 2017; Kaliuzhna et al. 2016), to the best of our knowledge no study so far has addressed the interplay of tactile and visual information in estimating the parameters of self-motion.

In the monkey, neurons in the medial superior temporal area (MST) and the ventral intraparietal area (VIP) have been shown to be involved in the processing of self-motion (MST: Bremmer et al. 2010; Duffy and Wurtz 1991; Lappe et al. 1996; Liu and Angelaki 2009; Tanaka et al. 1986; VIP: Bremmer et al. 2002a; Chen et al. 2011; Kaminiarz et al. 2014; Maciokas and Britten 2010). These neurons respond not only to visual flow-field stimuli but also to stimuli in other modalities that are associated with self-motion. Neurons in the dorsal part of area MST have been shown to respond to visual as well as vestibular stimulation (Bremmer et al. 1999; Chen et al. 2013; Duffy 1998; Gu et al. 2006, 2008), while neurons in area VIP respond to visual, vestibular, auditory, and tactile stimuli (Bremmer et al. 2002b; Duhamel et al. 1998; Guipponi et al. 2013; Schlack et al. 2002, 2005). Interestingly, VIP neurons that respond to both visual and tactile stimuli show congruent properties for the two modalities: their visual and tactile receptive fields occupy the same position in space, and they share the same preferred direction (Bremmer et al. 2002b). Important for the present study, functional equivalents of macaque areas MST and VIP have been identified in humans (MST: Huk et al. 2002; VIP: Bremmer et al. 2001). Accordingly, the behavioral interaction of visual and tactile self-motion stimuli, which might be difficult to test in the animal model, can readily be tested in humans. Given the above-mentioned properties of neurons in macaque areas MST and VIP, the congruency in the neuronal processing of visual and tactile information may constrain how information from the two modalities can be integrated to improve the perception of self-motion. How the perceptual system deals with incongruent information may depend on the tested stimuli and the task. In some cases, the sources of information have been described as interfering, as in the cross-modal congruency effect (Driver and Spence 1998a, 1998b; Marini et al. 2017), in which an irrelevant incongruent stimulus decreases performance. In other cases, a winner-takes-all mechanism has been proposed (e.g., Kastner and Ungerleider 2001) that uses bottom-up and top-down information to select one relevant input for further processing.

In this study we tested in human observers how the information provided by tactile stimulation and visually simulated self-motion influences the perception of the distance covered by (simulated) self-motion. We also investigated the conditions that are necessary for integration of information from the visual and the tactile modality.

METHODS

Subjects

Sixteen volunteers participated in the experiments (seven male and nine female, mean age 24.9 yr); all were naive as to the goals of the study. Eight subjects took part in the experiments using the congruent tactile stimulation condition, and the other eight in the experiments using the incongruent tactile condition. All subjects had normal or corrected-to-normal visual acuity. They gave informed, written consent before the testing and were compensated for their participation at the end of the experiment. All procedures used in this study conformed to the Declaration of Helsinki and were approved by the local ethics committee.

Apparatus

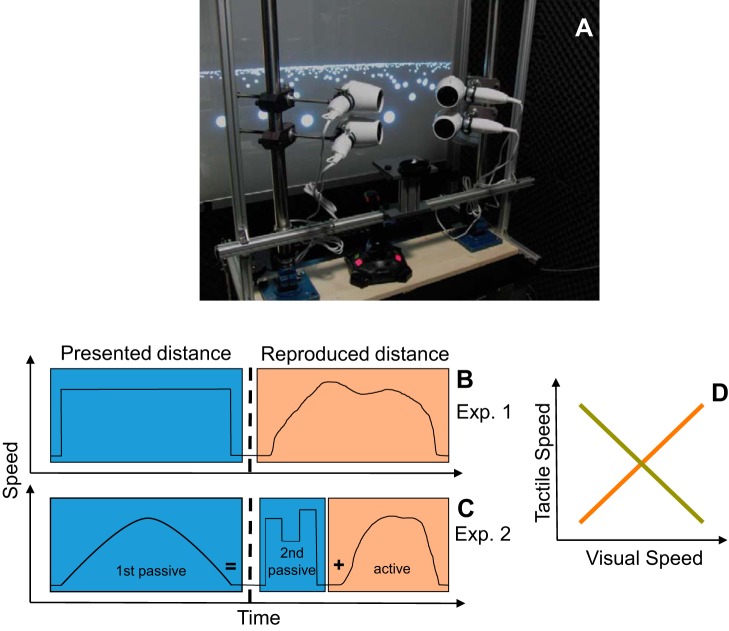

The components of the setup are shown in Fig. 1. The experiments took place in a dark, sound-attenuated room. Subjects were seated in front of a projection screen with the head supported and kept in position by a chin rest, and they viewed the visual display binocularly without any further instructions regarding eye position. The visual stimuli were generated on a PC (Dell Precision T1600) using MATLAB (The MathWorks, Natick, MA) and the Psychophysics Toolbox (Brainard 1997; Kleiner et al. 2007; Pelli 1997). They were back-projected onto a transparent screen by a video projector (Christie M 4.1 DS+6K-M SXGA) at a frame rate of 120 Hz and a resolution of 1,152 × 864 pixels. The viewing distance was 70 cm, and the screen covered the central 81 × 66° of the visual field. The tactile stimulation was provided by a set of four commercial hair dryers (Clatronic HT3393) from which the heating spiral had been removed. We controlled the speed of the air flow with an Arduino microcontroller (Arduino Uno Rev3) connected to the PC via a USB port. The microcontroller was accessed via a MATLAB toolbox that allowed for gradual changes of the air-flow speed. The distance between the nozzles of the hair dryers and the subject’s head was ~5 cm. To ensure that the subjects received only tactile stimulation and could not hear the sound of the motors, which would also have been indicative of the motion speed, the subjects wore earmuffs. To fully mask any residual external sound, the subjects additionally wore in-ear headphones through which the sound of a hair dryer motor, recorded at a constant speed before the experiment, was played at a constant volume. The volume was adjusted individually for each subject to mask any external sounds. Subjects used a joystick (ATK3, Logitech) to control the speed of the simulated self-motion. To protect the subjects’ eyes from the air flow, we asked them to wear protective goggles. The goggles blocked the air flow to the eyes as well as to an area of the skin ~2 cm above and below the eyes on the front (but not the sides) of the face.

Fig. 1.

A: experimental apparatus consisting of a visual display, a chin rest, 4 modified hair dryers that produced the tactile simulation of motion, and a joystick that allowed the subjects to control the speed of simulated self-motion. B and C: schematic time course of the trials in experiments 1 and 2. Parts of a trial in which the subjects passively observed the self-motion stimulus are marked by a blue background while pink background indicates the parts in which the subjects actively reproduced the previously observed distance. D: relationship between the visual speed and the speed of the air flow in the congruent (brown line) and the incongruent (green line) experimental conditions.

Visual Stimulus

The visual stimulus consisted of a virtual plane of white random dots on a dark background. The self-motion was simulated as a straight-ahead movement (orthogonal to the plane of the projection screen) over this plane, either at a constant speed (experiment 1) or with a sinusoidal speed profile (experiment 2). The size and position of each dot changed on every frame depending on its position relative to the observer. The maximal lifetime of each dot was 1 s (120 frames) to prevent subjects from estimating distance by tracking single dots. Since the subjects did not know the absolute size of the dots or the absolute altitude of the observer above the plane, they could not determine absolute distances and speeds from the stimulus display. Thus, here, distances are always quantified in arbitrary units (AU) and speeds in AU/s. The range of possible speeds was set to 0.8–10 AU/s.
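Why absolute distance and speed cannot be recovered from such a display can be made concrete with perspective geometry. The sketch below assumes an idealized pinhole projection with unit focal length and dots lying on a flat plane below the eye; the function names are hypothetical and are not taken from the authors’ MATLAB/Psychophysics Toolbox code. Scaling the dot positions, the eye height, and the simulated speed by a common factor leaves every projected frame unchanged, so the display constrains only speed relative to eye height, which is why distances are expressed in AU.

```python
import numpy as np

def project_ground_dots(X, Z, eye_height, focal=1.0):
    """Pinhole projection of dots on a flat plane eye_height below the eye.

    X: lateral offset, Z: distance ahead (same arbitrary unit as eye_height).
    Returns screen coordinates (x, y); y < 0 because the plane lies below eye level.
    """
    x = focal * X / Z
    y = -focal * eye_height / Z
    return x, y

def step_forward(Z, speed, dt):
    """Straight-ahead self-motion: every dot comes closer by speed * dt."""
    return Z - speed * dt

rng = np.random.default_rng(0)
X = rng.uniform(-5.0, 5.0, 200)
Z = rng.uniform(2.0, 20.0, 200)
h, v, dt = 1.0, 4.0, 1.0 / 120.0   # one frame at the 120-Hz projector rate

# Scene B is scene A with positions, eye height, and speed all scaled by k.
k = 3.0
xa, ya = project_ground_dots(X, step_forward(Z, v, dt), h)
xb, yb = project_ground_dots(k * X, step_forward(k * Z, k * v, dt), k * h)
print(np.allclose(xa, xb) and np.allclose(ya, yb))  # True: the two frames are identical
```

The same argument holds for dot size if the physical dot size is scaled along with the scene.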

Tactile Stimulus

The tactile component of self-motion was simulated by an air flow created by four commercial hair dryers that were always run simultaneously. The system was calibrated to ensure that a linear increase in the speed of visual motion was accompanied by a linear increase in the speed of the air flow. The speed of the air flow was measured (in m/s) with a commercial anemometer (Technoline EA-3010). In a preliminary test, we ensured that the combination of the visual and the tactile stimulus felt “natural” to the observers. The range of air-flow speeds used in the experiments was 0.8–10 m/s, since wind speeds below 0.8 m/s could not be generated reliably by the motors.

Procedures

Speed discrimination.

To make sure that the results of the subsequent experiments would not be dominated by one modality merely because of large differences in perceptual precision between the two modalities, we tested the subjects’ ability to discriminate the speeds of visual and of tactile stimuli. In the speed-discrimination task, the subject was presented with a standard stimulus and a comparison stimulus (each 2 s long, separated by a 500-ms break). The standard stimulus was always presented first, at a speed of 3, 5, or 7 AU/s. The comparison stimulus was chosen from a range of ±2 AU/s around the speed of the standard stimulus. After both stimuli had been presented, subjects indicated which one they perceived as faster by pressing one of two buttons. The combination of three standard speeds and two modality conditions resulted in six experimental conditions. In each condition, 41 trials were performed that covered the range of ±2 AU/s in equidistant steps of 0.1 AU/s, so each comparison speed was presented only once per condition. The trials were conducted in pseudo-randomized order.
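The trial list of the discrimination task can be sketched as follows. This assumes a plain random permutation in place of the unspecified pseudo-randomization, and the constant names and the `discrimination_trials` helper are hypothetical. Each condition yields 41 comparison speeds (standard ± 2 AU/s in 0.1-AU/s steps), giving 6 × 41 = 246 trials.

```python
import itertools
import numpy as np

STANDARD_SPEEDS = (3.0, 5.0, 7.0)                 # AU/s
MODALITIES = ("visual", "tactile")
OFFSETS = np.round(np.arange(-20, 21) * 0.1, 1)   # -2.0 ... +2.0 AU/s in 0.1 steps

def discrimination_trials(seed=None):
    """Return a shuffled list of (modality, standard, comparison) tuples."""
    trials = [
        (modality, standard, round(float(standard + offset), 1))
        for modality, standard in itertools.product(MODALITIES, STANDARD_SPEEDS)
        for offset in OFFSETS
    ]
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(trials))
    return [trials[i] for i in order]

trials = discrimination_trials(seed=1)
print(len(trials))   # 246 = 6 conditions x 41 comparison speeds
```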

Experiment 1: distance reproduction.

In the first experiment, we tested the subjects’ ability to reproduce a passively observed travel distance using visual, tactile, or bimodal feedback. The procedure is shown in Fig. 1B. First, the subject was presented with a simulation of self-motion over a certain distance. The speed during this presentation was always constant at 4 or 7 AU/s, and the distance was 5, 10, or 15 AU. In this first phase (Presented Distance), the stimulation was always bimodal. After this presentation and a brief pause of 500 ms, the subject’s task was to reproduce the passively observed distance using the joystick (Reproduced Distance). In this second phase, only visual information, only tactile information (with a dark screen), or both modalities were available. Since in a preliminary experiment some subjects reported that they counted to reproduce the duration of the stimulus rather than the distance, we asked all subjects not to count during the experiment. In addition, the abrupt onset and offset of the movement in the presentation phase made it difficult for the subjects to reproduce the speed-time profile instead of the traveled distance. Each combination of 2 speeds, 3 distances, and 3 modality conditions (18 conditions in total) was presented 20 times. The order of all conditions was pseudo-random.
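The factorial design of experiment 1 can be written out as a trial list. In this sketch the names are hypothetical, and shuffling with Python’s `random` module stands in for the unspecified pseudo-random order. It also computes the presentation duration implied by a constant speed, duration = distance / speed. Because the two speeds are crossed with the three distances, durations range from about 0.71 s to 3.75 s, and similar durations can correspond to different distances (e.g., 1.25 s for 5 AU at 4 AU/s vs. about 1.43 s for 10 AU at 7 AU/s).

```python
import itertools
import random

SPEEDS = (4.0, 7.0)                           # AU/s, constant during presentation
DISTANCES = (5.0, 10.0, 15.0)                 # AU
FEEDBACK = ("visual", "tactile", "bimodal")   # modality available during reproduction
REPEATS = 20

def experiment1_trials(seed=None):
    """Return a shuffled list of trial dicts: 2 x 3 x 3 conditions x 20 repeats."""
    trials = []
    for speed, distance, feedback in itertools.product(SPEEDS, DISTANCES, FEEDBACK):
        trials.extend(
            {
                "speed": speed,
                "distance": distance,
                "feedback": feedback,
                # The presentation phase is always bimodal and at constant speed.
                "presentation_s": distance / speed,
            }
            for _ in range(REPEATS)
        )
    random.Random(seed).shuffle(trials)
    return trials

trials = experiment1_trials(seed=0)
durations = sorted({t["presentation_s"] for t in trials})
```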

The experiment was performed in two variants that differed in the relationship between the speed of the tactile stimulus and the speed of self-motion. In the congruent condition, the speed of the air flow was directly proportional to the speed of visual self-motion (Fig. 1D, brown line), whereas in the incongruent condition the two measures were inversely proportional (Fig. 1D, green line). For example, in the congruent condition a wind speed of 1 m/s was associated with a visual speed of self-motion of 1 AU/s and a wind speed of 9 m/s with a visual speed of 9 AU/s, whereas in the incongruent condition a wind speed of 1 m/s was associated with a visual speed of 9 AU/s and a wind speed of 9 m/s with a visual speed of 1 AU/s. The congruent or incongruent mapping was always used in both the presentation and the reproduction phase of a trial. Importantly, the purely visual stimulation was identical in the two conditions. The subjects were informed that in the incongruent experiments a slow air flow indicated fast self-motion. To avoid confusion, separate groups of subjects were tested in the congruent and the incongruent conditions.
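The two mappings can be sketched as functions from visual speed (AU/s) to air-flow speed (m/s). The text gives only two anchor points per condition (1 ↔ 1 and 9 ↔ 9 for congruent; 1 ↔ 9 and 9 ↔ 1 for incongruent). The sketch assumes identity for the congruent map and wind = 9 / v for the incongruent map, which fits both anchor points and the “inversely proportional” wording; the curve actually used (Fig. 1D) may differ, since, for example, a decreasing linear map 10 − v passes through the same two points. Clipping to the usable 0.8–10 m/s range and the function name are also assumptions.

```python
import numpy as np

WIND_MIN, WIND_MAX = 0.8, 10.0   # m/s, usable range of the modified hair dryers

def wind_speed(visual_speed, condition):
    """Hypothetical visual-to-tactile speed mapping (see assumptions above)."""
    v = np.asarray(visual_speed, dtype=float)
    if condition == "congruent":
        wind = v                  # 1 AU/s -> 1 m/s, 9 AU/s -> 9 m/s
    elif condition == "incongruent":
        wind = 9.0 / v            # 1 AU/s -> 9 m/s, 9 AU/s -> 1 m/s (assumed 9/v)
    else:
        raise ValueError(f"unknown condition: {condition!r}")
    return np.clip(wind, WIND_MIN, WIND_MAX)

print(wind_speed([1, 4, 7, 9], "congruent"))
print(wind_speed([1, 4, 7, 9], "incongruent"))
```

Under either candidate mapping the incongruent air flow is a monotonic function of visual speed, so it carries the same speed information as the congruent one, only with the opposite sign.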

Experiment 2: rescaling of tactile information.

In the second experiment, we investigated the effect of rescaling the tactile information on the reproduced travel distance. The sequence of events in experiment 2 is shown in Fig. 1C. All simulated self-motion in experiment 2 was bimodal. As in experiment 1, the subjects passively observed a simulated self-motion over a certain distance. Unlike in experiment 1, the speed of this motion was not constant but followed a sinusoidal profile with a peak speed of either 5 or 7 AU/s. The traveled distance was 10, 15, or 20 AU. After this first presented distance and a brief gap of 500 ms, a second distance was presented passively. The speed profile of this second movement consisted of three different speeds between 3 and 10 AU/s. The distance covered by this second passive movement was always one-third of the first passive distance, but the subjects were neither informed about nor aware of this relationship. After the second presentation had stopped, the subjects’ task was to actively reproduce the first observed distance as the sum of the second passively observed distance and the subsequent active motion. In a random 10% of the trials, the tactile component of the motion was scaled up by 25%, and in another 10% of the trials it was scaled down by 25%. That is, the speed of the air flow on these trials was 25% faster (or slower) than on the remaining trials. The rescaling was applied only during the second passive motion and the active reproduction phase of each trial. The subjects were not informed about, and were not aware of, this manipulation.
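The timing of the first passive movement and the tactile rescaling can be sketched as follows. The exact “sinusoidal profile” is not specified; the sketch assumes a half-sine v(t) = v_peak · sin(πt/T) on [0, T], whose integral is 2 · v_peak · T / π, so covering a distance d takes T = πd / (2 v_peak). It also assumes that exactly 10% of trials are scaled up and 10% down within a block, whereas the text says only “a random 10%”. All names are hypothetical.

```python
import numpy as np

def half_sine_duration(distance, peak_speed):
    """Duration T for which v(t) = peak_speed * sin(pi * t / T) covers `distance`."""
    return np.pi * distance / (2.0 * peak_speed)

def half_sine_speed(t, distance, peak_speed):
    """Speed at time(s) t for the assumed half-sine profile (0 outside [0, T])."""
    T = half_sine_duration(distance, peak_speed)
    t = np.asarray(t, dtype=float)
    return np.where((t >= 0) & (t <= T), peak_speed * np.sin(np.pi * t / T), 0.0)

def tactile_gains(n_trials, fraction=0.10, scale=0.25, seed=None):
    """Per-trial multiplier on air-flow speed: 1 + scale, 1 - scale, or 1 (shuffled)."""
    n_mod = int(round(fraction * n_trials))
    gains = np.ones(n_trials)
    gains[:n_mod] = 1.0 + scale            # air flow 25% faster
    gains[n_mod:2 * n_mod] = 1.0 - scale   # air flow 25% slower
    return np.random.default_rng(seed).permutation(gains)

first = 15.0               # AU, first passive distance
second = first / 3.0       # second passive distance is always one-third of the first
remaining = first - second # distance the active phase must add (here 10 AU)
```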

As in experiment 1, a congruent and an incongruent condition were also used in experiment 2. In the congruent condition the speed of the air flow was directly proportional to the speed of self-motion while in the incongruent condition the two measures were inversely proportional, as detailed above.

Data Processing

Fitting of psychometric functions.

Cumulative Gaussian functions were fitted to the data of the speed-discrimination task using the psignifit 3.0 toolbox (Fründ et al. 2011). The standard deviation of the fitted Gaussian was then used as a measure of the just noticeable difference (JND). The influences of the different modality conditions were tested using repeated-measures ANOVAs. Here and in all other cases, a P value of 0.05 or smaller was considered to indicate a statistically significant effect. Since in many cases the sphericity assumption of repeated-measures ANOVAs (as tested by Mauchly’s test) was violated, we applied the Greenhouse-Geisser correction to obtain an accurate measure of significance.
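Both steps can be sketched in Python with SciPy. This is not psignifit: it is a plain maximum-likelihood fit of a two-parameter cumulative Gaussian, without the lapse and guess parameters that psignifit can estimate, and the helper names are hypothetical. The second part computes the Greenhouse-Geisser epsilon for one within-subject factor from the double-centered covariance matrix of the repeated measures and multiplies both degrees of freedom by it before evaluating the F distribution. For a two-level factor epsilon is always 1, so the correction only matters for factors with three or more levels (e.g., presented distance).

```python
import numpy as np
from scipy import optimize, stats

def fit_cumulative_gaussian(comparison, n_faster, n_total):
    """ML fit of P("comparison faster") = Phi((x - mu) / sigma); returns (mu, sigma).

    No lapse or guess rates are modelled; sigma is the quantity used as the JND.
    """
    x = np.asarray(comparison, float)
    k = np.asarray(n_faster, float)
    n = np.asarray(n_total, float)

    def neg_log_lik(params):
        mu, log_sigma = params                    # log-parameterization keeps sigma > 0
        p = stats.norm.cdf(x, loc=mu, scale=np.exp(log_sigma))
        p = np.clip(p, 1e-12, 1 - 1e-12)          # guard against log(0)
        return -np.sum(k * np.log(p) + (n - k) * np.log1p(-p))

    start = [x.mean(), np.log(x.std())]
    res = optimize.minimize(neg_log_lik, start, method="Nelder-Mead",
                            options={"xatol": 1e-8, "fatol": 1e-10, "maxiter": 10000})
    mu, log_sigma = res.x
    return float(mu), float(np.exp(log_sigma))

def greenhouse_geisser_epsilon(data):
    """Greenhouse-Geisser epsilon for one within-subject factor.

    data: array of shape (n_subjects, k_levels).
    """
    S = np.cov(np.asarray(data, float), rowvar=False)
    k = S.shape[0]
    # Double-centering S is equivalent to projecting it onto orthonormal contrasts.
    Sc = S - S.mean(axis=0, keepdims=True) - S.mean(axis=1, keepdims=True) + S.mean()
    return float(np.trace(Sc) ** 2 / ((k - 1) * np.sum(Sc * Sc)))

def gg_corrected_p(F, df_effect, df_error, eps):
    """P value of F with both degrees of freedom multiplied by epsilon."""
    return float(stats.f.sf(F, eps * df_effect, eps * df_error))
```

Epsilon is bounded between 1/(k − 1) and 1; smaller values shrink the degrees of freedom and therefore make the test more conservative.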

Calculation of precision and accuracy.

During distance reproduction (experiment 1) we measured the precision and the accuracy of the responses in each experimental condition (combination of presented speed, presented distance, feedback modality, and congruency of visual and tactile stimulus). As a measure of precision, we used the intrasubject variability in each condition represented by the standard deviation of the reproduced distances. Accuracy was calculated as the absolute value of the difference between the presented distance and the mean of the reproduced distances in each condition.
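A minimal sketch of the two per-condition measures, assuming the sample standard deviation (ddof = 1); the text does not state which estimator was used, and the function name is hypothetical. Both values are error measures, so smaller numbers mean better performance, which is why the precision and accuracy axes in Figs. 4 and 5 are inverted.

```python
import numpy as np

def precision_and_accuracy(reproduced, presented):
    """Return (SD of reproduced distances, |presented - mean reproduced|) for one condition."""
    r = np.asarray(reproduced, float)
    sd = float(r.std(ddof=1))                      # intrasubject variability (precision measure)
    abs_error = float(abs(presented - r.mean()))   # accuracy measure (absolute constant error)
    return sd, abs_error

# Four illustrative reproductions of a 10-AU presented distance (not data from the study).
sd, abs_error = precision_and_accuracy([9.0, 11.0, 12.0, 10.0], presented=10.0)
# sd = sqrt(5 / 3) ≈ 1.29 AU, abs_error = 0.5 AU
```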

Speed vs. duration tradeoff.

Since the reproduced distance can be decomposed into the factors Speed of Motion and Duration of Motion, we investigated whether the subjects relied on only one of these variables to estimate the traveled distance or took both into account at the same time. To this end, we tested how well the subjects compensated for trial-by-trial variability in their chosen speed by adjusting the duration of motion to obtain the correct reproduced distance. We calculated a Pearson correlation between the average speed in each trial and its duration. The relationship between the chosen speed and the duration that correctly compensates for it to reach a given distance is not linear but inverse:

$$\text{duration} = \frac{\text{distance}}{\text{speed}}$$

However, the Pearson correlation captures only linear dependencies. We therefore linearized the relationship by using the negative reciprocal of the duration (−1/duration) in the calculation of the correlation coefficients. In a second step, to combine the data for different presented distances, we calculated standard z scores of the duration and the speed data separately for each of the three presented distances and then calculated the Pearson correlation on the z-transformed data.

This last conversion was made to account for potential differences in variability of the data for the different distances; however, it should be noted that it did not change the results appreciably.
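The two-step correlation can be sketched as follows (hypothetical function names; `np.corrcoef` gives the coefficient, and `scipy.stats.pearsonr` could be used instead when a P value is needed). With perfect compensation, duration = distance / speed holds on every trial, so −1/duration = −speed/distance is an exact linear function of speed within each presented distance, and the pooled correlation on the z scores is −1. If duration did not vary with speed at all, r would be expected to lie near 0.

```python
import numpy as np

def zscore(x):
    x = np.asarray(x, float)
    return (x - x.mean()) / x.std()

def speed_duration_correlation(speed, duration, presented_distance):
    """Pearson r between mean trial speed and -1/duration, z-scored per presented distance."""
    speed = np.asarray(speed, float)
    neg_inv_duration = -1.0 / np.asarray(duration, float)  # linearizes duration = distance / speed
    dist = np.asarray(presented_distance, float)
    z_speed = np.empty_like(speed)
    z_dur = np.empty_like(speed)
    for d in np.unique(dist):             # z-transform separately for each presented distance
        mask = dist == d
        z_speed[mask] = zscore(speed[mask])
        z_dur[mask] = zscore(neg_inv_duration[mask])
    return float(np.corrcoef(z_speed, z_dur)[0, 1])

# Simulated subject who compensates perfectly: duration = distance / speed on every trial.
rng = np.random.default_rng(0)
dist = np.repeat([5.0, 10.0, 15.0], 20)
speed = rng.uniform(2.0, 8.0, dist.size)
r = speed_duration_correlation(speed, dist / speed, dist)
```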

To quantitatively describe the extent to which the trial-by-trial variation of speeds was compensated by an adjustment of the duration of self-motion we first calculated the duration that accounted perfectly for the average speed used in each trial:

$$\text{duration}_{\text{opt}} = \frac{\text{distance}}{\overline{\text{speed}}} \tag{1}$$

In a second step, we calculated the linear regression between the measured duration of motion and the optimal duration. The slope of the regression line indicates the extent to which the variability of speeds is compensated by the chosen duration. A regression slope of 1 would indicate perfect compensation, while a slope <1 or >1 would indicate under- or overcompensation, respectively.
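A sketch of the compensation analysis, assuming that the target distance in the optimal-duration computation is the presented distance and that measured duration is regressed on optimal duration (the text states neither detail explicitly); the function name is hypothetical, and `np.polyfit` with degree 1 returns the slope and intercept. A subject whose durations equal the optimal durations yields a slope of 1; one who adjusts duration only half as much as needed yields 0.5.

```python
import numpy as np

def compensation_slope(mean_speed, duration, target_distance):
    """Slope and intercept of measured duration regressed on optimal duration."""
    optimal = np.asarray(target_distance, float) / np.asarray(mean_speed, float)
    slope, intercept = np.polyfit(optimal, np.asarray(duration, float), deg=1)
    return float(slope), float(intercept)

rng = np.random.default_rng(1)
speed = rng.uniform(2.0, 8.0, 40)
optimal = 10.0 / speed
perfect = compensation_slope(speed, optimal, 10.0)
partial = compensation_slope(speed, 0.5 * optimal + 1.0, 10.0)  # undercompensation
```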

Optimal integration.

In experiment 1, we calculated the precision and accuracy for the bimodal task that would be expected under optimal integration of the two modalities. For this, we used methods similar to those described by Alais and Burr (2004). The predicted bimodal precision was calculated from the unimodal precision values as

$$s_{vt\mathrm{Opt}}^2 = \frac{s_v^2\, s_t^2}{s_v^2 + s_t^2} \tag{2}$$

where $s_v$ and $s_t$ are the standard deviations of the unimodal (visual and tactile) distance reproductions and $s_{vt\mathrm{Opt}}$ is the predicted bimodal standard deviation under the assumption of optimal integration of the two modalities.

For calculation of the predicted bimodal accuracy based on the optimal integration of the two modalities we first calculated the mean expected bimodal reproduced distance as

$$\mathrm{dist}_{vt\mathrm{Opt}} = w_v\,\mathrm{dist}_v + w_t\,\mathrm{dist}_t \tag{3}$$

where $\mathrm{dist}_v$ and $\mathrm{dist}_t$ are the unimodal (visual and tactile) reproduced distances, $\mathrm{dist}_{vt\mathrm{Opt}}$ represents the estimate of the reproduced distance under the assumption of optimal integration of the two modalities, and $w_v$ and $w_t$ are weights according to

$$w_v = \frac{1/s_v^2}{1/s_v^2 + 1/s_t^2}, \qquad w_t = \frac{1/s_t^2}{1/s_v^2 + 1/s_t^2} \tag{4}$$

Then the predicted accuracy was determined as the absolute value of the difference between $\mathrm{dist}_{vt\mathrm{Opt}}$ and the presented distance.
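These predictions follow the standard reliability-weighted (maximum-likelihood) cue-combination rule: each modality is weighted by its inverse variance, and the combined variance is the product of the unimodal variances divided by their sum. A minimal sketch (hypothetical function name) computes the weights, the predicted bimodal standard deviation, the predicted bimodal distance and its absolute error, and the predicted precision gain (visual SD minus bimodal SD). The example values are illustrative, not data from the study. With equal unimodal SDs the weights are 0.5 each and the predicted SD shrinks by a factor of √2.

```python
import numpy as np

def optimal_bimodal(sd_v, sd_t, dist_v, dist_t, presented):
    """Reliability-weighted prediction for the bimodal condition."""
    inv_v, inv_t = 1.0 / sd_v**2, 1.0 / sd_t**2    # reliabilities
    w_v = inv_v / (inv_v + inv_t)                   # weights sum to 1
    w_t = inv_t / (inv_v + inv_t)
    sd_vt = float(np.sqrt(sd_v**2 * sd_t**2 / (sd_v**2 + sd_t**2)))
    dist_vt = w_v * dist_v + w_t * dist_t
    return {
        "w_v": w_v,
        "w_t": w_t,
        "sd_vt": sd_vt,
        "dist_vt": dist_vt,
        "abs_error_vt": abs(dist_vt - presented),   # predicted accuracy measure
        "precision_gain": sd_v - sd_vt,             # visual SD minus predicted bimodal SD
    }

equal = optimal_bimodal(sd_v=2.0, sd_t=2.0, dist_v=9.0, dist_t=11.0, presented=10.0)
unequal = optimal_bimodal(sd_v=1.0, sd_t=2.0, dist_v=9.0, dist_t=11.0, presented=10.0)
```

In the unequal example the more reliable visual estimate receives a weight of 0.8, pulling the predicted distance to 9.4 AU.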

RESULTS

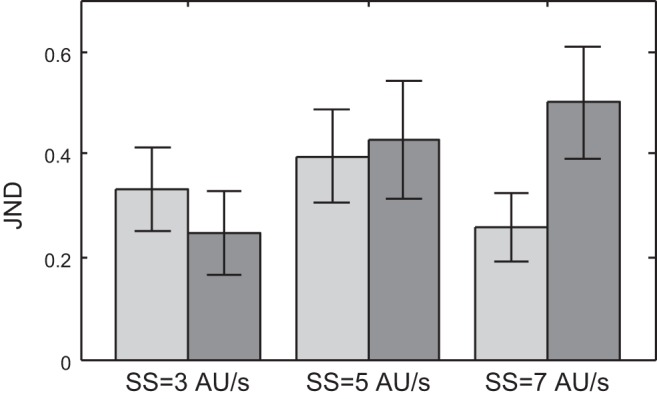

Speed Discrimination

Figure 2 shows the average JNDs for the eight subjects who took part in the experiments with the congruent tactile condition. The JNDs were obtained from the cumulative Gaussian curves fitted to the speed-discrimination data (see methods) for each of the investigated conditions. A paired-samples two-way ANOVA revealed neither a significant effect of speed nor a significant effect of modality (F = 0.62, P = 0.55 for speed; F = 1.4, P = 0.27 for modality), and no significant interaction of the two factors (F = 2.3, P = 0.15). The JNDs lie between 0.2 and 0.5 AU/s, which is small relative to the range of speeds used in our experiments (0.8–10 AU/s). Overall, the sensitivity to tactile speeds in our apparatus was not significantly different from the sensitivity to visual speeds.

Fig. 2.

Averages and standard errors of just noticeable differences (JNDs) in the speed-discrimination task for a sample of 8 subjects. We investigated combinations of 3 standard speeds [SS; 3, 5, and 7 arbitrary units (AU)/s] and 2 modality conditions: tactile (light bars) and visual (dark bars). Differences between modality conditions were not statistically significant (paired 2-way ANOVA, P > 0.05).

Experiment 1: Distance Reproduction

Integration of visual and tactile information: congruent condition.

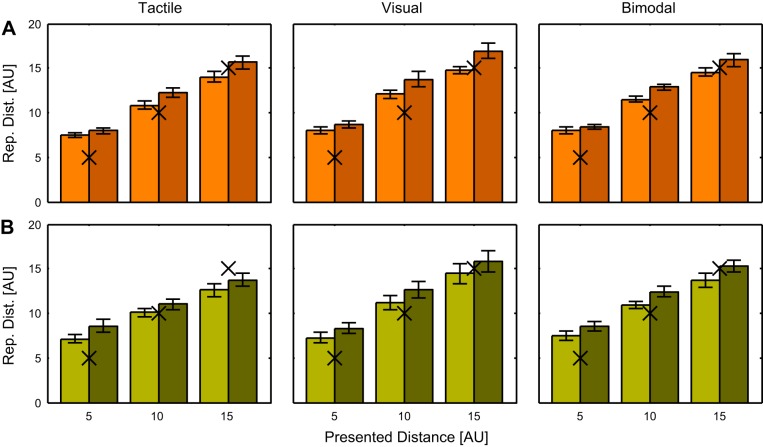

In the distance reproduction task, we tested the precision and accuracy of the actively reproduced distances. In particular, we asked whether the additional information provided by the tactile stimulus is integrated with the visual stimulation in a way that improves precision and accuracy. Figure 3A shows the average actively reproduced distances for our sample of eight subjects. A three-way repeated-measures ANOVA with factors Presented Distance, Presented Speed, and Modality Condition revealed significant effects of presented speed and presented distance (F = 19.9, P = 0.003 for speed; F = 252, P < 0.001 for distance) and of their interaction (F = 5.1, P = 0.03). Interestingly, no significant effect of modality condition on reproduced distance was found (F = 1.8, P = 0.22). There were also no significant interactions between presented distance and modality condition (F = 1.1, P = 0.36) or between presented speed and modality condition (F = 0.6, P = 0.56). This indicates that the significant effects of presented speed and presented distance did not depend on feedback modality.

Fig. 3.

A: means and standard errors of the reproduced distances (Rep. Dist.) in the congruent condition for a sample of 8 subjects. Responses for presentation speed of 4 AU/s are shown in orange, responses for presentation speed of 7 AU/s are shown in dark brown. Black Xs mark the presented distances for a better overview. B: means and standard errors of the reproduced distances in the incongruent condition for a sample of 8 subjects. Responses for presentation speed of 4 AU/s are shown in light green, responses for presentation speed of 7 AU/s are shown in dark green. Black Xs mark the presented distances for a better overview.

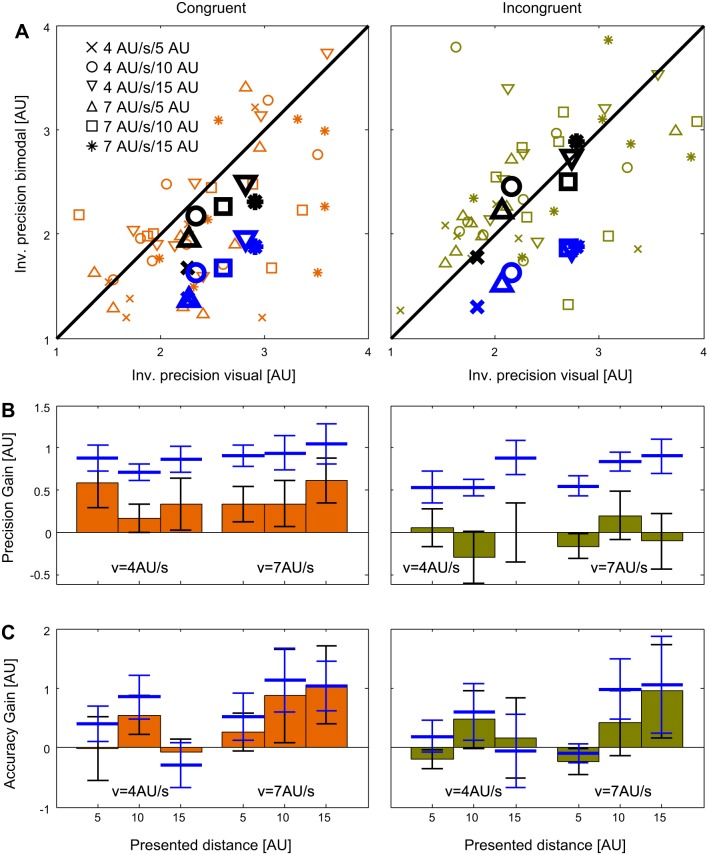

The comparison of precision for the visual and the bimodal feedback in the congruent condition is shown in the left panels of Fig. 4, A and B. Figure 4A shows the precision for the two types of feedback for single subjects (small markers) and the averages for each tested condition (large black markers). The precision predicted for the bimodal feedback by optimal integration of the two single modalities is indicated by blue markers (see Eq. 2). To quantify a potential improvement of precision and accuracy by additional tactile information, we subtracted the precision values (standard deviations) for the bimodal feedback from those for unimodal visual feedback; in the following, we call this difference the “precision gain.” Figure 4B shows that an increase in precision (i.e., a positive precision gain) for the bimodal feedback was found in each of the six investigated stimulus configurations (2 presentation speeds × 3 presented distances). A three-way repeated-measures ANOVA with the factors Presented Distance, Presented Speed, and Modality Condition (visual vs. bimodal) revealed a significant effect of modality (F = 7.2, P = 0.03), indicating that the increase in precision with bimodal feedback was indeed significant.

Fig. 4.

A: comparison of precision for the visual (x-axes) and the bimodal (y-axes) feedback in the distance reproduction task. Results for the congruent experiment are shown on the left and results for the incongruent experiment on the right. Data from single subjects in each of the six conditions (2 presented speeds × 3 presented distances) are shown as small brown and green markers (for the exact meaning of each marker see legend in the left plot). The means of 8 subjects in each condition are depicted as large black markers. Bimodal precisions predicted by an optimal integration of the 2 modalities are shown as large blue markers. Note that the scales are inverted (Inv.) and thus high values indicate low precision. B: means and standard errors of the increases in precision between the visual and the bimodal conditions in the congruent (left) and the incongruent (right) experiments. Blue lines show the means and standard errors of increases in precision assuming an optimal integration of the modalities in the bimodal condition (Eq. 2). C: means and standard errors of the increases in accuracy between the visual and the bimodal conditions in the congruent (left) and the incongruent (right) experiments. Blue lines show the means and standard errors of increases in accuracy predicted from an optimal integration of the modalities in the bimodal condition (Eqs. 3 and 4).

In a second step, we investigated the difference between the measured bimodal precision and the bimodal precision expected for optimal integration of the two modalities. As seen in Fig. 4 (left panels), in each of the six stimulus configurations (2 speeds × 3 distances) the measured bimodal precision was lower than predicted by optimal integration (indicated by the blue symbols in Fig. 4A and the blue bars in Fig. 4B). Again, a three-way repeated-measures ANOVA with the factors Presented Distance, Presented Speed, and a factor with the two levels “measured bimodal precision vs. optimal bimodal precision” confirmed a significant difference between the measured and the optimal precision (F = 16.3, P < 0.01). Thus, although the tactile information was integrated with the visual information to increase precision, this integration was not optimal.

We applied the same tests to the accuracy data to investigate whether additional tactile information also improved the subjects’ accuracy. The results are shown in Fig. 4C. First, we tested whether an optimal integration of visual and tactile information predicts an increase in accuracy. A three-way repeated-measures ANOVA with the factors Presented Distance, Presented Speed, and a factor with the two levels Measured Visual Accuracy vs. Optimal Bimodal Accuracy showed only a nonsignificant trend for this factor (F = 4.71, P = 0.07). This indicates that no improvement in the accuracy of the reproduced distances is expected from an optimal integration of the unimodal data. Indeed, when we compared the unimodal visual accuracy with the bimodal accuracy for our sample of subjects, we did not find a significant difference (F = 2.0, P = 0.20). We therefore conclude that an improvement in accuracy was neither expected from optimal integration nor found in our data.

The full results of the three-way ANOVAs conducted in this section are shown in Table 1.

Table 1.

Results of three-way ANOVAs for the congruent and incongruent experiments

| Factor | Congruent F | Congruent P | Incongruent F | Incongruent P |
|---|---|---|---|---|
| Reproduced distances | | | | |
| s | 19.9 | 0.003 | 10.4 | 0.015 |
| d | 252.0 | <0.001 | 75.5 | <0.001 |
| m | 1.77 | 0.22 | 2.67 | 0.14 |
| s × d | 5.15 | 0.03 | 0.08 | 0.91 |
| s × m | 0.60 | 0.56 | 0.19 | 0.79 |
| d × m | 1.11 | 0.36 | 5.55 | 0.019 |
| s × d × m | 0.13 | 0.89 | 0.83 | 0.491 |
| Precision | | | | |
| s | 0.94 | 0.36 | 3.95 | 0.09 |
| d | 14.5 | 0.001 | 16.88 | 0.001 |
| m | 7.21 | 0.03 | 0.05 | 0.83 |
| s × d | 0.57 | 0.53 | 0.62 | 0.54 |
| s × m | 0.12 | 0.74 | 0.17 | 0.70 |
| d × m | 0.69 | 0.48 | 0.001 | 0.996 |
| s × d × m | 0.63 | 0.52 | 1.31 | 0.30 |
| Accuracy | | | | |
| s | 20.7 | 0.003 | 6.86 | 0.03 |
| d | 10.9 | 0.008 | 1.36 | 0.29 |
| m | 1.97 | 0.20 | 0.42 | 0.54 |
| s × d | 2.52 | 0.13 | 5.88 | 0.03 |
| s × m | 1.26 | 0.30 | 2.15 | 0.19 |
| d × m | 1.13 | 0.35 | 2.10 | 0.18 |
| s × d × m | 0.77 | 0.44 | 0.68 | 0.46 |

The factors are Speed (s), Presented Distance (d), and Modality (m) (tactile, visual, bimodal for Reproduced distance; visual, bimodal for Precision and Accuracy data).

Integration of visual and tactile information: the incongruent condition.

As described in methods, we also tested an incongruent condition in which the speed of the air flow was inversely proportional to the speed of self-motion. It is important to note that in this condition the tactile stimulus was as informative about the speed of self-motion as in the congruent condition. Consequently, an ideal observer could be trained to estimate speed (and hence distance) from the incongruent tactile information as well as from the congruent tactile information. The reproduced distances for all conditions (2 presentation speeds × 3 presented distances × 3 modality conditions) are shown in Fig. 3B. Again, a three-way repeated-measures ANOVA with factors Presented Distance, Presented Speed, and Modality Condition revealed significant effects of presented speed and distance (F = 10.4, P = 0.015 for speed; F = 75.0, P < 0.01 for distance) but no significant effect of modality (F = 2.67, P = 0.14). As in the congruent condition, we conducted three-way ANOVAs to test whether the precision and accuracy of the reproduced distances were improved by adding tactile information (Fig. 4, right panels), again subtracting the precision and accuracy values for the bimodal feedback (ordinate in Fig. 4A) from those for unimodal visual feedback (abscissa in Fig. 4A). Neither the precision (Fig. 4B; F = 0.05, P = 0.83) nor the accuracy (Fig. 4C; F = 0.42, P = 0.54) differed significantly between the visual and the bimodal conditions. The complete results of the three-way ANOVAs conducted here are shown in Table 1.

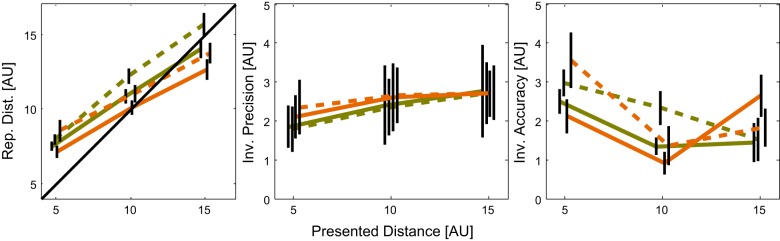

So far, we tested whether additional tactile information improves precision by contrasting the visual and the bimodal experimental conditions. Next, we investigated the information provided by the tactile stimulus alone by directly comparing performance in the unimodal tactile conditions obtained in the congruent (tactile congruent) and the incongruent (tactile incongruent) experiments (Fig. 5). The two tactile conditions did not differ significantly in reproduced distance (three-way mixed-factors ANOVA with the factors Presented Distance, Presented Speed, and Tactile Condition; F = 1.95, P = 0.19), precision (F = 0.49, P = 0.49), or accuracy (F = 0.03, P = 0.87). Full results of the three-way ANOVAs performed in this section are shown in Table 2. This indicates that, although the information from the incongruent tactile stimulus can be used as well as that from the congruent stimulus, it is not integrated with the visual information.

Fig. 5.

Comparison of the congruent (brown lines) and incongruent (green lines) tactile conditions for reproduced distance (left), precision (middle), and accuracy (right) as a function of presented distance (x-axes). Solid lines indicate results for a presented speed of 4 AU/s, dashed lines for 7 AU/s. Error bars show SE; the lines are slightly shifted for better visibility of the SE bars. Note that the scales for precision and accuracy are inverted, with high values indicating low performance.

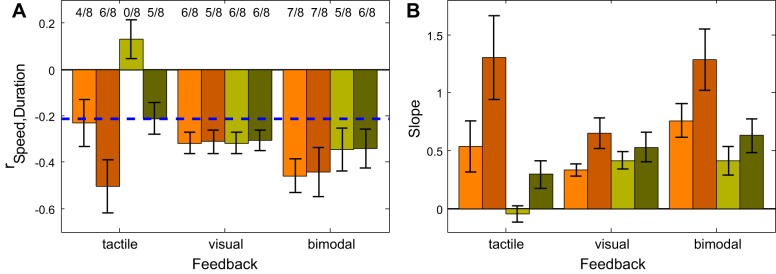

Interactions between speed and duration.

To interpret the above results on the integration of visual and tactile information, it is crucial to assess which strategy the subjects used during the distance reproduction task. Since the traveled distance can be decomposed into the duration of travel and the traveling speed, we asked whether subjects relied on independent estimates of these two measures or combined speed and time into a single measure that could be interpreted as distance. As a first step, we tested whether subjects compensated, on a trial-by-trial basis, for variations in the actively produced traveling speed by adjusting the duration of travel accordingly. As described in methods, we calculated Pearson correlations between the average speed in single trials and an adjusted measure of duration. The results for the eight subjects in the congruent (brown) and in the incongruent (green) conditions are shown in Fig. 6A. In the congruent condition, most subjects showed a significant negative correlation between the two measures for all types of feedback (35/48 = 73% of cases). In contrast, in the incongruent condition the correlations were weaker when only tactile feedback was given (left group of bars in Fig. 6A): a significant correlation was found in 5/18 = 28% of cases in the incongruent condition but in 10/18 = 56% of cases in the congruent condition. We compared the correlation coefficients between the congruent and incongruent conditions with a mixed-factors ANOVA with the within-subject factor Presented Speed and the between-subjects factor Tactile Condition (congruent/incongruent), carried out separately for each of the three feedback modalities. The effect of Tactile Condition was significant for purely tactile feedback (F = 8.03, P = 0.013). When only visual feedback was given (middle group of bars in Fig. 6A), the correlations in the congruent and incongruent conditions were very similar (mixed-factors ANOVA, F = 0.002, P = 0.96), which is not surprising since the visual feedback was identical in both experiments. For bimodal feedback (right group of bars in Fig. 6A), the correlations in the incongruent condition were slightly smaller than in the congruent condition, but this effect failed to reach significance (mixed-factors ANOVA, F = 1.09, P = 0.31). We conclude that in the congruent condition subjects combined speed and duration to reproduce distance, whereas in the incongruent tactile condition speed and duration were treated more independently.

Fig. 6.

A: average Pearson correlations (r) between the mean speed and the duration of travel in the distance reproduction task for 8 subjects in the congruent condition (brown) and 8 subjects in the incongruent condition (green). Light colors show results for a presentation speed of 4 AU/s, dark colors for 7 AU/s. A blue dashed line marks the threshold of significance (P < 0.05) for a single subject, and numbers above the bars indicate the fraction of subjects who showed a significant negative correlation. Error bars indicate the SE. Note that the purely visual stimuli (second group of bars) were identical in the congruent-tactile and incongruent-tactile experiments, so, as expected, the results show no differences. B: means and SE of the slopes of the regression lines between the recorded durations of self-motion and the durations that would fully compensate for the chosen mean speed in each trial. A regression slope of 1 indicates accurate compensation, values >1 indicate overcompensation, and values <1 indicate undercompensation of the chosen speed. Results for the congruent condition are shown in brown, those for the incongruent condition in green. Light colors show results for a presentation speed of 4 AU/s, dark colors for 7 AU/s. Error bars indicate the SE.

Next, we investigated to what degree the variability of chosen speeds was compensated by changes in response duration. For this, we calculated the slope of the linear regression between the duration of each trial and the duration that would have compensated perfectly for the mean speed chosen in that trial (for details see methods). A regression slope of 1 indicates accurate compensation, values >1 indicate overcompensation, and values <1 indicate undercompensation of the chosen speed. The results for each condition are shown in Fig. 6B. The differences between the congruent and the incongruent conditions in regression slopes mirror those in the correlation coefficients. For tactile and for bimodal feedback, the slopes are significantly steeper in the congruent than in the incongruent condition (mixed-factors ANOVAs; F = 7.41, P = 0.017 for tactile feedback; F = 6.16, P = 0.026 for bimodal feedback). The slopes in the congruent tactile and congruent bimodal conditions do not differ significantly from 1 (t = −2.08, P = 0.08, and t = 0.84, P = 0.43 for the two congruent tactile conditions; t = −1.68, P = 0.14, and t = 1.09, P = 0.31 for the two congruent bimodal conditions), indicating an almost perfect coupling between speed and duration for congruent stimuli (note, however, that the slopes for the faster presented speed are always steeper than for the slower presented speed).

For unimodal visual stimuli, compensation was 48% on average, with no significant difference between the congruent and incongruent experiments (mixed-factors ANOVA, F = 0.05, P = 0.83), which is unsurprising given that the purely visual stimulation was identical in both experiments.

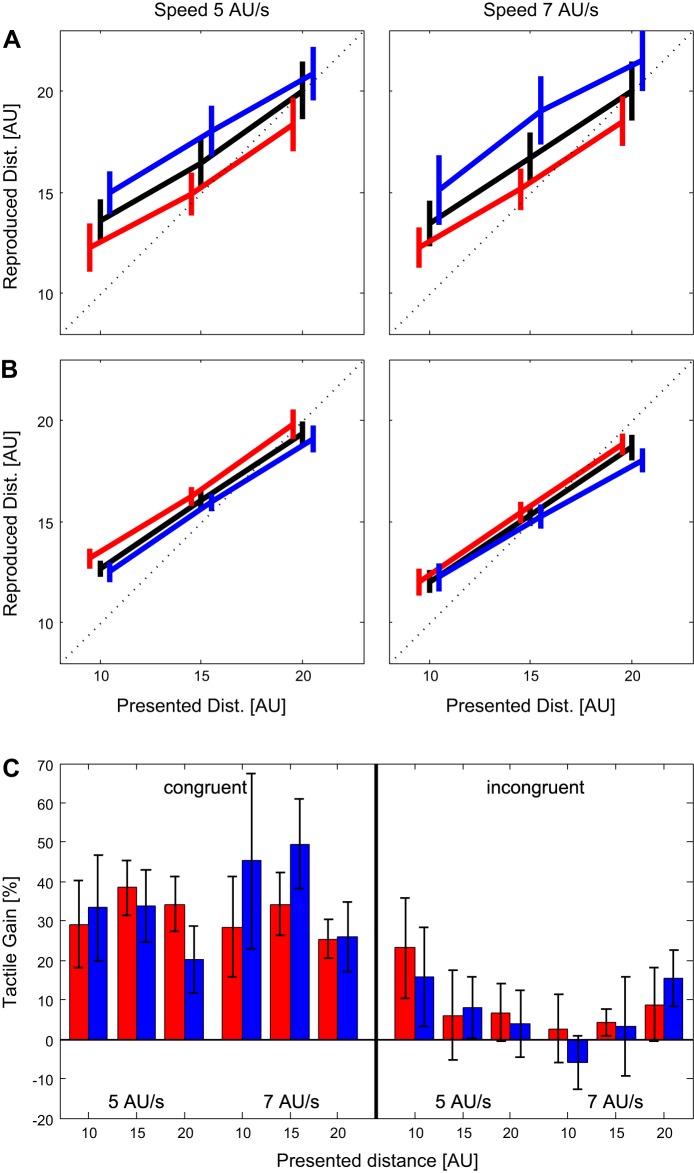

Experiment 2: Rescaling of Tactile Information

In a second set of experiments, the same subjects who took part in experiment 1 again had to reproduce a previously experienced distance. Unlike in experiment 1, however, the gain of the tactile stimulus was increased by 25% in a pseudo-randomly chosen 10% of the trials and decreased by 25% in another 10% of the trials. The effects of these changes in tactile gain in the congruent condition are shown in Fig. 7A. A three-way repeated-measures ANOVA with the factors Presented Distance, Presented Speed, and Tactile Gain Modification revealed a significant effect of gain modification (F = 31.1, P = 0.001). As shown in Fig. 7A, this effect goes in the predicted direction: a downscaled tactile stimulus makes the overall speed appear slower and thus increases the reproduced distance. As expected, upscaling the tactile stimulus had the opposite effect. For the incongruent tactile presentation (Fig. 7B), the effect of gain modification was much weaker than in the congruent condition and narrowly failed to reach significance (three-way repeated-measures ANOVA, F = 5.06, P = 0.053). Note the reversed order of lines in the incongruent condition (Fig. 7B) relative to the congruent condition (Fig. 7A). This reversal was expected: with the incongruent mapping, downscaling the speed of the air flow signals an increase in the speed of self-motion.
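The predicted direction of these effects, including the reversal for the incongruent mapping, can be illustrated with a toy model. This is our own simplifying assumption, not a model fitted by the authors: perceived speed is taken as a weighted sum of visual speed and the speed decoded from the air flow, and the observer stops when the perceived distance matches the target, so the reproduced distance scales with the inverse of the relative perceived speed. The function `predicted_distance_ratio` and its parameters are hypothetical.

```python
def predicted_distance_ratio(tactile_weight, air_gain, congruent=True):
    """Reproduced distance relative to an unscaled trial (toy model, ASSUMPTION).

    Perceived speed = (1 - w_t) * visual speed + w_t * speed decoded from air flow.
    Congruent mapping: air speed is proportional to self-motion speed, so
    scaling the air flow by air_gain scales the decoded speed by air_gain.
    Incongruent mapping: air speed is inversely proportional to self-motion
    speed, so the same scaling divides the decoded speed by air_gain.
    """
    if not 0.0 <= tactile_weight <= 1.0:
        raise ValueError("tactile_weight must be in [0, 1]")
    decoded = air_gain if congruent else 1.0 / air_gain
    relative_speed = (1.0 - tactile_weight) + tactile_weight * decoded
    # Slower perceived speed means the observer travels farther before stopping.
    return 1.0 / relative_speed


for congruent in (True, False):
    for gain in (0.75, 1.25):
        ratio = predicted_distance_ratio(0.5, gain, congruent)
        print(f"congruent={congruent}, air gain={gain}: distance x {ratio:.3f}")
```

With congruent mapping, downscaling lengthens the reproduced distance; with incongruent mapping, the same downscaling shortens it, matching the reversed order of lines described above.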

Fig. 7.

A and B: averages (of 8 subjects) and SE of reproduced distances in experiment 2 for the congruent (A) and incongruent (B) conditions. The 2 columns of plots represent the 2 presented speeds. Black lines show trials without rescaling of tactile information; in trials represented by blue lines the speed of the air flow was reduced by 25%, and in trials represented by red lines it was increased by 25%. The lines are slightly shifted for better visibility of the SE bars. C: tactile gain for all investigated conditions in the congruent (left) and incongruent (right) experiments. The tactile gain indicates to what degree up- and downscaling of the tactile stimulus affected the reproduced distance. A value of 100% would indicate full reliance on the tactile modality, whereas 0% indicates no influence of tactile information. Blue bars indicate trials in which the tactile speed was reduced by 25% (downscaling trials), red bars those in which it was increased by 25% (upscaling trials). Error bars indicate the SE.

We also calculated the gain with which up- and downscaling of the tactile input influenced the reproduced distance. If subjects relied entirely on the information provided by the tactile modality, downscaling would be expected to produce a 25% longer reproduced distance and upscaling a 25% shorter one. This effect would constitute a tactile gain of 100%. If the influence were equally distributed between the tactile and the visual modality, one would expect a gain of ~50%. The actual tactile gains for all tested conditions are shown in Fig. 7C. The grand average is 33% for the congruent condition and 8% for the incongruent condition. This indicates that in the bimodal congruent condition the larger weight is given to visual information, whereas in the incongruent condition the contribution of tactile information is marginal.
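The tactile gain described above can be computed as in the following sketch. It assumes, following the linear criterion stated in the text, that the gain is the observed relative change in reproduced distance divided by the ±25% change predicted for full reliance on touch; the sign flip for the incongruent mapping and the function name `tactile_gain` are our own illustrative assumptions, and the exact definition used by the authors is given in methods.

```python
def tactile_gain(baseline_distance, modified_distance, air_gain, congruent=True):
    """Tactile gain (1.0 = 100%) from one rescaled and one unscaled condition.

    ASSUMPTION: full reliance on touch changes the reproduced distance by the
    same fraction by which the air flow was rescaled (longer for downscaling,
    shorter for upscaling). For the incongruent mapping the predicted sign is
    reversed, because slower air flow signals faster self-motion there.
    """
    if air_gain == 1.0:
        raise ValueError("air_gain must differ from 1 (no rescaling)")
    predicted_change = 1.0 - air_gain  # +0.25 for downscaling by 25%
    if not congruent:
        predicted_change = -predicted_change
    observed_change = modified_distance / baseline_distance - 1.0
    return observed_change / predicted_change


# Hypothetical reproduced distances (AU) relative to a 10-AU unscaled baseline:
print(tactile_gain(10.0, 12.5, 0.75))   # full reliance on touch
print(tactile_gain(10.0, 11.25, 0.75))  # equal weighting of touch and vision
print(tactile_gain(10.0, 10.0, 1.25))   # touch ignored
```

Averaging such values over subjects, speeds, and distances would give grand averages comparable to those reported for Fig. 7C.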

DISCUSSION

In the present study, we found that additional tactile stimulation increases precision in a distance reproduction task. This, however, occurs only when the tactile stimulus is presented congruently with the visual stimulation. In contrast, an ecologically reversed tactile stimulus by itself can still provide information for a successful distance reproduction, but this information is not integrated with the information provided by the visual stimulus.

Contribution of Different Factors to Distance Reproduction

A somewhat surprising first result of our study was the nonsignificant effect of feedback modality on the mean reproduced distances (Fig. 3A). One reason for this lack of difference could be the relatively similar ability to discriminate visual and tactile speeds in our experimental setting (Fig. 2). Alternatively, the mean reproduced distance may simply be a less sensitive measure of intermodal differences than precision.

We found two other significant effects that did not depend on modality. First, faster presented speeds yielded longer reproduced distances. Second, short presented distances were overestimated in the reproduction. These effects are in good agreement with results of other studies on distance reproduction (Bremmer and Lappe 1999; Glasauer et al. 2007; von Hopffgarten and Bremmer 2011). A likely reason why these effects of speed and distance do not depend on feedback modality is that they arise during the presentation phase, which was always bimodal. This is in line with studies showing that the perceived duration of visual as well as tactile stimuli depends on their speed (Kaneko and Murakami 2009; Tomassini et al. 2011): the durations of faster stimuli are perceived as longer than those of slower stimuli. In our case, this can explain the longer reproduced distances for faster presented motion, since at the presentation stage a longer perceived duration results in a longer perceived distance.

Comparison with Optimal Integration

We found that although additional information from the tactile modality increased the precision of the reproduced distances, this increase was significantly smaller than expected for optimal integration of the two modalities. Several studies investigating the principles that govern multisensory integration reported that information from different modalities is combined in a statistically optimal way (e.g., Alais and Burr 2004; Butler et al. 2010; Ernst and Banks 2002; Prsa et al. 2012). Another group of studies, however, presented results that differed considerably from optimal integration. For example, Battaglia et al. (2003) reported that, in a situation that required the combination of visual and auditory cues, perception was biased toward the information provided by the visual modality (“visual capture”). In other cases, subjects failed to integrate information from different modalities (Petrini et al. 2016; von Hopffgarten and Bremmer 2011). Accordingly, no comprehensive set of rules governing the extent to which information from different modalities competes or integrates has yet been established. Our results partly confirm findings from a recent publication by Harris et al. (2017) showing that tactile and vestibular information are not optimally integrated during self-motion. In their setting, tactile information was even capable of overriding vestibular information in a manner similar to a winner-takes-all mechanism. This is clearly not the case for the congruent visual and tactile information in our study, where both modalities are integrated, although not optimally. In the incongruent condition, however, the use of information was strongly biased toward the visual component, with only a minor contribution of tactile information, as shown by the results of experiment 2 (Fig. 7).
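Optimal integration in the studies cited above is commonly formalized as maximum-likelihood (inverse-variance) cue combination (e.g., Ernst and Banks 2002). The following is a minimal sketch, assuming independent, unbiased Gaussian noise in each cue and using hypothetical standard deviations rather than data from this study; how the study's precision measure maps onto these SDs is defined in methods and is not reproduced here. The function `optimal_combination` predicts the combined SD and the weight of each cue from the single-cue SDs; because it accepts any number of cues, it also shows how an additional source of information lowers the relative weight of the tactile cue.

```python
import math


def optimal_combination(sigmas):
    """Inverse-variance (maximum-likelihood) combination of independent cues.

    Each cue i is assumed unbiased with Gaussian noise of SD sigmas[i]. The
    weights are w_i = (1 / sigma_i**2) / sum_j (1 / sigma_j**2), and the
    predicted combined SD is sqrt(1 / sum_j (1 / sigma_j**2)).
    """
    if not sigmas or any(s <= 0 for s in sigmas):
        raise ValueError("all single-cue SDs must be positive")
    reliabilities = [1.0 / (s * s) for s in sigmas]
    total = sum(reliabilities)
    weights = [r / total for r in reliabilities]
    return math.sqrt(1.0 / total), weights


# Hypothetical visual and tactile SDs (AU); not values from this study.
sd_vt, (w_visual, w_tactile) = optimal_combination([1.0, 1.5])
print(f"predicted bimodal SD = {sd_vt:.3f}, tactile weight = {w_tactile:.2f}")

# A third cue with the same SD as touch lowers the tactile weight further.
_, weights = optimal_combination([1.0, 1.5, 1.5])
print(f"tactile weight with three cues = {weights[1]:.2f}")
```

An observed bimodal SD that lies between the better unimodal SD and this prediction corresponds to the "improved, but less than optimal" pattern reported here.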

There are several possible reasons why, even in the congruent condition, tactile and visual information were not optimally integrated. One possibility is that the perceptual system gives greater weight to visual information because, under natural conditions (although not in the specific setting of our experiment), the visual modality provides more reliable information about self-motion: tactile stimulation can be influenced by other environmental factors such as wind. Another possibility is that, besides the visual and tactile feedback, there was an additional source of information during distance reproduction, such as proprioception. When the subject reproduces the distance using a joystick, a pattern of sensations arising from skin, muscles, and joints corresponds to a certain joystick displacement (Farrer et al. 2003) and thus to a certain speed of simulated self-motion. This additional source of information would decrease the relative weight of tactile information in our experiments.

Different Strategies of Distance Reproduction

Estimating distance from the properties of an optic flow field without any distinct objects is a challenging task. A subject facing the task of reproducing a previously presented distance can follow different strategies. Since distance can be decomposed into the speed and the duration of motion, the subject may attempt to estimate the two components more or less independently. It is difficult to completely rule out that at least some of the subjects followed this strategy. We tried to prevent subjects from focusing on the perception of duration by asking them not to count time during the presentation, but the success of such an intervention cannot be established objectively. We can make a stronger case that subjects processed an integrated combination of speed and duration by showing a negative correlation between the speed chosen on single trials and the duration of the trial. This is the strongest evidence our data from experiment 1 provide that subjects processed distance rather than speed and duration independently. In experiment 2, we changed the paradigm to prevent subjects from simply reproducing the properties of the presented stimulus. Here, in addition to the presented distance, the subject also passively observed the first part of the reproduced distance (presented with a complex velocity profile) and only had to complete the distance by actively controlled motion. In this way, the subjects’ options to succeed with a pure stimulus-matching strategy were severely limited.

There is another reason to believe that subjects did not primarily use the temporal component of the stimulus to reproduce the distance. As shown in several previous studies, the perceived duration of a stimulus depends strongly on its modality. For example, the duration of acoustic stimuli is perceived as longer than the duration of visual stimuli (Goldstone and Lhamon 1974; Walker and Scott 1981; Wearden et al. 1998), and the duration of visual stimuli is perceived as longer than the duration of tactile stimuli (Tomassini et al. 2011). If stimulus duration were a primary determinant of the reproduced distance in our experiment, these differences between modalities should be reflected in the reproduced distances. More specifically, because a duration that is perceived as longer would lead subjects to stop earlier, the reproduced distance for unimodal visual feedback should be shorter than for unimodal tactile feedback. We did not find this effect in our data (paired t-test of differences between visual and tactile reproduced distances, P = 0.19).

Neuronal Mechanisms of Intermodal Integration

Several recent imaging studies suggested that areas in the human brain that respond to visual motion [in particular the middle temporal (MT) and medial superior temporal (MST) areas] also process tactile motion stimuli (Hagen et al. 2002; Ricciardi et al. 2007; van Kemenade et al. 2014). It remains unclear whether these findings reflect genuine motion processing or merely motion imagery (Beauchamp et al. 2007; Jiang et al. 2015), which is known to activate motion-sensitive areas (Goebel et al. 1998).

In the macaque monkey, a recent neuroimaging study revealed BOLD activation to tactile stimulation in area MST (Guipponi et al. 2015). Yet, given that no responses to purely tactile stimulation have been shown in neurophysiological recordings, these tactile afferents to area MST might rather modulate visual responses. By contrast, neurons in macaque area VIP have been shown to respond vigorously to visual as well as tactile stimuli (Avillac et al. 2005, 2007; Bremmer et al. 2002b; Duhamel et al. 1998; Guipponi et al. 2013). When visual and tactile receptive fields were mapped in more detail, it was also found that both the positions of the receptive fields and the preferred motion directions are congruent for the two modalities (Bremmer et al. 2002b; Avillac et al. 2005). Given that a functional equivalent of macaque area VIP has been identified in humans (Bremmer et al. 2001), this congruency at the neuronal level may account for the observed differences between the congruent and incongruent conditions in our experiments. More specifically, whereas in the congruent condition the tactile and the visual stimulus coactivate the same population of neurons, in the incongruent condition the two modalities (e.g., fast visual speed and slow air flow) activate separate subpopulations of neurons. The activities evoked by the single modalities may then compete for dominance (Colavita 1974; Robinson et al. 2016) and inhibit each other (Coultrip et al. 1992) rather than integrate information. Competition and integration of information from different modalities have been investigated thoroughly (for reviews see Angelaki et al. 2009; Schroeder and Foxe 2005), showing the interplay to be rather complex and often dependent on higher cognitive factors such as attention (Talsma et al. 2010). In this specific case, the properties of neurons in area VIP provide a clue to the neuronal processes underlying intermodal integration. Further work on the multimodal properties of VIP neurons is, however, needed to strengthen this link and develop it into a full-fledged quantitative model.

Based on the properties of neurons in macaque area VIP, we can also speculate about the perceptual consequences of an incongruence between the two modalities that concerns not the coding of speed but the direction of motion. We expect that when visual and tactile motion signal the same or different headings, the integration pattern should resemble that for speeds; however, a certain tolerance for different directions is expected due to the large size of receptive fields in area VIP and the rather broad tuning for heading (Bremmer et al. 2002a). Similar tolerance windows in the temporal and spatial domain for static visual and tactile stimuli have been found, e.g., by Avillac and colleagues (Avillac et al. 2007).

One important difference between the stimuli used in studies on tactile motion (e.g., Amemiya et al. 2016; Bensmaïa et al. 2006; Craig 2006; Liaci et al. 2016) and those used in our experiments should be kept in mind. In most of those studies, tactile motion was produced by a stimulus moved across the subject’s skin, thereby activating different parts of the skin (and thus different receptors) at different times. In our study, in contrast, the tactile consequences of self-motion were simulated by delivering a stream of air that reached different parts of the skin more or less simultaneously. It was mainly the intensity of the tactile stimulus that provided a cue about the speed of self-motion. To our knowledge, a stimulus like this has not yet been investigated in physiological studies on motion perception. Testing VIP neurons for congruency between the speed of visual self-motion and the intensity of a tactile air flow stimulus would be an interesting next step. Our results suggest that such a congruency exists at some level of visuotactile processing.

Visuotactile Interactions in Peripersonal Space

Our results are related to another line of research describing the influence of visual and auditory looming stimuli in peripersonal space on tactile sensitivity (Canzoneri et al. 2012; Cléry et al. 2015; Kandula et al. 2015). These studies show that approaching visual (and partly also auditory) stimuli selectively increase tactile sensitivity around the time when the simulated stimulus would reach the observer. The effect is also spatially selective: tactile sensitivity is increased specifically at those parts of the skin where the impact of the simulated stimulus is predicted. In our experiments, the random dots increase in size as they approach the observer, which resembles looming stimuli. Thus, the sensitivity for tactile motion in our experiments could potentially be increased by the looming effect. However, unlike the stimuli in experiments on looming, our stimulus consists of a random dot plane placed at ground level, so the objects in the plane would never hit the observer. Still, it would be interesting to test how the relative contribution of visual and tactile information changes if 1) the size of the dots remained constant, so that self-motion were defined only by the trajectory of the dots and not also by changes in their size, or if 2) self-motion were through a 3D cloud of objects, some of which might be on a collision course with the observer.

Role of Experience and Training in Integration of Information

Given the lack of integration of incongruent tactile information with visual information in our experiments, one could ask whether this would change if subjects underwent prolonged training with incongruent tactile stimuli. Since our explanation links this effect to basic properties of multimodal neurons in primate area VIP, it seems unlikely that a moderate amount of training would have a major effect. Although training-related plasticity in the tuning of neurons has been reported for different cortical areas (e.g., Espinosa and Stryker 2012; Grunewald et al. 1999; Recanzone et al. 1993; for a review see Gilbert and Li 2012), it is difficult to envision long-term training in a reverse-tactile world, particularly since every natural real-world interaction would immediately counteract the effects of such training.

GRANTS

This work was supported by the DFG-SFB/TRR 135/A2.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the authors.

AUTHOR CONTRIBUTIONS

J.C., J.P., S.K., and F.B. conceived and designed research; J.C. and J.P. analyzed data; J.C., J.P., S.K., and F.B. interpreted results of experiments; J.C. prepared figures; J.C. drafted manuscript; J.C., J.P., S.K., and F.B. edited and revised manuscript; J.C., J.P., S.K., and F.B. approved final version of manuscript; J.P. performed experiments.

Table 2.

Results of three-way mixed-factors ANOVAs with the within-subjects factors Speed and Presented Distance and the between-subjects factor Congruence (congruent, incongruent)

| Factor | Reproduced Distance F | Reproduced Distance P | Precision F | Precision P | Accuracy F | Accuracy P |
|---|---|---|---|---|---|---|
| s | 11.5 | 0.004 | 0.07 | 0.80 | 2.90 | 0.11 |
| d | 180.8 | <0.001 | 17.8 | <0.001 | 6.38 | 0.01 |
| c | 1.95 | 0.19 | 0.50 | 0.49 | 0.03 | 0.87 |
| s * d | 0.94 | 0.37 | 0.08 | 0.90 | 5.25 | 0.02 |
| s * c | 0.00 | 0.99 | 0.77 | 0.40 | 0.10 | 0.76 |
| d * c | 3.73 | 0.07 | 1.70 | 0.20 | 1.94 | 0.18 |
| s * d * c | 3.70 | 0.06 | 0.11 | 0.86 | 2.49 | 0.12 |

c, Congruence.

ACKNOWLEDGMENTS

We thank Alexander Platzner for constructing the experimental apparatus and Steven Youngkin and Adrian Wroblewski for helping with collection of the data.

REFERENCES

- Alais D, Burr D. The ventriloquist effect results from near-optimal bimodal integration. Curr Biol 14: 257–262, 2004. doi: 10.1016/j.cub.2004.01.029. [DOI] [PubMed] [Google Scholar]

- Amemiya T, Hirota K, Ikei Y. Tactile apparent motion on the torso modulates perceived forward self-motion velocity. IEEE Trans Haptics 9: 474–482, 2016. doi: 10.1109/TOH.2016.2598332. [DOI] [PubMed] [Google Scholar]

- Andersen RA, Treue S, Graziano M, Snowden RJ, Qian N. From direction of motion to patterns of motion: hierarchies of motion analysis in the visual cortex. In: Brain Mechanisms of Perception and Memory from Neuron to Behavior, edited by Ono T, Squire LR, Raichle ME, Perrett DI, Fukuda M. New York: Oxford Univ. Press, 1993, p. 183–189. [Google Scholar]

- Angelaki DE, Cullen KE. Vestibular system: the many facets of a multimodal sense. Annu Rev Neurosci 31: 125–150, 2008. doi: 10.1146/annurev.neuro.31.060407.125555. [DOI] [PubMed] [Google Scholar]

- Angelaki DE, Gu Y, DeAngelis GC. Multisensory integration: psychophysics, neurophysiology, and computation. Curr Opin Neurobiol 19: 452–458, 2009. doi: 10.1016/j.conb.2009.06.008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Avillac M, Ben Hamed S, Duhamel J-R. Multisensory integration in the ventral intraparietal area of the macaque monkey. J Neurosci 27: 1922–1932, 2007. doi: 10.1523/JNEUROSCI.2646-06.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Avillac M, Denève S, Olivier E, Pouget A, Duhamel J-R. Reference frames for representing visual and tactile locations in parietal cortex. Nat Neurosci 8: 941–949, 2005. doi: 10.1038/nn1480. [DOI] [PubMed] [Google Scholar]

- Battaglia PW, Jacobs RA, Aslin RN. Bayesian integration of visual and auditory signals for spatial localization. J Opt Soc Am A Opt Image Sci Vis 20: 1391–1397, 2003. doi: 10.1364/JOSAA.20.001391. [DOI] [PubMed] [Google Scholar]

- Beauchamp MS, Yasar NE, Kishan N, Ro T. Human MST but not MT responds to tactile stimulation. J Neurosci 27: 8261–8267, 2007. doi: 10.1523/JNEUROSCI.0754-07.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bensmaïa SJ, Killebrew JH, Craig JC. Influence of visual motion on tactile motion perception. J Neurophysiol 96: 1625–1637, 2006. doi: 10.1152/jn.00192.2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brainard DH. The Psychophysics Toolbox. Spat Vis 10: 433–436, 1997. doi: 10.1163/156856897X00357. [DOI] [PubMed] [Google Scholar]

- Brandt T, Dichgans J, Koenig E. Differential effects of central versus peripheral vision on egocentric and exocentric motion perception. Exp Brain Res 16: 476–491, 1973. doi: 10.1007/BF00234474. [DOI] [PubMed] [Google Scholar]

- Bremmer F, Duhamel J-R, Ben Hamed S, Graf W. Heading encoding in the macaque ventral intraparietal area (VIP). Eur J Neurosci 16: 1554–1568, 2002a. doi: 10.1046/j.1460-9568.2002.02207.x.

- Bremmer F, Klam F, Duhamel J-R, Ben Hamed S, Graf W. Visual-vestibular interactive responses in the macaque ventral intraparietal area (VIP). Eur J Neurosci 16: 1569–1586, 2002b. doi: 10.1046/j.1460-9568.2002.02206.x.

- Bremmer F, Kubischik M, Pekel M, Hoffmann K-P, Lappe M. Visual selectivity for heading in monkey area MST. Exp Brain Res 200: 51–60, 2010. doi: 10.1007/s00221-009-1990-3.

- Bremmer F, Kubischik M, Pekel M, Lappe M, Hoffmann KP. Linear vestibular self-motion signals in monkey medial superior temporal area. Ann N Y Acad Sci 871: 272–281, 1999. doi: 10.1111/j.1749-6632.1999.tb09191.x.

- Bremmer F, Lappe M. The use of optical velocities for distance discrimination and reproduction during visually simulated self motion. Exp Brain Res 127: 33–42, 1999. doi: 10.1007/s002210050771.

- Bremmer F, Schlack A, Shah NJ, Zafiris O, Kubischik M, Hoffmann K-P, Zilles K, Fink GR. Polymodal motion processing in posterior parietal and premotor cortex: a human fMRI study strongly implies equivalencies between humans and monkeys. Neuron 29: 287–296, 2001. doi: 10.1016/S0896-6273(01)00198-2.

- Britten KH. Mechanisms of self-motion perception. Annu Rev Neurosci 31: 389–410, 2008. doi: 10.1146/annurev.neuro.29.051605.112953.

- Butler JS, Smith ST, Campos JL, Bülthoff HH. Bayesian integration of visual and vestibular signals for heading. J Vis 10: 23, 2010. doi: 10.1167/10.11.23.

- Canzoneri E, Magosso E, Serino A. Dynamic sounds capture the boundaries of peripersonal space representation in humans. PLoS One 7: e44306, 2012. doi: 10.1371/journal.pone.0044306.

- Chancel M, Blanchard C, Guerraz M, Montagnini A, Kavounoudias A. Optimal visuotactile integration for velocity discrimination of self-hand movements. J Neurophysiol 116: 1522–1535, 2016. doi: 10.1152/jn.00883.2015.

- Chen A, DeAngelis GC, Angelaki DE. Representation of vestibular and visual cues to self-motion in ventral intraparietal cortex. J Neurosci 31: 12036–12052, 2011. doi: 10.1523/JNEUROSCI.0395-11.2011.

- Chen A, DeAngelis GC, Angelaki DE. Functional specializations of the ventral intraparietal area for multisensory heading discrimination. J Neurosci 33: 3567–3581, 2013. doi: 10.1523/JNEUROSCI.4522-12.2013.

- Cléry J, Guipponi O, Odouard S, Wardak C, Ben Hamed S. Impact prediction by looming visual stimuli enhances tactile detection. J Neurosci 35: 4179–4189, 2015. doi: 10.1523/JNEUROSCI.3031-14.2015.

- Colavita FB. Human sensory dominance. Percept Psychophys 16: 409–412, 1974. doi: 10.3758/BF03203962.

- Coultrip R, Granger R, Lynch G. A cortical model of winner-take-all competition via lateral inhibition. Neural Netw 5: 47–54, 1992. doi: 10.1016/S0893-6080(05)80006-1.

- Craig JC. Visual motion interferes with tactile motion perception. Perception 35: 351–367, 2006. doi: 10.1068/p5334.

- Cullen KE. The neural encoding of self-motion. Curr Opin Neurobiol 21: 587–595, 2011. doi: 10.1016/j.conb.2011.05.022.

- Cullen KE. The vestibular system: multimodal integration and encoding of self-motion for motor control. Trends Neurosci 35: 185–196, 2012. doi: 10.1016/j.tins.2011.12.001.

- Driver J, Spence C. Cross-modal links in spatial attention. Philos Trans R Soc Lond B Biol Sci 353: 1319–1331, 1998a. doi: 10.1098/rstb.1998.0286.

- Driver J, Spence C. Attention and the crossmodal construction of space. Trends Cogn Sci 2: 254–262, 1998b. doi: 10.1016/S1364-6613(98)01188-7.

- Duffy CJ. MST neurons respond to optic flow and translational movement. J Neurophysiol 80: 1816–1827, 1998.

- Duffy CJ, Wurtz RH. Sensitivity of MST neurons to optic flow stimuli. I. A continuum of response selectivity to large-field stimuli. J Neurophysiol 65: 1329–1345, 1991.

- Duhamel JR, Colby CL, Goldberg ME. Ventral intraparietal area of the macaque: congruent visual and somatic response properties. J Neurophysiol 79: 126–136, 1998.

- Ernst MO, Banks MS. Humans integrate visual and haptic information in a statistically optimal fashion. Nature 415: 429–433, 2002. doi: 10.1038/415429a.

- Espinosa JS, Stryker MP. Development and plasticity of the primary visual cortex. Neuron 75: 230–249, 2012. doi: 10.1016/j.neuron.2012.06.009.

- Farrer C, Franck N, Paillard J, Jeannerod M. The role of proprioception in action recognition. Conscious Cogn 12: 609–619, 2003. doi: 10.1016/S1053-8100(03)00047-3.

- Fetsch CR, Turner AH, DeAngelis GC, Angelaki DE. Dynamic reweighting of visual and vestibular cues during self-motion perception. J Neurosci 29: 15601–15612, 2009. doi: 10.1523/JNEUROSCI.2574-09.2009.

- Fründ I, Haenel NV, Wichmann FA. Inference for psychometric functions in the presence of nonstationary behavior. J Vis 11: 16, 2011. doi: 10.1167/11.6.16.

- Gibson JJ. The Perception of the Visual World. Boston: Houghton Mifflin, 1950.

- Gilbert CD, Li W. Adult visual cortical plasticity. Neuron 75: 250–264, 2012. doi: 10.1016/j.neuron.2012.06.030.

- Glasauer S, Schneider E, Grasso R, Ivanenko YP. Space-time relativity in self-motion reproduction. J Neurophysiol 97: 451–461, 2007. doi: 10.1152/jn.01243.2005.

- Goebel R, Khorram-Sefat D, Muckli L, Hacker H, Singer W. The constructive nature of vision: direct evidence from functional magnetic resonance imaging studies of apparent motion and motion imagery. Eur J Neurosci 10: 1563–1573, 1998. doi: 10.1046/j.1460-9568.1998.00181.x.

- Goldstone S, Lhamon WT. Studies of auditory-visual differences in human time judgment. 1. Sounds are judged longer than lights. Percept Mot Skills 39: 63–82, 1974. doi: 10.2466/pms.1974.39.1.63.

- Grunewald A, Linden JF, Andersen RA. Responses to auditory stimuli in macaque lateral intraparietal area. I. Effects of training. J Neurophysiol 82: 330–342, 1999.

- Gu Y, Angelaki DE, DeAngelis GC. Neural correlates of multisensory cue integration in macaque MSTd. Nat Neurosci 11: 1201–1210, 2008. doi: 10.1038/nn.2191.

- Gu Y, Watkins PV, Angelaki DE, DeAngelis GC. Visual and nonvisual contributions to three-dimensional heading selectivity in the medial superior temporal area. J Neurosci 26: 73–85, 2006. doi: 10.1523/JNEUROSCI.2356-05.2006.

- Guipponi O, Cléry J, Odouard S, Wardak C, Ben Hamed S. Whole brain mapping of visual and tactile convergence in the macaque monkey. Neuroimage 117: 93–102, 2015. doi: 10.1016/j.neuroimage.2015.05.022.

- Guipponi O, Wardak C, Ibarrola D, Comte J-C, Sappey-Marinier D, Pinède S, Ben Hamed S. Multimodal convergence within the intraparietal sulcus of the macaque monkey. J Neurosci 33: 4128–4139, 2013. doi: 10.1523/JNEUROSCI.1421-12.2013.

- Hagen MC, Franzén O, McGlone F, Essick G, Dancer C, Pardo JV. Tactile motion activates the human middle temporal/V5 (MT/V5) complex. Eur J Neurosci 16: 957–964, 2002. doi: 10.1046/j.1460-9568.2002.02139.x.

- Harris LR, Sakurai K, Beaudot WHA. Tactile flow overrides other cues to self motion. Sci Rep 7: 1059, 2017. doi: 10.1038/s41598-017-01111-w.

- Hlavacka F, Mergner T, Schweigart G. Interaction of vestibular and proprioceptive inputs for human self-motion perception. Neurosci Lett 138: 161–164, 1992. doi: 10.1016/0304-3940(92)90496-T.

- Huk AC, Dougherty RF, Heeger DJ. Retinotopy and functional subdivision of human areas MT and MST. J Neurosci 22: 7195–7205, 2002.

- Jiang F, Beauchamp MS, Fine I. Re-examining overlap between tactile and visual motion responses within hMT+ and STS. Neuroimage 119: 187–196, 2015. doi: 10.1016/j.neuroimage.2015.06.056.

- Kaliuzhna M, Ferrè ER, Herbelin B, Blanke O, Haggard P. Multisensory effects on somatosensation: a trimodal visuo-vestibular-tactile interaction. Sci Rep 6: 26301, 2016. doi: 10.1038/srep26301.

- Kaminiarz A, Schlack A, Hoffmann K-P, Lappe M, Bremmer F. Visual selectivity for heading in the macaque ventral intraparietal area. J Neurophysiol 112: 2470–2480, 2014. doi: 10.1152/jn.00410.2014.

- Kandula M, Hofman D, Dijkerman HC. Visuo-tactile interactions are dependent on the predictive value of the visual stimulus. Neuropsychologia 70: 358–366, 2015. doi: 10.1016/j.neuropsychologia.2014.12.008.

- Kaneko S, Murakami I. Perceived duration of visual motion increases with speed. J Vis 9: 14, 2009. doi: 10.1167/9.7.14.

- Kapralos B, Zikovitz D, Jenkin MR, Harris LR. Auditory cues in the perception of self motion. Audio Eng Soc 116th Conv Pap May 8–11, 2004, Berlin, Germany.

- Kastner S, Ungerleider LG. The neural basis of biased competition in human visual cortex. Neuropsychologia 39: 1263–1276, 2001. doi: 10.1016/S0028-3932(01)00116-6.

- Kleiner M, Brainard D, Pelli D. What’s new in Psychtoolbox-3? Perception 36 ECVP Abstract Suppl: 14, 2007.

- Knudsen EI, Brainard MS. Creating a unified representation of visual and auditory space in the brain. Annu Rev Neurosci 18: 19–43, 1995. doi: 10.1146/annurev.ne.18.030195.000315.

- Lappe M. Computational mechanisms for optic flow analysis in primate cortex. Int Rev Neurobiol 44: 235–268, 2000. doi: 10.1016/S0074-7742(08)60745-X.

- Lappe M, Bremmer F, Pekel M, Thiele A, Hoffmann KP. Optic flow processing in monkey STS: a theoretical and experimental approach. J Neurosci 16: 6265–6285, 1996.

- Liaci E, Bach M, Tebartz van Elst L, Heinrich SP, Kornmeier J. Ambiguity in tactile apparent motion perception. PLoS One 11: e0152736, 2016. doi: 10.1371/journal.pone.0152736.

- Liu S, Angelaki DE. Vestibular signals in macaque extrastriate visual cortex are functionally appropriate for heading perception. J Neurosci 29: 8936–8945, 2009. doi: 10.1523/JNEUROSCI.1607-09.2009.

- Maciokas JB, Britten KH. Extrastriate area MST and parietal area VIP similarly represent forward headings. J Neurophysiol 104: 239–247, 2010. doi: 10.1152/jn.01083.2009.

- Marini F, Romano D, Maravita A. The contribution of response conflict, multisensory integration, and body-mediated attention to the crossmodal congruency effect. Exp Brain Res 235: 873–887, 2017. doi: 10.1007/s00221-016-4849-4.

- Pelli DG. The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spat Vis 10: 437–442, 1997. doi: 10.1163/156856897X00366.

- Petrini K, Caradonna A, Foster C, Burgess N, Nardini M. How vision and self-motion combine or compete during path reproduction changes with age. Sci Rep 6: 29163, 2016. doi: 10.1038/srep29163.

- Prsa M, Gale S, Blanke O. Self-motion leads to mandatory cue fusion across sensory modalities. J Neurophysiol 108: 2282–2291, 2012. doi: 10.1152/jn.00439.2012.

- Recanzone GH, Schreiner CE, Merzenich MM. Plasticity in the frequency representation of primary auditory cortex following discrimination training in adult owl monkeys. J Neurosci 13: 87–103, 1993.

- Ricciardi E, Vanello N, Sani L, Gentili C, Scilingo EP, Landini L, Guazzelli M, Bicchi A, Haxby JV, Pietrini P. The effect of visual experience on the development of functional architecture in hMT+. Cereb Cortex 17: 2933–2939, 2007. doi: 10.1093/cercor/bhm018.

- Robinson CW, Chandra M, Sinnett S. Existence of competing modality dominances. Atten Percept Psychophys 78: 1104–1114, 2016. doi: 10.3758/s13414-016-1061-3.

- Schlack A, Hoffmann K-P, Bremmer F. Interaction of linear vestibular and visual stimulation in the macaque ventral intraparietal area (VIP). Eur J Neurosci 16: 1877–1886, 2002. doi: 10.1046/j.1460-9568.2002.02251.x.

- Schlack A, Sterbing-D’Angelo SJ, Hartung K, Hoffmann K-P, Bremmer F. Multisensory space representations in the macaque ventral intraparietal area. J Neurosci 25: 4616–4625, 2005. doi: 10.1523/JNEUROSCI.0455-05.2005.

- Schroeder CE, Foxe J. Multisensory contributions to low-level, ‘unisensory’ processing. Curr Opin Neurobiol 15: 454–458, 2005. doi: 10.1016/j.conb.2005.06.008.

- Seno T, Ogawa M, Ito H, Sunaga S. Consistent air flow to the face facilitates vection. Perception 40: 1237–1240, 2011. doi: 10.1068/p7055.

- Talsma D, Senkowski D, Soto-Faraco S, Woldorff MG. The multifaceted interplay between attention and multisensory integration. Trends Cogn Sci 14: 400–410, 2010. doi: 10.1016/j.tics.2010.06.008.

- Tanaka K, Hikosaka K, Saito H, Yukie M, Fukada Y, Iwai E. Analysis of local and wide-field movements in the superior temporal visual areas of the macaque monkey. J Neurosci 6: 134–144, 1986.

- Tomassini A, Gori M, Burr D, Sandini G, Morrone MC. Perceived duration of visual and tactile stimuli depends on perceived speed. Front Integr Neurosci 5: 51, 2011. doi: 10.3389/fnint.2011.00051.

- Väljamäe A. Auditorily-induced illusory self-motion: a review. Brain Res Brain Res Rev 61: 240–255, 2009. doi: 10.1016/j.brainresrev.2009.07.001.

- Väljamäe A, Larsson P, Västfjäll D, Kleiner M. Sound representing self-motion in virtual environments enhances linear vection. Presence Teleoperators Virtual Environ 17: 43–56, 2008. doi: 10.1162/pres.17.1.43.

- van Kemenade BM, Seymour K, Wacker E, Spitzer B, Blankenburg F, Sterzer P. Tactile and visual motion direction processing in hMT+/V5. Neuroimage 84: 420–427, 2014. doi: 10.1016/j.neuroimage.2013.09.004.