Abstract

Background

In order to be practically useful, computer applications for patients with cancer must be easily usable by people with limited computer literacy and impaired vision or dexterity. We describe the usability development process for an application that collects quality of life and symptom information from patients with cancer.

Methods

Usability testing consisted of user testing with cancer patients to identify initial design problems and a survey to compare the computer application’s ease of use between elderly and younger patients.

Results

In the user-testing phase, seven men aged 56 to 77 with prostate cancer were observed using the application and interviewed afterwards; these sessions identified several usability concerns. Sixty patients with breast, gastrointestinal, or prostate cancer participated in the ease of use survey, 40% (n=24) of whom were aged 65 or older. Younger patients reported significantly higher ease of use scores than elderly patients (14.0 vs. 10.8, p=.001), even when prior computer and touch screen use were controlled for.

Conclusion

Elderly users reported lower ease of use scores than younger users; however, their average rating was still high: 10.8 on a scale of −16 to +16. It may be unrealistic to expect elderly or less computer-literate users to rate any application as positively as younger, more computer-savvy users. Perhaps it is enough that they rate the application positively and can use it without undue difficulty. We hope that our process can serve as a model for bridging the fields of computer usability and healthcare.

Keywords: Computer literacy, Quality-of-life, Elderly, Doctor–patient communication, Ease of use

Introduction

Computers are as ubiquitous in health care as in the rest of modern society [14]. In health care settings, most computer technologies are used by health professionals or clerical staff to keep track of health information, appointments, and billing; it is less common for computers to be used as patient care tools or by patients themselves. As the technology has advanced, however, computer applications have begun to improve doctor–patient communication [8, 13, 14, 28, 31]. This paper describes our efforts to design and test an easy-to-use computer application for acquiring and reporting patient-reported outcomes, including symptoms and quality of life.

In a previous study, we found that oncologists reporting adverse events in a clinical chemotherapy trial did not report approximately half of the symptoms that cancer patients reported on a standardized paper instrument [10]. Perhaps more surprisingly, approximately half of the symptoms that clinicians did report were not concurrently reported by patients. In reviewing these symptoms, the participating oncologists noted that if they had had access to the results patients were reporting, they might have been able to act on them. They challenged us to develop a computer application that would make these research-quality data available to them in real time. A number of studies have attempted to integrate quality-of-life assessments into clinical care because such information is recognized to be important [3, 8, 22, 27, 31], but practical concerns (time constraints, what information should be collected, how it should be used, etc.) are barriers to widespread adoption in clinical practice [11]. When we discussed these concerns with the participating oncologists, they asked that the application have the following properties:

Ease of use: patients, including those who were not computer literate or who had functional limitations, needed to be able to report their symptoms with minimal oversight, and the reports needed to be easily readable.

Efficiency: the data collection needed to take place in a way that did not disrupt or slow the flow of patients through clinic.

Timeliness: the reports needed to be available in time for the office visit.

Ensuring ease of use required that we “Know Our Users,” the first rule in usability design [12, 21, 29]. The users in this study were cancer patients, many of whom are elderly (age 65 and older). Populations are aging steadily, and 60% of cancer patients are elderly [9]. The US Census Bureau’s 2003 survey of computer and internet use in the United States confirmed that elderly Americans are less likely to use a computer (28% vs. 64% for all adults) and less likely to use the internet (25% vs. 59% for all adults) [6]. Functional impairments also become more common with age, particularly impairments in vision, musculoskeletal function, and cognitive function [7, 33]. The 2006 American Community Survey reported that 41% of people age 65 and older had a disability, including 16.5% with a severe vision or hearing impairment, 31.3% with a physical disability, and 12.4% with a cognitive disability such as difficulty learning, remembering, or concentrating [4]. Physical impairments often make manipulating a mouse and keyboard difficult [17]. Thus, designing an easily usable computer application for cancer patients must also take into account the usability needs of the elderly.

In 2003, when this study was conceived, tablet PCs (mobile touch screen computers) were the best option for addressing ease-of-use concerns for elderly patients while meeting the oncologists’ needs for efficiency and timeliness. First, a touch screen would be easier for novice computer users or those with limited dexterity to operate. The Institute of Medical Informatics found that elderly patients with little or no previous computer experience could easily use a touch screen computer [33]. Second, because tablets are portable, patients can use them in the waiting area, in the chemotherapy infusion unit, or in the exam room while waiting for the physician, maximizing efficiency and minimizing the impact on workflow. Finally, collecting the symptom and quality-of-life data electronically allows immediate calculation of scores and timely generation of reports and graphs for use during the office visit.

Next, we needed to develop a software application for the tablet PC that would collect the data in the most robust and usable way. Previous studies that used tablets for quality-of-life questionnaires report users’ reactions to and evaluations of the questionnaires, but apart from recommending larger text and larger selection boxes [20], they provide few details about software design or implementation [3, 16, 22]. In this study, we carefully designed and evaluated our software application for usability. This paper describes the process we used to ensure that our application is indeed usable by elderly users with cancer.

Methods

Application design

As mentioned previously, the tablet PC fulfilled our hardware needs. We chose an HP Compaq tc4200 tablet PC, which has a 12.1-in. touch screen display, can be rotated from laptop mode to tablet mode, and weighs 4.5 lb. The survey consisted of the 30 questions of the European Organization for Research and Treatment of Cancer Quality of Life Questionnaire C30 (QLQ-C30), a questionnaire designed to measure the quality of life of patients with cancer [1], plus a numeric rating scale from 0 (none) to 10 (worst possible) [18] for the severity of eight QLQ-C30 symptoms: pain, shortness of breath, fatigue, sleeplessness, appetite loss, nausea/vomiting, constipation, and diarrhea.

Our software needs for the survey application were more complex. The graphical user interface was designed using US Department of Health and Human Services guidelines developed by the National Cancer Institute [30] and rendered as a series of web pages. Because the tablets could lose network connectivity while in use, the web pages were hosted locally on each tablet by an Apache web server, and patient responses were stored temporarily in a local Sybase SQL Anywhere® database on the tablet. Because tablets are vulnerable to being dropped and other mishaps, we periodically synchronized these local data with a mainframe SQL server database maintained by our institution, so that responses would not be lost if a tablet were damaged.
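The offline-first storage pattern described above can be sketched in Python. This is a minimal sketch, not the authors’ implementation: Python’s built-in `sqlite3` module stands in for both the Sybase SQL Anywhere database on the tablet and the institution’s central database, and the table layout and the function names `open_store`, `record_answer`, and `sync` are hypothetical.

```python
import sqlite3
from datetime import datetime, timezone

# Hypothetical schema; sqlite3 stands in for Sybase SQL Anywhere (tablet)
# and for the institution's central database.
SCHEMA = """
CREATE TABLE IF NOT EXISTS responses (
    patient_id  TEXT    NOT NULL,
    question_id INTEGER NOT NULL,
    answer      INTEGER NOT NULL,
    recorded_at TEXT    NOT NULL,
    synced      INTEGER NOT NULL DEFAULT 0,
    PRIMARY KEY (patient_id, question_id)
)
"""


def open_store(path=":memory:"):
    """Open (or create) a response store."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def record_answer(local, patient_id, question_id, answer):
    """Save a response on the tablet; needs no network connection.

    Re-answering a question replaces the row and marks it unsynced again.
    """
    local.execute(
        "INSERT OR REPLACE INTO responses "
        "(patient_id, question_id, answer, recorded_at, synced) "
        "VALUES (?, ?, ?, ?, 0)",
        (patient_id, question_id, answer, datetime.now(timezone.utc).isoformat()),
    )
    local.commit()


def sync(local, central):
    """Copy unsynced rows to the central database and return how many were copied.

    If the central write fails, the exception propagates and the local rows
    stay unsynced, so the next call retries them.
    """
    rows = local.execute(
        "SELECT patient_id, question_id, answer, recorded_at "
        "FROM responses WHERE synced = 0"
    ).fetchall()
    with central:  # commits on success, rolls back on error
        central.executemany(
            "INSERT OR REPLACE INTO responses "
            "(patient_id, question_id, answer, recorded_at, synced) "
            "VALUES (?, ?, ?, ?, 1)",
            rows,
        )
    with local:  # only reached if the central commit succeeded
        local.executemany(
            "UPDATE responses SET synced = 1 WHERE patient_id = ? AND question_id = ?",
            [(r[0], r[1]) for r in rows],
        )
    return len(rows)
```

Rows are marked as synced only after the central copy commits, so a dropped connection simply leaves them queued for the next synchronization.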

We incorporated several design decisions to accommodate patients with poor vision. For elderly users, fonts of 12 points or larger are most readable, and fonts of 16 points or larger are needed for those with limited vision. Also, sans serif typefaces (e.g., Arial rather than Times New Roman or Courier) are crisper on a computer screen and have been shown to work well for elderly populations [3, 5, 6, 13]. We displayed text in black Arial at 18 to 27 points.

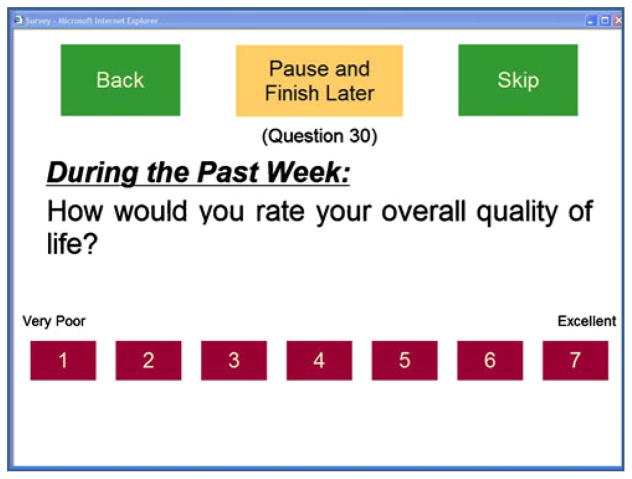

Furthermore, age-related changes in the lens and cornea can affect color discrimination. The lens darkens and yellows, blocking short-wavelength light, so blues appear darker and patients can struggle to differentiate colors that differ only in their blue content. Primary colors are best, with reds and yellows being the easiest to discern [32]. For these reasons, we used a white background, red answer option buttons, green navigation buttons, and yellow pause and stop buttons (Fig. 1).

Fig. 1.

Example question page
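The typographic and color choices above could be collected in one style configuration and checked automatically whenever the pages change. A minimal Python sketch follows; the class and function names are hypothetical, the list of sans serif faces is illustrative, and the hex color values are assumptions, since the text names the colors but not their exact shades.

```python
from dataclasses import dataclass

# The text cites 12+ pt for most elderly readers and 16+ pt for readers
# with limited vision; this check enforces the stricter threshold.
MIN_PT_LIMITED_VISION = 16

# Typefaces treated as sans serif for this check (illustrative, not exhaustive).
SANS_SERIF_FACES = {"Arial", "Helvetica", "Verdana"}


@dataclass(frozen=True)
class SurveyStyle:
    """Hypothetical style settings for the survey pages."""
    font_family: str = "Arial"
    min_font_pt: int = 18
    max_font_pt: int = 27
    text_color: str = "#000000"       # black text
    background: str = "#FFFFFF"       # white background
    answer_button: str = "#FF0000"    # red answer options (shade assumed)
    nav_button: str = "#00A000"       # green navigation boxes (shade assumed)
    pause_button: str = "#FFFF00"     # yellow pause/stop boxes (shade assumed)
    selected_answer: str = "#FFFF00"  # a chosen answer box turns yellow


def check_style(style: SurveyStyle) -> list:
    """Return a list of problems; an empty list means the style passes."""
    problems = []
    if style.min_font_pt < MIN_PT_LIMITED_VISION:
        problems.append(
            f"smallest font ({style.min_font_pt} pt) is below {MIN_PT_LIMITED_VISION} pt"
        )
    if style.min_font_pt > style.max_font_pt:
        problems.append("min_font_pt is larger than max_font_pt")
    if style.font_family not in SANS_SERIF_FACES:
        problems.append(f"{style.font_family!r} is not a recognized sans serif face")
    if len({style.answer_button, style.nav_button, style.pause_button}) < 3:
        problems.append("answer, navigation, and pause buttons must differ in color")
    return problems
```

Keeping every visual decision in one frozen object means a page cannot quietly drift from the agreed font sizes or color roles.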

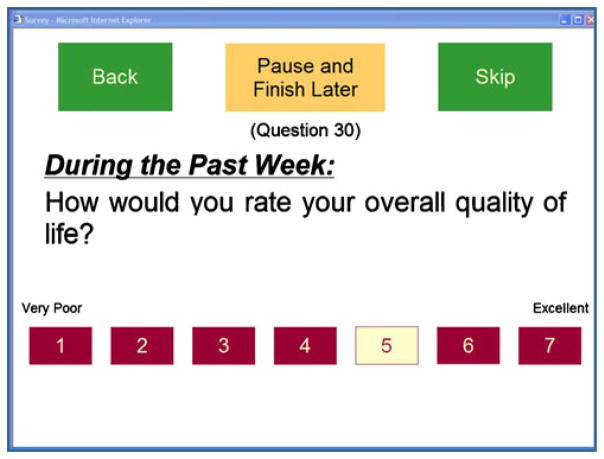

The most important design decision was how patients would select their responses to the survey questions. The target area of a standard radio button was too small for patients with limited vision and dexterity. Instead, we used large boxes for the response options, and to make both the selection boxes and the question text as large as possible, we presented only one question at a time. Figure 1 shows an example question in the survey. We also added a visual cue that a response had been selected: the selected response box turns yellow, as shown in Fig. 2. If the patient goes back to review answered questions, the selected response box is still shown in yellow.

Fig. 2.

Example question page with selected response

The final issue we considered was ease of navigation. We provided green boxes at the top of every page: the box on the left goes back (omitted for the first question), and the box on the right goes ahead (Fig. 2). Because the survey presents one question at a time, we limited navigation to one question back or one question ahead to minimize skipped questions. When a question is answered, the survey automatically advances to the next question. To avoid confusion about moving back and forward in the survey, we removed the standard web browser navigation buttons from the window, so that users can navigate only with the boxes on the screen. In case the survey is interrupted, we provide a box at the top center of every page to pause the survey. When this box is selected, the session ends; when the patient restarts the survey, it automatically resumes where the patient paused.
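The navigation rules above behave like a small state machine. The following Python sketch is hypothetical (the class and method names are ours) and keeps its state in memory, whereas the application described here would need to persist the answers and current position in the tablet database so that a paused survey can resume in a later session.

```python
from dataclasses import dataclass, field


@dataclass
class SurveySession:
    """Hypothetical one-question-at-a-time survey navigator."""
    n_questions: int
    current: int = 0                             # question on screen (0-based)
    answers: dict = field(default_factory=dict)  # question index -> answer value
    paused: bool = False

    def answer(self, value: int) -> None:
        """Record a response, then advance automatically (never past the last question)."""
        self.answers[self.current] = value
        self.ahead()

    def back(self) -> None:
        """Go back one question; there is no back box on the first question."""
        if self.current > 0:
            self.current -= 1

    def ahead(self) -> None:
        """Go ahead at most one question."""
        if self.current < self.n_questions - 1:
            self.current += 1

    def selected(self, index: int):
        """Answer chosen earlier (drawn in yellow when revisited), or None."""
        return self.answers.get(index)

    def pause(self) -> None:
        """End the session but keep the position and answers."""
        self.paused = True

    def resume(self) -> int:
        """Restart where the patient paused and return that question index."""
        self.paused = False
        return self.current

    def is_complete(self) -> bool:
        """True once every question has an answer."""
        return len(self.answers) == self.n_questions
```

Because `back` and `ahead` each move a single step, a patient can never jump over several unanswered questions at once, which is the intent of the one-question limit.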

Usability testing

Usability testing helps software developers determine whether computer applications are useful and easy to use. Jakob Nielsen, widely considered an authority on usability testing, has shown that about 85% of usability problems can be identified by testing five subjects, whereas about 15 subjects are required to identify essentially all usability problems [25]. Instead of testing once with 15 subjects, he recommends testing three to five subjects, modifying the application, and then retesting with three to five new subjects in iterative cycles until no new major problems are identified [24].
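Nielsen and Landauer’s model [25] gives the expected proportion of problems found by n test users as 1 − (1 − L)^n, where L is the probability that a single user exposes a given problem; Nielsen reports an average L of about 0.31 across projects [24]. The short Python sketch below evaluates that model; L varies between projects, so 0.31 is only a default, and the function name is ours.

```python
def proportion_found(n_users: int, discovery_rate: float = 0.31) -> float:
    """Expected share of usability problems found by n_users test subjects.

    Nielsen-Landauer model: 1 - (1 - L) ** n, where L (discovery_rate) is the
    probability that one user exposes a given problem.
    """
    if n_users < 0:
        raise ValueError("n_users must be non-negative")
    if not 0.0 <= discovery_rate <= 1.0:
        raise ValueError("discovery_rate must be between 0 and 1")
    return 1.0 - (1.0 - discovery_rate) ** n_users


if __name__ == "__main__":
    for n in (1, 3, 5, 15):
        print(f"{n:2d} users: {proportion_found(n):.1%}")
```

With L = 0.31, five users are expected to find about 84% of problems and 15 users about 99.6%, which is why several small iterative rounds are more economical than one large test.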

Our usability testing was performed in two phases: a user-testing phase to identify problems with the initial design and an ease of use survey phase to measure the computer application’s usefulness within a clinical environment. All study patients gave their informed consent prior to participating in either phase.

The initial design was user-tested on a convenience sample of patients with metastatic prostate cancer receiving chemotherapy at Oregon Health and Science University (OHSU). All subjects had prostate cancer because we were planning to use the computer application in a clinical trial involving men with prostate cancer. A research assistant asked subjects to read the on-screen instructions, use the navigational buttons, and complete the survey questions. Usability was assessed from the research assistant’s observations and from a post-test interview. She invited subjects to “talk out loud” about what they were experiencing during this process (a usability testing practice in which subjects verbalize their thoughts and the rationale for their actions [19]) and recorded her observations in a log. Observations focused on (1) reading and question comprehension (as indicated by requests for clarification), (2) answer button identification (appropriate option chosen), (3) delays or pauses of >5 s in locating the answer button, (4) skipping a question, (5) going back to a previous question, and (6) ability to perform the task with ease (little to no assistance requested). Afterwards, the research assistant conducted a semi-structured interview asking for subjects’ overall impressions, likes and dislikes, and additional comments, which gave us information for design changes. We planned to change the computer application if we observed obstacles that interfered with subjects completing any of the tasks. For more subjective concerns (e.g., dislikes), we planned to make a change if a concern raised by one subject was then validated by subsequent subjects.
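The change rule described above can be stated precisely in code. In this hypothetical Python sketch, the category labels, the `Observation` record, and `issues_to_fix` are illustrative names, and a subjective concern counts as validated once at least two different subjects raise it, which is one reading of “validated by subsequent subjects.”

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical labels for the six observation categories in the text.
CATEGORIES = {
    "comprehension",          # (1) asked for clarification
    "answer_identification",  # (2) appropriate option chosen
    "delay_over_5s",          # (3) >5 s to locate the answer button
    "skipped_question",       # (4) skipped a question
    "went_back",              # (5) returned to a previous question
    "task_ease",              # (6) needed more than a little assistance
}


@dataclass(frozen=True)
class Observation:
    subject: int
    category: str
    issue: str       # short label, e.g. "no feedback after tap"
    blocking: bool   # True if it kept the subject from completing a task

    def __post_init__(self):
        if self.category not in CATEGORIES:
            raise ValueError(f"unknown category: {self.category}")


def issues_to_fix(observations, concerns):
    """Return the set of issues that should trigger a design change.

    observations: Observation records from the observer's log.
    concerns: (subject, issue) pairs of subjective concerns from interviews.
    Rule: fix any issue that blocked a task; fix a subjective concern once
    at least two different subjects have raised it.
    """
    to_fix = {o.issue for o in observations if o.blocking}
    raised_by = defaultdict(set)
    for subject, issue in concerns:
        raised_by[issue].add(subject)
    to_fix |= {issue for issue, subjects in raised_by.items() if len(subjects) >= 2}
    return to_fix
```

Separating blocking observations from subjective concerns mirrors the two thresholds in the protocol: one obstacle is enough to act, while a preference needs corroboration.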

Ease of use survey

To measure the computer application’s usefulness in a clinical environment, we recruited patients with any form of cancer starting a new chemotherapy regimen at OHSU. Subjects were identified by their oncologist or nurse coordinator as meeting eligibility criteria. For this portion of the study, the research assistant handed the tablet computer to the subject, demonstrated the use of the stylus, and asked them to read the on-screen instructions and answer the quality of life and symptom questions. She did not provide further assistance. To address the oncologists’ concerns about efficiency and timeliness, we scheduled patients to arrive 15–30 min prior to their office visit and included a pause option, so that subjects could interrupt the survey at any time.

Afterwards, subjects completed a paper survey evaluating the computer application’s ease of use based on Ruland’s eight-item adaptation of Davis’ ease of use survey [5, 27]. Davis’ survey has demonstrated validity, reliability, internal consistency, and replication reliability [2, 15]. The ease of use questions are answered on a five-point Likert scale (strongly disagree=−2, disagree=−1, neutral=0, agree=1, and strongly agree=2), and the responses are summed to create a summary score (range, −16=worst to +16=best). The survey also included nine investigator-authored questions in Davis’ Likert scale format to evaluate specific features of the application, and three questions on prior computer use from the US Census Bureau’s Computer Use and Internet Use Survey [6].
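The scoring rule above is simple enough to state as code. This minimal Python sketch uses hypothetical function and constant names; it rejects incomplete questionnaires because the text does not say how missing items were handled.

```python
# Likert coding used by the ease of use survey.
LIKERT = {
    "strongly disagree": -2,
    "disagree": -1,
    "neutral": 0,
    "agree": 1,
    "strongly agree": 2,
}
N_ITEMS = 8  # Ruland's eight-item adaptation of Davis' scale


def ease_of_use_score(responses):
    """Sum eight Likert responses into a summary score from -16 (worst) to +16 (best).

    Raises ValueError for an incomplete questionnaire or an unknown response label.
    """
    if len(responses) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} responses, got {len(responses)}")
    try:
        return sum(LIKERT[r.strip().lower()] for r in responses)
    except KeyError as exc:
        raise ValueError(f"unrecognized response: {exc.args[0]!r}") from None
```

For example, four “agree” and four “strongly agree” responses give 4 × 1 + 4 × 2 = 12.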

To assess our application’s usability for elderly patients, we compared their mean Davis summary score with the mean score of patients aged 64 and younger using an independent samples t test. To examine whether age was associated with ease of use independently of prior computer experience, we also performed a linear regression with Davis’ summary score as the dependent variable and age, prior computer use, and prior touch screen use as independent variables.
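The age comparison above can be sketched with Python’s standard library. This sketch assumes Student’s pooled-variance t test (the text says only “independent samples t test,” so Welch’s unequal-variance version is also possible), and the helper names are hypothetical. A two-sided p-value would come from a t distribution with `df` degrees of freedom, for example `2 * scipy.stats.t.sf(abs(t), df)` if SciPy is available.

```python
from math import sqrt
from statistics import mean, variance


def split_by_age(records, cutoff=65):
    """Split (age, score) pairs into (younger, elderly) score lists."""
    younger = [score for age, score in records if age < cutoff]
    elderly = [score for age, score in records if age >= cutoff]
    return younger, elderly


def pooled_t(group_a, group_b):
    """Student's independent-samples t statistic with pooled variance.

    Returns (t, df); a positive t means group_a has the higher mean.
    """
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two observations")
    df = n_a + n_b - 2
    # statistics.variance uses the n - 1 (sample) denominator.
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    se = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(group_a) - mean(group_b)) / se, df
```

The pooled version assumes similar variances in both groups; when the spreads differ markedly, Welch’s test is the more conservative choice.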

Results

For the user-testing phase of this study, seven participants aged 56 to 77 (mean=66 years) were able to complete the survey and navigational tasks without instructions (100% success rate). The average time from start to finish was 16 min (range, 10–22 min). All usability concerns were identified by direct observation and corroborated in subsequent interviews.

Navigation and response buttons: The participants needed a stronger visual cue to let them know they had successfully tapped a response or navigational button with the pen, particularly when there was a processing delay before the next item appeared. This was addressed by changing the color of a button immediately after it was pressed.

Screen visibility: Patients found the tablet screen hard to read unless it was held at a particular angle. This was addressed by purchasing portable, adjustable-angle lap tables.

Tablet settings: The digital pen includes a “button” equivalent to a mouse “right-click” button. Subjects who pressed it got menus that could be confusing or distracting. Also, the tablet would occasionally reorient its screen between landscape and portrait modes, which caused some web pages to be cut off. We addressed these problems by disabling the right-click option on the digital pen and locking the tablet display in landscape mode.

Tablet computer limitations: We found the start-up process for the tablets time-consuming and complicated. Wireless internet access can be lost or unavailable in “dead” spots. Tablets are easy targets for theft unless they are chained to a heavier object, but that defeats the portability for which they were designed. They are also vulnerable to drops. Because this was a research study, the research assistant took responsibility for starting the computer and loading the application and ensured that the devices were not dropped or stolen; in a non-research setting, however, these would likely be real obstacles to incorporating tablet computers into clinical care.

Observationally, the seven participants completed 83 of 91 (91.2%) navigational tasks “with ease,” that is, without additional instructions from the research assistant. Because we felt the changes we made were relatively minor (the only change to the computer application itself was to eliminate the delay in the color change of response buttons), we elected not to repeat user testing after making the changes described above.

For the ease of use phase, we recruited 60 patients with breast, gastrointestinal, or prostate cancer. Forty percent (n=24) of the subjects were aged 65 or older; these were mostly men with metastatic prostate cancer. Of the elderly subjects, 33% (n=8) did not own or use a computer, compared with 8% (n=3) of the younger subjects (see Table 1 for a demographic summary).

Table 1.

Demographics

| | <65 years (n=36) | ≥65 years (n=24) |
|---|---|---|
| Female | 28 (78%) | 1 (4%) |
| Cancer type | | |
| Prostate | 8 (22%) | 23 (96%) |
| Breast | 25 (69%) | 1 (4%) |
| GI | 3 (8%) | 0 |
| Tumor stage | | |
| I | 10 (28%) | 1 (4%) |
| II | 12 (33%) | 0 |
| III | 2 (6%) | 2 (8%) |
| IV | 12 (33%) | 21 (88%) |
| Minority | 1 (3%) | 1 (4%) |
| High school graduate or more | 34 (94%) | 22 (96%) |
| Working full or part time | 19 (53%) | 3 (13%) |
| Computer use | | |
| Never used a touch screen | 2 (6%) | 10 (44%) |
| Do not have or use a computer | 3 (8%) | 8 (33%) |

The subjects’ responses to Davis’ ease of use survey are reported in Table 2, and their responses to the investigator-authored questions about our tablet computers and application are reported in Table 3. Younger subjects reported significantly higher ease of use scores than elderly subjects (14.0 vs. 10.8, p=.001). The highest possible ease of use score was given by 53% (n=19) of younger users and 33% (n=8) of elderly users; one elderly user and no younger users gave the application a negative score. In linear regression, older age continued to predict lower ease of use scores when prior computer use and prior touch screen use were controlled for (beta=−0.338 per year of age, p=.016), whereas neither computer use (p=.524) nor prior touch screen use (p=.213) was significantly associated with ease of use. A collinearity check yielded variance inflation factors of 1.28 to 1.60, suggesting that multicollinearity was not a concern.
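The collinearity check above uses the variance inflation factor, VIF_j = 1 / (1 − R_j²), where R_j² comes from regressing predictor j on the other predictors. The dependency-free Python sketch below fits ordinary least squares through the normal equations, which is adequate for a handful of predictors but is not how a statistics package would do it numerically; the function names are hypothetical.

```python
def _solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b_i] for row, b_i in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda row: abs(m[row][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: predictors are perfectly collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def r_squared(y, predictors):
    """R^2 of an OLS fit of y on an intercept plus the given predictor columns."""
    cols = [[1.0] * len(y)] + list(predictors)
    k = len(cols)
    xtx = [[sum(p * q for p, q in zip(cols[i], cols[j])) for j in range(k)] for i in range(k)]
    xty = [sum(p * q for p, q in zip(cols[i], y)) for i in range(k)]
    beta = _solve(xtx, xty)
    fitted = [sum(beta[i] * cols[i][t] for i in range(k)) for t in range(len(y))]
    y_bar = sum(y) / len(y)
    ss_res = sum((yt - ft) ** 2 for yt, ft in zip(y, fitted))
    ss_tot = sum((yt - y_bar) ** 2 for yt in y)
    return 1.0 - ss_res / ss_tot


def vif(columns):
    """Variance inflation factor for each predictor column."""
    out = []
    for j, col in enumerate(columns):
        others = [c for i, c in enumerate(columns) if i != j]
        r2 = r_squared(col, others)
        out.append(float("inf") if r2 >= 1.0 else 1.0 / (1.0 - r2))
    return out
```

A VIF of 1 means a predictor shares no variance with the others; the values of 1.28 to 1.60 reported above sit well below commonly cited rule-of-thumb cutoffs such as 5 or 10.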

Table 2.

Ease of use

| Mean (standard deviation)ᵃ | <65 years (n=36) | ≥65 years (n=23) |
|---|---|---|
| Learning to operate the computer to fill the questionnaire is easy for me | 1.8 (0.38) | 1.5 (0.93) |
| I find it easy to get the computer to do what I want it to do | 1.7 (0.75) | 1.4 (1.1) |
| It was clear and understandable how to operate the computer | 1.8 (0.45) | 1.5 (0.88) |
| I find the computer to be flexible to interact with | 1.8 (0.38) | 1.3 (1.1) |
| It would be easy for me to become skilled at using the computer | 1.6 (0.70) | 1.1 (1.0) |
| Questions were easy to answer | 1.7 (0.53) | 1.3 (0.85) |
| Overall, I find the computer easy to use | 1.8 (0.38) | 1.5 (0.89) |
| I find this to be a useful tool | 1.7 (0.57) | 1.3 (0.99) |
| Mean summary score (possible range, −16 to +16) | 14.0 (3.2) | 10.8 (6.1) |

ᵃ Mean scores on Likert scale: −2=strongly disagree, −1=disagree, 0=neutral, 1=agree, and 2=strongly agree

Table 3.

Study specific ease of use questions

| Mean (standard deviation)ᵃ | <65 years (n=36) | ≥65 years (n=23) |
|---|---|---|
| It is easy for me to read the text on the computer screen | 1.8 (0.38) | 1.6 (0.50) |
| The screen is easy to see, clear, and little/no glare | 1.8 (0.38) | 1.54 (0.72) |
| The screen colors are distracting | −1.5 (0.97) | −1.0 (1.30) |
| I can go back and change my answers | 1.6 (0.73) | 1.4 (0.88) |
| If I need to stop the session and restart, I can | 1.6 (0.68) | 0.96 (1.3) |
| It is easy for me to use the stylus pen to click the option buttons | 1.8 (0.55) | 1.5 (0.72) |
| Holding the tablet computer is easy and comfortable | 1.6 (0.73) | 1.5 (0.72) |
| I would prefer to answer survey questions on paper, rather than on a computer | −1.2 (1.3) | −0.88 (1.2) |
| I enjoyed using the tablet PC and stylus (pen) to answer questions | 1.70 (0.53) | −1.3 (0.92) |

ᵃ Mean scores on Likert scale: −2=strongly disagree, −1=disagree, 0=neutral, 1=agree, and 2=strongly agree

Discussion

The ease of use survey found that, despite our best efforts, elderly users reported lower ease of use summary scores than younger users. Nevertheless, elderly participants gave a mean summary score of 10.8 (scale, −16 to +16), their mean responses to all individual items fell between “agree” and “strongly agree,” and only one participant rated ease of use negatively. To our knowledge, there are no benchmarks for Davis’ ease of use instrument; however, Ruland used the same measure to evaluate her computerized system to support shared decision making in symptom management of cancer patients. Subjects in that study were men and women with cancer with a mean age of 56 years (SD=11), and they gave her computerized system a mean ease of use rating of 5.06 (SD=11.03), substantially lower than the ratings of our tablet computer application [27].

Another way of evaluating our design is to consider how well it follows standard usability principles, an approach known as heuristic evaluation [23]. Many sets of usability principles can be used for heuristic evaluation. One commonly cited list is that of Don Norman, who describes his principles in his book The Design of Everyday Things [26]. On further analysis, we found that by designing for our elderly users, we had in fact followed general usability guidelines that apply to everyone. Norman’s principles are as follows:

Visibility: We used large sans serif text, large selection boxes, and contrasting colors to help our aging population view the survey. We also provided orienting information such as the question number and time frame (e.g., “during the past week”).

Feedback: This refers to giving users information about the action that has just occurred. Our response boxes change color when selected, confirming to users that the response was registered. We added the words “Loading…please wait” during delays.

Constraints: Limiting choices can prevent errors. We removed the internet browser buttons so that users would not accidentally use them to try to navigate the survey. Preventing subjects from ending the survey until all questions were answered helped avoid missing data.

Mapping: This refers to the relationship between the application’s controls and their effects. We presented the response options in order of their numeric scale values, lowest on the left to highest on the right. We mapped our navigation boxes similarly, with back on the left and skip (ahead) on the right.

Consistency: This refers to using the same elements in the same way throughout the application. We presented all questions in the survey consistently, with 4- to 11-point scales shown as clickable boxes along the bottom of the page. All navigation buttons are the same color and appear in the same position on every page, reducing the need for explanations and making the interface easier to remember.

Affordance: This refers to properties that hint at how the application works. For example, a labeled square on a touch screen “affords” pushing; users recognize that the square works as a button. In our application, we used only clickable squares (buttons) as input controls, which makes the survey simpler and more intuitive for patients.

Our study has a number of obvious limitations. The sample size for user testing was small, although we are fairly confident that our findings are valid, given Nielsen’s finding that small groups can provide enough usability information [25] and the fact that our subsequent experience using the application has not identified new problems related to its design. The sample for our ease of use survey was not large enough to allow the multiple t tests needed to determine whether specific ease of use items were driving the age-related difference in the summary score. Because our sample consisted mostly of younger women with breast cancer and older men with prostate cancer, there may be additional confounding factors affecting ease of use ratings that we did not measure. Furthermore, we did not include non-English speakers in our study, and they might particularly benefit from assistance in communicating about symptoms and quality of life. Finally, our study focused mostly on ease of use and little on usefulness. A future study will examine the usefulness of the questionnaire content as we develop the printed reports that the application will generate for clinicians and patients to use during office visits. In particular, we want to know whether having this information available at the time of the office visit affects patient symptoms, symptom management practices, or doctor–patient communication.

In conclusion, we applied techniques from the computer usability field in an attempt to create a computer application that could be easily used by patients with cancer, including those with some physical and sensory disabilities. This process could also be applied to other patient populations. Elderly patients did not rate our application as highly for ease of use as younger patients did, but perhaps this is an unrealistic goal. Perhaps it is enough that they rate the application positively and can use it without undue confusion, time delays, or difficulties, particularly those introduced by unhelpful design features. We have described in detail the process and measures we used because, in addition to the value of our findings, we hope that our process can serve as a model for bridging the fields of computer usability and medicine and thus improve the accessibility and utility of future computer applications in clinical care.

Acknowledgments

This work was supported by a Career Development Award (to Dr. Fromme) from the National Cancer Institute (K07CA109511).

Contributor Information

Erik K. Fromme, Division of Hematology and Medical Oncology, Department of Medicine, Oregon Health and Science University, 3181 SW Sam Jackson Park Rd., L586, Portland, OR 97239, USA. Department of Radiation Medicine, Oregon Health and Science University, 3181 SW Sam Jackson Park Rd., Portland, OR 97239, USA. School of Nursing, Oregon Health and Science University, 3181 SW Sam Jackson Park Rd., Portland, OR 97236, USA

Tawni Kenworthy-Heinige, Division of Hematology and Medical Oncology, Department of Medicine, Oregon Health and Science University, 3181 SW Sam Jackson Park Rd., L586, Portland, OR 97239, USA.

Michelle Hribar, Department of Medical Informatics and Clinical Epidemiology, Oregon Health and Science University, 3181 SW Sam Jackson Park Rd., 526/BICC, Portland, OR 97236, USA.

References

- 1. Aaronson NK, Ahmedzai S, Bergman B, Bullinger M, Cull A, Duez NJ, Filiberti A, Flechtner H, Fleishman SB, deHaes JCJM, Kaasa S, Klee M, Osoba D, Razavi D, Rofe PB, Schraub S, Sneeuw K, Sullivan M, Takeda F. The European Organization for Research and Treatment of Cancer QLQ-C30: a quality-of-life instrument for use in international clinical trials in oncology. J Natl Cancer Inst. 1993;85:365–376. doi: 10.1093/jnci/85.5.365.

- 2. Adams DA, Nelson RR, Todd PA. Perceived usefulness, ease of use, and usage of information technology: a replication. MIS Quarterly. 1992;16:227–247.

- 3. Berry DL, Trigg LJ, Lober WB, Karras BT, Galligan ML, Austin-Seymour M, Martin S. Computerized symptom and quality-of-life assessment for patients with cancer part I: development and pilot testing. Oncol Nurs Forum. 2004;31:E75–E83. doi: 10.1188/04.ONF.E75-E83.

- 4. U.S. Census Bureau. United States S1801 disability characteristics. 2006 American Community Survey. 2006. http://factfinder.census.gov/servlet/STTable?_bm=y&-geo_id=01000US&-qr_name=ACS_2006_EST_G00_S1801&-ds_name=ACS_2006_EST_G00_. Accessed 26 Aug 2008.

- 5. Davis FD. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly. 1989;13:319–340.

- 6. Day JC, Janus A, Davis J for the U.S. Census Bureau. Computer and internet use in the United States: 2003. Special Studies. 2005. http://www.census.gov/prod/2005pubs/p23-208.pdf. Accessed 29 Jan 2010.

- 7. Demiris G, Finkelstein SM, Speedie SM. Considerations for the design of a web-based clinical monitoring and educational system for elderly patients. J Am Med Inform Assoc. 2001;8:468–472. doi: 10.1136/jamia.2001.0080468.

- 8. Detmar SB, Muller MJ, Schornagel JH, Wever LD, Aaronson NK. Health-related quality-of-life assessments and patient-physician communication: a randomized controlled trial. JAMA. 2002;288:3027–3034. doi: 10.1001/jama.288.23.3027.

- 9. Ershler WB. Cancer: a disease of the elderly. J Support Oncol. 2003;1(suppl 2):5–10.

- 10. Fromme EK, Eilers K, Mori M, Hsieh YC, Beer TM. How accurate is clinician reporting of chemotherapy adverse effects? A comparison to patient-reported symptoms from the QLQ-C30. J Clin Oncol. 2004;22:3485–3490. doi: 10.1200/JCO.2004.03.025.

- 11. Frost MH, Bonomi AE, Cappelleri JC, Schunemann HJ, Moynihan TJ, Aaronson NK, Group CSCM. Applying quality-of-life data formally and systematically into clinical practice. Mayo Clin Proc. 2007;82(10):1214–1228. doi: 10.4065/82.10.1214.

- 12. Galitz WO. The essential guide to user interface design: an introduction to GUI design principles and techniques. Wiley Publishing, Inc; Indianapolis: 2007.

- 13. Goldsmith D, McDermott D, Safran C. Improving cancer related symptom management with collaborative healthware. MEDINFO. 2004;11:217–221.

- 14. Haux R. Health information systems – past, present, future. Int J Med Inform. 2006;75:268–281. doi: 10.1016/j.ijmedinf.2005.08.002.

- 15. Hendrickson AR, Massey PD, Cronan TP. On the test-retest reliability of perceived usefulness and perceived ease of use scales. MIS Quarterly. 1993;17:227–230.

- 16. Hess R, Santucci A, McTigue K, Fischer G, Kapoor W. Patient difficulty using tablet computers to screen in primary care. J Gen Intern Med. 2008;23:476–480. doi: 10.1007/s11606-007-0500-1.

- 17. Holzinger A, Searle G, Nischelwitzer A. On some aspects of improving mobile applications for the elderly. In: Stephanidis C, editor. Coping with diversity in universal access, research and development methods in universal access. LNCS. Vol. 4554. Springer; Berlin/Heidelberg: 2007. pp. 923–932.

- 18. Krebs EE, Carey TS, Weinberger M. Accuracy of the pain numeric rating scale as a screening tool in primary care. J Gen Intern Med. 2007;22(10):1453–1458. doi: 10.1007/s11606-007-0321-2.

- 19. Lewis CH. Using the “thinking-aloud” methods in cognitive interface design. Technical Report RC9265. Watson Research Center; Yorktown Heights: 1982.

- 20. Main DS, Quintela J, Araya-Guerra R, Holcomb S, Pace WD. Exploring patient reactions to pen-tablet computers: a report from CaReNet. Ann Fam Med. 2004;2:421–424. doi: 10.1370/afm.92.

- 21. Mandel T. The elements of user interface design. Wiley; New York: 1997. p. 49.

- 22. Mullen KH, Berry DL, Zierler BK. Computerized symptom and quality-of-life assessment for patients with cancer part II: acceptability and usability. Oncol Nurs Forum. 2004;31:E84–E89. doi: 10.1188/04.ONF.E84-E89.

- 23. Nielsen J. How to conduct a heuristic evaluation. 1994. http://useit.com/papers/heuristic/heuristic_evaluation.html. Accessed 29 Jan 2010.

- 24. Nielsen J. Why you only need to test 5 users. Alertbox. 2000. http://www.useit.com/alertbox/20000319.html. Accessed 29 Jan 2010.

- 25. Nielsen J, Landauer TK. A mathematical model of the finding of usability problems. Proceedings ACM/IFIP INTER-CHI’93 Conference; Amsterdam, The Netherlands; 24–29 April 1993. pp. 206–213.

- 26. Norman D. The design of everyday things. Basic Books; New York: 2002.

- 27. Ruland C, White T, Stevens M, Fanciullo G, Khilani S. Effects of a computerized system to support shared decision making in symptom management of cancer patients: preliminary results. J Am Med Inform Assoc. 2003;10:573–579. doi: 10.1197/jamia.M1365.

- 28. Shortliffe EH. Strategic action in health information technology: why the obvious has taken so long. Health Aff (Millwood). 2005;24:1222–1233. doi: 10.1377/hlthaff.24.5.1222.

- 29. Tidwell J. Designing interfaces: patterns for effective interaction design. O’Reilly Media, Inc; Sebastopol: 2006.

- 30. US Department of Health and Human Services. Your guide for developing usable & useful websites: guidelines. http://www.usability.gov/guidelines/index.html. Accessed 29 Jan 2010.

- 31. Velikova G, Booth L, Smith AB, Brown PM, Lynch P, Brown JM, Selby PJ. Measuring quality of life in routine oncology practice improves communication and patient well-being: a randomized controlled trial. J Clin Oncol. 2004;22:714–724. doi: 10.1200/JCO.2004.06.078.

- 32. Wijk H, Berg S, Bergman B, Hanson AB, Sivik L, Steen B. Colour perception among the very elderly related to visual and cognitive function. Scand J Caring Sci. 2002;16:91–102. doi: 10.1046/j.1471-6712.2002.00063.x.

- 33. Williams CA, Templin T, Mosley-Williams AD. Usability of a computer-assisted interview system for the unaided self-entry of patient data in an urban rheumatology clinic. J Am Med Inform Assoc. 2004;11:249–259. doi: 10.1197/jamia.M1527.