Abstract

Problem

In medical education, the outcomes of programs intended to transform attitudes or influence career trajectories are often difficult to evaluate using conventional monitoring methods. To address this problem, the authors adapted the most significant change (MSC) technique to gain a more comprehensive understanding of the impact of the Medical Education Partnership Initiative (MEPI) program at the University of Zimbabwe College of Health Sciences.

Approach

In 2014–2015, the authors applied the MSC to systematically examine the personal significance and level of positive transformation individuals attributed to their MEPI participation. Interviews were conducted with 28 participants nominated by MEPI program leaders. The authors coded results inductively for prevalent themes in participants’ stories and prepared profiles with representative quotes to place the stories in context. Stakeholders selected 9 themes and 18 stories to illustrate the most significant changes.

Outcomes

Six themes (or outcomes) were expected, as they aligned with MEPI goals—becoming a better teacher, becoming a better clinician, increased interest in teaching, increased interest in research, new career pathways (including commitment to practice in Zimbabwe), and improved research skills. However, three themes were unexpected—increased confidence, expanded interprofessional networks, and improved interpersonal interactions.

Next Steps

We found the MSC to be a useful and systematic evaluation approach for large, complex, and transformative initiatives like MEPI. The MSC seemed to encourage participant reflection, support values inquiry by program leaders, and provide insights into the personal and cultural impacts of MEPI. Additional trial applications of the MSC technique in academic medicine are warranted.

Problem

In 2010, in response to the HIV/AIDS crisis, the Medical Education Partnership Initiative (MEPI), funded by the National Institutes of Health, awarded grants to thirteen medical schools in sub-Saharan Africa. The University of Zimbabwe College of Health Sciences (UZCHS) received three grants; the primary grant was the Novel Education Clinical Trainees and Researchers (NECTAR) program. The UZCHS also received two linked (or supplemental) awards. The goals of MEPI were to increase the training and retention of health care workers through faculty development in research and medical education and through the enhancement of health professions training programs.1

Because of the complex and experiential nature of medical education and academic training programs, meaningful outcomes are often difficult to evaluate. Typical methods of monitoring focus on accountability to funders and quantitative data in the form of participation numbers, surveys, etc. To complement the conventional methods, we need effective evaluation tools that provide a more comprehensive understanding of programs, especially programs intended to transform attitudes or influence career trajectories.

To address this problem, we chose to evaluate the impact of MEPI at the UZCHS using an adaptation of the most significant change (MSC) technique, which was initially developed to evaluate a program in Bangladesh2 and is often used in international development evaluation.3–5 We could find no literature applying the MSC to the evaluation of faculty development programs in health professions education. We believe our interpretation of the MSC fits with the developers’ invitation to innovate and refine the technique in other fields and local contexts.6,7 We also believe this innovative application of the MSC addresses the Best Evidence Medical Education (BEME) Collaboration’s recommendation for greater use of qualitative and mixed methods in the assessment of programs designed to prepare and support medical educators.8

Approach

The grants at the UZCHS supported multifaceted programs and initiatives designed to address MEPI goals in sub-Saharan Africa.9 Unpublished evaluation reports (2011–2015) on the grants by The Evaluation Center, School of Education and Human Development, University of Colorado Denver, used conventional methods that yielded important formative feedback and summative data related to the institutional impacts at the UZCHS. For example, on exit surveys following 15 faculty development workshops, respondents consistently indicated sessions were relevant (average ratings of 4.52 on a 5-point scale, where 1 = “not relevant” and 5 = “very relevant to my work”). A review of attendance records showed 115 of 166 (69%) UZCHS faculty members attended one or more of these workshops, and 40 of 42 (95%) faculty members successfully completed the requirements of a yearlong advanced faculty development program. In surveys and interviews, faculty and students noted MEPI programs had improved internet connectivity, and faculty credited MEPI with serving as a catalyst for the establishment of a department of health professions education. Additionally, analysis of institutional data showed UZCHS faculty increased from 128 to 166 (30%) over five years (2010–2015), and medical student admissions increased from 126 to 203 (61%) over the same period, although other economic factors may have been influential.
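As a quick check on the arithmetic, the minimal Python sketch below recomputes the percentages reported above from the raw counts. It assumes each percentage was rounded to the nearest whole number; the helper names `pct` and `pct_change` are illustrative only and are not part of the original evaluation.

```python
def pct(part: float, whole: float) -> int:
    """Share of `whole` represented by `part`, rounded to the nearest whole percent."""
    return round(100 * part / whole)


def pct_change(before: float, after: float) -> int:
    """Relative increase from `before` to `after`, rounded to the nearest whole percent."""
    return round(100 * (after - before) / before)


# Counts as reported in the conventional evaluation summary.
workshop_attendance = pct(115, 166)       # faculty attending one or more workshops
advanced_completion = pct(40, 42)         # completers of the yearlong advanced program
faculty_growth = pct_change(128, 166)     # UZCHS faculty, 2010 to 2015
admissions_growth = pct_change(126, 203)  # medical student admissions, 2010 to 2015

print(workshop_attendance, advanced_completion, faculty_growth, admissions_growth)
```

None of these ratios falls exactly on a half percent, so the choice of rounding rule does not change any of the reported values.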

However, we wanted to systematically examine the personal significance and level of positive transformation individuals attributed to their MEPI participation. For this purpose, we selected and adapted the MSC approach because it differs from conventional methods in four ways. First, with the MSC, interviewers ask broad, open-ended questions rather than focusing on progress toward predetermined goals and objectives. Therefore, the MSC is well suited to capturing both expected and unexpected outcomes and to gaining a more in-depth understanding of a program’s impact. Second, because the MSC uses a highly participatory analysis and interpretation process, we saw the MSC as a useful way to foster participants’ self-reflection and promote discussions among MEPI program leaders.

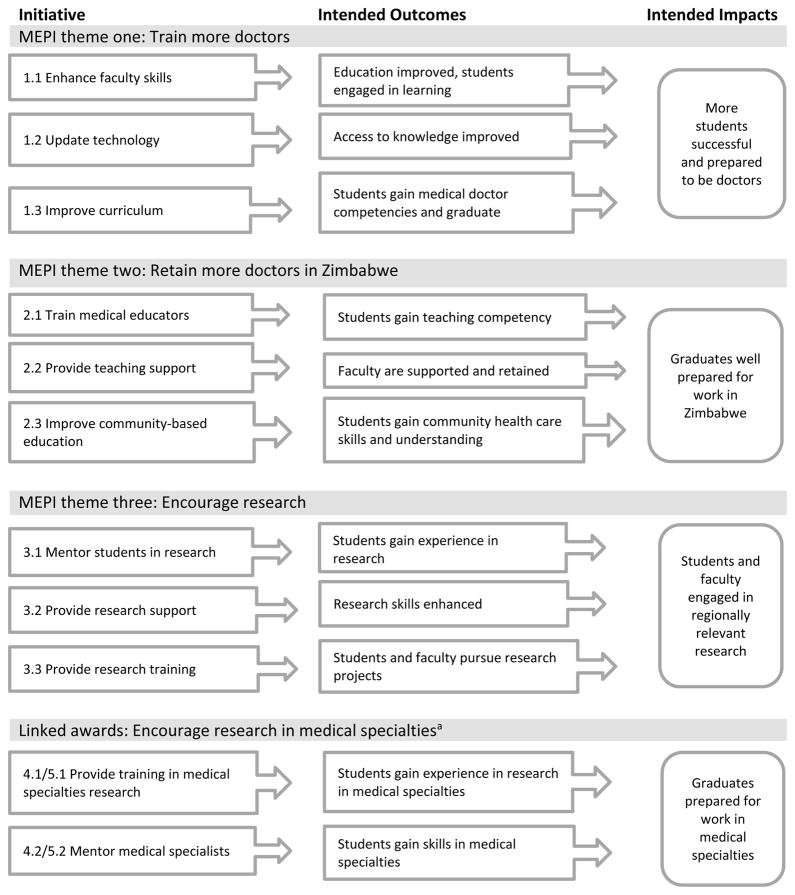

Third, we selected the MSC because of the large and complex nature of the MEPI programs at the UZCHS. The programs had eleven initiatives (Figure 1) and five international partner institutions (the University of Colorado, Stanford University, King’s College London, University College London, and University of Cape Town). Over five years (2010–2015), virtually all UZCHS students and faculty members had the opportunity to engage in initiatives that ranged from minimal exposure (e.g., using the improved internet access) to intense involvement (e.g., international field experiences). The MSC allowed a more holistic evaluation of these experiences, acknowledging the variety of subjects and participation levels, and complemented the conventional evaluations. Finally, we selected the MSC as a means to enhance the sustainability of program accomplishments by publicly highlighting both individual and institutional impacts.

Figure 1.

The eleven initiatives under the Medical Education Partnership Initiative (MEPI) grant and linked awards at the University of Zimbabwe College of Health Sciences, 2014–2015. The initiatives are organized under guiding themes (related to MEPI’s overall goals); intended outcomes and impacts are shown.

ᵃ One linked award was related to cardiovascular health (initiatives 4.1 and 4.2); the second linked award was related to mental health (initiatives 5.1 and 5.2).

The MSC technique

Using the MSC, evaluators collect stories of change and engage in participatory interpretation of those stories.6,7 The steps for this process include identifying the domain of change of interest, the reporting period, and the sample population (usually the most successful cases). Although the MSC can be used to explore negative impacts, the technique is most often used to make sense of the experiences of those who have been the most successful.6 That is, evaluators look at exemplary cases, rather than average ones. Individual or group interviews are conducted to collect stories of change. Then stakeholders are convened to review the stories and select those that they believe show the most significant change. This process is gently guided by facilitators to allow stakeholders to determine their own criteria for significance; the actual selection may be done through a formal rating process or informal discussions. Facilitators record the identified cases and the reasons for selection, which may provide evaluators and leaders with insights into the underlying values of the stakeholders. In some MSC studies, it may be important to verify stories and identify emerging themes.

Our application of the MSC

Our interpretation of the MSC included seven steps (List 1). In April 2014, we identified our domain of change of interest as the positive program impacts on individuals resulting from participation in MEPI programs. In July 2014, we (S.C.C., S.N.) asked local MEPI program leaders to nominate individuals whom they viewed as benefiting from the programs; they nominated 31 individuals, all of whom were invited to participate in an interview. To ensure transparency, nominees were told the purpose of the interviews, that their stories would be shared, and that they would have an opportunity to edit any quotes attributed to them. The UZCHS ethics committee approved the study as evaluation.

List 1.

The Most Significant Change (MSC) Process Steps Used to Evaluate the Impact of the Medical Education Partnership Initiative at the University of Zimbabwe College of Health Sciences, 2014–2015ᵃ


In September 2014, evaluators from Zimbabwe and Colorado (S.C.C., A.C., S.N., C.V.) conducted interviews with 28 of the 31 (90%) nominees (see Supplemental Digital Appendix 1 at [LWW INSERT LINK] for the interview protocol); the other three had prior commitments. Four (14%) interviewees were senior faculty, 8 (29%) were junior faculty, and 16 (57%) were graduate students, whom we included because they participated in MEPI workshops designed to prepare them for roles as educators. Interviews lasted from 30 to 60 minutes and were digitally recorded and transcribed. To facilitate the time-consuming review process for the stakeholders, including UZCHS faculty members and MEPI leaders from the partner institutions, we modified the MSC technique described above by coding results inductively for prevalent themes before the stakeholder discussions. We (S.C.C., S.N.) also prepared a brief profile of each interviewee that included representative quotes to illustrate the themes, which we sent to each interviewee to edit for accuracy. We hoped these profiles would place the changes within a personal and locally relevant context for the stakeholders.
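The sample figures above can be checked directly from the reported counts. In this minimal Python sketch, the group counts are taken from the text, the variable names are illustrative, and each share is assumed to be rounded to the nearest whole percent of the 28 interviewees.

```python
# Counts reported for the interview sample.
nominated = 31
interviewed = 28
composition = {"senior faculty": 4, "junior faculty": 8, "graduate students": 16}

# The three groups should account for every interviewee.
assert sum(composition.values()) == interviewed

# Participation rate among nominees and each group's share of interviewees,
# rounded to the nearest whole percent.
response_rate = round(100 * interviewed / nominated)
shares = {group: round(100 * n / interviewed) for group, n in composition.items()}

print(response_rate, shares)
```

After rounding, the three group shares still sum to 100%, so the reported breakdown is internally consistent.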

In the MSC method, a critical next step is to engage stakeholders in discussion to select the most significant changes. In March 2015, five stakeholders in Zimbabwe (including J.H.) and two in Colorado (including E.A.) were asked to select the themes they perceived to be most important and the stories that represented those themes.10 Profiles and themes were shared in advance via e-mail, followed by in-person discussions. By consensus, the stakeholders selected 9 themes and 18 stories to illustrate the most significant changes; the stories represented individuals from 11 UZCHS departments and all MEPI programs. The evaluators recorded and shared the criteria the stakeholders used: evidence of action (e.g., implementing a new teaching method), evidence of results (e.g., improved student evaluations), and alignment with MEPI goals. In July 2015, evaluators shared the results with UZCHS faculty and staff through a magazine publication and a slide show at a faculty development workshop; publicizing program impacts was intended to help sustain them.

Outcomes

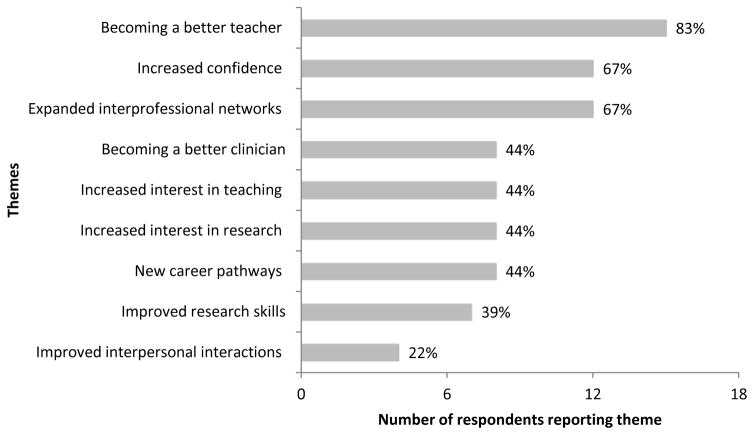

Our stakeholders identified 9 themes (or outcomes) of significant personal and professional changes; these themes and their prevalence are reported in Figure 2. Six were themes we expected, as they aligned with MEPI goals—becoming a better teacher, becoming a better clinician, increased interest in teaching, increased interest in research, new career pathways (including a commitment to practice in Zimbabwe), and improved research skills. However, there were also three we did not expect—increased confidence, expanded interprofessional networks, and improved interpersonal interactions.

Figure 2.

Frequency of 9 themes from 18 interviewees’ answers to the question “How has your participation in the MEPI programs benefitted you personally and professionally?” in a most significant change study of the impact of the Medical Education Partnership Initiative (MEPI) at the University of Zimbabwe College of Health Sciences, 2014–2015.

Becoming a better teacher

The most frequently mentioned benefit was the perception that MEPI programs improved teaching abilities. One senior faculty member described this change as follows: “I attended that team-based learning workshop. My life was never the same in terms of teaching … I made an overhaul to the whole course … and it is now team-based learning. … I have grown professionally.”

Increased confidence

The second most prevalent theme was that MEPI programs increased participants’ confidence across their various roles as teachers, clinicians, and researchers. A senior faculty member explained:

Before I started the MEPI program, I was very apologetic. I would always feel other people were more knowledgeable. … When I participated in the MEPI program, I got encouragement from my mentors and from my peers, and people would actually applaud the things that I would say. … Now … I talk with confidence.

Expanded interprofessional networks

The third most prevalent theme was an appreciation for the expanded interprofessional networks (both internationally and within the UZCHS) developed through MEPI. Speaking about networks within the UZCHS, one junior faculty member said:

I learned the importance of networking. Before I used to more or less live in a cocoon. … I used to think I could only benefit from people within my discipline, and I realized I could benefit from anyone. I remember … a hands-on writing workshop. … What surprised me the most was … [a participant from another department] picked up a lot of things … people from my department had overlooked.

And speaking about international networks, a graduate student described a new sense of connection from MEPI participation: “I think there was a time when we felt we were not in a global village. Now I feel we are part of what is going on in the world.”

Becoming a better clinician

Some interviewees reported their MEPI participation contributed to their effectiveness as clinicians, particularly noting the contribution of new technology and e-resources. One junior faculty member explained:

You now hear a lot more up-to-date discussions and literature being quoted. … They now have internet access [and] infrastructure to allow the ideas to be implemented. The combination has really had a significant impact … translating into how well our students are doing and … it actually translates into how well our patients are doing.

Increased interest in teaching

Some interviewees reported MEPI programs increased their interest in teaching as a career. A junior faculty member shared:

I didn’t think I was going to join UZCHS because [I thought] teaching must be really boring because I was thinking of teaching as in lectures only. When we were introduced to these staff development workshops, I realized there are more enjoyable methods of teaching. That is when I actually said, “Okay. I am going to join UZCHS.”

Increased interest in research and improved research skills

Similarly, some interviewees described a new interest in research careers and/or reported improved research skills. For example, one graduate student said:

Professionally, I think now I can do research. I have more confidence. … I am inspired and I have […] passion from knowing that we need to have research from our own setting so that we can make our own decisions based on our own population.

New career pathways

Some interviewees also described how MEPI participation supported their career development, including a commitment to practice in Zimbabwe, with two interviewees describing a new interest in medical education as a career. For example, one faculty member said:

[My MEPI participation] … helped me to realize my interest in medical education. I had never thought about being a lecturer until I started being a lecturer. … Education as a career had never been emphasized. … It made me realize that maybe this is what I would like to do. … It was a time when I was having a lot of doubts in my teaching capabilities, … then [MEPI] came up. It turned out to be what I was looking for.

Improved interpersonal interactions

Finally, a few interviewees reported their MEPI participation positively influenced their interpersonal interactions, both professionally and personally. A senior faculty member shared:

I am using the skills that I got from these courses to handle affairs [both inside and] outside my profession. Personally, I have grown. The way I am managing my family now is different. … The skills from the faculty development workshops … are applicable to both professional work and personal growth.

Next Steps

We found the MSC to be a useful and systematic evaluation approach for large, complex, and transformative initiatives like MEPI. Our results provided evidence of the achievement of MEPI goals and revealed some unexpected outcomes, such as increased confidence and improved interpersonal interactions. Additionally, in debriefing meetings conducted at the conclusion of the grants and awards, most MEPI program leaders reported that our results gave them a greater understanding of the personal and cultural impacts of the MEPI programs than they would have gotten from conventional methods.

We also noted limitations of this approach. The MSC is a time-intensive method requiring at least twice the time typical for conventional qualitative analysis. The method entails active participation by stakeholders, a challenge when stakeholders have many demands on their time and attention. Additionally, the MSC is likely not a cost-effective method for use when expected outcomes are known, when understanding typical experience is desirable, or in groups not open to alternative methods of evaluation. It is also not an efficient method of program monitoring for accountability purposes.

Given these strengths and limitations, we believe the MSC technique warrants additional trial applications in academic medicine. As it seemed to do in our study, the MSC technique can contribute to meaningful program evaluation by encouraging participants to reflect on their experiences, supporting values inquiry by program leaders, and providing insights into the personal and cultural impacts of a program. Additionally, it appears to be especially suitable for assessing “hard to measure” outcomes (e.g., interprofessional collaboration, leadership, empathy, professionalism, cultural change). MSC results such as ours have the potential to contribute to a greater understanding of how to effectively prepare and support medical educators.


Acknowledgments

The authors wish to thank Midion Chidzonga, dean of the University of Zimbabwe College of Health Sciences, for his ongoing support.

Funding/Support: This publication has been supported by the Medical Education Partnership Initiative funding through National Institutes of Health grant no. TW008881.

Footnotes

Supplemental digital content for this article is available at [LWW INSERT LINK].

Other disclosures: None reported.

Ethical approval: The Joint Research Ethics Committee for the University of Zimbabwe, College of Health Sciences and Parirenyatwa Group of Hospitals determined that this study was evaluation and approved publication of the results.

Disclaimer: The content in this article is solely the responsibility of the authors.

Contributor Information

Susan C. Connors, Associate director, The Evaluation Center, School of Education and Human Development, University of Colorado Denver, Denver, Colorado.

Shemiah Nyaude, Monitoring and evaluation specialist, Humanist Institute for Co-operation with Developing Countries (Hivos), Regional Office for Southern Africa, Harare, Zimbabwe.

Amelia Challender, Education coordinator, Colorado Family Medicine Residencies, Denver, Colorado.

Eva Aagaard, Professor of medicine, Division of General Internal Medicine, Department of Medicine, School of Medicine, University of Colorado Anschutz Medical Campus, Aurora, Colorado.

Christine Velez, Senior evaluation specialist, The Evaluation Center, School of Education and Human Development, University of Colorado Denver, Denver, Colorado.

James Hakim, Professor of medicine, Department of Medicine, University of Zimbabwe College of Health Sciences, Harare, Zimbabwe.

References

- 1. Medical Education Partnership Initiative Principal Investigators. Foreword. Acad Med. 2014;89(8 Suppl):S1–S2.

- 2. Davies RJ. An evolutionary approach to facilitating organizational learning: An experiment. Christian Commission for Development; Bangladesh: 1996. www.mande.co.uk/docs/ccdb.htm. Accessed October 18, 2016.

- 3. Willetts J, Crawford P. The most significant lessons about the most significant change technique. Dev Pract. 2007;17:367–379.

- 4. Shah R. Assessing the ‘true impact’ of development assistance in the Gaza Strip and Tokelau: ‘Most significant change’ as an evaluation technique. Asia Pacific Viewpoint. 2014;55:262–276.

- 5. Kraft K, Prytherch H. Most significant change in conflict settings: Staff development through monitoring and evaluation. Dev Pract. 2016;26:27–37.

- 6. Davies RJ, Dart J. ‘Most significant change’ (MSC) technique: A guide to its use. 2005. www.mande.co.uk/docs/MSCGuide.htm. Accessed October 18, 2016.

- 7. Dart J, Davies RJ. A dialogical, story-based evaluation tool: The most significant change technique. Am J Eval. 2003;24:137–155.

- 8. Steinert Y, Mann K, Centeno A, Dolmans D, Spencer J, Gelula M, Prideaux D. A systematic review of faculty development initiatives designed to improve teaching effectiveness in medical education: BEME Guide No. 8. Med Teach. 2006;28:497–526. doi: 10.1080/01421590600902976.

- 9. Ndhlovu CE, Nathoo K, Borok M, et al. Innovations to enhance the quality of health professions education at the University of Zimbabwe College of Health Sciences—NECTAR program. Acad Med. 2014;89(8 Suppl):S88–S92. doi: 10.1097/ACM.0000000000000336.

- 10. Choy S, Lidstone J. Evaluating leadership development using the most significant change technique. Stud Eval. 2013;39:218–224.
