Abstract

Like many other primates, humans place a high premium on social information transmission and processing. One important aspect of this information concerns the emotional state of other individuals, conveyed by distinct visual cues such as facial expressions, overt actions, or by cues extracted from the situational context. A rich body of theoretical and empirical work has demonstrated that these socio-emotional cues are processed by the human visual system in a prioritized fashion, in the service of optimizing social behavior. Furthermore, socio-emotional perception is highly dependent on situational contexts and previous experience. Here, we review current issues in this area of research and discuss the utility of the steady-state visual evoked potential (ssVEP) technique for addressing key empirical questions. Methodological advantages and caveats are discussed with particular regard to quantifying time-varying competition among multiple perceptual objects, trial-by-trial analysis of visual cortical activation, functional connectivity, and the control of low-level stimulus features. Studies on facial expression and emotional scene processing are summarized, with an emphasis on viewing faces and other social cues in emotional contexts, or when competing with each other. Further, because the ssVEP technique can be readily accommodated to studying the viewing of complex scenes with multiple elements, it enables researchers to advance theoretical models of socio-emotional perception, based on complex, quasi-naturalistic viewing situations.

Keywords: social neuroscience, socio-emotional perception, facial expression, visual cortex, EEG, ssVEPs, frequency-tagging

Our sensory systems allow us to effortlessly interact with complex, changing environments, optimizing behavioral outcomes and minimizing exposure to threat and danger. Experimental work in the cognitive and neural sciences has demonstrated that this interaction of perception and action is crucial for how sparse and noisy visual information is used by the human brain to extract meaningful information (Grossberg, 2013; Summerfield & Egner, 2009). Together, this research highlights the fact that sensory systems are best understood as embodied in living organisms with motives and goals, interacting with the environment in a dynamic fashion, while constantly adapting to changes and maximizing outcomes (Buzsaki, Peyrache, & Kubie, 2015; Schroeder, Wilson, Radman, Scharfman, & Lakatos, 2010). Central motive states, goals, and predictions from memory likewise bias the selection, extraction, and interpretation of visual cues, and enable efficient computations of semantic content to be translated into adaptive behavior. Since humans, like other primates, are social animals, much of the adaptive value of a given visual cue is determined in the context of social interactions, where emotional states and motives of different agents play an important role. Accordingly, a rich body of theoretical and empirical work has addressed how socio-emotional cues are processed by the human visual system. One of the main premises of this line of research has been that the visual analysis of socio-emotional information is highly dependent on situational contexts and previous experience (for recent reviews, see Barrett, Mesquita, & Gendron, 2011; Wieser & Brosch, 2012), but stringent, quantitative tests of this broad hypothesis have been made difficult by methodological issues.

In the present review, we ask how current concepts and methods in human visual neuroscience can be harnessed to address research questions related to the perception of socio-emotional stimuli. It is intuitively plausible as well as empirically well supported that visual percepts do not typically arise de novo, in a space and time void of expectations, but instead are guided by predictions derived from mnemonic and contextual cues (Clark, 2013; Engel, Fries, & Singer, 2001). Innovative electrophysiological techniques are now available that can be used to examine issues of prediction and context-dependency. Here, we focus on the utility of the steady-state visual evoked potential (ssVEP) frequency tagging approach for addressing these issues in human observers. Using this approach, lower-tier visual cortical processes related to one specific element of a complex scene can be “tagged” by flickering or contrast-reversing this element at different rates than the background and other objects (e.g., Appelbaum, Wade, Vildavski, Pettet, & Norcia, 2006; Martens, Trujillo-Barreto, & Gruber, 2011; Miskovic & Keil, 2013a; Verghese, Kim, & Wade, 2012; Wang, Clementz, & Keil, 2007; Wieser & Keil, 2011; Wieser, McTeague, & Keil, 2011). This approach has been fruitful for studying different aspects of visual cognition, such as changes in feature-based or spatial visual selective attention (Andersen, Müller, & Hillyard, 2009; Müller et al., 2006), contrast gain or response gain (Song & Keil, 2013), and target detection under competition (Kim & Verghese, 2012). It has also been increasingly used for studying the interaction between bottom-up saliency and top-down processes such as temporal attention (Wieser & Keil, 2011) or expectancy (Moratti & Keil, 2009). 
Notably, the competitive or synergistic interactions between overlapping stimuli can be quantified and systematically studied in vivo at the level of cortical population activity using a technique referred to as “ssVEP frequency-tagging”. The present review discusses the potential uses of this method for addressing current research questions in social and affective neuroscience. We first present outstanding questions and challenges in these fields as they pertain to the perception of social cues that convey socio-emotional information.

1. Investigating the neural correlates of socio-emotional processing: Conceptual and methodological challenges

As discussed above, humans continuously gather social information in order to improve the prediction and interpretation of behavior shown by conspecifics, as well as to guide their own behavior. In this process, information on emotional states as communicated through facial expressions is a crucial element (Smith, Cottrell, Gosselin, & Schyns, 2005). While early categorical models postulated that both the facial expressions of emotion and their perception are unique, natural, automatic, and intrinsic phenomena (Ekman, 1992; Smith et al., 2005), more recent models emphasize the dependency of face perception on situational context and the perceiver’s expectations and predictions (Barrett et al., 2011; Wieser & Brosch, 2012). These notions converge with recent trends in vision research, emphasizing that perception in general is highly dependent on contextual cues (see Bar, 2004; Gilbert & Sigman, 2007 for extensive reviews), guided by previous experience and current states of the observer (Schroeder et al., 2010). Despite the fact that “context” may refer to different concepts in different areas of research, researchers have widely agreed that perception of a stimulus is modulated by properties of the surrounding scene it is embedded in (Gilbert, Ito, Kapadia, & Westheimer, 2000) as well as by semantic properties of the situation in which the stimulus appears, including memory of past events (Fuster, 2009). Contextual effects on visual perception have also been defined at the neural level, referring to interactions between higher-order and lower-tier regions in the traditional visual hierarchy (Roelfsema, 2006), or to the neural effects that change a neuron’s response to its receptive field input (Phillips, Clark, & Silverstein, 2015). These effects have been reliably demonstrated for sensory areas low in the traditional visual hierarchy. 
For example, a number of electrophysiological studies in awake non-human primates have found that the activity of V1 neurons is modulated by contextual information outside their receptive fields (e.g., Lamme, Rodriguez-Rodriguez, & Spekreijse, 1999; Zipser, Lamme, & Schiller, 1996). Many potential neurophysiological mechanisms for mediating the contextual facilitation of visual perception have been proposed, including fundamental neural processes such as divisive normalization (Reynolds & Heeger, 2009) or neural synchrony (Womelsdorf et al., 2007). In terms of large-scale interactions it has been posited that context-based predictions are mediated via top-down feedback across the visual hierarchy, which may resemble the back-propagation of prediction errors (Friston & Kiebel, 2009), or may involve modulatory prediction signals entering the visual areas from neuronal populations in the prefrontal cortex (Bar, 2004). Likewise, the reverse hierarchical visual processing model assumes that sparse visual information is forwarded and contrasted against a model or prediction in higher order visual areas, from where then feedback fine-tunes activity in earlier stages of visual processing (e.g., Hochstein & Ahissar, 2002; Moratti, Méndez-Bértolo, Del-Pozo, & Strange, 2014).

The visual processing of socio-emotional cues is to a large extent characterized by the principles outlined above: namely, natural vision is a massively parallel and iterative process in which bidirectional flow of information within the visual pathways dynamically and rapidly mediates the observer’s predictions and interpretations of motivationally relevant social signals (Yang, Rosenblau, Keifer, & Pelphrey, 2015). Adding to the complexity of social interactions, it is likely that individual social stimuli and their context overlap, either completely or in part. For example, faces or whole bodies are rarely seen de-contextualized; rather, they are typically embedded within a visual scene (e.g., Wieser & Keil, 2014). This raises a number of issues that have been traditionally difficult to address in the human neuroscience laboratory by means of behavioral and traditional neuroimaging methods. How are contextual constraints and priors from memory encoded in (back-projected) signals that modulate visual processes lower in the visual hierarchy? What are the spatio-temporal neural dynamics underlying bi-directional communication? How do units at multiple levels (within and across columns, areas, different cortices) interact to generate context-dependent percepts of socio-emotional information? Are these interactions characterized by competition or synergy, i.e., does the processing of socially significant stimuli incur a cost at the expense of processing contextual stimuli and vice versa, or is social processing related to facilitated processing of its context? Ultimately, one of the core challenges preventing progress in addressing these questions is the need to quantify specific visual responses to many discrete cues simultaneously present in our social world while assessing the interactions between those brain signals. 
As we will demonstrate in the following section, steady-state brain potentials together with frequency tagging may offer a suitable and feasible research avenue to address this category of questions.

2. Steady-state VEPs and frequency tagging as a tool for studying socio-emotional processing

Electrophysiological approaches for accommodating complex real-world stimuli in the laboratory are often hampered by the fact that the neural responses to the numerous elements and features occurring in complex environments overlap in space and time (Keysers & Perrett, 2002). This makes the analysis of interactive dynamics between discrete stimuli, objects, or features in a social scene difficult. Even gold-standard measures of overt attentional orienting such as eye gaze measurements are difficult to interpret when the stimuli of interest fully overlap in space. By contrast, frequency tagging of multiple stimuli using ssVEPs capitalizes on a robust temporal entrainment phenomenon observed in the recording of large-scale neuronal populations. Rhythmic modulation of stimulus contrast or luminance at a constant rate over a period of time evokes oscillatory field responses in the visual cortex at the same frequency as the modulation rate of the stimulus (in addition to a range of non-linear responses at different harmonic and inter-modulation frequencies). The large-scale frequency-following responses are referred to as either flicker-ssVEP (elicited by luminance modulation) or reversal-ssVEP (elicited by contrast reversal). The resulting response is best quantified by analyzing the evoked (trial-averaged) neuro-electrical response in the frequency domain or the time-frequency domain. Traditional Fourier-based methods represent the most prevalent approach for analyzing the frequency characteristics of a signal (see Pivik et al., 1993 for a description of the basic principles). A limitation of classical Fourier approaches is that time information is lost. However, time-frequency (T-F) analysis methods have been developed to address this gap. A wide variety of T-F methods is available, including spectrograms, complex demodulation, wavelet transforms, the Hilbert transform, and many others. 
What these varied methods have in common is that they allow researchers to study changes of the signal spectrum over time, taking into account the power (or amplitude) of the signal at a given frequency as well as changes in its oscillatory phase. Today, open source software packages that include sophisticated ways of calculating and quantifying frequency-domain aspects of electrophysiological time series (the FieldTrip toolbox, http://fieldtrip.fcdonders.nl/start; EEGLAB, http://sccn.ucsd.edu/eeglab/; and EMEGS, http://www.emegs.org/, among others) are readily available to researchers worldwide (see Keil et al., 2014 for more details).
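To illustrate how one of the T-F methods listed above tracks the amplitude at a single tagging frequency over time, the following is a minimal sketch of complex demodulation using only NumPy. The function name `complex_demodulation`, the boxcar low-pass filter, the 4-cycle window, and the simulated 10 Hz signal are assumptions made for illustration; they are not taken from the toolboxes cited above, and other filter choices are equally plausible.

```python
import numpy as np

def complex_demodulation(signal, fs, f_tag, n_cycles=4):
    """Amplitude envelope of `signal` at f_tag (hypothetical helper).

    Assumes fs / f_tag is an integer so that the smoothing window
    holds an integer number of cycles of the tagging frequency.
    """
    samples_per_cycle = fs / f_tag
    if samples_per_cycle != int(samples_per_cycle):
        raise ValueError("fs / f_tag must be an integer number of samples")
    win = int(n_cycles * samples_per_cycle)
    t = np.arange(len(signal)) / fs
    # Multiply by a complex exponential to shift f_tag down to 0 Hz.
    shifted = signal * np.exp(-2j * np.pi * f_tag * t)
    # Boxcar low-pass over an integer number of cycles removes the
    # component that was shifted to twice the tagging frequency.
    kernel = np.ones(win) / win
    smoothed = np.convolve(shifted, kernel, mode="valid")
    # Factor 2 restores the amplitude of the real-valued sinusoid.
    return 2 * np.abs(smoothed)

# Simulated example: a 10 Hz response with amplitude 2 (arbitrary units).
fs = 500
t = np.arange(1000) / fs
sig = 2.0 * np.cos(2 * np.pi * 10 * t + 0.3)
env = complex_demodulation(sig, fs, 10.0)
```

The window length sets the trade-off between temporal and spectral precision: longer windows isolate the tagging frequency more sharply but blur the time course of the envelope.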

Here, we focus on key characteristics of ssVEPs that are important for their use as a research tool in social and affective neuroscience. For an extensive review of the fundamental principles and stimulation paradigms in vision research, the reader is directed to the review by Norcia and colleagues (Norcia, Appelbaum, Ales, Cottereau, & Rossion, 2015). The oscillatory ssVEP is precisely defined in the frequency domain as well as the time-frequency domain. Thus, it can be reliably separated from noise and quantified as the evoked spectral power in a narrow frequency range. The resulting spectrum typically contains a strong signal at the frequency of the driving stimulus as well as peaks at integer multiples of the fundamental (driving) frequency, the higher harmonics. These harmonic responses depend on the duty cycle, the stimulation method, and the complexity of the stimulus array (Kim & Verghese, 2012).

2.1 Generation of ssVEPs

Several studies have demonstrated that the flicker-evoked ssVEP is predominantly generated in the primary visual and to some extent in adjacent, higher order, cortices (Di Russo et al., 2007; Müller, Teder, & Hillyard, 1997; Wieser & Keil, 2011). Interestingly, a number of studies have suggested different neural sources for the fundamental frequency and the higher harmonics, although these findings await replication and further interpretation (Kim, Grabowecky, Paller, & Suzuki, 2011; Kim & Verghese, 2012). Generally, the ssVEP can easily be driven in lower-tier (retinotopic) visual cortices using high-contrast luminance modulation governed by a rapid square-wave (on-off) stimulation. Alternatively, the rapid modulation of specific stimulus dimensions other than luminance or contrast, while holding these lower-level properties constant (or varying them randomly) may evoke ssVEPs in brain areas sensitive to the particular feature or stimulus dimension of interest (Giabbiconi, Jurilj, Gruber, & Vocks, 2016; Rossion & Boremanse, 2011). For example, stimulation techniques have been used that isolate the ssVEP response to face identity generated in higher order visual areas such as the fusiform cortex (Rossion, Prieto, Boremanse, Kuefner, & Van Belle, 2012). These techniques are more extensively discussed in section 2.4.

A related question often discussed in the context of ssVEP frequency tagging is the preferred sensitivity of visual cortical networks to stimulation at certain, so-called “natural” frequencies (Herrmann, 2001). Often referred to as “resonance”, disproportionately heightened evoked oscillatory responses have been reported for frequencies in the alpha frequency range (e.g., Schurmann, Basar-Eroglu, & Basar, 1997), and also for frequencies in the gamma range around 30 to 40 Hz (e.g., Lithari, Sanchez-Garcia, Ruhnau, & Weisz, 2016; Rager & Singer, 1998). Regan (1989) reported evidence for three separate ssVEP components, with unique temporal frequency tuning profiles, roughly corresponding to low, medium and high frequencies. Initial studies of frequency tagging reported that these putative resonance frequencies of the visual system may interact with experimental manipulations, leading to different outcomes of ssVEP amplitude changes depending on the tagging frequencies used (Ding, Sperling, & Srinivasan, 2006). More recent work, however, has found no evidence to support that notion (Keitel, Andersen, & Müller, 2010) and instead supported the hypothesis that stable ssVEP amplitude modulation by cognitive or affective manipulations is seen across a wide range of tagging frequencies (Baldauf & Desimone, 2014; Deweese, Müller, & Keil, 2016; Miskovic et al., 2015).

A final issue to be addressed in this brief overview of issues in ssVEP generation (see Norcia et al., 2015, for a comprehensive review) is the question of to what extent ssVEPs can be regarded as a linear superposition of transient ERPs, or alternatively represent a non-linear response that possesses properties beyond the linear combination of individual brain responses. Initial studies in the field argued that observing higher harmonics in response to harmonically simple (e.g., sinusoidal) modulation of luminance or contrast constitutes evidence of the kind of non-linearity that is widely used in information processing (Regan, 1972). Subsequent work has examined the extent to which ssVEPs can be explained by properties of transient ERPs, and has observed that especially with square wave (on-off) modulation, ssVEPs may be modeled by transient ERP features with satisfactory accuracy (Capilla, Pazo-Alvarez, Darriba, Campo, & Gross, 2011). The interpretation of these findings is not straightforward, because the ERP itself represents a combination of individual trials that vary greatly in latency (phase) and amplitude, limiting its use for unambiguously explaining the generating mechanism underlying ssVEPs. In line with this notion, other studies have reported that the ssVEP amplitude is poorly predicted by single-trial power changes at the driving frequency, but is best predicted by entrainment of the single-trial phase with the driving stimulus (Moratti, Clementz, Gao, Ortiz, & Keil, 2007). Taken together, the ssVEP is a signal that is readily quantified, often with clearly defined neuroanatomical origins, but similar to ERPs, the precise mechanism leading to its generation is still debated. Systematic studies documenting the sensitivity of the ssVEP to experimental manipulations with and without frequency tagging have, however, demonstrated its value, particularly for quantifying competition in visual cortical areas. 
The use of frequency tagging of ssVEPs to quantify population-level competition is discussed next.

2.2 Implementation of frequency tagging: Working with simultaneous and/or overlapping stimuli

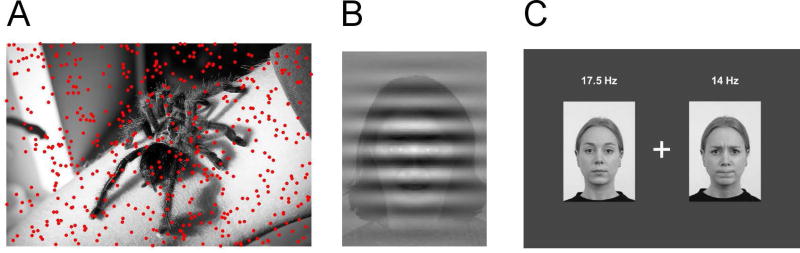

Given the frequency-specificity of the ssVEP, "tagging" a discrete visual object or a discrete feature (e.g., a face, a scene element, a Gestalt consisting of multiple stimuli, etc.) is readily feasible by modulating it at a fixed temporal rate, different from the other elements and features in the field of view. In the simplest case, the ssVEP to a stimulus that is flickered or contrast-modulated in front of a simple, ‘empty’ (e.g., black) background allows the separation of the visual response related to the flickered stimulus from ongoing spontaneous brain activity. Considering a slightly more sophisticated design, the content of the static background can be manipulated by the experimenter and the neural response to the superimposed stimulus can then be quantified in the corresponding ssVEP analysis. With appropriate experimental control, this version of frequency tagging allows researchers to quantify the potential interactions (competitive or synergistic) between a foreground stimulus and varying backgrounds with respect to activity in the visual cortices engaged by the tagged stimulus. In one example study (Müller, Andersen, & Keil, 2008), this approach was used to identify competitive cost effects of task-irrelevant background pictures varying in emotional content, while observers performed a detection task that required attending to the tagged stimulus, consisting of a cloud of flickered dots exhibiting random movement (Brownian motion) that fully covered the background image (see Figure 1 A). Fully overlapping arrays are often used in studies where multiple scene elements are tagged with the goal of quantifying time-varying changes in their competitive versus synergistic interactions.

Figure 1.

Examples of stimulus displays used in ssVEP paradigms in social vision research. A) Foreground task (moving dots) superimposed upon color pictures as used in Müller et al. (2008) and Deweese et al. (2014). Flickering the dots and/or the background picture allows the quantification of trade-off between overlapping stimuli over time. Typically, randomly moving dots are the task stimulus, with observers detecting episodes of coherent motion or directional change. Attentional capture by social or emotional stimuli can be measured indirectly by a drop in the dot-evoked ssVEP amplitude when socially relevant pictures are presented. B) Overlaid stimuli as used in Wieser & Keil (2011). Task-relevant Gabor patches are overlaid on faces to investigate the influence of face processing on task-evoked visual cortex activity. In this paradigm, Gabor patch-evoked ssVEPs can be separated from face-evoked ssVEPs, providing direct measures of competition between overlapping stimuli. C) By contrast, spatial arrangements of tagged stimuli allow researchers to study the time course and amount of differential engagement with stimuli at different locations. Note that ssVEPs allow a continuous quantification of how visuo-cortical engagement is shared between stimuli at any given moment in time. Such information is not available based on eye tracking, fMRI, or ERP paradigms.

In cases where researchers wish to capture trade-off or synergistic effects between multiple elements in the field of view (e.g., Wieser, Reicherts, Juravle, & Von Leupoldt, 2016), whether overlapping or adjacent, ssVEP frequency tagging represents the only known technique that, unlike eye tracking for example, provides continuous information about each stimulus separately, at higher temporal resolution than hemodynamic measures such as BOLD-fMRI or fNIRS. It should be noted, however, that the temporal resolution of ssVEPs is below the resolution of the original EEG data. This limitation emerges as the result of the Fourier uncertainty principle: precisely measuring the neural response at a given tagging frequency implies a loss of temporal resolution since time is needed to define the exact frequency (see Keil, 2013 for examples and an expanded explanation). Typically, temporal uncertainty of neural event timing in ssVEP amplitude envelopes is in the range of hundreds of milliseconds, depending on the method used. Often, however, researchers are not primarily interested in the time course, but in the power at each frequency, reflective of the visual cortical engagement evoked by each of multiple competing stimuli. Irrespective of the analysis technique applied, the principle of frequency tagging follows a straightforward approach, as described above. Two or more elements of the visual scene are modulated at different rates and their respective ssVEPs are separated in the frequency domain (e.g., Appelbaum & Norcia, 2009).

In situations where fully overlapping stimuli are needed to address a given research question, transparency or sparseness of one or more of the overlapping arrays can be used to obtain strong tag-specific neural responses while retaining high visibility of the overlapping objects (see Figure 1B). In situations where adjacent stimuli are preferred, for instance to investigate spatial competition, the two (or more) stimuli to be compared should be of equal size, luminance, contrast and so forth to avoid differences in signal strength owing to physical, rather than semantic, properties (see Figure 1C). When investigating competitive spatio-temporal interactions in studies of attention, concerns about the physical proximity of tagged items become highly relevant. Receptive field sizes exhibit marked variation at different stages of visual processing: they are confined to relatively small regions of space in early striate cortex and they progressively increase in size (and complexity) at more anterior stages of the visuocortical stream (Kastner et al., 2001; Kastner & Ungerleider, 2000). Therefore, depending on the particular stimuli being utilized, competitive interactions may either dominate or decay contingent on the distance between tagged items. For example, when examining attentional competition using relatively simple cues, separation above ~5° of visual angle has been demonstrated to abolish within-hemifield suppressive effects in the ssVEP signal (Fuchs, Andersen, Gruber, & Müller, 2008).

Parameters such as the monitor retrace rate, the duration of the stimulus array, and the EEG sampling rate constrain the availability and usefulness of tagging frequencies in a given experiment. For instance, on a monitor with a retrace rate of 60 Hz, tagging frequencies (in Hz) are available at 1000 divided by integer multiples of the retrace interval (16.667 ms), that is, at 60/n Hz for integer n (e.g., 30, 20, 15, 12, and 10 Hz). Sometimes researchers may prefer that the two parts of the active duty cycle in each stimulus train (e.g., on and off times of the stimulus in a flicker-ssVEP) are of equal duration, which further reduces the candidate tagging frequencies. Furthermore, when tagging multiple visual objects simultaneously, each at its own frequency, it is mandatory to make sure that the tagging frequencies do not exhibit harmonic relations (such as when using 6 Hz and 12 Hz to tag two stimuli, for example), because this prevents the independent analysis of the two spectral responses (in the example, the second harmonic of the 6 Hz stimulus will be at the tagging frequency of the other stimulus, i.e., at 12 Hz). It is advisable to consider tagging frequencies that also do not share a common sub-harmonic, while keeping in mind other constraints such as the epoch duration. Epoch duration (the duration of the EEG data segment used for frequency analysis) determines the spectral resolution used to quantify the ssVEP at each frequency. When the epoch is too short in duration, frequency resolution may not suffice for discriminating between the two or more frequencies used for tagging. It is also advisable to use epoch durations that hold integer numbers of cycles for each frequency, resulting in a spectrum with bins at the exact stimulation frequency (for an extensive discussion of these points, see Bach & Meigen, 1999). 
This serves to minimize distortions related to so-called "spectral leakage", the smearing of oscillatory responses across two or more bins of the spectrum adjacent to the actual frequency. Such leakage may lead to misinterpretation of competition effects, as well as condition differences, especially when the mapping of tagging frequencies to stimuli and experimental condition is not counterbalanced across the experiment. The interested reader is directed to reviews and guidelines regarding the technical aspects of ssVEP procedures (Bach & Meigen, 1999; Keil et al., 2014; Norcia et al., 2015; Vialatte, Maurice, Dauwels, & Cichocki, 2010).
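The constraints described in this paragraph can be checked before an experiment is programmed. The following is a minimal sketch, assuming a flicker stimulus whose cycle spans a whole number of monitor frames (at least two, one on and one off). The helper names `candidate_tagging_frequencies`, `harmonically_related`, and `has_integer_cycles`, as well as the cut-off values, are hypothetical and chosen only for illustration. Exact rational arithmetic is used so that values such as 60/7 Hz are not distorted by floating-point rounding.

```python
from fractions import Fraction

def candidate_tagging_frequencies(refresh_hz, max_frames=12, symmetric_duty=False):
    """Frequencies refresh_hz / n for n = 2..max_frames frames per cycle.

    With symmetric_duty=True only even n are kept, so that on and off
    phases can span the same number of frames.
    """
    freqs = []
    for n_frames in range(2, max_frames + 1):
        if symmetric_duty and n_frames % 2 != 0:
            continue
        freqs.append(Fraction(refresh_hz, n_frames))
    return freqs

def harmonically_related(f1, f2, max_harmonic=4):
    """True if one frequency is an integer multiple (up to max_harmonic) of the other."""
    f1, f2 = Fraction(f1), Fraction(f2)
    ratio = max(f1, f2) / min(f1, f2)
    return ratio.denominator == 1 and ratio <= max_harmonic

def has_integer_cycles(freq, epoch_s):
    """True if the epoch (in seconds, given as str or Fraction) holds whole cycles of freq."""
    return (Fraction(freq) * Fraction(epoch_s)).denominator == 1

freqs = candidate_tagging_frequencies(60, max_frames=6)
print([float(f) for f in freqs])        # [30.0, 20.0, 15.0, 12.0, 10.0]
print(harmonically_related(6, 12))      # True: 12 Hz is the second harmonic of 6 Hz
print(harmonically_related(12, 15))     # False
print(has_integer_cycles(12, "2.0"))    # True: 24 cycles in a 2 s epoch
```

This sketch checks only direct harmonic relations; shared sub-harmonics and intermodulation terms would need additional checks.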

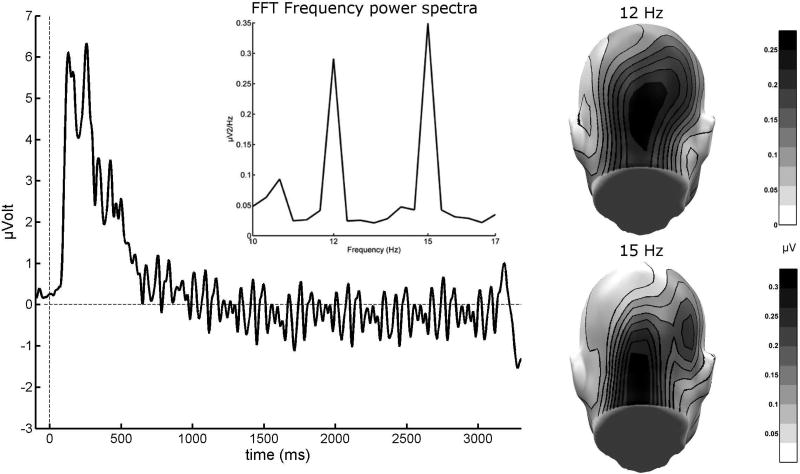

Following trial averaging, the ssVEP spectral power at the tagging frequencies can then be used to independently capture neural population activity evoked by each stimulus (see Figure 2). In factorial research designs, changing competitive or synergistic dynamics between tagged objects are reflected in interactions between the experimental condition and tagged object. Linear indices of competition are likewise easily computed and used as dependent variables. It is also recommended to fully counter-balance the tagging frequencies across experimental conditions to avoid confounding signal strength with experimental condition (energy dissipation for higher frequencies is inevitable due to the 1/f shape of the spectrum of brain electric activity).
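As an illustration of this step, the following sketch averages simulated trials, reads the spectral amplitude at two tagging frequencies, and computes one possible linear index. All data are simulated. The function names `tag_amplitudes` and `competition_index` are hypothetical, and the normalized difference is only one assumed form of a competition index, since the text above does not specify a formula.

```python
import numpy as np

def tag_amplitudes(trials, fs, tag_freqs):
    """Amplitude of the trial-averaged signal at each tagging frequency.

    trials: array of shape (n_trials, n_samples); the epoch should hold
    an integer number of cycles of every tagging frequency.
    """
    evoked = trials.mean(axis=0)                      # trial averaging
    n = evoked.size
    amplitude = 2 * np.abs(np.fft.rfft(evoked)) / n   # single-sided amplitude
    freq_axis = np.fft.rfftfreq(n, d=1 / fs)
    return {f: amplitude[np.argmin(np.abs(freq_axis - f))] for f in tag_freqs}

def competition_index(amp_a, amp_b):
    """Normalized difference in [-1, 1]; an assumed, illustrative index."""
    return (amp_a - amp_b) / (amp_a + amp_b)

# Simulated data: 50 trials of 2 s at 500 Hz with 12 Hz and 15 Hz tags.
rng = np.random.default_rng(0)
fs, n_samples, n_trials = 500, 1000, 50
t = np.arange(n_samples) / fs
signal = 1.0 * np.sin(2 * np.pi * 12 * t) + 0.5 * np.sin(2 * np.pi * 15 * t)
trials = signal + rng.normal(0.0, 2.0, size=(n_trials, n_samples))

amps = tag_amplitudes(trials, fs, (12.0, 15.0))
index = competition_index(amps[12.0], amps[15.0])
```

In a real design the index would be computed per participant and condition, with tagging frequencies counterbalanced as recommended above.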

Figure 2.

Grand mean steady-state visual evoked potential (ssVEP) at sensor Oz, averaged across 21 subjects, in a paradigm with faces superimposed on visual scenes similar to Wieser & Keil, 2014 (unpublished data). The depicted ssVEP contains a superposition of two driving frequencies (12 and 15 Hz), as shown by the frequency domain representation of the same signal (fast Fourier transform of the ssVEP in a time segment between 200 and 3000 ms) in the upper panel. The mean scalp topographies of both driving frequencies show clear activity peaks over visual cortical areas.

Note: The regularity observed here is the result of additively combining two sinusoidal time series of different frequency. This pooled signal is characterized by a slower oscillation of its overall envelope at the frequency of the difference between the two original sinusoids. In this example, an envelope cycle at 15–12 = 3 Hz is visible.
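The envelope described in this note follows from a standard trigonometric identity. As a simplified sketch, assume two cosines of equal amplitude and zero phase, which need not hold for recorded data:

$$\cos(2\pi f_1 t) + \cos(2\pi f_2 t) = 2\cos\big(\pi (f_2 - f_1)\, t\big)\,\cos\big(\pi (f_1 + f_2)\, t\big)$$

With $f_1 = 12$ Hz and $f_2 = 15$ Hz, the fast term oscillates at $(f_1 + f_2)/2 = 13.5$ Hz, and its amplitude is bounded by $2\,\lvert\cos(3\pi t)\rvert$, which repeats every $1/3$ s, that is, at the difference frequency of 3 Hz.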

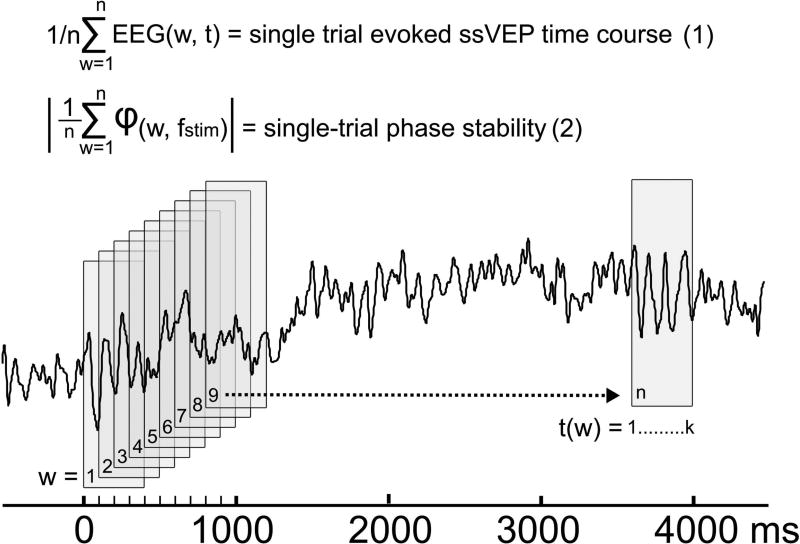

2.3 Trial-by-trial analysis of visual cortical activation

Due to their excellent signal-to-noise ratio, ssVEPs also offer the opportunity to track the dynamics of visuocortical changes at the level of single trials. Trial-by-trial estimates can be calculated by means of a moving window analysis (Keil et al., 2008). Here, a moving average window containing a fixed number of cycles of the ssVEP (e.g., four) is shifted in steps of one ssVEP cycle across the trial epoch. The resulting ssVEP averages can be transformed into the frequency domain by discrete Fourier transformation, and normalized by the length of the segment (Figure 3). It should be noted that this approach (which is analogous to linear deconvolution) quantifies only the part of the electrophysiological response that is stable across the trial, and precisely time and phase-locked to the driving stimulus throughout its duration. Oscillatory events related to the driving stimulus that vary in phase, or that systematically alter the phase across the viewing epoch will lead to a distortion or alteration of the phase and amplitude metrics obtained from moving window analysis. This same principle underlies signal averaging of ERPs, and represents a special case of cross-correlation in which the signal is correlated with an impulse function representing stimulus onsets (Regan, 1972).

Figure 3.

A simple approach for ssVEP estimation across a single trial. Indices of power and phase-locking can be extracted from single trials of ssVEP time series (Keil et al., 2008) by applying a simple moving average technique. As shown in the schematic, a window of fixed length k sample points, containing an integer number of ssVEP cycles (4 cycles at 10 Hz in the present example), is shifted along a single-trial ssVEP signal in n steps, spaced at intervals corresponding to the duration of one ssVEP cycle (here: 100 ms, corresponding to the 10 Hz ssVEP response). Equation 1. Point-wise averaging the contents of the n windows (w) yields a time-domain representation of the part of the signal that is locked to the ssVEP driving stimulus. Equation 2. Determining the complex phase φ at the ssVEP frequency (fstim) for each window (w) by means of discrete Fourier transform or a similar method allows phase averaging: Complex Fourier components at fstim for each w are unit normalized by dividing by the absolute value, and then averaged across all n windows. The modulus of the resulting complex number represents an intuitive measure of the stability of the phase at the ssVEP frequency across the duration of the trial. Note that application of the method requires that integer numbers of EEG sample points are available for the window length k, as well as for the step size between windows t1(w+1) − t1(w), achieved by following the recommendations by Bach and Meigen (1999). It should be noted that the resulting measure reflects the regular and stable alignment of the oscillatory waveform to the driving stimulus over time, but not the phase-consistency within the ssVEP signal.
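The moving window procedure described in this caption can be sketched as follows. This is an illustrative reading of the verbal description, not the published implementation: the function name `moving_window_ssvep`, the amplitude normalization, and the simulated 10 Hz trial are assumptions.

```python
import numpy as np

def moving_window_ssvep(trial, fs, f_stim, cycles_per_window=4):
    """Single-trial ssVEP estimate via a moving average window (sketch).

    Returns the window-averaged waveform, its amplitude at f_stim, and a
    phase-stability index across windows (0 = random, 1 = perfectly stable).
    """
    samples_per_cycle = fs / f_stim
    if samples_per_cycle != int(samples_per_cycle):
        raise ValueError("one ssVEP cycle must span an integer number of samples")
    step = int(samples_per_cycle)            # shift by one ssVEP cycle
    k = cycles_per_window * step             # window length in samples
    starts = range(0, len(trial) - k + 1, step)
    windows = np.array([trial[s:s + k] for s in starts])

    # Point-wise average across windows: the stimulus-locked part of the signal.
    window_mean = windows.mean(axis=0)

    # Fourier coefficient at f_stim for the average and for each window.
    t = np.arange(k) / fs
    basis = np.exp(-2j * np.pi * f_stim * t)
    amplitude = 2 * np.abs(window_mean @ basis) / k
    coeffs = windows @ basis

    # Unit-normalize each coefficient, average, and take the modulus.
    phase_stability = np.abs(np.mean(coeffs / np.abs(coeffs)))
    return window_mean, amplitude, phase_stability

# Simulated noise-free trial: 3 s of a 10 Hz response sampled at 500 Hz.
fs, f_stim = 500, 10.0
t = np.arange(1500) / fs
trial = 1.5 * np.sin(2 * np.pi * f_stim * t)
window_mean, amplitude, phase_stability = moving_window_ssvep(trial, fs, f_stim)
```

For this noise-free signal the recovered amplitude matches the simulated one and the phase-stability index approaches 1; with recorded EEG, both values drop as the response drifts in phase relative to the driving stimulus.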

These single-trial derived measures can then be examined across experimental conditions, for example in order to capture trial-by-trial changes in cortical facilitation that arise within an experimental learning block (Wieser, Miskovic, Rausch, & Keil, 2014b), and provide reliable estimates of short-term experience-dependent changes in visual cortex. This approach makes it possible to follow the time course of aversive or appetitive learning, could inform us about the time course of extinction of previously learned associations in visual cortex, and may therefore be especially suited for clinical applications such as exposure therapy.

2.4 Control over the stimulus dimension of interest

An important set of research questions concerns the putative role of physical stimulus parameters in the efficient and accurate perception of social cues. Specifically, these questions may pertain to the role of spatial frequency, color, brightness, contrast, and so on for conveying relevant aspects of social information. While these questions are relevant eo ipso, many physical dimensions engage specific neurophysiological sub-systems, thus providing researchers with potential avenues for functionally dissecting the vast array of parallel processes involved in social processing (see the following section). For instance, flicker-ssVEPs may be used to examine how the content of a given social stimulus affects luminance processing. Furthermore, reversing or modulating aspects of facial contrast in specific regions of the face provides an index of visual processing of that particular aspect or feature, which can then be examined using factorial experimental designs and appropriate behavioral tasks. Recently, a similar approach termed Fast Periodic Visual Stimulation has been applied to studies of face processing (e.g., Ales, Farzin, Rossion, & Norcia, 2012; Alonso-Prieto, Van Belle, Liu-Shuang, Norcia, & Rossion, 2013; Boremanse, Norcia, & Rossion, 2014; Liu-Shuang, Norcia, & Rossion, 2014). This approach uses spectral analyses of ssVEP signals evoked by a rapid presentation stream that periodically and intermittently includes a stimulus dimension of interest. The brain response to this recurring event or stimulus can then be quantified in the spectral domain, at the frequency at which it is embedded in the overall stream. Fast Periodic Visual Stimulation is thus another productive way of manipulating a specific stimulus dimension at a certain temporal rate and using the spectral representation of the signal to quantify the feature-specific response. 
For example, this approach has been used to investigate face identity discrimination by alternating different face identities after adaptation to one facial identity was induced through repeated presentation of that identity (Retter & Rossion, 2016). With regard to research on socio-emotional processing, this may help to investigate the importance of specific features such as the eye region in faces for emotional attention.
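
The idea of quantifying a periodically embedded event at its own frequency can be sketched as follows. All specifics are illustrative assumptions rather than details taken from the studies cited above: a base presentation rate of 6 Hz, an event of interest at every fifth position (1.2 Hz), simulated data, and a signal-to-noise ratio defined as the amplitude at the target bin divided by the mean amplitude of neighboring bins. The helper names `amplitude_spectrum` and `snr_at` are hypothetical.

```python
import numpy as np


def amplitude_spectrum(signal, fs):
    """Single-sided amplitude spectrum and the matching frequency axis."""
    n = signal.size
    amps = 2.0 * np.abs(np.fft.rfft(signal)) / n
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    return freqs, amps


def snr_at(freqs, amps, f_target, n_neighbors=10, skip=1):
    """Amplitude at f_target divided by the mean of surrounding bins.

    The `skip` bins directly adjacent to the target are left out on each side.
    """
    idx = int(np.argmin(np.abs(freqs - f_target)))
    lo = np.arange(idx - skip - n_neighbors, idx - skip)
    hi = np.arange(idx + skip + 1, idx + skip + 1 + n_neighbors)
    neighbors = np.concatenate([lo, hi])
    neighbors = neighbors[(neighbors > 0) & (neighbors < amps.size)]
    return amps[idx] / amps[neighbors].mean()


# Simulated recording: a general visual response at the base rate plus a
# smaller response at the rate of the embedded event, in Gaussian noise.
rng = np.random.default_rng(0)
fs, duration = 250, 20.0              # 20 s holds whole cycles of both rates
f_base, f_oddball = 6.0, 6.0 / 5      # assumed rates: 6 Hz stream, 1.2 Hz event
t = np.arange(int(fs * duration)) / fs
eeg = (1.0 * np.sin(2 * np.pi * f_base * t)
       + 0.5 * np.sin(2 * np.pi * f_oddball * t + 0.3)
       + rng.normal(0.0, 2.0, t.size))

freqs, amps = amplitude_spectrum(eeg, fs)
snr_oddball = snr_at(freqs, amps, f_oddball)
snr_control = snr_at(freqs, amps, 2.0)  # a frequency carrying no stimulation
print(f"SNR at {f_oddball:.1f} Hz: {snr_oddball:.1f}; at 2.0 Hz: {snr_control:.1f}")
```

Choosing a recording length that contains whole cycles of both rates places each frequency of interest on a single spectral bin, so the feature-specific response is not smeared across neighboring bins.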

2.5 Functional connectivity of cortical regions involved in social vision

Since ssVEP designs provide full experimental control over the frequency of neuronal stimulation and possess high signal-to-noise ratios, they provide some advantages for guiding estimates of event-related functional connectivity. Algorithms for quantifying electrophysiological functional connectivity assume stationarity of the underlying oscillators. Empirical EEG data, however, often exhibit dynamic shifts in frequency structure as a function of task demands or endogenous brain activity and this non-stationarity can lead to distortions in functional connectivity estimates (Cohen, 2015). Since the ssVEP technique implies an external, periodic force applied to the visual system, it may represent one way of stabilizing oscillator frequency and minimizing distortions arising from non-stationarity.

A recent study has examined the internal reliability of ssVEP based estimates of functional connectivity and demonstrated good split-half reliability when averaging as few as 10 artifact-free ssVEP trials (Miskovic & Keil, 2015). Recent work in aversive conditioning used ssVEP-based functional connectivity to characterize the long-range neural synchrony between reconstructed sources in the visual and higher-order pre-motor/motor and frontal cortices specifically during the perception of “avoidable” threats (Khodam Hazrati, Miskovic, Príncipe, & Keil, 2015; McTeague, Gruss, & Keil, 2015; Miskovic & Keil, 2014). For social neuroscience research, metrics of functional connectivity may help to further elucidate the extra-visual pathways involved in social perception and their modulation by different types of experimental contexts. For example, these analyses might clarify how early visual areas and higher-order visual areas in the ventral stream (e.g., fusiform gyrus) communicate in social perception through long-range neural synchrony. Specifically, connectivity metrics based on sustained engagement of visual cortices allow researchers to test hypotheses on re-entrant bias signals, thought to modulate visual cortical activity based on information computed in higher-order cortices or deep brain structures. In addition, the combination of single-trial estimates as mentioned above and functional connectivity analyses may further strengthen our knowledge about the temporal dynamics of cortical cross-talk (e.g., Keil et al., 2008; Lachaux et al., 2002).

Despite their obvious benefits, ssVEP-guided estimates of functional connectivity are not without limitations that need to be considered, both in terms of how they impact the interpretation of such metrics and in terms of the underlying methodological soundness of the analyses. One caveat is that periodic driving necessarily implies a common phase-reset mechanism, driven by the exogenous pacemaker-like visual stimulus, that could inflate estimates of cross-regional synchrony (i.e., the third variable problem). However, it is possible in principle to control for these effects using partial estimates of neuronal coherence (Nunez & Srinivasan, 2006), using phase-lagged indices of functional connectivity, or examining induced functional connectivity after subtracting out the contribution of exogenously driven phase locking. Similarly, volume conduction poses considerable challenges for any measure of cross-regional synchronization that is derived from surface-recorded electro-cortical signals. A number of solutions have been proposed in the field as ways of dealing with or minimizing the contribution of volume conduction, including the application of spatial filters and/or source localization estimates as a pre-requisite to calculating functional connectivity as well as the use of algorithms that are insensitive to linear signal mixing. Moreover, volume conduction can reasonably be expected to be constant across experimental conditions, so that a balanced and well-controlled experimental design can effectively address many of the issues concerning shared versus condition-specific connectivity patterns. Another consideration in the application and interpretation of ssVEP-based estimates of functional connectivity concerns the extent to which the rhythmic mode of stimulus delivery places strong constraints on signal propagation and the nature of cortical information transfer. 
As mentioned earlier, the ssVEP is believed to originate primarily from striate and early extra-striate regions, so that using it to study re-entrant signal flow from more anterior cortical regions might be problematic. There are several possible theories, each with some level of empirical support, concerning whether the ssVEP is strictly a local phenomenon and how it might propagate to more broadly distributed sources (Vialatte et al., 2010). The input frequency of the visual stimulus likely plays a significant role in shaping the extent of information transfer from visual to non-visual regions (Srinivasan, Fornari, Knyazeva, Meuli, & Maeder, 2007; Thorpe, Nunez, & Srinivasan, 2007). Taken together with evidence that the preferred oscillatory frequency varies across large-scale cortical regions (Rosanova et al., 2009), ssVEP paradigms may pose unique challenges for tracking cross-regional information flow. In addition to these frequency considerations, the spatial configuration of the visual stimuli (e.g., discrete checkerboard stimuli that activate small patches of visual cortex versus full-field flicker) also strongly influences the phase gradients of ssVEP signals that indicate whether the ssVEPs appear as traveling or standing waves (Burkitt, Silberstein, Cadusch, & Wood, 2000). These caveats notwithstanding, there is evidence that ssVEPs are not strictly local events and that they do engage broadly distributed functional networks under some contexts (Srinivasan et al., 2007), increasing the coupling between the visual cortex and non-visual structures (Lithari et al., 2016). Even so, the scalp-recorded, driven response does not inform researchers about the micro- and mesoscopic neural interactions that are well established through animal model research and invasive recordings in humans.
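
The difference between a conventional phase-locking measure and a phase-lagged index, as discussed above in connection with volume conduction, can be made concrete with a small simulation. This is a sketch under stated assumptions, not an analysis pipeline from the cited studies: the data are synthetic, the oscillation has a random phase on each trial, and the two measures chosen here (inter-site phase-locking value and the imaginary part of coherency) are only two of several possible options. The function names are hypothetical.

```python
import numpy as np


def coeff_at(trials, fs, f_tag):
    """Complex Fourier coefficient at f_tag for each trial (rows = trials)."""
    n = trials.shape[1]
    k = int(round(f_tag * n / fs))
    return np.fft.rfft(trials, axis=1)[:, k]


def phase_locking_value(xa, xb):
    """Modulus of the trial-averaged unit phasor of the phase difference."""
    return np.abs(np.mean(np.exp(1j * (np.angle(xa) - np.angle(xb)))))


def imaginary_coherency(xa, xb):
    """Imaginary part of coherency; close to zero for zero-lag linear mixing."""
    cross = np.mean(xa * np.conj(xb))
    power = np.sqrt(np.mean(np.abs(xa) ** 2) * np.mean(np.abs(xb) ** 2))
    return np.imag(cross / power)


rng = np.random.default_rng(1)
fs, f_tag, n_trials = 500, 15.0, 200
t = np.arange(fs) / fs                                  # 1-s epochs, 15 cycles
phase = rng.uniform(0, 2 * np.pi, size=(n_trials, 1))   # random phase per trial


def noise():
    return rng.normal(0.0, 0.5, size=(n_trials, t.size))


chan_a = np.sin(2 * np.pi * f_tag * t + phase) + noise()
# Zero-lag copy of the same source, as produced by instantaneous mixing.
chan_mixed = 0.8 * np.sin(2 * np.pi * f_tag * t + phase) + noise()
# Same source arriving with a consistent phase lag of a quarter of pi.
chan_lagged = np.sin(2 * np.pi * f_tag * t + phase - np.pi / 4) + noise()

xa = coeff_at(chan_a, fs, f_tag)
xm = coeff_at(chan_mixed, fs, f_tag)
xl = coeff_at(chan_lagged, fs, f_tag)

plv_mixed = phase_locking_value(xa, xm)
icoh_mixed = imaginary_coherency(xa, xm)
icoh_lagged = imaginary_coherency(xa, xl)
print(f"zero-lag mixing: PLV = {plv_mixed:.2f}, imaginary coherency = {icoh_mixed:.2f}")
print(f"lagged coupling: imaginary coherency = {icoh_lagged:.2f}")
```

In this simulation the zero-lag mixture yields a high phase-locking value although no lagged interaction exists, whereas the imaginary part of coherency stays near zero for the mixture and departs from zero only for the lagged signal. A fully stimulus-locked response with identical phase on every trial would produce high phase locking between any two channels, which is the common-drive caveat raised in the text and is not addressed by this sketch.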

3. ssVEP studies of socio-emotional cue perception

Although the use of ssVEPs in studies of social perception is relatively new, there is a larger literature using this methodology to probe the processing of more complex affective scenes. Across several studies conducted in multiple laboratories, researchers have reported that the flicker ssVEP is amplified when viewing emotionally engaging, compared to neutral, scenes. This effect has been described as particularly robust when using scenes containing threat or mutilation cues. Presenting pleasant, neutral, and unpleasant images from the IAPS at a rate of 10 Hz each for several seconds, Keil and collaborators (Keil et al., 2003) found higher ssVEP amplitude and accelerated phase (i.e. latency of the oscillatory cycle) for arousing, compared to calm pictures. These differences were most pronounced at central posterior as well as right parieto-temporal recording sites. The magneto-cortical counterpart of the ssVEP, the steady-state visual evoked magnetic field also varied with the emotional arousal ascribed to the picture content by the observers (Moratti, Keil, & Stolarova, 2004). In this latter study, source estimation procedures pointed to involvement of fronto-parietal attention networks in evoking and directing attentional resources towards the relevant stimuli. Generally, ssVEP-based paradigms are often characterized by the collection of robust data in short amounts of time, which highlights their appeal for translational and clinical research (e.g., Moratti, Rubio, Campo, Keil, & Ortiz, 2008; Moratti, Strange, & Rubio, 2015).

A research question that has prompted considerable debate is the extent to which endogenously cued shifts of attention and affective arousal modulate sensory processing in an additive or interactive manner. Crossing the factors of selective spatial attention and affective content, Keil and colleagues investigated the ssVEP amplitude and phase as a function of endogenous attention-emotion-interactions (Keil, Moratti, Sabatinelli, Bradley, & Lang, 2005). Participants silently counted random-dot targets embedded in a 10-Hz flicker of colored pictures presented to both hemifields. An increase of ssVEP amplitude was observed as an additive function of spatial attention and emotional content. Mapping the statistical parameters of this difference on the scalp surface, the authors found occipito-temporal and parietal activation contralateral to the attended visual hemifield. Differences were most pronounced during selection of the left visual hemifield, at right temporal electrodes. Results suggest that affective stimulus properties modulate the spatio-temporal process of information propagation along the ventral stream, being associated with amplitude amplification and timing changes in posterior and fronto-temporal cortex. Importantly, even non-attended affective pictures showed electrocortical facilitation throughout these sites, providing evidence in favor of the additive hypothesis.

3.1 Competition between natural scenes and task stimuli

To investigate competition of affective scenes for attentional resources in visual cortex, Müller et al. (2008) recorded ssVEPs to continuously presented randomly moving dots, flickering at 7.5 Hz. These dots provided a challenging attention task, as they were continuously in motion throughout the trial, and each dot changed its position in a random direction at every frame refresh. Occasionally, the dots produced a 35% coherent motion (targets), lasting for only 2 successive cycles of 7.5 Hz (i.e., 267 ms), which the participants were instructed to detect and indicate. In two studies employing this paradigm, the overall ssVEP amplitude evoked by the flickering dots was reduced when the dots were superimposed on emotionally arousing, compared to neutral, distractor pictures. This finding corroborated the behavioral results, which showed significant decreases in target detection rates when emotional compared to neutral pictures were concurrently presented in the background. This decline in task performance and electrocortical activity persisted for several hundred milliseconds (Hindi Attar, Andersen, & Müller, 2010; Müller et al., 2008). Such affective competition was even observed when the emotional pictures were presented for only 200 ms, which possibly provides evidence for a slow reentrant neural competition mechanism for emotional distractors, which continues after the offset of the emotional stimulus (Müller, Andersen, & Attar, 2011). In a further study, the attentional load of the task was manipulated such that participants either performed a detection (low load) or discrimination (high load) task at a centrally presented symbol stream that flickered at 8.6 Hz. 
Although behavioral data confirmed successful load manipulation with reduced hit rates and prolonged reaction times in high load compared to low load trials, ssVEP amplitudes elicited by the symbol stream did not differ with load, demonstrating that affective competition was not attenuated by attentional load (Hindi Attar & Müller, 2012). In a recent study with snake-fearful individuals, Deweese et al. (2014) showed that this competition effect is significantly larger in high-fear participants, where snake distractors elicited a sustained attenuation of task-evoked ssVEP amplitude, greater than the attenuation prompted by other unpleasant arousing content, indicating sustained hypervigilance to fear cues. Taken together, these studies demonstrate competition between affective and non-affective scene elements, and importantly, the feasibility of using the frequency-tagging method as a direct measure of sustained attentional resource allocation in early visual cortex.

3.2 Faces and facial expressions

In contrast to transient ERPs, ssVEPs elicited by face stimuli allow for a more fine grained examination of individual face discrimination, perceptual face detection (Rossion, 2014), sustained attention and facial expression discrimination (McTeague, Shumen, Wieser, Lang, & Keil, 2011), as well as the interaction of facial expressions and individual face identity (Gerlicher, van Loon, Scholte, Lamme, & van der Leij, 2013).

Recent studies have suggested that oscillatory stimulation of visual cortex with faces captures high-level visual processes, and provides robust indices of individual face discrimination without requiring any face-related behavioral task (Alonso-Prieto et al., 2013; Liu-Shuang et al., 2014; Rossion & Boremanse, 2011). Moreover, using ssVEPs, individual perception thresholds for face detection can be quantified. To accomplish this, Ales et al. (2012) presented phase-scrambled face stimuli alternating with a fully scrambled image at a rate of 3/s and measured the corresponding ssVEP using a novel sweep-based method, in which a stimulus is presented at a specific temporal frequency while parametrically varying (‘sweeping’) the detectability of the stimulus. They observed an abrupt response at the first harmonic (3 Hz) between 30% and 35% phase coherence for the face. This provides an objective perceptual face detection threshold that can be estimated in single participants from as few as 15 trials (Ales et al., 2012). Employing the same stimulation paradigm, it was recently demonstrated that inversion and contrast-reversal increase the threshold and modulate the supra-threshold response function of face detection (Liu-Shuang, Ales, Rossion, & Norcia, 2015). Using a different stimulation paradigm (luminance flicker), a further study investigated whether inversion-related ssVEP modulation depends on the stimulation rate at which upright and inverted faces are flickered (Gruss, Wieser, Schweinberger, & Keil, 2012). Amplitude enhancement of the ssVEP for inverted faces was found solely at higher stimulation frequencies (15 and 20 Hz). By contrast, lower frequency ssVEPs did not show this inversion effect. These findings suggest that stimulation frequency affects the sensitivity of ssVEPs to face inversion. 
In addition, the excellent SNR and the ability of the visual cortex to remain in a state of entrainment for minutes make this technology an excellent research tool for investigating adaptation in populations of visual neurons. In this vein, Gerlicher et al. (2013) investigated how facial expressions interact with facial identity by presenting the same face identity or different face identities flickering at a frequency of 3 Hz. The amplitude of the ssVEP was decreased for the same face identity as compared with different identities, as in previous research (Rossion & Boremanse, 2011). Most interestingly, however, this adaptation was attenuated in a linear fashion when faces contained happy, mixed, or fearful expressions, with the least degree of adaptation to fearful expressions. These results indicate that threat associated with fearful faces seems to prevent a reduction of driven neural activity over time, even if the actual face identity remains identical.

Facial expression processing was investigated in a ssVEP study that recorded responses to facial expressions that differed in valence (i.e., anger, fear, happy, neutral) in a group of individuals with low and high levels of social anxiety (McTeague et al., 2011). The authors found ssVEP amplitude enhancement for emotional (angry, fearful, happy) relative to neutral expressions only in high socially anxious individuals, and that this was maintained throughout the entire 3,500-ms viewing epoch. These data suggest that a temporally sustained, heightened visuocortical response bias toward affective facial cues is associated with generalized social anxiety. Interestingly, no affective modulation of face-evoked ssVEPs was evident in non-anxious individuals.

As previously established, ssVEPs also offer an excellent research tool to track the dynamics of visuocortical changes due to learning experiences (Keil, Miskovic, Gray, & Martinovic, 2013; Miskovic & Keil, 2013a; Miskovic & Keil, 2013b; Miskovic & Keil, 2014). In three recent studies, the time course and short-term cortical plasticity in response to affective learning of faces was investigated in classical conditioning paradigms (Ahrens, Mühlberger, Pauli, & Wieser, 2015; Wieser, Flaisch, & Pauli, 2014a; Wieser et al., 2014b). In one study, enhanced ssVEP amplitudes were observed for faces that were repeatedly paired with offensive nonverbal hand gestures (Wieser et al., 2014a). These results suggest that cortical engagement in response to faces aversively conditioned with nonverbal gestures is facilitated in order to establish persistent vigilance for social threat-related cues. This form of social conditioning involves the establishment of a predictive relationship between social stimuli and motivationally relevant outcomes. The same effect of cortical facilitation was found when faces were repeatedly paired with verbal insults, but interestingly visuocortical discrimination between negatively and positively conditioned faces was impaired in individuals with heightened levels of social anxiety (Ahrens et al., 2015). In another study it was demonstrated that direct-gaze face cues paired with aversive noise compared to averted-gaze face cues elicited larger ssVEP amplitudes during conditioning, whereas this differentiation was not observed when averted-gaze faces were paired with the aversive US (Wieser et al., 2014b). Importantly, a more fine-grained trial-by-trial analysis of visual cortical activation across the learning phase revealed a delayed build-up of cortical amplification for the averted-gaze face cues. 
This suggests that the temporal dynamics of visual cortex engagement with aversively conditioned faces vary as a function of the cue with gaze direction as an important modulator of the speed of the acquisition of the conditioned response.

Taken together, face-evoked ssVEPs provide insights into how the visual brain discriminates facial identities and expressions, how these processes are related to neural adaptation, and how configural processing of face entities may also take place in earlier visual cortices. Moreover, short-term functional response plasticity of the visual system due to social learning was demonstrated.

3.3 Competition between faces and/or task items

In addition to the aforementioned studies which predominantly tagged one visual stimulus at a time, ssVEP methodology allows for frequency-tagging as mentioned above, which provides a continuous measure of the visual resource allocation to a specific stimulus amid competing cues. In this vein, Wieser, McTeague, and Keil (2011) employed ssVEP methodology while participants viewed two facial expressions presented simultaneously to the visual hemifields at different tagging frequencies (14 vs. 17.5 Hz). Regardless of whether happy or neutral facial expressions served as the competing stimulus in the opposite hemifield, high socially anxious participants selectively attended to angry facial expressions during the entire viewing period. Most interestingly, this heightened attentional selection for angry faces among socially anxious individuals did not coincide with competition (cost) effects for the co-occurring happy or neutral expressions. Rather, perceptual resource availability appeared augmented within the socially anxious group in response to a cue connoting interpersonal threat. In a further study, competition between a change detection foreground task and task-irrelevant background face stimuli was investigated (Wieser, McTeague, & Keil, 2012). Task-irrelevant facial expressions (angry, neutral, happy) were spatially overlaid with a task-relevant Gabor patch stream, which required a response to rare phase reversals. Face and task stimuli flickered at different frequencies (15 vs. 20 Hz). A competition effect of threatening faces was observed solely among individuals high in social anxiety such that ssVEP amplitudes were enhanced at the tagging frequency of angry distractor faces, whereas at the same time the ssVEP evoked by the task-relevant Gabor grating was reliably diminished compared with conditions displaying neutral or happy distractor faces. 
Thus, threatening faces appear to capture and hold low-level perceptual resources in viewers symptomatic for social anxiety at the cost of a concurrent primary task.
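
Separating the responses to two concurrently flickering stimuli, as in the studies above, amounts to reading out the amplitude at each tagging frequency from the same recording. The following sketch uses the 14 and 17.5 Hz tags mentioned in the text; the sampling rate, the 2-s epoch, the simulated amplitudes, and the function name `tag_amplitudes` are assumptions made for illustration.

```python
import numpy as np


def tag_amplitudes(epoch, fs, tag_freqs):
    """Amplitude at each tagging frequency from one (averaged) epoch.

    Raises ValueError if a tagging frequency does not coincide with a DFT
    bin, i.e., if the epoch does not hold a whole number of its cycles.
    """
    n = epoch.size
    spectrum = 2.0 * np.abs(np.fft.rfft(epoch)) / n
    resolution = fs / n
    amplitudes = {}
    for f in tag_freqs:
        k = f / resolution
        if abs(k - round(k)) > 1e-9:
            raise ValueError(f"{f} Hz does not fall on a DFT bin")
        amplitudes[f] = spectrum[int(round(k))]
    return amplitudes


# Simulated epoch: two stimuli tagged at 14 and 17.5 Hz plus Gaussian noise.
# A 2-s epoch holds 28 and 35 whole cycles of the two tags, respectively.
rng = np.random.default_rng(2)
fs, duration = 500, 2.0
t = np.arange(int(fs * duration)) / fs
epoch = (1.2 * np.sin(2 * np.pi * 14.0 * t)        # response to stimulus A
         + 0.7 * np.sin(2 * np.pi * 17.5 * t)      # response to stimulus B
         + rng.normal(0.0, 0.3, t.size))

amps_by_tag = tag_amplitudes(epoch, fs, [14.0, 17.5])
for f, a in amps_by_tag.items():
    print(f"{f} Hz: {a:.2f}")
```

Because both responses are taken from the same epoch, a condition-related increase at one tag can be compared directly with a concurrent decrease (cost) or lack of change at the other tag.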

Frequency-tagging not only serves as an avenue for investigating competition among a multitude of facial stimuli, but it also provides a feasible paradigm to investigate how the perception of faces is influenced by concurrent contextual scenes, and vice versa, how the perception of faces changes our perception of their surroundings. To this end, Wieser and Keil (2014) investigated the mutual effects of facial expressions (fearful, neutral, happy) and affective visual context (pleasant, neutral, threat) by assigning two different flicker frequencies (12 vs. 15 Hz) to the face and the visual context scene. While processing of the centrally presented faces was unaffected, viewing fearful facial expressions amplified the ssVEP in response to threatening compared to other background contexts. These findings suggest that viewing a fearful face prompts heightened vigilance for potential threat in the surrounding visual periphery.

Frequency-tagging may also inform research into the fundamental brain processes mediating face perception, such as research that examines part-based and integrated (i.e., holistic/configural) encoding of facial features. Boremanse, Norcia, and Rossion (2014) flickered left and right halves of faces to isolate the repetition suppression effects for each part of a whole-face stimulus, changing or not changing face identity at every stimulation cycle. Frequency tagging the left and right halves of a face led to part-based repetition suppression at medial occipital electrodes, whereas integrated effects were found over the right occipito-temporal cortex. These observations provide objective evidence for a dissociation between part-based and integrated (i.e., holistic/configural) responses to faces in the human brain, showing not only that a whole face is different from the sum of its parts, but suggesting that only integrated responses may reflect high-level, face-specific representations.

4. Outlook, future directions, and caveats

This article reviewed recent work exploiting the unique properties of ssVEP methodology together with frequency tagging to gain further insights into the visual processing of social information. This technique has already been used within social cognition research to explore competition for attentional resources when multiple stimuli are present in the visual field simultaneously, as well as to track trial-by-trial changes in visuocortical activity as a result of learning.

As stated throughout the paper, several limitations of ssVEP based measures need to be considered in addition to the unique strengths offered by this method. For example, for the investigation of specific temporal dynamics in the millisecond range, ERP methodology tends to be preferable over ssVEP paradigms (e.g., Rossi & Pourtois, 2014). Another issue, subject to ongoing research in our own laboratories, is the influence of changes in pupil size on the ssVEP response. While there are no studies available directly investigating this topic, it has been recently demonstrated that pupil size can influence the initial feedforward response in human V1 as reflected by the C1 component of the EEG (Bombeke, Duthoo, Mueller, Hopf, & Boehler, 2016). Last but not least, researchers who investigate competition by presenting multiple stimulus displays under fixation instructions (and with appropriate control of fixation compliance) should be aware that in these experiments, only shifts of covert attention are measured. To investigate covert as well as overt shifts of attention, carefully designed studies using a combination of eye-tracking and ssVEP technology seem warranted. Moreover, eye-tracking would allow for the investigation of fixation-locked ssVEP responses.

Open research questions that can be profitably addressed in the future using this methodology include contextual modulations of socio-affective perception and vice versa. In this vein, a first study has used faces overlaid on visual scenes to investigate interactions of facial expressions and affective visual context (Wieser & Keil, 2014). In the future it will be of importance to use natural scenes to which the face actually belongs (contrary to being simply overlaid as in the latter study), to improve the ecological validity of scene-face interactions. Another relevant research question is how several features of faces interact and improve the perception of relevant social cues such as the expressions of the face. For instance, the separate analysis of visuocortical processing of the eyes and other regions (mouth, etc.) may help to clarify their respective relevance for social cognition. Recent studies using eye-tracking and fMRI point towards the relevance of the eye region for the perception of fearful faces as shown by reflexive orienting towards the eye region, which seems to be mediated by amygdala activity (Gamer & Buchel, 2009; Gamer, Schmitz, Tittgemeyer, & Schilbach, 2013). Frequency tagging approaches may contribute to clarifying how covert attentional mechanisms support the recognition of facial expressions by highlighting specific facial features. Also, single-trial analysis of electrocortical short-term response plasticity to investigate the development of social processing and impression formation, for instance in aversive as well as appetitive learning paradigms, represents another fruitful research avenue. This may not only help us to understand how individual differences in person perception develop, but also how person perception and social learning mechanisms are disrupted in psychiatric disorders (e.g., Ahrens et al., 2015). 
Other research directions involve exploring the competition of socio-affective stimuli, for instance competition of several faces (crowded attention) or competition of task-relevant and irrelevant social information. Finally, it is worth noting that steady-state entrainment can be elicited in other sensory modalities (e.g., auditory, somatosensory), creating opportunities for investigating multimodal stimulation and inter-modal competition of social information. For example, attentional prioritization of social information may be investigated when social cues are presented simultaneously via visual and somatosensory routes (e.g., faces and touch, faces and pain). It will be of interest to determine whether social information provided in one modality boosts the processing of social information in another modality or whether information presented in one modality can exert interfering effects on processing occurring in a second modality.

References

- Ahrens LM, Mühlberger A, Pauli P, Wieser MJ. Impaired visuocortical discrimination learning of socially conditioned stimuli in social anxiety. Social cognitive and affective neuroscience. 2015;10:929–937. doi: 10.1093/scan/nsu140. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ales JM, Farzin F, Rossion B, Norcia AM. An objective method for measuring face detection thresholds using the sweep steady-state visual evoked response. Journal of Vision. 2012;12 doi: 10.1167/12.10.18. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Alonso-Prieto E, Van Belle G, Liu-Shuang J, Norcia AM, Rossion B. The 6Hz fundamental stimulation frequency rate for individual face discrimination in the right occipito-temporal cortex. Neuropsychologia. 2013;51:2863–2875. doi: 10.1016/j.neuropsychologia.2013.08.018. [DOI] [PubMed] [Google Scholar]

- Andersen SK, Müller MM, Hillyard SA. Color-selective attention need not be mediated by spatial attention. Journal of Vision. 2009;9 doi: 10.1167/9.6.2. [DOI] [PubMed] [Google Scholar]

- Appelbaum LG, Norcia AM. Attentive and pre-attentive aspects of figural processing. Journal of Vision. 2009;9:18. doi: 10.1167/9.11.18. [DOI] [PubMed] [Google Scholar]

- Appelbaum LG, Wade AR, Vildavski VY, Pettet MW, Norcia AM. Cue-invariant networks for figure and background processing in human visual cortex. The Journal of Neuroscience. 2006;26:11695–11708. doi: 10.1523/JNEUROSCI.2741-06.2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bach M, Meigen T. Do's and don'ts in Fourier analysis of steady-state potentials. Documenta Ophthalmologica. 1999;99:69–82. doi: 10.1023/A:1002648202420. [DOI] [PubMed] [Google Scholar]

- Baldauf D, Desimone R. Neural mechanisms of object-based attention. Science. 2014;344:424–7. doi: 10.1126/science.1247003. [DOI] [PubMed] [Google Scholar]

- Bar M. Visual objects in context. Nature Reviews Neuroscience. 2004;5:617–629. doi: 10.1038/nrn1476. [DOI] [PubMed] [Google Scholar]

- Barrett LF, Mesquita B, Gendron M. Context in Emotion Perception. Current Directions in Psychological Science. 2011;20:286–290. doi: 10.1177/0963721411422522.

- Bombeke K, Duthoo W, Mueller SC, Hopf J-M, Boehler CN. Pupil size directly modulates the feedforward response in human primary visual cortex independently of attention. Neuroimage. 2016;127:67–73. doi: 10.1016/j.neuroimage.2015.11.072.

- Boremanse A, Norcia AM, Rossion B. Dissociation of part-based and integrated neural responses to faces by means of electroencephalographic frequency tagging. European Journal of Neuroscience. 2014;40:2987–2997. doi: 10.1111/ejn.12663.

- Burkitt GR, Silberstein RB, Cadusch PJ, Wood AW. Steady-state visual evoked potentials and travelling waves. Clinical Neurophysiology. 2000;111:246–258. doi: 10.1016/S1388-2457(99)00194-7.

- Buzsaki G, Peyrache A, Kubie J. Emergence of Cognition from Action. Cold Spring Harb Symp Quant Biol. 2015;79:41–50. doi: 10.1101/sqb.2014.79.024679.

- Capilla A, Pazo-Alvarez P, Darriba A, Campo P, Gross J. Steady-state visual evoked potentials can be explained by temporal superposition of transient event-related responses. PLoS One. 2011;6 doi: 10.1371/journal.pone.0014543.

- Clark A. Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behavioral and Brain Sciences. 2013;36:181–204. doi: 10.1017/S0140525X12000477.

- Cohen MX. Effects of time lag and frequency matching on phase-based connectivity. Journal of Neuroscience Methods. 2015;250:137–146. doi: 10.1016/j.jneumeth.2014.09.005.

- Deweese MM, Bradley MM, Lang PJ, Andersen SK, Müller MM, Keil A. Snake fearfulness is associated with sustained competitive biases to visual snake features: Hypervigilance without avoidance. Psychiatry Research. 2014;219:329–335. doi: 10.1016/j.psychres.2014.05.042.

- Deweese MM, Muller M, Keil A. Extent and time-course of competition in visual cortex between emotionally arousing distractors and a concurrent task. Eur J Neurosci. 2016;43:961–70. doi: 10.1111/ejn.13180.

- Di Russo F, Pitzalis S, Aprile T, Spitoni G, Patria F, Stella A, et al. Spatiotemporal analysis of the cortical sources of the steady-state visual evoked potential. Human Brain Mapping. 2007;28:323–334. doi: 10.1002/hbm.20276.

- Ding J, Sperling G, Srinivasan R. Attentional modulation of SSVEP power depends on the network tagged by the flicker frequency. Cereb Cortex. 2006;16:1016–29. doi: 10.1093/cercor/bhj044.

- Ekman P. An argument for basic emotions. Cognition and Emotion. 1992;6:169–200.

- Engel AK, Fries P, Singer W. Dynamic predictions: oscillations and synchrony in top–down processing. Nature Reviews Neuroscience. 2001;2:704–716. doi: 10.1038/35094565.

- Friston K, Kiebel S. Predictive coding under the free-energy principle. Philos Trans R Soc Lond B Biol Sci. 2009;364:1211–21. doi: 10.1098/rstb.2008.0300.

- Fuchs S, Andersen SK, Gruber T, Müller MM. Attentional bias of competitive interactions in neuronal networks of early visual processing in the human brain. Neuroimage. 2008;41:1086–1101. doi: 10.1016/j.neuroimage.2008.02.040.

- Fuster JM. Cortex and memory: emergence of a new paradigm. J Cogn Neurosci. 2009;21:2047–72. doi: 10.1162/jocn.2009.2128.

- Gamer M, Buchel C. Amygdala Activation Predicts Gaze toward Fearful Eyes. Journal of Neuroscience. 2009;29:9123–9126. doi: 10.1523/JNEUROSCI.1883-09.2009.

- Gamer M, Schmitz AK, Tittgemeyer M, Schilbach L. The human amygdala drives reflexive orienting towards facial features. Current Biology. 2013;23:R917–R918. doi: 10.1016/j.cub.2013.09.008.

- Gerlicher AMV, van Loon AM, Scholte HS, Lamme VAF, van der Leij AR. Emotional facial expressions reduce neural adaptation to face identity. Social Cognitive and Affective Neuroscience. 2013;9(5):610–614. doi: 10.1093/scan/nst022.

- Giabbiconi C-M, Jurilj V, Gruber T, Vocks S. Steady-state visually evoked potential correlates of human body perception. Experimental Brain Research. 2016:1–11. doi: 10.1007/s00221-016-4711-8.

- Gilbert C, Ito M, Kapadia M, Westheimer G. Interactions between attention, context and learning in primary visual cortex. Vision Res. 2000;40:1217–26. doi: 10.1016/S0042-6989(99)00234-5.

- Gilbert CD, Sigman M. Brain states: top-down influences in sensory processing. Neuron. 2007;54:677–96. doi: 10.1016/j.neuron.2007.05.019.

- Grossberg S. Adaptive Resonance Theory: how a brain learns to consciously attend, learn, and recognize a changing world. Neural Networks. 2013;37:1–47. doi: 10.1016/j.neunet.2012.09.017.

- Gruss LF, Wieser MJ, Schweinberger S, Keil A. Face-evoked steady-state visual potentials: effects of presentation rate and face inversion. Frontiers in Human Neuroscience. 2012;6 doi: 10.3389/fnhum.2012.00316.

- Herrmann CS. Human EEG responses to 1–100 Hz flicker: resonance phenomena in visual cortex and their potential correlation to cognitive phenomena. Experimental Brain Research. 2001;137:346–353. doi: 10.1007/s002210100682.

- Hindi Attar C, Andersen SK, Müller MM. Time course of affective bias in visual attention: Convergent evidence from steady-state visual evoked potentials and behavioral data. Neuroimage. 2010;53:1326–1333. doi: 10.1016/j.neuroimage.2010.06.074.

- Hindi Attar C, Müller MM. Selective Attention to Task-Irrelevant Emotional Distractors Is Unaffected by the Perceptual Load Associated with a Foreground Task. PLoS One. 2012;7 doi: 10.1371/journal.pone.0037186.

- Hochstein S, Ahissar M. View from the top: Hierarchies and reverse hierarchies in the visual system. Neuron. 2002;36:791–804. doi: 10.1016/S0896-6273(02)01091-7.

- Kastner S, De Weerd P, Pinsk MA, Elizondo MI, Desimone R, Ungerleider LG. Modulation of sensory suppression: implications for receptive field sizes in the human visual cortex. Journal of Neurophysiology. 2001;86:1398–1411. doi: 10.1152/jn.2001.86.3.1398.

- Kastner S, Ungerleider LG. Mechanisms of visual attention in the human cortex. Annual Review of Neuroscience. 2000;23:315–41. doi: 10.1146/annurev.neuro.23.1.315.

- Keil A. Electro-and Magneto-Encephalography in the Study of Emotion. The Cambridge Handbook of Human Affective Neuroscience. 2013:107.

- Keil A, Debener S, Gratton G, Junghöfer M, Kappenman ES, Luck SJ, et al. Committee report: Publication guidelines and recommendations for studies using electroencephalography and magnetoencephalography. Psychophysiology. 2014;51:1–21. doi: 10.1111/psyp.12147.

- Keil A, Gruber T, Müller MM, Moratti S, Stolarova M, Bradley MM, et al. Early modulation of visual perception by emotional arousal: evidence from steady-state visual evoked brain potentials. Cognitive, Affective, & Behavioral Neuroscience. 2003;3:195–206. doi: 10.3758/cabn.3.3.195.

- Keil A, Miskovic V, Gray MJ, Martinovic J. Luminance, but not chromatic visual pathways, mediate amplification of conditioned danger signals in human visual cortex. European Journal of Neuroscience. 2013;38:3356–62. doi: 10.1111/ejn.12316.

- Keil A, Moratti S, Sabatinelli D, Bradley MM, Lang PJ. Additive effects of emotional content and spatial selective attention on electrocortical facilitation. Cerebral Cortex. 2005;15:1187–1197. doi: 10.1093/cercor/bhi001.

- Keil A, Smith JC, Wangelin BC, Sabatinelli D, Bradley MM, Lang PJ. Electrocortical and electrodermal responses covary as a function of emotional arousal: A single-trial analysis. Psychophysiology. 2008;45:516–523. doi: 10.1111/j.1469-8986.2008.00667.x.

- Keitel C, Andersen SK, Muller MM. Competitive effects on steady-state visual evoked potentials with frequencies in- and outside the alpha band. Exp Brain Res. 2010;205:489–95. doi: 10.1007/s00221-010-2384-2.

- Keysers C, Perrett DI. Visual masking and RSVP reveal neural competition. Trends in Cognitive Sciences. 2002;6:120–125. doi: 10.1016/S1364-6613(00)01852-0.

- Khodam Hazrati M, Miskovic V, Príncipe JC, Keil A. Functional connectivity in frequency tagged cortical networks during active harm avoidance. Brain Connectivity. 2015;5(5):292–302. doi: 10.1089/brain.2014.0307.

- Kim Y-J, Grabowecky M, Paller KA, Suzuki S. Differential Roles of Frequency-following and Frequency-doubling Visual Responses Revealed by Evoked Neural Harmonics. Journal of Cognitive Neuroscience. 2011;23:1875–1886. doi: 10.1162/jocn.2010.21536.

- Kim Y-J, Verghese P. The Selectivity of Task-Dependent Attention Varies with Surrounding Context. The Journal of Neuroscience. 2012;32:12180–12191. doi: 10.1523/JNEUROSCI.5992-11.2012.

- Lachaux J-P, Lutz A, Rudrauf D, Cosmelli D, Le Van Quyen M, Martinerie J, et al. Estimating the time-course of coherence between single-trial brain signals: an introduction to wavelet coherence. Neurophysiologie Clinique/Clinical Neurophysiology. 2002;32:157–174. doi: 10.1016/S0987-7053(02)00301-5.

- Lamme VAF, Rodriguez-Rodriguez V, Spekreijse H. Separate Processing Dynamics for Texture Elements, Boundaries and Surfaces in Primary Visual Cortex of the Macaque Monkey. Cerebral Cortex. 1999;9:406–413. doi: 10.1093/cercor/9.4.406.

- Lithari C, Sanchez-Garcia C, Ruhnau P, Weisz N. Large-scale network-level processes during entrainment. Brain Research. 2016;1635:143–152. doi: 10.1016/j.brainres.2016.01.043.

- Liu-Shuang J, Ales J, Rossion B, Norcia AM. Separable effects of inversion and contrast-reversal on face detection thresholds and response functions: A sweep VEP study. Journal of Vision. 2015;15:11. doi: 10.1167/15.2.11.

- Liu-Shuang J, Norcia AM, Rossion B. An objective index of individual face discrimination in the right occipito-temporal cortex by means of fast periodic oddball stimulation. Neuropsychologia. 2014;52:57–72. doi: 10.1016/j.neuropsychologia.2013.10.022.

- Martens U, Trujillo-Barreto N, Gruber T. Perceiving the tree in the woods: segregating brain responses to stimuli constituting natural scenes. The Journal of Neuroscience. 2011;31:17713–17718. doi: 10.1523/JNEUROSCI.4743-11.2011.

- McTeague LM, Gruss LF, Keil A. Aversive learning shapes neuronal orientation tuning in human visual cortex. Nature Communications. 2015;6:7823. doi: 10.1038/ncomms8823.

- McTeague LM, Shumen JR, Wieser MJ, Lang PJ, Keil A. Social vision: Sustained perceptual enhancement of affective facial cues in social anxiety. Neuroimage. 2011;54:1615–1624. doi: 10.1016/j.neuroimage.2010.08.080.

- Miskovic V, Keil A. Perceiving Threat In the Face of Safety: Excitation and Inhibition of Conditioned Fear in Human Visual Cortex. The Journal of Neuroscience. 2013a;33:72–78. doi: 10.1523/JNEUROSCI.3692-12.2013.

- Miskovic V, Keil A. Visuocortical changes during delay and trace aversive conditioning: Evidence from steady-state visual evoked potentials. Emotion. 2013b;13:554. doi: 10.1037/a0031323.

- Miskovic V, Keil A. Escape from harm: Linking affective vision and motor responses during active avoidance. Social Cognitive and Affective Neuroscience. 2014;9:1993–2000. doi: 10.1093/scan/nsu013.

- Miskovic V, Keil A. Reliability of event-related EEG functional connectivity during visual entrainment: Magnitude squared coherence and phase synchrony estimates. Psychophysiology. 2015;52:81–89. doi: 10.1111/psyp.12287.

- Miskovic V, Martinovic J, Wieser MJ, Petro NM, Bradley MM, Keil A. Electrocortical amplification for emotionally arousing natural scenes: The contribution of luminance and chromatic visual channels. Biological Psychology. 2015;106:11–17. doi: 10.1016/j.biopsycho.2015.01.012.

- Moratti S, Clementz BA, Gao Y, Ortiz T, Keil A. Neural mechanisms of evoked oscillations: stability and interaction with transient events. Human Brain Mapping. 2007;28:1318–33. doi: 10.1002/hbm.20342.

- Moratti S, Keil A. Not what you expect: experience but not expectancy predicts conditioned responses in human visual and supplementary cortex. Cerebral Cortex. 2009;19:2803–2809. doi: 10.1093/cercor/bhp052.

- Moratti S, Keil A, Stolarova M. Motivated attention in emotional picture processing is reflected by activity modulation in cortical attention networks. Neuroimage. 2004;21:954–64. doi: 10.1016/j.neuroimage.2003.10.030.