Abstract

The Centers for Public Health Preparedness (CPHP) program was a five-year cooperative agreement funded by the Centers for Disease Control and Prevention (CDC). The program was initiated in 2004 to strengthen terrorism and emergency preparedness by linking academic expertise to state and local health agency needs. The purposes of the evaluation study were to identify the results achieved by the Centers and inform program planning for future programs. The evaluation was summative and retrospective in its design and focused on the aggregate outcomes of the CPHP program. The evaluation results indicated progress was achieved on program goals related to development of new training products, training members of the public health workforce, and expansion of partnerships between accredited schools of public health and state and local public health departments. Evaluation results, as well as methodological insights gleaned during the planning and conduct of the CPHP evaluation, were used to inform the design of the next iteration of the CPHP Program, the Preparedness and Emergency Response Learning Centers (PERLC).

Keywords: Program evaluation, Training, Education, Public health, Preparedness and response

1. Introduction

Evaluation of federally funded programs is critical for program planning, performance improvement, and determination of which programs warrant continued funding. In times of economic uncertainty and shrinking federal budgets, the role of evaluation becomes even more important. Recognition of the need for program evaluation and performance measurement has increased across the federal sector (Fredericks, Carman, & Birkland, 2002) and within public health specifically (DeGroff, Schooley, Chapel, & Poister, 2010).

The Government Performance and Results Act (GPRA), enacted by Congress in 1993, requires that federal agencies evaluate and report their activities on an annual basis. However, not all federal programs are required to evaluate or report through GPRA. The decision to evaluate a program, as well as the extent and complexity of the evaluation, is left to program officials, planners, and evaluators, who are often the same individuals. Ideally, every federally funded program would be evaluated through a thorough, unbiased, and scientifically rigorous plan. However, evaluations can be costly for an agency, both in direct costs and in contract costs. As a result, individual programs must determine the need for an evaluation on a case-by-case basis.

Many federally funded programs in public health employ basic monitoring techniques as a part of routine program management, but do not engage in more robust evaluations. Grantees may provide updates on progress outlined in work plans at several points throughout the year; evaluation of these types of federal programs usually requires grantees to provide basic information about their activities (Youtie & Corley, 2011).

The current study moved beyond routine monitoring and accountability and used a mixed-methods evaluation approach to determine whether the five-year program achieved its goals. The purpose of this article is to describe the evaluation study conducted at the end of the Centers for Public Health Preparedness (CPHP) program.

1.1. The Centers for Public Health Preparedness Program

The CPHP program was initiated in 2004 and was a five-year cooperative agreement funded by the Centers for Disease Control and Prevention (CDC). The program was based on the theory that academic institutions could play a role in meeting the training needs of State, local, tribal, and territorial health agencies, not only because of their expertise in training and educating students, but also because of their specific expertise in terrorism preparedness and emergency response. In theory, linking academic experts and their ability to develop, deliver, support, and evaluate competency-based training and education activities with health agency partners should result in a better-trained, more prepared workforce.

Specific program goals were to (1) strengthen public health workforce readiness through implementation of programs for lifelong learning; (2) strengthen capacity at the State, local, tribal, and territorial level for terrorism preparedness and emergency public health response; and (3) develop a network of academic-based programs contributing to national terrorism preparedness and emergency response capacity, by sharing expertise and resources across State and local jurisdictions. In support of these goals, 27 academic institutions representing accredited schools of public health were funded between fiscal years 2004 and 2009. Federal funding authority for the CPHP program was established by the Public Health Service Act, Sections 301(a) and 317(k)(2). The CPHP program formally closed on August 31, 2010.

The primary focus of CPHP program activities was the delivery of education and training, as well as the dissemination of new and emerging information related to terrorism preparedness and emergency response. CDC expected Centers to work closely with State and local health agencies to plan, implement, and evaluate activities designed to deliver competency-based training and education, meet specific identified needs of state and local public health agencies across jurisdictions, and build workforce preparedness and response capabilities (Richmond, Hostler, Leeman, & King, 2010).

Preparedness education activities were required to be either partner-requested based on a community need, or focused on academic or university students. The 27 Centers were located in only 23 states; however, the Centers conducted activities in all states and some U.S. territories. CPHP activities fell into four categories:

Education and training: These activities were developed to drive knowledge gain in the areas of preparedness and response. Examples included training courses, certificate programs, train-the-trainer programs, conferences, workshops, preparedness curriculum development, and internships.

Partner-requested (other than training): These activities were identified through partner requests, as needed, to support the analysis, design, development, implementation, or evaluation of training. Examples included exercises and drills that assessed participants’ knowledge to identify training needs, tools that identified training needs, and planning assistance.

Supportive: The Centers’ supportive activities included publications, site visits, technical assistance, facility development, and tools for dissemination of training products (e.g., learning management systems).

Network: To leverage and coordinate preparedness education and training resources and expertise among the Centers, CDC partnered with the Association of Schools of Public Health (ASPH) to assist with the coordination and facilitation of various CPHP network activities. Centers were expected to participate in annual CPHP grantee meetings, upload materials to a web-based CPHP Resource Center to maximize the outreach of all CPHP-developed preparedness education materials, and participate in collaboration groups designed to share resources, materials, and expertise across the network (Richmond et al., 2010).

1.2. Evaluation purpose

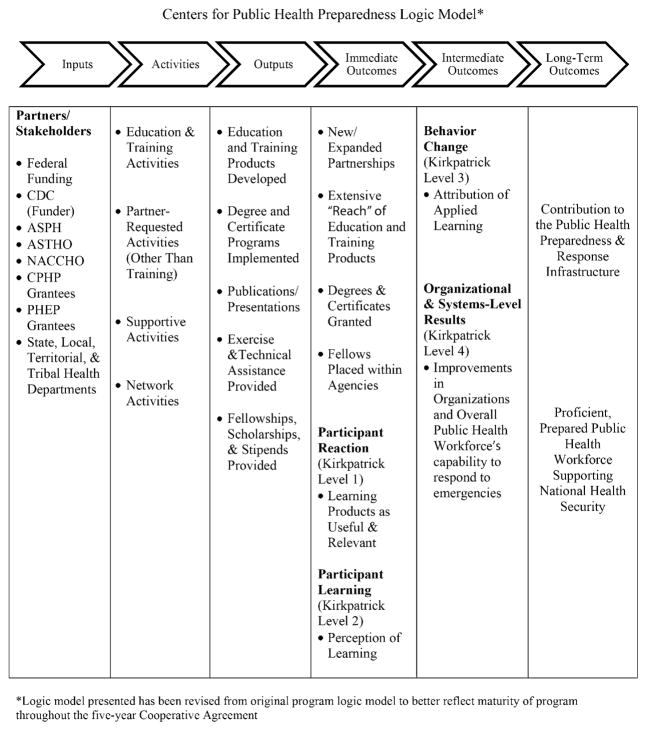

The purposes of the evaluation study were to (1) identify what results had been achieved by the Centers in aggregate; (2) determine the effectiveness of the CPHP program; and (3) inform evaluation and reporting requirements for the next iteration of the CPHP program, the CDC Preparedness and Emergency Response Learning Centers (PERLC). The strategy for the program evaluation was developed using the steps and standards outlined in the CDC’s Framework for Program Evaluation (Centers for Disease Control and Prevention, 1999). Evaluation questions for the study were informed by Kirkpatrick’s (2006) four levels of training evaluation and framed within a basic logic model structure (see Fig. 1) that delineates program outputs and immediate, intermediate, and long-term outcomes (McLaughlin & Jordan, 1999). In order to assess the effectiveness of the program and whether the original three goals had been achieved, the evaluation strategy focused on outputs and outcomes identified within the logic model; primary evaluation questions were mapped to logic model components (see Table 1).

Fig. 1.

Centers for Public Health Preparedness Logic Model. The logic model presented has been revised from the original program logic model to better reflect the maturity of the program throughout the five-year Cooperative Agreement.

Table 1.

Key logic model components and associated evaluation questions.

| Logic model component | Associated evaluation questions |
|---|---|
| Program outputs | What did the Centers develop or provide? |
| Immediate outcomes | Did CPHP training and education products reach target audience and result in new learning? |
| Intermediate outcomes | How were CPHP products, services, and expertise integrated into practice? |
| Long-term outcomes | What impact has the program had on the domestic public health preparedness and response workforce? |

2. Method

The CPHP program evaluation was summative and retrospective in its design and focused on the aggregate outcomes of the CPHP program. Evaluation data were gathered from three distinct sources in an attempt to decrease bias. As a result, the established methodology was complex; it was composed of various web-based surveys and telephone interviews and also relied on information housed within existing program monitoring databases maintained by program stakeholders. A representative from each of the Centers was invited to provide input into the evaluation plan, methodology, and associated data collection instruments. The Office of Management and Budget granted approval for the evaluation (OMB Number: 0920-0826; Expiration Date: October 31, 2010).

2.1. Participants

Grantees

Data were gathered from all 27 Centers. The Principal Investigator, Project Coordinator, and Project Evaluator (if applicable) provided their collective input.

Customers and partners of the CPHP program

Data were gathered from CPHP customers and partners, who were defined as those who play a key role in their organization’s emergency preparedness activities or may have used emergency preparedness training or technical assistance resources developed, delivered, or sponsored by the CPHP program. Customers and partners represented private sector, nonprofit, and state, local, territorial, and tribal government organizations.

Beginning in 2006, grantees of the Public Health Emergency Preparedness (PHEP) Cooperative Agreement were encouraged to partner with Centers and were required to document their work together in their biannual progress reports. As of 2008, CDC had awarded more than $6.3 billion in PHEP funding to 62 grantees, which include 50 states, eight territories and freely associated states, and four metropolitan areas. PHEP grantees, including state preparedness directors, were included in the evaluation study as customers and partners of the CPHP program.

National partners

Data were gathered from the CPHP program’s national partners: the CDC and the Association of Schools of Public Health (ASPH).

2.2. Summary of measures and procedure

Data collection began in June 2009 and was completed in April 2010. High-level methodological information, such as respondents, instruments, and dates of administration, is provided below. Participation in any evaluation activity was voluntary, and results remained confidential.

CPHP survey

The purpose of the web-based survey was to collect information from the grantees about the Centers, including their training and education activities, technical assistance activities, partnerships, and their perceptions of the CPHP program’s collective outcomes and impact. The survey instrument consisted of 23 open- and closed-ended items. Principal Investigators, Project Coordinators, and Project Evaluators participated collectively and were counted as one unit of analysis. Twenty-seven Centers participated (Response Rate = 100%).

CPHP interview

The purpose of the semi-structured telephone interview was to collect detailed information about each Center’s experience in implementing the CPHP program and its opinions regarding the program’s impact on public health preparedness. An interviewer guide consisting of 14 open-ended items was used during the interviews to standardize questions and focus discussion. Principal Investigators, Project Coordinators, and Project Evaluators were sent the interview questions in advance, participated collectively, and were counted as one unit of analysis. A contractor to CDC facilitated the interviews, and scribes were present to record participant responses. Interviews were audio recorded. Twenty-seven Centers participated (Response Rate = 100%).

CDC and ASPH-maintained databases

The purpose of utilizing select data from both CDC and ASPH-maintained databases was to allow for calculation of aggregate results using information submitted by the Centers throughout the program period. Biannually, the Centers provided requested information to CDC regarding training and education activities and partnerships. Data were stored in a secure database and used for reporting and evaluation purposes. Information about Center faculty and staff publications was maintained by ASPH. Twenty-seven Centers participated (Response Rate = 100%).

Customer–partner survey

The purpose of the web-based survey was to gather information related to customers and partners’ experiences and satisfaction with the CPHP program. The survey consisted of 27 open and closed-ended items. Of the 181 individuals who were given the opportunity to complete the survey, 91 participated (Response Rate = 50%).
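The response rates reported in this section are simple ratios of participating units to those invited. The short Python sketch below is illustrative only and is not part of the original evaluation tooling; the helper name `response_rate` is hypothetical, and the counts are those stated above.

```python
def response_rate(respondents: int, invited: int) -> float:
    """Return the percentage of invited units that participated."""
    if invited <= 0:
        raise ValueError("invited must be positive")
    if not 0 <= respondents <= invited:
        raise ValueError("respondents must be between 0 and invited")
    return 100.0 * respondents / invited


# (participants, invited) counts as reported in this section.
counts = {
    "CPHP survey": (27, 27),
    "CPHP interview": (27, 27),
    "CDC and ASPH-maintained databases": (27, 27),
    "Customer-partner survey": (91, 181),
}

rates = {name: response_rate(*pair) for name, pair in counts.items()}

for name, rate in rates.items():
    print(f"{name}: {rate:.1f}%")
```

The customer–partner figure works out to about 50.3%, which rounds to the 50% reported above.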

2.3. Data analysis plan

Data collected through the web-based tool were transferred to the Windows-based statistical software Statistical Package for the Social Sciences (SPSS). Frequency analysis was conducted for all closed-ended items, and qualitative analysis was conducted for open-ended items. For each open-ended item, respondents’ comments were coded by common theme and aggregated. Each data collection instrument was analyzed separately.
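The frequency analysis described above was run in SPSS. As a rough, hypothetical illustration of the same logic (not the authors' procedure), the Python sketch below tallies responses to one closed-ended agreement item and collapses “agree” and “strongly agree” into the single percentage used throughout the Results. The five-point scale labels, the treatment of skipped items as missing, and the sample responses are all assumptions, not study data. Computing percentages over the valid responses for each item may be one reason the n behind a given percentage varies from item to item.

```python
from collections import Counter

# Hypothetical five-point agreement scale (labels are an assumption).
SCALE = ["strongly disagree", "disagree", "neutral", "agree", "strongly agree"]
TOP_TWO = {"agree", "strongly agree"}


def valid_responses(responses):
    """Drop skipped or unrecognized answers (treated as missing)."""
    return [r for r in responses if r in SCALE]


def frequency_table(responses):
    """Map each scale point to (count, percent of valid responses)."""
    valid = valid_responses(responses)
    counts = Counter(valid)
    n = len(valid)
    return {
        point: (counts[point], 100.0 * counts[point] / n if n else 0.0)
        for point in SCALE
    }


def top_two_box(responses):
    """Return (count, percent) answering 'agree' or 'strongly agree'."""
    valid = valid_responses(responses)
    n_agree = sum(1 for r in valid if r in TOP_TWO)
    return n_agree, (100.0 * n_agree / len(valid) if valid else 0.0)


# Hypothetical answers to one item; None marks a skipped question.
item = ["agree", "strongly agree", "neutral", "agree", None,
        "disagree", "strongly agree", "agree"]

for point, (count, pct) in frequency_table(item).items():
    print(f"{point:>17}: {count} ({pct:.1f}%)")

n_agree, pct_agree = top_two_box(item)
print(f"agreed or strongly agreed: {pct_agree:.0f}% (n = {n_agree})")
```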

3. Results

Program results, attributed to the CPHP program, are presented below by logic model component and associated evaluation question. The components follow a basic logic model structure that delineates program outputs and immediate, intermediate, and long-term outcomes. Components were populated using a combination of grantee requirements and program activities outlined in the Funding Opportunity Announcement (FOA) and Kirkpatrick’s (2006) four levels of training evaluation. The logic model presented (see Fig. 1) was adapted from the original program logic model and better reflects the maturity of the program across the five years of the Cooperative Agreement. Primary evaluation questions were developed by CDC program staff and mapped to the logic model components.

3.1. Program outputs: what did the Centers develop or provide?

Education and training products

The primary output of the CPHP program was the successful development and delivery of education programs and training products and tools on preparedness and emergency response for pre-service and existing public health workers. A total of 1870 education and training products were developed using full or partial funding from the CPHP Cooperative Agreement; these offerings are available to the practice community and/or enrolled academic students. CPHP products are primarily competency-based training courses that are classroom-based, distance-based, or use a blended approach to learning. Learners can access these training courses in person (e.g., through a conference, symposium, workshop, or classroom-based offering), through the internet (e.g., web-based courses and simulations, satellite broadcasts, podcasts, and webinars), or through a combination of the two. The topic areas of these courses are diverse; examples include risk communication, domestic preparedness, pandemic influenza, incident command for public health, natural disasters, leadership, environmental safety and preparedness, field epidemiology for surge capacity/outbreak response, and occupational safety/worker preparedness. In addition, Centers developed graduate-level curricula, certificate programs, leadership programs, internship and student response programs, conferences, online portals or Learning Management Systems, evaluation frameworks, and educational materials such as CD-ROMs, audio files for training, toolkits, and newsletters.

Degree/certificate programs and publications

Centers reported that 34 degree and certificate programs with specializations in public health preparedness and response were implemented using complete or partial funding from the CPHP Cooperative Agreement. In addition, members of the CPHP network produced at least 276 publications based on CPHP work and gave 538 presentations.

Exercise and technical assistance

Centers also provided exercise and technical assistance to their partners throughout the program. Exercise assistance included consultation and assistance in planning and delivering discussion-based and operations-based exercises (including simulations, drills, tabletop exercises, and full-scale exercises), performance evaluation planning using the Homeland Security Exercise and Evaluation Program (HSEEP), and the development of After Action Reports (AARs) and Improvement Plans. Other types of assistance included developing assessment tools (including those used to conduct risk and needs assessments), providing emergency response support during disasters, assisting with surveillance activities, providing preparedness and emergency response expertise to partners, helping develop preparedness and emergency response plans, supporting evaluation activities, and facilitating collaboration among multiple partners for preparedness and emergency response projects and meetings.

Fellowships, scholarships, and stipends

Seventy-eight percent (78%; n = 21) of Centers provided fellowships or internships using funding from the CPHP Cooperative Agreement, awarding a total of 326 internships or fellowships. In addition, 62% (n = 10) of Centers provided academic scholarships and stipends to the practice community and/or enrolled academic students. In total, 290 academic scholarships and stipends were provided, with an estimated value of more than two million dollars.

3.2. Immediate outcomes: did products reach target audiences and result in new learning?

Partnerships

Centers partnered with the practice community to develop and deliver relevant education and training products or to provide expertise or consultation. Results indicated that the Centers established partnerships with state health departments (n = 20); government (non-health) agencies at the regional, state, city, local, or community level (n = 19); academic institutions, including other Centers (n = 18); city, county, or local health departments (n = 15); and federal agencies such as the Federal Bureau of Investigation (FBI), the Federal Emergency Management Agency (FEMA), and the Department of Natural Resources. Sixty-five percent (65%) of customers and partners (n = 50) reported that, as a result of the CPHP program, they had developed new or expanded partnerships with other agencies or organizations.

Centers defined their partnerships geographically and reported that partnerships ranged from a one-time arrangement to deliver a seminar or conference to an enduring relationship that matured over time. Centers most frequently reported that their partners provided assistance and feedback in the development and delivery of trainings (n = 12), identified the need for a specific training (n = 10), expanded the reach of the Centers by promoting their trainings to their own staff and partners (n = 6), and provided content expertise (n = 5). The majority of customer and partner respondents (65%; n = 59) reported monthly or weekly interactions with a CPHP; 22% (n = 19) reported yearly interaction. Perceptions of the program differed significantly between customers and partners who reported more frequent interaction with Centers and those who reported less frequent interaction. Customers and partners with more frequent interaction were significantly more likely to report that their organizations’ response capabilities had improved as a result of using CPHP training, services, or products (t(55) = −2.68, p < .01) and that the CPHP program was an important resource that helped provide emergency response surge support during emergencies (t(59) = −2.46, p < .05).
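The t statistics above compare two groups of respondents. A minimal sketch of Student's independent-samples t statistic with pooled variance is shown below in Python. The report does not state which t-test variant was used, so the pooled-variance form (df = n1 + n2 − 2), the function name `pooled_t`, and the sample ratings are assumptions for illustration only, not study data. The sign of t depends only on group order: listing the less-frequent-interaction group first yields a negative value when that group's mean rating is lower.

```python
import math
from statistics import mean, variance


def pooled_t(group_a, group_b):
    """Student's independent-samples t statistic using a pooled variance.

    Returns (t, df). t is negative when group_a's mean is below group_b's.
    """
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two observations")
    df = n_a + n_b - 2
    # statistics.variance is the sample variance (n - 1 denominator).
    pooled_var = ((n_a - 1) * variance(group_a)
                  + (n_b - 1) * variance(group_b)) / df
    std_err = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(group_a) - mean(group_b)) / std_err, df


# Hypothetical 1-5 agreement ratings, NOT study data.
less_frequent = [3, 2, 4, 3, 3]   # e.g., yearly interaction with a Center
more_frequent = [4, 5, 4, 4, 5]   # e.g., monthly or weekly interaction

t, df = pooled_t(less_frequent, more_frequent)
print(f"t({df}) = {t:.2f}")  # prints t(8) = -3.50
```

Obtaining a p-value additionally requires the t distribution; in practice a statistics package such as SPSS (used in this study) or SciPy's `scipy.stats.ttest_ind`, whose default `equal_var=True` corresponds to this pooled-variance test, would supply it.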

“Reach” of educational and training activities

A total of 778,038 learners were “reached” through CPHP-produced products and programs. “Reach” was a single measure collected for all education and training activities and products; it could include classroom-based trainings, distance learning formats, and materials distributed to target audiences. Because the reporting system used to enter and calculate “reach” was not initiated until year two of the program, “reach” covers years two through six of the cooperative agreement.

To increase access to CPHP products and extend their “reach,” Centers posted their education and training offerings on various Learning Management Systems (LMSs) and offered continuing education credits to learners. Centers listed their offerings on LMSs maintained by state or local governments (73%; n = 19), LMSs maintained by the Centers (58%; n = 15), and LMSs maintained by universities (31%; n = 8). In addition, 73% (n = 19) of Centers listed their education and training offerings in other locations such as professional organizations’ electronic mailing lists, various newsletters, flyers and brochures, the CPHP website, mailings to students and alumni, and ASPH’s Friday Letter. More than 1040 CPHP education and training products offer continuing education credits.

Degrees and placement of fellows

Results indicated that 1325 degrees and certificates with a specialization in public health preparedness and response were granted using complete or partial funding from the CPHP Cooperative Agreement. In addition, over 210 fellows or interns were placed within state and local government agencies.

Participant reaction

CPHP customers and partners rated their satisfaction with training and education products, as well as whether the offerings resulted in increased learning and improved skills among participants from their organization or partners.

Results indicated that 83% of customers and partners (n = 70) were “satisfied,” “very satisfied,” or “extremely satisfied” with CPHP training and education activities. Specifically, 83% (n = 68) were satisfied with the range of training/educational topics, 81% (n = 67) were satisfied with the delivery methods for CPHP-sponsored courses, and 75% (n = 62) were satisfied with their opportunities for input on the development, implementation, or evaluation of CPHP program activities. In addition, results suggested that 78% of customers and partners (n = 62) “agreed” or “strongly agreed” that CPHP-delivered educational/training offerings were relevant to the preparedness and response workforce needs of their organization and/or their partners.

An additional indicator of satisfaction with training and education activities was the customers and partners’ perceptions of the CPHP program as an important resource to their organization. More than half of the customers and partners “agreed” or “strongly agreed” that the CPHP program was an important resource that helped to meet the education and training needs (67%; n = 56), the exercise planning, facilitation, and evaluation needs (61%; n = 50), and the emergency response planning needs (59%; n = 49) of their organization.

Participant learning

Seventy-seven percent (77%) of customers and partners (n = 62) “agreed” or “strongly agreed” that CPHP-delivered education and training offerings resulted in increased knowledge among participants from their organization or their partners. Sixty-nine percent (69%; n = 56) “agreed” or “strongly agreed” that the offerings resulted in improved skills.

3.3. Intermediate outcomes: how were products, services, and expertise integrated into practice?

For purposes of this evaluation, intermediate outcomes were defined as the attribution of individual and organizational behavior or performance change to the use of CPHP training, services, or products.

Behavior change

Forty-five percent (45%; n = 34) of customers and partners “agreed” or “strongly agreed” that changes observed in employees’ on-the-job behavior could be attributed to the use of CPHP training, services, or products. In addition, 53% of customers and partners (n = 39) “agreed” or “strongly agreed” that their organization’s exercise program improved and 63% (n = 49) “agreed” or “strongly agreed” that the response capabilities of their organization improved as a result of using CPHP trainings, services, or products. Respondents identified key CPHP-delivered trainings, products, services, or other activities that have improved the preparedness and emergency response capability of their organization. Examples included specialized trainings on topics such as Personal Protective Equipment (PPE), Joint Information Centers (JIC), Weapons of Mass Destruction (WMD), National Incident Management System (NIMS) and Incident Command System (ICS), and Psychological First Aid. Customers and partners also identified certificate and degree programs such as those related to Bioterrorism Preparedness, Environmental Health in Disasters, and Leadership Communications, as well as Centers’ assistance with exercises and conference planning as activities that have improved the preparedness and emergency response capability of their organization.

University students and practitioner students enrolled in degree and certificate programs at schools of public health applied learning from preparedness and response-oriented coursework to emergency response conditions. Centers reported that their students participated in responses (e.g., disease outbreak, hurricane response, bridge collapse, earthquake, and vaccination clinics; n = 21) and in exercises (n = 7). In addition, 70% of Centers (n = 19) supported a university student response team that assists in public health emergencies and outbreaks; these response teams gave students the opportunity to apply learning from coursework to emergency response conditions. Centers supported at least 172 responses to public health emergencies and outbreaks (e.g., Hurricanes Dolly and Katrina, hepatitis A and H1N1 influenza outbreaks, and Midwest flooding).

Organizational and system level results

Sixty-one percent (61%) of customers and partners (n = 49) “agreed” or “strongly agreed” that the response capabilities of the overall public health workforce improved as a result of the CPHP program. Respondents attributed these improvements to the development and delivery of specialized educational and training-related products such as courses and degree and certificate programs (n = 23), and the technical assistance provided to assist preparedness planning and response activities such as exercise planning and execution (n = 10).

3.4. Long-term outcomes: What impact has the program had on the workforce?

Centers were given the opportunity to provide input on long-term academic and infrastructure outcomes of the program, such as whether the evidence base for public health emergency preparedness and response practice had improved and whether the number of qualified public health professionals entering the preparedness and response field had increased as a result of the CPHP program.

Contribution to infrastructure

Centers reported that the public health preparedness and response infrastructure was augmented by the CPHP program through the increased number of individuals who participated in trainings or utilized training products (n = 18), the development and strengthening of traditional and non-traditional partnerships (n = 16), the assistance provided to fill gaps through needed trainings, resources, or expertise (n = 9), the large amount of technical assistance for needs assessments, exercises, and evaluations to increase preparedness and emergency response capacity (n = 8), and the linking of the academic and practice communities (n = 6). In addition, Centers reported that the evidence-base improved or expanded through the use of assessments and evaluation for trainings and exercises (n = 11), an increase in the number of publications and presentations (n = 10), the Centers’ effective use of technology to deliver innovative, interactive trainings (n = 9), and an increase in the number of specialized training materials and products (n = 9). However, 18 Centers reported that the impact on the evidence-base for public health emergency preparedness and response practice was limited due to the Centers’ inability to conduct research and the lack of quantifiable measurement of the program.

Proficient, prepared public health workforce supporting national health security

Centers reported that the number of qualified public health professionals entering the preparedness and response field increased as a result of the CPHP program. Centers reported that at least 112 students from their institution’s degree or certificate programs accepted a position of employment related to public health preparedness and response after graduation. Centers commented that their students have accepted jobs with a specific public health preparedness and response role (n = 15), accepted jobs within federal agencies (n = 13), worked within state, city, or local health departments (n = 9), entered academia to study or teach within the preparedness and emergency response field (n = 9), and have accepted jobs within state agencies (n = 5).

4. Discussion

4.1. Overview of results

Results suggested that a large quantity of training and education products for enrolled academic students and the public health practice community were developed; output was extensive. Partnerships between the public health practice community (including federal agencies, State and local health departments, non-profit organizations, and tribal entities) and academic institutions were expanded and enhanced. In addition, CPHP education and training offerings were perceived as relevant to the preparedness and emergency response workforce needs. Students of CPHP education and training offerings gained knowledge and improved skills in public health preparedness and response. The number of individuals (pre-service and members of the existing workforce) trained in public health preparedness and response has increased.

Customers and partners reported improvements in their organizations’ exercise programs and reported that both the response capabilities of their organizations and those of the overall public health workforce improved as a result of the CPHP program. Although Centers reported that assessing long-term outcomes of the CPHP program was limited by their inability to conduct research and by the lack of formal, quantifiable measurement throughout the program, aggregate evaluation results suggested some long-term outcomes, primarily as a result of the expansive and innovative CPHP education and training offerings. These offerings contributed to the preparedness and emergency response infrastructure and improved the evidence base for public health emergency preparedness and response practice. Finally, the number of qualified public health professionals entering the preparedness and response field increased as a result of the CPHP program.

In summary, CDC’s investment in the Centers has resulted in progress towards achieving the original program goals, as stated in the FOA. The degree to which this study yielded evidence of goal achievement varied by goal. These findings are described per goal in Table 2. Improved on-the-job performance of workers and enhanced organizational capabilities are important indicators of successful training programs. While this study may not have yielded clear evidence of impact on these indicators at an aggregate level, individual Centers may have achieved success in transfer of learning to the job and contributed to preparedness and response capabilities in the organizations and communities they serve.

Table 2.

Summary of evaluation results by CPHP program goal.

| Program goal | Results |
|---|---|
| Strengthen public health workforce readiness | |
| Strengthen capacity at State and local levels for preparedness and response | |
| Develop a network of academic-based programs contributing to national preparedness and response | |

4.2. Limitations of study

The samples for all methods used in the study were convenience samples; random sampling techniques were not used. Centers were informed that their responses would not affect their funding or the way in which they were treated. However, they may still have perceived a possibility of financial gain or loss from participating in the study. Attempts were made to reduce bias by including customers and partners of the CPHP program. To increase the sample size, however, even this sample included customers and partners identified by the Centers themselves. Because the study was conducted with specific groups of individuals, participants were homogeneous, which may have limited the range of roles and responsibilities within an organization that the data reflect. The small sample size of the National Partner Survey was also a limitation. Finally, all of the instruments relied on self-report, and there was no observable counterfactual.

4.3. Lessons learned

Many lessons were learned from the planning, administration, analysis, and reporting of the CPHP evaluation. There are inherent challenges to conducting any evaluation, especially of federally funded national programs (Edwards, Orden, & Buccola, 1980; Potter, Ley, Fertman, Eggleston, & Duman, 2003). Participation in program-level evaluation activities is often a requirement within an FOA, as it was in the CPHP program. Even so, grantees may perceive evaluation as burdensome (Bernstein, 1991; Gronbjerg, 1993; Rogers, Ahmed, Hamdallah, & Little, 2010) and fear comparison to other grantees (Fredericks et al., 2002). As noted under study limitations, they may also worry that evaluation results could reflect negatively on the program and affect funding. Attempts were made to alleviate these concerns. Nevertheless, the end-of-program evaluation could have been stronger had program planners been less dependent on grantees' calculations and perceptions during the last year of the program. It is extremely challenging to conduct an end-of-program evaluation when reliable, focused evaluation efforts have not been in place throughout the life of the program to complement routine program monitoring. Evaluation development and implementation should proceed in parallel with FOA development so that program objectives and priorities can be identified and measured at any point during the project period.

In addition, it is important that stakeholders and grantees be included in evaluation activities and have the opportunity to contribute actively to planning, instrument design, and reporting. A consultation committee of grantees and national partners was established for this evaluation. It would have been helpful, however, to have this group in place for the entire project period so that each grantee felt empowered to evaluate its own activities.

As identified above, this evaluation yielded limited evidence that CPHP training and educational activities (as a whole) improved the on-the-job performance of public health workers or affected state, tribal, local, or territorial public health preparedness and emergency response capabilities. Individual Centers may have achieved transfer of learning to the job and contributed to preparedness and response capabilities in the communities they serve. However, the current evaluation study could not derive such evidence at an aggregate level. The following factors impeded the evaluation of the CPHP program and may account for the lack of impact-level results:

- The CPHP FOA allowed for significant variability in grantee training activities and products, target audiences, and measurement and evaluation activities.
- Individual Centers were not required to base their training and education activities on one common, standardized competency framework; as a result, assessing participant learning and outcomes at the aggregate level was not possible.
- Due to the nature and diversity of the program and its grantees, program partners and stakeholders did not set expectations about what constitutes acceptable evaluation findings (e.g., percentage of customers and partners satisfied, number of people reached).
- Individual Centers conducted few long-term outcome- and impact-level evaluations, which limited the body of evidence available to draw on.
- CDC's CPHP program monitoring and reporting focused on outputs and process measures rather than outcomes.

4.4. Moving forward

The enactment of the Pandemic and All-Hazards Preparedness Act (PAHPA) in 2006 required a refocusing and consolidation of health professions curricula development and training programs. In the case of the CPHP program, PAHPA authorized the development and delivery of core competency-based curricula and training for the public health workforce. This legislation provided an opportunity to apply lessons learned from this evaluation to the design and development of the next iteration of the CPHP program, titled Preparedness and Emergency Response Learning Centers (PERLC).

Preliminary evaluation results, as well as methodological insights gleaned during the planning and conduct of the CPHP evaluation, were used to inform (a) the design of the PERLC Funding Opportunity Announcement (FOA) and (b) the PERLC program evaluation plan and grantee reporting requirements. The specific corrective actions applied to the PERLC program in response to the evaluation process and findings concern early development and implementation of the program-level evaluation strategy. To date, CDC has developed a program evaluation plan based on an evolving, participatory approach that allows flexibility in responding to emerging information, priorities, or additional contextual developments. The plan addresses intended activities and outputs as well as immediate, intermediate, and long-term outcomes. Activities and outputs are continuously monitored through a sophisticated reporting system. This system gathers detailed information on learning products, including reach by modality, target audience, and evaluation results. The outcomes component of the plan aligns with legislative mandates found in PAHPA (public health preparedness and response core competencies), the National Health Security Strategy, and OPHPR strategic goals for state and local readiness (public health preparedness and response capabilities). It also provides a framework for collecting success stories and applying common training measures of quality, relevance, and satisfaction throughout the program. Through a competitive application process, successful FOA applicants were required to demonstrate training evaluation expertise and provide robust evaluation plans. Centers are held accountable for implementing evaluation plans at the outcome and impact levels and are required to conduct long-term follow-up evaluations with participants in their training and education activities. In addition, Centers must participate in an evaluation working group.
The primary purpose of the evaluation working group is to inform and promote Center-level and program-level evaluation across the PERLC network. The working group specifically identifies and promotes the use of common training evaluation methods and measures, shares materials, resources, and lessons learned, and provides review, comment, and feedback for CDC’s program-level evaluation activities.

5. Conclusions

Challenges in program evaluation at the federal level persist, and there is no perfect model for assessing impact and return on investment. New and innovative evaluation methods are therefore needed to ensure transparency and accountability across the federal government. The authors recognize the limitations of the current study design, as well as the challenges associated with the Kirkpatrick model (Bates, 2004; Holton, 1996). Nonetheless, efforts to conduct program evaluation, at any level and at any time, are crucial within public health and the federal government.

Acknowledgments

The authors wish to thank all of the grantees of the Centers for Public Health Preparedness program for their participation in the evaluation and for their input on its design, methods, analysis, and reporting. They also thank all of the other program stakeholders. In addition, the authors would like to thank the following CDC program staff for their guidance and assistance in data collection and analysis: Wanda King, Alyson Richmond, Liane Hostler, Gregg Leeman, Joan Cioffi, and Corinne Wigington.

Biographies

Dr. Robyn K. Sobelson serves as a behavioral scientist in the Learning Office (LO) within the Centers for Disease Control and Prevention’s (CDC) Office of Public Health Preparedness and Response (OPHPR). She received her doctorate in social and developmental psychology from Brandeis University and her Bachelor of Arts degree in Psychology from the University of Massachusetts Amherst.

Dr. Andrea C. Young serves as the Chief of the Applied Systems Research and Evaluation Branch within the Centers for Disease Control and Prevention’s (CDC) Office for State, Tribal, Local, and Territorial Support. She earned her Doctorate and Master of Science degrees in educational psychology and learning systems from Florida State University. She received her Bachelor of Arts degree in sociology from Eckerd College.

Footnotes

The findings and conclusions in this article are those of the authors and do not necessarily represent the official position of the Centers for Disease Control and Prevention.

References

- Bates R. A critical analysis of evaluation practice: The Kirkpatrick model and the principle of beneficence. Evaluation and Program Planning. 2004;27:341–347.

- Bernstein SR. Contracted services: Issues for the nonprofit agency manager. Nonprofit and Voluntary Sector Quarterly. 1991;20:429–443.

- Centers for Disease Control and Prevention. Framework for program evaluation in public health. MMWR. 1999;48(RR-11).

- DeGroff A, Schooley M, Chapel T, Poister TH. Challenges and strategies in applying performance measurement to federal public health programs. Evaluation and Program Planning. 2010;33:365–372. doi: 10.1016/j.evalprogplan.2010.02.003.

- Edwards PK, Orden D, Buccola ST. Evaluating the impact of federal human service programs with locally differentiated constituencies. Journal of Applied Behavioral Science. 1980;16.

- Fredericks KA, Carman JG, Birkland TA. Program evaluation in a challenging authorizing environment: Intergovernmental and interorganizational factors. New Directions for Evaluation. 2002;95:5–20.

- Gronbjerg KA. Understanding Nonprofit Funding. San Francisco: Jossey-Bass; 1993.

- Holton EF III. The flawed four-level evaluation model. Human Resource Development Quarterly. 1996;7(1):5–21.

- Kirkpatrick DL, Kirkpatrick JD. Evaluating training programs: The four levels. 3rd ed. San Francisco: Berrett-Koehler Publishers Inc; 2006.

- McLaughlin JA, Jordan GB. Logic models: A tool for telling your program's performance story. Evaluation and Program Planning. 1999;22(1):65–72.

- Office of Management and Budget. Government Performance and Results Act of 1993. Pub. L. No. 103-62. 1993 Jan 5. http://www.whitehouse.gov/omb/mgmt-gpra/gplaw2m. Accessed 05.05.11.

- Pandemic and All-Hazards Preparedness Act of 2006. Pub. L. No. 109-417, 120 Stat 2831 (December 19, 2006).

- Potter MA, Ley CE, Fertman CI, Eggleston MM, Duman S. Evaluating workforce development: Perspectives, processes, and lessons learned. Journal of Public Health Management and Practice. 2003;9(6):489–495. doi: 10.1097/00124784-200311000-00008.

- Public Health Service Act of 1944. 42 U.S.C. Sect 301(a) and 317(k)(2) (2010).

- Richmond A, Hostler L, Leeman G, King W. A brief history and overview of CDC's Centers for Public Health Preparedness Cooperative Agreement Program. Public Health Reports. 2010;125:8–14. doi: 10.1177/00333549101250S503.

- Rogers SJ, Ahmed M, Hamdallah M, Little S. Garnering grantee buy-in on a national cross-site evaluation: The case of ConnectHIV. American Journal of Evaluation. 2010;31(4):447–462.

- Youtie J, Corley EA. Federally sponsored multidisciplinary research centers: Learning, evaluation, and vicious circles. Evaluation and Program Planning. 2011;34:13–20. doi: 10.1016/j.evalprogplan.2010.05.002.