Abstract

Background and objectives

Although pockets of bioinformatics excellence have developed in Africa, large-scale genomic data analysis on the continent has generally been limited by the availability of expertise and infrastructure. H3ABioNet, a pan-African bioinformatics network, was established to build capacity specifically to enable H3Africa researchers to analyse their data in Africa. Since the inception of the H3Africa initiative, H3ABioNet’s role has evolved in response to the changing needs of the consortium and the African bioinformatics community. The network set out to develop core bioinformatics infrastructure and capacity for genomics research, covering data collection, transfer, storage and analysis.

Methods and results

Various resources have been developed to address the genomic data management and analysis needs of H3Africa researchers and other scientific communities on the continent. NetMap was developed and used to build an accurate picture of network performance within Africa and between Africa and the rest of the world, and Globus Online has been rolled out to facilitate data transfer. A participant recruitment database was developed to monitor participant enrolment, and data is being harmonized through the use of ontologies and controlled vocabularies. The standardized metadata will be integrated to provide a search facility for H3Africa data and biospecimens. Since H3Africa projects are generating large-scale genomic data, facilities for analysis and interpretation are critical. H3ABioNet is implementing several data analysis platforms that provide a large range of bioinformatics tools or workflows, such as Galaxy, the Job Management System (JMS) and eBiokits. A set of reproducible, portable and cloud-scalable pipelines to support the multiple H3Africa data types is also being developed and dockerized to enable execution on multiple computing infrastructures. In addition, new tools have been developed for the analysis of the uniquely divergent African data and for downstream interpretation of prioritized variants. To support these and other bioinformatics queries, an online bioinformatics helpdesk backed by broad consortium expertise has been established. Further support is provided through various modes of bioinformatics training.

Conclusion

Over the past 4 years, the infrastructure and human capacity developed through H3ABioNet have contributed significantly to the establishment of African scientific networks, data analysis facilities and training programmes. Here, we describe this infrastructure and how it has affected genomics and bioinformatics research in Africa.

Introduction

Africa is currently undergoing an epidemiological transition, with endemic infectious diseases and a rapidly growing burden of cardiometabolic and other non-communicable diseases. Understanding the genetic determinants of diseases can lead to novel insights into disease aetiology, which may identify new therapeutic targets and improve disease prognosis and management. Genomics research holds great promise for medical and healthcare research and is gaining global momentum as we transition to an era of precision medicine, in which treatment of individual patients is guided by a greater understanding of the clinical diagnosis through interpretation of underlying genomic variation. With sequencing costs dropping, whole genome sequencing as an aid to diagnosis is becoming more affordable. However, this does not take into account the hidden costs of analysing and interpreting the data, which are substantial [Muir et al 2016]. The decreasing costs of next generation sequencing (NGS) technologies have been accompanied by increasing size and complexity of the sequence data. To deal with such complex and voluminous data, existing data storage and transfer mechanisms, public repositories and data processing technologies have had to adapt, and data science skills have had to be developed [Muir et al 2016]. As a consequence, bioinformaticians have become essential to biomedical research projects. The same is true of other large-scale technologies, such as array-based genotyping: although it does not generate data on the scale of NGS, data for millions of single nucleotide polymorphisms (SNPs) are generated and analysed, so processing and downstream analysis require substantial computing resources and associated skills.
Muir et al. [Muir et al 2016] describe four key adaptations that have been required to embrace the genomics era, particularly in relation to NGS data: development of algorithms to handle short reads and long reference genomes, new compression formats for efficient data storage, adoption of distributed and parallel computing, and strengthened data security protocols. Bioinformaticians with data science skills are essential for processing, analysing and integrating genomic data, but geneticists and clinicians also need training to interpret and translate the results.

While large-scale genomics projects have been undertaken internationally for several years, Africa has lagged behind owing to limited infrastructure for implementing such large projects. Initiatives such as the Human Heredity and Health in Africa (H3Africa: www.h3africa.org) [The H3Africa Consortium, 2014] are accelerating genomics research on the continent by funding research as well as building capacity and infrastructure. One component of the H3Africa initiative is H3ABioNet [Mulder et al., 2015], a pan-African bioinformatics network for H3Africa (www.h3abionet.org), which is building bioinformatics capacity to enable genomics research on the continent. Here we describe how this bioinformatics capacity has been developed, with examples of its potential impact on genomics research.

Approach and implementation

H3ABioNet is an extensive network spanning over 30 institutions in 15 African countries, plus 2 partners outside Africa, and encompasses a wide diversity of skills. Nevertheless, the task of bioinformatics capacity development in most African countries is large and, given limited resources, infrastructure and expertise, requires careful coordination and pooling of efforts. Because little infrastructure was in place before the H3Africa initiative, the network had to work on all fronts, from genomic data management to building capacity for local analysis of the data.

The tools being developed and implemented address the diverse bioinformatics needs of multi-site clinical research projects. They include tools for monitoring participant recruitment, optimizing data transfer between project sites and to public repositories, harmonizing data, and establishing standard and custom workflows for data analysis. H3ABioNet has made these available via several easy-to-use web-based platforms such as REDCap, Galaxy and WebProtégé. The network also provides access to experts from a variety of domains who answer questions about the established infrastructure and support H3Africa and other genomics projects. The bioinformatics capacity development and user support undertaken by H3ABioNet can be divided into several categories: data transfer and storage; data collection, management and integration; data analysis and development of associated tools; and training in all of the above. These developments are described in more detail below.

Genomics skills training

Genomics, being a multi-disciplinary field, requires cross-disciplinary skills combining knowledge and proficiency from biology, computer science, mathematics and statistics. There are numerous challenges in genomics, a major one being the processing of massive amounts of data and the extraction of biological meaning from them [Thorvaldsdottir et al., 2013]. In view of these challenges, there is increasing demand for highly trained and experienced bioinformatics experts who can handle the data deluge as well as interface with biologists. Thus, at the core of enabling genomic research is the development of human capacity and the promotion of interdisciplinary training. H3ABioNet has taken a multi-faceted approach to training, which includes integrating training with other activities, such as webinars and data analysis or development hackathons, and including shadow teams in projects. H3ABioNet delivers formal training through internships (enabling one-on-one skills transfer), short specialised courses, hackathons and online distributed courses. The training has covered various aspects of bioinformatics, from general introductory topics to specialised subjects such as NGS and GWAS analyses. Since 2013, H3ABioNet has conducted over 25 bioinformatics courses across Africa (http://h3abionet.org/training-and-education/h3abionet-courses). Follow-up surveys have been developed and are sent regularly to all participants who have attended any H3ABioNet training, to assess the long-term impact of training and track the career development of young researchers. Individual nodes also run their own training programs within their institutions or across their local regions, and some have started new bioinformatics degree programs since the start of the project.

H3ABioNet webinars form part of the regular H3ABioNet activities and help strengthen research and foster collaboration amongst the nodes. The webinar series was launched in May 2015 and has covered a broad range of relevant bioinformatics topics, including GWAS and population genetics, metagenomics, big data, NGS, cloud computing and reproducible science. The webinars take place monthly, with 2 speakers per session, and serve an educational purpose for all participants. Early in the project, H3ABioNet also established a Node Accreditation Exercise, with the aim of giving nodes the opportunity to demonstrate a reasonable level of technical competence in essential data analysis workflows relevant to H3Africa. The exercise helps African scientists develop technical skills and build infrastructure for a particular data analysis workflow.

Data transfer and secure storage

Several challenges face the transfer of big biological data to, within and out of Africa. These include slow and unstable Internet connectivity, unreliable power supply, obsolete computer infrastructure across the continent (ranging from medium-scale server infrastructure to a small number of workstations, running multiple operating systems such as Windows and Linux for different purposes), and a lack of centralized and secure data storage. Given the cost and sensitive nature of human genetic data, it was important to establish a system for reliable and secure data transfer in Africa. As a first step, we evaluated the connectivity between collaborating endpoints and then identified a high-performance transfer service that allows stable, secure and synchronized file transfer. The NetMap project was set up and used to build an accurate picture of network performance within Africa and between Africa and the rest of the world. Tools based on the iPerf toolbox (http://iperf.sourceforge.net/) were developed to monitor the performance of network connections; these have been installed at the H3ABioNet nodes, and a visualisation dashboard was developed to report daily Internet speeds between sites. Through the NetMap project, we have gained the capacity to gather accurate data about the effective bandwidth of links between the nodes. The results showed that actual speeds vary immensely, from bit/s to Gbit/s, and seldom reach the speeds that institutions claim to offer. This information has been used to justify upgrades and fixes to the infrastructure of nodes where bandwidth may be limiting.
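The daily reporting step can be illustrated with a short sketch that reduces raw measurements to one figure per link and flags links far below their advertised speed. It assumes that measurements are taken with iperf3 in JSON mode (`-J`), whose TCP report contains `end.sum_received.bits_per_second`; the node names, advertised speeds and the 50% flagging threshold are hypothetical and not NetMap's actual configuration.

```python
import json
from statistics import median


def received_bps(report_json: str) -> float:
    """Extract receiver-side throughput (bits/s) from an iperf3 -J TCP report."""
    report = json.loads(report_json)
    return report["end"]["sum_received"]["bits_per_second"]


def daily_summary(reports, claimed_bps, threshold=0.5):
    """Median measured throughput per (source, destination) link for one day.

    reports: iterable of (source, destination, iperf3_json_text) tuples.
    claimed_bps: dict mapping (source, destination) to the advertised speed.
    A link is flagged when its median falls below threshold * advertised speed
    (the threshold is an illustrative assumption).
    """
    samples = {}
    for src, dst, text in reports:
        samples.setdefault((src, dst), []).append(received_bps(text))
    summary = {}
    for link, values in samples.items():
        med = median(values)
        claimed = claimed_bps.get(link)
        summary[link] = {
            "median_bps": med,
            "flagged": claimed is not None and med < threshold * claimed,
        }
    return summary
```

Using the median rather than the mean keeps a single burst or stall on an unstable link from dominating the daily figure.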

Collaborators in Africa needed a technical solution that enables fast, secure and reliable transfer of large datasets, regardless of the bandwidth and quality of Internet connections. Globus Online (https://www.globus.org/) [Foster, 2011] is a grid-scale file transfer service that provides the required technologies (GridFTP, third-party file transfer control, etc.) for making data transfer in Africa more reliable. Through the scalability of Globus and some optimizations made specifically for the H3ABioNet nodes, it has overcome most of the roadblocks encountered with traditional file transfer protocols such as FTP or HTTP. The H3ABioNet infrastructure working group (ISWG) developed Standard Operating Procedures (SOPs) for configuring hosts running Globus Online, implementing the service, and using it according to best practices, both for individual use and between teams at an institutional level. Following the SOPs, many H3ABioNet nodes have implemented Globus Online in their infrastructure, and others have already used its services for inter-node collaborative work and transparent sharing of research data. For example, Globus was used to transfer 668,622 individual files from Rhodes University to the Centre for High Performance Computing (CHPC) in Cape Town, and to transfer 140 TB of NGS data from the United States of America (USA) to South Africa. The H3ABioNet ISWG also developed an SOP for using the H3Africa archive architecture (see below) as the centralized storage area for H3Africa data. The archive is linked to an endpoint that enables project data to be transferred using Globus Online. In addressing these challenges, the ISWG also produced a number of publicly available documents detailing how to resolve the technical issues involved in establishing an active Globus node.
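Globus handles restarts and integrity checking internally. The sketch below is only a local-file analogy, not Globus code, illustrating the two ideas that make such transfers robust on unstable links: copying in fixed-size chunks so that an interrupted copy can resume from the last completed byte instead of starting over, and verifying an end-to-end checksum once the copy is complete. The function names and the 1 MiB chunk size are arbitrary choices for illustration.

```python
import hashlib
import os

CHUNK = 1 << 20  # 1 MiB per chunk; an arbitrary illustrative value


def sha256_of(path):
    """Checksum a file in chunks so large files need not fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(CHUNK), b""):
            h.update(block)
    return h.hexdigest()


def resumable_copy(src, dst, max_chunks=None):
    """Copy src to dst, resuming from the bytes already present in dst.

    max_chunks limits how many chunks are copied in this call, simulating an
    interrupted connection. Returns True once dst is complete and verified.
    """
    offset = os.path.getsize(dst) if os.path.exists(dst) else 0
    copied = 0
    with open(src, "rb") as fin, open(dst, "ab") as fout:
        fin.seek(offset)  # skip the part that already arrived
        while max_chunks is None or copied < max_chunks:
            block = fin.read(CHUNK)
            if not block:
                break
            fout.write(block)
            copied += 1
    if os.path.getsize(dst) < os.path.getsize(src):
        return False  # interrupted; calling again resumes from here
    # End-to-end integrity check, analogous to checksum verification in Globus.
    return sha256_of(src) == sha256_of(dst)
```

With plain FTP or HTTP downloads, a dropped connection often means restarting a multi-gigabyte file from zero, which on a link of a few Mbit/s can make the transfer practically impossible to complete.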

One of the funder requirements for H3Africa data is timely submission to public data repositories. To facilitate this, an H3Africa archive was developed to host the data and prepare it for submission to public repositories, and to allow the timing of submissions to be monitored. To ensure data security, the archive architecture is based on that of the European Genome-phenome Archive (EGA) at the European Bioinformatics Institute [Lappalainen et al., 2015]. Data must be submitted to the EGA within nine months of reaching the archive; during this period it is prepared for submission by ensuring that its format and content are EGA-compliant. H3ABioNet acts as the data coordinating centre, which liaises with the EGA and provides support for preparing data for submission.
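Monitoring the nine-month submission window reduces to date arithmetic. The sketch below shows one way an archive could report the status of a dataset. The function names and status strings are hypothetical, and clamping to the last day of the month (so that 31 May plus nine months gives 28 February) is one reasonable convention, not a documented H3Africa rule.

```python
import calendar
from datetime import date

SUBMISSION_WINDOW_MONTHS = 9  # EGA submission deadline stated in the text


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping the day to the end of the target month."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def submission_status(arrival: date, today: date, submitted: bool = False) -> str:
    """Report whether a dataset that reached the archive on `arrival` is on time."""
    if submitted:
        return "submitted"
    deadline = add_months(arrival, SUBMISSION_WINDOW_MONTHS)
    if today > deadline:
        return f"overdue (deadline {deadline.isoformat()})"
    return f"{(deadline - today).days} days remaining"
```

For example, `submission_status(date(2017, 1, 15), date(2017, 10, 5))` returns `"10 days remaining"`.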

Data collection, management and integration

Central to the H3Africa projects is the collection and management of data, which can be of many different types, including clinical, demographic, genomic, etc. Clinical and specifically phenotype data are collected during participant recruitment and consultations, while other data may be collected or generated at multiple sites, including laboratories, sequencing centres or other core facilities. Whatever the nature of the data, it is essential that it is accurate, complete and well curated and managed. Data also needs to be tracked and monitored to ensure provenance.

Participant recruitment

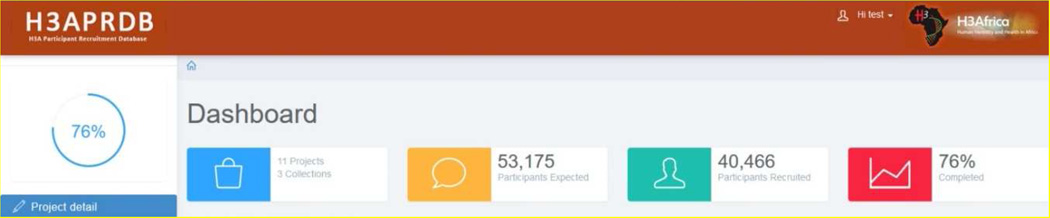

Recruitment of the target number of participants is critical for a clinical study. Failure to achieve target enrolment and retention rates can have scientific consequences, as studies may suffer from reduced statistical power. At the request of the H3Africa Consortium, H3ABioNet designed an H3Africa Participant Recruitment Database (H3APRDB: https://redcap.h3abionet.org/h3aprdb/). The H3APRDB, backed by REDCap [Harris et al, 2009], is a data submission and reporting interface that lets H3Africa principal investigators and funders track participant recruitment at the site, project and consortium levels, with built-in graphical outputs. During the design, some of the questions identified for the H3APRDB were common to all projects, while others were project-specific. The data submission interfaces therefore had to be customized for each project, and they were pre-populated with the project sites and expected recruitment numbers. Users access the H3APRDB over the Internet with their own login credentials; the system identifies each user from these credentials and renders the reporting information appropriate to the role assigned to that user. Each PI has access only to their own project's information, whereas each funder can access results from any project they fund and obtain an overview of all funded projects.
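The role-based rule described above (PIs see their own project; funders see the projects they fund, plus an overview) can be sketched as a simple filter. This is an illustration of the access rule only: the production system is built on REDCap with PHP components, and the `User` class, role names and record fields here are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class User:
    username: str
    role: str  # hypothetical role names: "pi" or "funder"
    projects: set = field(default_factory=set)  # PI: own project; funder: funded projects


def recruitment_view(user, records):
    """Return the recruitment data this user is allowed to see.

    records: list of dicts with 'project', 'site' and 'recruited' keys.
    PIs get site-level rows for their own project; funders additionally get
    per-project totals as an overview of everything they fund.
    """
    allowed = [r for r in records if r["project"] in user.projects]
    if user.role == "pi":
        return {"sites": allowed}
    if user.role == "funder":
        totals = {}
        for r in allowed:
            totals[r["project"]] = totals.get(r["project"], 0) + r["recruited"]
        return {"sites": allowed, "project_totals": totals}
    raise PermissionError(f"unknown role: {user.role}")
```

Filtering on the server before anything is rendered, rather than hiding rows in the browser, keeps other projects' numbers from ever reaching a user's session.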

In the H3APRDB, projects complete REDCap forms quarterly using the pre-established project questions. The H3APRDB was designed as a server-based application, which makes it accessible remotely via a web browser. The user interface was also made mobile-responsive using CSS3 @media queries, which make it suitable for mobile phones and tablets. A reporting system was developed that automatically exports the collected data from REDCap in JSON format and processes it to generate graphs showing recruitment progress. The user interface was implemented in HyperText Markup Language (HTML) and JavaScript, with Cascading Style Sheets (CSS) for styling, and the reporting system connects to the REDCap API via server-side components implemented in PHP. The code is available on GitHub at: https://github.com/h3abionet/h3aprdb. The reporting interface provides an overview of recruitment progress over time, the percentage completeness by project and the number of recruited participants per site. Bar charts display the recruitment numbers at each data collection point against the total number to be recruited, enabling the progress of all projects to be compared at a glance. The charts have clickable project titles on the vertical axis, which link to the data for that particular project. The dashboard also features active legends that ease navigation between reports, and buttons to hide or display selected project data. A quick report section at the top of the dashboard page (Figure 1) automatically counts and reports specific numerical values, providing an up-to-date count of the number of projects, number of collections, number of expected participants, actual number of participants recruited, and percentage completion across all projects.
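The export-and-summarise step can be sketched in Python, although the H3APRDB itself uses PHP. The export call uses the standard REDCap API record-export parameters (`token`, `content=record`, `format=json`, `type=flat`). The record field names (`project`, `collection`, `recruited`) and the `quick_report` function are hypothetical stand-ins for the project-specific REDCap fields.

```python
import json
import urllib.parse
import urllib.request


def export_records(api_url, token):
    """Export all records as JSON through the REDCap API record export."""
    payload = urllib.parse.urlencode({
        "token": token,
        "content": "record",
        "format": "json",
        "type": "flat",
    }).encode()
    with urllib.request.urlopen(api_url, data=payload) as resp:
        return json.loads(resp.read().decode())


def quick_report(records, expected):
    """Summarise recruitment in the style of the dashboard's quick report.

    records: exported rows with hypothetical fields 'project', 'collection' and
    'recruited' (REDCap returns field values as strings, hence int()).
    expected: dict mapping each project to its target number of participants.
    """
    recruited = sum(int(r["recruited"]) for r in records)
    total_expected = sum(expected.values())
    return {
        "projects": len(expected),
        "collections": len({(r["project"], r["collection"]) for r in records}),
        "expected": total_expected,
        "recruited": recruited,
        "percent_complete": round(100 * recruited / total_expected, 1) if total_expected else 0.0,
    }
```

A typical call would be `quick_report(export_records(url, token), targets)`, where the URL points to the instance's `/api/` endpoint and the token belongs to a user with export rights.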

Figure 1.

H3Africa Participant Recruitment Database: quick report section

The dashboard has built-in JavaScript functions that automatically convert the reported data and charts into different formats for printing, further use in other software, or sharing. It auto-generates PDF, PNG, JPG or SVG versions of the graphed reports. Because it provides a single common reporting platform for all H3Africa projects, recruitment progress can easily be tracked, allowing better monitoring of project expectations and trends.

Data curation and standardisation

Harmonizing data across multiple projects, or even across multiple sites within a project, is challenging but necessary to enable cross-consortium analyses. The heterogeneity of the terminologies used to describe H3Africa metadata made it necessary to adopt ontologies and controlled vocabularies. Ontologies enable unambiguous, contextual description of data, because their terms have descriptions and defined relationships to one another. Consistent description of data is required before data are submitted to the EGA and biospecimens to the biorepositories. This requires adequate description of samples, phenotypes and experimental procedures, as well as coordination between data and biospecimen repositories. Data curation and standardization are ongoing alongside the submission of data to the H3Africa archive and biorepositories. We are using a simple metadata schema covering the publicly accessible data types that will be available from the EGA and biorepository websites after submission. This schema, together with the structure of the underlying ontologies to which the data will be mapped, will be used in an H3Africa catalogue to enable searching for specific data or biospecimens.
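The following sketch shows, under simplifying assumptions, how mapping heterogeneous free-text metadata onto ontology terms makes catalogue search possible. A controlled vocabulary lists each preferred label with its synonyms. Submitted values are normalised and looked up, and unmatched values are returned for manual curation. The term IDs (`EX:...`) and the vocabulary are hypothetical placeholders, not real ontology identifiers.

```python
# Hypothetical controlled vocabulary: preferred label -> (term ID, synonyms).
VOCAB = {
    "sickle cell anaemia": ("EX:0001", {"sickle cell anemia", "hbss disease"}),
    "hypertension": ("EX:0002", {"high blood pressure", "htn"}),
}


def normalise(text):
    """Lower-case, treat underscores as spaces and collapse repeated whitespace."""
    return " ".join(text.lower().replace("_", " ").split())


# Build one lookup table from every label and synonym to its term ID.
LOOKUP = {}
for label, (term_id, synonyms) in VOCAB.items():
    for s in {label, *synonyms}:
        LOOKUP[normalise(s)] = term_id


def map_term(raw):
    """Return the term ID for a submitted value, or None if it needs manual curation."""
    return LOOKUP.get(normalise(raw))


def search(catalogue, term_id):
    """Return IDs of catalogue entries (dicts with a 'terms' set) annotated with term_id."""
    return [entry["id"] for entry in catalogue if term_id in entry["terms"]]
```

Once values such as "HbSS disease" and "Sickle_Cell_Anaemia" resolve to the same term, a single search finds datasets from every project that used either wording.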

Where unique data generated by H3Africa projects could not be mapped to existing ontologies, we sought to extend these ontologies to make them more applicable to Africa. For example, H3ABioNet members and the sickle cell disease (SCD) community joined forces to develop an ontology covering aspects of SCD under the classes phenotype, diagnostics, therapeutics, quality of life, disease modifiers and disease stage. Experts in SCD from around the world contributed to the development of the SCD ontology at a hands-on workshop, the proceedings of which were published [Mulder N. et al. 2016]. We settled on Protégé for the initial implementation of the ontology structures and have used its web interface, WebProtégé [Horridge et al., 2014], for subsequent development by domain experts. The generic workflow used for building ontologies starts by enumerating the important terms, then reviews existing ontologies, defines classes, subclasses and the hierarchy between classes, defines the properties and facets of the classes, and finally fills in the values. The SCD ontology continues to be developed, curated and integrated into the WebProtégé platform and the website dedicated to this project. The final ontology will be reviewed by an international advisory group.
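The class-hierarchy step of this workflow is what lets a query for a broad class retrieve data annotated with more specific terms. The sketch below represents `is_a` links as a child-to-parent dictionary. The six top-level classes follow the text; the two subclasses are hypothetical examples, not entries from the published SCD ontology, which is maintained in Protégé/WebProtégé rather than in code like this.

```python
# Illustrative class hierarchy (child -> parent). The six top-level classes follow
# the text; the two subclasses are hypothetical examples, not the published ontology.
IS_A = {
    "phenotype": "sickle cell disease",
    "diagnostics": "sickle cell disease",
    "therapeutics": "sickle cell disease",
    "quality of life": "sickle cell disease",
    "disease modifiers": "sickle cell disease",
    "disease stage": "sickle cell disease",
    "vaso-occlusive crisis": "phenotype",
    "hydroxyurea therapy": "therapeutics",
}


def ancestors(term):
    """Walk is_a links upward and return the path to the root."""
    path = []
    while term in IS_A:
        term = IS_A[term]
        path.append(term)
    return path


def descendants(term):
    """Return all classes that are (transitively) subclasses of term."""
    result = []
    for child, parent in IS_A.items():
        if parent == term:
            result.append(child)
            result.extend(descendants(child))
    return result
```

A catalogue search for "phenotype" can be expanded to `["phenotype", *descendants("phenotype")]` so that samples annotated only with "vaso-occlusive crisis" are still found.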

Data integration

H3Africa projects will generate many different data types, including phenotype, microbiome, exome, whole genome sequence and GWAS data. These data types need to be integrated within projects to answer the research questions, and also at a higher level to give a broad picture of the consortium's data. Information about these data types, or metadata, is essential for understanding, sharing and integrating them. The metadata, which covers clinical, demographic and genomic or other experimental data from projects, will be searchable via the H3Africa catalogue mentioned previously, and will ultimately be integrated into a more comprehensive African variation database.

Relevant public data is also being integrated into the Human Mutation Analysis (HUMA) resource (https://huma.rubi.ru.ac.za/), a platform for the analysis of genetic variation in humans that focuses on downstream analysis of variation in protein sequences and structures. It integrates data from various sources in a single connected database, including UniProt [UniProt Consortium 2015], dbSNP [Sherry et al., 2001], Ensembl [Hubbard et al., 2002], the Protein Data Bank (PDB) [Berman et al., 2000], the HUGO Gene Nomenclature Committee (HGNC) [Gray et al., 2015], ClinVar [Landrum et al., 2014] and OMIM [Hamosh et al., 2000]. The data collected include all human genes, protein sequences and structures, exons and coding sequences, genetic variation, and diseases that could be mapped to variants and genes. These data were integrated into a MySQL database and made publicly accessible via a user-friendly web interface and a RESTful web API. The web server was developed using the Django web framework. Additionally, HUMA allows variation data in VCF format to be uploaded as private data sets. These data are stored separately from the public data and kept private unless the user explicitly shares them. Uploaded variants are automatically mapped (based on chromosome coordinates) to protein sequences and structures already in the database, allowing users to visualize their location in three-dimensional space. Currently, HUMA contains over 22 000 genes, 157 000 unique protein sequences, 14 000 diseases, 32 000 protein structures, and approximately 71 million variants mapped to proteins and genes. HUMA serves as a resource for H3Africa projects, providing a space where unique variation can be uploaded, compared with existing mutations from dbSNP and UniProt, and analyzed computationally.
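Mapping an uploaded variant to a protein position from its chromosome coordinate rests on simple arithmetic over a transcript's coding-sequence (CDS) segments. The sketch below assumes the 1-based inclusive CDS intervals of one transcript are known and that the CDS starts with a complete codon. It is not HUMA's implementation, and the function name and input layout are hypothetical.

```python
def genomic_to_residue(pos, cds_segments, strand="+"):
    """Map a 1-based genomic position to (residue number, position within codon).

    cds_segments: list of (start, end) 1-based inclusive coding intervals of one
    transcript on the chromosome, in any order. Assumes the CDS begins with a
    complete codon. Returns None if pos falls outside the coding sequence.
    """
    # Order segments in the direction of transcription (descending on the minus strand).
    segments = sorted(cds_segments, reverse=(strand == "-"))
    offset = 0  # coding bases contained in the segments already passed
    for start, end in segments:
        if start <= pos <= end:
            within = pos - start if strand == "+" else end - pos
            cds_index = offset + within  # 0-based index into the CDS
            return cds_index // 3 + 1, cds_index % 3 + 1
        offset += end - start + 1
    return None
```

For a plus-strand transcript with CDS segments (100, 105) and (200, 208), position 200 is the first base of the third codon, so the function returns `(3, 1)`. Intronic positions such as 150 return `None`.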

Data analysis

Analysis of large-scale genomic data and integration with phenotypes is not trivial. It requires tools, computing infrastructure and relevant skills. To support data analysis, H3ABioNet has developed several new tools, SOPs for pipelines required for H3Africa data analysis, and explored computing facilities and how to access these. Support for all of these is provided through a ticketed helpdesk.

SOPs and computing platforms

Genomic projects currently funded through H3Africa typically generate genetic data with genotyping arrays or whole genome or exome sequencing, while the microbiome projects mostly use 16S rRNA sequencing. We have therefore developed SOPs for analysis pipelines for these data. The steps in each SOP are divided into pre-processing and quality control, analysis, and interpretation, with the software for each step and approximate computing requirements (CPUs and storage). The SOPs are publicly available from the H3ABioNet website (http://www.h3abionet.org/tools-and-resources/sops), together with practice datasets for researchers who want to test the pipelines themselves. The SOPs and datasets help the H3ABioNet nodes prepare for Node Accreditation exercises, and help others who want to learn about the pipelines.
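As an example of the kind of quality-control step such SOPs cover for genotyping-array data, the sketch below filters SNPs by call rate and minor allele frequency (MAF). Genotypes are assumed to be coded additively (0, 1 or 2 copies of the alternate allele, `None` for a missing call). The thresholds are commonly used illustrative values, not those prescribed by the H3ABioNet SOPs, and other standard checks such as Hardy-Weinberg equilibrium are omitted for brevity.

```python
def snp_qc(genotypes, min_call_rate=0.95, min_maf=0.01):
    """Per-SNP quality control on additive genotype calls.

    genotypes: dict SNP ID -> list of calls (0, 1, 2 = copies of the alternate
    allele; None = missing). Thresholds are illustrative, not SOP values.
    Returns (list of kept SNP IDs, dict of SNP ID -> removal reason).
    """
    kept, removed = [], {}
    for snp, calls in genotypes.items():
        called = [g for g in calls if g is not None]
        call_rate = len(called) / len(calls) if calls else 0.0
        if not called or call_rate < min_call_rate:
            removed[snp] = f"call rate {call_rate:.2f}"
            continue
        alt_freq = sum(called) / (2 * len(called))  # two alleles per diploid sample
        maf = min(alt_freq, 1 - alt_freq)
        if maf < min_maf:
            removed[snp] = f"MAF {maf:.3f}"
            continue
        kept.append(snp)
    return kept, removed
```

The call-rate check runs first so that MAF is only computed for SNPs with enough observed genotypes to estimate it reliably.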

Data analysis needs vary, ranging from running a few scripts to complex analysis workflows with data visualization tools. Several data analysis platforms that provide a large range of bioinformatics tools exist. One example is Galaxy (http://usegalaxy.org) [Afgan et al., 2016], which provides a user-friendly graphical interface to a large range of bioinformatics tools for the analysis of various types of data. Galaxy supports reproducible computational research by providing an environment for performing and recording bioinformatics analyses. Several Galaxy instances have been set up by H3ABioNet nodes (Tunisia: http://tesla.pasteur.tn:8080/, Morocco: www.ensat.ac.ma/mobihic/Galaxy/, and South Africa, currently private), and well-documented standard workflows useful for the analysis of H3Africa data have been implemented. Nodes have also included specific pipelines relevant to their regions; for example, the Moroccan instance implemented a global workflow for microarray and mass spectrometry data analysis. The eBiokit (http://www.ebiokit.eu/) is a standalone device that can run bioinformatics analyses without Internet access. It is particularly useful for training where Internet access is unreliable, and it also hosts a Galaxy instance.

Another resource for storing tools and workflows built within the network is the Job Management System (JMS) [Brown et al., 2015], a cluster front-end and workflow management system designed to ease the burden of using HPC resources and to facilitate the sharing of tools and workflows. JMS, developed within H3ABioNet, allows users to upload tools and scripts via a user-friendly web interface, and to combine tools via a drag-and-drop interface to create complex workflows. JMS automatically generates web-based interfaces to tools and workflows from user-provided information on how each tool is executed. Tools and workflows can then be shared between JMS users. JMS is being set up at the CHPC, where it will be freely available to all South African-based academics; others can download JMS and install it on local infrastructure. Tools and workflows collected within H3ABioNet will be shared across all JMS instances, giving each node the ability to perform multiple types of computational analysis. The tools and workflows housed in JMS have also been made public via external web interfaces. Future development of JMS will focus on creating a grid computing network incorporating all groups that have set up a JMS instance, which will facilitate the sharing of computational resources between participating nodes. JMS is open-source and can be downloaded from https://github.com/RUBi-ZA/JMS.
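The idea of generating an interface from a description of how a tool is executed can be sketched as follows. A tool is registered with a list of typed parameters. The same description then yields the fields a web form would display and a validated, shell-safe command line. The description format and function names are hypothetical and are not JMS's actual schema. The example tool uses real BLAST+ `blastp` options (`-query`, `-db`, `-evalue`, whose default is 10).

```python
import shlex

# Hypothetical tool description of the kind a user might register (not JMS's format).
TOOL = {
    "name": "blastp",
    "executable": "blastp",
    "parameters": [
        {"name": "query", "flag": "-query", "type": "file", "required": True},
        {"name": "db", "flag": "-db", "type": "text", "required": True},
        {"name": "evalue", "flag": "-evalue", "type": "float", "default": 10.0},
    ],
}

CASTS = {"file": str, "text": str, "float": float, "int": int}


def form_fields(tool):
    """Describe the input fields a web form would render for this tool."""
    return [(p["name"], p["type"], p.get("required", False)) for p in tool["parameters"]]


def build_command(tool, values):
    """Validate user-supplied values and assemble a shell-safe command line."""
    args = [tool["executable"]]
    for p in tool["parameters"]:
        value = values.get(p["name"], p.get("default"))
        if value is None:
            if p.get("required", False):
                raise ValueError(f"missing required parameter: {p['name']}")
            continue
        # Casting enforces the declared type; quoting protects the shell.
        args += [p["flag"], str(CASTS[p["type"]](value))]
    return " ".join(shlex.quote(a) for a in args)
```

Calling `build_command(TOOL, {"query": "my seqs.fa", "db": "swissprot"})` yields `blastp -query 'my seqs.fa' -db swissprot -evalue 10.0`, ready to be wrapped in a job script for the cluster scheduler.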

More recently, technologies have been developed for packaging tools for deployment on various high-performance computing infrastructures, including cloud platforms. We are developing a set of simple, portable pipelines that scale well, to support multiple H3Africa projects. These pipelines describe their workflows in popular workflow management languages (either Common Workflow Language or Nextflow), which allow the specification of robust, reproducible and fault-tolerant workflows and provide end users with instructions for running them. We have also used Docker, a containerisation platform that abstracts away the underlying system and hardware to promote portability and ease of installation. Four containerized pipelines are currently under construction. The H3Agwas pipeline will support genotype calling, a range of quality control steps and association tests, including linear and logistic regression and mixed-model approaches, as well as population structure analysis. The workflow supports native and dockerised execution on single servers or clusters, and an Amazon Machine Image (AMI) is available for execution on Amazon. The second pipeline uses imputation to address missing information in large-scale data. This Nextflow-based (https://www.nextflow.io/) imputation workflow uses IMPUTE2 [Howie et al., 2009] and SHAPEIT [Delaneau et al., 2012] to phase typed genomic variants and to impute untyped variants using a previously phased reference panel. To speed up computation, the workflow parallelizes imputation by splitting chromosomes into windows (chunks). The workflow is designed to run either within Docker containers in a Docker swarm deployed on cloud infrastructure (using OpenStack or Amazon AWS), or on a local HPC installation that uses Slurm or another scheduler supported by Nextflow.
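Chunking across chromosome windows amounts to splitting a chromosome's coordinate range into consecutive intervals that can be imputed as independent parallel jobs. In IMPUTE2, an interval is passed with the `-int` option, and a flanking buffer (`-buffer`) supplies context around it. The sketch below generates such intervals. The function name and the 5 Mb default window are illustrative assumptions, not values taken from the H3ABioNet workflow.

```python
def imputation_chunks(chrom_start, chrom_end, chunk_size=5_000_000):
    """Split [chrom_start, chrom_end] (1-based, inclusive) into consecutive windows.

    Each (start, end) pair could be passed to IMPUTE2's -int option so that the
    windows run as independent parallel jobs. The 5 Mb default is illustrative.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    chunks = []
    start = chrom_start
    while start <= chrom_end:
        end = min(start + chunk_size - 1, chrom_end)
        chunks.append((start, end))
        start = end + 1  # next window begins right after this one; no gaps, no overlap
    return chunks
```

In a Nextflow workflow, each interval would typically become the input of one process instance, so the scheduler (Slurm, or a Docker swarm) can run the windows concurrently before the results are concatenated per chromosome.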
The two remaining workflows process NGS data: one specialises in 16S rRNA data for metagenomic analysis, and the other processes exome sequence data through to variant calling. Much of the emphasis in developing these pipelines has been on portability, to take account of the heterogeneous computing environments and infrastructure within Africa and allow groups to run the pipelines in different environments (servers, clusters, high performance computing centres and cloud computing platforms) while also ensuring scalability.

Analysis tools

There is an extensive collection of bioinformatics tools available in the public domain for the analysis of many different data types. However, there is seldom a one-size-fits-all resource, and new, unique data sometimes bring new analysis challenges. While it is important not to “reinvent the wheel”, there is a constant need for tool adaptation, customization or re-development to fill gaps in existing tools and pipelines. African genomic data is unique in its novelty and diversity, necessitating new or adapted algorithms for data analysis and visualization. Some examples of new or adapted tools developed by H3ABioNet are described below.

Inference of genetic ancestry in admixed populations is an important area of study, with applications in admixture mapping (a method used to localize disease-causing genetic variants that differ in frequency across populations). Several methods have been developed for inferring local ancestry, but many are still limited when applied to complex multi-way admixed populations. Owing to the historical action of natural selection, the modelled ancestral population may diverge from the true ancestral population in some chromosomal regions. Such deviations would be present in both cases and controls, and could produce spurious signals in case-only admixture association tests or admixture mapping. We have therefore developed, or are developing, four tools for admixture analysis: a tool for selecting the best proxy ancestral populations for an admixed population, algorithms for dating different admixture events in multi-way admixed populations and for inferring local ancestry in admixed populations, and a tool for mapping genes underlying ethnic differences in complex disease risk in multi-way admixed populations. The first tool, PROXYANC [Chimusa et al., 2013], is an important precursor to the others, because identifying the correct ancestral populations is crucial for accurate inference of local ancestry, disease mapping and dating of admixture events in admixed populations. It incorporates two novel algorithms: one based on the correlation between observed linkage disequilibrium in an admixed population and population genetic differentiation in the ancestral populations, and an optimal quadratic programming approach based on a linear combination of population genetic distances. For dating distinct ancient admixture events in multi-way admixed populations, we are currently implementing DateMix, a new method based on the distribution of ancestry segments along the genomes of admixed individuals.
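To give a flavour of the quadratic-programming idea, the sketch below uses a related but simpler formulation. It is shown purely for illustration and is not PROXYANC's algorithm, which works with population genetic distances. The model treats allele frequencies in the admixed population as a convex combination of candidate source-population frequencies and solves the resulting constrained least-squares problem by projected gradient descent onto the probability simplex. Candidate sources that receive near-zero weight are poor proxies. The function names and toy frequencies are hypothetical.

```python
def project_to_simplex(v):
    """Euclidean projection of v onto {w : w_k >= 0, sum(w) = 1} (sort-based algorithm)."""
    u = sorted(v, reverse=True)
    cumulative, theta = 0.0, 0.0
    for i, ui in enumerate(u):
        cumulative += ui
        t = (cumulative - 1.0) / (i + 1)
        if ui - t > 0:
            theta = t  # the last index satisfying the condition fixes the shift
    return [max(x - theta, 0.0) for x in v]


def admixture_weights(admixed, ancestral, iterations=5000):
    """Estimate weights w (w_k >= 0, sum w_k = 1) minimising
    sum_l (admixed[l] - sum_k w_k * ancestral[k][l]) ** 2.

    admixed: allele frequencies at L SNPs in the admixed population.
    ancestral: K lists of allele frequencies at the same SNPs, one per candidate source.
    """
    k, n_snps = len(ancestral), len(admixed)
    w = [1.0 / k] * k
    # A step of 1 / (2 * sum of squares) stays below 1 / Lipschitz constant of the gradient.
    step = 1.0 / (2 * sum(q * q for row in ancestral for q in row))
    for _ in range(iterations):
        pred = [sum(w[j] * ancestral[j][l] for j in range(k)) for l in range(n_snps)]
        resid = [a - p for a, p in zip(admixed, pred)]
        grad = [-2 * sum(r * q for r, q in zip(resid, ancestral[j])) for j in range(k)]
        w = project_to_simplex([w[j] - step * grad[j] for j in range(k)])
    return w
```

With real data, thousands of SNPs would be used, and sampling noise means the recovered weights are estimates rather than exact mixing proportions.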
We are also designing a model that accounts for historical gene flow and natural selection to achieve higher accuracy in inferring the ancestry of origin at every genomic locus in a multi-way admixed population. This new approach, called ancENS, uses Approximate Bayesian computation (ABC) sampling to approximate the posterior probability of the modeled ancestral hidden Markov model. Finally, because locus-specific ancestry along the genome of an admixed population can boost the power to detect disease genes for complex diseases whose prevalence differs among ethnic groups, we are developing new disease-scoring statistics for multi-way admixed populations that account for admixture association, gene-environment interactions, and family relationships.
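
ancENS is still under development, but the ABC principle it relies on can be shown with a deliberately simple rejection sampler. The sketch below assumes a toy single-pulse model in which local ancestry along one chromosome follows a two-state Markov chain. It draws the admixture proportion m and the number of generations t from uniform priors, simulates ancestry paths, and keeps the parameter draws whose summary statistics (ancestry fraction and switch rate) lie within a tolerance epsilon of the observed ones; the accepted draws approximate the posterior. The model, priors, summary statistics and function names are illustrative assumptions, not the design of ancENS.

```python
import random
from statistics import mean
from typing import List, Tuple


def simulate_ancestry(m: float, t: int, n_loci: int, spacing_morgans: float,
                      rng: random.Random) -> List[int]:
    """Toy single-pulse model: local ancestry (1 = A, 0 = B) as a two-state Markov chain.

    Between adjacent loci a breakpoint occurs with probability t * spacing_morgans
    (capped at 1); after a breakpoint, ancestry is redrawn as A with probability m,
    so the long-run fraction of A ancestry is m.
    """
    p_break = min(1.0, t * spacing_morgans)
    state = 1 if rng.random() < m else 0
    path = [state]
    for _ in range(n_loci - 1):
        if rng.random() < p_break:
            state = 1 if rng.random() < m else 0
        path.append(state)
    return path


def summarise(path: List[int]) -> Tuple[float, float]:
    """Summary statistics: fraction of A ancestry and ancestry switches per interval."""
    frac_a = sum(path) / len(path)
    switches = sum(1 for a, b in zip(path, path[1:]) if a != b) / (len(path) - 1)
    return frac_a, switches


def abc_rejection(observed: Tuple[float, float], n_draws: int, epsilon: float,
                  n_loci: int, spacing_morgans: float, rng: random.Random,
                  max_generations: int = 200) -> List[Tuple[float, int]]:
    """ABC rejection: keep prior draws whose simulated summaries lie within epsilon."""
    accepted = []
    for _ in range(n_draws):
        m = rng.uniform(0.0, 1.0)              # uniform prior on admixture proportion
        t = rng.randint(1, max_generations)    # uniform prior on generations since admixture
        sim = summarise(simulate_ancestry(m, t, n_loci, spacing_morgans, rng))
        # Unweighted Euclidean distance between simulated and observed summaries.
        distance = ((sim[0] - observed[0]) ** 2 + (sim[1] - observed[1]) ** 2) ** 0.5
        if distance <= epsilon:
            accepted.append((m, t))
    return accepted


if __name__ == "__main__":
    rng = random.Random(42)
    # "Observed" data simulated from known parameters (m = 0.3, t = 50) for checking.
    obs = summarise(simulate_ancestry(0.3, 50, 500, 0.004, rng))
    posterior = abc_rejection(obs, 3000, 0.05, 500, 0.004, rng)
    print(f"accepted {len(posterior)} draws")
    if posterior:
        print("posterior mean m:", round(mean(m for m, _ in posterior), 3))
        print("posterior mean t:", round(mean(t for _, t in posterior), 1))
```

Rejection ABC is the simplest variant; practical implementations typically scale the summary statistics, shrink epsilon adaptively, or use sequential Monte Carlo to avoid wasting most simulations.
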

Genome-wide association studies (GWAS) of complex diseases often yield either multiple significant SNPs or no genome-wide significant associations, only a set of candidate SNPs that fall just below the significance threshold. For downstream analysis of variants from a GWAS or NGS experiment, we developed ancGWAS [Chimusa et al., 2015] and HUMA. ancGWAS is a network-based tool for the analysis of GWAS summary statistics that identifies significant subnetworks in the human protein-protein interaction network by mapping SNPs to genes and genes to pathways and networks. It helps researchers understand how multiple SNPs or genes found to be significant in a GWAS may be related to each other, and which biological pathways are enriched for these genes. When working with admixed populations, the tool can also identify genes or subnetworks whose ancestry proportions differ from the genome-wide average.

Another useful pipeline developed within H3ABioNet is a variant prioritization workflow with associated guidelines. Variants are first annotated using existing tools such as ANNOVAR and PolyPhen, and the prioritization workflow then determines which of the SNPs generated by an experiment should be prioritized for further analysis to interpret the results of the experiment.

A final example is HUMA, which supports downstream interpretation by mapping variants to protein structures based solely on their chromosomal coordinates and visualizing these variants in the structure. HUMA also includes Vapor, a variant analysis workflow that combines the predictions of Provean [Choi et al., 2012], PolyPhen-2 [Adzhubei et al., 2013], PhD-SNP [Capriotti et al., 2006], FATHMM [Shihab et al., 2013], AUTO-MUTE [Masso & Vaisman, 2010], MuPro [Cheng et al., 2006], and I-Mutant 2.0 to predict the effects of mutations on protein function and stability, and it integrates PRIMO, a homology modeling pipeline. The HUMA web server uses JMS to run tools and workflows on the underlying cluster.
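
The SNP-to-gene-to-network idea behind ancGWAS can be sketched as follows. This is not ancGWAS's scoring scheme: as simplifying assumptions, each gene receives a Šidák-corrected minimum p-value from the SNPs within a hypothetical 10 kb window of the gene body (ignoring linkage disequilibrium), gene p-values are converted to z-scores, and a greedy search grows a connected subnetwork from a seed gene for as long as the aggregate score Σz/√k increases. Gene names, coordinates, p-values and the interaction network are made up.

```python
from statistics import NormalDist
from typing import Dict, List, Set, Tuple

Snp = Tuple[str, int, float]   # (chromosome, position, GWAS p-value)
Gene = Tuple[str, int, int]    # (chromosome, start, end)


def gene_pvalues(snps: List[Snp], genes: Dict[str, Gene], window: int = 10_000) -> Dict[str, float]:
    """Map SNPs to genes (gene body +/- window bp) and give each gene one p-value.

    Uses a Sidak-corrected minimum p-value, which treats SNPs as independent (ignores LD).
    Genes with no mapped SNP are omitted.
    """
    scores = {}
    for name, (chrom, start, end) in genes.items():
        ps = [p for c, pos, p in snps if c == chrom and start - window <= pos <= end + window]
        if ps:
            scores[name] = 1.0 - (1.0 - min(ps)) ** len(ps)
    return scores


def to_z(pvalues: Dict[str, float]) -> Dict[str, float]:
    """Convert p-values to z-scores with the inverse standard normal CDF (clamped to (0, 1))."""
    nd = NormalDist()
    return {g: nd.inv_cdf(1.0 - min(max(p, 1e-15), 1.0 - 1e-15)) for g, p in pvalues.items()}


def build_ppi(edges: List[Tuple[str, str]]) -> Dict[str, Set[str]]:
    """Undirected protein-protein interaction network as an adjacency dictionary."""
    ppi: Dict[str, Set[str]] = {}
    for a, b in edges:
        ppi.setdefault(a, set()).add(b)
        ppi.setdefault(b, set()).add(a)
    return ppi


def greedy_module(seed: str, z: Dict[str, float], ppi: Dict[str, Set[str]]) -> Tuple[Set[str], float]:
    """Grow a connected subnetwork from seed while the score sum(z) / sqrt(k) increases."""
    module = {seed}
    total = z[seed]
    score = total
    while True:
        frontier = {nb for g in module for nb in ppi.get(g, set()) if nb in z and nb not in module}
        best_gene, best_score = None, score
        for cand in sorted(frontier):  # sorted for deterministic tie-breaking
            s = (total + z[cand]) / (len(module) + 1) ** 0.5
            if s > best_score:
                best_gene, best_score = cand, s
        if best_gene is None:
            return module, score
        module.add(best_gene)
        total += z[best_gene]
        score = best_score


if __name__ == "__main__":
    genes = {"G1": ("chr1", 1_000, 2_000), "G2": ("chr1", 50_000, 60_000),
             "G3": ("chr1", 100_000, 110_000), "G4": ("chr2", 5_000, 6_000)}
    snps = [("chr1", 1_500, 1e-6), ("chr1", 55_000, 1e-4),
            ("chr1", 105_000, 0.5), ("chr2", 5_500, 0.01)]
    ppi = build_ppi([("G1", "G2"), ("G2", "G3"), ("G1", "G4")])
    z = to_z(gene_pvalues(snps, genes))
    print(greedy_module("G1", z, ppi))
```

In this toy network the search starting from G1 absorbs G2 and G4 but stops before G3, whose z-score of zero would dilute the aggregate score. A production tool would also need to correct for LD between SNPs, gene length and the multiple testing implied by the search itself.
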

Helpdesk

Given the variety of tools and computing facilities developed to address many aspects of bioinformatics and genomics research, and recognizing that many wet-lab researchers have little experience in bioinformatics, H3ABioNet set up an online helpdesk to provide access to expertise in the field. The helpdesk offers technical support across various bioinformatics and genomics categories to H3Africa and non-H3Africa projects that need assistance with study design and the analysis of genomics experiments.

Queries are handled by a team of H3ABioNet experts whose collective experience ranges from whole genome sequencing and genotyping to systems administration and software engineering. User requests are categorized and assigned to experts, and the expected turnaround time for each request is monitored. The helpdesk, accessed via a submission interface at http://www.h3abionet.org/support, is audited every six months to monitor progress and develop strategies to improve interventions. Users can also browse the helpdesk knowledge base or additional resources, including specialized discussion fora and other bioinformatics platforms, that could potentially answer their queries.

Discussion and conclusions

Because the African continent holds the greatest human genetic diversity, African genomic data will likely provide unique clinical and biodiversity knowledge [Campbell and Tishkoff, 2008; Chimusa et al., 2015]. However, genomic data poses both practical and theoretical challenges, arising from massive data volumes, the high dimensionality of the data, and the need for African-specific statistical approaches to mine and interpret it. These challenges range from the physical handling of the data through to biomedical interpretation for the ultimate improvement of healthcare. The tools H3ABioNet has developed are relatively new, but because they were built in response to an immediate need, most have already been applied in research projects, some of which have been published and some of which are still in progress. Although robust infrastructure remains a central issue in most African countries, H3ABioNet is addressing access to computers, tools and training for bioinformatics, and has contributed significantly to infrastructure and human capacity development in local African nodes within the network. This has the potential to considerably improve the quality of genomics research and enable data analysis by African scientists.

In the past 5 years there has been a considerable increase in the availability of bioinformatics training programmes in many countries [Bishop et al., 2014], which have trained a large number of African-based scientists and helped develop experts in the discipline. In addition, improved computing infrastructure and access to tools across the continent have made large-scale genomic data analysis more accessible to these scientists. At the start of the project, few African groups had the capacity or skills to analyse large genomics datasets; there are now several teams with both the skills and the infrastructure to do so, as demonstrated by the successful completion of the H3ABioNet node accreditation exercises and the analysis of over 5,000 full genome sequences for the design of a new genotyping array. These infrastructure investments are making critical contributions to advancing biomedical research and associated bioinformatics applications. Networking through H3ABioNet and H3Africa has also created opportunities for peer support and collaborative research among African researchers.

Highlights

H3ABioNet is building capacity to enable analysis of genomic data in Africa

Tools have been built for clinical and genomic data storage, management and analysis

New algorithms and pipelines for African genomic data analysis have been developed

Data is being harmonised using ontologies to enable easy search and retrieval

Genomics training is implemented using various online and face-to-face approaches

Acknowledgments

Funding

H3ABioNet is supported by the National Institutes of Health Common Fund [grant number U41HG006941]. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Abbreviations

- SNPs

Single Nucleotide Polymorphisms

- H3Africa

Human Heredity and Health in Africa

- EGA

European Genome-phenome Archive

- SOP

Standard Operating Procedure

- CHPC

Centre for High Performance Computing

- NGS

Next Generation Sequencing

- USA

United States of America

- H3APRDB

H3Africa Participant Recruitment Database

- HTML

Hypertext Mark-up Language

- CSS

Cascading Style Sheets

- SCD

Sickle Cell Disease

- HUMA

Human Mutation Analysis

- PDB

Protein Data Bank

- HGNC

HUGO Gene Nomenclature Committee

- JMS

Job Management System

- GWAS

Genome Wide Association Study

- ABC

Approximate Bayesian computation

References

- 1. Muir P, Li S, Lou S, Wang D, Spakowicz DJ, Salichos L, Zhang J, et al. The real cost of sequencing: scaling computation to keep pace with data generation. Genome Biol. 2016;17:53. doi: 10.1186/s13059-016-0917-0.

- 2. The H3Africa Consortium. Enabling African Scientists to Engage Fully in the Genomic Revolution. Science. 2014;344(6190):1346–1348. doi: 10.1126/science.1251546.

- 3. Mulder NJ, Adebiyi E, Alami R, Benkahla A, Brandful J, Doumbia S, et al. H3ABioNet, a Sustainable Pan African Bioinformatics Network for Human Heredity and Health in Africa. Genome Res. 2015;26(2):271–277. doi: 10.1101/gr.196295.115.

- 4. Thorvaldsdottir H, Robinson JT, Mesirov JP. Integrative Genomics Viewer (IGV): high-performance genomics data visualization and exploration. Brief Bioinform. 2013;14:178–192. doi: 10.1093/bib/bbs017.

- 5. Foster I. Globus Online: Accelerating and Democratizing Science through Cloud-Based Services. IEEE Internet Computing. 2011;15(3):70–73.

- 6. Lappalainen I, Almeida-King J, Kumanduri V, Senf A, Spalding JD, Ur-Rehman S, et al. The European Genome-phenome Archive of human data consented for biomedical research. Nat Genet. 2015;47(7):692–695. doi: 10.1038/ng.3312.

- 7. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap) - A metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377–381. doi: 10.1016/j.jbi.2008.08.010.

- 8. Mulder N, Nembaware V, Adekile A, Inusa B, Brown B, Campbell A. Proceedings of a Sickle Cell Disease Ontology Workshop – towards the first comprehensive Ontology for Sickle Cell Disease. Appl Transl Genom. 2016;9:23–29. doi: 10.1016/j.atg.2016.03.005.

- 9. Horridge M, Tudorache T, Nyulas C, Vendetti J, Noy NF, Musen MA. WebProtégé: a collaborative Web-based platform for editing biomedical ontologies. Bioinformatics. 2014;30(16):2384–2385. doi: 10.1093/bioinformatics/btu256.

- 10. UniProt Consortium. UniProt: a hub for protein information. Nucleic Acids Res. 2015;43(Database issue):D204–D212. doi: 10.1093/nar/gku989.

- 11. Sherry ST, Ward MH, Kholodov M, Baker J, Phan L, Smigielski EM, Sirotkin K. dbSNP: the NCBI database of genetic variation. Nucleic Acids Res. 2001;29:308–311. doi: 10.1093/nar/29.1.308.

- 12. Hubbard T, Barker D, Birney E, Cameron G, Chen Y, Clark L, et al. The Ensembl genome database project. Nucleic Acids Res. 2002;30(1):38–41. doi: 10.1093/nar/30.1.38.

- 13. Berman HM, Westbrook J, Feng Z, Gilliland G, Bhat TN, Weissig H, et al. The Protein Data Bank. Nucleic Acids Res. 2000;28:235–242. doi: 10.1093/nar/28.1.235.

- 14. Gray KA, Yates B, Seal RL, Wright MW, Bruford EA. Genenames.org: The HGNC resources in 2015. Nucleic Acids Res. 2015;43(D1):D1079–D1085. doi: 10.1093/nar/gku1071.

- 15. Landrum MJ, Lee JM, Riley GR, Jang W, Rubinstein WS, Church DM, et al. ClinVar: Public archive of relationships among sequence variation and human phenotype. Nucleic Acids Res. 2014;42. doi: 10.1093/nar/gkt1113.

- 16. Hamosh A, Scott AF, Amberger J, Valle D, McKusick VA. Online Mendelian Inheritance in Man (OMIM). Hum Mutat. 2000;15(1):57–61. doi: 10.1002/(SICI)1098-1004(200001)15:1<57::AID-HUMU12>3.0.CO;2-G.

- 17. Afgan E, Baker D, van den Beek M, Blankenberg D, Bouvier D, Čech M, et al. The Galaxy platform for accessible, reproducible and collaborative biomedical analyses: 2016 update. Nucleic Acids Res. 2016;44(W1):W3–W10. doi: 10.1093/nar/gkw343.

- 18. Brown DK, Penkler DL, Musyoka TM, Tastan Bishop Ö. JMS: An Open Source Workflow Management System and Web-Based Cluster Front-End for High Performance Computing. PLoS One. 2015;10(8):e0134273. doi: 10.1371/journal.pone.0134273.

- 19. Howie BN, Donnelly P, Marchini J. A flexible and accurate genotype imputation method for the next generation of genome-wide association studies. PLoS Genet. 2009;5:e1000529. doi: 10.1371/journal.pgen.1000529.

- 20. Delaneau O, Marchini J, Zagury JF. A linear complexity phasing method for thousands of genomes. Nat Methods. 2012;9:179–181. doi: 10.1038/nmeth.1785.

- 21. Chimusa ER, Daya M, Möller M, Ramesar R, Henn BM, van Helden PD, Mulder NJ, Hoal EG. Determining Ancestry Proportions in Complex Admixture Scenarios in South Africa using a novel Proxy Ancestry Selection Method. PLoS One. 2013;8(9):e73971. doi: 10.1371/journal.pone.0073971.

- 22. Chimusa ER, Mbiyavanga M, Mazandu GK, Mulder NJ. ancGWAS: a Post Genome-wide Association Study Method for Interaction, Pathway, and Ancestry Analysis in Homogeneous and Admixed Populations. Bioinformatics. 2015. doi: 10.1093/bioinformatics/btv619.

- 23. Choi Y, Sims GE, Murphy S, Miller JR, Chan AP. Predicting the Functional Effect of Amino Acid Substitutions and Indels. PLoS One. 2012;7(10):e46688. doi: 10.1371/journal.pone.0046688.

- 24. Adzhubei I, Jordan DM, Sunyaev SR. Predicting functional effect of human missense mutations using PolyPhen-2. Curr Protoc Hum Genet. 2013;Suppl 76. doi: 10.1002/0471142905.hg0720s76.

- 25. Capriotti E, Calabrese R, Casadio R. Predicting the insurgence of human genetic diseases associated to single point protein mutations with support vector machines and evolutionary information. Bioinformatics. 2006;22(22):2729–2734. doi: 10.1093/bioinformatics/btl423.

- 26. Shihab HA, Gough J, Cooper DN, Stenson PD, Barker GLA, Edwards KJ, et al. Predicting the Functional, Molecular, and Phenotypic Consequences of Amino Acid Substitutions using Hidden Markov Models. Hum Mutat. 2013;34(1):57–65. doi: 10.1002/humu.22225.

- 27. Masso M, Vaisman II. AUTO-MUTE: Web-based tools for predicting stability changes in proteins due to single amino acid replacements. Protein Eng Des Sel. 2010;23(8):683–687. doi: 10.1093/protein/gzq042.

- 28. Cheng J, Randall A, Baldi P. Prediction of protein stability changes for single-site mutations using support vector machines. Proteins. 2006;62(4):1125–1132. doi: 10.1002/prot.20810.

- 29. Campbell MC, Tishkoff SA. African genetic diversity: implications for human demographic history, modern human origins, and complex disease mapping. Annu Rev Genomics Hum Genet. 2008;9:403–433. doi: 10.1146/annurev.genom.9.081307.164258.

- 30. Chimusa ER, Meintjies A, Tchanga M, Mulder N, Seoighe C, Soodyall H, Ramesar R. A genomic portrait of haplotype diversity and signatures of selection in indigenous southern African populations. PLoS Genet. 2015;11(3):e1005052. doi: 10.1371/journal.pgen.1005052.

- 31. Bishop ÖT, Adebiyi EF, Alzohairy AM, Everett D, Ghedira K, Ghouila A, et al. Bioinformatics education - perspectives and challenges out of Africa. Brief Bioinform. 2014;16(2):355–364. doi: 10.1093/bib/bbu022.