Significance

Learning about multiple features of a choice option is crucial for optimal decision making. How such multiattribute learning is realized remains unclear. Using functional MRI, we show that the brain exploits separate mesolimbic and mesocortical networks to simultaneously learn about reward and effort attributes. We show a double dissociation, evident in the expression of effort learning signals in dorsomedial prefrontal areas and reward learning signals in ventral striatal areas, with this dissociation being spatially mirrored in dopaminergic midbrain. At the time of choice, these segregated signals are integrated in ventral striatum. These findings highlight how the brain parses parallel learning demands.

Keywords: effort prediction errors, reward prediction errors, apathy, substantia nigra/ventral tegmental area, dorsomedial prefrontal cortex

Abstract

Optimal decision making mandates that organisms learn the relevant features of choice options. Likewise, knowing how much effort we should expend can assume paramount importance. A mesolimbic network supports reward learning, but it is unclear whether other choice features, such as effort learning, rely on this same network. Using computational fMRI, we show parallel encoding of effort and reward prediction errors (PEs) within distinct brain regions, with effort PEs expressed in dorsomedial prefrontal cortex and reward PEs in ventral striatum. We show a common mesencephalic origin for these signals evident in overlapping, but spatially dissociable, dopaminergic midbrain regions expressing both types of PE. During action anticipation, reward and effort expectations were integrated in ventral striatum, consistent with a computation of an overall net benefit of a stimulus. Thus, we show that motivationally relevant stimulus features are learned in parallel dopaminergic pathways, with formation of an integrated utility signal at choice.

Organisms need to make energy-efficient decisions to maximize benefits and minimize costs, a tradeoff exemplified in effort expenditure (1–3). A key example occurs during foraging, where an overestimation of effort can lead to inaction and starvation (4), whereas underestimation of effort can result in persistent failure, as exemplified in the myth of Sisyphus (5).

In a naturalistic environment, we often learn simultaneously about whether we have expended sufficient effort on an action and about the reward we obtain from that same action. The reward outcomes that signal success and failure of an action are usually clear, whereas the effort necessary to attain success is often less transparent. Only by repeatedly experiencing success and failure is it possible to acquire an estimate of the optimal level of effort needed to succeed without unnecessary waste of energy. This type of learning is important in contexts as diverse as foraging, hunting, and harvesting (6–8). In his “law of less work,” Hull proposed that organisms “gradually learn” how to minimize effort expenditure (9). Surprisingly, we know little regarding the neurocognitive mechanisms that guide this form of simultaneous learning about reward and effort.

A mesolimbic dopamine system encodes a teaching signal tethered to prediction of reward outcomes (10, 11). These reward prediction errors (PEs) arise from dopaminergic neurons in substantia nigra and ventral tegmental area (SN/VTA) and are broadcast to ventral striatum (VS) to mediate reward-related adaptation and learning (12, 13). Dopamine is also thought to provide a motivational signal (14–18), while dopaminergic deficits in rodents impair how effort and reward are arbitrated (1, 4, 19). The dorsomedial prefrontal cortex (dmPFC; spanning presupplementary motor area [pre-SMA] and dorsal anterior cingulate cortex [dACC]) is a candidate substrate for effort learning. For example, selective lesioning of this region engenders a preference for low-effort choices (15, 20–23), while receiving effort feedback elicits responses in this same region (24, 25). The importance of dopamine to effort learning is also hinted at in disorders with putative aberrant dopamine function, such as schizophrenia (26), where a symptom profile (“negative symptoms”) often includes a lack of effort expenditure and apathy (27–30).

A dopaminergic involvement in effort arbitration (14, 15, 17, 19, 30) suggests that effort learning might proceed by exploiting similar mesolimbic mechanisms as in reward learning, and this would predict effort PE signals in SN/VTA and VS. Alternatively, based on a possible role for dorsomedial prefrontal cortex, effort and reward learning signals might be encoded in two segregated (dopaminergic) systems, with reward learning relying on PEs within mesolimbic SN/VTA and VS and effort learning relying on PEs in mesocortical SN/VTA projecting to dmPFC. A final possibility is that during simultaneous learning, the brain might express a unified net benefit signal, integrated over reward and effort, and update this signal via a “utility” PE alone.

To test these predictions, we developed a paradigm wherein subjects learned reward and effort contingencies simultaneously in an ecologically realistic manner while we acquired functional magnetic resonance imaging (fMRI) data. We reveal a double dissociation within mesolimbic and mesocortical networks in relation to reward and effort learning. These segregated teaching signals, with an origin in spatially dissociable regions of dopaminergic midbrain, were integrated in VS during action preparation, consistent with a unitary net benefit signal.

Results

Effort and Reward Learning.

Our behavioral task required 29 male subjects to learn simultaneously about, and adapt to, changing effort demands as well as changing reward magnitudes (Fig. 1A and SI Appendix). On every trial, subjects saw one of two stimuli, where each stimulus was associated with a specific reward magnitude (1 to 7 points, 50% reward probability across the entire task) and a required effort threshold (% individual maximal force, determined during practice). These parameters were initially unknown to the subjects and drifted over time, such that reward and effort magnitudes changed independently. After an effort execution phase, the associated reward magnitude of the stimulus was shown together with categorical feedback as to whether the subject had exceeded a necessary effort threshold, where the latter was required to successfully reap the reward. Importantly, subjects were not informed explicitly about the height of the effort threshold but only received feedback as to the success (or not) of their effort expenditure. On every trial, subjects received information about both effort and reward, and thus learned simultaneously about both reward magnitude and a required effort threshold, through a process of trial and error (Fig. 1B).

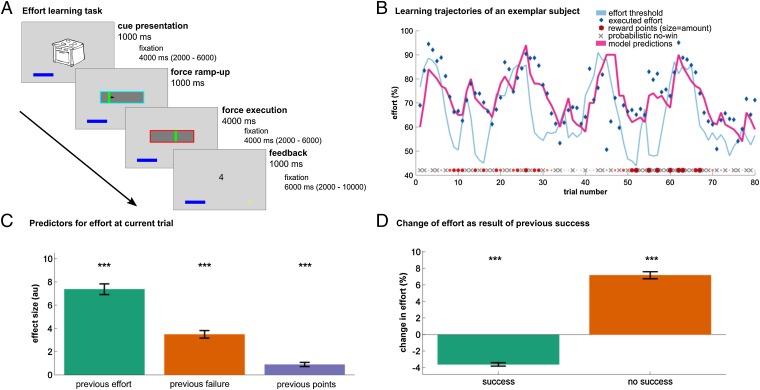

Fig. 1.

Effort learning task and behavior. (A) Each stimulus is associated with a changing reward magnitude and effort threshold. After seeing a stimulus, subjects exert effort using a custom-made, MR-compatible, pneumatic hand gripper. Following a ramp-up phase (blue frame), subjects continuously exert a constant force to exceed an effort threshold (red frame phase). The duration of force exertion was kept constant across all trials to obviate temporal discounting that could confound effort execution (14). If successful, subjects receive points that are revealed during feedback (here, 4 points). If a subject exerts too little effort (i.e., does not exceed the effort threshold), a cross is superimposed over the number of (potential) points, indicating they will receive no points on that trial, but still allowing subjects to learn about the potential reward associated with a given stimulus. (B) Effort and reward trajectories and actual choice behavior of an exemplar subject. Both effort threshold (light blue line) and reward magnitude (red points) change across time. Rewards were delivered probabilistically, yielding no reward (gray x’s; “0” presented on the screen) on half the trials, independent of whether subjects exceeded a required effort threshold. The exemplar subject can be seen to adapt their behavior (blue diamonds) to a changing effort threshold. Model predictions (pink, depicting maximal subjective net benefit) closely follow the subject’s behavior. As predicted by our computational model, this subject modulates their effort expenditure based on potential reward. In low-effort trials, the subject exerts substantially more effort in high-reward trials (e.g., Left side) compared with similar situations in low-reward trials (e.g., Right side).
(C) Group analysis of 29 male subjects shows that the exerted effort is predicted by factors of previous effort, failure to exceed the threshold on the previous trial, and reward magnitude, demonstrating that subjects successfully learned about reward and effort requirements. (D) If subjects fail to exceed an effort threshold, they on average exert more force in a subsequent trial (orange). Following successful trials, subjects reduce the exerted force and adapt their behavior by minimizing effort (green). Bar plots indicate mean ± 1 SEM; ***P < 0.001. au, arbitrary units.

To assess learning, we performed a multiple regression analysis (Fig. 1C) that predicted exerted effort on every trial. A significant effect of previous effort [t(28) = 15.96, P < 0.001] indicated that subjects were not exerting effort randomly but approximated the effort they had expended on previous encounters with the same stimulus, as expected from a learning process. Subjects increased their effort for higher rewards [t(28) = 4.97, P < 0.001], consistent with a motivation to expend greater energy on high-value choices. Last, subjects exerted more effort following trials where they failed to exceed an effort threshold [t(28) = 10.75, P < 0.001], consistent with adaptation of effort to a required threshold. Subsequent analysis showed that subjects not only increased effort expenditure after missing an effort threshold [Fig. 1D; t(28) = 17.08, P < 0.001] but also lessened their effort after successfully surpassing an effort threshold [t(28) = −17.15, P < 0.001], in accordance with the predictions of Hull’s law (9). Taken together, these analyses reveal that subjects were able to simultaneously learn about both rewards and effort requirements.
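The t(28) statistics above imply one regression per subject followed by a group test across the 29 subjects. The sketch below illustrates that two-level (summary statistics) logic under stated assumptions: the function names are hypothetical, predictors are taken from the previous trial with the same stimulus, and "reward magnitude" is assumed to mean the magnitude last displayed for that stimulus. The exact regressor coding used in the study is described in SI Appendix, not here.

```python
import numpy as np
from scipy import stats


def subject_betas(effort, stim, reward, failed):
    """Regress exerted effort on the previous trial with the same stimulus.

    Hypothetical per-trial inputs: exerted effort, stimulus id, reward magnitude
    shown at feedback, and whether the effort threshold was missed (bool).
    Returns betas for [previous effort, previous failure, previous reward].
    """
    rows, y = [], []
    last_seen = {}  # stimulus id -> index of its most recent trial
    for t, s in enumerate(stim):
        if s in last_seen:
            p = last_seen[s]
            rows.append([1.0, effort[p], float(failed[p]), reward[p]])
            y.append(effort[t])
        last_seen[s] = t
    X, y = np.asarray(rows, dtype=float), np.asarray(y, dtype=float)
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)  # ordinary least squares
    return beta[1:]  # drop the intercept


def group_test(betas):
    """Summary-statistics step: one-sample t-test of subject betas against 0."""
    # betas: (n_subjects, n_predictors); df = n_subjects - 1
    return stats.ttest_1samp(np.asarray(betas, dtype=float), 0.0)
```

Stacking `subject_betas` outputs for all subjects and passing them to `group_test` yields one t value per predictor, matching the form of the statistics reported above.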

A Computational Framework for Effort and Reward Learning.

To probe deeper into how precisely subjects learn about reward and effort, we developed a computational reinforcement learning model that predicts effort exerted at every trial, and compared this model with alternative formulations (see SI Appendix, Fig. S1 for detailed model descriptions). Our core model had three distinct components: reward learning, effort learning, and reward–effort arbitration (i.e., effort discounting), which were used to predict effort execution at each trial. To capture reward learning, we included a Rescorla–Wagner–like model (31), where reward magnitude learning occurred via a reward prediction error. Note that our definition of reward PE deviates from standard definitions (10, 11, 32), a choice dictated in part by our design. First, our reward learning algorithm does not track actual rewarded points. Because subjects learned about reward magnitude even if they failed to surpass an effort threshold, and thus did not harvest any points (hypothetical rewards remained visible behind a superimposed cross), the algorithm tracks the magnitude of potential reward. This implementation aligns with findings that dopamine encodes a prospective (hypothetical) prediction error signal (33, 34). Second, we used a probabilistic reward schedule, similar to that used in previous studies of reward learning (35–37). Subjects received 0 points in 50% of the trials (fixed for the entire experiment), and these null outcomes did not reflect any change in reward magnitude. Using model comparison (all model fits are shown in SI Appendix, Fig. S2), we found that a model incorporating learning from these 0-outcome trials outperformed a normatively more optimal model that learned exclusively from actual reward magnitudes. This is in line with classical reinforcement learning approaches (31, 32), wherein reward expectation manifests as a weighted history of all experienced rewards.
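The contrast between the two reward learners can be made concrete. Below is a minimal sketch, assuming a plain Rescorla–Wagner delta rule with a single learning rate `alpha` and an initial expectation `v0` (both hypothetical free parameters, with `v0 = 4` chosen only as the midpoint of the 1 to 7 point scale). The first function updates on every displayed outcome, including probabilistic zeros, which yields an exponentially weighted history of all outcomes; the second is the "optimal" comparison that carries forward the last non-zero magnitude. The study's exact equations are in SI Appendix.

```python
import numpy as np


def rw_potential_reward(outcomes, alpha, v0=4.0):
    """Rescorla-Wagner tracking of *potential* reward magnitude for one stimulus.

    `outcomes` are the points displayed at feedback (visible even when the
    effort threshold was missed); probabilistic null outcomes enter as 0.
    Returns the expectation before each trial and the reward PE on each trial.
    """
    v = v0
    expectations, pes = [], []
    for r in outcomes:
        pe = r - v              # reward prediction error
        expectations.append(v)
        pes.append(pe)
        v = v + alpha * pe      # learn from every outcome, including zeros
    return np.array(expectations), np.array(pes)


def last_nonzero_magnitude(outcomes, v0=4.0):
    """'Optimal' comparison model: carry forward the last non-zero magnitude,
    ignoring probabilistic null outcomes."""
    v, expectations = v0, []
    for r in outcomes:
        expectations.append(v)
        if r > 0:
            v = r
    return np.array(expectations)
```

For outcomes `[4, 0, 4]` with `alpha = 0.5`, the delta-rule learner's expectation drops from 4 to 2 after the null outcome, whereas the comparison model would keep expecting 4.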

To model effort learning, we adapted an iterative logistic regression approach (38), in which subjects are assumed to learn about the effort threshold based on a PE. We implemented this approach because subjects did not receive explicit information about the exact height of the effort threshold and instead had to infer it from their success history. Here we define an effort PE as the difference between a subject’s belief in succeeding, given the executed effort, and their actual success in surpassing the effort threshold. This effort PE updates a belief about the height of the effort threshold, and thus the belief of succeeding given a certain effort. Note that this does not describe a simple motor or force PE signal, given that a force PE would be evident during force execution to signal a deviation between a currently executed and an expected force. Moreover, in our task, effort PEs are realized at outcome presentation in the absence of motor execution, signaling a deviation from a hidden effort threshold. Finally, as we are not interested in a subjective, embodied experience of ongoing force execution, we visualized the executed effort by means of a thermometer, an approach used in previous studies (39, 40).
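One way to make this definition concrete is a logistic belief of success with a fixed slope and a learned threshold estimate. The sketch below assumes exactly that form; `alpha`, `slope`, `theta0`, and effort scaled to 0..1 are hypothetical choices, not the study's fitted model. With PE = P(success | effort) − success, a positive PE means the threshold was higher than expected, matching the sign of the dmPFC and SN/VTA effects reported below. Updating the threshold by `alpha * PE` is a stochastic-gradient step on the logistic log-likelihood with respect to the threshold, which is what makes this an online (iterative) logistic regression.

```python
import math


def effort_learning(efforts, successes, alpha, slope, theta0=0.5):
    """Online logistic-regression sketch of effort-threshold learning.

    Belief of success given effort e: p = 1 / (1 + exp(-slope * (e - theta))).
    Effort PE = p - success, so a positive PE means the threshold turned out
    higher than expected. The update theta += alpha * PE equals a gradient
    step on the log-likelihood of success with the slope held fixed.
    """
    theta = theta0
    thetas, pes = [], []
    for e, s in zip(efforts, successes):
        p_success = 1.0 / (1.0 + math.exp(-slope * (e - theta)))
        pe = p_success - float(s)   # belief in succeeding minus actual success
        thetas.append(theta)        # threshold estimate held before feedback
        pes.append(pe)
        theta += alpha * pe         # unexpected failure -> raise threshold estimate
    return thetas, pes
```

An unexpected failure at an effort the learner believed adequate produces a positive PE and raises the threshold estimate; an unexpected success lowers it, producing the "law of less work" pattern in Fig. 1D.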

The two independent learning modules, involving effort or reward, are combined at decision time to form an integrated net utility of the stimulus at hand. Previous studies indicate that this reward–effort arbitration follows a quadratic or sigmoidal, rather than a hyperbolic, discount function (39–41). As in these prior studies, we found that a sigmoidal discounting function best described this arbitration (SI Appendix, Fig. S2) (39, 40). Furthermore, arbitration was better explained if reward magnitude modulated not only the height of this function but also its indifference point. A sigmoidal form predicts that the utility of a choice will decrease as the associated effort increases. Our model further predicts that utility is little affected in low-effort conditions (compare Fig. 1B and SI Appendix, Fig. S1C). Moreover, the impact of effort is modulated by reward such that in high-reward conditions, subjects are more likely to exert greater effort to ensure they surpass an effort threshold (compare SI Appendix, Fig. S1).
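To illustrate these qualitative properties, here is a hypothetical parameterization (not the fitted model from SI Appendix): utility is reward times a sigmoidal discount whose indifference point shifts linearly with reward, m(R) = m0 + m1·R. The helper `best_effort` combines this with a logistic belief of success by multiplying the two, which is an illustrative assumption about how the modules could be integrated at decision time. All parameter values (`k`, `m0`, `m1`, `slope`) are made up for the example.

```python
import numpy as np


def discounted_utility(effort, reward, k=12.0, m0=0.5, m1=0.05):
    """Sigmoidal effort discounting of reward (hypothetical parameterization).

    Effort is a fraction of maximal force (0..1). Reward scales the height of
    the function and shifts its indifference point m(R) = m0 + m1 * R, so
    larger rewards tolerate more effort before utility collapses.
    """
    indifference = m0 + m1 * reward
    discount = 1.0 - 1.0 / (1.0 + np.exp(-k * (effort - indifference)))
    return reward * discount


def best_effort(reward, theta_hat, slope=20.0, grid=None):
    """Effort on a grid that maximizes P(success | e) * U(e, R).

    P(success | e) is a logistic belief about the threshold estimate
    `theta_hat`; combining it multiplicatively with U is an assumption.
    """
    if grid is None:
        grid = np.linspace(0.0, 1.0, 101)
    p_success = 1.0 / (1.0 + np.exp(-slope * (grid - theta_hat)))
    net_benefit = p_success * discounted_utility(grid, reward)
    return grid[np.argmax(net_benefit)]
```

With these values, utility for a 4-point reward stays near 4 at 10% to 20% of maximal force but falls below 1 at 90%, and the net-benefit-maximizing effort for a 7-point reward lies above that for a 1-point reward at the same threshold estimate.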

To assess whether subjects learned using a PE-like teaching signal, we compared our PE-based learning model with alternative formulations (SI Appendix, Fig. S2). A first comparison revealed that the PE learning model outperformed nonlearning models where reward or effort was fixed rather than dynamically adapted, supporting the idea that subjects simultaneously learned about, and adjusted their behavior to, both features. We also found that the effort PE model outperformed a heuristic model that only adjusted its expectation based on success but did not scale the magnitude of adjustment using an effort PE, in line with a previous study showing PE-like learning of effort (24). In addition, we compared the model with an optimal reward learning model, which tracks the previous reward magnitude and ignores the probabilistic null outcomes, revealing that PE-based reward learning outperformed this optimal model.

Finally, because our model was optimized to predict executed effort, we examined whether model-driven PEs also predicted an observed trial-by-trial change in effort. Using multiple regression, we found that model-derived PEs indeed have behavioral relevance, and both effort [t(28) = 13.50, P < 0.001] and reward PEs [t(28) = 2.10, P = 0.045] significantly predict effort adaptation. This provides model validity consistent with subjects learning about effort and reward using PE-like signals.

Distinct Striatal and Cortical Representations of Reward and Effort Prediction Errors.

Using fMRI, we tested whether model-derived effort and reward PEs are subserved by similar or distinct neural circuitry. We analyzed effort and reward PEs during feedback presentation by entering both in the same regression model (nonorthogonalized; correlation between regressors: r = 0.056 ± 0.074; SI Appendix, Fig. S4). Bilateral VS responded significantly to reward PEs [P < 0.05, whole-brain family-wise error (FWE) correction; see SI Appendix, Table S1 for all activations] but not to effort PEs (Fig. 2 A–C). In contrast, dmPFC (peaking in pre-SMA extending into dACC) responded to effort PEs (P < 0.05, whole-brain FWE correction; Fig. 2 D–F and SI Appendix, Table S1) but not to reward PEs (Fig. 2F). In dmPFC, activity increased if an effort threshold was higher than expected and was attenuated if it was lower than expected, suggestive of an invigorating function for future action. This finding is in keeping with previous work on effort outcome (24, 25). Moreover, dmPFC activity significantly influenced the subsequent change in effort execution [effect size: 0.04 ± 0.07; t(27) = 2.82, P = 0.009], supporting its behavioral relevance in this task and consistent with updating a subject’s expectation about future effort requirements (33). Interestingly, the dmPFC area processing effort PEs peaks anterior to pre-SMA and lies anterior to where anticipatory effort signals are found in SMA (SI Appendix, Fig. S7), suggesting a spatial distinction between effort anticipation and evaluation.
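Entering both PEs "nonorthogonalized" in one model means neither regressor is given priority over shared variance; each beta reflects only the variance unique to its regressor. The numpy sketch below illustrates that idea with parametric modulators at outcome onsets convolved with a double-gamma HRF approximating SPM's canonical shape. It is a simplified illustration, not the study's SPM pipeline: it omits high-pass filtering, temporal autocorrelation modelling, and nuisance regressors, and the function names are hypothetical.

```python
import math
import numpy as np


def canonical_hrf(tr, length=32.0):
    """Double-gamma HRF approximating SPM's canonical shape (undershoot ratio 1/6)."""
    t = np.arange(0.0, length, tr)
    peak = t ** 5 * np.exp(-t) / math.gamma(6)          # gamma pdf, shape 6, scale 1 s
    undershoot = t ** 15 * np.exp(-t) / math.gamma(16)  # gamma pdf, shape 16, scale 1 s
    h = peak - undershoot / 6.0
    return h / h.sum()


def event_regressor(onsets, values, n_scans, tr, demean=True):
    """Stick functions at outcome onsets, scaled by a parametric modulator, convolved with the HRF."""
    vals = np.asarray(values, dtype=float)
    if demean:
        vals = vals - vals.mean()  # mean-centre so the modulator is separate from the main event effect
    sticks = np.zeros(n_scans)
    for onset, v in zip(onsets, vals):
        sticks[int(round(onset / tr))] += v
    return np.convolve(sticks, canonical_hrf(tr))[:n_scans]


def fit_glm(bold, regressors):
    """OLS with all regressors entered simultaneously (no serial orthogonalization),
    so each beta reflects variance unique to its regressor."""
    X = np.column_stack([np.ones(len(bold))] + list(regressors))
    beta, *_ = np.linalg.lstsq(X, bold, rcond=None)
    return beta[1:]  # drop the intercept
```

In use, one would build an unmodulated outcome regressor (`demean=False` with all-ones values) plus one modulated regressor each for reward PE and effort PE, and fit all three together with `fit_glm`.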

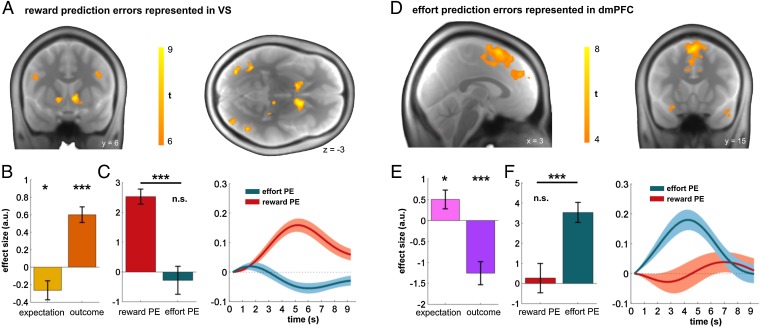

Fig. 2.

Separate reward and effort PEs in striatum and cortex. (A) Reward PEs encoded in bilateral ventral striatum (P < 0.05, whole-brain height-FWE correction). (B) Analysis of VS shows an encoding of a full reward PE, reflecting both expectation [t(27) = −2.44, P = 0.021] and outcome components [t(27) = 6.68, P < 0.001]. (C) VS response at outcome showed a significantly increased response to reward PEs relative to effort PEs [t(27) = 5.80, P < 0.001], with no evidence for an effect of effort PE [t(27) = −0.60, P = 0.554]. (D) Effort PE encoding in dorsomedial prefrontal cortex (P < 0.05, whole-brain height-FWE correction). This PE includes components reflecting effort expectation [t(27) = 2.28, P = 0.030] and outcome [t(27) = −4.59, P < 0.001; i.e., whether or not threshold was surpassed]. (E) Activity in dmPFC increased when an effort threshold was higher than expected and decreased when it was lower than expected. (F) dmPFC showed no encoding of a reward PE [t(27) = 0.37, P = 0.714], and effort PEs were significantly greater than reward PEs in this region [t(27) = 4.87, P < 0.001]. The findings are consistent with effort and reward PEs being processed in segregated brain regions. Bar and line plots indicate mean effect size for regressor ± 1 SEM. *P < 0.05; ***P < 0.001; nonsignificant (n.s.), P > 0.10. a.u., arbitrary units.

Neither VS nor dmPFC showed a significant interaction effect between effort and reward PEs [dmPFC: effect size: −0.32 ± 3.77; t(27) = −0.45, P = 0.657; VS: effect size: −0.41 ± 1.59; t(27) = −1.36, P = 0.185]. Post hoc analysis confirmed that both components of a prediction error, expectation and outcome, were represented in these two regions (Fig. 2 B and E), consistent with a full PE encoding rather than simply indexing an error signal (cf. 42). The absence of any effect of probabilistic 0 outcomes in dmPFC further supports the idea that this region tracks an effort PE rather than a general negative feedback signal [effect size: 0.01 ± 0.18; t(27) = 0.21, P = 0.832]. No effects were found for negative-heading (inverse) PEs in either reward or effort conditions (e.g., increasing activation for decreasing reward PEs; SI Appendix, Table S1). To examine the robustness of this double dissociation, we sampled activity from independently derived regions of interest (ROIs; VS derived from www.neurosynth.org; dmPFC derived from ref. 25), and again found a significant double dissociation in both VS (SI Appendix, Fig. S5A) and dmPFC (SI Appendix, Fig. S5B). Moreover, this double dissociation was also evident in a whole-brain comparison between effort and reward PEs [SI Appendix, Fig. S5C; dmPFC: Montreal Neurological Institute (MNI) coordinates: −14 −9 69, t = 6.05, P < 0.001 cluster-extent FWE, height-threshold P = 0.001; VS: MNI: 15 9 −6, t = 6.73, P < 0.001 cluster-extent FWE].

Additionally, we controlled for unsigned (i.e., salience) effort and reward PE signals by including them as additional regressors in the same fMRI model (correlation matrix shown in SI Appendix, Fig. S4), as such signals have been suggested to be represented in dmPFC (e.g., 43). Interestingly, when analyzing the unsigned salience PEs, we found that both effort and reward salience PEs elicit responses in regions typical of a salience network (44), and a conjunction analysis across the two salience PEs showed common activation in left anterior insula and intraparietal sulcus (SI Appendix, Fig. S3).

Simultaneous Representations of Effort and Reward PEs in Dopaminergic Midbrain.

We next asked whether an effort PE in dmPFC reflects an influence from a mesocortical input originating within SN/VTA. Dopaminergic cell populations occupy the midbrain structures substantia nigra and ventral tegmental area, and project to a range of cortical and subcortical brain regions (45–47). Dopamine neurons in SN/VTA encode reward PEs (11, 48) that are broadcast to VS (12). Similar neuronal populations have been found to encode information about effort and reward (49, 50). Using an anatomically defined mask of SN/VTA, we found that at the time of feedback this region encodes both reward and effort PEs (Fig. 3; P < 0.05, small-volume FWE correction for SN/VTA; SI Appendix, Table S1), consistent with a common dopaminergic midbrain origin for striatal and cortical PE representations.

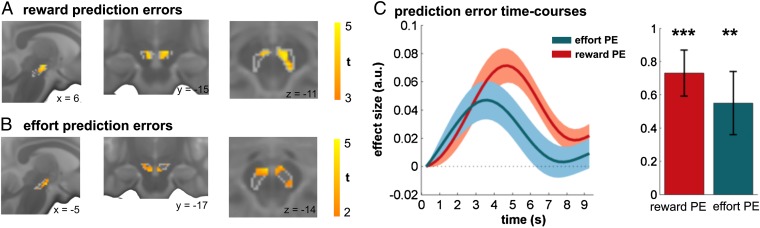

Fig. 3.

Dopaminergic midbrain encodes reward and effort PEs at outcome. Analysis of SN/VTA revealed a significant reward (A) and effort (B) PE signal (P < 0.05, small-volume height-FWE correction for anatomically defined SN/VTA). Gray lines depict boundaries of anatomical SN/VTA mask. A simultaneous encoding of both PEs [C; mean activity in anatomical SN/VTA: reward PE: t(27) = 5.26, P < 0.001; effort PE: t(27) = 2.90, P = 0.007] suggests a common origin of the dissociable (sub)cortical representations. Activation increase signals the outcome was better than expected for reward PEs, but indicates an increased effort threshold for effort PEs. Bar and line plots indicate mean effect size for regressor ± 1 SEM; **P < 0.01, ***P < 0.001. a.u., arbitrary units.

Ascending Mesolimbic and Mesocortical Connections Encode PEs.

PEs in both dopaminergic midbrain and (sub)cortical regions suggest that SN/VTA expresses effort and reward learning signals, which are then broadcast to VS and dmPFC. However, there are also important descending connections from dmPFC and VS to SN/VTA (51, 52), providing a potential source of top–down influence on midbrain. To resolve the directionality of influence, we used a directionally sensitive analysis of effective connectivity. This analysis compares different biophysically plausible generative models and from these determines the model with the best-fitting neural dynamics [dynamic causal modeling; DCM (53); Materials and Methods]. We found strong evidence in favor of a model in which effort and reward PEs drive ascending connections, compared with models in which they drive descending or mixed connections (Bayesian random-effects model selection: expected posterior probability 0.55, exceedance probability 0.976, Bayesian omnibus risk 2.83 × 10⁻⁴), a finding consistent with PEs computed within SN/VTA being broadcast to their distinct striatal and cortical targets.
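The expected posterior and exceedance probabilities come from random-effects Bayesian model selection, which treats the best model as varying across subjects and infers a Dirichlet distribution over model frequencies. The sketch below follows the standard variational scheme of Stephan et al. (2009), assuming per-subject log model evidences (e.g., DCM free energies) are already available; the function name is hypothetical, exceedance probabilities are estimated by Monte Carlo sampling, and the Bayesian omnibus risk reported above requires an additional computation not shown here.

```python
import numpy as np
from scipy.special import digamma


def random_effects_bms(log_evidence, n_iter=200, n_samples=100_000, seed=0):
    """Variational random-effects Bayesian model selection.

    log_evidence: (n_subjects, n_models) array of log model evidences.
    Returns expected posterior model probabilities and exceedance probabilities.
    """
    lme = np.asarray(log_evidence, dtype=float)
    n_models = lme.shape[1]
    alpha0 = np.ones(n_models)  # flat Dirichlet prior over model frequencies
    alpha = alpha0.copy()
    for _ in range(n_iter):
        log_u = lme + digamma(alpha) - digamma(alpha.sum())
        log_u -= log_u.max(axis=1, keepdims=True)  # numerical stability
        g = np.exp(log_u)
        g /= g.sum(axis=1, keepdims=True)  # posterior model assignment per subject
        alpha = alpha0 + g.sum(axis=0)     # update Dirichlet parameters
    expected_r = alpha / alpha.sum()
    # Exceedance probability: how often each model has the highest frequency
    rng = np.random.default_rng(seed)
    samples = rng.dirichlet(alpha, size=n_samples)
    exceedance = np.bincount(samples.argmax(axis=1), minlength=n_models) / n_samples
    return expected_r, exceedance
```

When every subject strongly favors one model, its expected probability approaches (n_subjects + 1) / (n_subjects + n_models) and its exceedance probability approaches 1.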

A Spatial Dissociation of Effort and Reward PEs in SN/VTA.

A functional double dissociation between VS and dmPFC, but a simultaneous representation of both PEs in dopaminergic midbrain, raises a question as to whether effort and reward PEs are spatially dissociable within the SN/VTA. Evidence in rodents and nonhuman primates points to SN/VTA containing dissociable dopaminergic populations projecting to distinct areas of cortex and striatum (45–47, 54, 55). Specifically, mesolimbic projections to striatum are located in medial parts of the SN/VTA, whereas mesocortical projections to prefrontal areas originate from more lateral subregions (46, 47, 56). However, there is considerable spatial overlap between these neural populations (46, 47) as well as striking topographic differences between species, which cloud a full understanding of human SN/VTA topographical organization (45, 46).

A recent human structural study (57) segregated SN/VTA into ventrolateral and dorsomedial subregions. This motivated us to examine whether SN/VTA effort and reward PEs are dissociable along these axes (Fig. 4A). Using unsmoothed data, we tested how well activity in each SN/VTA voxel is predicted by either effort or reward PEs (Materials and Methods). The location of each voxel along the ventral–dorsal and medial–lateral axes was then used to predict the difference in t values between effort and reward PEs. Significant effects of both spatial gradients [Fig. 4B and SI Appendix, Fig. S6; ventral–dorsal gradient: β = −0.151, 95% confidence interval (C.I.) −0.281 to −0.022, P = 0.016; medial–lateral gradient: β = 0.469, 95% C.I. 0.336 to 0.602, P < 0.001] provided evidence that dorsomedial SN/VTA is more strongly affiliated with reward PE encoding, whereas ventrolateral SN/VTA is more strongly affiliated with effort PE encoding. We also examined whether this dissociation reflected different projection pathways using functional connectivity measures of SN/VTA with VS and dmPFC. Analyzing trial-by-trial blood oxygen level-dependent (BOLD) coupling (after regressing out the task-related effort and reward PE effects), we replicated these spatial gradients, with dorsomedial and ventrolateral SN/VTA more strongly coupled to VS and dmPFC, respectively (Fig. 4B; ventral–dorsal: β = −0.220, 95% C.I. −0.373 to −0.067, P = 0.002; medial–lateral: β = 0.466, 95% C.I. 0.310 to 0.622, P < 0.001). Similar results were obtained when using effect sizes rather than t values, and when computing gradients on a single-subject level in a summary statistics approach.
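The gradient analysis amounts to a voxelwise regression of the effort-minus-reward t difference on each voxel's coordinates. A minimal sketch follows, assuming coordinates that increase toward dorsal and lateral (so a negative ventral–dorsal beta means dorsal voxels favor reward PEs, and a positive medial–lateral beta means lateral voxels favor effort PEs). The function name is hypothetical, and the normal-approximation confidence intervals ignore spatial autocorrelation between neighboring voxels, so they would be optimistic; the inference actually used is described in Materials and Methods.

```python
import numpy as np


def spatial_gradients(t_effort, t_reward, ventral_dorsal, medial_lateral):
    """Regress the voxelwise effort-minus-reward t difference on voxel coordinates.

    Returns betas and normal-approximation 95% confidence intervals for
    [ventral-dorsal, medial-lateral]. Assumes independent voxels.
    """
    y = np.asarray(t_effort, dtype=float) - np.asarray(t_reward, dtype=float)
    X = np.column_stack([np.ones_like(y), ventral_dorsal, medial_lateral])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    dof = len(y) - X.shape[1]
    sigma2 = resid @ resid / dof                  # residual variance
    cov = sigma2 * np.linalg.inv(X.T @ X)         # OLS covariance of betas
    se = np.sqrt(np.diag(cov))
    ci = np.column_stack([beta - 1.96 * se, beta + 1.96 * se])
    return beta[1:], ci[1:]                       # drop the intercept
```

The same function applies unchanged to the connectivity analysis by replacing the two t maps with voxelwise coupling estimates to dmPFC and VS.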

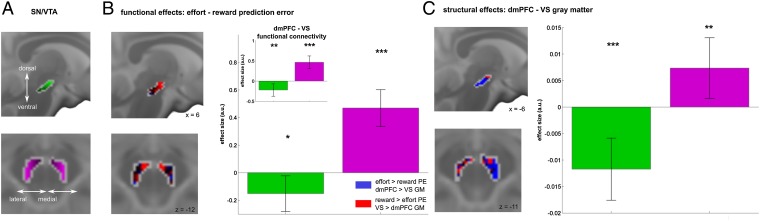

Fig. 4.

SN/VTA spatial expression mirrors cortical and striatal organization. (A and B) The relative expression of effort and reward PEs in SN/VTA follows spatial gradients along both a ventral–dorsal (green color gradients) and a medial–lateral (violet color gradients) axis. Multiple regression analysis revealed that ventral (green bars) and lateral (violet bars) voxels of SN/VTA represent effort PEs more strongly, relative to reward PEs. Effect maps (B, Left) show that dorsomedial voxels preferentially encode reward PEs (red colors), whereas ventrolateral voxels more strongly encode effort prediction errors (blue colors) (also see SI Appendix, Fig. S6). A functional connectivity analysis (B, small bar plot) revealed that SN/VTA expressed spatially distinct functional connectivity patterns: Ventral and lateral voxels are more likely to coactivate with dmPFC, whereas dorsal and medial SN/VTA voxels are more likely to coactivate with VS activity. (C) Gray matter (GM) analysis replicates the functional findings, revealing that GM in ventrolateral SN/VTA covaried with dmPFC GM density, whereas dorsomedial SN/VTA GM was associated with VS GM density. Our findings of consistent spatial gradients within SN/VTA thus suggest distinct mesolimbic and mesocortical pathways that can be analyzed along ventral–dorsal and medial–lateral axes in humans. Bar graphs indicate effect size ± 95% C.I.; *P < 0.05, **P < 0.01, ***P < 0.001. a.u., arbitrary units.

To explore further the spatial relationship between SN/VTA, dmPFC, and VS, we investigated structural associations between these areas. We used structural covariance analysis (58), which investigates how gray matter (GM) densities covary between brain regions and has been shown to be sensitive in identifying anatomically and functionally relevant networks (59). Specifically, we asked how well GM density in each SN/VTA voxel is predicted across subjects by dmPFC and VS GM (regions defined by their functional activations), and how these associations are distributed spatially within SN/VTA. Importantly, this analysis is entirely independent from our previous analyses, as it investigates individual GM differences as opposed to trial-by-trial functional task associations. Note there was no association between BOLD response and GM (dmPFC: r = 0.155, P = 0.430; VS: r = 0.100, P = 0.612; SN/VTA: effort PEs: r = 0.067, P = 0.737; reward PEs: r = 0.079, P = 0.690). Both spatial gradients were significant (Fig. 4C; ventral–dorsal gradient: β = −0.012, 95% C.I. −0.018 to −0.006, P < 0.001; medial–lateral: β = 0.007, 95% C.I. 0.002 to 0.013, P = 0.007), suggesting that SN/VTA GM was more strongly associated with dmPFC GM in ventrolateral areas and with VS GM in dorsomedial areas, thus confirming the findings of our previous analyses.

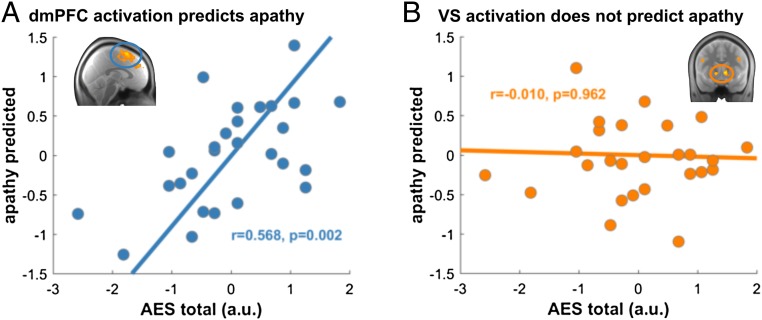

Apathy Is Predicted by Prefrontal but Not Striatal Function.

Several psychiatric disorders, including schizophrenia, express effort-related deficits (e.g., 28–30). A long-standing hypothesis assumes an imbalance between striatal and cortical dopamine (26, 60, 61), involving excess dopamine release in striatal (62, 63) but deficient dopamine release in cortical areas (64). While the former is linked to positive symptoms, such as hallucinations, the latter is considered relevant to negative symptoms, such as apathy (26, 28, 29). Given the striatal–cortical double dissociation, we examined whether apathy scores in our subjects, as assessed using the Apathy Evaluation Scale [AES (65)], were better predicted by dmPFC or VS activation. We ran a fivefold cross-validated prediction of AES total score using mean functional responses in either dmPFC or VS (using the same functional ROIs as above), with effort and reward PE responses in each region as predictors (correlation between predictors: dmPFC: r = 0.149, P = 0.458; VS: r = 0.144, P = 0.473). We found that dmPFC activations were highly predictive of apathy scores (Fig. 5A; P < 0.001, using permutation tests; Materials and Methods). Interestingly, the effect sizes for both reward (0.573 ± 0.050) and effort (0.351 ± 0.059) prediction errors in dmPFC showed a positive association with apathy, meaning that the larger a prediction error in dmPFC, the more apathetic a person was. Activity in VS did not predict apathy (Fig. 5B; P = 0.796). Consistent with this, extending a dmPFC-prediction model with VS activation did not improve apathy prediction (P = 0.394). There was no association between dmPFC (r = −0.225, P = 0.258) or VS (r = 0.142, P = 0.481) GM and apathy. Furthermore, we found no link between overt behavioral variables and apathy (money earned: r = −0.02, P = 0.927; mean exerted effort: r = 0.00, P = 0.99; SD exerted effort: r = −0.24, P = 0.235; number of trials failing to exceed the effort threshold: r = −0.13, P = 0.525).
These findings suggest self-reported apathy was specifically related to PE processing in dmPFC. Intriguingly, the finding that dmPFC reward PEs predicted apathy, despite the absence of a reward PE signal in this area at the group level (Fig. 2F), suggests that apathy might be related to an impoverished functional segregation between mesolimbic and mesocortical pathways. Supporting this notion, a median-split analysis showed a significant effect of reward PEs only in more apathetic subjects [low-apathy group: effect size: −1.23 ± 3.43; t(13) = −1.35, P = 0.201; high-apathy group: effect size: 2.37 ± 2.83; t(12) = 3.02, P = 0.011].
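The cross-validated apathy prediction with a permutation test can be sketched as follows. This is an illustration under assumptions, not the study's procedure: it uses ordinary least squares within each of five folds, scores performance as the correlation between out-of-sample predictions and observed scores, and builds the null by shuffling scores and repeating the whole cross-validation. `X` would hold each subject's mean effort and reward PE responses in one ROI and `y` their AES totals; the function names, the performance metric, and the permutation count are hypothetical choices (see Materials and Methods for the actual ones).

```python
import numpy as np


def cv_predict(X, y, n_folds=5, seed=0):
    """Out-of-sample predictions from k-fold cross-validated linear regression."""
    X, y = np.asarray(X, dtype=float), np.asarray(y, dtype=float)
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), n_folds)
    pred = np.empty_like(y)
    for test in folds:
        train = np.setdiff1d(np.arange(len(y)), test)
        X_train = np.column_stack([np.ones(len(train)), X[train]])
        beta, *_ = np.linalg.lstsq(X_train, y[train], rcond=None)
        pred[test] = np.column_stack([np.ones(len(test)), X[test]]) @ beta
    return pred


def permutation_p(X, y, n_perm=1000, seed=0):
    """Compare the CV prediction-outcome correlation with a null from shuffled y."""
    X, y = np.asarray(X, dtype=float), np.asarray(y, dtype=float)
    rng = np.random.default_rng(seed)
    observed = np.corrcoef(cv_predict(X, y), y)[0, 1]
    null = np.empty(n_perm)
    for i in range(n_perm):
        y_perm = rng.permutation(y)
        null[i] = np.corrcoef(cv_predict(X, y_perm), y_perm)[0, 1]
    p = (np.sum(null >= observed) + 1) / (n_perm + 1)  # add-one correction
    return observed, p
```

Repeating the full cross-validation inside each permutation keeps the null distribution honest: any optimism introduced by fitting within folds is present in both the observed and the shuffled runs.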

Fig. 5.

Apathy related to cortical but not striatal function. (A) Prediction error signals in dmPFC significantly predicted apathy scores as assessed using an apathy self-report questionnaire (AES total score). (B) PE signals in VS were not predictive of apathy. a.u., arbitrary units.

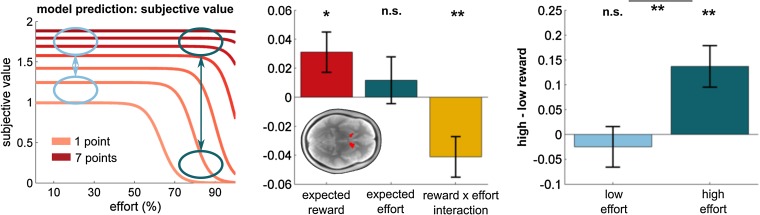

VS Encodes Subjective Net Benefit.

During anticipation, VS has been suggested to encode an overall net benefit or integrated utility signal incorporating both effort and reward (2, 66). We examined whether VS activity during cue presentation tracked both reward and effort expectation. Using a region-of-interest analysis (bilateral VS extracted from the reward PE contrast), we found a significant reward expectation effect (Fig. 6) [t(27) = 2.23, P = 0.035] but no effect of effort expectation [t(27) = 0.72, P = 0.476] at stimulus presentation. These findings accord with predictions of our model, in which subjective value increases as a direct function of reward but does not decrease linearly with increasing effort (Fig. 6, Left). Instead, the sigmoidal function of our reward–effort arbitration model implies that effort influences subjective value through an interaction with reward. This predicts that during low-effort trials reward has little impact on subjective value, whereas during high-effort trials the difference between low- and high-reward trials engenders a significant change in subjective value. We formally tested this by examining the interaction between effort and reward and found a significant effect [Fig. 6; t(27) = 2.94, P = 0.007]. A post hoc median-split analysis confirmed the model's prediction, evident in a significant effect of reward in high-effort [t(27) = 3.28, P = 0.003] but not in low-effort trials [t(27) = 0.61, P = 0.547].
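The predicted interaction can be illustrated with a hypothetical logistic form in which effort enters as a cost subtracted from reward before a sigmoid, SV = 1/(1 + exp(−k(R − mE))). The function `subjective_value` and the values of `k` and `m` are assumptions chosen for illustration only; the fitted reward–effort arbitration model is specified in SI Appendix and may differ in form.

```python
import math

def subjective_value(reward, effort, k=1.5, m=2.0):
    """Hypothetical logistic arbitration: SV = 1 / (1 + exp(-k * (reward - m * effort))).

    reward: expected points (1 to 7 in the task)
    effort: expected threshold as a fraction of maximal force (0.4 to 0.9 in the task)
    k, m:   illustrative slope and effort-cost weight (assumptions, not fitted values)
    """
    return 1.0 / (1.0 + math.exp(-k * (reward - m * effort)))

# Reward effect (high minus low reward) at a low and at a high effort level.
low_reward, high_reward = 2.0, 6.0
reward_effect = {}
for label, effort in [("low effort", 0.4), ("high effort", 0.9)]:
    diff = subjective_value(high_reward, effort) - subjective_value(low_reward, effort)
    reward_effect[label] = diff
    print(f"{label}: reward effect on SV = {diff:.3f}")
```

With these illustrative settings the reward effect is roughly three times larger at high effort, because at low effort both reward levels push the logistic function toward its ceiling, which compresses their difference.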

Fig. 6.

Unified and distinct representations of effort and reward during anticipation. Ventral striatum encoded a subjective value signal in accord with predictions of our computational model (Left). A main effect of expected reward (Middle) reflected an increase in subjective value with higher reward. The reward × effort interaction (Middle) and the subsequent median-split analysis (Right) show that a difference between high and low rewards is more pronounced during high-effort trials, as predicted by our model (blue arrows; Left). A similar interaction effect was found when using a literature-based VS ROI [reward × effort expectation: t(27) = −2.28, P = 0.016; high–low reward in high effort: t(27) = 2.51, P = 0.018; high–low reward in low effort: t(27) = −0.41, P = 0.684]. Bar and line plots indicate mean effect size for regressor ± 1 SEM. *P < 0.05; **P < 0.01; nonsignificant (n.s.), P > 0.10.

Discussion

Tracking multiple aspects of a choice option, such as reward and effort, is critical for efficient decision making and demands simultaneous learning of these choice attributes. Here we show that the brain exploits distinct mesolimbic and mesocortical pathways to learn these choice features in parallel, with a reward PE represented in VS and an effort PE represented in dmPFC. Critically, we demonstrate that both types of PE at outcome satisfy the requirements for a full PE, reflecting effects of both expectation and outcome (cf. 42), and thus extend previous single-attribute learning studies of reward PEs (e.g., 12, 36, 42) and effort outcomes (24, 25).

Our study shows a functional double dissociation between VS and dmPFC, highlighting their preferential processing of reward and effort PEs, respectively. This functional and anatomical segregation provides an architecture that can enable the brain to learn about multiple choice features simultaneously, specifically predictions of effort and reward. Although dopaminergic activity cannot be assessed directly using fMRI, both effort and reward PEs were evident in segregated regions of dopamine-rich midbrain, and an effective connectivity analysis indicated a directional influence from SN/VTA toward subcortical (reward PE) and cortical (effort PE) targets via ascending mesolimbic and mesocortical pathways, respectively.

Dopaminergic midbrain is thought to comprise several distinct dopaminergic populations with dissociable functions (54, 56, 67, 68). Here we demonstrate a segregation between effort and reward learning within SN/VTA across the domains of task activation, functional connectivity, and gray matter density. The dorsomedial encoding of reward PEs and the ventrolateral encoding of effort PEs in SN/VTA extend previous studies on SN/VTA subregions (56, 57, 67, 68) by demonstrating that this segregation has functional implications that are exploited during multiattribute learning. In contrast to previous studies on SN/VTA substructures (56, 67–69), we performed whole-brain imaging, which allowed us to investigate the precise interactions between dopaminergic midbrain and striatal/cortical areas. However, this required a slightly lower spatial resolution in SN/VTA than in previous studies (56, 67–69), restricting our analyses to spatial gradients across the entire SN/VTA rather than analyses of individual subregions. We speculate that the dorsomedial region showing reward PE activity is likely to correspond to a dorsal tier of dopamine neurons known to form mesolimbic connections projecting to VS regions (55) (SI Appendix, Fig. S6). By contrast, the ventrolateral region expressing effort PE activation is likely to be related to ventral tier dopamine neurons (46, 55) that form a mesocortical network targeting dmPFC and surrounding areas (46, 47).

Our computational model showed that learning about choice features exploits PE-like learning signals and, in so doing, extends previous models by integrating reward and effort learning (cf. 24, 70) with effort discounting (39–41). The effort PE encoded in dmPFC can be seen as representing an adjustment in belief about the height of the required effort threshold. It is interesting to speculate about the functional meaning of this PE signal, for example whether it is more likely to signal motivation or the cost of a stimulus. Our finding that effort PEs have an invigorating function favors the former notion, although we acknowledge we cannot say whether effort PEs would also promote avoidance if our task design had included an explicit option to default. External support for an invigorating function comes from related work showing that dopamine broadcasts a motivational signal (16, 71) that in turn influences vigor (72–74). Interestingly, direct phenomenological support for such a motivational signal comes from observations in human subjects undergoing electrical stimulation of cingulate cortex, who report a motivational urge and a determination to overcome effort (75).

VS has been suggested to encode the net benefit or integrated utility of a choice option (1, 30), in simple terms the value of a choice integrated over benefits (rewards) and costs (effort). The interaction between effort and reward expectations we observe in VS is consistent with encoding of an integrated net benefit signal, but in our case this occurs exclusively during anticipation. In accordance with our model, reward magnitude is less important in low-effort trials but assumes particular importance during high-effort trials. However, the absent reward effect in low-effort trials contrasts with studies that find reward-related signals in VS during cue presentation, although those studies imposed no effort requirement (e.g., 76). This deviation from previous findings might reflect that subjects in our task needed to exert effort before obtaining a reward. Nevertheless, the convergence between our model predictions and the interaction effect seen in VS supports the notion that a net benefit signal is formed at the time of action anticipation, when an overall stimulus value is important for preparing an appropriate motor output.

Effortful decision making is of considerable interest in the context of pathologies such as schizophrenia and may provide a quantitative metric of negative symptoms (28). An association between impaired effort arbitration and negative symptoms in patients with schizophrenia (e.g., 29) supports this conjecture, although it is unknown whether such an impairment is tied to prefrontal or striatal dysfunction. Within our volunteer sample, apathy was related to aberrant expression of learning signals in dmPFC but not in VS. This suggests that negative symptoms may be linked to a breakdown in the functional segregation between mesolimbic and mesocortical pathways, an idea that accords with evidence of apathetic behavior following ACC lesions (23).

We used a naturalistic task reflecting the fact that, in many environments, effort requirements are rarely explicit and are usually acquired by trial and error, whereas reward magnitudes are often learned from explicit feedback. Although this entails a difference in how feedback is presented for the two attributes, we consider it unlikely to have influenced the neural processes of interest, given that previous studies with balanced designs showed activations in regions remarkably similar to ours (e.g., 24, 25) and that prediction error signals are generally insensitive to outcome modality (primary/secondary reinforcer, magnitude/probabilistic feedback, learning/no learning) (e.g., 12, 36, 70, 77). Moreover, the specificity of the signals in VS and dmPFC for either reward or effort, including a modulation by expectation, favors a view that the responses observed in these regions reflect specific prediction error signals as opposed to simple feedback signals.

It is interesting to conjecture whether the spatial dissociation we observe for simultaneous learning of reward and effort also holds when subjects learn choice features at different times or learn about choice features other than reward and effort. Our finding of a mesolimbic network encoding reward PEs during simultaneous learning accords with results from reward-alone learning (e.g., 12). This, together with the known involvement of dmPFC in effort-related processes (24, 25, 40), renders it likely that the same pathways are exploited in unidimensional learning contexts. However, it remains unknown whether a similar spatial segregation is needed to learn about multiple forms of reward that are associated with VS activity (e.g., monetary, social).

In summary, we show that simultaneous learning about effort and reward involves dissociable mesolimbic and mesocortical pathways, with VS encoding a reward learning signal and dmPFC encoding an effort learning signal. Our data indicate that these PE signals arise within SN/VTA, where an overlapping, but segregated, topographical organization reflects distinct neural populations projecting to cortical and striatal regions, respectively. These segregated signals are integrated in VS, in line with an overall net benefit signal of an anticipated action.

Materials and Methods

Subjects.

Twenty-nine healthy, right-handed, male volunteers (age, 24.1 ± 4.5 y; range, 18 to 35 y) were recruited from local volunteer pools to take part in this experiment. All subjects were familiarized with the hand grippers and the task before entering the scanner (SI Appendix). Subjects were paid on an hourly basis plus a performance-dependent reimbursement. We focused on male subjects to minimize potential confounds observed in a pilot study, such as fatigue in high-force exertion trials. One subject was excluded from fMRI analysis due to equipment failure during scanning. The study was approved by the University College London (UCL) research ethics committee, and all subjects gave written informed consent.

Task.

The goal of this study was to investigate how humans simultaneously learn about reward and effort in an ecologically realistic manner. In the task (Fig. 1A), subjects were presented with one of two stimuli (duration, 1,000 ms). The stimuli (pseudorandomized, with no more than three consecutive presentations of the same stimulus) were indicative of a potential reward (1 to 7 points, 50% reward probability) and of an effort threshold (between 40 and 90% of maximal force) that needed to be surpassed in order to harvest a reward. Both points and effort thresholds changed slowly over time in a Gaussian random-walk–like manner (Fig. 1B), whereas the reward outcome probability remained stationary across the entire experiment; subjects were informed about this beforehand. These trajectories were constructed so that reward and effort were decorrelated, and indeed the realized correlation between effort and reward prediction errors was minimal. Moreover, independent trajectories for effort and reward allowed us to cover a wide range of combinations of effort and reward expectations, enabling us to comprehensively assess a reward–effort arbitration function. Thus, to master the task, subjects had to simultaneously learn both rewards and effort thresholds. After a jittered fixation cross (mean, 4,000 ms; uniformly distributed between 2,000 and 6,000 ms), subjects had to squeeze a force gripper with their right hand for 5,000 ms. During the first 1,000 ms, subjects increased their force to the desired level (as indicated by a horizontal thermometer; blue frame phase). During the last 4,000 ms, subjects maintained a constant force (red frame phase) and released the gripper as soon as the thermometer disappeared from the screen. After another jittered fixation cross (mean, 4,000 ms; range, 2,000–6,000 ms), subjects received feedback on whether, and how many, points they received for this trial (duration, 1,000 ms).
If the exerted effort was above the effort threshold, subjects received the points that were on display. If the subjects’ effort did not exceed the threshold, a cross appeared above the number on display, which indicated that the subject did not receive any points for that trial. More details about the task are provided in SI Appendix.
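The idea of decorrelated random-walk trajectories can be sketched as follows, assuming Gaussian steps clipped to the task ranges (1 to 7 points; 40 to 90% of maximal force) and a stationary 50% reward probability. Because two independent random walks are often spuriously correlated, this sketch resamples candidate pairs until their correlation is small. The helpers `random_walk` and `decorrelated_trajectories`, the step sizes, start values, trial count, tolerance, and seeds are all hypothetical; the actual sequences are described in SI Appendix.

```python
import numpy as np

def random_walk(n_trials, start, step_sd, lower, upper, rng):
    """Gaussian random walk, clipped to [lower, upper] at every step."""
    values = np.empty(n_trials)
    values[0] = start
    for t in range(1, n_trials):
        values[t] = np.clip(values[t - 1] + rng.normal(0.0, step_sd), lower, upper)
    return values

def decorrelated_trajectories(n_trials=80, max_abs_r=0.1, max_attempts=10_000, seed=1):
    """Rejection sampling: keep the first reward/effort pair with |r| <= max_abs_r."""
    rng = np.random.default_rng(seed)
    for _ in range(max_attempts):
        reward = np.rint(random_walk(n_trials, 4.0, 0.5, 1.0, 7.0, rng))  # whole points, 1 to 7
        effort = random_walk(n_trials, 0.65, 0.03, 0.40, 0.90, rng)       # fraction of max force
        if reward.std() > 0 and abs(np.corrcoef(reward, effort)[0, 1]) <= max_abs_r:
            return reward, effort
    raise RuntimeError("no sufficiently decorrelated pair found")

reward_points, effort_threshold = decorrelated_trajectories()
rng = np.random.default_rng(2)
reward_delivered = rng.random(reward_points.size) < 0.5  # stationary 50% reward probability
```

Rejection sampling is only one way to enforce decorrelation; the study reports the realized correlation between the resulting prediction errors, which is the quantity that matters for the fMRI analysis.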

Behavioral Analysis.

To assess the factors that influence effort execution (Fig. 1C), we used multiple regression to predict the exerted effort on each trial. As predictors, we entered the exerted effort and the number of points (displayed during feedback) on the previous trial, as well as whether the force threshold was successfully surpassed on the previous trial. Note that the previous trial was defined as the last trial on which the same stimulus was presented. The regression weights of the normalized predictors were obtained for each individual and then tested for consistency across subjects using t tests. To test how subjects changed their effort level depending on whether they surpassed the threshold (“success”), we analyzed the change in effort conditioned on success. For each subject, we calculated the average change in effort for success and nonsuccess trials and then tested consistency across subjects using t tests (Fig. 1D).
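The two-level logic (a per-subject regression on normalized predictors, followed by a one-sample t test of each weight across subjects) can be sketched as below. The simulated data, trial count, effect sizes, and the helper `subject_weights` are hypothetical, and the previous-trial arrays are assumed to be already aligned to the last presentation of the same stimulus.

```python
import numpy as np
from scipy import stats

def zscore(x):
    """Normalize a predictor to zero mean and unit variance."""
    return (x - x.mean()) / x.std()

def subject_weights(effort, prev_effort, prev_points, prev_success):
    """OLS weights of normalized previous-trial predictors on exerted effort (intercept dropped)."""
    X = np.column_stack([np.ones_like(effort), zscore(prev_effort),
                         zscore(prev_points), zscore(prev_success)])
    beta, *_ = np.linalg.lstsq(X, zscore(effort), rcond=None)
    return beta[1:]

rng = np.random.default_rng(0)
weights = []
for _ in range(28):  # one simulated subject per iteration
    n = 120  # hypothetical number of trials
    prev_effort = rng.uniform(0.4, 0.9, n)
    prev_points = rng.integers(1, 8, n).astype(float)
    prev_success = rng.integers(0, 2, n).astype(float)
    # Arbitrary simulated effects for illustration, not the study's results.
    effort = 0.6 * prev_effort - 0.05 * prev_success + rng.normal(0, 0.05, n)
    weights.append(subject_weights(effort, prev_effort, prev_points, prev_success))

# Second level: one-sample t test of each weight against zero across subjects.
t, p = stats.ttest_1samp(np.array(weights), 0.0, axis=0)
```

The order of `t` follows the predictor order: previous effort, previous points, previous success.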

Computational Modeling.

We developed novel computational reinforcement learning models (32) to formalize the processes underlying effort and reward learning in this task. All models were fitted to individual subjects’ behavior (executed effort at each trial), and a model comparison using the Bayesian information criterion was performed to select the best-fitting model (SI Appendix, Fig. S2). The preferred model was then used for the fMRI analysis. The models and model comparison are detailed in SI Appendix.
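For reference, the Bayesian information criterion penalizes the maximized log likelihood by the number of free parameters, BIC = k ln n − 2 ln L, where lower values indicate a better balance of fit and complexity. The sketch below sums per-subject BIC values, one common convention, and selects the model with the lowest total; the model names and log likelihoods are placeholders, not the models or fits reported in SI Appendix.

```python
import math

def bic(log_likelihood, n_params, n_obs):
    """Bayesian information criterion, k * ln(n) - 2 * ln(L); lower is better."""
    return n_params * math.log(n_obs) - 2.0 * log_likelihood

# Placeholder fits: maximized log likelihoods for two subjects with 200 trials each.
fits = {
    "model_A": {"n_params": 3, "loglik": [-150.0, -162.0]},
    "model_B": {"n_params": 5, "loglik": [-148.0, -160.0]},
}
n_trials = 200
total_bic = {
    name: sum(bic(ll, fit["n_params"], n_trials) for ll in fit["loglik"])
    for name, fit in fits.items()
}
best = min(total_bic, key=total_bic.get)
```

Here the two extra parameters of the placeholder `model_B` improve the log likelihood by only 2 per subject, which does not offset the added penalty of 2 ln 200 ≈ 10.6 per subject, so `model_A` is preferred.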

fMRI Data Acquisition and Preprocessing.

MRI data were acquired using a Siemens Trio 3-Tesla scanner equipped with a 32-channel head coil. We used an EPI sequence optimized for minimal signal dropout in striatal, medial prefrontal, and brainstem regions (78). Each volume comprised 40 slices with 3-mm isotropic voxels [repetition time (TR), 2.8 s; echo time (TE), 30 ms; slice tilt, −30°]. A total of 1,252 scans were acquired across all four sessions. The first 6 scans of each session were discarded to account for T1-saturation effects. Additionally, field maps (3-mm isotropic, whole-brain) were acquired to correct the EPIs for magnetic field inhomogeneities.

All functional and structural MRI analyses were performed using SPM12 (www.fil.ion.ucl.ac.uk). The EPIs were first realigned and unwarped using the field maps. EPIs were then coregistered to the subject-specific anatomical images and normalized using DARTEL-generated (79) flow fields, which resulted in a final voxel resolution of 1.5 mm (standard size for DARTEL normalization). For the main analysis, the normalized EPIs were smoothed with a 6-mm FWHM kernel to satisfy the smoothness assumptions of the statistical correction algorithms. For the gradient analysis of SN/VTA (“unsmoothed analysis”), we used a small smoothing kernel of 1 mm to preserve more of the voxel-specific signals. We applied this very small smoothing kernel rather than no kernel to prevent aliasing artifacts that naturally arise from the DARTEL-normalization procedure.
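Smoothing kernels are specified by their full width at half maximum (FWHM), which relates to the Gaussian standard deviation by FWHM = 2√(2 ln 2) σ ≈ 2.355 σ. A small sketch converting the two kernels mentioned here into voxel units on the 1.5-mm DARTEL grid and applying one with `scipy.ndimage.gaussian_filter`; this illustrates the arithmetic and is not SPM's own smoothing implementation.

```python
import math
import numpy as np
from scipy.ndimage import gaussian_filter

def fwhm_to_sigma_vox(fwhm_mm, voxel_mm):
    """Convert a kernel FWHM in mm to a Gaussian standard deviation in voxels."""
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0))) / voxel_mm

voxel_mm = 1.5  # DARTEL-normalized resolution
sigma_main = fwhm_to_sigma_vox(6.0, voxel_mm)      # kernel for the main analysis
sigma_gradient = fwhm_to_sigma_vox(1.0, voxel_mm)  # minimal kernel for SN/VTA gradients

volume = np.zeros((21, 21, 21))
volume[10, 10, 10] = 1.0  # a single active voxel
smoothed = gaussian_filter(volume, sigma=sigma_main)  # spreads signal; total mass is preserved
```

At 1.5-mm voxels, the 1-mm kernel corresponds to σ ≈ 0.28 voxel, so it mixes very little signal between neighboring voxels, consistent with the aim of preserving voxel-specific information in the gradient analysis.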

fMRI Data Analysis.

The main goal of the fMRI analysis was to determine the brain regions that track reward and effort prediction errors. To this end, we used the winning computational model and extracted the model predictions for each trial. To derive the model predictions, we used the average parameter estimates across all subjects, similar to previous studies (43, 80–84). This yields more regularized predictions and avoids introducing subject-specific biases. At the time of feedback, we entered four parametric modulators: effort PEs, reward PEs, absolute effort PEs, and absolute reward PEs. For all analyses, we normalized the parametric modulators beforehand and disabled the orthogonalization procedure in SPM (correlations between regressors are shown in SI Appendix, Fig. S4). This means that all parametric modulators compete for variance, and we thus only report effects that are uniquely attributable to a given regressor. The sign of the PE regressors was set so that a positive reward PE means that a reward is better than expected, and a positive effort PE means that the threshold is higher than expected (more effort is needed). The task sequences were designed to minimize the correlation between effort and reward PEs, as well as between the expectation and outcome components within each PE (effort PE: r = 0.087 ± 0.105; reward PE: r = −0.002 ± 0.109), and thus to maximize the sensitivity of our analyses. To control for other task events, we added the following regressors as nuisance covariates: stimulus presentation with parametric modulators for expected reward, expected effort, the expected reward–effort interaction, and stimulus identity. To control for movement-related artifacts, we also modeled the force-execution period (block duration, 5,000 ms) with executed effort as a parametric modulator. Moreover, we regressed out movement-related variance using the realignment parameters, as well as pulsatile and breathing artifacts (85–88).
Each run was modeled as a separate session to account for offset differences in signal intensity.
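The sign conventions and the normalization of the four feedback-phase modulators can be sketched with a generic delta rule (Rescorla–Wagner) standing in for the study's model, which is described in SI Appendix. The simulated points and thresholds, the learning rate, the starting expectations, and the helper `delta_rule_pes` are hypothetical; in particular, this idealized learner observes the effort threshold directly, whereas subjects in the task only saw whether they succeeded.

```python
import numpy as np

def delta_rule_pes(outcomes, alpha, v0):
    """Delta-rule prediction errors: PE_t = outcome_t - V_t, then V_(t+1) = V_t + alpha * PE_t."""
    v = v0
    pes = np.empty(len(outcomes))
    for t, o in enumerate(outcomes):
        pes[t] = o - v
        v += alpha * pes[t]
    return pes

def zscore(x):
    return (x - x.mean()) / x.std()

rng = np.random.default_rng(3)
points = rng.integers(1, 8, 100)  # hypothetical displayed points per trial
thresholds = np.clip(0.65 + np.cumsum(rng.normal(0, 0.02, 100)), 0.4, 0.9)

# Sign conventions from the text: reward PE > 0 means better than expected;
# effort PE > 0 means the threshold was higher than expected (more effort needed).
reward_pe = delta_rule_pes(points.astype(float), alpha=0.3, v0=2.0)
effort_pe = delta_rule_pes(thresholds, alpha=0.3, v0=0.65)

# Four normalized parametric modulators, entered without orthogonalization.
modulators = np.column_stack([zscore(effort_pe), zscore(reward_pe),
                              zscore(np.abs(effort_pe)), zscore(np.abs(reward_pe))])
print(np.round(np.corrcoef(modulators, rowvar=False), 2))
```

Inspecting the correlation matrix of the non-orthogonalized modulators is a quick check of how much variance the regressors share before they compete in the GLM.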

At the second level, we used the standard summary statistics approach in SPM (89) and computed the consistency across all subjects. We used whole-brain family-wise error (FWE) correction at P < 0.05 to correct for multiple comparisons (unless stated otherwise), using settings that have been shown not to inflate false-positive rates (90, 91). We examined the effect of each regressor of interest (effort, reward PE) using a one-sample t test to identify the regions in which the regressor was represented. Subsequent analyses (Fig. 2 B, C, E, and F) were performed on the peak voxel in the given area. Prediction errors were compared using paired t tests. To assess the effect of effort and reward PEs on SN/VTA, we used the same generalized linear model, applying small-volume FWE correction (uncorrected threshold P < 0.001) based on our anatomical SN/VTA mask (see below), similar to previous studies (e.g., 92, 93).

For the analysis of the cue phase, we extracted responses from a VS ROI and then assessed the impact of our model-derived predictors: expected effort, expected reward, and their interaction.
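A minimal single-subject sketch of this cue-phase ROI model: VS responses regressed on z-scored expected reward, expected effort, and their interaction, followed by a median split on expected effort that estimates the reward slope separately in high- and low-effort trials. The simulated responses, effect sizes, and trial count are hypothetical; across subjects, each weight would then be tested with a one-sample t test.

```python
import numpy as np

def zscore(x):
    return (x - x.mean()) / x.std()

rng = np.random.default_rng(4)
n = 160  # hypothetical number of cue presentations
exp_reward = zscore(rng.uniform(1, 7, n))
exp_effort = zscore(rng.uniform(0.4, 0.9, n))
interaction = zscore(exp_reward * exp_effort)
# Simulated VS cue response with an arbitrary reward main effect and reward x effort interaction.
vs = 0.5 * exp_reward + 0.4 * exp_reward * exp_effort + rng.normal(0, 1, n)

X = np.column_stack([np.ones(n), exp_reward, exp_effort, interaction])
beta, *_ = np.linalg.lstsq(X, vs, rcond=None)  # intercept, reward, effort, reward x effort

# Median split on expected effort: reward slope within high- and low-effort trials.
high = exp_effort > np.median(exp_effort)
slopes = {}
for label, mask in [("high effort", high), ("low effort", ~high)]:
    slopes[label] = np.polyfit(exp_reward[mask], vs[mask], 1)[0]
```

A positive interaction weight and a steeper reward slope in high-effort trials is the pattern that the arbitration model predicts.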

Effective Connectivity Analysis.

To assess whether PE signals are more likely to be projected from SN/VTA to (sub)cortical areas or vice versa, we ran an effective connectivity analysis using dynamic causal modeling (DCM) (53). DCM allows the experimenter to specify, estimate, and compare biophysically plausible models of spatiotemporally distributed brain networks. In the case of fMRI, generative models are specified that describe how neuronal circuitry causes the BOLD response, which in turn elicits the measured fMRI time series. Bayesian model selection (94) is used to determine which of the competing models best explains the data (in terms of balancing accuracy and complexity), drawing upon the slow emergent dynamics that result from fast neuronal interactions [referred to as the slaving principle (89)].
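For orientation, the neuronal state equation of standard bilinear DCM for fMRI is reproduced below in its usual notation (not the article's own): z collects the neuronal states of the modeled regions (here SN/VTA, VS, and dmPFC), A holds the fixed connection strengths, B^(j) the change in those connections induced by the modulatory input u_j (for example, a trial-wise PE), and C the influence of driving inputs such as feedback onset. A hemodynamic model then maps z to the predicted BOLD signal.

```latex
\dot{z}(t) = \Big( A + \sum_{j} u_j(t)\, B^{(j)} \Big)\, z(t) + C\, u(t)
```

In these terms, the models compared here differ only in which entries of the B matrices for reward and effort PEs are allowed to be nonzero; in an ascending model, for instance, the reward-PE matrix would have a nonzero entry only for the connection from SN/VTA to VS.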

We compared several models, all consisting of three regions: SN/VTA (using the anatomical ROI), VS, and dmPFC (both defined using the functional contrasts). As driving input, we used the onset of feedback, which stimulated all three nodes. We assumed bidirectional connections between SN/VTA and the dmPFC/VS regions, reflecting their well-known bidirectional communication. The models differed in how PEs influenced these connections. Based on the assumption that PEs are computed in the originating brain structure and influence the downstream brain region, we tested whether PEs modulated the connections originating from SN/VTA or those targeting it. The same approach (PEs modulating intrinsic connections) was used in previous studies investigating the effects of PEs on effective connectivity (e.g., 95, 96). We compared six models in total. In the winning ascending model, reward and effort PEs [each modulating only the connection to its (sub)cortical target region] modulated the ascending connections (e.g., reward PEs modulated connectivity from SN/VTA to VS). In the descending model, PEs modulated the descending connections from VS and dmPFC to SN/VTA. Additional models tested whether having only one ascending modulation (either effort or reward PEs), or one ascending and one descending modulation, fitted the data better. DCMs were fitted for each subject and run separately, and Bayesian random-effects comparison (94) was used for model comparison.
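Random-effects Bayesian model selection treats the model that generated each subject's data as a random variable and infers a Dirichlet distribution over model frequencies from per-subject log evidences. The sketch below follows the published variational scheme (Stephan et al., 2009) and estimates exceedance probabilities by sampling; it is not SPM's own code, and the log evidences for 28 subjects and six models are simulated, with model 0 arbitrarily favored.

```python
import numpy as np
from scipy.special import digamma

def random_effects_bms(log_evidence, max_iter=1000, tol=1e-8, n_samples=100_000, seed=0):
    """Variational random-effects BMS.

    log_evidence: array (n_subjects, n_models) of log model evidences.
    Returns Dirichlet parameters, expected model frequencies, and exceedance probabilities.
    """
    lme = np.asarray(log_evidence, dtype=float)
    n_models = lme.shape[1]
    alpha0 = np.ones(n_models)  # flat Dirichlet prior over model frequencies
    alpha = alpha0.copy()
    for _ in range(max_iter):
        log_u = lme + digamma(alpha) - digamma(alpha.sum())
        log_u -= log_u.max(axis=1, keepdims=True)  # numerical stability
        g = np.exp(log_u)
        g /= g.sum(axis=1, keepdims=True)  # posterior model assignment per subject
        alpha_new = alpha0 + g.sum(axis=0)
        converged = np.max(np.abs(alpha_new - alpha)) < tol
        alpha = alpha_new
        if converged:
            break
    expected_freq = alpha / alpha.sum()
    samples = np.random.default_rng(seed).dirichlet(alpha, size=n_samples)
    exceedance = np.bincount(samples.argmax(axis=1), minlength=n_models) / n_samples
    return alpha, expected_freq, exceedance

rng = np.random.default_rng(5)
lme = rng.normal(0, 1, (28, 6))  # simulated log evidences, not the study's values
lme[:, 0] += 3.0                  # hypothetical: model 0 favored in most subjects
alpha, r, xp = random_effects_bms(lme)
```

The exceedance probability `xp[k]` is the posterior probability that model k is more frequent in the population than any other model.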

fMRI Analysis of SN/VTA Gradients.

For the analysis of the SN/VTA gradients with the unsmoothed data, the model was identical to the one above, except that the feedback on each trial was modeled as a separate regressor. This allowed us to obtain an estimate of the BOLD response separately for each trial (necessary for the functional connectivity analysis), while retaining the same main effects as in standard mass-univariate analyses (cf. 37). These responses were then used to perform our gradient analyses.

We performed two SN/VTA gradient analyses with the functional data. For the PE analysis, we used the model-derived PEs (as described above) to estimate the effects of effort and reward PEs on each voxel of our anatomically defined SN/VTA mask. We then calculated t tests for each voxel at the second level, using the beta coefficients of all subjects. As we were interested in whether there is a spatial dissociation/gradient between the two PE types, we then calculated the difference of the absolute t values between the two prediction errors for each voxel separately. This metric measures whether a voxel was more predictive of effort or reward PEs. To ensure that we only used voxels with some response to the PEs, we discarded voxels with an absolute t value < 1 for both prediction errors. We used absolute t values for our contrast to account for potential negative encoding.
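The voxelwise preference metric can be sketched as follows: a second-level one-sample t test per voxel for each PE type, the difference of absolute t values (effort minus reward), and removal of voxels with |t| < 1 for both PEs. The helper `pe_preference_map` and the three-voxel simulated data are hypothetical.

```python
import numpy as np
from scipy import stats

def pe_preference_map(effort_betas, reward_betas, min_abs_t=1.0):
    """|t| difference (effort minus reward) per SN/VTA voxel.

    effort_betas, reward_betas: arrays (n_subjects, n_voxels) of first-level PE weights.
    Voxels with |t| < min_abs_t for both PE types are returned as NaN (discarded).
    """
    t_effort = stats.ttest_1samp(effort_betas, 0.0, axis=0).statistic
    t_reward = stats.ttest_1samp(reward_betas, 0.0, axis=0).statistic
    diff = np.abs(t_effort) - np.abs(t_reward)
    responsive = (np.abs(t_effort) >= min_abs_t) | (np.abs(t_reward) >= min_abs_t)
    return np.where(responsive, diff, np.nan)

rng = np.random.default_rng(6)
n_subjects = 28
null_col = np.tile([1.0, -1.0], n_subjects // 2)  # mean exactly zero, so t = 0
# Voxel 0 responds to effort PEs, voxel 1 to reward PEs, voxel 2 to neither (simulated).
effort_betas = np.column_stack([1.0 + rng.normal(0, 0.5, n_subjects), null_col, null_col])
reward_betas = np.column_stack([null_col, 1.0 + rng.normal(0, 0.5, n_subjects), null_col])
pref = pe_preference_map(effort_betas, reward_betas)
```

Positive values mark effort-preferring voxels, negative values reward-preferring voxels, and NaN marks voxels excluded from the gradient fit.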

To calculate the gradients, we used a multiple regression approach to predict the t-value differences (e.g., effort − reward PE). As predictors, we used the voxel location in a ventral–dorsal gradient and a voxel location in a medial–lateral gradient. Both gradients entered the regression, together with a nuisance intercept. This analysis resulted in a beta weight for each of the gradients, which indicates whether the effect of the prediction errors follows a spatial gradient or not. We obtained the 95% confidence intervals of the beta weights and calculated the statistical significance using permutation tests (10,000 iterations; randomly permuting the spatial coordinates of each voxel).
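A sketch of the gradient regression and its permutation test, assuming the voxel map has already had discarded (NaN) voxels removed. The helpers `gradient_betas` and `gradient_permutation_test` are hypothetical; coordinates are permuted jointly per voxel (keeping each voxel's ventral–dorsal and medial–lateral pair together), which is one reading of "randomly permuting the spatial coordinates of each voxel". The confidence intervals mentioned in the text are not computed here.

```python
import numpy as np

def gradient_betas(values, dorsal, lateral):
    """OLS weights of a voxel map on ventral-dorsal and medial-lateral coordinates (plus intercept)."""
    X = np.column_stack([np.ones_like(values), dorsal, lateral])
    beta, *_ = np.linalg.lstsq(X, values, rcond=None)
    return beta[1:]

def gradient_permutation_test(values, dorsal, lateral, n_perm=10_000, seed=0):
    """Two-sided permutation p values obtained by shuffling voxel coordinates."""
    rng = np.random.default_rng(seed)
    observed = gradient_betas(values, dorsal, lateral)
    coords = np.column_stack([dorsal, lateral])
    null = np.empty((n_perm, 2))
    for i in range(n_perm):
        perm = coords[rng.permutation(len(values))]
        null[i] = gradient_betas(values, perm[:, 0], perm[:, 1])
    p = (1 + (np.abs(null) >= np.abs(observed)).sum(axis=0)) / (1 + n_perm)
    return observed, p

rng = np.random.default_rng(7)
dorsal = rng.uniform(-1, 1, 200)   # simulated ventral-to-dorsal coordinate
lateral = rng.uniform(-1, 1, 200)  # simulated medial-to-lateral coordinate
values = 0.8 * dorsal + rng.normal(0, 0.5, 200)  # simulated |t| differences with a dorsal gradient
observed, p = gradient_permutation_test(values, dorsal, lateral, n_perm=2000)
```

The usage example uses 2,000 permutations for speed; the analysis described in the text used 10,000.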

For the second, functional connectivity analysis, we used the same pipeline. However, instead of model-derived prediction errors, we used the trial-wise BOLD response from dmPFC and bilateral VS (mean activation across the entire ROI). The ROIs were determined based on task main effects (dmPFC based on effort PEs, VS based on reward PEs, both thresholded at PFWE < 0.05). To ensure that this analysis did not reflect task effects, we regressed out the task effects (reward/effort PEs) before the main analysis.
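Regressing out task effects amounts to keeping the residuals of a least-squares fit on the PE regressors. A minimal sketch, applied here to a simulated trial-wise dmPFC series; whether the residualization was applied to the ROI series, to the SN/VTA voxel responses, or to both is not specified in the text, so that choice is an assumption, as is the helper name `residualize`.

```python
import numpy as np

def residualize(y, confounds):
    """Remove the least-squares fit of the confounds (plus an intercept) from y."""
    X = np.column_stack([np.ones(len(y)), confounds])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return y - X @ beta

rng = np.random.default_rng(7)
n_trials = 200
effort_pe = rng.normal(size=n_trials)
reward_pe = rng.normal(size=n_trials)
dmpfc = 0.7 * effort_pe + rng.normal(size=n_trials)  # simulated trial-wise ROI response
dmpfc_clean = residualize(dmpfc, np.column_stack([effort_pe, reward_pe]))
```

The residual series is uncorrelated with both PE regressors by construction, so any remaining coupling with SN/VTA voxels cannot be driven by shared task effects that are linear in these regressors.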

We found similar effects when using beta weights (which do not take measurement uncertainty into account) instead of t values, and also when including all voxels, irrespective of whether they responded to either PE. Similar results were also obtained using a summary statistics approach, in which spatial gradients were obtained for each subject individually.

Predicting Apathy Through BOLD Responses.

To assess whether self-reported apathy, a potential reflection of nonclinical negative symptoms (27), was related to neural responses in our task, we tested whether apathy could be predicted from task-related activation. Apathy was assessed using a self-report version of the Apathy Evaluation Scale (65) (data missing from one subject). We used the total scores as the dependent variable in a fivefold cross-validated regression (cf. 84, 97). To assess whether apathy was more closely linked to dmPFC or VS activation, we used the mean activation in the given ROI (thresholded at P < 0.05 FWE, as in previous analyses), including both effort and reward prediction error signals at the time of outcome. To assess prediction accuracy, we calculated the L2 norm between predicted and true apathy scores across all subjects (cf. 97). To establish a statistical null distribution, we ran permutation tests by randomly shuffling the PE responses. To assess whether the VS predictors improved a dmPFC prediction, we compared the predictive performance of the dmPFC model with that of an extended model including VS activations as additional predictors. Permutation tests (permuting the additional regressors) were again used to assess the significance of the difference between the dmPFC and the full model.
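The cross-validated prediction and its permutation test can be sketched as follows: fivefold ordinary least squares, the L2 norm between held-out predictions and true scores (lower is better), and a null distribution obtained by shuffling the PE responses across subjects. The simulated scores and predictors and the helpers `cv_prediction_error` and `permutation_p` are hypothetical; the regression model used in the study may differ (cf. 97).

```python
import numpy as np

def cv_prediction_error(X, y, n_folds=5, seed=0):
    """L2 norm between held-out predictions and true scores from k-fold cross-validated OLS."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), n_folds)
    pred = np.empty(len(y))
    for test_idx in folds:
        train = np.setdiff1d(np.arange(len(y)), test_idx)
        A = np.column_stack([np.ones(len(train)), X[train]])
        beta, *_ = np.linalg.lstsq(A, y[train], rcond=None)
        pred[test_idx] = np.column_stack([np.ones(len(test_idx)), X[test_idx]]) @ beta
    return np.linalg.norm(pred - y)

def permutation_p(X, y, n_perm=1000, seed=0):
    """Null distribution by shuffling predictor rows (PE responses) across subjects."""
    rng = np.random.default_rng(seed)
    observed = cv_prediction_error(X, y)
    null = np.array([cv_prediction_error(X[rng.permutation(len(y))], y) for _ in range(n_perm)])
    return observed, (1 + np.sum(null <= observed)) / (1 + n_perm)

rng = np.random.default_rng(8)
n_subjects = 27  # AES total scores available for 27 of the 28 scanned subjects
X = rng.normal(size=(n_subjects, 2))  # columns: effort PE and reward PE responses (simulated)
y = 30 + 5 * X[:, 0] + 5 * X[:, 1] + rng.normal(0, 2, n_subjects)  # simulated AES scores
observed, p = permutation_p(X, y)
```

The fold assignment is fixed by `seed`, so observed and permuted predictions are evaluated on identical splits. The extended-model comparison described above would permute only the additional VS columns.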

Structural MRI Data Acquisition and Analysis.

Structural images were acquired using quantitative multiparameter maps (MPMs) in a 3D multiecho fast low-angle shot (FLASH) sequence with a resolution of 1-mm isotropic voxels (98). Magnetization transfer (MT) images were used for gray matter quantification, as they are particularly sensitive in subcortical regions (99). In total, three different FLASH sequences were acquired with different weightings: predominantly MT (TR/α, 23.7 ms/6°; off-resonance Gaussian MT pulse of 4-ms duration preceding excitation, 2-kHz frequency offset, 220° nominal flip angle), proton density (23.7 ms/6°), and T1 weighting (18.7 ms/20°) (100). To increase the signal-to-noise ratio, we averaged signals of six equidistant bipolar gradient echoes (TE, 2.2 to 14.7 ms). To calculate the semiquantitative MT maps, we used mean signal amplitudes and additional T1 maps (101) and eliminated influences of B1 inhomogeneity and relaxation effects (102).

To normalize functional and structural maps, we segmented the MT maps (using heavy bias regularization to account for the quantitative nature of MPMs), and generated flow fields using DARTEL (79) with the standard settings for SPM12. The flow fields were then used for normalizing functional as well as structural images. For the normalization of the structural images (MT), we used the VBQ toolbox in SPM12 with an isotropic Gaussian smoothing kernel of 3 mm.

To investigate anatomical links between SN/VTA, VS, and dmPFC, we performed a structural covariance analysis based on voxel-based morphometry (58). The approach assumes that brain regions that are anatomically and functionally related (e.g., form a common network) should covary in gray matter density across subjects. This means that subjects with greater gray matter density in dmPFC should also express greater gray matter density in ventrolateral SN/VTA, possibly reflecting genetic, developmental, or environmental influences (59). We used the segmented, normalized gray matter MT maps and applied the Jacobian of the normalization step to preserve total tissue volume (103), as reported in a previous study (84). To account for differences in global brain volume, we calculated the total intracranial volume and used it as a nuisance regressor in the analysis. For each subject, we extracted the mean gray matter density in dmPFC and bilateral VS (masks derived from functional contrasts, thresholded at PFWE < 0.05; see above). Additionally, we extracted the gray matter density of each voxel in our SN/VTA mask. We then estimated the effects of dmPFC and VS gray matter in a linear regression model predicting the gray matter density of every voxel in SN/VTA. Similar to our functional analysis, we calculated the difference of the t values for dmPFC and VS for each voxel (dmPFC − VS). These differences were then entered into the same spatial gradient analysis as described above.
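The per-voxel structural covariance model can be sketched as a mass-univariate regression of SN/VTA gray matter on dmPFC gray matter, VS gray matter, and total intracranial volume (TIV), followed by the dmPFC − VS difference of t values. The helper `ols_t_values` and the two-voxel simulated data are hypothetical.

```python
import numpy as np

def ols_t_values(X, y):
    """t statistics of OLS coefficients for design X (n_obs, n_params) and data y (n_obs, n_voxels)."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    dof = X.shape[0] - np.linalg.matrix_rank(X)
    sigma2 = (resid ** 2).sum(axis=0) / dof                # residual variance per voxel
    xtx_inv_diag = np.diag(np.linalg.inv(X.T @ X))
    se = np.sqrt(np.outer(xtx_inv_diag, sigma2))           # standard errors (n_params, n_voxels)
    return beta / se

rng = np.random.default_rng(9)
n = 28
dmpfc_gm, vs_gm, tiv = rng.normal(size=(3, n))  # simulated regional gray matter and TIV
# Voxel 0 covaries with dmPFC, voxel 1 with VS (simulated SN/VTA gray matter).
snvta = np.column_stack([0.8 * dmpfc_gm + rng.normal(0, 0.5, n),
                         0.8 * vs_gm + rng.normal(0, 0.5, n)])
X = np.column_stack([np.ones(n), dmpfc_gm, vs_gm, tiv])  # intercept, dmPFC, VS, TIV nuisance
t = ols_t_values(X, snvta)
diff = t[1] - t[2]  # dmPFC minus VS, per SN/VTA voxel
```

The resulting `diff` map plays the same role as the functional |t| difference map and can be passed to the same gradient regression.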

For all our SN/VTA analyses, we used a manually drawn anatomical region of interest in MRIcron (104). We used the mean structural MT image where SN/VTA can be easily distinguished from surrounding areas as a bright white stripe (45), similar to previous studies (92, 93).

Data Availability.

Imaging results are available online at neurovault.org/collections/IAYMWZIY/.

Supplementary Material

Acknowledgments

We thank Francesco Rigoli, Peter Zeidman, Philipp Schwartenbeck, and Gabriel Ziegler for helpful discussions. We also thank Peter Dayan and Laurence Hunt for comments on an earlier version of the manuscript. We thank Francesca Hauser-Piatti for creating graphical stimuli and illustrations. Finally, we thank Al Reid for providing the force grippers. A Wellcome Trust Cambridge-UCL Mental Health and Neurosciences Network grant (095844/Z/11/Z) supported all authors. R.J.D. holds a Wellcome Trust Senior Investigator award (098362/Z/12/Z). The Max Planck UCL Centre is a joint initiative supported by UCL and the Max Planck Society. The Wellcome Trust Centre for Neuroimaging is supported by core funding from the Wellcome Trust (091593/Z/10/Z).

Footnotes

The editor also holds a joint appointment with University College London.

This article is a PNAS Direct Submission. R.M. is a guest editor invited by the Editorial Board.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1705643114/-/DCSupplemental.

References

- 1. Phillips PEM, Walton ME, Jhou TC. Calculating utility: Preclinical evidence for cost-benefit analysis by mesolimbic dopamine. Psychopharmacology (Berl). 2007;191:483–495. doi: 10.1007/s00213-006-0626-6.

- 2. Croxson PL, Walton ME, O’Reilly JX, Behrens TEJ, Rushworth MFS. Effort-based cost-benefit valuation and the human brain. J Neurosci. 2009;29:4531–4541. doi: 10.1523/JNEUROSCI.4515-08.2009.

- 3. Walton ME, Kennerley SW, Bannerman DM, Phillips PEM, Rushworth MFS. Weighing up the benefits of work: Behavioral and neural analyses of effort-related decision making. Neural Netw. 2006;19:1302–1314. doi: 10.1016/j.neunet.2006.03.005.

- 4. Zhou QY, Palmiter RD. Dopamine-deficient mice are severely hypoactive, adipsic, and aphagic. Cell. 1995;83:1197–1209. doi: 10.1016/0092-8674(95)90145-0.

- 5. Homer. The Odyssey. Reprint Ed. Penguin Classics; New York: 1996.

- 6. Linares OF. “Garden hunting” in the American tropics. Hum Ecol. 1976;4:331–349.

- 7. Rosenberg DK, McKelvey KS. Estimation of habitat selection for central-place foraging animals. J Wildl Manage. 1999;63:1028–1038.

- 8. Kolling N, Behrens TEJ, Mars RB, Rushworth MFS. Neural mechanisms of foraging. Science. 2012;336:95–98. doi: 10.1126/science.1216930.

- 9. Hull C. Principles of Behavior: An Introduction to Behavior Theory. Appleton-Century; Oxford: 1943.

- 10. Montague PR, Dayan P, Sejnowski TJ. A framework for mesencephalic dopamine systems based on predictive Hebbian learning. J Neurosci. 1996;16:1936–1947. doi: 10.1523/JNEUROSCI.16-05-01936.1996.

- 11. Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275:1593–1599. doi: 10.1126/science.275.5306.1593.

- 12. Pessiglione M, Seymour B, Flandin G, Dolan RJ, Frith CD. Dopamine-dependent prediction errors underpin reward-seeking behaviour in humans. Nature. 2006;442:1042–1045. doi: 10.1038/nature05051.

- 13. Ferenczi EA, et al. Prefrontal cortical regulation of brainwide circuit dynamics and reward-related behavior. Science. 2016;351:aac9698. doi: 10.1126/science.aac9698.

- 14. Floresco SB, Tse MTL, Ghods-Sharifi S. Dopaminergic and glutamatergic regulation of effort- and delay-based decision making. Neuropsychopharmacology. 2008;33:1966–1979. doi: 10.1038/sj.npp.1301565.

- 15. Schweimer J, Hauber W. Dopamine D1 receptors in the anterior cingulate cortex regulate effort-based decision making. Learn Mem. 2006;13:777–782. doi: 10.1101/lm.409306.

- 16. Salamone JD, Correa M. The mysterious motivational functions of mesolimbic dopamine. Neuron. 2012;76:470–485. doi: 10.1016/j.neuron.2012.10.021.

- 17. Denk F, et al. Differential involvement of serotonin and dopamine systems in cost-benefit decisions about delay or effort. Psychopharmacology (Berl). 2005;179:587–596. doi: 10.1007/s00213-004-2059-4.

- 18. Salamone JD, Cousins MS, Bucher S. Anhedonia or anergia? Effects of haloperidol and nucleus accumbens dopamine depletion on instrumental response selection in a T-maze cost/benefit procedure. Behav Brain Res. 1994;65:221–229. doi: 10.1016/0166-4328(94)90108-2.

- 19. Walton ME, et al. Comparing the role of the anterior cingulate cortex and 6-hydroxydopamine nucleus accumbens lesions on operant effort-based decision making. Eur J Neurosci. 2009;29:1678–1691. doi: 10.1111/j.1460-9568.2009.06726.x.

- 20. Schweimer J, Saft S, Hauber W. Involvement of catecholamine neurotransmission in the rat anterior cingulate in effort-related decision making. Behav Neurosci. 2005;119:1687–1692. doi: 10.1037/0735-7044.119.6.1687.

- 21. Walton ME, Bannerman DM, Rushworth MFS. The role of rat medial frontal cortex in effort-based decision making. J Neurosci. 2002;22:10996–11003. doi: 10.1523/JNEUROSCI.22-24-10996.2002.

- 22. Walton ME, Bannerman DM, Alterescu K, Rushworth MFS. Functional specialization within medial frontal cortex of the anterior cingulate for evaluating effort-related decisions. J Neurosci. 2003;23:6475–6479. doi: 10.1523/JNEUROSCI.23-16-06475.2003.

- 23. Rudebeck PH, Walton ME, Smyth AN, Bannerman DM, Rushworth MFS. Separate neural pathways process different decision costs. Nat Neurosci. 2006;9:1161–1168. doi: 10.1038/nn1756.

- 24. Skvortsova V, Palminteri S, Pessiglione M. Learning to minimize efforts versus maximizing rewards: Computational principles and neural correlates. J Neurosci. 2014;34:15621–15630. doi: 10.1523/JNEUROSCI.1350-14.2014.

- 25. Scholl J, et al. The good, the bad, and the irrelevant: Neural mechanisms of learning real and hypothetical rewards and effort. J Neurosci. 2015;35:11233–11251. doi: 10.1523/JNEUROSCI.0396-15.2015.

- 26. Elert E. Aetiology: Searching for schizophrenia’s roots. Nature. 2014;508:S2–S3. doi: 10.1038/508S2a.

- 27. Marin RS. Apathy: A neuropsychiatric syndrome. J Neuropsychiatry Clin Neurosci. 1991;3:243–254. doi: 10.1176/jnp.3.3.243.

- 28. Green MF, Horan WP, Barch DM, Gold JM. Effort-based decision making: A novel approach for assessing motivation in schizophrenia. Schizophr Bull. 2015;41:1035–1044. doi: 10.1093/schbul/sbv071.

- 29. Hartmann MN, et al. Apathy but not diminished expression in schizophrenia is associated with discounting of monetary rewards by physical effort. Schizophr Bull. 2015;41:503–512. doi: 10.1093/schbul/sbu102.

- 30. Salamone JD, et al. The pharmacology of effort-related choice behavior: Dopamine, depression, and individual differences. Behav Processes. 2016;127:3–17. doi: 10.1016/j.beproc.2016.02.008.

- 31. Rescorla RA, Wagner AR. A theory of Pavlovian conditioning: Variations in the effectiveness of reinforcement and nonreinforcement. In: Black AH, Prokasy WF, editors. Classical Conditioning II: Current Research and Theory. Appleton-Century-Crofts; New York: 1972. pp. 64–99.

- 32. Sutton RS, Barto AG. Reinforcement Learning: An Introduction. MIT Press; Cambridge, MA: 1998.

- 33. Enomoto K, et al. Dopamine neurons learn to encode the long-term value of multiple future rewards. Proc Natl Acad Sci USA. 2011;108:15462–15467. doi: 10.1073/pnas.1014457108.

- 34. Kishida KT, et al. Subsecond dopamine fluctuations in human striatum encode superposed error signals about actual and counterfactual reward. Proc Natl Acad Sci USA. 2016;113:200–205. doi: 10.1073/pnas.1513619112.

- 35. Daw ND, Gershman SJ, Seymour B, Dayan P, Dolan RJ. Model-based influences on humans’ choices and striatal prediction errors. Neuron. 2011;69:1204–1215. doi: 10.1016/j.neuron.2011.02.027.

- 36. Chowdhury R, et al. Dopamine restores reward prediction errors in old age. Nat Neurosci. 2013;16:648–653. doi: 10.1038/nn.3364.

- 37. Hauser TU, et al. Temporally dissociable contributions of human medial prefrontal subregions to reward-guided learning. J Neurosci. 2015;35:11209–11220. doi: 10.1523/JNEUROSCI.0560-15.2015.

- 38. Bishop C. Pattern Recognition and Machine Learning. Springer; New York: 2007.

- 39. Klein-Flügge MC, Kennerley SW, Saraiva AC, Penny WD, Bestmann S. Behavioral modeling of human choices reveals dissociable effects of physical effort and temporal delay on reward devaluation. PLoS Comput Biol. 2015;11:e1004116. doi: 10.1371/journal.pcbi.1004116.

- 40. Klein-Flügge MC, Kennerley SW, Friston K, Bestmann S. Neural signatures of value comparison in human cingulate cortex during decisions requiring an effort-reward trade-off. J Neurosci. 2016;36:10002–10015. doi: 10.1523/JNEUROSCI.0292-16.2016.

- 41. Hartmann MN, Hager OM, Tobler PN, Kaiser S. Parabolic discounting of monetary rewards by physical effort. Behav Processes. 2013;100:192–196. doi: 10.1016/j.beproc.2013.09.014.

- 42. Rutledge RB, Dean M, Caplin A, Glimcher PW. Testing the reward prediction error hypothesis with an axiomatic model. J Neurosci. 2010;30:13525–13536. doi: 10.1523/JNEUROSCI.1747-10.2010.

- 43. Hauser TU, et al. The feedback-related negativity (FRN) revisited: New insights into the localization, meaning and network organization. Neuroimage. 2014;84:159–168. doi: 10.1016/j.neuroimage.2013.08.028.

- 44. Seeley WW, et al. Dissociable intrinsic connectivity networks for salience processing and executive control. J Neurosci. 2007;27:2349–2356. doi: 10.1523/JNEUROSCI.5587-06.2007.

- 45. Düzel E, et al. Functional imaging of the human dopaminergic midbrain. Trends Neurosci. 2009;32:321–328. doi: 10.1016/j.tins.2009.02.005.

- 46. Björklund A, Dunnett SB. Dopamine neuron systems in the brain: An update. Trends Neurosci. 2007;30:194–202. doi: 10.1016/j.tins.2007.03.006.

- 47. Williams SM, Goldman-Rakic PS. Widespread origin of the primate mesofrontal dopamine system. Cereb Cortex. 1998;8:321–345. doi: 10.1093/cercor/8.4.321.

- 48. Tobler PN, Fiorillo CD, Schultz W. Adaptive coding of reward value by dopamine neurons. Science. 2005;307:1642–1645. doi: 10.1126/science.1105370.

- 49. Varazzani C, San-Galli A, Gilardeau S, Bouret S. Noradrenaline and dopamine neurons in the reward/effort trade-off: A direct electrophysiological comparison in behaving monkeys. J Neurosci. 2015;35:7866–7877. doi: 10.1523/JNEUROSCI.0454-15.2015.

- 50. Pasquereau B, Turner RS. Limited encoding of effort by dopamine neurons in a cost-benefit trade-off task. J Neurosci. 2013;33:8288–8300. doi: 10.1523/JNEUROSCI.4619-12.2013.

- 51. Carr DB, Sesack SR. Projections from the rat prefrontal cortex to the ventral tegmental area: Target specificity in the synaptic associations with mesoaccumbens and mesocortical neurons. J Neurosci. 2000;20:3864–3873. doi: 10.1523/JNEUROSCI.20-10-03864.2000.

- 52. Haber SN, Behrens TEJ. The neural network underlying incentive-based learning: Implications for interpreting circuit disruptions in psychiatric disorders. Neuron. 2014;83:1019–1039. doi: 10.1016/j.neuron.2014.08.031.

- 53. Friston KJ, Harrison L, Penny W. Dynamic causal modelling. Neuroimage. 2003;19:1273–1302. doi: 10.1016/s1053-8119(03)00202-7.

- 54. Parker NF, et al. Reward and choice encoding in terminals of midbrain dopamine neurons depends on striatal target. Nat Neurosci. 2016;19:845–854. doi: 10.1038/nn.4287.

- 55. Haber SN, Knutson B. The reward circuit: Linking primate anatomy and human imaging. Neuropsychopharmacology. 2010;35:4–26. doi: 10.1038/npp.2009.129.

- 56. Krebs RM, Heipertz D, Schuetze H, Duzel E. Novelty increases the mesolimbic functional connectivity of the substantia nigra/ventral tegmental area (SN/VTA) during reward anticipation: Evidence from high-resolution fMRI. Neuroimage. 2011;58:647–655. doi: 10.1016/j.neuroimage.2011.06.038.

- 57. Chowdhury R, Lambert C, Dolan RJ, Düzel E. Parcellation of the human substantia nigra based on anatomical connectivity to the striatum. Neuroimage. 2013;81:191–198. doi: 10.1016/j.neuroimage.2013.05.043.

- 58. Mechelli A, Friston KJ, Frackowiak RS, Price CJ. Structural covariance in the human cortex. J Neurosci. 2005;25:8303–8310. doi: 10.1523/JNEUROSCI.0357-05.2005.

- 59. Alexander-Bloch A, Giedd JN, Bullmore E. Imaging structural co-variance between human brain regions. Nat Rev Neurosci. 2013;14:322–336. doi: 10.1038/nrn3465.

- 60. Laruelle M. The second revision of the dopamine theory of schizophrenia: Implications for treatment and drug development. Biol Psychiatry. 2013;74:80–81. doi: 10.1016/j.biopsych.2013.05.016.

- 61. Laruelle M. Schizophrenia: From dopaminergic to glutamatergic interventions. Curr Opin Pharmacol. 2014;14:97–102. doi: 10.1016/j.coph.2014.01.001.

- 62. Howes OD, et al. Dopamine synthesis capacity before onset of psychosis: A prospective [18F]-DOPA PET imaging study. Am J Psychiatry. 2011;168:1311–1317. doi: 10.1176/appi.ajp.2011.11010160.

- 63. Howes OD, et al. The nature of dopamine dysfunction in schizophrenia and what this means for treatment. Arch Gen Psychiatry. 2012;69:776–786. doi: 10.1001/archgenpsychiatry.2012.169.

- 64. Slifstein M, et al. Deficits in prefrontal cortical and extrastriatal dopamine release in schizophrenia: A positron emission tomographic functional magnetic resonance imaging study. JAMA Psychiatry. 2015;72:316–324. doi: 10.1001/jamapsychiatry.2014.2414.

- 65. Marin RS, Biedrzycki RC, Firinciogullari S. Reliability and validity of the Apathy Evaluation Scale. Psychiatry Res. 1991;38:143–162. doi: 10.1016/0165-1781(91)90040-v.

- 66. Phillips MR. Is distress a symptom of mental disorders, a marker of impairment, both or neither? World Psychiatry. 2009;8:91–92.

- 67. D’Ardenne K, Lohrenz T, Bartley KA, Montague PR. Computational heterogeneity in the human mesencephalic dopamine system. Cogn Affect Behav Neurosci. 2013;13:747–756. doi: 10.3758/s13415-013-0191-5.

- 68. Pauli WM, et al. Distinct contributions of ventromedial and dorsolateral subregions of the human substantia nigra to appetitive and aversive learning. J Neurosci. 2015;35:14220–14233. doi: 10.1523/JNEUROSCI.2277-15.2015.

- 69. D’Ardenne K, McClure SM, Nystrom LE, Cohen JD. BOLD responses reflecting dopaminergic signals in the human ventral tegmental area. Science. 2008;319:1264–1267. doi: 10.1126/science.1150605.

- 70. O’Doherty JP, Dayan P, Friston K, Critchley H, Dolan RJ. Temporal difference models and reward-related learning in the human brain. Neuron. 2003;38:329–337. doi: 10.1016/s0896-6273(03)00169-7.

- 71. Bromberg-Martin ES, Matsumoto M, Hikosaka O. Dopamine in motivational control: Rewarding, aversive, and alerting. Neuron. 2010;68:815–834. doi: 10.1016/j.neuron.2010.11.022.