Short abstract

In the second of their two articles about using existing routine data to assess performance in the NHS, the authors make practical suggestions about using data for mental health care, potentially avoidable deaths, and forecasting coronary heart disease outcomes, and raise issues about assumptions and technical aspects for discussion.

There have been recent calls for better data on NHS outputs and outcomes in England.1-4 However, this will require new data collection that could take several years. In the meantime, creative and informed use of existing data, with clear admission of the known shortcomings, may give some indication of how outcomes are changing.5 The main challenges are to measure health validly and to judge how much any improvements are due to NHS interventions.

In this, the second of our two articles, we explore some of the technical issues involved and make practical illustrative suggestions about how best to use existing data regarding mental health care, potentially avoidable deaths, and forecasting coronary heart disease outcomes.6 Many other quality indicators could be produced along similar lines.7

Suggestions for indicators illustrating a range of methodological issues

Patterns of mental health care

Monitoring the quality of mental health care is problematic. Case fatality rates are low, so indicators based on numbers of deaths are inadequate. Much of mental health care occurs outside hospital without direct data on activity or outcomes. Also, there are few explicit standards. The challenge is to find a way of using data from hospitals to make some inferences about both hospital and community care and to identify aspects of care that are materially below optimum.

The national service framework for mental health highlights the preference for community over hospital care.8 Because this policy is expected to produce better outcomes, the way in which mental health services are delivered may be used as a proxy for quality of care. Mentally ill patients vary in numbers of readmissions to hospital and cumulative lengths of stay, and these may reflect variations in the quality and availability of care and support in the community. Too many readmissions and long cumulative lengths of stay may reflect inadequate community care, but too few readmissions and short cumulative lengths of stay may reflect inadequate provision of necessary hospital care.

A combination of the total number of admissions and the total time spent in hospital by patients during a year could be used to assess this. There may well be a trade-off between time spent in hospital and frequency of admission, and it is therefore important to consider the balance between the two variables as well as the individual measures. Studies of observed variation between populations could be used to derive target ranges for acceptable patterns of care.

To test this approach, we identified individual patients aged 17-64 who were admitted to hospital in mental health specialties in April of each year and followed them throughout the financial year, using continuous inpatient spells and the linkage methods described in our first article.6 We calculated the total length of inpatient stay per person during the year and the total number of admissions per person during the year. Readmissions could be to any NHS hospital in England and for any condition, such as injury, not just mental illness. We attributed the values to the primary care organisation that covered the patient's place of residence at the time of first admission.

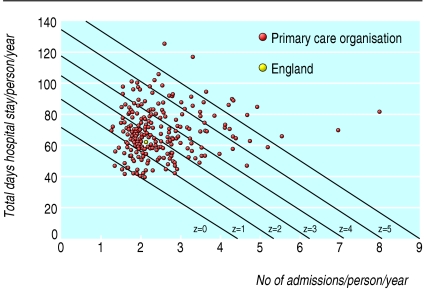

We found substantial variation between primary care organisations for each of the two variables. To give each component equal weight, we transformed them into z scores (by measuring distance from their reference points and dividing by the standard deviations of the individual distributions). Conventionally, the mean of a distribution is the reference point for z scores. However, the mean is not necessarily a suitable target for a performance score because it is partly a reflection of “poor performance” at the tail end of the distribution.9 We used modified z scores in which the reference points were 1.65 admissions per person and 45.0 days total stay in hospital during the year. We chose these points as a realistic joint target because they had been achieved by a strategic health authority in 2000-1 (the year selected as standard). This “best achieved combination” of a low rate of admissions per person and a low total stay per person, giving each the same level of importance, equates to the lowest composite z score at the level of strategic health authority (see Year 3 in table 1), although at the level of primary care organisation, some trusts achieved even better z scores. We used these reference points to calculate modified z scores across all five years.
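The modified z scores described above can be written out as follows. The symbols are introduced here for illustration only, and the source does not state whether the standard deviations were taken from the standard year or from each year separately.

$$
z_{a,i} = \frac{a_i - a^{*}}{s_a}, \qquad z_{d,i} = \frac{d_i - d^{*}}{s_d}, \qquad a^{*} = 1.65, \quad d^{*} = 45.0
$$

Here $a_i$ is the number of admissions per person and $d_i$ the total days in hospital per person for organisation $i$, $a^{*}$ and $d^{*}$ are the reference points, and $s_a$ and $s_d$ are the standard deviations of the two distributions. An organisation that matches both reference points has $z_{a,i} = z_{d,i} = 0$.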

Table 1.

Values for suggested NHS performance indicators in England over five financial or calendar years

| Outcome | Year 1 | Year 2 | Year 3 | Year 4 | Year 5 (95% CI) | Average annual improvement* (%) | R² of trend* | P value of trend* |
|---|---|---|---|---|---|---|---|---|
| Patterns of mental health care† | | | | | | | | |
| No of admissions in April | 7 335 | 8 020 | 7 163 | 8 295 | 8 095 | | | |
| Composite z score: | | | | | | | | |
| England | 1.99 | 2.38 | 2.29 | 2.50 | 2.53 | −5.4 | 0.750 | 0.058 |
| SHA minimum | 0.43 | 0.07 | 0.00 | 1.07 | 0.55 | | | |
| SHA maximum | 5.37 | 5.52 | 6.72 | 4.26 | 6.97 | | | |
| Population mortality from ischaemic heart disease‡ | | | | | | | | |
| No of deaths | 41 478 | 38 598 | 35 991 | 34 200 | 32 257 | | | |
| Death rate/100 000: | | | | | | | | |
| England | 79.9 | 74.1 | 68.9 | 65.2 | 61.0 (60.4 to 61.7) | 6.5 | 0.997 | <0.001 |
| SHA minimum | 56.8 | 52.8 | 46.6 | 46.0 | 45.1 (41.8 to 48.7) | | | |
| SHA maximum | 110.2 | 100.6 | 92.2 | 90.4 | 84.3 (80.7 to 87.9) | | | |
| Population mortality from causes amenable to health care, including IHD§ | | | | | | | | |
| No of deaths | 83 821 | 80 852 | 76 648 | 70 439 | 67 907 | | | |
| Death rate/100 000: | | | | | | | | |
| England | 164.4 | 158.3 | 149.7 | 137.2 | 131.5 (130.5 to 132.5) | 5.7 | 0.982 | 0.001 |
| SHA minimum | 126.9 | 120.5 | 116.5 | 105.1 | 103.7 (98.2 to 109.1) | | | |
| SHA maximum | 214.5 | 203.0 | 187.9 | 177.5 | 169.8 (164.7 to 174.9) | | | |
| Population mortality from causes amenable to health care, excluding IHD§ | | | | | | | | |
| No of deaths | 42 343 | 42 284 | 40 657 | 36 239 | 35 650 | | | |
| Death rate/100 000: | | | | | | | | |
| England | 84.5 | 84.2 | 80.9 | 72.1 | 70.5 (69.7 to 71.2) | 5.0 | 0.893 | 0.015 |
| SHA minimum | 69.9 | 66.4 | 67.1 | 59.1 | 58.4 (54.2 to 62.6) | | | |
| SHA maximum | 104.4 | 104.2 | 95.7 | 88.6 | 85.5 (81.8 to 89.2) | | | |
| Population mortality from causes not amenable to health care¶ | | | | | | | | |
| No of deaths | 108 229 | 106 715 | 104 922 | 105 808 | 105 888 | | | |
| Death rate/100 000: | | | | | | | | |
| England | 215.4 | 212.0 | 207.8 | 208.5 | 206.9 (205.6 to 208.2) | 1.0 | 0.848 | 0.026 |
| SHA minimum | 182.4 | 172.2 | 177.9 | 174.3 | 177.5 (170.3 to 184.7) | | | |
| SHA maximum | 265.6 | 260.0 | 254.5 | 252.2 | 246.6 (238.6 to 254.6) | | | |

IHD=Ischaemic heart disease. SHA=Strategic health authorities (as at April 2003).

See BMJ.com for further details on indicators.

\*Fitting a linear trend to log transformed rates.

†Composite z score for total number of admissions and total length of stay per person for those organisations with at least one admission in April, compared with the best performance among strategic health authorities in 2000-1, for financial years 1998-9 to 2002-3. (Data source: hospital episode statistics.)

‡Deaths from ischaemic heart disease among residents aged <95 years for 1998-2002 (ICD-9 codes 410-414 in years 1998-2000, ICD-10 codes I20-I25 in years 2001-2). Death rates directly standardised for age using the European standard population. Data based on ICD-9 are not adjusted for equivalence to ICD-10. (Data source: Office for National Statistics.)

§Deaths from causes considered amenable to health care among residents aged <75 years for 1998-2002 (various ICD codes for specific age groups, ICD-9 codes in years 1998-2000, ICD-10 codes in years 2001-2). Death rates directly standardised for age using the European standard population. Data based on ICD-9 are not adjusted for equivalence to ICD-10. (Data source: Office for National Statistics.)

¶Deaths from causes not considered amenable to health care among residents aged <75 years for 1998-2002. (Use of ICD codes, standardisation of death rates, and data source as above.)

The z scores measure how far each primary care organisation is from the defined optimum for total admissions and for total stay. We then produced a composite score for each organisation by adding its two z scores, equating to a summary of that organisation's mix of experience on two fronts. A high composite score would be considered undesirable as it would indicate more hospitalisation than expected. Weighting the z scores before adding them would be a refinement if there was particular concern about the relative importance of the two variables.
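As a minimal sketch of this calculation, the Python below computes modified z scores against the reference points given in the text (1.65 admissions and 45.0 days per person) and adds them, with optional weights. The function names and the four sets of organisation values are hypothetical, and the use of the sample standard deviation is an assumption, since the article does not say which estimator was used.

```python
from statistics import stdev

REF_ADMISSIONS = 1.65  # reference admissions per person (from the text)
REF_DAYS = 45.0        # reference total days in hospital per person (from the text)


def modified_z(values, reference):
    """Distance of each value from the reference point, in standard deviations.

    Assumption: the sample standard deviation of the supplied values is used.
    """
    sd = stdev(values)
    return [(v - reference) / sd for v in values]


def composite_scores(admissions, days, w_adm=1.0, w_days=1.0):
    """Weighted sum of the two modified z scores for each organisation."""
    z_adm = modified_z(admissions, REF_ADMISSIONS)
    z_days = modified_z(days, REF_DAYS)
    return [w_adm * a + w_days * d for a, d in zip(z_adm, z_days)]


# Hypothetical values for four organisations (not taken from the article).
admissions = [1.65, 1.80, 2.10, 1.95]
days = [45.0, 60.0, 52.0, 75.0]

scores = composite_scores(admissions, days)
for adm, stay, score in zip(admissions, days, scores):
    print(f"admissions={adm:.2f} days={stay:.1f} composite={score:.2f}")
```

The first hypothetical organisation sits exactly on both reference points and so scores zero. Passing `w_adm` or `w_days` other than 1.0 gives the weighted refinement mentioned above.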

Figure 1 shows modified z score isolines at primary care organisation level. Two organisations with a different mix of values for admissions and length of stay may have the same composite z score, reflecting the same performance but different trade-offs. Improvement over time should lead to lower overall composite z scores and a reduction in variation. Table 1 shows non-significant worsening trends in composite z scores at the England level and variation by strategic health authority for each of five financial years. Caution is needed in interpreting these trends because of the substantial recent organisational change in mental health services and disruptions to data collection. However, our analyses show the feasibility of examining patterns of care, rather than focusing on a single variable. The indicator could be refined further by standardising for diagnostic mix, subject to development and testing.

Fig 1.

Combined z score isolines and results for individual primary care organisations for measures of mental health care—total length of inpatient stay per person and total number of admissions per person for patients aged 17-64 years admitted to hospital in mental health specialties in financial year 2002-3. (Excludes organisations with <20 patients admitted in April)

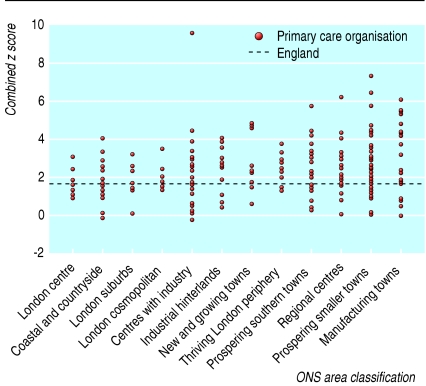

A possible cause of variation between primary care organisations might be differences in the prevalence of illness and in the level of support provided in the community, both by professional bodies and informal networks such as families. A full assessment of the effect of such factors is beyond the scope of this article, but we examined whether variation might be reduced when looking at similar geographical areas. For example, the populations of big cities can be more transient, have a greater incidence of some mental illnesses, and have fewer informal networks. The Office for National Statistics has used cluster analyses to create an area classification for grouping primary care organisations that are most similar in terms of 42 demographic, socioeconomic, housing, and other Census 2001 variables.10 Figure 2 shows the composite modified z score for each primary care organisation grouped within its area group. Although it shows some differences between groups (a slowly increasing z score across the chart), there is much greater residual variation within each group, suggesting that influences other than demography and socioeconomic conditions are largely responsible for the variation, such as service availability and clinical practice.

Fig 2.

Combined z scores of individual primary care organisations grouped by Office for National Statistics (ONS) area classification for patients aged 17-64 years admitted to hospital in mental health specialties in financial year 2002-3. (Excludes organisations with <20 patients admitted in April)

A measure of wider population mortality attributable to health care

Attempts to assess the contribution of health services to the entire population (not just those using health services) have relied on population based indicators of potentially avoidable mortality. Causes of death are included if there is evidence that they are amenable to healthcare interventions and if, given timely, appropriate, and high quality care, death rates should be low among the age groups specified.11 Healthcare intervention includes preventing disease onset as well as treating existing disease.

Two such indicators based on potentially avoidable mortality are published annually for the NHS in the Compendium of Clinical and Health Indicators.7 Nolte and McKee reviewed the use of this concept and proposed an updated list of conditions and age bands for international comparisons, based on more recent evidence of amenability to healthcare interventions.12 We have used their list but have added asthma at ages 0-44 years, which they excluded because of lack of comparability in international studies.

In England 138 346 such deaths occurred in people aged less than 75 during 2001 and 2002, of which 48% were from ischaemic heart disease, 16% from cerebrovascular disease, 9% from colorectal cancer, 9% from female breast cancer, and 6% from pneumonia.

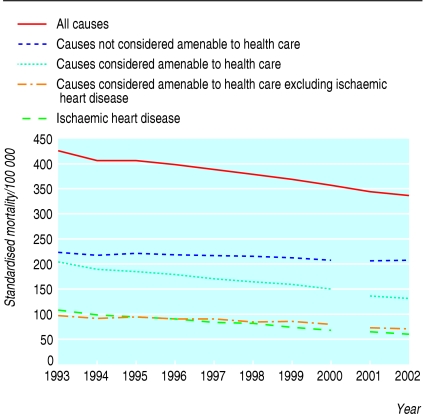

Table 1 shows the trend and geographical variation in deaths that were amenable to healthcare interventions and those that were not in people aged less than 75 years during 1998-2002. Mortality from amenable causes (including ischaemic heart disease) fell from 164 to 132 per 100 000—an average annual improvement of 5.7%. Mortality from ischaemic heart disease improved by an average of 6.5% a year, and mortality from other amenable causes improved by 5.0% a year. This compares with an annual improvement of only 1.0% for mortality from causes not considered amenable.
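The footnote to table 1 says only that the trend comes from fitting a linear trend to log transformed rates. The sketch below is one plausible reading, not a confirmed reproduction of the authors' method: it fits an ordinary least squares line to the log rates and converts the slope to a percentage fall per year as `1 - exp(slope)`. The function name is hypothetical; the rates are the England values from table 1.

```python
import math


def average_annual_improvement(rates):
    """Percentage fall per year implied by a least squares line through log(rate).

    Assumption: years are equally spaced and the improvement is expressed
    as (1 - exp(slope)) * 100.
    """
    n = len(rates)
    years = range(n)
    logs = [math.log(r) for r in rates]
    mean_x = sum(years) / n
    mean_y = sum(logs) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, logs))
    slope = sxy / sxx
    return (1.0 - math.exp(slope)) * 100.0


# England death rates per 100 000 for years 1 to 5 (table 1).
amenable_incl_ihd = [164.4, 158.3, 149.7, 137.2, 131.5]
ihd = [79.9, 74.1, 68.9, 65.2, 61.0]

print(round(average_annual_improvement(amenable_incl_ihd), 1))  # about 5.7
print(round(average_annual_improvement(ihd), 1))                # about 6.5
```

Under these assumptions the results are close to the published 5.7% and 6.5%, which is consistent with, but does not prove, this being the calculation used.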

Figure 3 shows the trends for the 10 year period 1993-2002. There is a discontinuity in the trends as a result of a change in 2001 from ICD-9 to ICD-10 for coding cause of death. The difference between amenable and non-amenable causes in their improvement in mortality suggests improvements in the effectiveness of health care. The main concern about this approach is that trends and geographical variation may partly be due to factors other than the quality of health care, in particular improving socioeconomic conditions.

Fig 3.

Trends in mortality (directly standardised for age) by amenability of cause of death to health care, for population in England aged 0-74 years, 1993-2002. Data based on ICD-9 codes during 1993-2000, and on ICD-10 during 2001-2. (Data source: Office for National Statistics)

Forecasting future outcomes attributable to current investments

The observation that today's survival and death rates are at least partly a reflection of the quality of earlier health care applies particularly to primary and secondary prevention of conditions such as heart disease, stroke, diabetes, some cancers, and diseases of childhood. The converse of this is that many of the benefits to health from improved care today will not be seen for many years. One of the implications of this is that a comprehensive assessment of the quality of a healthcare system should include formal forecasts of the longer term effects of recent changes in provision and activity.

Several mathematical models have been and are being developed for doing this. For example, long term relative survival can be predicted for patients with recently diagnosed cancer.13 Another example is a microsimulation model that provides estimates of the annual benefits and costs over the middle and longer term (up to 20 years) of different patterns of healthcare provision and use for coronary heart disease.14 In terms of primary prevention, the model allows exploration of the population effect of improvements in the control of blood pressure and cholesterol and of changes in rates of cigarette smoking. In terms of treatment, it can explore the effects of changing ambulance response times, thrombolysis, and revascularisation rates. The model can produce, for example, estimates of the likely impact of meeting national service framework activity targets for coronary heart disease. Tables 2 and 3 show some illustrative examples of this, extracted from a report on this developmental work to the Department of Health,14 to demonstrate how simulation could be used to inform policy, subject to various assumptions and constraints.14 Currently, the model is being extended to other clinical conditions such as stroke and diabetes. Such models could be used to show the likely impact of new investments in prevention, incremental shifts from treatment to prevention, or alternative mixes of interventions.

Table 2.

Use of simulation model to show how coronary heart disease outcomes are likely to change after implementation of service targets specified in the national service framework: prevention model*

| Results of simulation | Stable angina | Myocardial infarction | Sudden cardiac death | Stroke death | Other death from cardiovascular disease |

|---|---|---|---|---|---|

| No of events in baseline† | 197.0 | 140.2 | 43.8 | 12.1 | 10.4 |

| % reduction in first events in 10 years after intervention‡ | 6.55 | 6.95 | 10.86 | 2.90 | 5.77 |

\*Target level of intervention is more systematic identification, assessment, treatment, and follow up of people at risk of coronary heart disease—that is, known patients who cross the blood pressure threshold are assessed within a year, and if no treatment is indicated the recall period is reduced to a year; the uptake of cholesterol treatment, where indicated, is increased to 100%. (These results are very sensitive to assumptions about background trends in baseline risk factors.)

†Baseline is 1998, before national service framework, among 6923 respondents aged 35-85.

‡Events in those aged 45-85 years (that is, 10 years older) after intervention (20 iterations of the model over 10 years).

Table 3.

Use of simulation model to show how coronary heart disease (CHD) outcomes are likely to change after implementation of service targets specified in the national service framework: treatment model*

| Results of simulation† | No of CHD patients | Life years saved | Deaths from all causes: No of deaths | Deaths from all causes: No prevented | Cost per life year saved |
|---|---|---|---|---|---|
| Baseline | 30 843 | - | 2302 | - | |
| After intervention (20 iterations of model over 20 years) | 32 603‡ | 1759 | 2109 | 193 | £4998 |

\*Combined target levels of intervention (with no assumptions about any interaction between them): revascularisation capacity increased to 3120 angiograms per million population and 1500 angioplasties and bypass grafts combined per million population; secondary prevention drugs (aspirin, statins, ACE inhibitors, β blockers) provided to 90% of patients, allowing for contraindications; cardiac rehabilitation offered to 85% of patients having bypass surgery, angioplasty, or myocardial infarction; ambulance response times reduced to 75% within 8 minutes for myocardial infarction and cardiac arrest calls; thrombolysis door-to-needle time reduced to 30 minutes for 75th centile of distribution for patients eligible for thrombolysis (excluding 10% who fail to receive thrombolysis at all).

†Events per year in a population of 1 million.

‡Increase in CHD patients is due to the reduction in case fatality.

Models of this kind are inevitably very demanding of data and assumptions, and there may be a trade-off between rigour and transparency. Their requirements include estimates of baseline levels of risk factors, disease prevalence, and healthcare use; estimates of trends over the forecasting period in exogenous factors (those not determined within the model); and, for cost effectiveness analyses, estimates of how treatment costs vary as levels of activity change. As well as modelling relationships between risk factors and outcomes, they have to be able to deal with combinations of changes in risk factors (such as reducing blood pressure and cholesterol concentrations) and interactions between risk factors (such as the effect of stopping smoking on blood pressure). Management of heart disease is one of the best researched aspects of health care, and, as well as the scientific literature, this model is based on new analyses of data from the health survey for England, the Framingham cohort study, and the British heart survey.14 However, different studies define variables in different ways, and substantial gaps in the literature remain, such as the effects of stopping treatment. Also, such models need maintenance, with new research findings needing to be incorporated regularly.

For discussion and debate

Our two articles are confined to health outcome measures and their proxies. There are, however, many other types of outputs that could be included in assessments of productivity. The following issues and assumptions require further discussion.

Selection of indicators and targets

Attribution of changes in health status to healthcare activity would normally require experimentation such as randomised controlled trials. Since this is not feasible as part of routine delivery of health services, judgment must be used, based on three criteria:

- Research evidence or consensus (expressed in policies) suggests that health services (including public health, health partnerships, health advocacy) can have a significant influence on the outcome being measured.
- Variation between organisations in current performance suggests scope for improvement, with the best showing what is realistically achievable given optimum circumstances.9
- Variation between organisations in changes in performance over time suggests scope for improvement, with the greatest improvement showing what is realistically achievable given optimum circumstances.

The first criterion is essential for selection of indicators. The other two may not be, as the services may already be performing at an optimal level. Even if all three criteria are met, the outcomes may still reflect interventions not attributable to health services.

Aspects such as quality of life may be of more concern to patients than clinical measures, and therefore more appropriate as measures of outcome, albeit with greater problems of attribution. Absence of routine data on health related quality of life is a serious gap in our knowledge.

For annual cross sectional monitoring, it is important to select indicators that reflect short term impact unless long term impact is clear or can be forecast. Indicators such as incidence of stroke and deaths may reflect the cumulative effect of several natural events and interventions or resource use in the past. Some of these, such as prevalence of obesity and high blood pressure, may also act as proxies for future adverse outcomes and may therefore have a dual role in annual cross sectional monitoring.

Where there is clear evidence of the relation between intervention and health, such evidence may be used to create explicit standards for performance audit, and measures of the level of intervention may be used as proxies for future outputs or health outcomes.

Table 4 shows that the measure of what is achievable depends on the level at which analysis is undertaken. In large populations (regions, strategic health authorities) indicators are likely to be stable but may mask the true “optimum.” Smaller populations (primary care organisations, local authorities) will have fewer events and more inherent variability, and extreme values may reflect atypical circumstances. The reference point that is ultimately selected will be a matter of judgment, as not all events being measured (admissions, readmissions, deaths, etc) are likely to be avoidable. What we are seeking, in the absence of an evidence based standard, is an aspirational target based on reality, towards which we would expect the NHS to be moving. In some cases, this could be informed by what is shown to be achievable in international studies, as has been suggested for cancer survival.

Table 4.

Mortality from causes considered amenable to health care by organisational level, England 2001-2

| Organisational level | No of organisational units | Total population range (1000s) | Standardised mortality*: mean (range) | Standardised mortality*: 10th centile | Standardised mortality*: 50th centile | Standardised mortality*: 90th centile |
|---|---|---|---|---|---|---|
| England | 1 | 49 646 | 134.4 | | | |
| Government office region | 9 | 2538-8044 | 136.1 (114.0-157.9) | | | |
| ONS area group | 12 | 1428-12 154 | 139.9 (104.2-170.3) | | | |
| Strategic health authority | 28 | 1146-2651 | 134.7 (104.4-173.7) | 114.4 | 130.9 | 161.9 |
| Local authority | 354 | 25-990† | 128.5 (0.0-223.8) | 98.6 | 124.1 | 168.3 |
| Primary care organisation | 303 | 65-373 | 134.7 (84.4-259.9) | 103.0 | 129.9 | 175.7 |

(Data sources: Office for National Statistics, Department of Health.)

\*Directly standardised for age (using the European standard population) per 100 000 people aged 0-74 years.

†Excluding Isles of Scilly and City of London.

Methodology

Ideally, numerators and denominators should match—for example, case fatality rates for stroke should be based on all deaths among all patients with stroke, including those not admitted to hospital and who may have either mild disease with lower case fatality or severe disease and death before admission. This is not always possible, and the limitations of what is feasible must be acknowledged. Some indicators measure what happens to known patients, with a risk that those needing care but not receiving it, possibly with poorer outcomes, are excluded.

Any measure of geographical variation or time trends needs to ensure comparability of numerator and denominator data. This may require adjustment of indicators for differences in age, sex, case mix (mix or severity of conditions), etc. A major constraint with existing routinely collected national data is the lack of grouping systems for case mix that are based on prognosis. Grouping systems, such as healthcare resource groups, were designed to create subgroups for comparison that are homogeneous with respect to resource use but not necessarily outcomes. Standardisation also raises questions about what adjustment is legitimate. People in deprived populations might have relatively poor outcomes because of relatively intractable health problems or because of substandard care, or both. Standardisation is undiscriminating and would “protect” the providers against both kinds of effects. Likewise, where there is sex variation, there is a choice between using sex standardised person rates or sex specific rates.

When standardising rates for age (and other variables) the choice of method (direct or indirect) and of the standard population used may affect the results, particularly when comparing sub-national rates. We tested this for hospital case fatality, calculating trends at the England level using both direct and indirect methods and using various years as the standard, and found little difference (table 2, part 16). However, this should be monitored in any new approach to measuring performance. For the correct analysis of trends, data for all years should be adjusted with the same standard and time period.
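To make the distinction between the two methods concrete, here is a minimal sketch of direct and indirect age standardisation. All figures are hypothetical: the three age bands, the deaths and populations, and in particular the standard population weights, which are not the European standard population used in the article.

```python
def directly_standardised_rate(deaths, population, standard, per=100_000):
    """Direct method: local age specific rates weighted by a standard population."""
    weighted = sum(d / p * w for d, p, w in zip(deaths, population, standard))
    return weighted / sum(standard) * per


def indirectly_standardised_ratio(deaths, population, reference_rates):
    """Indirect method: observed deaths divided by the deaths expected if the
    reference age specific rates (per person) applied to the local population."""
    expected = sum(p * r for p, r in zip(population, reference_rates))
    return sum(deaths) / expected


# Hypothetical area with three age bands (young, middle, old).
deaths = [10, 50, 200]
population = [100_000, 50_000, 20_000]

# Hypothetical standard population weights for the same three bands.
standard = [50_000, 30_000, 20_000]

# Hypothetical reference rates per person, set equal to the area's own rates.
reference_rates = [0.0001, 0.001, 0.01]

crude = sum(deaths) / sum(population) * 100_000
dsr = directly_standardised_rate(deaths, population, standard)
ratio = indirectly_standardised_ratio(deaths, population, reference_rates)
print(f"crude={crude:.1f} directly standardised={dsr:.1f} ratio={ratio:.2f}")
```

In this example the directly standardised rate differs from the crude rate because the hypothetical standard population is older than the local one, while the indirect ratio is 1 because the reference rates were set equal to the local rates. Changing `standard` or `reference_rates` shows how the choice of standard can shift the result.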

The stability of the indicator needs to be taken into account. For example, data on strategic health authorities are less prone to yearly fluctuations in rankings than data on primary care organisations because of their larger populations.
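The effect of population size on stability can be illustrated with a rough sketch. It uses a normal approximation to the Poisson distribution for a crude rate, which is an assumption made here for simplicity and is not the method used for the confidence intervals of the directly standardised rates in table 1. The two populations and death counts are hypothetical, chosen to be of the order of a strategic health authority and a primary care organisation.

```python
import math


def crude_rate_ci(deaths, population, per=100_000):
    """Crude rate with an approximate 95% interval (normal approximation to Poisson)."""
    rate = deaths / population * per
    half_width = 1.96 * math.sqrt(deaths) / population * per
    return rate, rate - half_width, rate + half_width


# Same underlying rate (130 per 100 000) in a large and a small hypothetical population.
large = crude_rate_ci(deaths=2600, population=2_000_000)
small = crude_rate_ci(deaths=130, population=100_000)

for label, (rate, low, high) in (("large", large), ("small", small)):
    print(f"{label}: {rate:.1f} ({low:.1f} to {high:.1f})")
```

With the same underlying rate, the interval for the smaller population is several times wider, so its rank among similar organisations can change from year to year through chance alone.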

Interpretation of data

Variation in data quality (in levels of missing records and missing or invalid codes) could influence trends, particularly if there were biases in such records compared with the rest. In the extra technical material on bmj.com, table 1.1 shows that the levels of incompleteness for indicators based on hospital episode statistics are too small to affect England indicator values and do not vary much between years. Within each year, however, completeness varies by strategic health authority, requiring caution in interpreting comparative strategic health authority data. The accuracy of seemingly valid diagnostic codes has been a source of concern.15 There are now local routine audits of the quality of clinical coding (personal communication, NHS Information Authority) but no national reporting system, which remains a serious gap.

National aggregate values may mask variation in component parts that could be important for productivity assessment. Table 2.2 in the extra technical material on bmj.com shows that there are age and sex specific variations in hospital case fatality and varying time trends. For example, there is convergence between sexes in the 0-5 year old age group over the five years but persistent sex differences in the 60-64 year age group. There are falling trends in deaths in the 75-79 year group but not in the 45-49 year group.

The potential for competition for resources between types of care and conditions needs to be acknowledged at national and local level, because the “best” achieved in one locality for one indicator may have been at the expense of poorer performance in other aspects of health care, reflecting local priorities.

Most service based indicators are incomplete, as data from the independent healthcare sector are missing.

Geographical monitoring is useful, as data can then be interpreted in the context of the strategic roles of strategic health authorities, the commissioning roles of primary care organisations, and local demographic and socioeconomic conditions. Local conditions may explain (although should not justify) poorer outcomes if there are known effective interventions. We found variations (some statistically significant) both between and within the area groups created by the Office for National Statistics for grouping healthcare organisations that are most similar in terms of a range of demographic and socioeconomic conditions. Significant variation within these groups probably reflects influences other than demography and socioeconomic conditions, such as quality of health care.

Summary points

- More rigorous analysis of existing routine clinical data would allow assessment of NHS performance across a wide range of services
- Examples of such performance indicators include mental health care, potentially avoidable deaths, and forecasting coronary heart disease outcomes
- Various assumptions and technical issues need discussion and debate—that is, the selection of indicators and targets, methods, interpretation of data, and application in productivity measurement

Application in productivity assessment

Practical ways need to be found to incorporate multiple indicators in productivity assessment: they may overlap or interact; some may be more important or relevant than others and may need to be weighted; some may reflect mismatching performance for a given time (see the above discussion on stroke). Techniques for dealing with these issues, such as weighted scores, profiles, etc, are beyond the scope of our two articles but need consideration.

High levels of activity in treatment, rehabilitation, and long term care may show desirable high productivity and improvement, but would be considered an undesirable or negative output of preventive activity for a preventable condition such as stroke.

A cross sectional approach does not take account of sequentially linked events over time, such as patients with myocardial infarction having further infarcts in due course.

Reality is even more complex than the approach taken here, and this should be acknowledged explicitly in any output to avoid sweeping simplistic generalisations during interpretation.

Conclusions

We have shown the feasibility of a variety of ways of measuring health related outputs and outcomes. Data from initiatives such as the new mental health minimum dataset and the new general practice contract should lead to better measurement. Any assessment of productivity requires careful matching of outcomes to the inputs used to achieve them, and this brings in a separate set of issues and assumptions that are beyond the scope of our articles.

Supplementary Material

Extra technical details of the methods described appear on bmj.com

AL's contributions to the study were made within his role at the Oxford branch of the National Centre for Health Outcomes Development, based at Oxford University, Headington, Oxford.

Contributors: AL conceived of the study, drafted the article, and produced the hospital episode statistics based indicators. JC helped draft the article and produced the mental health indicators. DE helped draft the article and produced the population mortality indicators. CSp analysed hospital episode statistics data. CSa helped draft the article and produced the forecasting models. Lee Mellers helped analyse the hospital episode statistics data. Bernard Rachet contributed information on the forecasting models (cancer survival). David Rudrum provided editorial support. AL is guarantor for the study.

Competing interests: All authors are involved in the work of the National Centre for Health Outcomes Development, either directly or via subcontracts. The centre is funded by the Department of Health and commissioned by it and the Healthcare Commission to develop and produce clinical and health indicators for them and the NHS. The views expressed here are those of the authors and not necessarily of the commissioners.

Ethical approval: Not needed.

References

- 1. Smith R. Is the NHS getting better or worse? [editorial] BMJ 2003;327:1239-41.

- 2. Department of Health. Chief executive's report to the NHS. London: DoH, 2004.

- 3. Atkinson A. Atkinson review: interim report-measurement of government output and productivity for the national accounts. London: Stationery Office, 2004.

- 4. Rudd A, Goldacre M, Amess M, Fletcher J, Wilkinson E, Mason A, et al, eds. Health outcome indicators: stroke. Report of a working group to the Department of Health. Oxford: National Centre for Health Outcomes Development, 1999.

- 5. Lakhani A. Assessment of clinical and health outcomes within the National Health Service in England. In: Leadbeter D, ed. Harnessing official statistics. Abingdon: Radcliffe Medical Press, 2000.

- 6. Lakhani A, Coles J, Eayres D, Spence C, Rachet B. Creative use of existing clinical and health outcomes data to assess NHS performance in England. Part 1: performance indicators closely linked to clinical care. BMJ 2005;330:1426-31.

- 7. Lakhani A, Olearnik H, Eayres D, eds. Compendium of clinical and health indicators. London: Department of Health, National Centre for Health Outcomes Development, 2003.

- 8. Department of Health. National service framework for mental health: modern standards and service models. London: DoH, 1999.

- 9. Keppel KG, Pearcy JN, Klein RJ. Measuring progress in healthy people 2010. Statistical notes No 25. Hyattsville, MD: National Center for Health Statistics, 2004.

- 10. Office for National Statistics. National statistics 2001 area classification for health areas. www.statistics.gov.uk/about/methodology_by_theme/area_classification/ha/default.asp (accessed 1 Mar 2005).

- 11. Charlton JRH, Bauer R, Lakhani A. Outcome measures for district and regional health care planners. Commun Med 1984;6:306-15.

- 12. Nolte E, McKee M. Does healthcare save lives? Avoidable mortality revisited. London: Nuffield Trust, 2004.

- 13. Brenner H, Gefeller O. An alternative approach to monitoring cancer patient survival. Cancer 1996;78:2004-10.

- 14. Davies R, Normand C, Raftery J, Roderick P, Sanderson C. Policy analysis for coronary heart disease: a simulation model of interventions, costs and outcomes. Report to the Department of Health, July 2003 (revised April 2004). London: London School of Hygiene and Tropical Medicine, 2004.

- 15. Dixon J, Sanderson C, Elliott P, Walls P, Jones J, Petticrew M. Assessment of the reproducibility of clinical coding in routinely collected hospital activity data: a study in two hospitals. J Public Health Med 1998;20:63-9.
