Abstract

Background:

Augmented reality (AR) technology, which combines computer-generated images with a real scene, has recently been reported in the medical field. We devised an AR system for evaluating improvements of the body surface, which is important in plastic surgery.

Methods:

We constructed an AR system that is easy to modify by combining existing devices and free software. Using Moverio BT-200 smart glasses, we superimposed 3-dimensional images of the body surface and the bone (obtained from VECTRA H1 and CT) onto the actual surgical field and evaluated improvements of the body surface in 8 cases.

Results:

In all cases, the 3D image was successfully projected on the surgical field. Improvement of the display method of the 3D image made it easier to distinguish the different shapes in the 3D image and surgical field, making comparison easier. In a patient with fibrous dysplasia, the symmetrized body surface image was useful for confirming improvement of the real body surface. In a patient with complex facial fracture, the simulated bone image was useful as a reference for reduction. In a patient with an osteoma of the forehead, simultaneously displayed images of the body surface and the bone made it easier to understand these positional relationships.

Conclusions:

This study confirmed that AR technology is helpful for evaluation of the body surface in several clinical applications. Our findings are useful not only for body surface evaluation but also for effective utilization of AR technology in the field of plastic surgery.

INTRODUCTION

Augmented reality (AR) is a technology that combines computer-generated images on a screen with a real object or scene.1 Because of its many advantages, including the absence of viewpoint shifts when a goggle-type display device is used as the screen,2 AR has recently undergone rapid development in various fields.

Basic studies of AR have been conducted since the 1960s.3 In the medical field, after the development of the first medical navigation system in the late 1980s,4 many AR studies related to such systems were performed.5,6 As with conventional navigation systems, the purpose of these AR-based navigation systems was to show the position of deep organs or of an instrument tip that is not directly visible.

In contrast, AR technology enables comparison between a (partially) visible organ and its corresponding simulated image. Ming et al.7 described an AR system that projects virtual images of facial bones for guidance while the surgeon performs an osteotomy on actual facial bones.

In the present study, we have advanced these ideas further by comparing surgically induced changes or improvements with the visible body contour using AR technology.

Because the degree of improvement in the body surface by surgery can be viewed directly, judgments regarding this improvement tend to be influenced by the operator’s expectations and subjectivity. AR technology will allow for more ideal comparison of surgical fields with simulated body surface images, making more objective judgment possible.

The main goal of plastic surgery, even that involving bone or soft tissue, is improvement of the body surface contour. Direct evaluation of improvements in the body surface can become a specialized application of AR technology in the field of plastic surgery.

However, no such applications in the medical field have been reported. Trial and error appears necessary to construct a useful system. Thus, we constructed a simple AR system based on existing devices and software (inexpensive or free where possible) so that clinicians can modify the system as necessary.

In this study, we began using the AR system in the clinical setting before its development was complete. Therefore, so that the quality of the system did not affect the results of the operation, we limited the application of this system only to the final evaluation performed upon near-completion of the operation.

This study was approved by the Ethics Committee of Osaka Medical College. Written informed consent for use of the patients’ photographs was provided by each patient or an appropriate family member.

MATERIALS AND METHODS

Patients and Purpose of AR System

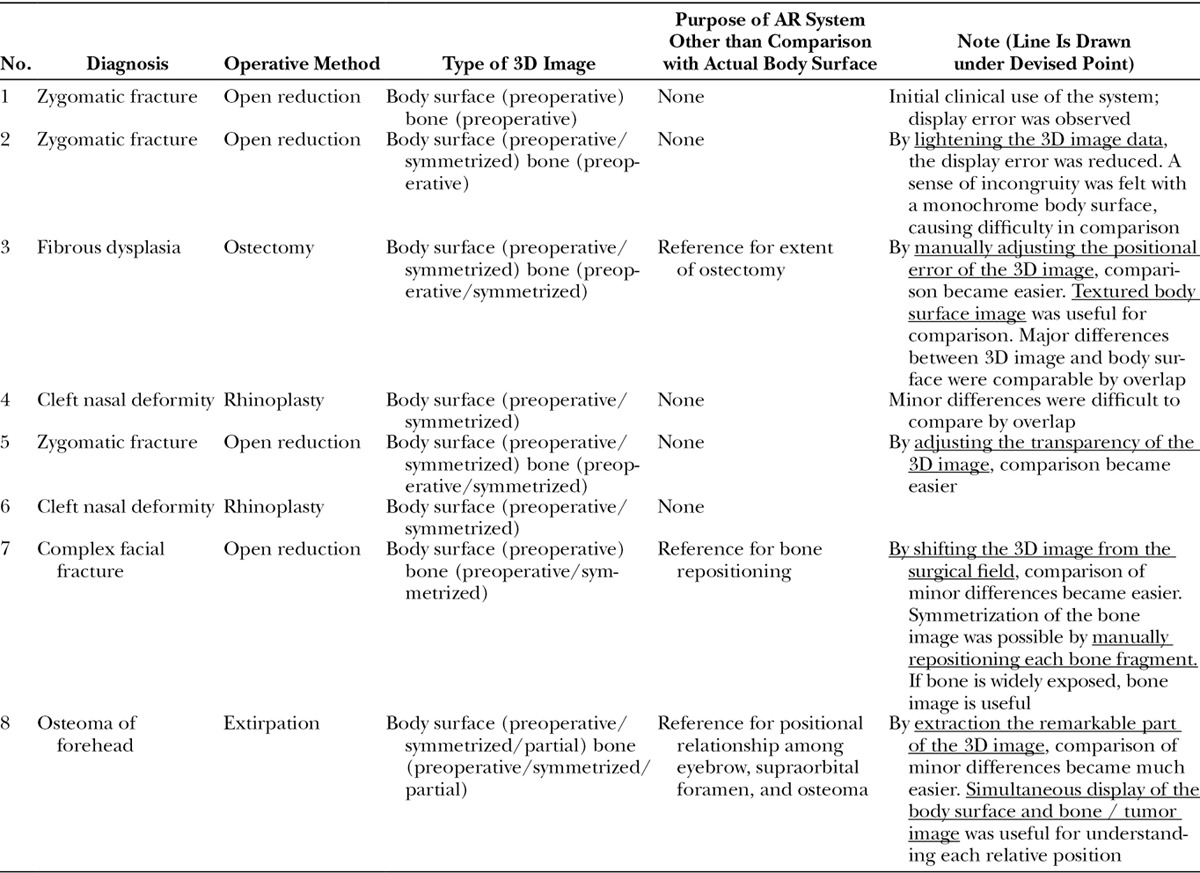

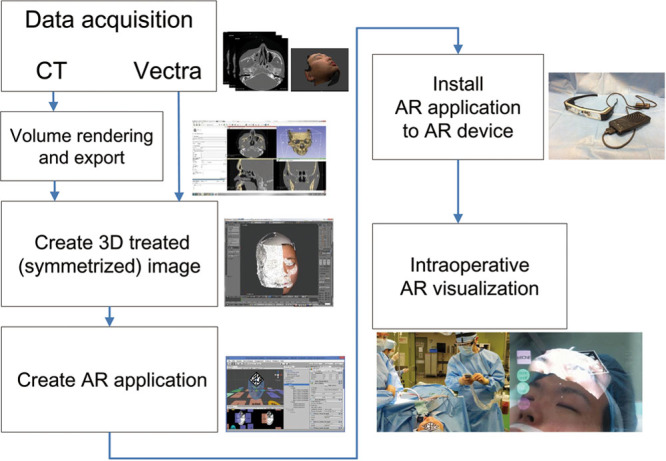

In 3 patients with a zygomatic fracture, the AR system was used to evaluate the cheek shape after reduction. In 2 patients with a cleft nasal deformity, it was used to evaluate the external shape of the nose after rhinoplasty. In 1 patient with fibrous dysplasia, it was used as a reference for the degree of ostectomy and to evaluate the cheek shape after ostectomy. In 1 patient with a complex facial fracture, it was used as a reference for bone repositioning and to evaluate the shape of the forehead and midface after reduction. In 1 patient with an osteoma of the forehead, it was used as a reference for the positional relationships among the eyebrow, supraorbital foramen, and osteoma and to evaluate the shape of the forehead after tumor resection (Table 1). Although the 3-dimensional (3D) image superimposed onto the actual surgical site was basically the body surface image, the bone image was also prepared in cases involving bone manipulation and examined to determine whether it contributed to the usefulness of the AR system. These 3D images were prepared in 2 versions, which were switchable at any time: “preoperative” and “ideal postoperative.” The overall workflow is shown in Figure 1.

Table 1.

The Cases in Which the System Was Clinically Applied

Fig. 1.

Workflow of AR system in this study.

Creation of 3D Images for AR System

Preoperative photographs of the patients were obtained with a VECTRA H1 (Canfield Scientific, Parsippany, N.J.). The VECTRA H1 is a hand-held 3D imaging system that can create precise 3D images of the body surface with photorealistic texture data and a small data size. The Digital Imaging and Communications in Medicine (DICOM) data acquired by preoperative computed tomography were imported into 3D Slicer (http://www.slicer.org), a free and open-source software package for image analysis and scientific visualization. The software was used to create 3D images of the body surface and facial bones.

The obtained 3D images were then exported to Blender, a free and open-source 3D computer graphics software package, in which their positional relationships were adjusted.

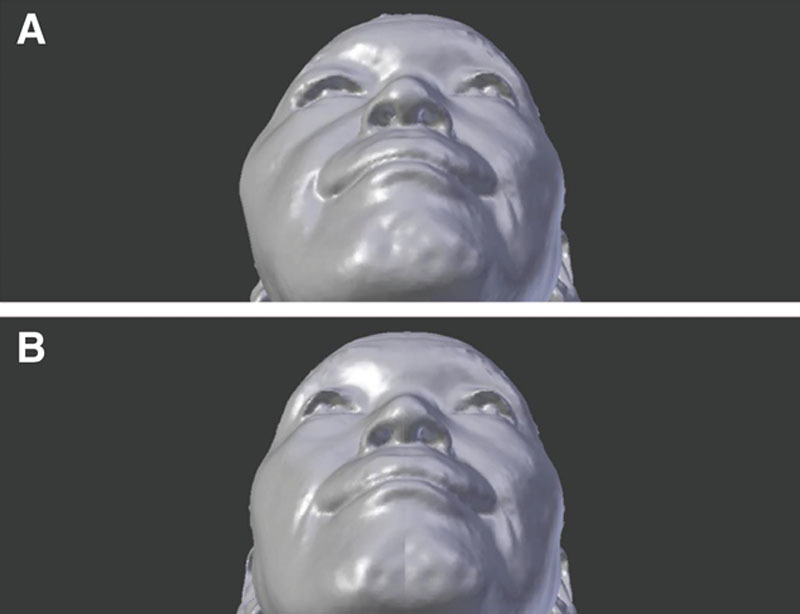

The “ideal postoperative” 3D images were created by modifying the disease-induced asymmetry of the imported 3D images. For example, for cases of right cheek deformation, we created a symmetrical model that was reverse-copied from the left (normal) side of the face (Fig. 2).

Fig. 2.

Creation of “ideal postoperative” 3D image of right zygomatic fractures. A, Preoperative 3D image. B, By reverse-copying the intact left side of the preoperative image, we created the ideal postoperative image.
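The reverse-copy step can be illustrated with a minimal Python sketch. This is not the Blender procedure used in this study; it assumes that the model is a plain list of (x, y, z) vertices, that the midsagittal plane is x = midline_x, and that the intact side has x ≥ midline_x. The function name `symmetrize` and the sample coordinates are hypothetical.

```python
def symmetrize(vertices, midline_x=0.0):
    """Discard the affected side and replace it with a mirror copy of the intact side.

    Assumption: the intact side is x >= midline_x and the affected side is x < midline_x.
    """
    intact = [v for v in vertices if v[0] >= midline_x]
    # Reflect the intact vertices across the plane x = midline_x.
    # Vertices lying exactly on the plane are kept once and not duplicated.
    mirrored = [(2 * midline_x - x, y, z) for (x, y, z) in intact if x > midline_x]
    return intact + mirrored


# Hypothetical vertices: three on the intact side or midline, one on the affected side.
face = [(1.0, 0.0, 0.0), (2.0, 1.0, 0.0), (-0.5, 0.0, 0.0), (0.0, 3.0, 1.0)]
symmetric_face = symmetrize(face)
```

For a triangle mesh rather than a point list, the mirrored faces would also need their vertex order reversed so that the surface normals continue to point outward.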

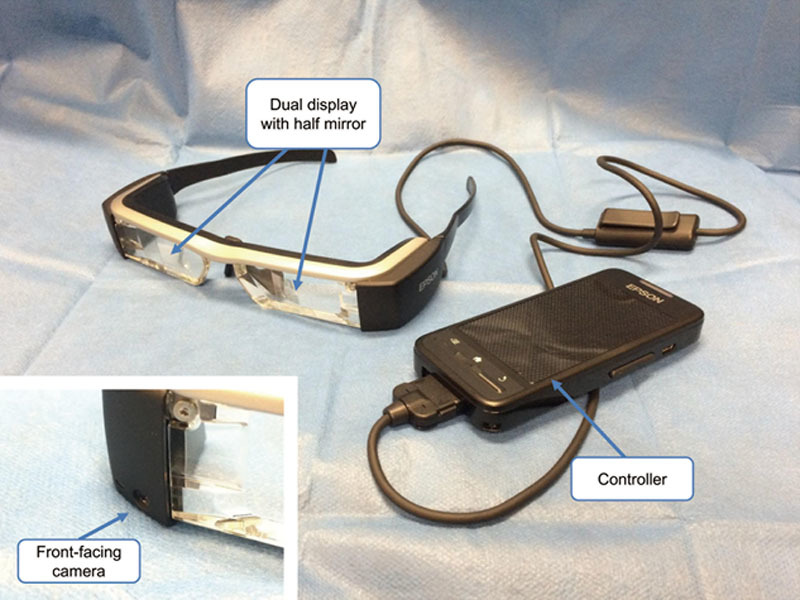

Configuration of AR System

As the AR device, we adopted Moverio BT-200 smart glasses (Seiko Epson Corporation, Nagano, Japan), a commercially available binocular optical see-through head-mounted display. Because the display unit has a half-mirror, the wearer sees the virtual image and the actual background superimposed in the same visual field (Fig. 3).

Fig. 3.

Adopted AR device: Moverio BT-200 smart glasses. Because the dual display unit has a half-mirror, the wearer can see a virtual image with actual background scenery within the same visual field. The controller is a capacitive touch panel device that can be used through sterilized gloves and vinyl covers during the operation.

The 3D images processed in Blender were exported to Unity, free application development software with an integrated development environment.

We used a marker-based AR technique to display the 3D images at a position corresponding to real space. Neither Unity nor Moverio has a program for marker recognition; therefore, we incorporated Vuforia, which is a free software development kit for creating AR applications.

The AR marker should be placed in the real surgical field at a position where it is easily recognized by the camera built into the Moverio and does not disturb the surgical procedure. In cases involving the face, the midline of the forehead is suitable for this purpose.

By acquiring the position, direction, and scale information of the AR marker from the built-in camera, the spatial relationship between the camera and marker is established. The 3D images, whose position relative to the marker is defined in advance, are placed in the virtual space of the program and projected on the display of the Moverio, overlapping the real visual field.
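The chain of spatial relationships described above can be sketched with homogeneous 4 × 4 transforms. This is an illustrative Python example, not the Unity/Vuforia code used in this study; the function names, the axis conventions, and all numerical values (in millimeters) are assumptions made for the example.

```python
import math


def matmul4(a, b):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def translation(tx, ty, tz):
    """Homogeneous matrix for a pure translation."""
    return [[1.0, 0.0, 0.0, tx],
            [0.0, 1.0, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]


def rotation_z(angle_rad):
    """Homogeneous matrix for a rotation about the z axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0, 0.0],
            [s, c, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


def transform_point(m, p):
    """Apply a 4x4 homogeneous transform to a 3D point."""
    v = (p[0], p[1], p[2], 1.0)
    return tuple(sum(m[i][k] * v[k] for k in range(4)) for i in range(3))


# Hypothetical marker pose reported by the tracker: 500 mm in front of the
# camera and rotated 90 degrees about the viewing axis.
camera_from_marker = matmul4(translation(0.0, 0.0, 500.0), rotation_z(math.pi / 2))
# Hypothetical fixed placement of the 3D model relative to the marker.
marker_from_model = translation(0.0, -60.0, 0.0)
# Composition: model coordinates -> marker coordinates -> camera coordinates.
camera_from_model = matmul4(camera_from_marker, marker_from_model)

point_in_camera = transform_point(camera_from_model, (10.0, 0.0, 0.0))
# approximately (60.0, 10.0, 500.0)
```

Because the model-to-marker transform is fixed in advance, only the camera-to-marker transform needs to be updated as the wearer moves.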

Evaluation of Usefulness of AR System during an Operation

During the surgical procedure, we attached the sterilized AR marker to the surgical site, put on the Moverio, and started the AR application (Fig. 4). We compared the superimposed 3D images with the actual surgical field from various angles in the same visual field to verify the usefulness of the system. Based on the findings obtained in each case, we improved the display method of the 3D image to establish a more useful system.

Fig. 4.

Use of AR application during an operation. The surgeon wears the AR device and operates the application with a handheld controller. A sterilized AR marker is attached to the surgical field and recognized by a camera built into the device.

RESULTS

In all cases, the 3D body surface image and bone image were successfully projected on the surgical field. Several problems arose during clinical application, and we improved the system in response to each of them as described below.

Initially, marker recognition took time owing to the narrow angle of view of the built-in camera, but the marker was recognized promptly once we became accustomed to handling the device.

The display error was approximately 30–40 mm in the direction of the marker plane and ≥ 50 mm in the depth direction. The error seemed to be caused by the limited accuracy of marker recognition. Because the display error remained constant until marker recognition was reset, we added a program to manually correct the error from case 3 onward. This made it possible to accurately compare the overlap between the surgical field and the 3D image.
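Because the error stays constant between marker resets, a single user-adjusted offset is enough to compensate for it. The following Python sketch illustrates that idea only; it is not the program added to our Unity application, and the class name, method names, and numerical values (in millimeters) are hypothetical.

```python
class ManualCorrection:
    """Accumulates a user-entered offset (mm) added to the displayed model position."""

    def __init__(self):
        self.offset = [0.0, 0.0, 0.0]

    def nudge(self, dx=0.0, dy=0.0, dz=0.0):
        """Shift the correction by one controller step."""
        self.offset[0] += dx
        self.offset[1] += dy
        self.offset[2] += dz

    def apply(self, position):
        """Return the corrected display position for a marker-derived position."""
        return tuple(p + o for p, o in zip(position, self.offset))

    def reset(self):
        """Clear the correction, for example after marker recognition is reset."""
        self.offset = [0.0, 0.0, 0.0]


correction = ManualCorrection()
correction.nudge(dx=-35.0)  # hypothetical in-plane correction
correction.nudge(dz=50.0)   # hypothetical depth correction
corrected = correction.apply((100.0, 20.0, 400.0))
```

The offset is applied on top of the marker-derived position, so it remains valid while the wearer moves and needs to be re-entered only when marker recognition is reset.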

Until case 2, the 3D body surface image was a monochrome rendering that conveyed only stereoscopic (shape) information. When compared with the surgical field, it looked unnatural, and visual comparison was difficult (Fig. 5). From case 3 onward, the 3D body surface image carried a fine texture derived from the photograph and looked less unnatural when compared with the actual surgical field. Furthermore, from case 5 onward, adjusting the transparency of the 3D model made it easier to distinguish differences in shape between the 3D model and the surgical field, making comparison easier.

Fig. 5.

Images of clinical application for right zygomatic fracture (these images are for illustrative purposes). A, Surgical field. B, Monochrome treated image. C, Monochrome treated image superimposed onto the surgical field.

With these improvements, it became possible to compare the body surface contour of the surgical field with objective information of the “start” (= preoperative image) and “goal” (= ideal postoperative image), from various angles in the same field of view/stereoscopic display. With the AR system, it was possible to provide useful information as to whether sufficient procedures were performed.

Three representative cases are described below.

Case 3: Female, 17 Years of Age, Fibrous Dysplasia of the Right Face

Hyperplasia was observed around the right zygomatic bone, the cheek was markedly elevated upon visual inspection, and ostectomy was performed. At the end of the procedure, by superimposing the body surface image onto the surgical field and viewing it from below, we visually confirmed that the right malar eminence was smaller than that in the preoperative image and was approaching the size shown in the ideal postoperative image.

Case 7: Male, 41 Years of Age, Complex Facial Fracture (Frontal and Nasoethmoidal Fractures)

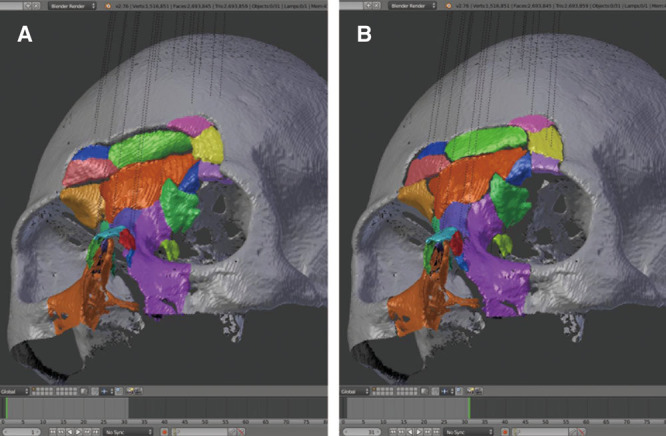

A wide range of deformities involving the bone and skin was observed bilaterally, centered on the forehead and nasal root. We created an ideal bone reduction image by repositioning each bone fragment in Blender (Fig. 6). After the bone had been widely exposed through a coronal incision, the 3D bone image was superimposed and used as a reference for the bone reduction position.

Fig. 6.

Creation of “ideal postoperative” 3D image of complex facial fractures. A, Preoperative 3D image, color-coded for each bone fragment. B, Each bone fragment has been properly repositioned.

By repurposing the function for manually adjusting the image display position, the images were also compared with the corresponding surgical field side by side. Comparison within the same field of view and in stereoscopic display was still possible, suggesting that side-by-side display offers a utility distinct from superimposition.

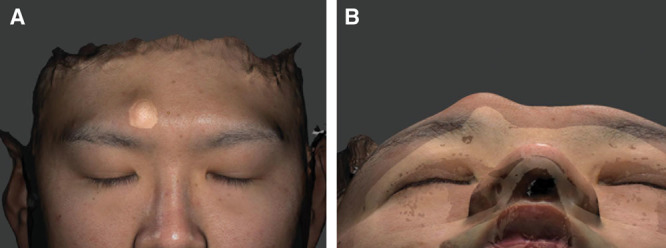

Case 8: Male, 32 Years of Age, Osteoma of the Forehead

This patient had an osteoma on the anterior wall of the frontal sinus, and the supraorbital nerve was close to the tumor. By simultaneously displaying the VECTRA-derived 3D image of the body surface and the DICOM-derived bone image while maintaining their positional relationship, we could understand the positional relationships among the eyebrow (which served as a body surface landmark), the tumor, and the supraorbital foramen (Fig. 7). By creating a 3D image of only the area around the tumor and projecting it slightly shifted from the corresponding surgical field, visual comparison became easier even when the deformation was small (Fig. 8).

Fig. 7.

Simultaneous display of 3D data of facial bones by CT and body surface data by Vectra in a patient with an osteoma. A, Anterior view. The positional relationship between the left eyebrow and osteoma is readily apparent. B, Base view. The positional relationship among the left eyebrow, osteoma, and supraorbital foramen is readily apparent.

Fig. 8.

Actual shots through the AR device during the operation in a patient with an osteoma. A, The body surface is displayed. B, The facial bones including the osteoma are displayed. C, D, The body surface extracted only around the osteoma is displayed; it has been shifted from the corresponding surgical field. When the deformation is small, this method is easier to compare with the surgical field.

DISCUSSION

Benefits of Projecting Body Surface with AR Technology

In this study, we examined whether AR technology is useful for improved evaluation of the body surface. Both the surgeon’s visual observation and quantitative evaluation by inspection are used to judge improvement in the body surface. The purpose of this study was to provide referential information for the former technique.

Fortunately, in all cases in this study, the body surface contour after the procedure almost coincided with the ideal postoperative image, so no additional procedures were performed after projection. If obvious differences are found between them, however, this information is valuable for deciding on additional procedures.

With the development and cost reduction of 3D printing technology in recent years, many reports have described the use of 3D models for preoperative planning and reference during surgery.8 Using AR technology, however, 3D data can be directly visualized in the surgical field without physical creation of models. Furthermore, it is possible to compare images with the corresponding surgical field in a completely overlapping state. The output of current 3D printers is practically limited to only 1 or several colors. However, because AR technology provides actual referential data in the surgical field, the operator can select the necessary data display methods even during an operation.

Effective Display Method for Comparison of Body Surface

At the beginning of this study, the body surface image was displayed in a single color, and the visual information was limited to form. In practice, the 3D image with the photo-based fine texture caused less discomfort than the single-color 3D image and allowed us to concentrate on evaluation of the form. Many landmarks on the body surface, such as the eyebrows, closed eyelids, and red lips, are delineated by color differences, although their shape is largely continuous with the surroundings. These landmarks are lost in a single-color display.

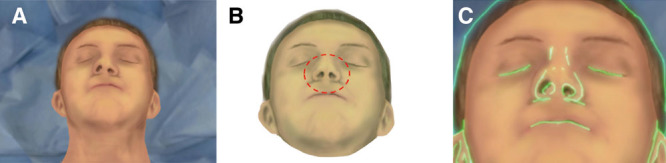

When a large difference was observed between the actual facial surface and the superimposed simulated image, the difference could be visually recognized through the AR device. When the difference was small, however, it was difficult to recognize. In the present study, it was easier to visually confirm the difference in small deformations by shifting the image laterally rather than superimposing it onto the surgical field.

By devising a more advanced display method, such as extraction of the outline of the 3D image, it may become easier to compare the differences in small deformations even if the model is superimposed on the surgical field. This will be examined in the future (Fig. 9).

Fig. 9.

Display methods of 3D image: extracted only outline. A, Surgical field (patient with nasal deformity). B, Treated 3D image (red dashed circle shows symmetrized nose). C, Superimposed 3D image (light green outline) on the surgical field.

Previous reports have described the necessity of devising an image display method in AR systems that allows for navigation of deep organs.9,10 However, even for body surface evaluation, it is desirable to flexibly change the display method for each case in addition to simply superimposing the images.

Will Quantitative Evaluation of the Body Surface using the AR System become Possible?

It is currently possible to quantitatively evaluate improvements in the body surface, even during surgery, by photographing the surgical field with a 3D imaging system.11 However, frequent evaluation is difficult because a person other than the surgeon must perform this evaluation, and about 10–20 minutes is required. If the workflow can be semi-automated and linked with the AR system in the future, the system will be able to quantitatively evaluate changes in the body surface and project them onto the surgical field with visualization.
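As a rough illustration of what such a quantitative comparison might compute, the Python sketch below measures the distance from each point of an intraoperative surface to the nearest point of the ideal postoperative surface. It is a hypothetical example and not the method of the cited 3D imaging system; it assumes that both surfaces are point lists already aligned in the same coordinate frame (in millimeters), and the function names and sample points are invented for the example.

```python
import math


def nearest_distance(point, surface):
    """Distance from one point to the closest point of a surface (brute force)."""
    return min(math.dist(point, q) for q in surface)


def deviation_summary(actual, target):
    """Mean and maximum nearest-point deviation of the actual surface from the target."""
    distances = [nearest_distance(p, target) for p in actual]
    return {"mean_mm": sum(distances) / len(distances), "max_mm": max(distances)}


# Hypothetical, pre-aligned sample points.
actual = [(0.0, 0.0, 0.0), (10.0, 0.0, 3.0)]
target = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
summary = deviation_summary(actual, target)
```

The per-point distances could in principle be mapped to colors and displayed on the 3D model, which is one way the result might be projected onto the surgical field.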

Registration Technology

In this study, we used fiducial markers for registration of the projected images. Although the recognition speed of the markers was good, the accuracy was low, and an average deviation of 3–4 cm occurred between the surgical field and the corresponding image. Although it was possible to manually correct the deviation, such correction should ideally be unnecessary. Possible causes of deviation include the performance of the original registration program, the resolution of the camera built into the device, the processing speed of the device, and similar factors.

Registration can also be performed using an infrared stereo camera and several optical markers, which are often used in conventional navigation systems.5,6,12 Although the accuracy is high, time is required to perform the initial calibration or resetting when the marker is displaced.

Suenaga et al.13 described a markerless registration method that involved recognizing the contour of the teeth. This would serve as an excellent method if accurate stereoscopic information of the teeth can be obtained before the operation. In other reports, the stereoscopic information of the surrounding environment was recognized and aligned using simultaneous localization and mapping technology.14 Still other reports have described acquiring stereoscopic data of the body surface in real time, matching it with data obtained from computed tomography, and aligning it.15 This technique may be more useful than the marker method when targeting the body surface.

A System That Facilitates Trial and Error, and Future Prospects

Initially, the use of AR technology required the independent development of a system including both an AR device and registration program, which required a long time and was high in cost.16 In recent years, program libraries that allow for realization of AR systems have been gradually opened to the public. Devices that respond to or are specialized for the use of AR technology are readily available for individuals or developers.

In this study, we constructed an AR system based on existing devices and free software and changed the system based on the findings gained in each case. None of the changes were technically sophisticated (e.g., modification of the model display method); however, each change greatly affected the clinical applications of the system. Importantly, these changes can be made by clinicians and do not require experts.

The present study showed that by simultaneously displaying the body surface image and the bone/tumor image from different inspection sources, their positional relationships could be understood intraoperatively without strict alignment with the surgical field. We obtained useful findings, even ones not directly related to body surface evaluation, because our system can be flexibly changed for each case. In the future, by simultaneously displaying images from different inspection sources, simple navigation of deep organs may become possible using this system.

As a future prospect, if several operators can simultaneously reference and manipulate the AR system through cooperation among AR devices, communication and shared understanding among operators will be promoted. The AR system can express stereoscopic data that are difficult to convey through language and 2D images, and various data such as anatomical charts and inspection data can be used simultaneously. This will help in teaching surgical skills to residents and providing education to medical students.

CONCLUSIONS

We devised an AR system for evaluating improvements of the body surface, which is important in plastic surgery. We constructed the system so that it is easy to modify by combining existing devices, free software, and libraries. The present study confirmed that this AR technology is helpful for evaluation of the body surface in several clinical applications. Our findings are useful not only for body surface evaluation but also for effective utilization of AR technology in the field of plastic surgery. Further clinical trials are needed to identify problems and continue making improvements.

PATIENT CONSENT

Patients provided written consent for the use of their images.

Footnotes

Disclosure: The authors have no financial interest to declare in relation to the content of this article. The Article Processing Charge was paid for by the authors.

REFERENCES

- 1. Azuma RT. A survey of augmented reality. Presence Teleop Virt. 1997;6:355–385.

- 2. Michael B, Henry F, Ohbuchi R. Merging virtual objects with the real world: seeing ultrasound imagery within the patient. Comput Graph. 1992;26(2):203–210.

- 3. Sutherland I. A head-mounted three dimensional display. In: Proceedings of the Fall Joint Computer Conference; 1968; San Francisco, California.

- 4. Watanabe E, Watanabe T, Manaka S, et al. Three-dimensional digitizer (neuronavigator): new equipment for computed tomography-guided stereotaxic surgery. Surg Neurol. 1987;27:543–547.

- 5. Robert AM, Max JZ, Alexander CK, et al. Application of an augmented reality tool for maxillary positioning in orthognathic surgery – a feasibility study. J Craniomaxillofac Surg. 2006;34:478–483.

- 6. Okamoto T, Onda S, Yanaga K, et al. Clinical application of navigation surgery using augmented reality in the abdominal field. Surg Today. 2015;45:397–406.

- 7. Ming Z, Gang C, Li L, et al. Effectiveness of a novel augmented reality-based navigation system in treatment of orbital hypertelorism. Ann Plast Surg. 2015;77:662–668.

- 8. Theodore L, Ahmed M, Peter S, et al. A plastic surgery application in evolution: three-dimensional printing. Plast Reconstr Surg. 2014;133:446–451.

- 9. Christoph B, Nassir N. Virtual window for improved depth perception in medical AR. In: Proceedings of International Workshop on Augmented Reality environments for Medical Imaging and Computer-aided Surgery (AMI-ARCS); 2006; München, Germany.

- 10. Hyunseok C, Byunghyun C, Masamune K, et al. An effective visualization technique for depth perception in augmented reality-based surgical navigation. Int J Med Robot. 2016;12:62–72.

- 11. Koban KC, Shenck TL, Giunta RE, et al. Using mobile 3D scanning systems for objective evaluation of form, volume, and symmetry in plastic surgery: intraoperative scanning and lymphedema assessment. In: Proceedings of the 7th International Conference on 3D Body Scanning Technologies; 2016; Lugano, Switzerland.

- 12. Li L, Yang J, Chu Y, et al. A novel augmented reality navigation system for endoscopic sinus and skull base surgery: a feasibility study. PLoS One. 2016;11:e0146996.

- 13. Suenaga H, Tran HH, Liao H, et al. Vision-based markerless registration using stereo vision and an augmented reality surgical navigation system: a pilot study. BMC Med Imaging. 2015;15:51.

- 14. Mahmoud N, Grasa ÓG, Nicolau SA, et al. On-patient see-through augmented reality based on visual SLAM. Int J Comput Assist Radiol Surg. 2017;12:1–11.

- 15. Kilgus T, Heim E, Haase S, et al. Mobile markerless augmented reality and its application in forensic medicine. Int J Comput Assist Radiol Surg. 2015;10:573–586.

- 16. Sielhorst T, Feuerstein M, Navab N, et al. Advanced medical displays: a literature review of augmented reality. J Display Technology. 2008;4:451–467.