Abstract

Introduction

The development of augmented reality devices allows physicians to incorporate data visualization into diagnostic and treatment procedures, to improve work efficiency and safety, to reduce costs, and to enhance surgical training. However, awareness of the possibilities of augmented reality is generally low. This review evaluates whether augmented reality can presently improve the results of surgical procedures.

Methods

We performed a review of available literature dating from 2010 to November 2016 by searching PubMed and Scopus using the terms “augmented reality” and “surgery.”

Results

The initial search yielded 808 studies. After removing duplicates and including only journal articles, 417 studies were identified. After screening the abstracts, 91 relevant studies were selected for inclusion. Eleven further references were identified by cross-referencing. A total of 102 studies were included in this review.

Conclusions

The present literature suggests increasing interest among surgeons in employing augmented reality in surgery, leading to improved safety and efficacy of surgical procedures. Many studies have shown that the performance of newly devised augmented reality systems is comparable to that of traditional techniques. However, several problems need to be addressed before augmented reality is implemented in routine practice.

1. Introduction

The first experiments with medical images date back to 1895, when W. C. Röntgen discovered X-rays. This marks the starting point of the use of medical images in clinical practice. The development of ultrasound (USG), computed tomography (CT), magnetic resonance imaging (MRI), and other imaging techniques allows physicians to use two-dimensional (2D) medical images and three-dimensional (3D) reconstructions in the diagnosis and treatment of various health problems. Further development of medical technology has made it possible to combine anatomical and functional (or physiological) imaging in advanced diagnostic procedures, for example, functional MRI (fMRI) or single photon emission computed tomography (SPECT/CT). These methods allow physicians to better understand both the anatomical and the functional aspects of a target area.

The latest developments in medical imaging technology focus on the acquisition of real-time information and data visualization. Improved accessibility of real-time data is becoming increasingly important, as its use often makes diagnosis and treatment faster and more reliable. This is especially true in surgery, where real-time access to 2D images or 3D reconstructions during an ongoing operation can prove crucial. This access is further enhanced by the introduction of augmented reality (AR): a fusion of projected computer-generated (CG) images and the real environment.

The ability to work in symbiosis with a computer broadens the horizons of what is possible in surgery, as AR can alter the reality we experience in many ways. The wide range of possibilities it offers to surgeons challenges us to develop new AR-based techniques. In the future, AR may fully replace many items required to perform a successful surgery today, such as navigation systems, displays, and microscopes, all in a small wearable piece of equipment. However, awareness of AR and what it may offer is generally low, as in its current state it cannot fully replace most long-established surgical methods. The main aim of this work is to review the latest trends in the rapidly developing connection between augmented reality and surgery.

2. Methods

We performed a review of available literature dating from 2010 to November 2016 by searching PubMed and Scopus using the terms “augmented reality” and “surgery.”

The initial search yielded 808 studies. After removing duplicates and including only journal articles, 417 studies were identified. After screening the abstracts, 91 relevant studies were selected for inclusion. Eleven further references were identified by cross-referencing. A total of 102 studies were included in this review.

3. Results and Discussion

3.1. Basic Principles of Augmented Reality

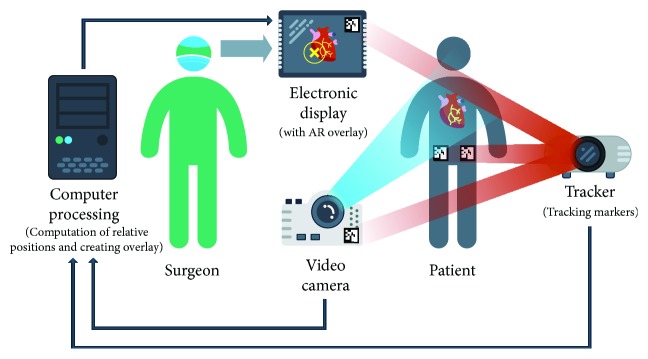

An augmented reality system provides the surgeon with computer-processed imaging data in real time via dedicated hardware and software. AR can be projected using displays, projectors, cameras, trackers, or other specialized equipment. The main principle of a basic AR system is presented in Figure 1. The most basic method is to superimpose a CG image on real-world imagery captured by a camera and to display the combination on a computer, tablet PC, or video projector [1–7]. For cases in which a video projector cannot be mounted in the operating room, a portable video projection device has been designed [3, 4]. The main advantage of AR over common visualization techniques is that the surgeon is not forced to look away from the surgical site.

Figure 1.

A scheme showing the basic principles of augmented reality.

Another possibility is to use a special head-mounted display (HMD, sometimes referred to as “smart glasses”) that resembles eyeglasses. HMDs use special projectors, head tracking, and depth cameras to display CG images on the glass, creating the illusion of augmented reality. Several AR systems using an HMD have already been developed successfully [1, 8]. Using an HMD is beneficial because, compared with a traditional display, there is almost no obstruction of the surgeon's view, the display does not need to be moved, and proper line-of-sight alignment between the display and the surgeon is less critical [9].

At present, the applications of AR are limited by the need for preoperative 3D reconstructions of medical images. These reconstructions can be created from images in the Digital Imaging and Communications in Medicine (DICOM) format using commercial or custom-made software [7, 10–12]. The quality of a reconstruction depends on the quality of the input data and the accuracy of the reconstruction system. Such reconstructions can be used for virtual exploration of target areas, for planning an effective surgical approach in advance, and for better orientation and navigation in the operative field.
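A first step in building a 3D reconstruction from DICOM data is to place every pixel of every slice in a common patient coordinate system. The sketch below does this for a single pixel using the Image Position (Patient), Image Orientation (Patient), and Pixel Spacing attributes, following the mapping given in the DICOM standard (PS3.3, section C.7.6.2.1.1). The function name and the plain-tuple inputs are assumptions for illustration. A real pipeline would read these attributes with a DICOM library and would also handle slice ordering and interpolation.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def pixel_to_patient(
    row: int,
    col: int,
    image_position: Sequence[float],     # Image Position (Patient) (0020,0032): centre of the first pixel, mm
    image_orientation: Sequence[float],  # Image Orientation (Patient) (0020,0037): row cosines, then column cosines
    pixel_spacing: Sequence[float],      # Pixel Spacing (0028,0030): [row spacing, column spacing], mm
) -> Vec3:
    """Return patient-space coordinates (mm) of the centre of pixel (row, col).

    Hypothetical helper implementing P = S + X * dc * col + Y * dr * row,
    where X/Y are the row/column direction cosines and dr/dc the row/column spacing.
    """
    row_cosines = image_orientation[0:3]      # direction of increasing column index
    col_cosines = image_orientation[3:6]      # direction of increasing row index
    row_spacing, col_spacing = pixel_spacing  # distance between rows, distance between columns
    return tuple(
        image_position[k]
        + row_cosines[k] * col_spacing * col
        + col_cosines[k] * row_spacing * row
        for k in range(3)
    )
```

For an axial slice with orientation (1, 0, 0, 0, 1, 0), the mapping reduces to scaling the column index by the column spacing along x and the row index by the row spacing along y. Stacking the slices by their Image Position values is one common next step toward the volume that reconstruction software segments and renders.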

The type and amount of displayed data depend on the requirements of the procedure and the personal preferences of the surgical team. AR is especially useful for visualizing critical structures such as major vessels, nerves, or other vital tissues. By projecting these structures directly onto the patient, AR can increase safety and reduce the time required to complete the procedure. Another useful feature of AR is the ability to control the opacity of displayed objects [13, 14]. Most HMDs allow the wearer to turn off all displayed images, leaving the glass fully transparent and removing any possible distraction in an emergency. Furthermore, voice recognition can be used to create voice commands, enabling hands-free control of the device. This is especially important in surgery, as it allows surgeons to control the device without assistance and without breaking aseptic protocols. Gesture recognition is another interesting option, allowing the team to interact with the system through body movements, either on sterile surfaces or in mid-air [6].
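Superimposing a CG image on camera imagery with adjustable opacity is commonly implemented as alpha compositing. The sketch below blends a rendered overlay pixel onto a camera pixel with an opacity between 0 and 1. Opacity 0 corresponds to switching the overlay off. A frame-level helper changes only the pixels covered by a mask, such as the region where a vessel model was rendered. The function names, the 8-bit RGB tuples, and the mask are illustrative assumptions, not a description of any system reviewed here.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]


def blend_overlay(camera_px: RGB, overlay_px: RGB, opacity: float) -> RGB:
    """Alpha-blend one overlay pixel onto one camera pixel (hypothetical helper).

    opacity = 0.0 shows only the camera image (overlay switched off);
    opacity = 1.0 shows only the computer-generated overlay.
    """
    if not 0.0 <= opacity <= 1.0:
        raise ValueError("opacity must be between 0 and 1")
    return tuple(
        round(opacity * o + (1.0 - opacity) * c)
        for c, o in zip(camera_px, overlay_px)
    )


def blend_frame(camera: List[List[RGB]], overlay: List[List[RGB]],
                mask: List[List[bool]], opacity: float) -> List[List[RGB]]:
    """Blend whole frames (lists of rows); only pixels where mask is True are modified."""
    return [
        [blend_overlay(c, o, opacity) if m else c for c, o, m in zip(crow, orow, mrow)]
        for crow, orow, mrow in zip(camera, overlay, mask)
    ]
```

Keeping opacity as a single parameter makes it easy to expose to the surgical team, for example through the voice or gesture commands mentioned above.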

3.2. Monitoring the Operative Field

Most surgeries target deformable structures that change significantly during a procedure (e.g., removal of tissue during a resection). This problem needs to be addressed by constantly monitoring the operative field and updating the displayed 3D model in real time. Intraoperative ultrasound [15, 16], CT, or MRI can be used to update the surgical site model; however, the time required to capture and reconstruct medical images is significant. CT [17] and its variants (e.g., C-arm cone-beam CT [18]) expose the patient to radiation and can therefore be performed only a limited number of times. Open MRI is a feasible option for intraoperative imaging; however, a single surgery requires 40 ± 9.4 minutes of scanning on average as well as specialized MRI-compatible instruments [19]. Automatic medical reconstructions tend to include many different structures, which makes orientation difficult, especially in abdominal surgery. In some cases, this can be overcome by a method proposed by Sugimoto et al. [2], in which carbon dioxide is introduced into the gastrointestinal tract and pancreatico-biliary duct in conjunction with an intravenous contrast agent, allowing better display of these individual structures [2]. The quality of reconstructions may improve in the future as imaging techniques capable of producing more detailed and refined images are developed. In some cases, medical imaging may be replaced by depth-sensing cameras or video cameras; however, these cannot detect structures beneath the surface. This limitation might be overcome by developing software that predicts tissue behaviour by measuring the forces applied to the target tissue. All currently proposed solutions, however, need further improvement [7, 12, 17, 20, 21].

3.3. Augmented Reality for Education and Cooperation

Augmented reality has proved to be an effective tool for training and skill assessment of surgical residents, other medical staff, and students [22–26]. Specialized training simulators can be created and used to improve surgeons' skills in various scenarios, as well as to objectively measure their technical skills. This is expected to be especially beneficial for residents and students in developing intuition and proper decision-making abilities, which can otherwise only be gained through long clinical practice. AR also allows the simulation of very unlikely scenarios, which can be solved with the assistance of an experienced surgeon. Compared with virtual reality (VR) simulators, in which the whole simulation takes place in a CG environment, the main advantage of AR simulators is the ability to combine real-life objects with CG images, which provides satisfactory tactile feedback, unlike VR. AR has also been reported to increase the enjoyment of basic surgical training [27].

AR enables experienced surgeons to assist residents remotely over an Internet connection, opening the way to effective distance teaching. Shenai et al. [28] created the virtual interactive presence and augmented reality (VIPAR) system. By continually monitoring and transmitting the image of a surgical site between two distant stations, it enables remote virtual collaboration between two surgeons [28]. This concept is sometimes referred to as “telepresence.” Davis et al. [29] used the VIPAR system to enable communication between Vietnam and the USA. Owing to the high resolution of the transmitted image, the submillimetre precision achieved, and an average latency of 237 milliseconds, the interaction between the two surgeons was described as effective [29].

3.4. Methods of Image Alignment

The exact alignment of the real environment and CG images is an extremely important aspect to consider. The simplest method is to align both images manually [30]. Such a method is slow and may be imprecise. Moreover, the registration process (the alignment of preoperative images with the patient currently being treated) needs to be continuous to compensate for changes in organ layout, for example, during breathing. Accurate alignment of both images is achieved by a set of trackers, which are used to determine the exact position of the camera and the patient's body. These trackers usually follow fiducial markers placed on the surface of specific structures that remain still during the surgery (e.g., the iliac crest or clavicles), thus providing the system with points of reference.
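Once the markers are located both in the preoperative images and by the tracker, alignment is usually posed as a least-squares rigid registration. The goal is to find the rotation and translation that best map one set of marker positions onto the other. The sketch below uses the closed-form solution in two dimensions to stay dependency-free. Clinical systems solve the 3D problem, for example with an SVD-based method. The sketch also reports the root-mean-square residual, often called the fiducial registration error. Function names and coordinates are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def rigid_register_2d(src: List[Point], dst: List[Point]) -> Tuple[float, Point]:
    """Least-squares rotation angle (rad) and translation mapping src markers onto dst markers.

    Centre both point sets, choose the angle maximizing sum(dst_c . R(theta) src_c),
    then translate the rotated source centroid onto the destination centroid.
    Needs at least two distinct corresponding points.
    """
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    s_cos = s_sin = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy   # centred source marker
        bx, by = qx - dx, qy - dy   # centred destination marker
        s_cos += ax * bx + ay * by
        s_sin += ax * by - ay * bx
    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return theta, (tx, ty)


def apply_transform(theta: float, t: Point, p: Point) -> Point:
    """Rotate p by theta about the origin, then translate by t."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + t[0], s * p[0] + c * p[1] + t[1])


def fiducial_registration_error(theta: float, t: Point, src: List[Point], dst: List[Point]) -> float:
    """Root-mean-square distance between transformed src markers and dst markers."""
    sq = [math.dist(apply_transform(theta, t, p), q) ** 2 for p, q in zip(src, dst)]
    return math.sqrt(sum(sq) / len(sq))
```

With noise-free markers the residual is essentially zero. With real, noisy marker localization it becomes positive, which is one reason reported accuracies differ between systems.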

Several types of markers have been described in the literature. Many authors used a set of optical markers and a dedicated camera to detect them for navigation [15, 31–36]. The positions of the camera and the patient are determined by measuring distances between individual trackers and markers. Several studies reported using a set of infrared markers [4, 10, 13, 33, 37–40]. A newer approach suggested by Wild et al. [41] involved using fluorescent markers during laparoscopic procedures. The main advantages of such markers are their clear visibility even in difficult conditions and their ability to remain in the patient after the surgery; however, they require an endoscopic system capable of detecting the emitted light [41]. Konishi et al. proposed an electromagnetic tracking system paired with an infrared sensor for laparoscopic surgery [42]. The main problem of magnetic tracking is distortion caused by metal tools and equipment, which can, however, be minimized by a special calibration technique [43]. The passive coordinate measurement arm (PCMA) method, which uses a robotic arm for distance measurement, promises very high precision and the possibility of navigating without a direct line of sight [44]. Another registration method is to track a laparoscopic camera and then automatically align the captured video with a 3D reconstruction [45]. Inoue et al. demonstrated that simple and affordable equipment, for instance, a set of regular web cameras and freeware 3D reconstruction software, can be used to create a registration system, suggesting that such systems could be within reach of all medical institutions [10]. Depending on the exact configuration of the tracking system, the need for a direct line of sight may limit the maximum organ deformation and rotation that can be followed, as well as the working range of tracked tools. A larger number of tracker markers can be employed to minimize the chance of tracking failure due to line-of-sight obstruction. Several commercial tracking systems for medical AR are available, relying mostly on infrared or electromagnetic tracking [46].
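A simple binomial model gives a rough estimate of how much redundant markers help. This is illustrative only. The model assumes that each marker is occluded independently with the same probability, and that a pose can be computed whenever at least three markers remain visible. Under those assumptions, the probability that tracking survives is a binomial tail sum. Real occlusions are correlated, because a hand or instrument usually hides neighbouring markers together, so the sketch is optimistic rather than a design rule. The function name and parameters are hypothetical.

```python
from math import comb


def tracking_availability(n_markers: int, p_occluded: float, min_visible: int = 3) -> float:
    """Probability that at least `min_visible` of `n_markers` markers are visible,
    assuming each is hidden independently with probability `p_occluded` (hypothetical model)."""
    if not 0.0 <= p_occluded <= 1.0:
        raise ValueError("p_occluded must be a probability")
    p_visible = 1.0 - p_occluded
    return sum(
        comb(n_markers, k) * p_visible**k * p_occluded ** (n_markers - k)
        for k in range(min_visible, n_markers + 1)
    )


if __name__ == "__main__":
    # Compare marker counts for an assumed 20% per-marker occlusion probability.
    for n in (3, 4, 6, 8):
        print(n, round(tracking_availability(n, p_occluded=0.2), 4))
```

Under this model, with a 20% occlusion probability per marker, availability is 0.512 with three markers, about 0.82 with four, and about 0.98 with six.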

With future advances in technology, it may be possible to track organ position in real time without dedicated markers, using various methods for analysing the operative field. Hostettler et al. used a real-time predictive simulation of abdominal organs [47], and Haouchine et al. designed a registration method based on a physics-based deformation model [48]. These approaches use computing power to predict and visualize organ movement and deformation. It is also possible to use an RGB (red-green-blue) or range camera to perform registration without markers [49, 50]. Hayashi et al. described using natural points of reference in the patient's body, namely cut vessels, as markers for progressive registration [50]. Kowalczuk et al. created a system for real-time 3D modelling of the surgical site with an accuracy within 1.5 mm by using a high-definition stereoscopic camera and live reconstruction of the captured image [51].

Another possibility is to use laser surface scanning, which aligns images by scanning a large number of surface points without fiducial markers. While Krishnan et al. found the accuracy of laser scanning to be sufficient [52], a study by Schicho et al. [53] suggests lower accuracy than with fiducial markers, with overall deviations of 3.0 mm and 1.4 mm, respectively. The authors suggest that the accuracy may be improved by tracking a larger number of surface points [53]. Another marker-less registration method has been described by Lee et al., in which the authors combined a cone-beam CT image with a 3D RGB image, achieving a sufficient accuracy of 2.58 mm; however, the absence of real-time image alignment remains an issue [54]. All camera-based techniques are severely limited by the need for a direct line of sight. A novel method designed by Nosrati et al. [55] estimated organ movement by combining preoperative data and intraoperative colour and visual cues with vascular pulsation detection (also described by Amir-Khalili et al. [56]). The result is a robust system unaffected by common obstructions such as light reflection and light smoke. This method increased the accuracy by 45% compared with traditional techniques. However, it is not capable of real-time computation, as every registration requires approximately 10 seconds [55].
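Marker-less surface registration is commonly performed with the iterative closest point (ICP) algorithm. Each scanned surface point is paired with its nearest model point, a rigid transform is fitted to those pairs, and the process repeats until the fit stops improving. The sketch below is a minimal 2D version with brute-force nearest-neighbour search. It assumes a small initial misalignment and does not represent the method of any specific study cited here. ICP can converge to a wrong local minimum when started far from the true pose, which is why a coarse initial alignment is usually needed.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def fit_rigid_2d(src: List[Point], dst: List[Point]) -> Tuple[float, float, float]:
    """Closed-form least-squares rotation and translation (theta, tx, ty) mapping src onto dst."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    s_cos = sum((p[0] - sx) * (q[0] - dx) + (p[1] - sy) * (q[1] - dy) for p, q in zip(src, dst))
    s_sin = sum((p[0] - sx) * (q[1] - dy) - (p[1] - sy) * (q[0] - dx) for p, q in zip(src, dst))
    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def transform(p: Point, theta: float, tx: float, ty: float) -> Point:
    """Rotate p by theta about the origin, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)


def icp_2d(scan: List[Point], model: List[Point], iterations: int = 30, tol: float = 1e-9):
    """Align scanned surface points to model points; returns aligned points and RMS residual."""
    current = list(scan)
    prev_rms = math.inf
    rms = math.inf
    for _ in range(iterations):
        # 1. Correspondence: pair each scanned point with its closest model point.
        pairs = [min(model, key=lambda m: math.dist(p, m)) for p in current]
        # 2. Fit a rigid transform to the current pairs and apply it.
        theta, tx, ty = fit_rigid_2d(current, pairs)
        current = [transform(p, theta, tx, ty) for p in current]
        # 3. Stop once the RMS residual improves by less than `tol`.
        rms = math.sqrt(sum(math.dist(p, m) ** 2 for p, m in zip(current, pairs)) / len(current))
        if prev_rms - rms < tol:
            break
        prev_rms = rms
    return current, rms
```

Denser scans give more correspondences to average over. This is consistent with the suggestion that tracking more surface points may improve accuracy, although real scans also add noise and partial overlap that this sketch ignores.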

The time required to prepare and calibrate an AR system must also be considered for routine implementation. Pessaux et al. [12] reported a delay of a few seconds for each marker registration. The total time for AR-related registrations was 8 (6–10) minutes [7, 16]. The registration process of the marker-less system used by Sugimoto et al. [2] was completed within 5 minutes. The latency with which movement is displayed is also important, as lower latency generally means a better experience.

3.5. Precision

The accuracy and complexity of 3D reconstructed images are crucial for providing correct data to the surgical team. An exact comparison of accuracy between studies is impossible owing to variable conditions and different approaches to measuring accuracy. In several studies, optical systems achieved a precision within 5 mm [8, 10, 15, 18, 35, 37, 44, 57–59], which is considered sufficient for clinical application. The required precision differs greatly among procedures and should be determined individually. The PCMA method of registering relative positions offers the best precision of all the techniques mentioned, with an overall precision of <1 mm [44]. Yoshino et al. used a high-resolution MRI image for the reconstruction together with an optical tracking system. In a phantom model with an operative microscope, they reported an accuracy of 2.9 ± 1.9 mm [31]. Two studies reported using a video projector during phantom experiments or an actual surgery, with results comparable to those of an electronic display and an accuracy of 0.8–1.86 mm [1, 5]. Gavaghan et al. proposed a portable projection device with an accuracy of 1.3 mm [3]. A few authors achieved a deviation of <2.7 mm while using a head-mounted display [8, 40, 60], although the difference in precision between a computer monitor and an HMD was not statistically significant [40]. The accuracy has been noted to be independent of the surgeon's experience [8]. The maximum achievable precision is further reduced by the difficulty of giving the projected image a 3D appearance [1, 31] and correct depth perception [8, 32, 34]. One possible solution is to display objects with varying opacity [61] or increasingly dark colours. Depth perception could be further improved by taking advantage of motion parallax, which can be created by tracking the surgeon's head and modifying the projection accordingly [62].
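Depth cueing through opacity or colour can be as simple as mapping each structure's depth below the visible surface to an opacity or brightness value. The sketch below uses a linear ramp between a near and a far depth. The ramp shape, the 0 to 30 mm range, and the minimum opacity and brightness values are arbitrary assumptions for illustration, not values taken from the cited studies.

```python
from typing import Tuple

RGB = Tuple[int, int, int]


def depth_to_opacity(depth_mm: float, near_mm: float = 0.0, far_mm: float = 30.0,
                     max_opacity: float = 1.0, min_opacity: float = 0.2) -> float:
    """Map depth below the tissue surface to overlay opacity (hypothetical linear depth cue).

    Structures at or above near_mm get max_opacity, those at or beyond far_mm get
    min_opacity, and depths in between are linearly interpolated.
    """
    if far_mm <= near_mm:
        raise ValueError("far_mm must be greater than near_mm")
    t = min(max((depth_mm - near_mm) / (far_mm - near_mm), 0.0), 1.0)  # normalized depth in [0, 1]
    return max_opacity + t * (min_opacity - max_opacity)


def darken(rgb: RGB, depth_mm: float, far_mm: float = 30.0, min_brightness: float = 0.3) -> RGB:
    """Alternative cue: scale colour brightness down linearly with depth (also hypothetical)."""
    t = min(max(depth_mm / far_mm, 0.0), 1.0)
    factor = 1.0 - t * (1.0 - min_brightness)
    return tuple(round(c * factor) for c in rgb)
```

Either value can then drive the blending step of the overlay, so deeper structures appear fainter or darker than superficial ones.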

3.6. Uses in Clinical Practice

Augmented reality can be used effectively for preoperative planning and for completing the actual surgery in a timely fashion. The preoperative 3D reconstructed images can be modified and prepared for display in AR systems. AR is commonly used for tailoring individually preferred incisions and cutting planes [1, 36, 58], for optimal placement of trocars [63], or generally to improve safety by displaying the positions of major organ components [7]. Another benefit of AR is its ability to aid surgeons in difficult operative terrain after neoadjuvant chemotherapy or radiotherapy [64]. AR may also be used to envisage and optimize the resection volume [16]. In many procedures, AR-assisted surgery is comparable to other methods of assistance, and the choice of such devices depends on the surgeon's preference [58].

Augmented reality systems are most useful in surgery on organs with little movement and deformation (e.g., skull, brain, and pancreas), as these require the least tracking and processing power. Mobile organs, such as the bowel, are significantly more complicated to track and display. Accordingly, the most frequently targeted areas in AR research are the head and brain [1, 8, 9, 11, 22, 31, 34, 36, 59, 61, 65–69], orthopaedic surgery [4, 37, 60, 70–77], the hepato-biliary system [2, 3, 7, 13, 18, 32], and the pancreas [13, 14, 35]. In addition, AR may compensate for the lack of tactile feedback usually experienced during laparoscopic surgery by presenting the surgeon with visual cues. This improves hand-eye coordination and orientation, even in robotic surgery [78, 79]. Many studies have proposed using augmented reality in laparoscopic procedures with success [7, 33, 38, 51, 64, 69].

AR systems have been employed successfully in neurosurgical procedures because of the inherently limited movement of the head and brain. AR has been reported to have a major impact in 16.7% of neurovascular surgeries [34]. Compared with a traditional 2D approach, it allowed a higher rate of precise lesion localization and a shorter operative time [66]. Neurosurgeons benefit mainly from precise localization of individual gyri, blood vessels, and important neuronal tracts, and from the possibility of planning the surgical corridor. Examples include the removal of superficial tumours [10, 66], epilepsy surgery [65], and neurovascular surgery [80].

AR has also proved useful during orthopaedic surgeries and reconstructions, especially because it allows reconstructions to be viewed directly on top of the patient's body, which reduces distractions caused by looking at an external display [37]. Procedures successfully utilizing AR range from minimally invasive percutaneous surgeries to trauma reconstructions [74], bone resections, osteotomies [73, 77, 81], arthroscopic surgery, Kirschner wire placement [82], joint replacement, and tumour removal [75, 76]. Percutaneous interventions require only a surface indicator of the insertion point, which can be displayed by AR [4]. Liang et al. [37] used a fluoroscopic dual-laser-based system for insertion guidance with satisfactory accuracy; however, the inability to perceive the depth of insertion necessitates additional techniques [37]. Nevertheless, the use of AR reduces radiation exposure during fluoroscopy and the time required to perform the task [71, 72], while minimizing the risk of unnecessary haemorrhage. Rodas and Padoy used AR to create a user-friendly visualization of scattered radiation during minimally invasive surgery [70], enabling the amount of radiation received to be measured and visualized. AR can also change the workflow of creating orthopaedic implants by replacing 3D printing of a patient-specific model [60]. It is also possible to employ AR in orthopaedic robot-assisted surgery [81].

It is more difficult to use AR in abdominal surgery, as organ movement is significant. Nevertheless, AR is currently used during liver and pancreatic surgeries, because the comparatively static nature of these organs allows better projection of large vessels [39] or tumour sites. By comparing the reconstructed data with intraoperative ultrasound, AR can be used for intraoperative guidance during liver resections [83]. It can also assist the surgeon in establishing laparoscopic ports [13] or phrenotomy sites [84]. Similarly, the kidneys appear suitable for AR, as demonstrated by Muller et al. during a nephrolithotomy, in which AR was used to establish percutaneous renal access [58]. An AR system has been proposed to detect a sentinel node accurately using a preoperative SPECT/CT scan of the surrounding lymph nodes. It allows precise navigation to the sentinel node and its resection even in difficult terrain [85]. The concept can be extended by using freehand SPECT to scan the radiation distribution, reconstructing the data, and incorporating it into AR in real time [27]. AR has also been successfully employed during splenectomies in children [33] and in urological procedures [86]. With their increasing precision, AR systems may also be used in endocrine surgery [87–89], otorhinolaryngologic surgery [90], eye surgery [91], vascular surgery [92, 93], or dental implantology [60]. However, their exact usefulness in such surgeries has not been precisely quantified owing to the complicated structures involved [61]. Currie et al. designed and successfully used an AR system for transcatheter aortic valve implantation. It showed accuracy comparable to the traditional fluoroscopic guidance technique while avoiding potential complications of contrast agent administration [93].

The use of AR in robotic surgery is expanding rapidly because AR can easily be incorporated directly into the operator's console, allowing the surgeon to navigate more quickly and to identify important structures better [7, 94]. The CG projection can be displayed separately or as an overlay on real-time video, as needed by the surgeon. A few authors have applied AR during robotic surgery with satisfactory results, suggesting a possible role for AR as a future trend in robotic surgery [12, 79, 95].

3.7. Advanced Image Fusion Using Augmented Reality

Augmented reality can display not only CG images but also images that are not normally visible. A handful of studies successfully combined optical images with near-infrared fluorescence images [96–99]. This technique can increase precision by providing additional data for the surgeon to consider; for example, surgeons may be able to detect blood vessels under the organ surface or other tissue abnormalities. Koreeda et al. [100] proposed another interesting concept, in which AR was used to visualize areas obscured by surgical instruments in laparoscopic procedures, making the tools appear invisible. This system also effectively reduced needle exit point errors during suturing, while achieving an average accuracy of 1.4 mm and an acceptable latency of 62.4 milliseconds [100].
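One common way to fuse a near-infrared fluorescence signal with the visible-light image is to give pixels above an intensity threshold a pseudo-colour, such as green. That colour is then blended over the colour frame in proportion to the fluorescence intensity. The sketch below works on a single frame stored as nested lists. The threshold, the green pseudo-colour, and the assumption that both images are already co-registered pixel for pixel are illustrative choices, not details of the systems in [96–99].

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]


def fuse_nir(visible: List[List[RGB]], nir: List[List[float]],
             threshold: float = 0.3, colour: RGB = (0, 255, 0)) -> List[List[RGB]]:
    """Overlay thresholded NIR fluorescence (intensities 0..1) on a co-registered visible frame.

    Pixels below `threshold` are left unchanged; above it, the pseudo-colour is blended in
    with a weight growing linearly from 0 at the threshold to 1 at full intensity.
    """
    if not 0.0 <= threshold < 1.0:
        raise ValueError("threshold must be in [0, 1)")
    fused = []
    for vis_row, nir_row in zip(visible, nir):
        out_row = []
        for px, intensity in zip(vis_row, nir_row):
            if intensity < threshold:
                out_row.append(px)  # no significant fluorescence: keep the visible pixel
                continue
            w = min((intensity - threshold) / (1.0 - threshold), 1.0)
            out_row.append(tuple(round(w * c + (1.0 - w) * v) for v, c in zip(px, colour)))
        fused.append(out_row)
    return fused
```

The threshold keeps faint background fluorescence from tinting the whole image. This is a simple way to limit the visual clutter discussed in the next section.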

3.8. Problems of Augmented Reality

Augmented reality introduces many new possibilities and adds new dimensions to surgical science. Surgeons can use additional data for decision-making and to improve safety and efficacy. Technological advances allow AR devices to display information with increasing accuracy and lower latency. Despite constant improvement, several difficulties need to be addressed. Currently, all reconstructed images need to be prepared in advance using complex algorithms requiring powerful computers. With expected technological advances, however, real-time acquisition of high-resolution medical scans and 3D reconstructions may become possible. Such real-time reconstructions of the operative field would significantly improve overall accuracy. Even though AR can speed up a surgical procedure, preparing the whole system and performing the required registrations and measurements generally increases the total time needed to complete the surgery. The amount of extra time depends on the type of procedure and the complexity of the AR system. The introduction of fully automatic systems could eliminate this problem and reduce the total time required.

AR has been reported to reduce the time required to complete a procedure; however, there are certain limitations. One of them is inattentional blindness (failure of the surgeon to see an unexpected object that suddenly appears in the field of view), a concerning issue that needs to be addressed when using 3D overlays [101]. The amount of information presented to the surgeon through AR during surgery is increasing and may be distracting [60]. Therefore, it is necessary to display only important data or to provide a way to switch between different sets of information on demand. Hansen et al. proposed a method for reducing cognitive demand [102]. By optimizing the spatial relationship of individual structures, minimizing the occlusion caused by AR, and maximizing contrast, surgeons were able to reduce visual clutter in some cases [102]. Achieving adequate image contrast when projecting directly onto organs is also necessary [13]. Difficulties in creating a correct 3D appearance and depth perception also persist. The latency of the whole system is also a concern, because excessive latency may lower precision and reduce the surgeon's comfort. Kang et al. measured the latency of their optical system for laparoscopic procedures as 144 ± 19 milliseconds [15].
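A rough way to see why latency matters: for a target moving at constant speed, the overlay is drawn where the target was one latency period earlier. The misalignment is therefore approximately the speed multiplied by the latency. The sketch below applies this to the 144 ms latency reported by Kang et al. [15]. The 10 mm/s tissue speed is an assumed figure chosen only for illustration, and the model ignores tracking noise and any motion prediction.

```python
def latency_misalignment_mm(speed_mm_per_s: float, latency_ms: float) -> float:
    """Approximate overlay lag (mm) for a target moving at constant speed (simplified model)."""
    return speed_mm_per_s * latency_ms / 1000.0


if __name__ == "__main__":
    # 144 ms from Kang et al. [15]; 10 mm/s is a hypothetical tissue speed.
    lag = latency_misalignment_mm(10.0, 144.0)
    print(f"{lag:.2f} mm")  # 1.44 mm
```

Under the same assumption, the 62.4 ms latency reported by Koreeda et al. [100] would correspond to a lag of about 0.62 mm. Such errors add to the static registration errors discussed in the Precision section.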

Currently used head-mounted displays usually weigh several hundred grams and produce considerable heat; therefore, comfort during long-term wear is an issue. These problems need to be addressed in the future to improve ergonomics and allow continuous use over long periods. Virtual and augmented reality projections in HMDs are known to cause simulator sickness, which presents as nausea, headache, and vertigo or, in the worst case, vomiting. The exact causes of simulator sickness are unknown; however, a discrepancy between visual, proprioceptive, and vestibular inputs is the probable cause.

4. Conclusions

Studies suggest that AR systems are becoming comparable to traditional navigation techniques, with precision and safety sufficient for routine clinical practice. Most of the present problems are likely to be solved by further medical and technological research. Augmented reality appears to be a powerful tool, possibly capable of revolutionising the field of surgery through rational use. In the future, AR will likely serve as an advanced human-computer interface, working in symbiosis with surgeons and allowing them to achieve even better results. Nevertheless, further advancement is much needed to realize the full potential and cost-effectiveness of augmented reality.

Conflicts of Interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

References

- 1.Tabrizi L. B., Mahvash M. Augmented reality-guided neurosurgery: accuracy and intraoperative application of an image projection technique. Journal of Neurosurgery. 2015;123:206–211. doi: 10.3171/2014.9.JNS141001. [DOI] [PubMed] [Google Scholar]

- 2.Sugimoto M., Yasuda H., Koda K., et al. Image overlay navigation by markerless surface registration in gastrointestinal, hepatobiliary and pancreatic surgery. Journal Hepato-Biliary-Pancreatic Sciences. 2010;17:629–636. doi: 10.1007/s00534-009-0199-y. [DOI] [PubMed] [Google Scholar]

- 3.Gavaghan K. A., Peterhans M., Oliveira-Santos T., Weber S. A portable image overlay projection device for computer-aided open liver surgery. IEEE Transactions on Biomedical Engineering. 2011;58:1855–1864. doi: 10.1109/TBME.2011.2126572. [DOI] [PubMed] [Google Scholar]

- 4.Gavaghan K., Oliveira-Santos T., Peterhans M., et al. Evaluation of a portable image overlay projector for the visualisation of surgical navigation data: phantom studies. International Journal of Computer Assisted Radiology and Surgery. 2012;7:547–556. doi: 10.1007/s11548-011-0660-7. [DOI] [PubMed] [Google Scholar]

- 5.Wen R., Chui C. K., Ong S. H., Lim K. B., Chang S. K. Y. Projection-based visual guidance for robot-aided RF needle insertion. International Journal of Computer Assisted Radiology and Surgery. 2013;8:1015–1025. doi: 10.1007/s11548-013-0897-4. [DOI] [PubMed] [Google Scholar]

- 6.Kocev B., Ritter F., Linsen L. Projector-based surgeon-computer interaction on deformable surfaces. International Journal of Computer Assisted Radiology and Surgery. 2014;9:301–312. doi: 10.1007/s11548-013-0928-1. [DOI] [PubMed] [Google Scholar]

- 7.Pessaux P., Diana M., Soler L., Piardi T., Mutter D., Marescaux J. Towards cybernetic surgery: robotic and augmented reality-assisted liver segmentectomy. Langenbeck’s Archives of Surgery. 2015;400:381–385. doi: 10.1007/s00423-014-1256-9. [DOI] [PubMed] [Google Scholar]

- 8.Badiali G., Ferrari V., Cutolo F., et al. Augmented reality as an aid in maxillofacial surgery: validation of a wearable system allowing maxillary repositioning. Journal of Cranio-Maxillofacial Surgery. 2014;42:1970–1976. doi: 10.1016/j.jcms.2014.09.001. [DOI] [PubMed] [Google Scholar]

- 9. Watanabe E., Satoh M., Konno T., Hirai M., Yamaguchi T. The trans-visible navigator: a see-through neuronavigation system using augmented reality. World Neurosurgery. 2016;87:399–405. doi: 10.1016/j.wneu.2015.11.084.

- 10. Inoue D., Cho B., Mori M., et al. Preliminary study on the clinical application of augmented reality neuronavigation. Journal of Neurological Surgery Part A. 2013;74:71–76. doi: 10.1055/s-0032-1333415.

- 11. Callovini G., Sherkat S., Callovini G., Gazzeri R. Frameless nonstereotactic image-guided surgery of supratentorial lesions: introduction to a safe and inexpensive technique. Journal of Neurological Surgery Part A. 2014;75:365–370. doi: 10.1055/s-0033-1358607.

- 12. Pessaux P., Diana M., Soler L., Piardi T., Mutter D., Marescaux J. Robotic duodenopancreatectomy assisted with augmented reality and real-time fluorescence guidance. Surgical Endoscopy. 2014;28:2493–2498. doi: 10.1007/s00464-014-3465-2.

- 13. Okamoto T., Onda S., Yanaga K., Suzuki N., Hattori A. Clinical application of navigation surgery using augmented reality in the abdominal field. Surgery Today. 2015;45:397–406. doi: 10.1007/s00595-014-0946-9.

- 14. Marescaux J., Diana M. Inventing the future of surgery. World Journal of Surgery. 2015;39:615–622. doi: 10.1007/s00268-014-2879-2.

- 15. Kang X., Azizian M., Wilson E., et al. Stereoscopic augmented reality for laparoscopic surgery. Surgical Endoscopy. 2014;28:2227–2235. doi: 10.1007/s00464-014-3433-x.

- 16. Gonzalez S. J., Guo Y. H., Lee M. C. Feasibility of augmented reality glasses for real-time, 3-dimensional (3D) intraoperative guidance. Journal of the American College of Surgeons. 2014;219:S64.

- 17. Shekhar R., Dandekar O., Bhat V., et al. Live augmented reality: a new visualization method for laparoscopic surgery using continuous volumetric computed tomography. Surgical Endoscopy. 2010;24:1976–1985. doi: 10.1007/s00464-010-0890-8.

- 18. Kenngott H. G., Wagner M., Gondan M., et al. Real-time image guidance in laparoscopic liver surgery: first clinical experience with a guidance system based on intraoperative CT imaging. Surgical Endoscopy. 2014;28:933–940. doi: 10.1007/s00464-013-3249-0.

- 19. Tsutsumi N., Tomikawa M., Uemura M., et al. Image-guided laparoscopic surgery in an open MRI operating theater. Surgical Endoscopy. 2013;27:2178–2184. doi: 10.1007/s00464-012-2737-y.

- 20. Kranzfelder M., Bauer M., Magg M., et al. Image guided surgery. Endoskopie Heute. 2014;27:154–158.

- 21. Nam W. H., Kang D. G., Lee D., Lee J. Y., Ra J. B. Automatic registration between 3D intra-operative ultrasound and pre-operative CT images of the liver based on robust edge matching. Physics in Medicine and Biology. 2012;57:69–91. doi: 10.1088/0031-9155/57/1/69.

- 22. Shakur S. F., Luciano C. J., Kania P., et al. Usefulness of a virtual reality percutaneous trigeminal rhizotomy simulator in neurosurgical training. Operative Neurosurgery. 2015;11:420–425. doi: 10.1227/NEU.0000000000000853.

- 23. Leblanc F., Champagne B. J., Augestad K. M., et al.; Colorectal Surgery Training Group. A comparison of human cadaver and augmented reality simulator models for straight laparoscopic colorectal skills acquisition training. Journal of the American College of Surgeons. 2010;211:250–255. doi: 10.1016/j.jamcollsurg.2010.04.002.

- 24. Lelos N., Campos P., Sugand K., Bailey C., Mirza K. Augmented reality dynamic holography for neurology. Journal of Neurology, Neurosurgery, and Psychiatry. 2014;85:p. 1.

- 25. Vera A. M., Russo M., Mohsin A., Tsuda S. Augmented reality telementoring (ART) platform: a randomized controlled trial to assess the efficacy of a new surgical education technology. Surgical Endoscopy. 2014;28:3467–3472. doi: 10.1007/s00464-014-3625-4.

- 26. Lahanas V., Loukas C., Smailis N., Georgiou E. A novel augmented reality simulator for skills assessment in minimal invasive surgery. Surgical Endoscopy. 2015;29:2224–2234. doi: 10.1007/s00464-014-3930-y.

- 27. Profeta A. C., Schilling C., McGurk M. Augmented reality visualization in head and neck surgery: an overview of recent findings in sentinel node biopsy and future perspectives. The British Journal of Oral & Maxillofacial Surgery. 2016;54:694–696. doi: 10.1016/j.bjoms.2015.11.008.

- 28. Shenai M. B., Dillavou M., Shum C., et al. Virtual interactive presence and augmented reality (VIPAR) for remote surgical assistance. Neurosurgery. 2011;68:p. 8. doi: 10.1227/NEU.0b013e3182077efd.

- 29. Davis M. C., Can D. D., Pindrik J., Rocque B. G., Johnston J. M. Virtual interactive presence in global surgical education: international collaboration through augmented reality. World Neurosurgery. 2016;86:103–111. doi: 10.1016/j.wneu.2015.08.053.

- 30. Vemuri A. S., Wu J. C. H., Liu K. C., Wu H. S. Deformable three-dimensional model architecture for interactive augmented reality in minimally invasive surgery. Surgical Endoscopy. 2012;26:3655–3662. doi: 10.1007/s00464-012-2395-0.

- 31. Yoshino M., Saito T., Kin T., et al. A microscopic optically tracking navigation system that uses high-resolution 3D computer graphics. Neurologia Medico-Chirurgica. 2015;55:674–679. doi: 10.2176/nmc.tn.2014-0278.

- 32. Okamoto T., Onda S., Matsumoto M., et al. Utility of augmented reality system in hepatobiliary surgery. Journal of Hepato-Biliary-Pancreatic Sciences. 2013;20:249–253. doi: 10.1007/s00534-012-0504-z.

- 33. Ieiri S., Uemura M., Konishi K., et al. Augmented reality navigation system for laparoscopic splenectomy in children based on preoperative CT image using optical tracking device. Pediatric Surgery International. 2012;28:341–346. doi: 10.1007/s00383-011-3034-x.

- 34. Kersten-Oertel M., Gerard I., Drouin S., et al. Augmented reality in neurovascular surgery: feasibility and first uses in the operating room. International Journal of Computer Assisted Radiology and Surgery. 2015;10:1823–1836. doi: 10.1007/s11548-015-1163-8.

- 35. Okamoto T., Onda S., Yasuda J., Yanaga K., Suzuki N., Hattori A. Navigation surgery using an augmented reality for pancreatectomy. Digestive Surgery. 2015;32:117–123. doi: 10.1159/000371860.

- 36. Qu M., Hou Y. K., Xu Y. R., et al. Precise positioning of an intraoral distractor using augmented reality in patients with hemifacial microsomia. Journal of Cranio-Maxillofacial Surgery. 2015;43:106–112. doi: 10.1016/j.jcms.2014.10.019.

- 37. Liang J. T., Doke T., Onogi S., et al. A fluorolaser navigation system to guide linear surgical tool insertion. International Journal of Computer Assisted Radiology and Surgery. 2012;7:931–939. doi: 10.1007/s11548-012-0743-0.

- 38. Onda S., Okamoto T., Kanehira M., et al. Short rigid scope and stereo-scope designed specifically for open abdominal navigation surgery: clinical application for hepatobiliary and pancreatic surgery. Journal of Hepato-Biliary-Pancreatic Sciences. 2013;20:448–453. doi: 10.1007/s00534-012-0582-y.

- 39. Onda S., Okamoto T., Kanehira M., et al. Identification of inferior pancreaticoduodenal artery during pancreaticoduodenectomy using augmented reality-based navigation system. Journal of Hepato-Biliary-Pancreatic Sciences. 2014;21:281–287. doi: 10.1002/jhbp.25.

- 40. Vigh B., Muller S., Ristow O., et al. The use of a head-mounted display in oral implantology: a feasibility study. International Journal of Computer Assisted Radiology and Surgery. 2014;9:71–78. doi: 10.1007/s11548-013-0912-9.

- 41. Wild E., Teber D., Schmid D., et al. Robust augmented reality guidance with fluorescent markers in laparoscopic surgery. International Journal of Computer Assisted Radiology and Surgery. 2016;11:899–907. doi: 10.1007/s11548-016-1385-4.

- 42. Konishi K., Hashizume M., Nakamoto M., et al. Augmented reality navigation system for endoscopic surgery based on three-dimensional ultrasound and computed tomography: application to 20 clinical cases. In: Lemke H. U., Inamura K., Doi K., Vannier M. W., Farman A. G., editors. CARS 2005: Computer Assisted Radiology and Surgery. Vol. 1281. Amsterdam: Elsevier Science BV; 2005. pp. 537–542.

- 43. Nakamoto M., Nakada K., Sato Y., Konishi K., Hashizume M., Tamura S. Intraoperative magnetic tracker calibration using a magneto-optic hybrid tracker for 3-D ultrasound-based navigation in laparoscopic surgery. IEEE Transactions on Medical Imaging. 2008;27:255–270. doi: 10.1109/TMI.2007.911003.

- 44. Lapeer R. J., Jeffrey S. J., Dao J. T., et al. Using a passive coordinate measurement arm for motion tracking of a rigid endoscope for augmented-reality image-guided surgery. International Journal of Medical Robotics and Computer Assisted Surgery. 2014;10:65–77. doi: 10.1002/rcs.1513.

- 45. Conrad C., Fusaglia M., Peterhans M., Lu H. X., Weber S., Gayet B. Augmented reality navigation surgery facilitates laparoscopic rescue of failed portal vein embolization. Journal of the American College of Surgeons. 2016;223:E31–E34. doi: 10.1016/j.jamcollsurg.2016.06.392.

- 46. Nicolau S., Soler L., Mutter D., Marescaux J. Augmented reality in laparoscopic surgical oncology. Surgical Oncology. 2011;20:189–201. doi: 10.1016/j.suronc.2011.07.002.

- 47. Hostettler A., Nicolau S. A., Remond Y., Marescaux J., Soler L. A real-time predictive simulation of abdominal viscera positions during quiet free breathing. Progress in Biophysics and Molecular Biology. 2010;103:169–184. doi: 10.1016/j.pbiomolbio.2010.09.017.

- 48. Haouchine N., Dequidt J., Berger M. O., Cotin S. Deformation-based augmented reality for hepatic surgery. Studies in Health Technology and Informatics. 2013;184:182–188. doi: 10.3233/978-1-61499-209-7-182.

- 49. Kilgus T., Heim E., Haase S., et al. Mobile markerless augmented reality and its application in forensic medicine. International Journal of Computer Assisted Radiology and Surgery. 2015;10:573–586. doi: 10.1007/s11548-014-1106-9.

- 50. Hayashi Y., Misawa K., Hawkes D. J., Mori K. Progressive internal landmark registration for surgical navigation in laparoscopic gastrectomy for gastric cancer. International Journal of Computer Assisted Radiology and Surgery. 2016;11:837–845. doi: 10.1007/s11548-015-1346-3.

- 51. Kowalczuk J., Meyer A., Carlson J., et al. Real-time three-dimensional soft tissue reconstruction for laparoscopic surgery. Surgical Endoscopy. 2012;26:3413–3417. doi: 10.1007/s00464-012-2355-8.

- 52. Krishnan R., Raabe A., Seifert V. Accuracy and applicability of laser surface scanning as new registration technique in image-guided neurosurgery. In: Lemke H. U., Inamura K., Doi K., Vannier M. W., Farman A. G., Reiber J. H. C., editors. CARS 2004: Computer Assisted Radiology and Surgery. Vol. 1268. Amsterdam: Elsevier Science BV; 2004. pp. 678–683.

- 53. Schicho K., Figl M., Seemann R., et al. Comparison of laser surface scanning and fiducial marker-based registration in frameless stereotaxy - technical note. Journal of Neurosurgery. 2007;106:704–709. doi: 10.3171/jns.2007.106.4.704.

- 54. Lee S. C., Fuerst B., Fotouhi J., Fischer M., Osgood G., Navab N. Calibration of RGBD camera and cone-beam CT for 3D intra-operative mixed reality visualization. International Journal of Computer Assisted Radiology and Surgery. 2016;11:967–975. doi: 10.1007/s11548-016-1396-1.

- 55. Nosrati M. S., Amir-Khalili A., Peyrat J. M., et al. Endoscopic scene labelling and augmentation using intraoperative pulsatile motion and colour appearance cues with preoperative anatomical priors. International Journal of Computer Assisted Radiology and Surgery. 2016;11:1409–1418. doi: 10.1007/s11548-015-1331-x.

- 56. Amir-Khalili A., Hamarneh G., Peyrat J. M., et al. Automatic segmentation of occluded vasculature via pulsatile motion analysis in endoscopic robot-assisted partial nephrectomy video. Medical Image Analysis. 2015;25:103–110. doi: 10.1016/j.media.2015.04.010.

- 57. Li L., Yang J., Chu Y. K., et al. A novel augmented reality navigation system for endoscopic sinus and skull base surgery: a feasibility study. PLoS One. 2016;11:p. 17. doi: 10.1371/journal.pone.0146996.

- 58. Muller M., Rassweiler M. C., Klein J., et al. Mobile augmented reality for computer-assisted percutaneous nephrolithotomy. International Journal of Computer Assisted Radiology and Surgery. 2013;8:663–675. doi: 10.1007/s11548-013-0828-4.

- 59. Deng W. W., Li F., Wang M. N., Song Z. J. Easy-to-use augmented reality neuronavigation using a wireless tablet PC. Stereotactic and Functional Neurosurgery. 2014;92:17–24. doi: 10.1159/000354816.

- 60. Katic D., Spengler P., Bodenstedt S., et al. A system for context-aware intraoperative augmented reality in dental implant surgery. International Journal of Computer Assisted Radiology and Surgery. 2015;10:101–108. doi: 10.1007/s11548-014-1005-0.

- 61. Cabrilo I., Bijlenga P., Schaller K. Augmented reality in the surgery of cerebral arteriovenous malformations: technique assessment and considerations. Acta Neurochirurgica. 2014;156:1769–1774. doi: 10.1007/s00701-014-2183-9.

- 62. Riechmann M., Kahrs L., Ulmer C., Raczkowsky J., Lamadé W., Wörn H. Visualisierungskonzept für die projektorbasierte Erweiterte Realität in der Leberchirurgie [Visualization concept for projector-based augmented reality in liver surgery]. Proceeding of BMT. 2006;209:1–2.

- 63. Volonte F., Pugin F., Bucher P., Sugimoto M., Ratib O., Morel P. Augmented reality and image overlay navigation with OsiriX in laparoscopic and robotic surgery: not only a matter of fashion. Journal of Hepato-Biliary-Pancreatic Sciences. 2011;18:506–509. doi: 10.1007/s00534-011-0385-6.

- 64. Souzaki R., Ieiri S., Uemura M., et al. An augmented reality navigation system for pediatric oncologic surgery based on preoperative CT and MRI images. Journal of Pediatric Surgery. 2013;48:2479–2483. doi: 10.1016/j.jpedsurg.2013.08.025.

- 65. Wang A., Mirsattari S. M., Parrent A. G., Peters T. M. Fusion and visualization of intraoperative cortical images with preoperative models for epilepsy surgical planning and guidance. Computer Aided Surgery. 2011;16:149–160. doi: 10.3109/10929088.2011.585805.

- 66. Mert A., Buehler K., Sutherland G. R., et al. Brain tumor surgery with 3-dimensional surface navigation. Neurosurgery. 2012;71:286–294. doi: 10.1227/NEU.0b013e31826a8a75.

- 67. Cabrilo I., Bijlenga P., Schaller K. Augmented reality in the surgery of cerebral aneurysms: a technical report. Neurosurgery. 2014;10:252–260. doi: 10.1227/NEU.0000000000000328.

- 68. Cabrilo I., Sarrafzadeh A., Bijlenga P., Landis B. N., Schaller K. Augmented reality-assisted skull base surgery. Neurochirurgie. 2014;60:304–306. doi: 10.1016/j.neuchi.2014.07.001.

- 69. Schoob A., Kundrat D., Kleingrothe L., Kahrs L. A., Andreff N., Ortmaier T. Tissue surface information for intraoperative incision planning and focus adjustment in laser surgery. International Journal of Computer Assisted Radiology and Surgery. 2015;10:171–181. doi: 10.1007/s11548-014-1077-x.

- 70. Rodas N. L., Padoy N. Seeing is believing: increasing intraoperative awareness to scattered radiation in interventional procedures by combining augmented reality, Monte Carlo simulations and wireless dosimeters. International Journal of Computer Assisted Radiology and Surgery. 2015;10:1181–1191. doi: 10.1007/s11548-015-1161-x.

- 71. Londei R., Esposito M., Diotte B., et al. Intra-operative augmented reality in distal locking. International Journal of Computer Assisted Radiology and Surgery. 2015;10:1395–1403. doi: 10.1007/s11548-015-1169-2.

- 72. Shen F. Y., Chen B. L., Guo Q. S., Qi Y., Shen Y. Augmented reality patient-specific reconstruction plate design for pelvic and acetabular fracture surgery. International Journal of Computer Assisted Radiology and Surgery. 2013;8:169–179. doi: 10.1007/s11548-012-0775-5.

- 73. Zhu M., Chai G., Zhang Y., Ma X. F., Gan J. L. Registration strategy using occlusal splint based on augmented reality for mandibular angle oblique split osteotomy. The Journal of Craniofacial Surgery. 2011;22:1806–1809. doi: 10.1097/SCS.0b013e31822e8064.

- 74. Weber S., Klein M., Hein A., Krueger T., Lueth T. C., Bier J. The navigated image viewer - evaluation in maxillofacial surgery. In: Ellis R. E., Peters T. M., editors. Medical Image Computing and Computer-Assisted Intervention - MICCAI 2003, Part 1. Vol. 2878. Berlin: Springer-Verlag; 2003. pp. 762–769.

- 75. Nikou C., Digioia A. M., Blackwell M., Jaramaz B., Kanade T. Augmented reality imaging technology for orthopaedic surgery. Operative Techniques in Orthopaedics. 2000;10:82–86. doi: 10.1016/S1048-6666(00)80047-6.

- 76. Blackwell M., Morgan F., DiGioia A. M. Augmented reality and its future in orthopaedics. Clinical Orthopaedics and Related Research. 1998;354:111–122. doi: 10.1097/00003086-199809000-00014.

- 77. Wagner A., Rasse M., Millesi W., Ewers R. Virtual reality for orthognathic surgery: the augmented reality environment concept. Journal of Oral and Maxillofacial Surgery. 1997;55:456–462. doi: 10.1016/S0278-2391(97)90689-3.

- 78. Hughes-Hallett A., Mayer E. K., Pratt P., Mottrie A., Darzi A., Vale J. The current and future use of imaging in urological robotic surgery: a survey of the European Association of Robotic Urological Surgeons. International Journal of Medical Robotics and Computer Assisted Surgery. 2015;11:8–14. doi: 10.1002/rcs.1596.

- 79. Volonte F., Pugin F., Buchs N. C., et al. Console-integrated stereoscopic OsiriX 3D volume-rendered images for da Vinci colorectal robotic surgery. Surgical Innovation. 2013;20:158–163. doi: 10.1177/1553350612446353.

- 80. Cabrilo I., Schaller K., Bijlenga P. Augmented reality-assisted bypass surgery: embracing minimal invasiveness. World Neurosurgery. 2015;83:596–602. doi: 10.1016/j.wneu.2014.12.020.

- 81. Lin L., Shi Y. Y., Tan A., et al. Mandibular angle split osteotomy based on a novel augmented reality navigation using specialized robot-assisted arms: a feasibility study. Journal of Cranio-Maxillofacial Surgery. 2016;44:215–223. doi: 10.1016/j.jcms.2015.10.024.

- 82. Fischer M., Fuerst B., Lee S. C., et al. Preclinical usability study of multiple augmented reality concepts for K-wire placement. International Journal of Computer Assisted Radiology and Surgery. 2016;11:1007–1014. doi: 10.1007/s11548-016-1363-x.

- 83. Ntourakis D., Memeo R., Soler L., Marescaux J., Mutter D., Pessaux P. Augmented reality guidance for the resection of missing colorectal liver metastases: an initial experience. World Journal of Surgery. 2016;40:419–426. doi: 10.1007/s00268-015-3229-8.

- 84. Hallet J., Soler L., Diana M., et al. Trans-thoracic minimally invasive liver resection guided by augmented reality. Journal of the American College of Surgeons. 2015;220:E55–E60. doi: 10.1016/j.jamcollsurg.2014.12.053.

- 85. Brouwer O. R., Van den Berg N., Matheron H., et al. Feasibility of image guided sentinel node biopsy using augmented reality and SPECT/CT-based 3D navigation. Annals of Surgical Oncology. 2013;20:S103.

- 86. Ukimura O., Gill I. S. Imaging-assisted endoscopic surgery: Cleveland clinic experience. Journal of Endourology. 2008;22:803–809. doi: 10.1089/end.2007.9823.

- 87. D'Agostino J., Wall J., Soler L., Vix M., Duh Q. Y., Marescaux J. Virtual neck exploration for parathyroid adenomas: a first step toward minimally invasive image-guided surgery. JAMA Surgery. 2013;148:232–238. doi: 10.1001/jamasurg.2013.739.

- 88. D'Agostino J., Diana M., Vix M., et al. Three-dimensional metabolic and radiologic gathered evaluation using VR-RENDER fusion: a novel tool to enhance accuracy in the localization of parathyroid adenomas. World Journal of Surgery. 2013;37:1618–1625. doi: 10.1007/s00268-013-2021-x.

- 89. Marescaux J., Rubino F., Arenas M., Mutter D., Soler L. Augmented-reality-assisted laparoscopic adrenalectomy. JAMA: The Journal of the American Medical Association. 2004;292:2214–2215. doi: 10.1001/jama.292.18.2214-c.

- 90. Winne C., Khan M., Stopp F., Jank E., Keeve E. Overlay visualization in endoscopic ENT surgery. International Journal of Computer Assisted Radiology and Surgery. 2011;6:401–406. doi: 10.1007/s11548-010-0507-7.

- 91. Mezzana P., Scarinci F., Marabottini N. Augmented reality in oculoplastic surgery: first iPhone application. Plastic and Reconstructive Surgery. 2011;127:57E–58E. doi: 10.1097/PRS.0b013e31820632eb.

- 92. Mochizuki Y., Hosaka A., Kamiuchi H., et al. New simple image overlay system using a tablet PC for pinpoint identification of the appropriate site for anastomosis in peripheral arterial reconstruction. Surgery Today. 2016;46:1387–1393. doi: 10.1007/s00595-016-1326-4.

- 93. Currie M. E., McLeod A. J., Moore J. T., et al. Augmented reality system for ultrasound guidance of transcatheter aortic valve implantation. Innovations. 2016;11:31–39. doi: 10.1097/IMI.0000000000000235.

- 94. Balaphas A., Buchs N. C., Meyer J., Hagen M. E., Morel P. Partial splenectomy in the era of minimally invasive surgery: the current laparoscopic and robotic experiences. Surgical Endoscopy. 2015;29:3618–3627. doi: 10.1007/s00464-015-4118-9.

- 95. Volonte F., Buchs N. C., Pugin F., et al. Augmented reality to the rescue of the minimally invasive surgeon. The usefulness of the interposition of stereoscopic images in the da Vinci™ robotic console. International Journal of Medical Robotics and Computer Assisted Surgery. 2013;9:E34–E38. doi: 10.1002/rcs.1471.

- 96. Watson J. R., Martirosyan N., Skoch J., Lemole G. M., Anton R., Romanowski M. Augmented microscopy with near-infrared fluorescence detection. In: Pogue B. W., Gioux S., editors. Molecular-Guided Surgery: Molecules, Devices, and Applications. Vol. 9311. Bellingham: SPIE; 2015.

- 97. Diana M., Halvax P., Dallemagne B., et al. Real-time navigation by fluorescence-based enhanced reality for precise estimation of future anastomotic site in digestive surgery. Surgical Endoscopy. 2014;28:3108–3118. doi: 10.1007/s00464-014-3592-9.

- 98. Diana M., Noll E., Diemunsch P., et al. Enhanced-reality video fluorescence: a real-time assessment of intestinal viability. Annals of Surgery. 2014;259:700–707. doi: 10.1097/SLA.0b013e31828d4ab3.

- 99. Martirosyan N. L., Skoch J., Watson J. R., Lemole G. M., Romanowski M. Integration of indocyanine green videoangiography with operative microscope: augmented reality for interactive assessment of vascular structures and blood flow. Operative Neurosurgery. 2015;11:252–257. doi: 10.1227/NEU.0000000000000681.

- 100. Koreeda Y., Kobayashi Y., Ieiri S., et al. Virtually transparent surgical instruments in endoscopic surgery with augmentation of obscured regions. International Journal of Computer Assisted Radiology and Surgery. 2016;11:1927–1936. doi: 10.1007/s11548-016-1384-5.

- 101. Marcus H. J., Pratt P., Hughes-Hallett A., et al. Comparative effectiveness and safety of image guidance systems in neurosurgery: a preclinical randomized study. Journal of Neurosurgery. 2015;123:307–313. doi: 10.3171/2014.10.JNS141662.

- 102. Hansen C., Wieferich J., Ritter F., Rieder C., Peitgen H. O. Illustrative visualization of 3D planning models for augmented reality in liver surgery. International Journal of Computer Assisted Radiology and Surgery. 2010;5:133–141. doi: 10.1007/s11548-009-0365-3.