Abstract

The functionality of much of human lateral frontal cortex (LFC) has been characterized as “multiple demand” (MD) as these regions appear to support a broad range of cognitive tasks. In contrast to this domain-general account, recent evidence indicates that portions of LFC are consistently selective for sensory modality. Michalka et al. (2015) reported two bilateral regions that are biased for visual attention, superior precentral sulcus (sPCS) and inferior precentral sulcus (iPCS), interleaved with two bilateral regions that are biased for auditory attention, transverse gyrus intersecting precentral sulcus (tgPCS) and caudal inferior frontal sulcus (cIFS). In the present study, we use fMRI to examine both the multiple-demand and sensory-bias hypotheses within caudal portions of human LFC (both men and women participated). Using visual and auditory 2-back tasks, we replicate the finding of two bilateral visual-biased and two bilateral auditory-biased LFC regions, corresponding to sPCS and iPCS and to tgPCS and cIFS, and demonstrate high within-subject reliability of these regions over time and across tasks. In addition, we assess MD responsiveness using BOLD signal recruitment and multi-task activation indices. In both, we find that the two visual-biased regions, sPCS and iPCS, exhibit stronger MD responsiveness than do the auditory-biased LFC regions, tgPCS and cIFS; however, neither reaches the degree of MD responsiveness exhibited by dorsal anterior cingulate/presupplemental motor area or by anterior insula. These results reconcile two competing views of LFC by demonstrating the coexistence of sensory specialization and MD functionality, especially in visual-biased LFC structures.

SIGNIFICANCE STATEMENT Lateral frontal cortex (LFC) is known to play a number of critical roles in supporting human cognition; however, the functional organization of LFC remains controversial. The “multiple demand” (MD) hypothesis suggests that LFC regions provide domain-general support for cognition. Recent evidence challenges the MD view by demonstrating that a preference for sensory modality, vision or audition, defines four discrete LFC regions. Here, the sensory-biased LFC results are reproduced using a new task, and MD responsiveness of these regions is tested. The two visual-biased regions exhibit MD behavior, whereas the auditory-biased regions have no more than weak MD responses. These findings help to reconcile two competing views of LFC functional organization.

Keywords: audition, fMRI, lateral frontal cortex, multiple demand network, vision, working memory

Introduction

Although many cognitive processes rely upon specialized neural structures whose connectivity or architecture supports a single domain of processing (e.g., visual face processing, language production, motor output), human cognitive flexibility is generally held to also require multifunction, domain-general processing centers. Lateral frontal cortex (LFC) has been widely held to be such a “multiple demand” (MD) structure, actively involved in verbal, object, shape, and spatial working memory (WM) (Duncan and Owen, 2000; Postle et al., 2000; Hautzel et al., 2002; Duncan, 2010; Fedorenko et al., 2013), in sensorimotor responses (Ivanoff et al., 2009; Fedorenko et al., 2013), in linguistic tasks (Fedorenko et al., 2013; Blank et al., 2014), and in task representation and executive control (Dosenbach et al., 2007; Crittenden and Duncan, 2014).

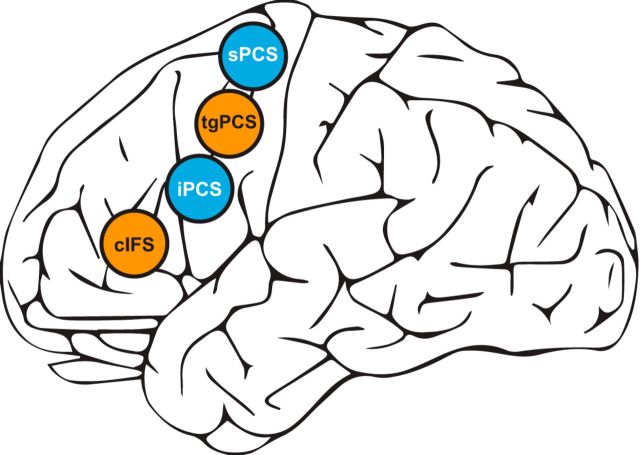

In contrast to this MD view, our laboratory and others have recently reported that preferences for sensory modality characterize distinct subregions of LFC (Michalka et al., 2015; Braga et al., 2017; Mayer et al., 2017). Michalka et al. (2015) contrasted visual spatial attention with auditory spatial attention (matched for task difficulty and using identical stimuli) and observed two bilateral visual-biased regions interleaved with two bilateral auditory-biased regions along the precentral sulcus and caudal inferior frontal sulcus (Fig. 1). The two visual-biased regions were localized to the superior and inferior branches of the precentral sulcus (superior precentral sulcus [sPCS] and inferior precentral sulcus [iPCS], respectively). One auditory-biased region was reported in the transverse gyrus that intersects the precentral sulcus (tgPCS) and the other in the caudal portion of the inferior frontal sulcus (cIFS). Functional connectivity analysis confirmed that these frontal visual- and auditory-biased regions belong to broader visual and auditory attention networks (Michalka et al., 2015). Another functional study identified spatially separate visual-biased and auditory-biased structures in LFC (Mayer et al., 2017), whereas a structural analysis identified complementary connectivity gradients for audition and vision within lateral frontal cortex (Braga et al., 2017).

Figure 1.

Visual-biased (sPCS and iPCS, blue) and auditory-biased (tgPCS and cIFS, orange) structures in LFC as identified by Michalka et al. (2015).

In the present study, we examine the MD and sensory-biased hypotheses for LFC functional organization. (We use MD here to describe participation in both visual and auditory tasks.) Several outcomes are possible.

The Michalka et al. (2015) finding of four bilateral sensory-biased LFC regions has yet to be replicated and might prove elusive in a different paradigm.

The reported sensory-biased regions within LFC are relatively small compared with the large regions typically identified in this area of cortex. Earlier reports of MD properties might reflect a blurring together of several small, but functionally distinct, non-MD regions. This result would imply that any MD behavior occurs in other brain structures.

Although pure MD behavior is incompatible with a sensory-biased organization, a degree of MD responsiveness could occur within one or both sensory-biased networks. Partial MD behavior may occur within any or all of the eight LFC sensory-biased regions under consideration.

Here, we use fMRI to examine LFC responses during auditory and visual WM. We contrast performance of a 2-back WM task in vision with performance of a 2-back WM task in audition to examine sensory-biased regions. Our results reproduce, in a new paradigm, the finding by Michalka et al. (2015) of four bilateral sensory-biased structures anatomically interleaved along the precentral and inferior frontal sulci. Our participants include several individuals from the Michalka et al. (2015) study, allowing us to demonstrate within-subject replication of sensory-biased organization in LFC. Finally, our analysis reveals a degree of MD responsiveness within sensory-biased LFC regions that is intermediate between the “pure MD” responses exhibited in anterior insula and dorsal anterior cingulate cortex (dACC) and the “pure sensory” responses exhibited in superior temporal gyrus/sulcus (STG/S) and intraparietal sulcus (IPS). The visual-biased LFC regions exhibited significantly greater MD responsiveness than did the auditory-biased LFC regions. This asymmetry between modalities indicates that visual-biased LFC structures more strongly participate in general-purpose cognitive networks for attention and WM than do auditory-biased LFC structures.

Materials and Methods

All procedures in this study were approved by the Institutional Review Board of Boston University.

Subjects

Sixteen healthy individuals (ages 24–35 years, 9 males) from the Boston University community participated in this study. All were right-handed and had normal or corrected-to-normal vision. Subjects received monetary compensation and gave informed consent to participate in the study. One subject was excluded after he reported that the headphones became mispositioned during scanning and he could no longer hear the auditory stimuli; all data presented here are from the remaining 15 subjects. Two authors (A.L.N. and S.W.M.) participated as subjects.

Data collection

Each subject participated in two sets of MRI scans collected in two separate sessions. Imaging was performed at the Center for Brain Science Neuroimaging Facility at Harvard University on a 3 T Siemens Tim Trio scanner with a 32-channel matrix head coil. First, structural MRI scans were collected to support anatomical reconstruction of the cortical surfaces. A high-resolution (1.0 × 1.0 × 1.3 mm) T1-weighted MP-RAGE structural scan was acquired for each subject. The cortical surface of each hemisphere was algorithmically reconstructed from this anatomical volume using FreeSurfer software (http://surfer.nmr.mgh.harvard.edu/, Version 5.3.0) (Dale et al., 1999) with human quality control inspection.

In a second session, we collected fMRI: T2*-weighted gradient echo, echo-planar images using a slice-accelerated EPI sequence that permits simultaneous multislice acquisitions using the blipped-CAIPI technique (Setsompop et al., 2012). Sixty-nine 2 mm slices were collected (0% skip) with a slice acceleration factor of 3 (TE 30 ms, TR 2000 ms, in-plane resolution 2.0 × 2.0 mm). Partial Fourier acquisition (6 of 8) was used to keep TE short (for SNR purposes). We collected eight runs of functional data, with each run comprising two blocks of visual 2-back, two blocks of auditory 2-back, and two blocks each of visual and auditory sensorimotor control (described below). Each block lasted 40 s and comprised 32 stimulus presentations. In addition, 8 s of fixation was collected at the beginning, midpoint, and end of each run.

Experimental design and statistical analysis

Stimuli and task.

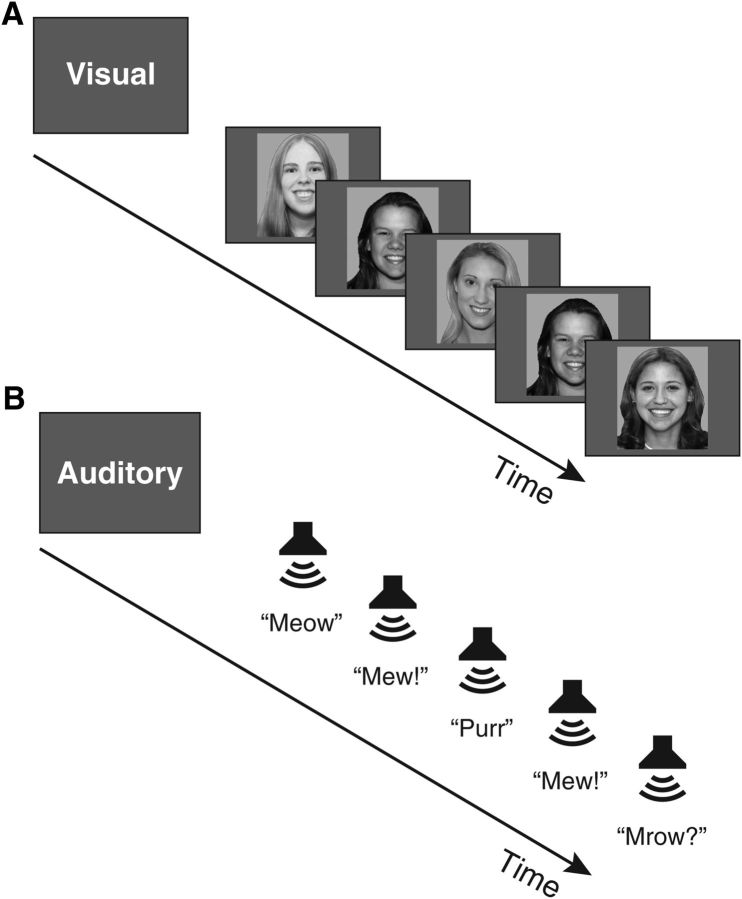

Subjects performed a 2-back WM task on visual and auditory stimuli, in separate blocks. Visual stimuli (Fig. 2A) were black-and-white photographs of young adult faces, each presented for 1 s with a 0.25 s interstimulus interval. To make the 2-back task more challenging and less amenable to a verbal labeling strategy, we included photographs of men and women in separate blocks. That is, all stimuli in a block were exemplars of a single category (e.g., male faces). Images were presented at 300 × 300 pixels, spanning ∼6.4° visual angle, using a liquid crystal display projector illuminating a rear-projection screen within the scanner bore. Auditory stimuli (Fig. 2B) were natural recordings of cat and dog vocalizations, collected from sound effects files freely available on the web. Recordings of cats and dogs were included in separate blocks, again to increase task difficulty and discourage verbal labeling strategies. Auditory stimuli lasted 300–600 ms, and the onsets of successive stimuli were separated by 1.25 s (matching the timing of the visual stimuli). Stimuli were presented diotically. The audio presentation system (Sensimetrics, http://www.sens.com) included an audio amplifier, S14 transformer, and MR-compatible in-ear earphones.

Figure 2.

A, Schematic of the visual 2-back task. Each photograph was presented for 1 s with a 0.25 s interstimulus interval. Subjects responded “2-back repeat” or “new” via button press. B, Schematic of the auditory 2-back task. Onsets of successive auditory stimuli were separated by 1.25 s.

At the beginning of each block, subjects were visually cued whether to perform the 2-back WM task (“auditory 2-back,” “visual 2-back”) or to perform a sensorimotor control (“auditory passive,” “visual passive”). Block order was counterbalanced across runs; run order was counterbalanced across subjects. During 2-back blocks, participants were instructed to decide whether each stimulus was an exact repeat of the stimulus two prior, and to make either a “2-back repeat” or “new” response via button press. Sensorimotor control blocks consisted of the same stimuli and timing, but no 2-back repeats were included, and participants were instructed to make a random button press to each stimulus. Responses were collected using an MR-compatible button box. All stimulus presentation and task control were done using MATLAB (The MathWorks) and PsychToolbox (Brainard, 1997; Kleiner et al., 2007). Behavioral data were compared using two-tailed paired t tests across conditions.
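The 2-back decision rule described above can be sketched in a few lines. This is only an illustration: the function names and the stimulus labels (`face_a`, etc.) are hypothetical, and the original experiment was run in MATLAB with PsychToolbox rather than Python.

```python
def two_back_labels(stimuli):
    """Label each stimulus 'repeat' if it matches the item two positions back, else 'new'."""
    labels = []
    for i, item in enumerate(stimuli):
        is_repeat = i >= 2 and item == stimuli[i - 2]
        labels.append("repeat" if is_repeat else "new")
    return labels


def accuracy(responses, stimuli):
    """Proportion of button-press responses that match the expected 2-back labels."""
    expected = two_back_labels(stimuli)
    correct = sum(r == e for r, e in zip(responses, expected))
    return correct / len(expected)


# Hypothetical six-item excerpt of a block (placeholder stimulus names).
block = ["face_a", "face_b", "face_a", "face_c", "face_a", "face_c"]
labels = two_back_labels(block)
```

In a sensorimotor control block no item would match the one two positions back, so every label would be `new`.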

Eye tracking.

Before scanning, each subject was trained to hold fixation at a central point during task performance. Eye movements were recorded during scanning using an EyeLink 1000 MR-compatible eye tracker (SR Research) sampling at 500 Hz. To confirm that differences between conditions are not attributable to differences in eye movements, we measured the frequency with which subjects broke fixation. We operationalized “fixation” as eye gaze remaining within 1.5° of the central fixation point in both the horizontal and vertical directions. Eye tracking data are unavailable for 4 subjects because of technical problems. Eye gaze in these subjects was monitored via camera by the experimenter for acceptable fixation performance.

Eye position data were passed through an 80 Hz first-order low-pass Butterworth filter for smoothing before further analysis. Excursions from fixation were counted within each block, then averaged over runs for each condition. There were no significant differences in the number of times that subjects broke fixation between the visual and auditory active conditions, between the visual and auditory passive conditions, or between each modality's active and passive conditions (t(11) < 1, p > 0.35 for all comparisons).
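As a rough illustration of the fixation criterion, the sketch below counts excursions from a ±1.5° window around fixation. It assumes the gaze traces have already been low-pass filtered and are expressed in degrees relative to the fixation point. Counting one break per inside-to-outside transition is an assumption, since the text does not specify how consecutive out-of-window samples were grouped, and the function name is hypothetical.

```python
def count_fixation_breaks(gaze_x_deg, gaze_y_deg, window_deg=1.5):
    """Count transitions from inside to outside the fixation window.

    Gaze is 'inside' when both the horizontal and vertical offsets from the
    fixation point are within window_deg. Consecutive outside samples are
    treated as a single excursion (an assumption, not stated in the text).
    """
    breaks = 0
    was_inside = True
    for x, y in zip(gaze_x_deg, gaze_y_deg):
        inside = abs(x) <= window_deg and abs(y) <= window_deg
        if was_inside and not inside:
            breaks += 1
        was_inside = inside
    return breaks


# Hypothetical gaze trace with two separate horizontal excursions.
x_trace = [0.0, 0.5, 2.0, 2.1, 0.3, 0.0, -1.6, 0.0]
y_trace = [0.0] * len(x_trace)
n_breaks = count_fixation_breaks(x_trace, y_trace)
```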

fMRI analysis: GLM fitting.

Functional data were analyzed using the FreeSurfer/FS-FAST pipeline. Analyses were performed on subject-specific anatomy unless noted otherwise. All subject data were registered to the individual's anatomical data using the mean of the functional data, motion corrected by run, slice-time corrected, intensity normalized, resampled onto the individual's cortical surface (voxels to vertices), and spatially smoothed on the surface with a 3 mm FWHM Gaussian kernel. Where group-averaged cortical maps are shown, subject data were registered to the FreeSurfer fsaverage anatomy using an otherwise identical processing pipeline.

The GLM analysis used standard procedures within FreeSurfer/FS-FAST (version 5.3.0). Scan time series were analyzed vertex by vertex on the surface using a GLM whose regressors matched the time course of the experimental conditions. The time points of the cue period were excluded by assigning them to a regressor of no interest. The canonical hemodynamic response function was convolved with the regressors before fitting; this canonical response was modeled by a gamma function (δ = 2.25 s, τ = 1.25). Contrasts between conditions gave t statistics for each vertex for each subject.
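To make the regressor construction concrete, the sketch below builds a block regressor by convolving a boxcar with a gamma-shaped response using the stated δ and τ. The functional form and the exponent α = 2 are assumptions (the text reports only δ and τ), and the function names, block onset, and run length are hypothetical; this is not the FS-FAST implementation.

```python
import math


def gamma_hrf(t, delta=2.25, tau=1.25, alpha=2.0):
    """Gamma-shaped response at time t (seconds after onset); zero until the delay delta."""
    if t <= delta:
        return 0.0
    s = (t - delta) / tau
    return (s ** alpha) * math.exp(-s)


def block_regressor(n_scans, tr, onsets, block_dur, dt=0.1, kernel_len=30.0):
    """Boxcar for the given block onsets convolved with gamma_hrf, sampled once per TR."""
    n_fine = int(round(n_scans * tr / dt))
    boxcar = [0.0] * n_fine
    for onset in onsets:
        start = int(round(onset / dt))
        stop = min(int(round((onset + block_dur) / dt)), n_fine)
        for i in range(start, stop):
            boxcar[i] = 1.0
    kernel = [gamma_hrf(k * dt) for k in range(int(round(kernel_len / dt)))]
    regressor = []
    for scan in range(n_scans):
        i = int(round(scan * tr / dt))
        total = 0.0
        for k, h in enumerate(kernel):
            if i - k < 0:
                break
            total += boxcar[i - k] * h
        regressor.append(total * dt)
    return regressor


# Hypothetical run: one 40 s block starting at 8 s, sampled at TR = 2 s.
regressor = block_regressor(n_scans=40, tr=2.0, onsets=[8.0], block_dur=40.0)
```

Under these assumptions the kernel peaks at δ + ατ = 4.75 s after onset, so the predicted response lags the start of each block by a few seconds.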

Sensory-biased regions of interest (ROIs) were defined for each individual subject by directly contrasting blocks in which the subject performed visual WM against blocks in which the subject performed auditory WM. This contrast was liberally thresholded at p < 0.05, uncorrected. Cortical significance maps were additionally smoothed using the FreeSurfer visualization toolkit (five iterations of a box kernel) before defining ROIs. This approach was taken (1) to maximally capture any frontal lobe vertices showing a bias for auditory or visual stimuli in individual subjects, and (2) to match the approach of Michalka et al. (2015) for purposes of testing the reproducibility of those findings within the same subjects. These functional data were used in combination with anatomical constraints drawn from Michalka et al. (2015) to define four bilateral LFC ROIs (sPCS, tgPCS, iPCS, cIFS; see Results) as well as large posterior sensory-biased ROIs (pVis, pAud). Each ROI was required to lie in the expected anatomical region (e.g., on the transverse gyrus intersecting the precentral sulcus), to exhibit the expected task contrast (e.g., auditory > visual), and to follow the pattern of interleaved visual- and auditory-biased regions. We occasionally observed functional activation that broke into two small neighboring regions of the same task contrast; in these cases, the two regions were grouped together to form the ROI. A few subjects exhibited additional interleaved areas of task activation that continued rostrally beyond the four LFC regions described here (Fig. 3-1), but this did not occur reliably across our participant pool.

WM-activated ROIs were defined for each individual subject by directly contrasting all blocks in which the subject performed either WM task against all blocks in which the subject performed a sensorimotor control. This contrast identified several regions defined as sensory-biased above, including large regions of LFC and posterior sensory cortices, as well as areas that we consider putative MD regions (dACC/pre-SMA and AIC; see Results).

We computed Dice coefficients (Dice, 1945; Rombouts et al., 1997; Bennett and Miller, 2010) to measure test-retest reliability of sensory-biased structures in frontal cortex. Seven subjects participated in both Michalka et al. (2015) and the present study. The two sets of scans were performed with different imaging parameters (Michalka: 3 × 3.125 × 3.125 mm voxels, TR = 2.6 s, no SMS; here: 2 mm isotropic voxels, TR = 2.0 s, SMS factor 3) and were collected at least 12 months apart for each subject. For each subject and each of the eight sensory-biased frontal structures, we computed the area of the structure defined by Michalka et al. (2015), the area of the structure defined in the present study, and the size of the intersection between them. The Dice coefficient is the ratio between the intersection size and the mean size of the two separate structures. Our thresholding and ROI definitions matched those used by Michalka et al. (2015).
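The Dice computation can be illustrated with a minimal sketch. Here each ROI is represented as a set of surface vertex indices and size is measured by vertex count, which is a simplifying assumption (the text measures surface area). The function name and the example vertex sets are hypothetical.

```python
def dice_coefficient(roi_a, roi_b):
    """Intersection size divided by the mean size of the two ROIs.

    Each ROI is an iterable of vertex indices. Vertex count stands in for
    surface area here, which assumes roughly equal area per vertex.
    """
    a, b = set(roi_a), set(roi_b)
    if not a and not b:
        raise ValueError("Dice coefficient is undefined for two empty ROIs")
    return len(a & b) / ((len(a) + len(b)) / 2)


# Hypothetical vertex sets for one structure defined in two sessions.
session_1 = {1, 2, 3, 4, 5, 6}
session_2 = {4, 5, 6, 7, 8, 9}
overlap = dice_coefficient(session_1, session_2)
```

The coefficient is 1 when the two ROIs coincide exactly and 0 when they share no vertices.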

For ROI signal change analyses, the percentage signal change data were extracted for all voxels in the ROI and averaged across all blocks for all runs for each condition. The percentage signal change measure was defined relative to the average activation level during the sensorimotor control condition.

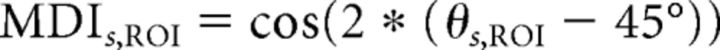

Multi-task activation index.

When examining the functionality of higher-order brain structures, it is often illuminating to compare activation across multiple cognitive tasks; however, subject-by-subject comparison of the relative activation in two tasks or conditions presents several analytical challenges. Ratio metrics are unstable when the denominator approaches or crosses 0, as can occur when one task yields deactivation relative to baseline. Further, when comparing task activation differences between ROIs, variability across the brain in vasculature or signal strength may mask the actual effects of interest. To mitigate these potential pitfalls, we have developed a vector-based, normalized approach that results in a Multi-Task Activation Index, quantifying the responsiveness to each task as a single value bounded between −1 and 1. In this paper, we refer to the index as the Multiple Demand Index (MDI).

We computed, for each vertex in an ROI, its modulation in visual WM versus passive exposure to the visual stimuli (using t scores), and in auditory WM versus passive exposure to the auditory stimuli. We then normalized these values for each subject (subtracting the mean t score across the entire cortical surface and dividing by the SD) to control for any possibility that one WM task led to higher overall activation than the other. Using these normalized scores, we described each vertex's activity as a vector in a 2D space (x-axis: auditory WM; y-axis: visual WM). For each subject, s, and each ROI, we calculated the mean vector $V_{s,\mathrm{ROI}}$ derived from that ROI's component vertices.

Mean vectors that lie along the positive diagonal (y = x) indicate equal contributions from each task, whereas vectors that lie along the negative diagonal (y = −x) indicate strong competitive effects between tasks. Vectors that lie along the x- or y-axis indicate that a single task dominates the response. To quantify these relationships, we extract the angle, $\theta_{s,\mathrm{ROI}}$, for each mean vector, $V_{s,\mathrm{ROI}}$, and convert this to an MDI, $\mathrm{MDI}_{s,\mathrm{ROI}}$, as follows:

|

giving us an index ranging from −1 to 1. Positive MDI scores denote MD behavior, negative scores denote competitive interactions between modalities, and scores near 0 denote purely unimodal behavior, not influenced by the other modality. This bound on possible values keeps the measure well behaved, even if an individual subject's data diverge from the hypothesized pattern. MDI was computed for each ROI within each subject and then averaged.
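The vector-based index can be sketched as follows. The angle-to-index mapping used here is an assumption chosen only to satisfy the properties described above: it is linear in angle, equals 1 on the positive diagonal, 0 on either axis, and −1 on the negative diagonal, and it is clamped at −1 beyond that. It should not be read as the authors' exact formula. The function names are hypothetical, and the use of the population SD for normalization is likewise an assumption.

```python
import math
from statistics import mean, pstdev


def mdi_from_vector(x, y):
    """Map a mean vector (x: auditory WM, y: visual WM) to an index in [-1, 1].

    Assumed mapping: 1 - d/45, where d is the angular distance in degrees
    from the positive diagonal, clamped below at -1.
    """
    theta = math.degrees(math.atan2(y, x))
    d = abs(theta - 45.0)
    if d > 180.0:
        d = 360.0 - d
    return max(-1.0, 1.0 - d / 45.0)


def zscore(values, reference):
    """Normalize values by the mean and (population) SD of a reference set."""
    mu = mean(reference)
    sd = pstdev(reference)
    return [(v - mu) / sd for v in values]


def multiple_demand_index(aud_roi_t, vis_roi_t, aud_cortex_t, vis_cortex_t):
    """MDI for one subject and ROI from per-vertex t scores.

    ROI t scores are normalized against the whole-cortex distribution for
    each task, averaged into a mean vector, and converted to an index.
    """
    x = mean(zscore(aud_roi_t, aud_cortex_t))
    y = mean(zscore(vis_roi_t, vis_cortex_t))
    return mdi_from_vector(x, y)


# Hypothetical t scores: an ROI equally modulated by both tasks.
cortex_t = [-1.0, 0.0, 1.0, 2.0, 3.0]
mdi_equal = multiple_demand_index([3.0, 3.0], [3.0, 3.0], cortex_t, cortex_t)
```

Because the index depends only on the direction of the mean vector, overall differences in signal strength between ROIs do not change its value.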

Results

Subjects (n = 15) were able to perform both the visual 2-back task (90.1% correct, SD = 7.5%) and the auditory 2-back task (87.5% correct, SD = 10.9%) at a high level. There was no significant difference in accuracy between the two tasks (t(14) = 1.463, p = 0.17). fMRI results are as follows:

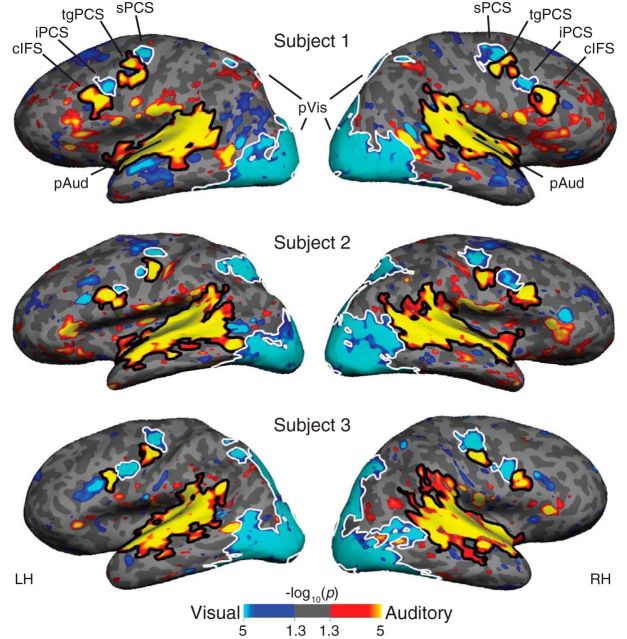

Sensory-biased analysis

We first contrasted auditory WM (auditory 2-back) and visual WM (visual 2-back) in individual subjects to identify structures that are selective for the sensory modality of memoranda. This contrast reveals that lateral frontal cortex contains multiple, bilateral sensory-biased regions. Figure 3 shows a direct contrast of auditory WM with visual WM for three individual subjects; all 15 individual subjects are shown in Figure 3-1. Two structures in lateral frontal cortex that are strongly biased for visual WM are interleaved with two structures that are strongly biased for auditory WM (Table 1). The sPCS and iPCS have significantly stronger BOLD responses during visual WM than during auditory WM. The gap between them consistently contained significantly stronger BOLD responses during auditory WM. This activation aligns with the transverse gyrus that divides the precentral sulcus into superior and inferior portions (tgPCS). We also observed a more ventral and anterior region with significantly stronger responses during auditory WM. This region lies in the cIFS. We were able to identify all four of these structures bilaterally in all 15 of our subjects (Table 1). Although these structures are reliably observed in individual subjects, they are only faintly apparent in a group-average contrast (Fig. 3-2) and do not survive a cluster-size correction analysis.

Figure 3.

Contrast of visual (blue) with auditory (orange) WM task activation in three individual subjects. ROIs for further analyses are outlined in white (visual-biased ROIs) and black (auditory-biased ROIs). All 15 individual subjects are shown in Figure 3-1, and a group-average analysis is shown in Figure 3-2.

Table 1.

Sensory-biased ROIsᵃ

| Hemi | ROI | Sensory bias | MNI coordinates (mean) | MNI coordinates (SD) | Area, mm² (mean) | Area, mm² (SD) | Reliability, n = 7 (mean) | Reliability, n = 7 (SD) |
|---|---|---|---|---|---|---|---|---|
| LH | sPCS | Visual | −34, −7, 51 | 7, 5, 4 | 290 | 265 | 0.57 | 0.12 |
| LH | tgPCS | Auditory | −45, −4, 45 | 6, 4, 2 | 354 | 183 | 0.70 | 0.08 |
| LH | iPCS | Visual | −44, 2, 33 | 4, 6, 4 | 340 | 187 | 0.60 | 0.09 |
| LH | cIFS | Auditory | −46, 12, 18 | 5, 5, 6 | 466 | 238 | 0.66 | 0.05 |
| RH | sPCS | Visual | 35, −5, 50 | 8, 4, 4 | 445 | 238 | 0.67 | 0.06 |
| RH | tgPCS | Auditory | 48, −1, 43 | 4, 4, 4 | 276 | 181 | 0.67 | 0.04 |
| RH | iPCS | Visual | 45, 4, 31 | 5, 5, 4 | 372 | 203 | 0.61 | 0.08 |
| RH | cIFS | Auditory | 48, 16, 19 | 5, 7, 7 | 423 | 206 | 0.58 | 0.09 |

ᵃSensory bias is given as visual (V > A) or auditory (A > V). MNI coordinates are of mean centroid position (N = 15). Reliability is given as the Dice coefficient between ROIs identified in the present study and those identified by Michalka et al. (2015) in 7 individuals common to both studies.

Figure 3-1.

Auditory-vs-visual task contrast in each of fifteen individual subjects. The bilateral pattern of four interleaved visual-biased and auditory-biased frontal structures is evident in each individual. The first three subjects are the three individuals shown in Figure 3.

Figure 3-2.

Group average of auditory-vs-visual task contrast, demonstrating minimal evidence for sensory-biased LFC ROIs. The group average maps identify traces of LFC visual- and auditory-biased regions, but would not survive a cluster correction analysis, and do not capture the robust pattern of interleaved sensory-biased structures that we report in individual subjects.

For each of the eight sensory-biased LFC regions, the mean MNI coordinates lie within 1 SD of the mean MNI coordinates of the corresponding area as defined by Michalka et al. (2015). The original study used a covert selective attention task with verbal stimuli (visual and auditory digits and letters). Our replication uses a WM task with nonlinguistic stimuli and no selective attention demands. Thus, our findings not only reproduce the observation of four bilateral sensory-biased regions in lateral frontal cortex, but also demonstrate that this organization generalizes across multiple aspects of the task design.

Seven subjects who participated in the present study also participated in the Michalka et al. (2015) study. Thus, in addition to demonstrating a replication of the sensory-biased regions in LFC using a novel paradigm, we were able to examine the degree to which the result can be replicated within individual subjects across tasks, scan sessions, and scan parameters. These sessions occurred more than a year apart with different imaging parameters (TR, voxel size, and SMS all differed). We observed a high degree of correspondence between studies for all ROIs (Table 1). Mean Dice coefficients range from 0.57 to 0.70 (all structures overlapped in all subjects), and all are significantly >0 (p ≤ 0.002). Subjects 1 and 2 shown in Figure 3 of the present study are also shown as Subjects 1 and 2 in Michalka et al. (2015, their Fig. 2). In addition to the task differences between the present experiment and Experiment 1 of Michalka et al. (2015), it should be noted that the present contrast includes visual versus auditory stimulation differences between conditions, whereas the Michalka et al. (2015) task simultaneously presented visual and auditory stimuli in all conditions. The effects of this stimulation difference can be seen in the increased activation in the vicinity of primary visual cortex and primary auditory cortex in the present study; however, no such differences emerge in the lateral frontal structures.

We also observed bilateral sensory-biased responses in posterior cortical areas. A large area of visual-biased activation (pVis) included portions of occipital cortex, the superior parietal lobule, and posterior temporal lobe (Fig. 3). Similarly, a large area of auditory-biased activation (pAud) comprised superior temporal gyrus and sulcus, including Heschl's gyrus. These effects in posterior visual and auditory regions likely reflect top-down attentional modulation of these structures (Jäncke et al., 1999; Kastner et al., 1999; Hopfinger et al., 2000; Petkov et al., 2004).

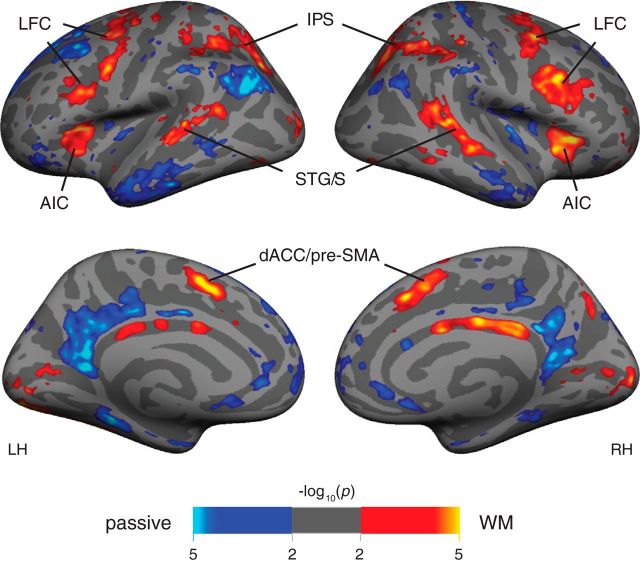

Recruitment by nonpreferred sensory modality

To compare our findings of sensory bias in LFC with prior work indicating MD responsiveness in LFC, we combined data across sensory modalities to examine overall WM activation, contrasting 2-back (visual and auditory) versus sensorimotor control (visual and auditory) conditions. Figure 4 shows group-average activation maps for this contrast. This activation pattern largely reflects a straightforward combination of the visual > auditory and the auditory > visual activation patterns observed in the sensory-biased analysis in individual subjects. Lateral frontal cortex, IPS, and STG/S are all bilaterally activated. Because this contrast equates sensory stimulation, early visual and early auditory cortex exhibit weaker activation than in the contrast between modalities. Notably, two additional cortical structures exhibit strong bilateral WM activation without evidence for sensory bias; activation is higher during WM than during sensorimotor control in bilateral anterior insula cortex (AIC), and bilateral dACC/pre-supplementary motor area (pre-SMA). We suggest that AIC and dACC/pre-SMA may contain pure-MD areas (i.e., task-activated and insensitive to sensory modality), consistent with prior reports identifying these regions as key members of the cognitive control network (Cole and Schneider, 2007; Dosenbach et al., 2007; Tamber-Rosenau et al., 2013). We are using MD here to denote participation in both visual and auditory WM tasks.

Figure 4.

Group-average activation (N = 15) for WM tasks (orange) versus sensorimotor control (blue). This analysis combines visual and auditory modalities. There is increased bilateral activation during WM in regions of lateral frontal cortex along the precentral sulcus and caudal inferior frontal sulcus, as well as in anterior insula, dorsal anterior cingulate/pre-SMA, STG/S, and the IPS and superior parietal lobule. The AIC and dACC/pre-SMA regions do not appear in the sensory modality contrast and are thus included in further analysis as putative “pure MD” regions.

We note that an investigator considering only group-average representations of these data would likely conclude that LFC is broadly recruited in WM tasks (Fig. 4), but that recruitment is not differentiated by visual or auditory sensory modality (Fig. 3-2). In this sense, our work replicates prior findings of MD behavior in LFC. However, analysis at the individual subject level allowed us to identify all eight sensory-biased LFC regions in each of 15 subjects. These sensory-biased regions are anatomically interleaved and relatively small; due to anatomical variability across individuals, these areas can easily be obscured by group-averaging techniques. The differences between Figure 3 and Figure 3-2 illustrate the importance of individual-subject approaches to characterizing frontal lobe organization.

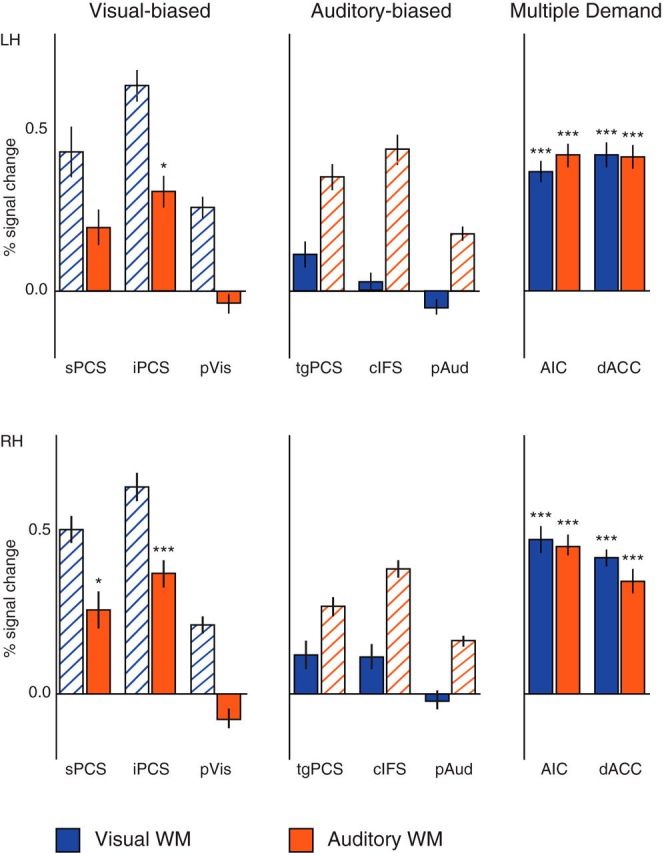

The remaining analyses examine the “partial MD hypothesis,” that is, whether any of the four bilateral sensory-biased LFC regions exhibit some degree of MD behavior. Specifically, we examine the degree to which each region, visual-biased sPCS and iPCS and auditory-biased tgPCS and cIFS, participates in supporting WM processes of the nonpreferred modality. To provide a broader context to this analysis, we include AIC and dACC/pre-SMA as two bilateral regions that we expect to exhibit a high degree of MD (modality-insensitive) responsiveness. We also include two bilateral posterior sensory-biased ROIs consisting of the large areas of activation in temporal and occipital/parietal cortices identified by contrasting auditory with visual WM. These regions are referred to as posterior visual (pVis) and posterior auditory (pAud). We expected these areas to show strong sensory bias effects, and little or no MD behavior. Each ROI was defined in individual subjects, with AIC and dACC/pre-SMA defined from the contrast of WM to sensorimotor control conditions (WM-activated), and all other ROIs defined from the contrast of visual WM vs auditory WM (sensory-biased).

Within each of these eight bilateral ROIs, we measured average BOLD signal change for visual WM versus passive exposure to the visual stimuli (Fig. 5, blue) and for auditory WM versus passive exposure to the auditory stimuli (Fig. 5, orange). These findings highlight an asymmetry in recruitment by WM for the nonpreferred sensory modality, indicating greater MD responsiveness within visual-biased frontal ROIs than within auditory-biased frontal ROIs. The ROIs are defined by the bias in their WM responses (sPCS, iPCS, and pVis are visual-biased; tgPCS, cIFS, and pAud are auditory-biased); here, however, we examine the degree of recruitment by the nonpreferred sensory modality. The degree of recruitment by the preferred modality is shown in Figure 5 (cross-hatched bars) for completeness only; comparisons involving those bars would constitute circular reasoning and are not statistically valid.

Figure 5.

Visual-versus-passive (blue) and auditory-versus-passive (orange) percentage signal change, in LH (top) and RH (bottom). Activation in a region's preferred modality is displayed here (using cross-hatched bars) for illustrative purposes only; because the ROIs were chosen for strong activation in the preferred modality, statistical comparisons between the cross-hatched and solid bars within a sensory-biased ROI would be invalid. Visual-biased frontal structures (iPCS and, to a lesser extent, sPCS) are also recruited during auditory WM, whereas auditory-biased frontal structures (tgPCS and cIFS) are only minimally recruited during visual WM. Posterior sensory structures (pVis and pAud) are weakly deactivated during WM in the nonpreferred modality. Multiple demand structures (AIC and dACC/pre-SMA) are recruited strongly in both tasks, and their degree of activation does not differ significantly between auditory and visual WM. Error bars are within-subject SE (Morey, 2008). *p < 0.05 (Holm–Bonferroni corrected). ***p < 0.001 (Holm–Bonferroni corrected).

To quantify this apparent asymmetry in crossmodal recruitment, we measured percentage signal change in the nonpreferred modality (in the sensory-biased regions) or both modalities (in the MD regions). All comparisons against zero were Holm–Bonferroni corrected for family-wise error rate (α = 0.05, 20 comparisons), and corrected p values are reported here. There was significant activation in left and right iPCS during auditory WM (left: t(14) = 4.84, p = 0.0029; right: t(14) = 6.43, p = 0.0002), and in right, but not left, sPCS during auditory WM (right: t(14) = 3.68, p = 0.0248; left: t(14) = 2.95, p = 0.0956). There was no significant activation in any frontal auditory-biased structure during visual WM (t(14) < 2.4, p > 0.22 for all comparisons). Posterior sensory-biased regions pVis and pAud showed bilateral nonsignificant deactivation in the nonpreferred modality (t(14) < 2.9, p > 0.09 for all comparisons).
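For readers unfamiliar with the correction used above, the following is a minimal sketch of the standard Holm–Bonferroni step-down adjustment. It is not the authors' analysis code; the function name `holm_bonferroni` and the raw p values are hypothetical and serve only to illustrate how corrected p values of the kind reported here can be obtained.

```python
def holm_bonferroni(p_values):
    """Return Holm-Bonferroni adjusted p values, in the original order."""
    m = len(p_values)
    # Indices of the raw p values, sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The k-th smallest p value (rank = k - 1) is scaled by (m - k + 1).
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Adjusted p values must not decrease as the raw p values increase.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Hypothetical raw p values, for illustration only (not data from the study).
raw = [0.001, 0.04, 0.03, 0.2]
corrected = holm_bonferroni(raw)
significant = [p < 0.05 for p in corrected]
```

A comparison is declared significant when its adjusted p value falls below α, which is equivalent to the sequential rejection procedure.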

A repeated-measures linear model with factors for ROI Preference (visual-biased, auditory-biased) and Hemisphere (left, right) tested the degree of activation in the nonpreferred modality (i.e., activation during visual WM in the auditory-biased frontal structures, and vice versa). This analysis revealed that visual-biased frontal structures are significantly more active during auditory WM than vice versa (F(1,14) = 19.99, p = 0.0005), confirming the asymmetry between visual-biased and auditory-biased LFC structures. There was also an effect of Hemisphere (R > L in the nonpreferred modality, F(1,14) = 12.54, p = 0.0033); the interaction between the two factors was nonsignificant (F < 1; p > 0.75).
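As an aid to interpreting the F(1,14) statistics, note that in a fully within-subject 2 × 2 design analyzed as a classical repeated-measures ANOVA, each main effect is equivalent to a one-sample t test on a per-subject contrast, with F = t² on (1, n − 1) degrees of freedom. The sketch below assumes that classical formulation; the exact model fitted in the study may differ, and the function name, the averaging across two ROIs per cell, and the data are hypothetical.

```python
import math
import statistics


def roi_preference_main_effect(cells):
    """Main effect of ROI Preference in a 2 x 2 within-subject design.

    cells[s] = (vis_left, vis_right, aud_left, aud_right): activation in the
    nonpreferred modality for subject s, per ROI Preference and Hemisphere.
    Returns (F, (df_numerator, df_denominator)).
    """
    # Per-subject contrast: visual-biased minus auditory-biased ROIs,
    # averaged over hemisphere.
    contrasts = [(vl + vr) / 2 - (al + ar) / 2 for vl, vr, al, ar in cells]
    n = len(contrasts)
    standard_error = statistics.stdev(contrasts) / math.sqrt(n)
    t = statistics.fmean(contrasts) / standard_error
    return t * t, (1, n - 1)


# Hypothetical values for four subjects (not data from the study).
example = [
    (1.0, 1.0, 0.0, 0.0),
    (2.0, 2.0, 0.0, 0.0),
    (3.0, 3.0, 0.0, 0.0),
    (2.5, 1.5, 0.0, 0.0),
]
F, dof = roi_preference_main_effect(example)
```

With 15 subjects, the same computation yields the (1,14) degrees of freedom reported above. The Hemisphere effect and the interaction follow from the analogous contrasts (right minus left, and the difference of differences).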

As expected, there was significant activation in left and right AIC in both modalities (t(14) > 8.9, corrected p < 0.0001 for all comparisons) and in left and right dACC/pre-SMA in both modalities (t(14) > 11.2, corrected p < 0.0001 for all comparisons), confirming their recruitment in each task individually. There were no significant differences between auditory and visual WM activation in AIC or dACC/pre-SMA in either hemisphere (t(14) < 1.68, p > 0.11, uncorrected for all comparisons).
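The error bars in Figure 5 are within-subject SEs (Morey, 2008). A minimal sketch of that computation follows, assuming the usual Cousineau normalization (remove each subject's mean, add back the grand mean) followed by Morey's correction factor of √(M/(M − 1)) for M within-subject conditions. The function name and the data layout are hypothetical and are not taken from the study.

```python
import math
import statistics


def within_subject_se(data):
    """data[s][c] is the value for subject s in condition c.

    Returns one Morey-corrected within-subject SE per condition.
    """
    n_subjects = len(data)
    n_conditions = len(data[0])
    grand_mean = statistics.fmean([v for row in data for v in row])
    # Cousineau normalization: remove between-subject offsets.
    normalized = [
        [v - statistics.fmean(row) + grand_mean for v in row] for row in data
    ]
    # Morey (2008) correction for the bias introduced by normalization.
    correction = math.sqrt(n_conditions / (n_conditions - 1))
    ses = []
    for c in range(n_conditions):
        column = [normalized[s][c] for s in range(n_subjects)]
        se = statistics.stdev(column) / math.sqrt(n_subjects)
        ses.append(se * correction)
    return ses


# Hypothetical values: three subjects, two conditions (not data from the study).
offsets_only = within_subject_se([[1.0, 2.0], [11.0, 12.0], [21.0, 22.0]])
mixed = within_subject_se([[1.0, 3.0], [2.0, 2.0], [3.0, 4.0]])
```

In the first example the subjects differ only by a constant offset, so the within-subject SE is zero even though the between-subject spread is large; this is the property that makes such error bars appropriate for repeated-measures comparisons.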

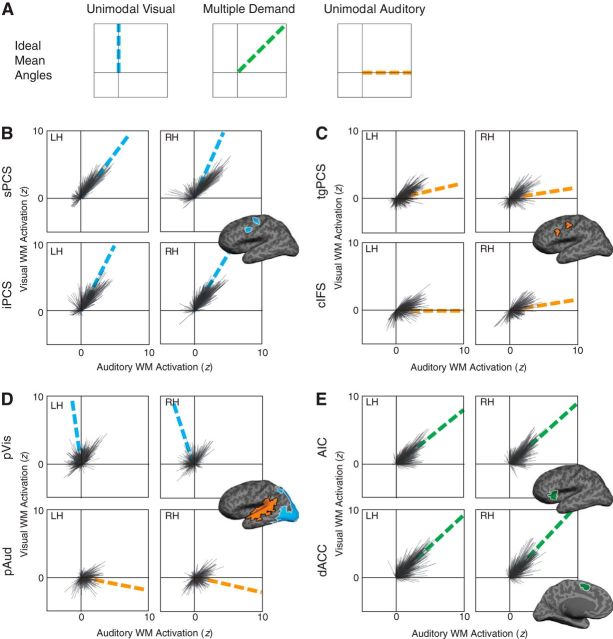

Multitask activation index

To more finely characterize the behavior of the structures of interest, we represented the behavior of each cortical surface vertex as a vector in a 2D space (Fig. 6 shows a subsample of 100 vertices per ROI per subject, for ease of visualization). The normalized difference (in units of SD; see Materials and Methods) between visual WM and visual sensorimotor control forms the vertical axis of this space; the normalized difference between auditory WM and auditory sensorimotor control forms the horizontal axis. Vectors in the upper right quadrant of this space thus correspond to vertices demonstrating MD behavior, with substantial activation in both visual and auditory WM (Fig. 6A). Vertices that are unimodal will appear as vectors clustered around the positive vertical (visual) or horizontal (auditory) axis, with neither activation nor deactivation in the other task. Vectors in the upper left or lower right quadrants of this space correspond to vertices demonstrating competitive interactions between modalities.

Figure 6.

We represented each vertex within the ROI as a vector in a 2D space, with its activation in visual WM on one axis and its activation in auditory WM on the other. We computed the mean vector of the resulting distribution, and represent its angle with a (colored) dashed line. A, Mean vector angles corresponding to an ideal structure that is unimodal visual, MD, or unimodal auditory, respectively. B, Visual-biased frontal ROIs (sPCS and iPCS). C, Auditory-biased frontal ROIs (tgPCS and cIFS). D, Posterior sensory-biased ROIs (pVis and pAud). E, Putative MD ROIs (AIC and dACC/pre-SMA). Mean vector angles and lengths are given in Table 6-1.

Table 6-1. Angle and length of mean vectors for each ROI shown in Figure 6 of the main text. Angles are reported as θ − 45° such that 0° corresponds to the y = x multiple-demand line, positive numbers approach the visual axis (45°; i.e., counterclockwise from that line), and negative numbers approach the auditory axis (−45°).

For each ROI, a mean vector per subject was computed by summing all individual vertex vectors and dividing the resulting length by the number of vertices; a mean vector angle across subjects was calculated similarly (Mardia and Jupp, 2009). The mean vector angle and mean vector length for each ROI are given in Table 6-1; the vector lengths appear to reflect primarily the magnitude of activation and were therefore disregarded in this analysis.
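The per-subject mean vector described above can be sketched as follows. This is an illustrative reconstruction, not the authors' code: the function names are hypothetical, each vertex is assumed to be supplied as an (auditory, visual) pair matching the horizontal and vertical axes of Figure 6, and the across-subject step, which the text describes only as "similar," is not shown.

```python
import math


def mean_vector(vertices):
    """vertices: list of (auditory, visual) pairs, one per surface vertex.

    Returns (angle_deg, length) of the mean vector, with the angle measured
    counterclockwise from the positive auditory (horizontal) axis.
    """
    n = len(vertices)
    # Summing the vectors and dividing by n equals averaging each component.
    mean_x = sum(x for x, _ in vertices) / n
    mean_y = sum(y for _, y in vertices) / n
    length = math.hypot(mean_x, mean_y)
    angle_deg = math.degrees(math.atan2(mean_y, mean_x))
    return angle_deg, length


def angle_relative_to_md_line(angle_deg):
    """Re-express an angle so that 0 is the y = x line (Table 6-1 convention)."""
    return angle_deg - 45.0


# Hypothetical vertices (not data from the study).
md_angle, md_length = mean_vector([(1.0, 1.0), (2.0, 2.0)])
visual_angle, _ = mean_vector([(0.0, 1.0), (0.0, 3.0)])
```

Here the first ROI is equally active in both tasks and lies on the 45° line (0° in the Table 6-1 convention), whereas the second is active only in visual WM and lies on the vertical axis (45° in the Table 6-1 convention).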

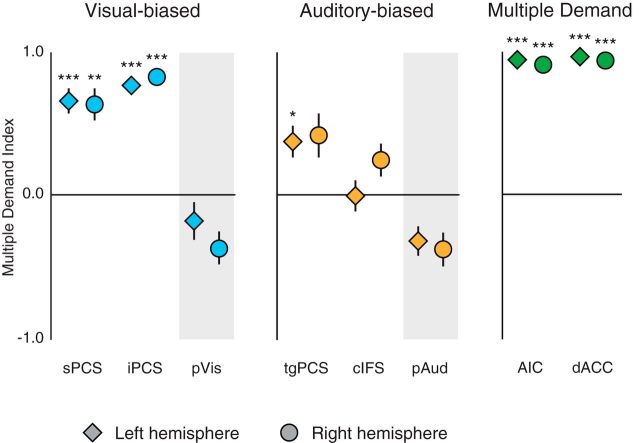

We again observed a difference between visual-biased and auditory-biased LFC structures. In visual-biased frontal structures (Fig. 6B), we observed mean vector angles lying approximately halfway between pure MD and pure unimodal visual behaviors. On the other hand, in auditory-biased frontal structures (Fig. 6C), we observed mean vector angles that stayed very close to the auditory axis, confirming that these structures were, on average, modulated only by auditory WM. To summarize these data, we computed an MDI for each structure (Fig. 7). This index is based on the cosine of the mean vector angle and ranges from −1 to 1; positive scores indicate MD behavior, negative scores indicate competitive interactions between modalities, and scores near 0 indicate purely unimodal behavior (for details, see Materials and Methods).
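The exact MDI formula is given in Materials and Methods and is not reproduced in this section. As an illustration only, one cosine-based function with the properties stated above (1 on the 45° MD line, 0 on either unimodal axis, −1 for purely competitive patterns) is cos(2(θ − 45°)), where θ is the mean vector angle measured from the auditory axis. The sketch below uses that assumed form; it should not be read as the definition used in the study.

```python
import math


def md_index(angle_deg):
    """Assumed illustrative index: cos(2 * (theta - 45 degrees)).

    angle_deg is the mean vector angle, counterclockwise from the positive
    auditory (horizontal) axis; the visual axis is at 90 degrees.
    """
    return math.cos(math.radians(2.0 * (angle_deg - 45.0)))


# Idealized angles (not data from the study).
mdi_md_line = md_index(45.0)       # equal activation in both tasks
mdi_visual_axis = md_index(90.0)   # unimodal visual
mdi_auditory_axis = md_index(0.0)  # unimodal auditory
mdi_upper_left = md_index(135.0)   # visual activation, auditory suppression
mdi_lower_right = md_index(-45.0)  # auditory activation, visual suppression
```

Under this assumed form, an ROI whose mean vector lies halfway between the MD line and the visual axis (θ = 67.5°) would score cos(45°) ≈ 0.71.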

Figure 7.

MDI for each of eight bilateral regions. MDI ranges from −1 to 1, with negative scores indicating competitive interaction between modalities (as seen in pVis and pAud), scores near 0 indicating unimodal activation (as in cIFS), and positive scores indicating activation in both WM tasks. Error bars are within-subject SE (Morey, 2008). *p < 0.05 (Holm–Bonferroni corrected). **p < 0.01 (Holm–Bonferroni corrected). ***p < 0.001 (Holm–Bonferroni corrected).

For each ROI, we tested whether its MDI score differed from zero, using Holm–Bonferroni corrected p values to control family-wise error rate (α = 0.05, 16 comparisons). All four LFC visual-biased ROIs had MDI scores significantly >0 (left sPCS: t(14) = 5.35, p = 0.0010; right sPCS: t(14) = 4.55, p = 0.0041; left iPCS: t(14) = 13.02, p < 0.0001; right iPCS: t(14) = 12.69, p < 0.0001). Of the auditory LFC ROIs, left tgPCS scored significantly >0 (t(14) = 3.24, p = 0.0472); the other three did not differ significantly from zero (right tgPCS: t(14) = 2.50, p = 0.14; left cIFS: t(14) = 0.00, p > 0.99; right cIFS: t(14) = 1.76, p = 0.30). These scores make it clear that visual-biased frontal structures have substantial MD responsiveness, whereas auditory-biased frontal structures tend to cluster around unimodal patterns of activation. A repeated-measures linear model of MDI scores of the frontal sensory-biased structures with factors ROI Modality (visual-biased, auditory-biased) and Hemisphere (left, right) identified a substantial main effect of ROI Modality (F(1,14) = 17.94, p = 0.0008), with visual structures scoring significantly higher. There was no effect of Hemisphere and no interaction (F(1,14) < 1, p > 0.36). Again, visual-biased LFC regions exhibit greater MD responsiveness than auditory-biased LFC regions.

As an exploratory analysis, we tested whether the two visual-biased ROIs (sPCS and iPCS) or the two auditory-biased ROIs (tgPCS and cIFS) differed in their MDI scores, but found only a small effect for higher MDI scores in tgPCS than in cIFS (F(1,14) = 5.043, p = 0.0414) and no difference between the visual-biased ROIs (F(1,14) = 1.9, p = 0.19).

To provide context for these findings, we conducted the same analysis on our large, posterior, sensory-biased structures, pVis and pAud (Fig. 6D). Each of these structures demonstrated competitive interactions between modalities, with mean vector angles in the upper left quadrant for pVis, indicating activation by visual WM and suppression by auditory WM. pAud showed the opposite pattern, with mean vector angles in the lower right quadrant, indicating activation by auditory WM and suppression by visual WM. A second piece of context came from areas that were defined by their WM activation and lack of clear univariate evidence for sensory bias (Fig. 6E). AIC and dACC/pre-SMA each showed, bilaterally, mean vector angles that very closely matched the 45° MD line. The MDI scores (Fig. 7) for posterior sensory structures are negative; the MDI scores in AIC and dACC/pre-SMA are very close to 1. The MDI behavior of sensory-biased LFC regions lies between these two extremes.

Discussion

We examined specialization for sensory modality, vision versus audition, within regions of human LFC. Our findings: (1) provide strong replication of our prior report of two visual-biased regions interleaved with two auditory-biased regions bilaterally in LFC (Michalka et al., 2015); (2) demonstrate high test-retest reliability of these areas within individual subjects; and (3) reveal a functional asymmetry between the visual-biased and auditory-biased LFC regions. We find that visual-biased LFC structures more strongly participate in a general-purpose cognitive network for attention and WM than do auditory-biased LFC structures. These findings significantly bolster evidence for sensory-biased functional regions within human frontal cortex, and help to reconcile these sensory-biased findings with prior evidence for MD processing in these areas (Duncan and Owen, 2000; Postle et al., 2000; Hautzel et al., 2002; Ivanoff et al., 2009; Duncan, 2010; Fedorenko et al., 2013; Crittenden et al., 2016).

Sensory-biased regions of LFC

By mapping visual and auditory preference in individual subjects, we were able to identify two bilateral visual-biased structures, the superior and inferior precentral sulcus, and two bilateral auditory-biased structures, the transverse gyrus bridging precentral sulcus and the caudal inferior frontal sulcus (Fig. 3; Fig. 3-1). Our results are a robust replication of earlier findings from our laboratory (Michalka et al., 2015), despite substantial differences in the cognitive tasks involved. Michalka et al. (2015) used a selective spatial attention task with verbal stimuli, in which subjects monitored one of two auditory and eight visual RSVP streams for digits among letters. The task used in the present study was as follows: (1) nonspatialized (note that processing of any complex visual stimulus requires spatial integration); (2) nonlinguistic (note that WM for animal sounds may recruit subarticulatory processes); and (3) nonselective, with no distractors presented. Despite these differences, we found a remarkably similar pattern of interdigitated visual-biased and auditory-biased structures in lateral frontal cortex. Although these areas were not apparent in a group-average map (Fig. 3-2), we observed all eight LFC structures in each of 15 individual subjects (Fig. 3-1). In 7 subjects who participated in both studies, each LFC structure in our study overlapped with the corresponding structure identified in the previous project, despite differences in tasks and scan parameters. Sensory-biased structures in LFC are thus robust and reliable.

Our findings are consistent with nonhuman primate studies that find distinct auditory processing and visual processing regions in lateral frontal cortex (Petrides and Pandya, 1999; Romanski and Goldman-Rakic, 2002; Romanski, 2007); however, the anatomically interdigitated organization (visual-, auditory-, visual-, and auditory-biased regions) appears unique to humans. In humans, single-modality fMRI studies have identified activation in the vicinity of sPCS and iPCS during visual attention and short-term memory tasks (Hagler and Sereno, 2006; see also Kastner et al., 2007; Fusser et al., 2011; Todd et al., 2011; Jerde et al., 2012; Jerde and Curtis, 2013; Passaro et al., 2013; Takahashi et al., 2013). Similarly, previous single-modality studies have identified activation in the vicinity of tgPCS and cIFS during auditory attention and short-term memory tasks, but typically with less anatomical precision than reported here (Salmi et al., 2009; Hill and Miller, 2010; Westerhausen et al., 2010; Falkenberg et al., 2011; Huang et al., 2013; Kong et al., 2014; Seydell-Greenwald et al., 2014; Glasser et al., 2016).

Given the robustness of our findings, it is surprising that this pattern of sensory-biased structures was previously unobserved in humans. We attribute our success to the careful application of individual subject analyses. These structures are small and interleaved, and, relative to anatomical landmarks, their exact boundaries are not aligned across subjects. If we had considered only group-level analyses of our data, we would have observed robust WM activation in lateral frontal cortex (Fig. 4), but little or no evidence of sensory modality-biased regions in LFC (consistent with Krumbholz et al., 2009; Mayer et al., 2017). In that sense, our group-level analysis replicates prior findings that LFC is not selective for sensory modality. However, individual subject analyses revealed all eight sensory-biased LFC structures in each of 15 individual subjects. Thus, identification and characterization of functional structures in the human frontal lobes seem to depend on analysis at the individual subject level.

One recent study identified a rostral/caudal differentiation in sensory preference (Mayer et al., 2017) with caudal LFC (in the vicinity of our four frontal sensory-biased ROIs) more active in visual than in auditory selective attention, and rostral LFC more active in auditory selective attention. This group-average approach may have missed small-grained differentiation between modality preferences in caudolateral prefrontal cortex (Mueller et al., 2013) and emphasized the strength of visual attention effects in visual-biased ROIs (Fig. 5).

Sensory-biased structures recruited for visual and auditory WM have particular relevance for content-based models of WM (Baddeley, 1992). Although early work implicated LFC as the site of WM storage (Goldman-Rakic, 1996; Fuster, 1997; Rao et al., 1997), more recent work points to posterior regions of the brain as the site of storage. The sensory recruitment hypothesis posits that WM comprises stimulus-specific activity within the same brain regions that encode perception of that stimulus (Pasternak and Greenlee, 2005; Ester et al., 2009; Harrison and Tong, 2009; Riggall and Postle, 2012). Our results show that LFC regions participate in sensory-specific WM processes, but we cannot confirm whether stimulus representations are maintained in LFC.

Multiple demand versus sensory-biased LFC activity

The term MD has been defined using several different criteria (e.g., participation in minimally related tasks, sensitivity to task difficulty) in prior work (Postle et al., 2000; Fox et al., 2005; Fedorenko et al., 2013; Blank et al., 2014; Crittenden and Duncan, 2014; Woolgar et al., 2016). In the present study, we use the term specifically to describe participation in both visual and auditory WM tasks.

Evidence for sensory-specialized structures in LFC appears to conflict with earlier results indicating that this region of the brain demonstrates MD behavior (e.g., Postle et al., 2000; Duncan and Owen, 2000; Hautzel et al., 2002; Ivanoff et al., 2009; Duncan, 2010; Fedorenko et al., 2013; Crittenden et al., 2016), and even explicit shared recruitment between auditory and visual tasks (Tombu et al., 2011; Braga et al., 2013). We examined this apparent contradiction by testing the degree to which sensory-biased frontal structures were recruited for the nonpreferred task, and found a consistent functional asymmetry between the visual-biased and auditory-biased LFC regions. The visual-biased frontal ROIs, sPCS and iPCS, demonstrated both a sensory bias and a significant degree of MD behavior (our Hypothesis 3). The partial MD responsiveness of visual-biased LFC regions is consistent with the notion that these areas contain a heterogeneous neural population (e.g., Rao et al., 1997), which may include subpopulations of neurons that support sensory-independent WM function and/or subpopulations that support visual processing only. These areas have previously been shown to be recruited in auditory spatial tasks (Tark and Curtis, 2009; Michalka et al., 2015); here, we demonstrate that such recruitment occurs even in the absence of spatial task demands. sPCS and iPCS may play a generalized, modality-agnostic role in attention and WM. In contrast, auditory-biased frontal ROIs, tgPCS and cIFS, tended to be selective for specifically auditory WM (our Hypothesis 2).

Recent research has demonstrated that LFC can be parcellated into language-selective and MD structures, which participate in discrete cognitive networks (Fedorenko et al., 2013; Blank et al., 2014). Our visual-biased regions sPCS and iPCS approximately correspond to caudal portions of their MD network; our auditory-biased regions tgPCS and cIFS approximately correspond to caudal portions of their language network. Therefore, our finding of stronger MD responsiveness within visual-biased structures aligns with their reports. One key difference between the vision/audition framework and the MD/language framework is that the sensory-biased LFC regions are strongly bilateral, whereas the language network is strongly left lateralized. Future work should more fully characterize the relationship between these functional organizations.

Other aspects of LFC organization should also be investigated in relationship to sensory selectivity. LFC areas recruited for cognitive control are hierarchically organized, with more rostral areas recruited as the complexity and task demands increase (Koechlin et al., 2003; Badre and D'Esposito, 2009). However, it is unknown whether visual-biased and auditory-biased regions define separate such hierarchies. Alternatively, the MD behavior of the visual-biased structures might reflect a sensory-agnostic cognitive control system. Similarly, our prior work observed visual-biased LFC regions specializing for spatial processing whereas auditory-biased regions specialize for temporal processing, regardless of stimulus sensory modality (Michalka et al., 2015). These results, in apparent correspondence with the three visual spatial maps recently identified by Mackey et al. (2017), raise questions about the broader organization of spatial and temporal processing.

The present findings demonstrate the replicability and robustness of sensory-biased organization in LFC, and help to reconcile this finding with MD accounts of LFC functionality. However, questions remain about the relationship between this pattern of organization and other characterizations of human frontal cortex. Future work should examine possible relationships between sensory modality and other abstract information domains, to further elucidate the cognitive and computational role played by human LFC.

Footnotes

This work was supported by Center of Excellence for Learning in Education Science and Technology, National Science Foundation Science of Learning Center Grant SMA-0835976 to B.G.S.-C., and National Institutes of Health Grants F32-EY026796 to A.L.N., F31-MH101963 to S.W.M., and R01-EY022229 to D.C.S. We thank Kelly Martin for stimulus preparation; Sean Tobyne for the schematic in Figure 1; and Ilona Bloem, James Brissenden, Allen Chang, Kathryn Devaney, Emily Levin, David Osher, and Maya Rosen for assistance with scanning.

The authors declare no competing financial interests.

References

- Baddeley A. (1992) Working memory: the interface between memory and cognition. J Cogn Neurosci 4:281–288. doi:10.1162/jocn.1992.4.3.281

- Badre D, D'Esposito M (2009) Is the rostro-caudal axis of the frontal lobe hierarchical? Nat Rev Neurosci 10:659–669. doi:10.1038/nrn2667

- Bennett CM, Miller MB (2010) How reliable are the results from functional magnetic resonance imaging? Ann N Y Acad Sci 1191:133–155. doi:10.1111/j.1749-6632.2010.05446.x

- Blank I, Kanwisher N, Fedorenko E (2014) A functional dissociation between language and multiple-demand systems revealed in patterns of BOLD signal fluctuations. J Neurophysiol 112:1105–1118. doi:10.1152/jn.00884.2013

- Braga RM, Wilson LR, Sharp DJ, Wise RJ, Leech R (2013) Separable networks for top-down attention to auditory non-spatial and visuospatial modalities. Neuroimage 74:77–86. doi:10.1016/j.neuroimage.2013.02.023

- Braga RM, Hellyer PJ, Wise RJ, Leech R (2017) Auditory and visual connectivity gradients in frontoparietal cortex. Hum Brain Mapp 38:255–270. doi:10.1002/hbm.23358

- Brainard DH. (1997) The psychophysics toolbox. Spat Vis 10:433–436. doi:10.1163/156856897X00357

- Cole MW, Schneider W (2007) The cognitive control network: integrated cortical regions with dissociable functions. Neuroimage 37:343–360. doi:10.1016/j.neuroimage.2007.03.071

- Crittenden BM, Duncan J (2014) Task difficulty manipulation reveals multiple demand activity but no frontal lobe hierarchy. Cereb Cortex 24:532–540. doi:10.1093/cercor/bhs333

- Crittenden BM, Mitchell DJ, Duncan J (2016) Task encoding across the multiple demand cortex is consistent with a frontoparietal and cingulo-opercular dual networks distinction. J Neurosci 36:6147–6155. doi:10.1523/JNEUROSCI.4590-15.2016

- Dale AM, Fischl B, Sereno MI (1999) Cortical surface-based analysis: I. Segmentation and surface reconstruction. Neuroimage 9:179–194. doi:10.1006/nimg.1998.0395

- Dice LR. (1945) Measures of the amount of ecologic association between species. Ecology 26:297–302. doi:10.2307/1932409

- Dosenbach NU, Fair DA, Miezin FM, Cohen AL, Wenger KK, Dosenbach RA, Fox MD, Snyder AZ, Vincent JL, Raichle ME, Schlaggar BL, Petersen SE (2007) Distinct brain networks for adaptive and stable task control in humans. Proc Natl Acad Sci U S A 104:11073–11078. doi:10.1073/pnas.0704320104

- Duncan J. (2010) The multiple-demand (MD) system of the primate brain: mental programs for intelligent behaviour. Trends Cogn Sci 14:172–179. doi:10.1016/j.tics.2010.01.004

- Duncan J, Owen AM (2000) Common regions of the human frontal lobe recruited by diverse cognitive demands. Trends Neurosci 23:475–483. doi:10.1016/S0166-2236(00)01633-7

- Ester EF, Serences JT, Awh E (2009) Spatially global representations in human primary visual cortex during working memory maintenance. J Neurosci 29:15258–15265. doi:10.1523/JNEUROSCI.4388-09.2009

- Falkenberg LE, Specht K, Westerhausen R (2011) Attention and cognitive control networks assessed in a dichotic listening fMRI study. Brain Cogn 76:276–285. doi:10.1016/j.bandc.2011.02.006

- Fedorenko E, Duncan J, Kanwisher N (2013) Broad domain generality in focal regions of frontal and parietal cortex. Proc Natl Acad Sci U S A 110:16616–16621. doi:10.1073/pnas.1315235110

- Fox MD, Snyder AZ, Vincent JL, Corbetta M, Van Essen DC, Raichle ME (2005) The human brain is intrinsically organized into dynamic, anticorrelated functional networks. Proc Natl Acad Sci U S A 102:9673–9678. doi:10.1073/pnas.0504136102

- Fusser F, Linden DE, Rahm B, Hampel H, Haenschel C, Mayer JS (2011) Common capacity-limited neural mechanisms of selective attention and spatial working memory encoding. Eur J Neurosci 34:827–838. doi:10.1111/j.1460-9568.2011.07794.x

- Fuster JM. (1997) Network memory. Trends Neurosci 20:451–459. doi:10.1016/S0166-2236(97)01128-4

- Glasser MF, Coalson TS, Robinson EC, Hacker CD, Harwell J, Yacoub E, Ugurbil K, Andersson J, Beckmann CF, Jenkinson M, Smith SM, Van Essen DC (2016) A multi-modal parcellation of human cerebral cortex. Nature 536:171–178. doi:10.1038/nature18933

- Goldman-Rakic PS. (1996) Regional and cellular fractionation of working memory. Proc Natl Acad Sci U S A 93:13473–13480. doi:10.1073/pnas.93.24.13473

- Hagler DJ Jr, Sereno MI (2006) Spatial maps in frontal and prefrontal cortex. Neuroimage 29:567–577. doi:10.1016/j.neuroimage.2005.08.058

- Harrison SA, Tong F (2009) Decoding reveals the contents of visual working memory in early visual areas. Nature 458:632–635. doi:10.1038/nature07832

- Hautzel H, Mottaghy FM, Schmidt D, Zemb M, Shah NJ, Müller-Gärtner HW, Krause BJ (2002) Topographic segregation and convergence of verbal, object, shape and spatial working memory in humans. Neurosci Lett 323:156–160. doi:10.1016/S0304-3940(02)00125-8

- Hill KT, Miller LM (2010) Auditory attentional control and selection during cocktail party listening. Cereb Cortex 20:583–590. doi:10.1093/cercor/bhp124

- Hopfinger JB, Buonocore MH, Mangun GR (2000) The neural mechanisms of top-down attentional control. Nat Neurosci 3:284–291. doi:10.1038/72999

- Huang TR, Hazy TE, Herd SA, O'Reilly RC (2013) Assembling old tricks for new tasks: a neural model of instructional learning and control. J Cogn Neurosci 25:843–851. doi:10.1162/jocn_a_00365

- Ivanoff J, Branning P, Marois R (2009) Mapping the pathways of information processing from sensation to action in four distinct sensorimotor tasks. Hum Brain Mapp 30:4167–4186. doi:10.1002/hbm.20837

- Jäncke L, Mirzazade S, Shah NJ (1999) Attention modulates activity in the primary and the secondary auditory cortex: a functional magnetic resonance imaging study in human subjects. Neurosci Lett 266:125–128. doi:10.1016/S0304-3940(99)00288-8

- Jerde TA, Curtis CE (2013) Maps of space in human frontoparietal cortex. J Physiol Paris 107:510–516. doi:10.1016/j.jphysparis.2013.04.002

- Jerde TA, Merriam EP, Riggall AC, Hedges JH, Curtis CE (2012) Prioritized maps of space in human frontoparietal cortex. J Neurosci 32:17382–17390. doi:10.1523/JNEUROSCI.3810-12.2012

- Kastner S, Pinsk MA, De Weerd P, Desimone R, Ungerleider LG (1999) Increased activity in human visual cortex during directed attention in the absence of visual stimulation. Neuron 22:751–761. doi:10.1016/S0896-6273(00)80734-5

- Kastner S, DeSimone K, Konen CS, Szczepanski SM, Weiner KS, Schneider KA (2007) Topographic maps in human frontal cortex revealed in memory-guided saccade and spatial working-memory tasks. J Neurophysiol 97:3494–3507. doi:10.1152/jn.00010.2007

- Kleiner M, Brainard D, Pelli D (2007) What's new in Psychtoolbox-3? [Abstract] Perception 36 (ECVP Abstract Supplement).

- Koechlin E, Ody C, Kouneiher F (2003) The architecture of cognitive control in the human prefrontal cortex. Science 302:1181–1185. doi:10.1126/science.1088545

- Kong L, Michalka SW, Rosen ML, Sheremata SL, Swisher JD, Shinn-Cunningham BG, Somers DC (2014) Auditory spatial attention representations in the human cerebral cortex. Cereb Cortex 24:773–784. doi:10.1093/cercor/bhs359

- Krumbholz K, Nobis EA, Weatheritt RJ, Fink GR (2009) Executive control of spatial attention shifts in the auditory compared to the visual modality. Hum Brain Mapp 30:1457–1469. doi:10.1002/hbm.20615

- Mackey WE, Winawer J, Curtis CE (2017) Visual field map clusters in human frontoparietal cortex. eLife 6:e22974. doi:10.7554/eLife.22974

- Mardia KV, Jupp PE (2009) Directional statistics, Vol 494. New York: Wiley.

- Mayer AR, Ryman SG, Hanlon FM, Dodd AB, Ling JM (2017) Look hear! The prefrontal cortex is stratified by modality of sensory input during multisensory cognitive control. Cereb Cortex 27:2831–2840. doi:10.1093/cercor/bhw131

- Michalka SW, Kong L, Rosen ML, Shinn-Cunningham BG, Somers DC (2015) Short-term memory for space and time flexibly recruit complementary sensory-biased frontal lobe attention networks. Neuron 87:882–892. doi:10.1016/j.neuron.2015.07.028

- Morey RD. (2008) Confidence intervals from normalized data: a correction to Cousineau (2005). Tutorial Quant Methods Psychol 4:61–64. doi:10.20982/tqmp.04.2.p061

- Mueller S, Wang D, Fox MD, Yeo BT, Sepulcre J, Sabuncu MR, Shafee R, Lu J, Liu H (2013) Individual variability in functional connectivity architecture of the human brain. Neuron 77:586–595. doi:10.1016/j.neuron.2012.12.028

- Passaro AD, Elmore LC, Ellmore TM, Leising KJ, Papanicolaou AC, Wright AA (2013) Explorations of object and location memory using fMRI. Front Behav Neurosci 7:105. 10.3389/fnbeh.2013.00105 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pasternak T, Greenlee MW (2005) Working memory in primate sensory systems. Nat Rev Neurosci 6:97–107. 10.1038/nrn1603 [DOI] [PubMed] [Google Scholar]

- Petkov CI, Kang X, Alho K, Bertrand O, Yund EW, Woods DL (2004) Attentional modulation of human auditory cortex. Nat Neurosci 7:658–663. 10.1038/nn1256 [DOI] [PubMed] [Google Scholar]

- Petrides M, Pandya DN (1999) Dorsolateral prefrontal cortex: comparative cytoarchitectonic analysis in the human and macaque brain and corticocortical connection patterns. Eur J Neurosci 11:1011–1036. 10.1046/j.1460-9568.1999.00518.x [DOI] [PubMed] [Google Scholar]

- Postle BR, Stern CE, Rosen BR, Corkin S (2000) An fMRI investigation of cortical contributions to spatial and nonspatial visual working memory. Neuroimage 11:409–423. 10.1006/nimg.2000.0570 [DOI] [PubMed] [Google Scholar]

- Rao SC, Rainer G, Miller EK (1997) Integration of what and where in the primate prefrontal cortex. Science 276:821–824. 10.1126/science.276.5313.821 [DOI] [PubMed] [Google Scholar]

- Riggall AC, Postle BR (2012) The relationship between working memory storage and elevated activity as measured with functional magnetic resonance imaging. J Neurosci 32:12990–12998. 10.1523/JNEUROSCI.1892-12.2012 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Romanski LM (2007) Representation and integration of auditory and visual stimuli in the primate ventral lateral prefrontal cortex. Cereb Cortex 17:i61–i69. doi:10.1093/cercor/bhm099

- Romanski LM, Goldman-Rakic PS (2002) An auditory domain in primate prefrontal cortex. Nat Neurosci 5:15–16. doi:10.1038/nn781

- Rombouts SA, Barkhof F, Hoogenraad FG, Sprenger M, Valk J, Scheltens P (1997) Test-retest analysis with functional MR of the activated area in the human visual cortex. AJNR Am J Neuroradiol 18:1317–1322.

- Salmi J, Rinne T, Koistinen S, Salonen O, Alho K (2009) Brain networks of bottom-up triggered and top-down controlled shifting of auditory attention. Brain Res 1286:155–164. doi:10.1016/j.brainres.2009.06.083

- Setsompop K, Gagoski BA, Polimeni JR, Witzel T, Wedeen VJ, Wald LL (2012) Blipped-controlled aliasing in parallel imaging for simultaneous multislice echo planar imaging with reduced g-factor penalty. Magn Reson Med 67:1210–1224. doi:10.1002/mrm.23097

- Seydell-Greenwald A, Greenberg AS, Rauschecker JP (2014) Are you listening? Brain activation associated with sustained nonspatial auditory attention in the presence and absence of stimulation. Hum Brain Mapp 35:2233–2252. doi:10.1002/hbm.22323

- Takahashi E, Ohki K, Kim DS (2013) Dissociation and convergence of the dorsal and ventral visual working memory streams in the human prefrontal cortex. Neuroimage 65:488–498. doi:10.1016/j.neuroimage.2012.10.002

- Tamber-Rosenau BJ, Dux PE, Tombu MN, Asplund CL, Marois R (2013) Amodal processing in human prefrontal cortex. J Neurosci 33:11573–11587. doi:10.1523/JNEUROSCI.4601-12.2013

- Tark KJ, Curtis CE (2009) Persistent neural activity in the human frontal cortex when maintaining space that is off the map. Nat Neurosci 12:1463–1468. doi:10.1038/nn.2406

- Todd JJ, Han SW, Harrison S, Marois R (2011) The neural correlates of visual working memory encoding: a time-resolved fMRI study. Neuropsychologia 49:1527–1536. doi:10.1016/j.neuropsychologia.2011.01.040

- Tombu MN, Asplund CL, Dux PE, Godwin D, Martin JW, Marois R (2011) A unified attentional bottleneck in the human brain. Proc Natl Acad Sci U S A 108:13426–13431. doi:10.1073/pnas.1103583108

- Westerhausen R, Moosmann M, Alho K, Belsby SO, Hämäläinen H, Medvedev S, Specht K, Hugdahl K (2010) Identification of attention and cognitive control networks in a parametric auditory fMRI study. Neuropsychologia 48:2075–2081. doi:10.1016/j.neuropsychologia.2010.03.028

- Woolgar A, Jackson J, Duncan J (2016) Coding of visual, auditory, rule, and response information in the brain: 10 years of multivoxel pattern analysis. J Cogn Neurosci 28:1433–1454. doi:10.1162/jocn_a_00981

Supplementary Materials

Figure 3-1 (PDF file, 6.6 MB). Auditory-vs-visual task contrast in each of fifteen individual subjects. The bilateral pattern of four interleaved visual-biased and auditory-biased frontal structures is evident in each individual. The first three subjects are the three individuals shown in Figure 3.

Figure 3-2 (PDF file, 603 KB). Group average of auditory-vs-visual task contrast, demonstrating minimal evidence for sensory-biased LFC ROIs. The group-average maps show traces of LFC visual- and auditory-biased regions, but these traces would not survive a cluster correction analysis and do not capture the robust pattern of interleaved sensory-biased structures that we report in individual subjects.

Table 6-1 (DOCX file, 89.7 KB). Angle and length of mean vectors for each ROI shown in Figure 6 of the main text. Angles are reported as θ − 45° such that 0° corresponds to the y = x multiple-demand line, positive numbers approach the visual axis (45°; i.e., counterclockwise from that line), and negative numbers approach the auditory axis (−45°).
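
The angle convention used in Table 6-1 can be illustrated with a minimal sketch. It assumes that each ROI is summarized by 2-D points whose x-coordinate is the auditory-task response and whose y-coordinate is the visual-task response, and that the mean vector is the component-wise average of those points. The function name `mean_vector_angle_length` and the input layout are hypothetical and are not taken from the article's analysis code.

```python
import math


def mean_vector_angle_length(points):
    """Return (angle_deg, length) of the mean of 2-D points.

    Assumption: each point is (auditory, visual), i.e. x is the
    auditory-task response and y is the visual-task response.
    The angle is reported as theta - 45 degrees, so 0 lies on the
    y = x line, +45 on the visual (y) axis, -45 on the auditory (x) axis.
    """
    if not points:
        raise ValueError("points must be non-empty")
    n = len(points)
    mean_x = sum(p[0] for p in points) / n  # mean auditory response
    mean_y = sum(p[1] for p in points) / n  # mean visual response
    theta = math.degrees(math.atan2(mean_y, mean_x))  # angle from the x-axis
    return theta - 45.0, math.hypot(mean_x, mean_y)


# Hypothetical values for illustration only (not data from the study).
angle, length = mean_vector_angle_length([(0.2, 0.8), (0.4, 1.0)])
print(f"angle relative to y = x: {angle:.1f} deg, length: {length:.2f}")
```

Under these assumptions, a point cloud centered on the y = x line yields an angle near 0°, and points lying closer to the visual axis yield positive angles.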