Abstract

Introduction

Inspections are widely used in health care as a means to improve the health services delivered to patients. Despite their widespread use, there is little evidence of their effect. The mechanisms for how inspections can promote change are poorly understood. In this study, we use a national inspection campaign of sepsis detection and initial treatment in hospitals as a case to: (1) Explore how inspections affect the involved organisations. (2) Evaluate what effect external inspections have on the process of delivering care to patients, measured by change in indicators reflecting how sepsis detection and treatment are carried out. (3) Evaluate whether external inspections affect patient outcomes, measured as change in the 30-day mortality rate and length of hospital stay.

Methods and analysis

The intervention that we study is inspections of sepsis detection and treatment in hospitals. The intervention will be rolled out sequentially over 12 months to 24 hospitals. Our effect measures are change in indicators related to the detection and treatment of sepsis, the 30-day mortality rate and length of hospital stay. We collect data from patient records at baseline, before the inspections, and at 8 and 14 months after the inspections. We use logistic regression models and linear regression models to compare the various effect measurements between the intervention and control periods. All the models will include time as a covariate to adjust for potential secular changes in the effect measurements during the study period. We collect qualitative data before and after the inspections, and we will conduct a thematic content analysis to explore how inspections affect the involved organisations.

Ethics and dissemination

The study has obtained ethical approval from the Regional Ethics Committee of Norway Nord and the Norwegian Data Protection Authority. It is registered at www.clinicaltrials.gov (Identifier: NCT02747121). Results will be reported in international peer-reviewed journals.

Trial Registration

NCT02747121; Pre-results.

Keywords: external inspection, sepsis, stepped wedge design, effect, regulation

Strengths and limitations of the study.

This is a comprehensive study in a field where few experimental studies have previously been published.

The study has the potential to provide new knowledge about the effects of external inspections and their mechanisms for change.

Key challenges include possible contamination of the control group, lack of documentation in patient records and the overall sample size.

Introduction

External inspections constitute a core component of regulatory regimes and certification and accreditation processes.1 2 Different terms such as external review, supervision and audit have been used to describe this activity.3 4 There are differences between these approaches, but they have in common that a healthcare organisation’s performance is assessed according to an externally defined standard. We use the term ‘external inspection’, which implies that the inspection is initiated and controlled by an organisation external to the one being inspected.5 We define external inspection as: a system, process or arrangement in which some dimensions or characteristics of a healthcare provider organisation and its activities are assessed or analysed against a framework of ideas, knowledge, or measures derived or developed outside that organisation.6

Inspections are widely used in healthcare as a means to improve the quality of care delivered to patients.1 7 Quality of care is a complex concept that can be understood in different ways.8 We understand quality of care as: the degree to which health services for individuals and populations increase the likelihood of desired health outcomes, and are consistent with current professional knowledge.9 We found this definition expedient because it highlights that the quality of care encompasses outcomes for patients and populations, and that the outcomes are dependent on the delivery of health services consistent with current professional knowledge. External inspections can be used with the intention of ensuring that the delivery of health services is consistent with current professional knowledge.

Despite the widespread use of external inspections,2 few robust studies have been undertaken to assess their effects on the quality of care.10 The effects of inspections remain unclear and the evidence is contradictory.5 11–13 Observational studies have demonstrated a positive association between accreditation and the ability to promote change, professional development, quality systems and clinical leadership.11 14 15 Research suggests that there is an association between inspections and different quality outcomes, for example, reduced incidence of pressure ulcers and suicides16–18; however, randomised controlled studies have not been able to find evidence of impact of inspections on the quality of care.19 20

We suggest that the conflicting evidence can partly be explained by the fact that inspection can be considered a complex intervention consisting of different elements that are introduced into varying organisational contexts.21 The way the inspection process is conducted will thus influence how the inspected organisations implement improvements following inspection. The manner in which external inspections affect the involved organisation is currently poorly understood.5 22–24 We need more knowledge about the effects of inspections as well as a better understanding of the mechanisms for how they can contribute to improving quality of care.5 11 Such knowledge can deepen our understanding of why the effects of external inspections seem to vary, which in turn can facilitate the development of more effective ways to conduct inspections.5

In this study, we use external inspections related to sepsis detection and treatment in hospitals to explore how such inspections affect the involved organisations and to evaluate their effect on the quality of care. Sepsis is a prevalent disease and one of the main causes of death among hospitalised patients internationally and in Norway.25 26 Early treatment with antibiotics and improved compliance with treatment guidelines are associated with reduced mortality among patients with sepsis.27–30 International studies have shown that compliance with treatment guidelines varies, and that increased compliance can improve patient outcomes.27 28 31 32 External inspections of Norwegian hospitals have demonstrated that insufficient governance of clinical processes in the emergency room could have severe consequences for patients admitted to the hospital with undiagnosed sepsis.33 Against this background, the Norwegian Board of Health Supervision decided to conduct a nationwide inspection campaign of sepsis detection and treatment in acute care hospitals in Norway during 2016–2017 as part of its regular inspection activities. The Norwegian Board of Health Supervision also decided to conduct the present scientific study to explore and assess the effects of the inspection campaign.

Methods and analysis

Study aim

The study has three main objectives:

To explore how inspections affect the involved organisations;

To evaluate what effect external inspections have on the process of delivering care to patients with sepsis, measured by change in key indicators reflecting how sepsis detection and treatment are carried out;

To evaluate whether external inspections affect patient outcomes, measured as change in the 30-day mortality rate and length of hospital stay for patients with sepsis.

Conceptual framework

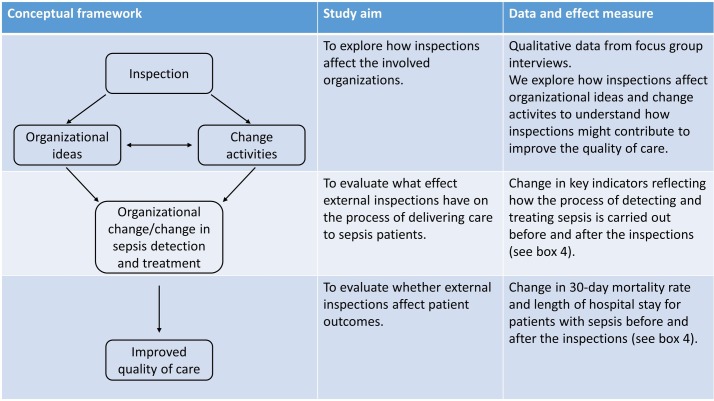

We take the perspective that quality of care can be considered a system property that is dependent on how the organisation providing care performs as a whole.34 Improving the quality of care is thus dependent on changing organisational behaviour, which implies changing the way clinicians interact and perform their clinical processes.35 36 Change in organisational behaviour is a complex social process that involves a range of different organisational activities.37 If external inspection is to contribute to improvement in the quality of care, it should have an impact on those activities involved in organisational change, here defined as any modification in organisational composition, structure or behaviour.38

We have previously conducted a systematic review of published research to identify the mechanisms of how external inspections can contribute to improving quality of care in health organisations.39 By combining empirical evidence and theoretical contributions, we found evidence to support that external inspections need to affect both organisational ideas and organisational change activities to improve the quality of care. Organisational ideas encompass theoretical constructs like organisational readiness for change, awareness of current practice and performance gaps and organisational acceptance that change is necessary.40 41 Organisational change activities refer to key activities involved in quality improvement like setting goals, planning and implementing improvement measures and evaluating the effect of such measures.42 43

Figure 1 depicts our overall conceptual framework, how the elements of the framework relate to the different study aims and the corresponding data and effect measures. We suggest that inspections can affect organisational ideas and initiate change activities, which in turn can lead to organisational change. We collect qualitative data to explore how the inspections affect the involved organisations. Moreover, we suggest that organisational change and change in the process of detecting and treating patients with sepsis can contribute to improving the quality of care. To measure change in the process of detecting and treating sepsis, we collect data that reflect this process, for example, time to triage, time to initial assessment by a physician and time to treatment with antibiotics. We refer to these data as process indicators, because they reflect how the process of detecting and treating sepsis is carried out. To measure change in the quality of care, we use two outcome measures: length of hospital stay and 30-day mortality rate.

Figure 1.

Conceptual study framework.

Study design

Randomised controlled trials (RCTs) are considered the gold standard for assessing the effects of an intervention.44 However, in the present project, an RCT will not be feasible as it is impossible to establish an appropriate control group. Data regarding detection and treatment of sepsis are not available as routine data in Norway. Such data can only be collected by reviewing individual patient records. According to Norwegian legislation, the inspection teams have access to patient records and can collect relevant data as part of the inspection. If we were to conduct an RCT, the inspection teams would have to collect data from hospitals that were not inspected. Collecting such data is a key ingredient of an inspection and would itself be an intervention. Furthermore, if the data collected from hospitals in the control group were indicative of non-compliant behaviour, the inspection teams would have to follow up their findings with those hospitals; thus, it would no longer be a control group. A stepped-wedge design has been recommended for evaluating intervention effects when it is not feasible to establish a control group.45 Furthermore, this type of design is recommended for evaluating the effect of service delivery type interventions where it is not possible to expose the whole study population to the intervention simultaneously and where implementation takes time.46 In our case, the intervention is aimed at changing service delivery for patients with sepsis, it is not possible to conduct all inspections simultaneously, and implementation of change following the intervention takes time.

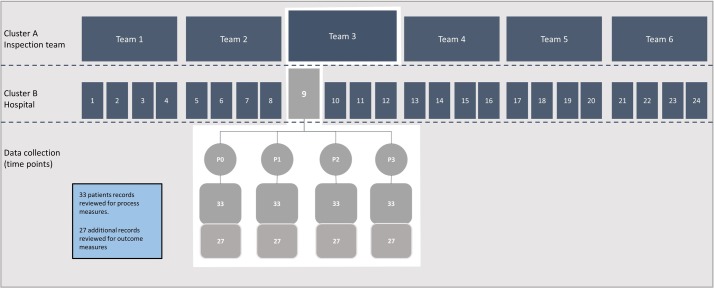

The intervention will be rolled out sequentially over 12 months to 24 hospitals, in six clusters of four geographically close hospitals. Inspections are carried out by six regional teams with members from different county governors and external clinical experts. Each team conducts four inspections in its region, yielding 24 inspected hospitals. Owing to practical and administrative implications, and general work planning for the involved team members, the four inspections must be clustered together and conducted within a limited time span. Each regional team is assigned a time slot of about 7 weeks in which to conduct its inspections; the order of these time slots is randomised (random order generated by computer). Table 1 illustrates the incomplete stepped-wedge design used in the study. The design is incomplete in that we do not continuously collect data from all the included sites; rather, we collect data at four different time points. The inspections are rolled out successively over 1 year. On average, there are two inspections each month, though the exact number of inspections each month may vary due to practical work planning for the inspection teams and the involved hospitals.
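The computer-generated ordering of the six regional time slots can be illustrated with a minimal sketch. The team labels, the seed value and the function name are hypothetical and serve only to make the example reproducible; the protocol states only that the order was generated by computer, not how.

```python
import random


def randomise_slot_order(teams, seed):
    """Return a dict mapping each team to its time-slot position (1 = first)."""
    rng = random.Random(seed)   # dedicated generator so the order is reproducible
    order = list(teams)         # copy so the caller's list is left untouched
    rng.shuffle(order)          # uniform random permutation of the teams
    return {team: slot for slot, team in enumerate(order, start=1)}


# Hypothetical labels for the six regional inspection teams.
TEAMS = ["team_A", "team_B", "team_C", "team_D", "team_E", "team_F"]

# Hypothetical seed; any fixed value gives a reproducible allocation.
slots = randomise_slot_order(TEAMS, seed=2016)
```

Each team receives exactly one of the six slots, and rerunning the function with the same seed reproduces the same allocation, which makes the randomisation auditable.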

Table 1.

Study design

| Hospital | Activities in chronological order, September 2015 to June 2018 |
| --- | --- |
| 1 | P0, FI, P1, SV, P2, FA, FI, P3, FA |
| 2 | P0, P1, SV, P2, FA, P3, FA |
| 3 | P0, P1, SV, P2, FA, P3, FA |
| 4 | P0, P1, SV, P2, FA, P3, FA |
| 5 | P0, FI, P1, SV, P2, FA, FI, P3, FA |
| 6 | P0, P1, SV, P2, FA, P3, FA |
| 7 | P0, P1, SV, P2, FA, P3, FA |
| 8 | P0, P1, SV, P2, FA, P3, FA |
| 9 | P0, FI, P1, SV, P2, FA, FI, P3, FA |
| 10 | P0, P1, SV, P2, FA, P3, FA |
| 11 | P0, P1, SV, P2, FA, P3, FA |
| 12 | P0, P1, SV, P2, FA, P3, FA |
| 13 | P0, FI, P1, SV, P2, FA, FI, P3, FA |
| 14 | P0, P1, SV, P2, FA, P3, FA |
| 15 | P0, P1, SV, P2, FA, P3, FA |
| 16 | P0, P1, SV, P2, FA, P3, FA |
| 17 | P0, FI, P1, SV, P2, FA, FI, P3, FA |
| 18 | P0, P1, SV, P2, FA, P3, FA |
| 19 | P0, P1, SV, P2, FA, P3, FA |
| 20 | P0, P1, SV, P2, FA, P3, FA |
| 21 | P0, FI, P1, SV, P2, FA, FI, P3, FA |
| 22 | P0, P1, SV, P2, FA, P3, FA |
| 23 | P0, P1, SV, P2, FA, P3, FA |
| 24 | P0, P1, SV, P2, FA, P3, FA |

FA, follow-up audit; FI, focus group interviews; P0, baseline measurement; P1, measurement before site visit; P2, measurement 8 months after the site visit; P3, measurement 14 months after the site visit; SV, site visit.

To answer our research questions, we also need to collect qualitative data. We use focus group interviews to collect qualitative data. The phenomenon that we intend to study is how external inspections affect organisational change processes. Organisational change is dependent on interaction between individuals and groups in the organisation.47 Focus groups enable interaction between group members during data collection, thus resembling the phenomenon we wish to study. An inspection at a hospital represents one case, and for each case we conduct focus group interviews with the inspection team, leaders in the inspected hospital and clinicians before and after the inspections.

Study population and intervention

The intervention studied is external inspections of 24 acute care hospitals in Norway, addressing early detection and treatment of patients admitted with possible sepsis. Table 2 presents the key elements of the intervention.

Table 2.

Key elements of the intervention

| Time in months | Activity |
| --- | --- |
| 1 | Inspection team announces inspection and requests the hospital to submit information. |
| 2 | Inspection team reviews records of patients with sepsis and collects relevant data for the inspection criteria. Data are collected for two time periods, baseline (September 2015) and right before the site visit. Inspection team reviews information from hospital and prepares for the site visit. |
| 3 | Two-day site visit at the hospital with interviews of key personnel. At the end of the site visit, the inspection team presents the preliminary findings, and the hospital can comment on these preliminary findings. |
| 4–5 | The inspection team writes a preliminary report of their findings. The hospital can comment on the report. |
| 6 | The inspection team sends the final report to the hospital. |
| Continuously | The hospital plans and implements improvement measures. |
| 11 | Follow-up audit 8 months after the site visit. The inspection team reviews records of patients with sepsis and collects the same data as they did prior to the site visit. Report on findings from audit. |
| 17 | Follow-up audit 14 months after the site visit. The inspection team reviews records of patients with sepsis and collects the same data as they did prior to the site visit. Report on findings from audit. |

The inspections are conducted by six regional teams from the county governors in Norway. The teams consist of a minimum of four inspectors. The leader of the team has long experience and particular training in doing inspections. The team has medical and legal expertise. One of the team members is an external medical expert who has special expertise on sepsis. The expert works on a daily basis in a hospital but has been hired part time by the Norwegian Board of Health Supervision to assist the inspection teams. The clinical experts do not participate in inspections of hospitals where they have their regular work.

The county governors are responsible for supervising the hospitals in their region. According to Norwegian legislation, hospitals are required to inform the county governor about serious adverse patient events, and the county governor investigates such patient events to decide whether the hospital has delivered inappropriate care. Furthermore, the county governor handles general patient complaints and carries out inspections in different areas on a regular basis. Based on these supervisory activities, the county governors possess knowledge about risk and vulnerability at the hospitals in their counties, for example, high turnover of personnel, lack of key competence or financial constraints.

About 40 acute care hospitals in Norway treat patients with sepsis. There is large variation in the size of these hospitals and the number of patients treated. All 40 hospitals are eligible for inspection. The standard procedure used by the Norwegian Board of Health Supervision for conducting nationwide inspections is followed. This procedure implies that the regional teams decide which hospitals to inspect in their region. The main criterion for selecting which hospitals to inspect is hospital size. The large hospitals treat more patients, and consequently substandard care will affect many patients. Moreover, the inspection teams also use their local knowledge about specific risks and vulnerability when selecting hospitals for inspection.

The inspections have two components, a system audit48 and two follow-up audits with verification of patient records, at 8 and 14 months after the initial system revision. The system audits are based on the general requirements for system-oriented, planned inspections by the Norwegian Board of Health Supervision.49 This procedure has its basis in the International Organization for Standardization (ISO) procedures for system revisions48 and has been developed and adapted to the Norwegian context. Inspection consists of four main phases: the development of audit criteria, announcement of inspection and collection of relevant documentation and data, site visit and reporting and follow-up.

The intervention is a statutory inspection. The audit criteria are grounded in two main principles of Norwegian legislation: (1) healthcare services should be safe and effective and provided in accordance with sound professional practice50 and (2) organisations that provide healthcare services are required to have a quality management system to ensure that healthcare services are provided in accordance with the legal requirements. Hospital management is accountable for the quality system. In this way, system audits can challenge the quality of performance through addressing the managerial-level responsibility to ensure good practice by providing an expedient organisational framework for delivering sound professional practice.

In cooperation with clinical experts on sepsis, the Norwegian Board of Health Supervision developed the audit criteria for those clinical practices involved in delivering care for patients with sepsis, as well as audit criteria for the quality management systems to ensure such practice. These audit criteria were based on current internationally accepted guidelines for sepsis detection and treatment31 51 and reflect good clinical practice. The main audit criteria are displayed in box 1.

Box 1. Main audit criteria.

Triage within 15 min of arrival at the hospital.

Assessment by a physician in accordance with time limits specified by the triage system.

Blood samples taken within 30 min of arrival.

Vital signs completed within 30 min of arrival.

Blood cultures taken before treatment with antibiotics.

Adequate supplementary investigation to detect the locus of infection.

Antibiotic treatment within 60 min of arrival for patients with organ dysfunction.

Early antibiotic treatment of patients with sepsis without organ dysfunction. There is no definite time limit for this patient population, but it is essential that they receive adequate diagnostics and observations with no unnecessary delay and early onset of treatment when indicated.

Adequate treatment with liquids and oxygen within 60 min of arrival.

Adequate observation of patients while in the emergency department.

Adequate discharge of patients from the emergency room for further treatment in the hospital (written statement indicating patient status, treatment and further actions).

The quality management system should contain updated procedures for how the hospital handles all aspects of sepsis detection and treatment.

Hospital management must assess to what degree these procedures are implemented and followed.

For this, information is required about when patients are actually triaged, assessed by a physician and receive appropriate treatment.

The inspection teams announce inspections 8 weeks prior to the site visit by means of a standardised letter, which includes a list of documentation that the inspection teams request of the inspected hospitals (box 2).

Box 2. Information requested from hospitals prior to the site visit.

Information about hospital organisational structure, including description of the distribution of authority and responsibility.

Names and job descriptions for all leaders and professional groups involved in sepsis treatment.

Relevant written guidelines and procedures regarding sepsis detection and treatment.

Quality goals and performance measurements for treatment of sepsis.

Internal audits or other reports regarding sepsis detection and treatment.

Information about relevant patient complaints and how these have been followed up.

Information about relevant internal discrepancy reports and how these have been followed up.

Overview of all educational procedures and activities related to the topic of the inspection.

Additional information that the hospital feels may be relevant.

Before the site visit, the inspection teams collect relevant data from patient records corresponding with the audit criteria and review all information that the hospital has submitted. The inspection teams are reinforced with extra medical expertise when they review patient records and collect the data. The data collection is supervised by the external medical expert and the head of the inspection team. By analysing these data, the inspectors develop an initial risk profile, based on which the subsequent on-site audit is planned in detail.

The on-site visit lasts 2 days. The first day begins with a meeting for all personnel involved with the inspection, in which the purpose of the inspection and methods used are explained. Thereafter, the inspection team conducts individual interviews with a strategic sample of professionals and managers at different organisational levels. The interviews typically last from 15 to 60 min, depending on the amount of information needed from the informant. Around 20 informants are interviewed in total. At the end of the visit, the inspection team presents its key findings and preliminary conclusions to the staff and managers who were involved in the inspection. The objective of this feedback is to give the hospital an opportunity to correct any misunderstandings or misinterpretations.

Following the on-site visit, the inspection team writes a report, which is a consensus product of the whole team. The report presents the findings, the audit criteria against which the findings are reviewed and conclusions regarding whether the hospital delivers care in accordance with the audit criteria. The findings are based on all the collected data including written documents, interviews and review of patient records. The report not only presents assessment for each individual audit criterion, it also presents an overall judgement of the hospital’s management system and its overall ability to deliver care in accordance with sound professional standards. Those findings that support the judgement can thus provide important information on why the hospital fails to deliver care in accordance with sound professional standards and what the hospital can do to improve care.

If the inspection reveals non-compliant performance, hospital management is responsible for planning and implementing necessary improvements. The inspection teams follow up with the hospitals to confirm that necessary changes have been implemented and that the changes in fact improve clinical care. At 8 and 14 months after the inspections, the inspection teams conduct a follow-up audit where they review patient records and collect the same data that they collected prior to the inspection. These data provide insight into how the diagnostic and treatment processes for patients with sepsis are carried out, and are used to judge whether the hospital has managed to improve sepsis detection and treatment. Following the audits at 8 and 14 months, the inspection teams write a short report summarising the initial findings of the inspection, what the hospital has done to improve its performance and the findings from the audit.

To reduce variation in the delivery of inspections, the Norwegian Board of Health Supervision has developed a common framework standardising intervention delivery. All audit criteria and the way in which these should be measured are operationalised and described, and a detailed description of how the inspection teams should carry out the inspections is provided. Such a framework can contribute to harmonisation of the inspection teams with regard to the way in which key activities are performed.52 The inspection teams also receive training and exchange experiences to harmonise the way in which they deliver the intervention.

Data collection

Quantitative data

Figure 2 outlines the clusters and the data collection. For each inspection, we collect data at four different time points, referred to as P0, P1, P2 and P3. P0 is the baseline measurement for all hospitals before the Norwegian Board of Health Supervision announced the inspection campaign. The campaign is part of the regular planned inspection activities. These activities are transparent for the hospitals and are announced in advance, in this particular case 6 months before the first inspection. For practical reasons, the inspection teams collect data for P0 and P1 at the same time, right before the inspection. Data for P0 are thus collected retrospectively, but it is predefined that the patients to be included are the last patients with suspected sepsis who were admitted prior to 1 October 2015.

Figure 2.

Illustration of clusters and data collection.

Data for P0 are collected from the time period right before the inspection campaign was announced. All hospitals know that they can be inspected. By collecting data before the campaign was announced, we can track changes throughout the inspection cycle and assess to what extent changes are implemented before the inspections are undertaken. P1 is the preinspection measurement and P2 and P3 are post-inspection measurements. The regional inspection teams collect data during the inspection and audits conducted 8 (P2) and 14 months (P3) following the initial inspection. These data serve two purposes. They are used to guide the judgements on whether the inspected hospitals comply with the requirements and to evaluate how inspections affect the clinical processes involved in diagnosing and treating sepsis.

We use a two-step approach to identify eligible patients who might have sepsis on arrival at the hospital. First, a record search of the National Patient Register (NPR) is carried out, using diagnostic codes for infections and sepsis. The NPR is a national common register of all patients and the treatment they have received in Norwegian hospitals. This record search will produce a list of patients with an identification number that will enable the inspection teams to access the corresponding patient records at inspected hospitals. Second, the inspection teams assess the individual patient records for eligibility. The inclusion criteria are clinically suspected infection and at least two systemic inflammatory response syndrome (SIRS) events, excluding elevated leucocytes.53 The inspection teams extract data from the included patient records.
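The second screening step can be sketched as a simple rule. The thresholds below follow the conventional SIRS definition (temperature above 38 °C or below 36 °C, heart rate above 90 beats per minute, respiratory rate above 20 breaths per minute); the protocol does not list its operational thresholds in this section, so these values, the field names and the function names are assumptions made for illustration. The alternative respiratory criterion based on PaCO2 is omitted for brevity.

```python
from dataclasses import dataclass


@dataclass
class Vitals:
    temperature_c: float    # body temperature in degrees Celsius
    heart_rate: int         # beats per minute
    respiratory_rate: int   # breaths per minute


def sirs_count_excluding_leucocytes(v: Vitals) -> int:
    """Count the SIRS criteria met, ignoring the leucocyte criterion."""
    criteria = [
        v.temperature_c > 38.0 or v.temperature_c < 36.0,  # assumed threshold
        v.heart_rate > 90,                                  # assumed threshold
        v.respiratory_rate > 20,                            # assumed threshold
    ]
    return sum(criteria)


def is_eligible(suspected_infection: bool, v: Vitals) -> bool:
    """Inclusion rule: suspected infection and at least two SIRS criteria."""
    return suspected_infection and sirs_count_excluding_leucocytes(v) >= 2
```

For example, a patient with suspected infection, a temperature of 38.5 °C and a heart rate of 110 would be included, whereas the same vital signs without a suspected infection would not.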

Based on the literature, previous research and discussion with clinical experts, we identified relevant effect measures that reflect how hospitals handle patients admitted to the hospital with suspected sepsis.28 54 These indicators are displayed in box 3. In addition, data are collected for the following control variables: patient sex and age, sepsis with organ dysfunction and hospital size.

Box 3. Effect measures.

Process measures

Percentage of patients who have been triaged within 15 min of arrival in the emergency room.

Percentage of patients who have been assessed by a medical doctor within the time frame set during triage.

Percentage of patients in which vital signs have been evaluated within 30 min.

Percentage of patients in which blood lactate has been measured within 30 min.

Percentage of patients from which supplementary blood samples have been taken within 30 min.

Percentage of patients from which a blood culture is taken prior to administration of antibiotics.

Percentage of patients in which adequate supplementary investigation to detect locus of infection has been undertaken within 24 hours.

Percentage of patients who have received antibiotics treatment within 1 hour of arrival in the emergency room.

Percentage of patients who have received intravenous fluids within 30 min.

Percentage of patients who have received oxygen therapy within 30 min.

Percentage of patients for whom an adequate surveillance regime has been established.

Percentage of patients who have been adequately discharged from the emergency room for further treatment in the hospital (written statement indicating patient status, treatment and further actions).

Outcome measures

Hospital length of stay.

30-day mortality rate.

We use the 30-day mortality rate as our key outcome measure, defined as the proportion of patients with sepsis who die within 30 days of hospital admission. Mortality measures based on in-hospital deaths alone can be misleading as indicators of hospital performance.55 Our measure therefore also includes out-of-hospital deaths. Using the unique personal identification number provided to all citizens of Norway, we are able to link the patient record data with data from the National Registry to calculate the 30-day mortality rate. Thirty-day mortality rate is an established, national quality indicator for Norwegian hospitals,56 and this indicator has been shown to have better validity as a hospital performance measure than in-hospital mortality for selected medical conditions.57 Thirty-day mortality rate has also previously been used to assess effects of measures to improve care for patients with sepsis.58
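As a minimal sketch of how this outcome could be computed once admission dates have been linked to dates of death, consider the following. The data layout (pairs of admission date and optional death date) and the choice to count a death on day 30 as within the window are assumptions made for illustration.

```python
from datetime import date, timedelta
from typing import Optional, Sequence, Tuple

# Each patient is (admission date, date of death or None if alive at linkage).
Patient = Tuple[date, Optional[date]]


def died_within_30_days(admission: date, death: Optional[date]) -> bool:
    """True if death occurred on or after admission and no later than day 30.

    Deaths both in and out of hospital count, because the death date comes
    from registry linkage rather than from the hospital stay.
    """
    if death is None:
        return False
    return admission <= death <= admission + timedelta(days=30)


def thirty_day_mortality_rate(patients: Sequence[Patient]) -> float:
    """Proportion of included patients who died within 30 days of admission."""
    if not patients:
        raise ValueError("no patients supplied")
    deaths = sum(died_within_30_days(adm, dth) for adm, dth in patients)
    return deaths / len(patients)
```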

We consider the percentage of patients with organ dysfunction who have received antibiotic treatment within 1 hour as the key process measure, because it is associated with our outcome measure, 30-day mortality rate.27 28 Furthermore, we suggest that early triage and early assessment by a medical doctor are key activities to secure timely treatment with antibiotics and that the corresponding process measures consequently are important too.

The power calculations were performed using the stepped-wedge function in Stata/IC V.14.0 (StataCorp LLC) software for Windows, developed by Hemming and Girling.59 The statistical power in a stepped-wedge design depends on the total number of intervention sites, the total number of data collection points for each intervention site, the number of patient records included at each data collection point, the correlation between clustered observations within the same hospital (intracluster correlation) and the implementation period.45 We based our calculations on 24 intervention sites, 4 data collection points and 33 patient records per collection point at each intervention site. As the intracluster correlation may vary between samples and between process and outcome measurements, it is not straightforward to specify an intracluster correlation in advance. In addition, we could not find any estimated intracluster correlation in previous trials of patients with sepsis. Consequently, we chose an intracluster correlation of 0.05, which is in line with that estimated for several patient outcomes in a cluster randomised trial of heart failure patients.60 Type I and II errors were assumed to be 0.05 and 0.20, respectively.

Because assessment of patient records must be done manually, data collection is resource demanding. Thus, we must balance the design and power of the study against the available resources, a manageable amount of data collection and likely detectable changes in the key effect measures. For the process data, we have powered the study according to the key clinical process indicator, antibiotic treatment within 1 hour of arrival at the hospital. To detect an absolute improvement of 15 percentage points, for example, from 50% to 65% of patients receiving antibiotic treatment within 1 hour of arrival, we must include a minimum of 2376 patient records. We intend to include n=3168 patients (24 hospitals×4 time points×33 patients); we assert that this sample is large enough to examine the other relevant process indicators described in box 3.

To reach sufficient statistical power to detect a significant change in patient outcome measures, such as 30-day mortality rate, we need to include more than the 33 patients per time point that we include to detect changes in process measures. We have powered the study to detect a reduction from 15% to 11.5% in the mortality rate by including 60 patients for each hospital at each time point. The total intended number of included patients for outcome measures is n=5760 patients (24 hospitals×4 time points×60 patients).
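The arithmetic behind these sample sizes can be checked with a short sketch. It reproduces the intended totals and, purely for orientation, shows the textbook two-proportion sample size and the simple design effect 1+(m−1)×ICC for clusters of m patients. This is not the stepped-wedge calculation performed in Stata; the function names are hypothetical, and the simple formulae ignore the time structure of the design.

```python
import math
from statistics import NormalDist


def total_records(hospitals: int, time_points: int, per_point: int) -> int:
    """Total number of patient records across hospitals and time points."""
    return hospitals * time_points * per_point


def design_effect(cluster_size: int, icc: float) -> float:
    """Simple variance inflation for equally sized clusters: 1 + (m - 1) * ICC."""
    return 1 + (cluster_size - 1) * icc


def n_per_group_two_proportions(p1: float, p2: float,
                                alpha: float = 0.05, power: float = 0.80) -> int:
    """Unadjusted per-group sample size for comparing two independent proportions."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided type I error
    z_beta = NormalDist().inv_cdf(power)           # power = 1 - type II error
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2)


if __name__ == "__main__":
    print("Process records:", total_records(24, 4, 33))
    print("Outcome records:", total_records(24, 4, 60))
    print("Design effect, m=33, ICC=0.05:", design_effect(33, 0.05))
    print("Unadjusted n per group, 50% vs 65%:",
          n_per_group_two_proportions(0.50, 0.65))
    print("Unadjusted n per group, 15% vs 11.5%:",
          n_per_group_two_proportions(0.15, 0.115))
```

The unadjusted figures are much smaller than the numbers required in a clustered stepped-wedge design, which illustrates why the intracluster correlation and the number of data collection points matter for power.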

Qualitative data

We conduct focus group interviews based on an interview guide. We developed the interview guide based on theory about practices involved in implementing organisational change61 along with our model for how inspections can contribute to improving quality of care by affecting organisational change activities and ideas.39 The key research questions that will be further explored in the qualitative study are displayed in box 4.

Box 4. Key research questions of the qualitative study.

How do inspection teams plan, conduct, follow-up, and finalise the inspections?

How do the inspected organisations prepare for inspections?

Do inspections contribute to creating awareness about current performance and possible performance gaps in the clinical system for delivering care to patients with sepsis?

Do inspections contribute to engaging leaders and clinicians in improvement of their work?

Do hospitals initiate improvement activities aimed at enhancing the quality of sepsis care prior to inspections?

Do inspections affect organisational ideas, for example, understanding of the clinical system and commitment to change?

Do clinicians and leaders reflect on the performance of their clinical system before and after the inspections?

What kind of change activities do hospitals initiate following the inspections, and how do these affect the quality of care delivered to patients with sepsis?

What is the impact of other contextual factors on the improvement process?

How do follow-up audits of patient records affect the change processes?

We intend to include a strategic sample of six cases; we assert that such a data sample can provide sufficiently rich data about the phenomenon we intend to study.62

Data monitoring

The external medical expert, together with the leader of the inspection team, oversees the data collection process. Inspectors with medical expertise collect data by reviewing electronic patient records. To increase inter-rater reliability, the inspectors work in pairs, sit together in the same room and can ask for supervision from the external medical expert when needed. To reduce inter-rater bias between the inspection teams, the Norwegian Board of Health Supervision has developed a framework describing in detail the data that should be collected from the patient records and the criteria for judgement. All inspection teams have received special training and participated in meetings where the audit criteria have been discussed to promote a common understanding. Once a team is assigned to a hospital, it collects data at all four time points. To promote validity and reliability of the collected data, the involved hospitals can oversee how data are collected. The entire data collection process is transparent for the inspected hospitals, and the hospitals can verify all the collected data if they wish. The complete data file is checked manually before analysis, and we also apply various procedures of electronic field checks to secure data quality.

Data analysis

Statistical analysis

Descriptive statistics will be used to quantify sample characteristics. All analyses will use patient-level data, collected at four periods for different patients for 24 hospitals (two collections during the control period and two collections during the intervention period for each hospital). To compare the various process and outcome measurements (dependent variables) between the intervention and control periods (independent variable), we will use logistic regression models for binary measurements and linear regression models for continuous measurements. The choices of regression methods for the various measurements are outlined in table 3. As recommended in the literature,63 64 all models will include time as a covariate to adjust for potential secular changes in the process and outcome measurements during the study period. The underlying form of time will be included in the models as a linear term, polynomial term or cubic spline term, as appropriate.
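One way to write down the mean models described above is given below. The notation is introduced here for illustration and is not taken from the analysis plan: $Y_{ijk}$ is the measurement for patient $i$ in hospital $j$ at data collection period $k$, $X_{jk}$ equals 1 if period $k$ in hospital $j$ falls after the inspection and 0 otherwise, and $f(t_{jk})$ is the chosen function of time (linear, polynomial or cubic spline).

```latex
\begin{align}
\operatorname{logit}\,\Pr(Y_{ijk} = 1) &= \beta_0 + \beta_1 X_{jk} + f(t_{jk})
  && \text{(binary measurements)} \\
\operatorname{E}(Y_{ijk}) &= \beta_0 + \beta_1 X_{jk} + f(t_{jk})
  && \text{(continuous measurements)}
\end{align}
```

Under this formulation, $\exp(\beta_1)$ is the odds ratio for the intervention period versus the control period in the logistic models, and $\beta_1$ is the adjusted mean difference in the linear models.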

Table 3. Outline of regression models for the various process and outcome measurements.

| Indicator | Dependent variable | Type | GEE model* |
| --- | --- | --- | --- |
| Process | Triage within 15 min | Binary | Logistic regression |
| Process | Timely assessment by physician | Binary | Logistic regression |
| Process | Vital signs evaluated within 30 min | Binary | Logistic regression |
| Process | Blood lactate measured within 30 min | Binary | Logistic regression |
| Process | Supplementing blood samples within 30 min | Binary | Logistic regression |
| Process | Blood culture taken before antibiotics | Binary | Logistic regression |
| Process | Adequate supplementing investigation within 24 hours | Binary | Logistic regression |
| Process | Antibiotic treatment within 1 hour | Binary | Logistic regression |
| Process | Intravenous fluid within 30 min | Binary | Logistic regression |
| Process | Oxygen therapy within 30 min | Binary | Logistic regression |
| Process | Adequate surveillance regime established | Binary | Logistic regression |
| Process | Adequate discharge from emergency room | Binary | Logistic regression |
| Outcome | 30-day mortality | Binary | Logistic regression |
| Outcome | Length of stay | Continuous† | Linear regression |

*Regression models with GEE.

†Transformed if skewed distribution.

GEE, generalised estimating equations.

Norway is divided into 18 counties in addition to its capital city, and there are acute hospitals that treat patients with sepsis in all counties. The population density varies between the counties. The smallest acute hospitals serve a population of about 50 000, while the largest serve a population of about 500 000. The sizes of the intensive care units and the number of patients with sepsis treated during a year will therefore differ between the included hospitals. This is a national inspection campaign, and hospitals in all counties will be inspected. As patients are sampled from different hospitals, between-hospital variation in measurements is likely, introducing correlated data within the hospitals. To account for this intracluster correlation, we will use generalised estimating equations methodology,65 specifying an exchangeable working correlation structure, that is, any two patients within the same hospital are assumed to be equally correlated regardless of time and of intervention or control period. However, as this assumption might not hold for all hospitals, a method for obtaining cluster-robust standard errors of model parameters will be applied.66 Finally, as our repeated sampling of patients with sepsis may not be entirely representative of the total population, differences in certain patient characteristics, including age and sex, between comparison periods might arise. In that case, the above-mentioned models will also include such covariates to obtain correct model means.
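To make the exchangeable assumption concrete, the sketch below builds the working correlation matrix for one hospital and shows how a common within-hospital correlation inflates the variance of a hospital mean. The function names are hypothetical, and the correlation value used in any example is illustrative; in the actual analysis the working correlation is estimated by the GEE procedure.

```python
from typing import List


def exchangeable_correlation(n: int, rho: float) -> List[List[float]]:
    """n x n matrix with 1 on the diagonal and a common rho elsewhere."""
    return [[1.0 if i == j else rho for j in range(n)] for i in range(n)]


def variance_of_cluster_mean(n: int, rho: float, sigma2: float = 1.0) -> float:
    """Variance of the mean of n equally correlated observations.

    Var(mean) = sigma2 * (sum of all correlation entries) / n**2,
    which equals (sigma2 / n) * (1 + (n - 1) * rho).
    """
    matrix = exchangeable_correlation(n, rho)
    total = sum(sum(row) for row in matrix)
    return sigma2 * total / n ** 2
```

With independent observations (rho equal to 0) this reduces to sigma2/n; any positive correlation makes each additional patient from the same hospital less informative, which is why the correlation must be accounted for in the standard errors.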

Qualitative analysis

The focus group interviews will be taped and transcribed. We will perform a thematic content analysis of the data guided by theory and the data themselves.67 The quantitative data will indicate whether the clinical processes have improved. The focus for the analysis of the qualitative data is to understand more about why and how the clinical processes for detecting and treating sepsis have been changed or not changed.

We have developed a theory of change for the delivery of the intervention, which guides our analysis. We used the findings from our systematic review as a starting point for developing the theory of change for the delivery of the intervention. Early detection and treatment of sepsis can be challenging because sepsis is a syndrome more than a disease,68 and best practice care for this patient group consists of a series of time-critical events that can involve a range of different actors. Consistently delivering best practice care to all patients with sepsis thus relies on a well-functioning clinical chain. It can be demanding for a hospital to gather relevant data to systematically monitor how well the clinical system actually performs over time.69 Identifying possible performance gaps in the clinical system is a key driver for improvement,70 and we planned and designed the inspections accordingly.

A key part of the intervention is to assess the design of the hospital’s clinical system for delivering care to patients with sepsis and to assess how the clinical system performs over time. As part of the inspection, the inspection teams collect data that reflect the key elements of providing best practice care for patients with sepsis. The inspection teams emphasise presentation of these data in an easily comprehensible way using graphs and diagrams. Furthermore, they compile and aggregate quantitative data together with interview data from the inspections to provide a better understanding of shortcomings in the clinical system and its interdependencies. We suggest that a thorough assessment of the clinical system for delivering care to patients with sepsis can contribute to enhancing leaders’ and clinicians’ understanding of their current performance and to raising their awareness of possible performance gaps of which they were previously unaware. A better understanding of their current practices and possible performance gaps can thus trigger further reflection on performance. We suggest that such reflection can in turn contribute to a shared commitment to address shortcomings in the clinical system by planning, implementing and evaluating expedient measures to address performance gaps. Through the analysis of the qualitative data, we explore whether inspections have affected organisational ideas and change activities in line with our suggested theory of change.

During the postintervention interviews, we assess compliance with delivery of the key components of the intervention, as a way of determining to which extent the inspection teams have performed the inspections in accordance with guidelines and requirements. The key components for which we assess compliance are displayed in box 5.

Box 5. Key components of the intervention, which are assessed for fidelity.

Initial letter announcing the inspection and requesting documentation from hospitals.

Review of documentation from hospitals prior to site visit.

Patient record review prior to site visit.

Start-up meeting at site visit.

Interviews with physicians, managers and nurses during site visit.

Closing meeting at site visit.

Written report following site visit containing judgement of clinical performance based on quantitative process data from patient records and interview data.

Audit of patient records 8 months after site visit.

Audit of patient records 14 months after site visit.

Strengths, potential limitations and biases

Regulatory measures within healthcare seem to be increasing, and the burden and effect of such measures are being questioned.69 71 Previous studies have evaluated different approaches to regulation and surveillance in healthcare, but we have limited knowledge about the actual effect of such measures on the quality of care.72 73 External inspections are core components of regulatory regimes and are frequently used within healthcare.1 2 It is therefore of importance to gather more knowledge about the effects of this regulatory measure and how inspections might contribute to improving healthcare quality. Few studies have used an experimental design to assess the effects of external inspections.10 We suggest that the reason for this is that external inspections represent contemporary events involving a whole range of autonomous actors in society, which makes it challenging to apply an experimental design. In our case, it involves the Norwegian Board of Health Supervision, 18 autonomous county governors, 24 hospitals and the NPR. The main strength of our study is the fact that we have been able to persuade all involved actors to cooperate and commit to conducting the inspections using a stepped-wedge design.

The stepped-wedge design enables us to track changes in outcome measures for sepsis detection and treatment over time. The changes that we might observe in the outcome measures are not necessarily attributable to the inspections alone. There can be other factors besides the inspections that can affect sepsis detection and treatment during the study period. Our qualitative data can help identify such factors and provide insight into how they might interact with the inspections. By combining findings from the qualitative and quantitative data, we assert that we can assess how sepsis detection and treatment develops over time and substantiate how inspections along with other factors can affect the development.

Our study is based on an overall framework suggesting that the inspections need to affect organisational ideas and activities to facilitate change in organisational behaviour and thereby improve quality of care for patients with sepsis (figure 1). Moreover, we have developed a more detailed theory of change for the inspections, suggesting how they can contribute to affect organisational change. We collect quantitative data that indicate whether organisational behaviour and the quality of care improve after the inspections. By combining these findings with our qualitative data that provide insight into how the inspections affect organisational change, we can test our theories about how inspections can contribute to improve the quality of care. We suggest that our study can contribute to build theory about how inspections can improve the quality of care and thus have relevance for inspections covering other topics.

Initially, we had intended to use data from the NPR to calculate the 30-day mortality rate and hospital length of stay. After we had begun planning our study and had received ethical approval, a new definition for sepsis and septic shock was issued.74 Patients with sepsis are identified in the NPR using diagnosis codes of the International Classification of Diseases, 10th revision. Previous research has shown that coding practice for sepsis varies between hospitals and can change over time.75 76 We assert that the new sepsis definition will affect how Norwegian hospitals code sepsis; therefore, we cannot use available routine data to calculate our outcome measures. Instead, we must use a more labour-intensive approach and manually scan all patient records that we include.

Though more time consuming, including patients based on manual reviews of patient records, instead of sepsis codes, strengthens our study. The patients in this study are recruited from a population identified through a standardised search in the NPR that includes sepsis codes and the most commonly used infection codes. Patients are included based on SIRS criteria. We assert that a change in coding practice owing to the new sepsis definition will not alter the patient population identified by our standardised search. The new sepsis definition is narrower; it is therefore likely that fewer patients will be coded with sepsis. Patients who do not fulfil the criteria for sepsis under the new definition will still be assigned an infection code and will therefore be encompassed in the patient population identified by our standardised search.

The inspections in our study are contemporary and transparent events; thus, it is not possible to mask who is exposed to the intervention. Nor is it possible to mask the data collection. Because there are no available routine data about sepsis detection and treatment, such data are collected as part of the intervention in our study. The inspection teams access the patient records manually, and they will therefore know which hospital the patients belong to and whether the patient was admitted before or after the inspection. The fact that data are collected as part of the intervention can be viewed as a limitation. Collecting data during the inspection is, however, standard procedure, and thus not atypical for the inspections in our study.48 Given the nature of the intervention (that is, collecting data, reporting and giving recommendations), what we are in fact measuring is, at least in part, the effect of data collection.

To enhance data quality and reduce bias, the Norwegian Board of Health Supervision has developed a framework and detailed criteria on which data collection is based. Moreover, data collection is done by experienced inspectors with particular medical expertise, and the whole process is overseen by the external medical experts and the leaders of the inspection teams. The data collection is transparent for the inspected hospitals. They can verify all the collected data and give feedback if they encounter missing data or data that have been misinterpreted.

The patients included in this study have a suspected serious infection that potentially requires rapid treatment. The process data that we collect addresses sepsis detection and treatment in the initial phase of the hospital stay, for example, the time to triage, assessment by a physician and antibiotics treatment. Such process measures are still relevant for the patient population included in our study, regardless of whether they fulfil the criteria for sepsis using the new definition. Because our main objective is to track changes over time in the quality of care delivered to a defined patient population, we assert that our study design and process data are still valid and relevant despite the introduction of a new definition of sepsis.

We had to balance the power of the study against the available resources and a manageable amount of data collection. We designed and powered the study to detect realistic changes in our outcome and process measures. However, there is an inherent risk that the study can become underpowered to detect changes in our effect measures. A potential challenge is information missing in the patient records. Lack of documentation itself can be an important finding because this can be associated with poorer patient outcomes.77 If information regarding process measures is missing in the patient records, it will affect the power of the study, which can potentially become underpowered. If there is an association between substandard care and lack of documentation in the patient records, our preinspection data may be biased. Poor care will only be indicated by missing data but will not be supported by actual empirical data. Following inspection, it may be easier to improve the documentation practice within a hospital than actually improving the delivery of care. Thus, there is a risk that the postintervention data will be biased because they include substandard care that would not have been documented prior to inspection.

Postinspection data are collected 8 and 14 months after inspection. There is a delay of about 2 months after the on-site visit before the hospitals receive their final report. Implementing true change in a clinical system can be time consuming. To give the hospital time to plan and implement changes, we schedule the first follow-up audit 8 months after the initial site visit. We assert that the hospitals have begun to implement change at this point. The feedback after the first audit will not be as extensive as that in the report following the site visit, and the feedback will be provided to hospitals without much delay. The hospitals can use this feedback to adapt and reiterate their ongoing improvement activities. We therefore schedule the second follow-up audit 6 months after the first.

A likely challenge in our study is possible contamination of the control group, that is, hospitals that have not yet received the intervention. All hospitals in Norway are aware of the nationwide inspection campaign on sepsis treatment now that the inspections are under way. There is a possibility that hospitals that have not yet been inspected will begin to make improvements prior to inspection. We therefore collect data at P0 to obtain a baseline measurement for all hospitals before announcing the inspection campaign. Collecting quantitative data from P0, P1, P2 and P3 as well as qualitative data will enable investigation of the extent to which hospitals implement changes before the inspections and explore how change processes unfold in the inspected hospitals.

Factors like socioeconomic status and comorbidity can affect the outcomes. We do not have access to such data and therefore cannot adjust for these factors. In a stepped-wedge design, all sites are exposed to the intervention, and we compare change in the effect measures before and after the intervention. We collect the data in a standardised way, and the intervention itself should not affect which patients are admitted to the hospitals. Consequently, we have no reason to believe that confounding factors should be unevenly distributed in the study population before and after the intervention.

The sizes of the included hospitals differ. The order of the inspections is randomised in clusters of four hospitals, and all the clusters include hospitals of different sizes. To account for intracluster correlation, we will use generalised estimating equations methodology,65 and we will include hospital size as a covariate in our analytic models. Due to Norwegian topography with long travel distances, patients with suspected sepsis are typically sent to the nearest acute hospital for initial diagnosis and treatment. In some cases, patients with septic shock can be transferred to a larger hospital later. We know the number of patients with organ dysfunction admitted to the various hospitals and can adjust for this in our analysis. However, we do not have access to data about comorbidity for the included patients and therefore cannot fully adjust for case mix differences between the included hospitals. Our process measures cover the initial steps of the diagnostic and treatment process, which are carried out in all hospitals irrespective of size. We compare changes in the effect measures before and after the intervention, and the intervention itself should not affect which patients are admitted to the different hospitals. Consequently, we have no reason to believe that case mix differences between the inspected hospitals should change before and after the intervention.
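Randomising the inspection order by cluster can be sketched as follows. This is a hypothetical illustration: the hospital labels, the grouping into clusters and the use of a seeded pseudorandom shuffle are assumptions, and the actual randomisation procedure may have differed.

```python
import random
from typing import List, Sequence


def randomise_inspection_order(clusters: Sequence[Sequence[str]],
                               seed: int) -> List[List[str]]:
    """Return the clusters in a random order; hospitals stay within their cluster.

    A fixed seed makes the allocation reproducible and auditable.
    """
    rng = random.Random(seed)
    order = [list(cluster) for cluster in clusters]
    rng.shuffle(order)
    return order


if __name__ == "__main__":
    # Hypothetical labels: 24 hospitals grouped into 6 clusters of four.
    clusters = [[f"hospital_{4 * c + h + 1}" for h in range(4)] for c in range(6)]
    for step, cluster in enumerate(randomise_inspection_order(clusters, seed=2016), 1):
        print(f"Step {step}: {', '.join(cluster)}")
```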

External inspections can have intended and unintended outcomes.78 The aim of our study is to assess the effects of inspection on the intended outcome, that is, improved care for patients admitted to the hospital with suspected sepsis. However, there can be intended and unintended outcomes of inspections that this study does not explicitly address. One such intended outcome is to create legal safeguarding. Governmental or other public institutions often perform external inspections as one of many measures within a larger regulatory system that aims to ensure delivery of services according to certain requirements. An independent system of external control can contribute to confidence that certain services meet relevant quality requirements, both for the recipients of said services and for the public in general. By having an independent controlling mechanism, patients need not examine and control the safety and quality of services themselves.79 Inspections can thus be regarded as a means of legally safeguarding users and the public. This is an intangible outcome that is difficult to quantify and evaluate, and it will not be addressed in our study.

Another outcome that we only partially address is the possibility of goal displacement. Whatever is being inspected tends to receive attention.80 81 An unintended effect of inspections can therefore be that members of the inspected organisation become so preoccupied with responding to an ongoing inspection that they neglect more important improvement efforts initiated by the organisation itself. Furthermore, inspections can influence how the organisation prioritises its resources,22 81 82 and there is an inherent danger that the inspection can draw resources away from areas in greater need of resources. Our qualitative data can provide insight into these unintended consequences of inspection to some extent, but we will not be able to quantify these effects.

A basic precondition for external inspections is that the inspected organisation is accountable for implementing necessary changes following an inspection. The effect of an inspection thus depends on delivery of the inspection and the inspected organisation’s capacity to implement change.11 83 The latter is in turn dependent on a range of factors, for example, engagement by leaders and workers, organisational culture, available resources and knowledge improvement.42 84 85 We assert that the outcome measures used in this study reflect both the inspection delivery and the improvement capacity of the inspected organisation. Delivery of the inspection cannot be evaluated in isolation from the inspected organisation’s ability to effect change. Still, we argue that a lack of improvement following an inspection can primarily be caused by the inspected organisation’s lack of capacity to implement change and not by the inspection itself. Our qualitative data can provide insight into the impact of inspection on the organisation and the organisation’s capacity to implement change. To a certain extent, we can therefore interpret the absence of change as either being primarily a consequence of weaknesses in the delivery of inspection or owing to the inspected organisation lacking the capacity for improvement. However, one limitation of our study is that we cannot quantitatively distinguish between these two causes.

There is a striking mismatch between the widespread use of external inspections in healthcare and the paucity of evidence of their effect.10 We combine quantitative and qualitative data in an innovative way that can help shed light on how inspections affect the quality of care delivered to patients with sepsis. Despite the limitations of our study, we argue that it is robust enough to provide new and needed knowledge about the effects of external inspections.

Ethics and dissemination

The Regional Ethics Committee of Norway Nord (REC) reviewed the study (Identifier: 2015/2195). The REC considered the study to be health service research and not a clinical trial because there is no intervention applied directly to patients. According to Norwegian legislation, the REC is mandated to grant exemptions from the requirement of confidentiality for this kind of research. The REC considers this study to be of essential interest for society and that the welfare and integrity of patients are attended to. Data will be deleted at the end of the project. Against this background, the REC has granted an exemption from the requirement of consent and confidentiality for the data used in this study. The Norwegian Data Protection Authority has reviewed and approved the study (Identifier: 15/01559). The study was registered with ClinicalTrials.gov in April 2016 (Identifier: NCT02747121). The study results will be reported in international peer-reviewed journals.

Acknowledgments

We thank the clinical experts (Erik Solligård, Steinar Skrede, Ulf Køpp, Kristine Værhaug, Bror A Johnstad, Jon Birger Haug and Jon Henrik Laake) for providing valuable advice on how to design the inspections and which data to collect. We thank all the staff at the Norwegian Board of Health Supervision who participated in planning and developing the intervention.

Footnotes

Contributors: EH led the development of the overall study design and drafted the manuscript. JCF, KW, GSB and SH participated in development of the overall study design and methods for collecting the data and analysis. HKF provided advice on designing substudy 2 and on how to search the National Patient Register to identify eligible patients. RMN provided statistical advice for the study design, developed the analysis plan and carried out the power calculations for substudy 2. ILT provided advice on developing substudy 1. JCH provided advice on the design, data collection and analysis for substudy 2. All authors contributed to writing and revising the manuscript.

Funding: The Norwegian Board of Health Supervision funds expenses for the clinical experts on the inspection teams and part of the costs related to data collection. The members of the inspection teams are employed by the county governors in Norway. EH and GSB are part-time employees at the Norwegian Board of Health Supervision. The management of the funders does not participate in the analysis of the research data. Western Norway University of Applied Sciences funds a PhD student who will participate in collecting qualitative data and in data analysis.

Competing interests: None declared.

Patient consent: Obtained. The Regional Ethics Committee of Norway ruled that individual consent from all patients was not necessary.

Ethics approval: The Regional Ethics Committee of Norway (REC) and The Norwegian Data Protection Authority.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data sharing statement: No data are currently available for sharing.
