Abstract

Background

Abnormalities in emotional prosody processing have been consistently reported in schizophrenia and are related to poor social outcomes. However, the role of stimulus complexity in abnormal emotional prosody processing is still unclear.

Method

We recorded event-related potentials in 16 patients with chronic schizophrenia and 17 healthy controls to investigate: 1) the temporal course of emotional prosody processing; 2) the relative contribution of prosodic and semantic cues in emotional prosody processing. Stimuli were prosodic single words presented in two conditions: with intelligible (semantic content condition, SCC) and unintelligible semantic content (pure prosody condition, PPC).

Results

Relative to healthy controls, schizophrenia patients showed reduced P50 for happy PPC words, and reduced N100 for both neutral and emotional SCC words and for neutral PPC stimuli. Also, increased P200 was observed in schizophrenia for happy prosody in SCC only. Behavioral results revealed higher error rates in schizophrenia for angry prosody in SCC and for happy prosody in PPC.

Conclusions

Together, these data further demonstrate the interactions between abnormal sensory processes and higher-order processes in bringing about emotional prosody processing dysfunction in schizophrenia. They further suggest that impaired emotional prosody processing is dependent on stimulus complexity.

1. Introduction

Among the most significant predictors of long-term disability in schizophrenia (e.g., Couture et al., 2006) is impaired detection and recognition of emotions from voice, i.e., emotional prosody [EP]. Affect recognition from both voice and face is an aspect of social cognition, which has recently been recognized as an important predictor of functional outcomes at all stages of schizophrenia pathology: clinical high risk (Addington et al., 2008; Green et al., 2012), first episode (Horan et al., 2012) and chronic schizophrenia (Kee et al., 2003; Kucharska-Pietura et al., 2005; Green et al., 2012). While face processing abnormality in schizophrenia has been well characterized (e.g., Li et al., 2010), voice and prosody processing have been understudied, especially using event-related potential (ERP) approaches, which remain the only tool to examine temporal changes in neurophysiological events that correspond to early stages of analysis of a speech signal. The existing studies on vocal emotional processing include just a handful of behavioral (e.g., Edwards et al., 2001), functional magnetic resonance imaging (fMRI; e.g., Leitman et al., 2011; Mitchell et al., 2004) and ERP investigations (Pinheiro et al., 2013).

In healthy subjects, perception of emotional prosody is thought to reflect three interacting stages: 1) sensory processing of a speech signal; 2) implicit categorization of salient acoustic features into emotional and non-emotional features; and 3) explicit evaluation and assignment of emotional meaning to a speech signal (Paulmann & Kotz, 2008; Paulmann et al., 2010; Schirmer & Kotz, 2006). Event-related potential (ERP) studies demonstrated that the first two stages are indexed by N100 and P200, respectively (Paulmann & Kotz, 2008; Paulmann et al., 2010; Pinheiro et al., 2013).

Despite the importance of a detailed understanding of emotional prosody processing deficits in schizophrenia, few studies have examined these abnormalities, and their underlying neural mechanisms are not well understood. Recent studies suggested that sensory-based dysfunction might not exclusively account for abnormal prosody processing in schizophrenia. Instead, an interaction between dysfunctional sensory and higher-order cognitive processes may better explain it (Leitman et al., 2010, 2011; Pinheiro et al., 2013). A recent ERP study provided further evidence for these abnormalities (Pinheiro et al., 2013). That study investigated prosody processing in 15 chronic schizophrenia patients and 15 healthy controls (HC). Additionally, it explored the relative contributions of prosodic and semantic cues. Stimuli were prosodic sentences with intelligible (semantic content condition, SCC) and unintelligible semantic content (pure prosody condition, PPC). The ERP effects occurred within the first 200 ms from the sentence onset in both groups (Pinheiro et al., 2013), supporting previous studies’ results (Paulmann & Kotz, 2008; Paulmann et al., 2010). The results revealed abnormalities in the three stages of prosody processing in schizophrenia, which were more pronounced for prosodic SCC sentences. Less negative N100 suggested abnormal sensory processing of prosodic SCC sentences irrespective of valence. Increased P200 to angry and happy prosodic stimuli in the SCC, and to happy stimuli in the PPC, suggested abnormal detection of emotional salience. Behavioral results revealed impaired cognitive evaluation of the emotional significance of angry SCC and neutral PPC sentences.

In view of a critical need for a systematic study of emotional prosody processing in schizophrenia, the current study extended our previous work by investigating the temporal course of prosody processing using single words with both intelligible (SCC) and unintelligible semantic content (PPC). Based on language studies demonstrating differences in the processing of words in a sentence vs. in isolation (e.g., Van Petten, 1995) and effects of phrasal length and complexity on prosodic processing (Krivokapić, 2007; Wheeldon & Lahiri, 1997), we reasoned that prosody processing of sentences may differ from that of single words. For example, the processing of words embedded in a sentence is susceptible to syntactic and semantic constraints imposed by a sentence context, which can modify many aspects of their processing (e.g., Van Petten, 1995). Furthermore, in relation to words in isolation, the processing of a sentence demands more working memory and attention resources, as meaning is built up across the course of the sentence (e.g., Van Petten, 1995). Thus, considering the attentional (Laurens et al., 2005; Nestor et al., 2001) and verbal working memory deficits (Menon et al., 2001; Silver et al., 2003) often reported in schizophrenia, the processing of prosodic information may be more impaired in sentences than in single words.

Because of its excellent temporal resolution, we used ERPs to address the role of stimulus complexity in the first two stages of emotional prosody processing: the sensory processing of prosodic information (N100) and the detection of its emotional salience (P200), both processes not accessible to behavioral probes. We also collected data on accuracy of prosody recognition to shed light on a later stage of emotional prosody processing, i.e. the assignment of emotional meaning to a voice signal. We hypothesized that if impaired prosody processing is not dependent on stimulus complexity, similar abnormalities to those reported in Pinheiro et al. (2013) will be observed in the current study. However, if stimulus complexity matters, we expected less severe prosody processing abnormalities in the single word relative to the sentence prosody processing study.

Considering previous studies demonstrating an association between deficits in emotional prosody recognition and positive symptomatology (Poole et al., 2000; Rossell & Boundy, 2005; Shea et al., 2007), and between increased P200 amplitude for happy prosody and delusions (Pinheiro et al., 2013), we predicted that the amplitude of ERP abnormalities would be associated with positive symptomatology scores.

2. Method

Participants

Sixteen patients with a diagnosis of chronic schizophrenia and 17 HC matched for age, handedness and parental socioeconomic status (Hollingshead, 1976) participated in this study (Table 1). Subjects had normal hearing as assessed by audiometry, and normal or corrected to normal vision. Patients were recruited at the Veterans Affairs Hospital, Brockton and HC were recruited from Internet advertisements.

Table 1.

Demographic and clinical characteristics of participants

| Variable | Healthy Controls (n=17) | Schizophrenia Patients (n=16) | p valueᶜ |
|---|---|---|---|
| Age (years) | 48.13±5.66 | 48.86±7.40 | .750 |
| Women, n | 7 | 5 | |
| Education (years) | 15.18±1.64 | 14.00±2.42 | .119 |
| Subject’s SESᵃ | 2.13±0.81 | 2.93±1.14 | .033* |
| Parental SES | 2.44±0.81 | 2.79±1.53 | .434 |
| Handednessᵇ | 0.81±0.15 | 0.79±0.21 | .848 |
| NEUROCOGNITIVE DATA | | | |
| Full Scale Composite Score | 99.33±12.30 | 92.79±14.32 | .227 |
| Verbal Comprehension Composite Score | 99.08±11.47 | 95.93±15.82 | .572 |
| Working Memory Composite Score | 105.33±14.22 | 92.86±12.90 | .049* |
| Processing Speed Composite Score | 101.17±89.64 | 89.64±14.87 | .107 |
| CLINICAL DATA | | | |
| Onset age (years) | NA | 30.07±11.23 | NA |
| Duration (years) | NA | 19.47±10.95 | NA |
| Chlorpromazine EQ (mg) | NA | 356.78±294.56 | NA |
| Antipsychotic Medication type | NA | Typical (fluphenazine decanoate, prolixin decanoate, haloperidol)=3; Atypical (risperidone, olanzapine, ziprasidone, quetiapine, aripiprazole)=11 | NA |
| Other Psychotropic Medication | NA | Antidepressants (sertraline, citalopram, bupropion, trazodone)=4; Benzodiazepines (lorazepam, clonazepam)=4; Lithium carbonate=2; Valproic acid=3 | NA |
| PANSS delusions | NA | 4.88±2.16 | NA |
| PANSS conceptual disorganization | NA | 2.50±1.10 | NA |
| PANSS hallucinations | NA | 4.00±2.19 | NA |
| PANSS Positive scale | NA | 20.25±8.19 | NA |
| PANSS Negative scale | NA | 22.88±9.76 | NA |
| PANSS General psychopathology | NA | 38.56±11.70 | NA |
| PANSS Total psychopathology | NA | 81.69±25.92 | NA |
| SANS Total | NA | 10.59±5.44 | NA |
| SAPS Total | NA | 9.63±3.05 | NA |

Notes. All values represent mean±SD. SES=socioeconomic status; Chlorpromazine EQ=Chlorpromazine Equivalent Dose; NA=not applicable.

ᵃ Hollingshead Four-Factor Index of Social Status (Hollingshead, 1976).

ᵇ Edinburgh Handedness Inventory (Oldfield, 1971).

ᶜ Independent samples t-test tested for group differences in sociodemographic and neurocognitive measures.

* p<0.05.

The inclusion criteria were: English as first language; right handedness (Oldfield, 1971); no history of neurological illness; no history of DSM-IV diagnosis of drug or alcohol abuse (APA, 2000) in the last year prior to EEG assessment; full scale intelligence quotient (IQ) above 85 (Wechsler, 2008); no hearing, vision or upper body impairment. For HC, additional inclusion criteria were: no history of Axis I–II disorders (First et al., 1995, 2002); no history of Axis I disorder in first or second-degree relatives (Andreasen et al., 1977).

Patients were diagnosed, and HC were screened, using the SCID-I and SCID-II (First et al., 1995, 2002). Symptom severity was assessed with the Positive and Negative Syndrome Scale (PANSS; Kay et al., 1987), the Scale for the Assessment of Negative Symptoms (SANS; Andreasen, 1983) and the Scale for the Assessment of Positive Symptoms (SAPS; Andreasen, 1984) (Table 1).

All participants had the procedures fully explained to them and read and signed an informed consent form.

Stimuli

Stimuli used in the SCC were 40 words with neutral semantic content and short length (e.g., “card”, “pen” – see Supplementary Material). Words were controlled for frequency (M=10.38±11.05), familiarity (M=582.37±25.88), age of acquisition (M=232.54±55.15), concreteness (M=594.60±43.79), and number of letters (M=5.14±1.81), based on the MRC Psycholinguistic Database (Coltheart, 1981; Wilson, 1998).

Words were recorded by a native speaker of American English with training in theatre techniques, with neutral and emotional prosody (happy; angry), using an Edirol R-09 recorder and a CS-15 cardioid-type stereo microphone, at a sampling rate of 22 kHz and 16-bit quantization. Words’ pitch, intensity and duration were compared across conditions (Table 2). Duration of happy words was longer than duration of angry (p<0.01) and neutral words (p<0.001). Mean pitch was higher for happy relative to both angry (p<0.001) and neutral words (p<0.001), and for angry relative to neutral words (p<0.001). Mean intensity did not differ across emotion types (p>0.05).

Table 2.

Acoustic properties of words with angry, happy and neutral prosody in the semantic content (SCC) and pure prosody (PPC) conditions.

| Semantic status | Emotion | Duration (ms) | F0 min (Hz) | F0 mean (Hz) | F0 max (Hz) | Intensity min (dB) | Intensity mean (dB) | Intensity max (dB) |
|---|---|---|---|---|---|---|---|---|
| SCC | Angry | 639.00 (19.45) | 163.09 (2.13) | 271.94 (4.12) | 368.15 (5.68) | 45.67 (1.05) | 78.64 (0.39) | 86.24 (0.28) |
| SCC | Happy | 718.21 (19.89) | 177.58 (11.44) | 371.03 (12.45) | 574.94 (13.53) | 50.58 (0.84) | 79.27 (0.30) | 86.49 (0.26) |
| SCC | Neutral | 663.23 (22.12) | 156.91 (2.04) | 183.70 (1.24) | 228.09 (4.36) | 49.82 (1.41) | 78.97 (0.28) | 85.09 (0.22) |
| PPC | Angry | 653.94 (25.65) | 170.55 (5.15) | 265.00 (4.38) | 363.09 (6.98) | 43.18 (0.83) | 77.85 (0.36) | 84.88 (0.39) |
| PPC | Happy | 722.27 (24.34) | 173.03 (8.76) | 377.73 (10.01) | 559.00 (12.91) | 48.27 (1.05) | 78.73 (0.28) | 86.00 (0.26) |
| PPC | Neutral | 629.24 (28.83) | 156.70 (1.59) | 181.55 (1.52) | 219.09 (2.55) | 48.39 (1.68) | 77.82 (0.35) | 83.79 (0.22) |

Notes: Mean (standard error); min=minimum; max=maximum

Fifteen subjects (7 female) who did not participate in the ERP sessions assessed the valence of words’ intonation. Angry words were rated as “angry” by 96.11%, happy words were rated as “happy” by 99.18%, and neutral words were rated as “neutral” by 96.66% of participants.

In PPC, the same stimuli were distorted to make their semantic content unintelligible by using Praat software (Boersma & Weenink, 2006). The phones of each SCC word were manually replaced by phones produced by the same speaker, preserving both the original voice and prosodic features. All fricatives were replaced with the phone [s], all stop consonants with [t], all glides with [j], all stressed vowels with [æ], and all unstressed vowels with [ə].
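The substitution rule can be illustrated with a minimal sketch. It is only an illustration: the manipulation in the study was done manually on the recordings in Praat, and the phone-class labels, the input representation (a list of phone class and stress pairs) and the function name below are hypothetical.

```python
# Hypothetical sketch of the PPC phone-substitution rule. The study applied
# the rule manually to recordings in Praat, not to symbolic transcriptions.

# Replacement phone for each consonant class named in the text.
CONSONANT_MAP = {"fricative": "s", "stop": "t", "glide": "j"}


def to_pure_prosody(phones):
    """Map (phone_class, stressed) pairs to replacement phones.

    phone_class is one of 'fricative', 'stop', 'glide', 'vowel' (assumed
    labels); the stressed flag only matters for vowels.
    """
    out = []
    for phone_class, stressed in phones:
        if phone_class == "vowel":
            out.append("æ" if stressed else "ə")
        elif phone_class in CONSONANT_MAP:
            out.append(CONSONANT_MAP[phone_class])
        else:
            # The text gives no rule for other classes (e.g., nasals).
            raise ValueError(f"no rule stated for class: {phone_class}")
    return out


# Made-up sequence: stop, stressed vowel, stop, unstressed vowel, fricative.
example = [("stop", False), ("vowel", True), ("stop", False),
           ("vowel", False), ("fricative", False)]
print(to_pure_prosody(example))  # ['t', 'æ', 't', 'ə', 's']
```

Raising an error for unlisted classes, rather than guessing a replacement, keeps the sketch faithful to what the text actually specifies.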

Procedure

Each participant was seated comfortably at a distance of 100cm from a computer monitor in a sound-attenuating chamber. The experimental session was divided into two blocks (block1: SCC words; block2: PPC words). Block order was counterbalanced. Each block contained 105 words of different prosody types (35 neutral, 35 happy, 35 angry). The remaining five words of each valence and type (SCC, PPC), from the original list of 40 words, were presented in the practice block. Stimuli were presented binaurally through headphones at a comfortable sound level. Superlab Pro software package (2008) controlled stimulus delivery.
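The trial counts above follow from pairing each word with each prosody type and setting five words aside for practice. The sketch below reproduces that arithmetic. It assumes that every word was presented in all three prosodies; which words served as practice items and how trials were ordered are not stated, so the choices made here (last five words, random order) and all names are hypothetical.

```python
import random

PROSODIES = ("neutral", "happy", "angry")


def build_block(words, n_practice=5, seed=0):
    """Split a word list into practice and experimental trials for one block.

    Every word is paired with each prosody. Using the last n_practice words
    for practice and shuffling the experimental trials are assumptions.
    """
    rng = random.Random(seed)
    practice_words, main_words = words[-n_practice:], words[:-n_practice]
    practice = [(w, p) for w in practice_words for p in PROSODIES]
    main = [(w, p) for w in main_words for p in PROSODIES]
    rng.shuffle(main)
    return practice, main


words = [f"word{i:02d}" for i in range(40)]  # placeholder items, not the stimuli
practice, main = build_block(words)
print(len(practice), len(main))  # 15 105
```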

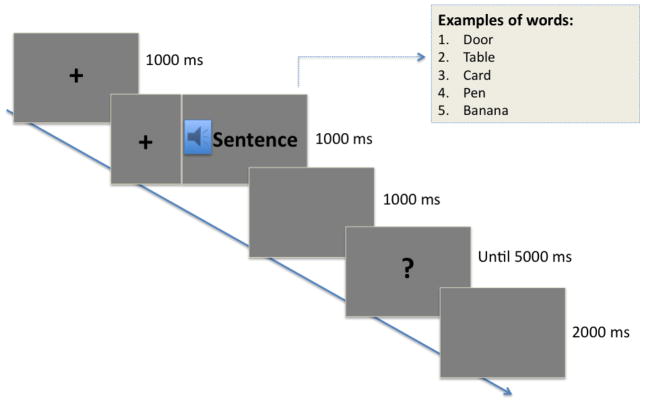

Before each experimental block, participants were given a brief training with feedback. Figure 1 illustrates an experimental trial. Before each word onset, a fixation cross was presented centrally on the screen for 1000 ms, and was kept during word presentation to minimize eye movements. After 1000 ms, a question mark signaled the beginning of the response time (5 seconds). Subjects were instructed to make a decision whether a word was spoken with a neutral, happy, or angry tone of voice by pressing one of the three buttons. The order of button presses was counterbalanced across subjects. Each response key was marked with an emoticon to minimize working memory demands. A 2000 ms inter-stimulus interval separated the end of an event and the beginning of the next one. A short pause was provided after 15 words. During the experiment, no feedback was provided.

Figure 1.

Illustration of an experimental trial.

Data acquisition and analysis

EEG was recorded with custom-made electrode caps with a 64-channel BioSemi Active-Two system (BioSemi B.V., The Netherlands). It was acquired in a continuous mode at a digitization rate of 512 Hz, with a bandpass of 0.01–100 Hz. Blinks and eye movements were monitored through electrodes placed on the left and right temples and below the left eye.

EEG data were processed offline using Brain Analyzer 2 package (Brain Products, Germany), and re-referenced offline to the mathematical average of the left and right mastoids. Individual ERP epochs were created for each prosody type (neutral, happy, angry) in each word condition (SCC, PPC), with −200 ms pre-stimulus baseline and 900 ms post-stimulus epoch. Eye blinks and movement artifacts were corrected by the Gratton et al. (1983) method. EEG epochs containing muscle activity or amplifier blocking were rejected offline before averaging (+/−100μV criterion). After artifact rejection, at least 75% of trials per condition per subject entered the analyses. The number of individual trials did not differ between groups (p>.05).
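The baseline correction, amplitude-based rejection and averaging steps can be illustrated with a minimal sketch. It is not the software pipeline used in the study: function names and toy data are hypothetical, ocular correction (Gratton et al., 1983) is omitted, and applying the ±100 μV criterion after baseline correction is an assumption.

```python
def baseline_correct(epoch, n_baseline):
    """Subtract the mean of the first n_baseline samples from every sample."""
    base = sum(epoch[:n_baseline]) / n_baseline
    return [v - base for v in epoch]


def is_clean(epoch, limit_uv=100.0):
    """True if no sample exceeds +/- limit_uv microvolts."""
    return all(abs(v) <= limit_uv for v in epoch)


def average_clean(epochs, n_baseline, limit_uv=100.0):
    """Return the average of the clean epochs and the fraction retained."""
    corrected = [baseline_correct(e, n_baseline) for e in epochs]
    kept = [e for e in corrected if is_clean(e, limit_uv)]
    if not kept:
        raise ValueError("all epochs rejected")
    n = len(kept)
    erp = [sum(samples) / n for samples in zip(*kept)]
    return erp, n / len(epochs)


# Toy single-channel epochs (microvolts); the first 2 samples are baseline.
epochs = [
    [1, 3, 12, 22, 12, 2],
    [0, 0, 150, 0, 0, 0],   # exceeds the threshold and is rejected
    [2, 2, 6, 12, 6, 2],
    [1, -1, 1, 0, 1, 0],
]
erp, fraction_kept = average_clean(epochs, n_baseline=2)
print(erp)            # [0.0, 0.0, 5.0, 10.0, 5.0, 0.0]
print(fraction_kept)  # 0.75
```

With real data sampled at 512 Hz, the 200 ms baseline would span roughly 102 samples rather than the 2 used here.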

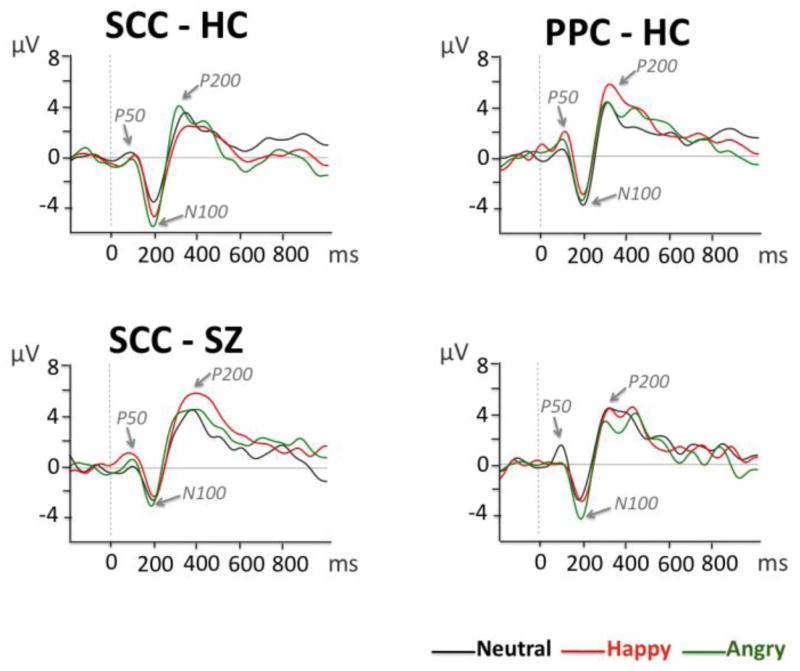

The inspection of grand average waveforms (Figures 2 and 3) revealed three main components with predominantly central distribution: a positivity occurring around 50 ms (P50), a negativity occurring around 100 ms (N100), and a positivity occurring around 200 ms (P200). Temporal windows were then selected for P50, N100 and P200 based on the visual inspection of the waveforms. Mean amplitude was calculated between 30–125 ms (P50), 125–190 ms (N100), 220–320 ms (P200), post-stimulus onset, at central electrodes (Cz, C3, C4).
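A mean amplitude measure of this kind amounts to converting each latency window to sample indices and averaging the samples inside it. The sketch below assumes a 512 Hz sampling rate and an epoch starting at −200 ms, as described above; rounding to the nearest sample and treating both window edges as inclusive are assumptions, and the function names are hypothetical.

```python
# Latency windows (ms, post-stimulus onset) taken from the text.
WINDOWS_MS = {"P50": (30, 125), "N100": (125, 190), "P200": (220, 320)}


def window_indices(t_start_ms, t_end_ms, srate_hz=512, epoch_start_ms=-200):
    """Convert a latency window to first and last sample indices."""
    first = round((t_start_ms - epoch_start_ms) * srate_hz / 1000)
    last = round((t_end_ms - epoch_start_ms) * srate_hz / 1000)
    return first, last


def mean_amplitude(erp, t_start_ms, t_end_ms, srate_hz=512, epoch_start_ms=-200):
    """Average the samples of one averaged waveform inside the window."""
    first, last = window_indices(t_start_ms, t_end_ms, srate_hz, epoch_start_ms)
    segment = erp[first:last + 1]  # inclusive upper edge (assumption)
    return sum(segment) / len(segment)


print(window_indices(*WINDOWS_MS["N100"]))  # (166, 200)

# Toy waveform whose value equals its sample index, to make the result checkable.
toy_erp = [float(i) for i in range(564)]
print(mean_amplitude(toy_erp, *WINDOWS_MS["P200"]))  # 240.5
```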

Figure 2.

Grand average waveforms for neutral, happy and angry prosody in the semantic content condition (SCC) and pure prosody condition (PPC) at Cz, in healthy controls (HC) and schizophrenia patients (SZ).

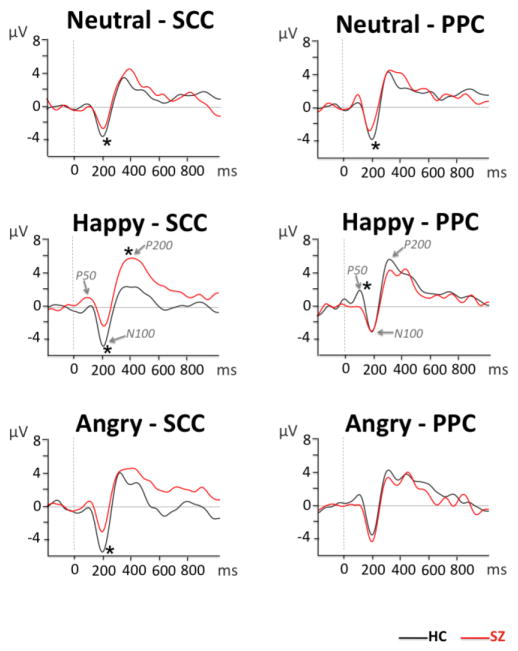

Figure 3.

Grand average waveforms showing group contrasts for neutral, happy, and angry prosody in the semantic content condition (SCC) and pure prosody condition (PPC) at Cz.

Statistical analyses

For the statistical analysis, the PAWS 20.00 (SPSS, Corp., USA) software package was used. Only significant results are presented (p<0.05).

ERP data

Repeated measures analyses of variance (ANOVAs) were computed for the between-group comparisons of P50, N100 and P200 mean amplitude, with semantic status (SCC, PPC), emotion (neutral, happy, angry), and electrodes (Cz, C3, C4) as within-subject factors and group as a between-subject factor.

Accuracy data

A repeated measures ANOVA with semantic status and emotion as within-subjects factors and group as between-subjects factor tested group differences in behavioral accuracy.

Analyses were corrected for non-sphericity using the Greenhouse–Geisser method (the original df is reported). All significance levels are two-tailed with the preset significance alpha level of p<0.05. Main effects were followed with pairwise comparisons between conditions, using the Bonferroni adjustment for multiple comparisons.
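For readers unfamiliar with the Bonferroni adjustment, a minimal sketch follows. It shows the generic definition (each p value multiplied by the number of comparisons and capped at 1), not the statistical package's internal routine; the p values are illustrative and not taken from this study.

```python
def bonferroni(p_values):
    """Return Bonferroni-adjusted p values: p * m, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Illustrative p values for three pairwise comparisons (not study data).
raw = [0.01, 0.04, 0.5]
adjusted = bonferroni(raw)
significant = [p < 0.05 for p in adjusted]
print(significant)  # [True, False, False]
```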

3. Results

ERP data (Figures 2 and 3)

P50 amplitude

A significant group x semantic status x emotion interaction was observed (F(2, 62)=6.603, p<0.01). We followed up this interaction with separate ANOVAs for each semantic status condition. A significant group x emotion interaction was observed in the PPC only (F(2, 62)=4.872, p=0.016): groups differed in the processing of happy PPC prosody (p<0.01), with reduced P50 amplitude in patients relative to HC.

N100 amplitude

A main effect of emotion (F(2, 62)=6.723, p<0.01) revealed that N100 was more negative for angry relative to neutral prosody (p<0.01) and tended to be more negative for angry relative to happy prosody (p=0.083) in both groups. A significant group x semantic status x emotion interaction (F(2, 62)=4.638, p=0.02) indicated differences in the way patients and HC processed prosodic stimuli at the sensory level.

Follow-up separate repeated-measures ANOVAs for each semantic status condition showed a significant group effect for the SCC (F(1, 31)=8.395, p<0.01): N100 was overall less negative in the schizophrenia group relative to HC. In addition, a significant group x emotion interaction was observed in the PPC (F(2, 62)=3.874, p=0.027). Pairwise comparisons indicated less negative N100 in schizophrenia relative to HC subjects in the neutral condition only (p=0.039).

P200 amplitude

A significant group x semantic status x emotion interaction (F(2, 62)=5.476, p<0.01) indicated differences in the way groups integrated acoustic information into an emotional percept. Separate ANOVAs were subsequently computed for each semantic status condition. A significant group x emotion interaction was observed for SCC (F(2, 62)=3.215, p=0.049). Subsequent pairwise comparisons indicated more positive P200 for happy prosody in patients relative to HC (p=0.01). No significant effects were observed for PPC.

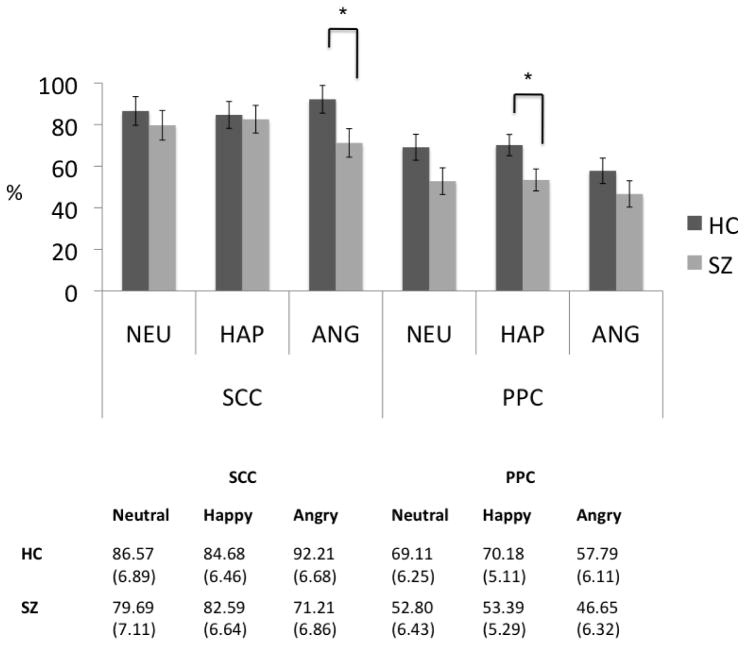

Accuracy data

More correct responses were found in SCC relative to PPC (main effect of semantic status: F(1, 31)=45.606, p<0.001). A significant group x semantic status x emotion interaction (F(2, 62)=4.327, p=0.020) indicated more incorrect responses for angry SCC words (p=0.036) and happy PPC words (p=0.029) in schizophrenia (Figure 4). However, no main effect of group was observed (p>0.05).

Figure 4.

Percentage of correct responses in the recognition of emotional prosody in both semantic content condition (SCC) and pure prosody condition (PPC) in healthy controls (HC) and schizophrenia patients (SZ).

Correlational analyses

Two-tailed Spearman’s rho correlation analyses were conducted in an exploratory analysis of the relationship between schizophrenia abnormalities in P50 (happy PPC), N100 (neutral, happy, and angry SCC; neutral PPC) and P200 (happy SCC) amplitude at Cz and: 1) clinical symptoms (PANSS), medication (chlorpromazine equivalent) and illness duration; 2) neurocognitive data (WAIS composite scores); 3) behavioral indices of prosody recognition. The significance level was adjusted for multiple comparisons using Bonferroni correction. No significant correlations were found (p>0.05).
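Spearman's rho is the Pearson correlation computed on ranks. The sketch below is a plain standard-library illustration of that definition with average ranks for ties; it is not the routine used in the study, the function names are hypothetical, and the example values are made up.

```python
def ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = shared
        i = j + 1
    return result


def spearman_rho(x, y):
    """Pearson correlation of the ranks of x and y."""
    rx, ry = ranks(x), ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


# Made-up amplitudes and symptom scores for five hypothetical participants.
amplitude = [1.2, 0.4, 2.5, 1.9, 0.8]
symptom = [3, 2, 6, 4, 5]
rho = spearman_rho(amplitude, symptom)
print(round(rho, 3))

# With m exploratory tests, a Bonferroni-corrected threshold is alpha / m.
```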

4. Discussion

This study extended and clarified our previous findings for prosodic sentences (Pinheiro et al., 2013). ERP and behavioral findings showed group differences that spanned the three stages of prosody processing and interacted with the semantic status of words. ERP effects were observed within the first 200 ms. In addition to N100 and P200, we observed prosodic effects in an earlier time window around 50 ms (P50), corroborating the sensitivity of P50, N100 and P200 components to prosodic manipulations in speech sounds (Liu et al., 2012; Paulmann & Kotz, 2008; Paulmann et al., 2010; Pinheiro et al., 2013). Schizophrenia patients showed a markedly different P50, N100 and P200 pattern as a function of both semantic status and emotion type relative to HC.

Reduced P50 amplitude for happy PPC prosody was observed in schizophrenia relative to HC. P50 has been reported in studies of auditory gating (e.g., Boutros et al., 2004) and has been considered an index of the formation of sensory memory traces at the level of the primary auditory cortex (Haenschel et al., 2005). The existing evidence suggests that P50 amplitude may be modulated by the physical properties of the eliciting stimulus (Chen et al., 1997; Ninomiya et al., 2000) and by attention (Erwin et al., 1998). Also, in our previous study with non-verbal vocalizations, emotion effects were found at the level of P50 (Liu et al., 2012). Reduced P50 amplitude has been consistently demonstrated in schizophrenia (e.g., Potter et al., 2006). In our study, reduced P50 for happy PPC in schizophrenia points to abnormal early sensory information processing that is stimulus specific.

Reduced N100 amplitude in schizophrenia was found for both emotional and neutral SCC stimuli, as well as for neutral PPC stimuli. The N100 component is related to early auditory encoding, and its amplitude is modulated by the physical properties of the stimuli and by allocation of attentional resources (Rosburg et al., 2008). P50 and N100 are thought to represent distinct aspects of information processing (Boutros et al., 2004). Considering the functional role of N100 as an index of initial sensory processing of the prosodic signal (Schirmer & Kotz, 2006), these findings support deficits in sensory processing of vocal information (e.g., Pinheiro et al., 2013) that were enhanced when semantic information was present. Given that N100 generators are located mainly in the supratemporal plane and superior temporal gyrus (Näätänen & Picton, 1987), reduced N100 amplitude may reflect functional and structural brain changes in temporal structures that are a central feature of the schizophrenia diagnosis (e.g., Shenton et al., 1992). However, since N100 cannot be directly related to a single cortical process and is influenced by many individual-related variables, we cannot rule out the contributions of other factors, such as attention or arousal.

Specific abnormalities were noted in the second stage of prosody processing as indexed by increased P200 amplitude to happy SCC words only in schizophrenia relative to HC. The P200 is primarily generated in the temporal cortex (such as the planum temporale and the auditory association complex, area 22 – Godey et al., 2001), even though frontal areas are also involved (McCarley et al., 1991). Considering the role of P200 as an index of the emotional salience of a vocal stimulus (Paulmann & Kotz, 2008), as proposed in the multi-stage model of emotional prosody processing (Schirmer & Kotz, 2006), increased P200 for happy prosody might indicate a specific impairment in categorizing happy auditory emotional percepts as “salient”. However, this was the case only when happy prosodic information was embedded in intelligible speech suggesting that sensory cues were used differently in the two conditions. Additionally, given the sensitivity of P200 to task difficulty (increased P200 amplitude related to increased cognitive effort–Lenz et al., 2007), it is plausible that the salience of positive social information was more difficult to extract for schizophrenia patients. Also, given that all stimuli had neutral semantic content but could carry emotional intonation, we cannot rule out the effects of incongruity (semantic vs. prosodic) on P200 amplitude (Scholten et al., 2008).

Two major conclusions arise from the P50, N100 and P200 findings in the current study: 1) the fact that group differences were not observed for all types of prosodic stimuli speaks against a generalized prosodic impairment and suggests that prosodic abnormalities may be dependent on stimulus type; 2) abnormalities in the processing of emotional but not neutral cues seem to be more pronounced when the semantic content of speech is intelligible, suggesting that abnormalities in the processing of semantic and prosodic aspects of voice interact from the early stages of prosody processing.

Behavioral data, indexing the integration of emotionally significant acoustic cues (Schirmer & Kotz, 2006), indicated that emotional prosody recognition was better in SCC relative to PPC in both groups, confirming our initial hypothesis (and also Pinheiro et al., 2013). Given the absence of a memory representation for unintelligible stimuli to facilitate predictive processes, this result likely reflects increased task demands. Additionally, schizophrenia patients made more errors in identifying emotional but not neutral prosody. This result suggests that deficits in recognizing emotional prosody in single words depend both on emotion type and on semantic status.

Finally, we note differences in both ERP and behavioral results reported in this and in our previous study using prosodic sentences (Pinheiro et al., 2013). In our previous study, P200 was increased to happy SCC and PPC sentences and to angry SCC sentences; here, P200 abnormalities were observed only for happy SCC words. These ERP differences suggest that while processing prosody in sentences and words evokes similar ERP components, the processes involved are not identical. They additionally suggest that stimulus complexity may differently impact sensory and early categorization stages of prosody processing.

Furthermore, the overall reduced emotional recognition accuracy observed in the sentences study contrasted with specific deficits in the recognition of angry SCC prosody and happy PPC prosody in single words. Since prosody processing relies on the continuous monitoring of dynamically changing acoustic cues underlying an emotional tone, greater working memory and attention demands exist for sentences vs. single words. Accordingly, sentences were associated with more errors in prosody identification than single words.

Limitations

A limitation of this study is that the sample was composed of medicated patients with chronic schizophrenia. Future research with unmedicated and first-episode patients will overcome some of the limitations associated with medication and chronicity.

Supplementary Material

Acknowledgments

We are grateful to all the participants of this study for their contribution to science. We are also grateful to Elizabeth Thompson and Israel Molina for their help with data acquisition, and to Victoria Choate for her invaluable help with voice recording.

Footnotes

Contributors:

Ana P. Pinheiro and Margaret Niznikiewicz designed the study and wrote the protocol. Ana P. Pinheiro, Margaret Niznikiewicz, Neguine Rezaii and Taosheng Liu collected and analyzed the data. All authors collaborated in the statistical analysis. Andréia Rauber edited the stimuli and completed the acoustic analyses of the stimuli. Paul G. Nestor did the clinical and neuropsychological testing of participants. Ana P. Pinheiro and Margaret Niznikiewicz wrote the first draft of the manuscript. All authors contributed to and have approved the final manuscript.

Author Disclosure:

Conflict of interest: All authors report no competing interests.

Role of the Funding Source: This work was supported by two Grants awarded to A.P.P.: Post-Doctoral Grant no. SFRH/BD/35882/2007 funded by Fundação para a Ciência e a Tecnologia (FCT, Portugal); and Research Grant no. PTDC/PSI-PCL/116626/2010, funded by FCT and FEDER (Fundo Europeu de Desenvolvimento Regional) through the European programs QREN (Quadro de Referência Estratégico Nacional) and COMPETE (Programa Operacional Factores de Competitividade). It was additionally supported by two grants from the National Institute of Mental Health (no. RO1 MH 040799 awarded to R.W.M. and no. RO3 MH 078036 awarded to M.A.N.).


References

- Addington J, Penn D, Woods SW, Addington D, Perkins DO. Facial affect recognition in individuals at clinical high risk for psychosis. Br J Psychiatry. 2008;192(1):67–68. doi: 10.1192/bjp.bp.107.039784. [DOI] [PMC free article] [PubMed] [Google Scholar]

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders: DSM-IV-TR. American Psychiatric Association Press; Washington, DC: 2000. [Google Scholar]

- Andreasen NC. Scale for Assessment of Negative Symptoms (SANS) University of Iowa; Iowa City, IA: 1983. [Google Scholar]

- Andreasen NC. Scale for Assessment of Positive Symptoms (SAPS) University of Iowa; Iowa City, IA: 1984. [Google Scholar]

- Andreasen NC, Endicott J, Spitzer RL, Winokur G. The Family History Method using diagnostic criteria: reliability and validity. Arch Gen Psychiatry. 1977;34:1229–1235. doi: 10.1001/archpsyc.1977.01770220111013. [DOI] [PubMed] [Google Scholar]

- Boersma P, Weenink D. Praat: doing phonetics by computer (Version 5.0.43) [Computer program] 2006 [Google Scholar]

- Boutros NN, Korzyukov O, Jansen B, Feingold A, Bell M. Sensory gating deficits during the mid-latency phase of information processing in medicated schizophrenia patients. Psychiatry Res. 2004;126(3):203–215. doi: 10.1016/j.psychres.2004.01.007.

- Chen CH, Ninomiya H, Onitsuka T. Influence of reference electrodes, stimulation characteristics and task paradigms on auditory P50. Psychiatry Clin Neurosci. 1997;51(3):139–143. doi: 10.1111/j.1440-1819.1997.tb02376.x.

- Coltheart M. The MRC psycholinguistics database. Q J Exp Psychol A. 1981;33A:497–505.

- Couture SM, Penn DL, Roberts DL. The functional significance of social cognition in schizophrenia: a review. Schizophr Bull. 2006;32(Suppl 1):S44–63. doi: 10.1093/schbul/sbl029.

- Edwards J, Pattison PE, Jackson HJ, Wales RJ. Facial affect and affective prosody recognition in first-episode schizophrenia. Schizophr Res. 2001;48(2–3):235–253. doi: 10.1016/s0920-9964(00)00099-2.

- Erwin RJ, Turetsky BI, Moberg P, Gur RC, Gur RE. P50 abnormalities in schizophrenia: relationship to clinical and neuropsychological indices of attention. Schizophr Res. 1998;33(3):157–167. doi: 10.1016/s0920-9964(98)00075-9.

- First MB, Spitzer RL, Gibbon M, Williams JBW. Structured Clinical Interview for DSM-IV Axis II Personality Disorders (SCID-II, version 2.0). Biometrics Research Department, New York State Psychiatric Institute; New York, NY: 1995.

- First MB, Spitzer RL, Gibbon M, Williams JBW. Structured Clinical Interview for DSM-IV Axis I Diagnosis-Patient Edition (SCID-I/P, version 2.0). Biometrics Research Department, New York State Psychiatric Institute; New York, NY: 2002.

- Godey B, Schwartz D, de Graaf JB, Chauvel P, Liegeois-Chauvel C. Neuromagnetic source localization of auditory evoked fields and intracerebral evoked potentials: a comparison of data in the same patients. Clin Neurophysiol. 2001;112(10):1850–1859. doi: 10.1016/s1388-2457(01)00636-8.

- Gratton G, Coles MG, Donchin E. A new method for off-line removal of ocular artifact. Electroencephalogr Clin Neurophysiol. 1983;55(4):468–484. doi: 10.1016/0013-4694(83)90135-9.

- Green MF, Bearden CE, Cannon TD, Fiske AP, Hellemann GS, Horan WP, Kee K, Kern RS, Lee J, Sergi MJ, Subotnik KL, Sugar CA, Ventura J, Yee CM, Nuechterlein KH. Social cognition in schizophrenia, Part 1: performance across phase of illness. Schizophr Bull. 2012;38(4):854–864. doi: 10.1093/schbul/sbq171.

- Haenschel C, Vernon DJ, Dwivedi P, Gruzelier JH, Baldeweg T. Event-related brain potential correlates of human auditory sensory memory-trace formation. J Neurosci. 2005;25(45):10494–10501. doi: 10.1523/JNEUROSCI.1227-05.2005.

- Hollingshead A. Four Factor Index of Social Status. Yale University Social Sciences Library; New Haven, CT: 1976.

- Horan WP, Green MF, DeGroot M, Fiske A, Hellemann G, Kee K, Kern RS, Lee J, Sergi MJ, Subotnik KL, Sugar CA, Ventura J, Nuechterlein KH. Social cognition in schizophrenia, Part 2: 12-month stability and prediction of functional outcome in first-episode patients. Schizophr Bull. 2012;38(4):865–872. doi: 10.1093/schbul/sbr001.

- Kay SR, Fiszbein A, Opler LA. The positive and negative syndrome scale (PANSS) for schizophrenia. Schizophr Bull. 1987;13(2):261–276. doi: 10.1093/schbul/13.2.261.

- Kee KS, Green MF, Mintz J, Brekke JS. Is emotion processing a predictor of functional outcome in schizophrenia? Schizophr Bull. 2003;29(3):487–497. doi: 10.1093/oxfordjournals.schbul.a007021.

- Krivokapić J. Prosodic Planning: Effects of Phrasal Length and Complexity on Pause Duration. J Phon. 2007;35(2):162–179. doi: 10.1016/j.wocn.2006.04.001.

- Kucharska-Pietura K, David A, Masiak K, Phillips M. Perception of facial and vocal affect by people with schizophrenia in early and late stages of illness. Br J Psychiatry. 2005;187:523–528. doi: 10.1192/bjp.187.6.523.

- Laurens KR, Kiehl KA, Ngan ET, Liddle PF. Attention orienting dysfunction during salient novel stimulus processing in schizophrenia. Schizophr Res. 2005;75(2–3):159–171. doi: 10.1016/j.schres.2004.12.010.

- Leitman DI, Laukka P, Juslin PN, Saccente E, Butler P, Javitt DC. Getting the cue: sensory contributions to auditory emotion recognition impairments in schizophrenia. Schizophr Bull. 2010;36(3):545–556. doi: 10.1093/schbul/sbn115.

- Leitman DI, Wolf DH, Laukka P, Ragland JD, Valdez JN, Turetsky BI, Gur RE, Gur RC. Not pitch perfect: sensory contributions to affective communication impairment in schizophrenia. Biol Psychiatry. 2011;70(7):611–618. doi: 10.1016/j.biopsych.2011.05.032.

- Lenz D, Schadow J, Thaerig S, Busch NA, Herrmann CS. What’s that sound? Matches with auditory long-term memory induce gamma activity in human EEG. Int J Psychophysiol. 2007;64(1):31–38. doi: 10.1016/j.ijpsycho.2006.07.008.

- Li H, Chan RC, McAlonan GM, Gong QY. Facial emotion processing in schizophrenia: a meta-analysis of functional neuroimaging data. Schizophr Bull. 2010;36(5):1029–1039. doi: 10.1093/schbul/sbn190.

- Liu T, Pinheiro AP, Deng G, Nestor PG, McCarley RW, Niznikiewicz MA. Electrophysiological insights into processing nonverbal emotional vocalizations. Neuroreport. 2012;23(2):108–112. doi: 10.1097/WNR.0b013e32834ea757.

- McCarley RW, Faux SF, Shenton ME, Nestor PG, Adams J. Event-related potentials in schizophrenia: their biological and clinical correlates and a new model of schizophrenic pathophysiology. Schizophr Res. 1991;4(2):209–231. doi: 10.1016/0920-9964(91)90034-o.

- Menon V, Anagnoson RT, Mathalon DH, Glover GH, Pfefferbaum A. Functional neuroanatomy of auditory working memory in schizophrenia: relation to positive and negative symptoms. Neuroimage. 2001;13(3):433–446. doi: 10.1006/nimg.2000.0699.

- Mitchell RL, Elliott R, Barry M, Cruttenden A, Woodruff PW. Neural response to emotional prosody in schizophrenia and in bipolar affective disorder. Br J Psychiatry. 2004;184:223–230. doi: 10.1192/bjp.184.3.223.

- Naatanen R, Picton T. The N1 wave of the human electric and magnetic response to sound: a review and an analysis of the component structure. Psychophysiology. 1987;24(4):375–425. doi: 10.1111/j.1469-8986.1987.tb00311.x.

- Nestor PG, Han SD, Niznikiewicz M, Salisbury D, Spencer K, Shenton ME, McCarley RW. Semantic disturbance in schizophrenia and its relationship to the cognitive neuroscience of attention. Biol Psychol. 2001;57(1–3):23–46. doi: 10.1016/s0301-0511(01)00088-6.

- Ninomiya H, Sato E, Onitsuka T, Hayashida T, Tashiro N. Auditory P50 obtained with a repetitive stimulus paradigm shows suppression to high-intensity tones. Psychiatry Clin Neurosci. 2000;54(4):493–497. doi: 10.1046/j.1440-1819.2000.00741.x.

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9(1):97–113. doi: 10.1016/0028-3932(71)90067-4.

- Paulmann S, Kotz SA. Early emotional prosody perception based on different speaker voices. Neuroreport. 2008;19(2):209–213. doi: 10.1097/WNR.0b013e3282f454db.

- Paulmann S, Seifert S, Kotz SA. Orbito-frontal lesions cause impairment during late but not early emotional prosodic processing. Soc Neurosci. 2010;5(1):59–75. doi: 10.1080/17470910903135668.

- Pinheiro AP, Del Re E, Mezin J, Nestor PG, Rauber A, McCarley RW, Goncalves OF, Niznikiewicz MA. Sensory-based and higher-order operations contribute to abnormal emotional prosody processing in schizophrenia: an electrophysiological investigation. Psychol Med. 2012:1–16. doi: 10.1017/S003329171200133X.

- Poole JH, Tobias FC, Vinogradov S. The functional relevance of affect recognition errors in schizophrenia. J Int Neuropsychol Soc. 2000;6(6):649–658. doi: 10.1017/s135561770066602x.

- Potter D, Summerfelt A, Gold J, Buchanan RW. Review of clinical correlates of P50 sensory gating abnormalities in patients with schizophrenia. Schizophr Bull. 2006;32(4):692–700. doi: 10.1093/schbul/sbj050.

- Rosburg T, Boutros NN, Ford JM. Reduced auditory evoked potential component N100 in schizophrenia--a critical review. Psychiatry Res. 2008;161(3):259–274. doi: 10.1016/j.psychres.2008.03.017.

- Rossell SL, Boundy CL. Are auditory-verbal hallucinations associated with auditory affective processing deficits? Schizophr Res. 2005;78(1):95–106. doi: 10.1016/j.schres.2005.06.002.

- Schirmer A, Kotz SA. Beyond the right hemisphere: brain mechanisms mediating vocal emotional processing. Trends Cogn Sci. 2006;10(1):24–30. doi: 10.1016/j.tics.2005.11.009.

- Scholten MR, Aleman A, Kahn RS. The processing of emotional prosody and semantics in schizophrenia: relationship to gender and IQ. Psychol Med. 2008;38(6):887–898. doi: 10.1017/S0033291707001742.

- Shea TL, Sergejew AA, Burnham D, Jones C, Rossell SL, Copolov DL, Egan GF. Emotional prosodic processing in auditory hallucinations. Schizophr Res. 2007;90:214–220. doi: 10.1016/j.schres.2006.09.021.

- Shenton ME, Kikinis R, Jolesz FA, Pollak SD, LeMay M, Wible CG, Hokama H, Martin J, Metcalf D, Coleman M, et al. Abnormalities of the left temporal lobe and thought disorder in schizophrenia. A quantitative magnetic resonance imaging study. N Engl J Med. 1992;327(9):604–612. doi: 10.1056/NEJM199208273270905.

- Silver H, Feldman P, Bilker W, Gur RC. Working memory deficit as a core neuropsychological dysfunction in schizophrenia. Am J Psychiatry. 2003;160(10):1809–1816. doi: 10.1176/appi.ajp.160.10.1809.

- van Petten C. Words and sentences: event-related brain potential measures. Psychophysiology. 1995;32(6):511–525. doi: 10.1111/j.1469-8986.1995.tb01228.x.

- Wechsler D. Wechsler Adult Intelligence Scale: Administration and Scoring Manual. 4th ed. The Psychological Corporation; San Antonio: 2008.

- Wheeldon L, Lahiri A. Prosodic units in speech production. J Mem Lang. 1997;37(3):356–381.

- Wilson MD. The MRC psycholinguistic database: machine readable dictionary, version 2. Behav Res Meth Instr. 1998;20:6–11.
