Abstract

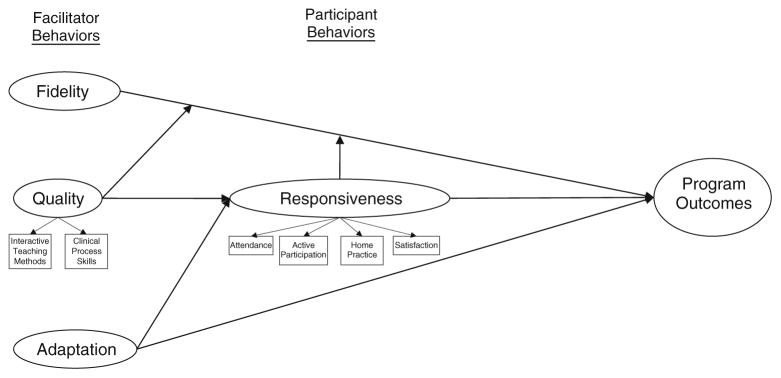

Considerable evidence indicates that variability in implementation of prevention programs is related to the outcomes achieved by these programs. However, while implementation has been conceptualized as a multidimensional construct, few studies examine more than a single dimension, and no theoretical framework exists to guide research on the effects of implementation. We seek to address this need by proposing a theoretical model of the relations between the dimensions of implementation and outcomes of prevention programs that can serve to guide future implementation research. In this article, we focus on four dimensions of implementation, which we conceptualize as behaviors of program facilitators (fidelity, quality of delivery, and adaptation) and behaviors of participants (responsiveness) and present the evidence supporting these as predictors of program outcomes. We then propose a theoretical model by which facilitator and participant dimensions of implementation influence participant outcomes. Finally, we provide recommendations and directions for future implementation research.

Keywords: Program implementation, Fidelity, Quality, Adaptation, Participant responsiveness, Program outcomes

Evidence-based preventive interventions have the capacity to change developmental trajectories in positive ways across multiple domains (e.g., mental health, substance use, risky sexual behavior, and academic achievement), delivery settings (e.g., school and community), and populations served as defined by level of risk (i.e., universal, indicated, and selected) (NRC/IOM 2009). To achieve these effects, however, it is essential that programs are implemented well (Durlak 1998). Previous reviews (Dane and Schneider 1998; Durlak and DuPre 2008; Dusenbury et al. 2003) have identified eight dimensions of program implementation. These include fidelity, dosage, quality, participant responsiveness, program differentiation, monitoring of control conditions, program reach, and adaptation (see Table 1). While there are differences in how studies have labeled and defined dimensions of implementation, we elected to follow Durlak and DuPre’s model, which considered “implementation” as a higher-order category, subsuming the other dimensions. We chose this model because, while the others provided useful information about the tensions between fidelity and adaptation (Dane and Schneider 1998; Dusenbury et al. 2003), Durlak and DuPre was the only review to include adaptation as a dimension distinct from fidelity.

Table 1.

Dimensions of implementation

| Dimension | Definition | Other labels |
|---|---|---|
| Differentiation | Distinctiveness of a program’s theory and practices from other available programs | Program uniqueness |
| Dosage | Number of program sessions delivered | Quantity; Intervention strength |
| Reach | Extent to which participants being served by the program are representative of the target population | Participation rates; Program scope |
| Monitoring^a | Documenting what services members of intervention and control groups receive beyond the program being evaluated | Treatment contamination; Usual care; Alternative services |
| Fidelity | Whether prescribed program components were delivered as instructed in program protocol | Adherence; Compliance; Integrity; Faithful replication; Completeness |
| Quality | Teaching and clinical skill with which the program is implemented | Competence |
| Adaptation | Additions made to the program during implementation | Modification; Reinvention |
| Responsiveness | Involvement and interest in the program | Engagement; Attendance; Retention; Satisfaction; Home practice completion; Dosage |

^a While Durlak and DuPre (2008) referenced “Monitoring of control/comparison conditions,” monitoring of additional services received is necessary in the intervention group(s) as well.

Of these eight dimensions, four occur within the delivery of program sessions, and as a result, constitute potential sources of disconnect between the program as designed and that which is implemented. Three of these dimensions, fidelity (i.e., adherence to the program curriculum), quality of delivery (i.e., the skill with which facilitators deliver material and interact with participants), and program adaptation (i.e., changes made to the program, particularly material that is added to the program), are determined by program facilitators. A fourth dimension, participant responsiveness (i.e., participants’ level of enthusiasm for and participation in an intervention), is determined by participants. Because these dimensions serve as potential sources of variability from the program as designed and across intervention groups, and each has been demonstrated to influence outcomes (Durlak and DuPre 2008), these were selected for inclusion in the proposed model. It is unlikely that these dimensions of implementation are unrelated and function independently to influence outcomes (Rohrbach et al. 2010). Nonetheless, program evaluations have rarely examined more than one dimension in a single study and thus have not untangled possible relations between them. We bring attention to this gap in implementation research by proposing a theoretical framework of how facilitator and participant behaviors work together to produce positive outcomes (see Fig. 1).

Fig. 1.

An integrated theoretical model of program implementation

As systematic reviews of implementation have been conducted recently (Dane and Schneider 1998; Durlak and DuPre 2008; Dusenbury et al. 2003), we examined the studies identified by the reviews evaluating associations between implementation and outcomes. In the first section of the paper, we summarize the findings of those articles linking participant responsiveness and facilitator fidelity, quality, and adaptation with outcomes. We begin with participant responsiveness to provide justification for the inclusion of studies linking facilitator implementation with responsiveness, laying the foundation for mediational hypotheses in the following section. Next, we propose an integrated theoretical model with pathways from facilitator dimensions to participant responsiveness, and from each of these to outcomes. Finally, we suggest future directions for implementation research, with special attention to measurement.

Participant Responsiveness

Definition and Measurement

Dane and Schneider (1998) identified participant responsiveness, defined as “levels of participation and enthusiasm,” as an important component of implementation (p. 45). Prevention researchers have operationalized responsiveness using indicators such as number of sessions attended, active participation, satisfaction, and home practice completion (i.e., participants’ attempts at practicing assigned skills at home).

Participant Responsiveness Indicators and Program Outcomes

The number of sessions attended by participants, the most frequently examined indicator of responsiveness, is consistently associated with stronger program effects (e.g., Blake et al. 2001; Kosterman et al. 2001; Prado et al. 2006). Participant reports of program satisfaction, percent of home practice assignments completed, and facilitator reports of participants’ active participation in sessions have also been associated with program outcomes (Baydar et al. 2003; Blake et al. 2001; Garvey et al. 2006; Nye et al. 1995; Prado et al. 2006; Tolan et al. 2002).

Facilitator Implementation

In the sections below, we briefly define fidelity, quality, and adaptation, and discuss issues related to the measurement of each of these facilitator dimensions of implementation. We provide support for the associations between each dimension and outcomes. Further, because responsiveness is associated with outcomes, and is important to our theoretical model, we examine the literature linking fidelity, quality, and adaptation with responsiveness.

Fidelity

Definition and Measurement

Fidelity, sometimes referred to as adherence or program integrity, is the extent to which specified program components were delivered as prescribed and is the most commonly measured dimension of implementation (Durlak and DuPre 2008). While fidelity is generally operationalized as a percentage of the manualized content delivered (Dane and Schneider 1998) and/or the amount of time dedicated to each of the core components (Elliott and Mihalic 2004; Lillehoj et al. 2004), no widely-accepted standards of measurement exist. Fidelity measurement should include identifying “core components” of the manualized material and assessing whether those core components were delivered (e.g., Forgatch et al. 2005; Spoth et al. 2002) or the amount of time dedicated to each of the core components (Elliott and Mihalic 2004; Lillehoj et al. 2004). To assess fidelity in this way, researchers must first have conducted studies to isolate what the driving elements of a program are, yet efforts to empirically validate hypothesized core components are quite rare (Dane and Schneider 1998).
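As a minimal sketch of the percentage-based operationalization described above, the function below scores fidelity as the share of prescribed core components that were delivered in a session. The function name and the component labels are hypothetical and chosen only for illustration; no particular program's manual is implied.

```python
def fidelity_percentage(prescribed, delivered):
    """Percent of prescribed core components that were actually delivered.

    Content delivered but not prescribed (a possible adaptation) is ignored
    here, so additions neither raise nor lower the fidelity score.
    """
    prescribed_set = set(prescribed)
    if not prescribed_set:
        raise ValueError("at least one prescribed component is required")
    covered = prescribed_set & set(delivered)
    return 100.0 * len(covered) / len(prescribed_set)


# Hypothetical session: five prescribed core components, three delivered,
# plus one activity that is not in the manual.
prescribed_components = [
    "group_rules", "skill_demo", "role_play", "home_practice_review", "wrap_up",
]
delivered_components = ["group_rules", "skill_demo", "wrap_up", "icebreaker_game"]

score = fidelity_percentage(prescribed_components, delivered_components)
print(f"Fidelity: {score:.0f}% of core components delivered")
```

Scoring only against the prescribed list keeps fidelity separate from any added material, which matters for the distinction between fidelity and adaptation discussed later in the article.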

Fidelity and Program Outcomes

Despite widespread agreement that measuring fidelity is a crucial component of evaluating intervention effects, the majority of studies do not report data on fidelity or link fidelity to outcomes (Dane and Schneider 1998; Durlak and DuPre 2008). Studies that have examined the impact of fidelity on outcomes have shown that high levels of fidelity are associated with positive program effects (e.g., Botvin et al. 1989; Forgatch et al. 2005; Ialongo et al. 1999). Durlak and DuPre’s review of nearly 500 individual studies and meta-analyses found that only 59 studies assessed the relations between fidelity and outcomes. Of these 59 studies, 76% reported that fidelity had a significant positive association with targeted program outcomes. Durlak and DuPre also found that positive program results were visible in programs with at least 60% fidelity coverage. However, several studies report weak or null relations between implementation fidelity and intervention outcomes (Resnicow et al. 1998; Spoth et al. 2002). Durlak and DuPre reported that null findings can be at least partly attributed to a lack of variability in fidelity, which can decrease the power to detect between-group differences.

Quality of Delivery

Definition and Measurement

Quality of delivery has been broadly defined as the processes used to convey program material to participants (Dusenbury et al. 2003), including facilitators’ use of interactive teaching methods and clinical process skills (Dane and Schneider 1998; Durlak and DuPre 2008; Forgatch et al. 2005). Interactive teaching, which elicits sharing and engages participants in the learning process, builds participant competence to use program skills effectively (Giles et al. 2008; Tobler and Stratton 1997). Evidence of the effectiveness of interactive teaching is abundant in the education literature (Slavin 1990; Widaman and Kagan 1987). The enthusiasm and clarity with which facilitators present program content are also important aspects of quality of delivery (Dane and Schneider 1998; Hansen et al. 1991). Clinical processes such as reflective listening and summarizing promote a safe and supportive environment that encourages participation and facilitates learning (Forgatch et al. 2005). Fostering cohesion among participants is also an important dimension of quality for preventive interventions that are delivered in a group format (Coatsworth et al. 2006b; Dillman Carpentier et al. 2007). The importance of clinical processes has been emphasized in the psychotherapy process-outcome research, with considerable evidence that empathy, acceptance, and other relationship factors are essential to the therapeutic environment (see Hubble et al. 1999, for review).

Quality of Delivery and Participant Responsiveness

Among extant research, there is evidence that quality is associated with participant responsiveness (Eddy et al. 1998; Rohrbach et al. 2010). For example, facilitator support in the delivery of a manualized evidence-based parent training program encouraged parents’ attendance and active participation in sessions (Patterson and Forgatch 1985). Participant-facilitator relationship quality has been associated with active participation, attendance, and homework completion (Shelef et al. 2005), and the amount of individual attention facilitators pay to participants has predicted attendance (Charlebois et al. 2004b). Among Mexican-origin families in a family-based preventive intervention, facilitator support and skill in promoting group cohesion predicted attendance and partially explained differences in attendance rates (Dillman Carpentier et al. 2007).

Quality of Delivery and Program Outcomes

There is minimal research examining associations between quality of delivery and program outcomes. For example, in a review of 59 studies examining the effect of implementation on program outcomes, only 6 examined the effects of quality of delivery on program effects (Durlak and DuPre 2008). However, the few prevention studies examining effects of quality of delivery on outcomes have found a positive association. For example, quality of process skills used to deliver family-based programs predicted improvements in parenting, which, in turn, predicted reductions in child behavior problems (Eames et al. 2009; Forgatch et al. 2005). Quality of delivery has also been associated with decreases in adolescent substance use (Giles et al. 2008; Hansen et al. 1991; Kam et al. 2003; Shelef et al. 2005) and conduct problems (Conduct Problems Prevention Research Group 1999).

Adaptation

Definition and Measurement

Facilitator adaptation, which at times has been referred to as reinvention, modification, or proficiency, is the extent to which facilitators add to or modify the content and processes prescribed in the manual. Typically, prevention researchers have expressed tremendous concern that facilitators’ adaptation will reduce program effectiveness (Elliott and Mihalic 2004). However, the negative view of facilitator adaptation is likely due to its standard characterization as lack of fidelity. Some researchers have argued that adaptation should be defined as additions to the program, rather than a lack of fidelity (Blakely et al. 1987; McGraw et al. 1996; Parcel et al. 1991). In doing so, it becomes possible to untangle what might be a facilitator contribution to the curriculum, based on intimate knowledge of the local population, from an inability to implement the program as designed.

Prevention researchers generally appreciate that cultural mismatch will undermine program effectiveness (Botvin 2004). Moreover, as context changes over time and as programs are offered to populations that differ from those with which they were originally validated, adaptation may be necessary to preserve program effectiveness (Castro et al. 2004; Rogers 1995). This may be especially true when components of the program are confusing, irrelevant, or in conflict with the new population’s values (Emshoff et al. 2003). Facilitators also recognize the importance of adapting programs to the ecological niche in which they are working, to make a program culturally relevant for diverse audiences (Dusenbury et al. 2005; Ringwalt et al. 2004). Thus, the possible benefits of adaptation include increased ownership on the part of community implementers, greater perceived relevance on the part of participants, and a closer match between the program and the ecological niche (Emshoff et al. 2003; Kumpfer et al. 2002). These benefits, in turn, can foster program sustainability, participant attendance and participation, and behavior change by providing the services that address participants’ most pressing needs (Botvin 2004; Castro et al. 2004; Cross et al. 1989; Kumpfer et al. 2002).

As the field of prevention recognizes the need for culturally competent interventions, researchers have reflected about how to adapt programs in ways that make them more relevant for a given population. Community-based participatory approaches and cultural matching of facilitators to participants are two of the strategies that have been advocated to increase cultural relevance of prevention programs (e.g., Castro et al. 2004; Wilson and Miller 2003). Underlying each approach is the belief that community members, in the role of program co-designers or facilitators, possess cultural expertise that may enable them to adapt the program to increase its relevance for the population (Brach and Fraser 2000; Palinkas et al. 2009). While prospectively adapting programs for different groups may be useful in ensuring cultural relevance, there is also the recognition that, as culture occurs on the local level, each program setting is unique and adaptations will continue to occur beyond what is planned by program co-designers (Castro et al. 2004). Failing to document these adaptations through careful study of implementation precludes generalizations about programs across settings (Boruch and Gomez 1977). To the extent that adaptations go unmeasured, the field of prevention will be limited in its understanding of what is culturally appropriate for given contexts (Backer 2002). Finally, the material facilitators add may be either constructive or iatrogenic (Dusenbury et al. 2005). Thus, facilitator adaptations that occur within prevention sessions must be examined.

Some researchers have begun to measure adaptation in ways that distinguish adaptation from lack of fidelity (Blakely et al. 1987; McGraw et al. 1996; Parcel et al. 1991). By defining adaptation as additions or modifications to the program, rather than deviance from the program design, these researchers discovered positive associations between adaptation and study outcomes. Lacking a standardized measure for adaptation, Blakely and colleagues instituted a series of systematic guidelines as decision points. First, independent raters assessed whether each diversion from the manual was an adaptation or simply a lack of fidelity. Once an event was determined to be an adaptation, raters coded it as an addition or a modification (although some may argue that a modification would amount to an instance of simultaneous adaptation and lack of fidelity). Additions were defined as any activity or material that was not part of the original program. An example of an additive adaptation was when a facilitator lined children up at the end of a session and asked individualized questions to reinforce what they learned and provide positive feedback. Modifications were defined as activities that were part of the program, but implemented in a way that was beyond prescribed variations. An example of this was a program activity that required victims of youth crimes to attend hearings and offer sentencing recommendations. One community did not require victims to attend, but instead asked victims to write a statement about the hearing outcome. Finally, raters assessed the magnitude of the adaptation on a 3-point scale, based on the amount of change and importance to the program theory.
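The sequence of rater decisions described above (adaptation versus lack of fidelity, then addition versus modification, then a 3-point magnitude rating) can be sketched as a small coding routine. This is an illustrative reconstruction, not the instrument used by Blakely and colleagues; the class names, function name, argument names, and the magnitude values in the examples are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class DeviationType(Enum):
    LACK_OF_FIDELITY = "lack of fidelity"
    ADDITION = "addition"          # not part of the original program
    MODIFICATION = "modification"  # in the program, delivered beyond prescribed variations


@dataclass(frozen=True)
class CodedDeviation:
    description: str
    kind: DeviationType
    magnitude: Optional[int]  # 1-3 for adaptations, None for lack of fidelity


def code_deviation(description, is_adaptation, part_of_original_program, magnitude=None):
    """Apply the three decision points to one observed deviation from the manual."""
    # Decision 1: adaptation, or simply a lack of fidelity?
    if not is_adaptation:
        return CodedDeviation(description, DeviationType.LACK_OF_FIDELITY, None)
    # Decision 3 is required for every adaptation: magnitude on a 3-point scale.
    if magnitude not in (1, 2, 3):
        raise ValueError("adaptations need a magnitude rating of 1, 2, or 3")
    # Decision 2: addition (new material) or modification (changed program activity)?
    kind = DeviationType.MODIFICATION if part_of_original_program else DeviationType.ADDITION
    return CodedDeviation(description, kind, magnitude)


# The two examples from the text; the magnitude ratings are invented for illustration.
lineup_questions = code_deviation(
    "Facilitator asks each child an individualized review question at the end",
    is_adaptation=True, part_of_original_program=False, magnitude=1,
)
written_statement = code_deviation(
    "Victims write a statement instead of attending the hearing",
    is_adaptation=True, part_of_original_program=True, magnitude=2,
)
print(lineup_questions.kind.value, written_statement.kind.value)
```

Keeping "lack of fidelity" as its own category, rather than a low score on adaptation, mirrors the point that the two should be measured separately.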

Adaptation and Participant Responsiveness

The association between adaptation and responsiveness was examined in the Delinquency Research and Development Project over a period of three school semesters (Kerr et al. 1985). It was hypothesized that fidelity would be positively associated with student participation and that fidelity would increase over time as facilitators became more familiar with the material. Contrary to both hypotheses, fidelity decreased over time, but student participation continued to increase. In post-intervention interviews with facilitators, the authors found that, with experience, facilitators maintained program strategies they perceived to be effective in engaging students in the curriculum, dropped strategies they perceived to be ineffective, and supplemented the program with external strategies acquired from previous experiences. Thus, it appears that adaptations that are responsive to participant needs may increase responsiveness.

Adaptation and Program Outcomes

According to Durlak and DuPre (2008), all studies that have assessed the relation between adaptation and outcomes reported a positive effect of adaptation. In the Child and Adolescent Trial for Cardiovascular Health (CATCH) program, as facilitators modified more program activities, students experienced greater increases in dietary knowledge and self-efficacy, which in turn predicted distal outcomes (McGraw et al. 1996). A decrease in adaptation during the final year of the study corresponded to reductions in previous gains. The authors concluded that adaptations may have been facilitators’ attempts to tailor the program to the unique context and needs of participants, and as such, had a beneficial influence on outcomes, which disappeared once facilitators eliminated adaptations.

Two studies have examined the relative influence of adaptation and fidelity. Parcel and colleagues (1991) compared the influence of fidelity and adaptation (in this case, operationalized as additions to the curriculum to meet the needs of participants) on program outcomes. They found adaptation was associated with greater change in the targeted attitudes, knowledge, and behaviors than was fidelity. Moreover, among new facilitators, fidelity and adaptation were each associated with increases in participant knowledge, while for more experienced facilitators, only adaptation predicted outcomes. Blakely and colleagues (1987) also found that the association between adaptation and outcomes was of the same magnitude as the association between fidelity and outcomes. Moreover, because two adaptation subscales, modifications and additions, were measured separately, the study was able to compare them. Modifications were not significantly associated with program outcomes. Additions to the program, on the other hand, accounted for improvements, above and beyond the influence of fidelity. Further, additive adaptations appeared to occur more frequently in the context of high fidelity.

Introduction to the Conceptual Model

Whereas several dimensions of facilitator program implementation appear to be important for participant responsiveness and program outcomes, there are few instances where researchers have examined more than one in a single study (Durlak and DuPre 2008). Studying implementation variables in isolation limits our understanding of how they influence each other and their relative influence on outcomes. While there is now considerable evidence that different dimensions of implementation are related to outcomes (Durlak and DuPre 2008), more complex questions about the pathways by which implementation affects outcomes have yet to be addressed. Further, within the small number of studies examining multiple dimensions of implementation, some have found dimensions to be correlated with one another, suggesting the possibility of causal mechanisms between the dimensions (e.g., Charlebois et al. 2004b; Kerr et al. 1985; Rohrbach et al. 2010; Tolan et al. 2002). As a first step in developing such a research agenda, we have proposed a theoretical model that 1) distinguishes between two broad categories of implementation (i.e., facilitator or participant behaviors) and 2) suggests how the different dimensions of implementation jointly influence program outcomes (see Fig. 1). In the sections that follow, we elaborate on the theoretical pathways proposed by the model.

Interactions Between Fidelity and Responsiveness in Producing Program Outcomes

Our theoretical model proposes that the effects of facilitator implementation (i.e., fidelity, quality, and adaptation) on program outcomes are mediated or moderated by participant responsiveness. First, many studies have provided evidence that fidelity is positively associated with outcomes (e.g., Botvin et al. 1989), whereas others have found weak or null support for the association (Resnicow et al. 1998; Spoth et al. 2002). Researchers have attributed the variability in the association between fidelity and outcomes to several explanations, including imprecise measurement and restricted variance when implementation is highly monitored (Durlak and DuPre 2008). As proposed in our theoretical model, another possible explanation is that the influence of fidelity on outcomes is moderated by participant responsiveness. Programs characterized by high levels of fidelity but low levels of attendance or active participation are unlikely to achieve their intended outcomes (Hansen et al. 1991).
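One way to make the moderation hypothesis concrete is a regression with a product term. The specification below is a standard illustrative form and is an assumption of this sketch, not an equation stated in the model; the symbols $Y_i$ (outcome for participant $i$), $F_i$ (fidelity), $R_i$ (responsiveness), and the coefficients $\beta$ are notation introduced here.

$$
Y_i = \beta_0 + \beta_1 F_i + \beta_2 R_i + \beta_3 \,(F_i \times R_i) + \varepsilon_i
$$

$$
\frac{\partial Y_i}{\partial F_i} = \beta_1 + \beta_3 R_i
$$

Under this sketch, moderation corresponds to $\beta_3 \neq 0$. With $\beta_3 > 0$, the effect of fidelity grows as responsiveness increases, which is consistent with high-fidelity programs showing little effect when attendance or active participation is low.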

Interactions Between Fidelity and Quality of Delivery in Producing Program Outcomes

Emerging evidence supports the importance of quality of delivery on outcomes (Durlak and DuPre 2008). However, when fidelity is poor, the influence of quality on outcomes is likely to diminish or fade completely. If a facilitator fails to deliver program core components, quality itself would not produce behavioral changes. Similarly, the positive effects of high levels of program fidelity depend on a facilitator delivering core components clearly, comprehensibly, and enthusiastically. Thus, the weak or null associations between fidelity and outcomes mentioned above may also be attributable to poor program delivery quality (Resnicow et al. 1998; Spoth et al. 2002). In this regard, we propose that quality functions as a moderating influence on the association between fidelity and outcomes.

Mediational Influence of Responsiveness in the Relation Between Quality and Outcomes

A growing body of literature on participant engagement (i.e., recruitment and retention) in preventive interventions highlights the importance of responsiveness, as well as challenges associated with facilitating high levels of participation, especially for participants from minority and low-income backgrounds (Prado et al. 2006). A critical question for successful program implementation is thus how to promote high levels of responsiveness. Much of the research examining predictors of participation has focused on participant characteristics, such as sociocultural and behavioral risk factors, including education level, race/ethnicity, and income (Coatsworth et al. 2006a; Haggerty et al. 2002). Although relatively unexplored, quality of delivery may be a critical influence on participant responsiveness to programs (Coatsworth et al. 2006b), with emerging research showing that quality of delivery has a significant effect on participant responsiveness (Diamond et al. 1999; Patterson and Forgatch 1985). Maximizing quality of delivery to increase participant responsiveness within interventions targeting low-income, ethnically diverse, high-risk participants may be particularly important (Coatsworth et al. 2006b; Dillman Carpentier et al. 2007). Our theoretical model proposes that the link between quality and outcomes is indirect, mediated by increases in participant responsiveness.
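The mediational hypothesis can be sketched with the usual pair of linear equations for a single mediator. This is an assumed illustrative specification rather than one given in the text; $Q_i$ (quality of delivery), $R_i$ (participant responsiveness), $Y_i$ (outcome), and the coefficients $a$, $b$, and $c'$ are notation introduced here.

$$
R_i = a_0 + a\,Q_i + \varepsilon_{R,i}
$$

$$
Y_i = c_0 + c'\,Q_i + b\,R_i + \varepsilon_{Y,i}
$$

$$
\text{indirect effect of quality} = a \times b
$$

In these terms, a fully indirect link between quality and outcomes would appear as a nonzero product $ab$ together with a direct coefficient $c'$ near zero.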

Mediational Influence of Responsiveness in the Relation Between Adaptation and Outcomes

Similarly, evidence also links aspects of program adaptation with both responsiveness and program outcomes (Blakely et al. 1987; Kerr et al. 1985; McGraw et al. 1996; Parcel et al. 1991). To the extent that facilitators adapt programs to make them more congruent with the local context, participants may feel that their needs and values are being heard and respected, thus increasing their sense of attachment to the program’s goals and methods (McGraw et al. 1996). Indeed, evidence suggests that culturally blind interventions often erode program effects by failing to engage communities (Kumpfer et al. 2002). Facilitators’ use of adaptation may enhance the cultural relevance of the program, thereby increasing attendance and active participation, and in turn, improving outcomes. In addition, especially to the extent that adaptations occur in response to participants’ explicitly stated concerns, facilitator additions to the program may directly improve outcomes by addressing critical needs and finding relevant solutions (Parcel et al. 1991; Pentz et al. 1990). We propose that adaptation may have both direct and indirect effects on outcomes, mediated by increases in participant responsiveness.
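The distinction between direct and indirect effects of adaptation can be illustrated with a small simulation. The sketch below uses the product-of-coefficients approach with ordinary least squares, which is one common way to decompose such effects and is an assumption here, not a method named in the text. The data are simulated, the coefficient values (0.5, 0.4, 0.3) are arbitrary, and all function and variable names are hypothetical.

```python
import random


def mean(xs):
    return sum(xs) / len(xs)


def cov(xs, ys):
    mx, my = mean(xs), mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / len(xs)


def simple_slope(x, y):
    """OLS slope of y regressed on x (intercept included)."""
    return cov(x, y) / cov(x, x)


def two_predictor_slopes(x, m, y):
    """OLS slopes of y regressed on x and m, from the 2x2 normal equations."""
    sxx, smm, sxm = cov(x, x), cov(m, m), cov(x, m)
    sxy, smy = cov(x, y), cov(m, y)
    det = sxx * smm - sxm ** 2
    slope_x = (sxy * smm - smy * sxm) / det
    slope_m = (smy * sxx - sxy * sxm) / det
    return slope_x, slope_m


def mediation_effects(adaptation, responsiveness, outcome):
    a = simple_slope(adaptation, responsiveness)            # adaptation -> responsiveness
    direct, b = two_predictor_slopes(adaptation, responsiveness, outcome)
    total = simple_slope(adaptation, outcome)               # adaptation -> outcome, unadjusted
    return {"a": a, "b": b, "direct": direct, "indirect": a * b, "total": total}


# Simulated participants; every number below is invented for illustration.
random.seed(1)
n = 2000
adaptation = [random.gauss(0, 1) for _ in range(n)]
responsiveness = [0.5 * x + random.gauss(0, 1) for x in adaptation]
outcome = [0.3 * x + 0.4 * r + random.gauss(0, 1)
           for x, r in zip(adaptation, responsiveness)]

effects = mediation_effects(adaptation, responsiveness, outcome)
for name, value in effects.items():
    print(f"{name}: {value:.3f}")
```

With OLS estimates from the same sample, the total effect equals the direct effect plus the indirect effect, so the printout shows how much of the association between adaptation and the outcome runs through responsiveness in the simulated data.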

Summary and Directions for Future Research

An impressive body of empirical research has demonstrated that preventive interventions are effective in reducing a wide range of problem outcomes for children (NRC/IOM 2009), but only if these interventions are implemented well (Wilson and Lipsey 2007). Implementation studies have largely been limited to investigations of the effects of a single dimension (Durlak and DuPre 2008) and have paid little attention to some dimensions (Dusenbury et al. 2005). Further, the field of prevention needs a theoretical framework to guide research on how different aspects of implementation may function in concert to affect outcomes (Tolan et al. 2002). To address this need, we integrate implementation research findings and propose a theoretical model highlighting pathways by which the different dimensions of implementation influence program outcomes. We have proposed several specific hypotheses; most notably that 1) the effect of fidelity is moderated by both quality and participant responsiveness, 2) the effect of quality on outcomes is mediated by participant responsiveness, and 3) adaptation has both direct and indirect effects on participant outcomes through responsiveness. Including multiple aspects of implementation in a single model is needed to untangle the effects of implementation on outcomes (Kerr et al. 1985). We introduce pathways in our theoretical model as a starting point for the systematic study of how different aspects of implementation function conjointly to affect outcomes. Testing these pathways is an important direction for the field of prevention’s research agenda.

A critical first step in this agenda is establishing consensus on terms and definitions for each dimension of implementation and developing appropriate measures that distinguish between the different aspects of facilitator implementation and participant responsiveness (Bush et al. 1989; Cross et al. 2009). One particular challenge will be to develop measures that distinguish fidelity from adaptation (Blakely et al. 1987). Other measures have been confounded as well, such as fidelity and quality (Forgatch et al. 2005) and quality and responsiveness (Tolan et al. 2002). While some measurement work needs to be conducted to distinguish conceptually different dimensions of implementation, efforts also need to be undertaken to understand the best way (if at all) to combine indicators of these dimensions. For example, interactive teaching and clinical process skills are often thought to be indicators of a single underlying construct of quality (Forgatch et al. 2005). Further, many indicators have been employed to assess responsiveness (e.g., attendance, active participation within sessions, and home practice completion). These indicators may be part of a latent construct of responsiveness (Baydar et al. 2003). Alternatively, these may be considered distinct components that interact with one another and have differential associations with facilitator dimensions and with program outcomes. These are empirical questions that future implementation research should address.

In the measurement of adaptation, it is likely that some changes to the program curriculum will be positive and others will be negative. According to Dusenbury and colleagues (2003, p. 252), “one of the major problems today is that research has not yet indicated whether and under what conditions adaptation or reinvention might enhance program outcomes, and under what conditions adaptation or reinvention results in a loss of program effectiveness.” Determining the valence of adaptations, in terms of whether the adaptation was positive (i.e., concordant with program’s goals and theory) or negative (i.e., detracting from, or in conflict with, goals and theory) may be critically important in understanding the associations between adaptation and outcomes (Dusenbury et al. 2005; Elliott and Mihalic 2004). While their study did not include program outcomes, Dusenbury and colleagues (2005) found that positively-valenced adaptations co-occurred with high levels of fidelity, while the number of adaptations that were coded as negatively-valenced was associated with lower levels of fidelity. Further, more experienced facilitators scored higher on both fidelity and positively-valenced adaptations. The next step will be to test associations between outcomes and the valence of adaptation.

It is possible, however, that the associations between implementation dimensions and outcomes may differ by cultural context, and implementation processes that are beneficial for some groups may not be beneficial for all (McGraw et al. 1996). Fidelity may diminish in importance if the program does not fit the needs of participants in the local context, and adaptations may be even more important when the current population differs from the original audience (Stanton et al. 2006). Culture may also dictate quality because interpersonal communication that is clear, supportive, and respectful in some contexts may be confusing or disrespectful in others (Gonzales et al. 2006; Wolchik et al. 2009). The pathways in the proposed theoretical model should therefore be examined across cultural groups. Further, the delivery context has been shown to influence dimensions of implementation (Durlak and DuPre 2008). It may be relevant to examine these processes across settings that vary in domains previously identified as being related to implementation.

Another concern related to the measurement of implementation is the use of observational or self-report data. From a methodological standpoint, observational methods are preferred because facilitator self-reports may be inflated compared to observer reports; in at least one case, the two were negatively correlated (Dusenbury et al. 2005). Moreover, observer reports may be more predictive of outcomes than self-reports (Dusenbury et al. 2003). From a practical standpoint, however, the costs associated with observational methods are generally prohibitive for community organizations attempting to monitor their own implementation. Prevention researchers should seek innovative ways of assessing implementation that are valid and feasible for use in community delivery settings.
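The two properties of self-report mentioned above, inflation relative to observers and the degree of correlation with observer ratings, can be summarized with simple descriptive statistics. The following is a minimal sketch, assuming each facilitator has one self-rated and one observer-rated fidelity score on the same scale; the function names and all values are hypothetical and are not drawn from the cited studies.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equally long score lists."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two matched lists with at least two scores")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant scores")
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    return sxy / sqrt(sxx * syy)


def mean_inflation(self_report, observer):
    """Average amount by which self-reports exceed observer ratings."""
    if len(self_report) != len(observer) or not observer:
        raise ValueError("need two matched, non-empty lists")
    return sum(s - o for s, o in zip(self_report, observer)) / len(observer)


# Hypothetical fidelity scores (proportion of content delivered)
# for four facilitators.
observer = [0.55, 0.70, 0.60, 0.80]
self_report = [0.90, 0.85, 0.95, 0.80]
inflation = mean_inflation(self_report, observer)
agreement = pearson_r(self_report, observer)
```

A positive `inflation` together with a low or negative `agreement` would mirror the pattern described above, although a real analysis would also need to account for clustering and measurement error.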

The level of detail is an important consideration in the design of implementation measures. Anecdotally, from our work and from conversations with colleagues, it appears that items assessing microlevel, simple behaviors (e.g., “did the facilitator distribute handouts?”) permit better interrater reliability than items that are more macrolevel or complex (e.g., “did the facilitator process the roleplay with the group?”). Yet items assessing the simpler behaviors might not tap into components of the program that have a meaningful impact on outcomes. Insufficient precision in fidelity assessments may also help explain why some studies fail to find effects of fidelity on outcomes. Future research should examine how measuring at different levels of detail affects both agreement between observers and relevance for outcomes.
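One common way to quantify interrater reliability for categorical items is Cohen's kappa, which corrects raw agreement for agreement expected by chance. The sketch below is illustrative only: the ratings are invented to mimic the anecdotal pattern described above (a microlevel item rated identically by two observers, a macrolevel item rated inconsistently), and the function and variable names are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters coding the same sessions."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two matched, non-empty rating lists")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    # Chance agreement from each rater's marginal category proportions.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical category throughout
    return (observed - expected) / (1 - expected)


# Hypothetical codes (1 = behavior observed, 0 = not observed)
# from two observers across eight sessions.
micro_a = [1, 1, 1, 0, 1, 0, 1, 1]  # "distributed handouts?"
micro_b = [1, 1, 1, 0, 1, 0, 1, 1]
macro_a = [1, 0, 1, 1, 0, 1, 0, 1]  # "processed the roleplay?"
macro_b = [1, 1, 0, 1, 0, 0, 1, 1]

kappa_micro = cohens_kappa(micro_a, micro_b)
kappa_macro = cohens_kappa(macro_a, macro_b)
```

Comparing kappa across items written at different levels of detail is one simple way to examine the reliability side of the trade-off; relevance for outcomes would need to be tested separately.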

A final important issue relates to the timing of implementation assessments. Many studies code a random sample of program sessions, assuming that the results generalize to all sessions and that implementation has consistent effects across all program components (e.g., Lochman et al. 2009). A more refined approach is to assess outcome-specific implementation. This approach involves selecting a proximal or distal outcome and assessing implementation for the specific program components that target the selected outcome. Consequently, one can examine the relation between implementation of outcome-specific program components and the outcome of interest. Hansen and colleagues (1991) found that outcome-specific fidelity was associated with respective program outcomes, based on both facilitator and independent observer reports. For example, facilitators’ adherence to the curriculum components targeting children’s substance use resistance efficacy was associated with children’s resistance knowledge, skills, and intention. Measuring outcome-specific implementation provides more analytic specificity and may result in more variability among groups than a global score, providing greater power to detect effects.
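The difference between a global score and an outcome-specific score can be made concrete with a small scoring sketch. This is a hedged illustration, not the procedure of Hansen and colleagues: the component names, the mapping from components to targeted outcomes, and the delivery checklist are all hypothetical.

```python
def global_fidelity(checklist):
    """Proportion of all curriculum components that were delivered."""
    if not checklist:
        raise ValueError("checklist is empty")
    return sum(1 for done in checklist.values() if done) / len(checklist)


def outcome_specific_fidelity(checklist, component_targets, outcome):
    """Proportion delivered among components that target one outcome."""
    relevant = [name for name, targets in component_targets.items()
                if outcome in targets]
    if not relevant:
        raise ValueError(f"no components target {outcome!r}")
    delivered = sum(1 for name in relevant if checklist.get(name, False))
    return delivered / len(relevant)


# Hypothetical mapping of components to the outcomes they target.
component_targets = {
    "refusal_roleplay": {"resistance_skills"},
    "refusal_modeling": {"resistance_skills"},
    "norms_discussion": {"normative_beliefs"},
    "consequences_lecture": {"knowledge"},
}
# Hypothetical observer checklist for one group (True = delivered).
checklist = {
    "refusal_roleplay": True,
    "refusal_modeling": False,
    "norms_discussion": True,
    "consequences_lecture": True,
}

overall = global_fidelity(checklist)
resistance = outcome_specific_fidelity(
    checklist, component_targets, "resistance_skills")
```

In this invented example the group looks fairly faithful overall but delivered only half of the components targeting resistance skills, which is the kind of variability a global score can mask.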

While the focus of this article is the influence of facilitator dimensions on responsiveness, it is likely that participants also influence facilitators. For example, implementing a program with high fidelity or quality may be particularly challenging with a disruptive group (Giles et al. 2008). Further, in a case study examining one facilitator’s implementation over time, adaptations occurring in earlier cohorts were repeated in later cohorts only when earlier groups were vocally responsive to the adaptation (Berkel et al. 2009). Tracking implementation across sessions may allow bidirectional effects to be untangled.

Studying the trajectory of multiple dimensions of implementation over time is particularly useful (Ringwalt et al. 2010) and may inform training and technical assistance efforts. Diffusion of innovation theory (Rogers 2003) suggests the potential for “program drift,” in which, after repeated delivery of the program, facilitators stray from the curriculum as originally intended and implementation declines (Fagan et al. 2008). It is because of the fear of program drift that effective training and technical assistance systems are a primary focus in effectiveness research (Gingiss et al. 2006). Some studies examining implementation trajectories show that more experienced teachers implement with better fidelity (Dusenbury et al. 2005), while others show that experienced teachers are more likely to omit parts they perceive to be ineffective (Kerr et al. 1985). Ringwalt and colleagues (2010) pitted two theoretical perspectives, diffusion of innovation theory and the Concerns-Based Adoption Model (C-BAM), against one another to understand trajectories of implementation over time. Based on diffusion of innovation theory, one would expect linear decline. On the other hand, following C-BAM, implementation is thought to be more mechanical in early iterations, but quality improves over time as facilitators become more comfortable with the content. Moreover, C-BAM suggests that facilitators perceive the effects of components on participants, and may consequently adapt the program. Ringwalt and colleagues found support for C-BAM, rather than diffusion of innovation theory. However, the authors combined fidelity and adaptation in a single measure and did not include an indicator of quality, which appears particularly relevant for testing this model. Clearer conclusions will be possible with the inclusion of distinct measures of each of these constructs in future research.
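To illustrate why distinct measures matter when examining trajectories, the sketch below fits a separate least-squares slope to each implementation dimension across repeated deliveries. It is a descriptive sketch under simplifying assumptions (one facilitator, equally spaced deliveries, a linear trend), not the growth modeling a full study would require; the function names and scores are hypothetical.

```python
def ols_slope(occasions, scores):
    """Least-squares slope of scores regressed on delivery occasion."""
    n = len(occasions)
    if n != len(scores) or n < 2:
        raise ValueError("need matched series with at least two occasions")
    mean_t = sum(occasions) / n
    mean_s = sum(scores) / n
    sxx = sum((t - mean_t) ** 2 for t in occasions)
    if sxx == 0:
        raise ValueError("occasions must vary")
    sxy = sum((t - mean_t) * (s - mean_s)
              for t, s in zip(occasions, scores))
    return sxy / sxx


def dimension_slopes(occasions, trajectories):
    """Fit a separate slope for each implementation dimension."""
    return {name: ols_slope(occasions, scores)
            for name, scores in trajectories.items()}


# Hypothetical scores for one facilitator across three deliveries.
occasions = [1, 2, 3]
trajectories = {
    "fidelity": [0.90, 0.85, 0.80],
    "quality": [0.60, 0.70, 0.80],
    "adaptation": [0.10, 0.20, 0.30],
}
slopes = dimension_slopes(occasions, trajectories)
```

In this invented case fidelity declines while quality and adaptation rise, a pattern that a single combined measure could not reveal.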

In summary, program implementation is a multidimensional construct, and previous literature provides evidence for the importance of facilitator and participant behaviors in understanding program outcomes (Durlak and DuPre 2008). Further, these dimensions are likely to be interdependent, and yet each may function to influence outcomes in different ways. We propose a theoretical model with specific hypotheses about how dimensions may function conjointly to influence program outcomes and suggest future directions for implementation measurement and research. For the field of prevention to move forward in its implementation research agenda, these points must be addressed.

Acknowledgments

Cady Berkel and Erin Schoenfelder’s involvement in the preparation of this manuscript was supported by Training Grant T32MH18387.

Footnotes

We distinguish between the concepts of attendance and dosage. Although attendance is sometimes described as “dosage” in the implementation literature (e.g., Charlebois et al. 2004a; Spoth and Redmond 2002), this confounds a participant behavior with an element of program design. We suggest that dosage should refer to the number of sessions offered, which is under the purview of program designers and administrators, while attendance should refer to the number of sessions participants attend.

References

- Backer TE. Finding the balance: Program fidelity and adaptation in substance abuse prevention. Rockville, MD: Center for Substance Abuse Prevention; 2002.

- Baydar N, Reid MJ, Webster-Stratton C. The role of mental health factors and program engagement in the effectiveness of a preventive parenting program for Head Start mothers. Child Development. 2003;74:1433–1453. doi: 10.1111/1467-8624.00616.

- Berkel C, Hagan M, Jones S, Ayers TS, Wolchik SA, Sandler IN. Longitudinal examination of facilitator implementation: A case study across multiple cohorts of delivery. Paper presented at the annual meeting of the American Evaluation Association; Orlando, FL. 2009 Nov.

- Blake SM, Simkin L, Ledsky R, Perkins C, Calabrese JM. Effects of a parent-child communications intervention on young adolescents’ risk for early onset of sexual intercourse. Family Planning Perspectives. 2001;33:52–61.

- Blakely CH, Mayer JP, Gottschalk RG, Schmitt N, Davidson WS, Roitman DB, et al. The fidelity-adaptation debate: Implications for the implementation of public sector social programs. American Journal of Community Psychology. 1987;15:253–268.

- Boruch RF, Gomez H. Sensitivity, bias and theory in impact evaluations. Professional Psychology. 1977;8:411–434.

- Botvin GJ. Advancing prevention science and practice: Challenges, critical issues, and future directions. Prevention Science. 2004;5:69–72. doi: 10.1023/b:prev.0000013984.83251.8b.

- Botvin GJ, Dusenbury L, Baker E, James-Ortiz S, Kerner JF. A skills training approach to smoking prevention among Hispanic youth. Journal of Behavioral Medicine. 1989;12:279–296. doi: 10.1007/BF00844872.

- Brach C, Fraser I. Can cultural competency reduce racial and ethnic health disparities? A review and conceptual model. Medical Care Research & Review. 2000;57:181–217. doi: 10.1177/1077558700057001S09.

- Bush PJ, Zuckerman AE, Taggart VS, Theiss PK, Peleg EO, Smith SA. Cardiovascular risk factor prevention in Black school children: The “Know Your Body” evaluation project. Health Education Quarterly. 1989;16:215–227. doi: 10.1177/109019818901600206.

- Castro FG, Barrera M, Martinez CR. The cultural adaptation of prevention interventions: Resolving tensions between fidelity and fit. Prevention Science. 2004;5:41–45. doi: 10.1023/b:prev.0000013980.12412.cd.

- Charlebois P, Brendgen M, Vitaro F, Normandeau S, Boudreau JF. Examining dosage effects on prevention outcomes: Results from a multi-modal longitudinal preventive intervention for young disruptive boys. Journal of School Psychology. 2004a;42:201–220.

- Charlebois P, Vitaro F, Normandeau S, Brendgen M, Rondeau N. Trainers’ behavior and participants’ persistence in a longitudinal preventive intervention for disruptive boys. Journal of Primary Prevention. 2004b;25:375–388.

- Coatsworth JD, Duncan LG, Pantin H, Szapocznik J. Patterns of retention in a preventive intervention with ethnic minority families. Journal of Primary Prevention. 2006a;27:171–193. doi: 10.1007/s10935-005-0028-2.

- Coatsworth JD, Duncan LG, Pantin H, Szapocznik J. Retaining ethnic minority parents in a preventive intervention: The quality of group process. Journal of Primary Prevention. 2006b;27:367–389. doi: 10.1007/s10935-006-0043-y.

- Conduct Problems Prevention Research Group. Initial impact of the Fast Track prevention trial for conduct problems: II. Classroom effects. Journal of Consulting & Clinical Psychology. 1999;67:648–657.

- Cross TL, Bazron BJ, Dennis KW, Isaacs MR. Towards a culturally competent system of care: A monograph on effective services for minority children who are severely emotionally disturbed: Volume I. Washington, DC: National Technical Assistance Center for Children’s Mental Health, Georgetown University Child Development Center; 1989.

- Cross W, West J, Wyman PA, Schmeelk-Cone KH. Disaggregating and measuring implementor adherence and competence. Paper presented at the 2nd Annual NIH Conference on the Science of Dissemination and Implementation; Bethesda, MD. 2009.

- Dane AV, Schneider BH. Program integrity in primary and early secondary prevention: Are implementation effects out of control? Clinical Psychology Review. 1998;18:23–45. doi: 10.1016/s0272-7358(97)00043-3.

- Diamond GM, Liddle HA, Hogue A, Dakof GA. Alliance-building interventions with adolescents in family therapy: A process study. Psychotherapy: Theory, Research, Practice, Training. 1999;36:355–368.

- Dillman Carpentier F, Mauricio AM, Gonzales NA, Millsap RE, Meza CM, Dumka LE, et al. Engaging Mexican origin families in a school-based preventive intervention. Journal of Primary Prevention. 2007;28:521–546. doi: 10.1007/s10935-007-0110-z.

- Durlak J. Why program implementation is important. Journal of Prevention & Intervention in the Community. 1998;17:5–18.

- Durlak J, DuPre E. Implementation matters: A review of research on the influence of implementation on program outcomes and the factors affecting implementation. American Journal of Community Psychology. 2008;41:327–350. doi: 10.1007/s10464-008-9165-0.

- Dusenbury LA, Brannigan R, Falco M, Hansen WB. A review of research on fidelity of implementation: Implications for drug abuse prevention in school settings. Health Education Research. 2003;18:237–256. doi: 10.1093/her/18.2.237.

- Dusenbury LA, Brannigan R, Hansen WB, Walsh J, Falco M. Quality of implementation: Developing measures crucial to understanding the diffusion of preventive interventions. Health Education Research. 2005;20:308–313. doi: 10.1093/her/cyg134.

- Eames C, Daley D, Hutchings J, Whitaker CJ, Jones K, Hughes JC, et al. Treatment fidelity as a predictor of behaviour change in parents attending group-based parent training. Child Care, Health & Development. 2009;35:603–612. doi: 10.1111/j.1365-2214.2009.00975.x.

- Eddy JM, Dishion TJ, Stoolmiller M. The analysis of intervention change in children and families: Methodological and conceptual issues embedded in intervention studies. Journal of Abnormal Child Psychology. 1998;26:53–69. doi: 10.1023/a:1022634807098.

- Elliott DS, Mihalic S. Issues in disseminating and replicating effective prevention programs. Prevention Science. 2004;5:47–52. doi: 10.1023/b:prev.0000013981.28071.52.

- Emshoff J, Blakely C, Gray D, Jakes S, Brounstein P, Coulter J, et al. An ESID case study at the federal level. American Journal of Community Psychology. 2003;32:345–357. doi: 10.1023/b:ajcp.0000004753.88247.0d.

- Fagan AA, Hanson K, Hawkins JD, Arthur MW. Bridging science to practice: Achieving prevention program implementation fidelity in the Community Youth Development study. American Journal of Community Psychology. 2008;41:235–249. doi: 10.1007/s10464-008-9176-x.

- Forgatch MS, Patterson GR, DeGarmo DS. Evaluating fidelity: Predictive validity for a measure of competent adherence to the Oregon model of parent management training. Behavior Therapy. 2005;36:3–13. doi: 10.1016/s0005-7894(05)80049-8.

- Garvey C, Julion W, Fogg L, Kratovil A, Gross D. Measuring participation in a prevention trial with parents of young children. Research in Nursing & Health. 2006;29:212–222. doi: 10.1002/nur.20127.

- Giles S, Jackson-Newsom J, Pankratz M, Hansen W, Ringwalt C, Dusenbury L. Measuring quality of delivery in a substance use prevention program. Journal of Primary Prevention. 2008;29:489–501. doi: 10.1007/s10935-008-0155-7.

- Gingiss P, Roberts-Gray C, Boerm M. Bridge-It: A system for predicting implementation fidelity for school-based tobacco prevention programs. Prevention Science. 2006;7:197–207. doi: 10.1007/s11121-006-0038-1.

- Gonzales NA, Wolchik SA, Sandler IN, Winslow EB, Martinez CR, Cooley M. Quality management methods to adapt interventions for cultural diversity. Paper presented at the annual meeting of the Society for Prevention Research; San Antonio, TX. 2006 Jun.

- Haggerty KP, Fleming CB, Lonczak HS, Oxford ML, Harachi TW, Catalano RF. Predictors of participation in parenting workshops. Journal of Primary Prevention. 2002;22:375–387.

- Hansen WB, Graham JW, Wolkenstein BH, Rohrbach LA. Program integrity as a moderator of prevention program effectiveness: Results for fifth-grade students in the Adolescent Alcohol Prevention Trial. Journal of Studies on Alcohol. 1991;52:568–579. doi: 10.15288/jsa.1991.52.568.

- Hubble MA, Duncan BL, Miller SD. The heart and soul of change: What works in therapy. Washington, DC: American Psychological Association; 1999.

- Ialongo NS, Werthamer L, Kellam SG, Brown CH, Wang S, Lin Y. Proximal impact of two first-grade preventive interventions on the early risk behaviors for later substance abuse, depression, and antisocial behavior. American Journal of Community Psychology. 1999;27:599–641. doi: 10.1023/A:1022137920532.

- Kam CM, Greenberg MT, Walls CT. Examining the role of implementation quality in school-based prevention using the PATHS curriculum. Prevention Science. 2003;4:55–63. doi: 10.1023/a:1021786811186.

- Kerr DM, Kent L, Lam TC. Measuring program implementation with a classroom observation instrument: The Interactive Teaching Map. Evaluation Review. 1985;9:461–482.

- Kosterman R, Hawkins JD, Haggerty KP, Spoth R, Redmond C. Preparing for the Drug Free Years: Session-specific effects of a universal parent-training intervention with rural families. Journal of Drug Education. 2001;31:47–68. doi: 10.2190/3KP9-V42V-V38L-6G0Y.

- Kumpfer KL, Alvarado R, Smith P, Bellamy N. Cultural sensitivity and adaptation in family-based prevention interventions. Prevention Science. 2002;3:241–246. doi: 10.1023/a:1019902902119.

- Lillehoj CJ, Griffin KW, Spoth R. Program provider and observer ratings of school-based preventive intervention implementation: Agreement and relation to youth outcomes. Health Education & Behavior. 2004;31:242–257. doi: 10.1177/1090198103260514.

- Lochman JE, Boxmeyer C, Powell N, Qu L, Wells K, Windle M. Dissemination of the Coping Power program: Importance of intensity of counselor training. Journal of Consulting & Clinical Psychology. 2009;77:397–409. doi: 10.1037/a0014514.

- McGraw SA, Sellers DE, Stone EJ, Bebchuk J, Edmundson EW, Johnson CC, et al. Using process data to explain outcomes: An illustration from the Child and Adolescent Trial for Cardiovascular Health (CATCH). Evaluation Review. 1996;20:291–312. doi: 10.1177/0193841X9602000304.

- NRC/IOM. Preventing mental, emotional, and behavioral disorders among young people: Progress and possibilities. Washington, DC: National Research Council and Institute of Medicine; 2009.

- Nye CL, Zucker RA, Fitzgerald HE. Early intervention in the path to alcohol problems through conduct problems: Treatment involvement and child behavior change. Journal of Consulting & Clinical Psychology. 1995;63:831–840. doi: 10.1037//0022-006x.63.5.831.

- Palinkas LA, Aarons GA, Chorpita BF, Hoagwood K, Landsverk J, Weisz JR. Cultural exchange and the implementation of evidence-based practices: Two case studies. Research on Social Work Practice. 2009;19:602–612.

- Parcel GS, Ross JG, Lavin AT, Portnoy B, Nelson GD, Winters F. Enhancing implementation of the Teenage Health Teaching Modules. Journal of School Health. 1991;61:35–38. doi: 10.1111/j.1746-1561.1991.tb07857.x.

- Patterson GR, Forgatch MS. Therapist behavior as a determinant for client noncompliance: A paradox for the behavior modifier. Journal of Consulting & Clinical Psychology. 1985;53:846–851. doi: 10.1037//0022-006x.53.6.846.

- Pentz MA, Trebow EA, Hansen WB, MacKinnon DP, Dwyer JH, Johnson CA, et al. Effects of program implementation on adolescent drug use behavior: The Midwestern Prevention Project (MPP). Evaluation Review. 1990;14:264–289.

- Prado G, Pantin H, Schwartz SJ, Lupei NS, Szapocznik J. Predictors of engagement and retention into a parent-centered, ecodevelopmental HIV preventive intervention for Hispanic adolescents and their families. Journal of Pediatric Psychology. 2006;31:874–890. doi: 10.1093/jpepsy/jsj046.

- Resnicow K, Davis M, Smith M, Lazarus-Yaroch A, Baranowski T, Baranowski J, et al. How best to measure implementation of school health curricula: A comparison of three measures. Health Education Research. 1998;13:239–250. doi: 10.1093/her/13.2.239.

- Ringwalt CL, Vincus A, Ennett S, Johnson R, Rohrbach LA. Reasons for teachers’ adaptation of substance use prevention curricula in schools with non-White student populations. Prevention Science. 2004;5:61–67. doi: 10.1023/b:prev.0000013983.87069.a0.

- Ringwalt CL, Pankratz M, Jackson-Newsom J, Gottfredson N, Hansen WB, Giles S, et al. Three-year trajectory of teachers’ fidelity to a drug prevention curriculum. Prevention Science. 2010;11:67–76. doi: 10.1007/s11121-009-0150-0.

- Rogers EM. Diffusion of drug abuse prevention programs: Spontaneous diffusion, agenda setting, and reinvention. Bethesda, MD: NIDA Research Monograph; 1995.

- Rogers EM. Diffusion of innovations. 5th ed. New York: The Free Press; 2003.

- Rohrbach LA, Gunning M, Sun P, Sussman S. The Project Towards No Drug Abuse (TND) dissemination trial: Implementation fidelity and immediate outcomes. Prevention Science. 2010;11:77–88. doi: 10.1007/s11121-009-0151-z.

- Shelef K, Diamond GM, Diamond GS, Liddle HA. Adolescent and parent alliance and treatment outcome in Multidimensional Family Therapy. Journal of Consulting & Clinical Psychology. 2005;73:689–698. doi: 10.1037/0022-006X.73.4.689.

- Slavin RE. Comprehensive cooperative learning models: Embedding cooperative learning in the curriculum and school. In: Sharan S, editor. Cooperative learning: Theory and research. New York: Praeger; 1990. pp. 261–283.

- Spoth RL, Redmond C. Project Family prevention trials based in community-university partnerships: Toward scaled-up preventive interventions. Prevention Science. 2002;3:203–221. doi: 10.1023/a:1019946617140.

- Spoth R, Guyll M, Trudeau L, Goldberg-Lillehoj C. Two studies of proximal outcomes and implementation quality of universal preventive interventions in a community-university collaboration context. Journal of Community Psychology. 2002;30:499–518.

- Stanton B, Harris C, Cottrell L, Li X, Gibson C, Guo J, et al. Trial of an urban adolescent sexual risk-reduction intervention for rural youth: A promising but imperfect fit. Journal of Adolescent Health. 2006;38:25–36. doi: 10.1016/j.jadohealth.2004.09.023.

- Tobler NS, Stratton HH. Effectiveness of school-based drug prevention programs: A meta-analysis of the research. Journal of Primary Prevention. 1997;18:71–128.

- Tolan PH, Hanish LD, McKay MM, Dickey MH. Evaluating process in child and family interventions: Aggression prevention as an example. Journal of Family Psychology. 2002;16:220–236. doi: 10.1037//0893-3200.16.2.220.

- Widaman KF, Kagan S. Cooperativeness and achievement: Interaction of student cooperativeness with cooperative versus competitive classroom organization. Journal of School Psychology. 1987;25:355–365.

- Wilson BDM, Miller RL. Examining strategies for culturally grounded HIV prevention: A review. AIDS Education & Prevention. 2003;15:184–202. doi: 10.1521/aeap.15.3.184.23838.

- Wilson SJ, Lipsey MW. School-based interventions for aggressive and disruptive behavior: Update of a meta-analysis. American Journal of Preventive Medicine. 2007;33:S130–S143. doi: 10.1016/j.amepre.2007.04.011.

- Wolchik SA, Sandler IN, Jones S, Gonzales N, Doyle K, Winslow E, et al. The New Beginnings Program for divorcing and separating families: Moving from efficacy to effectiveness. Family Court Review. 2009;47:416–435. doi: 10.1111/j.1744-1617.2009.01265.x.