Significance

Individuals determine horizontal sound location based on precise calculations of sound level and sound timing differences at the two ears. Although these cues are processed independently at lower levels of the auditory system, their cortical processing remains poorly understood. This study seeks to address two key questions. (i) Are these cues integrated to form a cue-independent representation of space? (ii) How does active listening to sound location alter cortical response to these cues? We use functional brain imaging to address these questions, demonstrating that cue responses overlap in the cortex, that voxel patterns from one cue can predict the other cue and vice versa, and that active spatial listening enhances cortical responses independently of specific features of the sound.

Keywords: auditory space, binaural cues, human auditory cortex, fMRI, sound localization

Abstract

Few auditory functions are as important or as universal as the capacity for auditory spatial awareness (e.g., sound localization). That ability relies on sensitivity to acoustical cues—particularly interaural time and level differences (ITD and ILD)—that correlate with sound-source locations. Under nonspatial listening conditions, cortical sensitivity to ITD and ILD takes the form of broad contralaterally dominated response functions. It is unknown, however, whether that sensitivity reflects representations of the specific physical cues or a higher-order representation of auditory space (i.e., integrated cue processing), nor is it known whether responses to spatial cues are modulated by active spatial listening. To investigate, sensitivity to parametrically varied ITD or ILD cues was measured using fMRI during spatial and nonspatial listening tasks. Task type varied across blocks where targets were presented in one of three dimensions: auditory location, pitch, or visual brightness. Task effects were localized primarily to lateral posterior superior temporal gyrus (pSTG) and modulated binaural-cue response functions differently in the two hemispheres. Active spatial listening (location tasks) enhanced both contralateral and ipsilateral responses in the right hemisphere but maintained or enhanced contralateral dominance in the left hemisphere. Two observations suggest integrated processing of ITD and ILD. First, overlapping regions in medial pSTG exhibited significant sensitivity to both cues. Second, successful classification of multivoxel patterns was observed for both cue types and—critically—for cross-cue classification. Together, these results suggest a higher-order representation of auditory space in the human auditory cortex that at least partly integrates the specific underlying cues.

Across species, one of the most important functions of hearing is the capacity to localize and orient to sound sources throughout 360° of extrapersonal space. This function relies on the neural processing of a variety of acoustical cues, but particularly on interaural time (ITD) and level differences (ILD) that convey sound-source directions in the horizontal dimension (azimuth). The acoustical basis of these cues is well understood: sound arrives earlier and with greater intensity at the ear nearest the source. The magnitudes of both cues depend systematically on azimuth angle, sound frequency, and head size/shape. Acoustic reflections from surfaces of the outer ear and of the listening room further alter these cues in a frequency- and cue-specific manner.
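As a rough illustration of how ITD magnitude depends on azimuth and head size, the sketch below uses the Woodworth spherical-head approximation, ITD ≈ (a/c)(θ + sin θ). The model itself, the head radius of 8.75 cm, and the speed of sound of 343 m/s are illustrative assumptions and are not taken from the present study.

```python
import math


def woodworth_itd_us(azimuth_deg, head_radius_m=0.0875, speed_of_sound=343.0):
    """Approximate ITD in microseconds for a distant source.

    Spherical-head (Woodworth) approximation; an assumption for illustration.
    Positive azimuth = rightward. Valid for -90 to +90 degrees.
    """
    if not -90.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must lie between -90 and +90 degrees")
    theta = math.radians(azimuth_deg)
    # Path-length difference around a rigid sphere, converted to time.
    return 1e6 * (head_radius_m / speed_of_sound) * (theta + math.sin(theta))


if __name__ == "__main__":
    for az in (0, 30, 60, 90):
        print(az, round(woodworth_itd_us(az)))
```

Under these assumed parameters, the predicted ITD grows monotonically with azimuth and scales linearly with head radius.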

In the absence of echoes and reverberation, ITD and ILD cues vary more or less in parallel (i.e., redundantly) and human listeners appear to use the cues interchangeably. However, ITD is the psychophysically dominant cue for localization when sounds contain energy below ∼1.5 kHz (1–3). For higher-frequency sounds, ILD tends to dominate, although ITD carried by fluctuations in the sound envelope also contributes (4). Whether and how these various cues are integrated into a unified representation of auditory space remains poorly understood. Complicating that process, echoes, reverberation, and competing sounds can introduce significant disparities between the various cues. A potential benefit of “late” (i.e., cortical) integration could be the ability to optimally weight the cues across various listening contexts.

Neural sensitivity to ITD and ILD cues is observed throughout the auditory system, typically in the form of greater response (e.g., higher firing rate) to contralateral than to ipsilateral sound sources (5, 6). In auditory cortex (AC), this contralateral response pattern holds true for ILD spike rate response functions (7–9), but the evidence is more mixed (e.g., including strong ipsilateral and trough-shaped responses) for ITD (10, 11).

Human fMRI studies have consistently mirrored these results, reporting broad contralateral tuning in AC in response to ILD cues (12–14). Importantly, contralateral sensitivity to ILD cues evident in the blood oxygenation level-dependent (BOLD) signal represents binaural processes that cannot be explained by monaural intensity effects alone (15). As in the literature from animal models, reports of human cortical sensitivity to ITD have also been mixed, with some studies reporting moderate–strong contralateral dominance (16–19), some weak contralateral dominance (13, 14, 20), and others a nonexistent sensitivity to ITD (21, 22).

Whether cortical sensitivity to ILD and ITD reflects cue-specific or cue-independent representations of auditory space is hotly debated. Evidence for cue-specific representation includes both EEG (22–25) and lesion results (26, 27). Evidence for integrated ITD and ILD processing includes results of EEG (28) and magnetoencephalography (MEG) (29) studies. Importantly, several studies reported mixed results (25, 29), and one is led to conclude that the representations are neither fully integrated nor fully independent. One possibility is that multiple AC regions exhibit different patterns of sensitivity to ITD and ILD but that far-field electrophysiological measures fail to distinguish their contributions. The current study aimed to address this possibility by exploiting fMRI’s greater ability to resolve different patterns of cue sensitivity across cortical regions.

Also incompletely understood is how behavioral tasks modulate ILD- and ITD-specific responses. Task-dependent modulation of AC activity has been demonstrated across a variety of experimental paradigms and methodological techniques, including animal electrophysiology (30–32), human electrocorticography (33, 34), EEG/MEG (35–38), monkey fMRI (39), and human fMRI (40–45). The current study aimed to ascertain whether spatial listening alters the form of ITD and ILD sensitivity in human AC. In particular, task-dependent modulations might help explain why cortical ITD sensitivity appears so much weaker than cortical ILD sensitivity, if broad “default” tuning gives way to sharper cue-specific tuning during active spatial listening.

To investigate the cortical representation of ILD and ITD in AC during active spatial listening, we designed an fMRI experiment to present parametrically varying ILD or ITD (in separate runs; Fig. S1). Concurrently, the task variable was varied in blocks in which participants engaged in an auditory localization, auditory pitch, or visual brightness task. Within each block, trials contained brief changes (“shifts”) along those stimulus dimensions (location, pitch, and visual). Trials with different shift types were presented with equal likelihood regardless of the task block; however, participants were instructed to ignore all shifts except those designated as targets by the task block. Thus, the experimental paradigm required active spatial listening during the location task blocks, compared with intrasensory auditory-pitch (nonspatial task during pitch blocks) and intersensory visual-brightness conditions.
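The parametric cue manipulation can be pictured with a minimal sketch that imposes an ILD or an ITD on a monaural signal. The function name, the symmetric split of the level difference across the two ears, and the whole-sample delay are assumptions made for illustration; the study's actual stimulus-generation procedure is not described in this section.

```python
def apply_binaural_cues(mono, fs, ild_db=0.0, itd_us=0.0):
    """Return (left, right) channels with the requested ILD and ITD.

    Hypothetical helper. Positive values favor the right ear
    (right louder for ILD, right leading for ITD).
    """
    mono = list(mono)
    # Split the level difference symmetrically: +ild/2 dB right, -ild/2 dB left.
    gain_right = 10 ** (ild_db / 40.0)
    gain_left = 10 ** (-ild_db / 40.0)
    # Whole-sample delay applied to the lagging ear (a simplification).
    delay = round(abs(itd_us) * 1e-6 * fs)
    pad = [0.0] * delay
    if itd_us >= 0:
        left, right = pad + mono, mono + pad   # left ear lags
    else:
        left, right = mono + pad, pad + mono   # right ear lags
    return [gain_left * x for x in left], [gain_right * x for x in right]


if __name__ == "__main__":
    click = [1.0] + [0.0] * 15
    left, right = apply_binaural_cues(click, fs=10000, ild_db=20, itd_us=800)
    print(left.index(max(left)), right.index(max(right)))
```

Either cue can be set to zero to mimic the separate ILD and ITD runs.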

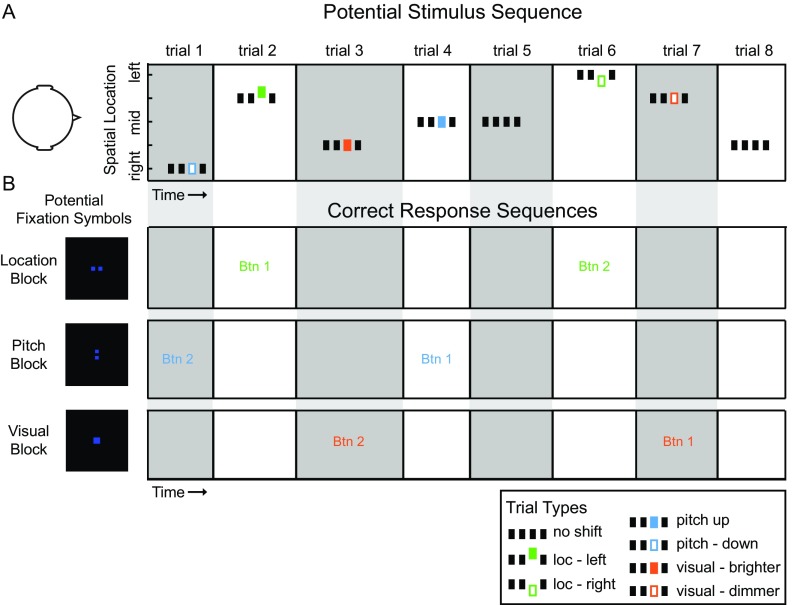

Fig. S1.

Example timeline of stimulus presentation and corresponding task protocols. (A) Schematic timeline of a potential stimulus sequence consisting of eight trials. Within each trial, noise-burst trains were presented in four temporal intervals (grouped rectangles) with ILD or ITD at one of five binaural cue values. ITD and ILD were tested in separate runs. On 78% of trials, the third interval introduced a stimulus change in one of three dimensions: Auditory location (green), auditory pitch (blue), or visual brightness of fixation cue (orange). Trials lasted 1 s overall and were presented with jittered intertrial timing of 1–5 s. (B) Schematic representation of three potential timelines of correct behavioral responses for the sample stimulus sequence in A. Fixation symbol informed participants of the task/target type in each 30-s task block. Two squares arranged horizontally cued a location block, in which target trials were defined by auditory location change in interval 3. Participants responded to target trials by indicating the direction of change. Two squares arranged vertically cued a pitch block in which target trials were defined by pitch change, and a single square cued a visual block in which target trials were defined by change in brightness of the square on the fixation screen. Text (Btn1 or Btn2, button 1 or 2) indicates the correct response for each trial in the sample stimulus sequence (A), given the task block indicated by the fixation symbol at left.

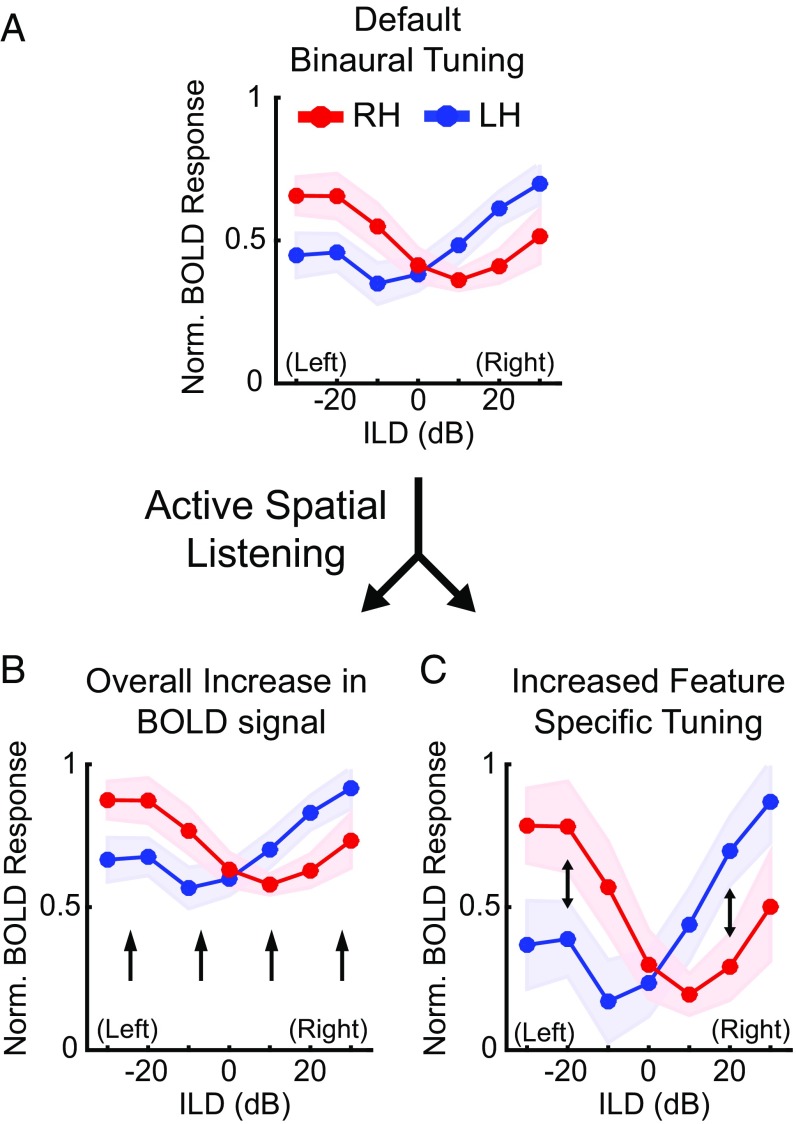

Parametric manipulation of binaural cues during fMRI allows the measurement of binaural response functions, which typically exhibit strong response to the contralateral hemifield (Fig. 1A). Previous ILD-based results (14) and task-related attention studies (34, 36, 43, 45–49) suggest two competing hypotheses regarding how the spatial listening task (compared with the pitch or visual task) might modulate binaural cue (both ILD and ITD) response functions. Spatial listening might enhance the response overall, regardless of cue value (Fig. 1B). Alternatively, spatial listening might enhance the selectivity of responses to particular cue values, for example, increasing the contralateral response (Fig. 1C). Importantly, different types of changes might impact the processing of ITD versus ILD: Sharpening of ILD tuning might enhance contralateral responses, whereas sharpening of ITD tuning might reveal underlying selectivity that is masked by omnidirectional sound-evoked responses during nonspatial listening. Testing that hypothesis is particularly critical because existing psychophysical evidence for ITD dominance (which appears to contradict the existing fMRI data) has been obtained only in focused spatial-listening tasks (2, 3).

Fig. 1.

Hypothetical models of the effects of spatial listening on AC response functions. (A) During nonspatial listening AC responds in a predictably contralateral manner, where each hemisphere responds maximally to sounds originating in the contralateral hemifield (i.e., left hemisphere to (+) rightward ILDs, right hemisphere to (−) leftward ILDs) (15). When engaged in an active spatial listening task, cortical responses might exhibit (B) an overall increase in the BOLD signal or (C) increased sensitivity to specific stimulus features. Red lines correspond to right hemisphere, blue to left hemisphere.

Results

Task Performance Was Equivalent for ITD and ILD Cues.

Participants (n = 10) demonstrated sufficient capability to discriminate shifts along the targeted dimension in both the ILD and ITD runs (Fig. S1). Hit and false-alarm rates were used to compute the index of detection d′ for each cued block type. Performance on the location task averaged d′ = 1.16 (mean) ± 0.41 (SD) on ILD runs and d′ = 1.04 ± 0.45 on ITD runs and did not vary between the two cue types (t9 = 0.65, not significant). Overall detection performance tended to be better in the pitch (d′ = 2.6 ± 0.8 for ILD and 2.5 ± 0.9 for ITD) and visual (d′ = 2.7 ± 1.05 for ILD and 2.4 ± 1.06 for ITD) tasks.
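For reference, d′ is conventionally the difference between the z-transformed hit and false-alarm rates. The sketch below follows that standard definition; the optional clipping of extreme rates to 1/(2N) and 1 − 1/(2N) is a common convention assumed here, not a detail reported for this study.

```python
from statistics import NormalDist


def d_prime(hit_rate, fa_rate, n_signal=None, n_noise=None):
    """Detection index d' = z(hit rate) - z(false-alarm rate).

    If trial counts are supplied, rates of 0 or 1 are clipped to
    1/(2N) and 1 - 1/(2N) so that the z-transform stays finite
    (an assumed correction, not taken from the source).
    """
    def clip(rate, n):
        if n is None:
            return rate
        return min(max(rate, 0.5 / n), 1.0 - 0.5 / n)

    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(clip(hit_rate, n_signal)) - z(clip(fa_rate, n_noise))


if __name__ == "__main__":
    print(round(d_prime(0.70, 0.30), 2))
```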

Active Spatial Listening Modulates Binaural Cue Response Functions.

Cortical activity in response to parametrically varying spatial cues (ILD or ITD) was measured across 10 participants while they were actively engaged in one of three different types of tasks. Response functions were calculated for left and right hemisphere based on sound responsive voxels located in the defined AC region of interest (ROI) (processing pipeline 1, Fig. S2). Separate functions were calculated for location, pitch, and visual tasks measured across ILD (Fig. 2A) and ITD (Fig. 2B) runs. Values correspond to the mean beta weight measured across participants at each binaural cue condition and were input to repeated-measures ANOVA with factors cortical hemisphere (two levels), binaural cue (five levels), and task (three levels).

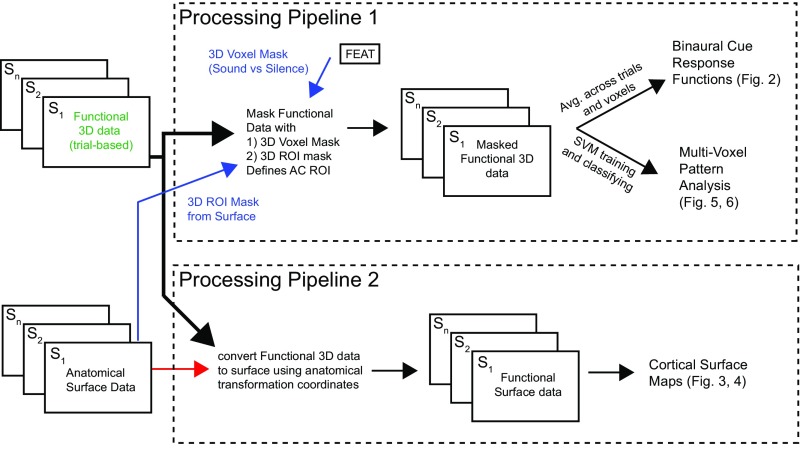

Fig. S2.

Processing pipelines for data analysis. Two data-processing pipelines were used. Pipeline 1 masked the functional 3D data with two separate masks (indicated in blue text). The first corresponded to a sound-versus-silence voxel mask computed during FEAT preprocessing. The second mask was an anatomical ROI derived from surface-based registration data in Freesurfer and projected back to 3D voxel coordinates. Once the two masks were applied to the functional 3D dataset, voxels and trials were extracted and used separately to compute binaural cue response functions and conduct multivoxel pattern analysis. Processing pipeline 2 used the Freesurfer transformation coordinates to convert the functional 3D data for each subject to coregistered cortical surface maps. Functional 2D surface maps were projected to Mollweide coordinates (48) and used for statistical analyses across the cortical surface.

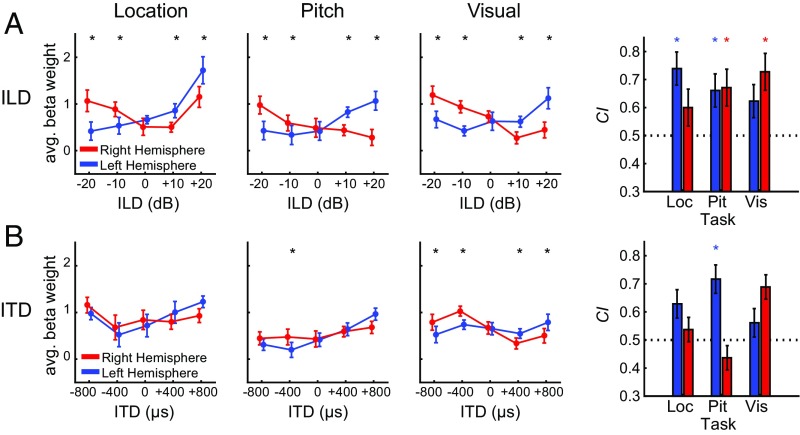

Fig. 2.

Binaural cue response functions characterize cortical sensitivity. Response functions quantify tuning to (A) ILD and (B) ITD during location, pitch, and visual tasks averaged across subjects. Significant interhemispheric statistical comparisons of left and right hemisphere for each spatial cue value are reflected with black asterisks at top of each figure. CI, measured across participants for each hemisphere (right column), quantifies degree of contralateral versus ipsilateral activity. Red and blue asterisks correspond to significant difference from H0 = 0.5 for right and left hemisphere, respectively. Asterisks denote statistical significance following control for FDR (q = 0.05). All error bars correspond to the SEM.
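The caption does not name the FDR procedure. A minimal sketch, assuming the widely used Benjamini-Hochberg step-up rule at q = 0.05, is given below; the function name is hypothetical.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return a list of booleans marking tests significant under FDR control.

    Assumes the Benjamini-Hochberg step-up rule: find the largest rank k
    with p_(k) <= q * k / m and reject every test ranked k or lower.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    cutoff_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= q * rank / m:
            cutoff_rank = rank
    significant = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= cutoff_rank:
            significant[idx] = True
    return significant


if __name__ == "__main__":
    print(benjamini_hochberg([0.001, 0.5, 0.04, 0.02]))
```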

ILD.

For ILD, a significant interaction between hemisphere and binaural cue (F4,36 = 26; P < 0.001) was observed. Response comparisons for right versus left hemisphere at each ILD condition were conducted for each task, with significant differences indicated by asterisks at the top of each panel in Fig. 2. The degree of contralaterality (CI, contralaterality index) is shown in the right column of Fig. 2 for each hemisphere for each task. CI values significantly greater than 0.5 indicated that the response function was biased (i.e., had greater activation) toward the contralateral hemifield. Overall, a response was considered to be contralateral based on significant differences in cue-dependent response between left and right hemisphere, combined with significant CI.
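The exact formula for CI is not given in this section. One plausible definition, assumed here purely for illustration, is the summed response to contralateral cue values divided by the summed response to contralateral plus ipsilateral values, which equals 0.5 for a symmetric response function. The sketch also includes the one-sample t statistic used to compare per-participant CI values against H0 = 0.5.

```python
import math
from statistics import mean, stdev


def contralaterality_index(responses, cue_values, hemisphere):
    """Assumed CI: contra / (contra + ipsi); 0.5 means no lateral bias.

    Positive cue values are rightward, so they are contralateral for the
    left hemisphere. Assumes non-negative responses; zero-valued (midline)
    cues are ignored.
    """
    if hemisphere not in ("left", "right"):
        raise ValueError("hemisphere must be 'left' or 'right'")
    sign = 1 if hemisphere == "left" else -1
    contra = sum(r for r, c in zip(responses, cue_values) if sign * c > 0)
    ipsi = sum(r for r, c in zip(responses, cue_values) if sign * c < 0)
    return contra / (contra + ipsi)


def one_sample_t(values, h0=0.5):
    """t statistic (df = n - 1) for the mean of `values` against h0."""
    n = len(values)
    return (mean(values) - h0) / (stdev(values) / math.sqrt(n))
```

With 10 participants, the resulting statistic has 9 degrees of freedom, matching the t9 values reported in the text.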

Significant contralateral bias was observed in the left hemisphere during the location (t9 = 4.0; P < 0.01) and pitch (t9 = 2.5; P < 0.05) but not visual task. Conversely, the right hemisphere displayed significant contralateral bias during the pitch (t9 = 2.8; P < 0.05) and visual (t9 = 4.6; P < 0.01) but not location task. Notably, during the location task, large right-hemisphere responses were observed following stimulation at ipsilateral +20 dB, in contrast to the more typical contralateral profile observed in left hemisphere during the location task, and for both hemispheres in nonspatial listening tasks (Fig. 2A). For comparison, the right-hemisphere response to +20 dB ipsilateral ILD was larger during the location task than during visual (t9 = 2.8; P < 0.05, uncorrected) or pitch tasks (t9 = 2.70; P < 0.05, uncorrected).

The full ANOVA revealed no interaction between hemisphere and task but did show a significant main effect of task (F2,18 = 8.5; P < 0.01). Follow-up analyses of task effects reveal the same rank order pattern in left and right hemispheres where location > visual > pitch. Paired tests of each task combination reveal significant differences in the left hemisphere: location > pitch (t9 = 4.2; P < 0.01), location > visual (t9 = 2.9; P < 0.05) and in the right hemisphere: location > pitch (t9 = 4.3; P < 0.01), visual > pitch (t9 = 2.7; P < 0.05). That is, overall cortical activity significantly increased during the location task compared with the pitch and visual tasks.
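The main effect of task can be illustrated with a simplified one-way repeated-measures ANOVA, in which each participant contributes one value per task. This is a reduced sketch, not the three-factor analysis reported here: it assumes responses have already been averaged over hemisphere and binaural cue. With 10 participants and 3 tasks it yields the same degrees of freedom (2, 18) as the reported task effect.

```python
def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA.

    data[s][c] is the response of subject s in condition c.
    Returns (F, df_condition, df_error).
    """
    n, k = len(data), len(data[0])
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[c] for row in data) / n for c in range(k)]
    subj_means = [sum(row) / k for row in data]
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    # Between-subject variance is removed from the error term.
    ss_error = ss_total - ss_cond - ss_subj
    df_cond, df_error = k - 1, (n - 1) * (k - 1)
    f_value = (ss_cond / df_cond) / (ss_error / df_error)
    return f_value, df_cond, df_error


if __name__ == "__main__":
    toy = [[1, 3, 5], [2, 3, 7], [3, 6, 6]]  # made-up values, 3 subjects x 3 tasks
    print(rm_anova_oneway(toy))
```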

ITD.

Fig. 2B plots the corresponding data for ITD runs. The factorial repeated-measures ANOVA revealed a significant interaction between hemisphere and binaural cue (F4,36 = 20.1; P < 0.001). Observed response differences between the left and right hemispheres were smaller and exhibited less systematic contralateral dominance than for ILD. This result is particularly apparent in the relative flatness of the right hemisphere response functions. These hemispheric differences are consistent with several other studies (17, 19, 20) demonstrating stronger ITD sensitivity in the left compared with right AC.

The ITD ANOVA also revealed no interaction between hemisphere and task but a significant main effect of task (F2,18 = 7.8; P < 0.01). An identical rank-order pattern was observed for ITD task effects as was seen for ILD, where location > visual > pitch in both hemispheres. Significant differences were observed in the left hemisphere: location > pitch (t9 = 4.3; P < 0.01), location > visual (t9 = 3.1; P < 0.05) and the right hemisphere: location > pitch (t9 = 3.9; P < 0.01). Thus, in ITD runs as in the ILD runs, significantly greater overall cortical activity was observed during the location task than during pitch or visual tasks.

Distinct AC Regions Sensitive to Binaural-Cue and Task Manipulations.

The response functions plotted in Fig. 2 suggest greater sensitivity to ILD than to ITD in sound-activated voxels of AC. One possible explanation for the relatively weak ITD tuning obtained with this approach is that only a small subset of sound-driven voxels is also modulated by ITD. In this case, the sound–silence criterion might fail to include highly ITD-selective voxels. To exclude this possibility, and any possibility that the defined AC ROI omitted ITD sensitivity, a separate voxelwise analysis was conducted across all surface voxels, extracted and individually aligned to a common cortical surface (Fig. 3A and Fig. S2, processing pipeline 2). Fig. 3 plots group-mean surface-voxel responses on the cortical surface for each value of ILD (Fig. 3B) and ITD (Fig. 3C). Separate maps displaying response magnitude are plotted for each hemisphere, binaural cue (Fig. 3 B and C, columns), and task (Fig. 3 B and C, rows). The cortical surface maps are generally in agreement with the response function results (Fig. 2), with a few exceptions that may help to illuminate cortical ITD representation.

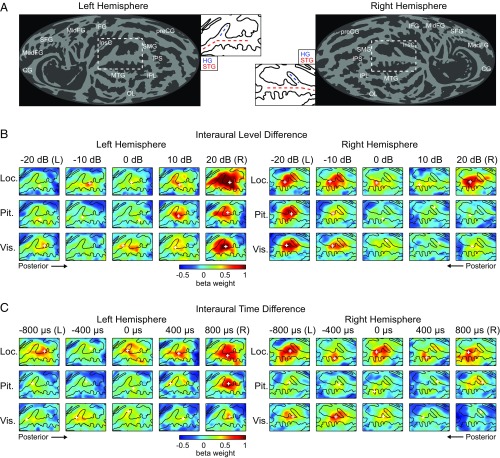

Fig. 3.

Cortical surface maps depicting ROIs and sound-evoked responses. (A) Surface projections of group average gyral anatomy (shading gyri in light gray and sulci in dark gray) for left and right hemisphere. Insets corresponding to left and right AC are indicated by white dashed outline on full map. Dashed lines reflect Heschl’s gyrus (HG, red) and superior temporal gyrus (STG, blue). Anatomical labels: CG, cingulate gyrus; HG, Heschl’s gyrus; IFG, inferior frontal gyrus; InsC, insular cortex; IPL, inferior parietal lobe; IPS, intraparietal sulcus; MedFG, medial frontal gyrus; MidFG, mid-frontal gyrus; MTG, middle temporal gyrus; OL, occipital lobe; preCG, pre-central gyrus; SFG, superior frontal gyrus; SMG, supra-marginal gyrus; and STG, superior temporal gyrus. (B and C) Average response magnitudes (color scale indicates mean beta weight across trials and subjects) are plotted on the flattened cortical surface for each combination of binaural cue value (columns) and task condition (rows). Black contours indicate the average gyral anatomy in a region surrounding the conjunction of STG and HG. Data for ILD and ITD runs are illustrated in B and C, respectively, in each hemisphere. White cross in each panel indicates point of maximum response.

ILD response magnitudes exhibited a systematic contralateral bias in each hemisphere for all tasks, with the exception of the large response in the right hemisphere to the ipsilateral +20 dB condition during the location task (Fig. 3B). ITD responses in the left hemisphere were generally organized contralaterally with the strongest responses observed following presentation of the 800-µs stimuli (Fig. 3C). The point of maximal response on each map (denoted by white crosses) was consistently observed in medial posterior superior temporal gyrus (pSTG), posterior to Heschl’s gyrus on both ILD and ITD maps. In the right hemisphere, ITD responses appear bilateral during the location task, generally contralateral during the visual task, and nearly nonexistent during the pitch task. The bilateral response pattern during the location task helps illustrate the statistics in Fig. 2; for example, no clear modulation as a function of ITD is observed, but there is an overall larger response during the location task compared with the other tasks.

Factorial Analysis of Binaural and Task Effects on the Cortical Surface.

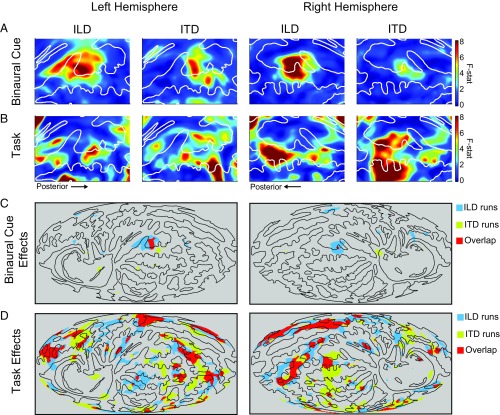

To better localize regions of binaural and task sensitivity on the cortical surface, factorial repeated-measures ANOVA was run at each surface voxel, with factors of binaural cue (five levels) and task (three levels) separately for each hemisphere. Independent ANOVAs were conducted for ILD and ITD runs. No significant interactions between binaural cue and task were observed in either hemisphere.

Main effects are presented in Fig. 4, where F values (unthresholded) are plotted for the effects of binaural cue (Fig. 4A) and task (Fig. 4B) at each surface voxel on the cortical surface projection. Regions of significant main effects, thresholded using random field theory (α = 0.01), are replotted over the full surface of both hemispheres in Fig. 4 C and D: Blue corresponds to significant regions for ILD runs, yellow for ITD runs, and red to overlapping region significance for both ILD and ITD runs. Thresholded results are plotted across the full cortical surface to contrast the regional diversity of sensitivity to binaural cues compared with task effects. The results provide strong evidence of overlapping ITD and ILD sensitivity (and potentially of cue-independent spatial representation) in a region of AC posterior and slightly lateral to Heschl’s gyrus in medial pSTG (red region in Fig. 4C). Significant task effects were found more broadly, in a variety of regions throughout each hemisphere (Fig. 4D). The strongest task effects in the vicinity of AC were localized to right STG in a region further posterior and more lateral than the area sensitive to location cues (43). These main effects were observed similarly in both hemispheres, suggesting a robust and common bilateral pattern of binaural and task sensitivity in AC. A qualitative comparison, however, suggests that joint sensitivity in AC to ILD and ITD may encompass a larger area in the left than the right hemisphere, whereas task-related effects may be more extensive in the right than in the left hemisphere.

Fig. 4.

Statistical analysis of cortical surface maps. Cortical surface projections represent the main effects of binaural cue and task resulting from a two-factor repeated-measures ANOVA at each surface voxel. (A) Binaural cue: Main effects of binaural cue in left and right hemisphere. (B) Task: Main effect of task in left and right hemisphere. In A and B, colors correspond to F values computed at each surface voxel. White contours correspond to gyral anatomy of the population average. (C and D) Whole-hemisphere view of cortical topography of (C) binaural cue and (D) task effects. Colors indicate statistically significant regions from A and B replotted for ILD runs (blue), ITD runs (yellow), or regions of overlap (red). Black contours correspond to the same gyral anatomy as in A and B.

Voxel-Pattern Classification Supports a Cue-Integrated Opponent-Channel Representation of Auditory Space.

Univariate measures of fMRI brain activity provide a parsimonious avenue for analyzing data and interpreting results, but the tests on activation differences are made only on a voxel-by-voxel basis. As an alternative, classification analysis of multivoxel patterns provides a means for accessing a greater proportion of the available response information. Voxels with consistently low feature-specific activation may be as informative as those with high feature-specific activation. Thus, multivoxel pattern analysis provides a means for accessing information otherwise inaccessible via conventional univariate assessment (50–52). To that end, we used a linear support vector machine (SVM) classifier to investigate the presence of binaural spatial information within patterns of voxel activity. Voxel patterns used in this analysis were restricted to sound-responsive voxels within the defined AC ROI (processing pipeline 1, Fig. S2).

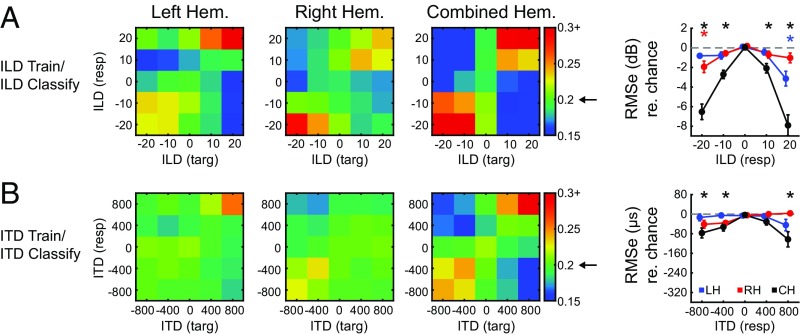

Within-cue classification.

Classification performance along a single cue dimension (only ILD or only ITD) is presented in Fig. 5 A and B. Confusion matrices indicate the probability of classification for ILD or ITD value. For each matrix, the “target” (horizontal) indicates the presented stimulus, and “response” (vertical) indicates the SVM classification. Classification probabilities were calculated with independent cross-validation routines for the left, right, and a combined hemisphere dataset. In accord with the univariate activation patterns, ILD classification (Fig. 5A) was highest for rightward sounds in the left hemisphere (36% correct for +20 dB, P < 0.001) and leftward sounds in the right hemisphere (29% correct for −20 dB, P = 0.002). Classification analysis using the dataset composed of both left and right hemispheres resulted in the highest ILD classification probabilities at both lateral positions (40% correct for −20 dB, P < 0.001; 47% correct for +20 dB, P < 0.001). ITD classification (Fig. 5B), though poorer, was also greatest at contralateral conditions in both left (26% correct for +800 µs, P = 0.028) and right (22% correct, −800 µs, not significant) hemispheres and greatest for the combined dataset (24% correct for −800 µs, not significant; 30% correct for +800 µs, P = 0.001). These observations were further quantified by computing the rms classification error (Fig. 5, column 4) for each condition, relative to a permuted dataset indicating chance performance (dashed line; asterisks denote better-than-chance classification at P < 0.05). In close agreement with the accuracy results, the lowest classification error (with respect to chance) was observed for contralateral ILD and ITDs in the left and right hemisphere and lateral positions in the combined hemispheric dataset (Fig. 5, column 4).
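The summary measures described above can be sketched from a list of presented (target) and classified (response) cue indices. Two assumptions are made for illustration: rms error is taken as the root-mean-square distance, in cue steps, between classified and presented cue index, and chance is estimated by shuffling the responses rather than by retraining on permuted labels. Function names are hypothetical.

```python
import math
import random


def confusion_matrix(targets, responses, n_classes):
    """P(response | target); rows = response, columns = target (as in Fig. 5)."""
    counts = [[0] * n_classes for _ in range(n_classes)]
    for t, r in zip(targets, responses):
        counts[r][t] += 1
    totals = [sum(counts[r][t] for r in range(n_classes)) for t in range(n_classes)]
    return [[counts[r][t] / totals[t] if totals[t] else 0.0
             for t in range(n_classes)] for r in range(n_classes)]


def rms_error_by_target(targets, responses, n_classes):
    """Assumed rms error: distance in cue steps between response and target."""
    squared = [[] for _ in range(n_classes)]
    for t, r in zip(targets, responses):
        squared[t].append((r - t) ** 2)
    return [math.sqrt(sum(s) / len(s)) if s else float("nan") for s in squared]


def permuted_rms_error(targets, responses, n_classes, n_perm=1000, seed=0):
    """Mean rms error per target after shuffling responses (chance estimate)."""
    rng = random.Random(seed)
    shuffled = list(responses)
    totals = [0.0] * n_classes
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        errors = rms_error_by_target(targets, shuffled, n_classes)
        totals = [a + b for a, b in zip(totals, errors)]
    return [t / n_perm for t in totals]
```

Comparing the observed rms error with the permuted estimate for each target gives an error measure expressed with respect to chance.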

Fig. 5.

Within-cue classification. Confusion matrices present classification probability when a linear classifier is (A) trained and tested using ILD trials and (B) trained and tested using ITD trials for left (LH), right (RH), and combined hemisphere (CH) datasets. Targets and responses are represented on the x and y axis, respectively. Arrow on colorbar indicates chance probability. Column 4 quantifies the rms error (with respect to chance) as a function of binaural cue for each dataset. Chance is indicated by dashed gray line. Asterisks indicate significance based on comparison with permuted dataset (P < 0.05) for left (blue), right (red), and combined (black) hemispheres.

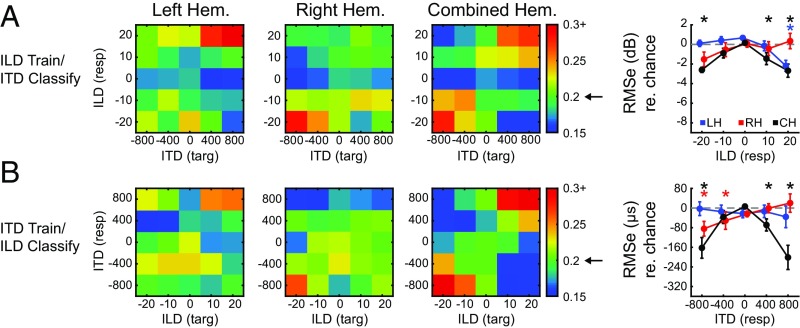

Cross-cue classification.

Within-cue classification results provide strong evidence for contralateral representation of both ILD and ITD (Fig. 5) but do not address whether those patterns are shared across the two types of cues. Activity patterns across the cortical surface suggest at least a partial overlap in the regions sensitive to modulation of ITD and ILD (Fig. 4). In a final analysis, we examined whether voxel patterns from one cue can be used to classify the other. This is a more direct test of whether ITD- and ILD-based representations share informative features. The approach was the same as for within-cue classification but with the SVM trained on data from ILD trials and tested with voxel patterns from ITD trials, or vice versa. Fig. 6A shows confusion matrices for the left, right, and combined hemisphere datasets trained with ILD and tested with ITD. The results are strikingly similar to within-cue classification, with the highest accuracies and lowest errors observed at contralateral spatial locations in the left (peak accuracy of 31%, P < 0.001 for +800 µs/+20 dB) and right hemispheres (peak accuracy of 28.4%, P < 0.001 at −800 µs/−20 dB) and at lateral positions for the combined hemisphere dataset: 28% accuracy, P = 0.001 for −800 µs/−20 dB and 27% accuracy, P = 0.002 for the +800 µs/+20 dB condition. Fig. 6B plots classification by an SVM trained on ITD trials and tested with ILD trials. Again, accurate contralateral classification was observed, where left hemisphere was most accurate (26%, P = 0.025) for rightward +800 µs/+20 dB stimuli and right hemisphere was most accurate (27%, P = 0.012) for leftward −800 µs/−20 dB stimuli. As with the preceding results, the best classification for the “trained with ITD/tested with ILD” analysis was observed for the combined hemisphere dataset at lateral positions: 32% accuracy, P < 0.001 for −800 µs/−20 dB and 36% accuracy for +800 µs/+20 dB.
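The logic of cross-cue classification (train on one cue, test on the other, with cue values paired by rank so that, for example, +20 dB and +800 µs share a class) can be sketched as follows. To keep the example dependency-free, a nearest-centroid classifier is substituted for the linear SVM used in the study; the function names and toy patterns are hypothetical.

```python
def class_centroids(patterns, labels):
    """Mean voxel pattern for each class label."""
    sums, counts = {}, {}
    for x, y in zip(patterns, labels):
        if y not in sums:
            sums[y] = [0.0] * len(x)
            counts[y] = 0
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict(pattern, centroids):
    """Label of the centroid closest (squared Euclidean) to the pattern."""
    return min(centroids,
               key=lambda y: sum((a - b) ** 2 for a, b in zip(pattern, centroids[y])))


def cross_cue_accuracy(train_patterns, train_labels, test_patterns, test_labels):
    """Train on one cue (e.g., ILD trials), test on the other (e.g., ITD trials)."""
    cents = class_centroids(train_patterns, train_labels)
    hits = sum(predict(x, cents) == y for x, y in zip(test_patterns, test_labels))
    return hits / len(test_labels)


if __name__ == "__main__":
    # Toy two-voxel patterns; class 0 = leftward cue, class 1 = rightward cue.
    ild_x, ild_y = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]], [0, 0, 1, 1]
    itd_x, itd_y = [[0.8, 0.2], [0.2, 0.8]], [0, 1]
    print(cross_cue_accuracy(ild_x, ild_y, itd_x, itd_y))
```

Above-chance accuracy in this scheme requires that the two cues evoke similar voxel patterns for matched lateral positions, which is the property the cross-cue analysis is designed to test.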

Fig. 6.

Cross-cue classification. Confusion matrices present classification probability when a linear classifier is (A) trained with ILD trials and tested with ITD trials and (B) trained with ITD trials and tested with ILD trials. Results corresponding to left (LH), right (RH), and combined hemispheres (CH) are presented with targets and responses on the x and y axis, respectively, as in Fig. 5. Arrow on colorbar indicates chance probability. Column 4 quantifies the rms error (with respect to chance) as a function of binaural cue for each dataset. Chance is indicated by dashed gray line. Asterisks indicate significance based on comparison with permuted dataset (P < 0.05) for left (blue), right (red), and combined (black) hemispheres.

Discussion

ITD cues pervade our natural listening environment and provide a strongly salient means for localizing sound horizontally. The psychophysical literature suggests that ITD is the dominant cue for human spatial hearing (2, 3). However, previous attempts to parametrically measure cortical sensitivity to ITD have had limited success compared with equivalent assessments of ILD tuning. One potential explanation for that disconnect is that engagement in spatial tasks might differently affect cortical sensitivity to ITD and ILD cues. To test this idea, cortical sensitivity to ILD or ITD stimuli was measured while participants performed an auditory location, auditory pitch, or visual-brightness task. We hypothesized that active spatial listening during the location task would modulate cortical sensitivity to ITD and ILD differently compared with the auditory pitch or visual tasks.

Spatial Listening Effects on Binaural Response Functions.

Analyses of tuning functions revealed strong contralateral activity in response to ILD cues (Figs. 2A and 3B), in agreement with previous reports (14, 15). The effect of task on ILD tuning was more complex, however. Significant main effects for ILD were observed during the pitch and visual tasks in both hemispheres. During the location task left AC appeared strongly tuned to contralateral ILDs, whereas right-AC activity revealed large response magnitudes to both contralateral and ipsilateral ILDs (Figs. 2A and 3B). As a result of bilateral activity in right hemisphere (in response to both −20 and +20 dB ILD), significant CI was found for the pitch and visual task but not for the location task. This result lends further support to the hypothesis that right AC contains a more complete (bilateral) spatial representation than left AC, as suggested by several previous functional imaging (12, 17, 19, 20, 53) and unilateral lesion studies (27, 54, 55). Combined with the finding that overall response magnitudes were greater in the location task than in the other tasks, these ILD results suggest a combination of the two effects hypothesized in Fig. 1: Spatial listening enhances responses overall but also alters response functions by emphasizing some cue values over others (albeit in a cue- and hemisphere-specific manner).

As in previous studies (14), sensitivity to ITD was found to be weaker than sensitivity to ILD. Although a significant interaction in the ITD response functions was observed between cue value and hemisphere (Fig. 2B), statistically significant contralateral bias for ITD was only observed in left AC during the pitch task. This lack of significant ITD modulation during the location task strikes an interesting contrast to greater overall activation in that task, suggesting that the increased activation was not cue-specific and did not enhance cortical sensitivity to ITD. That result, hypothesized in Fig. 1B, is further illustrated by cortical surface maps of response magnitude that reveal strong ITD-independent responses during the location task, particularly in the right hemisphere (Fig. 3C). It is worth noting the parallel to a lesion study demonstrating profound bilateral (both left and right hemifield) deficits in ITD-based localization for individuals with lesions in right hemisphere but only contralateral hemifield deficits with lesions in left hemisphere (27). Together, the results suggest a strong right AC engagement during active spatial listening regardless of sound-source location. In that case, the apparent lack of response modulation by ITD may reflect the nature of ITD representation (for instance, balanced contralateral and ipsilateral activity), rather than a genuine lack of ITD sensitivity, in right AC.

Spatial Listening Effects on the Cortical Topography of Binaural Sensitivity.

Topographic analysis of surface voxels using a multifactor ANOVA revealed sensitivity to ILD and ITD cues in both left and right hemispheres. Regions with statistically significant main effects of ILD and of ITD overlapped posterior to Heschl’s gyrus in medial pSTG (Fig. 4C), a location conventionally defined as planum temporale in the Destrieux atlas and Talairach coordinates (56–58). ILD cue sensitivity qualitatively encompassed a larger surface area than ITD sensitivity, and the statistically defined region was larger in the left than in the right hemisphere for both cues. The results suggest that ITD sensitivity may be restricted to a smaller AC subregion than ILD sensitivity (14). That difference could, in turn, reflect differences in ITD and ILD sensitivity of AC regions favoring different spectral frequencies (e.g., low frequencies for ITD and high frequencies for ILD) (1, 59). Another important factor is that monaural intensity differences partially contribute to response modulation by ILD but not ITD (15). Thus, the region of overlap could encompass the entirety of binaural sensitivity, whereas the broader extent of apparent ILD modulation reflects other factors (e.g., loudness) that do not covary with ITD. Regardless, the current results indicate the existence of an AC region with combined sensitivity to both cues, a prerequisite for the existence of cue-independent AC representations of space per se.

Regions associated with significant task effects in both ILD and ITD runs were observed in both hemispheres in lateral pSTG, with a greater representation in right than in left AC. Topographically, these results are consistent with the locus of modulations observed during auditory attention (33, 40, 49) and spatial discrimination tasks (47, 60). Despite significant differences in experimental design and analysis, the results reported here defining regional sensitivity to sound location and auditory task processing are strikingly similar to those reported by Häkkinen et al. (43). In both studies, maximal sensitivity to location was found in medial STG just posterior to Heschl’s gyrus, whereas task effects were observed particularly in lateral pSTG. One view of these regional differences is that they reflect the anatomical organization of the cortical processing hierarchy, with greater degrees of abstract perceptual and/or cognitive representation along the posterodorsal dimension (61, 62).

Relevance to Neural Codes for Auditory Space.

According to the opponent-channel theory of spatial representation in AC (63), sound-source locations are encoded by differences in the responses of neurons tuned to contralateral versus ipsilateral locations. Importantly, that theory posits the existence of (at least) two broadly tuned neural “channels” within each cortical hemisphere: One tuned contralaterally and the other tuned ipsilaterally. Although BOLD fMRI is not strongly suited to evaluating the spatial tuning of cortical neurons or comingled neural populations, certain aspects of the current results are in line with expectations of the opponent-channel theory. Response functions (Fig. 2) reveal broad contralateral bias within each hemisphere, with some hint of ipsilateral response as seen in previous studies (14, 15). Also consistent with the opponent-channel theory is the trend, seen in Fig. 2, toward more balanced bilateral responses in right AC during the location task. In the opponent-channel model, as in other distributed spatial representations (64, 65), greater spatial acuity can be supported by recruiting larger or more balanced neural populations, rather than by sharpening the tuning of selective responses as required by place-coding mechanisms.

Within-cue pattern classification results (Fig. 5) parallel the univariate activation results, with greatest accuracy and lowest error for contralateral ITD and ILD values in each hemisphere. Interestingly, though not statistically significant, ILD confusion matrices also suggest better-than-chance classification of ipsilateral ILD values (Fig. 5A; left hemisphere negative ILDs, right hemisphere positive ILDs). Whether or not this reflects a subpopulation of ipsilaterally sensitive voxels is beyond the scope of the current analyses. However, it should be noted that this single-hemisphere ipsilateral classification capability is not retained in the cross-cue classification (Fig. 6). One possibility, suggested by McLaughlin et al. (14), is that opponent-channel coding in human AC might be limited primarily to ILD processing, with ITD involving a different mechanism. In that case, ipsilateral ILD responses (i.e., positive BOLD related to neural inhibition) might contribute very differently to multivoxel patterns than do ipsilateral ITD responses.

Relevance to Independent Versus Integrated ILD and ITD.

The duplex theory of sound localization contends that physiological sound localization mechanisms are necessarily (due to head-related acoustics) divided into low- and high-frequency domains sensitive to ITD and ILD cues, respectively (1). There are numerous caveats to this theory, however. It has been established that auditory neurons in higher levels of the auditory system (e.g., inferior colliculus and AC) are sensitive to temporal differences in high-frequency sound envelopes (66–68). Auditory cortical neurons display pervasive ILD sensitivity across a wide range of frequencies (69, 70), and ILD tuning is minimally correlated with characteristic frequency (71–73). Importantly, Brugge et al. (5) demonstrated AC units sensitive to both cues, among which a 1-dB change in ILD produced—on average—similar response changes to 30–70 µs of ITD. Although the current study did not resolve cue values to nearly that precision, the cross-cue classification results at least roughly agree with that metric, in that voxel patterns evoked by 400- to 800-µs ITD were classified at 10- to 20-dB ILD, and vice versa.

As mentioned in the Introduction, previous evoked-potential studies of ILD–ITD cue integration in human AC suggest partly integrated processing of the two cues, at least for matched changes in ILD/ITD cues (28, 29). The current results support this view, in that regions of ITD and ILD sensitivity physically overlapped, and that patterns of voxel activity were consistent enough between the two cues to enable successful machine learning classification of one cue by the other. It is surprising, however, that classification of ITD trials was more successful after training with ILD trials than after training with ITD itself. That result suggests a number of possibilities. It may be that the underlying patterns are identical, but that ILD variation evokes “cleaner” versions of those patterns, which happen to be more suitable to SVM training. In that case, it remains unclear why stimuli that produce similar spatial percepts would not produce equally robust activity patterns. Alternatively, it may be that ILD and ITD are not fully integrated at the level of AC, such that voxel patterns include both overlapping and nonoverlapping features. In that case, it may be that classification performance depends most strongly on common features that are highly ILD-sensitive. Future work should seek to identify the cue-specific features (if any) of AC activity and the spatial information they convey.

Effect of Task on Binaural Cue Representation.

The location task used in this experiment was designed to engage participant attention to spatial features of sound while manipulating auditory space. The pitch task provided a controlled alternative auditory task that also required focused auditory attention to identical sounds but not particularly to their spatial characteristics. Further, the visual task provided an additional control by requiring attention to a different sensory modality. Although participants on average performed at an acceptable level, task difficulty was not equal across tasks, with better participant detection for the pitch and visual than for the location task. It is possible that some aspects of the results, in particular the main effects of task, were affected by such differences, and appropriate care should be taken in interpreting them. Furthermore, spatial tasks tend to be more difficult than pitch tasks (74), and previous fMRI results suggest increasing task difficulty in the auditory domain does not manifest as increased activation in AC (75). It should be noted, however, that the analyses described here included only trials completely lacking in targets or responses of any kind, and thereby minimized the impact of psychophysical performance.

Potential Effects of the Stimulus Spectrum.

With respect to the overall weaker modulation of AC response by ITD versus ILD, it is important to consider potential impacts of the stimulus spectrum on access to each cue. The sensitivity of earphones used in the current study was roughly 14 dB greater around 6,000 Hz than at lower frequencies (Materials and Methods). We may therefore anticipate better overall access to high-frequency ILD cues than fine-structure ITD cues available below 1,400 Hz, particularly when the potential for interference between the manipulated and nonmanipulated cues (e.g., 0-dB ILD during ITD trials) is considered. However, psychophysical performance did not differ between the two cue types, suggesting equal access to both cues during the experiment. Thus, in agreement with previous studies that found no difference in AC sensitivity to ITD across high-frequency, broad-band, and low-frequency stimuli (76), we conclude that the stimulus spectrum itself cannot account for the difference between ITD- and ILD-driven modulations of the AC response. Rather, these appear to reflect genuine differences in the BOLD manifestation of equally salient ITD and ILD cues.

Conclusions

This study provides two key findings. (i) Overlapping regions of response modulation and successful cross-cue classification provide evidence for integrated sensitivity to ILD and ITD cues. (ii) Spatial listening increases response magnitudes overall rather than sharpening cue selectivity. Together, the results support the emergence of cue-independent spatial processing and its enhancement via active listening in posterior AC.

Materials and Methods

All experiments were conducted at the University of Washington Diagnostic Imaging Sciences Center. All procedures, including recruitment, consenting, and testing of human subjects followed the guidelines of the University of Washington Human Subjects Division and were reviewed and approved by the University of Washington Human Subjects Review Committee (Institutional Review Board).

Participants.

Subjects (n = 10, seven female, all right-handed native speakers of English) were 22–31 (28.7 ± 3.6) y of age with normal hearing. All participants passed a standard safety screening, provided written informed consent before each experiment, and were compensated for their time. Participants completed 1–2 h of initial practice in the laboratory to ensure familiarity with the experimental stimuli and tasks before the imaging session.

Experimental Protocol.

Stimuli were generated using custom MATLAB (MathWorks) routines, synthesized via Tucker Davis Technologies RP2.1, and delivered via MR-compatible piezoelectric insert earphones (Sensimetrics S14) enclosed in circumaural ear defenders (providing a total of ∼40 dB outside-noise attenuation). Imaging sessions were divided into four runs, measuring spatial cue sensitivity carried by ILD (two runs) or ITD (two runs). Each run lasted ∼13 min and consisted of 21 blocks of 30 s each. Each block presented 10 stimulus trials (1-s duration each) and was followed by 6 s of silent rest. During a run, subjects were instructed to fixate on a visual cue projected onto a mirror display inside the scanner. During rest periods no auditory stimuli were presented; subjects maintained fixation on a default square outline while waiting for the visual cue to signal the start of a new block. In each 1-s trial, four stimulus intervals (151-ms duration) were presented with 250-ms onset-to-onset separation. Intervals were trains of 16 white-noise bursts, each 1 ms in duration, presented at a rate of 100 bursts per second (i.e., 16 bursts × 10 ms, minus the 9 ms of silence after the last noise burst, equals 151 ms per interval). To facilitate event-related analyses of single-trial responses, timing between trials was pseudorandomized across the range 1–5 s (onset to onset, mean 3 s). As illustrated in an exemplar stimulus sequence in Fig. S1A, the overall ILD or ITD varied from trial to trial, taking one of five values (−20, −10, 0, 10, or 20 dB ILD or −800, −400, 0, 400, or 800 μs ITD). The order of ILD/ITD values was randomized for each run using a continuous-carryover sequence (i.e., each ILD/ITD value was preceded by each other value an equal number of times) (77). By convention, negative ILD and ITD values correspond to leftward sounds (louder or leading in left ear) and positive values favor the right ear.

Stimuli were presented at an approximate average binaural level of 80–83 dB A-weighted sound pressure level, with slight adjustment of balance between the two ears for each participant to establish centered perception of diotic sound (zero ILD and ITD) once inside the scanner. The stimulus spectrum was flat at the amplifier output but shaped by the frequency response of the earphones, which featured a shallow amplitude peak of +8 dB spanning two octaves around 200 Hz and a sharper peak of +23 dB spanning 0.5 octaves around 6,000 Hz. As a result, the power in a 1,400-Hz band spanning 0–1,400 Hz (i.e., the region of sensitivity to fine-structure ITD) was 14 dB lower than in an equal-width band spanning 5,300–6,700 Hz.
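
The interval timing and cue manipulations described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the custom MATLAB routines used in the study: the sampling rate `FS`, the function names, the rounding of ITD to whole samples, and the symmetric split of the ILD across the two ears are all hypothetical choices.

```python
import numpy as np

FS = 48_000  # assumed sampling rate in Hz (not stated in the text)

def burst_train(rng, n_bursts=16, burst_ms=1.0, rate_hz=100.0):
    """One interval: 16 white-noise bursts of 1 ms at 100 bursts/s (151 ms)."""
    period = int(round(FS / rate_hz))          # 10 ms between burst onsets
    burst = int(round(FS * burst_ms / 1000))   # 1 ms of noise per burst
    n = (n_bursts - 1) * period + burst        # omits the trailing 9 ms of silence
    x = np.zeros(n)
    for k in range(n_bursts):
        x[k * period : k * period + burst] = rng.standard_normal(burst)
    return x

def apply_cues(x, ild_db=0.0, itd_us=0.0):
    """Return (left, right). Negative values favor the left ear, as in the text."""
    delay = int(round(abs(itd_us) * 1e-6 * FS))   # ITD rounded to whole samples
    pad = np.zeros(delay)
    lead, lag = np.concatenate([x, pad]), np.concatenate([pad, x])
    left, right = (lead, lag) if itd_us <= 0 else (lag, lead)
    g = 10 ** (ild_db / 40)                       # half of the ILD applied to each ear
    return left / g, right * g

rng = np.random.default_rng(0)
interval = burst_train(rng)
left, right = apply_cues(interval, ild_db=-20.0)
```

At the assumed sampling rate the interval spans 7,248 samples, i.e., 151 ms, and an ILD of −20 dB leaves the left channel 20 dB more intense than the right.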

The psychophysical task, in all cases, was four-interval change detection and identification. On 165 randomly selected trials (78.6% chance) the third interval was “shifted” by a small amount along one of three sensory dimensions. Shifts could occur in either auditory location (by shifting ILD or ITD left or right by ±5 dB or ±200 µs; Fig. S1A, green rectangles), auditory pitch (by increasing the 100-Hz noise-burst rate to 167 Hz or decreasing to 71 Hz; Fig. S1A, blue rectangles), or visual brightness (by changing RGB color of the visual fixation symbol from standard [0 0 1] to brighter [0.25 0.25 1] or dimmer [0 0 0.75]; Fig. S1A, orange rectangles). Shifts in visual brightness lasted 200 ms and were synchronized to the third interval.

Participants were visually cued via the fixation symbol, in blocks of 10 trials (30 s) (Fig. S1B), to detect location, pitch, or visual changes and to ignore changes in the other dimensions. The task was performed until the end of the block. Targets were defined as stimulus shifts occurring on the cued dimension; these occurred on 26% of trials. Participants were to respond to each target by pressing one of two buttons to indicate the direction (left/right, up/down, or bright/dim) of the target shift relative to the other intervals of the trial. Task blocks were separated by 6 s of rest during which no stimuli were presented. The number of location (60), pitch (60), and visual (45) changes was controlled across the entire run but distributed randomly across trials so that individual blocks were not predictable. Thus, each type of change was presented at a fixed rate throughout the run, the only manipulation being the detection task the participant was cued to perform. Fig. S1B illustrates the visual cue configurations. Two squares arranged horizontally cued the auditory location task (top row), two squares arranged vertically cued the auditory pitch task (middle row), and a single larger square cued the visual brightness task (bottom row).

In total, 15 unique conditions (5 spatial cue values × 3 tasks) were presented on each imaging run. Fourteen trials per combination (210 total trials, not including rest) were presented in each of two separate runs per spatial cue type (ITD and ILD).
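
The trial counts and percentages quoted in this section follow from simple arithmetic, which the short check below reproduces. The variable names are illustrative, and the target rate rests on one assumption not spelled out in the text: that each task is cued on one-third of the blocks, so that on average one-third of the shifts fall on the cued dimension.

```python
n_cue_values, n_tasks, trials_per_condition = 5, 3, 14
n_conditions = n_cue_values * n_tasks                  # 15 conditions
n_trials = n_conditions * trials_per_condition         # 210 trials per run
n_blocks = n_trials // 10                              # 21 blocks of 10 trials

shifts = {"location": 60, "pitch": 60, "visual": 45}   # shifted trials per run
p_shift = sum(shifts.values()) / n_trials              # 165 / 210

# Assumption: each task is cued on one-third of blocks, so on average
# one-third of all shifts occur on the currently cued dimension.
expected_targets = sum(shifts.values()) / n_tasks      # 55 targets per run
p_target = expected_targets / n_trials

run_minutes = n_blocks * (30 + 6) / 60                 # 30-s block plus 6-s rest
print(f"{p_shift:.1%} shifted, {p_target:.1%} targets, {run_minutes:.1f} min")
```

Under that assumption the check gives 78.6% shifted trials, 26.2% target trials, and 12.6 min per run, in line with the reported 78.6%, 26%, and ∼13 min.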

fMRI Data Acquisition and Response Estimation.

Whole-brain fMRI data were acquired at 3 T (Philips Achieva). Data were collected over the course of a single imaging session per participant, including a high-resolution T1-weighted whole-brain structural scan (MPRAGE, 1-mm³ isotropic voxels), B0 field map, and four echo-planar functional scans, each lasting ∼13 min. A continuous acquisition paradigm was used for functional imaging, with a repetition time of 2 s, an echo time of 21 ms, and 40 slices with a slice thickness of 3 mm and an in-plane voxel size of 2.7 × 2.7 mm².

The evoked response on each trial was quantified in each voxel via linear regression with a modeled hemodynamic response function (Supporting Information). Trial- and voxel-specific beta weights define the 3D functional dataset (Fig. S2, green text) from which all subsequent analyses were drawn.

Cortical Surface-Based Registration and Extraction of Functional Data.

For every subject, the high-resolution T1-weighted anatomical image was used to extract the cortical surface and register each individual to common 2D cortical surface space using Freesurfer (Fig. S2, bottom). Surface-based coregistered transformation coordinates were used in two diverging processing pipelines, one for analysis of binaural response functions and multivoxel pattern analysis (Fig. S2, processing pipeline 1) and the other for cortical surface mapping (Fig. S2, processing pipeline 2). See Supporting Information for additional details.

Analysis of Binaural Response Functions.

Response functions for ILD and ITD runs were computed by averaging beta weights across AC ROI voxels and cue-specific trials for each participant and hemisphere. Target trials, and any trials where a response was made (correctly or incorrectly), were excluded from this analysis. Following repeated-measures ANOVA, post hoc paired t tests were used to compare activity in the left and right hemisphere. The degree of contralateral bias for each response function was defined as a CI:

where contra and ipsi indicate the mean response across trials combining the two leftward or rightward binaural-cue values for each corresponding hemisphere. CI was calculated for each subject and hemisphere, and t tests compared the distribution to the null hypothesis value of 0.5. Post hoc comparisons were considered significant following control for multiple comparisons (false discovery rate, FDR) at q = 0.05 (78).
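
The CI equation itself does not appear in this text. One form consistent with the stated null value of 0.5 is CI = contra / (contra + ipsi), and the sketch below adopts it purely as an assumption; the authors' definition may differ. The one-sample t statistic and the Benjamini-Hochberg step-up procedure are likewise shown as generic stand-ins for the tests and FDR control described above, with hypothetical function names.

```python
import numpy as np

def contralaterality_index(contra, ipsi):
    """Assumed form: CI = contra / (contra + ipsi); 0.5 means no bias."""
    contra, ipsi = np.asarray(contra, float), np.asarray(ipsi, float)
    return contra / (contra + ipsi)

def one_sample_t(x, mu0=0.5):
    """t statistic and degrees of freedom for H0: mean(x) == mu0."""
    x = np.asarray(x, float)
    t = (x.mean() - mu0) / (x.std(ddof=1) / np.sqrt(x.size))
    return t, x.size - 1

def fdr_bh(pvals, q=0.05):
    """Benjamini-Hochberg step-up: boolean mask of rejected hypotheses."""
    p = np.asarray(pvals, float)
    order = np.argsort(p)
    thresh = q * np.arange(1, p.size + 1) / p.size
    passed = p[order] <= thresh
    reject = np.zeros(p.size, dtype=bool)
    if passed.any():
        k = np.max(np.flatnonzero(passed))      # largest rank meeting its threshold
        reject[order[: k + 1]] = True
    return reject
```

Note that a ratio of this kind is only well behaved when both mean responses are positive; with beta weights near or below zero the index can leave the 0 to 1 range.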

Analysis of Cortical Surface Maps.

The dataset for statistical analysis was generated by averaging across trials within each run for each of the 15 combinations of spatial cue and task. As in the binaural response-function analysis, trials that included targets or responses (correct or not) were excluded from the surface analysis. For each spatial cue type (ITD and ILD), the two imaging runs were combined to create one dataset for each subject and spatial-cue type. Then, a factorial repeated-measures ANOVA was conducted at every surface voxel, with factors of spatial cue (five levels) and task (three levels). To correct for multiple comparisons across surface voxels, the resulting maps of F values were analyzed using random field theory based on the smoothing kernel used (10-mm FWHM) and statistical threshold of P < 0.01 (79).

Multivoxel Pattern Analysis.

Cue-dependent activity in the functional dataset (Fig. S2, processing pipeline 1) was also assessed using multivoxel pattern classification of binaural cue values. For this purpose, a voxel pattern—consisting of the beta weights corresponding to each sound-responsive voxel included in the AC ROI—was extracted for each trial. Voxel patterns were then used for training and testing an SVM for different types of analyses as described below.

Single-cue classification.

Single-cue voxel-pattern classification analysis was performed using 1,000-fold cross-validation in which, on each fold, the dataset was split into two independent halves. The first half was used to train a linear SVM with a fixed cost parameter of c = 1 (LIBSVM), and the second half was used to test the SVM, thus quantifying the capability of voxel patterns to classify ILD or ITD conditions. All trials from a given 30-s (10-trial) block were assigned to either the training or the testing dataset, reducing the possibility that back-to-back trials would be classified on the basis of pattern similarity due to temporal proximity. The training/testing procedure was conducted independently on voxel patterns for each subject and each hemisphere, as well as for a combined-hemisphere dataset that concatenated voxel patterns from the left and right hemispheres.
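
The block-wise assignment of trials to training and testing halves can be sketched as follows. This is a minimal illustration, not the authors' code: the function name, the block layout of 42 blocks of 10 trials, and the use of a fresh random split on each of the 1,000 folds are assumptions, and the classifier itself is left as a comment.

```python
import numpy as np

def split_half_by_block(block_ids, rng):
    """Randomly assign whole blocks to train or test; returns two boolean masks."""
    block_ids = np.asarray(block_ids)
    blocks = rng.permutation(np.unique(block_ids))
    train_blocks = blocks[: len(blocks) // 2]
    train = np.isin(block_ids, train_blocks)
    return train, ~train

# 2 runs x 21 blocks x 10 trials, labeled by block (illustrative layout)
block_ids = np.repeat(np.arange(42), 10)
rng = np.random.default_rng(0)
for fold in range(1000):                       # 1,000 random splits
    train, test = split_half_by_block(block_ids, rng)
    # fit a linear SVM (C = 1) on patterns[train], score it on patterns[test]
```

Because the masks are built from block labels rather than trial indices, no block ever contributes trials to both halves.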

Cross-cue classification.

Cross-cue voxel-pattern classification analysis followed a procedure similar to that described above. In this analysis, however, the linear SVM was trained exclusively using the voxel patterns from the two ILD runs. The SVM was then tested using all trials from the two ITD runs (i.e., the other cue). Another linear SVM was trained with ITD trials and tested with ILD trials. Classification performance was analyzed by treating the five ILD and ITD values as roughly equivalent (i.e., −20, −10, 0, +10, +20 dB ILD align with −800, −400, 0, +400, +800 µs ITD, respectively). Although individual differences in how listeners use these cues suggest that perceptual correspondence was not likely perfect (80), psychophysical pilot testing in the laboratory confirmed that listeners in the current study experienced equivalent lateral perception for left, intermediate left, center, intermediate right, and rightward ILDs and ITDs.
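
The assumed alignment between the two cue scales can be written out explicitly. The dictionary names below are illustrative; the mapping simply restates the correspondence given in the text and derives the trading ratio it implies.

```python
ILD_DB = [-20, -10, 0, 10, 20]
ITD_US = [-800, -400, 0, 400, 800]

ild_to_itd = dict(zip(ILD_DB, ITD_US))   # one-to-one alignment stated in the text
itd_to_ild = dict(zip(ITD_US, ILD_DB))

# Implied trading ratio for the nonzero pairs, in microseconds per decibel
ratios = {itd / ild for ild, itd in ild_to_itd.items() if ild != 0}
```

Every nonzero pair gives the same ratio of 40 µs per dB, which lies within the 30–70 µs per dB range attributed to Brugge et al. (5) in the Discussion.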

Classification performance was quantified by calculating the rms error at each ILD or ITD value. Rms error for null-hypothesis (chance) performance at each value was estimated using a permuted dataset. For this, ILD or ITD labels were scrambled before training, classification, and calculation of rms error. This permutation process was repeated 1,000 times. The proportion of permutations with rms error less than or equal to observed rms error gives the P value directly and is reported to the last significant digit for classification results unless zero instances were observed, in which case P < 0.001. Plots of rms error were calculated with respect to chance performance. That is, in these plots (Figs. 5 and 6) negative values indicate better-than-chance classification in units of the cue in question (decibels for ILD or microseconds for ITD).
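
A minimal sketch of the error metric and permutation test described above is given below. The function names are hypothetical, and the null distribution is assumed to be supplied as a list of rms errors obtained from repeated training and testing with scrambled labels.

```python
import numpy as np

def rms_error_by_value(true_vals, predicted_vals):
    """RMS classification error at each true cue value, in cue units."""
    true_vals = np.asarray(true_vals, float)
    predicted_vals = np.asarray(predicted_vals, float)
    return {
        float(v): float(np.sqrt(np.mean((predicted_vals[true_vals == v] - v) ** 2)))
        for v in np.unique(true_vals)
    }

def permutation_p(observed, null_samples):
    """Proportion of permuted rms errors that are <= the observed rms error."""
    null_samples = np.asarray(null_samples, float)
    return float(np.mean(null_samples <= observed))
```

With 1,000 permutations, a returned proportion of zero would be reported as P < 0.001, and subtracting the mean of the null samples from the observed error gives the chance-relative values in which negative numbers indicate better-than-chance classification.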

fMRI Preprocessing and Response Estimation

Preprocessing of functional data used FEAT (FSL 5.0.7; FMRIB) and consisted of high-pass filtering (100 s), motion correction, B0 fieldmap unwarping, and skull stripping. An event-related general linear model was implemented in FEAT to identify voxels with significantly greater response following sound trials than during rest blocks (sound versus silence; initial cluster definition threshold Z > 2.3, corrected P < 0.001). These voxels were used in later analyses to define a voxel-based functional AC ROI.

Preprocessed functional data were imported into MATLAB (MathWorks) for estimation of voxelwise activity in AC. Preprocessed time-course data for each voxel were temporally interpolated and normalized to Z-score values. Single-trial response measurements were calculated for each voxel by linear regression of the interpolated time course (12 s following each stimulus trial) with a custom hemodynamic response function (HRF). The HRF was defined as the difference of two gamma density functions, using parameters adapted from Glover (81): 3.5 (peak 1), 5.2 (FWHM 1), 10.8 (peak 2), 7.35 (FWHM 2), 0.35 (dip), where 1 and 2 refer to the first and second gamma functions, respectively, and FWHM defines the full-width at half-maximum of the function. The beta weight obtained from linear regression quantified the evoked response on each trial. Trial- and voxel-specific beta weights define the 3D functional dataset (Fig. S2, green text) from which all subsequent analyses were drawn.
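
The HRF and single-trial regression can be illustrated as follows. This is a sketch under stated assumptions rather than the authors' MATLAB implementation: the peak/FWHM parameterization of each gamma function is one common choice and is assumed here, as are the 0.5-s interpolation grid, the inclusion of an intercept term, and all function names.

```python
import numpy as np

def gamma_fn(t, peak, fwhm):
    """Gamma-shaped response with unit height at `peak` (assumed parameterization)."""
    alpha = 8 * np.log(2) * (peak / fwhm) ** 2
    beta = fwhm ** 2 / (8 * np.log(2) * peak)
    t = np.clip(np.asarray(t, float), 0, None)
    return (t / peak) ** alpha * np.exp(-(t - peak) / beta)

def hrf(t, peak1=3.5, fwhm1=5.2, peak2=10.8, fwhm2=7.35, dip=0.35):
    """Difference of two gamma functions using the parameter values in the text."""
    return gamma_fn(t, peak1, fwhm1) - dip * gamma_fn(t, peak2, fwhm2)

def trial_beta(window, t):
    """Least-squares weight of a 12-s response window on the HRF, with an intercept."""
    X = np.column_stack([hrf(t), np.ones_like(t)])
    coef, *_ = np.linalg.lstsq(X, window, rcond=None)
    return coef[0]

t = np.arange(0, 12, 0.5)          # assumed 0.5-s interpolation grid
```

In this parameterization each gamma component reaches its maximum at the stated peak time, and `trial_beta` returns the scaling of the HRF that best fits the window in the least-squares sense.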

Cortical Surface-Based Registration and Extraction of Functional Data

Processing Pipeline 1.

The functional dataset (trial-by-voxel response data; Fig. S2, upper box) was masked with the intersection of an anatomical AC ROI mask and a functional (sound-versus-silence) mask. The AC ROI mask (Fig. S2, lower blue arrow) was defined in Freesurfer as the set of cortical-surface voxels corresponding to Heschl’s gyrus and STG. The ROI was computed separately for each individual using automated cortical parcellation that included regions “Heschl’s gyrus” and “superior temporal gyrus” as defined by Desikan (82). Once identified on the cortical surface, the AC ROI was converted to 3D functional space for each run (Freesurfer; mri_label2vol). This procedure resulted in an anatomically defined 3D ROI mask (containing both gray- and white-matter voxels). The functional mask was a product of the FEAT-processing pipeline (described above; Fig. S2, upper blue arrow) that calculated sound-versus-silence sensitivity for each voxel. The ROI mask and the functional mask were combined (i.e., logical conjunction) and used to select voxels from the functional 3D dataset (Fig. S2, green) for two further analyses: (i) Response tuning curves were computed by averaging across the selected voxels and across trials corresponding to each ILD/ITD value and (ii) pattern classification made use of the trial-by-voxel patterns without averaging.
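
The mask conjunction and the tuning-curve average in step (i) can be sketched with boolean indexing. The array layout (trials by voxels) and the function names are assumptions made for illustration.

```python
import numpy as np

def select_voxels(betas, anat_mask, func_mask):
    """Keep voxels inside both masks. betas: (n_trials, n_voxels)."""
    keep = np.logical_and(anat_mask, func_mask)   # logical conjunction
    return betas[:, keep]

def tuning_curve(patterns, cue_values):
    """Mean response across selected voxels and trials at each cue value."""
    cue_values = np.asarray(cue_values)
    return {
        float(v): float(patterns[cue_values == v].mean())
        for v in np.unique(cue_values)
    }
```

The same `select_voxels` output, left unaveraged, corresponds to the trial-by-voxel patterns used for classification in step (ii).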

Processing Pipeline 2.

Trial-based functional 3D data were transformed to 2D cortical surface maps using Freesurfer and aligned to a common 2D surface (Fig. S2, lower box). Surface data for each subject and hemisphere were smoothed (FWHM 10 mm on the surface), registered to a common spherical space using curvature-based alignment across subjects, and flattened to a 500- × 1,000-pixel map using the Mollweide projection (48).

Acknowledgments

This work was supported by NIH Grant R01-DC011548.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1707522114/-/DCSupplemental.

References

- 1.Rayleigh L. XII. On our perception of sound direction. Philos Mag Ser 6. 1907;13:214–232. [Google Scholar]

- 2.Wightman FL, Kistler DJ. The dominant role of low-frequency interaural time differences in sound localization. J Acoust Soc Am. 1992;91:1648–1661. doi: 10.1121/1.402445. [DOI] [PubMed] [Google Scholar]

- 3.Macpherson EA, Middlebrooks JC. Listener weighting of cues for lateral angle: The duplex theory of sound localization revisited. J Acoust Soc Am. 2002;111:2219–2236. doi: 10.1121/1.1471898. [DOI] [PubMed] [Google Scholar]

- 4.Henning GB. Detectability of interaural delay in high-frequency complex waveforms. J Acoust Soc Am. 1974;55:84–90. doi: 10.1121/1.1928135. [DOI] [PubMed] [Google Scholar]

- 5.Brugge JF, Dubrovsky NA, Aitkin LM, Anderson DJ. Sensitivity of single neurons in auditory cortex of cat to binaural tonal stimulation; effects of varying interaural time and intensity. J Neurophysiol. 1969;32:1005–1024. doi: 10.1152/jn.1969.32.6.1005. [DOI] [PubMed] [Google Scholar]

- 6.McAlpine D, Jiang D, Palmer AR. A neural code for low-frequency sound localization in mammals. Nat Neurosci. 2001;4:396–401. doi: 10.1038/86049. [DOI] [PubMed] [Google Scholar]

- 7.Semple MN, Kitzes LM. Binaural processing of sound pressure level in cat primary auditory cortex: Evidence for a representation based on absolute levels rather than interaural level differences. J Neurophysiol. 1993;69:449–461. doi: 10.1152/jn.1993.69.2.449. [DOI] [PubMed] [Google Scholar]

- 8.Higgins NC, Storace DA, Escabí MA, Read HL. Specialization of binaural responses in ventral auditory cortices. J Neurosci. 2010;30:14522–14532. doi: 10.1523/JNEUROSCI.2561-10.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Lui LL, Mokri Y, Reser DH, Rosa MGP, Rajan R. Responses of neurons in the marmoset primary auditory cortex to interaural level differences: Comparison of pure tones and vocalizations. Front Neurosci. 2015;9:132. doi: 10.3389/fnins.2015.00132. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Belliveau LAC, Lyamzin DR, Lesica NA. The neural representation of interaural time differences in gerbils is transformed from midbrain to cortex. J Neurosci. 2014;34:16796–16808. doi: 10.1523/JNEUROSCI.2432-14.2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Fitzpatrick DC, Kuwada S. Tuning to interaural time differences across frequency. J Neurosci. 2001;21:4844–4851. doi: 10.1523/JNEUROSCI.21-13-04844.2001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Gutschalk A, Steinmann I. Stimulus dependence of contralateral dominance in human auditory cortex. Hum Brain Mapp. 2015;36:883–896. doi: 10.1002/hbm.22673. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Palomäki KJ, Tiitinen H, Mäkinen V, May PJC, Alku P. Spatial processing in human auditory cortex: The effects of 3D, ITD, and ILD stimulation techniques. Brain Res Cogn Brain Res. 2005;24:364–379. doi: 10.1016/j.cogbrainres.2005.02.013. [DOI] [PubMed] [Google Scholar]

- 14.McLaughlin SA, Higgins NC, Stecker GC. Tuning to binaural cues in human auditory cortex. J Assoc Res Otolaryngol. 2016;17:37–53. doi: 10.1007/s10162-015-0546-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Stecker GC, McLaughlin SA, Higgins NC. Monaural and binaural contributions to interaural-level-difference sensitivity in human auditory cortex. Neuroimage. 2015;120:456–466. doi: 10.1016/j.neuroimage.2015.07.007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.von Kriegstein K, Griffiths TD, Thompson SK, McAlpine D. Responses to interaural time delay in human cortex. J Neurophysiol. 2008;100:2712–2718. doi: 10.1152/jn.90210.2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Krumbholz K, Hewson-Stoate N, Schönwiesner M. Cortical response to auditory motion suggests an asymmetry in the reliance on inter-hemispheric connections between the left and right auditory cortices. J Neurophysiol. 2007;97:1649–1655. doi: 10.1152/jn.00560.2006. [DOI] [PubMed] [Google Scholar]

- 18.Johnson BW, Hautus MJ. Processing of binaural spatial information in human auditory cortex: Neuromagnetic responses to interaural timing and level differences. Neuropsychologia. 2010;48:2610–2619. doi: 10.1016/j.neuropsychologia.2010.05.008.

- 19.Magezi DA, Krumbholz K. Evidence for opponent-channel coding of interaural time differences in human auditory cortex. J Neurophysiol. 2010;104:1997–2007. doi: 10.1152/jn.00424.2009.

- 20.Krumbholz K, et al. Representation of interaural temporal information from left and right auditory space in the human planum temporale and inferior parietal lobe. Cereb Cortex. 2005;15:317–324. doi: 10.1093/cercor/bhh133.

- 21.Woldorff MG, et al. Lateralized auditory spatial perception and the contralaterality of cortical processing as studied with functional magnetic resonance imaging and magnetoencephalography. Hum Brain Mapp. 1999;7:49–66. doi: 10.1002/(SICI)1097-0193(1999)7:1<49::AID-HBM5>3.0.CO;2-J.

- 22.Ungan P, Yagcioglu S, Goksoy C. Differences between the N1 waves of the responses to interaural time and intensity disparities: Scalp topography and dipole sources. Clin Neurophysiol. 2001;112:485–498. doi: 10.1016/s1388-2457(00)00550-2.

- 23.Schröger E. Interaural time and level differences: Integrated or separated processing? Hear Res. 1996;96:191–198. doi: 10.1016/0378-5955(96)00066-4.

- 24.Tardif E, Murray MM, Meylan R, Spierer L, Clarke S. The spatio-temporal brain dynamics of processing and integrating sound localization cues in humans. Brain Res. 2006;1092:161–176. doi: 10.1016/j.brainres.2006.03.095.

- 25.Altmann CF, et al. Independent or integrated processing of interaural time and level differences in human auditory cortex? Hear Res. 2014;312:121–127. doi: 10.1016/j.heares.2014.03.009.

- 26.Yamada K, Kaga K, Uno A, Shindo M. Sound lateralization in patients with lesions including the auditory cortex: Comparison of interaural time difference (ITD) discrimination and interaural intensity difference (IID) discrimination. Hear Res. 1996;101:173–180. doi: 10.1016/s0378-5955(96)00144-x.

- 27.Spierer L, Bellmann-Thiran A, Maeder P, Murray MM, Clarke S. Hemispheric competence for auditory spatial representation. Brain. 2009;132:1953–1966. doi: 10.1093/brain/awp127.

- 28.Edmonds BA, Krumbholz K. Are interaural time and level differences represented by independent or integrated codes in the human auditory cortex? J Assoc Res Otolaryngol. 2014;15:103–114. doi: 10.1007/s10162-013-0421-0.

- 29.Salminen NH, et al. Integrated processing of spatial cues in human auditory cortex. Hear Res. 2015;327:143–152. doi: 10.1016/j.heares.2015.06.006.

- 30.Fritz J, Shamma S, Elhilali M, Klein D. Rapid task-related plasticity of spectrotemporal receptive fields in primary auditory cortex. Nat Neurosci. 2003;6:1216–1223. doi: 10.1038/nn1141.

- 31.McGinley MJ, et al. Waking state: Rapid variations modulate neural and behavioral responses. Neuron. 2015;87:1143–1161. doi: 10.1016/j.neuron.2015.09.012.

- 32.Lee C-C, Middlebrooks JC. Auditory cortex spatial sensitivity sharpens during task performance. Nat Neurosci. 2011;14:108–114. doi: 10.1038/nn.2713.

- 33.Nourski KV, Steinschneider M, Oya H, Kawasaki H, Howard MA 3rd. Modulation of response patterns in human auditory cortex during a target detection task: An intracranial electrophysiology study. Int J Psychophysiol. 2015;95:191–201. doi: 10.1016/j.ijpsycho.2014.03.006.

- 34.Nourski KV, Steinschneider M, Rhone AE, Howard MA 3rd. Intracranial electrophysiology of auditory selective attention associated with speech classification tasks. Front Hum Neurosci. 2017;10:691. doi: 10.3389/fnhum.2016.00691.

- 35.Mesgarani N, David SV, Fritz JB, Shamma SA. Influence of context and behavior on stimulus reconstruction from neural activity in primary auditory cortex. J Neurophysiol. 2009;102:3329–3339. doi: 10.1152/jn.91128.2008.

- 36.Elhilali M, Xiang J, Shamma SA, Simon JZ. Interaction between attention and bottom-up saliency mediates the representation of foreground and background in an auditory scene. PLoS Biol. 2009;7:e1000129. doi: 10.1371/journal.pbio.1000129.

- 37.Alho K, et al. Attention-related modulation of auditory-cortex responses to speech sounds during dichotic listening. Brain Res. 2012;1442:47–54. doi: 10.1016/j.brainres.2012.01.007.

- 38.Woods DL, Alain C. Feature processing during high-rate auditory selective attention. Percept Psychophys. 1993;53:391–402. doi: 10.3758/bf03206782.

- 39.Rinne T, Muers RS, Salo E, Slater H, Petkov CI. Functional imaging of audio-visual selective attention in monkeys and humans: How do lapses in monkey performance affect cross-species correspondences? Cereb Cortex. 2017;27:3471–3484. doi: 10.1093/cercor/bhx092.

- 40.Rinne T, et al. Attention modulates sound processing in human auditory cortex but not the inferior colliculus. Neuroreport. 2007;18:1311–1314. doi: 10.1097/WNR.0b013e32826fb3bb.

- 41.Kong L, et al. Auditory spatial attention representations in the human cerebral cortex. Cereb Cortex. 2014;24:773–784. doi: 10.1093/cercor/bhs359.

- 42.Pugh KR, et al. Auditory selective attention: An fMRI investigation. Neuroimage. 1996;4:159–173. doi: 10.1006/nimg.1996.0067.

- 43.Häkkinen S, Ovaska N, Rinne T. Processing of pitch and location in human auditory cortex during visual and auditory tasks. Front Psychol. 2015;6:1678. doi: 10.3389/fpsyg.2015.01678.

- 44.Da Costa S, van der Zwaag W, Miller LM, Clarke S, Saenz M. Tuning in to sound: Frequency-selective attentional filter in human primary auditory cortex. J Neurosci. 2013;33:1858–1863. doi: 10.1523/JNEUROSCI.4405-12.2013.

- 45.Renvall H, Staeren N, Barz CS, Ley A, Formisano E. Attention modulates the auditory cortical processing of spatial and category cues in naturalistic auditory scenes. Front Neurosci. 2016;10:254. doi: 10.3389/fnins.2016.00254.

- 46.Hillyard SA, Hink RF, Schwent VL, Picton TW. Electrical signs of selective attention in the human brain. Science. 1973;182:177–180. doi: 10.1126/science.182.4108.177.

- 47.Rinne T, Koistinen S, Talja S, Wikman P, Salonen O. Task-dependent activations of human auditory cortex during spatial discrimination and spatial memory tasks. Neuroimage. 2012;59:4126–4131. doi: 10.1016/j.neuroimage.2011.10.069.

- 48.Woods DL, et al. Functional maps of human auditory cortex: Effects of acoustic features and attention. PLoS One. 2009;4:e5183. doi: 10.1371/journal.pone.0005183.

- 49.Petkov CI, et al. Attentional modulation of human auditory cortex. Nat Neurosci. 2004;7:658–663. doi: 10.1038/nn1256.

- 50.Haxby JV, et al. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 2001;293:2425–2430. doi: 10.1126/science.1063736.

- 51.Davis T, et al. What do differences between multi-voxel and univariate analysis mean? How subject-, voxel-, and trial-level variance impact fMRI analysis. Neuroimage. 2014;97:271–283. doi: 10.1016/j.neuroimage.2014.04.037.

- 52.Kamitani Y, Tong F. Decoding the visual and subjective contents of the human brain. Nat Neurosci. 2005;8:679–685. doi: 10.1038/nn1444.

- 53.Briley PM, Kitterick PT, Summerfield AQ. Evidence for opponent process analysis of sound source location in humans. J Assoc Res Otolaryngol. 2013;14:83–101. doi: 10.1007/s10162-012-0356-x.

- 54.Zatorre RJ, Penhune VB. Spatial localization after excision of human auditory cortex. J Neurosci. 2001;21:6321–6328. doi: 10.1523/JNEUROSCI.21-16-06321.2001.

- 55.Duffour-Nikolov C, et al. Auditory spatial deficits following hemispheric lesions: Dissociation of explicit and implicit processing. Neuropsychol Rehabil. 2012;22:674–696. doi: 10.1080/09602011.2012.686818.

- 56.Derey K, Valente G, de Gelder B, Formisano E. Opponent coding of sound location (azimuth) in planum temporale is robust to sound-level variations. Cereb Cortex. 2016;26:450–464. doi: 10.1093/cercor/bhv269.

- 57.Destrieux C, Fischl B, Dale A, Halgren E. Automatic parcellation of human cortical gyri and sulci using standard anatomical nomenclature. Neuroimage. 2010;53:1–15. doi: 10.1016/j.neuroimage.2010.06.010.

- 58.Penhune VB, Zatorre RJ, MacDonald JD, Evans AC. Interhemispheric anatomical differences in human primary auditory cortex: Probabilistic mapping and volume measurement from magnetic resonance scans. Cereb Cortex. 1996;6:661–672. doi: 10.1093/cercor/6.5.661.

- 59.Hafter ER. Spatial hearing and the duplex theory: How viable is the model? In: Edelman GM, Gall WE, Cowan WM, editors. Dynamic Aspects of Neocortical Function. Wiley; New York: 1984. pp. 425–448.

- 60.Rinne T, Ala-Salomäki H, Stecker GC, Pätynen J, Lokki T. Processing of spatial sounds in human auditory cortex during visual, discrimination and 2-back tasks. Front Neurosci. 2014;8:220. doi: 10.3389/fnins.2014.00220.

- 61.Higgins NC, McLaughlin SA, Da Costa S, Stecker GC. Sensitivity to an illusion of sound location in human auditory cortex. Front Syst Neurosci. 2017;11:35. doi: 10.3389/fnsys.2017.00035.

- 62.Santoro R, et al. Reconstructing the spectrotemporal modulations of real-life sounds from fMRI response patterns. Proc Natl Acad Sci USA. 2017;114:4799–4804. doi: 10.1073/pnas.1617622114.

- 63.Stecker GC, Harrington IA, Middlebrooks JC. Location coding by opponent neural populations in the auditory cortex. PLoS Biol. 2005;3:e78. doi: 10.1371/journal.pbio.0030078.

- 64.Middlebrooks JC, Xu L, Eddins AC, Green DM. Codes for sound-source location in nontonotopic auditory cortex. J Neurophysiol. 1998;80:863–881. doi: 10.1152/jn.1998.80.2.863.

- 65.Werner-Reiss U, Groh JM. A rate code for sound azimuth in monkey auditory cortex: Implications for human neuroimaging studies. J Neurosci. 2008;28:3747–3758. doi: 10.1523/JNEUROSCI.5044-07.2008.

- 66.Moshitch D, Nelken I. The representation of interaural time differences in high-frequency auditory cortex. Cereb Cortex. 2016;26:656–668. doi: 10.1093/cercor/bhu230.

- 67.Yin TC, Kuwada S, Sujaku Y. Interaural time sensitivity of high-frequency neurons in the inferior colliculus. J Acoust Soc Am. 1984;76:1401–1410. doi: 10.1121/1.391457.

- 68.Batra R, Kuwada S, Stanford TR. High-frequency neurons in the inferior colliculus that are sensitive to interaural delays of amplitude-modulated tones: Evidence for dual binaural influences. J Neurophysiol. 1993;70:64–80. doi: 10.1152/jn.1993.70.1.64.

- 69.Phillips DP, Irvine DR. Responses of single neurons in physiologically defined area AI of cat cerebral cortex: Sensitivity to interaural intensity differences. Hear Res. 1981;4:299–307. doi: 10.1016/0378-5955(81)90014-9.

- 70.Nakamoto KT, Zhang J, Kitzes LM. Response patterns along an isofrequency contour in cat primary auditory cortex (AI) to stimuli varying in average and interaural levels. J Neurophysiol. 2004;91:118–135. doi: 10.1152/jn.00171.2003.

- 71.Razak KA. Systematic representation of sound locations in the primary auditory cortex. J Neurosci. 2011;31:13848–13859. doi: 10.1523/JNEUROSCI.1937-11.2011.

- 72.Imig TJ, Adrián HO. Binaural columns in the primary field (A1) of cat auditory cortex. Brain Res. 1977;138:241–257. doi: 10.1016/0006-8993(77)90743-0.

- 73.Jones HG, Brown AD, Koka K, Thornton JL, Tollin DJ. Sound frequency-invariant neural coding of a frequency-dependent cue to sound source location. J Neurophysiol. 2015;114:531–539. doi: 10.1152/jn.00062.2015.

- 74.Salo E, Rinne T, Salonen O, Alho K. Brain activations during bimodal dual tasks depend on the nature and combination of component tasks. Front Hum Neurosci. 2015;9:102. doi: 10.3389/fnhum.2015.00102.

- 75.Harinen K, Rinne T. Activations of human auditory cortex to phonemic and nonphonemic vowels during discrimination and memory tasks. Neuroimage. 2013;77:279–287. doi: 10.1016/j.neuroimage.2013.03.064.

- 76.McLaughlin SA. 2013. Functional magnetic resonance imaging of human auditory cortical tuning to interaural level and time differences. PhD dissertation (Univ of Washington, Seattle)