Abstract

Introduction:

Since the release of the World Health Report in 2000, health system performance ranking studies have garnered significant health policy attention. However, this literature has produced variable results. The objective of this study was to synthesize the research and analyze the ranked performance of Canada's health system on the international stage.

Method:

We conducted a scoping review exploring Canada's place in ranked health system performance among its peer Organisation for Economic Co-operation and Development countries. Arksey and O'Malley's five-stage scoping review framework was adopted, yielding 48 academic and grey literature articles. A literature extraction tool was developed to gather information on themes that emerged from the literature.

Synthesis:

Although various methodologies were used to rank health system performance internationally, results generally suggested that Canada has been a middle-of-the-pack performer in overall health system performance for the last 15 years. Canada's overall rankings were 7/191, 11/24, 10/11, 10/17, “Promising” and “B” grade across different studies. According to past literature, Canada performed well in areas of efficiency, productivity, attaining health system goals, years of life lived with disability and stroke mortality. By contrast, Canada performed poorly in areas related to disability-adjusted life expectancy, potential years of life lost, obesity in adults and children, diabetes, female lung cancer and infant mortality.

Conclusion:

As countries introduce health system reforms aimed at improving the health of populations, international comparisons are useful to inform cross-country learning in health and social policy. While ranking systems do have shortcomings, they can serve to shine a spotlight on Canada's health system strengths and weaknesses to better inform the health policy agenda.

Abstract (translated from the French)

Introduction:

Since the release of the World Health Report in 2000, studies ranking health system performance have attracted policy attention. However, this literature has produced variable results. The objective of this study is to synthesize the research and analyze the ranked performance of the Canadian health system on the international stage.

Method:

We conducted a scoping review exploring Canada's place in ranked health system performance among OECD countries. Arksey and O'Malley's five-stage review framework was used, yielding 48 scientific and grey literature articles. A literature extraction tool was developed to gather information on the themes that emerged from the literature.

Synthesis:

Although several methodologies were used to rank health system performance internationally, the results generally suggest that Canada has been in the middle of the pack for overall health performance over the last 15 years. Canada's overall rankings were 7/191, 11/24, 10/11, 10/17, “promising” and a “B” grade across the various studies. The earlier literature indicates that Canada performed well in the areas of efficiency, productivity, attainment of health system goals, years of life lived with disability and stroke mortality. By contrast, Canada performs poorly in areas related to disability-adjusted life expectancy, potential years of life lost, obesity in adults and children, diabetes, lung cancer in women and infant mortality.

Conclusion:

As countries launch health system reforms aimed at improving population health, international comparisons are useful to inform cross-country learning on health and social policy. Although ranking systems have certain shortcomings, they can serve to highlight the strengths and weaknesses of the Canadian health system to better inform health policy.

Introduction

Since the release of the World Health Report 2000, Health Systems: Improving Performance, in June 2000 (WHO 2000), international studies focused on health system performance ranking have been gaining momentum. Despite challenges associated with ranking systems (Forde et al. 2013; Papanicolas et al. 2013; Smith 2002), a general enthusiasm for international ranked comparisons has evolved among academic and non-academic audiences. Studies that compare countries on the international stage provide a general and simplified picture of the overall performance of complex health systems (Hewitt and Wolfson 2013). Although what is measured may not reflect the desired end-state of a healthcare system, ranked performance sets up a contest among countries with enhanced potential to attract the media, and thus the public and policy makers. Writing about the attention that the World Health Report garnered, Navarro (2001) compared the report to the European soccer championship, which was being held around the same time as the report's release: “for a short period it seemed the HCS (Health Care System) league was going to be as important as the European soccer games” (p. 21). Because rankings present a simple picture of health system performance, this information tends to have broad uptake among the media and the public at large. Comparisons made between peer countries have the potential to influence health and social policy, with the goal of learning lessons from the “best” performers around the world (Murray and Frenk 2010).

Among comparative studies of ranked health system performance, Canada's performance results have been variable, with some suggestion that Canada has declined in the rankings over time. The Canadian ranking for life expectancy at birth, for instance, dropped from second to seventh place in relation to 19 comparator countries between 1990 and 2010, and from fourth to tenth place for years of life lost (Murray et al. 2013). At the same time, studies have suggested that Canada has performed well, at least in certain areas. For example, according to Murray et al. (2013), Canada was shown to perform well in years of life lived with disability and stroke mortality. These variable results have left many with a confusing picture of Canada's overall health system performance on the international stage. To address these disparate and somewhat contradictory findings, the objective of this scoping review was twofold: (1) to synthesize the existing literature on health system performance and rankings, and (2) to examine and summarize Canada's ranked health system performance to provide a clear picture of Canada's performance that can offer insights for policy makers and the public at large.

Methodology

We adopted the five-stage scoping review framework developed by Arksey and O'Malley (2005): identifying the research question; identifying the relevant studies; defining inclusion and exclusion criteria; charting the data; and collating, summarizing and reporting the results. Arksey and O'Malley (2005) define a scoping review as “a technique to ‘map’ relevant literature in the field of interest … [which] tends to address broader topics where many different study designs might be applicable … [and] is less likely to seek to address very specific research questions nor, consequently, to assess the quality of included studies” (p. 20).

Two reviewers (M.N. and T.S.) initiated the review with the question “What do we know about Canada's health system performance in the international context?” Initially, all MeSH (Medical Subject Headings) terms and keywords related to health system performance (Box 1) were identified and a search was conducted using various sources. The first reviewer (M.N.) searched the online databases Medline, Scopus, CINAHL and Embase. The second reviewer (T.S.) searched Google Scholar and, for grey literature, the websites of the World Health Organization (WHO), the Organisation for Economic Co-operation and Development (OECD), the Canadian Institute for Health Information (CIHI), the Conference Board of Canada and the Commonwealth Fund.

Box 1. Search terms.

(Health system performance OR Health system ranking OR Healthcare performance OR Healthcare ranking OR Performance measurement)

AND

(Developed countries OR Canada)
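The two term groups in Box 1 can be combined mechanically. The following is a minimal sketch of how such a Boolean string might be assembled. The helper name `build_query` and the quoting of each phrase are assumptions made for illustration; they do not reproduce the syntax of any particular database used in the review.

```python
# Term groups copied from Box 1. The helper name and the phrase quoting
# are illustrative assumptions, not the syntax of a specific database.
PERFORMANCE_TERMS = [
    "Health system performance",
    "Health system ranking",
    "Healthcare performance",
    "Healthcare ranking",
    "Performance measurement",
]
SETTING_TERMS = ["Developed countries", "Canada"]


def build_query(*groups):
    """OR the terms within each group, then AND the groups together."""
    clauses = []
    for terms in groups:
        quoted = " OR ".join(f'"{term}"' for term in terms)
        clauses.append(f"({quoted})")
    return " AND ".join(clauses)


query = build_query(PERFORMANCE_TERMS, SETTING_TERMS)
print(query)
```

Keeping the groups as separate lists makes it straightforward to add or drop terms as the search is refined.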

Our search terms and sources were broad enough to capture all types of study designs. The search process was iterative. As familiarity with the literature increased, the search terms and sources were redefined to allow more precise searches to be undertaken. The initial literature review increased familiarity with the concept and helped us systematically develop the inclusion and exclusion criteria (Table 1). Although definitions of “performance” were variable within the literature, we defined health system performance as “the capacity of a system to produce the highest attainable or most desirable outcome for indicators, while indicators measured one or many aspects of the health system” (Tchouaket et al. 2012). The two reviewers independently selected articles that ranked health systems based on their performance or that generally discussed ranking health system performance. Papers that did not rank health systems or studies that narrowly focused on subcomponents of health system performance such as efficiency, productivity, effectiveness, quality, accessibility, utilization and equity were excluded from the study. Our exclusion criteria were applied systematically to the best of our knowledge and the included papers represented the span of studies a scoping review usually captures.

Table 1. Inclusion and exclusion criteria for the papers

| Inclusion criteria | Exclusion criteria |
|---|---|
| Ranked health system performance | Specifically focused on quality, efficiency, equity or accessibility, without linking them to performance |
| Generally about ranking health system performance | Not in English |
| Published in English between 2000 and 2015 | Published before 2000 or after 2015 |
| Focused on international health system performance rather than provincial | Compared only Canadian provinces and territories |
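The criteria in Table 1 can be read as a single conjunctive rule. The sketch below expresses that rule as a predicate over bibliographic records. In the review itself, screening was carried out by human reviewers; the `Source` record, its field names and the three example records are hypothetical and serve only to make the logic explicit.

```python
from dataclasses import dataclass


@dataclass
class Source:
    # All field names are hypothetical; they mirror the rows of Table 1.
    title: str
    year: int
    language: str
    ranks_or_discusses_ranking: bool
    international_scope: bool


def meets_criteria(src: Source) -> bool:
    """Return True when a source passes every inclusion rule in Table 1."""
    return (
        src.ranks_or_discusses_ranking
        and src.language == "English"
        and 2000 <= src.year <= 2015
        and src.international_scope
    )


# Fictional records, used only to exercise the rule.
candidates = [
    Source("International ranking study", 2013, "English", True, True),
    Source("Provincial comparison only", 2010, "English", True, False),
    Source("Pre-2000 ranking study", 1998, "English", True, True),
]
retained = [s.title for s in candidates if meets_criteria(s)]
print(retained)
```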

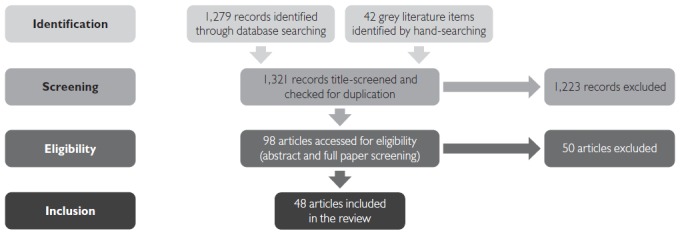

The initial search yielded more than 1,000 sources. We performed a three-part selection process. First, the titles were reviewed independently by two reviewers (M.N., T.S.) to verify that each paper met the established inclusion and exclusion criteria. Second, the same two reviewers independently screened the abstracts and full papers to decide which sources to include, and exchanged their lists of sources to ensure a shared understanding of the inclusion and exclusion criteria. Finally, a third reviewer (K.H.) examined the selections of the first two reviewers. Any uncertainty was resolved through discussion among the three reviewers until consensus was reached. Applying the inclusion and exclusion criteria resulted in 48 retained sources (Figure 1). The literature was imported into the software program Ref Manager.

Figure 1. Flow chart

The two reviewers applied a qualitative approach using open coding and inductive reasoning to identify themes in the literature and to develop categories for further coding and sorting. The third reviewer observed the coding to ensure inter-reviewer reliability. The reviewers subsequently agreed on major themes and developed a literature extraction tool to obtain key information from the academic and grey literature. The data were extracted and entered directly into the literature extraction tool in an Excel spreadsheet. The extracted data were a mixture of general information about the studies, specific information relating to health system performance methodology and Canada's health system performance. Empirical studies that included Canada were distinguished and charted in a separate table to provide in-depth information on Canada's ranked performance. We synthesized the extracted data and produced a preliminary findings report, which was shared with the team and with an external expert panel for further comments. The research team members brought a range of expertise in national and international health policy analysis, population health and health system performance. The external panel drew together national and international scholars in the fields of epidemiology, biostatistics, public health, international health policy and clinical medicine. Comments from the team members and the external advisors were integrated, and a final summary of the synthesized findings was produced.

Synthesis

The 48 sources in this review included academic and grey literature, empirical and conceptual papers, commentaries and editorials. Appendix 1 provides basic information on the 48 sources. Grey literature mainly came from the WHO, the Commonwealth Fund, the Conference Board of Canada, the OECD and the CIHI – organizations that maintain and report on national and international health-related databases. The time period of the performance data ranged from 1960 to 2010. Of the 48 sources included, the reviewers distinguished 12 empirical studies that explicitly ranked Canada on the international stage, and those 12 studies were charted separately in Table 2 and Appendix 2 for in-depth synthesis of Canada's ranked performance. On average, a three- to five-year interval existed between the time data were collected and the time a study was published using the same data.

Table 2. A summary of methodologies for ranked performance and Canada's ranking

| Author (year) | Title | No. | HSP methodology | HSP measurement indicators | Canada's overall/ranked performance |
|---|---|---|---|---|---|
| WHO (2000) | The World Health Report 2000 – Health Systems: Improving Performance | 191 | A composite of indicators as a measure of HSP: focused on objectives of the HS | Health status: DALE; Responsiveness: survey; Fairness: survey | |
| Anderson and Hussey (2001) | Comparing Health System Performance in OECD Countries | 26 | Individual indicators as a measure of HSP on key subject areas | Individual indicators: | Compared to OECD median, Canada has: |
| Nolte et al. (2006) | Diabetes as a Tracer Condition in International Benchmarking of Health Systems | 29 | Disease as a tracer condition to assess HSP | Diabetes incidence and mortality | Diabetes mortality to incidence ratio: 6/29 |
| The Conference Board of Canada (2006) | Healthy Provinces, Healthy Canadians: A Provincial Benchmarking Report | 24 | A composite of indicators as a measure of HSP: benchmarking all indicators together | Health status: 11 indicators; Healthcare outcomes: 7 indicators; Healthcare utilization: 1 indicator | Overall ranking: 11/24 |
| Gay et al. (2011) | Mortality Amenable to Healthcare in 31 OECD Countries: Estimates and Methodological Issues | 31 | Mortality amenable to healthcare intervention | Amenable mortality | |
| Tchouaket et al. (2012) | HSP of 27 OECD Countries | 27 | A composite of indicators as a measure of HSP: Donabedian's structure-process-outcome and effectiveness, efficiency and productivity | Health status: 27 indicators; Resources: 21 indicators; Health services: 20 indicators | |
| Verguet and Jamison (2013) | Performance in Rate of Decline of Adult Mortality in the OECD, 1970–2010 | 22 | Individual indicator as a measure of HSP: adult mortality | Female adult mortality | |
| Veillard et al. (2013), CIHI (2011, 2013) | Methods to Stimulate National and Sub-National Benchmarking through International Health System Performance Comparisons: A Canadian Approach | 34 | Individual indicators as measures of HSP: directional measures for four dimensions of HSP: (1) Health status, (2) Non-medical determinant, (3) Access and (4) Quality of care | Health status: 15 indicators; Non-medical determinant: 6 indicators; Access: 3 indicators; Quality of care: 15 indicators | Compared to OECD average, Canada performs well on some indicators and needs improvement on others |
| Murray et al. (2013) | UK Health Performance: Findings of the Global Burden of Disease Study 2010 | 19 | Individual indicator as a measure of HSP: benchmarking individual indicators | Mortalities and causes of death, YLL, YLD, DALY, HALE (259 diseases and injuries and 67 risk factors) | |
| Gerring et al. (2013) | Assessing Health System Performance: A Model-Based Approach | 190 | Composite index: economy–education, epidemiology, geography, culture and residual is modelled as public health index in which residual is considered as HSP | Health outcome: 9 indicators; Culture and history: 2 indicators; Education: 2 indicators; Epidemiology: 3 indicators; Geography: 11 indicators; Economy: 3 indicators; Miscellaneous: 8 indicators | Canada's overall ranking: 97/190 |
| Davis et al. (2014) | Mirror, Mirror on the Wall | 11 | Composite: ranking based on individual indicators and averaging the ranks | Quality: 44 indicators; Access: 12 indicators; Efficiency: 11 indicators; Equity: 10 indicators; Healthy lives: 3 indicators | Canada's overall ranking: 10/11 |
| The Conference Board of Canada (2015) | International Ranking: Canada Benchmarked Against 15 Countries | 17 | Composite: normalizing and averaging indicators | 11 indicators: LEB; self-reported health status; premature mortality (PYLL); infant mortality; mortality from cancer, circulatory disease, respiratory disease, diabetes, musculoskeletal system, mental disorders and medical misadventures | Canada's overall grade: “B”; Canada's overall ranking: 10/17 |

CIHI = Canadian Institute for Health Information; DALE = disability-adjusted LE; DALY = disability-adjusted life year; FAM = female adult mortality; GDP = gross domestic product; HALE = health-adjusted LE; HS = health system; HSP = HS performance; LE = life expectancy; LEB = LE at birth; MRI = magnetic resonance imaging; No. = number of countries compared; OECD = Organisation for Economic Co-operation and Development; PYLL = potential YLL; WHO = World Health Organization; WHR = World Health Report; YLD = years lived with disability; YLL = years of life lost.

The set of comparator countries varied across studies. For example, the original WHO World Health Report (2000) included 191 countries, while other studies elected to assess a narrower set of OECD countries or “peer countries” (Table 2). Given that the choice of comparator countries had a noteworthy influence on rankings, some have argued that ranking performance among peer countries was a more plausible and appropriate pursuit than the indiscriminate inclusion of all countries in the original list. However, in most studies peer countries were selected implicitly, without any clearly defined criteria. When criteria were explicitly specified, they most often included factors such as GDP per capita, population size, language, culture and history. One paper developed a model based on health outcome indicators and country characteristics to identify clusters of peer countries (Bauer and Ameringer 2010).

Major themes were categorized around health system performance methodologies and Canada's ranked health system performance. It is worth noting that the health system performance methodologies identified in this review generally relate to the Canadian context, because the inclusion and exclusion criteria favoured studies involving Canada. Methodologies that did not include Canada in their ranking were excluded from this review.

Health system performance methodology

Of the 48 sources included in this review, 12 used some method of ranking and included Canada among the countries ranked (Table 2). Each of the studies applied different frameworks, indicators and analytical methods. The four main groups of indicators included population health outcome indicators, disease-specific indicators, healthcare system indicators and indicators focused on the non-medical determinants of health. Population health outcome indicators (i.e., life expectancies, years of life lost and mortalities) were found in nearly all studies (Anderson and Hussey 2001; Davis et al. 2014; Gerring et al. 2013; Heijink et al. 2013; Murray et al. 2013; Nolte et al. 2006; Reibling 2013; Tchouaket et al. 2012; The Conference Board of Canada 2006, 2015; Veillard et al. 2013; Verguet and Jamison 2013; WHO 2000). Some studies went beyond outcome indicators and included causes of death, disease incidence rates and mortality rates for specific diseases (Arah et al. 2005; Murray et al. 2013; Nolte et al. 2006; The Conference Board of Canada 2015). Healthcare system indicators typically comprised the number of physicians and hospitals, the volume of services and utilization rates. Indicators of health spending were also used to assess efficiency, fair financing and equity of access within the health system (Davis et al. 2014; Heijink et al. 2013; Reibling 2013; Tchouaket et al. 2012). Non-medical determinant indicators were generally related to smoking, alcohol and diet (Anderson and Hussey 2001; Hussey et al. 2004; The Conference Board of Canada 2006).

Numerous analytical methods were applied in ranking health system performance. Simple benchmarking approaches, in which a country's performance was ranked in relation to top and bottom performers, were the most common (Davis et al. 2014; Tchouaket et al. 2012; The Conference Board of Canada 2006, 2015). Some studies used more complex methods to assess the performance of countries. One study applied cluster analysis to group countries with the same level of performance (Tchouaket et al. 2012), and another applied a least squares regression model to control for broader social determinants of health such as education, economy, history and culture (Gerring et al. 2013). The choice of analytical methods depended on the conceptual framework used to assess health system performance.
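The regression-based approach can be illustrated with a deliberately simplified sketch: fit a least squares line of a health outcome on a single covariate and treat each country's residual as its adjusted performance. This is not the model of Gerring et al. (2013), which controls for many determinants at once; the single covariate, the four fictional countries and all values below are assumptions chosen only to show the mechanics.

```python
# Hypothetical data: one covariate (for example, an education index) and one
# health outcome (for example, life expectancy) for four fictional countries.
countries = ["A", "B", "C", "D"]
covariate = [1.0, 2.0, 3.0, 4.0]
outcome = [70.0, 75.0, 74.0, 81.0]


def ols_residuals(x, y):
    """Fit y = intercept + slope * x by least squares and return residuals."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]


residuals = ols_residuals(covariate, outcome)

# A larger positive residual means a better outcome than the covariate predicts.
ranking = [c for _, c in sorted(zip(residuals, countries), reverse=True)]
print(ranking)
```

In this fictional data, country D has the highest raw outcome, yet country B ranks first once the covariate is taken into account, which shows how adjusting for determinants can reorder countries.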

The methodologies applied to assess health system performance fell into one of two categories: those that used a single health indicator as a proxy for health system performance, and those that developed an index for health system performance using many indicators. When single indicators were taken as a measure for health system performance, population health outcome indicators were the most commonly used (Murray et al. 2013; Verguet and Jamison 2013). The second category of studies used a number of indicators to create a single composite index for health system performance. Composite indices were created in multiple ways. The simplest approach was to sum indicators normalized along the same scale (Davis et al. 2014). Another approach combined indicators weighted according to theoretical or conceptual frameworks (Tchouaket et al. 2012; WHO 2000).
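The two ways of building a composite index described above can be sketched as follows, assuming min-max normalization to a 0–1 scale and an arithmetic (optionally weighted) mean. The indicator names, country labels, values and weights are all hypothetical; the studies cited used their own indicator sets and normalization rules.

```python
# Hypothetical indicators: name -> (higher_is_better, {country: value}).
indicators = {
    "life_expectancy": (True, {"A": 82.0, "B": 80.0, "C": 78.0}),
    "infant_mortality": (False, {"A": 3.5, "B": 3.0, "C": 5.0}),
}


def min_max_scores(values, higher_is_better):
    """Rescale values to 0-1 so that 1 is always the best performer.

    Assumes the indicator varies across countries (max differs from min).
    """
    lo, hi = min(values.values()), max(values.values())
    scores = {}
    for country, value in values.items():
        score = (value - lo) / (hi - lo)
        scores[country] = score if higher_is_better else 1.0 - score
    return scores


def composite_index(indicators, weights=None):
    """Weighted mean of normalized indicators; equal weights by default."""
    names = list(indicators)
    if weights is None:
        weights = {name: 1.0 for name in names}
    total_weight = sum(weights[name] for name in names)
    countries = list(indicators[names[0]][1])
    index = {country: 0.0 for country in countries}
    for name in names:
        higher_is_better, values = indicators[name]
        scores = min_max_scores(values, higher_is_better)
        for country in countries:
            index[country] += weights[name] * scores[country] / total_weight
    return index


equal = composite_index(indicators)
weighted = composite_index(
    indicators, {"life_expectancy": 1.0, "infant_mortality": 3.0}
)
print(equal, weighted)
```

With equal weights country A leads, while weighting infant mortality more heavily puts country B first, which illustrates why the weighting scheme of a composite index is itself a source of variation in rankings.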

Caution was taken when interpreting findings, as all methods of ranking had limitations. For example, the method that used summary health indicators as a proxy for health system performance was criticized on the grounds that health was a function of the whole of society rather than just the health (care) system, and that health outcomes could not be attributed only to the activities of the health system (Arah et al. 2006; Handler et al. 2001; Kaltenthaler et al. 2004; Navarro 2001; Rosen 2001). Studies that simply added up indicators by giving them equal weight were also consistently criticized (Richardson et al. 2003; Wibulpolprasert and Tangcharoensathien 2001). The use of conceptual frameworks in performance assessment was generally applauded for acknowledging the complexity of health systems, but the way each framework was operationalized was often heavily criticized (Bhargava 2001; Blendon et al. 2001; Deber 2004; Mulligan et al. 2000; Wagstaff 2002; Wibulpolprasert and Tangcharoensathien 2001). For example, the chief editor of the 2000 WHO report, Musgrove (2010), wrote 10 years after the report was published that “61% of the numbers that went into that ranking exercise were not observed but simply imputed” (p. 1546).

Canada's ranked health system performance

Canada's ranked performance varied across the studies. Table 2 and Appendix 2 summarize Canada's ranked health system performance in the 12 empirical studies. When ranked in numbers, Canada's performance ranged from 6/29 for diabetes mortality-to-incidence ratio (Nolte et al. 2006) to 97/190 for overall health system performance (Gerring et al. 2013). Table 3 shows Canada's ranked numbers in various studies, with each study applying different indicators, different frameworks, different comparator countries and different analytical methods.

Table 3. Summary of Canada's ranked performance in numbers

| Year | Author | Ranked for | Canada's ranking |
|---|---|---|---|
| 2000 | WHO | Overall goal attainment | 7/191 |
| 2000 | WHO | Overall health system performance | 30/191 |
| 2006 | Nolte et al. | Diabetes mortality to incidence ratio | 6/29 |
| 2006 | The Conference Board of Canada | Overall health system performance | 11/24 |
| 2013 | Verguet and Jamison | Female adult mortality | 15/22 |
| 2013 | Murray et al. | Age-standardized years of life lost | 10/19 |
| 2013 | Murray et al. | Life expectancy at birth | 7/19 |
| 2013 | Gerring et al. | Overall health system performance | 97/190 |
| 2014 | Davis et al. | Overall health system performance | 10/11 |
| 2015 | The Conference Board of Canada | Overall health system performance | 10/17 (B grade) |

WHO = World Health Organization.

When not ranked in numbers, Canada was often compared to the OECD average. In these cases, Canada tended to achieve a middling performance (Anderson and Hussey 2001; Veillard et al. 2013) in terms of absolute performance, “above average” for relative performance and “promising” for “integrated overall performance” (Tchouaket et al. 2012).

Overall, we identified a number of themes regarding Canada's ranked performance. First, Canada performed well for some indicators and poorly for others. On closer analysis, Canada's rankings were higher for most population health outcome indicators but lower for complex indices of performance. Second, there was a sex difference in Canada's ranking on the international stage, with some indicators of female health ranking lower than indicators of male health. Finally, Canada's ranked performance tended to decline over time: it was stronger in earlier decades, with a fall through the ranks observable in more recent decades.

Canada's performance was variable depending upon the indicators selected. Some of the desirable rankings included Canada being placed 7th out of 191 countries in terms of overall goal attainment (WHO 2000). Canada's male life expectancy was 6/24 in 2006, male disability-adjusted life expectancy (DALE) was 5/26 in 2001 and male potential years of life lost (PYLL) was 6/26 in 2001 (Anderson and Hussey 2001). Canada was found to be a top performer in terms of stroke care for years (Murray et al. 2013; The Conference Board of Canada 2006). Tchouaket et al. (2012) clustered Canada into a group of countries with higher levels of service, higher efficiency (outcome/resource) and higher productivity (services/resources). In terms of undesirable performances, Canada ranked 14th out of 26 countries for female DALE and 12th out of 26 countries for female PYLL in 2001 (Anderson and Hussey 2001). Canada ranked second last for female lung cancer rate and third last for female mortality from lung cancer (The Conference Board of Canada 2006). In 2013, Canada ranked 15th out of 22 countries for female adult mortality (Verguet and Jamison 2013). Veillard and colleagues found that Canada had higher rates of overweight and obesity in adults and children, and higher rates of diabetes in adults, compared to the OECD average in 2013 (Veillard et al. 2013). The Conference Board of Canada gave Canada a “C” grade for infant mortality (The Conference Board of Canada 2015). Tchouaket and colleagues (2012) grouped Canada among countries with poorer resources, average outcomes and lower effectiveness (meaning that outcomes fell short of the level expected for the amount of services provided).

Despite the variability within the literature, Canada often ranked higher for summary population health outcome indicators than for composite indices. In the 2000 WHO report, Canada ranked 12/191 for health status, which dropped to 30/191 for the overall health performance index (WHO 2000). In 2010, Canada's ranking for health-adjusted life expectancy at birth was 5/19 and for age-standardized years of life lost 10/19 (Murray et al. 2013). Around the same time, Canada ranked 10/11 for a composite index developed by the Commonwealth Fund combining 80 indicators (Davis et al. 2014), 10/17 for another composite index developed by The Conference Board of Canada combining 11 indicators (The Conference Board of Canada 2015) and 97/190 for a composite index of health system performance controlling for social determinants of health (Gerring et al. 2013).

Canada's rankings also had a sex dimension. Canada's rankings for female indicators of health were generally lower than its rankings for male indicators of health. For example, Canada's ranking for male DALE was 5/26, while for female DALE it was 14/26; Canada's male PYLL was 6/26, while Canada's female PYLL was 12/26 (Anderson and Hussey 2001). The Conference Board of Canada (2006) found Canada second last for female lung cancer rate, and third last for female mortality from lung cancer.

Finally, in studies that tracked Canada's performance over time, there was a general trend of decline through the rankings. Canada's ranking for age-standardized years of life lost dropped from 4/19 in 1990 to 10/19 in 2010, and for life expectancy at birth from 2/19 in 1990 to 7/19 in 2010 (Murray et al. 2013). The Conference Board of Canada ranked Canada's overall health system performance at 11/24 in 2004, which dropped to 10/17 in 2015, a three-rank drop if the two rankings are put on the same scale (The Conference Board of Canada 2006, 2015).
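The “three-rank drop” can be checked with a short calculation. The sketch below assumes a simple proportional rescaling of the 2015 rank onto the 24-country scale of the earlier report; the source does not state which rescaling was used, so the function `rescale_rank` is an illustrative assumption.

```python
def rescale_rank(rank, n_from, n_to):
    """Map a rank out of n_from countries to the equivalent position out of n_to."""
    return rank * n_to / n_from


# 10/17 (2015 report) expressed on the 24-country scale of the earlier report.
equivalent_2015 = rescale_rank(10, 17, 24)

# Difference from the earlier rank of 11/24.
drop = equivalent_2015 - 11
print(round(equivalent_2015, 1), round(drop))
```

Under this assumption, 10/17 corresponds to roughly 14th of 24, which is about three places below 11/24.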

Discussion and conclusion

When analyzing ranking studies, Hewitt and Wolfson (2013) urged the research community to carefully consider the aspects of health or healthcare being assessed; the relationship between indicators within the health system; the reliability, accuracy and comparability of indicators; and the methods of ranking and analysis. Although not everything that is measured is necessarily valuable, synthesizing what is already measured can be useful. Our findings indicated that the heterogeneity in methodologies to assess ranked health system performance has led to the development of a diverse literature focused on different aspects of health system performance, yielding variable results. Some studies used the simple method of selecting one indicator as a proxy for health system performance, while others applied more intricate methods to create composite indices of health system performance. Despite the heterogeneity in methodologies, a growing literature on health system performance ranking suggests that the systematic compilation of results has the potential to add value by creating an overall picture of performance, which can offer insight for policy makers in Canada as well as the public at large.

The time lag between data collection and research publication means that published research and analyses do not reflect the performance of health systems at the time of publication. The time lag becomes more important when combined with the political cycles of government, the corresponding healthcare priorities and the lag effect of policies, as it often takes years before the impact of a policy becomes evident in health at the population level. As timely data become more widely available, analytical tools are needed to translate those data promptly into actionable knowledge.

In terms of country rankings, it is not surprising to see Canada rank higher for some indicators and lower for others, but unpacking the themes around higher and lower rankings provides further insights. Canada's lower ranking is typically observed when a composite index is used to rank health system performance. For major population health outcome indicators, Canada tends to perform well. However, further research is required to understand the reason for Canada's declining ranking among studies that use composite indices. A decline in ranking has been observed over the last two decades, but it is important to note that this decline over time does not mean that Canada's performance has worsened (Nolte and McKee 2011). In absolute terms, for example, Canada's life expectancy improved from 77.2 years in 1990 to 80.6 years in 2010 (Murray et al. 2013). Rather, other countries are improving at a faster pace than Canada. This slow rate of improvement was documented in one study, in which Canada ranked 138 out of 191 countries for improvement rate between 1960 and 2010 (Gerring et al. 2013). A sex difference in Canada's ranking, observed in various studies, may be partially explained by the poor performance of Canada's female indicators for lung cancer, which was evident in multiple studies included in this review.

Conclusions about Canada's middling performance must be interpreted carefully. Studies usually conclude that Canada is a middling performer because it performs well on some indicators and poorly on others. But middle-of-the-pack performance is a relative assessment. It does not convey a sense of Canada's absolute performance. A report by the Canadian Institute for Health Information (CIHI 2016) discusses this interpretation challenge in further detail. When countries' performances are ranked, the absolute distance between the first and second positions may not be the same as the absolute distance between the second and third positions. Thus, a middling rank conveys little about the absolute performance of a country and reflects only its relative performance within the set of comparator countries included in the analysis. It is worth noting that countries that are improving at a faster pace than Canada are aiming to be the best in the world. One of Canada's common peer countries, Australia, has been striving to match the best performers in the world, and in some cases appears to be improving at a higher rate than Canada (Ring and O'Brien 2008).

Our study had a number of limitations. We may have missed some relevant sources because of the databases we included; the restriction of the search period to 2000 through 2015; the exclusion of studies published in languages other than English; a specific focus on Canada; and bias from our definition of performance, which omits narrow aspects of performance such as equity, efficiency, effectiveness, quality, productivity, accessibility and utilization. Furthermore, it was an intentional decision on the part of the reviewers to focus on the specific rather than the broad. Therefore, this is not a comprehensive review of the literature on health system performance, but an in-depth synthesis of the literature on the ranking of health system performance. Although the review is not comprehensive, we observed that the included studies were representative and reflected the patterns and trends in the literature. It is possible that our findings were influenced by the particular expertise of the members of the team; however, we worked with an external expert panel to minimize that possibility. Unlike systematic reviews, scoping reviews often lack critical appraisal of the sources they include. Though we did not perform a systematic quality appraisal of the included sources, we have distinguished the empirical studies among them.

In conclusion, the heterogeneity of frameworks, methodologies and indicators used to rank health systems has three implications for policy. First, countries' rankings change from one study to another. Such changes should not be a cause for hasty media attention or policy decisions. Second, rankings often reflect only certain aspects of health systems. Depending on what is being ranked, it is better that policy debates focus on specific aspects of a health system rather than on the health system as a whole. Third, it is not the rank that offers the lesson, but what has been ranked and how. Ranking may be a good way to attract media attention and raise public awareness about aspects of the health system, but it has limited potential to offer valuable lessons for policy makers, health managers and frontline program implementers. Future research on international health system performance should move from studies that simply present rankings to studies that explore best practices within countries to facilitate cross-learning at the global level.

Acknowledgements

CIHI funded this scoping review as part of a project assessing Canada's ranking on the international stage in terms of PYLL. The CIHI report on the project was published in 2016 on the CIHI website (https://www.cihi.ca) (CIHI 2016). The views expressed in this paper are solely those of the authors and do not necessarily reflect those of the CIHI.

Contributor Information

Said Ahmad Maisam Najafizada, Assistant Professor, Memorial University of Newfoundland, St. John's, NL.

Thushara Sivanandan, Analyst, Canadian Institute for Health Information, Ottawa, ON.

Kelly Hogan, Senior Analyst, Canadian Institute for Health Information, Ottawa, ON.

Deborah Cohen, Adjunct Professor, University of Ottawa, Manager, Thematic Priorities, Canadian Institute for Health Information, Ottawa, ON.

Jean Harvey, Director of Canadian Population Health Initiative, Canadian Institute for Health Information, Ottawa, ON.

References

- Anderson G., Hussey P.S. 2001. “Comparing Health System Performance in OECD Countries.” Health Affairs 20(3): 219–32. Retrieved December 8, 2015. <http://www.scopus.com/inward/record.url?eid=2-s2.0-0035346671&partnerID=tZOtx3y1>.

- Arah O.A., Westert G.P., Delnoij D.M., Klazinga N.S. 2005. “Health System Outcomes and Determinants Amenable to Public Health in Industrialized Countries: A Pooled, Cross-Sectional Time Series Analysis.” BMC Public Health 5(81). 10.1186/1471-2458-5-81.

- Arah O.A., Westert G.P., Hurst J., Klazinga N.S. 2006. “A Conceptual Framework for the OECD Health Care Quality Indicators Project.” International Journal for Quality in Health Care 18(Suppl. 1): 5–13.

- Arksey H., O'Malley L. 2005. “Scoping Studies: Towards a Methodological Framework.” International Journal of Social Research Methodology 8(1): 19–32. 10.1017/CBO9781107415324.004.

- Bauer D.T., Ameringer C.F. 2010. “A Framework for Identifying Similarities among Countries to Improve Cross-National Comparisons of Health Systems.” Health and Place 16(6): 1129–35. 10.1016/j.healthplace.2010.07.004.

- Bhargava A. 2001. “WHO Report 2000.” Lancet 358(9287): 1097–98.

- Blendon R.J., Kim M., Benson J.M. 2001. “The Public versus the World Health Organization on Health System Performance.” Health Affairs 20(3): 10–20. 10.1377/hlthaff.20.3.10.

- Canadian Institute for Health Information (CIHI). 2011. Learning from the Best: Benchmarking Canada's Health System. Ottawa, ON: Author.

- Canadian Institute for Health Information (CIHI). 2013. Benchmarking Canada's Health System: International Comparisons. Ottawa, ON: Author.

- Canadian Institute for Health Information (CIHI). 2016. Canada's International Health System Performance Over 50 Years: Examining Potential Years of Life Lost. Ottawa, ON: Author; Retrieved July 31, 2017. <https://www.cihi.ca/en/health-system-performance/performance-reporting/international/pyll>.

- Davis K., Stremikis K., Squires D., Schoen C. 2014. “Mirror, Mirror on the Wall: How the Performance of the US Health Care System Compares Internationally.” Commonwealth Fund pub. no. 1755.

- Deber R. 2004. “Why Did the World Health Organization Rate Canada's Health System as 30th? Some Thoughts on League Tables.” Longwoods Review 2(1): 2–7.

- Forde I., Morgan D., Klazinga N.S. 2013. “Resolving the Challenges in the International Comparison of Health Systems: The Must Do's and the Trade-Offs.” Health Policy 112(1–2): 4–8. 10.1016/j.healthpol.2013.01.018.

- Gay J.G., Paris V., Devaux M., De Looper M. 2011. Mortality Amenable to Health Care in 31 OECD Countries – Estimates and Methodological Issues. Paris, FR: Organisation for Economic Co-operation and Development (OECD) Health Working Papers. 10.1787/18152015.

- Gerring J., Thacker S.C., Enikolopov R., Arévalo J., Maguire M. 2013. “Assessing Health System Performance: A Model-Based Approach.” Social Science & Medicine 93: 21–28. 10.1016/j.socscimed.2013.06.002.

- Handler A., Issel M., Turnock B. 2001. “A Conceptual Framework to Measure Performance of the Public Health System.” American Journal of Public Health 91(8): 1235–39. 10.2105/AJPH.91.8.1235.

- Heijink R., Koolman X., Westert G.P. 2013. “Spending More Money, Saving More Lives? The Relationship between Avoidable Mortality and Healthcare Spending in 14 Countries.” European Journal of Health Economics 14(3): 527–38. 10.1007/s10198-012-0398-3.

- Hewitt M., Wolfson M.C. 2013. “Making Sense of Health Rankings.” Healthcare Quarterly 16(1): 13–15. 10.12927/hcq.2013.23296.

- Hussey P.S., Anderson G.F., Osborn R., Feek C., McLaughlin V., Millar J., Epstein A. 2004. “How Does the Quality of Care Compare in Five Countries?” Health Affairs 23(3): 89–99. 10.1377/hlthaff.23.3.89.

- Kaltenthaler E., Maheswaran R., Beverley C. 2004. “Population-Based Health Indexes: A Systematic Review.” Health Policy 68(2): 245–55. 10.1016/j.healthpol.2003.10.005.

- Mulligan J., Appleby J., Harrison A. 2000. “Measuring the Performance of Health Systems.” BMJ 321: 191–92. 10.1136/bmj.321.7255.191.

- Murray C.J.L., Frenk J. 2010. “Ranking 37th — Measuring the Performance of the U.S. Health Care System.” New England Journal of Medicine 362(2): 98–99. 10.1056/NEJMp0910064.

- Murray C.J.L., Richards M.A., Newton J.N., Fenton K.A., Anderson H.R., Atkinson C., Bennett D. et al. 2013. “UK Health Performance: Findings of the Global Burden of Disease Study 2010.” Lancet 381(9871): 997–1020. 10.1016/S0140-6736(13)60355-4.

- Musgrove P. 2010. “Health Care System Rankings.” New England Journal of Medicine 362(16): 1546. 10.1056/NEJMc0910050.

- Navarro V. 2001. “The New Conventional Wisdom: An Evaluation of the WHO Report ‘Health Systems: Improving Performance.'” International Journal of Health Services 31(1): 23–33.

- Nolte E., Bain C., McKee M. 2006. “Diabetes as a Tracer Condition in International Benchmarking of Health Systems.” Diabetes Care 29(5): 1007–11. 10.2337/diacare.2951007.

- Nolte E., McKee M. 2011. “Variations in Amenable Mortality–Trends in 16 High-Income Nations.” Health Policy 103(1): 47–52. 10.1016/j.healthpol.2011.08.002.

- Papanicolas I., Kringos D., Klazinga N.S., Smith P.C. 2013. “Health System Performance Comparison: New Directions in Research and Policy.” Health Policy 112(1–2): 1–3. 10.1016/j.healthpol.2013.07.018.

- Reibling N. 2013. “The International Performance of Healthcare Systems in Population Health: Capabilities of Pooled Cross-Sectional Time Series Methods.” Health Policy 112(1–2): 122–32. 10.1016/j.healthpol.2013.05.023.

- Richardson J., Wildman J., Robertson I.K. 2003. “A Critique of the World Health Organisation's Evaluation of Health System Performance.” Health Economics 12(5): 355–66. 10.1002/hec.761.

- Ring I.T., O'Brien J.F. 2008. “Our Hearts and Minds–What Would It Take to Become the Healthiest Country in the World?” Medical Journal of Australia 188(6): 375; author reply 375.

- Rosen M. 2001. “Can the WHO Health Report Improve the Performance of Health Systems?” Scandinavian Journal of Public Health 29(1): 76–77.

- Smith P.C. 2002. “Measuring Health System Performance.” European Journal of Health Economics 3(3): 145–48. 10.1007/s10198-002-0138-1.

- Tchouaket E.N., Lamarche P.A., Goulet L., Contandriopoulos A.P. 2012. “Health Care System Performance of 27 OECD Countries.” International Journal of Health Planning and Management 27(2): 104–29. 10.1002/hpm.1110.

- The Conference Board of Canada. 2006. Healthy Provinces, Healthy Canadians: A Provincial Benchmarking Report. Ottawa, ON: Author.

- The Conference Board of Canada. 2015. International Ranking: Canada Benchmarked against 15 Countries. Ottawa, ON: Author.

- Veillard J., McKeag A.M., Tipper B., Krylova O., Reason B. 2013. “Methods to Stimulate National and Sub-National Benchmarking through International Health System Performance Comparisons: A Canadian Approach.” Health Policy 112(1–2): 141–47. 10.1016/j.healthpol.2013.03.015.

- Verguet S., Jamison D.T. 2013. “Performance in Rate of Decline of Adult Mortality in the OECD, 1970–2010.” Health Policy 109(2): 137–42. 10.1016/j.healthpol.2012.09.013.

- Wagstaff A. 2002. “Reflections on and Alternatives to WHO's Fairness of Financial Contribution Index.” Health Economics 11(2): 103–15. 10.1002/hec.685.

- Wibulpolprasert S., Tangcharoensathien V. 2001. “Health Systems Performance – What's Next?” Bulletin of the World Health Organization 79(6): 489.

- World Health Organization (WHO). 2000. The World Health Report 2000 – Health Systems: Improving Performance. Geneva, CH: Author.