Keywords: sensory encoding, bimanual movements, goal-oriented hand movements, kinesthesia, sensory transformations

Abstract

To perform goal-oriented hand movement, humans combine multiple sensory signals (e.g., vision and proprioception) that can be encoded in various reference frames (body centered and/or exo-centered). In a previous study (Tagliabue M, McIntyre J. PLoS One 8: e68438, 2013), we showed that, when aligning a hand to a remembered target orientation, the brain encodes both target and response in visual space when the target is sensed by one hand and the response is performed by the other, even though both are sensed only through proprioception. Here we ask whether such visual encoding is due 1) to the necessity of transferring sensory information across the brain hemispheres, or 2) to the necessity, due to the arms’ anatomical mirror symmetry, of transforming the joint signals of one limb into the reference frame of the other. To answer this question, we asked subjects to perform purely proprioceptive tasks in different conditions: Intra, the same arm sensing the target and performing the movement; Inter/Parallel, one arm sensing the target and the other reproducing its orientation; and Inter/Mirror, one arm sensing the target and the other mirroring its orientation. Performance was very similar between Intra and Inter/Mirror (conditions not requiring joint-signal transformations), while both differed from Inter/Parallel. Manipulation of the visual scene in a virtual reality paradigm showed visual encoding of proprioceptive information only in the latter condition. These results suggest that the visual encoding of purely proprioceptive tasks is not due to interhemispheric transfer of the proprioceptive information per se, but to the necessity of transforming joint signals between mirror-symmetric limbs.

NEW & NOTEWORTHY Why does the brain encode goal-oriented, intermanual tasks in a visual space, even in the absence of visual feedback about the target and the hand? We show that the visual encoding is not due to the transfer of proprioceptive signals between brain hemispheres per se, but to the need, due to the mirror symmetry of the two limbs, of transforming joint angle signals of one arm into different joint signals of the other.

To plan and control movements, the brain can potentially use multiple sources of sensory information. For instance, visual signals inform the brain about the environment and the body’s relative position in space, while proprioception, i.e., the ensemble of skin, muscle, and joint signals, provides information about the position, movement, and exerted efforts of the body segments (Proske and Gandevia 2012). Several studies have shown that, when performing goal-oriented hand movements, the brain uses such sources of information in a statistically optimal fashion (Bays and Wolpert 2007; Faisal and Wolpert 2009; Fetsch et al. 2012; Ghahramani et al. 1997; Körding and Wolpert 2004; Rohe and Noppeney 2016; Sabes 2011; van Beers et al. 1996, 1999). For instance, it has been demonstrated experimentally and theoretically that, to control hand movements, the brain privileges direct comparisons between the information concerning the moving hand and the target position/orientation (Blouin et al. 2014; Sarlegna and Sainburg 2007; Sober and Sabes 2005; Tagliabue and McIntyre 2011). As a consequence, if the hand and target position can be sensed through the same sensory modality, the brain would tend to avoid using other signals requiring sensory transformations. Thus, if reaching to a visual target with the hand in view, the task will be represented in visual coordinates, while blindly moving the hand to a remembered position (e.g., reaching for your car’s stick shift while keeping your eyes on the road) would be carried out in a proprioceptive reference frame. Other studies, however, have shown evidence for the use of visual encoding of reaching tasks even when both target and hand position could be sensed only through proprioception (Jones et al. 2010; McGuire and Sabes 2009; Pouget et al. 2002; Sarlegna and Sainburg 2007; Sober and Sabes 2005). How to reconcile these different observations remains an open question.

We tackled this apparent contradiction in a specific experiment in which the subjects had to sense a hand orientation, remember it, and later reproduce that same orientation without visual feedback of the hand (Tagliabue and McIntyre 2013). The subjects performed the task in two different conditions. In the first condition, a replica of a previous task in which we observed a purely proprioceptive encoding (Tagliabue and McIntyre 2011), the subject used the same hand to sense and then reproduce the orientation. In the second condition, subjects had to reproduce with one hand the remembered orientation that was sensed with the other. This latter task is typical of other paradigms for which a visual encoding has previously been observed (Jones et al. 2010; McGuire and Sabes 2009; Pouget et al. 2002; Sarlegna and Sainburg 2007; Sober and Sabes 2005). Our results showed that the apparently contradictory results found in the literature could be reconciled by asserting that it is the use of two different hands to sense and reach the target that induces a visual encoding of the available proprioceptive information. Supported by the results of a statistical optimization model, we concluded that the necessity of performing interlimb transformations induces a visual representation of otherwise purely proprioceptive tasks, ostensibly because this improves movement precision. The rationale for this conclusion stems from the fact that sensorimotor transformations add variability to the intrinsic noise of the visual or proprioceptive sensors. According to principles of maximum likelihood, when multiple channels of noisy information are combined in an optimal fashion, the resulting signal is less noisy than any of the component channels, provided that the noise in the different channels is statistically independent from each other.
Thus, if there exist multiple, parallel pathways that allow one to compare target to hand, it makes sense to use all of them so that the effects of noise introduced by each individual pathway can be reduced. On the other hand, comparing the posture of the hand to the remembered posture of the same hand does not, a priori, require any transformations to compare target with response. In this case, the central nervous system achieves the theoretical optimum by avoiding sensorimotor transformations altogether, carrying out the task entirely in the intrinsic frame of the proprioceptive sensors themselves (Tagliabue and McIntyre 2013).
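The variance argument can be made concrete with a minimal sketch of inverse-variance (maximum-likelihood) weighting for independent Gaussian estimates. The function name `combine_cues` and all numerical values are illustrative assumptions and are not quantities taken from the study.

```python
def combine_cues(estimates, variances):
    """Maximum-likelihood fusion of independent Gaussian estimates.

    Each estimate is weighted by the inverse of its variance; the fused
    variance is the inverse of the summed weights, so it can never exceed
    the smallest input variance.
    """
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    fused = sum(w * x for w, x in zip(weights, estimates)) / total
    return fused, 1.0 / total


# Hypothetical example: two parallel pathways comparing hand and target.
estimates = [10.0, 14.0]   # deg; e.g., a proprioceptive and a visual comparison
variances = [16.0, 16.0]   # deg^2; sensor noise plus transformation noise (assumed)
fused, fused_var = combine_cues(estimates, variances)
print(fused, fused_var)
```

With two equally noisy pathways the fused variance is half that of either pathway alone. If the noise in the two pathways were correlated, the benefit would shrink, which is why the independence condition matters.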

The necessity, or not, of performing transformations appears, therefore, to be a key factor in determining when the central nervous system will carry out sensorimotor tasks in one set of coordinate frames or another. This theoretical framework adequately predicts the empirical results from our previous comparison of an inter- vs. intramanual task described above, because the former required transformations while the latter did not (Tagliabue and McIntyre 2013, 2014). But this reasoning does not provide the full story. In our previous intermanual task, subjects not only had to transfer the proprioceptive signals between brain hemispheres, they also had to map joint angles of one arm into different joint angles of the other arm. For instance, a target inclination sensed through a left-hand supination had to be transformed into a right-hand pronation to align the two hands in space, i.e., to make them parallel, as required by the task instructions. Therefore, to reproduce with one hand the orientation of a target sensed with the other, subjects could not just reproduce the intrinsic wrist, elbow, and shoulder angles, as this would have led to a mirror symmetric (i.e., nonparallel) hand orientation with respect to the subject’s sagittal plane. Thus our previous intermanual orientation task differed from the intramanual task in two fundamentally different ways: interhemispheric transfer of information and interlimb transformation of joint configurations. Further experiments were, therefore, needed to disentangle these confounding factors.
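The difference between copying joint angles and matching spatial orientation can be illustrated with a deliberately simplified, single-joint sketch. It assumes a hypothetical sign convention (supination positive; hand roll positive when clockwise from the subject's viewpoint), under which right-hand supination produces a clockwise roll and left-hand supination a counterclockwise one. The names `SIDE_SIGN`, `spatial_roll`, `joint_angle_for`, and `right_hand_response` are illustrative only, and the real task also involves elbow and shoulder angles.

```python
# Assumed convention: +1 means supination rolls the hand clockwise (subject's view).
SIDE_SIGN = {"right": 1.0, "left": -1.0}


def spatial_roll(side, supination_deg):
    """Roll of the hand in space produced by a given pronosupination angle."""
    return SIDE_SIGN[side] * supination_deg


def joint_angle_for(side, roll_deg):
    """Pronosupination angle needed to obtain a given roll in space."""
    return SIDE_SIGN[side] * roll_deg


def right_hand_response(left_supination_deg, task):
    """Right-hand joint angle required after sensing the target with the left hand."""
    target_roll = spatial_roll("left", left_supination_deg)
    if task == "parallel":   # same orientation in space
        return joint_angle_for("right", target_roll)
    if task == "mirror":     # orientation reflected about the sagittal plane
        return joint_angle_for("right", -target_roll)
    raise ValueError(f"unknown task: {task}")


# A 30 deg left-hand supination (hypothetical value):
print(right_hand_response(30.0, "parallel"))  # negative value, i.e., a pronation
print(right_hand_response(30.0, "mirror"))    # same joint angle as the left hand
```

Under these assumptions, the mirror task lets the right hand reuse the left-hand joint angle unchanged, whereas the parallel task requires a sign inversion, i.e., a transformation of the joint signal.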

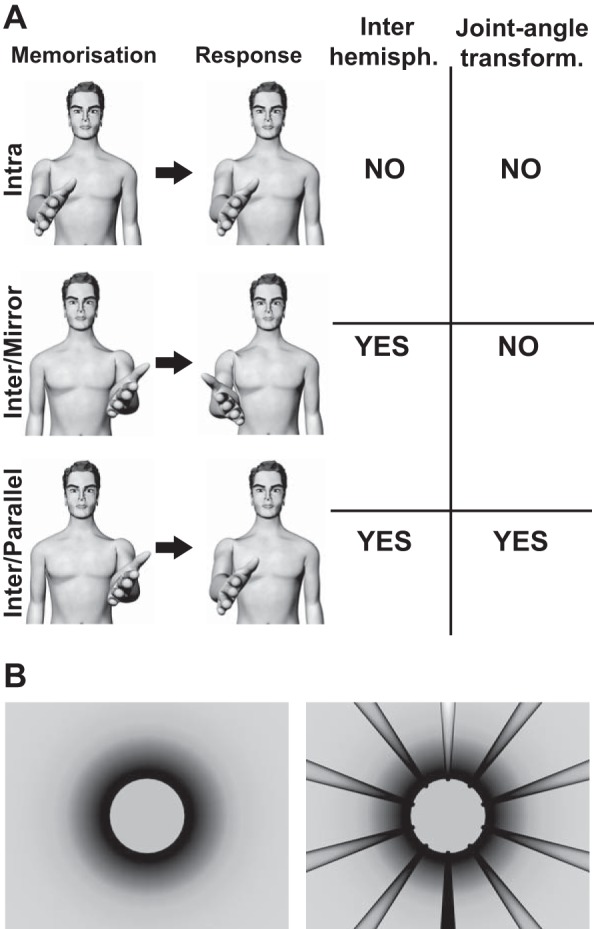

Here we introduce a new task to our series of studies to address this open question. Instead of matching the absolute orientation of the hands, as in both our previous intramanual task (which we will hereafter refer to as our Intra task) and our previous intermanual task (designated Inter/Parallel below), the new task consists of mirroring with one hand the orientation of the target sensed with the other hand (referred to as Inter/Mirror below). In terms used within the literature on proprioceptive sense (cf. Goble 2010), our Intra task corresponds to an “ipsilateral matching task,” whereas our Inter/Mirror task involves “contralateral remembered matching.” The latter requires interhemispheric transfer of proprioceptive information, but still allows for direct comparison of joint-related proprioceptive signals. Our third condition, i.e., Inter/Parallel, is distinct from the other two because direct comparison of joint positions cannot be used to achieve the task, for the reasons described above, and as such does not constitute a “joint position matching task” at all, although it certainly does qualify as a proprioceptive matching task. All three tasks include a memory interval, raising the possibility that factors arising from the internal storage of proprioceptive information might come into play (Carrozzo et al. 2002; Goble 2010; McIntyre et al. 1998). They differ, therefore, primarily in the need for interhemispheric transfer (Inter/Parallel and Inter/Mirror) or not (Intra) and the ability to directly compare joint angles (Inter/Mirror and Intra), or not (Inter/Parallel), as summarized in Fig. 1. Comparison of the three conditions (Intra, Inter/Mirror, and Inter/Parallel) allows us to discriminate whether the visual encoding of purely proprioceptive tasks is induced by the interhemispheric sensory transfer per se, or by the necessity of transforming intrinsic joint signals to another representation or reference frame to achieve the task.

Fig. 1.

Experimental conditions. A, left: in the Intra condition, the subject senses with the right hand the target orientation, and after a delay (during which the hand is lowered) he/she reproduces the orientation with the same hand. In the Inter/Mirror condition, the subject senses the target orientation with the left hand, and after a delay he/she mirrors the memorized orientation with the right hand. In the Inter/Parallel condition, the target is sensed with the left hand, and its orientation is reproduced with the right hand. Right: a table reports whether each of the experimental conditions requires an interhemispheric transfer of the proprioceptive information and/or the transformation of the joint signals. B: the visual environment shown to the subject in the head-mounted display consisted of a cylindrical tunnel. During half of the experiment, the tunnel walls had no landmarks (left image), whereas, in the other half, visual landmarks were added (right image).

To test these alternative hypotheses we, therefore, performed two experiments using a virtual reality setup. In a first experiment, the subjects performed a purely proprioceptive task consisting of reproducing a target orientation felt by a hidden hand in the three different conditions described above: Intra, Inter/Mirror, and Inter/Parallel (see Fig. 1A). In this experiment, we also probed for the use of visual encoding by simply observing the effect of adding or removing visual scene information (Fig. 1B), the rationale being that the presence of environmental visual information should improve accuracy and precision in the conditions in which the brain uses a visual encoding of the task, as shown in previous studies on reaching movements (Diedrichsen et al. 2004; Obhi and Goodale 2005). A second experiment was performed to specifically quantify the visual encoding of the proprioceptive information. To this end, we compared the amplitude of the deviation of the subjects’ motor responses due to an artificially imposed tilt of the visual scene in the Inter/Mirror and Inter/Parallel conditions. Here, the tilt of the visual information should affect the subjects’ motor responses more in the task where the sensory weighting of the visual information is greater (Sarlegna and Sainburg 2007; Sober and Sabes 2005; Tagliabue and McIntyre 2011; Whitney et al. 2003).

METHODS

Ethics Statement

The experimental protocol was approved by the Internal Review Board of University Paris Descartes (Comité de Personnes Ile-de-France II, Institutional Review Board registration no. 00001073, protocol no. 20121300001072), and all participants gave written, informed consent in line with the Declaration of Helsinki.

Experimental Setup

For the present experiments, we used the same experimental setup employed in our previous work investigating the differences between Intra and Inter proprioceptive tasks (Tagliabue and McIntyre 2013). The system consisted of several components: an active-marker motion-analysis system (CODAmotion; Charnwood Dynamics) used for real-time recording of the three-dimensional position of 27 infrared LEDs (sub-millimeter accuracy, 200-Hz sampling frequency). Eight markers were distributed ~10 cm apart on the surface of stereo virtual reality goggles (nVisor sx60, NVIS) worn by the subjects; eight on the surface of tools (350 g, isotropic inertial moment around the roll axis) that were attached to each of the subjects’ hands; and three attached to a fixed reference frame placed in the laboratory.

The three-dimensional position of the infrared markers was used to estimate in real time the position and the orientation of the subject’s viewpoint and hands and thus to update accordingly the images shown to him/her in the virtual reality goggles. The virtual environment shown to the subjects through the goggles consisted of a cylindrical horizontal tunnel (Fig. 1B). Longitudinal marks parallel to the tunnel axis could be added on the walls to help the subjects to perceive their own spatial orientation in the virtual world. The fact that the marks went from white in the ceiling to black on the floor facilitated the identification of the visual vertical.

Experiment 1 (Intra, Inter/Mirror, Inter/Parallel)

Experimental procedure.

The subject was seated comfortably in a chair with the head upright and the arms resting alongside the body. At the beginning of each trial, the main phases of which are depicted in Fig. 2A, an auditory command asked the subject to raise a hand (the left for the Inter tasks and the right for the Intra one) and the color of the virtual environment changed depending on the hand pronosupination angle. The color changed from red to green as the hand approached the orientation that had to be memorized (target). After 2.5 s, during which he/she actively explored the desired orientation (memorization time), the color of the virtual environment became no longer sensitive to the hand orientation, and the subject was instructed to lower the hand along his/her body. After a 5-s delay, an audio instruction was given to the subject to raise the right hand and align it to the target orientation. Once the subject was satisfied with his/her motor response, he/she validated the trial by pressing a foot-pedal. Since the orientation of the hand was never rendered in the virtual reality goggles, subjects could use only proprioceptive information both to memorize the target and to control the hand movement. For this reason, the task is defined as purely proprioceptive.

Fig. 2.

Experimental paradigms for experiments 1 and 2. For both experiments, the condition Inter/Parallel is used as an example. In this graphical representation, the images displayed to the subject through the virtual reality goggles are represented in a square placed in front of the avatar shown in the figures. Note that, for illustrative purpose, the hand was drawn; however, the subject’s hand was never rendered in the virtual reality goggles, and the head-mounted display prevented direct visual observation of the hand’s orientation in space. A: experiment 1. During the memorization phase, the subject rotated his/her hand in front of him/her, and the virtual environment changed color as a function of the hand pronosupination. The subject had to memorize the hand orientation corresponding to the green color. After memorization, the subject was asked to lower the arm and wait for 5 s. After the delay, the subject had to raise his/her hand and reproduce the memorized orientation. B: experiment 2. The paradigm was the same as in experiment 1, but, during the 5-s delay after memorization, the subject performed an audio-controlled 15° head rotation and responded while keeping the head tilted. In 50% of the trials, a gradual, imperceptible conflict was applied: the visual scene was tilted with respect to gravity by an angle equal to −0.6 times the head inclination, so that the visual and gravitational verticals no longer matched.

The three experimental conditions (Intra, Inter/Mirror, and Inter/Parallel) represented in Fig. 1 were tested in distinct blocks of trials. All three conditions were also tested in two different visual environments with the aim of detecting the effects of external visual references in each condition. In one condition, no visual landmarks were provided, whereas in the other visual landmarks were shown to the subject (see Fig. 1B).

For each experimental condition and visual environment, the subjects performed 21 trials, 3 for each of the 7 target orientations (−45°, −30°, −15°, 0°, +15°, +30°, +45°), for a total of 126 trials.
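As a quick check of the design arithmetic, the following sketch enumerates the trials of experiment 1. The within-block ordering of targets is not specified here, so the list is a plain enumeration rather than the presentation order actually used; the variable names are illustrative.

```python
from itertools import product

CONDITIONS = ("Intra", "Inter/Mirror", "Inter/Parallel")
ENVIRONMENTS = ("no landmarks", "landmarks")
TARGETS_DEG = (-45, -30, -15, 0, 15, 30, 45)
REPETITIONS = 3

# One entry per trial: (condition, visual environment, target orientation).
trials = [
    (condition, environment, target)
    for condition, environment in product(CONDITIONS, ENVIRONMENTS)
    for target in TARGETS_DEG
    for _ in range(REPETITIONS)
]

trials_per_block = len(TARGETS_DEG) * REPETITIONS
print(trials_per_block, len(trials))
```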

Participants.

Twenty-four volunteers (12 men, 12 women) participated in this experiment, age 27 ± 8 yr (mean ± SD). All subjects performed the task in all three experimental conditions, with and without visual landmarks. We compensated for possible order effects for task condition and visual environment by randomizing among subjects the order of the three experimental conditions (6 possible combinations) and of the two visual environments.

Data analysis.

Figure 3 shows simulated data of typical responses provided by a subject in one condition, to illustrate the analysis methods. Responses to each target are plotted against the target’s orientation, and the dashed line shows the best fit linear regression through the ensemble of individual responses. Responses were analyzed in terms of global bias, accuracy, precision, and contraction of the responses around the vertical (McIntyre and Lipshits 2008; Tagliabue and McIntyre, 2011), as detailed below.

Fig. 3.

Data analyses. Simulated data showing typical data for a single subject’s responses, θ, to different target orientations, Θ. For each target, three responses were provided by the subject. For each target the mean response, $\bar{\theta}_t$, is represented (squares). $\bar{\theta}_t$ was then used to compute the global response Bias (small in this example), the average response accuracy, Accu, as well as the response contraction, Contr, toward the vertical. To estimate response precision (variability), the linear regression of all 21 responses is performed (dashed line), then for each response the residual, res, from the regression line is computed. The response precision is associated with the response dispersion around the regression line: root mean squared residual.

Bias quantifies whether the subject’s responses were, on average, globally rotated clockwise or counterclockwise, and it is computed as follows. First, as shown in Fig. 3, for each of the seven targets ($\Theta_t$) the mean ($\bar{\theta}_t$) of the three corresponding responses, $\theta_{t,i}$, was computed, as well as the corresponding mean response error, $\bar{\theta}_t - \Theta_t$, often referred to as the signed constant error.

Then the average global Bias was computed as the algebraic mean across targets of signed constant errors:

$$\mathrm{Bias} = \frac{1}{7}\sum_{t=1}^{7}\left(\bar{\theta}_t - \Theta_t\right)$$

In Fig. 3, one can see that the mean response errors for positive and negative targets tended to compensate each other in this example, resulting in a small global Bias of the responses.

We characterized the overall accuracy (Accu) by computing the average magnitude of the constant error across the seven targets:

$$\mathrm{Accu} = \frac{1}{7}\sum_{t=1}^{7}\left|\bar{\theta}_t - \Theta_t\right|$$

In the example reported in Fig. 3, although the global rotational Bias across all targets is small, the amplitude of target-specific errors is significant.

Orientation reproduction tasks are typically affected by responses’ attraction to, or repulsion from, specific axes (McIntyre and Lipshits 2008; Tagliabue and McIntyre 2011), notably the vertical axis. This phenomenon can be characterized by the term “local distortion” (McIntyre et al. 1998; McIntyre and Lipshits 2008), which is a measure of whether responses to nearby targets are spaced closer together (contraction) or farther apart (expansion) compared with the distance between the targets themselves. In the specific case here, contraction around the vertical axis can also be measured by how much responses to targets on either side of zero orientation were deviated toward zero, i.e., in the positive direction for negative targets and vice versa. Thus, to quantify the local distortion around zero, we computed the response contraction (Contr), as we did in our previous publications (Tagliabue and McIntyre 2011):

$$\mathrm{Contr} = \frac{1}{7}\sum_{t=1}^{7} -\mathrm{sign}(\Theta_t)\left(\bar{\theta}_t - \Theta_t\right)$$

where $\mathrm{sign}(\Theta_t) = 1$ for $\Theta_t > 0$; $\mathrm{sign}(\Theta_t) = -1$ for $\Theta_t < 0$; and $\mathrm{sign}(\Theta_t) = 0$ for $\Theta_t = 0$. In the example of Fig. 3, the responses are characterized by an attraction toward the vertical and thus a positive value of Contr.

To quantify the responses’ precision (Prec), for each subject we computed the root mean square of the residuals (res) around the best fit regression line passing through the points (see Fig. 3):

$$\mathrm{Prec} = \sqrt{\frac{1}{21}\sum_{t=1}^{7}\sum_{i=1}^{3}\mathrm{res}_{t,i}^{2}}$$

This technique allowed for a robust estimation of the variability despite the fact that for each target orientation the subject provided only three responses. As shown in the example of Fig. 3, this procedure allowed us to obtain an estimation of Prec that is independent of the global Bias and the contraction/expansion of the responses, because those two characteristics are captured by the regression line, yet uses all 21 data points. Note that systematic patterns of errors that vary nonlinearly with target orientation could potentially affect this quantification of Prec, because they would not be captured by the linear regression. To show that Prec was not affected by such patterns of errors, we verified the lack of significant effects of the target orientation on the residuals (res) from the regression.
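The four measures can be sketched in a few lines of Python, following the verbal definitions above. This is a minimal illustration and not the authors' analysis code: the names `fit_line` and `analyze` are hypothetical, Contr is assumed to be averaged over all seven targets, Prec is taken as the plain root mean square of the residuals, and the example data are synthetic.

```python
import math
from statistics import mean


def sign(x):
    """Return 1, -1, or 0 according to the sign of x."""
    return (x > 0) - (x < 0)


def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def analyze(responses):
    """responses: dict mapping target orientation (deg) -> list of responses (deg)."""
    targets = sorted(responses)
    # Signed constant error per target: mean response minus target.
    err = {t: mean(responses[t]) - t for t in targets}
    bias = mean([err[t] for t in targets])
    accu = mean([abs(err[t]) for t in targets])
    contr = mean([-sign(t) * err[t] for t in targets])
    # Precision: RMS residual around the regression through all responses.
    xs = [t for t in targets for _ in responses[t]]
    ys = [r for t in targets for r in responses[t]]
    slope, intercept = fit_line(xs, ys)
    res = [y - (slope * x + intercept) for x, y in zip(xs, ys)]
    prec = math.sqrt(mean([r ** 2 for r in res]))
    return {"Bias": bias, "Accu": accu, "Contr": contr, "Prec": prec}


# Synthetic subject: responses contracted toward vertical (slope 0.8),
# shifted by 2 deg, with a +/-1 deg spread around each mean response.
example = {t: [0.8 * t + 2 + d for d in (-1.0, 0.0, 1.0)]
           for t in (-45, -30, -15, 0, 15, 30, 45)}
metrics = analyze(example)
print(metrics)
```

Because the regression line absorbs both the 2° shift and the contraction in this synthetic example, Prec reflects only the ±1° spread, which illustrates why the measure is independent of Bias and Contr.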

Statistical analyses.

An ANOVA for repeated measures was performed on the four parameters (Bias, Accu, Contr, Prec). The ANOVA model used the task type (Intra, Inter/Mirror, or Inter/Parallel) and visual scene condition (no visual landmarks, visual landmarks) as independent within-subject factors. Newman-Keuls post hoc tests were performed to assess differences between two specific conditions whenever the ANOVA showed significant effects or interactions between independent factors.

Experiment 2 (Sensory Weighting Quantification: Inter/Mirror vs. Inter/Parallel)

Experimental procedure.

To quantify the importance given to environmental visual information as a function of task, we performed an additional experiment to compare the reliance on the visual field in the Inter/Mirror vs. Inter/Parallel conditions.

Procedurally, the only difference for the subject with respect to experiment 1 was that, during the 5-s delay period after memorization, he or she had to perform a controlled 15° lateral head tilt to the right or to the left, depending on the trial (Fig. 2B). To guide subjects to the desired inclination of the head, audio feedback was provided: a sound with a left-right balance corresponding to the direction of the desired head inclination decreased in volume as the head approached 15°. If the subject could not extinguish the sound within 5 s, the trial was interrupted and was repeated later on. Once he/she reached the desired head inclination, after the 5-s delay period (including the head roll movement), an auditory signal was given to the subject to raise the right hand to respond. The Inter/Parallel condition replicates the “INTER-manual” condition presented in our previous study (Tagliabue and McIntyre 2013).

Unbeknownst to the subject, however, these trials also differed from experiment 1 by the introduction of an artificially induced conflict between visual and proprioceptive information about the head tilt. Tracking the virtual reality goggles was normally used to hold the visual scene stable with respect to the real world during movements of the head. But in 50% of the trials, we generated a gradual, imperceptible conflict such that, when the head rotated, the subject received visual information corresponding to a larger tilt. The amplitude of the angle between the visual vertical and gravity varied proportionally (by a factor of 0.6) with the actual head tilt with respect to gravity, so that, when the head was straight, there was no conflict, and when the head was tilted to 15°, the conflict was 9° (see Fig. 2B). At the end of the session, the experimenter explicitly interviewed the subjects about the conflict perception. None of the subjects in this experiment reported having noticed the tilt of the visual scene.
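The conflict geometry can be sketched as follows. The gain of −0.6 is taken from the Fig. 2 caption; the function names are hypothetical, and the second function reflects an interpretation (head tilt relative to the tilted scene) rather than a quantity reported in the text.

```python
CONFLICT_GAIN = -0.6  # scene tilt per degree of head tilt (Fig. 2 caption)


def scene_tilt_deg(head_tilt_deg, conflict):
    """Tilt of the visual scene with respect to gravity for a given head tilt."""
    return CONFLICT_GAIN * head_tilt_deg if conflict else 0.0


def visual_head_tilt_deg(head_tilt_deg, conflict):
    """Head tilt relative to the visual scene (assumed interpretation)."""
    return head_tilt_deg - scene_tilt_deg(head_tilt_deg, conflict)


for head_tilt in (0.0, 15.0, -15.0):
    print(head_tilt,
          scene_tilt_deg(head_tilt, conflict=True),
          visual_head_tilt_deg(head_tilt, conflict=True))
```

Under this reading, a 15° head tilt produces a 9° scene tilt in the opposite direction, so that vision signals a head tilt larger than the real one, while an upright head produces no conflict.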

Participants.

Twenty subjects participated in this study: 10 men and 10 women, age 24 ± 3 yr (mean ± SD). All subjects performed both experimental conditions, and, to compensate for possible order effects, one-half of them started with the Inter/Mirror condition, and the other half with the Inter/Parallel condition. For each of the two experimental conditions, the subjects performed 56 trials: 2 for each combination of 7 target orientations (−45°, −30°, −15°, 0°, +15°, +30°, +45°), 2 head inclinations (±15°), and 2 levels of conflict (with and without conflict).

Data analysis.

As in Tagliabue and McIntyre (2013), the subjects’ recorded responses, θ, were analyzed in terms of errors (Err) made in reproducing the memorized target orientations, Θ, so that, for the ith response to a target t, the error was $\mathrm{Err}_{t,i} = \theta_{t,i} - \Theta_t$. To focus the analyses on the effect of the sensory conflict, we first corrected for any global rotation of responses that might occur due to possible Müller or Aubert effects (for review, see Howard 1982), independent from the tilt of the visual scene in the conflict situation. To this end, we subtracted the global response Bias after left head tilts without sensory conflict from all Err values for trials performed with the head tilted to the left (with and without conflict), and the same was done for the trials with right head tilts, on a subject-by-subject basis. By subtracting the potential response Biases associated with the head tilts, we were able to analyze together the results of the trials with right and left tilt of the head. Then we subtracted the mean of all responses without conflict from each Err associated with each specific trial with conflict, and we normalized this difference by the actual rotation of the visual scene.

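$$\mathrm{dev}_{i} = \frac{\mathrm{Err}_{i}^{\mathrm{conflict}} - \overline{\mathrm{Err}}^{\,\mathrm{no\ conflict}}}{\varphi_{i}} \tag{1}$$

where $\varphi_{i}$ is the actual rotation of the visual scene in conflict trial $i$, and $\overline{\mathrm{Err}}^{\,\mathrm{no\ conflict}}$ is the mean error over the trials without conflict.

Next, the mean value of these normalized deviations was computed, for each subject and for each condition, over the $n$ trials with conflict.

$$\overline{\mathrm{dev}} = \frac{1}{n}\sum_{i=1}^{n}\mathrm{dev}_{i} \tag{2}$$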

The average deviation corresponds to the percentage weight given to the visual information vs. other sources of information.
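The normalization described above can be sketched as follows. The function name `visual_weight` and the numbers are hypothetical; the errors are assumed to have already been corrected for the head-tilt-related Bias, as described in the text.

```python
from statistics import mean


def visual_weight(err_conflict, scene_rotation, err_no_conflict):
    """Mean normalized response deviation for one subject and one condition.

    err_conflict:    bias-corrected errors (deg) in trials with conflict
    scene_rotation:  actual rotation of the visual scene (deg) in those trials
    err_no_conflict: bias-corrected errors (deg) in trials without conflict
    """
    baseline = mean(err_no_conflict)
    deviations = [(err - baseline) / rotation
                  for err, rotation in zip(err_conflict, scene_rotation)]
    return mean(deviations)


# Hypothetical subject whose responses follow half of the scene rotation.
weight = visual_weight(
    err_conflict=[4.5, -4.5, 5.5, -3.5],
    scene_rotation=[9.0, -9.0, 9.0, -9.0],
    err_no_conflict=[1.0, -1.0, 0.5, -0.5],
)
print(weight)
```

A value of 0 would indicate that the scene tilt had no influence on the responses, whereas a value of 1 would indicate that the responses rotated by the full tilt of the visual scene.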

We also evaluated the subjects’ precision, Prec. To this end, we applied to the trials without conflict of this data set the same linear regression technique used for experiment 1, with the only difference being that, before combining the responses following right and left head tilts, we subtracted the respective global Bias to compensate for possible Müller or Aubert effects induced by head tilts with respect to gravity. For the sake of simplicity, we have restricted the analyses of this second experiment to the parameters directly comparable with the results previously obtained in Tagliabue and McIntyre (2013), i.e., the average response deviation and Prec.

Statistical analyses.

A t-test for repeated measures was performed on the average response deviations and on the response Prec. The t-test model used the task type (Inter/Mirror or Inter/Parallel) as the single independent within-subject factor. To test whether the response deviations due to the scene tilt were significantly greater than zero, according to our a priori hypothesis, one-tailed Student’s t-tests were performed.
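For reference, the statistic of a repeated-measures (paired) t-test can be sketched with the standard library alone. The function name `paired_t` and the per-subject values are hypothetical; the P value, which requires the Student's t distribution with n − 1 degrees of freedom, is not computed here.

```python
import math
from statistics import mean, stdev


def paired_t(a, b):
    """Paired t statistic and degrees of freedom for within-subject samples."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    # Mean difference divided by its standard error (sample SD / sqrt(n)).
    t_stat = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t_stat, n - 1


# Hypothetical per-subject values in two conditions (same subject order).
inter_parallel = [3.0, 5.0, 7.0]
inter_mirror = [2.0, 3.0, 4.0]
t_stat, dof = paired_t(inter_parallel, inter_mirror)
print(t_stat, dof)
```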

RESULTS

Experiment 1 (Intra, Inter/Mirror, Inter/Parallel)

Figure 4 shows the motor responses for individual subjects for each of the three experimental conditions without visual landmarks. Although errors were nonnegligible for individual targets, the subjects globally succeeded in performing the task. Qualitatively, the Inter/Parallel condition seems characterized by larger errors with respect to the other two conditions.

Fig. 4.

Orientation of the subjects’ hand as a function of the target inclination, in the three experimental conditions. Each line represents data from a single subject, and each point represents the average of the three responses made by a given subject for one target orientation. Black points show what would be the responses corresponding to null error.

Accuracy.

As shown in Fig. 5A, subjects were most accurate (smaller values of Accu) in the Intra condition, less so in the Inter/Mirror condition, and significantly worse in the Inter/Parallel condition. Accu was indeed clearly affected by the experimental condition [F(2,46) = 27.74, P < 10⁻⁵], with Accu differing significantly between each pair of conditions. The effect sizes for each condition are reported in Table 1. The presence of visual landmarks did not seem to affect the absolute error made by the subjects [F(1,23) = 0.12, P = 0.73], and no statistically significant interaction between the effect of the experimental condition and the visual landmarks was observed [F(2,46) = 1.02, P = 0.37].

Fig. 5.

Quantification of subjects’ average performance in the three experimental conditions, in terms of accuracy (Accu; A), total average shift of the responses (Bias; B), contraction of the responses toward the vertical (Contr; C), and variability of the responses (Prec; D). Vertical whiskers represent the 0.95 confidence intervals. Asterisks represent the results of the Newman-Keuls post hoc tests (***P < 0.001; **P < 0.01).

Table 1.

Size of the condition effects for the response accuracy, global bias, contraction, and precision expressed as the average difference between the three experimental conditions

| | Accu, ° | Bias, ° | Contr, ° | Prec, ° |
|---|---|---|---|---|
| Inter/Mirror − Intra | 1.8 | −0.6 | −0.1 | 0.2 |
| Inter/Parallel − Intra | 4.4 | 2.7 | 2.9 | 2.3 |
| Inter/Parallel − Inter/Mirror | 2.7 | 3.2 | 3.0 | 2.1 |

Accu, accuracy; Contr, contraction; Prec, precision.

Bias.

In Fig. 5B, the global deviation of the subjects’ responses appears very similar in the Intra and Inter/Mirror conditions and larger, and in the opposite direction, in the Inter/Parallel condition. This latter difference, however, was not statistically significant, because no clear effect of the experimental condition resulted from the ANOVA test [F(2,46) = 2.76, P = 0.07]. As for the Accu, no significant effect of the visual landmarks on Bias was detected [F(1,23) = 1.15, P = 0.29].

Contraction.

The deviation of the subjects’ responses toward or away from the vertical was affected by the experimental condition [F(2,46) = 9.07, P < 0.001]. Responses in the Inter/Parallel condition were attracted toward the vertical [t-test Contr > 0: t(23) = 2.42, P = 0.01], and this pattern of errors significantly differed from the one seen in both Intra and Inter/Mirror conditions (post hoc tests P < 0.001, effect sizes in Table 1), where the responses tended to be slightly repelled by the vertical instead [t-test Contr < 0: t(23) = −3.24, P = 0.002 and t(23) = −1.74, P = 0.05 for Intra and Inter/Mirror, respectively]. The visual landmarks appear to have had no significant effect on Contr of responses toward the vertical [F(1,23) = 0.41, P = 0.53], and no interaction between the experimental condition and visual landmarks was observed [F(2,46) = 1.28, P = 0.29].

Precision.

The residuals around the regression line were not significantly affected by target orientation [F(6,138) = 1.41, P = 0.21]; thus the Prec parameter appears to represent the within-subject response variability well. Prec was significantly modulated by the experimental condition [F(2,46) = 41.12, P < 10⁻⁵], with a significantly larger variable error in the Inter/Parallel condition than in either the Intra or Inter/Mirror conditions (post hoc comparisons: P < 0.001). Effect sizes of the condition factor are reported in Table 1. Visual landmarks did not appear to affect response Prec [F(1,23) = 1.15, P = 0.29], nor did they significantly interact with the effect of the experimental condition [F(2,46) = 0.66, P = 0.52].

Overall, this first experiment allows for an evaluation of the new Inter/Mirror procedure compared with the Intra and the Inter/Parallel conditions. While the Inter/Mirror paradigm appears intermediate in terms of Accu, all other parameters (Bias, Contr, Prec) were similar to the Intra condition and differed significantly from the Inter/Parallel condition.

Experiment 2 (Sensory Weighting: Inter/Mirror vs. Inter/Parallel)

In this experiment, a sensory conflict was used to dissociate the visual and the gravitational verticals (see Fig. 2 and procedures) and thus estimate the reliance on a visual representation of the task. Because the methodology is similar to the one previously used, the results of the present Inter/Mirror vs. Inter/Parallel experiment can be directly compared with the results obtained in our previous Intra vs. Inter experiment (Tagliabue and McIntyre 2013).

The effect of tilting the visual scene in each of the experimental conditions is qualitatively represented in Fig. 6. In the Inter/Mirror condition, the conflict appears to have had no clear effect on the subjects’ responses, as was the case in the Intra condition from our previous study (Figs. 4 and 5A in Tagliabue and McIntyre 2013). On the other hand, in the Inter/Parallel condition, the hand of the subjects deviated significantly in the direction of the scene rotation, reproducing the same effect that we observed in the “INTER-manual” condition of Tagliabue and McIntyre (2013).

Fig. 6.

Subject responses to each target orientation in the Inter/Mirror and Inter/Parallel conditions. The responses for the trials with and without conflict are represented separately. Thick lines are the average responses (the transparent areas’ width represent the standard error) of all subjects, combining trials with left- and right-head tilt. Green arrows represent the measured responses’ deviations due to the tilt of the visual scene.

Statistical analyses of the average deviation of the responses (see Fig. 7A) fully support this qualitative observation. The t-test shows that this deviation was strongly affected by the experimental condition [F(1,19) = 14.25, P = 0.001, effect size: 22%]. Figure 7A shows that this modulation between the Inter/Mirror and Inter/Parallel conditions was very similar to the statistically significant effect that we previously obtained between the Intra and Inter conditions.

Fig. 7.

Results of the present Inter/Mirror vs. Inter/Parallel experiment (black) compared with the results obtained in our previous Intra- vs. Inter- manual experiment (light gray) (Tagliabue and McIntyre 2013). A: deviations induced by an imperceptible tilt of the visual scene. The results are expressed as a percentage of the theoretical deviation expected if subjects aligned the response with respect to the visual scene. B: response precision, Prec. Vertical whiskers represent the 0.95 confidence intervals. Asterisks represent the results of the t-test comparison with the nominal 0% value and of the t-test analyses testing the differences between the two experimental conditions (**P < 0.01; *P < 0.05).

The modulation of Prec shown in Fig. 7B is consistent with the statistically significant modulation that we observed between the analogous two conditions in experiment 1, and it is also very similar to the Prec modulation between the Intra and Inter conditions observed in our previous work (Tagliabue and McIntyre 2013). The within-subject variability indeed increased significantly between the Inter/Mirror and Inter/Parallel conditions [F(1,19) = 5.30, P = 0.03, effect size: 1.6°].

Overall, these results confirm and extend our previous findings by demonstrating that the increased sensory weighting of the visual scene observed during the Inter/Parallel task does not depend on the use of both limbs per se. These results are further discussed below.

DISCUSSION

The present experiments aimed at better understanding which factors lead the brain to visually encode goal-oriented motor tasks in which both target and movement are sensed using proprioceptive information only. We recently demonstrated that sensorimotor tasks in which the subjects have to sense the target with one hand and reach/point to it with the other hand result in a partial representation of the task in a visual frame, as shown by an observed reliance on the orientation of the visual scene. Conversely, when this interlimb transfer of information is not required, the brain appears to avoid using visual encoding of the task (Tagliabue and McIntyre 2011, 2013). These experimental observations are consistent with the theoretical prediction that the visual reconstruction of the purely proprioceptive task can statistically improve the sensorimotor Prec only when the necessity of transferring the proprioceptive signals between the two limbs prevents direct comparison between the target and hand information. Our previous study, however, did not allow us to disentangle whether the direct comparison between the target and hand information was prevented by the necessity of transferring the proprioceptive information between hemispheres per se or by the necessity of transforming the joint angles of one arm into different angles of the other arm so as to match the orientation of the hand. Here we have presented the results of two experiments allowing us to answer this question.

Experiment 1

In the first experiment, we directly compared different quantitative assessments of alignment errors (Bias, Accu, Contr, and Prec) of subjects performing tasks requiring neither interhemispheric transfer nor joint-signal transformations (Intra), interhemispheric transfer but not joint-signal transformations (Inter/Mirror), and both interhemispheric transfers and joint-signal transformations (Inter/Parallel). Although some differences were observed between the analyzed parameters, the sensorimotor errors in the two conditions not requiring joint-signal transformations (Intra and Inter/Mirror) tended to be similar, and both appeared smaller than the Inter/Parallel condition that requires transformations of the joint angles. These results suggest that sensory signals are processed in a similar way when comparing intrinsic joint configurations, despite the fact that one task is intrahemispheric and the other is interhemispheric. Only in the condition where the proprioceptive joint signals needed to be transformed did the information appear to be processed in a different way. In particular, lower Accu and Prec in the Inter/Parallel condition than in the other two conditions is consistent with the hypothesis that a more complex processing of the sensory information is necessary in the Inter/Parallel condition. The fact that both the Intra and Inter/Mirror conditions were characterized by a slight repulsion from the vertical, which was previously shown for a purely proprioceptive task (Tagliabue and McIntyre 2011), and which differs from the slight attraction toward the vertical observed in the Inter/Parallel condition and other multimodal tasks (Tagliabue and McIntyre 2011), is also consistent with our hypothesis and provides a first clue that both Intra and Inter/Mirror tasks are encoded in a purely proprioceptive reference frame, while the Inter/Parallel task entails the integration of other sensory signals.

The greater Accu and Prec in the Inter/Mirror than in the Inter/Parallel condition observed here appears to be highly consistent with the similar findings on bimanual tracking tasks reported by Alaerts et al. (2007) and Boisgontier et al. (2014). The results of the present study seem, nonetheless, to contrast with the finding of Iandolo et al. (2015), who showed that blindfolded subjects tend to perform better when reproducing the spatial location of the contralateral hand (extrinsic condition) than when mirroring it with respect to their sagittal plane (intrinsic condition). These apparently contradicting results can be reconciled, however, by the fact that an imposed 10-cm height difference between the two hands (for hands placed on average at a 6.5-cm horizontal distance) actually prevented the mirroring of the hand positions by simply reproducing the same joint angles in the two arms. As a consequence, and in contrast to our experiment, the subjects in that experiment most likely had to perform a sensory transformation in both intrinsic and extrinsic conditions.

To understand whether the differences observed here actually correspond to the reconstruction of a visual representation of the task, we investigated whether adding or removing environmental visual landmarks would affect the motor errors made by the subjects; the rationale being that the additional visual environmental information should improve the performance mainly in the conditions where a visual encoding is used. The addition of visual landmarks in the virtual environment did not, however, produce clear improvement in the response Accu and Prec. Although we cannot exclude, of course, the possibility that visual information was not used at all in this first experiment, we believe that the lack of the effect of the landmarks could be due to an insufficient sensitivity of this experimental technique to modulations of the contribution of the visual information. Indeed, we note that all components of the reaching error tended to be reduced, although not significantly, by the presence of the visual landmarks only in the Inter/Parallel condition. This qualitative observation appears to support the hypothesis that visual information might have been used in this condition, but that its effect was not large enough to be quantified by our experimental paradigm. Experiment 2 was more effective in this regard (see below).

Experiment 2

To investigate modulations in the use of a visual representation of our proprioceptive task, we performed a second experiment in which we compared the two Inter limb conditions (Inter/Mirror and Inter/Parallel), which differ by the necessity, or not, of transforming intrinsic joint angle signals. In this experiment, we measured the deviation of the motor responses generated by an imperceptible deviation of the visual environment to quantify the relative weight given by the brain to the visual and proprioceptive representations of the task, respectively. This technique, which we extensively used in the past to show modulations of multisensory weighting (Tagliabue and McIntyre 2011, 2012, 2013, 2014, Tagliabue et al. 2013), allowed us to quantify the difference between the Inter/Mirror and Inter/Parallel conditions in terms of the importance given by the brain to the visual environment. This difference between conditions appears very similar to what we observed between the Intra and Inter/Parallel conditions in Tagliabue and McIntyre (2013) (see Fig. 7). More specifically, in the Inter/Mirror condition, subjects appeared to neglect the visual scene, as we previously observed in the Intra condition, while in the Inter/Parallel condition the relative weight given to the visual representation rose to nearly 30%. This latter result is fully consistent with what we observed in our previous study (Tagliabue and McIntyre 2013) for the Inter condition, of which the present Inter/Parallel condition is a replica.
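
The weighting measure described above can be illustrated with a minimal sketch. It assumes, following the caption of Fig. 7A, that the measured deviation is expressed as a percentage of the deviation expected if the response were aligned entirely with the visual scene. The function name, its parameters, and the numerical values are hypothetical and are not data from the study.

```python
def visual_weight_percent(response_deviation_deg, full_visual_deviation_deg):
    """Express a measured response deviation as a percentage of the
    deviation expected under a purely visual encoding of the task."""
    if full_visual_deviation_deg == 0:
        raise ValueError("the theoretical full deviation must be non-zero")
    return 100.0 * response_deviation_deg / full_visual_deviation_deg


# Hypothetical values, for illustration only (not data from the study):
# a 4.5 deg response deviation when a purely visual encoding would give 15 deg.
print(visual_weight_percent(4.5, 15.0))  # 30.0
```

Under this reading, a value near 0% corresponds to neglecting the visual scene, and a value of 100% to a response aligned entirely with it.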

The modulation of the sensory weighting observed here, and its strong similarity with the modulation between the Intra and Inter tasks shown in Tagliabue and McIntyre (2013), confirms that the reconstruction of the visual representation of purely proprioceptive tasks is not due to the interhemispheric transfer of the proprioceptive information per se, as the results of our previous work could have suggested. This modulation appears to be related rather to the necessity of transforming the proprioceptive signals relative to the intrinsic joint angles of one arm into different angles for the other arm.

If these findings are interpreted in the frame of our modular sensory-integration model (Tagliabue and McIntyre 2011, 2013, 2014), more detailed conclusions can be drawn. Our model predicts that the brain does not reconstruct representations of the movement in additional sensory modalities when a direct, unimodal comparison between the sensory information related to the target and to the moving hand is possible. It follows that the very similar results observed in the Intra and Inter/Mirror conditions, both in terms of error patterns and sensory weighting, would mean that the brain can directly match proprioceptive signals from the two different hemispheres with almost no additional processing, as if they came from the same receptors, provided that they come from homologous sensors (see Fig. 8). This conclusion could have a neural correlate in the finding of Diedrichsen and colleagues (2013), showing that, during unimanual finger movement, patterns of activity identical to those generated by contralateral mirror-symmetric movement can be observed in S1 and M1 of the ipsilateral hemisphere. One could indeed think that this kind of mirror-symmetric “resonance” between hemispheres could reflect privileged connections between interhemispheric homologous hand proprioceptive receptors and actuators.

Fig. 8.

Simplified graphical representation of putative sensory information flow during the following three different experimental conditions. Intra: the proprioceptive signals coming from the right hand supinator (Sup) and pronator (Pron) muscles during the target memorization can be directly compared with the proprioceptive signals coming from the same muscles during the response phase. To achieve the task’s goal, the brain modifies the hand orientation to minimize the difference between the corresponding target and response signals. Inter/Mirror: the proprioceptive signals coming from the left hand Sup and Pron muscles during the target memorization can be directly compared with the proprioceptive signals coming from the homologous muscles of the right hand during the response phase. The task can, therefore, be achieved as in the Intra condition. Inter/Parallel: the task cannot be achieved by simply matching the proprioceptive signals from the homologous muscles of the left and right arm. The brain has to combine the Sup and Pron signals to build a representation of the right- and left-hand orientation that can be indirectly compared. Such reconstruction of the hands’ orientation can take advantage of additional sensory signals, such as proprioceptive signals coming from other parts of the body, gravitational signals, and visual scene information, effectively performing the comparison in multiple reference frames. The red color represents the elements affected by the tilt of the visual scene. Blue and black colors represent the proprioceptive and gravitational signals, respectively, which are supposedly not affected by the sensory conflict.

On the other hand, the clear difference between the Inter/Parallel and the Inter/Mirror conditions both in terms of errors (experiment 1) and in terms of visual encoding (experiment 2) is consistent with the prediction of our model for conditions in which a direct comparison between the target and response sensory signal is not possible. In our previous works (Tagliabue and McIntyre 2013, 2014), we have shown that in this situation the statistically optimal solution (maximum likelihood principle) consists of transforming the proprioceptive signals to encode them in multiple reference frames, including, in some cases, a visual representation (Tagliabue and McIntyre 2013). Such use of the sensory signals provides, indeed, partially independent representations of the task, whose combination reduces the task-execution variability compared with what would be obtained by encoding the task in any single reference frame. The less-than-full deviation of the responses due to the tilt of the visual scene observed in the Inter/Parallel condition hence reflects the encoding of the proprioceptive signals into a visual reference frame that is combined with the encoding of the same information in other reference frames that are not affected by the tilt of the visual scene. Among these latter reference frames, there are probably egocentric (for instance trunk or head centered) encodings, as well as external encodings using gravitational information (Tagliabue and McIntyre 2012). Such multiple encoding of the information is simplistically represented in Fig. 8.
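
The variance-reduction argument can be illustrated with a minimal sketch of maximum likelihood (inverse-variance) combination. This is a simplification: it assumes fully independent Gaussian noise in each reference frame, whereas the representations discussed here are only partially independent, and it is not the full model of Tagliabue and McIntyre. The function name and all numerical values are hypothetical.

```python
def combine_ml(estimates, variances):
    """Inverse-variance weighted combination of independent Gaussian
    estimates (simplifying assumption: no correlated noise).
    Returns the combined estimate and its variance."""
    if len(estimates) != len(variances) or not estimates:
        raise ValueError("need one positive variance per estimate")
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    combined = sum(w * e for w, e in zip(weights, estimates)) / total
    return combined, 1.0 / total


# Hypothetical example: a frame unaffected by the scene tilt reports 40 deg
# (variance 4), a visual frame shifted by the tilt reports 44 deg (variance 12).
estimate, variance = combine_ml([40.0, 44.0], [4.0, 12.0])
print(round(estimate, 6), round(variance, 6))  # 41.0 3.0
```

In this hypothetical case the combined variance is lower than that of either single encoding, and the response shifts by only a quarter of the visual offset, which corresponds to the less-than-full deviation expected when a tilted visual representation is combined with representations unaffected by the tilt.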

The differences reported here between the Mirror and Parallel Inter tasks and our interpretation of this finding in terms of different information processing appear consistent with the results of several other brain imaging studies. Parallel tasks (often referred to as “anti-phase tasks” for repetitive movements) appear to activate additional brain areas (supplementary motor area, somatosensory cortex, primary motor cortex, cingulate motor area, and premotor cortex) with respect to Mirror (in-phase) tasks (Goerres et al. 1998; Immisch et al. 2001; Sadato et al. 1997; Stephan et al. 1999a, 1999b). One could speculate that these brain areas could be involved in encoding the task in additional reference frames. However, the activation of any “visual” areas, which would even better match our prediction, has not been detected. This could be due to the fact that no “useful” visual information was provided to the subject, and that fast rhythmic movements tested in these previous studies could lend themselves less easily to a visual representation with respect to the slow goal-oriented motor task we tested. Although the encoding of the proprioceptive information in multiple reference frames and their optimal combination correspond to the best sensorimotor performance, this is by definition still noisier than the direct comparison of the proprioceptive signals, such as those used in the Intra and Inter/Mirror conditions (Tagliabue and McIntyre 2013). This model prediction perfectly matches the observed increase in variability between the Inter/Parallel and the other two experimental conditions.

Intermanual Coordination

The present findings not only provide a clear answer to our initial question about the visual encoding of purely proprioceptive tasks, but could also contribute to a better understanding of the neural mechanisms underlying the coordination of bimanual cyclic movements (for review, see Swinnen 2002; Swinnen and Wenderoth 2004). Specifically, the results from our experiments show that “parallel” matching tasks tend to show higher variability than “mirror” matching tasks. Although one might question whether the quasi-static matching tasks performed here can directly speak to mechanisms of rhythmic movements, the legitimacy of transposing the present findings from discrete to rhythmic movements is supported by the fact that theoretically both movement categories can be generated by the same sensorimotor control mechanisms (Ronsse et al. 2009b). Our observations about the noisiness of parallel vs. mirror tasks, applied cautiously, give rise to new perspectives on the phenomena observed in more dynamic tasks, as we describe below.

Several studies have shown that in-phase (Mirror) rhythmic movements of the hands are significantly more stable than anti-phase (Parallel) movements, so that subjects asked to perform rapid anti-phase cyclic movements show sudden abrupt transitions to the in-phase movements, and once this transition has occurred they do not go back to the anti-phase mode (Carson 1995; Kelso 1984; Semjen et al. 1995; Swinnen et al. 1997, 1998; Yamanishi et al. 1980). Interestingly, these phenomena appear to have more sensory than motor origins (Mechsner et al. 2001; Ronsse et al. 2009a; Weigelt and Cardoso de Oliveira 2003), although the debate is not settled yet (Swinnen et al. 2003).

Several studies successfully modeled this phenomenon by describing the system as nonlinear oscillators with potential functions, as represented in Fig. 9A. In these models, in-phase movements correspond to a deep global minimum and the anti-phase movements correspond to a shallower local minimum, which disappears at high frequencies (Beek et al. 2002; Haken et al. 1985; Schöner et al. 1986). Abrupt transitions from the anti-phase to the in-phase movement can be described as a ball falling from the local minimum to the global minimum, due to modification of the potential curve profile and to some random noise/perturbation (represented as arrows in Fig. 9). While in the original model this noise was considered independent of the phase between the hands, here we have shown that the noise of the sensory system is actually greater in the Parallel than in the Mirror condition. This suggests that different levels of noise may characterize the in- and anti-phase bimanual movements. If this noise modulation is taken into account, then our results lead to an alternative system representation characterized by a potential curve with two similar minima for the in- and anti-phase movements (Fig. 9B). In this case, the ball would tend to move from the anti-phase to the in-phase condition, and not vice versa, because, in the former, but not in the latter, a large noise would tend to push it outside the local minimum. Further experiments will be needed to specifically investigate this alternative interpretation of instability phenomena during bimanual cyclic movements.
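
The shape of the potential discussed here can be explored with a minimal sketch. It assumes the standard form of the HKB potential, V(ϕ) = −a cos ϕ − b cos 2ϕ with a ≥ 0 and b > 0, for which the anti-phase state (ϕ = π) is a local minimum only when b/a > 1/4. The function names and parameter values are hypothetical, and the sketch does not simulate the noise itself.

```python
import math


def hkb_potential(phi, a, b):
    """HKB potential V(phi) = -a*cos(phi) - b*cos(2*phi)."""
    return -a * math.cos(phi) - b * math.cos(2.0 * phi)


def antiphase_is_local_minimum(a, b):
    """Anti-phase (phi = pi) is a local minimum when V''(pi) = 4b - a > 0."""
    return 4.0 * b - a > 0.0


def barrier_height(a, b, well_phi):
    """Height of the potential barrier seen from the well at well_phi
    (0 for in-phase, pi for anti-phase); 0.0 if there is no barrier."""
    if not antiphase_is_local_minimum(a, b):
        return 0.0
    # The barrier top satisfies cos(phi) = -a / (4b).
    phi_top = math.acos(-a / (4.0 * b))
    return hkb_potential(phi_top, a, b) - hkb_potential(well_phi, a, b)


# Fig. 9A-like case (hypothetical a = 1, b = 1): anti-phase well is shallower.
print(barrier_height(1.0, 1.0, math.pi), barrier_height(1.0, 1.0, 0.0))
# Fig. 9B-like extreme case (hypothetical a = 0, b = 1): two equal wells.
print(barrier_height(0.0, 1.0, math.pi), barrier_height(0.0, 1.0, 0.0))
```

With equal barrier heights, as in the second case, the potential alone gives no preferred direction of transition, so an asymmetry would have to come from a phase-dependent noise level such as the one proposed here.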

Fig. 9.

A: potential curve, V, as a function of the phase angle between the two hands, ϕ, proposed by Haken, Kelso and Bunz (HKB) (Haken et al. 1985) to represent spontaneous transitions from anti-phase (ϕ = π) to in-phase (ϕ = 0) rhythmic movements. The state of the system can be described as a ball rolling along this curve. The arrows represent intrinsic noise, which tends to perturb the system. In the HKB model, the noise did not vary as a function of ϕ. B: example of an alternative representation of rhythmic movement dynamics. As an extreme case, the in- and anti-phase movements correspond to similar minima of the potential function. The system noise is a function of ϕ; more precisely, it is larger for the anti-phase than for the in-phase movements.

Conclusions

The present findings suggest that the interhemispheric transfer of the information necessary in the task of mirroring one hand with the other does not increase sensorimotor errors per se. This suggests that the brain can directly compare the sensory signals coming from homologous muscles/receptors in the same way as signals coming from the same receptors. Moreover, we were able to show that, in agreement with the idea of optimal multisensory integration, the brain tends to build up multiple representations of a purely proprioceptive sensorimotor task (both target sensing and movement control only through proprioceptive information), including a visual representation, but only when a noisy transformation of the joint signals is necessary, and not simply when an interhemispheric transfer is involved in the task execution. Interestingly, the present findings could also contribute to a better understanding of intermanual coordination by suggesting an important role of sensory noise in the instability of anti-phase with respect to in-phase movements.

GRANTS

This work was supported by the French Space Agency (Centre National d’Etudes Spatiales).

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the author(s).

AUTHOR CONTRIBUTIONS

L.A., S.F., D.F., and M.T. performed experiments; L.A., S.F., D.F., and M.T. analyzed data; L.A., S.F., M.B., J.M., and M.T. approved final version of manuscript; M.B., J.M., and M.T. interpreted results of experiments; M.B., J.M., and M.T. edited and revised manuscript; M.T. conceived and designed research; M.T. prepared figures; M.T. drafted manuscript.

ACKNOWLEDGMENTS

We gratefully acknowledge the support of the Paris Descartes Platform for Sensorimotor Studies (Université Paris Descartes, CNRS, INSERM, Région Île-de-France) and of Patrice Jegouzo (Université Paris Descartes) for technical support.

REFERENCES

- Alaerts K, Levin O, Swinnen SP. Whether feeling or seeing is more accurate depends on tracking direction within the perception-action cycle. Behav Brain Res 178: 229–234, 2007. doi: 10.1016/j.bbr.2006.12.024. [DOI] [PubMed] [Google Scholar]

- Bays PM, Wolpert DM. Computational principles of sensorimotor control that minimize uncertainty and variability. J Physiol 578: 387–396, 2007. doi: 10.1113/jphysiol.2006.120121. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Beek PJ, Peper CE, Daffertshofer A. Modeling rhythmic interlimb coordination: beyond the Haken-Kelso-Bunz model. Brain Cogn 48: 149–165, 2002. doi: 10.1006/brcg.2001.1310. [DOI] [PubMed] [Google Scholar]

- Blouin J, Saradjian AH, Lebar N, Guillaume A, Mouchnino L. Opposed optimal strategies of weighting somatosensory inputs for planning reaching movements toward visual and proprioceptive targets. J Neurophysiol 112: 2290–2301, 2014. doi: 10.1152/jn.00857.2013. [DOI] [PubMed] [Google Scholar]

- Boisgontier MP, Van Halewyck F, Corporaal SHA, Willacker L, Van Den Bergh V, Beets IAM, Levin O, Swinnen SP. Vision of the active limb impairs bimanual motor tracking in young and older adults. Front Aging Neurosci 6: 320, 2014. doi: 10.3389/fnagi.2014.00320. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Carrozzo M, Stratta F, McIntyre J, Lacquaniti F. Cognitive allocentric representations of visual space shape pointing errors. Exp Brain Res 147: 426–436, 2002. doi: 10.1007/s00221-002-1232-4. [DOI] [PubMed] [Google Scholar]

- Carson RG. The dynamics of isometric bimanual coordination. Exp Brain Res 105: 465–476, 1995. [DOI] [PubMed] [Google Scholar]

- Diedrichsen J, Werner S, Schmidt T, Trommershäuser J. Immediate spatial distortions of pointing movements induced by visual landmarks. Percept Psychophys 66: 89–103, 2004. doi: 10.3758/BF03194864. [DOI] [PubMed] [Google Scholar]

- Diedrichsen J, Wiestler T, Krakauer JW. Two distinct ipsilateral cortical representations for individuated finger movements. Cereb Cortex 23: 1362–1377, 2013. doi: 10.1093/cercor/bhs120. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Faisal AA, Wolpert DM. Near optimal combination of sensory and motor uncertainty in time during a naturalistic perception-action task. J Neurophysiol 101: 1901–1912, 2009. doi: 10.1152/jn.90974.2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fetsch CR, Pouget A, DeAngelis GC, Angelaki DE. Neural correlates of reliability-based cue weighting during multisensory integration. Nat Neurosci 15: 146–154, 2012. doi: 10.1038/nn.2983. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ghahramani Z, Wolpert DM, Jordan MI. Computational models of sensorimotor integration. In: Advances in Psychology. Self-organization, Computational Maps, and Motor Control, edited by Morasso P and Sanguineti V. Oxford: Elsevier, 1997, p. 117–147. doi: 10.1016/S0166-4115(97)80006-4. [DOI] [Google Scholar]

- Goble DJ. Proprioceptive acuity assessment via joint position matching: from basic science to general practice. Phys Ther 90: 1176–1184, 2010. doi: 10.2522/ptj.20090399. [DOI] [PubMed] [Google Scholar]

- Goerres GW, Samuel M, Jenkins IH, Brooks DJ. Cerebral control of unimanual and bimanual movements: an H2(15)O PET study. Neuroreport 9: 3631–3638, 1998. doi: 10.1097/00001756-199811160-00014. [DOI] [PubMed] [Google Scholar]

- Haken H, Kelso JA, Bunz H. A theoretical model of phase transitions in human hand movements. Biol Cybern 51: 347–356, 1985. doi: 10.1007/BF00336922. [DOI] [PubMed] [Google Scholar]

- Howard I. Human Visual Orientation. New York: Wiley, 1982. [Google Scholar]

- Iandolo R, Squeri V, De Santis D, Giannoni P, Morasso P, Casadio M. Proprioceptive bimanual test in intrinsic and extrinsic coordinates. Front Hum Neurosci 9: 72, 2015. doi: 10.3389/fnhum.2015.00072. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Immisch I, Waldvogel D, van Gelderen P, Hallett M. The role of the medial wall and its anatomical variations for bimanual antiphase and in-phase movements. Neuroimage 14: 674–684, 2001. doi: 10.1006/nimg.2001.0856. [DOI] [PubMed] [Google Scholar]

- Jones SAH, Cressman EK, Henriques DYP. Proprioceptive localization of the left and right hands. Exp Brain Res 204: 373–383, 2010. doi: 10.1007/s00221-009-2079-8. [DOI] [PubMed] [Google Scholar]

- Kelso JA. Phase transitions and critical behavior in human bimanual coordination. Am J Physiol Regul Integr Comp Physiol 246: R1000–R1004, 1984. [DOI] [PubMed] [Google Scholar]

- Körding KP, Wolpert DM. Bayesian integration in sensorimotor learning. Nature 427: 244–247, 2004. doi: 10.1038/nature02169. [DOI] [PubMed] [Google Scholar]

- McGuire LMM, Sabes PN. Sensory transformations and the use of multiple reference frames for reach planning. Nat Neurosci 12: 1056–1061, 2009. doi: 10.1038/nn.2357. [DOI] [PMC free article] [PubMed] [Google Scholar]

- McIntyre J, Lipshits M. Central processes amplify and transform anisotropies of the visual system in a test of visual-haptic coordination. J Neurosci 28: 1246–1261, 2008. doi: 10.1523/JNEUROSCI.2066-07.2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- McIntyre J, Stratta F, Lacquaniti F. Short-term memory for reaching to visual targets: psychophysical evidence for body-centered reference frames. J Neurosci 18: 8423–8435, 1998. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mechsner F, Kerzel D, Knoblich G, Prinz W. Perceptual basis of bimanual coordination. Nature 414: 69–73, 2001. doi: 10.1038/35102060. [DOI] [PubMed] [Google Scholar]

- Obhi SS, Goodale MA. The effects of landmarks on the performance of delayed and real-time pointing movements. Exp Brain Res 167: 335–344, 2005. doi: 10.1007/s00221-005-0055-5. [DOI] [PubMed] [Google Scholar]

- Pouget A, Deneve S, Duhamel JR. A computational perspective on the neural basis of multisensory spatial representations. Nat Rev Neurosci 3: 741–747, 2002. doi: 10.1038/nrn914. [DOI] [PubMed] [Google Scholar]

- Proske U, Gandevia SC. The proprioceptive senses: their roles in signaling body shape, body position and movement, and muscle force. Physiol Rev 92: 1651–1697, 2012. doi: 10.1152/physrev.00048.2011. [DOI] [PubMed] [Google Scholar]

- Rohe T, Noppeney U. Distinct computational principles govern multisensory integration in primary sensory and association cortices. Curr Biol 26: 509–514, 2016. doi: 10.1016/j.cub.2015.12.056. [DOI] [PubMed] [Google Scholar]

- Ronsse R, Miall RC, Swinnen SP. Multisensory integration in dynamical behaviors: maximum likelihood estimation across bimanual skill learning. J Neurosci 29: 8419–8428, 2009a. doi: 10.1523/JNEUROSCI.5734-08.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ronsse R, Sternad D, Lefèvre P. A computational model for rhythmic and discrete movements in uni- and bimanual coordination. Neural Comput 21: 1335–1370, 2009b. doi: 10.1162/neco.2008.03-08-720. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sabes PN. Sensory integration for reaching: models of optimality in the context of behavior and the underlying neural circuits. Prog Brain Res 191: 195–209, 2011. doi: 10.1016/B978-0-444-53752-2.00004-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sadato N, Yonekura Y, Waki A, Yamada H, Ishii Y. Role of the supplementary motor area and the right premotor cortex in the coordination of bimanual finger movements. J Neurosci 17: 9667–9674, 1997.

- Sarlegna FR, Sainburg RL. The effect of target modality on visual and proprioceptive contributions to the control of movement distance. Exp Brain Res 176: 267–280, 2007. doi: 10.1007/s00221-006-0613-5.

- Schöner G, Haken H, Kelso JA. A stochastic theory of phase transitions in human hand movement. Biol Cybern 53: 247–257, 1986. doi: 10.1007/BF00336995.

- Semjen A, Summers JJ, Cattaert D. Hand coordination in bimanual circle drawing. J Exp Psychol Hum Percept Perform 21: 1139–1157, 1995. doi: 10.1037/0096-1523.21.5.1139.

- Sober SJ, Sabes PN. Flexible strategies for sensory integration during motor planning. Nat Neurosci 8: 490–497, 2005. doi: 10.1038/nn1427.

- Stephan KM, Binkofski F, Halsband U, Dohle C, Wunderlich G, Schnitzler A, Tass P, Posse S, Herzog H, Sturm V, Zilles K, Seitz RJ, Freund HJ. The role of ventral medial wall motor areas in bimanual co-ordination. A combined lesion and activation study. Brain 122: 351–368, 1999a. doi: 10.1093/brain/122.2.351.

- Stephan KM, Binkofski F, Posse S, Seitz RJ, Freund HJ. Cerebral midline structures in bimanual coordination. Exp Brain Res 128: 243–249, 1999b. doi: 10.1007/s002210050844.

- Swinnen SP. Intermanual coordination: from behavioural principles to neural-network interactions. Nat Rev Neurosci 3: 348–359, 2002. doi: 10.1038/nrn807.

- Swinnen SP, Jardin K, Meulenbroek R, Dounskaia N, Den Brandt MH. Egocentric and allocentric constraints in the expression of patterns of interlimb coordination. J Cogn Neurosci 9: 348–377, 1997. doi: 10.1162/jocn.1997.9.3.348.

- Swinnen SP, Jardin K, Verschueren S, Meulenbroek R, Franz L, Dounskaia N, Walter CB. Exploring interlimb constraints during bimanual graphic performance: effects of muscle grouping and direction. Behav Brain Res 90: 79–87, 1998. doi: 10.1016/S0166-4328(97)00083-1.

- Swinnen SP, Puttemans V, Vangheluwe S, Wenderoth N, Levin O, Dounskaia N. Directional interference during bimanual coordination: is interlimb coupling mediated by afferent or efferent processes. Behav Brain Res 139: 177–195, 2003. doi: 10.1016/S0166-4328(02)00266-8.

- Swinnen SP, Wenderoth N. Two hands, one brain: cognitive neuroscience of bimanual skill. Trends Cogn Sci 8: 18–25, 2004. doi: 10.1016/j.tics.2003.10.017.

- Tagliabue M, McIntyre J. Necessity is the mother of invention: reconstructing missing sensory information in multiple, concurrent reference frames for eye-hand coordination. J Neurosci 31: 1397–1409, 2011. doi: 10.1523/JNEUROSCI.0623-10.2011.

- Tagliabue M, McIntyre J. Eye-hand coordination when the body moves: dynamic egocentric and exocentric sensory encoding. Neurosci Lett 513: 78–83, 2012. doi: 10.1016/j.neulet.2012.02.011.

- Tagliabue M, McIntyre J. When kinesthesia becomes visual: a theoretical justification for executing motor tasks in visual space. PLoS One 8: e68438, 2013. doi: 10.1371/journal.pone.0068438.

- Tagliabue M, McIntyre J. A modular theory of multisensory integration for motor control. Front Comput Neurosci 8: 1, 2014. doi: 10.3389/fncom.2014.00001.

- Tagliabue M, Arnoux L, McIntyre J. Keep your head on straight: facilitating sensori-motor transformations for eye-hand coordination. Neuroscience 248: 88–94, 2013. doi: 10.1016/j.neuroscience.2013.05.051.

- van Beers RJ, Sittig AC, Denier van der Gon JJ. How humans combine simultaneous proprioceptive and visual position information. Exp Brain Res 111: 253–261, 1996. doi: 10.1007/BF00227302.

- van Beers RJ, Sittig AC, Denier van der Gon JJ. Integration of proprioceptive and visual position-information: an experimentally supported model. J Neurophysiol 81: 1355–1364, 1999.

- Weigelt C, Cardoso de Oliveira S. Visuomotor transformations affect bimanual coupling. Exp Brain Res 148: 439–450, 2003. doi: 10.1007/s00221-002-1316-1.

- Whitney D, Westwood DA, Goodale MA. The influence of visual motion on fast reaching movements to a stationary object. Nature 423: 869–873, 2003. doi: 10.1038/nature01693.

- Yamanishi J, Kawato M, Suzuki R. Two coupled oscillators as a model for the coordinated finger tapping by both hands. Biol Cybern 37: 219–225, 1980. doi: 10.1007/BF00337040.