Keywords: human, visual stimuli, vestibular stimuli, multisensory integration, psychophysics

Abstract

Visual and inertial stimuli provide heading discrimination cues. Integration of these multisensory stimuli has been demonstrated to depend on their relative reliability. However, the reference frame of visual stimuli is eye centered while inertia is head centered, and it remains unclear how these are reconciled with combined stimuli. Seven human subjects completed a heading discrimination task consisting of a 2-s translation with a peak velocity of 16 cm/s. Eye position was varied between 0° and ±25° left/right. Experiments were done with inertial motion, visual motion, or a combined visual-inertial motion. Visual motion coherence varied between 35% and 100%. Subjects reported whether their perceived heading was left or right of the midline in a forced-choice task. With the inertial stimulus the eye position had an effect such that the point of subjective equality (PSE) shifted 4.6 ± 2.4° in the gaze direction. With the visual stimulus the PSE shift was 10.2 ± 2.2° opposite the gaze direction, consistent with retinotopic coordinates. Thus with eccentric eye positions the perceived inertial and visual headings were offset ~15°. During the visual-inertial conditions the PSE varied consistently with the relative reliability of these stimuli such that at low visual coherence the PSE was similar to that of the inertial stimulus and at high coherence it was closer to the visual stimulus. On average, the inertial stimulus was weighted near Bayesian ideal predictions, but there was significant deviation from ideal in individual subjects. These findings support visual and inertial cue integration occurring in independent coordinate systems.

NEW & NOTEWORTHY In multiple cortical areas visual heading is represented in retinotopic coordinates while inertial heading is in body coordinates. It remains unclear whether multisensory integration occurs in a common coordinate system. The experiments address this using a multisensory integration task with eccentric gaze positions making the effect of coordinate systems clear. The results indicate that the coordinate systems remain separate to the perceptual level and that during the multisensory task the perception depends on relative stimulus reliability.

During everyday experience we are bombarded by multisensory stimuli. Cue integration from diverse sensory inputs is key to perception. A major part of integrating diverse sensory stimuli is reconciling the coordinate systems in which these sensory stimuli occur. A common task in which multisensory integration occurs across coordinate systems is heading discrimination using both visual and vestibular cues. Although the primary cue for self-motion is likely vestibular (Valko et al. 2012), there are also proprioceptive and visceral cues; thus in this report I refer to self-motion as inertial motion. Both optic flow (Gibson 1950; Warren and Hannon 1988) and inertial motion (Crane 2012a; Gu et al. 2007; Guedry 1974; Telford et al. 1995) provide cues to heading direction. However, the coordinates of visual and inertial heading perception are different. Neurophysiology in the ventral intraparietal area (VIP) and dorsal medial superior temporal area (MSTd) suggests visual signals in an eye-centered or retinotopic reference frame (Chen et al. 2014; Fan et al. 2015; Gu et al. 2010; Lee et al. 2011; Takahashi et al. 2007), while vestibular signals are in body coordinates (Chen et al. 2013a; Fetsch et al. 2007). A recent study demonstrated that visual heading estimates were strongly biased toward retinotopic coordinates in humans, while inertial heading estimates remained in body coordinates (Crane 2015). Thus the reference frame differences seen in MSTd and VIP persist to the level of perception.

The reference frame of visual-inertial integration is unclear. One possibility is that sensory signals are combined in a common reference frame (Cohen and Andersen 2002). This has been shown to occur for other areas of multisensory integration such as visual-auditory (Meredith and Stein 1996) and visual-somatosensory (Groh and Sparks 1996) integration. However this hypothesis seems problematic for visual-inertial integration. Although the region of the brain responsible for visual-inertial integration is not known with certainty, there are neurons in the VIP and MSTd that respond to both visual and inertial headings (Bremmer et al. 2002; Gu et al. 2006, 2007, 2008; Page and Duffy 2003), suggesting that these areas play a role in visual-inertial integration (Fetsch et al. 2007, 2013) as well as the determination of heading perception (Yu et al. 2017).

Although prior studies have found multisensory integration to be near optimal based on the relative reliability of the stimuli (i.e., Bayesian), there have been significant deviations from ideal behavior (Battaglia et al. 2003; Knill and Saunders 2003; Odegaard et al. 2015; Oruç et al. 2003; Rosas et al. 2005). Such variations have also been reported in visual-inertial integration in which inertial cues were overweighted (Fetsch et al. 2009), although this only occurred in some individuals. One issue with prior visual-inertial integration heading discrimination tasks is that studying them required the visual and inertial stimuli to be offset from each other, although the thresholds have been studied without offsetting them (Karmali et al. 2014). This offset creates a state of conflict such that a shift in the point of subjective equality (PSE) occurs relative to studying a single sensory modality stimulus (Fetsch et al. 2011). Although in prior paradigms the two stimuli must be offset, the offset must be small enough for the stimuli to be perceived as congruent (i.e., having a common cause). Thus when an offset is present, there is a potential for stimuli to be recognized as incongruent or not having a common origin and one or the other sensory modality to be followed (Körding et al. 2007). Furthermore, when an offset is introduced it also becomes unclear what the ideal performance of a combined stimulus should be.

The present study looked at the effect of multisensory visual-inertial integration in this context by controlling gaze position and varying the reliability of the visual stimulus. By using eccentric gaze positions, integration of visual and inertial stimuli was studied across coordinate systems without artificially offsetting the stimuli. This tests the hypothesis that multisensory visual and vestibular heading cues are integrated ideally during offsets in eye position, which introduces an information source distinct from the sensory estimates themselves and may influence decision making downstream from the cue combination stage.

METHODS

Ethics statement.

The research was conducted according to the principles expressed in the Declaration of Helsinki. Written informed consent was obtained from all participants. The protocol and written consent form were approved by the University of Rochester Research Science Review Board.

Human subjects.

A total of seven human subjects (3 men, 4 women) completed the experiment. Ages ranged from 22 to 67 yr (mean 33 yr, SD 15 yr). All subjects had no history of vestibular disease.

Equipment.

Motion stimuli were delivered with a 6-degree-of-freedom motion platform (Moog, East Aurora, NY; model 6DOF2000E) similar to that used in other laboratories for human motion perception studies (Cuturi and MacNeilage 2013; Fetsch et al. 2009; Grabherr et al. 2008; MacNeilage et al. 2010) and previously described in the present laboratory for heading estimation studies (Crane 2012a, 2014). The head was coupled to the motion platform with a helmet.

Binocular eye position was monitored and recorded at 60 Hz with an infrared head-mounted video eye tracking system (ETD-300HD, IScan, Woburn, MA). Before each experiment the system was calibrated for each subject with fixation points that were centered and 10° in the up, down, right, and left positions. During the experiment the eye position had to be within 8° of the intended fixation point before the stimulus started. It was recognized that these fixation windows may be considered wider than necessary, but there was no visible fixation point (with which there would be a strong temptation for subjects to report the location of the focus of expansion relative to the fixation point), there was a moving visual background, and the subject was moving in many trials. Thus there was a potential for optokinetic nystagmus and the linear vestibuloocular reflex (Tian et al. 2005) to cause eye movements. The eye position was also monitored in real time as well as recorded for later analysis even after the fixation point extinguished. If both eyes strayed >8° from the location of the target in the horizontal direction after the fixation point was extinguished and during the stimulus presentation, a characteristic tone was played after the response was recorded. This alerted the technician running the experiment that the subject made a fixation error. If these errors were frequent, the subject was given further instructions or the eye tracking system was adjusted. A binocular criterion was used to lessen the chance that artifacts such as an eyeblink caused a false report. The technician running the experiment could also see the video from the eye movement tracker in real time, which allowed factors such as slip of the video goggles to be identified. The posttrial analysis identified trials in which the gaze did not remain within the target region.
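The binocular fixation criterion described above can be illustrated with a minimal sketch. The function name, the argument layout, and the assumption that both eyes must be outside the window on the same 60-Hz sample are illustrative choices, not details taken from the laboratory software.

```python
def fixation_error(left_eye_deg, right_eye_deg, target_deg, window_deg=8.0):
    """Flag a trial when BOTH eyes stray more than window_deg horizontally.

    left_eye_deg, right_eye_deg: horizontal eye positions (deg), one per sample.
    Assumption: the binocular criterion is evaluated sample by sample.
    """
    for left, right in zip(left_eye_deg, right_eye_deg):
        left_out = abs(left - target_deg) > window_deg
        right_out = abs(right - target_deg) > window_deg
        if left_out and right_out:
            return True
    return False
```

Requiring both eyes to leave the window means that a blink or tracking artifact affecting a single eye does not by itself flag the trial.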

During both visual and vestibular stimuli, an audible white noise was reproduced from two platform-mounted speakers on either side of the subject as previously described (Roditi and Crane 2012a). The noise from the platform was similar regardless of motion direction. Tests in the present laboratory previously demonstrated that subjects could not predict the platform motion direction on the basis of sound alone (Roditi and Crane 2012a).

Responses were collected with a handheld three-button control box. Subjects pressed the center button to indicate they were ready for each stimulus presentation. The buttons on the left and right sides were used to indicate the respective directions of perceived motion.

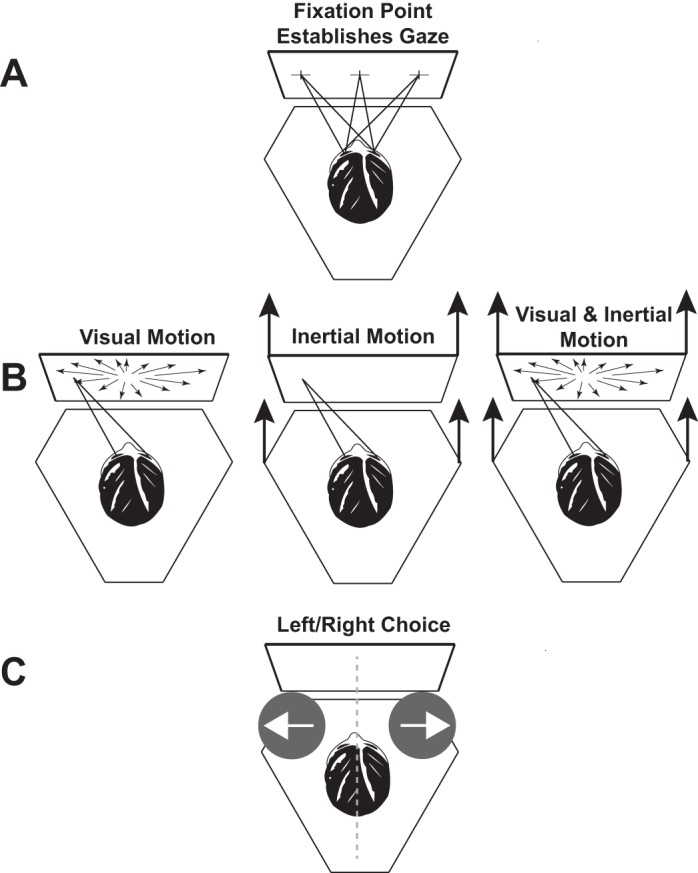

Stimulus.

There were three types of stimuli: visual only, inertial only, and combined visual-inertial (Fig. 1). During the combined stimulus condition the visual and inertial motions were synchronous and represented the same direction and magnitude of motion. The visual and inertial stimuli consisted of a single-cycle 2-s (0.5 Hz) sine wave in acceleration. This motion profile has previously been used for threshold determination (Benson et al. 1989; Grabherr et al. 2008; Roditi and Crane 2012a) and for heading estimation (Crane 2012a, 2014). The displacement of both visual and inertial stimuli was 16 cm with a peak velocity of 16 cm/s and peak acceleration of 25 cm/s².
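The stated displacement, peak velocity, and peak acceleration are mutually consistent for a single-cycle sine wave in acceleration. As a short check, assume a(t) = A·sin(2πt/T). Integrating once gives v(t) = (AT/2π)(1 − cos(2πt/T)), which peaks at AT/π, and integrating again gives a total displacement of AT²/(2π). The function name below is illustrative.

```python
import math

def sine_accel_profile(displacement_cm, duration_s):
    """Peak acceleration and peak velocity for a single-cycle sine in acceleration.

    From a(t) = A*sin(2*pi*t/T): displacement = A*T**2/(2*pi), peak velocity = A*T/pi.
    """
    peak_accel = 2.0 * math.pi * displacement_cm / duration_s ** 2
    peak_vel = peak_accel * duration_s / math.pi
    return peak_accel, peak_vel

peak_accel, peak_vel = sine_accel_profile(16.0, 2.0)
print(f"peak acceleration = {peak_accel:.1f} cm/s^2, peak velocity = {peak_vel:.1f} cm/s")
```

For a 16-cm displacement over 2 s this gives a peak acceleration of about 25.1 cm/s² and a peak velocity of 16 cm/s, matching the values reported above.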

Fig. 1.

Experimental design. The subject was seated on a hexapod motion platform in front of a video display attached to the platform. A: a fixation point was shown that was either centered or 25° to the right or left. Trials with different fixation points were randomly interleaved. After the fixation point was established, the subject pushed a button to deliver the stimulus. B: subjects maintained their gaze even though the fixation point was extinguished. The stimulus could be visual (with several possible coherences), inertial, or both. When the visual and inertial stimuli were presented together they were always in the same direction. C: after the stimulus was completed, a brief tone indicated to the subject that the stimulus was completed and a response should be reported. The subject pushed a button to indicate whether the stimulus was perceived as left or right of midline.

Visual stimuli were presented on a color LCD screen with a resolution of 1,920 × 1,080 pixels, positioned 50 cm from the subject and filling 98° of the horizontal field of view. A fixation point consisting of a 2 × 2-cm cross at eye level could be presented centered or at ±25° and was visible before each stimulus presentation but was extinguished during the stimulus presentation. The visual stimulus consisted of a star field that simulated movement of the observer through a random-dot cloud with binocular disparity as previously described (Crane 2012a). Each star consisted of a triangle 0.5 cm in height and width at the plane of the screen adjusted appropriately for distance. The star density was 0.01/cm³. The depth of the field was 130 cm (the nearest stars were 20 cm and the furthest 150 cm). Visual coherence was fixed within each trial block and could be varied between 0% and 100% across trial blocks.

It was recognized that maintaining eye position during the trials was important, as this had a large effect on perception of visual stimuli. The eye position data were reviewed to see whether the subject’s eye remained within 8° of the target during the entire stimulus presentation. This criterion was met in 72% of trials overall and ranged from 62% to 79% across individual subjects. On review of the eye tracings, it appeared that many of the apparent failures of fixation were actually artifacts caused by eye blinking, the algorithm misidentifying the location of the pupil, or slippage of the goggles rather than actual fixation failures. An artifact could not always be distinguished from a true fixation failure, but very few events were likely to be actual failures of fixation. The data were analyzed both excluding and including these events. Overall, including or excluding trials with possible fixation failures had no significant effect. Any effect would be expected to be largest for heading bias with visual stimuli during eccentric (e.g., ±25°) fixation. Because the fixation point was at the end of the oculomotor range, most failures would be expected to involve eye positions in a more medial or opposite direction. When the visual trials with eccentric fixation were analyzed, excluding the trials with possible fixation failures caused the bias to be larger by an average of 0.26° (range −2.1 to 4.1°), which was not significantly different from zero (P = 0.35, 1-sample t-test). Thus in the remainder of the analysis all data were used even if there was a possibility of a fixation failure.

Before each stimulus presentation a visual fixation point in the form of a 2 × 2-cm cross appeared that was either centered or 25° to the right or left. The subjects were instructed to look at this point and then press a button to indicate they were ready for the stimulus presentation. When subjects pressed the button the fixation point disappeared, but subjects were asked to maintain their gaze at this location and gaze was monitored and recorded with the eye movement tracker. The fixation point was not visible during the stimulus presentation, to discourage subjects from judging the position of the focus of expansion relative to the fixation point. After each 2-s stimulus, there was an audible tone that signaled the subjects that their response could be reported. Subjects reported each stimulus as left or right of center by pressing an appropriate button.

Experimental procedure.

The stimuli used can be broadly classified into three types: inertial motion in which the platform moved and no star field was visible, visual motion in which the platform remained stationary but star field motion was present, and combined visual and inertial motion. To modify the relative reliability of the visual stimulus, each trial block with a visual heading stimulus was presented with coherences of 35%, 50%, 70%, 80%, 90%, and 100%. The coherence was defined as 1 minus the fraction of points that were randomly repositioned between 60-Hz visual frames. Each trial block included a constant visual coherence, but the order in which these trial blocks were presented was randomized between subjects. The complete experiment included 14 trial blocks per subject, which required multiple sessions on different days to complete. Subjects were told before any experiments that during multisensory conditions the visual and inertial stimuli would always be in the same direction.
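The coherence definition above can be illustrated with a simplified two-dimensional sketch. The actual stimulus was a three-dimensional star field, so the function name, the flat point representation, and the per-point random draw are all assumptions made only for illustration.

```python
import random

def update_frame(points, coherence, step, rng, bounds=(-1.0, 1.0)):
    """Advance one visual frame (hypothetical 2-D simplification).

    Each point follows the coherent motion with probability `coherence`;
    otherwise it is randomly repositioned, so the expected fraction of
    repositioned points per frame is 1 - coherence.
    """
    new_points = []
    for x, y in points:
        if rng.random() < coherence:
            new_points.append((x + step[0], y + step[1]))
        else:
            new_points.append((rng.uniform(*bounds), rng.uniform(*bounds)))
    return new_points
```

With `coherence=1.0` every point follows the simulated motion, and with `coherence=0.0` every point is repositioned on each frame, which corresponds to the all-noise stimulus.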

Subjects understood that each stimulus would be a linear motion in the horizontal plane. They were asked to judge whether each stimulus was right or left of the midline. A few practice trials were conducted before doing the experiment so the subjects would understand the type of motion they would experience. During these trials, subjects were trained to maintain gaze at the desired position after the fixation point was extinguished. No feedback was given during these trials or later trials to aid the perception of heading direction.

Each block of trials included six independent and randomly interleaved staircases: sets of two with gaze positions of 25° left, centered, and 25° right. For each gaze position there was one staircase that started with a heading of the maximum displacement in each direction (50° left or right). All subjects were able to reliably and correctly identify the direction of the stimulus at the maximum displacement for all stimuli with an inertial component and all visual-only stimuli with a coherence of 50% or greater. One subject was not able to do the visual-only condition at 35% coherence.

Each trial block included 120 stimulus presentations in a 1-up-1-down staircase with variable step size (Fig. 2). Initially, the step size was 8°, so that after a 50° rightward response the next stimulus for that staircase would be 8° in the opposite direction (42° right). When the responses within a staircase changed direction, the step size decreased by half down to a minimum of 0.5°. When there were three responses in the same direction the staircase doubled the step size up to a maximum of 8°. Staircases could pass through zero, so either staircase could deliver stimuli in either direction. Over the course of a trial block staircases tended to focus stimuli near the PSE, at which subjects were equally likely to perceive a stimulus in either direction.
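The staircase rule can be sketched as follows. Several details are assumptions rather than statements from the text: the step size is adjusted before the heading is moved, "three responses in the same direction" is read as three consecutive responses with the run counter reset after each doubling, and headings are clamped to ±50°. The class and attribute names are illustrative.

```python
class Staircase:
    """1-up-1-down staircase with variable step size (illustrative sketch)."""

    def __init__(self, start_deg, step_deg=8.0, min_step=0.5, max_step=8.0,
                 limit_deg=50.0):
        self.heading = start_deg   # positive = rightward heading
        self.step = step_deg
        self.min_step = min_step
        self.max_step = max_step
        self.limit = limit_deg
        self.last = None           # previous response
        self.run = 0               # consecutive same-direction responses

    def update(self, response):
        """response: +1 for 'right', -1 for 'left'. Returns the next heading."""
        if self.last is not None and response != self.last:
            # Reversal: halve the step, down to the minimum.
            self.step = max(self.min_step, self.step / 2.0)
            self.run = 1
        else:
            self.run += 1
            if self.run >= 3:
                # Three in a row: double the step, up to the maximum.
                self.step = min(self.max_step, self.step * 2.0)
                self.run = 0
        self.last = response
        # Move opposite to the reported direction; the heading may cross zero.
        self.heading -= response * self.step
        self.heading = max(-self.limit, min(self.limit, self.heading))
        return self.heading
```

Starting at 50° right, three consecutive "right" responses give headings of 42°, 34°, and 26°. A "left" response then halves the step to 4° and moves the heading back to 30°.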

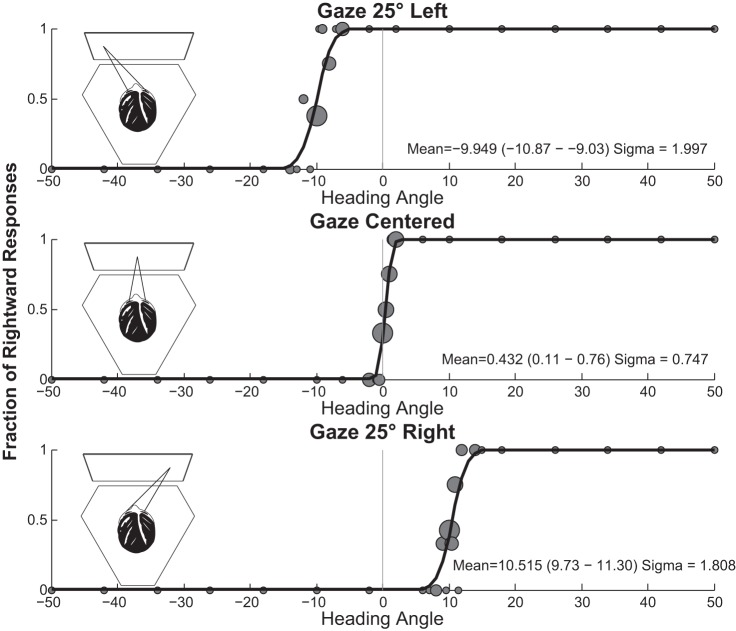

Fig. 2.

Sample data from a 32-yr-old man (subject 5) for the heading discrimination task with a 100% coherence visual stimulus and no inertial stimulus. Circles are scaled relative to the number of stimuli, with the largest circles representing 6 stimulus presentations and the smallest representing a single stimulus presentation.

Data analysis.

The proportion of right/left responses was fit to a cumulative Gaussian function with a Monte Carlo maximum-likelihood method. After the initial data were fit, data were randomly resampled 2,000 times with replacement to generate multiple estimates of the mean and σ that were used to determine 95% confidence intervals (Crane 2012b; Roditi and Crane 2012b). Psychometric fitting for a typical subject is shown in Fig. 2. The PSE was defined as the mean of the psychometric function and represents the heading at which the subject was equally likely to identify the stimulus as being in either direction. Threshold was defined as the σ or width of the cumulative Gaussian distribution.
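A minimal sketch of this kind of analysis is given below. It substitutes a coarse grid search for the Monte Carlo maximum-likelihood optimizer, whose details are not given here, and bootstraps only the PSE. All function names and the grid-based approach are assumptions for illustration.

```python
import math
import random

def p_right(heading, mu, sigma):
    """Cumulative Gaussian: probability of a 'right' response."""
    return 0.5 * (1.0 + math.erf((heading - mu) / (sigma * math.sqrt(2.0))))

def log_likelihood(trials, mu, sigma):
    """trials: list of (heading_deg, said_right) pairs."""
    total = 0.0
    for heading, said_right in trials:
        # Clip probabilities to avoid log(0).
        p = min(max(p_right(heading, mu, sigma), 1e-9), 1.0 - 1e-9)
        total += math.log(p if said_right else 1.0 - p)
    return total

def fit_psychometric(trials, mu_grid, sigma_grid):
    """Return the (mu, sigma) grid point with the highest likelihood."""
    return max(((m, s) for m in mu_grid for s in sigma_grid),
               key=lambda ms: log_likelihood(trials, ms[0], ms[1]))

def bootstrap_pse_ci(trials, mu_grid, sigma_grid, n_boot=2000, seed=0):
    """Percentile 95% CI of the PSE from resampling trials with replacement."""
    rng = random.Random(seed)
    pses = sorted(
        fit_psychometric([rng.choice(trials) for _ in trials],
                         mu_grid, sigma_grid)[0]
        for _ in range(n_boot)
    )
    return pses[int(0.025 * n_boot)], pses[int(0.975 * n_boot) - 1]
```

The fitted `mu` corresponds to the PSE and the fitted `sigma` to the threshold. A grid search keeps the sketch dependency-free, but a numerical optimizer would be the more usual choice for real data.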

One of the major motivations of the present experiments was to determine how visual and vestibular cues are integrated. We hypothesized that the overall visual-inertial heading signal SVI is the weighted sum of the inertial and visual signals SI and SV, with weights wI and wV (where wV = 1 − wI), as previously proposed (Fetsch et al. 2011).

$$S_{VI} = w_I S_I + w_V S_V \tag{1}$$

The optimal weights are proportional to stimulus reliability such that (Landy et al. 1995)

$$w_I = \frac{1/\sigma_I^2}{1/\sigma_I^2 + 1/\sigma_V^2} \tag{2}$$

The weights can also be determined empirically from differences in the means (e.g., biases) of the visual and inertial stimuli, μV and μI, and the mean of the combined stimulus, μVI.

$$w_I = \frac{\mu_{VI} - \mu_V}{\mu_I - \mu_V} \tag{3}$$

Both the optimal and empirical weights were calculated for each subject for each visual-inertial condition. Optimal weights were calculated with Eq. 2 from the σ of the visual condition alone (σV) for each visual coherence tested (35%, 50%, 70%, 80%, 90%, and 100%). For the inertial stimulus, the σ (σI) was determined from the average of the inertial stimulus in darkness and the inertial stimulus with a 0% coherence (all noise) visual stimulus. These estimates of σ were not significantly different from each other. The empirical weights were calculated using the means for the visual condition (μV) at 100% coherence. For the inertial mean (μI) the trial blocks with inertial stimulus in darkness and the inertial stimulus with a 0% visual coherence stimulus were averaged. The empirical weights could only be calculated when there was a significant difference in the mean between the visual and inertial conditions, which occurred at lateral gaze positions. In the central gaze position neither the inertial nor the visual condition was significantly offset (both were zero or nearly so), so it was not possible to calculate a meaningful empirical weight for the central gaze position alone. For each subject the mean was calculated using the difference between the mean at right and left gaze. This method was also applied to the 2,000 randomly resampled means and σs calculated for each condition to give 2,000 estimates of the empirical and optimal weight for each subject/condition. From these weights the 5th and 95th percentiles were determined to give a measure of the reliability of the data.
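The two weight calculations can be sketched as below, assuming the reliability-based form of Eq. 2 (reliability taken as 1/σ²) and the bias-based form of Eq. 3 (solving μVI = wI·μI + (1 − wI)·μV for wI). The function names and the nearest-rank percentile rule are illustrative. In the example, the right-minus-left PSE differences for the inertial and visual conditions are derived from the group means reported in RESULTS, while the combined-stimulus value is hypothetical.

```python
def optimal_inertial_weight(sigma_i, sigma_v):
    """Eq. 2 (assumed form): weight proportional to reliability 1/sigma**2."""
    r_i = 1.0 / sigma_i ** 2
    r_v = 1.0 / sigma_v ** 2
    return r_i / (r_i + r_v)

def empirical_inertial_weight(mu_vi, mu_i, mu_v):
    """Eq. 3 (assumed form): solve mu_vi = w_i*mu_i + (1 - w_i)*mu_v for w_i."""
    return (mu_vi - mu_v) / (mu_i - mu_v)

def percentile_interval(samples, lower=0.05, upper=0.95):
    """Nearest-rank 5th and 95th percentiles of resampled weights."""
    ordered = sorted(samples)
    n = len(ordered)
    return ordered[round(lower * (n - 1))], ordered[round(upper * (n - 1))]

# Thresholds of 6.0 deg (inertial) and 2.0 deg (100% coherence visual).
w_opt = optimal_inertial_weight(6.0, 2.0)

# Right-minus-left PSE differences: inertial -4.5 - 4.6, visual 10.1 - (-10.3).
# The combined value of 17.45 deg is hypothetical, chosen for illustration.
w_emp = empirical_inertial_weight(17.45, -9.1, 20.4)
```

With these inputs both weights come out at about 0.1, meaning that the visual cue dominates. The empirical weight becomes unstable as μI − μV approaches zero, which is why it could not be estimated at central gaze.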

After psychometric fitting, the results were analyzed with repeated-measures analysis of variance (ANOVA). Analysis was performed with GraphPad Prism software. Differences were considered statistically significant if P < 0.05. No correction was made for multiple comparisons because differences were generally either not significant (e.g., P > 0.1) or highly significant (e.g., P < 0.001).

RESULTS

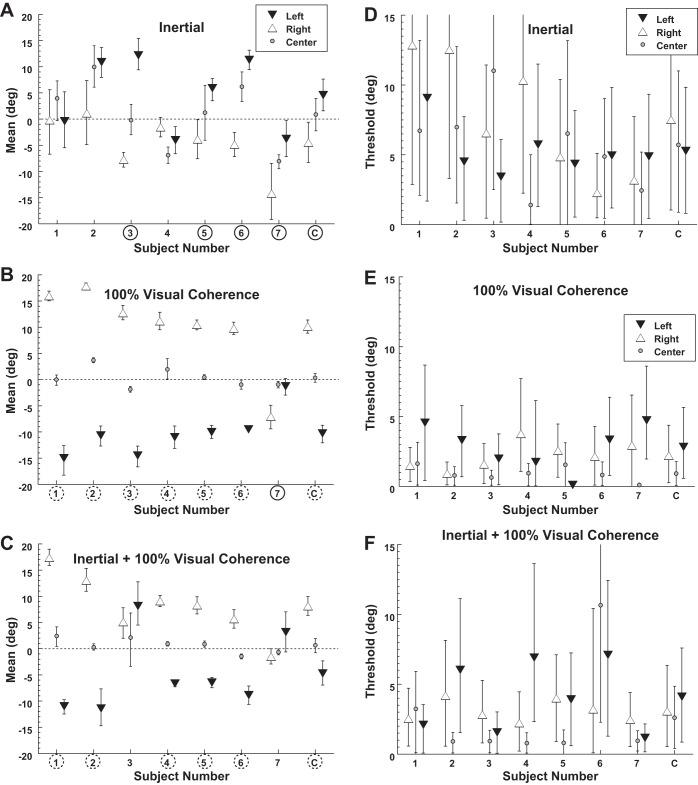

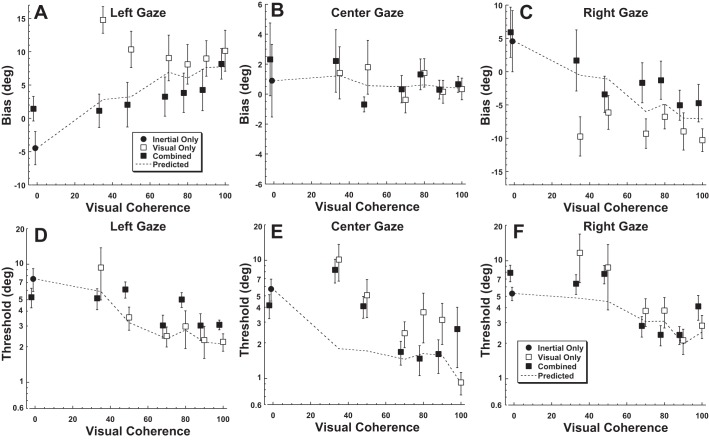

With a purely inertial stimulus, eye position had a small but significant effect such that gaze shifted heading perception in the same direction (Fig. 3A). With a 25° gaze shift to the left, the inertial heading at which the PSE between leftward and rightward perception occurred was 4.6 ± 2.7° (mean ± SE) to the right. Thus a straight forward heading was significantly more likely to be perceived as leftward. Similarly, with a 25° gaze shift to the right, the PSE shift was −4.5 ± 2.0°. For inertial headings the effect of eye position on PSE was significant (1-way ANOVA, F = 7.4, P = 0.019). The threshold (i.e., σ) averaged 6.6 ± 1.2° (Fig. 3D) and did not depend on gaze (1-way ANOVA, F = 1.0, P = 0.37). Pairing the inertial stimulus with a 0% visual coherence stimulus produced PSEs (paired 2-tailed t-test, P = 0.08) and thresholds (P = 0.76) similar to those obtained when a blank screen was used.

Fig. 3.

Bias and threshold of visual and inertial heading discrimination. Each condition was tested with eye position 25° to the right or left as well as centered. Error bars represent the 95% confidence interval (CI) for each subject (1–7) or the combined data (C). A circled subject number indicates that leftward gaze shifted the point of subjective equality (PSE) significantly (P < 0.01 with a Monte Carlo technique) to the right when left and right gaze are compared, while a dashed circle represents a significant shift in the opposite direction. Subjects were put in order of their bias under the 100% visual coherence condition (B). A–C demonstrate the mean of the psychometric function, which is also the PSE at which subjects were equally likely to identify a stimulus as right or left. A data point shifted upward (positive) indicates that the PSE is shifted to the right. Thus a stimulus at the midline would be more likely to be perceived as toward the left. A: shifts in gaze caused perception of the inertial stimulus to be shifted in the direction of the gaze shift. B: shifts in gaze with a 100% visual coherence stimulus caused the PSE to shift in the opposite direction consistent with retinotopic coordinates. C: the PSE with a combined visual and inertial stimulus. D–F: thresholds during the conditions shown in A–C.

With a 100% coherence visual stimulus, eye position shifted perception in the opposite direction of gaze (Fig. 3B). With a 25° gaze shift to the left, the heading at which the PSE occurred was also to the left at −10.3 ± 1.7° (mean ± SE). A similar but opposite shift occurred (10.1 ± 3°) with gaze shifts to the right. Thus a straight forward heading would be likely to be perceived in the direction opposite gaze. The shift in PSE with gaze position was highly significant (1-way ANOVA, F = 18.7, P = 0.005). This finding was consistent with a shift toward retinotopic coordinates being used with incomplete correction for eye position. It should be noted that in one individual (subject 7; Fig. 3B) eye position did not shift the PSE in the same direction as gaze but actually had a small shift in the opposite direction. When all subjects were considered, the threshold was significantly lower (paired t-test, central gaze compared with right and left, P < 0.05 for both) with central gaze (0.9 ± 0.2°) compared with lateral (±25°) gaze positions (2.5 ± 0.5°; Fig. 3E). When the 100% visual coherence stimulus was presented simultaneously with the inertial stimulus the PSE and thresholds resembled the visual stimulus alone because of its relatively high reliability (Fig. 3, C and F).

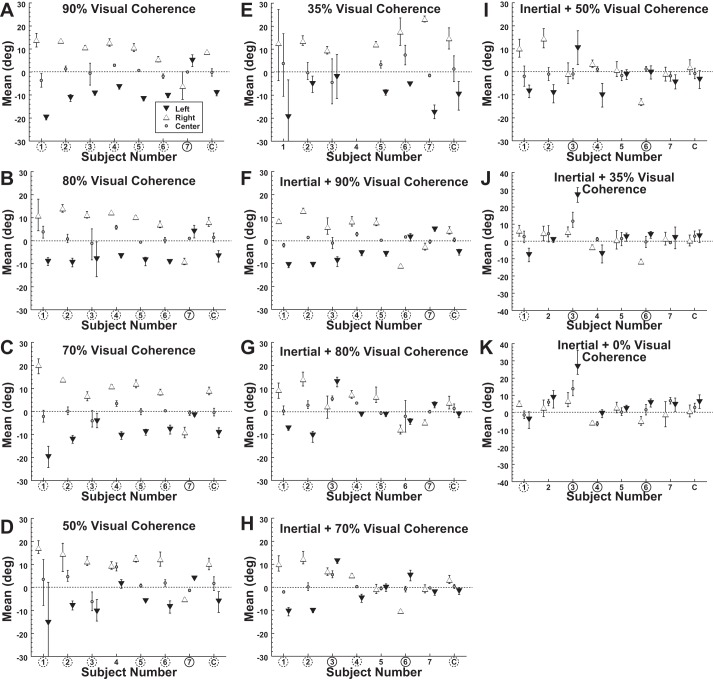

With lateral gaze, the PSEs of heading perception using visual and inertial stimuli were offset by almost 15° (Fig. 4). To determine how these sensory modalities were integrated, the visual heading discrimination was measured at decreased coherences of 90%, 80%, 70%, 50%, and 35%. For these visual-only tasks, decreasing the coherence did not have any significant effect on the shift of the PSE for left (ANOVA F = 2.5, P = 0.10), central (ANOVA F = 1.5, P = 0.25), or right (ANOVA F = 1.4, P = 0.28) gaze as shown in Fig. 4, A–E. Thus the striking effect of gaze position on PSE continued even with low visual coherence.

Fig. 4.

Bias of visual and inertial heading discrimination with decreased visual coherence. Error bars represent 95% CI for each subject (1–7) or the combined data (C). Circled subject numbers indicate that leftward gaze shifted the PSE significantly (P < 0.01 with a Monte Carlo technique) to the right when left and right gaze are compared, while a dashed circle represents a significant shift in the opposite direction. A–E are for a visual stimulus with no platform motion, and F–K indicate that inertial motion occurred with the visual stimulus.

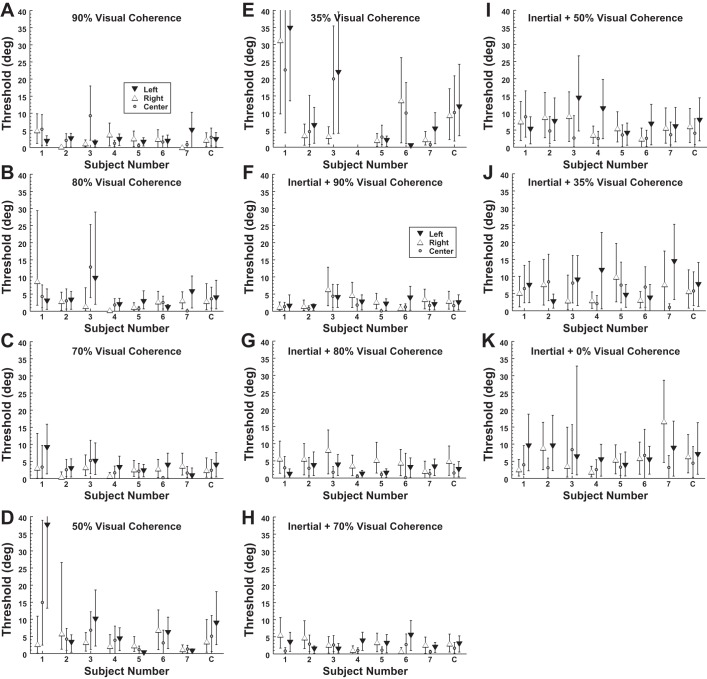

The threshold of heading discrimination increased with decreasing visual coherence (Fig. 5, A–E). There was considerable variation between subjects in the relationship between visual coherence and threshold. Some subjects (e.g., subject 1) had a large increase in threshold below a certain level, while others had thresholds that remained relatively constant (e.g., subject 2). Thresholds increased significantly with lower coherence for central gaze (ANOVA F = 3.1, P = 0.02), and the increase was highly significant for lateral gaze (ANOVA F = 6.9, P < 0.0001). When all visual coherences below 100% were considered, the average σ for central gaze was 5.0° while for lateral gaze it was 5.4°; these values were not significantly different (paired t-test, P = 0.30). These trials were also conducted with the visual stimulus paired with the inertial stimulus (Fig. 5, F–K). When combined with an inertial stimulus, eccentric gaze no longer had a significant influence on threshold relative to central gaze (paired t-test, P = 0.48). Because thresholds for lateral and central gaze were similar for all conditions except when the visual stimulus had 100% coherence, the average threshold across gaze positions was used in calculating the Bayesian ideal performance.

Fig. 5.

Threshold of visual and inertial heading discrimination with decreased visual coherence. Error bars represent 95% CI for each subject (1–7) or the combined data (C). A–E are for a visual stimulus with no platform motion, and F–K indicate that inertial motion occurred with the visual stimulus.

When visual and inertial stimuli were presented simultaneously in the same trial, the bias based on eye position tended to be intermediate between the biases found when the visual and inertial stimuli were presented independently (Fig. 6, A–C). When combined with a 0% coherence visual stimulus the eye position-related bias of the inertial stimulus, although slightly less, was not significantly different from that of the inertial stimulus alone (paired t-test, P = 0.10). At visual coherences of 50% and below, the eye position bias of the combined stimulus was close to that predicted by ideal weighting and near zero. With higher visual coherence the bias was displaced from ideal weighting based on stimulus reliability and suggested that the inertial stimulus had slightly more influence than would be predicted by its reliability. When the thresholds were examined (Fig. 6, D–F), the inertial heading threshold across gaze positions was 6.0 ± 0.6°. The 100% visual coherence stimulus had a threshold of 2.0 ± 0.2°, which increased only slightly at coherences of 70–90%. However, at 50% coherence the reliabilities of the visual and inertial stimuli were similar, and the visual stimulus became less reliable than the inertial stimulus at 35% coherence independent of gaze direction.
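As a worked illustration of the ideal prediction, the sketch below applies the standard optimal cue-combination formulas to the group values quoted above. The combined-threshold formula σVI² = σI²σV²/(σI² + σV²) is the usual result for reliability-weighted averaging and is assumed here rather than quoted from the text. The function name is illustrative.

```python
import math

def predict_combined(mu_i, sigma_i, mu_v, sigma_v):
    """Ideal-observer prediction for a combined visual-inertial stimulus.

    Returns (w_i, mu_vi, sigma_vi) under reliability-weighted averaging.
    """
    w_i = (1.0 / sigma_i ** 2) / (1.0 / sigma_i ** 2 + 1.0 / sigma_v ** 2)
    mu_vi = w_i * mu_i + (1.0 - w_i) * mu_v
    sigma_vi = math.sqrt(sigma_i ** 2 * sigma_v ** 2 / (sigma_i ** 2 + sigma_v ** 2))
    return w_i, mu_vi, sigma_vi

# Leftward gaze, 100% coherence: inertial PSE +4.6 deg (threshold 6.0 deg),
# visual PSE -10.3 deg (threshold 2.0 deg).
w_i, mu_vi, sigma_vi = predict_combined(4.6, 6.0, -10.3, 2.0)
print(f"w_I = {w_i:.2f}, predicted PSE = {mu_vi:.2f} deg, "
      f"predicted threshold = {sigma_vi:.2f} deg")
```

Under these assumptions the ideal inertial weight is about 0.1, the predicted combined PSE is about −8.8°, and the predicted threshold is about 1.9°. The predicted combined threshold can never exceed the threshold of the more reliable single cue.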

Fig. 6.

Bias and threshold of visual and inertial heading discrimination across subjects by gaze position. Error bars represent ±1 SE. Conditions at the same visual motion coherence were offset slightly to keep error bars from overlapping. A–C: the PSE based on eye position. ●, Purely inertial stimuli; □, purely visual stimuli; ■, the visual-inertial combined conditions. A negative bias indicates that the PSE shifted left, while a positive bias indicates a right shift. Dashed line, performance predicted from optimal weights (Eq. 2) calculated from mean thresholds. D–F: average thresholds by eye position. Dashed line, thresholds predicted from optimal stimulus combination.

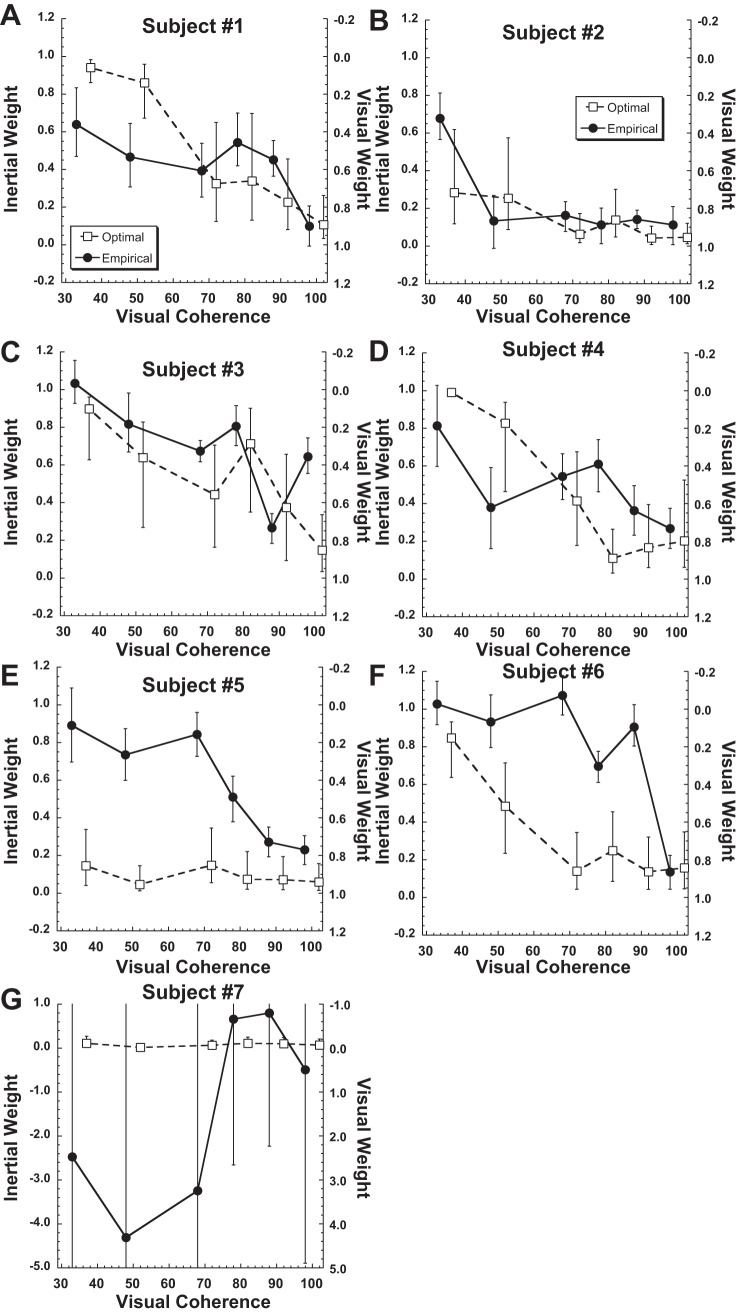

To determine the method by which the visual and vestibular stimuli were combined, the weights of the visual and inertial stimuli were determined with both the optimal (i.e., Bayesian based on relative reliability) and empirical (i.e., based on the relative offset in the PSE) methods for each individual subject (Fig. 7). The subjects were numbered on the basis of size of the gaze-related offset in visual heading perception, so that subject 1 had the largest offset and subject 7 the smallest. In subject 7, gaze direction had minimum influence on either the visual or inertial stimulus direction perception so empirical weights were very imprecise (Fig. 7G), and little can be said about the strategy for integrating the visual and inertial conditions in that subject. In the remaining six subjects, it was clear that not all subjects used the same strategy. In subjects 1–4 the empirical weights were similar to the Bayesian ideal (i.e., optimal) weights. In subjects 5 and 6 the inertial component of the stimulus was weighted more heavily than would be predicted from the reliability of the inertial stimulus relative to that of the visual stimulus.

Fig. 7.

Relative weights of the inertial and visual stimuli determined for each subject. The sum of the visual and inertial weights is always unity. A–G: subjects 1–7. ●, Weights determined empirically from bias; □, optimal weights determined from the average thresholds across the 3 gaze positions. Error bars represent the 95th and 5th percentiles of the weights determined from 2,000 random resamplings and curve fits for each condition. Note that the coherences of the empirical and optimal weights were artificially offset from each other for clarity and that the range of the y-axis was greatly expanded for subject 7, in whom the empirical weights were almost all noise because of the unusually small offset in visual perception with gaze shifts.

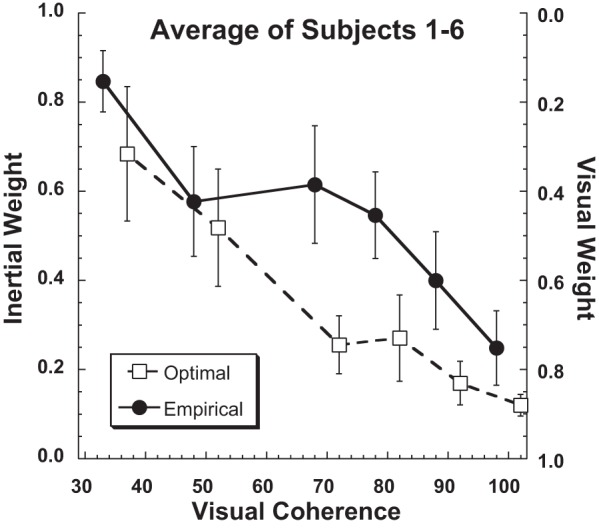

Combined thresholds were calculated from the average mean and thresholds across the individual subjects, with subject 7 excluded because gaze direction had minimal influence on inertial heading perception, which made determination of accurate weights impossible with the current method (Fig. 8). There was a tendency for the inertial stimulus to be more heavily weighted than optimal predictions when presented with a reliable visual stimulus (e.g., coherence >50%). Despite the differences in individual performance, when the optimal and empirical weights were averaged across subjects (Fig. 8) the averaged empirical weights were close to the optimal Bayesian predictions. Across subjects, there was no significant difference between the empirical and optimal weights (paired t-test, P > 0.1 for every condition).

Fig. 8.

Relative weights of the inertial and visual stimuli averaged across subjects. The sum of the visual and inertial weights is always unity. Weights calculated empirically based on bias (Eq. 3) are shown with filled circles (individual subjects) and a solid line (averaged), while those calculated optimally based on the thresholds of a single modality (i.e., visual or inertial alone; Eq. 2) are shown with open squares (individual subjects) and a dashed line (averaged). Subject 7 was excluded from the average because the empirical fits were almost all noise due to the minimal offset in visual heading perception with gaze shifts. For clarity the optimal and empirical weights for individual subjects were artificially offset from each other by ±2% visual coherence.

DISCUSSION

The present experiments used a natural offset in visual and inertial heading perception that occurs with gaze shifts to form the basis of a novel multisensory integration paradigm. Congruent visual and inertial stimuli were presented, but offsets in gaze biased perception of visual stimuli toward retinotopic coordinates, while gaze caused a smaller but opposite deviation in inertial heading perception. The results demonstrated that on average subjects weigh cues relative to their reliability without reconciling the offset between visual and inertial coordinates.

Visual heading perception.

This study demonstrated large eye position-dependent biases in visual heading discrimination consistent with a bias toward retinotopic coordinates. Retinotopic or eye-centered coordinates have been demonstrated in many areas of visual processing including the middle temporal (MT) area/V5 (Kolster et al. 2010) as well as other areas of the posterior parietal cortex (Andersen and Mountcastle 1983). Such retinotopic coordinates have also been demonstrated in heading selectivity in the dorsal medial superior temporal area (MSTd) (Fetsch et al. 2007; Gu et al. 2006; Lee et al. 2011) and the ventral intraparietal area (VIP) (Chen et al. 2013b). Retinotopic coordinates are behaviorally relevant and have been demonstrated for reach planning (Batista et al. 1999; Cohen and Andersen 2002; Perry et al. 2016). The present offsets are consistent with predictions made by applying a population vector decoder model to the underlying neurophysiology (Gu et al. 2010) and with behavior in a heading estimation task (Crane 2015).

However, retinotopic coordinates seem problematic at the perceptual level, as people move their eyes either independently or with head and body motion that would interfere with visual stability (Burr et al. 2007; Melcher 2011; Wurtz 2008; Wurtz et al. 2011). Thus with every eye movement objects will appear at a different location on the retina and lead to activity in different neural circuits, but at the subjective level the world remains stable to us. In our everyday experience we easily maintain our heading while driving or ambulating despite gaze shifts, but the present findings seem at odds with this observation by demonstrating that eye position has a large effect on visual heading perception. During both driving and ambulation humans tend to orient their gaze in the intended direction of travel (Land 1992; Wann and Swapp 2000; Wilkie and Wann 2003), which would eliminate eccentric gaze effects. When subjects were forced to maintain a lateral gaze position during driving, it was found that their heading veered toward the direction of gaze (Readinger et al. 2001). When considered in light of the present findings, lateral gaze would cause the heading to be perceived in the opposite direction of gaze and subjects would then steer in the same direction as gaze to correct this.

Inertial heading bias.

The inertial headings demonstrated a shift in the PSE in the direction of gaze, an effect opposite that observed with visual headings. This effect of eye eccentricity is similar to what has been observed with auditory localization (Bohlander 1984; Cui et al. 2010; Razavi et al. 2007). One hypothesis is that gaze position causes a general shift in egocentric space. Such a hypothesis is consistent with the present findings and would compensate for retinotopic coordinates when a visual stimulus is present but be anticompensatory with a purely inertial heading. However, the present inertial offsets with gaze were much smaller than the visual offsets and more variable between subjects. The previous work using auditory localization (Cui et al. 2010; Razavi et al. 2007) suggested that the shift of egocentric space occurred with a time constant near a minute, much longer than the gaze deviations used in the present study. Thus with longer gaze deviations the inertial offset might be larger and better compensate for the visual offset, but this was not tested in the present study.

Visual-inertial integration.

During eccentric gaze, visual-vestibular integration was studied with congruent sensory stimuli. Some studies have looked at this problem via a heading estimation task in which human subjects were asked to report the direction of motion (Crane 2012a; Cuturi and MacNeilage 2013; Ohmi 1996; Telford et al. 1995). In these studies the relative reliabilities of the visual and inertial stimuli were not varied; however, at least in some conditions it appeared there was greater precision when both cues were combined. The effect of cue reliability on visual-inertial integration, and the applicability of a Bayesian framework to it, have been shown in animals (Fetsch et al. 2011; Gu et al. 2008) and humans (Fetsch et al. 2009). During these prior studies the inertial and visual headings were offset by a few degrees. Because of this the ideal performance was ambiguous, and there was a risk that subjects would realize the cues were not identical and preferentially follow one. In the present experiment the visual and vestibular cues did not need to be offset because with eccentric gaze positions there was a natural and large offset between the perceived direction of visual and inertial stimuli. This allowed the ideal performance to be known. At all visual coherences, the addition of an inertial heading greatly reduced the retinotopic bias seen with the visual-only condition (Fig. 6A). The decrease in threshold with a multisensory stimulus was most apparent at low coherence when the visual stimulus was less reliable (Fig. 6B). The thresholds were as predicted by the Bayesian ideal at 70%, 80%, and 90% coherence; at 35% and 50% coherence they were decreased relative to the single sensory conditions but larger than the Bayesian prediction (Fig. 6B). The threshold for the multisensory condition was actually higher than the threshold of visual heading alone at 100% coherence. This was due to a few subjects (Fig. 3, E and F) who had a higher threshold when the stimuli were combined, perhaps due to visual and inertial stimuli being perceived as not having a common causation (e.g., being in different directions), although recent work by others has demonstrated that large offsets in visual and inertial headings can be perceived as having a common causation (de Winkel et al. 2015, 2017).

Weighting of inertial cues.

When examining the performance averaged across subjects, the present study demonstrated near-Bayesian ideal cue combination (Fig. 8). However, in two subjects the inertial stimulus appeared to be weighted more heavily than its reliability would predict (Fig. 7, E and F); thus there was significant variation between individual subjects. Others have noted that when visual and inertial cues were in conflict the inertial cue dominated the perception (de Winkel et al. 2010; Ohmi 1996). Others have observed inertial cues to be overweighted compared with Bayesian predictions (Fetsch et al. 2009). In that study the monkeys were trained on the inertial condition first, which the authors felt may have contributed to the overweighting. However, they also saw this inertial bias in human subjects who were not trained this way. Only averaged human subject performance was reported, and there were fewer subjects than in the present study, so it was unclear what type of variation may have been present. The observation that the inertial stimulus was also overweighted in some of the present data suggests that it may be a common strategy that is used by some individuals, even though in the present study the effect was not significant in the aggregate data. The present study did demonstrate that the effect seemed to be subject dependent, with some subjects using very different strategies.

Another possibility is that the visual and inertial cues may have been perceived as unrelated to each other and not due to a common cause in some studies. This is especially a concern in prior studies when these stimuli were offset relative to each other (Fetsch et al. 2009, 2011; Gu et al. 2008; Ohmi 1996) and thus were in some degree of conflict with each other. In the present study they were not offset but still may have been perceived as independent stimuli. A causal inference model (Körding et al. 2007; Sato et al. 2007) predicts that multisensory perception determines the information provided by each cue and assesses the probability that the two cues arise from a single vs. independent sources. The certainty of causation between the visual and inertial cues is thought to be high in the present study. No subject reported a perceived conflict between these cues (although they were not specifically asked about this issue), and the work of others suggests that even large differences in visual and inertial headings are perceived as due to a common cause (de Winkel et al. 2015, 2017). There was a large difference in heading bias for visual vs. inertial headings with gaze shifts, but this conflict presumably also exists during common activities outside the experimental paradigm, such as gaze shifts during ambulation or driving.

Individual variation.

There were some notable deviations from average behavior by individual subjects. Although most (6 of 7) subjects had the PSE deviate in the direction of gaze consistent with retinotopic coordinates, subject 7 exhibited the opposite behavior at every visual coherence tested above 50%. None of the subjects was given feedback about their eye position bias with visual stimuli; thus they were unlikely to be aware they had a large bias in perception with lateral gaze positions. The visual stimulus is ambiguous in that it could represent motion through a fixed environment or environmental motion past a fixed observer. Subjects were instructed that the visual stimulus represented motion through a fixed environment, and this was likely obvious in trials combined with an inertial stimulus. If subject 7 nevertheless interpreted the visual stimuli as environmental motion, this might explain the responses in the opposite direction, but the reason the subject perceived and responded to the stimuli this way is unknown. There was also individual variation in how multisensory stimuli were integrated (Fig. 7). Similarly large variation between individuals has been observed by others in human multisensory integration experiments (de Winkel et al. 2015; Wozny et al. 2010).

It is also important to note that the offset between the perceived heading of the visual and inertial stimuli was not constant between subjects. Gaze direction had only a small and relatively constant influence on inertial heading (Fig. 3A), while it had a much larger effect on visual heading (Fig. 3B). The subjects were ordered so that subject 1 had the largest gaze effect on bias with visual heading and subject 7 had the smallest (in this case in the opposite direction). Thus in lower-numbered subjects the empirical weight calculation was potentially more reliable because of the larger denominator (Eq. 3). In subject 7 gaze direction produced similar offsets in visual and inertial heading perception; thus the denominator of Eq. 3 was very small, the empirically determined weights were very susceptible to noise (Fig. 7G), and the weights in this subject were neither consistent nor reliable. The performance of individual subjects may therefore be summarized as four subjects showing stimulus weighting close to optimal (Fig. 7, A–D) and two subjects weighting the inertial stimulus more than would be predicted by its relative reliability (Fig. 7, E and F). In the final subject (Fig. 7G), it is difficult to make strong conclusions because the biases for the visual and inertial headings were similar. When averaged across subjects the effects of noise are presumably minimized, and the average performance was close to ideal (Fig. 8).

Occasionally empirical weights of individual subjects were above unity or below zero. This indicated that the gaze-related deviation in heading perception for the combined condition was outside the range seen for the single-sensory visual and inertial conditions. The furthest excursions were in subject 7 (Fig. 7G), and in this case it was likely due to the small difference in gaze-dependent offset between the visual and inertial heading tasks in that subject, so any excursions outside of this narrow range would produce large changes in empirical stimulus weights.
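The sensitivity of the empirical weights to the size of the denominator can be illustrated with a short sketch. It assumes that the empirical weight is the position of the combined bias between the visual-only and inertial-only biases (an assumed form, because Eq. 3 is not reproduced in this section). The large-offset case uses the mean PSE shifts from the abstract; the small-offset case and the combined bias are hypothetical numbers chosen only to show the effect, and the function names are hypothetical.

```python
def empirical_inertial_weight(bias_combined: float,
                              bias_visual: float,
                              bias_inertial: float) -> float:
    """Inertial weight from the combined bias (assumed form of the empirical estimate)."""
    return (bias_combined - bias_visual) / (bias_inertial - bias_visual)


def weight_change_per_degree(bias_visual: float, bias_inertial: float) -> float:
    """Change in the empirical weight produced by a 1 deg error in the combined bias."""
    return 1.0 / abs(bias_inertial - bias_visual)


# Large visual-inertial offset (mean PSE shifts from the abstract, deg).
large_offset = weight_change_per_degree(bias_visual=-10.2, bias_inertial=4.6)

# Small offset, as in a subject whose visual and inertial biases are similar
# (hypothetical values, deg).
small_offset = weight_change_per_degree(bias_visual=0.5, bias_inertial=1.5)

# A combined bias outside the range spanned by the single-cue biases
# gives a weight above unity (hypothetical values, deg).
w_outside = empirical_inertial_weight(bias_combined=2.0,
                                      bias_visual=0.5,
                                      bias_inertial=1.5)

print(f"weight change per deg, large offset: {large_offset:.3f}")
print(f"weight change per deg, small offset: {small_offset:.3f}")
print(f"weight with combined bias outside range: {w_outside:.2f}")
```

With the large offset, a 1° error in the combined bias shifts the weight by less than 0.07, whereas with a 1° separation between the single-cue biases the same error shifts the weight by a full unit.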

In prior studies of visual-inertial heading perception the weighting of stimuli has largely been based on performance averaged across individual participants. However, it has been recognized that some individuals have performance that significantly deviates from ideal. Fetsch et al. reported the performance of two monkeys separately and found that one followed an optimal cue integration strategy while the other tended to weigh the inertial cue more heavily than expected (Fetsch et al. 2011); an earlier study of five monkeys revealed similar outliers (Fetsch et al. 2009). However, for human subjects only average performance has been reported for a visual-inertial heading integration task (Fetsch et al. 2009; Ohmi 1996). The present study is to my knowledge the first to report optimal and empirical performance in individual human subjects. As the present results demonstrate, there are significant deviations from optimal in some individual participants, which is perhaps not surprising given the variation seen between individual primates in a visual-inertial integration task (Fetsch et al. 2009, 2011).

Reference frame of visual-inertial heading integration.

It has been hypothesized that multisensory integration requires different sensory signals to be represented in a common reference frame (Cohen and Andersen 2002; Meredith and Stein 1996). The location of visual-inertial heading integration is not unambiguously known, but MSTd has been implicated based on neurons that respond to both visual and inertial headings (Chen et al. 2013a, 2016; Fetsch et al. 2007; Lee et al. 2011). Furthermore, when MSTd is chemically inactivated there are behavioral deficits in both visual and vestibular heading discrimination (Gu et al. 2012). In area MSTd, visual stimuli are represented in eye-centered or retinotopic coordinates (Chen et al. 2014; Fan et al. 2015; Fetsch et al. 2007; Gu et al. 2010; Lee et al. 2011; Takahashi et al. 2007). Conversely, vestibular signals in MSTd are in head coordinates, with eye position having a minimal influence (Chen et al. 2013a; Fetsch et al. 2007). A previous heading estimation study in our laboratory in which eye position was systematically varied demonstrated that visual heading perception was shifted toward eye-centered coordinates while inertial headings were perceived in body coordinates (Crane 2015). Thus human heading perception also seems consistent with the activity in MSTd. The prior study demonstrated a coordinate system intermediate between eye and body when visual and inertial stimuli were combined, but the relative reliability of these stimuli was not varied. The present study demonstrates that visual and inertial heading coordinates remain independent even during a multisensory integration task, thus rejecting the possibility that offsets caused by eye position are corrected downstream from cue integration.

GRANTS

Grant support was provided by National Institute on Deafness and Other Communication Disorders Grant R01 DC-013580.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the author.

AUTHOR CONTRIBUTIONS

B.T.C. conceived and designed research; B.T.C. performed experiments; B.T.C. analyzed data; B.T.C. interpreted results of experiments; B.T.C. prepared figures; B.T.C. drafted manuscript; B.T.C. edited and revised manuscript; B.T.C. approved final version of manuscript.

ACKNOWLEDGMENTS

The author thanks Kyle Critelli for technical assistance as well as editing the final paper and Raul Rodriguez for assistance with eye position recording analysis.

REFERENCES

- Andersen RA, Mountcastle VB. The influence of the angle of gaze upon the excitability of the light-sensitive neurons of the posterior parietal cortex. J Neurosci 3: 532–548, 1983.

- Batista AP, Buneo CA, Snyder LH, Andersen RA. Reach plans in eye-centered coordinates. Science 285: 257–260, 1999. doi: 10.1126/science.285.5425.257.

- Battaglia PW, Jacobs RA, Aslin RN. Bayesian integration of visual and auditory signals for spatial localization. J Opt Soc Am A Opt Image Sci Vis 20: 1391–1397, 2003. doi: 10.1364/JOSAA.20.001391.

- Benson AJ, Hutt EC, Brown SF. Thresholds for the perception of whole body angular movement about a vertical axis. Aviat Space Environ Med 60: 205–213, 1989.

- Bohlander RW. Eye position and visual attention influence perceived auditory direction. Percept Mot Skills 59: 483–510, 1984. doi: 10.2466/pms.1984.59.2.483.

- Bremmer F, Klam F, Duhamel JR, Ben Hamed S, Graf W. Visual-vestibular interactive responses in the macaque ventral intraparietal area (VIP). Eur J Neurosci 16: 1569–1586, 2002. doi: 10.1046/j.1460-9568.2002.02206.x.

- Burr D, Tozzi A, Morrone MC. Neural mechanisms for timing visual events are spatially selective in real-world coordinates. Nat Neurosci 10: 423–425, 2007. doi: 10.1038/nn1874.

- Chen A, Gu Y, Liu S, DeAngelis GC, Angelaki DE. Evidence for a causal contribution of macaque vestibular, but not intraparietal, cortex to heading perception. J Neurosci 36: 3789–3798, 2016. doi: 10.1523/JNEUROSCI.2485-15.2016.

- Chen X, DeAngelis GC, Angelaki DE. Diverse spatial reference frames of vestibular signals in parietal cortex. Neuron 80: 1310–1321, 2013a. doi: 10.1016/j.neuron.2013.09.006.

- Chen X, DeAngelis GC, Angelaki DE. Eye-centered representation of optic flow tuning in the ventral intraparietal area. J Neurosci 33: 18574–18582, 2013b. doi: 10.1523/JNEUROSCI.2837-13.2013.

- Chen X, DeAngelis GC, Angelaki DE. Eye-centered visual receptive fields in the ventral intraparietal area. J Neurophysiol 112: 353–361, 2014. doi: 10.1152/jn.00057.2014.

- Cohen YE, Andersen RA. A common reference frame for movement plans in the posterior parietal cortex. Nat Rev Neurosci 3: 553–562, 2002. doi: 10.1038/nrn873.

- Crane BT. Direction specific biases in human visual and vestibular heading perception. PLoS One 7: e51383, 2012a. doi: 10.1371/journal.pone.0051383.

- Crane BT. Roll aftereffects: influence of tilt and inter-stimulus interval. Exp Brain Res 223: 89–98, 2012b. doi: 10.1007/s00221-012-3243-0.

- Crane BT. Human visual and vestibular heading perception in the vertical planes. J Assoc Res Otolaryngol 15: 87–102, 2014. doi: 10.1007/s10162-013-0423-y.

- Crane BT. Coordinates of human visual and inertial heading perception. PLoS One 10: e0135539, 2015. doi: 10.1371/journal.pone.0135539.

- Cui QN, Razavi B, O’Neill WE, Paige GD. Perception of auditory, visual, and egocentric spatial alignment adapts differently to changes in eye position. J Neurophysiol 103: 1020–1035, 2010. doi: 10.1152/jn.00500.2009.

- Cuturi LF, MacNeilage PR. Systematic biases in human heading estimation. PLoS One 8: e56862, 2013. (Erratum. PLoS One 8: 10.1371/annotation/38466452-ba14-4357-b640-5582550bb3dd, 2013). doi: 10.1371/journal.pone.0056862.

- de Winkel KN, Katliar M, Bülthoff HH. Forced fusion in multisensory heading estimation. PLoS One 10: e0127104, 2015. doi: 10.1371/journal.pone.0127104.

- de Winkel KN, Katliar M, Bülthoff HH. Causal inference in multisensory heading estimation. PLoS One 12: e0169676, 2017. doi: 10.1371/journal.pone.0169676.

- de Winkel KN, Weesie J, Werkhoven PJ, Groen EL. Integration of visual and inertial cues in perceived heading of self-motion. J Vis 10: 1, 2010. doi: 10.1167/10.12.1.

- Fan RH, Liu S, DeAngelis GC, Angelaki DE. Heading tuning in macaque area V6. J Neurosci 35: 16303–16314, 2015. doi: 10.1523/JNEUROSCI.2903-15.2015.

- Fetsch CR, DeAngelis GC, Angelaki DE. Bridging the gap between theories of sensory cue integration and the physiology of multisensory neurons. Nat Rev Neurosci 14: 429–442, 2013. doi: 10.1038/nrn3503.

- Fetsch CR, Pouget A, DeAngelis GC, Angelaki DE. Neural correlates of reliability-based cue weighting during multisensory integration. Nat Neurosci 15: 146–154, 2011. doi: 10.1038/nn.2983.

- Fetsch CR, Turner AH, DeAngelis GC, Angelaki DE. Dynamic reweighting of visual and vestibular cues during self-motion perception. J Neurosci 29: 15601–15612, 2009. doi: 10.1523/JNEUROSCI.2574-09.2009.

- Fetsch CR, Wang S, Gu Y, DeAngelis GC, Angelaki DE. Spatial reference frames of visual, vestibular, and multimodal heading signals in the dorsal subdivision of the medial superior temporal area. J Neurosci 27: 700–712, 2007. doi: 10.1523/JNEUROSCI.3553-06.2007.

- Gibson JJ. The Perception of the Visual World. Boston, MA: Houghton Mifflin, 1950, p. 235.

- Grabherr L, Nicoucar K, Mast FW, Merfeld DM. Vestibular thresholds for yaw rotation about an earth-vertical axis as a function of frequency. Exp Brain Res 186: 677–681, 2008. doi: 10.1007/s00221-008-1350-8.

- Groh JM, Sparks DL. Saccades to somatosensory targets. III. Eye-position-dependent somatosensory activity in primate superior colliculus. J Neurophysiol 75: 439–453, 1996.

- Gu Y, Angelaki DE, DeAngelis GC. Neural correlates of multisensory cue integration in macaque MSTd. Nat Neurosci 11: 1201–1210, 2008. doi: 10.1038/nn.2191.

- Gu Y, DeAngelis GC, Angelaki DE. A functional link between area MSTd and heading perception based on vestibular signals. Nat Neurosci 10: 1038–1047, 2007. doi: 10.1038/nn1935.

- Gu Y, DeAngelis GC, Angelaki DE. Causal links between dorsal medial superior temporal area neurons and multisensory heading perception. J Neurosci 32: 2299–2313, 2012. doi: 10.1523/JNEUROSCI.5154-11.2012.

- Gu Y, Fetsch CR, Adeyemo B, DeAngelis GC, Angelaki DE. Decoding of MSTd population activity accounts for variations in the precision of heading perception. Neuron 66: 596–609, 2010. doi: 10.1016/j.neuron.2010.04.026.

- Gu Y, Watkins PV, Angelaki DE, DeAngelis GC. Visual and nonvisual contributions to three-dimensional heading selectivity in the medial superior temporal area. J Neurosci 26: 73–85, 2006. doi: 10.1523/JNEUROSCI.2356-05.2006.

- Guedry FE Jr. Psychophysics of vestibular sensation. In: Handbook of Sensory Physiology, edited by Kornhuber HH. New York: Springer, 1974, p. 3–154.

- Karmali F, Lim K, Merfeld DM. Visual and vestibular perceptual thresholds each demonstrate better precision at specific frequencies and also exhibit optimal integration. J Neurophysiol 111: 2393–2403, 2014. doi: 10.1152/jn.00332.2013.

- Knill DC, Saunders JA. Do humans optimally integrate stereo and texture information for judgments of surface slant? Vision Res 43: 2539–2558, 2003. doi: 10.1016/S0042-6989(03)00458-9.

- Kolster H, Peeters R, Orban GA. The retinotopic organization of the human middle temporal area MT/V5 and its cortical neighbors. J Neurosci 30: 9801–9820, 2010. doi: 10.1523/JNEUROSCI.2069-10.2010.

- Körding KP, Beierholm U, Ma WJ, Quartz S, Tenenbaum JB, Shams L. Causal inference in multisensory perception. PLoS One 2: e943, 2007. doi: 10.1371/journal.pone.0000943.

- Land MF. Predictable eye-head coordination during driving. Nature 359: 318–320, 1992. doi: 10.1038/359318a0.

- Landy MS, Maloney LT, Johnston EB, Young M. Measurement and modeling of depth cue combination: in defense of weak fusion. Vision Res 35: 389–412, 1995. doi: 10.1016/0042-6989(94)00176-M.

- Lee B, Pesaran B, Andersen RA. Area MSTd neurons encode visual stimuli in eye coordinates during fixation and pursuit. J Neurophysiol 105: 60–68, 2011. doi: 10.1152/jn.00495.2009.

- MacNeilage PR, Banks MS, DeAngelis GC, Angelaki DE. Vestibular heading discrimination and sensitivity to linear acceleration in head and world coordinates. J Neurosci 30: 9084–9094, 2010. doi: 10.1523/JNEUROSCI.1304-10.2010.

- Melcher D. Visual stability. Philos Trans R Soc Lond B Biol Sci 366: 468–475, 2011. doi: 10.1098/rstb.2010.0277.

- Meredith MA, Stein BE. Spatial determinants of multisensory integration in cat superior colliculus neurons. J Neurophysiol 75: 1843–1857, 1996.

- Odegaard B, Wozny DR, Shams L. Biases in visual, auditory, and audiovisual perception of space. PLoS Comput Biol 11: e1004649, 2015. doi: 10.1371/journal.pcbi.1004649.

- Ohmi M. Egocentric perception through interaction among many sensory systems. Brain Res Cogn Brain Res 5: 87–96, 1996. doi: 10.1016/S0926-6410(96)00044-4.

- Oruç I, Maloney LT, Landy MS. Weighted linear cue combination with possibly correlated error. Vision Res 43: 2451–2468, 2003. doi: 10.1016/S0042-6989(03)00435-8.

- Page WK, Duffy CJ. Heading representation in MST: sensory interactions and population encoding. J Neurophysiol 89: 1994–2013, 2003. doi: 10.1152/jn.00493.2002.

- Perry CJ, Amarasooriya P, Fallah M. An eye in the palm of your hand: alterations in visual processing near the hand, a mini-review. Front Comput Neurosci 10: 37, 2016. doi: 10.3389/fncom.2016.00037.

- Razavi B, O’Neill WE, Paige GD. Auditory spatial perception dynamically realigns with changing eye position. J Neurosci 27: 10249–10258, 2007. doi: 10.1523/JNEUROSCI.0938-07.2007.

- Readinger WO, Chatziastros A, Cunningham DW, Bülthoff HH. Driving effects of retinal flow properties associated with eccentric gaze (Abstract). Perception 30: 109, 2001.

- Roditi RE, Crane BT. Directional asymmetries and age effects in human self-motion perception. J Assoc Res Otolaryngol 13: 381–401, 2012a. doi: 10.1007/s10162-012-0318-3.

- Roditi RE, Crane BT. Suprathreshold asymmetries in human motion perception. Exp Brain Res 219: 369–379, 2012b. doi: 10.1007/s00221-012-3099-3.

- Rosas P, Wagemans J, Ernst MO, Wichmann FA. Texture and haptic cues in slant discrimination: reliability-based cue weighting without statistically optimal cue combination. J Opt Soc Am A Opt Image Sci Vis 22: 801–809, 2005. doi: 10.1364/JOSAA.22.000801.

- Sato Y, Toyoizumi T, Aihara K. Bayesian inference explains perception of unity and ventriloquism aftereffect: identification of common sources of audiovisual stimuli. Neural Comput 19: 3335–3355, 2007. doi: 10.1162/neco.2007.19.12.3335.

- Takahashi K, Gu Y, May PJ, Newlands SD, DeAngelis GC, Angelaki DE. Multimodal coding of three-dimensional rotation and translation in area MSTd: comparison of visual and vestibular selectivity. J Neurosci 27: 9742–9756, 2007. doi: 10.1523/JNEUROSCI.0817-07.2007.

- Telford L, Howard IP, Ohmi M. Heading judgments during active and passive self-motion. Exp Brain Res 104: 502–510, 1995. doi: 10.1007/BF00231984.

- Tian JR, Crane BT, Demer JL. Human surge linear vestibulo-ocular reflex during tertiary gaze viewing. Ann NY Acad Sci 1039: 489–493, 2005. doi: 10.1196/annals.1325.051.

- Valko Y, Lewis RF, Priesol AJ, Merfeld DM. Vestibular labyrinth contributions to human whole-body motion discrimination. J Neurosci 32: 13537–13542, 2012. doi: 10.1523/JNEUROSCI.2157-12.2012.

- Wann JP, Swapp DK. Why you should look where you are going. Nat Neurosci 3: 647–648, 2000. doi: 10.1038/76602.

- Warren WH Jr, Hannon DJ. Direction of self-motion perceived from optical flow. Nature 336: 162–163, 1988. doi: 10.1038/336162a0.

- Wilkie RM, Wann JP. Eye-movements aid the control of locomotion. J Vis 3: 677–684, 2003. doi: 10.1167/3.11.3.

- Wozny DR, Beierholm UR, Shams L. Probability matching as a computational strategy used in perception. PLoS Comput Biol 6: e1000871, 2010. doi: 10.1371/journal.pcbi.1000871.

- Wurtz RH. Neuronal mechanisms of visual stability. Vision Res 48: 2070–2089, 2008. doi: 10.1016/j.visres.2008.03.021.

- Wurtz RH, Joiner WM, Berman RA. Neuronal mechanisms for visual stability: progress and problems. Philos Trans R Soc Lond B Biol Sci 366: 492–503, 2011. doi: 10.1098/rstb.2010.0186.

- Yu X, Hou H, Spillmann L, Gu Y. Causal evidence of motion signals in macaque middle temporal area weighted-pooled for global heading perception. Cereb Cortex, 2017. doi: 10.1093/cercor/bhw402.