Abstract

Many biomedical and clinical studies with time-to-event outcomes involve competing risks data. These data are frequently subject to interval censoring. This means that the failure time is not precisely observed, but is only known to lie between two observation times such as clinical visits in a cohort study. Not taking into account the interval censoring may result in biased estimation of the cause-specific cumulative incidence function, an important quantity in the competing risk framework, used for evaluating interventions in populations, for studying the prognosis of various diseases and for prediction and implementation science purposes. In this work we consider the class of semiparametric generalized odds-rate transformation models in the context of sieve maximum likelihood estimation based on B-splines. This large class of models includes both the proportional odds and the proportional subdistribution hazard models (i.e., the Fine-Gray model) as special cases. The estimator for the regression parameter is shown to be semiparametrically efficient and asymptotically normal. Simulation studies suggest that the method performs well even with small sample sizes. As an illustration we use the proposed method to analyze data from HIV-infected individuals obtained from a large cohort study in sub-Saharan Africa. We also provide the R function ciregic that implements the proposed method and present an illustrative example.

Keywords: Competing risks, Cumulative incidence function, Interval censoring, Semiparametric efficiency, R function

1. Introduction

Many biomedical studies with time-to-event outcomes involve competing risk data. The basic identifiable quantities from such data, are the cause-specific hazard and the cause-specific cumulative incidence function. The latter quantity plays a key role in the evaluation of interventions on populations, in studying the prognosis of various diseases, and in prediction. Prediction in turn, is especially useful in quality of life studies and for general implementation science purposes. Frequently, competing risks data are subject to interval censoring, that is, the times to the occurrence of an event are only known to lie between two observation times. An important example of interval-censored competing risk data, and the motivation for the present paper, comes from studies in HIV care and treatment programs. Individuals living with HIV who receive care in these programs may die while in care or become lost to care. Patients not retained in care are less likely to receive antiviral treatment (ART) and, frequently, any other clinical services. They are thus more likely to experience adverse clinical outcomes including death and viral rebound. The resulting increased infectivity of these patients may in addition significantly contribute to further expansion of the HIV epidemic in their communities. Identifying factors associated with mortality while in care and loss to care is useful for optimizing interventions, especially in sub-Saharan Africa, the region most affected by the HIV epidemic and the locus of the present study.

In many cases in this context, when a death has occurred, the corresponding death date is known to be between the date the death was reported and the last clinic visit of the deceased patient. Also, since loss to care is defined as no clinic visits for a certain period (e.g., three months from the last observed clinic visit or for the maximum period that a patient may have adequate medication supplies), the actual date of disengagement from care (being alive but not receiving anti-HIV care) is unknown. Consequently, studies in this context involve interval-censored competing risk data where death and loss to care are competing events. This research was motivated from studies in this setting and the fact that the issue of interval censoring in this context has, to our knowledge, been largely ignored in the literature.

Both nonparametric and fully parametric approaches have been proposed to estimate the cause-specific cumulative incidence function with interval-censored competing risk data. For example, Hudgens et al. [1] proposed a nonparametric maximum likelihood estimator for the cause-specific cumulative incidence under interval censoring. More recently, a parametric estimation method for the cause-specific cumulative incidence under a Gompertz distribution assumption was developed by Hudgens et al. [2]. These methods however do not consider covariates. To incorporate covariate information into a regression setting, fully parametric likelihood methods for the cause-specific cumulative incidence can be modified to deal with interval-censored competing risk data. For example, Jeong and Fine [3] proposed a parametric maximum likelihood approach along with a generalized odds-rate transformation [4] to model the cause-specific cumulative incidence under the Gompertz distribution. On the other hand, Cheng [5] proposed a three-parameter modified logistic model, while Shi et al. [6] considered a parametric method for explicitly modeling the cause-specific cumulative incidence of the cause of interest based on the generalized odds-rate transformation [4] and the modified three-parameter logistic function proposed by Cheng [5].

A key limitation of the fully parametric methods is that they make strong distributional assumptions about the true cause-specific cumulative incidence functions. Additionally, using more flexible three-parameter distributions may be challenging computationally and may lead to non-convergence problems as in univariate survival analysis, as cautioned by Lambert et al. [7] for example. A general approach to overcome the limitations of the fully parametric methods is to consider a semiparametric model. This approach was used successfully by Zhang et al. [8] for the Cox model with interval-censored (univariate) survival data. The authors used a sieve maximum likelihood estimation methodology [9] along with monotone B-splines to approximate the baseline hazard of the Cox proportional hazards model. Recently, Li [10] used the estimation approach by Zhang et al. [8] to the problem of semiparametric regression of the cumulative incidence function with interval-censored competing risk data under the proportional subdistribution hazards or the Fine-Gray model [11]. However, the assumption of proportional subdistribution hazards may not be correct and also the resulting parameter estimates are somewhat difficult to interpret as reported by Fine [12]. As we show in the illustration of our proposed methodology, using a proportional subdistribution hazard model for both competing risks did not provide a good fit to the data in our motivating HIV study compared to other models which better fit these data. Consequently, considering a more general class of semiparametric models for the cumulative incidence function, which also includes models which are easily interpretable by practitioners, such as, for example, the proportional odds model [12, 13], has important practical implications.

The main goal of this paper is to extend the B-spline sieve maximum likelihood estimator for interval-censored data used by [8] and [10], to the class of semiparametric generalized odds rate transformation models for the cause-specific cumulative incidence function and evaluate its asymptotic and finite sample properties. The large class of the generalized odds rate transformation models includes both the proportional odds model and the proportional subdistribution hazards model (i.e., the Fine-Gray model) [11] as special cases. Additionally, we explicitly incorporate the boundedness constraint of the cumulative incidence functions during the likelihood maximization. This is not the case with many existing methods for right-censored competing risk data that analyze each cause of failure separately and which can produce cumulative incidence estimates having a sum, over all possible causes of failure, which is greater than 1. For example, in the illustration presented by Choi and Huang [14], the analysis based on the Fine-Gray model [11] produced cumulative incidence estimates that added to 1.29. This may indicate a model misspecification and may adversely influence the validity of any predictions based on these estimates. The boundedness constraint was not considered by Li [10]. Finally, we develop and illustrate the R function ciregicthat easily implements the proposed estimation methodology for general interval-censored competing risks data.

We show that the B-spline sieve maximum likelihood estimator for the regression (Euclidean) parameter is shown to be semiparametrically efficient and asymptotically normal. We also demonstrate that the estimator for the functional parameter (baseline cause-specific cumulative incidence functions) converges at the optimal rate for nonparametric regression, which is nevertheless slower than the usual parametric rate. Simulation studies suggest that the method performs well even with small sample sizes. We illustrate our methods by considering a number of candidate models like the proportional subdistribution and proportional odds model in the analysis of death and loss to care in the context of data from HIV care and treatment programs. Finally, we conclude this manuscript with a discussion, while examples on how to use the R function ciregiccreated to fit these models and asymptotic theory proofs are reserved for the Appendix.

2. Data and method

We assume the existence of a finite number k of competing risks. The cause of failure is denoted by C, the actual failure time is denoted by T, whereas (U1, …, Um) denote the m ∈ (0, ∞) distinct observation times. Observation times could be clinical visits, laboratory tests and all other occasions where the sampling unit (e.g., a patient) is actually observed. The number of observation times m may differ from subject to subject. To facilitate our further discussion let V ∈ {0, U1, …, Um} correspond to the last observation time prior to the failure and U ∈ {U1, …, Um, ∞} the first observation time after the failure. Using this notation a left-censored observation will correspond to (V, U) = (0, U1), while a right-censored observation to (V, U) = (Um, ∞). For j = 1, …, k, if a subject fails from the j-th cause of failure before the first observation time U1 (i.e. it is left-censored), we observe . If he/she fails between V > U1 and U ≤ Um, we observe δj = 1. But if he/she is right-censored (i.e. T > Um), then we observe . We assume the observation interval to be [a, b], that is a = U1 < Um = b. Along with the C, V, U, and , we also observe a vector of covariates Z ∈ ℝd, whose effects are the primary parameters of interest. We further assume the following two fundamental conditions:

-

A1

The observation times (U1, …, Um) are independent of (T, C) conditional on Z.

-

A2

The distribution of (U1, …, Um) does not contain the parameters that govern the distribution of (T, C) (non-informative interval censoring).

The cause-specific cumulative incidence function is defined as

and corresponds to the cumulative probability of failure from the j-th cause of failure in the presence of the remaining causes of failure, conditional on the covariates. Given the two assumptions above, the likelihood function in terms of the cause-specific cumulative incidence functions is

| (1) |

where is a parameter that includes, in our case, regression coefficients for the effect of the covariate vector Z on each cause-specific cumulative incidence function and unspecified functions of time. A natural choice for the model for Fj is the class of semiparametric transformation models [11, 3, 15]:

| (2) |

where gj is a known increasing cause-specific link function and ϕj is an unspecified, strictly increasing and invertible function of time. A special subset of the class of semiparametric transformation models is the class of the generalized odds rate transformation models [4, 16, 12, 3]:

for j = 1, …, k. The proportional odds model and the proportional subdistribution hazards model [11] are special cases of the generalized odds rate transformation with αj = 1 and αj = 0, respectively [3]. Note that the link functions for different causes of failure are allowed to be different. All authors considering general classes of models assume that the true link functions are known [16, 17, 12, 18] and we adopt this assumption in our present work as well. This is because estimating α is not always possible because not all models with unknown α are identifiable from the data as in the non-competing-risk setting [19]. Even when there is no non-identifiability issues, reliable estimation of α requires very large sample sizes [19, 18].

As in [8] and [10], we avoid imposing parametric assumptions on ϕj in (2) and thus the likelihood involves k infinite-dimensional or functional parameters. In general, maximization of the likelihood function with an infinite-dimensional parameter θ ∈ Θ over Θ may lead to inconsistent maximum likelihood estimates [9]. One approach to overcome this problem is to use sieve maximum likelihood estimation. A sieve [9] is a sequence {Θn}n≥1 of parameter spaces that approximate in a certain sense the original parameter space Θ, with the approximation error tending to zero as n → ∞. A sieve maximum likelihood estimate is the estimate obtained by maximizing the likelihood function over Θn. Another practical advantage of using the sieve maximum likelihood approach is that it reduces the dimensionality of the optimization problem, and, thus, the computational burden, compared to a fully semiparametric likelihood approach [8]. This is because the dimension of Θn is significantly smaller (i.e., it involves fewer number of parameters to be estimated) compared to that of the full parameter space Θ in finite samples. The computational advantage of the sieve maximum likelihood approach compared to a fully semiparametric maximum likelihood approach for interval-censored survival data has been shown in the simulation study by Zhang et al. [8]. In our case, the sequence of approximating functional parameter spaces is chosen to be spaces of monotone (due to the monotonicity of the cumulative incidence function) B-spline functions as defined in [8], i.e.,

for j = 1, …, k, where Nj and mj are the number of internal knots and the order of the B-spline for the j-th cause of failure, γj are the unknown control points and t ∈[a, b], with a and b being the minimum and maximum observation times in the study. The number of internal knots Nj increases with sample size and is selected to satisfy Nj ≈ nν such that max1≤t≤Nj+1 (ct, j − ct−1, j) = O(n−ν), where ct, j is the place of the t-th knot for the j-th cause-specific cumulative incidence function. During the likelihood maximization we impose the monotonicity constraints

for every s = 1, …, Nj + pj and j = 1, …, k. Additionally, the constraint max

| (3) |

is needed to ensure that the sum of the estimated cumulative incidence functions at the maximum follow-up time b is bounded above by 1, where the maximum is over all the observed covariate patterns.

Let the true parameter values be denoted by , where and ϕ0 = (ϕ1,0, …, ϕk,0)′ and the corresponding estimator by , where and ϕ̂n = (ϕ̂1,n, …, ϕ̂k,n)′. Also, define the L2-metric for the distance between two parameters θ1 = (β(1)′, ϕ(1)′)′ and θ2 = (β(2)′, ϕ(2)′)′ as

where

and ||·|| denotes the Euclidean norm. Here we assume that each unspecified function ϕ0, j(t), j = 1, …, k, is smooth in the sense that it has a bounded p-th derivative in [a, b] for p ≥ 1 and that the first derivative of ϕ0, j(t) is strictly positive and continuous on [a, b], along with some other technical regularity conditions that are listed in the Appendix. Our regularity conditions are similar to those used in [8]. Given assumptions A1, A2 and regularity conditions C1–C6, it can be shown that

if the number of internal knots Nj = O(nν) for all j = 1, …, k, where ν satisfies 1/[2(1 + p)]< ν < 1/(2p) (more details on the selection of the number of internal knots are presented in subsection 2.1). Therefore, the B-spline-based sieve maximum likelihood estimator is consistent. Additionally,

This implies that the convergence rate of the estimator for the functional parameters is slower that the usual rate. This estimator achieves the optimal convergence rate for nonparametric regression estimators which is n−p/(1+2p) when one chooses ν = 1/(1 + 2p) [8]. Finally,

which implies that the convergence rate of the estimator for the Euclidean parameter is . This also points to the efficiency of this estimator as the corresponding variance matrix attains the semiparametric efficiency bound I(β0). The definition of the information matrix I(β0) is provided in the Appendix. Since finding I(β0) involves solving an integral equation with no explicit solution, estimation of I(β0) by I(β̂n) is not straightforward [8, 10]. Consequently, one could either use the approach by Zhang et al. [8] and Li [10] for standard error estimation or rely on the computationally simpler nonparametric bootstrap method. The validity of the bootstrap for the Euclidean parameter estimates in general semiparametric M-estimation problems has been verified by Cheng and Huang [20]. The above mentioned asymptotic properties are proved in the Appendix.

2.1. Practical implementation of the method

The proposed methodology can be easily implemented using our R function ciregic. An example on how to use this function is provided in the Appendix. In this subsection we discuss the three steps that are crucial for applying our methodology in practice. The first and third steps are automatically implemented using our ciregicfunction.

The first step to apply the methodology is to consider the number and the placement of the knots for the B-splines. As it was mentioned previously, to achieve the optimal nonparametric convergence rate for the functional parameters, one has to select the knots based on the smoothness of the underlying unspecified parameters ϕ0, j(t), j = 1, …, k, as measured by the order p of the derivatives of these parameters that are bounded. However, this degree of smoothness will be unknown in practice. In this case, one can impose the weakest possible assumption that only the first derivative is bounded, i.e. p = 1, and proceed with setting ν = 1/(1 + 2p) = 1/3. Under this choice, the number of internal knots Nj has the order of n1/3 and, therefore, Nj can be set equal to [n1/3] which corresponds to the largest integer that is smaller than or equal to n1/3. The price, however, for imposing the weakest possible assumption regarding the smoothness of the functional parameters is that the convergence rate of the estimator for the baseline cause-specific cumulative incidence function is n1/3. This is the slowest achievable rate for this estimator using the B-spline-based sieve maximum likelihood approach. Based on our simulation study presented in the next Section, the performance of this approach is quite satisfactory even with small sample sizes. It has to be noted that this minimal assumption (i.e., p = 1) has been imposed in our R function ciregic. The placement of knots for the B-splines is an easier task since, due to the monotonicity constraints, these splines have better behavior compared to unconstrained B-splines and are less sensitive to the placement of the knots. Consequently, placing the knots at the quantiles of the empirical distribution of the observation times provides a reasonable and simple approach to knot placement.

Next, selecting the link function parameters is needed in cases where the true link functions are unknown a priori (as it is usually the case in practice). In this case, we propose, following [18], performing a grid search over a plausible combination of α1, …, αk values and selecting the combination with the largest maximized likelihood value. We must acknowledge however, that the additional uncertainty due to this type of model selection procedure is very difficult to estimate and this is still an open problem in the literature [19, 18]. For this reason we assumed in all theoretical derivations that the true link functions are a priori known.

The final step is to obtain the parameter estimates of the model by maximizing the likelihood function (1) through a constrained optimization algorithm. The monotonicity constraints are linear inequality constraints and they can be easily incorporated in the optimization by using for example the logarithmic barrier method. However, incorporating the boundedness costraint (3) is more challenging as this is a nonlinear inequality constraint. For the special case with k = 2 and α1 = α2 = 1, i.e. with two competing risks and logit link functions for both risks, the boundedness constraint is equivalent to a linear inequality constraint. In this special case the optimization can be performed using a constrained optimization package such as the R package constrOptim, which can handle linear inequality constraints through the logarithmic barrier method. If there are more than 2 competing risks or one needs to fit a wide variety of models under different combinations of α values, then an optimization algorithm that incorporates nonlinear inequality constraints is needed. In this case, the R package alabamaprovides a useful set of functions for optimization under both linear and nonlinear inequality constraints. Our ciregicfunction automatically imposes the monotonicity and boundedness constraints using the alabamapackage.

3. Simulation study

In order to evaluate the finite sample performance of our method, we performed a series of simulation experiments. We considered two causes of failure and two covariates, one binary (Z1) and one continuous (Z2), in the simulations. The covariates Z = (Z1, Z2)′ were generated from the Bernoulli distribution with probability of success 0.4 and from the standard normal distribution, respectively. The cumulative incidence for causes 1 and 2 were considered to have a proportional odds form:

where exp [ϕ1(t)] = 0.4 [1 − exp(−0.6t)]/0.6 and exp [ϕ2(t)] = 0.75 [1 − exp(−0.5t)]/0.5 following a Gompertz distribution [3]. The true values for the regression parameters were β1 = (β11, β12)′ = (0.5, −0.3) and β2 = (β21, β22)′ = (−0.5, 0.3).

Based on these models, we simulated the failure times and causes of failure. The first observation time U1i (e.g. clinic visit) was simulated based on the exponential distribution with a hazard parameter equal to 3. Each one of the next observations were placed after time V from the previous observation, with V being generated based on the exponential distribution with a hazard parameter equal to 3, for a maximum period of 3 years. This choice led to an average time of four months between two consecutive observations. The number of simulated data sets was 1,000, with the sample size per data set ranging between 200, 350 and 500. The proposed methodology was applied in each data set using cubic B-splines, and the number of knots was set equal to the larger integer up to and including N1/3, with N being the total number of distinct time points Vi and Ui for the non-right-censored observations, plus the number of right-censored observations. The knots were placed at the corresponding percentiles of the distribution of the observed times (Vi,Ui). The nonparametric bootstrap method was used for standard error estimation of the parameter of interest β, based on 50 bootstrap samples. More specifically, for each simulated dataset, 50 random samples of size n were drawn with replacement from the dataset, and then the model was fitted to each of these samples. The standard deviation of the corresponding 50 regression parameter estimates was the estimated standard error of β̂n.

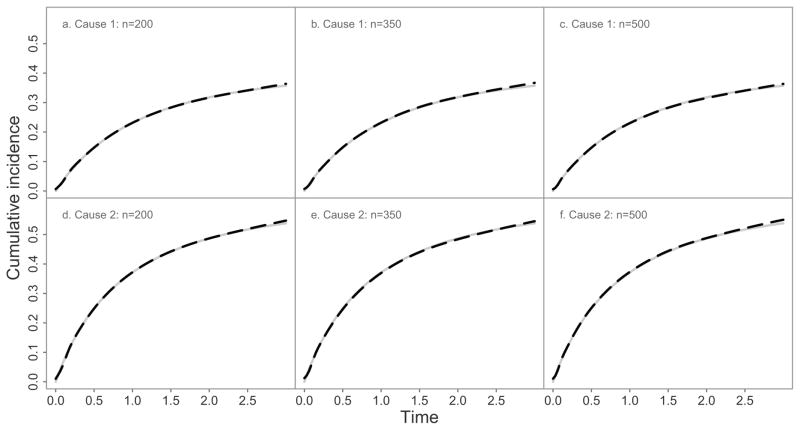

Simulation results regarding the baseline cumulative incidence functions are presented in Figure 1. Even with a sample size of 200, the average of the estimated baseline cumulative incidence functions were in close agreement with the corresponding true quantities. Regarding the regression parameter (Table 1), the method performs well, with the bias being very small and the empirical coverage probability staying close to the nominal 95% level in all cases.

Figure 1.

True (grey lines) and average estimated (black dashed lines) baseline cumulative incidence functions based on 1,000 simulation replications according to cause of failure and sample size n.

Table 1.

Simulation results regarding the Euclidean parameter β = (β11, β12, β21, β22)′ based on 1,000 replications. Bootstrap based on 50 bootstrap samples was used for standard error estimation.

| n | β11 | β12 | β21 | β22 | |

|---|---|---|---|---|---|

| 200 | % bias | −0.311 | 3.239 | 2.631 | 2.950 |

| MCSD | 0.281 | 0.134 | 0.279 | 0.134 | |

| ASE | 0.275 | 0.133 | 0.273 | 0.133 | |

| ECP | 0.943 | 0.945 | 0.938 | 0.947 | |

| 350 | % bias | 0.464 | −0.037 | 2.626 | 0.085 |

| MCSD | 0.196 | 0.095 | 0.191 | 0.095 | |

| ASE | 0.203 | 0.098 | 0.204 | 0.098 | |

| ECP | 0.952 | 0.941 | 0.955 | 0.941 | |

| 500 | % bias | −1.403 | 0.640 | −0.137 | 0.622 |

| MCSD | 0.169 | 0.082 | 0.171 | 0.082 | |

| ASE | 0.169 | 0.081 | 0.168 | 0.081 | |

| ECP | 0.945 | 0.940 | 0.946 | 0.938 |

MCSD: Monte Carlo standard deviation of the estimates;

ASE: Average standard error of the estimates;

ECP: Empirical coverage probability

4. Analysis of the HIV data

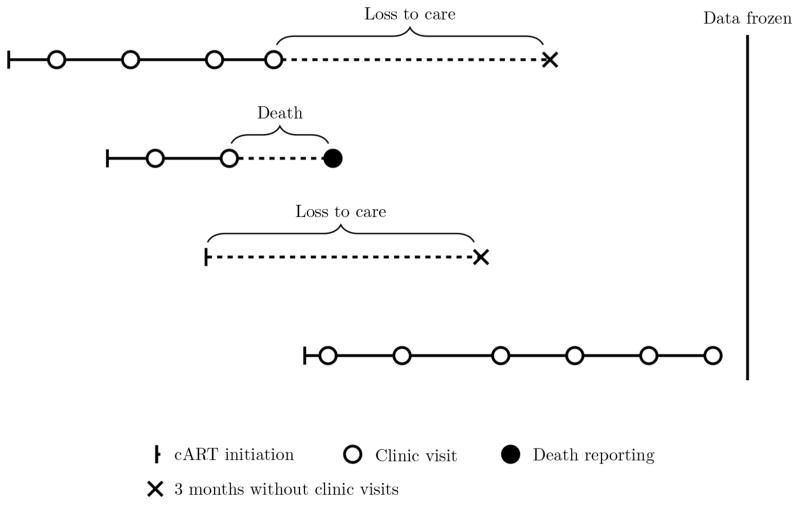

The methodology is applied to the evaluation of risk factors for the cause-specific cumulative incidence of being lost to care and of death while in care. Loss to care is a measure of patient non-retention in care, and thus is an extremely important measure for the success of any intervention program for the HIV/AIDS epidemic, since patients who have disengaged from HIV care do not receive antiretroviral treatment (ART) and thus have increased HIV-related mortality and morbidity, are more infectious and contribute to further expanding the HIV epidemic. The data came from the East Africa IeDEA (International Epidemiologic Databases to Evaluate AIDS) Regional Consortium, a network of HIV care and treatment programs in Kenya, Uganda and Tanzania. The working definition of loss to care was having no clinic visits for a 3-month period. This cutoff was chosen by the clinicians because typically patients on ART are expected to have run out of medication if they have not had a clinic visit within a 3-month period. A schema of typical patient temporal trajectories is presented in Figure 2. Based on this figure it is clear that neither loss to care nor death times are exactly known due to interval censoring. In the current analysis we included patients with a CD4 count of at least 100 cells/μl at ART initiation. Basic descriptive characteristics of the study sample are presented in Table 2.

Figure 2.

Example of four patients in a typical HIV care and treatment program.

Table 2.

Descriptive characteristics of the study sample.

| In HIV care (n=2,232) n (%) |

LTC1 (n=690) n (%) |

Death2 (n=131) n (%) |

|

|---|---|---|---|

| Gender | |||

| Male | 681 (30.5) | 207 (30.0) | 62 (47.3) |

| Female | 1,551 (69.5) | 483 (70.0) | 69 (52.7) |

| HIV status disclosed | |||

| No | 763 (34.2) | 279 (40.4) | 57 (43.5) |

| Yes | 1,469 (65.8) | 411 (59.6) | 74 (56.5) |

| Median (IQR) | Median (IQR) | 40.9 (35.0, 50.8) | |

|

| |||

| Age3 (years) | 37.1 (30.4, 44.9) | 35.2 (28.6, 41.7) | |

| CD43 (cells/μl) | 199 (152, 276) | 188 (140, 262) | 163 (131, 213) |

Loss to care

While in HIV care

At ART initiation

Similarly to the simulation study, we applied the proposed methodology using cubic B-splines and with the number of knots being equal to the larger integer up to and including N1/3, with N being the total number of distinct time points Vi and Ui for the non-right-censored patients plus the number of right-censored patients. The knots were placed at the corresponding percentiles of the distribution of the observed times (Vi,Ui). The nonparametric bootstrap method was used for standard error estimation of the parameter of interest, based on 50 bootstrap samples, as described in the simulation study Section. The analysis was performed using our R function ciregicthat is illustrated in the Appendix.

In order to perform the analysis we needed to select the α = (α1, α2) parameter indexing the link functions for loss to care and death while in care. To do so we performed a grid search over all possible combinations of α1 ∈ {0, 0.2, …, 10} and α2 ∈ {0, 0.2, …, 10}. The maximum log-likelihood value (−3144.47) was achieved at the point α = (2, 1.2). The maximized log-likelihood value for the popular Fine-Gray proportional subdistribution hazards model for both competing risks (FGFG), i.e., α = (0, 0), was −3147.05. Consequently, the fit of the FGFG model is clearly not optimal. In order to illustrate our method for the Fine-Gray model and the proporitonal odds model, which are the most popular choices in practice, we also fitted a model which assumes a proportional subdistribution hazards model for loss to care and a proportional odds model for death (FGPO model). In this case α was set equal to (0, 1). The maximized log-likelihood for the FGPO model was −3146.91. The results of the analysis based on the FGPO and the optimal model are presented in Table 3.

Table 3.

Comparison between the results from the Fine-Gray – proportional odds model (FGPO) with α = (0, 1) and the optimal based on the grid search model with α = (2, 1.2)

| Outcome | Covariate |

α = (0, 1) mRR1/β̂(p-value) |

α = (2, 1.2) β̂ (p-value) |

|---|---|---|---|

| A. Loss to care | Age at ART initiation | ||

| per 10 years | 0.7832/−0.244 (<0.001) | −0.348 (<0.001) | |

| Gender | |||

| Male vs Female | 1.1702/0.157 (0.031) | 0.273 (0.005) | |

| CD4 at ART initiation | |||

| per 100 cells/μl | 1.0022/0.002 (0.947) | 0.001 (0.976) | |

| B. Death | Age at ART initiation | ||

| per 10 years | 1.3603/0.308 (<0.001) | 0.329 (<0.001) | |

| Gender | |||

| Male vs Female | 1.7583/0.564 (0.008) | 0.567 (0.008) | |

| CD4 at ART initiation | |||

| per 100 cells/μl | 0.6993/−0.358 (0.003) | −0.346 (0.006) |

Measure of relative risk

Subdistribution hazard ratio

Odds ratio

Based on Table 3, lower age at ART initiation and male gender are associated with an increased cumulative incidence of loss to care. Specifically, the estimate associated with age implies that patients older by 10 years have 21.7% lower subdistribution hazard [11] of being lost to care (p-value<0.001). In addition, males have 17.0% higher subdistribution hazard of being lost to care compared to females (p-value=0.031). Also, there is no evidence for an association between CD4 count at ART initiation and the cumulative incidence of loss to care (p-value=0.947). Older age, male gender and lower CD4 count at ART initiation are strongly associated with an increased odds of death while in care. It is clear that the interpretability of the estimated regression parameter for death while in care as odds ratios is very desirable, especially for non-statistically inclined investigators. We also compared the results from the FGPO model with those from the optimal model based on the grid search with α = (2, 1.2). The estimated regression coefficients are presented in Table 3 as well. The results from the two analyses are qualitatively similar. However, the regression coefficient estimates for gender and age differ substantially between the two models for the loss to care outcome. As a comparison, we also performed a naïve analysis based on the midpoint imputation approach, under the optimal model with α = (2, 1.2). Using this approach, the unknown failure times are replaced by the midpoint between the two observation times that surround the true failure time, and then the data are analyzed as usual right-censored data. The estimate for the effect of CD4 on loss to care, based on the naïve analysis, was 10.9 times larger (0.011 versus 0.001) compared to the estimate from the proposed approach, although this effect was still not statistically significant. Additionally, the estimate of the effect of age on death was attenuated by 8.3% based on the naïve analysis compared to the estimate from the proposed method. However, this effect was still statistically significant. The difference between the rest estimates from the two approaches was less than 3.4%.

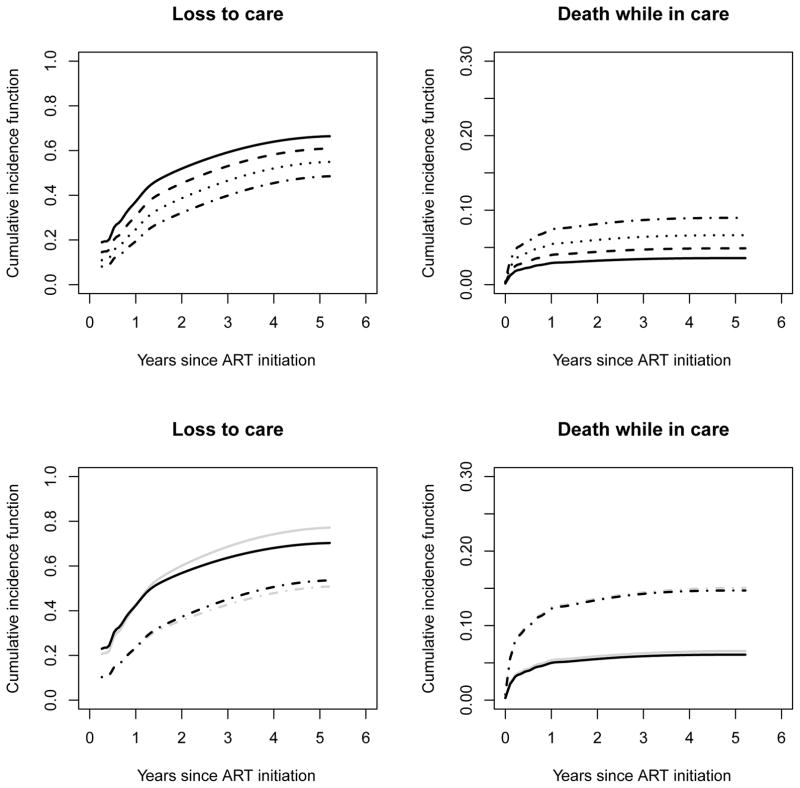

It is straightforward to derive predicted cause-specific cumulative incidence functions for specific patient subgroups. Figure 3 presents results of these quantities, based on the estimates from the optimal model, for a female patient with CD4 count at ART initiation of 120 cells/μl, by age at ART initiation. Such predictions are useful for evaluating program effectiveness and can also be used as inputs to mathematical models of cost-effectiveness analyses to identify the best strategy to optimize care in our setting. The comparison between the predictions from the optimal model and the FGPO model are presented in the bottom row of Figure 3. For the loss to care outcome, the predicted cumulative incidences for a 40-year old male patient with a CD4 count at ART initiation of 120 cells/μl from the two models differ substantially. More specifically, the difference between the two age groups is smaller after the first year based on the optimal model compared to the FGPO model. This provides some evidence for the violation of the proportional subdistribution hazards assumption of the Fine-Gray model. However, when considering the death outcome, the predicted cumulative incidences from the two models are quite close, and this is because the difference between the link function parameters in the two models is somewhat small (i.e., α2 = 1.2 for the optimal model and α2 = 1 for the FGPO model).

Figure 3.

Estimated cumulative incidence of loss to care (top left panel) and of death (top right panel) for a female patient with a CD4 count of 120 cells/μl at ART initiation, according to age at ART initiation (solid lines = 20 years; dashed lines = 30 years; dotted lines = 40 years; dashed-dotted lines = 50 years) based on the optimal model according to the grid search, with α = (2, 1.2), and estimated cumulative incidence of loss to care (bottom left panel) and of death (bottom right panel) for a male patient with a CD4 count of 120 cells/μl at ART initiation, according to age (solid lines = 20 years; dashed-dotted lines = 50 years), based on the optimal model (black lines) and the Fine-Gray – proportional odds model (gray lines) with α = (0, 1).

5. Discussion

In this paper we extended the work by Li [10] on semiparametric analysis of the proportional subdistribution hazards or the Fine-Gray model [11] for cumulative incidence incidence estimation with interval-censored competing risk data, to the class of the semiparametric generalized odds rate transformation models. This general class includes the proportional odds model [3, 6] and the proportional subdistribution hazards model as special cases. This extension is very important for medical and epidemiological applications when the proportional subdistribution hazards model does not provide good fit to the data, as was the case with our HIV data analysis. Unlike [10], we also explicitly incorporate the boundedness of the cause-specific cumulative incidence function constraint into the optimization, and we also provide and illustrate an R function called ciregic, that easily implements the proposed method.

We have showed that the B-spline sieve maximum likelihood estimator for the regression (Euclidean) parameter, under the class of the generalized odd rate transformation models is consistent, semiparametrically efficient and asymptotically normal. It was also shown that the estimator for the functional parameter (baseline cumulative incidence functions) is consistent in an L2-metric and converges at the optimal rate for nonparametric regression which is nevertheless slower than the usual parametric rate. This methodology is also applicable to the case of current status data [21, 22] since these data are special cases of interval-censored data [8]. It is straightforward to show that our results also hold for the more general class of semiparametric transformation models.

Throughout this paper we assumed that the true link functions are known as in [16, 17, 12, 18]. A practical issue regarding the application of the proposed method in practice is when this assumption is not true. To this end, and simiarly to Mao and Wang [18], we proposed performing a grid search over a plausible set of combinations of (α1, …, αk) and choosing the combination with the largest maximized likelihood value. This approach for selecting the proper link function parameter values was illustrated in an analysis of data from a large network of HIV care and treatment programs in sub-Saharan Africa. The issue of the additional variability due to this type of model selection is still an open problem and requires further investigation.

Our approach simultaneously estimates the models for all causes of failure in contrast to many semiparametric methods for right-censored competing risk data. Analysis of all the competing causes of failure is essential for a more complete understanding of the underlying competing risks process. However, this comes at the cost of specifying a model for every cause of failure and can increase the risk of model misspecification, even though our method is very flexible in terms of the baseline cumulative incidence functions and the link functions.

In general, fully parametric methods can be straightforwardly extended to the case of interval censoring, and have the advantage of being efficient. However, the validity of these methods relies on strong assumptions for each cause of failure and this limits their generality. One solution is to adopt more flexible 3-parameter distributions as in [5] and [6]. However, while these distributions are clearly less flexible than the B-spline approximations used in this paper, they may also be plagued by non-convergence issues such as in univariate survival analysis [7]. By contrast, when using our proposed methods, we did not face non-convergence problems in either in the simulations or the analysis of the HIV data. A disadvantage of our method, compared to parametric approaches, is that it does not provide estimates of the long term proportions for each cause of failure. Even though this is possible with parametric approaches as well [23, 3, 6, 2], care is needed when making extrapolations beyond the observable time interval as mentioned by Jeong and Fine [3].

In this paper, we did not consider the asymptotic distribution of the estimated baseline and covariate-specific cumulative incidence functions as the slower rate of convergence of this estimator make this task considerably difficult. However, evaluating the uncertainty of model-based predictions of the cumulative incidence is very useful from a clinical and implementation science perspective. Therefore, it would be useful to study the asymptotic properties of the estimated baseline and covariate-specific cumulative incidence functions and propose a method for the calculation of confidence intervals and simultaneous confidence bands. Additionally, the issue of quantifying the uncertainty due to the link function selection is another interesting and challenging issue for future research.

Acknowledgments

The authors would like to thank the Associate Editor and the two anonymous reviewers for their constructive feedback. Research reported in this publication was supported by the National Institute Of Allergy And Infectious Diseases (NIAID), Eunice Kennedy Shriver National Institute Of Child Health & Human Development (NICHD), National Institute On Drug Abuse (NIDA), National Cancer Institute (NCI), and the National Institute of Mental Health (NIMH), in accordance with the regulatory requirements of the National Institutes of Health under Award Number U01AI069911 East Africa IeDEA Consortium. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. This research has also been supported by the National Institutes of Health grant R01-AI102710 “Statistical Designs and Methods for Double-Sampling for HIV/AIDS” and by the President’s Emergency Plan for AIDS Relief (PEPFAR) through USAID under the terms of Cooperative Agreement No. AID-623-A-12-0001 it is made possible through joint support of the United States Agency for International Development (USAID). The contents of this journal article are the sole responsibility of AMPATH and do not necessarily reflect the views of USAID or the United States Government.

Appendix

The R function ciregic

The R function ciregic, which can be found in the online material of this manuscript, implements the methods proposed in this work. This function depends on the package alabama that performs optimization with both linear and nonlinear inequality constraints. Therefore, prior installation of this package is required in order to use the ciregic function. The first step is to install/define the ciregic function, which can be done by either running the script ciregic.R provided in the online material of this manuscript, or by storing it in the working folder and then using the command

source(“ciregic.R”)

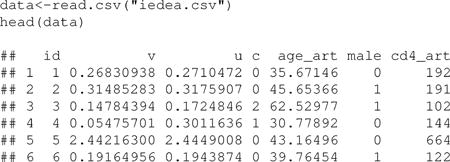

In this illustration we use the HIV dataset that was analyzed in this manuscript. We load and list the first records of these data using the commands

The variables v and u are the lower and upper observation times V and U, respectively. These times correspond to years since ART initiation. The variable c is the cause of failure, where the loss to care event is coded as c=1, the death event as c=2, and the right-censoring as c=0. Note that the u values for the right-censored observations (i.e., c=0) are not included in any calculation. This is because in theory, U = ∞ for right-censored observations. Therefore, these times have been previously replaced with the lower observation times v plus 1 day as

data$u<-(data$v+1/365.25)*(data$c==0)+data$u*(data$c>0)

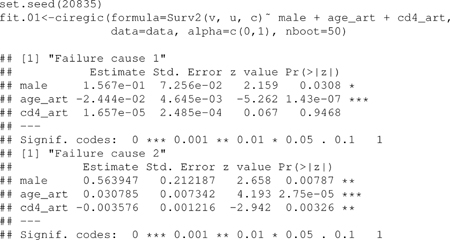

Performing the analysis that was presented in the HIV data example section based on the Fine-Gray model for loss to care and the proportional odds model for death, which correspond to α = (0, 1), requires the commands

In order to achieve reproducibility of the standard error estimates, as they are based on the bootstrap, we need to manually set a random seed as presented above. The first argument in the Surv2 function is the lower observation time V, the second is the upper observation time U, and the third is the cause of failure variable. The last variable should include 0 for right-censored observations, and 1 and 2 for causes of failure 1 and 2, respectively. Currently, we allow for only two competing risks, but we plan to extend this to three or more in the near future. The output presented above corresponds to the first column of Table 3. Note that in that table, the effects of age and CD4 count have been multiplied by 10 and 100, respectively, for interpretation purposes. The object fit.01 is a list that contains an output of the analysis in the first component, the regression parameter estimates and the corresponding variance-covariance matrix in the second and third components respectively, and the value of the maximized log-likelihood in the eighth component. Indication of successful convergence of the optimization algorithm is stored in the ninth component of fit.01. The components 4 to 7, include the estimated baseline cumulative incidence functions and some other elements that are useful for estimating the covariate- specific cumulative incidence function using our pred.cif function. The estimation with 50 bootstrap replications and a dataset of 3,053 observations required 11.65 minutes. We plan to optimize the computational efficiency of our function by utilizing multiple cores, and to upload it in CRAN as a package. Estimation of the optimal model for this analysis with α = (2, 1.2) can be performed using the command

set.seed(20835)

fit.opt<-ciregic(formula=Surv2(v, u, c)∼ male + age_art + cd4_art,

data=data, alpha=c(2,1.2), nboot=50)

Incorporation of an interaction effect between gender and age at ART initiation could be simply incoprorated as

fit.opt<-ciregic(formula=Surv2(v, u, c)∼ male*age_art + cd4_art,

data=data, alpha=c(2,1.2), nboot=50)

Estimation of the covariate-specific cumulative incidence function can be easily performed using the pred.cif function. The first argument in this function is the object containing the results from the model fit generated by the ciregic function, and the second the desired covariate pattern as a vector. For example, in order to estimate the cumulative incidence function of loss to care and death for a 20-year old female with a CD4 count of 120 cells/μl at ART initiation, we need the command

f20.01<-pred.cif(fit.01, covp=c(0,20,120)) head(f20.01) ## t cif1 cif2 ## 1 0.00000000 4.996004e-15 0.001595043 ## 2 0.05265523 7.882583e-15 0.007162271 ## 3 0.10531046 5.606626e-14 0.012532213 ## 4 0.15796569 1.477572e-07 0.015031502 ## 5 0.21062092 4.201278e-02 0.017972113 ## 6 0.26327615 1.896563e-01 0.019366670

The option covp should include the desired covariate values, in the same order as the listing order of the corresponding covariates in the right side of the formula argument in ciregic function. As in any other prediction problem, these covariate values should not be outside the observable range of covariate values in order to avoid extrapolation. The function pred.cif returns a data frame that includes times and the estimated cumulative incidence functions for both failure causes and for the desired covariate pattern. Plotting the object f20.01 in the example above (e.g., with the plot function) will provide the estimated cumulative incidences that are depicted in the second row of Figure 3 as solid grey lines.

Proof of consistency

Empirical process theory techniques will be applied to derive the consistency of the B-spline sieve maximum likelihood estimator. As usual P f = ∫𝒳 f (x)d P(x) and , which is the empirical process indexed by the function f evaluated at , the sample point of individual i, with 𝒳 denoting the sample space. Also, let C denote a general constant which may differ in different settings. The following regularity conditions are assumed

-

C1

E(Z Z′) is non-singular and Z is bounded, i.e. there exists a z0 > 0 such that P(||Z|| ≤ z0) = 1.

-

C2

βj ∈ ℬj, where ℬj is a compact subset of ℝd for every j = 1, …, k.

-

C3

There exists an η > 0 such that P(U − V ≥ η) = 1 and the union of the supports of V and U are contained in [a, b], where 0 < a < b < ∞ and .

-

C4

ϕ0, j ∈ Φ, where Φ is a class of functions with bounded p-th derivative in [a, b] for p ≥ 1 and the first derivative of ϕ0, j is strictly positive and continuous on [a, b]. This holds for all j = 1, …, k.

-

C5

The conditional density of (V,U) given Z has bounded partial derivatives with respect to (v, u) and the bounds of these derivatives do not depend on (v, u, z).

-

C6

For some κ ∈ (0, 1), a′Var(Z|V)a ≥ κa′E(ZZ′|V)a a.s. and a′Var(Z|U)a ≥ κ a′E(ZZ′|U)a a.s. for all α ∈ ℝd.

Let ℱ1 = {l(θ; X): θ ∈ Θn} denote the class of log-likelihood functions indexed by the parameter space . The Euclidean parameters are βj ∈ ℬj and the functional parameters are ϕj ∈ℳn, j, for j = 1, …, k. The functional parameter space ℳn, j ≡ ℳn(γj, Nn, j, pj) is the space of monotone B-spline functions defined on the observation time interval [a, b]. Convergence will be proved in the L2-metric

for θ1 = (β(1)′, ϕ(1)′)′ and θ2 = (β(2)′, ϕ(2)′)′, where

Let 𝕄(θ) = Pl(θ; X) and 𝕄n(θ) = ℙnl(θ; X), which leads to 𝕄n(θ) − 𝕄(θ) = (ℙn − P)l(θ; X) for each θ ∈ Θn. In order to prove that we need to verify the following conditions:

supθ: d(θ, θ0)≥ε 𝕄(θ) < 𝕄(θ0)

The sequence of estimators θ̂n satisfy 𝕄n(θ̂n) ≥ 𝕄n(θ0) − op(1)

It has been shown that there exists a set of brackets {[ ]: i = 1, …, (1/ε)Cqn, j}, where C is a constant and qn, j = Nn, j + p j + 1, with Nn, j and p j being the number of internal knots and the degree of the B-spline for the j-th cause of failure, such that and for any t ∈ [a, b] and i = 1, …, (1/ε)Cqn, j [9]. By condition C2, ℬj ⊂ ℝd is compact and thus it can be covered by C(1/ε)d ε-balls, i.e. for any β ∈ ℬj there exists an s = 1, …,C(1/ε)d such that β ∈ B(βs, j) ≡ {b ∈ ℬj: |b − βs, j | ≤ ε}. By condition C1 it follows that for any β ∈ ℬj and any z, . Let . Then, for any j = 1, …, k we can construct a set of brackets{[ ]: i = 1, …, (1/ε) Cqn, j; s = 1, …,C(1/ε)d }, with and , such that and for any t ∈ [a, b] and z ∈ Z. So, for any cause of failure j = 1, …, k it is straightforward to construct a set of brackets {[ ]: i = 1, …, (1/ε)Cqn, j; s = 1, …,C(1/ε)d } with:

and

such that there exists an s = 1, …,C(1/ε)d and an i = 1, …, (1/ε)Cqn, j such that for any t ∈ [a, b] and z ∈ Z. Using Taylor approximation along with conditions C1, C2 the constraint and the fact that αj < ∞ for all j = 1, …, k, we can show that and the bracketing number with the L1(ℙn)-norm is bounded by C(1/ε)d+Cqn, j, for any j = 1, …, k, s = 1, …,C(1/ε)d and i = 1, …, (1/ε)Cqn, j . Consequently, we can construct a set of brackets {[ ]: ij = 1, …, (1/ε)Cqn, j, sj = 1, …, Cj (1/ε)ν}, where k is the fixed and finite number of competing risks, with

where , δj = I{V<X≤U,δ=j}, and

such that for any j = 1, …, k there exists an i j = 1, …, (1/ε)Cqn, j, sj = 1, …,Cj (1/ε)ν so that for any sample point X ∈ 𝒳. Using Taylor approximation and regularity conditions C1–C3 it can be shown that , for any j, i j and s j . Additionally, the bracketing number N[](Cε,ℱ1, L1(ℙn)) is bounded by . Since qn, j = nrj + m, with r j ∈ (0, 1), it follows that log N[](Cε,ℱ1, L1(ℙn)) = o(n), for any ε > 0. Also, due to condition C3 there exists an envelope F for the class of likelihood functions ℱ1 such that P*F < ∞ and log N(ε,ℱ1, L1(ℙn)) ≤ log N[](2ε,ℱ1, L1(ℙn)) [8]. Consequently, ℱ1 is Glivenko-Cantelli by Theorem 2.4.3 of [24]. Consequently

Next we are going to show that condition 2., i.e. supθ: d(θ, θ0)≥ε 𝕄(θ) < 𝕄(θ0), holds. By definition we have:

where, as in [8], m(x) = x log x − x + 1 ≥ (1/4)(x − 1)2 for x ∈ [0, 5], and thus for any θ in a sufficiently small neighbourhood of θ0 we have

where the last equality follows from a Taylor expansion around the value of the linear predictor ηj (X). Using the same arguments as in [25], pp. 2126–2127, along with regularity conditions C1–C6 it follows that

Consequently

and we have proven condition 2.

Finally, we prove condition 3, i.e. that the sequence of estimators θ̂n satisfy 𝕄n(θ̂n) ≥ 𝕄n(θ0) − op(1). It has been shown that for ϕ0, j ∈ Φ there exists a θ0,n, j ∈ℳn, j of order m ≥ p + 2 such that [26]. This also implies that [8]. Of course the previous result holds for any j = 1, …, k. Letting θ0,n = (β0, ϕ0,n) it follows that

| (4) |

Similar to [8], we consider the class of functions ℱ2 = {l(β0, ϕ; X) − l(β0, ϕ0; X): ϕj ∈ℳj,n, ||ϕj − ϕ0, j||Φ ≤ Cn−pν for any j = 1, …, k} and construct a set of ε-brackets with L2(P))-norm bounded by . Consequently, the corresponding bracketing integral

because the integral above is bounded by 1 [27] and thus by theorem 19.5 of [28] ℱ2 is P-Donsker. Using the fact that ||ϕj − ϕ0, j||Φ ≤ Cn−pν in ℱ2 and the boundedness of l(·; X), along with the dominated convergence theorem we can show that limn→∞ P[l(β0, ϕ; X) − l(β0, ϕ0; X)]2 = 0. Using the fact that for a P-Donsker class of functions there exists a semimetric ρp that makes that class totally bounded and that is asymptotically equicontinuous by corollary 2.3.12 of [24] it follows that:

Next, for the remaining term in (4) we can again use the boundedness of the loglikelihood function for any sample point X ∈ 𝒳 and that ||ϕ0,n, j − ϕ0, j||Φ = O(n−pν), along with the dominated convergence theorem it follows that

as n → ∞. Thus

and this proves the final consistency condition. Consequently

Proof of rate of convergence

Derivation of the rate of convergence will be based on theorem 3.2.5 of [24]. We showed in the proof of consistency that

and that

where I1,n = (ℙn − P)[l(β0, ϕ0,n; X) − l(β0, ϕ0; X)] and I2,n = P[l(β0, ϕ0,n; X) − l(β0, ϕ0; X)]. Applying a Taylor expansion leads to

for any 0 < ε < 1/2 − pν. The uniform boundedness of l̇2(β0, ϕ̃; X) due to the conditions C1–C4 and the fact that ||ϕ0,n − ϕ0||∞ = O(n−pν) [26] lead to

Since ℱ2 is P-Donsker and by corollary 2.3.12 of [24] we have that

Consequently,

Since the function m(x) = x log x − x + 1 is bounded by ≤ (x − 1)2 in a neighbourhood of x = 1, it follows that

and thus

Consequently,

Defining the class of functions ℱ3(η) = {l(θ; X) − l(θ0; X): ϕj ∈ℳn, j and d(θ, θ0) ≤ η} and using similar arguments as in the proofs of consistency, we have that

and thus

Now, using the uniform boundedness of l(θ; X) as a result of conditions C1 and C3, and theorem 3.4.1 of [24], the function ϕn(η) of theorem 3.2.5 [24] is

Also, we have that

Consequently, if pν ≤ (1 − ν)/2 then n2pνϕn(1/npν) ≤ n1/2. Based on this and in accordance with [8] we conclude that if we choose rn = min(pν, (1 − ν)/2), then and . Therefore

Proof of asymptotic normality

In order to prove the asymptotic normality of the estimator for Eucidean parameter β̂n we need to verify that the information matrix I (β0) is nonsingular and also the conditions of theorem 3 of [8]. These conditions are

-

B1

ℙnl̇1(β̂n, β̂n; X) = op(n−1/2) and ℙnl̇2(β̂n, ϕ̂n; X)(ξ 0) = op(n−1/2)

-

B2

(ℙn − P)[l*(β̂n, ϕ̂n; X) − l*(β0, ϕ0; X)] = op(n−1/2)

-

B3

P[l*(β̂n, ϕ̂n; X) − l*(β0, ϕ0; X)] = −I (β0)(β̂n − β0) + op(||β̂n − β0||) + op(n−1/2)

Information for β

At first we will show that the information matrix I (β0) is nonsingular. Generically, the likelihood for an individual observation is

where

We will carry out the calculations for the proportional subdistribution hazards model (i.e. αj = 0). The proof for any other combination of link functions with 0 ≤ αj < ∞, follows from similar arguments and calculations. The likelihood for an individual observation, under αj = 0 for all j = 1, …, k is:

where Λj = exp(ϕj) is the cumulative subdistribution hazard for the j-th cause of failure [11]. The score function for βj (j = 1, …, k) is

Let

The score operator for Λj (j = 1, …, k) is

Let

Then,

and

Next, for any j = 1, …, k, we need to find

and

The random variables here are ( , δj, δ,U, V). Assume U, V the same for all j = 1, …, k and that condition C3 holds. Since the event is equivalent to Tj < V and Tj ≤ Tl for any l = 1, …, k we have that

where g(v, u|z) if the density of (V,U) conditinal on Z = z. Similarly, since the event δj = 1 is equivalent to Tj ∈ (V,U) and Tj ≤ Tl for any l = 1, …, k we have that

Finally, we have that

where t = min(tj: j = 1, …, k). We also have that,

Now

Similarly,

Now,

Let,

Then,

Let,

Then,

Denote,

and

We need to find h1, …, hk s.t.

or equivalently

We have that

Let,

Then,

for tj ≤ tl, for any l = 1, …, k. Similarly let

Then,

for tj ≤ tl, for any l = 1, …, k. Therefore

where

and

Now, as in [29] by lemma 6.1 [29], the fact that K(tj, x) is a bounded kernel and Fredholm integral equations results, there exists a 0(tj, x) such that

Thus, similarly to [29] (pp. 159), is differentiable with a bounded derivative.

Verification of conditions B1–B3

For condition B1 we need to show that

since ℙnl̇1(β̂n, ϕ̂n; X) = 0. ξ0 has a bounded derivative and it is also a function with bounded variation [8]. Define Sn(Dn, Kn,m) to be the space of polynomial spline functions s of order m ≥ 1, with the restriction of s to any of the Kn subinterval being a polynomial of order m ≤ Kn and for m ≥ 2 and 0 ≤ m′ ≤ m − 2, s is m′ times continuously differentiable on [a, b]. Then it can be shown using the argument in [30] pp. 435–436 that there exists a ξ0,n ∈ Sn(Dn, Kn, m) such that

and ℙnl̇2(θ̂n; X)(ξ0,n) = 0. Consequently,

where

and

Let ℱ4 = {l̇2(θ; x)(ξ0 − ξ): θ ∈ℳn, ξ ∈ Sn(Dn, Kn,m) and ||ξ0 − ξ||Φ ≤ n−ν }. Similarly, it can be shown that

and this leads to ℱ4 being Donsker. Also, for any r ∈ ℱ4, Pr2 → 0 as n → ∞ and thus I3,n = op(n−1/2) by corollary 2.3.12 of [24]. Finally, based on the Cauchy-Schwartz inequality and regularity conditions C1–C4 we have that

and thus we proved condition B1.

For condition B2 define the class of functions

Using similar arguments as before, we can show that ℱ5(η) is P-Donsker and that for any r ∈ ℱ5, Pr2 → 0 as η → ∞ and therefore condition B2 holds. Finally, it is a straightforward task to show condition B3 based on Taylor expansion and the convergence rate of the estimator. Thus

References

- 1.Hudgens MG, Satten GA, Longini IM. Nonparametric maximum likelihood estimation for competing risks survival data subject to interval censoring and truncation. Biometrics. 2001;57:74–80. doi: 10.1111/j.0006-341x.2001.00074.x. [DOI] [PubMed] [Google Scholar]

- 2.Hudgens MG, Li C, Fine JP. Parametric likelihood inference for interval censored competing risk data. Biometrics. 2014;70:1–9. doi: 10.1111/biom.12109. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Jeong JH, Fine JP. Parametric regression on cumulative incidence function. Biostatistics. 2007;8:184–196. doi: 10.1093/biostatistics/kxj040. [DOI] [PubMed] [Google Scholar]

- 4.Dabrowska DM, Doksum KA. Estimation and testing in a two-sample generalized odds-rate model. Journal of the American Statistical Association. 1988;83:744–749. [Google Scholar]

- 5.Cheng Y. Modeling cumulative incidences of dementia and dementia-free death using a novel three-parameter logistic function. The International Journal of Biostatistics. 2009;5:1–17. [Google Scholar]

- 6.Shi H, Cheng Y, Jeong JH. Constrained parametric model for simultaneous inference of two cumulative incidence functions. Biometrical Journal. 2013;55:82–96. doi: 10.1002/bimj.201200011. [DOI] [PubMed] [Google Scholar]

- 7.Lambert PC, Dickman PW, Weston CL, Thompson JR. Estimating the cure fraction in population-based cancer studies by using finite mixture models. Journal of the Royal Statistical Society: Series C Applied Statistics. 2010;59:35–55. [Google Scholar]

- 8.Zhang Y, Hua L, Huang J. A spline-based semiparametric maximum likelihood estimation method for the Cox model with interval-censored data. Scandinavian Journal of Statistics. 2010;37:338–354. [Google Scholar]

- 9.Shen X, Wong WH. Convergence rate of sieve estimates. The Annals of Statistics. 1994;22:580–615. [Google Scholar]

- 10.Li C. The Fine-Gray model under interval censored competing risk data. Journal of Multivariate Analysis. 2016;143:327–344. doi: 10.1016/j.jmva.2015.10.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Fine JP, Gray RJ. A proportional hazards model for the subdistribution of a competing risk. Journal of the American Statistical Association. 1999;94:496–509. [Google Scholar]

- 12.Fine JP. Regression modeling of competing crude failure probabilities. Biostatistics. 2001;2:85–97. doi: 10.1093/biostatistics/2.1.85. [DOI] [PubMed] [Google Scholar]

- 13.Eriksson F, Li J, Scheike T, Zhang M-J. The proportional odds cumulative incidence model for competing risks. Biometrics. 2015;71:687–695. doi: 10.1111/biom.12330. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Choi S, Huang X. Maximum likelihood estimation of semiparametric mixture component models for competing risk data. Biometrics. 2014;70:588–598. doi: 10.1111/biom.12167. [DOI] [PubMed] [Google Scholar]

- 15.Mao L, Lin DY. Efficient estimation of semiparametric transformation models for the cumulative incidence of competing risks. Journal of the Royal Statistical Society: Series B. 2017;79:573–587. doi: 10.1111/rssb.12177. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Scharfstein DO, Tsiatis AA, Gilbert PB. Semiparametric efficient estimation in the generalized odds-rate class of regression models for right-censored time-to-event data. Lifetime Data Analysis. 1998;4:355–391. doi: 10.1023/a:1009634103154. [DOI] [PubMed] [Google Scholar]

- 17.Fine JP. Analysing competing risk data with transformation models. Journal of the Royal Statistical Society B: Statistical Methodology. 1999;61:817–830. [Google Scholar]

- 18.Mao MM, Wang J-L. Semiparametric efficient estimation for a class of generalized proportional odds cure models. Journal of the Americal Statistical Association. 2010;105:302–311. doi: 10.1198/jasa.2009.tm08459. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Zeng D, Yin G, Ibrahim JG. Semiparametric transformation models for survival data with a cure fraction. Journal of the American Statistical Association. 2006;101:670–684. [Google Scholar]

- 20.Cheng G, Huang JZ. Bootstrap consistency for general semiparametric M-estimation. The Annals of Statistics. 2010;38:2884–2915. [Google Scholar]

- 21.Groeneboom P, Maathuis MH, Wellner JA. Current status data with competing risks: Consistency and rates of convergence of the MLE. The Annals of Statistics 2008. 2008a;36:1031–1063. doi: 10.1214/009053607000000983. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Groeneboom P, Maathuis MH, Wellner JA. Current status data with competing risks: Limiting distribution of the MLE. The Annals of Statistics. 2008;36:1064–1089. doi: 10.1214/009053607000000983. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Jeong JH, Fine JP. Direct parametric inference for the cumulative incidence function. Journal of the Royal Statistical Society C: Applied Statistics. 2006;55:187–200. [Google Scholar]

- 24.van der Vaart AW, Wellner JA. Weak convergence and empirical processes with applications to statistics. Springer-Verlag; New York: 1996. [Google Scholar]

- 25.Wellner JA, Zhang Y. Two likelihood-based semiparametric estimation methods for panel count data with covariates. The Annals of Statistics. 2007;35:2106–2142. [Google Scholar]

- 26.Lu M. PhD dissertation. Department of Biostatistics, University of Iowa; 2007. Monotone spline estimations for panel cound data. [Google Scholar]

- 27.Kosorok MR. Introduction to emprirical processes and semiparametric inference. Springer; New York: 2008. [Google Scholar]

- 28.van der Vaart AW. Asymptotic statistics. Cambridge University Press; Cambridge: 2000. [Google Scholar]

- 29.Huang J, Wellner JA. Proceedings of the First Seattle Symposium in Biostatistics. Springer; US: 1997. Interval censored survival data: A review of recent progress; pp. 123–169. [Google Scholar]

- 30.Billingsley P. Probability and measure. Wiley; New York: 1986. [Google Scholar]