Abstract

Empathizing with another’s suffering is important in social interactions. Empathic behavior is selectively elicited from genuine, meaningful pain but not from fake, meaningless scenarios. However, the brain’s screening mechanism of false information from meaningful events and the time course for the screening process remains unclear. Using EEG combined with principle components analysis (PCA) techniques, here we compared temporal neurodynamics between the observation of pain and no-pain pictures as well as between true (painful expressions and needle-penetrated arms) and false (needle-penetrated faces with neutral expressions) pain pictures. The results revealed that pain vs. no-pain information is differentiated in the very early ERP components, i.e., the N1/P1 for the face and arm pictures categories and the VPP/N170 for the facial expression category while the mid-latency ERP components, N2 and P3, played key roles in differentiating true from false situations. The complex of N2 and P3 components may serve as a screening mechanism through which observers allocate their attentions to more important or relevant events and screen out false environmental information. This is the first study to describe and provide a time course of the screening process during pain empathy. These findings shed new light on the understanding of empathic processing.

Introduction

The sight of an elderly woman lying in the road with bruises and a grimace on her face elicits pity which urges you to rush to her and help her up. This scene is an example of the psychological phenomenon known as empathy, which is generally defined as the capacity to perceive and understand the emotions, feelings and inner states of another individual1–3. As a basic building block of human social interaction, empathy is believed to contribute greatly to cooperation, socialization and the development of morality2, 4, 5. Most everyone is affected when seeing others in pain, and as such, painful scenes are the most common and robust trigger for empathy6–8. Prior studies have shown that exposure to another’s pain can activate brain regions involved in the direct experience of pain9–11. Through this automatic matching mechanism, one can quickly react to another’s suffering.

It is well accepted that empathy is followed by prosocial behavior12, 13. In cases where subjective reports are unavailable, prosocial behaviors are treated as an index of empathy14–16. However, one may not react to all pain cues in the same manner (i.e. react prosocially). Pain scenes are pervasive, diverse and habitual sights in daily life7 and prosocial behavior has a cost1, 7, 17, 18. Whether to help, ignore, escape or even experience schadenfreude when seeing another in pain may depend on the estimation of the authenticity of the pain, the meaning to the observer, the relationship between the sufferer and the observer and the physical and social context1, 7. For example, a recent fMRI study revealed that subjects were more willing to help ingroup members than outgroup members19. Other research has shown that perceived fairness20, social stigma21, 22, and the familiarity and closeness between empathizer and empathizee23, 24 have an impact on empathy. Together, these studies suggest a screening process during empathy which checks the emotional surroundings and selects information which is important or meaningful for further processing. To date, surprisingly little attention has been given to the study of how and when this screening process occurs.

There is a considerable literature focusing on the neural mechanisms underpinning empathy, among which event-related potential (ERP) studies provide an outstanding perspective on exploring the temporal dynamic of empathy. Fan and Han used a convinced paradigm in which they required participants to perform either a pain judgment task or a hands counting task while watching pictures or cartoons depicting hands in pain25. They found that visual stimuli induced early and late ERP components that differentiated pain from neutral conditions. The early component, frontally distributed at 140 ms after stimulus presentation, was modulated by the reality of the stimuli, regardless of the task demand, hence corresponding to the affective sharing component25. In contrast, the late component, appearing over the central-parietal areas after 380 ms, was only evident when attending to pain cues (i.e., the pain judgment task), and hence was considered a cognitive evaluation component25. These findings have been confirmed by subsequent research26–30. Based on this research, it can be inferred that the “screening process” likely occurs during the late cognitive evaluation period. However, critical questions remain about this speculation. First, some indirect evidence has shown that one may estimate another’s pain in an automatic fashion. For instance, contextual reality25 and racial bias27, 29, 31 modulate the early automatic ERP component of pain empathy. The idea of an automatic screening mechanism is reasonable since it has great evolutionary benefit for the observer (e.g., alleviating cognitive load). In addition, ERP components, especially the late positive component, are commonly a mixture of many spatially or temporally overlapping neural activities (P3 and LPP subcomponents in the case of the late positivity) that cannot be separated from each other by traditional ERP methods32, 33. Thus, the specific time window during which the screening process occurs remains unclear. Direct evidence through the examination of brain activity during empathy is needed.

The present study used ERP to directly measure brain activity during the experience of empathy. Three different categories of painful pictures were used as empathy-eliciting stimuli: a painful facial expression, a needle-penetrated face and a needle-penetrated arm (Fig. 1). A neutral expression, a face touched with a Q-tip and an arm touched with a Q-tip were used as respective controls (i.e., no-pain scenes). All stimuli has previously been used to induce vicarious pain10, 21, 26, 34, 35. The painful expression and the needle-penetrated arm are more natural stimuli that may be experienced in daily life or in clinical settings, while a needle-penetrated face with a neutral expression is a more artificial stimulus, specifically designed for the laboratory, and likely not encountered in actual life settings. The needle-penetrated face accompanied by a neutral expression is perceived as a no-pain stimulus, and ERPs would not differentiate between the needle-penetrated face and a Q-tip-touched face. The aim of the current study was to explore the empathy screening process through ERP components. We expected that the ERP components would distinguish a painful expression from a neutral expression, a needle-penetrated arm from a Q-tip-touched arm, but would not distinguish between a needle-penetrated face and a Q-tip-touched face with a neutral expression. In addition, a temporospatial two-step principle component analysis (PCA) approach was applied to isolate the ERP components of such a process. Temporospatial PCA is a promising tool to capture variance across electrode sites and time points to separate latent components that may be conflated in traditional trial-averaged waveform measures of ERPs32, 33, 36.

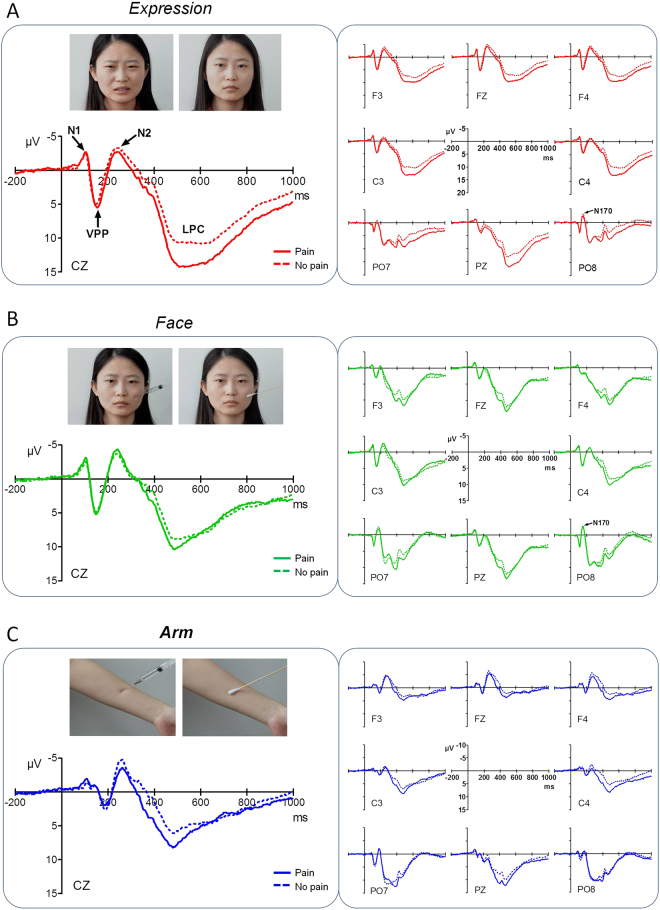

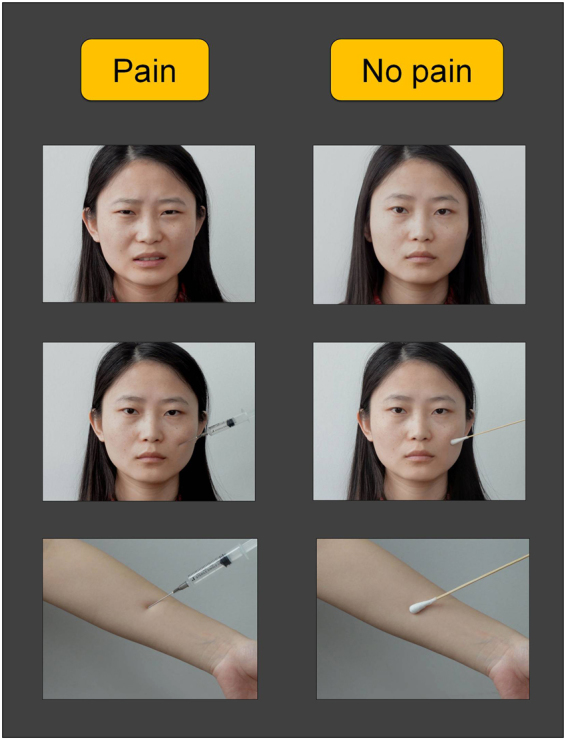

Figure 1.

Experimental design and stimuli. A 2 (condition: pain vs. no-pain) × 3 (stimulus category: facial expressions, face pictures and arm pictures) within-subject factorial design was used. Examples of the visual stimuli are shown here. Top panel: painful expression vs. neutral expression, Middle panel: needle-penetrated face vs. Q-tip-touched face and Bottom panel: needle-penetrated arm vs. Q-tip-touched arm.

Results

ERPs

The grand average ERPs at electrodes of interest (F3, F4, Fz, C3, C4, Cz, Pz, PO7 and PO8) and scalp topographies prior to PCA are illustrated in Figs 2 and 3A. Visual stimuli of the three categories (facial expressions, face pictures and arm pictures) elicited similar ERP components. At frontocentral sites, an N1 component was apparent, followed by a vertex positive potential (VPP), an N2 component, and finally a long lasting late positive complex (LPC). At temporo-occipital sites, posterior P1 and N170 components (see Supplementary Information) were seen.

Figure 2.

Grand average ERPs in response to three stimulus categories under pain and no-pain conditions at frontocentral (F3, F4, Fz, C3, C4 and Cz) and parieto-occipital (Pz, PO7 and PO8) electrode sites. All stimuli elicited similar components. At frontocentral sites, an N1 component is apparent, followed by a VPP component, an N2 component, and a long lasting LPC while at temporo-occipital sites, a posterior P1 component and an N170 component are elicited. Negative amplitudes are plotted upwards. (A) ERPs in response to painful expressions (red solid lines) and neutral expressions (red broken lines). (B) ERPs in response to needle-penetrated faces (green solid lines) and Q-tip-touched faces (green broken lines). (C) ERPs in response to needle-penetrated arms (blue solid lines) and Q-tip-touched arms (blue broken lines).

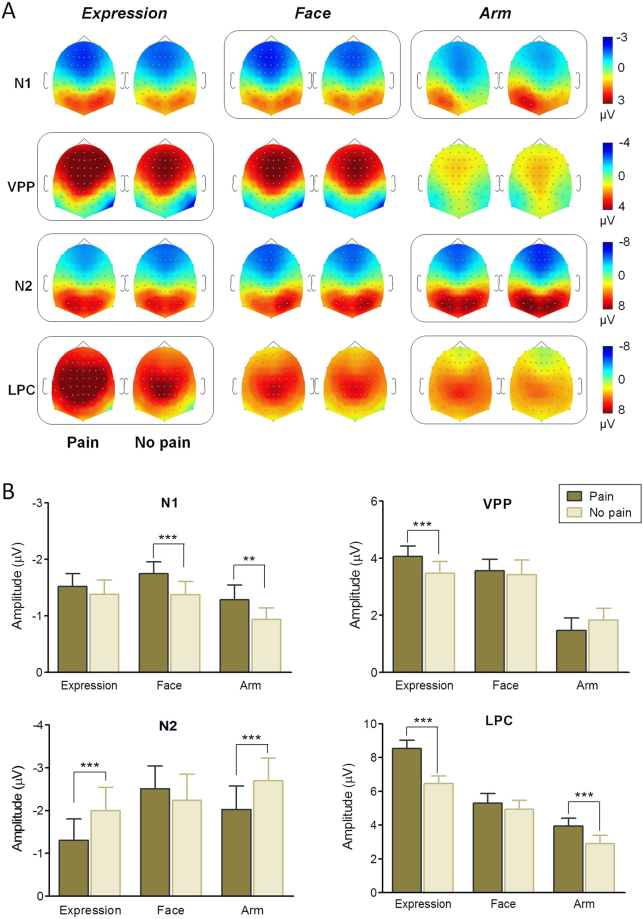

Figure 3.

Topographical maps and statistical results of ERP components. (A) Scalp topographies of N1, VPP, N2, and LPC components (from top to bottom), plotted separately for each experimental condition (from left to right: painful expressions, neutral expressions, needle-penetrated faces, Q-tip-touched faces, needle-penetrated arms and Q-tip-touched arms). Boxed plots indicate a significant difference in ERP amplitude revealed by a Bonferroni-corrected t test. (B) Post hoc comparisons on condition × category interaction effects for N1, VPP, N2, and LPC amplitudes were conducted separately. Asterisks indicate significant differences in amplitude. **P < 0.01; ***P < 0.001. Error bars represent standard errors of the mean.

N1

Painful scenes elicited larger N1 potentials than neutral scenes, and N1 amplitudes were higher in response to face-containing pictures (i.e., facial expressions and face pictures) than to arm pictures (expressions vs. arms, P < 0.001; faces vs. arms, P < 0.001). A 3-way ANOVA on N1 amplitude revealed a significant main effect of condition [F(1, 276) = 56.18, P < 0.001, η P 2 = 0.17], stimulus category [F(2, 552) = 25.70, P < 0.001, η P 2 = 0.09], and laterality [F(2, 276) = 4.34, P = 0.014, η P 2 = 0.03], and a significant interaction between category × laterality [F(4, 552) = 5.13, P < 0.001, η P 2 = 0.04]. Post hoc analysis revealed significant differences between the needle-penetrated face and the Q-tip-touched face (P < 0.001), and between the needle-penetrated arm and the Q-tip-touched arm (P = 0.001; Fig. 3A and B). No differences were observed between painful expressions and neutral expressions (P > 0.999; Fig. 3A and B). Interestingly, when gender was taken into consideration, the early distinction between the needle-penetrated face and the Q-tip-touched face, and between the needle-penetrated arm and the Q-tip-touched arm were only found in females, but not in males, as revealed by the significant gender × condition × category interaction effect [F(1.97, 545.86) = 8.82, P = 0.005, η P 2 = 0.02].

The 3-way ANOVA on N1 latency revealed a significant main effect of stimulus category [F(1.64, 447.30) = 5.29, P = 0.009, η P 2 = 0.02]. Post hoc comparisons indicated that face-containing pictures were detected faster than arm pictures (expression vs. arm, P = 0.041; face vs. arm, P = 0.006). In addition, the condition × category interaction [F(1.99, 547.98) = 4.77, P = 0.009, η P 2 = 0.02] was significant.

VPP

The VPP showed a greater amplitude in the pain condition relative to the no-pain condition. Face-containing pictures produced marked enhancement in the amplitude of the VPP over arm pictures (expression vs. arm, P < 0.001; face vs. arm, P < 0.001). The 3-way ANOVA for the VPP amplitude yielded a significant main effect of condition [F(1, 276) = 22.37, P < 0.001, η P 2 = 0.07] and category [F(1.58, 436.79) = 130.93, P < 0.001, η P 2 = 0.32], and a significant interaction effect of condition × category [F(1.79, 493.27) = 8.62, P < 0.001, η P 2 = 0.03]. Post hoc comparisons revealed a significant difference between painful expressions and neutral expressions (P < 0.001; Fig. 3A and B). No differences were found for the other two (face pictures and arm pictures) stimulus categories (needle-penetrated face vs. Q-tip-touched face, P > 0.999; needle-penetrated arm vs. Q-tip-touched arm, P > 0.999; Fig. 3A and B).

For the VPP latency, the 3-way repeated measures ANOVA revealed a significant main effect of category [F(1.28, 291.63) = 291.63, P < 0.001, η P 2 = 0.51], and a significant condition × category interaction effect [F(1.92, 529.35) = 4.77, P < 0.001, η P 2 = 0.04). Similar to the results for the N1 component, face-containing pictures elicited shorter VPP latencies than arm pictures (expression vs. arm, P < 0.001; face vs. arm, P < 0.001; Fig. 2). In addition, there was a significant difference between painful expressions and neutral expressions (painful expression > neutral expressions, P < 0.001), whereas no differences were found for the other two (face pictures and arm pictures) stimulus categories (needle-penetrated face vs. Q-tip-touched face, P > 0.999; needle-penetrated arm vs. Q-tip-touched arm, P > 0.999).

N2

The N2 component showed a positive shift in the pain condition compared to the no-pain condition. Facial expression pictures elicited a more positive N2 than face pictures and arm pictures (expression vs. face, P < 0.001; expression vs. arm, P < 0.001; Fig. 3). In a 3-way repeated measures ANOVA of N2 amplitude, there were significant main effects of condition [F(1, 276) = 39.93, P < 0.001, η P 2 = 0.13], category [F(1.58, 436.65) = 19.66, P < 0.001, η P 2 = 0.07], and laterality [F(2, 276) = 4.76, P = 0.009, η P 2 = 0.03], and significant interaction effects of condition × category [F(1.98, 545.41) = 28.00, P < 0.001, η P 2 = 0.09], condition × laterality [F(2, 276) = 3.43, P = 0.034, η P 2 = 0.02], category × laterality [F(3.16, 436.65) = 6.07, P < 0.001, η P 2 = 0.04], and condition × category × laterality [F(3.95, 545.41) = 3.10, P = 0.016, η P 2 = 0.02]. Post hoc comparisons indicated that N2 amplitudes were significantly different between painful expressions and neutral expressions (P < 0.001, Fig. 3B), and between needle-penetrated arms and Q-tip-touched arms (P < 0.001, Fig. 3B). No difference in N2 was observed between needle-penetrated faces and Q-tip-touched faces (P = 0.128, Fig. 3B). The pain-related N2 amplitude modulation was significant at midline and right hemisphere electrodes (both Ps < 0.001), but was not significant at left hemisphere electrodes (P = 0.463).

There was a significant main effect of category on N2 latency [F(1.80, 495.49) = 71.34, P < 0.001, η P 2 = 0.21]. The N2 latencies elicited by facial expression pictures were longer than those elicited by face pictures but shorter than those elicited by arm pictures (all Ps < 0.001).

LPC

The 3-way ANOVA of LPC revealed significant main effects of condition [F(1, 276) = 221.43, P < 0.001, η P 2 = 0.45] and category [F(1.94, 535.98) = 497.80, P < 0.001, η P 2 = 0.64], and significant interaction effects of condition × category [F(1.87, 516.39) = 55.74, P < 0.001, η P 2 = 0.17), condition × laterality [F(2, 276) = 5.15, P = 0.006, η P 2 = 0.04] and category × laterality [F(3.88, 535.98) = 3.83, P = 0.005, η P 2 = 0.03]. Post hoc analyses showed that the pain vs. no-pain difference in LPC existed in expression pictures and arm pictures (both Ps < 0.001), but not in face pictures (P = 0.058).

PCA results

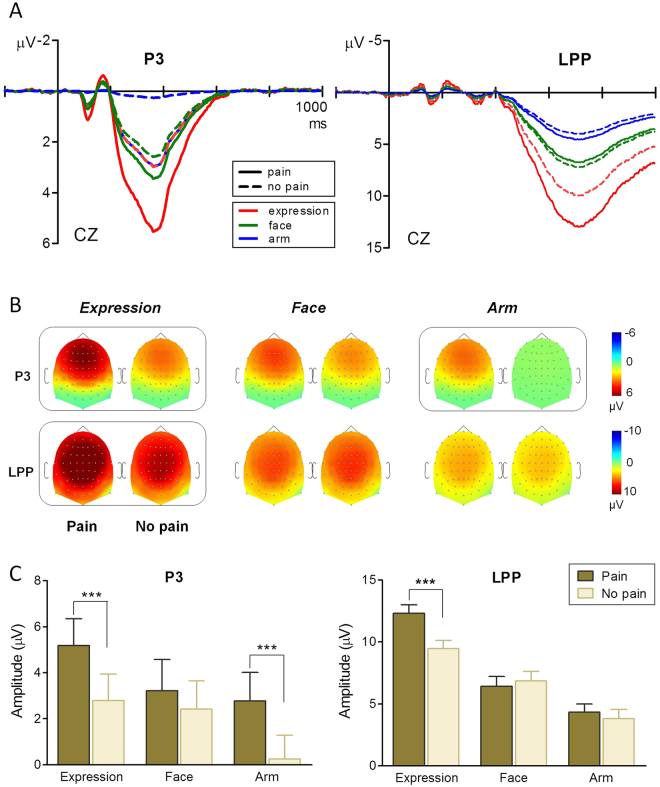

The LPC component was divided into two separate subcomponents by temporospatial PCA: P3 and LPP. The microvolt rescaled factor scores of the PCA-derived P3 and LPP components are presented in Fig. 4A. Two-way ANOVAs were conducted on these components, to better illustrate the empathic process over the long lasting late component (see Fig. 4B and C).

Figure 4.

PCA derived P3 and LPP components. (A) Virtual PCA derived P3 (left) and LPP (right) component waveforms for each experimental condition. (B) Scalp topographies of P3 (upper panel) and LPP (lower panel) components, plotted separately for each experimental condition (from left to right: painful expressions, neutral expressions, needle-penetrated faces, Q-tip-touched faces, needle-penetrated arms and Q-tip-touched arms). Boxed plots indicate a significant difference revealed by a Bonferroni-corrected t test. (C) Post hoc comparisons on condition × category interaction effects for P3 (left) and LPP (right) components. Asterisks indicate significant differences in amplitude. ***P < 0.001. Error bars represent standard errors of the mean.

The P3 amplitudes were greater in response to painful scenes than to neutral scenes, and face-containing pictures elicited greater P3 amplitudes than arm pictures (expression vs. arm, P < 0.001; face vs. arm, P = 0.035; Fig. 4B and C). The 2-way ANOVA revealed a significant main effect of condition [F(1, 30) = 42.37, P < 0.001, η P 2 = 0.59] and category [F(2, 60) = 12.03, P < 0.001, η P 2 = 0.29], and a significant interaction effect between these variables [F(2, 60) = 6.26, P = 0.003, η P 2 = 0.17]. Post hoc analysis revealed a significant pain-related difference between painful expressions and neutral expressions, and between needle-penetrated arms and Q-tip-touched arms (both Ps < 0.001; Fig. 4B and C). No significant difference was found between the needle-penetrated face and the Q-tip-touched face (P = 0.622; Fig. 4B and C).

Painful scenes elicited considerably greater LPP components than neutral scenes. Expression pictures elicited the largest LPP, while arm pictures elicited the smallest LPP (all Ps < 0.001). In the 2-way ANOVA for the LPP amplitude, there were significant main effects of condition [F(1, 30) = 15.85, P < 0.001, η P 2 = 0.35] and category [F(2, 60) = 61.02, P < 0.001, η P 2 = 0.67], and a significant condition × category interaction effect [F(2, 60) = 10.90, P < 0.001, η P 2 = 0.27]. Post hoc comparisons revealed that the pain effect only existed in the expression category (P < 0.001; Fig. 4B and C), suggesting long lasting empathic processing for pain expression.

Behavioral performance

Table 1 summarizes the mean RT and accuracy rate under each category and condition. Participants responded faster to pain pictures than neutral pictures [2-way ANOVA, condition effect: F(1, 30) = 19.52, P < 0.001, η P 2 = 0.39] and took more time to judge expression pictures than to judge face and arm pictures [all Ps < 0.001; category effect: F(2, 60) = 30.12, P < 0.001, η P 2 = 0.50].

Table 1.

Participants’ mean reaction time and accuracy rate of pain judgment task in each experimental condition.

| Stimulus category | Condition | |

|---|---|---|

| Pain | No pain | |

| Reaction time (ms) | ||

| Expression | 756.69 (191.72) | 784.40 (196.91) |

| Face | 642.82 (152.78)***# | 721.93 (249.48) |

| Arm | 635.55 (165.02)*** | 686.96 (169.79) |

| Accuracy rate (%) | ||

| Expression | 91.85 (6.31)# | 94.80 (2.76) |

| Face | 97.53 (2.90)*** | 97.27 (1.92) |

| Arm | 98.39 (1.27)*** | 98.11 (1.70) |

Values are mean (standard deviation). ***P < 0.001, compared with painful expression. # P < 0.05, compared with corresponding no pain condition.

Participants were less accurate in judging painful scenes than neutral scenes, and also less accurate in judging expressions than faces and arms (all Ps < 0.001). The 2-way repeated measures ANOVA revealed significant main effects of condition [F(1, 30) = 4.89, P = 0.035, η P 2 = 0.14] and category [F(1.37, 41.18) = 40.80, P < 0.001, η P 2 = 0.58], and a significant interaction effect of condition × category [F(1.35, 40.61) = 5.71, P = 0.014, η P 2 = 0.16].

Correlation analyses

Correlation analyses showed no associations between electrophysiological measures and any of the psychometric measures or behavioral performance.

Discussion

The present study examined brain activity induced by empathy-eliciting painful scenes through the combination of ERP and PCA techniques. There were two main findings. First, a novel brain screening process during empathy processing for pain (i.e., N2 and P3 components) was discovered and is responsible for distinguishing between true and false pain scenarios. Second, face stimuli had priority over arm stimuli in early pain empathy processing, represented by shorter latencies and higher amplitudes of N1 and VPP components.

The painful expression and the needle-penetrated arm stimuli used in the current study are natural scenarios witnessed in daily life, and as such they can be considered to reflect true pain. In contrast, a neutral expression in response to a prick in the face with a needle is unnatural and thus the pictures depicting a needle-penetrated face are considered to reflect false pain. In the present study, a 2 (pain vs. no-pain) × 3 (facial expression vs. face pictures vs. arm pictures) within-subject design was adopted, revealing different temporal dynamics of neural activity between the observation of pain and no-pain pictures as well as between true and false pain pictures. Pain vs. no-pain information is differentiated in the very early ERP components, i.e., the N1/P1 for the face and arm pictures categories and the VPP/N170 for the facial expression category while the mid-latency ERP components, N2 and P3, played key roles in differentiating true from false situations. That is, N2 and P3 amplitudes were more positive in response to painful scenarios only in the facial expression (grimace) and arm picture (needle-penetrated arm) categories but not in the face category. Thus, the N2 and P3 activity constituted a crucial screening operation during empathy processing for pain. Finally, the long-latency component (the LPP) may be more associated with socioemotionally salient stimuli, as a larger LPP amplitude was exclusively observed in the facial expression category. The temporal characteristics of the components (N1/P1, VPP/N170, N2, P3, and LPP) triggered by empathy-eliciting scenes in the current study are consistent with previous findings25, 29, 30, 37. More importantly, we extended these findings by indicating that a judging process or a screening mechanism exists during empathy for pain, comprising of N2 and P3, and responsible for differentiating true from false pain situations.

The current study demonstrated that environmental pain information can be processed as early as 100 ms following stimulus onset (i.e., the N1/P1 component). The N1 and P1 components are widely accepted as signatures of early sensory processing and prior ERP studies have shown them to be sensitive to emotional content32, 38. The pain-related modulation of N1/P1 components may be interpreted as early differentiation between pain and no-pain processing. However, prior research has reported conflicting results on the pain effect on early ERP components. Some authors have reported a significant pain effect on N1/P1 amplitude29, 30, 37, while others have found no effect25, 27, 31. The controversial results may be due to different empathy-eliciting stimuli (e.g., painful expressions, injured body parts, etc.) used in these studies as well as different ERP component isolation methods. In general, observing injured body parts produces a significant N1/P1 modulation, as in the present study. It may be that injured body parts are more self-relevant than painful facial expressions. That is, when viewing an injured limb, foot, or other body part, observers may experience vicarious pain from the first-person perspective, thus generating a rapid aversive response.

An interesting finding in our study was that only females can differentiate pain vs. non-pain pictures in the N1 time window, suggesting that women were more sensitive in detecting pain-related information than men. This was consistent with a recent EEG research in which females show stronger mu suppressions (a robust biomarker of sensorimotor resonance in empathy) than males when watching painful pictures39, which indicated that females were more easily to imagine themselves into the painful situations. Gender difference is an interesting topic with regard to empathy. Although it is well accepted that females are more empathic than males in general, previous studies using different methods revealed inconsistent findings20, 40–42. Evidence has shown that females score higher than males in both trait empathy and state empathy40, 43–45. However, an influential meta-analysis has revealed no gender-specific activation in previous empathy fMRI studies42. In the present study, we found that females had faster brain processing speed in response to empathy-eliciting situations, providing evidence that females may be more empathic than males.

In line with previous face perception studies, the current results showed that face processing has priority over body-part processing. The early ERP components (N1/P1, VPP/N170) to face stimuli were faster and larger than those to arm stimuli. Face-driven modulation of N1/P1 amplitudes is controversial and such a modulation is commonly considered not specific to face perception46, 47. However, the enhanced amplitude and shortened latency in response to face-containing pictures reflects face-related facilitation of early visual processing. The N170 component and its positive vertex counterpart (VPP component) are well-accepted electrophysiological markers for face perception, as they both demonstrate larger amplitudes in response to faces than non-face stimuli46. Faces convey a wealth of information (including but not limited to sex, age, attractiveness, emotional state, and focus of attention) that is necessary for successful social interaction48. As social beings, humans are sensitive to social information49. The current findings indicate that cognitive resources are allocated preferentially to faces demonstrating pain. This finding, while preliminary, suggests that face-containing stimuli may produce stronger empathic effects than non face-containing stimuli50.

The current study revealed a screening process (comprised of N2 and P3) that discriminates true from false pain situations, in agreement with our hypothesis. Previous studies have shown that N2 amplitude is sensitive to emotional stimuli and reflects selective attention towards biologically important events, while P3 is closely associated with attention resource allocation based on motivational salience32, 38, 51. N2-P3 waveform has also been described as a uniform complex that reflects orienting response52, 53. The modulation of N2 and P3 amplitudes in viewing other’s pain suggests that painful scenes capture more attentional resources than no-pain scenes. This attentional bias occurred only in real pain situations (i.e., painful expressions and needle-penetrated arms) but not in artificial painful scenes (i.e., needle-penetrated faces). These results support our hypothesis that observers tend to first perceive environmental cues that are painful or unpleasant, and then select true or meaningful situations for further processing. Consistent with this idea, previous studies have shown that in-group characteristics, especially race19, 26, 31, 54–56, relationship between the empathy dyad24, 30, and contextual characteristics such as stimulus reality25, 34 influence empathic neural activities. Mainly using fMRI method thereby leaving the screening process undiscovered, nevertheless, these studies suggest that observers select some parts of perceived pain cues into empathy processing based on interpersonal or environmental characteristics. Sessa et al. investigated the effect of race on pain empathy processing by asking white participants to observe pain or no-pain pictures of people from the same or different races27. The pain vs. no-pain difference was magnified in the same-race condition compared to the other-race condition in a time window (280–340 ms) analogous to the N2-P3 component (approximately 250–360 ms) in our study. These findings suggest a similar screening mechanism that discriminates own race from other race during empathy processing for pain.

Although N2 and P3 components are both associated with the process of selective attention, it has been proposed that they reflect different aspects of attention processing. The mid-latency N2 component (peak between 200~300 ms) is a semiautomatic or pre-attentive process57, whereas the P3 component (peak after 300 ms) is generally associated with controlled attention or conscious processing32, 38. In parallel with this idea, the N2 and P3 components are viewed as automatic and controlled processes, respectively in pain empathy studies. For example, Fan and Han’s initial study25 found that pre-P3, short-latency electrophysiological activity was not influenced by task demand. However, the pain effect on long-latency waveforms depended on whether attention was allocated to pain cues or not. Since both the N2 and P3 components are associated with the screening process, it is suggested that the screening process involves engagement of attention and occurs during a shift from automatic to conscious attention.

Attention plays a vital role in empathy58 and prosocial behavior2. The screening mechanism, working as a social information filter and an attention allocator, allows meaningful environmental cues (e.g., pain experienced by a family member) to enter into further empathic processing, while preventing meaningless cues (i.e., irrelevant or unreasonable information) from engaging resources. Due to limited cognitive and physical resources (e.g., attention, time and money), one must analyze and evaluate environmental cues before making a decision to help (approach) or to reject (avoid) a situation. Through the screening mechanism, observers generate empathic feelings as well as helping behavior towards the victims whose situations are judged as painful, distressing, or serious.

By means of a temporospatial PCA approach, the long LPC component was divided into two subcomponents: the P3 and the LPP. As discussed before, the P3 component is part of the screening process and reflected a controlled orienting response. The LPP waveform, however, exhibited a different modulation pattern. The pain effect on LPP was only observed in the expression category but not in the face or arm categories. The LPP indexes ongoing, elaborative processing (cognitive appraisal) of motivationally salient information32, 38. Facial expression is one of the most effective methods by which to communicate pain information, and is considered a credible method in conveying suffering59. As social beings, human have evolved a propensity to detect and interpret facial expressions of pain60. Consistent with this idea, Reicherts et al. found that pain expressions elicited larger LPP components than neutral expressions as well as other emotional facial expressions (i.e., happiness and fear)61. In the present study, the painful expression stimulus was more complicated and socioemotionally salient than the needle-penetrated arm stimulus indicating that emotional expressions capture sustained attention given their inherent motivational value.

The traditional measurement of average ERP is not capable of parsing components that are spatially or temporally overlapped, such as P3 and LPP. These two components are intrinsically different from each other as discussed above. However, in previous empathy ERP studies they are treated as one single component25, 27–29, 31. Temporospatial PCA is a powerful method that can decompose an ERP waveform into its underlying components32, 33, 36. In the present study, we for the first time applied this method to analysis empathic ERP data, and separate out P3 and LPP subcomponents from a mixed long-lasting late positive component (i.e., LPC). This may help us get a better understanding of temporal structure of the screening mechanism.

The present study has several limitations. First, we aimed to identify a neural process that differentiates true pain from false pain. However, instead of a true-false determination task a pain-no pain judgment task was used. Prior studies have shown that task demand can have a marked influence on pain empathic ERPs (mainly the late components)25. Under the framework of empathy study, empathic feelings were more easily induced with a pain-relevant task which draws attention to pain cues. A pain-irrelevant task, on the other hand, withdraws attention from these cues, thus weakening the pain empathy effect. Second, the arm pictures used in the current study have greater visual similarity than the face pictures. This discrepancy between stimuli may produce somewhat different levels of arousal between stimulus categories and modulate the face vs. arm electrophysiological activities, especially in early ERP components. However, the face effect is well accepted in socioemotional research46, and therefore, this discrepancy may not be a serious problem.

In conclusion, this work was the first to identify the existence and time course of a brain screening mechanism during human empathy. Our results demonstrate that more attention is allocated to pain cues when the cues are classified as true pain, accompanied by more positive N2 and P3 ERP components. Observers screen out false, meaningless information, only allowing true and meaningful information into further empathic processing. Our data also revealed a face priority in early empathic processing in brain activity, confirming that face-containing materials are efficient stimuli in eliciting social emotional experience.

Methods

Participants

The original sample consisted of 36 healthy Chinese college students recruited locally through advertisements (internet posts). Five students were excluded from further analysis due to either poor performance on the experimental task or excessive EEG artifacts. Thus the final sample consisted of 31 participants (13 males and 18 females, mean age 21.90 ± 1.90 years; See Supplementary Table 1 for demographic information and scale scores of the original and final samples). All subjects were right handed, and had normal or corrected-to-normal vision, and no current or prior history of chronic pain, neurological or psychiatric disorders or developmental disability (self-report). They gave written informed consent prior to participation and received ¥60 (about $10) as compensation. The study was approved by the Research Ethics Committee of the Institute of Psychology, Chinese Academy of Sciences, and was performed in accordance with relevant guidelines and regulations.

Experimental design and visual stimuli

A 2 × 3 within-subject factorial design was used with the pain condition (pain vs. no-pain) as the first factor, and the stimulus category (facial expression, face pictures, and arm pictures) as the second factor. Accordingly, there were six types of stimuli (painful expression vs. neutral expression, needle-penetrated face vs. Q-tip-touched face, and needle-penetrated arm vs. Q-tip-touched arm). As exemplified in Fig. 1, the painful expression stimulus depicted a person displaying a wrinkled, grimacing, and eye-narrowed face (pain expression according to Prkachin and Solomon62, 63), while a neutral expression depicted a person with an expressionless face. The needle-penetrated face and the Q-tip-touched face were both neutral faces with the left cheek being pricked by a syringe needle or touched by a Q-tip, respectively. The needle-penetrated arm and the Q-tip-touched arm were images depicting a forearm that was penetrated by a syringe and touched by a Q-tip, respectively. These stimulus types are the most commonly used materials in the field of empathy research, and are well accepted as valid representations of others in pain10, 21, 26, 34, 35.

The visual stimuli consisted of 180 digital static color pictures, with 30 pictures of each of the six stimulus types. All photographs used in the current study were taken by the first author using 30 different participants. The detailed information of the production and validation of the photographs are described in Supplementary Information. All visual stimuli were presented on a 14 inch color monitor with a black background at a viewing distance of 80 cm (subtending a visual angle of 16.7° wide × 12.5° high). The resolution of the screen was 1366 × 768 pixels.

Procedure

The experimental task was administered on a personal computer running E-prime 2.0 (Psychology Tools Inc., Pittsburgh, PA, USA) to control stimulus presentation, timing and response recording. Prior to the EEG recording session, participants received detailed instructions and were familiarized with the task via six practice trials (one trial per stimulus type). Stimuli used during practice trials did not appear during the experiment. The experiment was comprised of ten blocks. Each block contained 90 trials, involving 15 trials of each experimental condition. Thus, each picture was presented five times, once every two blocks. Stimuli were fully randomized between participants and pseudorandomized within blocks (no more than two trials of the same stimulus type were presented consecutively). Participants were allowed a one minute break between every two successive blocks.

Each trial began with a red fixation cross (visual angle, 0.7° wide × 0.7° high) appearing at the center of the screen for a random, variable duration (800–1600 ms, with a 100-ms jitter). A picture stimulus was then displayed for 250 ms, followed by a blank screen interval (no time limit), during which subjects were instructed to indicate whether or not the picture represented a pain situation by pressing one of two buttons (‘F’ and ‘J’, counterbalanced across participants). Reaction times (RTs) and accuracy were measured. Once a response was made, the trial ended and the next trial began.

Questionnaires

Dispositional empathy was assessed by a Chinese version of the Interpersonal Reactivity Index (C-IRI)64. The IRI is a 28-item self-administered survey consisting of four subscales (perspective taking [PT], fantasy [FS], empathic concern [EC], and personal distress [PD])65. The PT and FS subscales assess cognitive aspects of empathy: the former assesses the tendency to adopt another’s perspective and the latter reflects emotional identification with fictional characters in books, movies, and plays. The EC and PD subscales assess other- and self-oriented empathy-related emotional reactions, respectively. Specifically, the EC describes a person’s tendency to have feelings of sympathy and concern for others, and the PD measures the extent to which someone feels unease as a result of witnessing another’s emotional distress. The C-IRI was translated from the IRI using back translation, and factor analyses show that the two versions consist of the same factor components.

Catastrophic thinking about pain was measured by the Chinese version of the Pain Catastrophizing Scale (HK-PCS)66. Pain catastrophizing is defined as ‘an exaggerated negative mental set brought to bear during actual or anticipated painful experiences’, and is an important psychological characteristic that contributes to the perception and experience of pain67. The PCS is a 13-item self-report instrument that assesses how frequently participants experience ruminative, alarmist or helpless thoughts during pain. The PCS yields a total score and three subscale scores (rumination, magnification, and helplessness). The HK-PCS is a Chinese translation of the PCS and has proven reliable and valid.

Electroencephalographic (EEG) data recording and preprocessing

EEG was continuously recorded via a BioSemi ActiveTwo system (BioSemi Inc., Amsterdam, The Netherlands) from 64 active electrodes based on the international 10–20 system throughout the pain judgment task. Signals were referenced online to the CMS-DRL (common mode sense-driven right leg) ground and digitized at 1024 Hz with 0.01 Hz high-pass and 100 Hz low-pass filtering. Impedances were maintained below 5 KΩ. The electrooculogram (EOG) was monitored through bipolar electrodes positioned approximately 1 cm from the outer canthus of each eye (horizontal EOG) and above and below the left eye (vertical EOG). Two additional sensors for off-line referencing were placed on the mastoid bones.

Raw EEG data were preprocessed using EEGLAB toolbox68 under Matlab (version 8.3., The MathWorks Inc., Natick, MA). All data were offline down-sampled to 256 Hz, re-referenced to averaged mastoids and filtered with a 0.1 Hz high-pass filter and a 50 Hz notch filter. The continuous EEG was then segmented for each trial, beginning 200 ms prior to stimulus onset and continuing for 1,000 ms after onset. After baseline correction, individual epochs contaminated by large nonstereotyped artifacts (e.g., gross muscle activity) were identified and eliminated by visual inspection. Remaining stereotyped artifacts such as saccades, blinks, cardiac artifacts and tonic muscle noises were corrected using Independent Component Analysis (ICA) methods. Independent components representing such stereotyped artifacts were removed in accordance with published guidelines69. For the artifact-clean trials, only those with a correct response and reaction time ≤2,000 ms were entered for analysis. Participants with less than 120 trials per experimental condition were excluded from analysis. The mean number of trials of each experimental condition for all participants is listed in the Supplementary Information.

Event-related Potential (ERP) components

Conventional ERP analyses were performed first to enable comparisons with previous empathy ERP studies. Average ERPs were computed time-locked to stimulus onset at each electrode for each type of stimulus (painful expression/neutral expression, needle-penetrated face/Q-tip-touched face, needle-penetrated arm/Q-tip-touched arm). In the resulting waveforms, component latency windows were determined based on visual inspection of the grand-average ERPs (Fig. 2) as well as prior research using the same paradigm. The following components were identified: temporo-occipital (O1-O2, PO7-PO8) P1 (90–120 ms) and N170 (130–210 ms); frontocentral (F3-Fz-F4, C3-Cz -C4) and parietal (P3-Pz-P4) N1 (90–120 ms, concomitant with the P1 component), vertex positive potential (VPP, 130–210 ms, concomitant with the N170 component), N2 (210–300), and a late positive complex component (LPC, 350–1000 ms). As an averaged mastoid reference was used in the present study, some of the posterior components (e.g., early posterior negativity) were not apparent32. The latency of each component was determined by the peak latency within a given time window. The amplitude of each component was derived from the average value of a 30 ms (for N1 and P1 components) or a 40 ms (for N170, VPP and N2 components) interval centered at the peak latency, with the exception of the LPC amplitude which was calculated as the mean voltage over 350–1,000 ms.

Principal Component Analysis

To better tease apart the components contributing to the positive peak during the LPC time window, a two-step temporospatial PCA was conducted using the ERP PCA toolkit 2.5070 and following published guidelines33, 36, 71. A covariance matrix and Kaiser normalization were used for both the temporal and spatial PCA. Six ERP averages (i.e., painful expression, neutral expression, needle-penetrated face, Q-tip-touched face, needle-penetrated arm and Q-tip-touched arm) containing temporal information from the entire epoch (307 time points in total) as well as spatial information from all 64 electrode sites for each participant were entered into the data matrix for PCA. A temporal PCA with Promax rotation was performed first to analyze temporal variance and to maximize the initial separation of ERP components36. This first step used 307 time points as variables, and considered the combination of all 31 participants, 64 channels, and six conditions as observations. For data reduction purposes, seven temporal factors (TFs) were extracted based on Scree plots72 using the parallel test73. Thereafter, separate spatial PCAs with Infomax rotation were performed on each temporal factor retained in the previous step to reduce spatial dimensions of datasets. This step used the electrodes as variables with the combination of all participants, conditions and temporal factor scores as observations. Based on the result of the parallel test, three spatial factors (SFs) were extracted from each TF, yielding a total of 21 temporospatial factor combinations (TFSF).

Twelve factor combinations accounting for more than 1% of the variance each were retained for further analysis. These factors accounted for 80.30% of the variance in total. Two combinations were most consistent with the morphology of the P3 and LPP. Specifically, TF2SF1 (accounting for 22.13% of the variance) was maximal at electrode Fz with a peak latency at 363 ms, resembling the P3 component. TF1SF1 (accounting for 23.79% of the variance) was maximal at electrode CPz with a peak latency at 710 ms, resembling the LPP component. Thus, TF2SF1 and TF1SF1 were identified as the PCA-derived P3 and LPP, respectively, and were selected for statistical analysis. To directly assess timing and spatial voltage distributions, the factors were translated back into microvolt scaling.

Statistical analysis

Statistical analyses were performed using STATISTICA (StatSoft Inc., Tulsa, OK). Electrophysiological data were visualized using GraphPad Prism 5.0 (GraphPad Software Inc., La Jolla, CA, USA) and Brainstorm (http://neuroimage.usc.edu/brainstorm). Behavioral data (RTs and accuracy rate) were subjected to a 2 (condition: pain vs. no-pain) × 3 (category: facial expressions, face pictures and arm pictures) repeated measures analyses of variance (ANOVA) in accordance with the experimental design. ERP latencies and mean amplitudes within the predefined time windows for specific locations were submitted to ANOVAs examining condition and category effects as well as topography dimensions (laterality: left, midline and right for N1, VPP, N2 and LPC; and left vs. right for P1 and N170). With respect to PCA factors, 2 (condition) × 3 (category) ANOVAs were conducted on TF2SF1 and TF1SF1 factor scores separately. To investigate gender effect on empathic neural processing, additional 2 (gender: male vs. female) × 2 (condition: pain vs. no-pain) × 3 (category: facial expressions, face pictures and arm pictures) repeated measures ANOVAs were conducted on 5 main ERP components (i.e., N1, VPP, N2, and PCA deprived P3 and LPP). For these additional ANOVAs, data were presented only when the 3-way interaction was significant. For all ANOVAs, the Greenhouse-Geisser correction was applied when appropriate. Bonferroni comparisons were carried out as post-hoc tests. Of primary interest were the main effects of, and the interactions between, condition and stimulus type. Of secondary interest, we examined the relationship between psychological measures (empathy trait, catastrophic thinking) or behavioral performance (accuracy, RTs) and electrophysiological activities (ERP latencies, amplitudes, and PCA factor scores) using Pearson correlations. After Bonferroni correction, a familywise alpha of 0.05 was applied.

Data availability statement

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Electronic supplementary material

Acknowledgements

This work was funded by an NNSF (National Natural Science Foundation of China) grant (31271092) to J.-Y.W., an NNSF grant (31671140) to N.W., NNSF grants (31171067, 31471061) to F. L., grants from CAS Key Laboratory of Mental Health, Institute of Psychology (KLMH 2014G01, KLMH2016K02) and a grant from the initiation fund of the CAS/SAFEA International Partnership Program for Creative Research Teams (Y2CX131003).

Author Contributions

Y.-B.S. and J.-Y.W. designed the study and analyzed the data. Y.-B.S., X.-X.L. and W.Y. conducted the experiments. Y.-B.S., N.W., J.-Y.W., and F.L. wrote the manuscript.

Competing Interests

The authors declare that they have no competing interests.

Footnotes

Electronic supplementary material

Supplementary information accompanies this paper at 10.1038/s41598-017-11963-x.

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.de Vignemont F, Singer T. The empathic brain: how, when and why? Trends Cogn. Sci. 2006;10:435–441. doi: 10.1016/j.tics.2006.08.008. [DOI] [PubMed] [Google Scholar]

- 2.Eisenberg N. Emotion, regulation, and moral development. Annu. Rev. psychol. 2000;51:665–697. doi: 10.1146/annurev.psych.51.1.665. [DOI] [PubMed] [Google Scholar]

- 3.Decety J, Jackson PL. A Social-Neuroscience perspective on empathy. Curr. Dir. Psychol. Sci. 2006;15:54–58. doi: 10.1111/j.0963-7214.2006.00406.x. [DOI] [Google Scholar]

- 4.Decety J, Cowell JM. The complex relation between morality and empathy. Trends Cogn. Sci. 2014;18:337–339. doi: 10.1016/j.tics.2014.04.008. [DOI] [PubMed] [Google Scholar]

- 5.Preston SD, de Waal FBM. Empathy: Its ultimate and proximate bases. Behav. Brain Sci. 2002;25:1–20. doi: 10.1017/s0140525x02000018. [DOI] [PubMed] [Google Scholar]

- 6.Goubert L, et al. Facing others in pain: the effects of empathy. Pain. 2005;118:285–288. doi: 10.1016/j.pain.2005.10.025. [DOI] [PubMed] [Google Scholar]

- 7.Hadjistavropoulos T, et al. A Biopsychosocial Formulation of Pain Communication. Psychol.Bull. 2011;137:910–939. doi: 10.1037/a0023876. [DOI] [PubMed] [Google Scholar]

- 8.Bernhardt BC, Singer T. The neural basis of empathy. Annu. Rev. Neurosci. 2012;35:1–23. doi: 10.1146/annurev-neuro-062111-150536. [DOI] [PubMed] [Google Scholar]

- 9.Singer T, et al. Empathy for Pain Involves the Affective but not Sensory Components of Pain. Science. 2004;303:1157–1161. doi: 10.1126/science.1093535. [DOI] [PubMed] [Google Scholar]

- 10.Avenanti A, Bueti D, Galati G, Aglioti SM. Transcranial magnetic stimulation highlights the sensorimotor side of empathy for pain. Nat. neurosci. 2005;8:955–960. doi: 10.1038/nn1481. [DOI] [PubMed] [Google Scholar]

- 11.Jackson PL, Meltzoff AN, Decety J. How do we perceive the pain of others? A window into the neural processes involved in empathy. NeuroImage. 2005;24:771–779. doi: 10.1016/j.neuroimage.2004.09.006. [DOI] [PubMed] [Google Scholar]

- 12.de Waal FBM. Putting the altruism back into altruism: The evolution of empathy. Annu. Rev. Psychol. 2008;59:279–300. doi: 10.1146/annurev.psych.59.103006.093625. [DOI] [PubMed] [Google Scholar]

- 13.Batson, C. D. The empathy-altruism hypothesis: Issues and implications. Empathy: From bench to bedside (ed Decety, J.), 41–54 (MIT Press, 2012).

- 14.Bartal IBA, Decety J, Mason P. Empathy and Pro-Social Behavior in Rats. Science. 2011;334:1427–1430. doi: 10.1126/science.1210789. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Bartal, I. B. A., Rodgers, D. A., Sarria, M. S. B., Decety, J. & Mason, P. Pro-social behavior in rats is modulated by social experience. Elife3 (2014). [DOI] [PMC free article] [PubMed]

- 16.Burkett JP, et al. Oxytocin-dependent consolation behavior in rodents. Science. 2016;351:375–378. doi: 10.1126/science.aac4785. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Godinho F, et al. How the pain of others enhances our pain: Searching the cerebral correlates of ‘compassional hyperalgesia’. Eur. J. Pain. 2012;16:748–759. doi: 10.1002/j.1532-2149.2011.00039.x. [DOI] [PubMed] [Google Scholar]

- 18.Loggia ML, Mogil JS, Bushnell MC. Empathy hurts: Compassion for another increases both sensory and affective components of pain perception. Pain. 2008;136:168–176. doi: 10.1016/j.pain.2007.07.017. [DOI] [PubMed] [Google Scholar]

- 19.Hein G, Silani G, Preuschoff K, Batson CD, Singer T. Neural Responses to Ingroup and Outgroup Members’ Suffering Predict Individual Differences in Costly Helping. Neuron. 2010;68:149–160. doi: 10.1016/j.neuron.2010.09.003. [DOI] [PubMed] [Google Scholar]

- 20.Singer T, et al. Empathic neural responses are modulated by the perceived fairness of others. Nature. 2006;439:466–469. doi: 10.1038/nature04271. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Decety J, Echols S, Correll J. The blame game: the effect of responsibility and social stigma on empathy for pain. J.Cogn. Neurosci. 2010;22:985–997. doi: 10.1162/jocn.2009.21266. [DOI] [PubMed] [Google Scholar]

- 22.Azevedo, R. T., Macaluso, E., Viola, V., Sani, G. & Aglioti, S. M. Weighing the stigma of weight: An fMRI study of neural reactivity to the pain of obese individuals. NeuroImage91 (2014). [DOI] [PubMed]

- 23.Beckes L, Coan JA, Hasselmo K. Familiarity promotes the blurring of self and other in the neural representation of threat. Soc. Cogn. Affect. Neurosci. 2013;8:670–677. doi: 10.1093/scan/nss046. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Cheng, Y. W., Chen, C., Lin, C. P., Chou, K. H. & Decety, J. Love hurts: an fMRI study. NeuroImage51 (2010). [DOI] [PubMed]

- 25.Fan YT, Han S. Temporal dynamic of neural mechanisms involved in empathy for pain: an event-related brain potential study. Neuropsychologia. 2008;46:160–173. doi: 10.1016/j.neuropsychologia.2007.07.023. [DOI] [PubMed] [Google Scholar]

- 26.Sheng, F., Han, X. & Han, S. Dissociated Neural Representations of Pain Expressions of Different Races. Cereb. Cortex (2015). [DOI] [PubMed]

- 27.Sessa P, Meconi F, Castelli L, Dell’Acqua R. Taking one’s time in feeling other-race pain: an event-related potential investigation on the time-course of cross-racial empathy. Soc. Cogn. Affect. Neurosci. 2014;9:454–463. doi: 10.1093/scan/nst003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Fan YT, Chen CY, Chen SC, Decety J, Cheng YW. Empathic arousal and social understanding in individuals with autism: evidence from fMRI and ERP measurements. Soc. Cogn. Affect. Neurosci. 2014;9:1203–1213. doi: 10.1093/scan/nst101. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Contreras-Huerta LS, Hielscher E, Sherwell CS, Rens N, Cunnington R. Intergroup relationships do not reduce racial bias in empathic neural responses to pain. Neuropsychologia. 2014;64:263–270. doi: 10.1016/j.neuropsychologia.2014.09.045. [DOI] [PubMed] [Google Scholar]

- 30.Decety J, Yang CY, Cheng YW. Physicians down-regulate their pain empathy response: An event-related brain potential study. NeuroImage. 2010;50:1676–1682. doi: 10.1016/j.neuroimage.2010.01.025. [DOI] [PubMed] [Google Scholar]

- 31.Sheng F, Han SH. Manipulations of cognitive strategies and intergroup relationships reduce the racial bias in empathic neural responses. NeuroImage. 2012;61:786–797. doi: 10.1016/j.neuroimage.2012.04.028. [DOI] [PubMed] [Google Scholar]

- 32.Hajcak, G., Weinberg, A., MacNamara, A. & Foti, D. ERPs and the study of emotion. The Oxford handbook of event-related potential components (eds Luck, S. J. and Kappenman, E. S.), 441–474 (Oxford University Press, 2012).

- 33.Dien J. Applying Principal Components Analysis to Event-Related Potentials: A Tutorial. Dev. Neuropsychol. 2012;37:497–517. doi: 10.1080/87565641.2012.697503. [DOI] [PubMed] [Google Scholar]

- 34.Han S, et al. Empathic Neural Responses to Others’ Pain are Modulated by Emotional Contexts. Hum. Brain Mapp. 2009;30:3227–3237. doi: 10.1002/hbm.20742. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Lamm C, Batson CD, Decety J. The neural substrate of human empathy: Effects of perspective-taking and cognitive appraisal. J. Cogn. Neurosci. 2007;19:42–58. doi: 10.1162/jocn.2007.19.1.42. [DOI] [PubMed] [Google Scholar]

- 36.Dien, J. & Frishkoff, G. A. Principal components analysis of event-related potential datasets. Event-related potentials: A methods handbook (ed. Handy T. C.), 189–208 (MIT Press 2005).

- 37.Sessa P, Meconi F, Han S. Double dissociation of neural responses supporting perceptual and cognitive components of social cognition: evidence from processing of others’ pain. Sci. Rep. 2014;4:7424. doi: 10.1038/srep07424. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Olofsson JK, Nordin S, Sequeira H, Polich J. Affective picture processing: an integrative review of ERP findings. Biol. Psychol. 2008;77:247–265. doi: 10.1016/j.biopsycho.2007.11.006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Yang CY, Decety J, Lee SY, Chen CY, Cheng YW. Gender differences in the mu rhythm during empathy for pain: An electroencephalographic study. Brain Res. 2009;1251:176–184. doi: 10.1016/j.brainres.2008.11.062. [DOI] [PubMed] [Google Scholar]

- 40.Michalska KJ, Kinzler KD, Decety J. Age-related sex differences in explicit measures of empathy do not predict brain responses across childhood and adolescence. Dev Cogn Neuros. 2013;3:22–32. doi: 10.1016/j.dcn.2012.08.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Han S, Fan Y, Mao L. Gender difference in empathy for pain: an electrophysiological investigation. Brain Res. 2008;1196:85–93. doi: 10.1016/j.brainres.2007.12.062. [DOI] [PubMed] [Google Scholar]

- 42.Lamm C, Decety J, Singer T. Meta-analytic evidence for common and distinct neural networks associated with directly experienced pain and empathy for pain. NeuroImage. 2011;54:2492–2502. doi: 10.1016/j.neuroimage.2010.10.014. [DOI] [PubMed] [Google Scholar]

- 43.Eisenberg N, Lennon R. Sex differences in empathy and related capacities. Psychol Bull. 1983;94:100–131. doi: 10.1037/0033-2909.94.1.100. [DOI] [Google Scholar]

- 44.Baron-Cohen S, Wheelwright S. The empathy quotient: An investigation of adults with Asperger syndrome or high functioning autism, and normal sex differences. J Autism Dev Disord. 2004;34:163–175. doi: 10.1023/B:JADD.0000022607.19833.00. [DOI] [PubMed] [Google Scholar]

- 45.Rueckert L, Naybar N. Gender differences in empathy: The role of the right hemisphere. Brain Cogn. 2008;67:162–167. doi: 10.1016/j.bandc.2008.01.002. [DOI] [PubMed] [Google Scholar]

- 46.Rossion, B. & Jacques, C. The N170: understanding the time-course of face perception in the human brain. The Oxford handbook of ERP components(eds Luck S. J. and Kappenman E. S.), 115–142 (Oxford University Press, 2011).

- 47.Rossion B, Jacques C. Does physical interstimulus variance account for early electrophysiological face sensitive responses in the human brain? Ten lessons on the N170. NeuroImage. 2008;39:1959–1979. doi: 10.1016/j.neuroimage.2007.10.011. [DOI] [PubMed] [Google Scholar]

- 48.Calder AJ, Young AW. Understanding the recognition of facial identity and facial expression. Nat. Rev. Neurosci. 2005;6:641–651. doi: 10.1038/nrn1724. [DOI] [PubMed] [Google Scholar]

- 49.Oosterhof NN, Todorov A. The functional basis of face evaluation. Proc. Natl. Acad. Sci. USA. 2008;105:11087–11092. doi: 10.1073/pnas.0805664105. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Sun YB, Wang YZ, Wang JY, Luo F. Emotional mimicry signals pain empathy as evidenced by facial electromyography. Sci. Rep. 2015;5:16988–16988. doi: 10.1038/srep16988. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Codispoti M, Ferrari V, Junghofer M, Schupp HT. The categorization of natural scenes: Brain attention networks revealed by dense sensor ERPs. NeuroImage. 2006;32:583–591. doi: 10.1016/j.neuroimage.2006.04.180. [DOI] [PubMed] [Google Scholar]

- 52.Snyder E, Hillyard SA. Long-latency evoked potentials to irrelevant, deviant stimuli. Behav. Biol. 1976;16:319–331. doi: 10.1016/S0091-6773(76)91447-4. [DOI] [PubMed] [Google Scholar]

- 53.Daffner KR, et al. The influence of stimulus deviance on electrophysiologic and behavioral responses to novel events. J. Cogn. Neurosci. 2000;12:393–406. doi: 10.1162/089892900562219. [DOI] [PubMed] [Google Scholar]

- 54.Bruneau EG, Dufour N, Saxe R. Social cognition in members of conflict groups: behavioural and neural responses in Arabs, Israelis and South Americans to each other’s misfortunes. Philos. T. R. Soc. B. 2012;367:717–730. doi: 10.1098/rstb.2011.0293. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Avenanti A, Sirigu A, Aglioti SM. Racial Bias Reduces Empathic Sensorimotor Resonance with Other-Race Pain. Curr. Biol. 2010;20:1018–1022. doi: 10.1016/j.cub.2010.03.071. [DOI] [PubMed] [Google Scholar]

- 56.Azevedo RT, et al. Their pain is not our pain: brain and autonomic correlates of empathic resonance with the pain of same and different race individuals. Hum. Brain Mapp. 2013;34:3168–3181. doi: 10.1002/hbm.22133. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Carretie L, Hinojosa JA, Martin-Loeches M, Mercado F, Tapia M. Automatic attention to emotional stimuli: neural correlates. Hum. Brain Mapp. 2004;22:290–299. doi: 10.1002/hbm.20037. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Gu XS, Han SH. Attention and reality constraints on the neural processes of empathy for pain. NeuroImage. 2007;36:256–267. doi: 10.1016/j.neuroimage.2007.02.025. [DOI] [PubMed] [Google Scholar]

- 59.Craig KD. The Social Communication Model of Pain. Can. Psychol. 2009;50:22–32. doi: 10.1037/a0014772. [DOI] [Google Scholar]

- 60.Williams AC. Facial expression of pain: an evolutionary accout. Behav. Brain Sci. 2002;25:439–488. doi: 10.1017/s0140525x02000080. [DOI] [PubMed] [Google Scholar]

- 61.Reicherts P, et al. Electrocortical evidence for preferential processing of dynamic pain expressions compared to other emotional expressions. Pain. 2012;153:1959–1964. doi: 10.1016/j.pain.2012.06.017. [DOI] [PubMed] [Google Scholar]

- 62.Prkachin KM. The consistency of facial expressions of pain: A comparison across modalities. Pain. 1992;51:297–306. doi: 10.1016/0304-3959(92)90213-U. [DOI] [PubMed] [Google Scholar]

- 63.Prkachin KM, Solomon PE. The structure, reliability and validity of pain expression: Evidence from patients with shoulder pain. Pain. 2008;139:267–274. doi: 10.1016/j.pain.2008.04.010. [DOI] [PubMed] [Google Scholar]

- 64.Rong X, Sun B, Huang X, Cai M, Li W. Reliabilities and Validities of Chinese Version of Interpersonal Reactivity Index. Chinese J. Clin. Psych. 2010;18:158–160. [Google Scholar]

- 65.Davis MH. Measuring individual differences in empathy: Evidence for a multidimensional approach. J. Pers. Soc. Psychol. 1983;44:113–126. doi: 10.1037/0022-3514.44.1.113. [DOI] [Google Scholar]

- 66.Yap JC, et al. Validation of the Chinese Pain Catastrophizing Scale (HK-PCS) in patients with chronic pain. Pain Med. 2008;9:186–195. doi: 10.1111/j.1526-4637.2007.00307.x. [DOI] [PubMed] [Google Scholar]

- 67.Sullivan MJ, Bishop SR, Pivik J. The pain catastrophizing scale: development and validation. Psychol. Assess. 1995;7:524. doi: 10.1037/1040-3590.7.4.524. [DOI] [Google Scholar]

- 68.Delorme A, Makeig S. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Meth. 2004;134:9–21. doi: 10.1016/j.jneumeth.2003.10.009. [DOI] [PubMed] [Google Scholar]

- 69.Jung TP, et al. Removing electroencephalographic artifacts by blind source separation. Psychophysiology. 2000;37:163–178. doi: 10.1111/1469-8986.3720163. [DOI] [PubMed] [Google Scholar]

- 70.Dien J. The ERP PCA Toolkit: An open source program for advanced statistical analysis of event-related potential data. J. Neurosci. Meth. 2010;187:138–145. doi: 10.1016/j.jneumeth.2009.12.009. [DOI] [PubMed] [Google Scholar]

- 71.Dien J. Evaluating two-step PCA of ERP data with Geomin, Infomax, Oblimin, Promax, and Varimax rotations. Psychophysiology. 2010;47:170–183. doi: 10.1111/j.1469-8986.2009.00885.x. [DOI] [PubMed] [Google Scholar]

- 72.Cattell RB. The Scree Test For The Number Of Factors. Multivariate Behav. Res. 1966;1:245–276. doi: 10.1207/s15327906mbr0102_10. [DOI] [PubMed] [Google Scholar]

- 73.Horn JL. A Rationale and Test for the Number of Factors in Factor Analysis. Psychometrika. 1965;30:179–185. doi: 10.1007/BF02289447. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Data Availability Statement

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.