Abstract

Recent years have seen an explosion of activity in the field of functional data analysis (FDA), in which curves, spectra, images, etc. are considered as basic functional data units. A central problem in FDA is how to fit regression models with scalar responses and functional data points as predictors. We review some of the main approaches to this problem, categorizing the basic model types as linear, nonlinear and nonparametric. We discuss publicly available software packages, and illustrate some of the procedures by application to a functional magnetic resonance imaging dataset.

Keywords: functional additive model, functional generalized linear model, functional linear model, functional polynomial regression, functional single-index model, nonparametric functional regression

1 Introduction

Regression with functional data is perhaps the most thoroughly researched topic within the broader literature on functional data analysis (FDA). It is common (e.g., Ramsay and Silverman, 2005; Reiss et al., 2010) to classify functional regression models into three categories according to the role played by the functional data in each model: scalar responses and functional predictors (“scalar-on-function” regression); functional responses and scalar predictors (“function-on-scalar” regression); and functional responses and functional predictors (“function-on-function” regression). This article focuses on the first case, and reviews linear, non-linear, and nonparametric approaches to scalar-on-function regression. Domains in which scalar-on-function regression (hereafter, SoFR) has been applied include chemometrics (Goutis, 1998; Marx and Eilers, 1999; Ferraty et al., 2010), cardiology (Ratcliffe et al., 2002), brain science (Reiss and Ogden, 2010; Goldsmith et al., 2011; Huang et al., 2013), climate science (Ferraty et al., 2005; Baíllo and Grané, 2009), and many others. We refer the reader to Morris (2015) for a recent review of functional regression in general, and to Wang et al. (2015) for a broad overview of FDA.

In cataloguing the many variants of SoFR, we have attempted to cast a wide net. A major contribution of this review is our attempt not merely to describe many approaches in what has become a vast literature, but to distill a coherent organization of these methods. To keep the scope somewhat manageable, we do not attempt to survey the functional classification literature. We acknowledge, however, that classification and regression are quite closely related—especially insofar as functional logistic regression, a special case of the functional generalized linear models considered below in Section 5.3, can be viewed as a classification method. Our emphasis is more methodological than theoretical, but for brevity we omit a number of important methodological issues such as confidence bands, goodness-of-fit diagnostics, outlier detection and robustness.

In the “vanilla” data setting (Sections 2 to 4), we consider an independent, identically distributed (iid) sample of random pairs $(X_i, y_i)$, $i = 1, \ldots, n$, where $y_i$ is a real-valued scalar outcome and the predictor $X_i$ belongs to a space $\mathcal{F}$ of real-valued functions on a finite interval $\mathcal{T}$. The most common choice for $\mathcal{F}$ seems to be the Hilbert space $L^2(\mathcal{T})$ with the usual inner product $\langle f, g \rangle = \int_{\mathcal{T}} f(t) g(t)\,dt$. Cuevas (2014) discusses more general spaces in which the functional data may “live”.

In the general practice of functional data analysis, functions are observed on a set of discrete grid points that can be sparse or dense, regular or irregular, and possibly subject to measurement errors. Several “preprocessing” steps are typically taken before modeling the data. Aside from smoothing the functional data, in some cases it is appropriate to apply registration or feature alignment, or if the grid points differ across observations, to interpolate to a dense common grid. Measurement error is expected to be low in some (e.g., chemometric) applications, but when it is not it can have important effects on the regression relation. Some methods (e.g., James, 2002) account explicitly for such error. Here, in order to keep the focus on the various regression models, we shall mostly assume functional data observed on a common dense grid with negligible error.

The functional linear model (FLM) is a natural extension of multiple linear regression to allow for functional predictors. Many techniques have been developed to fit this model, and we review these in Section 2. Nonlinear extensions of this basic approach are presented in Section 3. In Section 4 we discuss nonparametric approaches to SoFR, which are based on distances among the predictor functions. For simplicity of exposition, these three sections consider only the most basic and most common scenario: a single functional predictor and one real-valued scalar response. Generalizations and extensions, including the inclusion of scalar covariates, multiple functional predictors, generalized response values, and repeated observations, are reviewed in Section 5. Section 6 presents some ideas on how to choose among the many methods. Available software for SoFR is described in Section 7, and an application to brain imaging data appears in Section 8. Some concluding discussion is provided in Section 9.

2 Linear scalar-on-function regression

The scalar-response functional linear model can be expressed as

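$$y_i = \alpha + \int_{\mathcal{T}} X_i(t)\beta(t)\,dt + \varepsilon_i, \tag{1}$$

where $\beta(\cdot)$ is the coefficient function and the errors $\varepsilon_i$ are iid with mean zero and constant variance $\sigma^2$.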

The coefficient function β(·) has a natural interpretation: locations t with largest |β(t)| are most influential to the response. In order to enforce some regularity in the estimate, a common general approach to fitting model (1) is to expand β(·) (and possibly the functional predictors as well) in terms of a set of basis functions. Basis functions can be categorized as either (i) a priori fixed bases, most often splines or wavelets; or (ii) data-driven bases, most often derived by functional principal component analysis or functional partial least squares. The next two subsections discuss these two broad alternatives.

2.1 Regularized a priori basis functions

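One general class of methods for fitting equation (1) restricts the coefficient function $\beta(\cdot)$ to the span of an a priori set of basis functions, while imposing a penalty or a prior to prevent overfitting. Assume $\beta(t) = b(t)^T\gamma$, where $b(t) = [b_1(t), \ldots, b_K(t)]^T$ is a set of basis functions and $\gamma \in \mathbb{R}^K$. We estimate $\alpha$ and $\gamma$ by finding

$$(\hat\alpha, \hat\gamma) = \arg\min_{\alpha,\gamma}\left[\sum_{i=1}^n \left\{y_i - \alpha - \int_{\mathcal{T}} X_i(t)\, b(t)^T\gamma\,dt\right\}^2 + \lambda P(\gamma)\right] \tag{2}$$

for some $\lambda > 0$ and some penalty function $P(\cdot)$. The estimate of the coefficient function is thus $\hat\beta(t) = b(t)^T\hat\gamma$.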

In spline approaches (e.g., Hastie and Mallows, 1993; Marx and Eilers, 1999; Cardot et al., 2003), the penalty is generally a quadratic form $\gamma^T L\gamma$ which measures the roughness of $\beta(t) = b(t)^T\gamma$, and hence (2) is a generalized ridge regression problem. When $L = \int_{\mathcal{T}} b^{(q)}(t)\, b^{(q)}(t)^T\,dt$, as in Ramsay and Silverman (2005), the quadratic form equals $\int_{\mathcal{T}} \{\beta^{(q)}(t)\}^2\,dt$; cubic B-splines with a second derivative penalty ($q = 2$) are particularly popular. Alternatively, the P-spline formulation of Marx and Eilers (1999) takes $L = D^T D$ where $D$ is a differencing matrix. Higher values of $\lambda$ enforce greater smoothness in the coefficient function. Standard methods for automatic selection of $\lambda$ include restricted maximum likelihood and generalized cross-validation (Craven and Wahba, 1979; Ruppert et al., 2003; Reiss and Ogden, 2009; Wood, 2011). While B-splines are the basis functions most often combined with roughness penalties, other bases are possible. For example, Fourier bases may be employed when the functions are periodic. Marx and Eilers (1999) discuss smoothing of the curves when evaluating the integral in (2) in practice.
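As a rough illustration of how the penalized criterion (2) turns into a generalized ridge regression once the curves are observed on a common grid, consider the following Python sketch. It rests on several simplifying assumptions that are ours rather than those of any cited paper: the function name `fit_penalized_flm` is hypothetical, the coefficient function is represented by its values on the grid rather than by a B-spline basis, the integral is approximated by the trapezoidal rule, and the penalty is a P-spline-style difference penalty of the form D^T D.

```python
import numpy as np

def fit_penalized_flm(X, y, t, lam, diff_order=2):
    """Generalized ridge fit of a scalar-on-function linear model (sketch).

    X   : (n, p) array of curves observed on the common grid t (length p)
    y   : (n,) array of scalar responses
    lam : smoothing parameter (lambda > 0)
    Returns (alpha_hat, beta_hat), with beta_hat evaluated on the grid t.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    t = np.asarray(t, dtype=float)
    n, p = X.shape

    # Trapezoidal quadrature weights, so that (X * w) @ beta approximates
    # the integral of X_i(t) * beta(t) over the grid.
    w = np.empty(p)
    w[0] = (t[1] - t[0]) / 2.0
    w[-1] = (t[-1] - t[-2]) / 2.0
    w[1:-1] = (t[2:] - t[:-2]) / 2.0

    # Design matrix: unpenalized intercept column plus weighted curves.
    C = np.column_stack([np.ones(n), X * w])

    # Differencing matrix D and penalty D^T D; the intercept is not penalized.
    D = np.diff(np.eye(p), n=diff_order, axis=0)
    P = np.zeros((p + 1, p + 1))
    P[1:, 1:] = D.T @ D

    # Normal equations of the penalized least-squares criterion.
    coef = np.linalg.solve(C.T @ C + lam * P, C.T @ y)
    return coef[0], coef[1:]
```

Calling `fit_penalized_flm(X, y, t, lam)` for increasing values of `lam` should give progressively smoother estimates; in practice `lam` would be chosen by a criterion such as generalized cross-validation or restricted maximum likelihood, which this sketch does not implement.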

While splines and roughness penalties are a natural choice when the coefficient function is expected to be smooth, in some applications β(·) may be irregular, with features such as spikes or discontinuities. Wavelet bases (e.g., Ogden, 1997), which provide sparse representations for irregular functions, have received some attention in recent years. In the framework of (2), Zhao et al. (2012) propose the wavelet-domain lasso, which combines wavelet basis functions b(·) with the ℓ1 penalty in (2). Other sparsity penalties for wavelet-domain SoFR are considered by Zhao et al. (2015) and Reiss et al. (2015). Not all sparse approaches rely on wavelet bases; see, for example, James et al. (2009) and Lee and Park (2011).

More flexible, albeit potentially more complex, models can be built by replacing the penalty with an explicit prior structure in a fully Bayesian framework. Spline approaches of this type are developed by Crainiceanu and Goldsmith (2010) and Goldsmith et al. (2011); wavelet approaches based on Bayesian variable selection include those of Brown et al. (2001) and Malloy et al. (2010).

2.2 Regression on data-driven basis functions

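Alternatively, the coefficient function in (1) can be estimated using a data-driven basis. The most common choice is the eigenbasis associated with the covariance function $\Gamma(s, t) = \mathrm{Cov}[X(s), X(t)]$, i.e., the orthonormal set of functions $\phi_1(t), \phi_2(t), \ldots$ such that $\int_{\mathcal{T}} \Gamma(s, t)\phi_j(s)\,ds = \lambda_j\phi_j(t)$ for each $j$ and all $t \in \mathcal{T}$, for eigenvalues $\lambda_1 \geq \lambda_2 \geq \cdots \geq 0$. Expressing each of the functional predictors by its truncated Karhunen-Loève expansion

$$X_i(t) = \sum_{j=1}^{A} x_{ij}\phi_j(t) \tag{3}$$

and the coefficient function using the same basis, $\beta(t) = \sum_{j=1}^{A} \beta_j\phi_j(t)$, the integral in (1) becomes

$$\int_{\mathcal{T}} X_i(t)\beta(t)\,dt = \sum_{j=1}^{A} x_{ij}\beta_j,$$

reducing (1) to the ordinary multiple regression model

$$y_i = \alpha + \sum_{j=1}^{A} x_{ij}\beta_j + \varepsilon_i \tag{4}$$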

(Cardot et al., 1999). In practice, the eigenfunctions are estimated by functional principal component analysis (FPCA; Rice and Silverman, 1991; Silverman, 1996; Yao et al., 2005) and treated as fixed in subsequent analysis.

The number A of retained components acts as a tuning parameter that controls the shape and smoothness of β(·). Ways to choose A as a part of the regression analysis include explained variability, bootstrapping (Hall and Vial, 2006), information criteria (Yao et al., 2005; Li et al., 2013), and cross-validation (Hosseini-Nasab, 2013).
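The reduction of the FLM to a multiple regression on principal component scores can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions, not the procedure of any particular paper: the function name `fpcr` is hypothetical, the curves are taken to lie on a common, equally spaced grid with negligible error, integrals are approximated by Riemann sums, and the eigenfunctions are estimated from a singular value decomposition of the centered data matrix.

```python
import numpy as np

def fpcr(X, y, t, A):
    """Functional principal component regression on a uniform grid (sketch).

    X : (n, p) array of curves, y : (n,) responses, t : (p,) grid,
    A : number of retained components.
    Returns (alpha_hat, beta_hat), with beta_hat evaluated on the grid t.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = X.shape
    dt = t[1] - t[0]                       # uniform grid spacing (assumed)

    mu = X.mean(axis=0)                    # pointwise mean curve
    Xc = X - mu                            # centered curves

    # Right singular vectors of the centered data estimate the eigenfunctions.
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    phi = Vt[:A] / np.sqrt(dt)             # rescaled so sum(phi_j**2) * dt = 1

    # FPC scores: Riemann-sum approximation of the integral of Xc_i * phi_j.
    scores = Xc @ phi.T * dt

    # Ordinary least squares of y on an intercept and the scores.
    Z = np.column_stack([np.ones(n), scores])
    coef, *_ = np.linalg.lstsq(Z, y, rcond=None)

    beta = phi.T @ coef[1:]                # beta(t) = sum_j beta_j * phi_j(t)
    alpha = coef[0] - dt * (mu @ beta)     # intercept on the uncentered scale
    return alpha, beta
```

The tuning parameter `A` plays the role described above: a small `A` yields a smooth but possibly biased coefficient function, and a large `A` a rougher one. Choosing it by cross-validation or an information criterion is left out of this sketch.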

Data-driven bases other than functional principal components can also be utilized, such as functional partial least squares (FPLS; Preda and Saporta, 2005; Escabias et al., 2007; Reiss and Ogden, 2007; Aguilera et al., 2010; Delaigle and Hall, 2012) and functional sliced inverse regression (Ferré and Yao, 2003, 2005).

2.3 Hybrid approaches

A number of papers combine the data-driven basis and a priori basis approaches of the previous two subsections. For example, Amato et al. (2006) employ so-called sufficient dimension reduction methods for component selection, but implement them in the wavelet domain. The functional principal component regression method referred to as FPCRR in Reiss and Ogden (2007) restricts the coefficient function to the span of leading functional principal components, but fits model (1) by penalized splines (see also Horváth and Kokoszka, 2012; Araki et al., 2013). Goldsmith et al. (2011) use FPCA to pre-process predictor functions and penalized splines to model the coefficient function. The regularization strategy of Randolph et al. (2012) incorporates information from both the predictors and a linear penalty operator.

2.4 Functional polynomial regression

We conclude the discussion of FLMs with functional polynomial models. These are linear in the coefficients but not in the predictors, in contrast to the models of the next two sections, which are not linear in either sense.

The functional quadratic regression model of Yao and Müller (2010) can be expressed as

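$$y_i = \alpha + \int_{\mathcal{T}} X_i(t)\beta(t)\,dt + \int_{\mathcal{T}}\int_{\mathcal{T}} X_i(s)X_i(t)\gamma(s, t)\,ds\,dt + \varepsilon_i. \tag{5}$$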

Here we have both linear and quadratic coefficient functions, β(t) and γ(s, t); when the latter is zero, (5) reduces to the FLM (1). By expressing elements of (5) in terms of functional principal components, responses can be regressed on the principal component scores. Adding higher-order interaction terms results in more general functional polynomial regression models.

3 Nonlinear scalar-on-function regression

In many applications, the assumption of a linear relationship between $X$ and $y$ is too restrictive to describe the data. In this section we review several models that relax the linearity assumption; Section 4 will describe models (usually termed “nonparametric”) that are even more flexible than those described here.

3.1 Single-index model

We begin by presenting the functional version of the single-index model (Stoker, 1986):

$$y_i = h\left(\int_{\mathcal{T}} X_i(t)\beta(t)\,dt\right) + \varepsilon_i, \tag{6}$$

which extends the FLM by allowing the function h(·) to be any smooth function defined on the real line. Fitting this model requires estimation of both the coefficient function β(·) and the unspecified function h(·), and is typically accomplished iteratively. For given β(·), h(·) can be estimated using splines, kernels or any technique for estimating a smooth function; for given h(·), β(·) can be estimated in a similar fashion; and the two steps are alternated until convergence. Several of the methods described in Section 2 for estimating an FLM have been combined with a spline method for estimating h(·) in (6) (e.g., Eilers et al., 2009). Alternatively, a kernel estimator can be used for h(·) (e.g., Aït-Saïdi et al., 2008; Ferraty et al., 2011).

3.2 Multiple-index model

A natural extension of model (6) is to allow multiple linear functionals of the predictor via the multiple-index model

$$y_i = \sum_{j=1}^{J} h_j\left(\int_{\mathcal{T}} X_i(t)\beta_j(t)\,dt\right) + \varepsilon_i. \tag{7}$$

Models of this kind, which extend projection pursuit regression to the functional predictor case, are developed by James and Silverman (2005), Chen et al. (2011), and Ferraty et al. (2013).

Setting $\beta_j(t) = \phi_j(t)$, the $j$th FPC basis function, reduces (7) to $y_i = \sum_{j=1}^{J} h_j(x_{ij}) + \varepsilon_i$, where we use $x_{ij}$ to denote FPC scores as in (3). Müller and Yao (2008) refer to this as a “functional additive model,” generalizing the FLM of Section 2.2 which reduced to the multiple regression model (4) with respect to the FPC scores. An extension of this method, incorporating a sparsity-inducing penalty on the additive components, is proposed by Zhu et al. (2014).

3.3 Continuously additive model

Müller et al. (2013) and McLean et al. (2014) propose the model

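$$y_i = \alpha + \int_{\mathcal{T}} f[X_i(t), t]\,dt + \varepsilon_i, \tag{8}$$

where $f(\cdot, \cdot)$ is a smooth bivariate function that can be estimated by penalized tensor product B-splines. As an aid to interpretation, note that if $s_\ell = s_0 + \ell\Delta s$, $\ell = 1, \ldots, L$, and $\mathcal{T} = [s_0, s_L]$, then for large $L$, (8) implies

$$E(y_i \mid X_i) = \alpha + \int_{\mathcal{T}} f[X_i(s), s]\,ds \approx \alpha + \sum_{\ell=1}^{L} g_\ell[X_i(s_\ell)],$$

where $g_\ell(x) = f(x, s_\ell)\Delta s$. The expression at right shows that (8) is the limit (as $L \to \infty$) of an additive model or, in the generalized linear extension considered by McLean et al. (2014), of a generalized additive model (Hastie and Tibshirani, 1990; Wood, 2006). Hence McLean et al. (2014) employ the term “functional generalized additive model.”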

4 Nonparametric scalar-on-function regression

The monograph of Ferraty and Vieu (2006) has popularized a nonparametric paradigm for SoFR, in which the model for the conditional mean of y is not only nonlinear but essentially unspecified, i.e.,

$$y_i = m(X_i) + \varepsilon_i \tag{9}$$

for some operator $m: \mathcal{F} \to \mathbb{R}$. Note that mathematically the FLM can also be formulated as an operator, but as one that is linear, whereas Ferraty and Vieu (2006) focus primarily on nonlinear operators $m$. (For further discussion of the terms nonlinear and nonparametric SoFR, see Section 9.)

Approaches to estimating $m$ extend traditional nonparametric regression methods (Härdle et al., 2013) from the case in which the $X_i$ are scalars or vectors to the case of function-valued $X_i$. For example, the most popular nonparametric approach, which we consider next, generalizes the Nadaraya-Watson (NW) smoother (Nadaraya, 1964; Watson, 1964) to functional predictors.

4.1 Functional Nadaraya-Watson estimator

The functional NW estimator of the conditional mean is

$$\hat{m}(X) = \frac{\sum_{i=1}^{n} K\left(h^{-1}d(X_i, X)\right) y_i}{\sum_{i=1}^{n} K\left(h^{-1}d(X_i, X)\right)}, \tag{10}$$

where $K(\cdot)$ is a kernel function, which we define as a function supported and decreasing on $[0, \infty)$; $h > 0$ is a bandwidth; and $d(\cdot, \cdot)$ is a semi-metric. Here we define a semi-metric on $\mathcal{F}$ as a function $d: \mathcal{F} \times \mathcal{F} \to [0, \infty)$ that is symmetric and satisfies the triangle inequality, but for which $d(f_1, f_2) = 0$ does not imply $f_1 = f_2$. (Such a function is often called a “pseudo-metric”; our terminology has the advantage of implying that a semi-norm $\|\cdot\|$ on $\mathcal{F}$ induces a semi-metric $d(f_1, f_2) = \|f_1 - f_2\|$.) Smaller values of $d(X_i, X)$ imply larger $K(h^{-1}d(X_i, X))$ and thus larger weight assigned to $y_i$.

Ideally, the bandwidth $h$ should strike a good balance between the squared bias of $\hat{m}(X)$ (which increases with $h$) and its variance (which decreases as $h$ increases) (Ferraty et al., 2007). Rachdi and Vieu (2007) consider a functional cross-validation method for bandwidth selection, and prove its asymptotic optimality. Shang (2013, 2014a,b, 2015) and Zhang et al. (2014) propose a Bayesian method for simultaneously selecting the bandwidth and the unknown error density, and show that it attains greater estimation accuracy than functional cross-validation.

Observe that if the fixed bandwidth $h$ in (10) is replaced by $h_k(X)$, the $k$th-smallest of the distances $d(X_1, X), \ldots, d(X_n, X)$, then we instead have a functional version of (weighted) k-nearest neighbors regression (Burba et al., 2009).
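The weighted-average structure of the functional NW estimator can be made concrete with a short Python sketch. The names `l2_semimetric` and `functional_nw` are hypothetical, the curves are assumed to lie on a common, equally spaced grid, the default semi-metric is a Riemann-sum approximation to the L2 distance, and the kernel is taken to be the half-Gaussian K(u) = exp(-u^2/2) on [0, ∞), which is one admissible choice among many.

```python
import numpy as np

def l2_semimetric(X, x_new, t):
    """Approximate L2 distance between each row of X and the curve x_new."""
    dt = t[1] - t[0]                       # uniform grid spacing (assumed)
    return np.sqrt(np.sum((X - x_new) ** 2, axis=1) * dt)

def functional_nw(X, y, x_new, t, h, semimetric=l2_semimetric):
    """Functional Nadaraya-Watson prediction at the curve x_new (sketch).

    X : (n, p) training curves, y : (n,) responses, t : (p,) grid,
    h : bandwidth (> 0), semimetric : function returning n distances.
    """
    d = semimetric(X, x_new, t)
    # Half-Gaussian kernel: decreasing on [0, inf), so closer curves
    # receive larger weights.
    weights = np.exp(-0.5 * (d / h) ** 2)
    # If h is so small that every weight underflows to zero, the ratio
    # below is undefined (NaN); a practical implementation would guard this.
    return float(np.sum(weights * y) / np.sum(weights))
```

With a very large bandwidth the prediction approaches the sample mean of the responses, and with a very small one it is dominated by the nearest training curve, which mirrors the bias-variance trade-off described above.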

4.2 Choice of semi-metric

The performance of the functional NW estimator can depend crucially on the chosen semi-metric (Geenens, 2011). Optimal selection of the semi-metric is discussed by Ferraty and Vieu (2006, Chapters 3 and 13) and is addressed using marginal likelihood by Shang (2015).

For smooth functional data, it may be appropriate to use the derivative-based semi-metric

$$d_q^{\mathrm{deriv}}(X_1, X_2) = \sqrt{\int_{\mathcal{T}} \left[X_1^{(q)}(t) - X_2^{(q)}(t)\right]^2 dt},$$

where $X^{(q)}$ denotes the $q$th-order derivative of $X$. In practice $q = 2$ is a popular choice (e.g., Goutis, 1998; Ferraty and Vieu, 2002, 2009). The use of B-spline approximation for each curve allows straightforward computation of the derivatives.

For non-smooth functional data, it may be preferable to adopt a semi-metric based on FPCA truncated at $A$ components,

$$d_A^{\mathrm{PCA}}(X_1, X_2) = \sqrt{\sum_{j=1}^{A} \left(\int_{\mathcal{T}} [X_1(t) - X_2(t)]\phi_j(t)\,dt\right)^2} = \sqrt{\sum_{j=1}^{A} (x_{1j} - x_{2j})^2},$$

where the last expression uses truncated expansions defined as in (3). A semi-metric based on functional partial least squares components (Preda and Saporta, 2005; Reiss and Ogden, 2007) can be defined analogously. Chung et al. (2014) introduced a semi-metric based on thresholded wavelet coefficients of the functional data objects.
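The two semi-metrics just described can be sketched in Python as follows. This is an illustration under our own assumptions: the function names are hypothetical, the curves lie on a common, equally spaced grid, derivatives are approximated by finite differences (via `numpy.gradient`) rather than by the B-spline approximation mentioned above, and the eigenfunctions are estimated from a singular value decomposition of the centered data.

```python
import numpy as np

def deriv_semimetric(x1, x2, t, q=2):
    """Derivative-based semi-metric, using finite-difference derivatives."""
    diff = np.asarray(x1, dtype=float) - np.asarray(x2, dtype=float)
    for _ in range(q):
        diff = np.gradient(diff, t)        # one more derivative of x1 - x2
    dt = t[1] - t[0]                       # uniform grid spacing (assumed)
    return float(np.sqrt(np.sum(diff ** 2) * dt))

def fpca_basis(X, t, A):
    """First A estimated eigenfunctions, as rows, orthonormal in L2."""
    dt = t[1] - t[0]
    Xc = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Vt[:A] / np.sqrt(dt)

def fpca_semimetric(x1, x2, phi, t):
    """Semi-metric based on the leading FPC scores of x1 - x2."""
    dt = t[1] - t[0]
    score_diff = phi @ (np.asarray(x1, dtype=float) - np.asarray(x2, dtype=float)) * dt
    return float(np.sqrt(np.sum(score_diff ** 2)))
```

Both functions return zero for some pairs of distinct curves, which is what makes them semi-metrics rather than metrics: the first-derivative version ignores vertical shifts, and the FPCA version ignores any difference orthogonal to the retained components.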

Given a set of possible semi-metrics with no a priori preference for any particular option, one can select the one that minimizes a prediction error criterion such as a cross-validation score; more generally one can adopt an ensemble predictor (see Section 6.2.2 and Fuchs et al., 2015).

4.3 Functional local linear estimator

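The functional NW estimator (10) can also be written as

$$\hat{m}(X) = \arg\min_{a \in \mathbb{R}} \sum_{i=1}^{n} (y_i - a)^2 K\left(h^{-1}d(X_i, X)\right).$$

As an alternative to this “local constant” estimator, Baíllo and Grané (2009) consider a functional analogue of local polynomial smoothing (Fan and Gijbels, 1996), specifically a local (functional) linear approximation

$$m(X') \approx a + \langle b, X' - X \rangle$$

for $X'$ near $X$, where $a \in \mathbb{R}$ and $b \in L^2(\mathcal{T})$. This motivates the minimization problem

$$\min_{a, b} \sum_{i=1}^{n} \left\{y_i - a - \langle b, X_i - X \rangle\right\}^2 K\left(h^{-1}d(X_i, X)\right),$$

whose solution $(\hat{a}, \hat{b})$ yields the functional local linear estimate $\hat{m}(X) = \hat{a}$. Barrientos-Marin et al. (2010) propose a compromise between the NW (local constant) and local linear estimators, while Boj et al. (2010) offer a formulation based on more general distances.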

4.4 A reproducing kernel Hilbert space approach

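A rather different nonparametric method (Preda, 2007) is based on the notion of a positive semidefinite kernel $k(\cdot, \cdot)$ (Wahba, 1990; Schölkopf and Smola, 2002), which can roughly be thought of as defining a similarity between functions $X_1, X_2 \in \mathcal{F}$; the Gaussian kernel $k(X_1, X_2) = \exp\{-\|X_1 - X_2\|^2/(2\sigma^2)\}$ for some $\sigma > 0$ is an example. Briefly, any such $k(\cdot, \cdot)$ (not to be confused with the univariate kernel function $K(\cdot)$ of (10)) defines a reproducing kernel Hilbert space (RKHS) $\mathcal{H}_k$ of maps $\mathcal{F} \to \mathbb{R}$, equipped with an inner product $\langle \cdot, \cdot \rangle_{\mathcal{H}_k}$. Preda (2007) considers more general loss functions, but for squared error loss his proposed estimate of $m$ in (9) is

$$\hat{m} = \arg\min_{m \in \mathcal{H}_k} \left[\sum_{i=1}^{n} \{y_i - m(X_i)\}^2 + \lambda \|m\|_{\mathcal{H}_k}^2\right], \tag{11}$$

where $\lambda$ is a non-negative regularization parameter, as in criterion (2) for the FLM. A key RKHS result, the representer theorem (Kimeldorf and Wahba, 1971; Schölkopf et al., 2001), leads to a much-simplified minimization in terms of the Gram matrix $\mathbf{K} = [k(X_i, X_j)]_{1 \leq i, j \leq n}$: the solution to (11) can be written as $\hat{m}(\cdot) = \sum_{i=1}^{n} \gamma_i k(X_i, \cdot)$, where $\gamma = (\gamma_1, \ldots, \gamma_n)^T$ minimizes

$$\|y - \mathbf{K}\gamma\|^2 + \lambda \gamma^T \mathbf{K} \gamma,$$

with $y = (y_1, \ldots, y_n)^T$.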
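To indicate how the representer theorem makes this estimator computable, here is a minimal Python sketch of the finite-dimensional problem. It is written under assumptions of our own: the function names are hypothetical, the criterion is taken to be the squared-error loss plus λ times the quadratic form γ^T K γ in the Gram matrix K, the kernel is a Gaussian kernel with the parametrization exp(-d^2/(2σ^2)), and d is a Riemann-sum approximation to the L2 distance on a common, equally spaced grid.

```python
import numpy as np

def gaussian_gram(X1, X2, t, sigma):
    """Gram matrix of a Gaussian kernel between rows of X1 and rows of X2."""
    dt = t[1] - t[0]                       # uniform grid spacing (assumed)
    sq = (np.sum(X1 ** 2, axis=1)[:, None]
          + np.sum(X2 ** 2, axis=1)[None, :]
          - 2.0 * X1 @ X2.T) * dt          # squared approximate L2 distances
    sq = np.maximum(sq, 0.0)               # remove tiny negative rounding errors
    return np.exp(-sq / (2.0 * sigma ** 2))

def fit_rkhs(X, y, t, lam, sigma):
    """Coefficients gamma minimizing ||y - K gamma||^2 + lam * gamma' K gamma."""
    K = gaussian_gram(X, X, t, sigma)
    # Setting the gradient to zero gives K[(K + lam*I) gamma - y] = 0,
    # which is solved by gamma = (K + lam*I)^{-1} y.
    return np.linalg.solve(K + lam * np.eye(len(y)), y)

def predict_rkhs(X_train, gamma, X_new, t, sigma):
    """Evaluate m_hat(x) = sum_i gamma_i * k(X_i, x) at each row of X_new."""
    return gaussian_gram(X_new, X_train, t, sigma) @ gamma
```

In use, `gamma = fit_rkhs(X, y, t, lam, sigma)` is followed by `predict_rkhs(X, gamma, X_new, t, sigma)`; both `lam` and `sigma` are tuning parameters that would typically be chosen by cross-validation, which is not shown here.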

Note that this nonparametric formulation is distinct from the RKHS approach of Cai and Yuan (2012) to the functional linear model (1), in which the coefficient function $\beta(\cdot)$, rather than the (generally nonlinear) map $m$, is viewed as an element of an RKHS. It is also distinct from the RKHS method of Zhu et al. (2014).

Expression (11) is a functional analogue of the criterion minimized in smoothing splines (Wahba, 1990), much as (10) generalizes the NW smoother to functional predictors. We believe that, just as the RKHS-based smoothing spline paradigm has spawned a very flexible array of tools for non- and semiparametric regression (Ruppert et al., 2003; Wood, 2006; Gu, 2013), there is great potential for building upon the regularized RKHS approach to nonparametric SoFR and thereby, perhaps, connecting FDA with machine learning.

As a further link between the (reproducing) kernel approach of Preda (2007) and other nonparametric approaches (such as the NW estimator) that are based on (semi-metric) distances among functions, we note that there is a well-known duality between kernels and distances (e.g., Faraway, 2012, p. 410). Since both kernels and distances can be defined for more general data types than functional data, the nonparametric FDA paradigm is readily extensible to “object-oriented” data analysis (Marron and Alonso, 2014).

5 Generalizations and extensions

Although many of the references cited in the previous three sections have considered more general scenarios, our presentation thus far has considered only the simplest situation: a single functional predictor and a real-valued response. However, situations that arise in practice often require various extensions, including scalar covariates, multiple functional predictors, and models appropriate for responses that arise from a general exponential family distribution. In this section we describe some of these generalizations and extensions.

5.1 Including scalar covariates

Including scalar covariates in the FLM (1) is fairly straightforward. For methods based on penalization, covariates can be included by simply applying the penalty only to the spline basis coefficients. When using a data-driven basis, as noted above, the FLM reduces to the ordinary multiple regression model (4), so adding scalar covariates is even more routine. With nonlinear and nonparametric strategies, however, incorporating scalar covariates can be somewhat more challenging. For example, Aneiros-Pérez and Vieu (2006, 2008) and Aneiros-Pérez et al. (2011) considered semi-functional partial linear models of the form

$$y_i = z_i^T\boldsymbol{\beta} + m(X_i) + \varepsilon_i, \tag{12}$$

which include linear effects of scalar covariates $z_i$, estimated using weighted least squares, and effects of functional predictors $X_i$, estimated nonparametrically via NW weights.

5.2 Multiple functional predictors

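A number of recent papers have considered the situation in which the $i$th observation includes multiple functional predictors $X_{i1}, \ldots, X_{iR}$, possibly with different domains $\mathcal{T}_1, \ldots, \mathcal{T}_R$. The FLM (1) extends naturally to the multiple functional regression model

$$y_i = \alpha + \sum_{r=1}^{R} \int_{\mathcal{T}_r} X_{ir}(t)\beta_r(t)\,dt + \varepsilon_i.$$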

Penalized or fully Bayesian approaches to selecting among candidate functional predictors have been proposed by Zhu et al. (2010), Gertheiss et al. (2013) and Lian (2013).

One can also consider two types of functional interaction terms. An interaction between a scalar and a functional predictor (e.g., McKeague and Qian, 2014) is formally similar to another functional predictor, whereas an interaction between two functional predictors (e.g., Yang et al., 2013) resembles a functional quadratic term as in (5).

The “functional additive regression” model of Fan et al. (2015) extends the functional single-index model (6) to the case of multiple predictors.

In the nonparametric FDA literature, Febrero-Bande and González-Manteiga (2013) consider the model

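$$E(y_i \mid X_{i1}, \ldots, X_{iR}) = \eta\left(\alpha + \sum_{r=1}^{R} m_r(X_{ir})\right), \tag{13}$$

where $\eta$ can be a known function, or estimated nonparametrically; the $m_r(\cdot)$’s are nonlinear partial functions of $X_{ir}$. Model (13), like model (8), is referred to by the authors as a “functional generalized additive model.” Lian (2011) studied the “functional partial linear regression” model

$$y_i = \int_{\mathcal{T}_1} X_{i1}(t)\beta(t)\,dt + m(X_{i2}) + \varepsilon_i, \tag{14}$$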

which combines linear and nonparametric functional terms. Note that both predictors in (14) are functional, whereas the linear terms in the “semi-functional” model (12) are scalars.

5.3 Responses with exponential family distributions

In all models considered to this point, the response variable has been a continuous, real-valued scalar. In many practical applications the response is discrete, such as a binary outcome indicating the presence or absence of a disease. Many of the above methods have been generalized to allow responses with exponential-family distributions, including both linear (Marx and Eilers, 1999; James, 2002; Müller and Stadtmüller, 2005; Reiss and Ogden, 2010; Goldsmith et al., 2011; Aguilera-Morillo et al., 2013) and nonlinear (James and Silverman, 2005; McLean et al., 2014) models. For a single functional predictor and no scalar covariates, the functional generalized linear model can be written as $g(\mu_i) = \alpha + \int_{\mathcal{T}} X_i(t)\beta(t)\,dt$, where $\mu_i = E(y_i \mid X_i)$ and $g$ is a known link function. Estimation for this model is analogous to the methods described in Section 2: using a data-driven basis recasts the functional model as a standard generalized linear model, and regularized basis expansion methods can be implemented using penalization methods for GLMs. Scalar covariates and multiple functional predictors can be incorporated as in Sections 5.1 and 5.2.

5.4 Multilevel and longitudinal SoFR

In recent years it has become more common to collect repeated functional observations from each subject in a sample. In these situations the data are $(X_{ij}, y_{ij})$ for observations $j = 1, \ldots, J_i$ within each of subjects $i = 1, \ldots, n$. A relevant extension of the FLM is

$$y_{ij} = \alpha + b_i + \int_{\mathcal{T}} X_{ij}(t)\beta(t)\,dt + \varepsilon_{ij},$$

with random effects $b_i$ used to model subject-specific effects. Goldsmith et al. (2012) directly extended the spline-based estimation strategy described in Section 2.1 for this model, and Gertheiss et al. (2013) use longitudinal FPCA (Greven et al., 2010) to construct a data-driven basis for coefficient functions. Crainiceanu et al. (2009) considered the related setting in which the functional predictor is repeatedly observed but the response is not, and estimate the coefficient function using a data-driven basis derived using multilevel FPCA (Di et al., 2009).

5.5 Multidimensional functional predictors

While most methodological development of SoFR has focused on one-dimensional functional predictors as in (1), a growing number of authors have considered two- or three-dimensional signals or images as predictors in regression models. While this extension is relatively straightforward conceptually (replacing the single integral in (1) with a double or triple integral), it can involve significant technical challenges, including higher “natural dimensionality”, the need for additional tuning parameter values and correspondingly greater computational requirements.

Within the linear SoFR model framework, Marx and Eilers (2005) extend their penalized spline regression to handle higher dimensional signals by expressing the signals in terms of a tensor B-spline basis and applying both a “row” and a “column” penalty. Reiss and Ogden (2010) consider two-dimensional brain images as predictors, expressing them in terms of their eigenimages and enforcing smoothness via radially symmetric penalization. Holan et al. (2010) and Holan et al. (2012) reduce the dimensionality of the problem by projecting the images on their (2D) principal components. Guillas and Lai (2010) apply penalized bivariate spline methods and consider two-dimensional functions on irregular regions. Zhou et al. (2013) and Zhou and Li (2014) propose methods that exploit the matrix or tensor structure of image predictors; Huang et al. (2013) and Goldsmith et al. (2014) develop Bayesian regression approaches for three-dimensional images; and Wang et al. (2014) and Reiss et al. (2015) describe wavelet-based methods.

5.6 Other extensions

Our stated goal in this paper has been to describe and classify major areas of research in SoFR, and we acknowledge that any attempt to list all possible variants of SoFR would be futile. In this subsection we very briefly mention a few models that do not fit neatly into the major paradigms discussed in Sections 2 through 4 or in their direct extensions in Sections 5.1 through 5.5, knowing this list is incomplete.

5.6.1 Other non-iid settings

This review has focused on iid data pairs $(X_i, y_i)$, with the exception of Section 5.4. Other departures from the iid assumption have received some attention in the SoFR literature. For example, Delaigle et al. (2009) considered heteroscedastic error variance, while Ferraty et al. (2005) studied α-mixing data pairs.

5.6.2 Mixture regression

A data set may be divided into latent classes, such that each class has a different regression relationship of the form (1). This is the model considered by Yao et al. (2011), who represent the predictors in terms of their functional principal components and apply a multivariate mixture regression model fitting technique. Ciarleglio and Ogden (2016) consider sparse mixture regression in the wavelet domain.

5.6.3 Point impact models

In some situations it may be expected that only one point, or several points, along the function will be relevant to predicting the outcome. The model (1) could be adapted to reflect this by replacing the coefficient function β by a Dirac delta function at some point θ. This is the “point impact” model considered by Lindquist and McKeague (2009) and McKeague and Sen (2010), who consider various methods for selecting one or more of these points. Ferraty et al. (2010) consider the same situation but within a nonparametric setting.

5.6.4 Derivatives in SoFR

Derivatives have been incorporated in SoFR investigations in two completely different senses. The first is ordinary (first or higher-order) derivatives of functional observations with respect to the argument t. Some types of functional data may reflect vertical or linear shifts that are irrelevant to predicting y (Goutis, 1998). A key example is near-infrared (NIR) spectroscopy curves, which are used by analytical chemists to predict the contents of a sample: for example, a wheat sample’s spectrum may play the role of a functional predictor, with protein content as the response. It is sometimes helpful to remove such shifts by taking first or second differences (approximate derivatives) of the curves as a preprocessing step. For the same reason, derivative semi-metrics (see above, Section 4.2) can be more useful than the L2 metric for nonparametric SoFR with spectroscopy curves (Ferraty and Vieu, 2002).

A second type of derivative, studied by Hall et al. (2009), is the functional (Gâteaux) derivative of the operator $m$ in the nonparametric model (9). Roughly speaking, for a given $X \in \mathcal{F}$, the functional derivative is a linear operator $m_X$ such that for a small increment $\Delta X$ we have $m(X + \Delta X) \approx m(X) + m_X(\Delta X)$. In the linear case, the functional derivative is given by the coefficient or slope function, i.e., $m_X(g) = \int_{\mathcal{T}} g(t)\beta(t)\,dt$ for all $X$ and all $g$. In the nonparametric case, functional derivatives allow one to estimate functional gradients, which are in effect locally varying slopes. Müller and Yao (2010) simplify the study of functional derivatives and gradients by imposing the additive model framework of Müller and Yao (2008) (see above, Section 3.2).

5.6.5 Conditional quantiles and mode

Up to now we have been concerned with modeling the mean of $y$ (or a transformation thereof, in the GLM case), conditional on functional predictors. Cardot et al. (2005) propose instead to estimate a given quantile of $y$, conditional on $X$, by minimizing a penalized criterion that is similar to (2), but with the squared error loss in the first term replaced by the “check function” used in ordinary quantile regression (Koenker and Bassett, 1978). Chen and Müller (2012), on the other hand, estimate the entire conditional distribution of $y$ by fitting functional binary GLMs with $I(y \leq y_0)$ (where $I(\cdot)$ denotes an indicator) as response, for a range of values of $y_0$. Quantiles can then be inferred by inverting the conditional distribution function. Ferraty et al. (2005) also estimate the entire conditional distribution, but adopt a nonparametric estimator of a weighted-average form reminiscent of (10). That paper and a number of subsequent ones have studied applications in the geosciences, such as an extreme value analysis of ozone concentration (Quintela-del-Río and Francisco-Fernández, 2011). The mode of the nonparametrically estimated conditional distribution serves as an estimate of the conditional mode of $y$, whose convergence rate is derived by Ferraty et al. (2005).

6 Which method to choose?

Sections 2 through 5 have presented a perhaps overwhelming variety of models and methods for SoFR. How can a user decide which is the appropriate method in a given situation? We offer here a few suggestions, which may be divided into a priori and data-driven considerations.

6.1 A priori considerations

Some authors have questioned whether the flexibility of the general nonparametric model (9) is worth the price paid in terms of convergence rates. The small-ball probability typically converges to 0 exponentially fast as u → 0, and consequently the functional NW estimator (10) converges at a rate that is logarithmic, as opposed to a power of 1/n (Hall et al., 2009). Geenens (2011) succinctly interprets the problem as a function-space “curse of dimensionality,” but shows that a well-chosen semi-metric d increases the concentration of the functional predictors and thus allows for more favorable convergence of the NW estimator to m.
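The role of the semi-metric can be illustrated with a small sketch of a kernel-weighted average predictor. The kernel, the bandwidth, the two distance functions and the toy curves below are assumptions chosen for illustration rather than the exact form of estimator (10). The response depends only on the shape of each curve, so a derivative-based semi-metric, which ignores vertical shifts, keeps relevant training curves close to the new curve.

```python
import math

def l2_distance(x1, x2, dt):
    """Discretized L2 distance between two curves on a common grid."""
    return math.sqrt(dt * sum((a - b) ** 2 for a, b in zip(x1, x2)))

def first_differences(x, dt):
    return [(x[k + 1] - x[k]) / dt for k in range(len(x) - 1)]

def derivative_semimetric(x1, x2, dt):
    """L2 distance between first differences; zero for curves differing by a constant."""
    return l2_distance(first_differences(x1, dt), first_differences(x2, dt), dt)

def nw_predict(x_new, curves, ys, h, dist, dt):
    """Kernel-weighted average of ys with weights K(dist / h), K(u) = 1 - u^2 on [0, 1)."""
    weights = []
    for c in curves:
        u = dist(x_new, c, dt) / h
        weights.append(1.0 - u * u if u < 1.0 else 0.0)
    total = sum(weights)
    if total == 0.0:
        return None  # no training curve falls within the bandwidth
    return sum(w * y for w, y in zip(weights, ys)) / total

DT = 0.02
GRID = [k * DT for k in range(51)]

def make_curve(amplitude, shift):
    return [amplitude * math.sin(2 * math.pi * t) + shift for t in GRID]

# Toy training set: the response equals the amplitude and ignores the vertical shift.
train_curves, train_y = [], []
for amplitude in (0.5, 1.0, 1.5, 2.0):
    for shift in (-1.0, 0.0, 1.0):
        train_curves.append(make_curve(amplitude, shift))
        train_y.append(amplitude)

x_new = make_curve(1.0, 5.0)  # same shape as some training curves, far larger shift
pred_deriv = nw_predict(x_new, train_curves, train_y, 1.0, derivative_semimetric, DT)
pred_l2 = nw_predict(x_new, train_curves, train_y, 1.0, l2_distance, DT)
print(pred_deriv, pred_l2)
```

Under the plain L2 distance no training curve lies within the bandwidth of the new curve, whereas under the derivative-based semi-metric the curves with matching amplitude are at distance essentially zero.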

Aside from asymptotic properties, choice of a method may be guided by the kind of interpretation desired, which may in turn depend on the application. As noted in Section 2, the FLM offers a coefficient function, which has an intuitive interpretation. Nonlinear and especially nonparametric model results are less interpretable in this sense. But for applications in which one is interested only in accurate prediction, this advantage of the FLM is immaterial.

Regarding the FLM, we noted in Section 2.1 that smoothness of the data is an important factor when choosing among a priori basis types, such as splines vs. wavelets. Among data-driven basis approaches to the FLM (Section 2.2), those that rely on a more parsimonious set of components may sometimes be preferred, again on grounds of interpretability. In this regard, FPLS has an advantage over FPCR, as emphasized by Delaigle and Hall (2012). On the other hand, if one is interested only in the coefficient function, not in contributions of the different components, then the relative simplicity of FPCR is an advantage over FPLS. A key advantage of FPCR over spline or wavelet methods is that it is more readily applied when the functional predictors are sampled not densely but sparsely and/or irregularly (longitudinal data).

We tend to view a wide variety of methods as effective in at least some settings, but one method for which we have limited enthusiasm is selecting a “best subset” among a large set of FPCs, as opposed to regressing on the leading FPCs. Whereas leading FPCs offer an optimal approximation in the sense of Eckart and Young (1936), if one is not selecting the leading FPCs then it is not clear why the FPC basis is the right one to use at all. Another basis that is by construction relevant for explaining y, such as FPLS components, may be more appropriate. This view finds support in the empirical results of Febrero-Bande et al. (2015).

Other considerations in choosing among methods include conceptual complexity, the number of tuning parameters that must be selected, sensitivity to how preprocessing is done, and computational efficiency. In practice, a primary factor for many users is the availability of software that is user-friendly and has the flexibility to handle data sets that may be more complex than the iid setup of the Introduction. Available software is reviewed in Section 7, and the real-data example presented in Section 8 highlights the need for software that is flexible.

6.2 Data-driven choice

Relying on one’s a priori preference is unlikely to be the best strategy for choosing how to perform SoFR. Next we discuss two ways to let the data help determine the best approach.

6.2.1 Hypothesis testing

Hypothesis testing is an appropriate paradigm when one wishes to compare a simpler versus a more complex SoFR model, with strong evidence required in order to reject the former in favor of the latter. By now there are enough articles on tests of SoFR-related hypotheses to justify a separate review paper; here we offer just a few remarks. Many papers have proposed tests for the FLM (1). Some methods test the null hypothesis β(t) ≡ 0 in (1) versus the alternative that β(t) ≠ 0 for some t (e.g., Cardot et al., 2003; Lei, 2014). The (restricted) likelihood ratio test of Swihart et al. (2014) can be applied either to that zero-effect null or, alternatively, to the null hypothesis that β(t) is a constant, a hypothesis that, if true, allows one to regress on the across-the-function average rather than resorting to functional regression. McLean et al. (2015) treat the linear model (1) as the null, to be tested versus the additive model (8), while García-Portugués et al. (2014) consider testing against a more general alternative. Horváth and Kokoszka (2012) and Zhang et al. (2014) investigate hypothesis testing procedures to choose the polynomial order in functional polynomial models such as the quadratic model (5). Delsol et al. (2011) propose a test for the null hypothesis that m in the nonparametric model (9) belongs to a given family of operators.

6.2.2 Ensemble predictors

In most practical cases, there are not just two plausible models (a null and an alternative) but many reasonable options for performing SoFR, and it is impossible to know in advance which model and estimation strategy will work best for a given data set. Because of this, Goldsmith and Scheipl (2014) extended the idea of model stacking (Wolpert, 1992) (or superlearning, van der Laan et al., 2007) to SoFR. A large collection of estimators is applied to the data set of interest, and each is evaluated for prediction accuracy using cross-validation. These estimators are then combined into an “ensemble” predictor based on their individual performance. These authors found that multiple approaches yielded dramatically different relative performance across several example data sets, underlining the value of trying a variety of approaches to SoFR when possible.
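The general workflow (cross-validated predictions from several estimators, then a performance-based combination) can be sketched as follows. This is a deliberately simplified stand-in, not the procedure of Goldsmith and Scheipl (2014): the two base learners, the simulated curves, and the inverse-MSE weighting rule are all assumptions made for illustration.

```python
import random

def mean(values):
    return sum(values) / len(values)

def fit_mean_regression(curves, ys):
    """Least squares regression of y on the across-the-function average."""
    xs = [mean(c) for c in curves]
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((v - x_bar) ** 2 for v in xs)
    slope = sum((v - x_bar) * (y - y_bar) for v, y in zip(xs, ys)) / sxx
    return lambda c: y_bar + slope * (mean(c) - x_bar)

def fit_nearest_neighbour(curves, ys):
    """Predict with the response of the closest training curve (squared L2 distance)."""
    def predict(c):
        dists = [sum((a - b) ** 2 for a, b in zip(c, tc)) for tc in curves]
        return ys[dists.index(min(dists))]
    return predict

def cv_predictions(fit, curves, ys, n_folds=5):
    """Out-of-fold predictions: each curve is predicted by a model that never saw it."""
    n = len(ys)
    preds = [0.0] * n
    for fold in range(n_folds):
        test_idx = list(range(fold, n, n_folds))
        held_out = set(test_idx)
        train_idx = [i for i in range(n) if i not in held_out]
        predict = fit([curves[i] for i in train_idx], [ys[i] for i in train_idx])
        for i in test_idx:
            preds[i] = predict(curves[i])
    return preds

def mse(preds, ys):
    return mean([(p - y) ** 2 for p, y in zip(preds, ys)])

def inverse_mse_weights(cv_preds, ys):
    """Weights proportional to 1 / (cross-validated MSE), normalized to sum to one."""
    inv = [1.0 / mse(p, ys) for p in cv_preds]
    return [v / sum(inv) for v in inv]

# Simulated data (an assumption): noisy curves whose level drives the response.
rng = random.Random(0)
curves, ys = [], []
for _ in range(60):
    level = rng.gauss(0, 1)
    curve = [level + rng.gauss(0, 1) for _ in range(20)]
    curves.append(curve)
    ys.append(2.0 * mean(curve) + rng.gauss(0, 0.3))

learners = (fit_mean_regression, fit_nearest_neighbour)
cv_preds = [cv_predictions(fit, curves, ys) for fit in learners]
weights = inverse_mse_weights(cv_preds, ys)
ensemble = [sum(w * p[i] for w, p in zip(weights, cv_preds)) for i in range(len(ys))]
print(weights, [mse(p, ys) for p in cv_preds], mse(ensemble, ys))
```

Because the ensemble is a convex combination of the individual predictions, its cross-validated squared error cannot exceed that of the worst base learner; more refined stacking schemes choose the weights by minimizing the cross-validated error directly.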

7 Software for scalar-on-function regression

Several packages for R (R Core Team, 2015) implement SoFR. The function fRegress in the fda package (Ramsay et al., 2009) fits linear models in which the response and/or the predictor is functional. The fda.usc package (Febrero-Bande and Oviedo de la Fuente, 2012) implements an extensive range of parametric and nonparametric functional regression methods, including those studied by Ramsay and Silverman (2005), Ferraty and Vieu (2006) and Febrero-Bande and González-Manteiga (2013). The refund package (Huang et al., 2015) implements penalized functional regression, including several variants of the FLM and the additive model (8), and allows for multiple functional predictors and scalar covariates, as well as generalized linear models. Optimal smoothness selection relies on the mgcv package of Wood (2006). The mgcv package itself is one of the most flexible and user-friendly packages for SoFR, allowing for the whole GLASS structure of Eilers and Marx (2002) plus random effects; see section 5.2 of Wood (2011), which incidentally was the second paper to use the term “scalar-on-function regression”. In mgcv, functional predictor terms are treated as just one instance of “linear functional” terms (cf. the “general spline problem” of Wahba, 1990). The refund.wave package (Huo et al., 2014), a spinoff of refund, implements scalar-on-function and scalar-on-image regression in the wavelet domain.

In MATLAB (MathWorks, Natick, MA), the PACE package implements a wide variety of methods by Müller, Wang, Yao, and co-authors, including a versatile collection of functional principal component-based regression models for dense and sparsely sampled functional data. From a Bayesian viewpoint, Crainiceanu and Goldsmith (2010) have developed tools for functional generalized linear models using WinBUGS (Lunn et al., 2000).

8 A brain imaging example

Our work has made us aware of the expanding opportunities to apply SoFR in cutting-edge biomedical research, a trend that we expect will accelerate in the coming years. For such applications to be feasible, one must typically move beyond the basic model (1) and incorporate scalar covariates and/or multiple functional predictors, allow for non-iid data, and consider hypothesis tests for the coefficient function(s). The following example illustrates how FLMs can incorporate some of these features (thanks to flexible software implementation, as advocated in Section 6.1), and can shed light on an interesting scientific question. For comparisons of linear as well as nonlinear and nonparametric approaches applied to several data sets, we refer the reader to Goldsmith and Scheipl (2014).

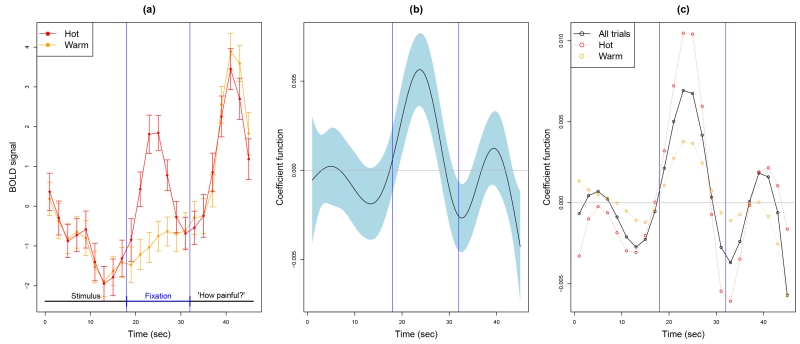

Lindquist (2012) analyzed functional magnetic resonance imaging (fMRI) measures of response to hot (painful) and warm (non-painful) stimuli applied to the left volar forearm in 20 volunteers. Here we apply SoFR to examine whether the blood oxygen level dependent (BOLD) response measured by fMRI in the lateral cerebellum predicts the pain intensity, as rated after each stimulus on a scale from 100 to 550 (with higher values indicating more pain). Each trial consisted of thermal stimulation for 18 seconds; then a 14-second interval in which a fixation cross was presented on a screen; then the words “How painful?” appeared, and after another 14-second interval the participant rated the pain intensity. The BOLD signal was recorded at 23 2-second intervals. There were J_i = 39–48 such trials per volunteer, and 940 in total. As shown in Figure 1(a), the lateral cerebellum BOLD signal tends to be higher in the hot trials, but only during the fixation cross interval.

Figure 1.

(a) Overall mean lateral cerebellum BOLD signal at each of the 23 time points, with approximate 95% confidence intervals, for warm- and hot-stimulus trials. (b) Estimated coefficient function, with approximate pointwise 95% confidence intervals, for model (15). (c) Coefficient function estimate for the full data set (as in (b)) compared with those obtained with only the hot or only the warm trials.

We fitted the model

$$y_{ij} = \alpha_i + \delta I_{ij} + \int_{\mathcal{T}} X_{ij}(t)\beta(t)\,dt + \varepsilon_{ij} \qquad (15)$$

in which $y_{ij}$ is the log pain score for the $i$th participant’s $j$th trial, $I_{ij}$ is an indicator for a hot stimulus, $X_{ij}(t)$ is the corresponding lateral cerebellum BOLD signal, the $\alpha_i$’s are iid normally distributed random intercepts, $\mathcal{T}$ is the time interval of the trial, and the $\varepsilon_{ij}$’s are iid normally distributed errors with mean zero. Unsurprisingly, the expected difference $\delta$ in log pain score between hot and warm trials is found to be hugely significant ($p < 2\cdot10^{-16}$). The coefficient function is also very significantly nonzero, based on the modified Wald test of Wood (2013) or the likelihood ratio test of Swihart et al. (2014).
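To make concrete how a functional term of this kind enters a fitting routine, the sketch below turns one discretely observed curve into a row of an ordinary design matrix, by combining a quadrature rule with a basis expansion of the coefficient function. It is an illustration under stated assumptions, not the implementation used for Figure 1: the observation times (0, 2, ..., 44 seconds), the cosine basis (standing in for a penalized spline basis), the BOLD curve and the basis coefficients are all hypothetical.

```python
import math

N_POINTS, SPACING = 23, 2.0                      # 23 observations, 2 seconds apart
TIMES = [SPACING * k for k in range(N_POINTS)]   # assumed observation times

def trapezoid_weights(n_points, spacing):
    """Quadrature weights for the trapezoidal rule on an equally spaced grid."""
    weights = [spacing] * n_points
    weights[0] = weights[-1] = spacing / 2.0
    return weights

def cosine_basis(t, t_max, n_basis):
    """Hypothetical basis functions phi_k(t) = cos(k * pi * t / t_max), k = 0, 1, ..."""
    return [math.cos(k * math.pi * t / t_max) for k in range(n_basis)]

def functional_design_row(x_values, times, weights, n_basis):
    """Row with entries sum_l w_l * X(t_l) * phi_k(t_l), one entry per basis function."""
    row = [0.0] * n_basis
    for x, t, w in zip(x_values, times, weights):
        for k, phi in enumerate(cosine_basis(t, times[-1], n_basis)):
            row[k] += w * x * phi
    return row

WEIGHTS = trapezoid_weights(N_POINTS, SPACING)
bold = [math.exp(-((t - 24.0) / 6.0) ** 2) for t in TIMES]  # made-up BOLD curve
coefs = [0.1, -0.3, 0.2, 0.05]                               # made-up basis coefficients

# Functional term computed through the design row ...
row = functional_design_row(bold, TIMES, WEIGHTS, len(coefs))
via_design = sum(z * b for z, b in zip(row, coefs))

# ... and directly, as a quadrature approximation to the integral of X(t) * beta(t).
beta_on_grid = [
    sum(b * phi for b, phi in zip(coefs, cosine_basis(t, TIMES[-1], len(coefs))))
    for t in TIMES
]
direct = sum(w * x * b for w, x, b in zip(WEIGHTS, bold, beta_on_grid))
print(via_design, direct)
```

Once each trial's curve has been reduced to such a row, the hot-stimulus indicator and the participant-level random intercept can be appended as further columns and the whole model fitted as a penalized mixed model. The first basis function is constant, so a coefficient function restricted to it reduces the functional term to a multiple of the integrated signal.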

In particular, Figure 1(b) shows that the estimated coefficient function is clearly positive for the same time points that evince a warm/hot discrepancy in Figure 1(a). One could venture a “functional collinearity” explanation for this, in view of the high correlation (0.28) between the indicator variable and the functional predictor term. In other words, one might suspect that the highly positive values of the estimate around the 20- to 28-second range are just an artifact of the higher BOLD signal in that range for hot versus warm trials. But that seems incorrect, since the estimate looks very similar even when we fit separate models with only the warm or only the hot trials (Figure 1(c)). A more cogent explanation is that brain activity detected by the BOLD signal partially mediates the painful effect of the hot stimulus, a causal interpretation developed in depth by Lindquist (2012).

The SoFR implementations of Wood (2011) in the R package mgcv and of Goldsmith et al. (2011) in the refund package (which were used to create Figure 1(b) and (c), respectively) make it routine to include scalar covariates and random effects, and to test the significance of the coefficient function, as we have done here. It is also straightforward to test for a “scalar-by-function” interaction between stimulus type and BOLD signal (found to be non-significant); or to include multiple BOLD signal predictors. Besides the lateral cerebellum, the data set includes such functional predictors for 20 other pain-relevant regions. Several of these have significant effects on pain, even adjusting for the lateral cerebellum signal, but the associated increments in explained variance are quite small.

9 Discussion

This paper has emphasized methodological and practical aspects of some widely used SoFR models. Just a few brief words will have to suffice regarding asymptotic issues. For functional (generalized) linear models (see Cardot and Sarda, 2011, for a survey), convergence rates have been studied both for estimation of the coefficient function (e.g., Müller and Stadtmüller, 2005; Hall and Horowitz, 2007; Dou et al., 2012) and for prediction of the response (e.g., Cai and Hall, 2006; Crambes et al., 2009); see Hsing and Eubank (2015) for a succinct treatment, and Reimherr (2015) for a recent contribution on the key role played by eigenvalues of the covariance operator. A few recent papers (e.g., Wang et al., 2014; Brunel and Roche, 2015) have derived non-asymptotic error bounds for FLMs. In nonparametric FDA, only prediction error is considered, with small-ball probability playing a central role. Ferraty and Vieu (2011) review both pointwise and uniform convergence results. A detailed analysis of the functional NW estimator’s asymptotic properties was recently provided by Geenens (2015).

Regarding the term nonparametric in the FDA context, Ferraty et al. (2005) explain: “We use the terminology functional nonparametric, where the word functional refers to the infinite dimensionality of the data and where the word nonparametric refers to the infinite dimensionality of the model.” But some would argue that, since the coefficient function in (1) lies in an infinite-dimensional space, this nomenclature makes even the functional linear model “nonparametric”. While a fully satisfying definition may be elusive, we find it most helpful to think of nonparametric SoFR as an analogue of ordinary nonparametric regression, i.e., as extending the nonparametric model (9) to the case of functional predictors.

Our use of the term nonlinear in Section 3 can likewise be questioned, since that term applies equally well to nonparametric SoFR. But we could find no better term to encompass models that are not linear but that impose more structure than the general model (9). Note that in non-functional statistics as well, nonlinear usually refers to models that have some parametric structure, as opposed to leaving the mean completely unspecified.

Other problems of nomenclature have arisen as methods for SoFR have proliferated. We have seen that “functional (generalized) additive models” may be additive with respect to FPC scores (Müller and Yao, 2008; Zhu et al., 2014); points along a functional predictor domain (Müller et al., 2013; McLean et al., 2014); or multiple functional predictors with either nonlinear (Fan et al., 2015) or nonparametric (Febrero-Bande and González-Manteiga, 2013) effects. Likewise, authors have considered every combination of scalar linear (SL), functional linear (FL) and functional nonparametric (FNP) terms, so that “partial linear” models may refer to any two of these three: SL+FL (Shin, 2009), SL+FNP (Aneiros-Pérez and Vieu, 2008), or FL+FNP (Lian, 2011). Finally, some of the nonlinear approaches of Section 3 are sometimes termed “nonparametric” since they incorporate general smooth link functions. One of our aims here has been to reduce terminological confusion.

We hope that, by distilling some key ideas and approaches from what is now a sprawling literature, we have provided readers with useful guidance for implementing scalar-on-function regression in the growing number of domains in which it can be applied.

Acknowledgments

We thank the Co-Editor-in-Chief, Prof. Marc Hallin, and the Associate Editor and referees, whose feedback enabled us to improve the manuscript significantly. We also thank Prof. Martin Lindquist for providing the pain data, whose collection was supported by the U.S. National Institutes of Health through grants R01MH076136-06 and R01DA035484-01, and Pei-Shien Wu for assistance with the bibliography. Philip Reiss’ work was supported in part by grant 1R01MH095836-01A1 from the National Institute of Mental Health. Jeff Goldsmith’s work was supported in part by grants R01HL123407 from the National Heart, Lung, and Blood Institute and R21EB018917 from the National Institute of Biomedical Imaging and Bioengineering. Han Lin Shang’s work was supported in part by a Research School Grant from the ANU College of Business and Economics. Todd Ogden’s work was supported in part by grant 5R01MH099003 from the National Institute of Mental Health.

References

- Aguilera AM, Escabias M, Preda C, Saporta G. Using basis expansions for estimating functional PLS regression: applications with chemometric data. Chemometrics and Intelligent Laboratory Systems. 2010;104(2):289–305.

- Aguilera-Morillo MC, Aguilera AM, Escabias M, Valderrama MJ. Penalized spline approaches for functional logit regression. Test. 2013;22(2):251–277.

- Ait-Saïdi A, Ferraty F, Kassa R, Vieu P. Cross-validated estimations in the single-functional index model. Statistics. 2008;42(6):475–494.

- Amato U, Antoniadis A, De Feis I. Dimension reduction in functional regression with applications. Computational Statistics & Data Analysis. 2006;50(9):2422–2446.

- Aneiros-Pérez G, Cao R, Vilar-Fernández JM, Muñoz-San-Roque A. Recent Advances in Functional Data Analysis and Related Topics. Springer-Verlag; Berlin: 2011. Functional prediction for the residual demand in electricity spot markets.

- Aneiros-Pérez G, Vieu P. Semi-functional partial linear regression. Statistics and Probability Letters. 2006;76(11):1102–1110.

- Aneiros-Pérez G, Vieu P. Nonparametric time series prediction: A semi-functional partial linear modeling. Journal of Multivariate Analysis. 2008;99(5):834–857.

- Araki Y, Kawaguchi A, Yamashita F. Regularized logistic discrimination with basis expansions for the early detection of Alzheimer’s disease based on three-dimensional MRI data. Advances in Data Analysis and Classification. 2013;7(1):109–119.

- Baíllo A, Grané A. Local linear regression for functional predictor and scalar response. Journal of Multivariate Analysis. 2009;100(1):102–111.

- Barrientos-Marin J, Ferraty F, Vieu P. Locally modelled regression and functional data. Journal of Nonparametric Statistics. 2010;22(5):617–632.

- Boj E, Delicado P, Fortiana J. Distance-based local linear regression for functional predictors. Computational Statistics & Data Analysis. 2010;54(2):429–437.

- Brown PJ, Fearn T, Vannucci M. Bayesian wavelet regression on curves with application to a spectroscopic calibration problem. Journal of the American Statistical Association. 2001;96:398–408.

- Brunel E, Roche A. Penalized contrast estimation in functional linear models with circular data. Statistics. 2015;49(6):1298–1321.

- Burba F, Ferraty F, Vieu P. k-nearest neighbour method in functional nonparametric regression. Journal of Nonparametric Statistics. 2009;21(4):453–469.

- Cai TT, Hall P. Prediction in functional linear regression. Annals of Statistics. 2006;34(5):2159–2179.

- Cai TT, Yuan M. Minimax and adaptive prediction for functional linear regression. Journal of the American Statistical Association. 2012;107:1201–1216.

- Cardot H, Crambes C, Sarda P. Quantile regression when the covariates are functions. Journal of Nonparametric Statistics. 2005;17(7):841–856.

- Cardot H, Ferraty F, Mas A, Sarda P. Testing hypotheses in the functional linear model. Scandinavian Journal of Statistics. 2003;30(1):241–255.

- Cardot H, Ferraty F, Sarda P. Functional linear model. Statistics and Probability Letters. 1999;45(1):11–22.

- Cardot H, Ferraty F, Sarda P. Spline estimators for the functional linear model. Statistica Sinica. 2003;13:571–591.

- Cardot H, Sarda P. Functional linear regression. In: Ferraty F, Romain Y, editors. The Oxford Handbook of Functional Data Analysis. Oxford University Press; New York: 2011. pp. 21–46.

- Chen D, Hall P, Müller H-G. Single and multiple index functional regression models with nonparametric link. Annals of Statistics. 2011;39(3):1720–1747.

- Chen K, Müller H-G. Conditional quantile analysis when covariates are functions, with application to growth data. Journal of the Royal Statistical Society, Series B. 2012;74(1):67–89.

- Chung C, Chen Y, Ogden RT. Functional data classification: A wavelet approach. Computational Statistics. 2014;14:1497–1513. doi: 10.1007/s00180-014-0503-4.

- Ciarleglio A, Ogden RT. Wavelet-based scalar-on-function finite mixture regression models. Computational Statistics and Data Analysis. 2016;93:86–96. doi: 10.1016/j.csda.2014.11.017.

- Crainiceanu CM, Goldsmith J. Bayesian functional data analysis using WinBUGS. Journal of Statistical Software. 2010;32:1–33.

- Crainiceanu CM, Staicu AM, Di C-Z. Generalized multilevel functional regression. Journal of the American Statistical Association. 2009;104:1550–1561. doi: 10.1198/jasa.2009.tm08564.

- Crambes C, Kneip A, Sarda P. Smoothing splines estimators for functional linear regression. Annals of Statistics. 2009;37(1):35–72.

- Craven P, Wahba G. Smoothing noisy data with spline functions: estimating the correct degree of smoothing by the method of generalized cross-validation. Numerische Mathematik. 1979;31(4):317–403.

- Cuevas A. A partial overview of the theory of statistics with functional data. Journal of Statistical Planning and Inference. 2014;147:1–23.

- Delaigle A, Hall P. Methodology and theory for partial least squares applied to functional data. Annals of Statistics. 2012;40(1):322–352.

- Delaigle A, Hall P, Apanasovich TV, et al. Weighted least squares methods for prediction in the functional data linear model. Electronic Journal of Statistics. 2009;3:865–885.

- Delsol L, Ferraty F, Vieu P. Structural test in regression on functional variables. Journal of Multivariate Analysis. 2011;102(3):422–447.

- Di C-Z, Crainiceanu CM, Caffo BS, Punjabi NM. Multilevel functional principal component analysis. Annals of Applied Statistics. 2009;3(1):458–488. doi: 10.1214/08-AOAS206SUPP.

- Dou WW, Pollard D, Zhou HH. Estimation in functional regression for general exponential families. Annals of Statistics. 2012;40(5):2421–2451.

- Eckart C, Young G. The approximation of one matrix by another of lower rank. Psychometrika. 1936;1(3):211–218.

- Eilers PHC, Li B, Marx BD. Multivariate calibration with single-index signal regression. Chemometrics and Intelligent Laboratory Systems. 2009;96(2):196–202.

- Eilers PHC, Marx BD. Generalized linear additive smooth structures. Journal of Computational and Graphical Statistics. 2002;11(4):758–783.

- Escabias M, Aguilera AM, Valderrama MJ. Functional PLS logit regression model. Computational Statistics & Data Analysis. 2007;51(10):4891–4902.

- Fan J, Gijbels I. Local Polynomial Modelling and its Applications. Chapman & Hall; London: 1996.

- Fan Y, James G, Radchenko P. Functional additive regression. Annals of Statistics. 2015;43(5):2296–2325.

- Faraway JJ. Backscoring in principal coordinates analysis. Journal of Computational and Graphical Statistics. 2012;21(2):394–412.

- Febrero-Bande M, Galeano P, González-Manteiga W. Functional principal component regression and functional partial least-squares regression: An overview and a comparative study. International Statistical Review. 2015 in press.

- Febrero-Bande M, González-Manteiga W. Generalized additive models for functional data. Test. 2013;22(2):278–292.

- Febrero-Bande M, Oviedo de la Fuente M. Statistical computing in functional data analysis: The R package fda.usc. Journal of Statistical Software. 2012;51(4):1–28.

- Ferraty F, Goia A, Salinelli E, Vieu P. Functional projection pursuit regression. Test. 2013;22(2):293–320.

- Ferraty F, Hall P, Vieu P. Most-predictive design points for functional data predictors. Biometrika. 2010;97(4):807–824.

- Ferraty F, Laksaci A, Vieu P. Functional time series prediction via conditional mode estimation. Comptes Rendus de l’Académie des Sciences, Series I. 2005;340(5):389–392.

- Ferraty F, Mas A, Vieu P. Nonparametric regression on functional data: inference and practical aspects. Australian & New Zealand Journal of Statistics. 2007;49(3):267–286.

- Ferraty F, Park J, Vieu P. Estimation of a functional single index model. In: Ferraty F, editor. Recent Advances in Functional Data Analysis and Related Topics. Springer; 2011. pp. 111–116.

- Ferraty F, Rabhi A, Vieu P. Conditional quantiles for dependent functional data with application to the climatic El Niño phenomenon. Sankhyā: The Indian Journal of Statistics. 2005;67(2):378–398.

- Ferraty F, Vieu P. The functional nonparametric model and application to spectrometric data. Computational Statistics. 2002;17(4):545–564.

- Ferraty F, Vieu P. Nonparametric Functional Data Analysis: Theory and Practice. Springer; New York: 2006.

- Ferraty F, Vieu P. Additive prediction and boosting for functional data. Computational Statistics & Data Analysis. 2009;53(4):1400–1413.

- Ferraty F, Vieu P. Kernel regression estimation for functional data. In: Ferraty F, Romain Y, editors. The Oxford Handbook of Functional Data Analysis. Oxford University Press; New York: 2011. pp. 72–129.

- Ferré L, Yao AF. Functional sliced inverse regression analysis. Statistics. 2003;37(6):475–488.

- Ferré L, Yao A-F. Smoothed functional inverse regression. Statistica Sinica. 2005;15:665–683.

- Fuchs K, Gertheiss J, Tutz G. Nearest neighbor ensembles for functional data with interpretable feature selection. Chemometrics and Intelligent Laboratory Systems. 2015;146:186–197.

- García-Portugués E, González-Manteiga W, Febrero-Bande M. A goodness-of-fit test for the functional linear model with scalar responses. Journal of Computational and Graphical Statistics. 2014;23(3):761–778.

- Geenens G. Curse of dimensionality and related issues in nonparametric functional regression. Statistics Surveys. 2011;5:30–43.

- Geenens G. Moments, errors, asymptotic normality and large deviation principle in nonparametric functional regression. Statistics & Probability Letters. 2015;107:369–377.

- Gertheiss J, Goldsmith J, Crainiceanu C, Greven S. Longitudinal scalar-on-functions regression with application to tractography data. Biostatistics. 2013;14(3):447–461. doi: 10.1093/biostatistics/kxs051.

- Gertheiss J, Maity A, Staicu A-M. Variable selection in generalized functional linear models. Stat. 2013;2(1):86–101. doi: 10.1002/sta4.20.

- Goldsmith J, Bobb J, Crainiceanu CM, Caffo B, Reich D. Penalized functional regression. Journal of Computational and Graphical Statistics. 2011;20(4):830–851. doi: 10.1198/jcgs.2010.10007.

- Goldsmith J, Crainiceanu CM, Caffo B, Reich D. Longitudinal penalized functional regression for cognitive outcomes on neuronal tract measurements. Journal of the Royal Statistical Society: Series C. 2012;61(3):453–469. doi: 10.1111/j.1467-9876.2011.01031.x.

- Goldsmith J, Huang L, Crainiceanu CM. Smooth scalar-on-image regression via spatial Bayesian variable selection. Journal of Computational and Graphical Statistics. 2014;23(1):46–64. doi: 10.1080/10618600.2012.743437.

- Goldsmith J, Scheipl F. Estimator selection and combination in scalar-on-function regression. Computational Statistics & Data Analysis. 2014;70:362–372.

- Goldsmith J, Wand MP, Crainiceanu CM. Functional regression via variational Bayes. Electronic Journal of Statistics. 2011;5:572–602. doi: 10.1214/11-ejs619.

- Goutis C. Second-derivative functional regression with applications to near infra-red spectroscopy. Journal of the Royal Statistical Society, Series B. 1998;60(1):103–114.

- Greven S, Crainiceanu CM, Caffo B, Reich D. Longitudinal functional principal component analysis. Electronic Journal of Statistics. 2010;4:1022–1054. doi: 10.1214/10-EJS575.

- Gu C. Smoothing Spline ANOVA Models. 2nd ed. Springer; New York: 2013.

- Guillas S, Lai M-J. Bivariate splines for spatial functional regression models. Journal of Nonparametric Statistics. 2010;22(4):477–497.

- Hall P, Horowitz JL. Methodology and convergence rates for functional linear regression. Annals of Statistics. 2007;35(1):70–91.

- Hall P, Müller H-G, Yao F. Estimation of functional derivatives. Annals of Statistics. 2009;37(6A):3307–3329.

- Hall P, Vial C. Assessing the finite dimensionality of functional data. Journal of the Royal Statistical Society (Series B) 2006;68(4):689–705.

- Härdle W, Müller M, Sperlich S, Werwatz A. Nonparametric and Semiparametric Models. 2nd ed. Springer; New York: 2013.

- Hastie T, Mallows C. Discussion of “A statistical view of some chemometrics regression tools” by I.E. Frank and J.H. Friedman. Technometrics. 1993;35(2):140–143.

- Hastie T, Tibshirani R. Generalized Additive Models. Chapman & Hall/CRC; Boca Raton, FL: 1990.

- Holan S, Yang W, Matteson D, Wikle C. An approach for identifying and predicting economic recessions in real-time using time-frequency functional models. (with discussion) Applied Stochastic Models in Business and Industry. 2012;28:485–489.

- Holan SH, Wikle CK, Sullivan-Beckers LE, Cocroft RB. Modeling complex phenotypes: Generalized linear models using spectrogram predictors of animal communication signals. Biometrics. 2010;66:914–924. doi: 10.1111/j.1541-0420.2009.01331.x.

- Horváth L, Kokoszka P. Inference for Functional Data with Applications. Springer; New York: 2012. [Google Scholar]

- Hosseini-Nasab M. Cross-validation approximation in functional linear regression. Journal of Statistical Computation and Simulation. 2013;83(8):1429–1439. [Google Scholar]

- Hsing T, Eubank R. Theoretical Foundations of Functional Data Analysis, with an Introduction to Linear Operators. John Wiley & Sons; West Sussex, UK: 2015. [Google Scholar]

- Huang L, Goldsmith J, Reiss PT, Reich DS, Crainiceanu CM. Bayesian scalar-on-image regression with application to association between intracranial DTI and cognitive outcomes. NeuroImage. 2013;83:210–223. doi: 10.1016/j.neuroimage.2013.06.020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Huang L, Scheipl F, Goldsmith J, Gellar J, Harezlak J, McLean MW, Swihart B, Xiao L, Crainiceanu C, Reiss P. refund: Regression with Functional Data. 2015. R package version 0.1-13. [Google Scholar]

- Huo L, Reiss P, Zhao Y. refund.wave: Wavelet-Domain Regression with Functional Data. 2014. R package version 0.1. [Google Scholar]

- James GM. Generalized linear models with functional predictors. Journal of the Royal Statistical Society Series B. 2002;64(3):411–432. [Google Scholar]

- James GM, Silverman B. Functional adaptive model estimation. Journal of the American Statistical Association. 2005;100:565–576. [Google Scholar]

- James GM, Wang J, Zhu J. Functional linear regression that’s interpretable. Annals of Statistics. 2009;37(5A):2083–2108. [Google Scholar]

- Kimeldorf G, Wahba G. Some results on Tchebycheffian spline functions. Journal of Mathematical Analysis and Applications. 1971;33(1):82–95.

- Koenker R, Bassett G. Regression quantiles. Econometrica. 1978;46(1):33–50.

- Lee ER, Park BU. Sparse estimation in functional linear regression. Journal of Multivariate Analysis. 2011;105(1):1–17.

- Lei J. Adaptive global testing for functional linear models. Journal of the American Statistical Association. 2014;109:624–634.

- Li Y, Wang N, Carroll RJ. Selecting the number of principal components in functional data. Journal of the American Statistical Association. 2013;108:1284–1294. doi: 10.1080/01621459.2013.788980.

- Lian H. Functional partial linear model. Journal of Nonparametric Statistics. 2011;23(1):115–128.

- Lian H. Shrinkage estimation and selection for multiple functional regression. Statistica Sinica. 2013;23:51–74.

- Lindquist M, McKeague I. Logistic regression with Brownian-like predictors. Journal of the American Statistical Association. 2009;104:1575–1585.

- Lindquist MA. Functional causal mediation analysis with an application to brain connectivity. Journal of the American Statistical Association. 2012;107:1297–1309. doi: 10.1080/01621459.2012.695640.

- Lunn DJ, Thomas A, Best N, Spiegelhalter D. WinBUGS—A Bayesian modelling framework: concepts, structure, and extensibility. Statistics and Computing. 2000;10(4):325–337.

- Malloy E, Morris J, Adar S, Suh H, Gold D, Coull B. Wavelet-based functional linear mixed models: an application to measurement error-corrected distributed lag models. Biostatistics. 2010;11(3):432–452. doi: 10.1093/biostatistics/kxq003.

- Marron JS, Alonso AM. Overview of object oriented data analysis. Biometrical Journal. 2014;56(5):732–753. doi: 10.1002/bimj.201300072.

- Marx BD, Eilers PHC. Generalized linear regression on sampled signals and curves: a P-spline approach. Technometrics. 1999;41(1):1–13.

- Marx BD, Eilers PHC. Multidimensional penalized signal regression. Technometrics. 2005;47(1):12–22.

- McKeague IW, Qian M. Estimation of treatment policies based on functional predictors. Statistica Sinica. 2014;24(3):1461. doi: 10.5705/ss.2012.196.

- McKeague IW, Sen B. Fractals with point impact in functional linear regression. Annals of Statistics. 2010;38:2559–2586. doi: 10.1214/10-aos791.

- McLean MW, Hooker G, Ruppert D. Restricted likelihood ratio tests for linearity in scalar-on-function regression. Statistics and Computing. 2015;25(5):997–1008.

- McLean MW, Hooker G, Staicu A-M, Scheipl F, Ruppert D. Functional generalized additive models. Journal of Computational and Graphical Statistics. 2014;23(1):249–269. doi: 10.1080/10618600.2012.729985.

- Morris JS. Functional regression. Annual Review of Statistics and Its Application. 2015;2:321–359.

- Müller H-G, Stadtmüller U. Generalized functional linear models. Annals of Statistics. 2005;33(2):774–805.

- Müller H-G, Wu Y, Yao F. Continuously additive models for nonlinear functional regression. Biometrika. 2013;100(3):607–622.

- Müller H-G, Yao F. Functional additive models. Journal of the American Statistical Association. 2008;103:1534–1544.

- Müller H-G, Yao F. Additive modelling of functional gradients. Biometrika. 2010;97(4):791–805.

- Nadaraya EA. On estimating regression. Theory of Probability & Its Applications. 1964;9(1):141–142.

- Ogden RT. Essential Wavelets for Statistical Applications and Data Analysis. Birkhäuser; Boston: 1997.

- Preda C. Regression models for functional data by reproducing kernel Hilbert spaces methods. Journal of Statistical Planning and Inference. 2007;137(3):829–840.

- Preda C, Saporta G. PLS regression on a stochastic process. Computational Statistics & Data Analysis. 2005;48(1):149–158.

- Quintela-del-Río A, Francisco-Fernández M. Nonparametric functional data estimation applied to ozone data: prediction and extreme value analysis. Chemosphere. 2011;82(6):800–808. doi: 10.1016/j.chemosphere.2010.11.025.

- R Core Team. R: a language and environment for statistical computing. R Foundation for Statistical Computing; Vienna, Austria: 2015.

- Rachdi M, Vieu P. Nonparametric regression for functional data: automatic smoothing parameter selection. Journal of Statistical Planning and Inference. 2007;137(9):2784–2801.

- Ramsay JO, Hooker G, Graves S. Functional Data Analysis with R and MATLAB. Springer; New York: 2009.

- Ramsay JO, Silverman BW. Functional Data Analysis. 2nd ed. Springer; New York: 2005.

- Randolph TW, Harezlak J, Feng Z. Structured penalties for functional linear models—partially empirical eigenvectors for regression. Electronic Journal of Statistics. 2012;6:323–353. doi: 10.1214/12-EJS676.

- Ratcliffe SJ, Leader LR, Heller GZ. Functional data analysis with application to periodically stimulated fetal heart rate data: I. Functional regression. Statistics in Medicine. 2002;21(8):1103–1114. doi: 10.1002/sim.1067.

- Reimherr M. Functional regression with repeated eigenvalues. Statistics & Probability Letters. 2015;107:62–70.

- Reiss PT, Huang L, Mennes M. Fast function-on-scalar regression with penalized basis expansions. International Journal of Biostatistics. 2010;6: Article 28. doi: 10.2202/1557-4679.1246.

- Reiss PT, Huo L, Zhao Y, Kelly C, Ogden RT. Wavelet-domain regression and predictive inference in psychiatric neuroimaging. Annals of Applied Statistics. 2015;9(2):1076–1101. doi: 10.1214/15-AOAS829.

- Reiss PT, Ogden RT. Functional principal component regression and functional partial least squares. Journal of the American Statistical Association. 2007;102:984–996.

- Reiss PT, Ogden RT. Smoothing parameter selection for a class of semiparametric linear models. Journal of the Royal Statistical Society: Series B. 2009;71(2):505–523.

- Reiss PT, Ogden RT. Functional generalized linear models with images as predictors. Biometrics. 2010;66(1):61–69. doi: 10.1111/j.1541-0420.2009.01233.x.

- Rice J, Silverman B. Estimating the mean and covariance structure nonparametrically when the data are curves. Journal of the Royal Statistical Society, Series B. 1991;53:233–243.

- Ruppert D, Wand MP, Carroll RJ. Semiparametric Regression. Cambridge University Press; Cambridge: 2003.

- Schölkopf B, Herbrich R, Smola AJ. A generalized representer theorem. In: Helmbold D, Williamson B, editors. Computational Learning Theory: 14th Annual Conference on Computational Learning Theory, COLT 2001 and 5th European Conference on Computational Learning Theory, EuroCOLT 2001. Volume 2111 of Lecture Notes in Artificial Intelligence. Springer; Berlin and Heidelberg: 2001. pp. 416–426.

- Schölkopf B, Smola AJ. Learning with Kernels: Support Vector Machines, Regularization, Optimization, and Beyond. MIT Press; Cambridge, Massachusetts: 2002.

- Shang HL. Bayesian bandwidth estimation for a nonparametric functional regression model with unknown error density. Computational Statistics & Data Analysis. 2013;67:185–198.

- Shang HL. Bayesian bandwidth estimation for a functional nonparametric regression model with mixed types of regressors and unknown error density. Journal of Nonparametric Statistics. 2014a;26:599–615.

- Shang HL. Bayesian bandwidth estimation for a semi-functional partial linear regression model with unknown error density. Computational Statistics. 2014b;29:829–848.

- Shang HL. A Bayesian approach for determining the optimal semi-metric and bandwidth in scalar-on-function quantile regression with unknown error density and dependent functional data. Journal of Multivariate Analysis. 2015 in press.

- Shin H. Partial functional linear regression. Journal of Statistical Planning and Inference. 2009;139(10):3405–3418.

- Silverman BW. Smoothed functional principal components analysis by choice of norm. Annals of Statistics. 1996;24(1):1–24.

- Stoker TM. Consistent estimation of scaled coefficients. Econometrica. 1986;54(6):1461–1481.

- Swihart BJ, Goldsmith J, Crainiceanu CM. Restricted likelihood ratio tests for functional effects in the functional linear model. Technometrics. 2014;56:483–493.

- van der Laan MJ, Polley EC, Hubbard AE. Super learner. Statistical Applications in Genetics and Molecular Biology. 2007;6(25) doi: 10.2202/1544-6115.1309.

- Wahba G. Spline Models for Observational Data. Society for Industrial and Applied Mathematics; Philadelphia: 1990.

- Wang J-L, Chiou J-M, Müller H-G. Review of functional data analysis. 2015. arXiv preprint arXiv:1507.05135.

- Wang X, Nan B, Zhu J, Koeppe R, Alzheimer’s Disease Neuroimaging Initiative. Regularized 3D functional regression for brain image data via Haar wavelets. Annals of Applied Statistics. 2014;8(2):1045–1064. doi: 10.1214/14-AOAS736.

- Watson GS. Smooth regression analysis. Sankhya A. 1964;26:359–372.

- Wolpert DH. Stacked generalization. Neural Networks. 1992;5:241–259.

- Wood SN. Generalized Additive Models: An Introduction with R. Chapman & Hall; London: 2006.

- Wood SN. Fast stable restricted maximum likelihood and marginal likelihood estimation of semiparametric generalized linear models. Journal of the Royal Statistical Society, Series B. 2011;73(1):3–36.

- Wood SN. On p-values for smooth components of an extended generalized additive model. Biometrika. 2013;100(1):221–228.

- Yang W-H, Wikle CK, Holan SH, Wildhaber ML. Ecological prediction with nonlinear multivariate time-frequency functional data models. Journal of Agricultural, Biological, and Environmental Statistics. 2013;18(3):450–474.

- Yao F, Fu Y, Lee TCM. Functional mixture regression. Biostatistics. 2011;12(2):341–353. doi: 10.1093/biostatistics/kxq067.

- Yao F, Müller H, Wang J. Functional data analysis for sparse longitudinal data. Journal of the American Statistical Association. 2005;100:577–590.

- Yao F, Müller H-G. Functional quadratic regression. Biometrika. 2010;97(1):49–64.

- Zhang T, Zhang Q, Wang Q. Model detection for functional polynomial regression. Computational Statistics & Data Analysis. 2014;70:183–197.

- Zhang X, King ML, Shang HL. A sampling algorithm for bandwidth estimation in a nonparametric regression model with a flexible error density. Computational Statistics and Data Analysis. 2014;78:218–234.

- Zhao Y, Chen H, Ogden RT. Wavelet-based weighted LASSO and screening approaches in functional linear regression. Journal of Computational and Graphical Statistics. 2015;24(3):655–675.

- Zhao Y, Ogden RT, Reiss PT. Wavelet-based LASSO in functional linear regression. Journal of Computational and Graphical Statistics. 2012;21(3):600–617. doi: 10.1080/10618600.2012.679241.

- Zhou H, Li L. Regularized matrix regression. Journal of the Royal Statistical Society, Series B. 2014;76(2):463–483. doi: 10.1111/rssb.12031.

- Zhou H, Li L, Zhu H. Tensor regression with applications in neuroimaging data analysis. Journal of the American Statistical Association. 2013;108:540–552. doi: 10.1080/01621459.2013.776499.

- Zhu H, Vannucci M, Cox DD. A Bayesian hierarchical model for classification with selection of functional predictors. Biometrics. 2010;66(2):463–473. doi: 10.1111/j.1541-0420.2009.01283.x.

- Zhu H, Yao F, Zhang HH. Structured functional additive regression in reproducing kernel Hilbert spaces. Journal of the Royal Statistical Society, Series B. 2014;76(3):581–603. doi: 10.1111/rssb.12036.