Abstract

Diagnostic radiology reports are increasingly being made available to patients and their family members. However, these reports are not typically comprehensible to lay recipients, impeding effective communication about report findings. In this paper, we present three studies informing the design of a prototype to foster patient–clinician communication about radiology report content. First, analysis of questions posted in online health forums helped us identify patients’ information needs. Findings from an elicitation study with seven radiologists provided necessary domain knowledge to guide prototype design. Finally, a clinical field study with 14 pediatric patients, their parents, and their clinicians revealed positive responses from each stakeholder when using the prototype to interact with and discuss the patient’s current CT or MRI report. The field study also allowed us to distill three use cases: co-located communication, preparing for the consultation, and reviewing radiology data. We draw on our findings to discuss design considerations for supporting each of these use cases.

Author Keywords: Families, Adolescents, Radiology Report, Patient–Doctor Communication

ACM Classification Keywords: H.5.m. Information interfaces and presentation (e.g., HCI): Miscellaneous

INTRODUCTION

Growing evidence suggests that patients are increasingly interested in timely, easy, and full access to all personal health data [29,30,37]. Following the passage of the American Recovery and Reinvestment Act (ARRA) of 2009 [13], healthcare organizations have begun to increase access to medical imaging data through personal health record technology, such as online patient portals, with the aim of giving patients all diagnostic health data in their electronic medical record. Results of diagnostic radiology imaging studies, such as computerized tomography (CT) and magnetic resonance imaging (MRI) studies, represent an important type of medical record data, and play a critical role in diagnosing complicated diseases (e.g., brain tumors, lung cancers).

Radiology reports, which consist of semi-structured written text describing a large volume of image files, serve to communicate radiologists’ interpretation of imaging findings to referring physicians [18]. While communication around radiology report findings has historically focused on interactions between the interpreting radiologist and the referring physician, ongoing trends toward patient access to their health data place new demands on clinicians to consider the lay recipients of these reports [4].

Patient portals, via electronic health records (EHR), already allow for direct patient access to this data [14], yet patients’ current use of diagnostic radiology data is significantly limited by the technical nature of the report content [27], preventing them from making sense of it and engaging in effective communication about clinical findings.

We describe a formative study focused on the design of interactive tools to support non-expert patients and their family members in navigating and communicating about reports related to two types of radiology studies: CT and MRI scans. Through qualitative analysis of questions posted in online health forums, we identified patients’ common information needs related to these reports. We then conducted a knowledge elicitation study with seven radiologists to guide the design of content-based features for a subsequent prototype. We applied principles gleaned through both our qualitative question analysis and domain expert study to design and develop a prototype through which 14 adolescent oncology patients, their parents, and their clinicians interacted with the patient's current diagnostic report during a clinical consultation. Our information needs assessment, knowledge elicitation study, prototype, and feedback sessions are components in a single design exploration. Through this multi-component exploration, we make the following contributions:

A preliminary design for interactive diagnostic radiology reporting tools supporting collaborative review of report data, informed by an iterative process to identify patients’ needs and radiologists’ recommendations.

Findings from a field study in a pediatric oncology setting, detailing responses to our tools and the preferences and scenarios emerging from use of the tools by adolescent patients, their parents, and their oncologists when reviewing the patient’s current report.

Discussion of the preferences and recommendations of domain experts for designing tools to support young patients in interacting with diagnostic radiology reports, based on usage of our report prototype and qualitative feedback.

BACKGROUND AND RELATED WORK

Patient-Centered Radiology Reporting

Advances in health information technology, coupled with general interest in patient-centered radiology reporting, have culminated in the provision of direct patient access to their imaging data through electronic patient portals [4,7,15,31]. The medical community is well aware of the benefits of allowing patients to access and review their health data, including the patients’ ability to better educate themselves about their health, act on the data in a timely way, and prepare for doctor visits [29,34]. Patients too are eager to access their radiology data. In a survey study, Basu et al. found that most patients undergoing CT and MRI tests expressed strong preferences for receiving results of the study within a few hours of the procedure [3]. While some hospitals have begun to allow patients to directly view radiology reports through the web portal, the technical nature of the language used in the report continues to present a barrier to patients’ access to this data [27].

To reduce this barrier, efforts in clinical informatics research adopt natural language processing (NLP) techniques to provide on-the-fly explanations of salient concepts extracted from radiology reports. For example, based on analysis of frequent words and word pairs from 100 knee MRI reports, the PORTER prototype system demonstrated the use of a predefined glossary to provide hyperlinks that support instant retrieval of definitions as well as accompanying images [24]. Arnold et al. demonstrated an integrated radiology patient portal interface which uses the cTAKES knowledge extraction NLP module [1] to automatically identify and extract medical concepts from the Impression section of an unstructured report [2]. In addition to providing lay and clinician-specific descriptions of medical terms, the portal system emphasized educating patients to help them understand the reason for undergoing an imaging study, and how to review radiology images. While these systems show promising approaches to supporting patients’ understanding of their report content, they are designed to support data review outside of the clinical environment. In contrast, we explore opportunities to support in situ review of radiology imaging data with clinicians.

Patient-Clinician Communication about Electronic Health Record Data

Good communication in clinical consultations, particularly patient-centered communication, has proven effective in areas affecting patient understanding, trust, decision-making, satisfaction, and other outcomes associated with improved health [8,32,33]. In diagnostic radiology [3], communication of imaging results is considered an important yet challenging task. While patients strongly prefer immediate access to test results through patient portals [11,16], Johnson suggests that such results are best communicated in the clinical setting because i) the patient may become anxious when reading their report, ii) assessing what the patient does and does not understand is most effectively done in person, iii) radiologists are unaware of the patient’s larger medical context, and iv) referring physicians, through their continuing relationship with patients, can provide the most tailored and appropriate advice [15]. However, there are no systems to support communication of diagnostic radiology imaging results in the clinic.

Previous research in HCI has addressed opportunities to improve aspects of patient–clinician communication about EHR data in the clinical setting, and could inform the design of tools to support communication of radiology data. One line of research addressed gaps in patient knowledge about the care process through the design of displays that deliver timely information. Wilcox et al. proposed and piloted a design for large, patient-centric information displays to keep emergency department patients informed about the status of their care using data in the patients’ electronic record [35], while Pfeifer Vardoulakis and colleagues prototyped mobile displays for a similar purpose [25]. Bickmore et al. introduced a bedside mobile kiosk through which an animated virtual nurse agent teaches low-literacy hospital patients about their post-discharge care plan [5]. These studies demonstrated novel techniques for presenting medical information to patients to aid them in understanding their care and to supplement their interactions with their care team.

While most HCI research emphasizes the need to support patient education in the clinic, Unruh et al. [34] and later, Miller et al. [22] found through their clinical field work that support for patient information spaces and shared patient–clinician interactions with medical information is lacking, yet critical to the clinical work of both patients and family members. One system demonstrating how shared patient–clinician interaction with medical data might be realized is AnatOnMe, a handheld, projection-based prototype system designed to allow doctors to project selected medical images, such as x-rays, directly onto the patient’s body [23]. While the AnatOnMe system was found to be engaging to use and promoted understanding of injury-specific information, it was designed for specific types of musculoskeletal images, rather than complex forms of “difficult to see” anatomy. In our study, we sought to introduce tools to enable shared interaction with diagnostic imaging data between patients, their family members and their physicians.

STUDIES

In the following sections, we describe lessons learned from 1) an online health forum analysis, and 2) a knowledge elicitation study. We then show how results of our first two studies informed the design decisions† embodied in a prototype application supporting collaborative review and communication of radiology report content. Finally, we report findings from a field study in which we elicited responses to the prototype by presenting adolescent cancer patients and their parents with the patient’s current CT or MRI report, using our prototype, during a clinical consultation.

ONLINE HEALTH FORUM STUDY (S1)

To gauge users’ general information needs concerning radiology reports, we analyzed questions people ask about their CT and MRI reports. Our decision to focus on questions is supported by Zhang’s [37] definition of questions in the context of consumer health information searching: a “manifestation of a person’s information need arising from an intention to fulfill his or her knowledge gaps.” Thus, we treat questions in online health communities as posters’ attempts to resolve knowledge gaps resulting from technical medical language used in radiology reports.

We performed a thematic analysis of a total of 480 posts collected from four different online health communities: medhelp.com [20], healthboard.com [12], csncancer.org [9], and reddit.com [26]. We chose these communities based on their relevance, as determined by the recency (latest observed activity within the last 10 days) of question posts related to radiology reports. We first extracted 1656 posts based on radiology-specific targeted keywords (e.g., reports, scan, CT, MRI, etc.) from the four websites over January–May 2016. To match our study population, we isolated post content that includes questions involving CT and MRI reports, while excluding other modalities (e.g., ultrasound, mammograms, X-rays, etc.) as well as content pertaining to seeking social support.
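The two-stage filtering described above can be sketched as follows. This is a minimal illustration rather than the study's pipeline: the keyword lists are hypothetical (the text gives only examples), whole-word, case-insensitive matching is an assumption, and the qualitative steps (recognizing questions and excluding social-support content) are left to human coders.

```python
import re

# Hypothetical keyword lists: the text names only example keywords
# ("reports, scan, CT, MRI") and excluded modalities ("ultrasound, mammograms, X-rays").
SEARCH_TERMS = ["report", "scan", "CT", "MRI"]
TARGET_MODALITIES = ["CT", "MRI"]
OTHER_MODALITIES = ["ultrasound", "mammogram", "X-ray"]


def mentions(text: str, terms: list[str]) -> bool:
    """True if any term occurs as a whole word (case-insensitive, optional plural 's')."""
    return any(
        re.search(rf"\b{re.escape(t)}s?\b", text, re.IGNORECASE) for t in terms
    )


def keyword_match(post: str) -> bool:
    """Stage 1: broad extraction by radiology-specific keywords."""
    return mentions(post, SEARCH_TERMS)


def ct_mri_only(post: str) -> bool:
    """Stage 2 (automatable part only): CT/MRI posts that mention no other modality."""
    return mentions(post, TARGET_MODALITIES) and not mentions(post, OTHER_MODALITIES)


posts = [
    "What does my MRI report mean by 'hyperintense'?",
    "Ultrasound and CT both showed a cyst, should I worry?",
    "Is this scan result normal?",
]
extracted = [p for p in posts if keyword_match(p)]
candidates = [p for p in extracted if ct_mri_only(p)]
```

All three toy posts pass the broad keyword stage, but only the first survives the modality filter: the second also mentions ultrasound, and the third never names CT or MRI.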

Beginning with iterative coding of 936 posts sampled from the original corpus, two coders, C1 and C2, developed a codebook consisting of 23 themes characterizing types of questions posted on the websites (Table 1). We clustered these themes under four topics: interpretation of the report content, about the test, healthcare process, and diagnosis and illness experience. A third (C3) and a fourth (C4) coder each independently coded 50 posts randomly sampled from the original corpus (for a total of 100) to check the exhaustiveness of the themes. After confirming that the themes were comprehensive, C1 and C2 independently coded the same set of 100 posts to test inter-rater agreement (80%). After resolving any disagreements, C1 and C2 independently coded 380 posts (190 each) to conclude the analysis. In the end, we discarded 80 posts for lack of relevance, leaving 400 posts.
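The post counts reconcile as 100 double-coded plus 380 single-coded posts, giving 480 analyzed posts, and 480 minus 80 discarded posts leaves the 400 posts summarized in Table 1. The text does not say how the 80% agreement was computed. The sketch below assumes simple percent agreement, in which a post counts as agreed only when both coders assigned exactly the same set of themes. A chance-corrected statistic such as Cohen's kappa is a common alternative.

```python
def percent_agreement(coder_a: list[set[str]], coder_b: list[set[str]]) -> float:
    """Percentage of posts for which two coders assigned exactly the same theme set.

    Each argument holds one set of theme labels per post, in the same post order.
    """
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("expected two non-empty lists of equal length")
    # Each comparison yields True (1) or False (0), so the sum counts agreed posts.
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100 * matches / len(coder_a)


# Toy example with five posts (hypothetical labels): the coders differ only on the last.
c1 = [{"risk"}, {"prognosis"}, {"next steps"}, {"medical term"}, {"risk", "need for test"}]
c2 = [{"risk"}, {"prognosis"}, {"next steps"}, {"medical term"}, {"risk"}]
print(percent_agreement(c1, c2))  # 80.0
```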

Table 1.

Summary of themes from the online health forum study. Percentages are computed out of 400 posts; because some posts fell into multiple themes, percentages add up to more than 100%.

| Theme | % (n) | Theme | % (n) |
|---|---|---|---|
| Interpretation of report content (S1a) | | Diagnosis and illness experience (S1d) | |
| Certain sections (mostly Impressions and Findings) of the report content | 15.75 (63) | Seeking help understanding current illness experience | 15.25 (61) |
| Specific phrase or sentence in the report | 4.75 (19) | Concerned about the prognosis | 11.00 (44) |
| Image of a scanned body region | 4.75 (19) | Hypothesizing about the relationships among test results, diagnosis, and treatment | 4.50 (18) |
| Specific medical term in the report | 2.25 (9) | Seeking confirmation of doctor’s opinion or diagnosis | 3.00 (12) |
| Quantitative measurement of a questionable mass or body part | 1.75 (7) | Concerned about misdiagnosis by the doctor(s) | 2.75 (11) |
| Specific type of test (S1b) | | Healthcare process (S1c) | |
| Concerned about the risks associated with a test | 7.75 (31) | Concerned about what they should be doing or asking next | 8.75 (35) |
| Inquiring about the diagnostic abilities of a specific test | 6.50 (26) | Seeking help in making decisions about their healthcare options | 6.25 (25) |
| Seeking confirmation about the accuracy of a test | 4.25 (17) | Confused or expressing a need to understand the healthcare process | 5.25 (21) |
| Looking for comparison among tests | 4.00 (16) | Expressing lack of satisfaction with their healthcare | 4.25 (17) |
| Seeking test recommendations generally or for a condition | 3.25 (13) | Concerned about what will happen next in care | 3.75 (15) |
| Concerned about the need for the test | 2.75 (11) | Expressing lack of explanation from their doctor about healthcare options | 1.75 (7) |
| Concerned about the cost of treatment | 1.00 (4) | | |
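Each percentage in Table 1 is a theme's count divided by the 400 retained posts. The short check below recomputes entries from the published counts and shows why the column total exceeds 100%: the 23 theme counts sum to 501 theme assignments across 400 posts. The grouping comments mirror the table layout and are for orientation only.

```python
# Counts (n) from Table 1, in table order; the topic labels are comments only.
TABLE1_COUNTS = [
    63, 19, 19, 9, 7,        # S1a: interpretation of report content
    61, 44, 18, 12, 11,      # S1d: diagnosis and illness experience
    31, 26, 17, 16, 13, 11,  # S1b: the test
    35, 25, 21, 17, 15, 7,   # S1c: healthcare process
    4,                       # concerned about the cost of treatment
]
TOTAL_POSTS = 400  # 480 analyzed posts minus 80 discarded as irrelevant


def theme_percent(n: int, total: int = TOTAL_POSTS) -> float:
    """Share of posts assigned a theme, rounded to two decimals as in Table 1."""
    return round(100 * n / total, 2)


percents = [theme_percent(n) for n in TABLE1_COUNTS]
print(theme_percent(63))                   # 15.75
print(theme_percent(sum(TABLE1_COUNTS)))   # 125.25, since multi-coded posts count more than once
```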

Results

Below, we present findings organized by four topics that arose from the analysis. In doing so, we highlight the most salient themes within each, and follow with discussion.

Interpretation of report content (S1a)

Our analysis of online forums revealed that posters turn to these channels to make sense of their imaging data by sharing certain parts of the report content. In particular, people posted questions to seek an interpretation of certain sections (mostly impressions and findings) of the report content (15.75%), a specific phrase or sentence in the report (4.75%), or an image of a scanned body region (4.75%). Posters often asked non-specific questions (e.g., please help […], interpret […], etc.) along with significant parts of their report copy-pasted verbatim. For example, a post seeking clarification of a specific phrase reads:

“Please help- what does it mean- a) ‘parenchymal fibrosis is identified overlying’ b) ‘Cardiac size increased with CT 0.61.’ Do I need further investigations? and is this serious.”

Yet another seeks interpretation of an image with a direct link to the file:

“Can someone help me determine what the mri is seeing. http://pastebo***.co/dagn***.jpg”

About the test (S1b)

Posters also asked questions concerning the process of undergoing an imaging study. Most often, they were concerned about the risks or hazards associated with a test (7.75%), inquired about the diagnostic abilities of a specific test (6.5%), or looked for comparisons among tests (4%). For example, a post expressing concern about risks reads:

“I am quite concerned with the number of CT scans that I have had, and honestly speaking all of them have been unnecessary. I would like to know what my risk is for any radiation damage or increased risk of cancers because of all of these scans?"

Healthcare process (S1c)

Other common concerns that posters expressed related to understanding their healthcare process. Lack of sufficient information about the healthcare process led people to ask what they should be doing or asking next (8.75%) and to seek help making decisions about their healthcare options (6.25%). For example, a post seeking help deciding what to ask their doctor reads:

“I had an MRI of the head and spine and I am looking for some direction on the results or questions I should ask my Neuro before I go in and see him.”

Diagnosis and illness experience (S1d)

Finally, difficulty making sense of the diagnosis and illness experience led people to seek help understanding their current illness experience (15.25%) and to express concern about the prognosis associated with the diagnosis (11%).

The profusion of technical medical terminology in reports certainly hinders patient understanding. Yet technical terms account for only some of the complexity of these reports: particular linguistic expressions containing technical terms add further complexity, and without proper guidance from clinical experts, terms can be misinterpreted even with high-quality term-definition tools. This is supported by the higher prevalence of questions we found in S1a about inferring the meaning of whole sections (15.75%) or phrases (4.75%), as opposed to medical terms alone (2.25%). More importantly, the context necessary to clarify specific terms and phrases is not limited to the report document. Dominant themes in S1b–d show that posters are not only concerned about the difficulty of understanding the report content, but often desire information relevant to undergoing the imaging procedure, including the risks associated with the radiology exam, next steps in the healthcare process, and the illness experience. We draw on these contextually relevant information needs to inform the content structure and the types of information included in the prototype. This question of inferring the meaning of medical terminology from its surrounding context motivated our second study, as well as subsequent decisions that led to the design of our prototype.

DOMAIN KNOWLEDGE ELICITATION STUDY (S2)

We focused next on capturing essential types of information that could be communicated through a typical radiology report. First, we sought to understand the range of common concepts embedded in the language used by clinicians who write these reports. To do this, we treated commonly occurring phrases in the report content as ground truth, then validated their meaning with practicing radiologists. We began the study by applying text-processing techniques to mine the top 200 most frequently occurring 5-grams‡ from 205,250 unstructured radiology reports (78,221 CT and 127,029 MRI reports) of the head, chest, and abdomen, modifying the 5-grams as necessary to remove extraneous words. After removing any redundancies, we randomly sampled 20 commonly occurring 5-grams and used them as the basis for the elicitation study. The radiologist co-author of this paper aided in the development of an initial set of 10 concept categories: reference to body part, size (measure), comparison between reports, comparison within report, presence or absence of evidence, uncertainty, significance, suggestion, technique, and follow-up. We prepared a paper packet containing the materials necessary to elicit domain-specific knowledge about the 20 phrases. Each page included a representative phrase, three excerpts showing its usage in actual reports, and the concept categories.
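The 5-gram mining step can be sketched with a frequency counter. The paper does not specify tokenization, so this sketch assumes lowercased alphanumeric tokens with punctuation dropped. The subsequent manual editing of 5-grams and removal of redundancies are not modeled, and `tokenize` and `top_ngrams` are illustrative names.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase alphanumeric tokens; punctuation is dropped (a simplifying assumption)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def top_ngrams(reports: list[str], n: int = 5, k: int = 200) -> list[tuple[str, int]]:
    """Return the k most frequent word n-grams across all reports, with their counts."""
    counts = Counter()
    for report in reports:
        tokens = tokenize(report)
        # Slide a window of n tokens over each report; short reports yield nothing.
        counts.update(" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return counts.most_common(k)


reports = [
    "No evidence of acute intracranial hemorrhage.",
    "There is no evidence of acute intracranial abnormality.",
    "No evidence of acute intracranial hemorrhage or mass.",
]
print(top_ngrams(reports, k=2))
# [('no evidence of acute intracranial', 3), ('evidence of acute intracranial hemorrhage', 2)]
```

Because `Counter` is updated one report at a time, the same loop can stream over a large corpus without holding all reports in memory.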

We recruited seven radiologists with varying levels of experience, including five attending radiologists (mean=8.8 yrs) and two fellows in training (mean=7.5 mos). We handed each participant a packet of common phrases and asked them to select the concept categories that most closely matched the meaning implied by each phrase. While categorizing, we asked the radiologists to provide comments that would help clarify the meaning of each phrase. Additionally, we solicited comments on the comprehensiveness of the provided concept categories, modifying the list as necessary. Eventually, we refined the concept category list to 13 concepts: reference to body part or organ system, appearance (size, density), comparison between reports, comparison within report, presence of evidence, absence of evidence, uncertainty, certainty, significance, diagnosis, technique, suggestion for follow-up, and recommendation for follow-up. The elicitation session lasted about an hour for each participant.

Conversations with the radiologists revealed insights about certain structural consistencies in typical radiology report content. We learned that the Impression section lists the most important findings in order of importance, effectively acting as a summary of the entire report. We also learned how radiologists communicate uncertainty to referring physicians. Radiologists explained that they use phrases such as “clinical correlation is needed”, “if there is continued clinical suspicion”, or “in the appropriate clinical setting” to indicate that additional clinical data (e.g., evidence from another study, lab, or physical exam) is needed to reach a diagnosis that cannot be made by that imaging study alone.

Such phrases show that uncertainty is in fact common, because a report is intended to be taken together with other clinical findings: it is part of the interpreting radiologist’s duty to address a clinical question, which then guides the referring physician in evaluating the patient’s health [18]. Thus, despite findings from S1a showing ample evidence of patient information needs directed toward understanding and reviewing report content, we were convinced that making “technical-to-lay” language translation of the radiology report the driving design goal would be insufficient to meet patients’ needs. Instead, we support some aspects of the translation to scaffold patients’ and family members’ participation in clinical conversations about radiology reports, while also aiming to support co-located, synchronous communication in the clinic.

DESIGNING AN INTERACTIVE PATIENT-CENTERED RADIOLOGY REPORT

From findings uncovered in S1 and S2, we constructed the following list of design goals.

Structure and organize report content to simplify navigation

Difficulty interpreting content in specific sections of the report was the largest barrier to patients in reading their report (S1a). We learned through conversations with radiologists that structural patterns exist in the report that highlight salient details of the findings. We distilled these structural patterns through our large-scale report analysis (S2) and multiple iterations with radiologists, and used them to organize the user interface into tabs and panels that aid reading and navigation of the report.

Enable contextualized selection of report content to associate content fragments with questions and discussion topics

Enabling patients and family members to identify what in the report (S1a) they wish to clarify or discuss with clinicians is an integral part of report interpretation. We sought to design interactions that permit selecting and tagging terms and phrases in the report with minimal touches, and pairing selected content fragments with annotations and question tags in the report user interface.

Clarify medical jargon within the clinical context

Although web services exist to provide consumer friendly explanations for medical terms, our social media analysis in S1 suggests that patients still turn to online health communities in order to better understand the context necessary to make sense of the radiology data. S2 showed that much of this context cannot be obtained outside of the clinical consultation. We strive in our design to assist the user in obtaining a basic understanding of medical terminology, while not divorcing the text from the clinical history it exists within.

Permit caregiver awareness of patients' points of interest to support situated, co-constructed information understanding among family caregivers, patients, and clinical caregivers

For clinicians to communicate effectively about report content, they must assess what patients or family members already know: essentially, what ground they have covered in the report, and which portions of the report constitute regions of interest that could serve as starting points for collaborative review, discussion, and cultivating shared knowledge.

Prototype Design

We designed a web application with a touch-based user interface that was formatted for interaction on a Microsoft Surface tablet, and designed to handle written content of a CT or MRI study. We did not include support for viewing images of the CT or MRI scan in the current prototype§. Below, we describe the prototype through the features that we developed based on our design goals, noting how each feature supports user interaction with the radiology report.

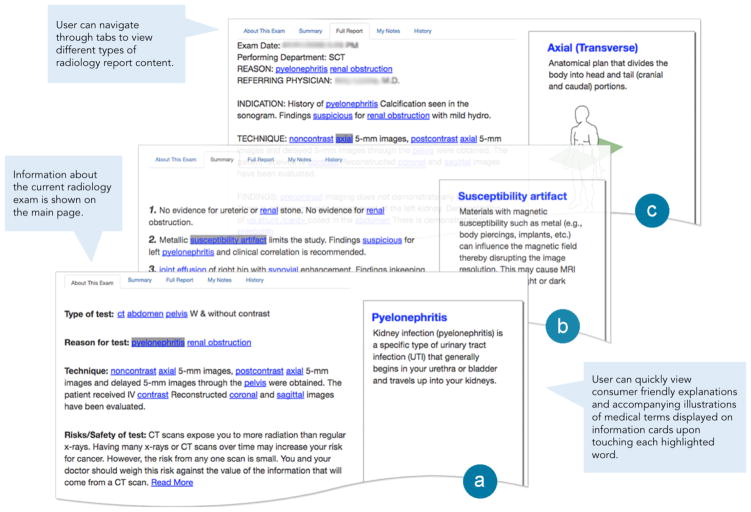

Information Cards

The application scans a .txt radiology report for words or phrases that need definitions or further clarification. These words and phrases came from a predefined list, which was constructed from the text analysis in S2 and curated by the radiologist co-author. Words were later added to the list case by case if the radiologist’s initial review of a patient’s report revealed critical medical text missed by our prototype. Each identified word is linked to a unique information card and becomes clickable in the prototype. When linked text is clicked, the information card appears on the far right-hand side of the screen, similar to how search engines such as Google and Yahoo! currently present healthcare information. Information cards contain a definition, an explanation, or a diagram. Definitions simplify medical jargon, explanations clarify complex phrases, and diagrams give patients a visual understanding of physical and spatial references, such as the angle of the scan and the specific anatomical areas imaged. Where needed, we turned to alternative web sources, verifying the accuracy of the information with the team’s radiologist.
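A minimal sketch of how linked text could be located, assuming the predefined list is stored as a dictionary from lowercase phrases to card contents. Whole-word, case-insensitive matching and the longest-phrase-first rule (so a multiword phrase is not split into its shorter parts) are assumptions. The glossary entries are hypothetical placeholders, not the radiologist-curated content.

```python
import re

# Hypothetical glossary: keys are lowercase phrases, values are card contents.
GLOSSARY = {
    "axial": {"kind": "diagram", "body": "Images taken as horizontal slices through the body."},
    "contrast": {"kind": "definition", "body": "A dye that makes some tissues show up more clearly."},
    "contrast enhancement": {"kind": "explanation", "body": "An area that brightens after contrast dye is given."},
}


def link_terms(report: str, glossary: dict) -> list[tuple[int, int, str]]:
    """Return sorted, non-overlapping (start, end, phrase) spans of glossary phrases.

    Longer phrases are tried first, so 'contrast enhancement' wins over 'contrast'.
    """
    taken = [False] * len(report)  # characters already claimed by a longer match
    spans = []
    for phrase in sorted(glossary, key=len, reverse=True):
        for m in re.finditer(rf"\b{re.escape(phrase)}\b", report, re.IGNORECASE):
            if not any(taken[m.start():m.end()]):
                spans.append((m.start(), m.end(), phrase))
                taken[m.start():m.end()] = [True] * (m.end() - m.start())
    return sorted(spans)


report = "Axial images show no abnormal contrast enhancement. Contrast was given."
for start, end, phrase in link_terms(report, GLOSSARY):
    print(report[start:end], "->", GLOSSARY[phrase]["kind"])
# Axial -> diagram
# contrast enhancement -> explanation
# Contrast -> definition
```

Returning the glossary key alongside each span lets the interface look up the matching card when the linked text is clicked.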

About the Exam

About the Exam (Figure 2a) highlights all text in the radiology report related to the imaging process: type of test, reason, and technique. Content is obtained from the original report by scraping the text according to structural features highlighted by the radiologists interviewed in S2. An additional item, Risk/Safety of test, is added to the section to give patients a holistic view of their scan. The risk/safety statement was hardcoded by our team’s radiologist.
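The paper does not list the structural features used for scraping. The sketch below assumes that each section begins on its own line with an ALL-CAPS header followed by a colon, and that About the Exam draws on hypothetical EXAM, INDICATION, and TECHNIQUE headers. Header conventions differ across institutions, so a real parser would need tuning to local report templates.

```python
import re

# Assumed convention: a section starts on its own line with an ALL-CAPS header and a colon.
HEADER = re.compile(r"^([A-Z][A-Z ]*[A-Z]):", re.MULTILINE)
ABOUT_THE_EXAM = ("EXAM", "INDICATION", "TECHNIQUE")  # hypothetical header names


def split_sections(report: str) -> dict[str, str]:
    """Map each header (e.g. 'FINDINGS') to the text between it and the next header."""
    matches = list(HEADER.finditer(report))
    sections = {}
    for i, m in enumerate(matches):
        end = matches[i + 1].start() if i + 1 < len(matches) else len(report)
        sections[m.group(1)] = report[m.end():end].strip()
    return sections


def about_the_exam(report: str) -> dict[str, str]:
    """Collect the imaging-process sections shown on the About the Exam tab."""
    sections = split_sections(report)
    return {name: sections[name] for name in ABOUT_THE_EXAM if name in sections}


REPORT = """EXAM: MRI brain with and without contrast
INDICATION: Follow-up of treated tumor.
TECHNIQUE: Axial and sagittal images were obtained.
FINDINGS: No new enhancing lesion.
IMPRESSION:
1. Stable postsurgical changes.
2. No evidence of recurrent tumor."""

print(about_the_exam(REPORT))
```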

Figure 2.

Excerpts from our field study session showing the design of the interactive radiology report prototype. (a) About This Exam; (b) Summary; (c) Full Report. Different types of content extracted from the raw text report are shown on the main screen; information retrieved from the web is displayed on information cards.

Summary

The Summary (Figure 2b) provides a list of all the important findings as well as recommendations and follow-ups, sorted in order of significance. These findings directly reflect the Impressions section of the original radiology report, with a title more easily understood by patients.
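Radiologists list Impression findings in order of importance (S2), so the Summary can keep the section's original order. The following is a hedged sketch that assumes findings are numbered "1.", "2.", and so on. A sentence that happens to end in a bare number (e.g. "level 3. Next") would be split incorrectly, so the sketch is illustrative only.

```python
import re


def impression_items(impression: str) -> list[str]:
    """Split a numbered Impression section ("1. ... 2. ...") into findings, keeping order.

    Unnumbered text is returned as a single item. Decimals such as "2.5 cm" are not
    split because the number's period must be followed by whitespace.
    """
    parts = re.split(r"(?:^|\s)\d+\.\s+", impression.strip())
    return [p.strip() for p in parts if p.strip()]


text = ("1. Stable postsurgical changes.\n"
        "2. No evidence of recurrent tumor.\n"
        "3. Recommend follow-up MRI in 3 months.")
print(impression_items(text))
```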

Full Report

The Full Report reproduces the original CT or MRI report in its entirety. Patients can use this page to view and interact with all content (information cards are available and history captures user-selected content).

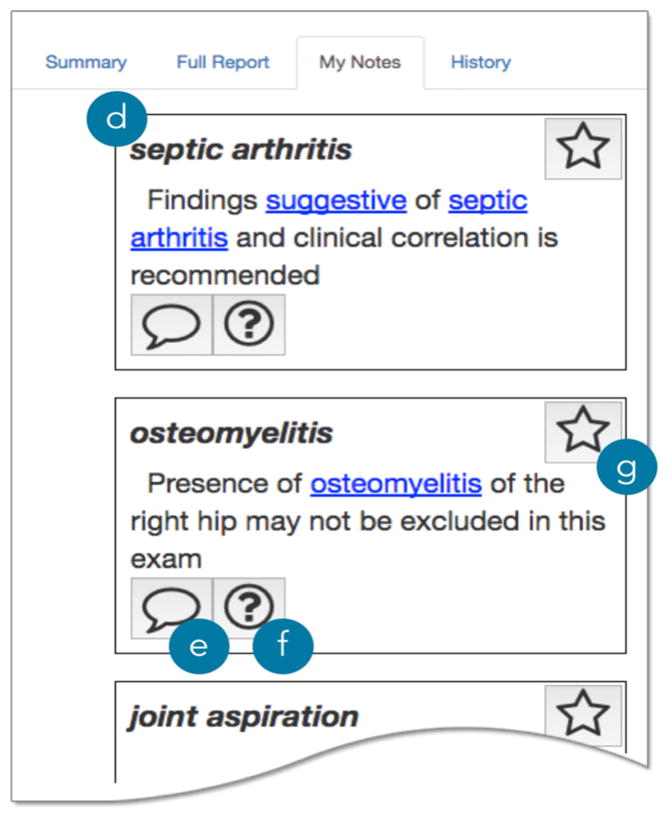

My Notes

My Notes (Figure 3) keeps a history of every information card the patient triggered. For every clicked word, a box containing the word, the sentence in which it appeared, and buttons for further interaction appears in a list on the right-hand side of the screen (Figure 3d–g). For each word or phrase, the patient can add a question or comment, or mark it as a favorite. All comments, questions, and favorites are stored on the screen for later viewing by patients, their accompanying family members, or clinicians.

Figure 3.

My Notes. Each content fragment appears in a (d) note card; the user can add a (e) comment or (f) question, or mark a (g) favorite note card.
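One way to model My Notes is as a list of note cards in click order, each holding the term, its sentence, and the user's annotations. The class and field names are hypothetical. `talking_points` illustrates how annotated cards could be surfaced to a clinician as starting points for discussion, in line with the caregiver-awareness design goal.

```python
from dataclasses import dataclass, field


@dataclass
class NoteCard:
    """One My Notes entry: the clicked term, its sentence, and the user's annotations."""
    term: str
    sentence: str
    comments: list[str] = field(default_factory=list)
    questions: list[str] = field(default_factory=list)
    favorite: bool = False


class MyNotes:
    """Keeps one note card per click, in click order (a hypothetical model of the feature)."""

    def __init__(self) -> None:
        self.cards: list[NoteCard] = []

    def record_click(self, term: str, sentence: str) -> NoteCard:
        card = NoteCard(term, sentence)
        self.cards.append(card)
        return card

    def talking_points(self) -> list[NoteCard]:
        """Cards the user annotated: likely starting points for the clinician."""
        return [c for c in self.cards if c.comments or c.questions or c.favorite]


notes = MyNotes()
card = notes.record_click("enhancement", "No abnormal contrast enhancement.")
notes.record_click("axial", "Axial images were obtained.")
card.questions.append("Is this a good sign?")
print([c.term for c in notes.talking_points()])  # ['enhancement']
```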

History

History allows users to step through every word or phrase they clicked on, in the order that they clicked them. As the user steps through their interaction history, the word or phrase that was clicked is highlighted in the context of the full report (e.g., by bringing focus to the region of the selected term), and other instances of the word or phrase are also highlighted.
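History can be modeled as a cursor over the recorded clicks. Each step brings the clicked occurrence into focus and also returns the spans of the term's other occurrences for highlighting. The `History` class, its methods, and the whole-word, case-insensitive matching are assumptions made for illustration.

```python
import re


class History:
    """Replays clicked terms in click order (a hypothetical model of the History view)."""

    def __init__(self, report: str) -> None:
        self.report = report
        self.clicks: list[tuple[str, int]] = []  # (term, start offset) per click
        self.pos = -1  # index of the click currently in focus

    def click(self, term: str, start: int) -> None:
        self.clicks.append((term, start))

    def step(self):
        """Advance one click; return (focus_span, other_instance_spans), or None at the end."""
        if self.pos + 1 >= len(self.clicks):
            return None
        self.pos += 1
        term, start = self.clicks[self.pos]
        focus = (start, start + len(term))
        pattern = rf"\b{re.escape(term)}\b"
        spans = [m.span() for m in re.finditer(pattern, self.report, re.IGNORECASE)]
        return focus, [s for s in spans if s != focus]


report = "Contrast was given. No abnormal contrast enhancement."
history = History(report)
history.click("contrast", report.index("contrast"))
print(history.step())  # ((32, 40), [(0, 8)])
print(history.step())  # None
```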

FIELD STUDY (S3)

Setting and Recruitment

We conducted a field study at Children’s Healthcare of Atlanta (CHOA), spanning two Cancer and Blood Disorders Centers, following IRB approval, with adolescent oncology patients and their parents from July to August 2016. A total of 14 patients (mean age=15.4; female=4) and 14 accompanying parents (mean age=39.1; female=11) were recruited through convenience sampling guided by clinician recommendation. All patients had some form of cancer or blood disorder. A total of five oncologists, with experience ranging from three to 36 years (mean=18 yrs.), cared for participating patients; all five used the prototype in clinical consultations and participated in discussions about practical considerations and use cases envisioned through the prototype features. All patient participants fit the inclusion criteria approved by the IRB: aged 13–17**; mentally capable of participating (as determined by the patient’s clinician); and English-speaking. Although patients with negative test results were not explicitly excluded from this study, clinicians were hesitant to suggest them. Thus, all participants had scans that indicated a normal or unchanged state in their diagnosis compared to their previous scan result.

Procedure

If patient–parent pairs privately indicated to their doctors their willingness to be approached by researchers, we introduced ourselves, explained the study, and obtained assent from patients, consent from their parents, and HIPAA authorization. At the participating clinician’s discretion, we acquired the patient’s most recent CT or MRI report. The scan was conducted and the report completed during the same patient visit; the report was then promptly reviewed by the oncologist and subsequently uploaded into our prototype.

We first demonstrated the features of the prototype by performing a guided tutorial using a sample radiology report to allow patients and their family members to learn and try out the technology using the sample report before reviewing their own data. We then loaded the recent report into the prototype and allowed the user to interact with the device unguided. We solicited responses from the patient with a few short questions throughout their interaction, to structure feedback. When the patient had completed independent evaluation of the prototype, we observed the clinician joining in to discuss the report with the patient and their family, as part of a typical consultation. Following the consultation, we performed a semi-structured interview with the patient and parents. All proceedings were audio recorded and observational notes were taken throughout by the researchers.

Analysis

All audio recordings were transcribed verbatim and then coded for qualitative themes. Two researchers followed an iterative, inductive coding method, developing new themes until no new codes emerged. The analyses resulted in seven broad primary themes, including current use patterns, challenges interacting with report data, desired information, context of use, information access and control, and communication with the doctor. We then split the transcripts along these primary themes and coded them iteratively until each primary theme had an agreed-upon list of subthemes. Two researchers then used these subthemes to code all transcripts, ensuring coverage of all topics present in the documents.

PROTOTYPE FEEDBACK

In reporting on findings, we refer to clinicians, parents, and teens with the labels C, P, and T (e.g., C1, P2, and T2). Most parents in our study had prior exposure to radiology reports and a moderate level of familiarity with report content. Ten of the 13 parents who responded to questions about record-keeping keep copies of, and read, each full report about their child; four parents (P1, P6, P10, and P11) told us that they take notes in the clinic. Two teen patients (T3, T13) said they had read their own radiology report before participating in our study. Below we share patient and parent responses to individual design features and information types presented in the prototype.

Information Cards

Of all information types presented to the participants, the ability to quickly view definitions and related diagrams in the information cards yielded uniformly positive responses (T&P:1, 3–5, 7–14). Several patient–parent pairs indicated that being able to retrieve definitions of medical terms quickly improved their overall reading experience. T13 shared his excitement: “I mean, it's definitely easier, some of these terms for how they actually do the scan, the different views at which they're actually scanning it in.” His excitement stemmed from the convenience of not needing to search for medical terms: “It definitely helps a lot [when] trying to figure [things] out, because before you'd have to go on Google or another search engine just to look it up.”

Besides the convenience of faster information retrieval, providing definitions in our self-contained application ensured that definitions came from a trusted source. T5 told us, “It makes it a lot easier, rather than taking the effort to look it up and then find a reliable website to look at, that has factual information.” P1 saw that accessing verified information within the report could overcome a communication barrier when asking questions about her child’s health. She reflected on her prior experience with a physician who questioned the veracity of the information source: “I think that might be the most beneficial things for a parent. I didn't like having to Google and kind of try to pick apart what was [there]. Once she was diagnosed at home I came in with questions that [oncologist] probably was like, ‘Where are you getting all this from?’”

Summary

Most participants (T&P:1, 5–6, 8, 12–13) had positive comments about the summary page. Teen patients especially seemed to like the summary feature for its simplicity. T12 told us, “That's the full report, that's boring. You want to go to the summary.” While interacting with the prototype, T5 commented that the summary content was easier to understand. “I think it's pretty cool. This part, the summary, is cool to me because I can understand it, what it means.”

Many parents commented on prior experiences encountering difficulty navigating the report content (P1, P3, P5–6, P9 and P13). For example, P1 recalled her initial encounter with her daughter’s radiology report: “It was detailed. I was like: I don't know what I'm looking at. I didn't really know how to navigate [it] in that form.” We also encountered participants who read the report without any guidance. P6, for example, said “I usually go straight to the source and I'm like, ‘Just give me the whole thing, and I'll just dig through and find it myself.’”

While the Impression section of a radiology report is intended to communicate the interpreting radiologist’s overall ‘impression’ (or assessment) of the image findings to referring physicians, relabeling the section from Impression to Summary seemed to reassure patient and parent participants in their understanding of its content. P13, for example, told us: “I think, for me and for my wife, I think we comprehended that that was the overall assessment of what were our findings. What did this MRI test tell us? I think we did understand that, but [as] a summary as opposed to an impression.”

P1 and P13 considered the summary section important because they knew their doctors pay significant attention to it. Upon moving to the summary, P1 shared: “I actually like it. I was telling her that this is my favorite page right here, that summary […] I want to know what's the most pressing based on the doctors that looked at the report.”

My Notes

Our analysis of interviews and observations of patient and parent responses to the My Notes feature revealed that the feature supported participants’ memory and the coordination of their participation (e.g., turn-taking) when engaging in medical conversations with the doctor. T12 told us that the notes would help him keep track of follow-up instructions: “He told you about six or seven things, you'd lose track of all of them. You could do it on this and just write them all down in notes.”

When we asked why she liked the My Notes feature, P1 told us that being able to write notes that reference relevant parts of the report could be particularly helpful when trying to remember what was said about a specific term: “Not remembering what they told me about a certain part of the report. When I get it home and I will look at it, if it's axial or whatever the word is, I get flustered.”

Patient and parent participants appreciated the ability to digitally interact with the text in the report. P9 shared her excitement about how the prototype encouraged shared viewing and construction of meaning via referencing: “Just sitting with the doctor and looking through it...right now it's we talk about the test and then she hands it to me, and then we leave you know? It's not really talked about you know.”

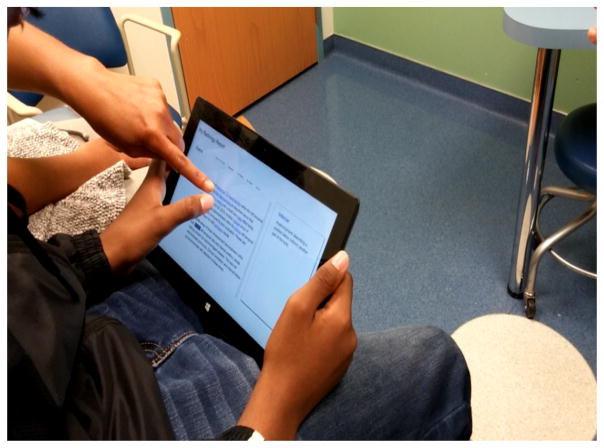

We observed T11 typing notes on the prototype as he and P11 (his mother) asked C5 the questions they had bookmarked in the My Notes section, coordinating their participation in the conversation. The following transcript excerpt shows how the My Notes feature helped participants manage turn-taking: as C5 begins to answer T11’s question, P11 indicates her intention to ask about the next term while T11 writes notes (Figure 1).

Figure 1. Patient and parent viewing the patient’s MRI report using a prototype designed to support review and communication with doctors.

C5: “That's a good question. [...] That's what Gadavist is. That's the chemical that looks white on the MRI.”

T11: (starts typing notes) “I should take notes for this.”

P11: “Can we skip down to the one I had a question about?”

T11: (pointing at the word “Gadavist”) “Can you explain this, because I forgot that fast?”

C5: “It's the chemical that looks white in the brain MRI. It shows where the brain filter is leaking. Okay?”

P11: (scrolling down, then pointing at the word “Leptomeningeal”) “What does that mean?”

USE CASES AND DISCUSSION

The previous sections looked at patient responses to information types and design features presented in the prototype. We now turn to notable findings from in-depth discussions with patients, their family members, and participating clinicians about specific scenarios of use, in which stakeholders further contemplated and commented on practical considerations. Analyses of the patient–parent–clinician interaction revealed additional use cases in which different features could play a significant role. These discussions provided further insight into how the individual information types and design features of the proposed technology could fit into the clinical workflow. We share three such use cases: facilitating co-located communication in the clinic, preparing for the consultation, and supporting collaborative review.

Co-located Communication

Earlier we showed how interactive components of the My Notes feature supported patient- and parent-driven, co-located, and simultaneous interaction with the report during the consultation. In the clinic, participants dynamically interspersed interaction with the report with both patient- and clinician-led discussions. Interestingly, these discussions initially focused on technical concepts, but patient and parent participants then drew on this technical knowledge to ask about symptoms, disease process, and treatment.

We found that, in practice, clinicians reference the full report prior to the consultation, yet use images of the patient’s body to supplement the conversation. C5’s comment illustrates this point well: “I'll actually take a picture of it [screenshot of the scan] and I'll show them and translate what I think is difficult. I [also] read the text, but then present the pictures to explain what they're talking about, especially if there is a change.”

We also observed that, when answering questions, clinicians alternated between sketching and physically referencing areas of their own and the patient’s bodies to demonstrate certain visuospatial concepts. Both C3 and C5 sketched the relevant body region and internal structures on a drawing board and a bed sheet, shifting between shared interaction with the prototype, sketching, and physical bodily references to orient the patient and situate their sketched explanations. C5 used regions of the patient’s body to demonstrate anatomical concepts that imply directionality.

Pulling up an abstracted graphical representation of the human anatomy [6] could provide a backdrop to scaffold sketching while explaining report concepts. However, techniques focused on browsing anatomical images alone are insufficient. In the pediatric clinic, multi-party human–human communication involving the minor patient, their accompanying parent, and the physician is unavoidable. In this setting, systems that support effective turn management [10] via user- and system-driven cues could be a compelling approach. As illustrated by T11 and P11’s interactions with the My Notes feature, opportunities exist for patient–parent pairs to engage in fruitful medical conversations through interactive user-directed cues that reference specific parts of the report, as well as system-directed cues supported by auto-linked graphical depictions.

Preparing for the Consultation

In-depth discussions about communication also revealed another important use case: preparing for the consultation. Both patients and parents expressed a strong desire to access the report data immediately after the exam, or at least before their consultation, so that they could better contemplate and construct questions in advance. P3 complained: “If he's having a scan today, I would like to know the results of it today versus next week when I'm not here, so I can ask the doctor those questions.” P7 described how earlier access would help them accumulate questions related to the report: “If we had the opportunity to look at that before we saw the doctors, we might have more questions.” P13 reflected on the usefulness of being able to record and send questions as they occur: “I think it'd be real helpful to have in advance, just that way if I had two or three questions, or [spouse] had, or [T13] has two or three questions, we can even send them ahead of time.”

Clinicians, however, often resisted giving patients access to radiology findings before the consultation, out of concern about the anxiety patients and family members might experience if left to interpret results alone. C2 expressed his concern: “Because if I showed everybody all the complete reports they would go crazy…I see 10 little things that could be cancer. It’s not, but every time they write the report they write that down, which means you have to learn how to […] No one has a normal CT scan.” C5 echoed this concern about misinterpretation, remarking that, “There's some lab results that are listed as abnormal, so a child may have a really low liver function test, [but] is totally not dangerous.”

Parents also supported the need for clinicians to filter information. P13 and C5 hinted at a viable solution: providing summarized information before the consultation. We found that C5 often communicated summaries to patients verbally as soon as the report was available. P13 told us, “That's typically when we have the MRI, a week to ten days, usually not too much longer than that is when we actually have a visit in clinic. That's when [C5] goes over that, but she will also reach out to give us a call after she's seen him to say is it something we'll go in detail when we see you in a week or here's what it is, no change. Something along those lines, but at that summary level.”

Supporting patients’ and parents’ desire for information to prepare for the clinical visit, while respecting clinicians’ concerns about patient anxiety and misinterpretation, should be a central consideration for any technology designed to help patients prepare for a clinical visit. Clinicians were particularly concerned about releasing scan images to patients before they could provide their own interpretive input. The summary, on the other hand, was generally considered an acceptable level of information to provide to the patient. Building on Hassol et al. [11], an important topic for future work is determining when, how, and which subsets of information from their diagnostic radiology report patients and family members consider acceptable to receive. It would be equally important to address patient concerns and anxiety by providing appropriate contextual information (as shown in S1b–d) alongside these subsets of information.

Collaborative Review

The final use case arising from our interviews with patients, parents, and clinicians is supporting review of report content over time. Many patients and parents expressed difficulty processing and encoding all the information during the consultation. P1 describes how the emotional experience of being in the consultation makes processing the presented information difficult: “I feel like sometimes coming up here it makes her so anxious that she doesn't really have any questions until we get two hours down the road...” P12 reflects on how emotions affect her memory. She recalled that, “You don't start writing, because the emotions get in the way of what's really got to happen, and your emotions will run away with you; or you'll forget.” Patients and parents saw the prototype as an opportunity to facilitate memory recall through record keeping and eased time constraints. P8 explains how a personalized space would help her process and understand the events discussed in the consultation: “Probably at home, to be able to hear what the doctor explains to us about the scans, but then…look through it ourselves on our own time and understand it.”

Others saw the review process as a time to discuss findings with other family members. P11 describes the process by which she obtains domain knowledge from her mother, a registered nurse: “Grandma [will] read them because she is a registered nurse. She's able to clarify some things that I didn't really understand that [C5] was going over.”

In addition to investigating appropriate timing for collaborative and individual review of diagnostic radiology reports, our future work will examine how best to incorporate selected image frames to support situated, collaborative image review through coordinated browsing of report and image content. As a useful starting point, we will look to Mentis and Taylor, who examined collaborative anatomical image interaction in the context of minimally invasive surgery [21]. While our work applies to diagnostic image reporting for patients and family members (rather than real-time expert procedures), their insights into the use of shared annotation tools and image manipulation techniques among collaborators with varying expertise provide guidance that we look forward to applying to co-located and distributed patient–clinician communication scenarios.

CONCLUSION AND LIMITATIONS

In this paper, we explore how communication-focused technology can be leveraged in the clinical setting to help patients interpret heavily technical diagnostic documents. Through formative studies, we develop design considerations unique to this domain, then evaluate and expand these considerations with a field study using a high-fidelity prototype. Our study has several limitations. The online health forum posts may not represent the concerns of the pediatric patients and family members who participated in our study. The implications of our findings may not extend to all cases of pediatric family and clinician communication, as they are based on a small sample and a specific type of chronic condition.

Still, feedback from patients about the prototype features supports our use cases and design considerations, and points to new considerations specific to the patient–parent–clinician relationship observed in the consultation. As we continue to address shortcomings of the prototype, we hope to explore how these design considerations play out in different settings, for different imaging modalities, and for different patient populations.

Acknowledgments

We thank the study participants and CHOA staff. This work was supported by an IPaT seed grant and NSF #1464214.

Footnotes

Our decision to use retrospective analysis of forum posts and elicitation of expert knowledge to inform the design process is guided in part by Kay et al. [17].

In natural language processing (NLP), an n-gram is a sequence of n consecutive words; n-grams are widely used in text analytics. Our decision to use n-grams as a proxy for understanding radiology report content is guided in part by [19].
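To make the n-gram definition concrete, the following is a minimal, illustrative sketch of extracting and counting word n-grams from a set of texts. It is not the pipeline used in our study; whitespace tokenization, lowercasing, and the helper names `ngrams` and `top_ngrams` are assumptions introduced here for illustration only.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return every run of n consecutive tokens as a tuple (hypothetical helper)."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def top_ngrams(texts, n, k=5):
    """Count n-grams across texts and return the k most frequent (hypothetical helper).

    Assumes naive lowercase whitespace tokenization; a real analysis would
    likely handle punctuation and clinical abbreviations more carefully.
    """
    counts = Counter()
    for text in texts:
        tokens = text.lower().split()
        counts.update(ngrams(tokens, n))
    return counts.most_common(k)


# Example on a made-up phrase (not study data):
print(ngrams("no acute intracranial abnormality".split(), 2))
# [('no', 'acute'), ('acute', 'intracranial'), ('intracranial', 'abnormality')]
```

Counting bigrams or trigrams this way surfaces recurring multi-word phrases that single-word counts would miss.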

Patients and family members currently do not have electronic access to image files of the radiology scans in our study setting.

Patients who participated in our study undergo frequent scans (approximately once every three months). Our decision to focus on this age range was motivated by hospital policy that allows adolescents aged 13 and above to access their electronic health record data.

References

1. Apache cTAKES™ clinical Text Analysis Knowledge Extraction System. Retrieved January 5, 2017 from http://ctakes.apache.org/

2. Arnold Corey W, McNamara Mary, El-Saden Suzie, Chen Shawn, Taira Ricky K, Bui Alex AT. Imaging informatics for consumer health: towards a radiology patient portal. Journal of the American Medical Informatics Association. 2013;20(6):1028–1036. doi: 10.1136/amiajnl-2012-001457.

3. Basu Pat A, Ruiz-Wibbelsmann Julie A, Spielman Susan B, Van Dalsem Volney F, Rosenberg Jarrett K, Glazer Gary M. Creating a patient-centered imaging service: determining what patients want. American Journal of Roentgenology. 2011;196(3):605–610. doi: 10.2214/AJR.10.5333.

4. Berlin Leonard. Communicating results of all radiologic examinations directly to patients: has the time come? American Journal of Roentgenology. 2007;189(6):1275–1282. doi: 10.2214/AJR.07.2740.

5. Bickmore Timothy W, Pfeifer Laura M, Jack Brian W. Taking the time to care: empowering low health literacy hospital patients with virtual nurse agents. Proceedings of the ACM International Conference on Human Factors in Computing Systems (CHI '09); 2009. pp. 1265–1274. doi: 10.1145/1518701.1518891.

6. BioDigital. 3D Human Visualization Platform for Anatomy and Disease. Retrieved December 24, 2016 from https://www.biodigital.com/

7. Bruno Michael A, Petscavage-Thomas Jonelle M, Mohr Michael J, Bell Sigall K, Brown Stephen D. The “Open Letter”: Radiologists’ reports in the era of patient web portals. Journal of the American College of Radiology. 2014;11(9):863–867. doi: 10.1016/j.jacr.2014.03.014.

8. Butow Phyllis N, Dunn Stewart M, Tattersall Martin H, Jones Quentin J. Computer-based interaction analysis of the cancer consultation. British Journal of Cancer. 1995;71(5):1115–1121. doi: 10.1038/bjc.1995.216.

9. Cancer Survivors Network. Retrieved from https://csn.cancer.org/forum

10. Duncan Starkey. Some signals and rules for taking speaking turns in conversations. Journal of Personality and Social Psychology. 1972;23(2):283–292. doi: 10.1037/h0033031.

11. Hassol Andrea, Walker James M, Kidder David, Rokita Kim, Young David, Pierdon Steven, Deitz Deborah, Kuck Sarah, Ortiz Eduardo. Patient experiences and attitudes about access to a patient electronic health care record and linked web messaging. Journal of the American Medical Informatics Association. 2004;11(6):505–513. doi: 10.1197/jamia.M1593.

12. HealthBoards. Retrieved from http://www.healthboards.com/

13. The Health Information Technology for Economic and Clinical Health (HITECH) Act, from the ARRA of 2009. pp. 353–380. Retrieved December 22, 2016 from https://www.healthit.gov/sites/default/files/hitech_act_excerpt_from_arra_with_index.pdf

14. Henshaw Dan, Okawa Grant, Ching Karen, Garrido Terhilda, Qian Heather. Access to radiology reports via an online patient portal: experiences of referring physicians and patients. Journal of the American College of Radiology. 2015;12(6):582–586. doi: 10.1016/j.jacr.2015.01.015.

15. Johnson Annette J. Reporting radiology results to patients: keeping them calm versus keeping them under control. Imaging in Medicine. 2010;2(5):477–482. doi: 10.2217/iim.10.42.

16. Johnson Annette J, Easterling Doug, Nelson Roman, Chen Michael Y, Frankel Richard M. Access to radiologic reports via a patient portal: clinical simulations to investigate patient preferences. Journal of the American College of Radiology. 2012;9(4):256–263. doi: 10.1016/j.jacr.2011.12.023.

17. Kay Matthew, Morris Dan, schraefel mc, Kientz Julie A. There’s no such thing as gaining a pound: reconsidering the bathroom scale user interface. Proceedings of the ACM International Joint Conference on Pervasive and Ubiquitous Computing (UbiComp '13); 2013. pp. 401–410. doi: 10.1145/2493432.2493456.

18. Kushner David C, Lucey Leonard L. Diagnostic radiology reporting and communication: The ACR Guideline. Journal of the American College of Radiology. 2005;2(1):15–21. doi: 10.1016/j.jacr.2004.08.005.

19. Lin Chin-Yew, Hovy Eduard. Automatic evaluation of summaries using N-gram co-occurrence statistics. Proceedings of the 2003 Conference of the North American Chapter of the Association for Computational Linguistics on Human Language Technology (NAACL '03); Association for Computational Linguistics; 2003. pp. 71–78. doi: 10.3115/1073445.1073465.

20. MedHelp. Retrieved from http://www.medhelp.org/

21. Mentis Helena M, Taylor Alex S. Imaging the body: embodied vision in minimally invasive surgery. Proceedings of the ACM International Conference on Human Factors in Computing Systems (CHI '13); 2013. pp. 1479–1488. doi: 10.1145/2470654.2466197.

22. Miller Andrew D, Mishra Sonali R, Kendall Logan, Haldar Shefali, Pollack Ari H, Pratt Wanda. Partners in care: design considerations for caregivers and patients during a hospital stay. Proceedings of the ACM Conference on Computer-Supported Cooperative Work & Social Computing (CSCW '16); 2016. pp. 754–767. doi: 10.1145/2818048.2819983.

23. Ni Tao, Karlson Amy K, Wigdor Daniel. AnatOnMe: facilitating doctor-patient communication using a projection-based handheld device. Proceedings of the ACM International Conference on Human Factors in Computing Systems (CHI '11); 2011. pp. 3333–3342. doi: 10.1145/1978942.1979437.

24. Oh Seong Cheol, Cook Tessa S, Kahn Charles E. PORTER: a Prototype System for Patient-Oriented Radiology Reporting. Journal of Digital Imaging. 2016:1–5. doi: 10.1007/s10278-016-9864-2.

25. Vardoulakis Laura Pfeifer, Karlson Amy, Morris Dan, Smith Greg, Gatewood Justin, Tan Desney. Using mobile phones to present medical information to hospital patients. Proceedings of the ACM International Conference on Human Factors in Computing Systems (CHI '12); 2012. pp. 1411–1420. doi: 10.1145/2207676.2208601.

26. Reddit. Retrieved from https://www.reddit.com/

27. Rosenkrantz Andrew B, Flagg Eric R. Survey-based assessment of patients’ understanding of their own imaging examinations. Journal of the American College of Radiology. 2015;12(6):549–555. doi: 10.1016/j.jacr.2015.02.006.

28. Ross Stephen E, Lin Chen-Tan. The effects of promoting patient access to medical records: a review. Journal of the American Medical Informatics Association. 2003;10(2):129–138. doi: 10.1197/jamia.M1147.

29. Ross Stephen E, Todd Jamie, Moore Laurie A, Beaty Brenda L, Wittevrongel Loretta, Lin Chen-Tan. Expectations of patients and physicians regarding patient-accessible medical records. Journal of Medical Internet Research. 2005;7(2):e13. doi: 10.2196/jmir.7.2.e13.

30. Rubin Daniel L. Informatics methods to enable patient-centered radiology. Academic Radiology. 2009;16(5):524–534. doi: 10.1016/j.acra.2009.01.009.

31. Sobo Elisa J. Good communication in pediatric cancer care: a culturally-informed research agenda. Journal of Pediatric Oncology Nursing. 2004;21(3):150–154. doi: 10.1177/1043454204264408.

32. Street Richard L, Makoul Gregory, Arora Neeraj K, et al. How does communication heal? Pathways linking clinician-patient communication to health outcomes. Patient Education and Counseling. 2009;74(3):295–301. doi: 10.1016/j.pec.2008.11.015.

33. Tang Paul C, Ash Joan S, Bates David W, Overhage J Marc, Sands Daniel Z. Personal health records: definitions, benefits, and strategies for overcoming barriers to adoption. Journal of the American Medical Informatics Association. 2006;13(2):121–126. doi: 10.1197/jamia.M2025.

34. Unruh Kenton T, Skeels Meredith, Civan-Hartzler Andrea, Pratt Wanda. Transforming clinic environments into information workspaces for patients. Proceedings of the ACM International Conference on Human Factors in Computing Systems (CHI '10); 2010. pp. 183–192. doi: 10.1145/1753326.1753354.

35. Wilcox Lauren, Morris Dan, Tan Desney, Gatewood Justin. Designing Patient-Centric Information Displays for Hospitals. Proceedings of the ACM International Conference on Human Factors in Computing Systems (CHI '10); 2010. pp. 2123–2132. doi: 10.1145/1753326.1753650.

36. Woods Susan S, Schwartz Erin, Tuepker Anais, et al. Patient experiences with full electronic access to health records and clinical notes through the My HealtheVet Personal Health Record Pilot: qualitative study. Journal of Medical Internet Research. 2013;15(3):e65. doi: 10.2196/jmir.2356.

37. Zhang Yan. Contextualizing consumer health information searching: an analysis of questions in a social Q&A community. Proceedings of the ACM International Conference on Health Informatics (IHI '10); 2010. pp. 210–219. doi: 10.1145/1882992.1883023.