SUMMARY

Advantageous foraging choices benefit from an estimation of two aspects of a resource’s value: its current desirability and availability. Both orbitofrontal (OFC) and ventrolateral (VLPFC) prefrontal areas contribute to updating these valuations, but their precise roles remain unclear. To explore their specializations, we trained macaque monkeys on two tasks: one required updating representations of a predicted outcome’s desirability, as adjusted by selective satiation; the other required updating representations of an outcome’s availability, as indexed by its probability. We evaluated performance on both tasks in three groups of monkeys: unoperated controls and those with selective, fiber-sparing lesions of either OFC or VLPFC. Representations that depend on VLPFC—but not OFC—play a necessary role in choices based on outcome availability; in contrast, representations that depend on OFC—but not VLPFC—play a necessary role in choices based on outcome desirability.

INTRODUCTION

To choose the most advantageous course of action, humans and other animals need to combine information about the desirability of an option with a graded estimate of its potential availability, and economists have long appreciated these two aspects of valuation. By combining the probability of a particular outcome with its subjective value, the overall value of a particular course of action can be estimated. Although economic behavior of this sort is reasonably well understood at the behavioral level, the brain areas necessary for processing these two aspects of valuation remain uncertain.

Orbitofrontal cortex (OFC, Walker’s areas 11, 13, and 14) is widely held to be important for learning about both reward value and reward contingency (Mishkin, 1964; Padoa-Schioppa, 2011; Rolls, 2000; Wallis, 2007). “Reward value” in the present context refers to subjective value based on preference or desirability of a particular food outcome, as opposed to value as commonly computed in economic theory (probability × magnitude). Lesions of the granular OFC of primates disrupt the ability to use information about the desirability and probability of rewarding outcomes to guide decision-making (Camille et al., 2011; Hornak et al., 2004; Izquierdo et al., 2004; Walton et al., 2010), and similar observations have followed lesions of the agranular OFC of rodents (Burke et al., 2008; Mobini et al., 2002).

Recently, a role for the OFC in signaling reward probability has been questioned; monkeys with selective excitotoxic lesions of OFC, unlike monkeys with aspiration lesions of OFC, are unimpaired in learning and reversing object choices based on reward feedback in deterministic settings (Rudebeck et al., 2013). This finding raises a question about the learning of stimulus–outcome probabilities: is the OFC involved and, if not, what area is necessary for updating these representations in the primate brain? The work of Walton et al. (2010), combined with our previous results (Rudebeck et al., 2013), suggests that some area near OFC, rather than OFC per se, might be crucial. The adjacent inferior convexity has been implicated in similar types of learning (Iversen and Mishkin, 1970; Rygula et al., 2010), but only with a deterministic experimental design similar to that used by Rudebeck et al. (2013). Accordingly, we tested the contributions of both regions to choices based on reward desirability and reward probability.

Here we report the effects of excitotoxic lesions of either granular OFC or ventrolateral prefrontal cortex (VLPFC, Walker’s areas 12, 45, and ventral 46)—a part of the granular prefrontal cortex adjacent to the OFC—on two tasks. One task is designed to assess the ability to use the updated probability of a predicted outcome to guide a choice among visual stimuli, the other to measure the ability to use the current desirability of a predicted outcome to make similar choices. In both tasks, monkeys chose between options depending on their expected value. In the first task (Experiment 1), we manipulated the probability of receiving a single reward for a particular choice while holding the desirability and magnitude of reward constant. In the second task (Experiment 2), we manipulated the subjective value of different food rewards with a selective satiation procedure while holding the probability and magnitude of reward stable.

RESULTS

Experiment 1: Updating likelihood estimates for predicted outcomes

We trained a group of unoperated control monkeys (n=8) and a group of monkeys with excitotoxic OFC lesions (n=4) to perform a three-choice probabilistic learning task (Fig. 1A) (Walton et al., 2010). Four of the unoperated control monkeys subsequently completed additional preoperative testing, received excitotoxic lesions of VLPFC (n=4, Figs 1C and S1), and then were retested on the three-choice probabilistic task. This difference in testing history between OFC and VLPFC lesion groups meant that monkeys with OFC lesions were compared to concurrently run controls, whereas monkeys with VLPFC lesions were compared to their own preoperative performance (see Fig. S7 for full details of the testing order).

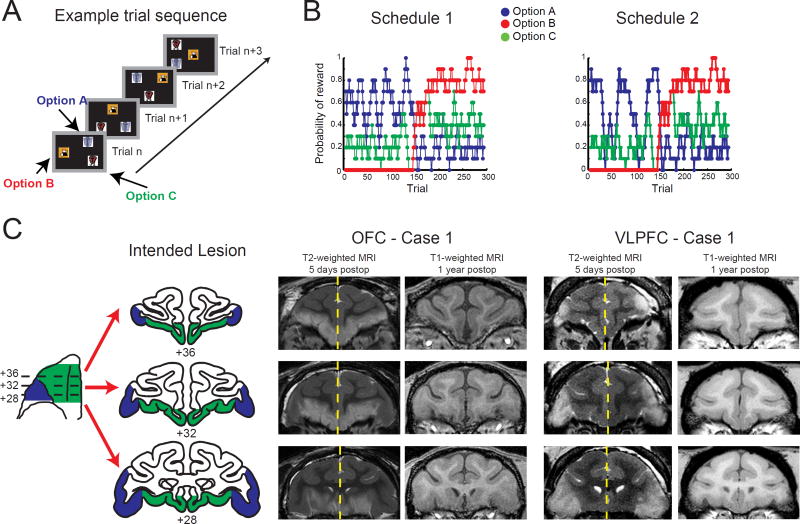

Figure 1. The three-choice probabilistic learning task, reward schedules, and lesion extents.

A) Task sequence. On each trial, monkeys were presented with three stimuli for choice and, through trial and error, could learn which stimulus was associated with the highest probability of reward. B) Reward delivery depended on the underlying reward schedules shown here and those illustrated in Fig. S3. C) Schematic of OFC (green) and VLPFC (blue) lesions. For both OFC and VLPFC lesions, T2-weighted MR images taken within one week of surgery were used to estimate the extent of the lesions. White hypersignal in the T2-weighted images (indicated by arrowheads) is associated with edema that follows injections of excitotoxins and indicates the likely extent of the lesion. For T2-weighted images, the left and right sides of the MR images are from different scans and have been placed together for ease of viewing. Yellow dashed lines indicate where images from two different postoperative scans have been joined. MR images from T1-weighted scans acquired at least a year after surgery confirm the loss of cortex in the intended regions. Numerals indicate the distance in mm from the interaural plane. MR images are from levels matching the drawings of coronal sections.

Based on MRI assessment, we estimated that the lesions destroyed a mean of 84.6% of OFC (range: 71.0–96.3%) and, in the other group of monkeys, 91.4% of VLPFC (range: 85.0–98.9%; Supplemental Information, Fig. S1 and Table 1). Importantly, there was minimal overlap between lesions, with less than 5% of the nontarget structure affected on average (Table 1). Inadvertent damage was typically unilateral and inconsistent across subjects.

TABLE 1.

Percent of intended and unintended damage to either OFC or VLPFC in monkeys that received OFC lesions (top) and monkeys that received VLPFC lesions (bottom).

| Case # | OFC (intended), left | OFC (intended), right | OFC (intended), mean | VLPFC (inadvertent), left | VLPFC (inadvertent), right | VLPFC (inadvertent), mean |
|---|---|---|---|---|---|---|
| OFC 1 | 82.0 | 78.2 | 80.1 | 0.64 | 0.15 | 0.4 |
| OFC 2 | 92.2 | 89.7 | 91.0 | 4.26 | 2.66 | 3.46 |
| OFC 3 | 81.2 | 60.7 | 71.0 | 2.49 | 0.28 | 1.39 |
| OFC 4 | 96.1 | 96.6 | 96.3 | 11.1 | 7.9 | 9.5 |
| Mean | 87.9 | 81.3 | 84.6 | 4.6 | 2.7 | 3.7 |

| Case # | OFC (inadvertent), left | OFC (inadvertent), right | OFC (inadvertent), mean | VLPFC (intended), left | VLPFC (intended), right | VLPFC (intended), mean |
|---|---|---|---|---|---|---|
| VLPFC 1 | 0.75 | 3.56 | 2.16 | 91.6 | 95.9 | 93.8 |
| VLPFC 2 | 3.66 | 3.65 | 3.66 | 97.8 | 100.0 | 98.9 |
| VLPFC 3 | 3.95 | 0 | 1.98 | 92.5 | 83.6 | 88.1 |
| VLPFC 4 | 0 | 2.12 | 1.06 | 83.1 | 86.8 | 85.0 |
| Mean | 2.1 | 2.3 | 2.2 | 91.3 | 91.6 | 91.4 |

At the start of each 300-trial session, monkeys were presented with three novel stimuli on a touchscreen monitor (Fig. 1A). By sampling different stimuli over trials, monkeys could learn which of the three stimuli was the best option, i.e., the one associated with the highest probability of receiving a single banana-flavored pellet. Because the reward probabilities assigned to each option changed over the course of the session, monkeys needed to continually update their representation of the best option to maximize reward. Reward delivery for selecting a particular stimulus was predetermined by one of four different schedules, as described in Figs 1B and S3 and in Walton et al. (2010), and each trial was followed by a 5-s intertrial interval (ITI).

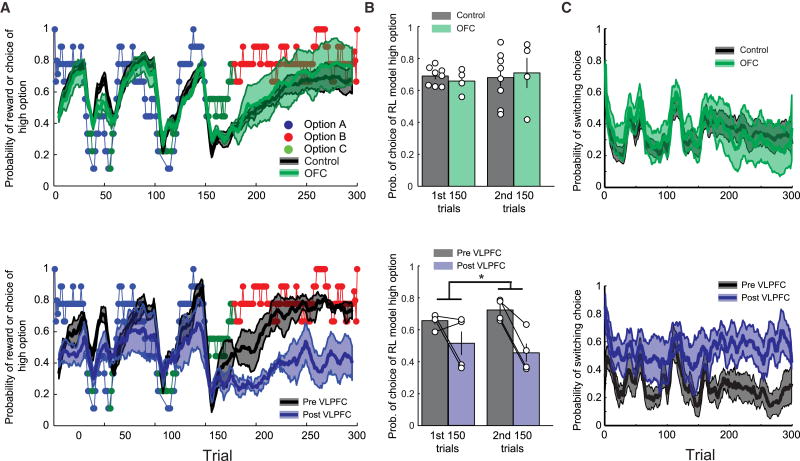

Unoperated monkeys, both the control group and the monkeys later given VLPFC lesions (tested preoperatively), quickly learned which image was associated with the highest probability of reward and were able to track the best option as it changed over the course of each test session (Fig. 2A, gray line/shaded area). Contrary to reports of deficits in updating probabilistic outcomes after aspiration lesions of OFC (Camille et al., 2011; Hornak et al., 2004; Mobini et al., 2002; Walton et al., 2010), monkeys with selective, excitotoxic lesions of OFC were unimpaired on this task. For instance, on schedule 2, the choices of monkeys with OFC lesions clearly overlapped with those of the unoperated controls (Fig. 2A). To probe this null result, we used a reinforcement learning model to estimate, on a trial-by-trial basis, whether monkeys were choosing the image associated with the highest probability of reward given their history of previous choices and outcomes on schedule 2 (Fig. 2B). This analysis confirmed that monkeys with OFC lesions did not differ from controls [F(1,10)<0.1, p>0.9]. In addition, monkeys with OFC lesions chose the option associated with the highest probability of reward at above-chance levels (one-sample t-test, t(3)=4.9, p<0.01). This null effect was consistent across all of the schedules on which the monkeys with OFC lesions were tested [Figs 3A, S2–3, effect of group, F(1,10)=0.15, p>0.7, group by schedule interaction, F(3,30)=0.28, p>0.8] and did not depend on the phase of the test session [first vs second 150 trials, effect of phase or phase by group interaction, Fs<2, ps>0.15].
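The reinforcement learning model is not specified in this section. As an illustration only, the sketch below assumes a standard delta-rule (Rescorla–Wagner) learner with softmax choice; the function names, the learning rate `alpha`, the inverse temperature `beta`, and the initial value of 0.5 are all hypothetical choices, not the authors' fitted model. The learner updates the estimated reward probability of the chosen stimulus after each outcome, flags the stimulus it rates best before each trial, and scores the proportion of trials on which the animal chose that stimulus. Parameters are fit by a coarse grid search on the choice likelihood.

```python
import math

def softmax(values, beta):
    """Softmax choice probabilities with inverse temperature beta."""
    m = max(values)
    exps = [math.exp(beta * (v - m)) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def neg_log_likelihood(choices, rewards, alpha, beta, n_options=3, init=0.5):
    """Negative log-likelihood of the observed choices under delta rule + softmax."""
    values = [init] * n_options
    nll = 0.0
    for c, r in zip(choices, rewards):
        nll -= math.log(softmax(values, beta)[c])
        values[c] += alpha * (r - values[c])  # update only the chosen stimulus
    return nll

def fit_grid(choices, rewards, alphas, betas, n_options=3):
    """Return the (alpha, beta) pair with the lowest negative log-likelihood."""
    return min(((a, b) for a in alphas for b in betas),
               key=lambda ab: neg_log_likelihood(choices, rewards, ab[0], ab[1], n_options))

def model_best_options(choices, rewards, alpha, n_options=3, init=0.5):
    """Stimulus the model rates highest before each trial's outcome is seen."""
    values = [init] * n_options
    best = []
    for c, r in zip(choices, rewards):
        best.append(max(range(n_options), key=lambda k: values[k]))  # ties -> lowest index
        values[c] += alpha * (r - values[c])
    return best

def prop_chose_model_best(choices, rewards, alpha, n_options=3):
    """Proportion of trials on which the animal chose the model's best option."""
    best = model_best_options(choices, rewards, alpha, n_options)
    return sum(c == b for c, b in zip(choices, best)) / len(choices)
```

Usage: `alpha, beta = fit_grid(ch, rw, [0.1, 0.3, 0.5], [1, 3, 10])` followed by `prop_chose_model_best(ch, rw, alpha)` gives one session's score; with three options, 1/3 is a natural chance reference.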

Figure 2. VLPFC, but not OFC, lesions disrupt the ability to choose according to outcome probability on the three-choice probabilistic learning task.

A) Mean (±SEM) choice behavior of unoperated controls (gray, top row, n = 8), monkeys with OFC lesions (green, top row, n = 4), and monkeys before (gray, n = 4) and after (blue, n = 4) VLPFC lesions (bottom row) on schedule 2. Note that in A (top), the gray curve and shading (Control) are largely obscured by the overlying green curve and shading (OFC). Colored points indicate the identity of the high-reward option and the probability of receiving a reward for selecting it. B) Mean (±SEM) probability of choosing the high-reward option, as estimated by a reinforcement learning model, in the first and second sets of 150 trials for unoperated controls (n = 8) and monkeys with OFC lesions (top row, n = 4) and monkeys before and after VLPFC lesions (bottom row, n = 4) on schedule 2. Symbols show scores of individual subjects. C) Mean (±SEM) probability of switching choice options from trial to trial for unoperated controls (n = 8) and monkeys with OFC lesions (top row, n = 4) and monkeys before and after VLPFC lesions (bottom row, n = 4) on schedule 2. * p<0.05. Also see Figs S2 and S3.

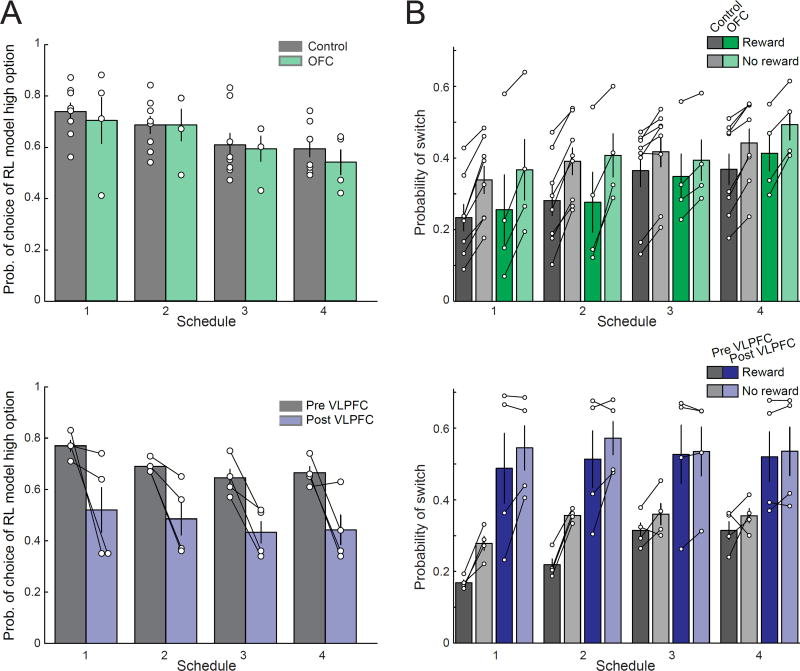

Figure 3. VLPFC, but not OFC, lesions disrupt probabilistic learning.

A) Mean (±SEM) probability of choosing the option associated with the highest probability of reward, as defined by a reinforcement learning model fit to monkeys’ choices, in each of the 4 schedules for unoperated controls (gray, top row, n = 8) and monkeys with OFC lesions (green, top row, n = 4) and monkeys before and after VLPFC lesions (gray and blue, respectively, bottom row, n = 4). B) Mean (±SEM) probability of switching on rewarded (darker shading) or unrewarded trials (lighter shading) for unoperated controls (gray, n = 8), monkeys with OFC lesions (green, n = 4), and monkeys before (gray, n = 4) and after VLPFC lesions (blue, n = 4). Symbols show scores of individual subjects.

In contrast, after excitotoxic lesions of VLPFC, monkeys exhibited a profound deficit in learning probabilistic stimulus–outcome associations. The deficit was most prominent when the image associated with the highest probability of reward switched at the midpoint of the session (Fig. 2A). Estimating the best choice on each trial of schedule 2 with a reinforcement learning model further revealed that, after VLPFC lesions, monkeys were less likely to choose the option associated with the highest probability of reward in both the first and second 150 trials [Fig. 2B, effect of surgery, F(1,3)=12.42, p<0.05; phase by surgery interaction, F(1,3)=1.95, p>0.25]. This effect of VLPFC lesions on learning was observed across all of the schedules that the monkeys completed [Fig. 3A, Figs S2 and S3, effect of surgery, F(1,3)=10.14, p=0.05; surgery by phase interaction, F(1,3)=0.22, p>0.6], with one exception: schedule 1 [surgery by schedule by phase interaction, F(1,3)=7.77, p<0.05]. In the first 150 trials of this schedule, one option had a much higher probability of reward than the other options (Fig. 1B), and in this situation VLPFC lesions did not affect the ability of monkeys to learn which option was associated with the highest probability of reward (effect of surgery, first 150 trials, F(1,3)=2.64, p>0.2). Overall, this analysis indicates that VLPFC lesions impair learning of probabilistic associations especially when the differences between options are small, and have less influence when there is one clearly good option.

Previous reports have interpreted the effects of OFC lesions in terms of a perseverative impairment related to the loss of inhibitory control (Rolls et al., 1994; cf. Walton et al., 2010), and cortex in the human inferior frontal gyrus has also been associated with inhibitory control (Aron et al., 2004). We therefore examined whether lesions of VLPFC or OFC resulted in perseveration, i.e., a decrease in the likelihood of switching choices. On schedule 2, monkeys with VLPFC lesions showed the opposite of perseveration: they were much more likely to change their choice from one trial to the next than before surgery [effect of surgery, F(1,3)=45.53, p<0.01, Fig. 2C, bottom]. In contrast, monkeys with OFC lesions did not differ from controls in this regard [effect of group, F(1,10)=0.07, p>0.8, Fig. 2C, top].

To further probe this effect, we evaluated the influence of positive (reward) and negative (no reward) feedback on subsequent choices across all schedules. Unoperated controls and monkeys with OFC lesions showed a similar pattern of behavior; both groups were less likely to switch choices after a rewarded choice (positive feedback) than after an unrewarded one [Fig. 3B, top row, effect of group, F(1,10)=0.04, p>0.8; effect of reward, F(1,10)=225.56, p<0.001]. Following VLPFC lesions, however, the effect of positive feedback on choice was reduced [Fig. 3B, effect of surgery, F(1,3)=9.05, p=0.057]. Thus, the deficit in monkeys with VLPFC lesions appears to be characterized by an inability to assign feedback to the previously chosen stimulus.
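The switch measures in Figs 2C and 3B can be computed directly from the sequence of choices and outcomes. A minimal sketch, assuming choices are coded as stimulus indices and outcomes as 1 (reward) or 0 (no reward); the function names are hypothetical:

```python
def switch_rate(choices):
    """Proportion of consecutive trial pairs on which the chosen stimulus changed."""
    pairs = list(zip(choices[:-1], choices[1:]))
    return sum(prev != cur for prev, cur in pairs) / len(pairs)

def switch_given_outcome(choices, rewards):
    """Return (P(switch | previous trial rewarded), P(switch | previous trial unrewarded))."""
    tallies = {1: [0, 0], 0: [0, 0]}  # previous outcome -> [switches, opportunities]
    for prev_c, prev_r, cur_c in zip(choices[:-1], rewards[:-1], choices[1:]):
        tallies[prev_r][1] += 1
        tallies[prev_r][0] += int(cur_c != prev_c)

    def ratio(switches, n):
        return switches / n if n else float("nan")

    return ratio(*tallies[1]), ratio(*tallies[0])
```

In these terms, a reduced effect of positive feedback shows up as P(switch | rewarded) moving closer to P(switch | unrewarded).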

To directly test this hypothesis, we conducted a logistic regression analysis to assess how monkeys used the outcomes that they received for choosing a particular option on each trial, either reward or no reward, to guide future choices. This analysis goes beyond those conducted above because it allows us to determine not just the effect of the most recent choice and outcome, but also the longer-term effects of reward history and choice history on current choices. Our analysis was identical to the one conducted on the choice behavior of monkeys with aspiration lesions of the OFC (Walton et al., 2010) and was conducted on choices and outcomes from all four schedules. To specifically look at how choice and outcome history influenced behavior, this analysis included all possible combinations of choices and outcomes (i.e., whether or not monkeys received a reward) from the five preceding trials (n-1 to n-5, Fig. 4A). We also included trial n-6 in the analysis to account for the confounding influence of longer choice and reward histories (see STAR Methods for full details).
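The regression can be sketched as follows, in the spirit of Walton et al. (2010) but not a reproduction of the authors' code. For one stimulus at a time, whether it is chosen on trial n is regressed on the 25 products chose(n−i) × rewarded(n−j) for i, j = 1..5, where chose(n−i) indicates that this stimulus was chosen on trial n−i and rewarded(n−j) indicates that a reward was delivered on trial n−j. The n−6 confound regressors are omitted for brevity, and the fit uses plain Newton–Raphson with a small ridge term, which is our assumption rather than the published procedure; all function names are hypothetical.

```python
import numpy as np

def history_design(chose_opt, rewarded, max_lag=5):
    """Build X = [intercept, chose_opt[t-i] * rewarded[t-j] for i, j in 1..max_lag].

    Choice lag i is the outer loop and reward lag j the inner loop, so the
    weights reshape into a [choice lag, reward lag] matrix.
    """
    chose_opt = np.asarray(chose_opt, dtype=float)
    rewarded = np.asarray(rewarded, dtype=float)
    rows, y = [], []
    for t in range(max_lag, len(chose_opt)):
        feats = [chose_opt[t - i] * rewarded[t - j]
                 for i in range(1, max_lag + 1)
                 for j in range(1, max_lag + 1)]
        rows.append([1.0] + feats)
        y.append(chose_opt[t])
    return np.array(rows), np.array(y)

def fit_logistic(X, y, ridge=1e-3, n_iter=50):
    """Ridge-penalized logistic regression fit by Newton-Raphson."""
    w = np.zeros(X.shape[1])
    penalty = ridge * np.eye(X.shape[1])
    for _ in range(n_iter):
        z = np.clip(X @ w, -30.0, 30.0)  # avoid overflow in exp
        p = 1.0 / (1.0 + np.exp(-z))
        grad = X.T @ (y - p) - penalty @ w
        hess = X.T @ (X * (p * (1.0 - p))[:, None]) + penalty
        w = w + np.linalg.solve(hess, grad)
    return w

def beta_matrix(w, max_lag=5):
    """Drop the intercept; rows = choice lag (n-1 first), columns = reward lag."""
    return w[1:].reshape(max_lag, max_lag)
```

In practice, one might run this separately for each of the three stimuli and average the resulting matrices to obtain a single matrix per monkey.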

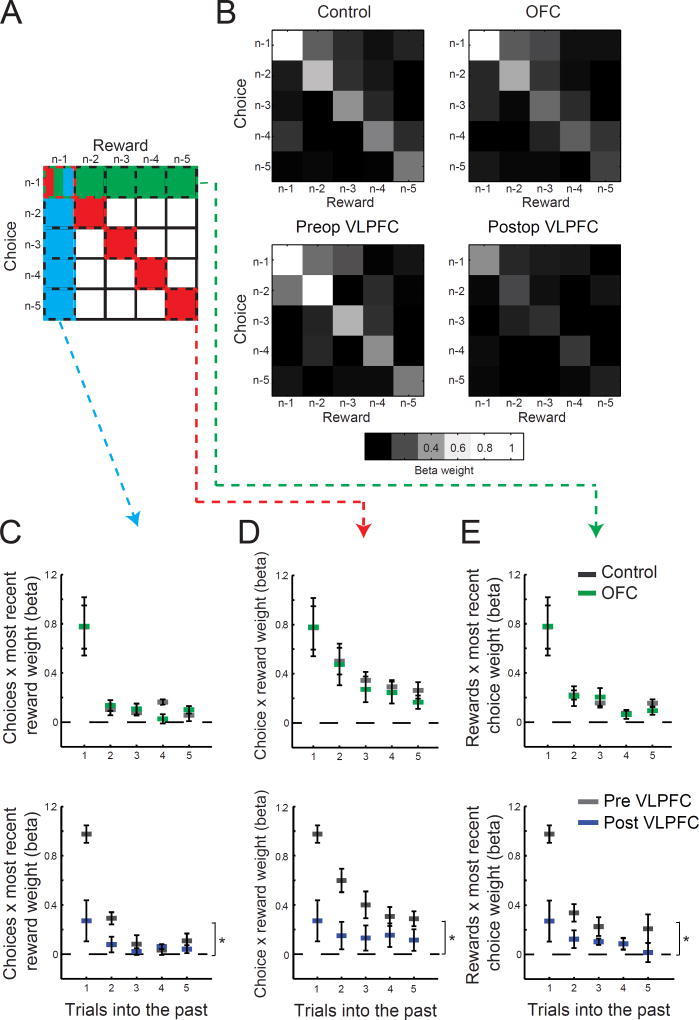

Figure 4. VLPFC, but not OFC, lesions disrupt contingent and noncontingent learning.

A) Schematic of the full matrix of five previous choices and corresponding rewards received for those choices. The matrix components highlighted in red along the diagonal represent the influence of previous choices and their contingent rewards on subsequent choices. Components highlighted in green represent the influence of rewards from the previous five trials and the most recent choice on subsequent choices, whereas those highlighted in blue represent the influence of the five previous choices and the reward from the previous trial on subsequent choices. B) Matrix plots showing the influence (beta weights from logistic regression) of all combinations of the five previous choices and rewards on subsequent choice for control monkeys (n = 8), monkeys with OFC lesions (top row, n = 4), and monkeys before and after VLPFC lesions (bottom row, n = 4). Lighter shading is associated with higher beta weights. C–E) Raw beta weights from the matrix for controls (gray, n = 8) and monkeys with OFC lesions (green, top row, n = 4) and monkeys before (gray) and after VLPFC lesions (blue, bottom row, n = 4). * p<0.05.

By computing the influence of all combinations of choices and outcomes from the recent past in this way, we were able to probe monkeys’ ability to credit an outcome to the choice made directly before it. This type of learning is often referred to as “contingent learning”, in the sense that a causal association is made between a particular choice and its contingent outcome ("Law of Effect", Thorndike, 1933). In such learning, positive outcomes that follow a choice increase the likelihood of that choice being repeated, and negative outcomes decrease it. In Figure 4A, higher weightings on the diagonal of the matrix of past choices and outcomes would indicate that monkeys are learning contingently (Fig. 4A, red squares in the matrix). In addition, this approach allowed us to probe noncontingent learning mechanisms (“Spread of Effect”, Thorndike, 1933): how past outcomes can influence choices that were made nearby in time but did not causally lead to those outcomes. In Figure 4A, noncontingent learning is associated with higher weightings in off-diagonal parts of the matrix, most notably along the vertical or horizontal line through the previous trial, corresponding to the influence of previous choices and of previous rewards, respectively (Fig. 4A, blue and green squares in the matrix).
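Reading the three summaries plotted in Fig. 4C–E off such a matrix is a matter of indexing. A minimal sketch, assuming a square matrix whose rows index the lag of the choice (n-1 first) and whose columns index the lag of the reward; this orientation and the function name are our assumptions, and the published figure may draw the axes the other way round.

```python
def split_history_matrix(B):
    """Split a square beta matrix B[choice_lag][reward_lag] (lag n-1 first) into
    the contingent diagonal and the two noncontingent slices through trial n-1."""
    n = len(B)
    contingent = [B[k][k] for k in range(n)]                # red: choice n-k with its own outcome
    past_choices_last_reward = [B[i][0] for i in range(n)]  # blue: earlier choices x reward on n-1
    last_choice_past_rewards = [B[0][j] for j in range(n)]  # green: choice on n-1 x earlier rewards
    return contingent, past_choices_last_reward, last_choice_past_rewards
```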

The choices of unoperated monkeys, both control and preoperative monkeys, were strongly influenced by recently chosen stimuli and the outcome, either rewarded or unrewarded, associated with each of those choices, as evidenced by the higher weightings on the diagonal of the matrix of past choices and rewards (Fig. 4A, red shading, 4B, left side, and 4D). Such a pattern indicates that monkeys were making contingent associations between their specific choices and subsequent outcomes. This effect diminished with increasing distance from the current trial, suggesting that monkeys preferentially used the most recent feedback to guide future choices (effect of trial; unoperated controls, F(5,35)=12.65, p<0.01; preop VLPFC lesion monkeys, F(5,15)=27.74, p<0.001). In keeping with the findings of Walton et al. (2010), there was also evidence of monkeys learning from noncontingent choices and outcomes, as evidenced by higher weightings in matrix squares away from the diagonal (Fig. 4B). Specifically, there was an influence of recent rewards on previous choices [Fig. 4A, blue shading; Fig. 4C, controls and preop VLPFC effect of trial Fs>10, p<0.01] as well as an influence of previous rewards on recent choices [Fig. 4A, green shading; Fig. 4E, Fs>14, p<0.005], and both affected subsequent choices.

Monkeys with lesions of OFC exhibited a pattern almost identical to that of the unoperated control monkeys; their current choices were strongly influenced by previous choices and their contingent outcomes (compare left and right of top part of Fig. 4B, and see also top of Fig. 4D). Not only were these monkeys able to use contingent associations between choices and outcomes to guide subsequent choices [unoperated controls vs OFC, effect of group or group by trial interaction, Fs<0.3, p>0.6, Figs 4B and D, top row], but their choices were also influenced by noncontingent associations [either comparison effect of group or group by trial interaction, Fs<1, p>0.6, Figs 4C and E, top row]. This pattern of results suggests that both contingent and noncontingent learning mechanisms were intact in monkeys with excitotoxic lesions of OFC.

In contrast, monkeys with lesions of VLPFC had a profound impairment in contingent learning (Figs 4B and D, bottom row). The association between previous choices and the outcomes that contingently followed had virtually no influence on monkeys’ subsequent choices [preop vs postop VLPFC, surgery by trial effect, F(5,15)=6.94, p<0.01; postoperative VLPFC, effect of trial, F(5,15)=1.61, p>0.25, Fig. 4D, bottom row]. Lesions of VLPFC also affected noncontingent learning mechanisms. This was true both for associations between previous choices and the most recent outcome [Fig. 4C, surgery by trial interaction, F(5,15)=10.81, p<0.01] and for associations between the most recent choices and previous outcomes [Fig. 4E, surgery by trial interaction, F(5,15)=5.76, p<0.01].

In three additional experiments we confirmed that: (1) the deficit exhibited by monkeys with VLPFC lesions on the three-choice probabilistic learning task was stable over time and could not be attributed to the order of testing [retest more than a year after the initial lesion, contingent learning – preop versus postop test 2, test by trial effect, F(5,15)=9.4, p<0.001, Supplemental Information, Fig. S4]; (2) the deficit was not simply due to an inability to flexibly alter stimulus–outcome associations, as indexed by the good performance of this group on an object discrimination reversal learning task with deterministic feedback [Supplemental Information, effect of group, F(1,10)=0.06, p>0.8, Fig. S5]; and (3) monkeys with VLPFC lesions were able to learn the prevailing stimulus–outcome associations when the three options were set at the extreme probabilities (1.0, 0.0, 0.0) and these probabilities were stable over trials (Fig. S6; see also Fig. 6A).

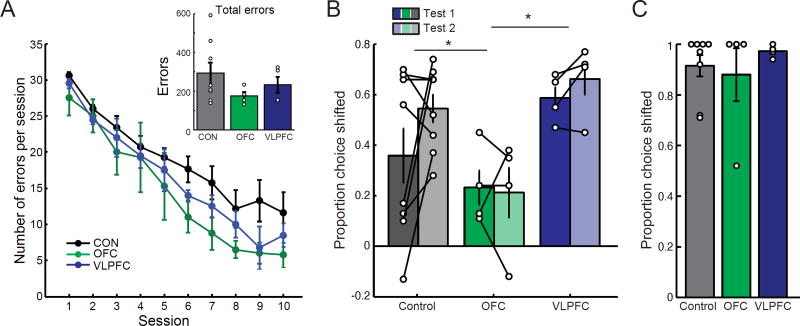

Figure 6. OFC lesions, but not VLPFC lesions, disrupt the ability to choose according to outcome value on the reinforcer devaluation task.

A) Mean (±SEM) number of errors for each group during the first 10 sessions of the 60-pair discrimination learning. Inset shows the total errors to criterion for unoperated controls (n = 8), monkeys with OFC lesions (n = 4), and monkeys with VLPFC lesions (n = 4). B–C) Mean (±SEM) proportion shifted for unoperated controls (gray bars, n = 8), monkeys with OFC lesions (green bars, n = 4), and monkeys with VLPFC lesions (blue bars, n = 4) during (B) the two reinforcer devaluation tests and (C) the control test, in which only foods (no objects) were presented for choice. In all plots, symbols show scores of individual subjects. * p<0.05.

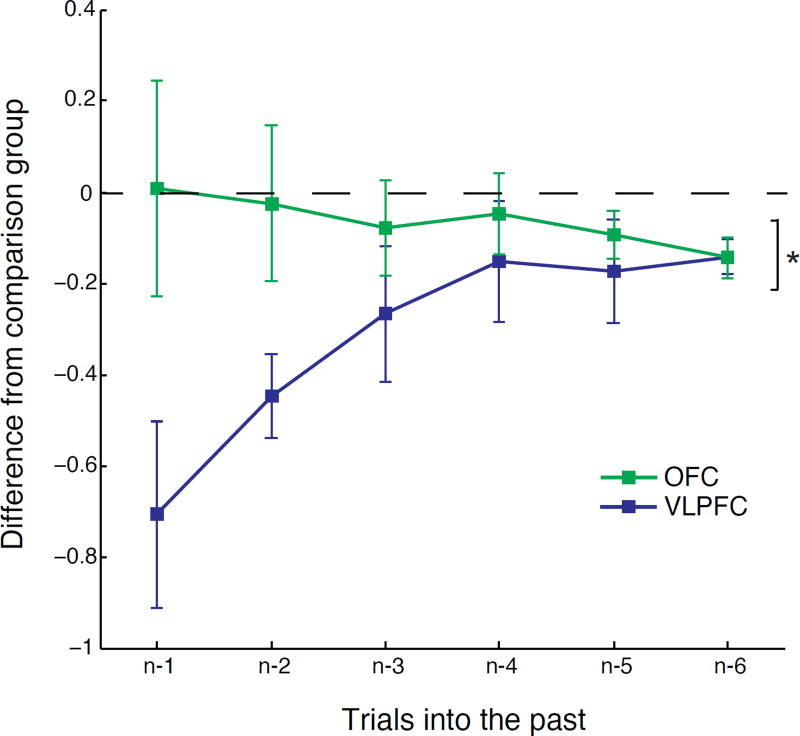

Finally, to confirm that there was a dissociation between monkeys with OFC and VLPFC lesions in the ability to contingently associate choices and outcomes we conducted an additional analysis directly comparing performance. To account for the additional training in the VLPFC group, we computed difference scores based on the beta weights from the logistic regression that reflect contingent associations (red squares in Fig. 4A) as follows: for monkeys with excitotoxic OFC lesions, the control group mean was subtracted from each OFC lesion monkey’s individual score, whereas for monkeys that received VLPFC lesions, difference scores were computed as the difference between each subject’s preoperative and postoperative test scores. Comparison of these difference scores revealed that monkeys with VLPFC lesions differed from monkeys with OFC lesions (Fig. 5, difference score OFC vs VLPFC, group by trial interaction, F(5,30)=5.05, p<0.005). Taken together, these data show that VLPFC, but not OFC, is required for choosing the best option when choices are guided by reward probability.
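The two difference-score definitions can be sketched as follows, assuming each monkey's contingent-learning betas (the diagonal of the matrix) are stored as a list ordered by lag, n-1 first; the function names are hypothetical. Negative values indicate a decrease relative to the control mean or to the monkey's own preoperative score.

```python
from statistics import mean

def ofc_difference_scores(ofc_subjects, control_subjects):
    """Each OFC monkey's per-lag beta minus the control-group mean at that lag."""
    n_lags = len(control_subjects[0])
    control_mean = [mean(s[k] for s in control_subjects) for k in range(n_lags)]
    return [[s[k] - control_mean[k] for k in range(n_lags)] for s in ofc_subjects]

def vlpfc_difference_scores(preop_subjects, postop_subjects):
    """Postoperative minus preoperative per-lag beta for each VLPFC monkey."""
    return [[post - pre for pre, post in zip(pre_s, post_s)]
            for pre_s, post_s in zip(preop_subjects, postop_subjects)]
```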

Figure 5. Direct comparison of contingent learning in monkeys with OFC and VLPFC lesions.

Mean (±SEM) contingent learning difference score for monkeys with OFC (green, n = 4) and VLPFC lesions (blue, n = 4). For each subject, we computed difference scores based on the beta weights from the logistic regression that reflect contingent associations (red cells in Fig. 4A) as follows: for monkeys with excitotoxic OFC lesions, the control group mean was subtracted from each OFC lesion monkey’s individual score, whereas for monkeys that received VLPFC lesions, difference scores were computed as the difference between each subject’s preoperative and postoperative test scores. Negative scores reflect a decrease in performance relative to controls or to preoperative data. * p<0.05.

Experiment 2: Updating the desirability of predicted outcomes

To determine how OFC and VLPFC contribute to choices based on desirability, monkeys were tested on a stimulus-based reinforcer devaluation task (Malkova et al., 1997). This task measures the ability of monkeys to choose between visual stimuli associated with different food rewards based on current biological needs. In the probabilistic learning task, the history of choices and outcomes provides information about the best option, while the value of the outcome (a single food pellet) remains stable. In the devaluation task, by contrast, the current value of the food outcome guides choices between visual stimuli.

In this experiment, we used three-dimensional objects as visual stimuli. Over a number of weeks, monkeys learned to discriminate 60 pairs of objects for food reward. One of the objects in each pair was always rewarded with either food 1 (e.g., peanuts, 30 objects) or food 2 (e.g., M&Ms, 30 objects). Despite a profound impairment in learning probabilistic associations, monkeys with VLPFC lesions learned to discriminate the object pairs at the same rate as controls and monkeys with OFC lesions [effect of group, F(2,13)=2.03, p>0.1, Fig. 6A].

We then employed a selective satiation procedure intended to devalue one of the two foods and tested whether monkeys were able to shift their choices of objects to obtain the higher-value outcome. Specifically, following the selective satiation procedure, monkeys were presented with pairs of objects, one associated with food 1 and the other with food 2. The effects of devaluation were quantified by calculating the extent to which monkeys shifted their choices toward objects associated with the higher-value food, relative to baseline choices. A higher proportion of shifted choices reflects greater sensitivity to the current value of the foods, as updated by recent, selective satiation. For example, a proportion shifted score of 0 corresponds to no change in object choice, whereas a score of 1 corresponds to all object choices being shifted away from the devalued food. Two tests (Test 1 and Test 2) were conducted approximately a month apart. Each test comprised choices made after food 1 and after food 2 were devalued, each assessed in a separate session.
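The exact formula for the proportion shifted score is not given here. One normalization consistent with the two anchor points described above (0 for no change, 1 when every choice moves away from the devalued food) is sketched below; it is an assumption for illustration, not necessarily the published computation, and the function name is hypothetical.

```python
from statistics import mean

def proportion_shifted(baseline, after_devaluation):
    """Hypothetical devaluation score, averaged over foods.

    baseline[f]:          proportion (0..1) of object choices of objects paired with
                          food f in baseline sessions without satiation
    after_devaluation[f]: the same proportion after food f was devalued
    Per food, (baseline - after) / baseline is 0 for no change and 1 when no
    object paired with the devalued food is chosen.
    """
    scores = []
    for food, base in baseline.items():
        if base <= 0:
            raise ValueError(f"baseline choice proportion for {food!r} must be > 0")
        scores.append((base - after_devaluation[food]) / base)
    return mean(scores)
```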

Both unoperated control monkeys and monkeys with lesions of VLPFC were able to update and use the current biological value of food rewards to guide their choices (Fig. 6B). In contrast, monkeys with lesions of OFC chose objects associated with the sated food at a much higher rate, as reflected by a lower proportion of shifted choices [effect of group, F(2,13)=4.59, p=0.031; post hoc LSD, control vs OFC: p=0.044; OFC vs VLPFC: p=0.011; VLPFC vs control: p=0.258]. Because monkeys with VLPFC lesions were tested both before and after surgery, we also compared their pre- and postoperative performance. This comparison confirmed that VLPFC lesions did not affect the ability to update the value of a specific food reward to guide choices [effect of surgery, F(1,3)=0.41, p>0.8].

A control test revealed that, when given the opportunity to make visual choices between two foods after selective satiation, monkeys consistently chose the higher-value (nonsated) food [Fig. 6C, effect of group, F(2,13)=0.315, p=0.735]. Thus, the deficit in monkeys with OFC lesions was due to an inability to link objects with the current value of the food (or some feature of the food), as opposed to an inability to discriminate the foods or a disruption of satiety mechanisms. In summary, lesions of OFC, but not of VLPFC, affected the ability to use the current, updated desirability of a predicted outcome to guide choices.

Comparison of performance in Experiments 1 and 2

Finally, to provide strong evidence for a double dissociation of function between OFC and VLPFC, we directly compared performance across the two tasks. We conducted an ANOVA using the difference scores for the two most recent trials (n-1 and n-2) from the direct comparison of contingent learning in the OFC and VLPFC groups in the three-choice learning task (Fig. 5) and the proportion shifted scores from the two devaluation tests (Fig. 6B). This analysis confirmed a double dissociation of function between VLPFC and OFC on the three-choice probabilistic learning and reinforcer devaluation tasks [task by group interaction, F(1,6)=13.86, p<0.05].
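With one two-level within-subject factor (task) and one two-level between-subject factor (group), the task by group interaction F equals the square of a pooled-variance independent-samples t statistic computed on each subject's task difference, with degrees of freedom (1, n₁ + n₂ − 2); for two groups of four this gives (1, 6), as reported above. The sketch below shows only that identity. The inputs are hypothetical per-subject pairs, and how the two tasks' scores were placed on a common scale is not described here and is not addressed by the code.

```python
import math
from statistics import mean, variance

def interaction_F(group_a, group_b):
    """Task x group interaction for a 2 (task, within) x 2 (group, between) design.

    group_a, group_b: lists of (task1_score, task2_score), one pair per subject.
    Returns (F, df1, df2), computed as t**2 from a pooled-variance t-test on
    the per-subject differences task1 - task2.
    """
    diffs_a = [t1 - t2 for t1, t2 in group_a]
    diffs_b = [t1 - t2 for t1, t2 in group_b]
    n_a, n_b = len(diffs_a), len(diffs_b)
    pooled_var = ((n_a - 1) * variance(diffs_a) + (n_b - 1) * variance(diffs_b)) / (n_a + n_b - 2)
    se = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t = (mean(diffs_a) - mean(diffs_b)) / se
    return t * t, 1, n_a + n_b - 2
```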

DISCUSSION

The present findings reveal selective and independent contributions of two parts of the granular prefrontal cortex (PFC) in primates. In Experiment 1 we found that VLPFC, but not OFC, is necessary for updating representations of stimulus–outcome probabilities (Figs 2–5). In Experiment 2 we found that OFC, but not VLPFC, is necessary for updating representations of stimulus–outcome desirability based on current biological states and needs (Fig. 6B). Taken together, our findings indicate that although both VLPFC and OFC guide choices based on representations of outcome values, they contribute to updating these representations in different ways. VLPFC is critical for guiding choices based on updated outcome probability, a property that reflects the potential availability of beneficial outcomes, whereas OFC is necessary for guiding choices based on current biological value, a property that reflects the desirability of a specific outcome.

VLPFC

Neurons in VLPFC, especially those in area 12, encode different aspects of outcomes during decision-making, including risk (Kobayashi et al., 2010), and a number of studies have suggested that neural activity in this area is linked to external task variables or attentional processes (Kennerley and Wallis, 2009; Rich and Wallis, 2014). Consistent with this idea, fMRI studies in macaques have reported activations in VLPFC that reflect stimulus value in a two-choice probabilistic learning task (Kaskan et al., 2016). Furthermore, activations in VLPFC encode adaptive responding (a win-stay, lose-shift strategy) in the context of object reversal learning (Chau et al., 2015).

A straightforward account of the impairment on the three-choice probabilistic learning task is that VLPFC is important for associating, at the time of feedback, particular visual stimuli (or the choice of a given stimulus) with the outcome that occurs on a specific trial. Walton et al. (2010) referred to these contingent associations in terms of credit assignment, suggesting that OFC is necessary for updating valuations based on memories of individual events. Although the concept of credit assignment has several variants, Walton et al. emphasized the correct attribution of a beneficial outcome to the stimulus or choice. On the basis of our results, we embrace many of their conclusions but substitute VLPFC for OFC, and the same substitution probably applies to human performance on probabilistic reward tasks as well (Camille et al., 2011; Hornak et al., 2004).

VLPFC lesions affected both contingent and noncontingent learning (Fig. 4), but only under conditions of dynamic, stochastic stimulus–outcome associations. When the association between stimuli and outcomes was deterministic (Fig. 6A) or static (Fig. S5), or when there was clearly a best option (first 150 trials of Schedule 1, Fig. S2), monkeys with VLPFC lesions were not impaired. This was true even when such deterministic associations between stimuli and outcomes were reversed (Fig. S5). This latter finding means that neither OFC nor VLPFC is required for object discrimination reversal learning in macaques. It follows that the “classic” impairment seen after aspiration lesions of OFC is not due to damage to a single area but is likely caused by disconnection of a number of areas from PFC, potentially including medial striatum, mediodorsal thalamus, and/or neuromodulatory systems (Clarke et al., 2004; Clarke et al., 2008; Groman et al., 2013; Iversen and Mishkin, 1970; Roberts et al., 1990).

We also note that our findings are qualitatively and quantitatively different from those following excitotoxic VLPFC lesions in marmosets (Rygula et al., 2010). Specifically, Rygula et al. (2010) reported that VLPFC was required for reversing new postoperatively acquired associations, but not associations learned before lesions, in a deterministic reversal learning task. Because the deficits in marmosets are seen only during the reversal phase of the task, they are clearly different from what we report here: VLPFC lesions disrupted probabilistic learning before any reversal in stimulus–reward contingencies. Further, the findings of Rygula and colleagues would predict that VLPFC lesions should degrade object discrimination reversal learning performance when monkeys had to learn and reverse associations with novel stimuli; however, we observed no deficit in this situation (Fig. S5).

It is more difficult to explain why the effects of VLPFC lesions differ between macaques and marmosets, given that both are primates with a comparably differentiated prefrontal cortex (Burman and Rosa, 2009; Carmichael and Price, 1994). We note that VLPFC is one of the brain areas where the greatest differential expansion has occurred between these two lineages (Chaplin et al., 2013). One possibility is that, since their last common ancestor more than 30 million years ago, VLPFC has developed divergent functions in macaques and marmosets, partly as a consequence of independent and differential expansion and partly as a consequence of corresponding changes in anatomical connections. This possibility is bolstered by the finding that an expansion of prefrontal cortex occurred independently in macaques more recently than 15 million years ago (Gonzales et al., 2015). A related possibility is that the foraging niches of the two species have driven these areas to subserve divergent functions. Common marmosets (Callithrix jacchus) primarily eat tree sap and insects, foods that require more localized foraging in home ranges of 1–6 hectares (Hubrecht, 1985; Scanlon, 1989). Rhesus macaques (Macaca mulatta), in contrast, feed on seeds, bark, cereals, buds, and fruit, which requires more distant foraging. Consequently, their home ranges are much larger than those of marmosets: up to 1,500 hectares (Lindburg, 1971). It is possible that this difference in foraging range placed dissimilar selective pressures on VLPFC in macaques and marmosets, a point we take up later.

Although our results from VLPFC lesions resemble most of the effects that Walton et al. (2010) attributed to OFC lesions, they differ with respect to noncontingent learning. Their aspiration lesions of OFC affected contingent learning, but left noncontingent mechanisms intact. In contrast, our VLPFC lesions affected both contingent and noncontingent learning (Fig. 4). One possible account for this difference is that their aspiration lesions of OFC only disrupted fibers connected to VLPFC that coursed through the uncinate fascicle, and left intact the gray matter of VLPFC as well as many of its connections. Accordingly, the remaining functionality of VLPFC might have been sufficient to support noncontingent learning.

Additional possible explanations involve differences in task parameters: the intertrial interval was slightly longer in our experiment than in that of Walton et al. (5 vs 2 seconds), and in our experiment the chosen stimulus was not re-presented alone after the choice. We consider these differences unlikely to account for the discrepancy in findings on noncontingent learning, but they merit further investigation because, if they did contribute, they would suggest a mnemonic component to the deficit following VLPFC lesions.

Our conclusions about the role of VLPFC agree with the known anatomical connections of this area, which receives highly processed visual information from inferior temporal cortex (IT) as well as inputs from the amygdala, OFC and other outcome-related structures (Carmichael and Price, 1995a, b). On this view, VLPFC underlies the ability to link the kinds of representations housed in IT—mid-level visual feature conjunctions of color, shape, glossiness, translucence and texture—with the memory of an outcome that appeared to be caused by the choice of a stimulus that had these features.

VLPFC may also contribute to probabilistic learning through its role in top-down selective attention. VLPFC damage has been linked to reduced attentional selection, as evidenced by impairments in shifting between stimulus dimensions (Buckley et al., 2009; Dias et al., 1996), reduced performance on tasks requiring allocation of attention to specific visual cues (Rossi et al., 2007; Rushworth et al., 2005), and poor implementation of vision-based rules in the absence of either discrimination or working memory impairments (Baxter et al., 2009; Bussey et al., 2001; Rushworth et al., 1997). Accordingly, the impairment we observed on the 3-choice probabilistic learning task could result from a deficit in attentional selection, in learning, or in some combination of the two. A recent study in humans supports the idea that VLPFC plays a role in attentional selection (Vaidya and Fellows, 2016).

Notably, activation related to the win-stay, lose-shift rule in macaques was found in a relatively restricted region of VLPFC immediately lateral to the lateral orbital sulcus, in area 12o (Chau et al., 2015). As Fig. 1 shows, this area was included in our VLPFC lesion, although in a descriptive sense it lies mostly on the orbital surface of the primate frontal lobe. Thus, including area 12o as part of OFC could lead to conclusions about OFC that differ from the ones advanced here. In prior work, OFC has usually been defined as areas 11, 13 and 14, and we adhere to that view here. However, if a part of VLPFC, area 12o, is included in OFC, then conclusions about the functional specializations of OFC will need to be adjusted to take this redefinition into account. We do not know which parts of our VLPFC lesions caused the impairment reported here, and additional work might be directed to a more precise identification of the crucial region or regions.

Granular OFC

A number of neurophysiological studies have shown that OFC neurons carry signals related to previous choices, outcome history, or both (Kennerley et al., 2011; Simmons and Richmond, 2008; Tsujimoto et al., 2009). The findings reported here indicate that these signals are not necessary for learning about stimulus–outcome probabilities. Instead of OFC, VLPFC is required for updating these representations. Our current findings augment those already in the literature on OFC by demonstrating intact learning of a 3-choice probabilistic stimulus–outcome task after complete, excitotoxic OFC removals, as well as by showing impaired devaluation-based choice shifts in the same group of monkeys. This pattern of spared and impaired abilities after excitotoxic OFC lesions helps establish a double dissociation of function between OFC and VLPFC.

The performance of one of the monkeys with an OFC lesion (case 1) differed from that of the others in the group on the three-choice probabilistic learning task (Figs 2–3, S2–3). However, although this subject differed from the other monkeys that received excitotoxic OFC lesions, it rarely scored outside the range of the unoperated controls. Further, there was no relation between lesion volume and performance, again suggesting that the poor performance of this monkey was not related to the OFC lesion.

VLPFC–OFC cooperativity

The separate processing of outcome availability and desirability in VLPFC and OFC, respectively, has implications for models of PFC function during choice behavior. Notably, our previous work shows that, within OFC, lateral areas 11 and 13, not medial area 14, are essential for registering changes in the value of outcomes (Rudebeck and Murray, 2011). In addition, medial PFC, including medial OFC (area 14) and medial frontal pole cortex (the medial part of area 10), is involved in comparing different options for choice (Blanchard et al., 2015; Fellows and Farah, 2007; Noonan et al., 2010; Rudebeck and Murray, 2011). Accordingly, one possibility is that medial PFC receives converging signals from VLPFC and OFC. The former could convey information about outcome probabilities; the latter would provide information about the current desirability of a specific outcome. The combination of these types of information, along with other valuation-related variables such as magnitude and effort costs, could then guide foraging choices. In line with this idea, medial PFC receives projections from both OFC and VLPFC (Carmichael and Price, 1996). In addition, fMRI studies in macaques and humans have found activations in medial PFC that are modulated not only by outcome contingency (Kaskan et al., 2016; Tanaka et al., 2008), delays in receiving an outcome (Kable and Glimcher, 2007) and the current biological value of outcomes (Howard and Kahnt, 2017), but also by the comparison between alternative outcomes (Boorman et al., 2009).

Interpretational limitations

As is always the case in lesion experiments, the interpretation of results can be compromised by neuroplastic adaptations in remaining brain areas and connections. However, the effects of VLPFC lesions were evident more than a year after surgery (Fig. S4), and our earlier work showed that lesions of OFC produce enduring effects on the devaluation task (Rhodes and Murray, 2013; Rudebeck et al., 2013). The remaining brain structures that contribute to updating outcome valuations, whether of desirability or availability, appear to have little, if any, ability to compensate for the loss of representations established, updated and maintained by neuronal networks that depend on either OFC or VLPFC. As such, these parts of the granular PFC seem to provide a significant advantage over the remainder of the brain. We close with a consideration of this topic.

Comparative analysis

Comparative neuroanatomy indicates that granular OFC and VLPFC arose at different times during primate evolution (Preuss and Goldman-Rakic, 1991), and they have different connectional fingerprints (Neubert et al., 2015; Neubert et al., 2014; Passingham et al., 2002). According to Preuss and Goldman-Rakic, granular OFC emerged early in primate evolution, and it connects preferentially with perirhinal cortex and agranular OFC (Kondo et al., 2005; Saleem et al., 2008). The former provides it with visual representations at the level of whole objects, the latter with olfactory, gustatory and visceral signals (Carmichael and Price, 1996). These inputs suggest that granular OFC represents conjunctions of outcome features, such as visual appearance and taste, an assumption confirmed by neurophysiological studies in macaque monkeys (Rolls and Baylis, 1994). This enhanced capacity, and especially the contribution from fine-grained visual features of outcomes, probably provided early primates with a selective advantage in making local foraging choices.

In contrast to the emergence of granular OFC in early primates, VLPFC evolved later, sometime during anthropoid evolution (Preuss and Goldman-Rakic, 1991). Rather than with perirhinal cortex, VLPFC is preferentially connected with IT (Kondo et al., 2005; Saleem et al., 2014), which supplies it with visual signals at a level of the hierarchy between that of whole objects and that of low-order feature conjunctions or elemental features. Accordingly, VLPFC probably provided a selective advantage for foraging choices made at a distance, a mode of decision-making that became especially important as anthropoids became large, far-ranging animals (Murray et al., 2017). As we noted earlier, modern macaques differ from marmosets in that the former forage over large home ranges whereas the latter forage locally. When foraging at a distance, information about a resource’s fine-grained visual properties, smell, and taste is less important (due to distance) or unavailable. A role for VLPFC in representing reward probability may have arisen because this area provided an advantage in estimating resource availability at distant locations, based on visual signals from IT or acoustic signals from the superior temporal cortex.

STAR METHODS

Contact for reagent and resource sharing

Further information and requests for resources should be directed to and will be fulfilled by the Lead Contact, Dr. Peter H. Rudebeck (peter.rudebeck@mssm.edu).

EXPERIMENTAL MODEL AND SUBJECT DETAILS

Sixteen adult rhesus monkeys (Macaca mulatta), 14 male and 2 female, served as subjects. Monkeys weighed between 5.1 and 10.0 kg, and all were at least 4.5 years old at the start of testing. Each animal was individually or pair housed, was kept on a 12-h light/dark cycle and had access to water 24 hours a day. All experiments were conducted during the light phase. For the first experiment, four monkeys sustained bilateral excitotoxic lesions of OFC and eight were retained as unoperated controls (CON). For the second experiment, four monkeys that had previously served as unoperated controls received bilateral excitotoxic lesions of VLPFC and were retested on the 3-choice probabilistic learning task. For the reinforcer devaluation task, eight monkeys served as unoperated controls; four had been tested on the three-choice probabilistic learning task, and the other four had not. Data from the monkeys with excitotoxic lesions of OFC on the devaluation task have previously been reported (Rudebeck et al., 2013). Monkeys were randomly assigned to each group. The order in which tasks were administered is shown in Figure S7. No statistical test was run to determine the sample size a priori; the sample sizes we chose are similar to those used in previous publications. All procedures were reviewed and approved by the National Institute of Mental Health (NIMH) Animal Care and Use Committee.

METHOD DETAILS

Apparatus and materials

All apparatus and materials were identical to those described in previous reports on the effects of lesions within the macaque OFC on probabilistic learning and reinforcer devaluation tasks (Izquierdo and Murray, 2007; Izquierdo et al., 2004, 2005; Rudebeck and Murray, 2011).

For the probabilistic learning task, monkeys sat in primate chairs in front of a touch sensitive monitor on which visual stimuli could be presented and monkeys’ choices recorded. Reward pellets (190 mg Noyes pellets) were delivered from an automated food dispenser (MED Associates) into a centrally located cup. A computer running custom software (Ryklin Software, New York, USA) controlled stimulus presentation, timing, contingency, and reward delivery.

For reinforcer devaluation, all testing was conducted in a modified Wisconsin General Test Apparatus (WGTA) inside a darkened room. Monkeys occupied a wheeled transport cage in the animal compartment of the WGTA. The test compartment of the WGTA held the test tray, which contained two food wells spaced 235 mm apart. Test material for reinforcer devaluation consisted of 120 objects that varied in size, shape, color and texture. Food rewards for the devaluation task consisted of two of the following six foods: M & M’s (Mars candies, Hackettstown, NJ), half peanuts, raisins, craisins (Ocean Spray, Lakeville-Middleboro, MA), banana-flavored pellets (Noyes, Lancaster, NH) and fruit snacks (Giant Foods, Landover, MD).

Two additional novel objects were used for object discrimination reversal learning. For object reversal learning a half peanut served as the food reward.

Surgery

Standard aseptic surgical procedures were used throughout (Rudebeck and Murray, 2011). Under isoflurane anesthesia, a large bilateral bone flap was raised over the region of the prefrontal cortex and a dura flap was reflected toward the orbit to allow access to the orbital surface in one hemisphere. For the excitotoxic OFC lesion, a series of injections was made into the cortex corresponding to Walker’s areas 11, 13 and 14 in each hemisphere using a hand-held Hamilton syringe with a 30-gauge needle. Surgery was carried out in two stages, one hemisphere at a time. Injections were made into the cortex on the orbital surface between the fundus of the lateral orbital sulcus and the rostral sulcus on the medial surface of the hemisphere. The rostral boundary of the injections was an imaginary line at the level of the rostral end of the medial orbital sulcus. The caudal boundary of the injections was an imaginary line at the caudal end of the medial orbital sulcus (Fig. 1C). For the VLPFC lesion, injections were made into the cortex corresponding to Walker’s areas 12, 45, and ventral 46 in each hemisphere (Fig. 1C). For cases 1, 3 and 4, surgery was carried out in two stages, one hemisphere at a time. For case 2, surgery was completed in a single stage. The lateral boundary of the lesion was just ventral to the lower lip of the principal sulcus and the medial boundary was the fundus of the lateral orbital sulcus. On the inferior frontal convexity, the rostral boundary of the lesion was the rostral tip of the principal sulcus, and the caudal boundary was the caudal end of the principal sulcus. The lesion therefore avoided the frontal eye fields but included the cortex on the anterior bank of the inferior limb of the arcuate sulcus. On the orbital surface, the rostral limit of the lesion was the anterior tip of the lateral orbital sulcus and the caudal limit was the caudal end of the lateral orbital sulcus.
At each site, 1.0 µl of ibotenic acid (10–15 µg/µl; Sigma or Tocris) or a cocktail of ibotenic acid and N-Methyl-D-aspartic acid (NMDA) (ibotenic acid 10 µg/µl, NMDA 10 µg/µl; Sigma) was injected into the cortex as a bolus. The needle was then held in place for 2–3 seconds to allow the toxin to diffuse away from the injection site. Injections were spaced approximately 2 mm apart. The mean (±SEM) number of injections per hemisphere was 98 ± 9 (range: 71–119) for OFC and 92 ± 4 (range: 76–102) for VLPFC.

Lesion assessment

Injections of excitotoxins into OFC and VLPFC resulted in hypersignal, visible in T2-weighted MR scans, in the cortex on the orbital and ventrolateral surfaces, respectively. For the monkeys with injections into OFC, hypersignal extended from the fundus of the lateral orbital sulcus, laterally, to the rostral sulcus, medially (Figs 1C and S1). For the VLPFC group, hypersignal extended from the fundus of the lateral orbital sulcus laterally to the principal sulcus (Figs 1C and S1). For brain regions studied so far, the location and extent of excitotoxic lesions are reliably indicated by white hypersignal on T2-weighted scans. Accordingly, for each operated monkey the extent of hypersignal on coronal MR images from approximately 40 to 26 mm anterior to the interaural plane was plotted onto a standard set of drawings of coronal sections from a macaque brain. The volume of the lesions was then estimated using a digitizing tablet (Wacom, Vancouver, WA).

Behavioral testing

Prior to surgery, all animals were habituated to the WGTA and were allowed to retrieve food from the test tray. For Experiment 1, following preliminary training and initial food preference testing, monkeys either received excitotoxic lesions of OFC or were retained as unoperated controls. Following surgery, monkeys were tested on reinforcer devaluation and then on the three-choice probabilistic learning task. For the second experiment, four unoperated controls from the first experiment received excitotoxic VLPFC lesions and were retested on the three-choice probabilistic learning task. They were then tested on the reinforcer devaluation task. More than a year after receiving excitotoxic VLPFC lesions, they were retested on the 3-choice probabilistic learning task. Testers conducting the behavioral experiments were, where possible, blind to group assignments.

Three-choice probabilistic learning task

All testing was conducted while monkeys sat in a primate chair positioned in front of a touch-sensitive monitor. In each test session, animals were presented with 3 novel stimuli, which they had never previously encountered, assigned to the three options (A–C). Stimuli could be presented in one of four spatial configurations, and each stimulus could occupy any of the three positions specified by the configuration (see Rudebeck et al., 2008). Configuration and stimulus position were determined randomly on each trial, thereby ensuring that animals used stimulus identity rather than action- or spatially based values to guide their choices.
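The per-trial randomization described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the task software (which was custom Ryklin Software): the helper name `place_stimuli`, the use of integer indices 0–2 for the three positions, and the arbitrary random seed are all hypothetical, and the screen layouts of the four configurations are not specified here.

```python
import random

N_CONFIGURATIONS = 4          # four spatial configurations (from the text)
STIMULI = ("A", "B", "C")     # the three novel stimuli used in a session

def place_stimuli(rng: random.Random) -> tuple[int, dict[str, int]]:
    """Hypothetical helper: pick one configuration at random, then assign each
    stimulus to one of that configuration's three positions (indices 0-2)."""
    configuration = rng.randrange(N_CONFIGURATIONS)
    positions = [0, 1, 2]
    rng.shuffle(positions)    # random permutation of positions for this trial
    return configuration, dict(zip(STIMULI, positions))

# Example: independent placements for one 300-trial session (seed is arbitrary).
rng = random.Random(0)
session_layout = [place_stimuli(rng) for _ in range(300)]
```

Because both the configuration and the positions are redrawn on every trial, no location or motor response is consistently paired with any one stimulus, which is the property the design relies on.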

The start of each trial was signaled by the presentation of three stimuli. Animals made their selections by touching one of the stimuli on the screen. The stimuli then disappeared and reward was delivered, or not, according to the programmed schedule. Intertrial intervals were 5 s.

Reward was delivered stochastically on each option according to four predefined schedules (Figs 1B and S3): stable, variable, forwards, and backwards, which have previously been used to probe stimulus–reward learning in macaques (Rudebeck et al., 2008; Walton et al., 2010). Each schedule is a predetermined series of reward/no-reward outcomes for each option on each trial of the 300-trial testing session. The likelihood of receiving a reward for choosing an option on each trial was calculated using a moving 20-trial window (±10 trials), and this is what is shown in Figs 1B and S3. The highest probability of receiving a reward on each trial was determined by taking the envelope (the trial-by-trial maximum) of these reward probability functions. Whether or not reward was delivered for selecting one option was independent of the other alternatives. Available rewards on unchosen alternatives were not held over for subsequent trials. Each animal completed ten sessions of each schedule, at the rate of one 300-trial session per day, 5–6 days per week. Novel stimuli were used each day. Sessions of the four schedules were interleaved (i.e., day 1, stable1; day 2, variable1; etc.) to ensure that the subjects could not learn the underlying reward schedules.
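The moving-window estimate and its envelope can be sketched with NumPy as below. This is an illustrative sketch, not the authors’ analysis code: because "a 20-trial window (±10 trials)" can be read in more than one way, the sketch assumes the window for trial t spans trials t−10 to t+9 (20 trials), truncated at the start and end of the session. The function names and the toy schedule (stationary probabilities of 0.7, 0.4 and 0.2) are hypothetical.

```python
import numpy as np

def windowed_reward_probability(schedule: np.ndarray, half_width: int = 10) -> np.ndarray:
    """Estimate the per-trial reward probability of each option.

    schedule: (n_options, n_trials) array of programmed outcomes (1 = reward, 0 = none).
    Assumed window for trial t: trials t - half_width .. t + half_width - 1
    (20 trials for half_width=10), truncated at the session edges.
    """
    n_options, n_trials = schedule.shape
    prob = np.empty((n_options, n_trials))
    for t in range(n_trials):
        lo = max(0, t - half_width)
        hi = min(n_trials, t + half_width)      # exclusive upper bound
        prob[:, t] = schedule[:, lo:hi].mean(axis=1)
    return prob

def best_option_envelope(prob: np.ndarray) -> np.ndarray:
    """Upper envelope: the highest reward probability available on each trial."""
    return prob.max(axis=0)

# Hypothetical 300-trial schedule with stationary probabilities, for illustration only.
rng = np.random.default_rng(0)
toy_schedule = (rng.random((3, 300)) < np.array([[0.7], [0.4], [0.2]])).astype(int)
envelope = best_option_envelope(windowed_reward_probability(toy_schedule))
```

With the real schedules, `toy_schedule` would be replaced by the predetermined reward/no-reward series of each option.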

To confirm that the deficit in learning probabilistic reward associations was stable over time and could not be overcome by compensatory mechanisms, monkeys with VLPFC lesions were retested on the three-choice probabilistic learning task more than one year after the initial testing. Each monkey completed 5 sessions of each of the four schedules after an initial period in which they were re-familiarized with the task (5 completed sessions with stimuli associated with stationary reward probabilities of 0.8, 0.5 and 0.2). There was one exception: Monkey 1 was unable to complete testing on schedules 1 and 2 during the retest, so analyses for this monkey compared performance only on schedules 3 and 4 before and more than a year after excitotoxic VLPFC lesions (retest). Otherwise, the data were analyzed using methods identical to those used previously.

Food preference testing

After habituation to the WGTA, each monkey’s preference for six different foods was assessed over a 15-day period. Every day monkeys received 30 trials consisting of pairwise presentation of the six different foods, one each in the left and right wells of the test tray. The left-right position of the foods was counterbalanced. Preferences were determined by analyzing choices within each of the 15 possible pairs of foods over the final five days of testing.
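One way to summarize such pairwise choice data is sketched below. It is a hypothetical sketch: the text does not state which statistic was computed within each pair, so the proportion-of-presentations-chosen score, the function names, and the short food labels (standing in for the foods listed under Apparatus) are illustrative assumptions. With 30 trials per day and 15 pairs, each pair is presented twice per day.

```python
from collections import Counter
from itertools import combinations

FOODS = ["M&M", "half peanut", "raisin", "craisin", "banana pellet", "fruit snack"]
ALL_PAIRS = list(combinations(FOODS, 2))   # the 15 possible pairs of six foods

def choice_proportions(trials):
    """trials: iterable of (food_a, food_b, chosen) tuples from the final five days.

    Returns, for each food, the proportion of its presentations on which it was
    chosen (a hypothetical summary statistic)."""
    offered, chosen = Counter(), Counter()
    for food_a, food_b, pick in trials:
        if pick not in (food_a, food_b):
            raise ValueError(f"{pick!r} was not one of the offered foods")
        offered[food_a] += 1
        offered[food_b] += 1
        chosen[pick] += 1
    return {food: chosen[food] / offered[food] for food in offered}

def rank_foods(trials):
    """Foods ordered from most to least preferred by choice proportion."""
    scores = choice_proportions(trials)
    return sorted(scores, key=scores.get, reverse=True)
```

Foods 1 and 2 for the devaluation task would then be chosen from the highly ranked foods with similar scores.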

Reinforcer devaluation

The behavioral methods used were highly similar to those reported before (Rudebeck et al., 2013). The procedure employed object discrimination learning, which set up particular object-outcome associations, followed by reinforcer devaluation tests, in which probe trials gauged the monkeys’ ability to link objects with current food value. For the operated groups, all testing was conducted postoperatively.

Object discrimination learning

Monkeys were trained to discriminate 60 pairs of novel objects. For each pair, one object was randomly designated as the positive object (S+, rewarded) and the other was designated as negative (S−, unrewarded). Half of the positive objects were baited with food 1. The other half were baited with food 2. For each monkey, the identity of foods 1 and 2 was based on the monkey’s previously determined food preferences. The foods selected were those that the monkey valued highly and which were roughly equally palatable as judged by choices in the food preference test.

On each trial, monkeys were presented with a pair of objects, one each overlying a food well, and were allowed to choose between them. If they displaced the S+ they were allowed to retrieve the food. The trial was then terminated. If they chose the S−, no food was available, and the trial was terminated. The left-right position of the S+ followed a pseudorandom order. Training continued until monkeys attained the criterion of a mean of 90% correct responses over 5 consecutive days (i.e., 270 correct responses or greater in 300 trials).
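The acquisition criterion can be checked with a running five-day total, as in the sketch below. The constant 270 and the five-day window come from the text; the 60 trials per daily session are inferred from 300 trials over 5 days (one trial per object pair). The function name and the convention of returning the session on which criterion was first met are hypothetical.

```python
from typing import Optional

CRITERION_CORRECT = 270   # >= 90% correct over 300 trials (from the text)
WINDOW_DAYS = 5           # consecutive daily sessions pooled for the criterion
# 300 trials over 5 days implies 60 trials (one per object pair) per session (inferred).

def discrimination_sessions_to_criterion(daily_correct: list[int]) -> Optional[int]:
    """daily_correct[k] is the number of correct responses on session k+1.

    Returns the session on which the 5-session running total first reaches
    270 correct, or None if criterion was never met."""
    for end in range(WINDOW_DAYS, len(daily_correct) + 1):
        if sum(daily_correct[end - WINDOW_DAYS:end]) >= CRITERION_CORRECT:
            return end
    return None
```

For example, daily scores of 40, 50, 55, 54, 55 and 56 reach 270 correct over sessions 2–6, so criterion is met on session 6.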

Reinforcer devaluation test 1

Monkeys’ object choices were assessed under two conditions: after one of the foods was devalued, and under normal (baseline) conditions. On separate days we conducted four test sessions, each consisting of 30 trials. Only the positive (S+) objects were used. On each trial, a food-1 object and a food-2 object were presented together for choice; each object covered a well baited with the appropriate food. With the constraint that a food-1 object was always paired with a food-2 object, the object pairs were generated randomly for each session.
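The constrained random pairing can be sketched as follows. The sketch assumes 30 S+ objects per food (half of the 60 positive objects were baited with each food), so that every S+ object appears exactly once in a 30-trial session. The function name and the object labels are hypothetical, and left-right placement is not modeled because the text does not specify it for the test sessions.

```python
import random

def make_probe_pairs(food1_objects, food2_objects, n_trials=30, rng=None):
    """Randomly pair food-1 S+ objects with food-2 S+ objects for one session.

    Every pair contains exactly one food-1 object and one food-2 object; with
    30 objects of each type, each S+ object is used once per session."""
    rng = rng or random.Random()
    food1 = rng.sample(list(food1_objects), n_trials)   # random order, no repeats
    food2 = rng.sample(list(food2_objects), n_trials)
    return list(zip(food1, food2))

food1_objects = [f"F1_{k}" for k in range(30)]   # hypothetical object labels
food2_objects = [f"F2_{k}" for k in range(30)]
session_pairs = make_probe_pairs(food1_objects, food2_objects, rng=random.Random(3))
```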

Preceding two of the test sessions a selective satiation procedure, intended to diminish the value of one of the foods, was conducted. For the other two test sessions, which provided baseline scores, monkeys were not sated on either food before being tested. The order in which the test sessions occurred was the same for all monkeys and was as follows: 1) baseline test 1; 2) food 1 devalued by selective satiation prior to test session; 3) baseline test 2; 4) food 2 devalued by selective satiation prior to test session.

For the selective satiation procedure a food box filled with a pre-weighed quantity of either food 1 or food 2 was attached to the front of the monkey’s home cage. The monkey was given a total of 30 minutes to consume as much of the food as it wanted, at which point the experimenter started to observe the monkey’s behavior. Additional food was provided if necessary. The selective satiation procedure was deemed to be complete when the monkey refrained from retrieving food from the box for 5 minutes. The amount of time taken in the selective satiation procedure and the total amount of food consumed by each monkey was noted. The monkey was then taken to the WGTA within 10 minutes and the test session conducted.

Reinforcer devaluation test 2

A second devaluation test, identical to the first, was conducted between 44 and 90 days after reinforcer devaluation test 1. Monkeys were retrained on the same 60 pairs to the same criterion as before. After relearning, the reinforcer devaluation test was conducted in the same manner as before.

Reinforcer devaluation test 3 - Food choices after selective satiation

Shortly after reinforcer devaluation test 2, we assessed the effect of selective satiation on monkeys’ choices of foods alone (object-based reinforcer devaluation test 3, Fig. S7). This test was conducted to evaluate whether satiety transferred from the home cage to the WGTA, and whether behavioral effects of the lesion (if any) were due to an inability to link objects with food value as opposed to an inability to discriminate the foods. This test was identical to both reinforcer devaluation tests 1 and 2, but with the important difference that no objects were presented over the two wells where foods were placed. On each trial of the 30-trial sessions, monkeys could see the two foods and were allowed to choose between them. As was the case for reinforcer devaluation tests 1 and 2, there were four critical test sessions; two were preceded by selective satiation and two were not.

Object discrimination reversal learning

Monkeys with VLPFC lesions were tested postoperatively and their behavior compared to that of unoperated controls. A single pair of objects, novel at the start of testing, was used throughout object discrimination reversal learning testing. To prevent object preferences from biasing learning scores, both objects were either baited (for half the monkeys in each group) or unbaited on the first trial of the first session of acquisition of the object discrimination. If the object chosen on the first trial was rewarded, it was designated the S+; if not, it was designated the S−. Through trial and error, monkeys learned which object was associated with a food reward. Monkeys were tested for 30 trials per daily session for 5–6 days per week. Criterion was set at 93% (i.e., 28 correct responses in 30 trials) for one day followed by at least 80% (i.e., 24/30) the next day. Once monkeys had attained criterion on the initial object discrimination problem, the contingencies were reversed and animals were trained to the same criterion as before. This procedure was repeated until a total of nine serial reversals had been completed. Data were analyzed with repeated measures ANOVA with factors of surgery (within-subjects effect, 2 levels) and reversal (within-subjects effect, 9 levels).
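The two-day reversal criterion can be expressed as a small check over daily scores. The thresholds (28/30 followed by at least 24/30) come from the text; whether sessions-to-criterion counts the criterion sessions themselves is not stated, so the counting convention and the function name below are hypothetical.

```python
from typing import Optional

def reversal_sessions_to_criterion(daily_correct: list[int]) -> Optional[int]:
    """daily_correct[k]: correct responses (out of 30) on session k+1 of one problem.

    Criterion: >= 28/30 on one session followed by >= 24/30 on the next.
    Returns the number of sessions up to and including the second criterion
    session (a hypothetical counting convention), or None if not reached."""
    for k in range(1, len(daily_correct)):
        if daily_correct[k - 1] >= 28 and daily_correct[k] >= 24:
            return k + 1
    return None
```

Applying this to the initial discrimination and to each of the nine reversals would give one score per problem for the repeated measures ANOVA.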

Data analysis

To obtain a trial-by-trial estimate of whether monkeys were choosing the best option based on their prior history of choices and rewards, we fit a reinforcement-learning model to monkeys’ choices in schedules 1 and 2. The model was fit separately to the choice behavior from each session, producing estimates of stimulus value and choice probability for each stimulus on each trial, as well as the learning rate and the inverse temperature for each session. The model updates the value, v, of a chosen option, i, based on reward feedback, r, on trial t as follows:

$$v_i(t) = v_i(t-1) + \alpha\,\bigl(r(t) - v_i(t-1)\bigr) \qquad \text{(Equation 1)}$$

Thus, the updated value of an option is given by its old value, $v_i(t-1)$, plus a change based on the reward prediction error, $r(t) - v_i(t-1)$, multiplied by the learning rate parameter, α. At the beginning of each session, the value, v, of all three novel stimuli is set to zero. The free parameters, namely the learning rate, α, and the inverse temperature, β (which estimates how consistently animals choose the highest valued option), were fit by maximizing the likelihood of the monkeys’ choice behavior given the model parameters. Specifically, we calculated the choice probability $d_i(t)$ using the following:

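$$d_i(t) = \frac{\exp\bigl(\beta\,v_i(t)\bigr)}{\sum_{j=1}^{3}\exp\bigl(\beta\,v_j(t)\bigr)} \qquad \text{(Equation 2)}$$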

We then calculated the log-likelihood as follows:

$$\log L = \sum_{t=1}^{T}\sum_{k=1}^{3} c_k(t)\,\log d_k(t) \qquad \text{(Equation 3)}$$

where $c_k(t) = 1$ when the subject chooses option k on trial t and $c_k(t) = 0$ for all unchosen options, so that maximizing the log-likelihood maximizes the choice probability, $d_k(t)$, of the choices the monkeys actually made. T is the total number of trials that monkeys completed in a session, usually 300. Model parameters were fitted using methods described in Averbeck et al. (2013). In brief, parameters were optimized by minimizing the negative log-likelihood of the subject’s choices using the fminsearch function in MATLAB. Starting values for the learning rate were drawn from a normal distribution with a mean of 0.5 and a standard deviation of 3, and starting values for the inverse temperature from a normal distribution with a mean of 1 and a standard deviation of 5. These distributions were chosen because learning rates in probabilistic settings should be considerably less than 1, given the stochastic nature of reward delivery, and positive inverse temperatures indicate that choices are biased towards options with higher reward values. Model fits were repeated 1000 times to avoid local minima, and no constraints were placed on the estimated parameters. The fit with the maximum log-likelihood across the 1000 repetitions was used as the model’s estimate. We then took the choice probabilities on each trial and determined whether monkeys chose the image with the highest choice probability in either the first or second 150 trials in all schedules.
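The fitting procedure can be sketched in Python as follows. This is a sketch under stated assumptions, not the authors’ MATLAB code: SciPy’s `minimize(method="Nelder-Mead")` is swapped in for `fminsearch` (both are Nelder-Mead simplex searches), each choice is scored with the values held when it was made (that is, before that trial’s feedback updates the chosen option), and `simulate_session`, with its stationary reward probabilities, is a hypothetical helper for generating test data. As in the text, the parameters are left unconstrained, so diverging fits are returned as an infinite cost.

```python
import numpy as np
from scipy.optimize import minimize

def negative_log_likelihood(params, choices, rewards, n_options=3):
    """-log L of a delta-rule learner with softmax choice (Equations 1-3)."""
    alpha, beta = params
    values = np.zeros(n_options)                 # all novel stimuli start at zero
    nll = 0.0
    with np.errstate(over="ignore", invalid="ignore"):
        for choice, reward in zip(choices, rewards):
            logits = beta * values
            logits = logits - logits.max()       # stabilize the softmax
            log_probs = logits - np.log(np.exp(logits).sum())
            nll -= log_probs[choice]             # only the chosen option counts (c_k = 1)
            values[choice] += alpha * (reward - values[choice])   # Equation 1
    return float(nll) if np.isfinite(nll) else np.inf   # unconstrained fits can diverge

def fit_session(choices, rewards, n_starts=1000, seed=0):
    """Multi-start Nelder-Mead fit; keeps the start with the lowest -log L."""
    rng = np.random.default_rng(seed)
    best = None
    for _ in range(n_starts):
        x0 = np.array([rng.normal(0.5, 3.0), rng.normal(1.0, 5.0)])   # alpha, beta starts
        res = minimize(negative_log_likelihood, x0, args=(choices, rewards),
                       method="Nelder-Mead")
        if best is None or res.fun < best.fun:
            best = res
    return best   # best.x = (alpha, beta); -best.fun is the maximum log-likelihood

def simulate_session(alpha, beta, reward_probs, n_trials, seed=0):
    """Hypothetical simulated learner with stationary reward probabilities."""
    rng = np.random.default_rng(seed)
    values = np.zeros(len(reward_probs))
    choices, rewards = [], []
    for _ in range(n_trials):
        p = np.exp(beta * values - (beta * values).max())
        p /= p.sum()
        c = int(rng.choice(len(values), p=p))
        r = float(rng.random() < reward_probs[c])
        values[c] += alpha * (r - values[c])
        choices.append(c)
        rewards.append(r)
    return np.array(choices), np.array(rewards)
```

For a quick check, `fit_session(*simulate_session(0.3, 5.0, [0.8, 0.5, 0.2], 300), n_starts=20)` uses far fewer than the 1000 restarts described in the text, because each restart runs a full simplex search.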

Logistic regression analysis of monkeys' choices in the three-choice probabilistic learning task used methods identical to those of Walton et al. (2010). These analyses were conducted on the data from all 4 reward schedules (Fig. 1B and S3). To determine how recently made choices and recently received rewards influenced subsequent choices, we conducted three separate logistic regression analyses, one for each stimulus (A, B, C) that the monkey could select. Below we describe the analysis for “A” choices; the same was done for stimuli B and C. We first constructed a choice vector that was set to 1 on trials when the monkey chose stimulus A and to 0 when it chose stimulus B or C. We then formed explanatory variables (EVs) based on all possible combinations of choices and rewards from the recent past, trials n−1 to n−6. For each choice-outcome combination, the EV was set to 1 when the monkey chose stimulus A and was rewarded, to −1 when either stimulus B or C was chosen and rewarded, and to 0 when no reward was delivered for any choice. A standard logistic regression was then fit to these 36 EVs (i.e., a 6 × 6 matrix of all combinations of previous choices and outcomes from the preceding 6 trials). Of these, the 25 EVs constructed from the five most recent trials were of interest, whereas the remaining 11, which involved trial n−6, were included as confound regressors to remove the influence of longer-term choice/reward trends.
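The construction of the choice vector and the 36 EVs can be sketched as follows. This is an illustrative sketch, not the authors' code; it assumes that each EV pairs the choice made on trial n−i with the outcome on trial n−j (i, j = 1 to 6), coding the choice as +1 for the stimulus being analyzed and −1 otherwise and multiplying by the outcome (1 = rewarded, 0 = unrewarded), which reproduces the 1/−1/0 coding described above. The function names are hypothetical; the returned `y` and `X` could be passed to any standard logistic regression routine.

```python
import numpy as np


def build_choice_reward_evs(choices, rewards, target=0, n_lags=6):
    """Choice vector and choice x reward history EVs for one stimulus (hypothetical helper).

    choices : chosen stimulus on each trial (0 = A, 1 = B, 2 = C)
    rewards : outcome on each trial (1 = rewarded, 0 = unrewarded)
    Returns y (1 if `target` was chosen on trial n, else 0) and X, whose column
    (i - 1) * n_lags + (j - 1) is s(n - i) * r(n - j), where s = +1 if `target`
    was chosen and -1 if another stimulus was chosen.
    """
    choices = np.asarray(choices)
    rewards = np.asarray(rewards, dtype=float)
    s = np.where(choices == target, 1.0, -1.0)
    trials = np.arange(n_lags, len(choices))  # trials with a full n_lags-trial history
    y = (choices[trials] == target).astype(float)
    X = np.empty((len(trials), n_lags * n_lags))
    for i in range(1, n_lags + 1):  # lag of the past choice
        for j in range(1, n_lags + 1):  # lag of the past outcome
            X[:, (i - 1) * n_lags + (j - 1)] = s[trials - i] * rewards[trials - j]
    return y, X


def regressors_of_interest(n_lags=6):
    """Boolean mask over EV columns: True where both lags fall within the n_lags - 1 most recent trials."""
    i, j = np.meshgrid(np.arange(1, n_lags + 1), np.arange(1, n_lags + 1), indexing="ij")
    return ((i < n_lags) & (j < n_lags)).ravel()
```

With the default of six lags, `regressors_of_interest()` marks 25 of the 36 columns; the remaining 11 involve trial n−6 and correspond to the confound regressors.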

For stimulus A, this analysis produced a vector of regression weights, β̂A, and its covariance matrix, ĈA. The analysis was repeated for stimuli B and C, producing regression weights for each stimulus, β̂A, β̂B, β̂C, and a corresponding set of covariance matrices, ĈA, ĈB, ĈC. The regression weights for the three stimuli were combined into a single weight vector using the variance-weighted mean (Lindgren, 1993):

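$$\hat{\beta} = \left(\hat{C}_A^{-1} + \hat{C}_B^{-1} + \hat{C}_C^{-1}\right)^{-1}\left(\hat{C}_A^{-1}\hat{\beta}_A + \hat{C}_B^{-1}\hat{\beta}_B + \hat{C}_C^{-1}\hat{\beta}_C\right) \qquad \text{(Equation 4)}$$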
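The variance-weighted combination of the per-stimulus regression weights can be sketched in NumPy. This minimal sketch assumes the standard inverse-covariance (precision) weighting, in which each stimulus's weight vector is weighted by the inverse of its covariance matrix; `variance_weighted_mean` is a hypothetical helper, and the covariance matrices would come from the three fitted logistic regressions.

```python
import numpy as np


def variance_weighted_mean(betas, covs):
    """Combine weight vectors by inverse-covariance (precision) weighting.

    betas : list of 1-D weight vectors, one per stimulus
    covs  : list of matching covariance matrices
    """
    precisions = [np.linalg.inv(C) for C in covs]
    total_precision = sum(precisions)
    weighted_sum = sum(P @ b for P, b in zip(precisions, betas))
    # Solve total_precision @ beta = weighted_sum instead of inverting explicitly
    return np.linalg.solve(total_precision, weighted_sum)
```

When all covariance matrices are equal, the result reduces to the ordinary mean of the weight vectors; a weight vector estimated with larger variance pulls the combined estimate less.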

Regression weights from the different groups were then compared using repeated measures ANOVAs to determine the differential influence of previous choices, outcomes, and their combinations within and across the groups of monkeys.

For the reinforcer devaluation task, the proportion shifted relative to baseline for each subject was computed using the following equation:

| (Equation 5) |

F1 and F2 denote choices of the objects paired with the two food rewards in sessions in which the foods were devalued (D) and in which they were not (N). Nondevalued choices (F1N and F2N) were based on the average of two baseline sessions conducted in the week before the devaluation sessions. Proportion shifted scores for each monkey were analyzed using repeated measures ANOVA with factors of test (two levels, within-subjects effect) and group (three levels, between-subjects effect), and their interaction where appropriate.

QUANTIFICATION AND STATISTICAL ANALYSIS

Statistical analyses were conducted in SPSS version 22 and MATLAB R2014a. Unless otherwise stated, we used repeated measures ANOVA to compare the performance of different groups. Data from the control and OFC groups were analyzed with group as a between-subjects factor, whereas data from the monkeys that received VLPFC lesions were analyzed with surgery as a within-subjects factor. Other within-subjects factors were phase (first vs second 150 trials of each session, 2 levels), schedule (4 levels), reward (2 levels), trial (trials into the past, 6 levels), test (devaluation test 1 vs test 2), reversal (9 levels), and task (2 levels). In all figures, error bars reflect the standard error of the mean.

Supplementary Material

Acknowledgments

We thank Emily Moylan for assistance with data collection, and Rachel Reoli for help performing surgery. We also thank Vincent D. Costa and Steven P. Wise for comments on an earlier version of the manuscript. This work was supported by the Intramural Research Program of the National Institute of Mental Health (EAM, ZIAMH002887), an NIMH BRAINS award (PHR, R01 MH110822), a NARSAD Young Investigator Award (PHR), a Rosen Family Scholarship (PHR), and generous seed funds from the Icahn School of Medicine at Mount Sinai (PHR).

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

COMPETING INTERESTS: The authors declare no competing financial interests.

AUTHOR CONTRIBUTIONS

PHR and EAM devised and designed the study. DAL, PHR, and EAM conducted testing and analyzed the data. RCS, PHR, and EAM performed the surgeries and wrote the manuscript.

References

- Aron AR, Robbins TW, Poldrack RA. Inhibition and the right inferior frontal cortex. Trends Cogn Sci. 2004;8:170–177. doi: 10.1016/j.tics.2004.02.010.

- Averbeck BB, Djamshidian A, O'Sullivan SS, Housden CR, Roiser JP, Lees AJ. Uncertainty about mapping future actions into rewards may underlie performance on multiple measures of impulsivity in behavioral addiction: evidence from Parkinson's disease. Behav Neurosci. 2013;127:245–255. doi: 10.1037/a0032079.

- Baxter MG, Gaffan D, Kyriazis DA, Mitchell AS. Ventrolateral prefrontal cortex is required for performance of a strategy implementation task but not reinforcer devaluation effects in rhesus monkeys. Eur J Neurosci. 2009;29:2049–2059. doi: 10.1111/j.1460-9568.2009.06740.x.

- Blanchard TC, Hayden BY, Bromberg-Martin ES. Orbitofrontal cortex uses distinct codes for different choice attributes in decisions motivated by curiosity. Neuron. 2015;85:602–614. doi: 10.1016/j.neuron.2014.12.050.

- Boorman ED, Behrens TE, Woolrich MW, Rushworth MF. How green is the grass on the other side? Frontopolar cortex and the evidence in favor of alternative courses of action. Neuron. 2009;62:733–743. doi: 10.1016/j.neuron.2009.05.014.

- Buckley MJ, Mansouri FA, Hoda H, Mahboubi M, Browning PG, Kwok SC, Phillips A, Tanaka K. Dissociable components of rule-guided behavior depend on distinct medial and prefrontal regions. Science. 2009;325:52–58. doi: 10.1126/science.1172377.

- Burke KA, Franz TM, Miller DN, Schoenbaum G. The role of the orbitofrontal cortex in the pursuit of happiness and more specific rewards. Nature. 2008;454:340–344. doi: 10.1038/nature06993.

- Burman KJ, Rosa MG. Architectural subdivisions of medial and orbital frontal cortices in the marmoset monkey (Callithrix jacchus). J Comp Neurol. 2009;514:11–29. doi: 10.1002/cne.21976.

- Bussey TJ, Wise SP, Murray EA. The role of ventral and orbital prefrontal cortex in conditional visuomotor learning and strategy use in rhesus monkeys (Macaca mulatta). Behav Neurosci. 2001;115:971–982. doi: 10.1037//0735-7044.115.5.971.

- Camille N, Tsuchida A, Fellows LK. Double dissociation of stimulus-value and action-value learning in humans with orbitofrontal or anterior cingulate cortex damage. J Neurosci. 2011;31:15048–15052. doi: 10.1523/JNEUROSCI.3164-11.2011.

- Carmichael ST, Price JL. Architectonic subdivision of the orbital and medial prefrontal cortex in the macaque monkey. J Comp Neurol. 1994;346:366–402. doi: 10.1002/cne.903460305.

- Carmichael ST, Price JL. Limbic connections of the orbital and medial prefrontal cortex in macaque monkeys. J Comp Neurol. 1995a;363:615–641. doi: 10.1002/cne.903630408.

- Carmichael ST, Price JL. Sensory and premotor connections of the orbital and medial prefrontal cortex of macaque monkeys. J Comp Neurol. 1995b;363:642–664. doi: 10.1002/cne.903630409.

- Carmichael ST, Price JL. Connectional networks within the orbital and medial prefrontal cortex of macaque monkeys. J Comp Neurol. 1996;371:179–207. doi: 10.1002/(SICI)1096-9861(19960722)371:2<179::AID-CNE1>3.0.CO;2-#.

- Chaplin TA, Yu HH, Soares JG, Gattass R, Rosa MG. A conserved pattern of differential expansion of cortical areas in simian primates. J Neurosci. 2013;33:15120–15125. doi: 10.1523/JNEUROSCI.2909-13.2013.

- Chau BK, Sallet J, Papageorgiou GK, Noonan MP, Bell AH, Walton ME, Rushworth MF. Contrasting Roles for Orbitofrontal Cortex and Amygdala in Credit Assignment and Learning in Macaques. Neuron. 2015;87:1106–1118. doi: 10.1016/j.neuron.2015.08.018.

- Clarke HF, Dalley JW, Crofts HS, Robbins TW, Roberts AC. Cognitive inflexibility after prefrontal serotonin depletion. Science. 2004;304:878–880. doi: 10.1126/science.1094987.

- Clarke HF, Robbins TW, Roberts AC. Lesions of the medial striatum in monkeys produce perseverative impairments during reversal learning similar to those produced by lesions of the orbitofrontal cortex. J Neurosci. 2008;28:10972–10982. doi: 10.1523/JNEUROSCI.1521-08.2008.

- Dias R, Robbins TW, Roberts AC. Dissociation in prefrontal cortex of affective and attentional shifts. Nature. 1996;380:69–72. doi: 10.1038/380069a0.

- Fellows LK, Farah MJ. The role of ventromedial prefrontal cortex in decision making: judgment under uncertainty or judgment per se? Cereb Cortex. 2007;17:2669–2674. doi: 10.1093/cercor/bhl176.

- Gonzales LA, Benefit BR, McCrossin ML, Spoor F. Cerebral complexity preceded enlarged brain size and reduced olfactory bulbs in Old World monkeys. Nat Commun. 2015;6:7580. doi: 10.1038/ncomms8580.

- Groman SM, James AS, Seu E, Crawford MA, Harpster SN, Jentsch JD. Monoamine levels within the orbitofrontal cortex and putamen interact to predict reversal learning performance. Biol Psychiatry. 2013;73:756–762. doi: 10.1016/j.biopsych.2012.12.002.

- Hornak J, O'Doherty J, Bramham J, Rolls ET, Morris RG, Bullock PR, Polkey CE. Reward-related reversal learning after surgical excisions in orbito-frontal or dorsolateral prefrontal cortex in humans. J Cogn Neurosci. 2004;16:463–478. doi: 10.1162/089892904322926791.

- Howard JD, Kahnt T. Identity-specific reward representations in orbitofrontal cortex are modulated by selective devaluation. J Neurosci. 2017. doi: 10.1523/JNEUROSCI.3473-16.2017.

- Hubrecht RC. Home-range size and use and territorial behavior in the common marmoset, Callithrix jacchus jacchus, at the Tapacura Field Station, Recife, Brazil. Int J Primatol. 1985;6:533–550.

- Iversen SD, Mishkin M. Perseverative interference in monkeys following selective lesions of the inferior prefrontal convexity. Exp Brain Res. 1970;11:376–386. doi: 10.1007/BF00237911.

- Izquierdo A, Murray EA. Selective bilateral amygdala lesions in rhesus monkeys fail to disrupt object reversal learning. J Neurosci. 2007;27:1054–1062. doi: 10.1523/JNEUROSCI.3616-06.2007.

- Izquierdo A, Suda RK, Murray EA. Bilateral orbital prefrontal cortex lesions in rhesus monkeys disrupt choices guided by both reward value and reward contingency. J Neurosci. 2004;24:7540–7548. doi: 10.1523/JNEUROSCI.1921-04.2004.

- Izquierdo A, Suda RK, Murray EA. Comparison of the effects of bilateral orbital prefrontal cortex lesions and amygdala lesions on emotional responses in rhesus monkeys. J Neurosci. 2005;25:8534–8542. doi: 10.1523/JNEUROSCI.1232-05.2005.

- Kable JW, Glimcher PW. The neural correlates of subjective value during intertemporal choice. Nat Neurosci. 2007;10:1625–1633. doi: 10.1038/nn2007.

- Kaskan PM, Costa VD, Eaton HP, Zemskova JA, Mitz AR, Leopold DA, Ungerleider LG, Murray EA. Learned Value Shapes Responses to Objects in Frontal and Ventral Stream Networks in Macaque Monkeys. Cereb Cortex. 2016. doi: 10.1093/cercor/bhw113.