Abstract

Objective:

We present the results of the 2015 quality metrics (QUALMET) survey, which was designed to assess the commonalities and variability of selected quality and productivity metrics currently employed by a large sample of academic radiology departments representing all regions in the USA.

Methods:

The survey of key radiology metrics was distributed in March–April of 2015 via personal e-mail to 112 academic radiology departments.

Results:

There was a 34.8% institutional response rate. Most academic departments of radiology commonly use metrics of hand hygiene, report turnaround time (RTAT), relative value unit (RVU) productivity, patient satisfaction and participation in peer review. RTAT targets varied widely. Practices also varied in the implementation of radiology peer review and the ways in which peer review results are used within academic radiology departments, in the use of clinical decision support tools and in requirements for radiologist participation in Maintenance of Certification. Policies for hand hygiene and critical results communication were very similar across all reporting institutions, and most departments used some form of missed case/difficult case conference as part of their quality and safety programme, as well as some form of periodic radiologist performance review.

Conclusion:

Results of the QUALMET survey suggest many similarities in the tracking and use of the selected quality and productivity metrics included in our survey. However, the use of quality indicators is not a fully standardized process among academic radiology departments.

Advances in knowledge:

This article examines the current quality and productivity metrics in academic radiology.

INTRODUCTION

One cannot manage anything effectively without recourse to measurement. A treasured quote, often mistakenly attributed to W Edwards Deming, is “you can't improve what you can't measure”. What Deming actually said was “The most important figures that one needs for management are unknown or unknowable, but successful management must nevertheless take account of them”. Deming1 was a proponent of statistical quality control, best known for his work with Japanese industry after World War II. While meaningful measurement may seem relatively straightforward in a manufacturing environment (e.g. choosing metrics and benchmarks for productivity per worker, cost per unit or number of defects per million units produced), selecting similarly actionable or meaningful metrics within a service-based enterprise such as radiology is much more challenging. As healthcare payment moves inexorably to a “value” paradigm, the need to reliably and reproducibly measure the quality of the service provided becomes paramount.

The national “volume-to-value” movement in American healthcare economics places a new premium on “quality” as the numerator of the new “value equation” paradigm (Value = Quality/Costs). Most of the metrics discussed in this article attempt to increase value by directly or indirectly encouraging higher quality. The relative value unit (RVU) productivity metric, by contrast, is used to encourage greater productivity per radiologist and thus to increase value by decreasing cost. Academic radiology departments are therefore under increasing pressure to demonstrate that they provide a higher level of quality than their competitors, one that is at least commensurate with their higher relative costs. As such, academic departments of radiology are often the first to develop and adopt new quality measures and programmes and serve as a “testing ground” for them. In recent years, there has been considerable discussion within the radiology literature as to which of the many proposed quality metrics offer the greatest utility in assessing and improving service quality in radiology;2–7 however, to date, only a limited range of quality metrics is actually in common use.

Our study is a survey of a large sample of academic radiology departments designed to assess the variability in targets or goals of quality and productivity metrics commonly utilized by academic radiology departments.

METHODS AND MATERIALS

A cross-sectional multi-institutional survey (Table 1) was distributed to academic radiology departments throughout the USA from 13 March to 4 April 2015 via personalized e-mail to the department quality and safety officer or department chair, with a link to SurveyMonkey. An exemption was obtained from our institutional review board. Academic radiology departments were identified as those whose department chair was a member of the Society of Chairs of Academic Radiology Departments. The study objective was to identify and assess the variability and consistency in the use of quality and productivity metrics currently employed by academic radiology departments in the USA. The inclusion criterion was that each respondent be a faculty member at an academic radiology centre who self-reported familiarity with the quality metrics, procedures and process improvement projects under way in their own academic radiology department. Individual responses were kept confidential and anonymous to all but the principal investigator.

Table 1.

QUALMET survey questions

| Survey question |
|---|
| What is your role in the radiology department? |
| Are faculty required to participate in MOC? |
| Is hand hygiene compliance measured in the radiology department and if so, how? |
| Do you track RTAT? |
| If your measurement of RTAT is not study completion to final signature, how is it measured? |
| What is your target RTAT in the ED for radiographs? |
| What is your target RTAT in the ED for CT? |
| What is your target RTAT in the ED for MRI? |
| What is your target RTAT for inpatient radiographs? |
| What is your target RTAT for inpatient CT? |
| What is your target RTAT for inpatient MRI? |
| What is your target report RTAT for outpatient routine radiographs? |
| What is your target RTAT for outpatient routine CT? |
| What is your target RTAT for outpatient routine MRI? |
| How do you communicate critical results? |
| Does your department participate in peer review? |
| What product is used for peer review? |
| How is a discrepancy discovered in the peer review process communicated to the radiologist in error? |
| Do you have departmental or section challenging/missed case conferences? How often? |
| Does your hospital have a patient portal? |
| If your hospital has a patient portal, are radiology reports included? |
| Do you have a decision support tool to assist your referring clinicians in ordering the correct examination? |
| How is patient satisfaction measured? |
| Do you distribute satisfaction surveys to your referring clinicians? If so, how often? |
| How frequently do your radiologists get formal evaluations from the section head or chair? |
| Are your radiologists given target RVU productivity based on either the AAMC or MGMA surveys? |
| If so, what is the minimum and target percentage? |

AAMC, Association of American Medical Colleges; ED, emergency department; MGMA, Medical Group Management Association; MOC, Maintenance of Certification; RTAT, report turnaround time; RVU, relative value unit; TAT, turnaround time.

QUALMET survey contents

The survey questions were designed by examining what we measure in our department and asking radiologists at other academic centres what they measure and how (Table 1). We then reviewed the literature on quality metrics in academic radiology to look for any metrics we might have missed. The role of the respondent within their academic department of radiology was first determined.

Participation in Maintenance of Certification

The respondents were asked whether radiologists are required to participate in Maintenance of Certification (MOC).

Hand hygiene compliance

The QUALMET survey then asked whether hand hygiene compliance is measured in the radiology department and, if so, how.

Report turnaround time

The survey asked whether report turnaround time (RTAT) is tracked at the respondent's institution and then assessed the target RTAT, if any, for radiographs, CT and MRI in the emergency department (ED), inpatient and routine outpatient populations. Respondents were asked to describe in free text how RTAT is measured in their department: from study completion to final report signature or by some other method.

Critical results reporting

The respondents were asked to select all methods of critical results reporting used by their radiology department. Here, critical results refers to critical findings in any interpreted study.

Peer review and the challenging case or missed case conference

In this study, peer review is any method by which radiologists review a colleague's cases for credentialing, recredentialing or quality purposes. The American College of Radiology (ACR) and the Joint Commission on Accreditation of Healthcare Organizations (JCAHO) require some form of peer review. Several popular products were offered as responses (ACR RadPeer, Radisphere, Primordial and Insight Health Solutions Radiology Insight peer review), along with an “other” response with a free-text answer. Questions addressed departmental participation in a peer review process and the product or method of peer review used. The respondents were asked how discrepancies uncovered in peer review are communicated to the radiologist in error. The survey also asked whether peer review error rates are included in the formal performance review, and whether the department or individual sections hold a challenging (missed) case conference and, if so, how often.

Use of patient web portals

The respondents were asked whether their hospital has a patient portal and, if so, whether radiology reports are included in it.

Decision support tools

A decision support tool is software incorporated into the computerized provider order entry (CPOE) system of the health system. These tools use evidence-based medicine to assist the ordering physician with choosing the most appropriate next imaging test. The survey assessed the presence or absence of a decision support tool to assist referring clinicians in ordering the correct imaging examination.

Patient and referring clinician satisfaction surveys

The survey asked how patient satisfaction is assessed and whether satisfaction surveys are also distributed to referring physicians and, if so, how frequently.

Periodic formal evaluations

The respondents were asked how frequently radiologists receive formal evaluations from the division chief or department chair.

Relative value unit productivity

The survey asked whether RVU productivity is tracked and whether radiologists are given minimum and target RVU productivity figures based on either the Association of American Medical Colleges or the Medical Group Management Association surveys.

RESULTS

Role of respondents

A total of 39 (25%) of the 155 survey recipients completed the survey, representing 34.8% of the 112 academic centres whose chair was a member of the Society of Chairs of Academic Radiology Departments at the time of the survey. At 43 institutions, a second recipient was identified and sent the survey after the first recipient did not respond. No more than one completed survey per academic centre was included in the data. The department chair completed the survey in 61.5% (n = 24) of responses. The second most common respondent role was department quality and safety officer or team member, at 20.5% (n = 8). The third most common role was “other”, at 12.8% (n = 5), with stated roles of Administrator, Vice Chair for Clinical Affairs, former or recently retired department chair and Chief Clinical Officer. One division chief (2.6%) and one staff radiologist (2.6%) also completed the survey.
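The response-rate figures above follow directly from the reported counts. The short Python sketch below reproduces them, assuming (as the text implies) that the 155 recipients comprise one first-round contact at each of the 112 centres plus the 43 second-round contacts; the helper name `pct` is illustrative only.

```python
def pct(numerator: int, denominator: int) -> float:
    """Return numerator/denominator as a percentage rounded to one decimal place."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return round(100 * numerator / denominator, 1)


completed_surveys = 39   # at most one completed survey per centre
centres = 112            # centres whose chair belonged to the Society of Chairs
second_contacts = 43     # centres that were sent the survey a second time
recipients = centres + second_contacts  # assumption: the two groups simply add up

individual_rate = pct(completed_surveys, recipients)   # share of all recipients
institutional_rate = pct(completed_surveys, centres)   # share of centres

# Respondent roles (counts from the text); the denominator is the 39 completed surveys.
roles = {"chair": 24, "quality and safety": 8, "other": 5,
         "division chief": 1, "staff radiologist": 1}
role_pcts = {role: pct(n, completed_surveys) for role, n in roles.items()}

print(recipients, individual_rate, institutional_rate)
print(role_pcts)
```

The individual response rate computes to 25.2%, which the text rounds to 25%; the institutional rate of 34.8% and the role percentages match the reported values.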

Participation in Maintenance of Certification

Survey participants reported that radiologists were not required to participate in MOC as a condition of employment at 48.7% (n = 19) of institutions and were required to participate at 46.1% (n = 18) of academic centres. Two respondents (5.1%) were unsure of the current policy. 100% (39/39) of participants responded to this question.

Hand hygiene compliance

Hand hygiene compliance is tracked in 76.9% (n = 30) of the radiology departments surveyed. Methods described in the comments included spot audits, incognito observers, random checks, secret shoppers and observation by rotating nurses. All of the described systems assess compliance through intermittent or continuous monitoring by an observer unknown to those being observed. Hand hygiene compliance was not measured by the radiology department at 20.5% (n = 8) of surveyed institutions. At one institution (2.6%), the respondent was unsure whether the radiology department measures hand hygiene. 100% (39/39) of participants responded to this question.

Report turnaround time

RTAT was tracked in 97.4% (n = 38) of departments (Table 2); all 39 participants answered this question. The follow-up question on how RTAT is measured was answered by 53% (21/39) of participants. Among these institutions, RTAT was measured from study completion to final attending signature at 38% (n = 8) and from preliminary report generation to final attending signature at 14% (n = 3). At 9.5% (n = 2), RTAT was measured from study completion to the first report available on the picture archiving and communication system (PACS), which is a preliminary report if a resident or fellow was involved or the final report if an attending signed directly without trainee involvement. The remaining 8 (38%) institutions reported tracking multiple RTAT metrics.
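Because the RTAT definitions reported above start and stop the clock at different events, the same study can yield very different RTAT values. The Python sketch below makes this concrete; the `Study` record, its field names and the example timestamps are hypothetical and are not drawn from any particular RIS or PACS.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Study:
    """Hypothetical timestamps for one imaging study."""
    completed_at: datetime                 # examination completed
    final_signed_at: datetime              # attending radiologist's final signature
    prelim_at: Optional[datetime] = None   # trainee preliminary report, if any


def rtat_completion_to_final(s: Study) -> timedelta:
    """Study completion to final attending signature."""
    return s.final_signed_at - s.completed_at


def rtat_prelim_to_final(s: Study) -> Optional[timedelta]:
    """Preliminary report to final signature; undefined without a trainee report."""
    if s.prelim_at is None:
        return None
    return s.final_signed_at - s.prelim_at


def rtat_completion_to_first_report(s: Study) -> timedelta:
    """Study completion to the first report visible in PACS (preliminary or final)."""
    candidates = [t for t in (s.prelim_at, s.final_signed_at) if t is not None]
    return min(candidates) - s.completed_at


# A resident-involved study: completed 08:00, preliminary 08:20, final 09:05.
study = Study(
    completed_at=datetime(2015, 3, 13, 8, 0),
    final_signed_at=datetime(2015, 3, 13, 9, 5),
    prelim_at=datetime(2015, 3, 13, 8, 20),
)
for name, fn in [("completion -> final", rtat_completion_to_final),
                 ("prelim -> final", rtat_prelim_to_final),
                 ("completion -> first report", rtat_completion_to_first_report)]:
    print(name, fn(study))
```

For this resident-involved study the three definitions give 65, 45 and 20 min, respectively, so an RTAT target is only comparable across departments when the definition behind it is also known.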

Table 2.

Summary of target report turnaround times by location and modality (% of responding institutions)

| Location and modality | None | ≤15 min | ≤30 min | ≤45 min | ≤60 min | Other | Unsure |
|---|---|---|---|---|---|---|---|
| ED radiographs | 0% | 11.1% | 30.6% | 0% | 30.6% | 22.1% | 5.6% |
| ED CT | 0% | 0% | 27% | 2.5% | 46% | 19% | 5.5% |
| ED MRI | 8% | 0% | 22% | 2.5% | 40.5% | 19% | 8% |
| Inpatient X-ray | 16% | 0% | 8% | 0% | 19% | 48.7% | 5.6% |
| Inpatient CT | 19% | 0% | 5.5% | 2.5% | 19% | 48.5% | 5.5% |
| Inpatient MRI | 19% | 0% | 5.5% | 0% | 21.5% | 45.9% | 8.1% |
| Outpatient X-ray | 16% | 0% | 2.7% | 0% | 10.8% | 57% | 13.5% |
| Outpatient CT | 16% | 0% | 2.7% | 0% | 5.8% | 62% | 13.5% |
| Outpatient MRI | 26% | 0% | 4.5% | 0% | 13% | 54.5% | 2% |

ED, emergency department.

Emergency department turnaround time

Among departments tracking TAT, the goal for radiographs obtained in the ED was none in 0% (n = 0), 15 min or less in 11.1% (n = 4), 30 min or less in 30.6% (n = 11), 45 min or less in 0% (n = 0), 60 min or less in 30.6% (n = 11), other in 22.1% (n = 8) and unsure in 5.6% (n = 2) of institutions (Table 2); 92% (36/39) of participants answered this question. The TAT goal for CT obtained in the ED was none in 0% (n = 0), 15 min or less in 0% (n = 0), 30 min or less in 27% (n = 10), 45 min or less in 2.5% (n = 1), 60 min or less in 46% (n = 17), other in 19% (n = 7) and unsure in 5.5% (n = 2) of institutions; 95% (37/39) of participants answered this question. The TAT goal for MRI obtained in the ED was none in 8% (n = 3), 15 min or less in 0% (n = 0), 30 min or less in 22% (n = 8), 45 min or less in 2.5% (n = 1), 60 min or less in 40.5% (n = 15), other in 19% (n = 7) and unsure in 8% (n = 3) of institutions; 95% (37/39) of participants answered this question.
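A target such as “60 min or less” is usually monitored as the share of studies that meet it. The sketch below is one minimal way to compute that share, together with the median RTAT, from a list of RTAT values in minutes; the function name and sample values are hypothetical, and the 60-min threshold is used only because it was the most common ED CT target in Table 2.

```python
from statistics import median


def share_meeting_target(rtat_minutes: list[float], target_minutes: float) -> float:
    """Percentage of studies whose RTAT is at or below the target."""
    if not rtat_minutes:
        raise ValueError("at least one study is required")
    met = sum(1 for t in rtat_minutes if t <= target_minutes)
    return 100 * met / len(rtat_minutes)


# Hypothetical RTATs (minutes) for five ED CT studies.
ed_ct_rtats = [12, 25, 41, 58, 75]

print(share_meeting_target(ed_ct_rtats, 60))   # 4 of 5 studies within 60 min
print(share_meeting_target(ed_ct_rtats, 30))   # 2 of 5 studies within 30 min
print(median(ed_ct_rtats))
```

Applied to the same five studies, a 60-min target is met 80% of the time and a 30-min target only 40% of the time, which illustrates how strongly the chosen threshold drives the reported compliance.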

Inpatient imaging turnaround time

The TAT goal for radiographs obtained for inpatients was none in 16% (n = 6), 15 min or less in 0% (n = 0), 30 min or less in 8% (n = 3), 45 min or less in 0% (n = 0), 60 min or less in 19% (n = 7), other in 48.7% (n = 18) and unsure in 5.6% (n = 2) of institutions; 95% (37/39) of participants answered this question. The TAT goal for CT obtained for inpatients was none in 19% (n = 7), 15 min or less in 0% (n = 0), 30 min or less in 5.5% (n = 2), 45 min or less in 2.5% (n = 1), 60 min or less in 19% (n = 7), other in 48.5% (n = 18) and unsure in 5.5% (n = 2) of institutions; 95% (37/39) of participants answered this question. The TAT goal for MRI obtained for inpatients was none in 19% (n = 7), 15 min or less in 0% (n = 0), 30 min or less in 5.5% (n = 2), 45 min or less in 0% (n = 0), 60 min or less in 21.5% (n = 8), other in 45.9% (n = 17) and unsure in 8.1% (n = 3) of institutions; 95% (37/39) of participants answered this question.

Routine outpatient imaging

The TAT goal for radiographs obtained for routine outpatient imaging was none in 16% (n = 6), 15 min or less in 0% (n = 0), 30 min or less in 2.7% (n = 1), 45 min or less in 0% (n = 0), 60 min or less in 10.8% (n = 4), other in 57% (n = 21) and unsure in 13.5% (n = 5) of institutions; 95% (37/39) of participants answered this question. The TAT goal for routine outpatient CT was none in 16% (n = 6), 15 min or less in 0% (n = 0), 30 min or less in 2.7% (n = 1), 45 min or less in 0% (n = 0), 60 min or less in 5.8% (n = 2), other in 62% (n = 23) and unsure in 13.5% (n = 5) of institutions; 95% (37/39) of participants answered this question. The TAT goal for routine outpatient MRI was none in 26% (n = 6), 15 min or less in 0% (n = 0), 30 min or less in 4.5% (n = 1), 45 min or less in 0% (n = 0), 60 min or less in 13% (n = 3), other in 54.5% (n = 12) and unsure in 2% (n = 1) of institutions; 59% (23/39) of participants answered this question.

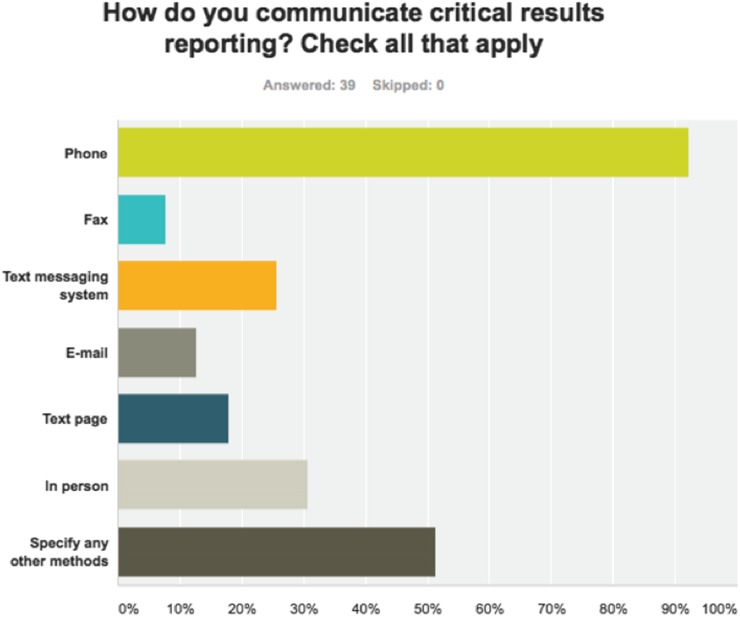

Critical results reporting

Critical results (Figure 1) were delivered to the referring physician by phone at 92% (n = 36) of responding institutions. In-person communication was selected by 30.7% (n = 12) of respondents, a text messaging system by 25.6% (n = 10), text page by 18% (n = 7), fax by 7.7% (n = 3) and another method by 51.3% (n = 20). Respondents could select more than one answer, so these percentages sum to more than 100%. 100% (39/39) of participants responded to this question.

Figure 1.

Methods of critical results reporting utilized by participating institutions.
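Because respondents could choose several communication methods, each percentage uses the number of respondents, not the number of selections, as its denominator. A minimal Python sketch of that tabulation, using hypothetical responses rather than the survey data:

```python
from collections import Counter


def multiselect_percentages(responses: list[set[str]]) -> dict[str, float]:
    """Percentage of respondents selecting each option in a select-all-that-apply item."""
    n_respondents = len(responses)
    if n_respondents == 0:
        raise ValueError("no responses")
    counts = Counter(option for selected in responses for option in selected)
    return {option: 100 * c / n_respondents for option, c in counts.items()}


# Four hypothetical respondents; each set holds the methods one respondent selected.
responses = [
    {"phone"},
    {"phone", "text messaging"},
    {"phone", "in person"},
    {"text messaging"},
]
pcts = multiselect_percentages(responses)
print(pcts)                 # per-option percentages
print(sum(pcts.values()))   # exceeds 100 because of multiple selections
```

Here phone is selected by 75% of respondents, text messaging by 50% and in-person communication by 25%, for a column total of 150%.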

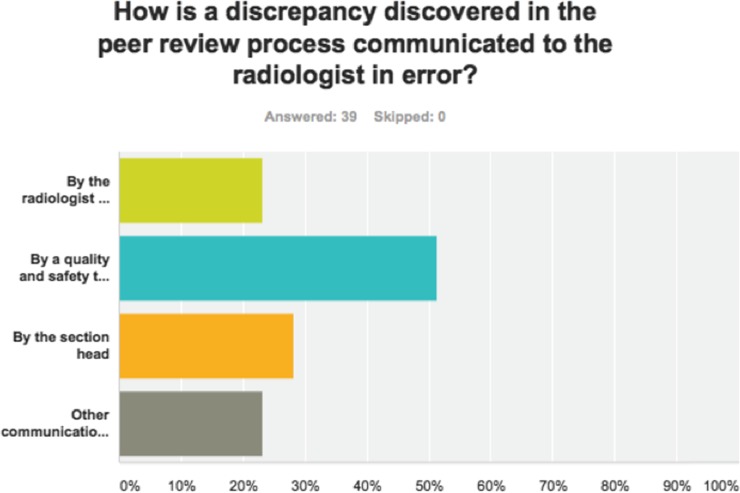

Peer review and the challenging case or missed case conference

Some type of peer review was performed at all institutions. ACR RadPeer was the product used by 56.4% (n = 22) of respondent institutions. No institution surveyed used Radisphere or Insight Health Solutions Radiology Insight peer review. A Primordial PACS plug-in was used by 7.7% (n = 3) of respondent institutions, and 2.6% (n = 1) answered don't know/unsure. Another peer review product was chosen by 46% of respondents. Free-text answers in the “other” category included an in-house tool (several institutions), PeerVue (PeerVue, Sarasota, FL; many institutions), other PACS plug-ins, an Epic electronic health record (EHR) product and the peer review portal in PowerScribe 360. 100% (39/39) of participants responded to this question.

When a discrepancy is discovered during the peer review process, a majority of respondents (51%, n = 20) report that it is communicated to the radiologist in error (Figure 2) by a quality and safety team member. A section chief communicates the error at 28.2% (n = 11) of institutions, and the radiologist who discovered the error while performing the peer review does so at 23% (n = 9). Other communication paths were selected at 23% (n = 9). 100% (39/39) of participants responded to this question.

Figure 2.

Responsibility for communication of the discovered discrepancy.

Individual peer review discrepancy rates are included in yearly evaluations at 61.5% (n = 24) of respondent institutions and are not discussed in formal evaluations at 30.8% (n = 12); 7.7% (n = 3) of respondents answered don't know/unsure. 100% (39/39) of participants responded to this question.

The challenging (missed) case conference was used by 97.4% (n = 38) of respondent institutions and was absent at 2.6% (n = 1). It is held monthly at 46.2% (n = 18) of respondent institutions, quarterly at 28.2% (n = 11) and yearly at 2.6% (n = 1); another frequency was reported at 20.5% (n = 8). 100% (39/39) of participants responded to this question.

Use of patient web portals

A patient web portal, which can give patients direct access to their radiology reports, is used by 92.3% (n = 36) of participating institutions. One institution (2.6%) reported no patient portal, and 5.1% (n = 2) of respondents answered don't know/unsure. 100% (39/39) of participants responded to this question.

Of the institutions with a patient portal, 83.8% (n = 31) report that radiology reports are available on the portal. Three respondents (8.1%) replied that radiology reports were not available on the portal, and 8.1% (n = 3) replied don't know/unsure. 95% (37/39) of participants answered this question.

Decision support tools

A decision support tool to assist referring physicians in ordering the most appropriate imaging examination is being used in 46.1% (n = 18) of respondent institutions. 100% (39/39) of participants responded to this question.

Patient and referring clinician satisfaction surveys

Patient satisfaction was measured using the Press Ganey survey at 81.6% (n = 31) of respondent institutions. No respondents reported using National Research Corporation or The Myers Group to measure patient satisfaction. A departmentally designed survey was used at 34.2% (n = 13) of respondent institutions. One respondent (2.6%) replied don't know/unsure, and 21.1% (n = 8) replied other. 97% (38/39) of participants responded to this question.

Satisfaction surveys are distributed to referring clinicians at 65.8% (n = 25) of respondent institutions. Referring physicians are not surveyed at 31.6% (n = 12) of respondent institutions, and one respondent (2.6%) did not know. 97% (38/39) of participants responded to this question.

Satisfaction surveys were most frequently distributed to referring clinicians yearly (44.1%, n = 15), followed by quarterly (14.7%, n = 5). The response “never” was given by 20% (n = 7), “don't know” by 8.8% (n = 3) and “other” by 11.8% (n = 4). 87% (34/39) of participants responded to this question.

Periodic formal evaluations

Yearly formal evaluations of radiologists by the department chair or section chief were reported at 84.6% (n = 33) of respondent institutions and quarterly evaluations at 5.1% (n = 2). No respondents reported that formal evaluations were never given, and 18% of respondents reported another frequency. 100% (39/39) of participants responded to this question.

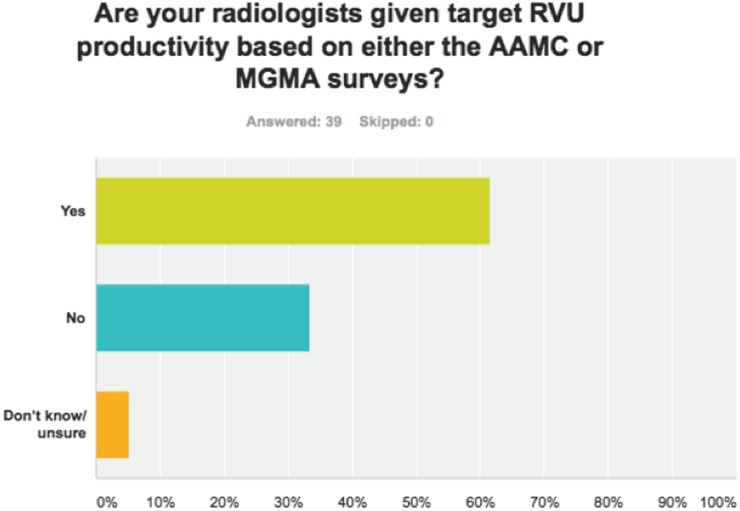

Relative value unit productivity

Radiologists are given target RVU productivity goals at 61.5% (n = 24) of respondent institutions (Figure 3). Thirteen respondents (33.3%) reported no RVU productivity goals, and two (5.1%) answered don't know/unsure. The minimum productivity threshold ranged from the 25th to the 50th percentile of the benchmark survey, and the target ranged from the 50th to the 100th percentile. 100% (39/39) of participants responded to this question.

Figure 3.

Approximately 62% of respondent institutions tracked individual radiologists' relative value unit (RVU) productivity against a national benchmark. AAMC, Association of American Medical Colleges; MGMA, Medical Group Management Association.
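The minimum and target thresholds described above amount to comparing each radiologist's annual RVUs with selected percentiles of a benchmark survey. The Python sketch below shows one way to make that comparison; the benchmark values are invented placeholders, not AAMC or MGMA figures, and the 25th/50th-percentile thresholds are only one combination within the reported ranges.

```python
from bisect import bisect_right

# Hypothetical benchmark: percentile -> annual RVUs. NOT actual AAMC or MGMA data.
BENCHMARK = {25: 6000.0, 50: 7500.0, 75: 9000.0, 90: 10500.0}


def percentile_band(annual_rvus: float, benchmark: dict[int, float]) -> int:
    """Highest benchmark percentile whose RVU threshold is met (0 if below all)."""
    percentiles = sorted(benchmark)
    thresholds = [benchmark[p] for p in percentiles]  # assumed to rise with percentile
    i = bisect_right(thresholds, annual_rvus)
    return percentiles[i - 1] if i > 0 else 0


def rvu_status(annual_rvus: float, minimum_pct: int, target_pct: int,
               benchmark: dict[int, float] = BENCHMARK) -> str:
    """Classify productivity against an institution's minimum and target percentiles."""
    if annual_rvus < benchmark[minimum_pct]:
        return "below minimum"
    if annual_rvus < benchmark[target_pct]:
        return "meets minimum"
    return "meets target"


for rvus in (5000.0, 7000.0, 8000.0):
    print(rvus, percentile_band(rvus, BENCHMARK), rvu_status(rvus, 25, 50))
```

The chosen minimum and target percentiles must appear as keys in the benchmark table; a real implementation would load the full percentile table from whichever survey the institution licenses.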

DISCUSSION

Our study demonstrated similarities in the use of the selected quality and productivity metrics at surveyed academic institutions.

Maintenance of Certification

The MOC guidelines developed by the American Board of Medical Specialties in 2002 were approved by the 24 speciality boards; they include 6 core competencies and 4 components, with time-limited certifications ranging from 7 to 10 years.8 Almost half of the institutions surveyed require participation in MOC as a condition of employment. This requirement affects only radiologists who achieved lifetime board certification before June 2002; those certified after June 2002 received a time-limited certification from the American Board of Radiology and must participate in MOC regardless of departmental policy. Many institutions that do not require MOC may instead include participation as a component in determining financial incentives (bonuses). Some experts question the value of MOC participation,9 but it is currently the best available metric suggesting that a radiologist has up-to-date knowledge and is familiar with recommended quality and safety practices.10 Third-party payers may someday require MOC participation for payment or may reward participating physicians with a more favourable payment scale.11

Hand hygiene

Hand hygiene compliance is one of many quality indicators that can be readily measured in the radiology department, along with patient falls, radiographic contrast extravasation, patient wait times, study availability, radiation dose, wrong studies (patient call-backs) and accurate preparation for imaging (postponed studies). Initiatives to improve hand hygiene have been instituted at many institutions,12 and hand hygiene was one of the key performance indicators listed under patient safety and quality care at Massachusetts General Hospital.2 Hand hygiene compliance is tracked at 76.9% of the institutions surveyed. This metric is easy to track, and improved compliance decreases hospital-acquired infections.
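Observational audits usually report compliance as the fraction of observed hand hygiene opportunities in which hygiene was performed. Because spot audits often observe only a few dozen opportunities, an interval estimate helps when comparing months or units. The sketch below pairs the compliance rate with a Wilson score 95% confidence interval; the choice of the Wilson method is ours, not something reported by the surveyed departments, and the audit counts are hypothetical.

```python
import math


def compliance_with_wilson_ci(compliant: int, opportunities: int, z: float = 1.96):
    """Return (rate, lower, upper) using the Wilson score interval for a proportion."""
    if opportunities <= 0 or not 0 <= compliant <= opportunities:
        raise ValueError("need opportunities > 0 and 0 <= compliant <= opportunities")
    n = opportunities
    p = compliant / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return p, centre - half_width, centre + half_width


# Hypothetical audit: 45 of 50 observed opportunities were compliant.
rate, lower, upper = compliance_with_wilson_ci(45, 50)
print(f"compliance {rate:.0%}, 95% CI {lower:.1%} to {upper:.1%}")
```

With only 50 observations, an observed 90% compliance is compatible with a true rate from roughly the high 70s to the mid 90s, which is why single small audits are better read as trends than as precise values.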

Report turnaround time

The implementation of PACS, electronic reporting systems and voice recognition software has markedly improved radiology RTAT in recent years.13,14 The time to final report signature by an attending radiologist has fallen from several days just over 10 years ago to potentially an average of several minutes today. Many argue that radiology departments create little value until referring physicians have access to the finalized radiology report to guide treatment, and that anything delaying report availability diminishes the value of radiology.14 Some studies, such as stroke imaging or trauma imaging for suspected visceral bleeding, are highly time sensitive; delays can result in increased brain damage or death.

There is legitimate concern, however, that further improvements in these measures beyond the current level of practice may have little impact on patient outcomes but could have a strongly negative effect on resident and fellow training. Authors have suggested that the current focus on RTAT has decreased resident exposure to ED studies and reduced teaching time during resident readouts.15 On the other hand, resident involvement can also potentially improve the quality of care, as each case benefits from the scrutiny of two physicians (i.e. the trainee and the attending radiologist) at the same cost.

Critical results reporting

Effective communication between healthcare providers is essential to the delivery of quality patient care; more than half of all preventable adverse events occurring after hospital discharge are related to poor communication among healthcare providers.16 A critical result may be defined as “a new/unexpected radiologic finding that could result in mortality or significant morbidity if appropriate diagnostic and/or therapeutic follow-up steps are not undertaken”.17,18 In our survey, the most commonly used method of critical results reporting was a phone conversation between the radiologist and the referring physician (92%). Phone conversation and direct person-to-person communication, with documentation in the radiology report, are the currently recommended methods. Direct conversation allows additional clinical history to be communicated, decreases the chance of misinterpretation and builds rapport with the referring physician. Its disadvantage is that it requires a significant amount of the radiologist's time, thereby decreasing RVU productivity.

Electronic methods of communication that verify receipt are faster but lack several of the advantages of direct voice communication. Several academic departments reported using text messaging, text paging, e-mail and fax to communicate critical results. This is not generally recommended, however, as these channels do not necessarily verify that the referring clinician or patient has actually received the message. Legal precedent has attributed equal responsibility to the physician requesting the test, to seek the result, and to the radiologist, to make the result known in a timely way, with greater responsibility shifting to the radiologist when results are time sensitive or critical to preventing serious injury or death.19

Peer review

Peer review uses oversight among radiology colleagues as a means of ensuring quality patient care.20 The Joint Commission requires some form of departmental peer review for accreditation of hospital radiology departments, so, not surprisingly, all respondent institutions participated in some form of peer review. Radiology departments or their parent health systems are free to decide how peer review is performed. ACR RadPeer™ was the peer review product most often used (56.4%) by academic centres in our survey. Tying individual peer review results to a yearly performance review or incentive payment could discourage honest reporting of errors, as radiologists may not want to harm their colleagues, resulting in underreporting. For the same reason, peer review results should be protected from legal discovery. In departments where the radiologist performing the peer review must communicate an interpretive discrepancy directly to the radiologist in error, the reviewer loses anonymity. In a 2014 article, 44% of respondents agreed with the statement that peer review, as performed in our institution using a system similar to RadPeer, is a waste of time.23 Although the peer review process as performed at most institutions is not perfect, we believe that valuable information can be obtained by sampling 2.5% of each radiologist's volume, up to a maximum of 300 cases.21
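The sampling rule mentioned above (2.5% of each radiologist's volume, capped at 300 cases) is simple to operationalize. The Python sketch below shows one way; rounding up to a whole case and drawing cases uniformly at random are our assumptions, and the function names are illustrative.

```python
import random
from typing import Optional, Sequence


def peer_review_sample_size(annual_volume: int, rate_per_mille: int = 25,
                            cap: int = 300) -> int:
    """Cases to review: rate_per_mille/1000 of volume, rounded up, capped at `cap`.

    Integer arithmetic avoids floating-point error in the ceiling (25 per mille = 2.5%).
    """
    if annual_volume < 0:
        raise ValueError("volume cannot be negative")
    return min((annual_volume * rate_per_mille + 999) // 1000, cap)


def draw_peer_review_sample(case_ids: Sequence[str], seed: Optional[int] = None) -> list[str]:
    """Uniform random sample, without replacement, of one radiologist's cases."""
    k = peer_review_sample_size(len(case_ids))
    rng = random.Random(seed)
    return rng.sample(list(case_ids), k)


print(peer_review_sample_size(4_000))    # 2.5% of 4000 cases
print(peer_review_sample_size(20_000))   # 2.5% would be 500, so the cap applies
```

Under this rule, the cap starts to bind at an annual volume of 12,000 cases, so high-volume radiologists have a smaller fraction of their work reviewed.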

Physician errors collected in the peer review process or by some other method can be used for radiologist teaching in the form of challenging (missed) case conferences or morbidity and mortality conferences.20 These conferences allow mistakes to be discussed openly as a learning opportunity for all members of the department or section.21 Most radiology departments surveyed use data obtained during peer review to develop challenging case conferences. The challenging case conference was used by approximately 97% of respondent institutions, most often monthly (46%), followed by quarterly (28%). The underlying premise is that open review and discussion of errors in a group format can lead to a better understanding of error and risk, increase the knowledge base of all practitioners within the group and promote dialogue on possible ways to prevent similar errors in the future. This process must be performed non-judgmentally, and the radiologists who committed the errors are often allowed to remain anonymous during the discussion if they so choose. If blame, embarrassment and fear of punishment are features of the peer review/morbidity and mortality process, one can reasonably expect individual radiologist participation to plummet. As of this writing, active participation in a departmental or institutional peer review process and regular participation (at least 10 per year) in departmental or group conferences focused on patient safety, such as a challenging case conference, satisfy American Board of Radiology MOC Part 4 requirements.22

Patient web portals

The goal of a patient portal is to increase patients' engagement in their own healthcare, and some literature supports the utility of patient portals.23 A patient web portal was available in 92.3% of the academic health systems surveyed. This high percentage is expected, as the Health Information Technology for Economic and Clinical Health (HITECH) Act requires healthcare organizations to offer a patient portal to qualify for "Meaningful Use Stage 2" incentives and to avoid future penalties.23 Radiology reports were available in 83.8% of the systems with patient portals. Many respondents commented that "there is a delay before radiology reports are available on the portal". This delay, or embargo on patient access to radiology reports, most commonly 48–72 h,12 is a typical feature of patient web portals. It gives the referring physician an opportunity to review the results before they are released to the patient, and thus to be the first to discuss them with the patient. Physicians especially value this feature when results include bad news or require substantial explanation for patients to appreciate their significance.
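The embargo described above is essentially a time-based visibility rule. The sketch below assumes a single fixed embargo period counted from report finalization, with the referring physician able to view the report immediately; the function names, role strings and the 72 h default (the upper end of the range cited above) are hypothetical, and real portals may configure embargoes differently, for example by examination type.

```python
from datetime import datetime, timedelta


def patient_release_time(finalized_at: datetime, embargo_hours: int = 72) -> datetime:
    """Earliest time a finalized report appears on the patient portal (hypothetical rule)."""
    if embargo_hours < 0:
        raise ValueError("embargo_hours must be non-negative")
    return finalized_at + timedelta(hours=embargo_hours)


def can_view(role: str, finalized_at: datetime, now: datetime,
             embargo_hours: int = 72) -> bool:
    """Referring physicians see the report at once; patients wait out the embargo."""
    if now < finalized_at:
        return False  # nothing to show before the report is finalized
    if role == "referring_physician":
        return True
    if role == "patient":
        return now >= patient_release_time(finalized_at, embargo_hours)
    raise ValueError(f"unknown role: {role}")


finalized = datetime(2015, 3, 2, 9, 0)
print(patient_release_time(finalized, embargo_hours=48))  # 2015-03-04 09:00:00
print(can_view("patient", finalized, datetime(2015, 3, 3, 9, 0), 48))  # False
print(can_view("referring_physician", finalized, datetime(2015, 3, 2, 10, 0)))  # True
```

Keeping the release time as a pure function of the finalization time makes the embargo easy to audit: the same report always becomes visible to the patient at the same moment.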

Decision support tools

In an effort to decrease duplicate or unnecessary imaging and the associated costs, the Centers for Medicare & Medicaid Services has mandated that, beginning in January 2017, referring clinicians use physician-developed appropriateness criteria when ordering advanced imaging for Medicare patients (as of this writing, this deadline will likely be postponed).24 The most likely form of implementation will be a clinical decision support (CDS) tool integrated with the computerized physician order entry (CPOE) system. Integrating CDS into inpatient CPOE has been shown to increase the overall ACR Appropriateness Criteria scores of advanced imaging requests, an effect more pronounced among primary care physicians than among specialists.24 A CDS tool was in use at approximately 46% of respondent institutions. A well-developed product with a short learning curve and a minimum of extra mouse clicks has the potential to save the government and third-party payers significant money, and to spare patients time and potential harm from unnecessary or incorrect imaging.

Satisfaction surveys

Institutions serving Medicare and Medicaid patients are currently required to distribute patient satisfaction surveys. A patient survey can be very useful in determining where service falls short of patient expectations. However, the required standardized Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) Survey may not be optimal for evaluating imaging services. Because quality-of-care ratings may determine government payments, patient satisfaction surveys have significant influence.25 A departmentally designed survey specific to the medical imaging experience had been implemented at approximately 34% of respondent institutions. Information collected with a well-designed survey distributed by radiology could be instrumental in improving the patient imaging experience. In a competitive market, patients clearly exercise their own preferences about where their imaging is performed.

Physicians recommend particular imaging centres to their patients based on many factors, such as availability of services, speed of interpretation (TAT), accuracy and quality of radiology reports and rapport with the radiologists. Several published articles address the measurement of physician satisfaction with radiologic services through survey data.26,27 Satisfaction surveys were distributed to referring clinicians at approximately 66% of respondent institutions. The needs of referring clinicians differ somewhat from those of patients: this "customer base" values ease of scheduling, rapid and easy access to reports and images, and radiologists who are cheerful and available for consultation.

Periodic formal evaluations

Regular evaluation through a multisource feedback process has proven to be a valuable mechanism for avoiding serious problems with residents.28 Implementing a similar process for attending radiologists may prove useful for evaluating the components of the core competencies as defined in the MOC requirements. A yearly formal assessment of each radiologist could ensure continued demonstration of the characteristics necessary for quality care.29 In 2007, the Joint Commission introduced the Ongoing Professional Practice Evaluation (OPPE), a screening tool for the yearly evaluation of all practitioners who have been granted privileges, intended to identify clinicians who might be delivering an unacceptably low quality of care. The metrics and data to be measured in the OPPE need to be clearly defined in advance. This information is used to determine whether existing privileges should be continued, limited or revoked.30 In our survey, periodic radiologist performance evaluations were most frequently yearly (approximately 85% of respondent institutions), followed by quarterly (5%).

Relative value unit productivity

RVU productivity is the most accurate measure of individual clinical productivity and is proportional to the revenue an individual radiologist generates for the parent organization. In most academic institutions, salary scales and incentive payments are strongly influenced by RVU data. There is widespread concern that this metric is overused in academic departments of radiology, potentially incentivizing radiologists to neglect other highly valued professional activities that do not directly generate revenue, such as resident and medical student teaching, research and participation in multidisciplinary conferences. Radiology departments may choose to decrease academic time to increase each radiologist's RVU productivity, with potential negative consequences for education and research. In their 2013 article, MacDonald et al31 identified six important categories of activities contributing to the workload of the average academic radiologist: interpretation and reporting of imaging studies (35% of time), procedures (23%), resident supervision (15%), clinical conferences and teaching (14%), informal case discussions with referring clinicians (10%) and administrative duties (3%). Some authors describe an academic RVU system that assigns weights to, and creates formulas for assessing productivity in, publications, teaching, administrative and community service and research.32 The Association of American Medical Colleges and the Medical Group Management Association conduct surveys and provide benchmarks of RVU productivity.33 Approximately 62% of respondent institutions tracked individual radiologist RVU productivity against a national benchmark. Expectations in this category varied widely in the QUALMET survey, with minimum acceptable values set between the 25th and 50th percentiles and target performance between the 50th and 100th percentiles.
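Comparing a radiologist's RVUs with percentile-based expectations, as described above, requires converting the department's chosen minimum and target percentiles into RVU thresholds. The sketch below interpolates linearly between benchmark percentile points. The benchmark values are invented placeholders, not AAMC or MGMA data, and the function names and the 25th/75th percentile settings in the example are hypothetical choices within the ranges reported in our survey.

```python
# Hypothetical benchmark table: percentile -> annual work RVUs.
# These numbers are placeholders, NOT actual AAMC or MGMA survey data.
BENCHMARK = {10: 5000.0, 25: 6000.0, 50: 7500.0, 75: 9000.0, 90: 10500.0}


def rvu_at_percentile(percentile: float, benchmark: dict) -> float:
    """Linearly interpolate the RVU value at a percentile within the table's range."""
    points = sorted(benchmark.items())
    for (p_lo, v_lo), (p_hi, v_hi) in zip(points, points[1:]):
        if p_lo <= percentile <= p_hi:
            return v_lo + (v_hi - v_lo) * (percentile - p_lo) / (p_hi - p_lo)
    raise ValueError(f"percentile {percentile} is outside the benchmark table")


def classify_productivity(rvus: float, benchmark: dict,
                          minimum_pct: float = 25, target_pct: float = 50) -> str:
    """Place annual RVUs relative to department-chosen minimum and target percentiles."""
    if minimum_pct > target_pct:
        raise ValueError("minimum percentile must not exceed target percentile")
    minimum = rvu_at_percentile(minimum_pct, benchmark)
    target = rvu_at_percentile(target_pct, benchmark)
    if rvus < minimum:
        return "below minimum"
    if rvus < target:
        return "meets minimum"
    return "meets target"


for rvus in (5500, 8000, 9500):
    print(rvus, classify_productivity(rvus, BENCHMARK, minimum_pct=25, target_pct=75))
# 5500 below minimum
# 8000 meets minimum
# 9500 meets target
```

A 100th-percentile target, the upper end reported in the survey, falls outside this placeholder table and raises an error, which makes a missing benchmark point explicit rather than silently extrapolating it.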

Experience demonstrates that radiologists will change their behaviour to satisfy a metric, which does not necessarily achieve the intended result.34 Care should therefore be taken when selecting quality and productivity metrics. For example, a strong focus on RVU productivity may lead radiologists to spend less time on other important activities, such as resident and medical student teaching, interdepartmental conferences and communication of critical results, all of which are important components of the mission of an academic department of radiology.34,35

In summary, the results of the QUALMET survey suggest many similarities in tracking and utilization of the quality metrics included in our survey. The metrics with the greatest variability were the requirement for MOC participation, the use of a decision support tool, the use of referring physician satisfaction surveys and departmental RVU expectations. As previously reported, use of quality indicators is not a standardized process among academic radiology departments.6 There has been considerable discussion within the radiology literature as to which quality and productivity metrics offer the greatest utility in assessing and improving the quality of radiology departments.2–7 Future work is needed to validate new metrics, ensuring that they reflect the desired behaviours and outcomes that improve quality and value in radiological services, and to develop national guidelines that reduce variability in how these metrics are applied and interpreted. Academic departments of radiology are in a unique position to provide national leadership in this area.

Contributor Information

Eric A Walker, Email: ewalker@hmc.psu.edu.

Jonelle M Petscavage-Thomas, Email: jthomas5@hmc.psu.edu.

Joseph S Fotos, Email: jfotos@hmc.psu.edu.

Michael A Bruno, Email: mbruno@hmc.psu.edu.

REFERENCES

- 1. Deming WE. Out of the crisis. Cambridge, MA: Massachusetts Institute of Technology; 1986.

- 2. Abujudeh HH, Kaewlai R, Asfaw BA, Thrall JH. Quality initiatives: key performance indicators for measuring and improving radiology department performance. Radiographics 2010; 30: 571–80. doi: https://doi.org/10.1148/rg.303095761

- 3. Abujudeh HH, Bruno MA. Quality and safety in radiology. 2012.

- 4. Dunnick NR, Applegate KE, Arenson RL. Quality—a radiology imperative: report of the 2006 Intersociety Conference. J Am Coll Radiol 2007; 4: 156–61. doi: https://doi.org/10.1016/j.jacr.2006.11.002

- 5. Ondategui-Parra S, Bhagwat JG, Zou KH, Gogate A, Intriere LA, Kelly P, et al. Practice management performance indicators in academic radiology departments. Radiology 2004; 233: 716–22.

- 6. Ondategui-Parra S, Erturk SM, Ros PR. Survey of the use of quality indicators in academic radiology departments. AJR Am J Roentgenol 2006; 187: W451–5.

- 7. General Radiology Improvement Database [Internet]. acr.org. [cited 2017 Jan 31]. Available from: http://www.acr.org/~/media/ACR/Documents/PDF/QualitySafety/NRDR/GRID/MeasuresGRID.

- 8. Madewell JE. Lifelong learning and the maintenance of certification. J Am Coll Radiol 2004; 1: 199–203; discussion 204–7.

- 9. Grosch EN. Does specialty board certification influence clinical outcomes? J Eval Clin Pract 2006; 12: 473–81.

- 10. Guiberteau MJ, Becker GJ. Counterpoint: maintenance of certification: focus on physician concerns. J Am Coll Radiol 2015; 12: 434–7. doi: https://doi.org/10.1016/j.jacr.2015.02.005

- 11. Dudley RA. Pay-for-performance research: how to learn what clinicians and policy makers need to know. JAMA 2005; 294: 1821–3.

- 12. Bruno MA, Nagy P. Fundamentals of quality and safety in diagnostic radiology. J Am Coll Radiol 2014; 11: 1115–20. doi: https://doi.org/10.1016/j.jacr.2014.08.028

- 13. Krishnaraj A, Lee JK, Laws SA, Crawford TJ. Voice recognition software: effect on radiology report turnaround time at an academic medical center. AJR Am J Roentgenol 2010; 195: 194–7. doi: https://doi.org/10.2214/AJR.09.3169

- 14. Boland GW, Guimaraes AS, Mueller PR. Radiology report turnaround: expectations and solutions. Eur Radiol 2008; 18: 1326–8. doi: https://doi.org/10.1007/s00330-008-0905-1

- 15. England E, Collins J, White RD, Seagull FJ, Deledda J. Radiology report turnaround time: effect on resident education. Acad Radiol 2015; 22: 662–7. doi: https://doi.org/10.1016/j.acra.2014.12.023

- 16. Roy CL, Poon EG, Karson AS, Ladak-Merchant Z, Johnson RE, Maviglia SM, et al. Patient safety concerns arising from test results that return after hospital discharge. Ann Intern Med 2005; 143: 121–8.

- 17. Khorasani R. Optimizing communication of critical test results. J Am Coll Radiol 2009; 6: 721–3. doi: https://doi.org/10.1016/j.jacr.2009.07.011

- 18. Anthony SG, Prevedello LM, Damiano MM, Gandhi TK, Doubilet PM, Seltzer SE, et al. Impact of a 4-year quality improvement initiative to improve communication of critical imaging test results. Radiology 2011; 259: 802–7. doi: https://doi.org/10.1148/radiol.11101396

- 19. Berlin L. Communicating findings of radiologic examinations: whither goest the radiologist's duty? AJR Am J Roentgenol 2002; 178: 809–15.

- 20. Halsted MJ. Radiology peer review as an opportunity to reduce errors and improve patient care. J Am Coll Radiol 2004; 1: 984–7.

- 21. Eisenberg RL, Cunningham ML, Siewert B, Kruskal JB. Survey of faculty perceptions regarding a peer review system. J Am Coll Radiol 2014; 11: 397–401.

- 22. Participatory quality improvement activities for part 4 [Internet]. theabr.org. [cited 2016 Jun 22]. Available from: http://www.theabr.org/moc-part4-activities.

- 23. Kruse CS, Bolton K, Freriks G. The effect of patient portals on quality outcomes and its implications to meaningful use: a systematic review. J Med Internet Res 2015; 17: e44. doi: https://doi.org/10.2196/jmir.3171

- 24. Moriarity AK, Klochko C, O'Brien M, Halabi S. The effect of clinical decision support for advanced inpatient imaging. J Am Coll Radiol 2015; 12: 358–63. doi: https://doi.org/10.1016/j.jacr.2014.11.013

- 25. Lang EV, Yuh WT, Ajam A, Kelly R, MacAdam L, Potts R, et al. Understanding patient satisfaction ratings for radiology services. AJR Am J Roentgenol 2013; 201: 1190–6; quiz 1196. doi: https://doi.org/10.2214/AJR.13.11281

- 26. Kubik-Huch RA, Rexroth M, Porst R. Referrer satisfaction as a quality criterion: developing a questionnaire for measuring the quality of services provided by a radiology department [In German]. Rofo 2005; 177: 429–34.

- 27. Nielsen GA. Measuring physician satisfaction with radiology services. Radiol Manage 1991; 14: 43–9.

- 28. Borus JF. Recognizing and managing residents' problems and problem residents. Acad Radiol 1997; 4: 527–33.

- 29. Mendiratta-Lala M, Eisenberg RL, Steele JR, Boiselle PM, Kruskal JB. Quality initiatives: measuring and managing the procedural competency of radiologists. Radiographics 2011; 31: 1477–88. doi: https://doi.org/10.1148/rg.315105242

- 30. Steele JR, Hovsepian DM, Schomer DF. The Joint Commission practice performance evaluation: a primer for radiologists. J Am Coll Radiol 2010; 7: 425–30. doi: https://doi.org/10.1016/j.jacr.2010.01.027

- 31. MacDonald SL, Cowan IA, Floyd RA, Graham R. Measuring and managing radiologist workload: a method for quantifying radiologist activities and calculating the full-time equivalents required to operate a service. J Med Imaging Radiat Oncol 2013; 57: 551–7. doi: https://doi.org/10.1111/1754-9485.12091

- 32. Mezrich R, Nagy PG. The academic RVU: a system for measuring academic productivity. J Am Coll Radiol 2007; 4: 471–8.

- 33. Satiani B, Matthews MA, Gable D. Work effort, productivity, and compensation trends in members of the Society for Vascular Surgery. Vasc Endovascular Surg 2012; 46: 509–14. doi: https://doi.org/10.1177/1538574412457474

- 34. Taylor GA. Impact of clinical volume on scholarly activity in an academic children's hospital: trends, implications, and possible solutions. Pediatr Radiol 2001; 31: 786–9.

- 35. Jamadar DA, Carlos R, Caoili EM, Pernicano PG, Jacobson JA, Patel S, et al. Estimating the effects of informal radiology resident teaching on radiologist productivity: what is the cost of teaching? Acad Radiol 2005; 12: 123–8. doi: https://doi.org/10.1016/j.acra.2004.11.006