Abstract

Alzheimer’s Disease (AD) is the most common type of dementia. Identifying correct biomarkers may determine pre-symptomatic AD subjects and enable early intervention. Recently, Multi-task sparse feature learning has been successfully applied to many computer vision and biomedical informatics researches. It aims to improve the generalization performance by exploiting the shared features among different tasks. However, most of the existing algorithms are formulated as a supervised learning scheme. Its drawback is with either insufficient feature numbers or missing label information. To address these challenges, we formulate an unsupervised framework for multi-task sparse feature learning based on a novel dictionary learning algorithm. To solve the unsupervised learning problem, we propose a two-stage Multi-Source Multi-Target Dictionary Learning (MMDL) algorithm. In stage 1, we propose a multi-source dictionary learning method to utilize the common and individual sparse features in different time slots. In stage 2, supported by a rigorous theoretical analysis, we develop a multi-task learning method to solve the missing label problem. Empirical studies on an N = 3970 longitudinal brain image data set, which involves 2 sources and 5 targets, demonstrate the improved prediction accuracy and speed efficiency of MMDL in comparison with other state-of-the-art algorithms.

Keywords: Multi-task, Alzheimer’s Disease, Dictionary Learning

1 Introduction

Alzheimer’s disease (AD) is known as the most common type of dementia. It is a slow progressive neurodegenerative disorder leading to a loss of memory and reduction of cognitive function. Many clinical/cognitive measures such as Mini Mental State Examination (MMSE) and Alzheimer’s Disease Assessment Scale cognitive subscale (ADAS-Cog) have been designed to evaluate a subject’s cognitive decline. Subjects are commonly divided into three different groups: AD, Mild Cognitive Impairment (MCI) and Cognitively Unimpaired (CU), defined clinically based on behavioral and above assessments. It is crucial to predict AD related cognitive decline so an early intervention or prevention becomes possible. Prior research have shown that measures from brain magnetic resonance (MR) images correlate closely with cognitive changes and have great potentials to provide early diagnostic markers to predict cognitive decline presymptomatically in a sufficiently rapid and rigorous manner.

The main challenge in AD diagnosis or prognosis with neuroimaging arises from the fact that the data dimensionality is intrinsically high while only a small number of samples are available. In this regard, machine learning has been playing a pivotal role to overcome this so-called “large p, small n” problem. A dictionary that allows us to represent original features as superposition of a small number of its elements so that we can reduce high dimensional image to a small number of features. Dictionary learning [8] has been proposed to use a small number of basis vectors to represent local features effectively and concisely and help image content analysis. However, most existing works on dictionary learning focused on the prediction of target at a single time point [19] or some region-of-interest [18]. In general, a joint analysis of tasks from multiple sources is expected to improve the performance but remains a challenging problem.

Multi-Task Learning (MTL) has been successfully explored for regression with different time slots. The idea of multi-task learning is to utilize the intrinsic relationships among multiple related tasks in order to improve the prediction performance. One way of modeling multi-task relationship is to assume all tasks are related and the task models are connected to each other [6], or the tasks are clustered into groups [21]. Alternatively, one can assume that tasks share a common subspace [4], or a common set of features [1]. Recently, Maurer et al. [12] proposed a sparse coding model for MTL problems based on the generative methods. In this paper, we proposed a novel unsupervised multi-source dictionary learning method to learn the different tasks simultaneously which utilizes shared and individual dictionaries to encode both consistent and individual imaging features for longitudinal image data analysis.

Although a general unsupervised dictionary learning may overcome the missing label problem to obtain the sparse features, we still need to consider the prediction labels at different time points after we learn the sparse features. A forthright method is to perform linear regression at each time point and determine weighted matrix W separately. However, even when we have the common dictionary which models the relationship among different tasks, if prediction is purely based on linear regression which treats all tasks independently and ignores the useful information reserved in the change along the time continuum, there still exists strong bias to predict future multiple targets clinical scores.

To excavate the correlations among the cognitive scores, several multi-task models were put forward. Wang et al. [14] proposed a sparse multi-task regression and feature selection method to jointly analyze the neuroimaging and clinical data in prediction of the memory performance. Zhang and Shen [17] exploited a l2,1-norm based group sparse regression method to select features that could be used to jointly represent the different clinical status and two clinical scores (MMSE and ADAS-cog). Xiang et al. [16] proposed a sparse regression-based feature selection method for AD/MCI diagnosis to maximally utilize features from multiple sources by focusing on a missing modality problem. However, the clinical scores for many patients are missing at some time points, i.e., the target vector yi may be incomplete and the above methods all failed to model this issue. A simple strategy is to remove all patients with missing target values. It, however, significantly reduces the number of samples. Zhou et al [21] considered multi-task with missing target values in the training process, but the algorithm did not incorporate multiple sources data.

In this paper, we propose a novel integrated unsupervised framework, termed Multi-Source Multi-Target Dictionary Learning (MMDL) algorithm, we utilize shared and individual dictionaries to encode both consistent and changing imaging features along longitudinal time points. Meanwhile, we also formulate different time point clinical score predictions as multi-task learning and overcome the missing target values in the training process. The pipeline of our method is illustrated in Fig. 1. We evaluate the proposed framework on the N = 3970 longitudinal images from Alzheimer’s Disease Neuroimaging Initiative (ADNI) database and use longitudinal hippocampal surface features to predict future cognitive scores. Our experimental results outperform some other state-of-the-art methods and demonstrate the effectiveness of the proposed algorithm.

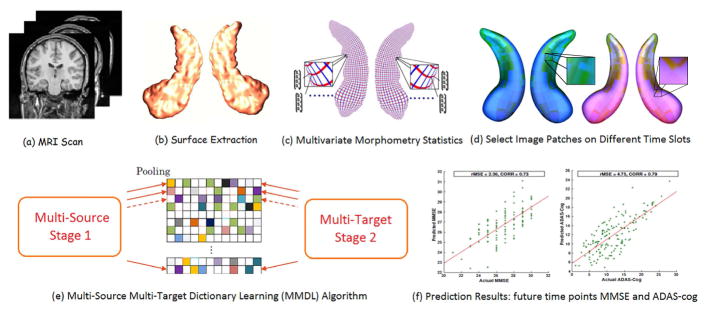

Fig. 1.

The pipeline of our method. We extracted hippocampi from MRI scans (a), then we registered hippocampal surfaces (b) and computed surface multivariate morphometry statistics (c). Image patches were extracted from the surface maps to initialize the dictionary (d) for Multi-Source Multi-Target Dictionary Learning (e). We used features from two time points to predict five future time points MMSE and ADAS-cog (f).

Our main contributions can be summarized into threefold. Firstly, we considered the variance of subjects from different time points (Multi-Source) and proposed an unsupervised dictionary learning method in stage 1 of the MMDL algorithm, in which not only does a patient share features between different time slots but different patients share some common features within the same time point. We also explore the relationship between the shared and individual dictionary in stage 1. Secondly, we use sparse features learned from dictionary learning as an input and multiple future clinical scores as corresponding labels (Multi-Target) to train the multi-task prediction model in stage 2 of the MMDL Algorithm. To the best of our knowledge, it is the first learning model which unifies both multiple source inputs and multiple target outputs with dictionary learning research for brain imaging analysis. Lastly, we also take into account the incomplete label problem. We deal with the missing label problem during the regression process and theoretically prove the correctness of the regression model. Our extensive experimental results on the ADNI dataset show the proposed MMDL achieves faster running speed and lower estimation errors, as well as reasonable prediction scores when comparing with other state-of-the-art algorithms.

2 Multi-Source Multi-Target Dictionary Learning

2.1 Stage 1: Multi-Source Dictionary Learning Stage

Given subjects from T time points: {X1, X2, …, XT}, our goal is to learn a set of sparse codes {Z1, Z2, …, ZT} for each time point where Xt ∈ ℝp×nt, Zt ∈ ℝlt×nt and t ∈ {1, …, T}. p is the feature dimension of each subject, nt is the number of subjects for Xt and lt is the dimension of each sparse code in Zt. When employing the online dictionary learning (ODL) method [11] to learn the sparse codes Zt by Xt individually, we obtain a set of dictionary {D1, …, DT} but there is no correlation between learnt dictionaries. Another solution is to construct the subjects {X1, …, XT} into one data matrix X to obtain the dictionary D. However, only one dictionary D is not sufficient to model the variations among subjects from different time points. To address this problem, we integrate the idea of multi-task learning into the ODL method. We propose a novel online dictionary learning algorithm, called Multi-Source Multi-Target Dictionary Learning (MMDL), to learn the subjects from different time points.

For the subject matrix Xt of a particular time point, MMDL learns a dictionary Dt and sparse codes Zt. Dt is composed of two parts: Dt = [D̂t, D̄t] where D̂t ∈ ℝp×l̂, D̄t ∈ ℝ p×l̄t and l̂ + l̄t = lt. D̂t is the common dictionary among all the learnt dictionaries {D1, …, DT} while D̄t is different from each other and only learnt from the corresponding matrix Xt. Therefore, objective function of MMDL can be reformulated as follows:

| (1) |

where Ψt = {Dt ∈ ℝp×lt : ∀j ∈ 1, …, lt, ||[Dt]j ||2 ≤ 1} (t = 1, 2, ⋯ , T ) and [Dt]j is the jth column of Dt.

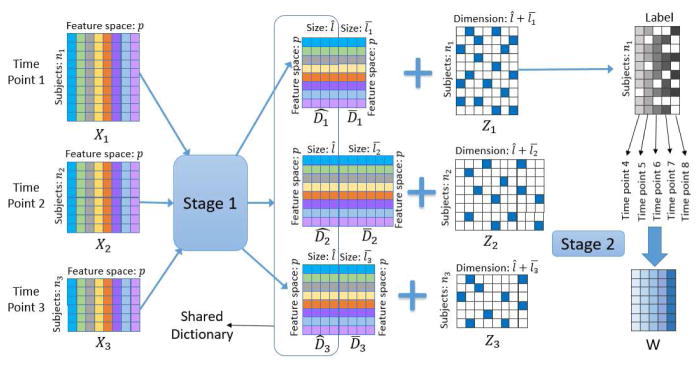

Fig. 2 illustrates the framework of MMDL with subjects of ADNI from three different time points which represents as X1, X2 and X3, respectively. Through the multi-source dictionary learning stage of MMDL, we obtain the dictionary and sparse codes for subjects from each time point t: Dt and Zt. In Stage 1, a dictionary Dt is composed by a shared part D̂t and an individual part D̄t. In this example, D̂1, D̂2 and D̂3 are the same. For the individual part of dictionaries, MMDL learns different D̄t only from the corresponding matrix Xt. We vary the number of columns l̄t in D̄t to introduce the variant in the learnt sparse codes Zt. As a result, the feature dimensions of learnt sparse codes matrix Zt are different from each other. Then we employ the max-pooling [2] method to extract the features and use extracted features to perform the regression across different time points.

Fig. 2.

Illustration of the learning process of MMDL on ADNI datasets from multiple different time points to predict multiple future time points clinical scores.

The initialization of dictionaries in MMDL is critical to the whole learning process. We propose a random patch method to initialize the dictionaries from different time points. The main idea of the random patch method is to randomly select l image patches from n subjects {x1, x2, …, xn} to construct D where D ∈ ℝp×l. It is a similar way to perform the random patch approach in MMDL. In MMDL, the way we initialize D̂t is to randomly select l̂ subjects from subjects across different time points {X1, ⋯ , XT} to construct it. For the individual part of each dictionary, we randomly select l̄ subjects from the corresponding matrix Xt to construct D̄t. After initializing dictionary Dt for each time point, we set all the sparse codes Zt to be zero at the beginning. For each sample Xt at t-th time point, Xt ∈ ℝp×nt.

2.2 Stage 2: Multi-Target Learning with Missing Label

In the longitudinal AD study, we measure the cognitive scores of selected patients at multiple time points. Instead of considering the prediction of cognitive scores at a single time point as a regression task, we formulate the prediction of clinical scores at multiple future time points as a multi-task regression problem. We employ multi-task regression formulations in place of solving a set of independent regression problems since the intrinsic temporal smoothness information among different tasks can be incorporated into the model as prior knowledge. However, the clinical scores for many patients are missing at some time points, especially for 36 and 48 months ADNI data. It is necessary to formulate a multitask regression problem with missing target values to predict clinical scores.

In this paper, we use a matrix Θ ∈ ℝmt×nt to indicate missing target values, where Θi,j = 0 if the target value of label Yt(i, j) is missing and Θi,j = 1 otherwise. Give the sparse codes {Z1, …, ZT} and corresponding labels {Y1, …, YT} from different times where Yt ∈ ℝmt×nt, we formulate the multi-target learning stage with missing target values as:

| (2) |

Algorithm 1.

Multi-Source Multi-Target Dictionary Learning (MMDL)

| Input: Samples and corresponding labels from different time points: {X1, X2, .....XT} and {Y1, Y2, .....YT} | |

| Output: The model for different time points: {W1, …, WT}. | |

| 1: | Stage 1: Multi-Source Dictionary Learning |

| 2: | for k = 1 to κ do |

| 3: | For each image patch xt(i) from sample Xt, i ∈ {1, …, nt} and t ∈ {1, …, T}. |

| 4: | Update . |

| 5: | Update and index set by a few steps of CCD: |

| 6: | . |

| 7: | Update the D̂t and D̄t by one step SGD: |

| 8: | . |

| 9: | Normalize and based on the index set . |

| 10: | Update the shared dictionary . |

| 11: | end for |

| 12: | Obtain the learnt dictionaries and sparse codes: {D1, …, DT}, {Z1, …, ZT}. |

| 13: | Stage 2: Multi-Target Regression with incomplete label |

| 14: | for t = 1 to T do |

| 15: | Given the jth column Yt(j) in Yt, for the jth model wt(j) in Wt |

| 16: | |

| 17: | end for |

Although the Eqn. 2 is associated with missing values on the labels, we show that it has a close form and present the theoretical analysis of stage 2 as follows:

Theorem

For the data matrix pair (Zt, Yt), we denote the jth row’s labels Ỹt(j) in Yt. We use and Ỹt(j) to represent the remaining datasets after removing the missing value in Yt(j). The problem of (Eqn. 2) can decomposed as the following equation:

| (3) |

Proof

Eqn (3) is known the Ridge regression [7]. To optimize the problem, we calculate the gradient and set the gradient to be zero. Then we can get the optimal wt(j) by the following steps:

After solving wt(j) for every time point where j ∈ {1, …, mt}, we can obtain the learnt model {W1, …, WT} to predict the clinical scores.

Our MMDL algorithm can be summarized into Algorithm 1. k denotes the epoch number where k ∈ {1, …, κ}. Φ represents the shared part of each dictionary Dt which is initialized by the random patch method. For each image patch xt(i) extracted from Xt, we learn the i-th sparse code from Zt by several steps of Cyclic Coordinate Descent (CCD) [3]. Then we use learnt sparse codes to update the dictionary and by one step Stochastic Gradient Descent (SGD)[20]. Since is very sparse, we use the index set to record the location of non-zero entries in to accelerate the update of sparse codes and dictionaries. Φ is updated by the end of the k-th iteration to ensure is the same part among all the dictionaries.

2.3 Updating the sparse codes

After we pick an image patch xt(i) from the sample Xt at the time point t, we fix the dictionary and update the sparse codes by following the ODL method. Then the optimization problem we need to solve becomes the following equation:

| (4) |

It is known as the Lasso problem [13]. Coordinate descent [3] is known as one of the state-of-the-art methods for solving this problem. In this study, we perform the CCD to optimize Eqn (4). Empirically, the iteration may take thousands of steps to converge, which is time-consuming in the optimization process of dictionary learning. However, we observed that after a few steps, the support of the coordinates, i.e., the locations of the non-zero entries in zt(i), becomes very accurate, usually after less than ten steps. In this study, we perform P steps CCD to generate the non-zero index set , recording the non-zero entry of . Then we perform S steps CCD to update the sparse codes only on the non-zero entries of , accelerating the learning process significantly. SCC [9, 10] employs a similar strategy to update the sparse codes in a single task. For the multi-task learning, we summarize the updating rules as follows:

Perform P steps CCD to update the locations of the non-zero entries and the model zt(i)k+1.

Perform S steps CCD to update the zt(i)k+1 in the index of .

In (a), for each step CCD, we will pick up j-th coordinate to update the model zt(i)j and non-zero entries, where j ∈ {1, …, lt}. We perform the update from the 1st coordinate to the lt-th coordinate. For each coordinate, we calculate the gradient g based on the objective function (4) then update the model based on g. The calculation of g and follows the equations:

| (5) |

| (6) |

where Ω is a sparse matrix multiplication function that has three input parameters. Take Ω(A, b, I) as an example, A denotes a matrix, b is a vector and I is an index set that records the locations of non-zero entries in b. The returning value of function Ω is defined as: Ω(A, b, I) = Ab. When multiplying A and b, we only manipulate the non-zero entries of b and corresponding columns of A based on the index set I, speeding up the calculation by utilizing the sparsity of b. Γ is the soft thresholding shrinkage function [5] and the definition of Γ is given by:

| (7) |

In the end of (a), we count the non-zero entries in and store the nonzero index in . In (b), we perform S steps CCD by only considering the non-zero entries in . As a result, for each index μ in , we calculate the gradient g and update the by:

| (8) |

| (9) |

Since we only focus on the non-zero entries of the model and P is less than 10 iteration and S is a much larger number, we accelerate the learning process of sparse codes significantly.

2.4 Updating the dictionaries

We update the dictionaries by fixing the sparse codes and updating the current dictionaries. Then, the optimization problem becomes as follow:

| (10) |

After we update the sparse codes, we have already known the non-zero entries of . Another key insight of MMDL is that we just need to focus on updating the non-zero entries of the dictionaries but not all columns of the dictionaries, and it accelerates the optimization dramatically. For example, when we update the i-th column and j-th row’s entry of the dictionary D, the gradient of Dj,i is set to be . If the i-th entry of z is equal to zero, the gradient would be zero. As a result, we do not need to update the i-th column of the dictionary D. The learning rate is set to be an approximation of the inverse of the Hessian matrix , which is updated by the sparse codes in k-th iteration. In the beginning, we update the Hessian matrix by:

| (11) |

We perform one step SGD to update the dictionaries: and . To speed up the computation, we use a vector to store the information Dz − x:

| (12) |

For entry of dictionary in the μ-th column and j-th row, the procedure of learning dictionaries take the form of 1

| (13) |

where μ is the non-zero entry stored in . For the μ-th column of dictionary, we set the learning rate as the inverse of the diagonal element of the Hessian matrix, which is Due to Dt ∈ Ψt in equation (1), it is necessary to normalize the dictionaries and after updating them. We can perform the normalization on the corresponding columns of non-zero entries from because the dictionaries updating only occurs on these columns. Utilizing the non-zero information from can accelerate the whole learning process significantly.

3 Experiments

3.1 Experimental Setting

We studied multiple time points structural MR Imaging from ADNI baseline (837) and 6-month (733) datasets. The responses are the MMSE and ADAS-cog coming from 5 different time points: M12, M18, M24, M36 and M48. Thus, we learned a total of 3970 images which combines 2 sources and 5 targets. The sample sizes corresponding to 5 targets are 728, 326, 641, 454 and 251. For the experiments, we used hippocampal surface multivariate statistics [15] as learning features, which is a 4 × 1 vector on each vertex of 15000 vertices on every hippocampal surface.

We built a prediction model for the above datasets using MMDL algorithm. To train the prediction models, 1102 patches of size 10 × 10 are extracted from surface mesh structures and each patch dimension is 400. The model was trained on an Intel(R) Core(TM) i7-6700 K CPU with 4.0GHz processors, 64 GB of globally addressable memory and a single Nvidia GeForce GTX TITAN X GPU. In the experimental setting of Stage 1 in MMDL, the sparsity λ = 0.1. Also, we selected 10 epochs with a batch size of 1 and 3 iterations of CCD (P is set to be 1 and S is 3). When the dictionaries and sparse codes were learned, Max-Pooling was used to generate features for annotation and get a 1 × 1000 vector feature for each images. In the Stage 2, 5-fold cross validation is used to select model parameters ξ in the training data (between 10−3 and 103).

In order to evaluate the model, we randomly split the data into training and testing sets using a 9:1 ratio and used 10-fold cross validation to avoid data bias. Lastly, we evaluated the overall regression performance using weighted correlation coefficient (wR) and root mean square error (rMSE) for task-specific regression performance measures. The two measures are defined as . For wR, Yi is the ground truth of target of task i and Ŷi is the corresponding predicted value, Corr is the correlation coefficient between two vectors and ni is the number of subjects of task i. For each task of rMSE, y and n is the ground truth of target and the number of subjects and ŷ is the corresponding prediction. The smaller rMSE, the bigger wR mean the better results. We report the mean and standard deviation based on 50 iterations of experiments on different splits of data.

We compared MMDL with multiple state-of-the-art methods, ODL-L: the single-task online dictionary learning [11] followed by Lasso, L21: the multi-task method called L2,1 norm regularization with least square loss [1]. TGL: the disease multi-task progression model called Temporal group Lasso [21], as well as Ridge and Lasso. For the parameters selection, we used the same method with the experimental setting in our stage 2.

3.2 Experimental Results

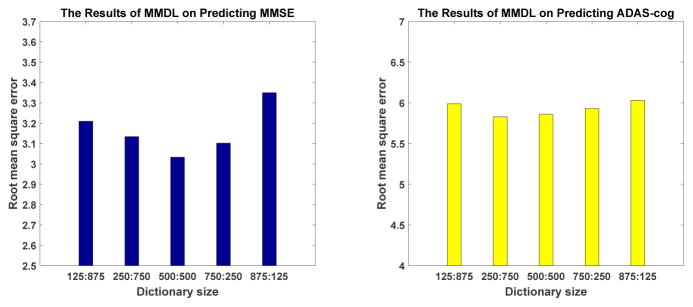

The Size of Common Dictionaries in MMDL

In Stage 1 of MMDL, the common dictionary is assumed to be shared by different tasks. It is necessary to evaluate what is an appropriate size of such common dictionary. Therefore, we set the dictionary size to be 1000 and partitioned the dictionary by different proportions: 125:875, 250:750,500:500, 750:250 and 875:125, where the left number is the size of common dictionary while the right one is the size of individual dictionary for each task. Fig. 3 shows the results of rMSE of MMSE and ADAS-cog prediction. As it shows in Fig. 3, the rMSE of MMSE and ADAS-Cog are lowest when we split the dictionary by half and a half. It means the both of common and individual dictionaries are of equal importance during the multi-task learning. In all experiments, we use the split of 500:500 as the size of common and individual dictionaries, the dimension of each sparse code in MMDL is 1000.

Fig. 3.

Comparison of rMSE performance by varying the size of common dictionary.

Time Efficiency Comparison

We compare the efficiency of our proposed MMDL with the state-of-the-art online dictionary learning (ODL). In this experiment, we focus on the single batch size setting, that is, we process one image patch in each iteration. We vary the dictionary size as: 500, 1000 and 2000. For MMDL, the ratio between the common dictionary and the individual parts is 1:1. We report the results in Table 1. We observe that the proposed MMDL use less time than ODL. When the size of dictionary are increasing, MMDL is more efficient and has a higher speedup compared to ODL.

Table 1.

Time comparisons of MMDL and ODL by varying dictionary size.

| Dictionary Size | MMDL | ODL |

|---|---|---|

| 500 | 1.74 hour | 8.84 hour |

| 1000 | 3.34 hour | 21.95 hour |

| 2000 | 6.93 hour | 49.90 hour |

Performance Comparison

We report the results of MMDL and other methods on the prediction model of MMSE with ADNI group in Table 2. The proposed approach MMDL outperformed ODL-L, Lasso and Ridge, in terms of both rMSE and correlation coefficient wR on four different time points. The results of Lasso and Ridge are very close while sparse coding methods are superior to them. For sparse coding models, we observe that MMDL obtained a lower rMSE and higher correlation result than traditional sparse coding method ODL-L since we consider the correlation between different time slots for different tasks and the relationship with different time points on the same patient among all tasks. We also notice that the proposed MMDL’s significant accuracy improvement for later time points. This may be due to the data sparseness in later time points, as the proposed sparsity-inducing models are expected to achieve better prediction performance in this case.

Table 2.

The Prediction Results of MMSE on Whole Dataset.

| Methods | wR | M12 | M18 | M24 | M36 | M48 |

|---|---|---|---|---|---|---|

| Lasso | 0.40±0.09 | 4.04±0.77 | 3.46±0.97 | 5.53±0.86 | 4.39±0.74 | 4.73±1.49 |

| Ridge | 0.41±0.07 | 4.26±0.56 | 3.56±0.93 | 5.05±0.54 | 4.21±0.47 | 3.62±0.91 |

| L21 | 0.57±0.01 | 3.32±0.63 | 4.75±0.75 | 4.64±0.88 | 4.08±1.01 | 3.11±1.05 |

| ODL-L | 0.63±0.08 | 2.99±0.63 | 2.88±0.68 | 4.29±0.84 | 3.62±1.45 | 2.93±1.07 |

| TGL | 0.70±0.05 | 2.73±0.72 | 4.00±1.31 | 4.00±0.64 | 3.19±1.38 | 2.60±1.42 |

| MMDL | 0.73±0.02 | 2.61±0.55 | 3.37±1.01 | 3.66±0.78 | 2.73±1.09 | 2.52±1.20 |

We follow the same experimental procedure in the MMSE study and explore the prediction model by ADAS-cog scores. The prediction performance results are shown in Table 3. We can observe that the best performance of predicting scores of ADAS-Cog is achieved by MMDL for four time points.

Table 3.

The Prediction Results of ADAS-cog on Whole Dataset.

| Methods | wR | M12 | M18 | M24 | M36 | M48 |

|---|---|---|---|---|---|---|

| Lasso | 0.49±0.05 | 6.81±1.03 | 6.87±0.74 | 7.62±0.87 | 8.08±1.39 | 6.55±1.34 |

| Ridge | 0.46±0.07 | 7.68±0.96 | 6.89±1.69 | 7.84±1.54 | 8.59±0.62 | 6.64±1.58 |

| L21 | 0.53±0.07 | 6.40±0.51 | 6.95±0.88 | 8.07±0.67 | 8.00±1.04 | 5.92±0.60 |

| ODL-L | 0.53±0.05 | 5.65±0.73 | 4.97±0.67 | 7.30±0.77 | 7.25±0.69 | 5.56±1.22 |

| TGL | 0.72±0.04 | 5.52±1.15 | 5.70±0.53 | 6.85±1.06 | 6.36±1.22 | 5.73±0.61 |

| MMDL | 0.77±0.02 | 5.18±0.88 | 4.64±1.12 | 6.76±1.35 | 6.78±1.54 | 5.27±1.76 |

Comparing with L21, after MMDL dealing with missing label, the results more linear, reasonable and accurate. Due to the dimension of M36 and M48 is too small, it is hard to learn a complete model. TGL also considered the issue of missing labels, however, MMDL still achieved the better results because MMDL incorporates multiple sources data and uses common and individual dictionaries. Although the result of MMDL had bias, MMDL still achieved the best result compared with the other five methods on predicting both MMSE and ADAS-cog, which shows our method is more efficient about dealing with missing data.

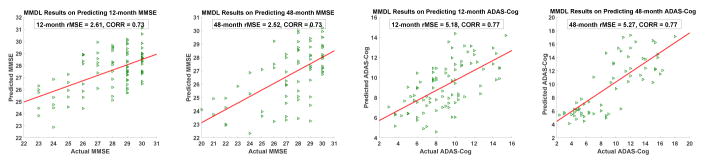

We show the scatter plots for the predicted values versus the actual values for MMSE and ADAS-Cog on the M12 and M48 in Fig. 4. In the scatter plots, we see the predicted values and actual clinical scores have a high correlation. The scatter plots show that the prediction performance for ADAS-Cog is better than that of MMSE.

Fig. 4.

Scatter plots of actual MMSE and ADAS-Cog versus predicted values on M12 and M48 by using MMDL.

4 Conclusion and Future Work

In this paper, we propose a novel Multi-Source Multi-Target Dictionary Learning for modeling cognitive decline, which allows simultaneous selections of a common set of biomarkers for multiple time points and specific sets of biomarkers for different time points using dictionary learning. We consider predicting future clinical scores as multi-task and deal with the missing labels problem. The effectiveness of the proposed progression model is supported by extensive experimental studies. The experimental results demonstrate that the proposed progression model is more effective than other state-of-the-art methods. In future, we will extend our algorithm to multi-modality data and propose more completely multiple sources with multiple targets algorithms.

Acknowledgments

The research was supported in part by NIH (R21AG049216, RF1AG051710, U54EB020403) and NSF (DMS-1413417, IIS-1421165).

References

- 1.Argyriou A, Evgeniou T, Pontil M. Convex multi-task feature learning. Machine Learning. 2008;73(3):243–272. [Google Scholar]

- 2.Boureau YL, Ponce J, LeCun Y. A theoretical analysis of feature pooling in visual recognition. Proceedings of the 27th Annual ICML. 2010:111–118. [Google Scholar]

- 3.Canutescu AA, Dunbrack RL. Cyclic coordinate descent: A robotics algorithm for protein loop closure. Protein science. 2003;12(5):963–972. doi: 10.1110/ps.0242703. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Chen J, et al. A convex formulation for learning shared structures from multiple tasks. Proceedings of the 26th Annual ICML; ACM; 2009. pp. 137–144. [Google Scholar]

- 5.Combettes PL, Wajs VR. Signal recovery by proximal forward-backward splitting. Multiscale Modeling & Simulation. 2005;4(4):1168–1200. [Google Scholar]

- 6.Evgeniou T, Micchelli CA, Pontil M. Learning multiple tasks with kernel methods. Journal of Machine Learning Research. 2005:615–637. [Google Scholar]

- 7.Hoerl AE, Kennard RW. Ridge regression: Biased estimation for nonorthogonal problems. Technometrics. 1970;12(1):55–67. [Google Scholar]

- 8.Lee H, Battle A, Raina R, Ng AY. Advances in neural information processing systems. 2006. Efficient sparse coding algorithms; pp. 801–808. [Google Scholar]

- 9.Lin B, et al. Stochastic coordinate coding and its application for drosophila gene expression pattern annotation. 2014 arXiv preprint arXiv:1407.8147. [Google Scholar]

- 10.Lv J, et al. MICCAI. Springer; 2015. Modeling task fmri data via supervised stochastic coordinate coding; pp. 239–246. [Google Scholar]

- 11.Mairal J, Bach F, Ponce J, Sapiro G. Online dictionary learning for sparse coding. Proceedings of the 26th Annual ICML; ACM; 2009. pp. 689–696. [Google Scholar]

- 12.Maurer A, Pontil M, Romera-Paredes B. Sparse coding for multitask and transfer learning. Proceedings of the 26th Annual ICML 2013; Atlanta, GA, USA. 16–21 June 2013; 2013. pp. 343–351. [Google Scholar]

- 13.Tibshirani R. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society. Series B (Methodological) 1996:267–288. [Google Scholar]

- 14.Wang H, et al. ICCV. IEEE; 2011. Sparse multi-task regression and feature selection to identify brain imaging predictors for memory performance; pp. 557–562. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Wang Y, et al. Surface-based TBM boosts power to detect disease effects on the brain: an N=804 ADNI study. Neuroimage. 2011 Jun;56(4):1993–2010. doi: 10.1016/j.neuroimage.2011.03.040. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Xiang S, et al. Bi-level multi-source learning for heterogeneous block-wise missing data. NeuroImage. 2014;102:192–206. doi: 10.1016/j.neuroimage.2013.08.015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Zhang D, Shen D, Initiative ADN, et al. Multi-modal multi-task learning for joint prediction of multiple regression and classification variables in alzheimer’s disease. Neuroimage. 2012;59(2):895–907. doi: 10.1016/j.neuroimage.2011.09.069. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Zhang J, et al. MICCAI. Springer; 2016. Hyperbolic space sparse coding with its application on prediction of Alzheimer’s disease in mild cognitive impairment; pp. 326–334. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Zhang J, et al. Applying sparse coding to surface multivariate tensor-based morphometry to predict future cognitive decline. Biomedical Imaging (ISBI), 2016 IEEE 13th International Symposium on; IEEE; 2016. pp. 646–650. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Zhang T. Solving large scale linear prediction problems using stochastic gradient descent algorithms. Proceedings of the 25th Annual ICML; ACM; 2004. p. 116. [Google Scholar]

- 21.Zhou J, Liu J, Narayan VA, Ye J. Modeling disease progression via fused sparse group lasso. Proceedings of the 18th ACM SIGKDD international conference on Knowledge discovery and data mining; ACM; 2012. pp. 1095–1103. [DOI] [PMC free article] [PubMed] [Google Scholar]