Abstract

There is clear evidence for cross-modal cortical reorganization in the auditory system of post-lingually deafened cochlear implant (CI) users. A recent report suggests that moderate sensorineural hearing loss is already sufficient to initiate corresponding cortical changes. To what extent these changes are deprivation-induced or related to sensory recovery is still debated. Moreover, the influence of cross-modal reorganization on CI benefit is also still unclear. While reorganization during deafness may impede speech recovery, reorganization also has beneficial influences on face recognition and lip-reading. As CI users were observed to show differences in multisensory integration, the question arises whether cross-modal reorganization is related to audio-visual integration skills. The current electroencephalography study investigated cortical reorganization in experienced post-lingually deafened CI users (n = 18), untreated mild to moderately hearing impaired individuals (n = 18) and normal hearing controls (n = 17). Two measures were obtained: cross-modal activation of the auditory cortex in response to human faces, estimated by means of EEG source localization, and audio-visual integration, quantified with the McGurk illusion. CI users revealed stronger cross-modal activations compared to age-matched normal hearing individuals. Furthermore, CI users showed a relationship between cross-modal activation and audio-visual integration strength. This may further support a beneficial relationship between cross-modal activation and daily-life communication skills that may not be fully captured by laboratory-based speech perception tests. Interestingly, hearing impaired individuals showed behavioral and neurophysiological results that were numerically between the other two groups, and they showed a moderate relationship between cross-modal activation and the degree of hearing loss. This further supports the notion that auditory deprivation evokes a reorganization of the auditory system even at early stages of hearing loss.

Abbreviations: AV, audio-visual; CI, cochlear implant; ICA, independent component analysis; MHL, mild to moderate hearing loss; NH, normal hearing; ROI, region of interest

Keywords: Audio-visual integration, Cochlear-implant users, Moderately hearing impaired individuals, Cross-modal reorganization, McGurk effect

Highlights

- CI users activate the auditory cortex in response to human faces.
- Cross-modal reorganization indicates audio-visual integration in CI users.
- CI users show a strong bias to the visual component of audio-visual speech.
- Hearing impaired individuals show evidence for an onset of cortical reorganization.
- Hearing impaired individuals show stronger audio-visual integration compared to controls.

1. Introduction

Speech in real-world human communication is typically based on the integration of information from multiple sensory modalities (Campbell, 2008, Driver and Noesselt, 2008, Rosenblum, 2008). It is well known that visual information during audio-visual (AV) speech perception can substantially improve speech understanding (Campbell, 2008, Grant and Seitz, 2000, Remez, 2012, Ross et al., 2007, Sumby and Pollack, 1954). For hearing impaired individuals, visual speech information may be particularly important, as it facilitates participation in daily-life conversations. Individuals who are severely hearing impaired can nowadays regain parts of their hearing with cochlear implants (CI) (Moore and Shannon, 2009). Within some weeks after implantation, most post-lingually deafened CI users appear to adapt reasonably well to the new electrical input (Lenarz et al., 2012, Pantev et al., 2006, Sandmann et al., 2015, Suarez et al., 1999, Wilson and Dorman, 2008). Furthermore, they seem to integrate AV stimuli efficiently after sensory restoration with a CI (Moody-Antonio et al., 2005, Rouger et al., 2007). Nevertheless, patterns of cortical reorganization that developed during sensory deprivation may influence auditory as well as audio-visual processing after sensory restoration. The aim of the present study was to better understand how cortical patterns of reorganization in hearing impaired individuals relate to audio-visual speech skills.

There is clear evidence for cross-modal cortical reorganization in the auditory and the visual system in post-lingually deaf CI users (Giraud et al., 2001a, Giraud et al., 2001b, Rouger et al., 2012, Sandmann et al., 2012, Stropahl et al., 2015, Chen et al., 2016). Similar patterns may even exist in individuals with modest levels of hearing loss (Campbell and Sharma, 2014). A seminal study demonstrated a causal relationship between auditory cortex cross-modal activation and supranormal visual performance in deaf cats (Lomber et al., 2010). However, in humans, it is still difficult to disentangle deprivation- versus CI-adaptation-induced patterns of cortical reorganization, which would be ideally addressed with a prospective longitudinal study covering different stages of auditory deprivation. Similarly, the functional purpose of cortical reorganization is not well understood (for a review, see Stropahl et al. (2017)). On the one hand, activation of auditory cortex by visual processing seems to impede speech perception with a CI (Doucet et al., 2006, Lee et al., 2001, Sandmann et al., 2012). Accordingly, this pattern of cross-modal take-over has been termed maladaptive for CI hearing restoration. On the other hand, by focusing on face processing, we found that auditory cortex activation to visually presented faces is positively related to face recognition and lip-reading performance. This pattern seems to be clearly adaptive for daily-life communication with a CI (Stropahl et al., 2015). Our result fits well with observations showing that cortical reorganization following hearing deprivation is not limited to the auditory cortex. Cortical reorganization in the visual cortex appears to positively influence speech perception recovery after implantation (Giraud et al., 2001a, Giraud et al., 2001b, Chen et al., 2016). Moreover, a study observed a reorganization of networks of visual and audiovisual speech to potentially support a more efficient integration of audio-visual speech after sensory recovery (Rouger et al., 2012). It can be concluded that cross-modal compensatory changes during hearing deprivation, and possibly during CI restoration as well, take place in both visual and auditory sensory systems. Given that post-lingually deafened CI users show a stronger integration of visual and auditory speech than normal hearing individuals (Barone and Deguine, 2011, Cappe et al., 2009, Desai et al., 2008, Rouger et al., 2007, Rouger et al., 2008, Rouger et al., 2012, Tremblay et al., 2010), it is tempting to conclude that AV speech processing may be related to cross-modal patterns of auditory cortical reorganization.

In this study, we investigated the possible relationship between auditory cross-modal reorganization and AV integration skills. We used the McGurk illusion to measure audio-visual speech skills (McGurk and Macdonald, 1976). The illusion occurs if the visual speech of a talker speaking a syllable (e.g. ‘Ga’) is simultaneously presented with an incongruent auditory syllable (e.g. ‘Ba’). The person seeing the incongruent AV combination typically perceives neither the visual nor the auditory component of the AV token but a fusion of the two components (e.g. ‘Da’). Those fusion percepts reflect audio-visual integration (MacDonald and McGurk, 1978, McGurk and Macdonald, 1976). To test the hypothesis of a relationship between cross-modal take-over and AV speech skills, we conducted an EEG study and compared post-lingually deafened CI users, mild to moderately hearing impaired individuals and normal hearing controls. Using EEG source localization, auditory cross-modal activation to the face-selective N170 component (Bentin et al., 1996, Bötzel and Grüsser, 1989, Rossion and Jacques, 2008) and AV integration based on the McGurk paradigm were measured. We hypothesized a greater amount of visual take-over, that is, more auditory cortex activation for faces presented in silence, in CI users compared to age-matched normal hearing controls. We also investigated the relationship between the amount of cortical reorganization and the amount of sensory deprivation and therefore included a third group of mild to moderately hearing impaired individuals. We expected to see more cross-modal take-over in the hearing impaired group when compared with normal hearing individuals. Moreover, based on the hypothesis that cross-modal reorganization may be dependent on the level of hearing deprivation as well as on successful hearing restoration, we expected to see less take-over in the hearing impaired group when compared to CI users. Furthermore, we hypothesized that cross-modal activation in CI users is associated with AV integration strength, and CI users were expected to have enhanced lip-reading skills compared to both other groups.

2. Materials and methods

2.1. Participants

In total, 53 adults participated in the experiment. None of them reported acute neurological or psychiatric conditions, and all confirmed normal or corrected-to-normal vision. The study was approved by the local ethical committee of the University of Oldenburg and conducted in agreement with the Declaration of Helsinki. Participants gave written informed consent before the experiment.

The sample consisted of a CI group, a group of individuals with mild to moderate hearing loss (MHL), and a normal hearing (NH) control group. The mean age of the 18 CI users was M = 58.5 years, SE = 3.8 years. All CI users (8 women) were unilaterally implanted (right ear n = 13). Participants subjectively reported their duration of deafness as the time that elapsed between speech recognition with hearing aids becoming insufficient and CI surgery. All CI users became deaf after acquiring aural language skills (post-lingually). The individual duration of deafness ranged from 1.5 months up to 18 years (M = 61 months, SE = 12.5 months). None of the CI users had active sign language skills. Most of the CI users showed a progressive hearing loss with a broad age range of onset (mean age M = 24.8 years, SE = 5.2 years); the corresponding demographics are listed in Table 1. The CI users had been using their CI approximately 16 h per day for at least 12 months. The residual hearing was assessed as the hearing threshold on the ear contralateral to the CI and measured before conducting the experiment.

Table 1.

Demographics of the CI users.

| ID | Gender | Age [years] | Age at HL onset [years] | Etiology | Duration of deafness [months] | CI side | CI experience [months] | Residual hearing [dB HL] | Speech in noise [dB SNR] |
|---|---|---|---|---|---|---|---|---|---|
| ci01 | m | 27 | 0 | Ototoxic antibiotics | 216 | Right | 110 | 100 | −0.9 |
| ci02 | w | 54 | 0 | Unknown | 84 | Right | 106 | 95 | 2.4 |
| ci03 | w | 21 | 0 | Hereditary | 96 | Right | 156 | 100 | 1.1 |
| ci04 | w | 61 | 43 | Unknown | 12 | Right | 144 | 100 | 4.4 |
| ci05 | w | 47 | 0 | Oxygen loss at birth | 120 | Right | 28 | 91.25 | |
| ci06 | m | 76 | 15 | Unknown | 72 | Right | 148 | 100 | 2.1 |
| ci07 | m | 66 | 36 | Sudden HL | 96 | Right | 20 | 5 | 1.9 |
| ci09 | m | 74 | 56 | Meningitis | 12 | Right | 54 | 73.75 | 17.3 |
| ci10 | m | 50 | 45 | Meningitis | 1.5 | Right | 63 | 65 | |
| ci11 | w | 48 | 0 | Hereditary | 60 | Left | 82 | 77.5 | 1.6 |
| ci12 | m | 76 | 30 | Unknown | 48 | Left | 46 | 93.75 | 10.7 |
| ci13 | m | 76 | 45 | Hereditary | 36 | Left | 74 | 92.5 | 5 |
| ci14 | w | 70 | 4 | Meningitis | 72 | Right | 222 | 100 | −1 |
| ci15 | w | 38 | 0 | Oxygen loss at birth | 5 | Right | 144 | 100 | 3.9 |
| ci16 | m | 67 | 16 | Sudden HL | 36 | Right | 62 | 65 | 4.2 |
| ci17 | m | 72 | 52 | Acoustic neuroma | 84 | Right | 108 | 96.25 | 3.5 |
| ci18 | w | 53 | 46 | Unknown | 24 | Left | 60 | 21.25 | 3.5 |
| ci19 | m | 79 | 59 | Sudden HL | 24 | Left | 176 | 100 | 4.5 |

CI = Cochlear implant.

HL = hearing loss.

SNR = Signal-to-noise ratio.

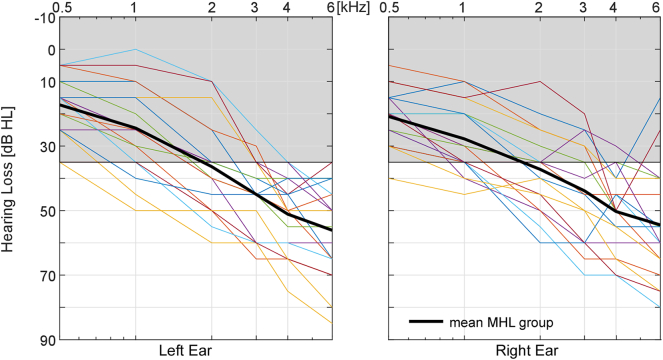

The MHL group consisted of 18 mild to moderately hearing impaired individuals (age: M = 69.3 years, SE = 1.68 years). None of the MHL individuals used hearing aids and most of them were unaware of their degree of hearing loss. The mean hearing loss (averaged pure tone hearing loss from 1 kHz to 4 kHz) ranged from 24 dB HL to 60 dB HL with a mean of M = 42 dB HL (SE = 3). Hearing thresholds of the MHL group are plotted in Fig. 1. The MHL group had a significantly higher mean age compared to the other two groups (MHL vs. CI t(34) = −2.55, p = 0.03).
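The averaged pure tone hearing loss described above can be illustrated with a minimal sketch. It assumes that an audiogram is available as a mapping from test frequency (Hz) to threshold (dB HL) for one ear; the function name, the data layout and the example thresholds are hypothetical, and the text does not state which test frequencies within the 1 to 4 kHz range entered the average or how the two ears were combined.

```python
def pure_tone_average(thresholds_db_hl, f_min=1000, f_max=4000):
    """Average the thresholds (dB HL) of all test frequencies in [f_min, f_max] Hz.

    thresholds_db_hl: dict mapping frequency in Hz to threshold in dB HL
    (hypothetical layout, one ear per call).
    """
    selected = [db for freq, db in thresholds_db_hl.items()
                if f_min <= freq <= f_max]
    if not selected:
        raise ValueError("no thresholds inside the requested frequency range")
    return sum(selected) / len(selected)


# Illustrative audiogram of one ear (made-up values, not study data).
example_ear = {500: 20, 1000: 30, 2000: 40, 4000: 50, 8000: 70}
example_pta = pure_tone_average(example_ear)
```

Only the thresholds at 1, 2 and 4 kHz enter the average in this example, because the other two frequencies fall outside the requested range.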

Fig. 1.

Hearing threshold for the left and the right ear of the MHL group. Grey shaded areas represent the range considered as normal hearing. Black solid lines represent the average hearing loss for each ear.

As a control group, 17 normal hearing (NH) individuals were tested with a mean age of M = 57.2 years, SE = 4.3 years. NH controls were gender- and age-matched (maximum difference ≤ 5 years) to the CI group; a match could not be found only for the oldest CI participant. The mean age of the CI and the NH groups did not show a significant difference. Hearing thresholds were measured prior to the experiment, ensuring that thresholds were below 35 dB HL for the frequencies between 0.5 and 4 kHz, with few exceptions.

2.2. Experimental design

Since the aim of the study was to relate auditory cross-modal reorganization to AV integration skills, a subset of McGurk AV tokens of the freely available OLAVS stimuli was used (Stropahl et al., 2016). In total six AV tokens including six different talkers (three female and three male talkers) and two different AV syllable combinations (audio ‘Ba’/visual ‘Ga’ and audio ‘Pa’/visual ‘Da’) were used (see Table 2). The AV tokens were selected based on their prior probability in normal hearing controls, in order to evoke a fusion percept in about 70% of the presentations (for details see Stropahl et al., 2016). Incongruent AV tokens were presented 20 times each, giving a total number of 120 AV incongruent trials. Furthermore, 240 unimodal and 120 AV congruent trials were presented.

Table 2.

Two syllable combinations were used as audio-visual stimuli, presented by six different talkers.

The stimuli were selected from the OLAVS set (cf. Stropahl et al., 2016). The right column shows the response options presented to the participant as a four-alternative forced choice design (4-AFC).

| A – V stimulus | Talker | 4-AFC options (A, V, Fusion1, Fusion2) |
|---|---|---|
| Ba-Ga | 2 females, 1 male | Ba, Ga, Da, Ma |
| Pa-Da | 1 female, 2 males | Pa, Da, Ka, Ta |

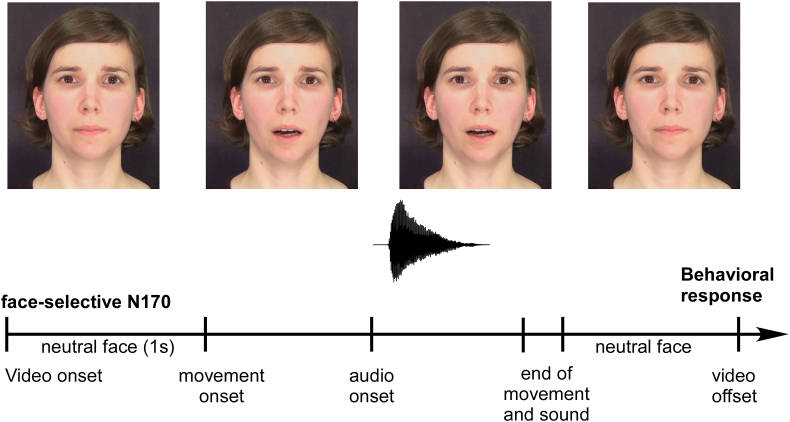

All trials including visual speech began with a 1 s still image of the talker, consisting of the last frame before movement onset. Still image onset was used to analyze cross-modal reorganization to static face stimuli. The still image was followed by the spoken syllable, giving a total duration of approx. 2 s for each clip. A schematic trial set-up is presented in Fig. 2. Participants were seated in a sound-shielded booth, 1.5 m in front of a 24-in. monitor. Video size was set to 1920 × 1080 pixels, spanning a horizontal angle of 30° and a vertical angle of 17°. Audio signals were presented binaurally in a free-field setting through high-quality studio monitors. The intensity was adjusted prior to the start of the experiment to the individual comfortable loudness level of each participant, which could be adjusted in 1 dB steps, starting from an initial loudness of the presented syllables of approximately 75 dB(A). The four conditions, unimodal auditory (Aonly), unimodal visual (Vonly), AV incongruent (AVinc) and AV congruent (AVcon) were presented randomly across trials, in a single-trial four-alternative forced-choice (4-AFC) procedure. 4-AFC response options were adjusted for the different AV tokens and consisted of the visual and the auditory component of the AV token as well as two fusion options (see Table 2). The fusion options in the 4-AFC were chosen based on the evaluation of the OLAVS material (cf. Stropahl et al., 2016). Participants were instructed to report what they perceived aurally in both AV and the Aonly conditions, and report what they lip-read in the Vonly condition.

Fig. 2.

Schematic illustration of a trial in the AV condition. The first second of the video was used to estimate the cross-modal activation of the auditory cortex in response to a human face. The behavioral response is given by the participant at the end of the trial.

2.3. Data acquisition

EEG data was collected with a BrainAmp EEG amplifier system (BrainProducts, Gilching, Germany) and a 64 Ag/AgCl electrode cap (Easycap, Herrsching, Germany). Sensors were equidistantly placed. Electrode placement included infra-cerebral spatial sampling, which aims to facilitate source localization efforts due to a better coverage of the head sphere compared to traditional 10–20 electrode layouts (Debener et al., 2008, Hauthal et al., 2014, Hine et al., 2008, Hine and Debener, 2007). A central fronto-polar site was used as ground and the nose-tip as reference. Two electrodes were placed below the eyes to capture eye blinks and movements. All electrode impedances were kept below 20 kΩ. EEG data was recorded with 1000 Hz sampling rate and an online analog filter from 0.016 to 250 Hz. Stimulus presentation was controlled with Presentation software (Neurobehavioral Systems, Albany, CA, USA). During EEG acquisition, the CI remained switched on and the contralateral ear was masked with an earplug. Electrodes in close proximity to the speech processor and the coil of the implant were not available for EEG acquisition.

2.4. EEG data analysis

We showed previously that the face-selective N170 component of the visually evoked potential to static faces can be EEG source-localized in or near the fusiform face area (Stropahl et al., 2015). Moreover, we could show that CI users elicited higher cross-modal activation to face onsets in the right auditory region compared to NH controls. To replicate these findings, an analysis approach very similar to the one reported by Stropahl et al. (2015) was applied to the current data.

EEG data were preprocessed with EEGLAB 13.6.5b (Delorme and Makeig, 2004) using Matlab (Mathworks). Independent component analysis (ICA) based on the extended Infomax algorithm (Bell and Sejnowski, 1995, Jung et al., 2000a, Jung et al., 2000b) was applied to attenuate artifacts such as eye blinks, eye movements and electrical heartbeat activity. To identify these components, the semi-automatic algorithm CORRMAP (Viola et al., 2009) was used together with manual inspection of the data. ICA-corrected data sets were filtered between 0.1 and 30 Hz, segmented into 1 s epochs (−200 to 800 ms) and baseline corrected to the pre-stimulus time interval (−200 to 0 ms). Segments that contained residual artifacts not accounted for by ICA were rejected (joint probability, SD = 4).
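The segmentation and baseline correction step can be sketched in a few lines. This is a simplified, standard-library illustration for a single channel, not the EEGLAB routine used in the study; the function name and arguments are hypothetical, while the sampling rate (1000 Hz) and the epoch limits (−200 to 800 ms) are taken from the text.

```python
def epoch_and_baseline(signal, onset, srate=1000, tmin=-0.2, tmax=0.8):
    """Cut one epoch around a stimulus onset and subtract the pre-stimulus mean.

    signal: list of samples from one channel
    onset:  sample index of the stimulus onset (here: still-image onset)
    """
    start = onset + int(round(tmin * srate))
    stop = onset + int(round(tmax * srate))
    if start < 0 or stop > len(signal):
        raise ValueError("epoch exceeds the recording boundaries")
    epoch = signal[start:stop]
    n_base = onset - start  # number of pre-stimulus samples (200 at 1000 Hz)
    baseline = sum(epoch[:n_base]) / n_base
    return [x - baseline for x in epoch]


# Toy recording: constant offset of 2.0 that steps to 5.0 at the onset.
toy_signal = [2.0] * 1000 + [5.0] * 1000
toy_epoch = epoch_and_baseline(toy_signal, onset=1000)
```

After correction, the pre-stimulus part of the toy epoch is centered on zero, so post-stimulus values express the change relative to baseline rather than the absolute offset.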

Since the focus of this study was to identify a possible relationship between AV integration reflected by the frequency of McGurk fusions, and cross-modal cortical activation, measured by auditory cortex source activation, only those trials in which participants elicited a fusion percept were included into the EEG analysis.

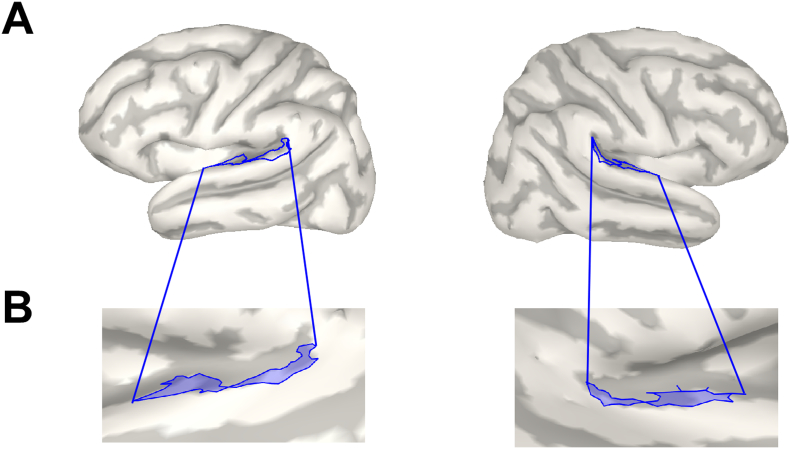

2.5. Source analysis

Cortical source activities were compared between the three groups using Brainstorm software (Tadel et al., 2011). Here, the method of dynamic statistical parametric mapping was applied to the data (dSPM, Dale et al., 2000). The dSPM method uses the minimum-norm inverse maps with constrained dipole orientations to estimate the locations of the scalp-recorded electrical activity of the neurons. dSPM seems to localize deeper sources more accurately than standard minimum norm procedures, but the spatial resolution remains blurred (Lin et al., 2006). EEG data were re-referenced to the common average before source estimation. Single-trial pre-stimulus baseline intervals (− 200 to 0 ms) were used to calculate individual noise covariance matrices and thereby estimate individual noise standard deviations at each location (Hansen et al., 2010). The boundary element method (BEM) as implemented in OpenMEEG was used as a head model. The BEM model provides three realistic layers and representative anatomical information (Gramfort et al., 2010, Stenroos et al., 2014). Source activities were evaluated on the group average and statistically analyzed by comparing activation strengths in the a-priori defined region of interest (ROI) between the groups. As in the previous study (Stropahl et al., 2015), an auditory ROI (Fig. 3) was defined as a combination of three smaller regions of the Destrieux-atlas implemented in Brainstorm (Destrieux et al., 2010), in order to approximate Brodmann areas 41 and 42. ROI peak activation magnitudes were extracted for each individual and submitted to statistical evaluation. The data of cross-modal activation is shown as absolute values with arbitrary units based on the normalization within the dSPM algorithm.
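To make the extraction of ROI peak activation concrete, the following minimal sketch takes one participant's ROI time series and returns the largest absolute value inside a latency window. The default window of 140 to 200 ms is the N170 interval named in Section 2.7; the function name and the input layout are assumptions for illustration, and the actual extraction was performed in Brainstorm.

```python
def roi_peak(times_ms, roi_activation, window=(140, 200)):
    """Return the peak absolute ROI activation within a latency window (ms).

    times_ms:       list of latencies relative to stimulus onset
    roi_activation: list of dSPM values (arbitrary units), same length
    """
    if len(times_ms) != len(roi_activation):
        raise ValueError("times and activation must have the same length")
    lo, hi = window
    in_window = [abs(a) for t, a in zip(times_ms, roi_activation)
                 if lo <= t <= hi]
    if not in_window:
        raise ValueError("no samples inside the requested window")
    return max(in_window)


# Toy time series sampled at 1000 Hz from -200 to 799 ms (made-up values).
toy_times = list(range(-200, 800))
toy_roi = [0.1] * len(toy_times)
toy_roi[toy_times.index(170)] = 1.6   # peak inside the N170 window
toy_roi[toy_times.index(300)] = 5.0   # larger value outside the window
toy_peak = roi_peak(toy_times, toy_roi)
```

Restricting the search to the window keeps later, larger deflections from being mistaken for the N170-related activation.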

Fig. 3.

A priori defined ROI for the auditory area in the right and the left hemisphere. (A) shows the ROIs for each hemisphere. (B) shows the zoomed region aligned on the unfolded left and right cortex.

2.6. Behavioral data analysis

The percentages of correctly identified phonemes for each condition were calculated for each individual and statistically compared between groups. The AVinc trials were treated separately, that is, responses in the incongruent trials were classified into the (correct) auditory percept, the visual percept, or the individual fusion percept. Additionally, all participants were tested in their speech perception in silence and in noise, using the Oldenburg sentence test (OLSA, Wagener et al., 1999). Furthermore, individual lip-reading performance was quantified using visual presentations of monosyllabic words of a German speech recognition test (for a detailed description cf. Stropahl et al., 2015).
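The classification of responses in the incongruent trials can be sketched as follows. The response options per AV token are taken from Table 2; the trial representation as (token, response) pairs and the function names are hypothetical.

```python
from collections import Counter

# Response options per AV token, as listed in Table 2 (A, V, Fusion1, Fusion2).
TOKENS = {
    "Ba-Ga": {"auditory": "Ba", "visual": "Ga", "fusion": {"Da", "Ma"}},
    "Pa-Da": {"auditory": "Pa", "visual": "Da", "fusion": {"Ka", "Ta"}},
}


def classify_response(token, response):
    """Map a 4-AFC response to 'auditory', 'visual' or 'fusion' for one token."""
    options = TOKENS[token]
    if response == options["auditory"]:
        return "auditory"
    if response == options["visual"]:
        return "visual"
    if response in options["fusion"]:
        return "fusion"
    raise ValueError(f"{response!r} is not a response option for {token!r}")


def response_percentages(trials):
    """Percentage of each response class over (token, response) pairs."""
    counts = Counter(classify_response(tok, resp) for tok, resp in trials)
    n = len(trials)
    return {k: 100.0 * counts[k] / n for k in ("auditory", "visual", "fusion")}


# Made-up responses of one participant in four incongruent trials.
toy_trials = [("Ba-Ga", "Da"), ("Ba-Ga", "Ba"), ("Pa-Da", "Da"), ("Pa-Da", "Ka")]
toy_result = response_percentages(toy_trials)
```

The class of a response depends on the token: ‘Da’ counts as a fusion percept for the Ba-Ga token but as the visual component for the Pa-Da token, which is why the lookup is done per token.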

2.7. Statistical analyses

The non-parametric Kruskal-Wallis test was applied to compare the percentage of correctly identified phonemes. A group comparison was performed for each condition (Aonly, Vonly, AVcon). The response behavior in the AV incongruent trials (visual, auditory, fusion) was compared between the groups (CI, MHL, NH) with the Kruskal-Wallis test. Lip-reading scores were subjected to a between-subjects one-way ANOVA to compare the three groups. Estimated source activations in the auditory ROI were compared between the three groups at the time interval (140 to 200 ms) of the face-selective N170 component. The Kruskal-Wallis test was used to compare between the groups (CI, MHL, NH). Predicted associations between behavioral and electrophysiological results were investigated by means of parametric correlations, which were corrected for outliers using Shepherd's pi correlation procedure (Schwarzkopf and Haas, 2012). The Shepherd's pi correlation procedure identifies outliers in the data that may influence the statistics by bootstrapping the Mahalanobis distance. Data points with a distance equal to or larger than six are removed from the calculation of the correlation. Shepherd's pi is similar to Spearman's rho but additionally adapts the p-statistics to account for the removal of outliers. For a more detailed description, see Schwarzkopf and Haas (2012). Means and standard errors of the mean (M ± SE) are reported. If appropriate, nonparametric tests were performed. Post-hoc tests were two-tailed unless directional hypotheses were followed up, in which case one-tailed test results are reported. The significance level α was set to 0.05; p-values for multiple comparisons were corrected using the Holm-Bonferroni approach (Holm, 1979).
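The Holm-Bonferroni step-down correction can be illustrated with a short sketch that returns adjusted p-values in the original order. It is a generic implementation of the procedure described by Holm (1979), not the code used in the study; the function name and the example p-values are hypothetical.

```python
def holm_bonferroni(p_values):
    """Return Holm-Bonferroni adjusted p-values in the order of the input.

    The smallest of m p-values is multiplied by m, the next by m - 1, and so
    on; adjusted values are capped at 1 and forced to be non-decreasing.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        candidate = min(1.0, (m - rank) * p_values[idx])
        running_max = max(running_max, candidate)  # enforce monotonicity
        adjusted[idx] = running_max
    return adjusted


# Made-up uncorrected p-values of three post-hoc comparisons.
toy_adjusted = holm_bonferroni([0.01, 0.04, 0.03])
```

A comparison is then considered significant if its adjusted p-value is below α. In this toy example the adjusted values are approximately 0.03, 0.06 and 0.06, so only the first comparison would pass at α = 0.05.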

3. Results

3.1. Behavioral data

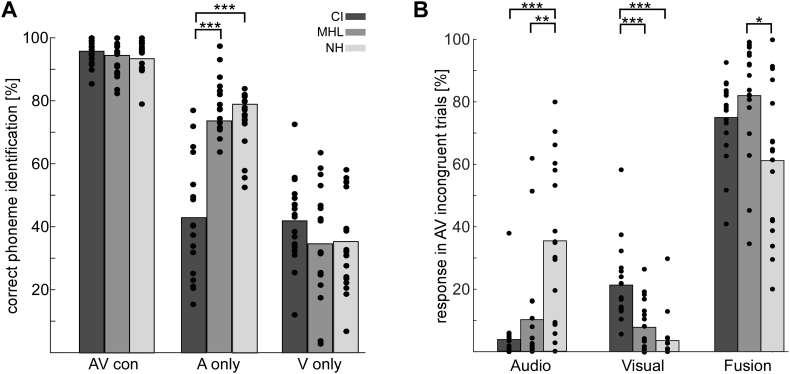

Kruskal-Wallis tests were performed to compare the correct phoneme identification for the Aonly, the Vonly and AVcon conditions between groups (see Fig. 4A). The group effect for the Aonly condition was significant, χ²(2, N = 53) = 29.41, p < 0.001. To follow up the group differences, the Mann-Whitney U test was applied. CI users correctly identified significantly fewer phonemes in the Aonly condition (M = 43%, SE = 4) compared to the NH controls (M = 79%, SE = 2, U(33) = 11.5, z(33) = −4.67, p < 0.001) and compared to the MHL group (M = 74%, SE = 2, U(34) = 22, z(34) = −4.43, p < 0.001). Differences between the MHL group and the NH controls, as well as group differences in all other conditions, were not significant.

Fig. 4.

Behavioral results of the McGurk paradigm. (A) The correct phoneme identification is displayed for CI users (dark grey), the MHL group (middle grey), and NH controls (light grey). (B) Response behavior in the AV incongruent trials for CI (dark grey), MHL (middle grey) and NH (light grey). Possible responses are either the auditory or the visual component of the AV token, or a fusion percept. Individuals are displayed as dots for each condition. Significant group differences are indicated (*p < 0.05, **p < 0.01, ***p < 0.001).

The AVinc condition was analyzed separately to identify group differences in response behavior for incongruent trials (see Fig. 4B). Note that, based on the instructions given, the auditory component of the AV token was defined as the correct response in the McGurk trials. Possible other responses were the visual component of the AV token, or a fusion percept. Kruskal-Wallis tests revealed significant group differences for all three response options (Audio: χ²(2, N = 53) = 19.42, p < 0.001; Visual: χ²(2, N = 53) = 23.78, p < 0.001; Fusion: χ²(2, N = 53) = 9.18, p = 0.009). The follow-up tests between the groups revealed that CI users (M = 4%, SE = 2) and the MHL group (M = 10%, SE = 4) chose the auditory component of the AV token significantly less frequently than the NH controls (M = 35%, SE = 6), CI vs. NH: U(33) = 29, z(33) = −4.13, p < 0.001; MHL vs. NH: U(33) = 56, z(33) = −3.2, p = 0.005. The CI users chose the visual token in M = 21% (SE = 3) of the trials, which was significantly more frequent than NH control choices (M = 4%, SE = 2), U(33) = 17, z(33) = −4.52, p < 0.001. A similar group difference was observed between the CI users and the MHL group (M = 8%, SE = 2), U(34) = 50.5, z(34) = −3.53, p < 0.001. Fusion frequency was highest in the MHL group (M = 82%, SE = 4), followed by the CI group (M = 75%, SE = 3) and the NH group (M = 61%, SE = 6). The difference between the MHL group and the NH controls was significant, U(33) = 71, z(33) = −2.71, p = 0.03, whereas differences between CI users and NH controls, as well as between CI users and the MHL group, were not significant.

The comparison of lip-reading scores revealed a significant group effect as well (F(2, 50) = 4.86, p = 0.012). A post-hoc comparison between the groups confirmed highest lip-reading skills for CI users (M = 33%, SE = 4). NH controls (M = 23%, SE = 3) and the MHL group (M = 22%, SE = 2) recognized significantly fewer words correctly (CI vs. NH: t(33) = 2.33, p = 0.052; CI vs. MHL: t(33) = 2.68, p = 0.033).

3.2. Auditory cross-modal activation

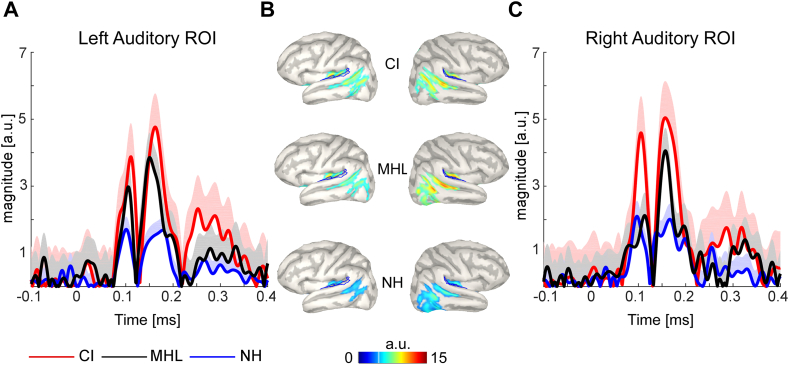

Since cross-modal activation in CI users seems to be dominant in the right hemisphere (Lazard et al., 2014, Sandmann et al., 2012, Stropahl et al., 2015), we compared the three groups for right auditory cortex activation. Averaged time series of the left and right auditory ROI for each group are shown in Fig. 5A and C. CI users showed the strongest auditory cortex activation at the N170 peak latency (M = 1.61, SE = 0.21), followed by the MHL group (M = 1.15, SE = 0.11) and the NH controls (M = 1.01, SE = 0.13). The comparison of right auditory ROI activation revealed a significant group effect, χ²(2, N = 53) = 6.9, p = 0.032. Since we predicted strongest activation in the CI group compared to both other groups, and stronger activation in the MHL group compared to the NH group, directional post-hoc tests were performed. Activation was significantly stronger for the CI group compared to NH controls, U(33) = 82, z(33) = −2.34, p = 0.027. The difference between CI and MHL groups indicated a trend toward higher activation in the CI group, which did not reach significance after correction for multiple comparisons, U(34) = 104, z(34) = −1.84, p = 0.068. The MHL group showed descriptively higher cross-modal activation compared to the NH controls, but this effect did not reach significance, U(33) = 115, z(33) = −1.25, p = 0.109.

Fig. 5.

Grand average time series of cross-modal activation in the auditory ROI. (A) Represents the left auditory ROI and (C) the right auditory ROI for the CI group (red line), the MHL group (black line) and NH controls (blue line). The standard deviation is displayed as shaded area for each group. (B) Shows the activation on the cortex for the left and the right hemisphere. Cross-modal activation is shown as absolute values with arbitrary units based on the normalization within the dSPM algorithm. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

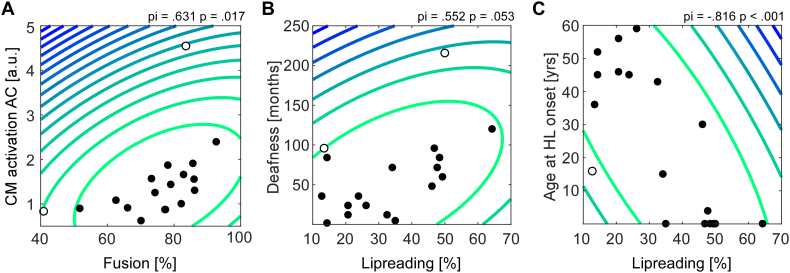

To test the hypothesis of a relationship between auditory cross-modal activation and AV integration strength, a correlation analysis was performed. The CI group showed a significant relationship between cross-modal activation in the left auditory ROI and the frequency of fusion percepts, pi(16) = 0.631, p = 0.017, such that higher cross-modal activation was associated with more fusion (see Fig. 6A). A similar correlation was not observed in the other two groups (Fig. 7B: MHL: pi(16) = −0.04, p = 1.0; NH: pi(16) = 0.183, p = 0.99). As shown in Fig. 6B and C, the group of CI users furthermore showed a relationship between lip-reading skills and the duration of deafness, pi(16) = 0.552, p = 0.053, and likewise between lip-reading skills and the age at onset of hearing loss, pi(16) = −0.816, p < 0.001. Moreover, the accuracies in the visual only condition showed a significant correlation with lip-reading, pi(16) = 0.635, p = 0.017.

Fig. 6.

Correlation analysis for the CI group. (A) Shows the correlation between the amount of cross-modal activation in the auditory ROI and the fusion frequency. (B) Shows the relationship between a longer duration of deafness and enhanced lip reading skills. A similar relationship was observed for lip reading skills and the age at hearing loss onset (C). The contour lines in the scatter plot represent the Mahalanobis distance from the bivariate mean (darker blue indicates greater distances). Open circles represent outliers which were not included in the correlation calculation. P-statistics have been adapted for removed outliers. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

Fig. 7.

Correlation analysis for the MHL group. (A) Shows the correlation between the individual hearing loss and the amount of cross-modal reorganization in the MHL group. (B) Shows the non-significant correlation between the amount of cross-modal reorganization in the auditory ROI and the fusion frequency. The contour lines in the scatter plot represent the Mahalanobis distance from the bivariate mean (darker blue indicates greater distances). Open circles represent outliers which were not included in the correlation calculation. P-statistics have been adapted for removed outliers. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

We tested the hypothesis that in the MHL group, activation of the auditory ROI to faces is associated with individual hearing loss. As shown in Fig. 7A, the correlation analysis revealed that individuals with a more pronounced hearing loss showed a non-significant trend toward stronger auditory cortex activation, pi(16) = 0.552, p = 0.053.

Since the MHL group differed significantly in age from the other two groups, the influence of age on group differences was evaluated. Correlations between cross-modal activation and age, as well as between fusion and age, were computed. None of the correlations reached significance, neither for the whole sample nor for the individual groups.
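The figure captions above describe the correlation procedure only briefly: bivariate outliers are identified by their Mahalanobis distance from the bivariate mean, excluded from the correlation, and the p-values are adapted for the removed points. The following is a minimal sketch of one way such a robust correlation could be computed, not the authors' implementation. Several details are assumptions that this section does not state: the bootstrapping of the distance, the exclusion threshold of 6 on the squared distance, and the doubling of the p-value. To keep the sketch dependency-free, the p-value comes from a permutation test rather than a parametric test, and all function names are hypothetical.

```python
import random
from statistics import mean


def sq_mahalanobis(points, sample):
    """Squared Mahalanobis distance of each point from the bivariate mean of `sample`."""
    n = len(sample)
    mx = mean(p[0] for p in sample)
    my = mean(p[1] for p in sample)
    sxx = sum((p[0] - mx) ** 2 for p in sample) / (n - 1)
    syy = sum((p[1] - my) ** 2 for p in sample) / (n - 1)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in sample) / (n - 1)
    det = sxx * syy - sxy ** 2
    if sxx == 0 or syy == 0 or det <= 1e-12 * sxx * syy:
        return None  # degenerate resample, covariance not invertible
    # Closed-form inverse of the 2x2 covariance matrix.
    return [
        (syy * (x - mx) ** 2 - 2 * sxy * (x - mx) * (y - my) + sxx * (y - my) ** 2) / det
        for x, y in points
    ]


def ranks(values):
    """Ranks starting at 1; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            result[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return result


def pearson(a, b):
    """Pearson correlation; applied to ranks it yields Spearman's rho."""
    ma, mb = mean(a), mean(b)
    num = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    den = (sum((u - ma) ** 2 for u in a) * sum((v - mb) ** 2 for v in b)) ** 0.5
    return num / den


def shepherd_pi(x, y, n_boot=1000, threshold=6.0, n_perm=5000, seed=0):
    """Return (pi, adjusted p, keep mask). Threshold and p doubling are assumptions."""
    rng = random.Random(seed)
    pts = list(zip(x, y))
    totals, used = [0.0] * len(pts), 0
    while used < n_boot:
        # Distance of every observation from the mean of a bootstrap resample.
        d = sq_mahalanobis(pts, rng.choices(pts, k=len(pts)))
        if d is None:
            continue
        totals = [t + v for t, v in zip(totals, d)]
        used += 1
    keep = [t / used < threshold for t in totals]
    rx = ranks([v for v, k in zip(x, keep) if k])
    ry = ranks([v for v, k in zip(y, keep) if k])
    pi = pearson(rx, ry)
    # Two-sided permutation p-value on the retained observations.
    hits = 0
    for _ in range(n_perm):
        shuffled = rx[:]
        rng.shuffle(shuffled)
        if abs(pearson(shuffled, ry)) >= abs(pi) - 1e-12:
            hits += 1
    p = (hits + 1) / (n_perm + 1)
    # Adapt p for the outlier removal by doubling it, capped at 1.
    return pi, min(1.0, 2 * p), keep
```

Calling `shepherd_pi(activation, fusion)` with two equally long lists of per-participant values returns the coefficient, the adapted p-value, and a mask marking which observations were retained. Because the exclusion step changes the effective sample, the adapted p-value is deliberately conservative, which would be consistent with p-values close to 1 for weak correlations.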

4. Discussion

The present study compared cross-modal auditory cortex activation and, for the first time in CI research, its relationship to AV integration in post-lingually deafened CI users and age-matched NH controls. In addition, we included a group of mild to moderately hearing impaired individuals to explore whether a less severe degree of sensory deprivation induces cross-modal take-over as well. By means of EEG source estimation, visually induced activation of the auditory cortex was determined and, as predicted, was found to be significantly stronger in CI users than in the NH controls. Additionally, we confirmed our hypothesis that cross-modal activation in the CI group is related to audio-visual integration strength. A similar effect was not observed in the other two groups. As expected from other studies testing the McGurk illusion in CI users (Desai et al., 2008, Rouger et al., 2008, Schorr et al., 2005, Tremblay et al., 2010), only CI users showed a bias toward the visual component of McGurk stimuli, and they showed enhanced lip-reading skills. Lip-reading performance was better the longer the duration of deafness and the earlier in life individuals experienced a hearing loss. In contrast to our predictions, CI users did not show significantly higher amounts of fusion percepts compared to the other groups. Interestingly, for most analyses conducted, the MHL group showed a pattern that was numerically between the CI and the NH group. In line with the assumption of a temporal evolution of deprivation-induced cross-modal activation, we provide first evidence that the activation in the MHL group is related to the degree of hearing loss.

4.1. Behavioral differences in AV integration

The unimodal visual condition did not reveal significant group differences, even though the CI users achieved slightly higher phoneme accuracies. At first glance this may seem contradictory, because the same CI users showed a clear advantage in word lip-reading. However, it has been shown before that CI users are superior in visual speech perception only if the presented speech contains semantic information (Moody-Antonio et al., 2005, Rouger et al., 2008, Tremblay et al., 2010). Nevertheless, better lip-reading of words was correlated with enhanced performance in lip-reading phonemes. Moreover, similar to previous studies, we observed a strong bias in the CI group toward the visual component in the AV incongruent trials (Desai et al., 2008, Rouger et al., 2008, Schorr et al., 2005, Tremblay et al., 2010). This bias toward visual speech may be one reason for the strong AV integration in CI users. Semantic context may trigger top-down processing which can be used in an AV context to optimally integrate auditory and visual sensations into one percept (Bernstein et al., 2000, Rönnberg et al., 1996, Rouger et al., 2008, Strelnikov et al., 2009). Although words provide a higher semantic context and may be more predictive than phoneme units, AV integration seems to be beneficial at this phonetic level of speech. This is especially reflected in the strong AV benefit for the CI users.

Rouger et al. (2007) previously demonstrated that CI users are better AV integrators. Several studies aimed to confirm this finding with various types of AV stimulation, but only a few investigated the McGurk illusion. Even though fusion percepts reflect AV integration, results are contradictory (Desai et al., 2008, Rouger et al., 2008, Schorr et al., 2005, Tremblay et al., 2010). Unfortunately, our study does not help to shed light on the heterogeneity in previous results. In our study, the group of CI users indicated more fusion percepts than NH controls, but the difference was not significant. Other studies showed that fusion is related to CI experience or CI proficiency (Desai et al., 2008, Tremblay et al., 2010). No such relationship could be observed in our sample. Studies with non-linguistic AV stimuli could likewise not unambiguously confirm superior AV integration skills but rather suggested performance levels that are very comparable to NH performance (Gilley et al., 2010, Schierholz et al., 2015). In the original study of Rouger et al. (2007), CI users were tested on AV word recognition. As already observed in animal as well as human studies, superiority might not be generalizable but rather be functionally specific (Bavelier et al., 2006, Bavelier and Neville, 2002, Lomber et al., 2010). Superior AV integration in CI users may be highly specific to visual speech that contains semantic information. Since the McGurk illusion represents rather artificial AV speech with little semantic information, this task may simply not be well suited to reveal clear behavioral AV integration differences between CI users and NH controls. This interpretation could be directly tested in future studies.

Interestingly, the MHL group did not show any differences in the visual condition and in lip-reading performance compared to the NH controls. At the same time, the conditions that included auditory stimulation revealed a pattern of descriptive differences. In the unimodal auditory condition, average accuracies were about 5% worse compared to the NH group. The responses in the AV incongruent trials showed that mild to moderately hearing-impaired individuals had a small bias toward the visual component. Moreover, they revealed an amount of fusion similar to that of the CI users, which was numerically higher than in the NH group. Even though these differences did not show a relationship to speech performance or the degree of hearing loss, the emerging pattern of behavioral differences in the auditory conditions may already indicate altered configurations of processing audio-visual stimuli at rather early stages of hearing loss.

4.2. Cross-modal reorganization

Here we successfully replicate our previous finding of stronger cross-modal activations to visually presented faces in the right auditory cortex of CI users compared to NH controls (Stropahl et al., 2015). Moreover, we show for the first time that higher cross-modal activation of the auditory cortex was related to stronger AV integration. This observation of a link between cross-modal activity and AV fusion was exclusively found in the CI group.

Cross-modal reorganization is typically interpreted as a deprivation-induced residual of cortical changes that impedes speech recovery. Some studies found a maladaptive relationship between processing differences and speech perception in CI users; more specifically, CI users with a higher amount of cross-modal reorganization show poorer performance in speech perception (Buckley and Tobey, 2011, Campbell and Sharma, 2014, Doucet et al., 2006, Kim et al., 2016, Sandmann et al., 2012). One has to keep in mind that, in contrast to source estimation as in the current study, some of these correlations are based on scalp EEG analyses, which cannot easily disentangle contributions from different cortical generators (cf. Stropahl et al., 2017 for a discussion of methods used in investigating cortical reorganization in CI users). On the scalp level, differences in visually evoked potentials between CI users and NH controls should be treated with care and may not necessarily reflect cross-modal reorganization (Stropahl et al., 2017). Similar to our previous study (Stropahl et al., 2015), the present study did not reveal a maladaptive relationship between the amount of cortical reorganization and speech perception performance in our group of CI users. The reasons may be various and complex. It is common nowadays to quantify CI benefit by speech in noise tests conducted in laboratory conditions. Yet, currently used speech tests are rather artificial and may not generalize very well to real speech situations. Furthermore, differences between speech tests may influence speech performance results. Moreover, the use of only speech (in noise) performance may not characterize CI benefit sufficiently. We therefore speculate that a broader test battery tailored toward characterizing CI benefit may better support the long-term goal to validate biological markers such as cross-modal reorganization as clinical predictors for CI outcome (Glick and Sharma, 2016). In our opinion, these tests should include other communication skills such as lip-reading, and more realistic settings of speech in noise testing, such as multisensory speech (Barone and Deguine, 2011, Schierholz et al., 2015, Stropahl et al., 2015, Stropahl et al., 2017).

Recent discussions point toward a more balanced, less maladaptive view on the impact of cross-modal reorganization on CI performance (Heimler et al., 2014, Rouger et al., 2012, Stropahl et al., 2015). The interpretation that residual changes are exclusively leftovers from the period of deprivation may be too limited (Stropahl et al., 2017). Even though there is evidence that cross-modal changes may emerge already during the period of auditory deprivation to enhance (visual) speech processing (Strelnikov et al., 2013), additional, functionally specific changes may occur after sensory recovery (Rouger et al., 2012, Stropahl et al., 2015, Stropahl et al., 2017). Moreover, cortical changes may not be limited to the auditory cortex (Giraud et al., 2001a, Giraud et al., 2001b, Campbell and Sharma, 2013, Campbell and Sharma, 2016, Chen et al., 2016) and may even include visual and audio-visual speech processing networks (Rouger et al., 2012). In line with this, a recent animal study showed that auditory responsiveness in deaf cats was not impeded by cross-modal reorganization to visual stimuli (Land et al., 2016). Accordingly, the overall pattern is rather complex, and supporting evidence for the compensatory, adaptive nature of cortical changes emerges. For example, a study showed that cross-modal activation of the visual cortex increased with CI experience and went along with increased speech perception (Giraud et al., 2001b). Furthermore, our previous study showed that CI users with higher cross-modal activation had enhanced lip-reading skills. The present results of a positive relationship between cross-modal reorganization and AV integration are consistent with this line of reasoning. Studies suggest that the reorganization during deprivation toward visual speech results in a more efficient audio-visual integration after sensory recovery to enhance speech perception (Rouger et al., 2012). Moreover, our results strengthen the assumption of shared rather than independent cortical networks for audio-visual integration and compensatory cross-modal reorganization (Barone and Deguine, 2011, Cappe et al., 2009, Desai et al., 2008, Rouger et al., 2007, Rouger et al., 2008, Tremblay et al., 2010). This is a reasonable interpretation, since speech perception in daily-life situations is rather a multisensory experience, at least in CI users (Humes, 1991). Therefore, observations of maladaptive influences on pure speech perception and adaptive relationships to other communication skills do not seem contradictory but may reflect different aspects of CI benefit. Improved lip-reading and/or enhanced AV integration skills may support speech perception in ecologically valid listening conditions (Rouger et al., 2007, Rouger et al., 2012, Stropahl et al., 2015, Stropahl et al., 2017). We therefore suggest that future rehabilitation strategies for CI users should emphasize the importance of visual speech information before and during early stages of sensory recovery to obtain a better integration of AV speech.

The lack of longitudinal studies that track cross-modal reorganization still limits our knowledge of the temporal dynamics of deprivation-induced cortical changes. Campbell and Sharma (2014) showed first evidence for cross-modal reorganization at early stages of hearing loss, although their results did not unambiguously identify auditory cortex. However, a recent single case study conducted by the same lab showed that cross-modal activation in the auditory cortex may begin as early as three months after hearing loss onset (Glick and Sharma, 2016). The present findings are well in line with this research. We observed a pattern of cross-modal changes in a group of mild to moderately hearing impaired individuals. Yet, our MHL group was significantly older compared to the other two groups, and age may influence AV processing, resulting in a stronger AV integration (Laurienti et al., 2006, Sekiyama et al., 2014). While none of our analyses revealed a relationship to age, we cannot exclude that age effects contributed to the group differences found. Besides, we found an association between the strength of cross-modal activation and the degree of hearing loss, an effect that was not found for age. We therefore suggest that the temporal evolution of cross-modal reorganization depends on the amount of deprivation. Even mild hearing loss may leave its trace on how auditory and visual senses interact.

Neurophysiologically, the results of the MHL group show a pattern that lies between those of the CI users and the NH controls. This is a plausible pattern, given that the degree and/or duration of hearing loss may bring about cross-modal reorganization. However, we did not find a direct relationship between cross-modal reorganization at early stages of hearing loss and behavioral measures. The impact of cortical changes at early stages of hearing loss on behavior remains to be investigated in detail. Another important question to answer is whether cortical changes are fully reversible with acoustic amplification. The first case study addressing this issue observed that a child with single-sided deafness showed cross-modal activity before the implantation of a CI, which was reduced later with CI experience (Sharma et al., 2016). How this finding relates to adult plasticity in hearing impaired individuals remains to be seen.

5. Conclusion

The present study confirmed previous reports of higher cross-modal activation in adult post-lingually deafened CI users, and suggests that cross-modal reorganization is already induced by mild to moderate hearing loss. Importantly, cross-modal activation could be related to AV integration as measured by the McGurk illusion. We conclude that patterns of cortical cross-modal reorganization are at least partly adaptive, as they seem to support multisensory aspects of speech communication, which appear to be of particular importance in individuals with a history of auditory deprivation.

Acknowledgments

We thank Franziska Klein for her help in data collection and Catharina Zich for valuable discussions.

Funding

The research leading to these results has received funding from the grant I-175-105.3-2012 of the German-Israel Foundation and the German Research Foundation (Deutsche Forschungsgemeinschaft, DFG) Cluster of Excellence “Hearing4all”, Task group 7, Oldenburg, Germany.

References

- Barone P., Deguine O. Multisensory processing in cochlear implant listeners. In: Zeng F.-G., Popper A.N., Fay R.R., editors. Springer Handbook of Auditory Research: Auditory Prostheses. Springer Handbook of Auditory Research. Springer; New York, USA: 2011. pp. 365–381.

- Bavelier D., Neville H.J. Cross-modal plasticity: where and how? Nat. Rev. Neurosci. 2002;3:443–452. doi: 10.1038/nrn848.

- Bavelier D., Dye M.W.G., Hauser P.C. Do deaf individuals see better? Trends Cogn. Sci. 2006;10:512–518. doi: 10.1016/j.tics.2006.09.006.

- Bell A.J., Sejnowski T.J. An information-maximization approach to blind separation and blind deconvolution. Neural Comput. 1995;7:1129–1159. doi: 10.1162/neco.1995.7.6.1129.

- Bentin S., Allison T., Puce A., Perez E., McCarthy G. Electrophysiological studies of face perception in humans. J. Cogn. Neurosci. 1996;8:551–565. doi: 10.1162/jocn.1996.8.6.551.

- Bernstein L.E., Demorest M.E., Tucker P.E. Speech perception without hearing. Percept. Psychophys. 2000;62:233–252. doi: 10.3758/bf03205546.

- Bötzel K., Grüsser O.J. Electric brain potentials evoked by pictures of faces and non-faces: a search for “face-specific” EEG-potentials. Exp. Brain Res. 1989;77:349–360. doi: 10.1007/BF00274992.

- Buckley K.A., Tobey E.A. Cross-modal plasticity and speech perception in pre- and postlingually deaf cochlear implant users. Ear Hear. 2011;32:2–15. doi: 10.1097/AUD.0b013e3181e8534c.

- Campbell R. The processing of audio-visual speech: empirical and neural bases. Philos. Trans. R. Soc. B. 2008;363:1001–1010. doi: 10.1098/rstb.2007.2155.

- Campbell J., Sharma A. Compensatory changes in cortical resource allocation in adults with hearing loss. Front. Syst. Neurosci. 2013;7:1–9. doi: 10.3389/fnsys.2013.00071.

- Campbell J., Sharma A. Cross-modal re-organization in adults with early stage hearing loss. PLoS One. 2014;9:1–9. doi: 10.1371/journal.pone.0090594.

- Campbell J., Sharma A. Visual cross-modal re-organization in children with cochlear implants. PLoS One. 2016;11 doi: 10.1371/journal.pone.0147793.

- Cappe C., Rouiller E.M.M., Barone P. Multisensory anatomical pathways. Hear. Res. 2009;258:28–36. doi: 10.1016/j.heares.2009.04.017.

- Chen L.-C., Sandmann P., Thorne J.D., Bleichner M.G., Debener S. Cross-modal functional reorganization of visual and auditory cortex in adult cochlear implant users identified with fNIRS. Neural Plast. 2016:2016. doi: 10.1155/2016/4382656.

- Dale A., Liu A.K., Fischl B., Buckner R.L., Belliveau J.W., Lewine J.D., Halgren E. Dynamic statistical parametric mapping: combining fMRI and MEG for high-resolution imaging of cortical activity. Neuron. 2000;26:55–67. doi: 10.1016/s0896-6273(00)81138-1.

- Debener S., Hine J., Bleeck S., Eyles J. Source localization of auditory evoked potentials after cochlear implantation. Psychophysiology. 2008;45:20–24. doi: 10.1111/j.1469-8986.2007.00610.x.

- Delorme A., Makeig S. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods. 2004;134:9–21. doi: 10.1016/j.jneumeth.2003.10.009.

- Desai S., Stickney G., Zeng F.-G. Auditory-visual speech perception in normal-hearing and cochlear-implant listeners. J. Acoust. Soc. Am. 2008;123:428–440. doi: 10.1121/1.2816573.

- Destrieux C., Fischl B., Dale A., Halgren E. Automatic parcellation of human cortical gyri and sulci using standard anatomical nomenclature. NeuroImage. 2010;53:1–15. doi: 10.1016/j.neuroimage.2010.06.010.

- Doucet M.E., Bergeron F., Lassonde M., Ferron P., Lepore F. Cross-modal reorganization and speech perception in cochlear implant users. Brain. 2006;129:3376–3383. doi: 10.1093/brain/awl264.

- Driver J., Noesselt T. Multisensory interplay reveals crossmodal influences on “sensory-specific” brain regions, neural responses, and judgments. Neuron. 2008;57:11–23. doi: 10.1016/j.neuron.2007.12.013.

- Gilley P.M., Sharma A., Mitchell T.V., Dorman M.F. The influence of a sensitive period for auditory-visual integration in children with cochlear implants. Restor. Neurol. Neurosci. 2010;28:207–218. doi: 10.3233/RNN-2010-0525.

- Giraud A.-L., Price C.J., Graham J.M., Frackowiak R.S.J. Functional plasticity of language-related brain areas after cochlear implantation. Brain. 2001;124:1307–1316. doi: 10.1093/brain/124.7.1307.

- Giraud A.-L., Price C.J., Graham J.M., Truy E., Frackowiak R.S.J. Cross-modal plasticity underpins language recovery after cochlear implantation. Neuron. 2001;30:657–663. doi: 10.1016/s0896-6273(01)00318-x.

- Glick H., Sharma A. Cross-modal plasticity in developmental and age-related hearing loss: clinical implications. Hear. Res. 2016;343:191–201. doi: 10.1016/j.heares.2016.08.012.

- Gramfort A., Papadopoulo T., Olivi E., Clerc M. OpenMEEG: opensource software for quasistatic bioelectromagnetics. Biomed. Eng. Online. 2010;9:45. doi: 10.1186/1475-925X-9-45.

- Grant K.W., Seitz P.F.P. The use of visible speech cues for improving auditory detection of spoken sentences. J. Acoust. Soc. Am. 2000;108:1197–1208. doi: 10.1121/1.1288668.

- Hansen P.C., Kringelbach M.L., Salmelin R. Oxford University Press; 2010. MEG - An Introduction to Methods.

- Hauthal N., Debener S., Rach S., Sandmann P., Thorne J.D. Visuo-tactile interactions in the congenitally deaf: a behavioral and event-related potential study. Front. Integr. Neurosci. 2014;8:98. doi: 10.3389/fnint.2014.00098.

- Heimler B., Weisz N., Collignon O. Revisiting the adaptive and maladaptive effects of crossmodal plasticity. Neuroscience. 2014;283:44–63. doi: 10.1016/j.neuroscience.2014.08.003.

- Hine J., Debener S. Late auditory evoked potentials asymmetry revisited. Clin. Neurophysiol. 2007;118:1274–1285. doi: 10.1016/j.clinph.2007.03.012.

- Hine J., Thornton R., Davis A., Debener S. Does long-term unilateral deafness change auditory evoked potential asymmetries? Clin. Neurophysiol. 2008;119:576–586. doi: 10.1016/j.clinph.2007.11.010.

- Holm S. A simple sequentially rejective multiple test procedure. Scand. J. Stat. 1979;6:65–70.

- Humes L.E. Understanding the speech-understanding problems of the hearing impaired. J. Am. Acad. Audiol. 1991;2:59–69.

- Jung T.-P., Makeig S., Humphries C., Lee T., McKeown M.J., Iragui I., Sejnowski T.J. Removing electroencephalographic artefacts by blind source separation. Psychophysiology. 2000;37:163–178.

- Jung T.-P., Makeig S., Westerfield M., Townsend J., Courchesne E., Sejnowski T.J. Removal of eye activity artifacts from visual event-related potentials in normal and clinical subjects. Clin. Neurophysiol. 2000;111:1745–1758. doi: 10.1016/s1388-2457(00)00386-2.

- Kim M.-B., Shim H.-Y., Jin S.H., Kang S., Woo J., Han J.C., Lee J.Y., Kim M., Cho Y.-S., Moon I.J., Hong S.H. Cross-modal and intra-modal characteristics of visual function and speech perception performance in postlingually deafened, cochlear implant users. PLoS One. 2016;11 doi: 10.1371/journal.pone.0148466.

- Land R., Baumhoff P., Tillein J., Lomber S.G., Hubka P., Kral A. Cross-modal plasticity in higher-order auditory cortex of congenitally deaf cats does not limit auditory responsiveness to cochlear implants. J. Neurosci. 2016;36:6175–6185. doi: 10.1523/JNEUROSCI.0046-16.2016.

- Laurienti P., Burdette J., Maldjian J., Wallace M. Enhanced multisensory integration in older adults. Neurobiol. Aging. 2006;27:1155–1163. doi: 10.1016/j.neurobiolaging.2005.05.024.

- Lazard D.S., Innes-Brown H., Barone P. Adaptation of the communicative brain to post-lingual deafness. Evidence from functional imaging. Hear. Res. 2014;307:136–143. doi: 10.1016/j.heares.2013.08.006.

- Lee D.S., Lee J.S., Oh S.H., Kim S.-K., Kim J.-W., Chung J.-K., Lee M.C., Kim C.S. Deafness: cross-modal plasticity and cochlear implants. Nature. 2001;409:149–150. doi: 10.1038/35051653.

- Lenarz M., Sönmez H., Joseph G., Büchner A., Lenarz T. Cochlear implant performance in geriatric patients. Laryngoscope. 2012;122:1361–1365. doi: 10.1002/lary.23232.

- Lin F.H., Witzel T., Ahlfors S.P., Stufflebeam S.M., Belliveau J.W., Hämäläinen M.S. Assessing and improving the spatial accuracy in MEG source localization by depth-weighted minimum-norm estimates. NeuroImage. 2006;31:160–171. doi: 10.1016/j.neuroimage.2005.11.054.

- Lomber S.G., Meredith M.A., Kral A. Cross-modal plasticity in specific auditory cortices underlies visual compensations in the deaf. Nat. Neurosci. 2010;13:1421–1427. doi: 10.1038/nn.2653.

- MacDonald J., McGurk H. Visual influences on speech perception processes. Percept. Psychophys. 1978;24:253–257. doi: 10.3758/bf03206096.

- McGurk H., MacDonald J. Hearing lips and seeing voices. Nature. 1976;264:746–748. doi: 10.1038/264746a0.

- Moody-Antonio S., Takayanagi S., Masuda A. Improved speech perception in adult congenitally deafened cochlear implant recipients. Otol. Neurotol. 2005;26:649–654. doi: 10.1097/01.mao.0000178124.13118.76.

- Moore D.R., Shannon R.V. Beyond cochlear implants: awakening the deafened brain. Nat. Neurosci. 2009;12:686–691. doi: 10.1038/nn.2326.

- Pantev C., Dinnesen A., Ross B., Wollbrink A., Knief A. Dynamics of auditory plasticity after cochlear implantation: a longitudinal study. Cereb. Cortex. 2006;16:31–36. doi: 10.1093/cercor/bhi081.

- Remez R.E. Three puzzles of multimodal speech perception. In: Vatikiotis-Bateson E., Bailly G., Perrier P., editors. Audiovisual Speech Processing. Cambridge University Press; Cambridge: 2012. pp. 4–20.

- Rönnberg J., Samuelsson S., Lyxell B., Arlinger S. Lipreading with auditory low-frequency information. Contextual constraints. Scand. Audiol. 1996;25:127–132. doi: 10.3109/01050399609047994.

- Rosenblum L.D. Speech perception as a multimodal phenomenon. Curr. Dir. Psychol. Sci. 2008;17:405–409. doi: 10.1111/j.1467-8721.2008.00615.x.

- Ross L.A., Saint-Amour D., Leavitt V.M., Javitt D.C., Foxe J.J. Do you see what I am saying? Exploring visual enhancement of speech comprehension in noisy environments. Cereb. Cortex. 2007;17:1147–1153. doi: 10.1093/cercor/bhl024.

- Rossion B., Jacques C. Does physical interstimulus variance account for early electrophysiological face sensitive responses in the human brain? Ten lessons on the N170. NeuroImage. 2008;39:1959–1979. doi: 10.1016/j.neuroimage.2007.10.011.

- Rouger J., Lagleyre S., Fraysse B., Deneve S., Deguine O., Barone P. Evidence that cochlear-implanted deaf patients are better multisensory integrators. Proc. Natl. Acad. Sci. U. S. A. 2007;104:7295–7300. doi: 10.1073/pnas.0609419104.

- Rouger J., Fraysse B., Deguine O., Barone P. McGurk effects in cochlear-implanted deaf subjects. Brain Res. 2008;1188:87–99. doi: 10.1016/j.brainres.2007.10.049.

- Rouger J., Lagleyre S., Démonet J.F., Fraysse B., Deguine O., Barone P. Evolution of crossmodal reorganization of the voice area in cochlear-implanted deaf patients. Hum. Brain Mapp. 2012;33:1929–1940. doi: 10.1002/hbm.21331.

- Sandmann P., Dillier N., Eichele T., Meyer M., Kegel A., Pascual-Marqui R.D., Marcar V.L., Jäncke L., Debener S. Visual activation of auditory cortex reflects maladaptive plasticity in cochlear implant users. Brain. 2012;135:555–568. doi: 10.1093/brain/awr329.

- Sandmann P., Plotz K., Hauthal N., de Vos M., Schönfeld R., Debener S. Rapid bilateral improvement in auditory cortex activity in postlingually deafened adults following cochlear implantation. Clin. Neurophysiol. 2015;126:594–607. doi: 10.1016/j.clinph.2014.06.029.

- Schierholz I., Finke M., Schulte S., Hauthal N., Kantzke C., Rach S., Büchner A., Dengler R., Sandmann P. Enhanced audio-visual interactions in the auditory cortex of elderly cochlear-implant users. Hear. Res. 2015:133–147. doi: 10.1016/j.heares.2015.08.009.

- Schorr E.A., Fox N.A., van Wassenhove V., Knudsen E.I. Auditory-visual fusion in speech perception in children with cochlear implants. Proc. Natl. Acad. Sci. U. S. A. 2005;102:18748–18750. doi: 10.1073/pnas.0508862102.

- Schwarzkopf D., De Haas B. Better ways to improve standards in brain-behavior correlation analysis. Front. Hum. Neurosci. 2012;6 doi: 10.3389/fnhum.2012.00200.

- Sekiyama K., Soshi T., Sakamoto S. Enhanced audiovisual integration with aging in speech perception: a heightened McGurk effect in older adults. Front. Psychol. 2014;5:1–12. doi: 10.3389/fpsyg.2014.00323.

- Sharma A., Glick H., Campbell J., Torres J., Dorman M., Zeitler D.M. Cortical plasticity and reorganization in pediatric single-sided deafness pre- and postcochlear implantation. Otol. Neurotol. 2016;37:e26–e34. doi: 10.1097/MAO.0000000000000904.

- Stenroos M., Hunold A., Haueisen J. Comparison of three-shell and simplified volume conductor models in magnetoencephalography. NeuroImage. 2014;94:337–348. doi: 10.1016/j.neuroimage.2014.01.006.

- Strelnikov K., Rouger J., Barone P., Deguine O. Role of speechreading in audiovisual interactions during the recovery of speech comprehension in deaf adults with cochlear implants. Scand. J. Psychol. 2009;50:437–444. doi: 10.1111/j.1467-9450.2009.00741.x.

- Strelnikov K., Rouger J., Demonet J.-F.F., Lagleyre S., Fraysse B., Deguine O., Barone P. Visual activity predicts auditory recovery from deafness after adult cochlear implantation. Brain. 2013;136:3682–3695. doi: 10.1093/brain/awt274.

- Stropahl M., Plotz K., Schönfeld R., Lenarz T., Sandmann P., Yovel G., De Vos M., Debener S. Cross-modal reorganization in cochlear implant users: auditory cortex contributes to visual face processing. NeuroImage. 2015;121:159–170. doi: 10.1016/j.neuroimage.2015.07.062.

- Stropahl M., Schellhardt S., Debener S. McGurk stimuli for the investigation of multisensory integration in cochlear implant users: the Oldenburg Audio Visual Speech Stimuli (OLAVS). Psychon. Bull. Rev. 2016:1–10. doi: 10.3758/s13423-016-1148-9.

- Stropahl M., Chen L.-C., Debener S. Cortical reorganization in postlingually deaf cochlear implant users: intra-modal and cross-modal considerations. Hear. Res. 2017;343:128–137. doi: 10.1016/j.heares.2016.07.005.

- Suarez H., Mut F., Lago G., Silveira A., De Bellis C., Velluti R., Pedemonte M., Svirsky M.A. Changes in the cerebral blood flow in postlingual cochlear implant users. Acta Otolaryngol. 1999;119:239–243. doi: 10.1080/00016489950181747.

- Sumby W.H., Pollack I. Visual contribution to speech intelligibility in noise. J. Acoust. Soc. Am. 1954;26:212.

- Tadel F., Baillet S., Mosher J.C., Pantazis D., Leahy R.M. Brainstorm: a user-friendly application for MEG/EEG analysis. Comput. Intell. Neurosci. 2011;2011:8. doi: 10.1155/2011/879716.

- Tremblay C., Champoux F., Lepore F., Théoret H. Audiovisual fusion and cochlear implant proficiency. Restor. Neurol. Neurosci. 2010;28:283–291. doi: 10.3233/RNN-2010-0498.

- Viola F.C., Thorne J., Edmonds B., Schneider T., Eichele T., Debener S. Semi-automatic identification of independent components representing EEG artifact. Clin. Neurophysiol. 2009;120:868–877. doi: 10.1016/j.clinph.2009.01.015.

- Wagener K.C., Kühnel V., Kollmeier B. Entwicklung und Evaluation eines Satztests für die deutsche Sprache I: Design des Oldenburger Satztests. Zeitschrift für Audiol. 1999;38:4–15.

- Wilson B., Dorman M.F. Cochlear implants: a remarkable past and a brilliant future. Hear. Res. 2008;242:3–21. doi: 10.1016/j.heares.2008.06.005.