Abstract

Ultrasound tomography (UST) image segmentation is fundamental to breast density estimation, treatment response analysis, and anatomical change quantification. Existing methods are time-consuming and require extensive manual interaction. To address these issues, this paper proposes an automatic algorithm based on GrabCut (AUGC). The method automates the incomplete-labeling initialization of GrabCut and is accelerated with multicore parallel programming. To verify its performance, AUGC is applied to segment thirty-two in vivo UST volumetric images. Performance is assessed with volume overlap and error metrics (Dice coefficient (D), Jaccard coefficient (J), and false positive (FP)) and with time cost (TC). Furthermore, AUGC is compared with Confidence Connected Region Growing (CCRG), watershed, and Active Contour based Curve Delineation (ACCD). Experimental results indicate that AUGC achieves the highest accuracy (D = 0.9275, J = 0.8660, and FP = 0.0077) and takes about 4 seconds on average to process a volumetric image. These results suggest that AUGC can benefit large-scale studies that use UST images for breast cancer screening and pathological quantification.

1. Introduction

Breast cancer threatens women's lives worldwide. It is the second most common form of cancer, with more than 1.3 million women diagnosed annually [1, 2]. In the United States, an estimated 12% of women will develop this disease during their lifetime [1]. Consequently, early detection of breast cancer is increasingly critical. Breast cancer screening plays an important role in early detection, diagnosis, treatment planning, and therapeutic verification. In clinical practice, medical imaging is one of the primary means of breast cancer screening. Among the available modalities, mammography remains the first choice, while supplementary modalities include hand-held ultrasound, computed tomography (CT), and magnetic resonance imaging (MRI) [3, 4]. Of these, mammography and hand-held ultrasound produce two-dimensional (2D) images of the compressed breast, which leads to various deficiencies in clinical applications. Moreover, mammography uses X-rays, exposing women to potentially harmful ionizing radiation. MRI, on the other hand, provides three-dimensional (3D) images of the breast without ionizing radiation; however, its high cost has prevented it from being widely adopted for breast cancer screening.

To provide an affordable and accurate 3D imaging technique for breast cancer screening, Duric et al. developed ultrasound tomography (UST) [5, 6]. UST scans the entire breast using ring-array transducers with B-mode imaging, which reduces breast compression and operator subjectivity during image acquisition [7]. Moreover, UST imaging involves no radiation and presents breast anatomy in 3D space [8]. As such, it aids tumor differentiation when tumors are obscured or located within dense breasts [5, 8–10]. In addition, UST volumetric images can be applied to breast density estimation [6, 11, 12], treatment response analysis [13], anatomical change quantification, and breast tumor analysis [14, 15]. In summary, UST image acquisition is safe, cost-effective, and highly efficient. In clinical practice, breast segmentation affects subsequent image analysis for risk assessment, detection, and diagnosis [16, 17], as well as cancer treatment [18, 19]. Furthermore, extracting the breast region from the surrounding water enhances tissue visualization and gives physicians and radiologists a better understanding of breast tumor position [7].

To our knowledge, several algorithms have been developed for UST image segmentation. Balic et al. [20] proposed an algorithm based on active contours, which is time-consuming and whose success depends on a good initial contour. Furthermore, this method lacks a systematic evaluation of accuracy. Hopp et al. [21] presented a method that integrates edge detection and surface fitting for breast segmentation. However, this method typically requires substantial user interaction and postprocessing to produce results. Sak et al. [22] used K-means [23] and thresholding [24] to reduce user interaction, although these methods still require proper parameter settings. In general, existing methods suffer from heavy time consumption and excessive interaction, which makes them unsuitable for large-scale studies. To overcome these issues, we propose an approach based on GrabCut for automatic segmentation of UST images. GrabCut uses incomplete labeling to reduce user interaction and seeks an efficient segmentation through iterative energy minimization [25]. It belongs to the family of graph cut methods [26–31] and performs well in interactive segmentation of 2D natural images. In the presented algorithm, we automate the incomplete labeling of GrabCut and deploy the algorithm on multiple cores to speed up segmentation, as demonstrated in [32].

The organization of this paper is as follows. The proposed method, experimental design, and evaluation criteria are presented in Section 2. Section 3 presents the experimental results, ranging from perceived (visual) evaluation to objective evaluation. Discussion and conclusion are given in Sections 4 and 5, respectively.

2. Methods

2.1. Proposed Algorithm

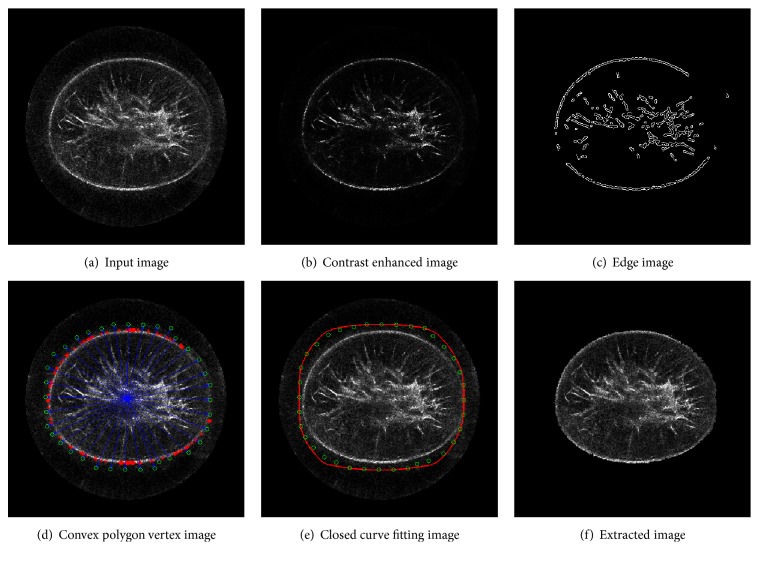

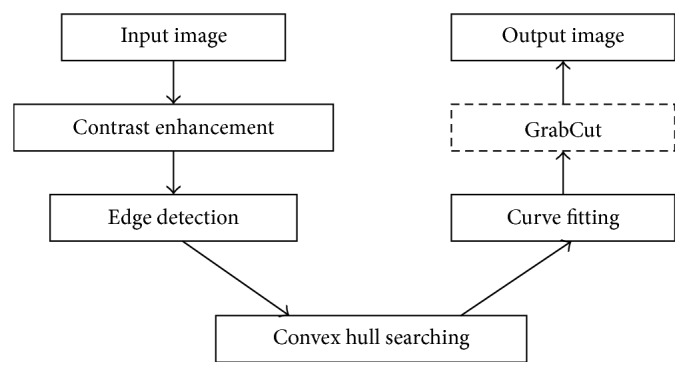

As a semiautomatic algorithm, GrabCut is widely used in various scenarios [33, 34]. However, in clinical applications, an automatic method that reduces workload and minimizes subjective bias is always desirable. Figure 1 illustrates the proposed automatic method (AUGC) based on GrabCut. It integrates contrast enhancement, edge detection, convex hull searching, and curve fitting for automatic initialization of GrabCut.

Figure 1.

The flowchart of the proposed AUGC algorithm.

To make the flowchart clearer, we present the case study shown in Figure 2. First, contrast enhancement suppresses background content in the input image and highlights the breast boundaries and glandular tissue (b). Then, the major boundaries are detected (c). After that, convex hull searching is applied and key points (red markers) are found (d). In general, the breast boundaries in UST images are largely incomplete. For robustness, we therefore push these key points outward (green circles) to enclose the tissue region of interest, as shown in (d). Next, the green circles are interpolated with a Hermite curve to form a binary mask (red closed curve) for incomplete labeling (e). Finally, (f) shows the extracted breast region.

Figure 2.

A case study of AUGC in UST image segmentation. (a) The UST image slice, (b) the input image after contrast enhancement, (c) the edge image detected by the Canny operator with adaptive thresholding, (d) the convex polygon vertex image produced by convex hull searching and postprocessing, (e) the closed curve image produced by the Hermite cubic curve algorithm, and (f) the resultant image extracted by GrabCut.

Image Preprocessing. This step includes contrast enhancement and boundary detection of UST images. A sigmoid function is used to enhance contrast, followed by a median filter with a 3 × 3 kernel to suppress speckle noise. As shown in Figure 2(b), this step not only suppresses background content but also reduces speckle noise. Moreover, because image contrast is enhanced, it benefits edge detection, as shown in Figure 2(c).
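The contrast-enhancement part of this step can be sketched in NumPy as follows. The sketch assumes a sigmoid of the form used by ITK's SigmoidImageFilter, f(x) = (max − min) / (1 + exp(−(x − β)/α)) + min; the values of α and β and the 3 × 3 median kernel are illustrative assumptions, not the authors' settings, and the function names are hypothetical. Canny edge detection is omitted.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def sigmoid_enhance(img, alpha, beta, out_min=0.0, out_max=255.0):
    """Sigmoid intensity mapping: (max - min) / (1 + exp(-(x - beta) / alpha)) + min."""
    x = img.astype(np.float64)
    return (out_max - out_min) / (1.0 + np.exp(-(x - beta) / alpha)) + out_min


def median_filter(img, k=3):
    """k x k median filter with edge padding, so the output keeps the input shape."""
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    windows = sliding_window_view(padded, (k, k))  # shape (H, W, k, k)
    return np.median(windows, axis=(-2, -1))


def preprocess(slice_2d, alpha=10.0, beta=100.0, k=3):
    """Hypothetical preprocessing: sigmoid contrast stretch, then speckle suppression."""
    enhanced = sigmoid_enhance(slice_2d, alpha, beta)
    return median_filter(enhanced, k).astype(np.uint8)
```

Intensities well below β are pushed toward 0 (water background), intensities well above β toward 255, and the median filter then removes isolated speckle pixels.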

Convex Hull Searching. A fast convex hull algorithm is used to determine a convex hull point set $V_{\text{hull}} = \{V_i\}_{i=1}^{n}$. From $V_{\text{hull}}$, we calculate the centroid $C$, the distance $R$ between $C$ and the point $P_f$ in $V_{\text{hull}}$ farthest from it, and the four extreme values $x_{\min}$, $x_{\max}$, $y_{\min}$, and $y_{\max}$ of the region of interest. A set of 36 points $V_{\text{psu}} = \{v_i\}_{i=1}^{36}$ is then generated uniformly on the circle centered at $C$ with radius $R = \lVert C - P_f \rVert_2$. Note that outliers in Figure 2(c) are removed with morphological operations before convex hull searching.
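A minimal pure-Python sketch of this step is shown below. Andrew's monotone chain stands in for the unspecified "fast convex hull algorithm", and the centroid $C$ is assumed to be the mean of the hull vertices (the text does not say how $C$ is computed). Function names are hypothetical; input points are (x, y) edge-pixel coordinates after outlier removal.

```python
import math


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        # z-component of (a - o) x (b - o); > 0 means a left turn
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]  # drop the duplicated end points


def pseudo_contour(v_hull, n_points=36):
    """Centroid C, radius R = ||C - P_f||_2, extreme values, and V_psu on the circle (C, R)."""
    cx = sum(p[0] for p in v_hull) / len(v_hull)  # assumption: C = mean of hull vertices
    cy = sum(p[1] for p in v_hull) / len(v_hull)
    p_f = max(v_hull, key=lambda p: math.hypot(p[0] - cx, p[1] - cy))
    r = math.hypot(p_f[0] - cx, p_f[1] - cy)
    extremes = {
        "xmin": min(p[0] for p in v_hull), "xmax": max(p[0] for p in v_hull),
        "ymin": min(p[1] for p in v_hull), "ymax": max(p[1] for p in v_hull),
    }
    # n_points uniformly spaced points on the circle centred at C with radius R
    v_psu = [(cx + r * math.cos(2 * math.pi * k / n_points),
              cy + r * math.sin(2 * math.pi * k / n_points)) for k in range(n_points)]
    return (cx, cy), r, extremes, v_psu
```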

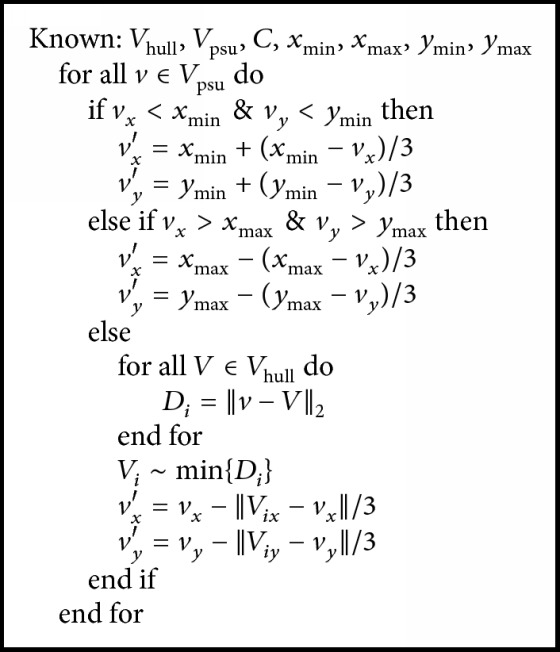

As shown in Figure 2(c), the breast boundary is incomplete. To tackle this problem, the convex hull point set $V_{\text{hull}}$ and the pseudo-contour point set $V_{\text{psu}}$ are combined to refine the breast mask, as given in Algorithm 1.

Algorithm 1.

Convex hull refinement (the refinement flowchart of the convex hull points).

Figure 2(d) shows the results after convex hull searching and refinement: red markers are the original convex hull point set $V_{\text{hull}}$, green circles are the adjusted points used to generate the breast mask, and the blue point is the centroid $C$.
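Algorithm 1 is given only as a figure, so the following is a hypothetical refinement, not the authors' procedure. Under the assumption that each of the 36 pseudo-contour directions from $C$ is kept and the point is placed on the convex hull boundary in that direction and then pushed outward by a margin δ, the green points could be computed as below (ray/convex-polygon intersection; names and margin are illustrative).

```python
import math


def ray_hull_distance(center, angle, hull):
    """Distance from center (inside the convex hull) to the hull boundary along angle."""
    cx, cy = center
    ux, uy = math.cos(angle), math.sin(angle)
    best = math.inf
    n = len(hull)
    for i in range(n):
        ax, ay = hull[i]
        bx, by = hull[(i + 1) % n]
        ex, ey = bx - ax, by - ay
        denom = ux * ey - uy * ex  # cross(u, e); zero when the ray is parallel to the edge
        if abs(denom) < 1e-12:
            continue
        # Solve C + t*u = A + s*e for t (along the ray) and s (along the edge)
        wx, wy = ax - cx, ay - cy
        t = (wx * ey - wy * ex) / denom
        s = (wx * uy - wy * ux) / denom
        if t >= 0 and -1e-9 <= s <= 1 + 1e-9:
            best = min(best, t)
    return best


def refine_points(center, hull, n_points=36, margin=5.0):
    """Hypothetical refinement: hull boundary point per direction, pushed out by margin."""
    pts = []
    for k in range(n_points):
        a = 2 * math.pi * k / n_points
        r = ray_hull_distance(center, a, hull) + margin
        pts.append((center[0] + r * math.cos(a), center[1] + r * math.sin(a)))
    return pts
```

Because every point lies outside the hull by at least the margin, the resulting curve encloses the detected boundary even where it has gaps.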

Closed Curve Fitting. The Hermite cubic curve is effective for smooth interpolation between control points [35], and its four basis functions are described in

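$$h_1(s) = 2s^3 - 3s^2 + 1, \quad h_2(s) = -2s^3 + 3s^2, \quad h_3(s) = s^3 - 2s^2 + s, \quad h_4(s) = s^3 - s^2. \tag{1}$$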

Moreover, the general form of the Hermite curve is expressed in (2) below, where the parameter s runs from 0 to 1 with equal spacing Δs = 0.1. The red closed curve in Figure 2(e) is the Hermite curve obtained from the 36 refined points. Pixels inside the closed curve are potential foreground, while pixels outside it are definite background for GrabCut initialization.

$$P(s) = h_1(s)\,P_0 + h_2(s)\,P_1 + h_3(s)\,\mu_0 + h_4(s)\,\mu_1, \quad s \in [0, 1]. \tag{2}$$

Here, $P_0$ and $P_1$ are the starting and ending points of the curve, and $\mu_0$ and $\mu_1$ are the tangents describing how the curve leaves the starting point and arrives at the ending point, respectively.
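A minimal NumPy sketch of closed-curve fitting and mask generation follows. It uses the standard cubic Hermite basis with Δs = 0.1 as stated above; the choice of tangents is not given in the text, so Catmull-Rom tangents μ_i = (P_{i+1} − P_{i−1})/2 are assumed. The rasterization uses an even-odd point-in-polygon test; all function names are hypothetical.

```python
import numpy as np


def hermite_basis(s):
    """Standard cubic Hermite basis functions evaluated at parameter values s."""
    s = np.asarray(s, dtype=float)
    h1 = 2 * s**3 - 3 * s**2 + 1
    h2 = -2 * s**3 + 3 * s**2
    h3 = s**3 - 2 * s**2 + s
    h4 = s**3 - s**2
    return h1, h2, h3, h4


def closed_hermite_curve(points, ds=0.1):
    """Closed Hermite curve through control points (N, 2); returns sampled (x, y) points."""
    P = np.asarray(points, dtype=float)
    # Assumption: Catmull-Rom tangents from the neighbouring control points
    tangents = 0.5 * (np.roll(P, -1, axis=0) - np.roll(P, 1, axis=0))
    # s = 0, ds, ..., 1 - ds; s = 1 is the start of the next segment
    s = np.linspace(0.0, 1.0, int(round(1.0 / ds)) + 1)[:-1]
    h1, h2, h3, h4 = hermite_basis(s)
    segments = []
    for i in range(len(P)):
        j = (i + 1) % len(P)  # wrap around to close the curve
        seg = (np.outer(h1, P[i]) + np.outer(h2, P[j])
               + np.outer(h3, tangents[i]) + np.outer(h4, tangents[j]))
        segments.append(seg)
    return np.vstack(segments)


def polygon_mask(curve, shape):
    """Even-odd rule: True for pixels (row=y, col=x) inside the closed curve."""
    h, w = shape
    ys, xs = np.mgrid[0:h, 0:w]
    inside = np.zeros((h, w), dtype=bool)
    x1, y1 = curve[:, 0], curve[:, 1]
    x2, y2 = np.roll(x1, -1), np.roll(y1, -1)
    for a, b, c, d in zip(x1, y1, x2, y2):
        if b == d:
            continue  # horizontal edges never cross a horizontal scan line
        crosses = (b > ys) != (d > ys)
        x_int = a + (ys - b) * (c - a) / (d - b)
        inside ^= crosses & (xs < x_int)
    return inside
```

The boolean mask separates potential foreground (inside) from definite background (outside) for GrabCut initialization.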

GrabCut. After closed curve fitting, Gaussian Mixture Models (GMMs) [31] are initialized with the pixels inside and outside the closed curve, and a flow network is built in which each pixel is a graph node. A max-flow/min-cut algorithm is then applied to segment the graph [27]. An example of the extracted breast is shown in Figure 2(f).

Overall, the procedure above handles only one slice, whereas the entire breast UST volume is a stack of gray-scale slices. Because the slices can be processed independently, AUGC can be deployed with parallel programming, as presented in [32]. On modern computer architectures, parallel programming can be realized on graphics processing units (GPUs) or on multicore central processing units (CPUs). GPU-based acceleration complicates algorithm development and costs extra programming time. Parallel programming on multicore CPUs, by contrast, is more practical because the technology is mature and comparatively easy to use. Personal computers with multicore CPUs are also readily available; therefore, multicore CPU parallelism is used in the proposed method.
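The slice-level parallelism can be illustrated with a short Python sketch. It assumes that the per-slice work is dominated by NumPy/OpenCV calls, many of which release the GIL, so a thread pool can use several cores; for pure-Python per-slice work, `ProcessPoolExecutor` would be the usual substitute. `segment_volume` and `segment_slice` are hypothetical names, not the authors' C++ implementation.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def segment_volume(volume, segment_slice, workers=None):
    """Apply segment_slice to every slice of volume concurrently; results keep slice order."""
    workers = workers or os.cpu_count() or 1
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map yields results in input order, so the output stack matches the input
        return list(pool.map(segment_slice, volume))
```

For example, `segment_volume(slices, lambda s: grabcut_from_mask(s, make_mask(s)))` would segment every slice of a volume, where `make_mask` is a hypothetical per-slice initialization.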

2.2. Algorithms for Comparison

Three algorithms are used for comparison in this study. The first one, CCRG, uses simple region statistics to grow a region [36]. It calculates the mean intensity m and the standard deviation σ of a given region, and a user-supplied multiplier l defines the acceptance range [m − lσ, m + lσ]. In our experiment, l is tuned between 3 and 5, the maximum number of iterations is 500, and the seed radius is 4 mm.
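The CCRG idea can be sketched in 2D as follows: initial statistics come from a neighbourhood around the seeds, pixels connected to the seeds within [m − lσ, m + lσ] are collected, and the statistics are re-estimated from the grown region for a fixed number of iterations. This mirrors the behaviour of ITK's `ConfidenceConnectedImageFilter` (parameters `Multiplier`, `NumberOfIterations`, `InitialNeighborhoodRadius`), but it is an illustrative sketch, not the ITK code; the radius here is in pixels rather than millimetres, and the function names are hypothetical.

```python
from collections import deque

import numpy as np


def _flood(img, seeds, lo, hi):
    """4-connected flood fill from seeds over pixels with lo <= value <= hi."""
    h, w = img.shape
    region = np.zeros((h, w), dtype=bool)
    queue = deque()
    for r, c in seeds:
        if lo <= img[r, c] <= hi and not region[r, c]:
            region[r, c] = True
            queue.append((r, c))
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < h and 0 <= nc < w and not region[nr, nc] and lo <= img[nr, nc] <= hi:
                region[nr, nc] = True
                queue.append((nr, nc))
    return region


def confidence_connected(img, seeds, multiplier=3.0, iterations=5, radius=1):
    """Grow a region whose intensities lie within mean +/- multiplier * std."""
    img = np.asarray(img, dtype=float)
    # Initial statistics from a (2*radius+1)^2 neighbourhood around each seed
    vals = np.concatenate([
        img[max(r - radius, 0):r + radius + 1, max(c - radius, 0):c + radius + 1].ravel()
        for r, c in seeds])
    region = np.zeros(img.shape, dtype=bool)
    for _ in range(iterations + 1):
        m, sd = vals.mean(), vals.std()
        region = _flood(img, seeds, m - multiplier * sd, m + multiplier * sd)
        if not region.any():
            break
        vals = img[region]  # re-estimate statistics from the current region
    return region
```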

The second algorithm, watershed, is a level set algorithm that classifies pixels into regions using gradient descent [37]. A key parameter of watershed is the water level (wl), which is tuned for each image. In our experiment, we start at wl = 0.2. If too many small regions are obtained, we increase it; otherwise, we decrease it until a visually acceptable result is produced. Across the UST volumes, the water level ranged from 0.16 to 0.23. Since the resulting regions are rendered in different colors, a postprocessing step merges them into two groups: the background and the breast region.

The final algorithm, ACCD, is derived from active contour evolution [38] and allows for control point delineation [39]. The number of control points is proportional to the region boundary length. Although the basic active contour has more than ten tunable parameters, we focus on only two key parameters, α and β, which define the relative importance of the internal and external energy [38]. Note that we place control points near, but not on, the breast boundary. Compared with the original algorithm in [39], no refinement is involved.

Finally, the classification and comparison of the algorithms above are summarized in Table 1. For technical details, please refer to [35–39].

Table 1.

Classification and comparison of involved algorithms in this paper.

| Algorithm | Category | Initialization | Tuned parameters |
|---|---|---|---|
| AUGC | GrabCut | Canny operator with adaptive thresholding; the structuring element radii of the morphological operators are initialized to 4 and 8 | None |
| ACCD | Active contour | 20 control points per slice | α and β |
| Watershed | Level sets | 0.1 | wl |
| CCRG | Region growing | 10 seeds for each volume | l |

2.3. Case Study and Evaluation

Data Collection. Thirty-two whole-breast UST volumes were collected (SoftVue™, Delphinus Medical Technologies, Michigan, USA). Each image slice is 512 × 512 pixels, and the voxel size of the UST volumes is 0.5 × 0.5 × 2.0 mm³. An experienced radiologist defined the starting and ending slices following the procedure described in [14], leaving an average of 17 ± 2 slices per volume. The radiologist also manually delineated the breast region in each slice to build the ground truth for algorithm validation.

Software Platform. AUGC and CCRG were implemented in Visual Studio 2010 (https://www.visualstudio.com) with OpenCV (http://opencv.org) and ITK (https://itk.org) [40]; ACCD had previously been implemented in MATLAB [35]; and the watershed algorithm was run in VolView (https://www.kitware.com/opensource/volview.html). All code was run on a Windows 7 workstation with a four-core Intel® Core™ CPU at 3.70 GHz and 8 GB of DDR RAM.

Accuracy Evaluation. Three criteria, Dice (D), Jaccard (J) coefficients, and false positive (FP), are used to evaluate the accuracy of breast image segmentation [41]. These measures are defined in

| (3) |

where S and G denote the segmentation result and the corresponding ground truth, respectively, and |·| denotes the number of breast voxels. All three measures range from 0 to 1. For D and J, higher values indicate better performance; for FP, a value of zero corresponds to a perfect segmentation of the breast volume.
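The metrics can be computed on boolean voxel masks as sketched below. D and J follow their standard definitions, D = 2|S ∩ G| / (|S| + |G|) and J = |S ∩ G| / |S ∪ G|. The normalization of FP is not stated in the text, so the sketch assumes FP = |S \ G| / |S|, which stays in [0, 1] and is zero for a perfect segmentation; adjust it if the intended definition differs.

```python
import numpy as np


def overlap_metrics(seg, gt):
    """Dice, Jaccard, and (assumed) false-positive fraction for boolean masks."""
    S = np.asarray(seg, dtype=bool)
    G = np.asarray(gt, dtype=bool)
    inter = np.logical_and(S, G).sum()
    union = np.logical_or(S, G).sum()
    dice = 2.0 * inter / (S.sum() + G.sum())
    jaccard = inter / union
    # Assumption: FP = |S \ G| / |S| (fraction of segmented voxels outside the ground truth)
    fp = np.logical_and(S, ~G).sum() / S.sum()
    return float(dice), float(jaccard), float(fp)
```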

To evaluate real-time capability, the time cost (TC) per slice is defined as

$$TC = \frac{1}{t}\sum_{i=1}^{n} tc_i \tag{4}$$

where t is the total number of image slices, n is the number of breast volumes, and $tc_i$ is the time cost of the i-th volume. Note that the time spent on parameter tuning and manual initialization for the semiautomatic algorithms is not taken into account.
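A short sketch of how TC could be measured: the wall-clock time of each volume's segmentation is summed and divided by the total slice count, giving seconds per slice. The `segment` callable is a hypothetical stand-in for any of the compared methods; parameter tuning and manual initialization are deliberately left outside the timed call.

```python
import time


def time_cost(volumes, segment):
    """TC = (sum of per-volume times tc_i) / (total slice count t), in seconds per slice."""
    total_time, total_slices = 0.0, 0
    for vol in volumes:
        start = time.perf_counter()
        segment(vol)  # only the segmentation itself is timed
        total_time += time.perf_counter() - start
        total_slices += len(vol)
    return total_time / total_slices
```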

3. Results

3.1. Perceived Evaluation

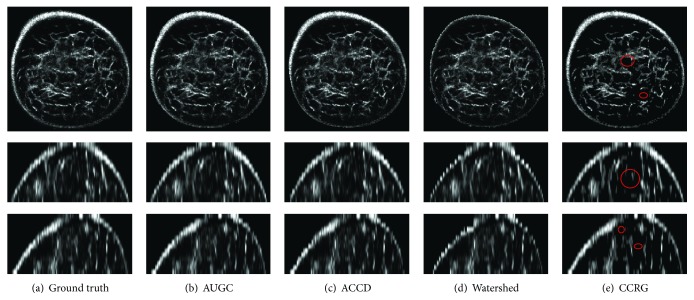

Perceived evaluation of the segmentation results is shown in Figure 3. From left to right are the ground truth and the breast regions produced by AUGC, ACCD, watershed, and CCRG; from top to bottom are the coronal, sagittal, and transverse views, respectively. Note that the images are cropped for display. No visual difference is observed between the algorithms in this case, except that watershed fails to detect bright pixels on the breast boundary and CCRG fails to segment the foreground content marked by red circles.

Figure 3.

Perceived segmentation results of a UST image. (a) Ground truth produced by manual delineation, (b) AUGC, (c) ACCD, (d) watershed, and (e) CCRG. Images are interpolated in the sagittal and coronal views and then cropped in all three views for display purposes.

3.2. Quantitative Evaluation

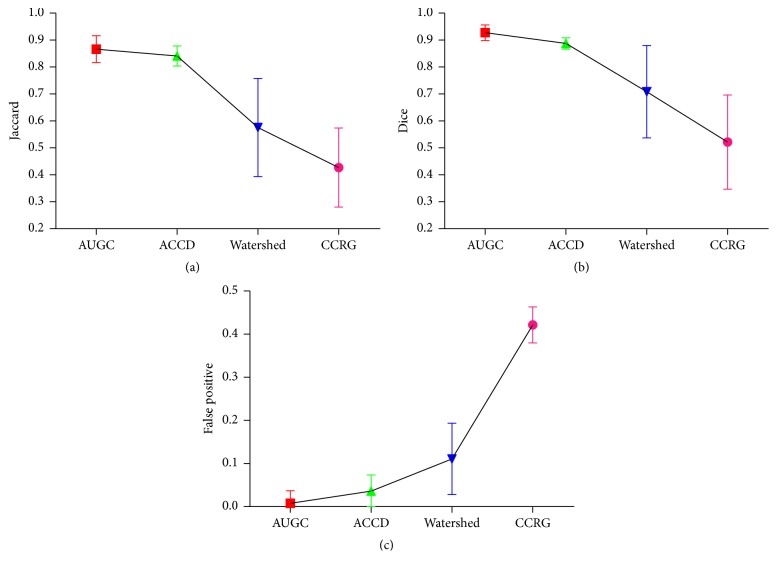

Quantitative evaluation of all algorithms for UST image segmentation is shown in Figure 4, where different colors indicate different algorithms; panels (a), (b), and (c) show the values of D, J, and FP, respectively. AUGC outperforms the other algorithms, followed by ACCD. Furthermore, both AUGC and ACCD yield relatively stable values of D, J, and FP.

Figure 4.

Accuracy evaluation of the AUGC, ACCD, watershed, and CCRG segmentation methods: (a) D values, (b) J values, and (c) FP values.

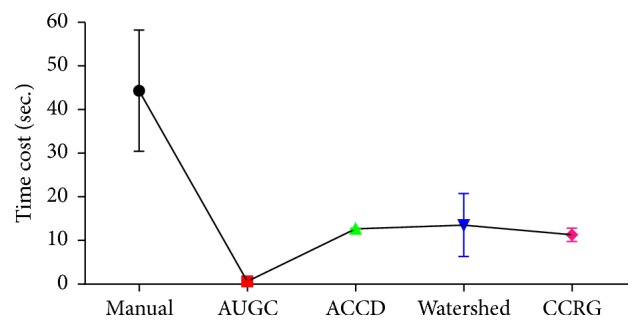

3.3. Real-Time Capability

The time cost per slice of UST image segmentation is shown in Figure 5. Compared with manually building the ground truth (44.33 seconds per slice), all the algorithms speed up breast image segmentation. In particular, AUGC dramatically shortens processing time and makes real-time breast segmentation of UST images feasible.

Figure 5.

Real-time capability of the involved algorithms. Time consumption decreases dramatically from manual segmentation to AUGC.

3.4. Comprehensive Performance Evaluation

Table 2 summarizes the overall performance of the four algorithms. AUGC achieves the best performance, providing not only the highest volume overlap (D and J) but also the lowest error (FP). In addition, AUGC demonstrates real-time capability in image segmentation. ACCD ranks second. Both watershed and CCRG achieve J values below 0.6, and CCRG yields the lowest accuracy and the highest FP.

Table 2.

Comprehensive performance evaluation of involved algorithms.

| Algorithm | Dice (D) | Jaccard (J) | False positive (FP) | Time cost (TC, s/slice) |
|---|---|---|---|---|
| AUGC | 0.9275 | 0.8660 | 0.0077 | 0.2356 |
| ACCD | 0.8874 | 0.8407 | 0.0362 | 12.6742 |
| Watershed | 0.7084 | 0.5757 | 0.1107 | 13.5360 |
| CCRG | 0.5218 | 0.4268 | 0.4214 | 11.3120 |

4. Discussion

UST holds tremendous promise for breast cancer screening and examination, and UST images are valuable in clinical applications such as quantitative breast tissue analysis [5, 9, 10], monitoring of breast mass growth [6, 11], and clinical pathologic diagnosis [12–15]. In this paper, we presented a fully automated algorithm (AUGC) for breast UST image segmentation and compared the performance of four segmentation algorithms on thirty-two volumetric images.

Quantitative evaluation of segmentation performance suggests that AUGC is superior to the other three algorithms, ACCD, watershed, and CCRG, as shown in Figures 3 and 4 and Table 2. Among these methods, CCRG yielded the lowest accuracy and the most false positives. Watershed included background content in its final results. Overall, ACCD is slightly inferior to AUGC. However, ACCD requires a user to place several control points near the breast boundary, and it has more than ten parameters that must be tuned manually, which makes segmentation complicated and laborious. In general, AUGC obtains the best segmentation accuracy.

AUGC is also superior to the other approaches in terms of real-time capability. On a four-core CPU system, it segments an entire UST volumetric image in about four seconds (0.2356 × 16 = 3.7696 seconds). Because slices are processed in parallel, more CPU cores are expected to reduce segmentation time further. Real-time breast extraction plays a critical role in practical applications. For instance, breast density estimation is a routine task before rating breast cancer risk, and manual extraction of the whole breast from UST images currently hampers large-scale studies. With its high accuracy and real-time speed, AUGC paves the way for such studies and can accelerate the application of UST to anatomical change quantification, treatment response analysis, and related tasks.

UST imaging technology is still under development, and remarkable improvements have been made recently [42, 43]. These improvements are expected to enhance UST image quality and tissue contrast. Higher image quality can, in turn, improve the performance of AUGC in breast segmentation, suggesting even greater potential for AUGC to facilitate clinical diagnosis with whole-breast UST images.

5. Conclusion

UST image segmentation is time-consuming and requires extensive user interaction. In this study, an automated algorithm based on GrabCut was proposed and verified. Experimental results validated its good performance in UST image segmentation; furthermore, it segments one slice in less than 0.3 seconds. It is therefore beneficial for large-scale studies and can relieve physicians of the tedious task of UST image segmentation.

Acknowledgments

This work is supported by the grants of the National Key Research Program of China (Grant no. 2016YFC0105102), the Union of Production, Study and Research Project of Guangdong Province (Grant no. 2015B090901039), the Technological Breakthrough Project of Shenzhen City (Grant no. JSGG20160229203812944), the Shenzhen Fundamental Research Program (JCYJ201500731154850923), the Natural Science Foundation of Guangdong Province (Grant no. 2014A030312006), and the CAS Key Laboratory of Human-Machine Intelligence-Synergy Systems, Shenzhen Institutes of Advanced Technology.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

- 1. Desantis C., Ma J., Bryan L., Jemal A. Breast cancer statistics, 2013. CA: A Cancer Journal for Clinicians. 2014;64(1):52–62. doi: 10.3322/caac.21203.

- 2. Fan L., Strasser-Weippl K., Li J.-J., et al. Breast cancer in China. The Lancet Oncology. 2014;15(7):e279–e289. doi: 10.1016/S1470-2045(13)70567-9.

- 3. Tabar L., Dean B. P., Chen T. H., et al. The impact of mammography screening on the diagnosis and management of early-phase breast cancer. In: Breast Cancer: A New Era in Management. 2014. Chapter 2, pp. 31–78.

- 4. Lee N. C., Wong F. L., Jamison P. M., et al. Implementation of the national breast and cervical cancer early detection program: the beginning. Cancer. 2014;120(16):2540–2548. doi: 10.1002/cncr.28820.

- 5. Duric N., Littrup P., Poulo L., et al. Detection of breast cancer with ultrasound tomography: First results with the Computed Ultrasound Risk Evaluation (CURE) prototype. Medical Physics. 2007;34(2):773–785. doi: 10.1118/1.2432161.

- 6. Glide C., Duric N., Littrup P. Novel approach to evaluating breast density utilizing ultrasound tomography. Medical Physics. 2007;34(2):744–753. doi: 10.1118/1.2428408.

- 7. Duric N., Littrup P., Schmidt S., et al. Breast imaging with the SoftVue imaging system: First results. Proceedings of the Medical Imaging 2013: Ultrasonic Imaging, Tomography, and Therapy; February 2013; Lake Buena Vista, Fla, USA. pp. 1–8.

- 8. Duric N., Littrup P., Li C., et al. Breast imaging with SoftVue: Initial clinical evaluation. Proceedings of the Medical Imaging 2014: Ultrasonic Imaging and Tomography; February 2014; San Diego, Calif, USA.

- 9. Li C., Duric N., Littrup P., Huang L. In vivo Breast Sound-Speed Imaging with Ultrasound Tomography. Ultrasound in Medicine and Biology. 2009;35(10):1615–1628. doi: 10.1016/j.ultrasmedbio.2009.05.011.

- 10. Ranger B., Littrup P. J., Duric N., et al. Breast ultrasound tomography versus MRI for clinical display of anatomy and tumor rendering: Preliminary results. American Journal of Roentgenology. 2012;198(1):233–239. doi: 10.2214/AJR.11.6910.

- 11. Glide-Hurst C. K., Duric N., Littrup P. Volumetric breast density evaluation from ultrasound tomography images. Medical Physics. 2008;35(9):3988–3997. doi: 10.1118/1.2964092.

- 12. Duric N., Boyd N., Littrup P., et al. Breast density measurements with ultrasound tomography: A comparison with film and digital mammography. Medical Physics. 2013;40(1):013501. doi: 10.1118/1.4772057.

- 13. Sak M., Duric N., Littrup P., et al. Breast density measurements using ultrasound tomography for patients undergoing tamoxifen treatment. Proceedings of the Medical Imaging 2013: Ultrasonic Imaging, Tomography, and Therapy; February 2013; Lake Buena Vista, Fla, USA. pp. 1–8.

- 14. Khodr Z. G., Sak M. A., Pfeiffer R. M., et al. Determinants of the reliability of ultrasound tomography sound speed estimates as a surrogate for volumetric breast density. Medical Physics. 2015;42(10):5671–5678. doi: 10.1118/1.4929985.

- 15. O'Flynn E., Fromageau J., Ledger M., et al. Breast density measurements with ultrasound tomography: a comparison with non-contrast MRI. Breast Cancer Research. 2015;17(S1). doi: 10.1186/bcr3759.

- 16. Ortiz C. G., Martel A. L. Automatic atlas-based segmentation of the breast in MRI for 3D breast volume computation. Medical Physics. 2012;39(10):5835–5848. doi: 10.1118/1.4748504.

- 17. Lin M., Chen J.-H., Wang X., Chan S., Chen S., Su M.-Y. Template-based automatic breast segmentation on MRI by excluding the chest region. Medical Physics. 2013;40(12):122301. doi: 10.1118/1.4828837.

- 18. Gao L., Yang W., Liao Z., Liu X., Feng Q., Chen W. Segmentation of ultrasonic breast tumors based on homogeneous patch. Medical Physics. 2012;39(6):3299–3318. doi: 10.1118/1.4718565.

- 19. Mustra M., Grgic M., Rangayyan R. M. Review of recent advances in segmentation of the breast boundary and the pectoral muscle in mammograms. Medical and Biological Engineering and Computing. 2016;54(7):1003–1024. doi: 10.1007/s11517-015-1411-7.

- 20. Balic I., Goyal P., Roy O., Duric N. Breast boundary detection with active contours. Proceedings of the Medical Imaging 2014: Ultrasonic Imaging and Tomography; February 2014; San Diego, Calif, USA. pp. 1–8.

- 21. Hopp T., Zapf M., Ruiter N. V. Segmentation of 3D ultrasound computer tomography reflection images using edge detection and surface fitting. Proceedings of the Medical Imaging 2014: Ultrasonic Imaging and Tomography; February 2014; San Diego, Calif, USA.

- 22. Sak M., Duric N., Littrup P., et al. Using speed of sound imaging to characterize breast density. Ultrasound in Medicine and Biology. 2017;43(1):91–103. doi: 10.1016/j.ultrasmedbio.2016.08.021.

- 23. Jain A. K. Data clustering: 50 years beyond K-means. Pattern Recognition Letters. 2010;31(8):651–666. doi: 10.1016/j.patrec.2009.09.011.

- 24. Otsu N. A threshold selection method from gray-level histograms. IEEE Transactions on Systems, Man, and Cybernetics. 1979;9(1):62–66.

- 25. Rother C., Kolmogorov V., Blake A. “GrabCut”: interactive foreground extraction using iterated graph cuts. ACM Transactions on Graphics. 2004;23(3):309–314. doi: 10.1145/1015706.1015720.

- 26. Boykov Y. Y., Jolly M.-P. Interactive graph cuts for optimal boundary & region segmentation of objects in N-D images. Proceedings of the 8th International Conference on Computer Vision (ICCV '01); July 2001; Vancouver, Canada. pp. 105–112.

- 27. Chuang Y.-Y., Curless B., Salesin D. H., Szeliski R. A Bayesian approach to digital matting. Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR '01); December 2001; pp. II264–II271.

- 28. Boykov Y., Veksler O., Zabih R. Fast approximate energy minimization via graph cuts. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2001;23(11):1222–1239. doi: 10.1109/34.969114.

- 29. Boykov Y., Funka-Lea G. Graph cuts and efficient N-D image segmentation. International Journal of Computer Vision. 2006;70(2):109–131. doi: 10.1007/s11263-006-7934-5.

- 30. Blake A., Rother C., Brown M., Perez P., Torr P. Interactive image segmentation using an adaptive GMMRF model. Proceedings of the European Conference on Computer Vision; 2004; Springer; pp. 428–441.

- 31. Carreira J., Sminchisescu C. CPMC: Automatic object segmentation using constrained parametric min-cuts. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2012;34(7):1312–1328. doi: 10.1109/TPAMI.2011.231.

- 32. Wang G., Zuluaga M. A., Pratt R., et al. Slic-Seg: Slice-by-Slice segmentation propagation of the placenta in fetal MRI using one-plane scribbles and online learning. Proceedings of the 18th International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI '15); 2015; pp. 29–37.

- 33. Han S., Tao W., Wang D., Tai X.-C., Wu X. Image segmentation based on GrabCut framework integrating multiscale nonlinear structure tensor. IEEE Transactions on Image Processing. 2009;18(10):2289–2302. doi: 10.1109/TIP.2009.2025560.

- 34. Na I., Oh K., Kim S. Unconstrained object segmentation using grabcut based on automatic generation of initial boundary. International Journal of Contents. 2013;9(1):6–10. doi: 10.5392/IJoC.2013.9.1.006.

- 35. Zhou W., Xie Y. Interactive contour delineation and refinement in treatment planning of image-guided radiation therapy. Journal of Applied Clinical Medical Physics. 2014;15(1):1–26. doi: 10.1120/jacmp.v15i1.4499.

- 36. Kass M., Witkin A., Terzopoulos D. Snakes: active contour models. International Journal of Computer Vision. 1988;1(4):321–331. doi: 10.1007/BF00133570.

- 37. Martin K., Ibáñez L., Avila L., Barré S., Kaspersen J. H. Integrating segmentation methods from the Insight Toolkit into a visualization application. Medical Image Analysis. 2005;9(6):579–593. doi: 10.1016/j.media.2005.04.009.

- 38. Yushkevich P. A., Piven J., Hazlett H. C., et al. User-guided 3D active contour segmentation of anatomical structures: significantly improved efficiency and reliability. NeuroImage. 2006;31(3):1116–1128. doi: 10.1016/j.neuroimage.2006.01.015.

- 39. Gao Y., Kikinis R., Bouix S., Shenton M., Tannenbaum A. A 3D interactive multi-object segmentation tool using local robust statistics driven active contours. Medical Image Analysis. 2012;16(6):1216–1227. doi: 10.1016/j.media.2012.06.002.

- 40. Johnson H. J., McCormick M., Ibanez L. The ITK Software Guide. ITK version 4.10, 2016.

- 41. Choi S., Cha S. H., Tappert C. A survey of binary similarity and distance measures. Journal of Systemics, Cybernetics and Informatics. 2010:43–48.

- 42. Wang K., Matthews T., Anis F., Li C., Duric N., Anastasio M. Waveform inversion with source encoding for breast sound speed reconstruction in ultrasound computed tomography. IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control. 2015;62(3):475–493. doi: 10.1109/TUFFC.2014.006788.

- 43. Sandhu G. Y., Li C., Roy O., Schmidt S., Duric N. Frequency domain ultrasound waveform tomography: Breast imaging using a ring transducer. Physics in Medicine and Biology. 2015;60(14):5381–5398. doi: 10.1088/0031-9155/60/14/5381.