Abstract

Spoken language unfolds over time. Consequently, there are brief periods of ambiguity, when incomplete input can match many possible words. Typical listeners solve this problem by immediately activating multiple candidates which compete for recognition. In two experiments using the visual world paradigm, we examined real-time lexical competition in prelingually deaf cochlear implant (CI) users and in normal hearing (NH) adults listening to severely degraded speech. In Experiment 1, adolescent CI users and NH controls matched spoken words to arrays of pictures including pictures of the target word and phonological competitors. Eye-movements to each referent were monitored as a measure of how strongly that candidate was considered over time. Relative to NH controls, CI users showed a large delay in fixating any object, less competition from onset competitors (e.g., sandwich after hearing sandal), and increased competition from rhyme competitors (e.g., candle after hearing sandal). Experiment 2 observed the same pattern with NH listeners hearing highly degraded speech. These studies suggest that, in contrast to all prior studies of word recognition in typical listeners, listeners recognizing words in severely degraded conditions can exhibit a substantively different pattern of dynamics, waiting to begin lexical access until substantial information has accumulated.

Keywords: Speech Perception, Spoken Word Recognition, Cochlear Implants, Incremental Processing, Vocoded Speech, Lexical Access

1.0 Introduction

Language unfolds over time, and early portions of the signal are often insufficient to recognize a word. For example, a partial auditory input like /w …/ is consistent with wizard, with, winner, and will, and this ambiguity will not be resolved for several hundred milliseconds. Consequently, even a clearly articulated word has a large (but temporary) form of ambiguity among many lexical candidates. As a result, even normal hearing (NH) adults confront and manage a brief period of ambiguity every time they recognize a word. This process is now well understood in typical listeners (Dahan & Magnuson, 2006; Weber & Scharenborg, 2012). Understanding how typical listeners deal with this normal temporary ambiguity may help us understand situations in which listeners confront much greater ambiguity, for example, listeners who face significant loss of acoustic detail because they use a cochlear implant (CI).

There is consensus that NH listeners solve the problem of temporary ambiguity with some version of immediate competition (Marslen-Wilson, 1987; McClelland & Elman, 1986; Norris & McQueen, 2008). As listeners hear a word, multiple candidates are partially activated in parallel (Allopenna, Magnuson, & Tanenhaus, 1998; Marslen-Wilson & Zwitserlood, 1989). As the signal unfolds, some candidates drop out of consideration (Frauenfelder, Scholten, & Content, 2001), and more active words inhibit less active ones (Dahan, Magnuson, Tanenhaus, & Hogan, 2001; Luce & Pisoni, 1998) until only a single word remains. The alternative, which we term a wait-and-see approach, suggests that information accumulates in a memory buffer and listeners wait to initiate lexical access until sufficient information is available to identify the target word. This account is largely hypothetical and has received almost no empirical support, but the contrast between wait-and-see and immediate competition has motivated much work in word recognition (Dahan & Magnuson, 2006; Weber & Scharenborg, 2012).

Immediate competition has a number of advantages over wait-and-see. It does not require a dedicated memory buffer to store auditory information prior to lexical access. It also does not require a dedicated segmentation process – the system can consider multiple segmentations of a string (e.g., car#go#ship vs. cargo#ship) and let competition sort it out (McClelland & Elman, 1986; Norris, 1994). Finally, by maintaining partial activation for multiple alternatives, listeners may have more flexibility in dealing with variable input if an initial commitment turns out to be wrong (Clopper & Walker, in press; McMurray, Tanenhaus, & Aslin, 2009).

The ubiquity of this conceptualization is underscored by work on individual differences, development and communicative impairment. All of the non-young-adult groups that have been studied to date exhibit some form of immediate competition. This includes toddlers (Fernald, Swingley, & Pinto, 2001; Swingley, Pinto, & Fernald, 1999), adolescents (Rigler et al., 2015), children with SLI (Dollaghan, 1998; McMurray, Samelson, Lee, & Tomblin, 2010), people undergoing cognitive aging (Revill & Spieler, 2012), and postlingually deafened adults who use cochlear implants (CIs) (Farris-Trimble, McMurray, Cigrand, & Tomblin, 2014). While the dynamics of lexical access in these groups differ quantitatively (and in interesting ways) from typical adults, all of these groups also exhibit behavior broadly consistent with immediate competition.

We set out to characterize the dynamics of lexical access in prelingually deaf children who use cochlear implants (CIs). Many studies have characterized word recognition accuracy in this population, but few have examined processing. While we expected (and found) quantitative differences, lexical processing in this group also differed in marked ways from immediate competition accounts. This suggested these listeners might be doing something closer to wait-and-see. We then demonstrated a similar finding with NH adults hearing extremely degraded speech. These studies raise the possibilities that immediate competition is not the only option for dealing with temporally unfolding inputs, and that demands of severely degraded speech can lead to a range of solutions – solutions that may require somewhat different cognitive architectures.

1.1 Word Recognition in Prelingually Deaf CI users

CIs directly electrically stimulate the auditory nerve to provide profoundly deaf people with the ability to perceive speech. In the normal auditory system, frequency is coded topographically along the basilar membrane. CIs work by inserting an electrode along the basilar membrane with multiple channels (see Niparko, 2009) that directly electrically excite localized portions of the basilar membrane to the degree that each electrode’s characteristic frequency is present in the input. CIs result in some loss of information and systematic distortion from the original acoustic signal. CIs are generally good at transmitting rapid changes in the amplitude envelope of speech. However, because of a limited number of channels (as well as electrical “bleed” between channels), they only transmit a relatively coarse representation of the frequency structure – harmonics are lost as are rapid spectral changes (particularly within a channel). Fundamental frequency is typically not present in CI input (as electrodes are not typically inserted deeply enough for those frequencies), and periodicity in the signal is replaced by rapid electrical pulses. Despite these limitations, adults who use CIs generally show good speech perception (Francis, Chee, Yeagle, Cheng, & Niparko, 2002; Holden et al., 2013).

In prelingually deaf children, CIs generally offer sufficient input to support speech perception and functional oral language development (Dunn et al., 2014; Svirsky, Robbins, Kirk, Pisoni, & Miyamoto, 2000; Uziel et al., 2007). However, outcomes are highly variable, and speech perception and language may take many years of device use to fully develop (Dunn et al., 2014; Geers, Brenner, & Davidson, 2003; Gstoettner, Hamzavi, Egelierler, & Baumgartner, 2000; Svirsky et al., 2000). Outcomes are related to a variety of audiological, medical and demographic factors. Earlier implantation tends to lead to better outcomes (Dunn et al., 2014; Kirk et al., 2002; Miyamoto, Kirk, Svirsky, & Sehgal, 1999; Nicholas & Geers, 2006; Waltzman, Cohen, Green, & Roland Jr, 2002), though there is no evidence for a sharp cut-off or critical period (Harrison, Gordon, & Mount, 2005). Better pre-implantation hearing and a longer duration of CI use both lead to better outcomes (Dunn et al., 2014; Nicholas & Geers, 2006). At the same time, variability is a persistent problem that cannot always be linked to medical and/or audiological factors.

A standard measure of speech perception outcomes in CI users is open set word recognition, the ability to produce an isolated word that is presented auditorily. Common examples of this are tests like the CNC (consonant nucleus coda), or PBK (phonetically balanced kindergarten) word lists, or the GASP (Glendonald Auditory Screening Procedure) and LNT (Lexical Neighborhood Test). These are commonly seen as measures of speech perception. However, performance is also affected by lexical and cognitive processes like working memory (Cleary, Pisoni, & Kirk, 2000; Geers, Pisoni, & Brenner, 2013; Pisoni & Cleary, 2003; Pisoni & Geers, 2000) and sequence learning (Conway, Pisoni, Anaya, Karpicke, & Henning, 2011). Importantly, performance is also influenced by lexical factors like frequency and neighborhood density (Davidson, Geers, Blamey, Tobey, & Brenner, 2011; Eisenberg, Martinez, Holowecky, & Pogorelsky, 2002; Kirk, Pisoni, & Osberger, 1995), suggesting that word recognition in children who use CIs reflects competition among words (much like NH adults). As a whole, these studies suggest that a better understanding of the cognitive processes that underlie spoken word recognition in children who use CIs may be crucial for understanding variable outcomes in this population. This highlights the need to understand real-time lexical competition in CI users. However, only two studies have examined this.

Farris-Trimble et al. (2014) examined postlingually deafened adult CI users as well as NH listeners hearing spectrally degraded CI simulations. Participants were tested in a 4AFC version of the Visual World Paradigm (VWP; Allopenna et al., 1998); they heard target words like wizard and selected the referent from a screen containing pictures of the target, a word that overlapped with it at onset (a cohort, whistle), a rhyme competitor (lizard), and an unrelated word (baggage). Fixations to each object were monitored as a measure of how strongly it was considered. Both CI users and NH listeners hearing degraded speech showed eye-movements consistent with immediate competition: shortly after word onset they fixated the target and cohort, and later suppressed competitor fixations. However, there were also quantitative differences. Adult CI users and listeners under simulation were slower to fixate the target and fixated competitors for longer than NH listeners. Thus, degraded hearing quantitatively alters the timing of the activation dynamics, though listeners still show immediate competition.

Grieco-Calub, Saffran, and Litovsky (2009) studied prelingually deaf two-year-old CI users using the “looking while listening” paradigm (Fernald, Pinto, Swingley, Weinberg, & McRoberts, 1998). Toddlers heard prompts like “Where is the shoe?” while viewing screens containing pictures of the target object (shoe) and an unrelated control (ball). CI users were slower than NH toddlers to fixate the target, suggesting differences in real-time processing. However, participants were only tested in a two-alternative version of the task with no phonological competitors, making it difficult to map differences in speed of processing onto the broader process of sorting through the lexicon.

Unlike postlingually deafened adults, CI users who were born deaf must acquire phonemes and words from a degraded input; consequently they exhibit delayed language development (Dunn et al., 2014; Svirsky et al., 2000), and their poor input likely leads to substantially different representations for auditory word forms than those of post-lingually deafened adults (who acquired words from clear input). For example, under exemplar-type accounts of word recognition (Goldinger, 1998), clusters of exemplars for neighboring words are likely to overlap considerably, as much of the distinctive acoustic information is lost by the CI. Even under models in which words are represented in terms of phonemes, the distributions of acoustic cues for individual phonemes will overlap, creating overlap among phonemes (which will cascade to make lexical templates more similar). Under either account, mental representations of words learned under the degradation imposed by a CI will be more overlapping and harder to discriminate. The consequences of this additional form of uncertainty for real-time lexical competition are not known, and require a richer investigation of multiple types of competitors.

1.2 The Present Study

The primary goal of this study was to examine the time course of lexical competition in prelingually deaf CI users. We used the VWP to assess lexical processing using the same stimuli and task as Farris-Trimble et al. (2014). As in that study, we examined early eye-movements to onset (cohort) and offset (rhyme) competitors to assess how immediately CI users activate lexical candidates. This technique has been used with older children (Rigler et al., 2015; Sekerina & Brooks, 2007), and a variety of clinical populations including individuals with language impairment (McMurray et al., 2010), aphasia (Yee, Blumstein, & Sedivy, 2008), and postlingually deafened CI users (Farris-Trimble et al., 2014). The version used here has good test/re-test reliability (Farris-Trimble & McMurray, 2013).

Experiment 1 examined prelingually deaf adolescent CI users. This population may represent a compounding of factors that may influence the dynamics of lexical processing. This group has poorer perceptual input; they are still undergoing language development; and they have delayed vocabulary and language development (Dunn et al., 2014; Svirsky et al., 2000; Tomblin, Spencer, Flock, Tyler, & Gantz, 1999). Highly similar experiments have examined the dynamics of lexical processing as a result of each factor singly, and found distinct effects on the timecourse of activation. Typically developing adolescents (between 9 and 16) show an overabundance of initial competition from cohorts and rhymes, and consequently somewhat slower target activation (Rigler et al., 2015) (see also, Sekerina & Brooks, 2007); however, by the end of processing even 9-year-olds fully suppress competitors. In contrast, adolescents with poorer language (language impairment) show no early effects on competition but do not fully suppress lexical competitors by the end of processing (McMurray, Munson, & Tomblin, 2014; McMurray et al., 2010) (see also, Dollaghan, 1998), and, as described, post-lingually deaf CI users are somewhat slower to activate target words, and also maintain competitors later.

What might be predicted when these factors compound? Given that all of these populations show incremental processing, we expected to see evidence for immediate competition here too. However, both younger children and adults with degraded input exhibit slower lexical processing, so a delay was also predicted. At the same time, younger adolescents show enhanced competition at peak, and both adult CI users and people with SLI tend to preserve competitor activation quite late into processing, so there was a strong possibility of very large (perhaps overwhelming) competition from cohorts and rhymes on top of any delay (similar effects are seen in NH listeners in noise: Brouwer & Bradlow, 2015). On the other hand, it may be that these factors do not compound additively, but rather lead to an emergent pattern of dynamics that is quite different.

A key marker of immediate competition (in contrast to wait-and-see accounts) is competition from onset competitors (cohorts). Somewhat straightforwardly, one might expect that greater perceptual confusion would lead to heightened competition across the board. Indeed, this is what is seen in language impairment (McMurray et al., 2010) and postlingually deafened CI users (Farris-Trimble et al., 2014) as well as NH listeners in noise (Brouwer & Bradlow, 2015). However, if listeners delay lexical access (as in a wait-and-see account), they may show a reduction of cohort activity, because by the time lexical access initiates, sufficient information may have arrived to rule out the cohort.

Indeed, this kind of emergent result is what was observed; while pre-lingually deaf CI users showed some of the coarse hallmarks of incremental processing, lexical access was so delayed that competition from onset competitors was surprisingly reduced, consistent with something closer to a wait-and-see approach. Experiment 2 then asked whether this is uniquely a product of long-term developmental exposure to a CI (and the resulting delayed language development), or if this is a more automatic adaptation to severely degraded speech.

2.0 Experiment 1

2.1 Methods

2.1.1 Participants

Eighteen prelingually deaf CI users (8 female) and 19 age-matched NH listeners (10 female) participated in this study. Inclusion criteria for the CI group included 1) onset of deafness prior to 36 months; 2) use of one or more CIs; 3) greater than 12 years of age at test; 4) sufficient hearing (with the CI) to perform the task (as deemed by the research team and the audiologists on the project); and 5) normal or corrected-to-normal vision, monolingual American English status, and no cognitive concerns. CI users were part of a larger project sponsored by University of Iowa’s Department of Otolaryngology, in which CI users come to the University of Iowa approximately yearly for an audiological tune-up, a battery of standardized assessments, and participation in research. This sample represented all of the children from this larger study who met these eligibility requirements and who visited the Dept. over a two-year period from August 2009 to August 2011. Sample size was derived from this recruitment window, and data were not analyzed until the conclusion of the study.

A complete description of the CI users is in the Online Supplement S1. CI users had a mean age of 17.0 years (range = 12 to 25). The average age of onset of deafness was 5.3 months (range = 0 to 33 months) and all but 5 were congenitally deaf. As these were adolescents they were implanted some time ago (when infant implantation was not the norm) and the average age of implantation was 47.9 months (SD=20.8 months). All users had extensive experience with their CIs, averaging 13.0 years of device use (SD=3.4 years, range=8.8 – 21.0 years). Three CI users used bilateral implants; the other 15 were unilateral. Three additional CI users were tested but excluded from analysis because of very low accuracy (1), or few fixations to the objects (2).

NH participants were recruited through newspaper advertisements, fliers, and word of mouth. They were age matched to the CI listeners and had a mean age of 15.4 years (range = 13.7 to 17.3). Two additional NH participants were tested but excluded from analysis due to difficulty calibrating the eye-tracker (1), or equipment error (1). NH participants reported normal hearing at the time of testing, and hearing was verified with a hearing screening. Screenings were conducted with a calibrated Grason-Stadler portable audiometer at 500, 1000, 2000, and 4000 Hz with supra-aural headphones. NH participants responded to each frequency at 25 dB HL or better. For four NH participants, hearing screenings were not available due to technical issues. Their data were analyzed separately and did not differ from other participants. Given the low incidence of hearing loss among typical adolescents, they were retained for analysis.

2.1.2 Clinical Measures

To document speech and language abilities we administered several standardized assessments. The Peabody Picture Vocabulary Test (PPVT-IV; Dunn & Dunn, 2007) assessed receptive vocabulary. These scores were available for 16 of the 18 CI listeners and all NH listeners. The mean standard score for NH listeners was 114.0 (SD=10.0). The CI group was significantly lower (M=91.2, SD=21.1; t(35) = 3.89, p < 0.001), though still in the normal range. We assessed speech perception in CI users using the most recently collected Consonant-Nucleus-Consonant (CNC) test, and Hearing In Noise Test (HINT). The CNC is a monosyllabic word list of 50 phonetically balanced words; participants listen to each word and repeat it orally. The HINT is a set of sentences the participant hears and repeats aloud; accuracy is scored from keywords in the sentences. The HINT was presented in quiet and noise (for most participants at +10 SNR, though for some it was at +5). Participants averaged 59.9% correct on the CNC (SD=23.78%). On the HINT they averaged 82.1% (SD=25.0%) in quiet and 74.0% (SD=21.7%) in noise. Thus, the CI users’ speech perception was good, but variable.

2.1.3 Design

Twenty-nine sets of four words were used (the same as Farris-Trimble et al., 2014; see Supplement S2). Each set contained a base word (e.g., wizard), an onset/cohort competitor (whistle), an offset/rhyme competitor (lizard), and an unrelated word (baggage). Pictures of each word in a set always appeared together on any trial. Each of the words in a set was the auditory stimulus five times. This yielded 580 trials (29 sets × 4 words × 5 repetitions).

Since each word in a set could serve as the target, this created four trial-types (depending on which word was heard). When the base word (e.g., wizard) was the stimulus, there were cohort (e.g., whistle) and rhyme (e.g., lizard) competitors and an unrelated item (bottle); these were termed TCRU trials. Conversely, when whistle was the target there was a cohort (wizard) and two unrelated items (lizard and bottle), a TCUU trial. When lizard was the target, there was a rhyme (wizard), and two unrelated words (whistle and bottle), a TRUU trial. Finally, when bottle was the target, all three competitors were unrelated (a TUUU trial). When analyzing looks to a particular competitor, we averaged across all trial types containing that competitor (e.g., for cohort fixations, we averaged TCUU and TCRU trials). Consequently, both items of a competitor pair (e.g., whistle and wizard) served as competitors, controlling for disparities in frequency, density, imageability, etc., between target and competitor.

Each item-set was repeated 20 times (5 times for each trial-type). There was no attempt to block presentation (e.g., presenting all of the items before the next repetition) and order was completely random for each subject. This scheme was intended to disrupt strategies that could bias looking. For example, participants may assume that because they heard wizard in the recent past, it could not be the target again, or that since they had already heard wizard, whistle and bottle, that lizard must be the target. For most participants a few items would be repeated before the other items in the set were presented, disrupting such strategies.
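The design described above can be sketched in a few lines of Python. This is only an illustration of the counting and of the unblocked random ordering, not the code used to run the experiment. The role labels, the function name `build_trials`, and the use of a seeded shuffle are assumptions made for the example.

```python
import random

# Hypothetical labels for the four members of each item set.
ROLES = ("base", "cohort", "rhyme", "unrelated")

# The trial type follows from which member of the set is heard.
TRIAL_TYPE = {
    "base": "TCRU",       # base word heard: cohort, rhyme, and unrelated competitors
    "cohort": "TCUU",     # cohort heard: the base word is its cohort, the rest unrelated
    "rhyme": "TRUU",      # rhyme heard: the base word is its rhyme, the rest unrelated
    "unrelated": "TUUU",  # unrelated word heard: all three other pictures unrelated
}

def build_trials(n_sets=29, reps=5, seed=None):
    """Return a fully randomized list of (set_index, target_role, trial_type)."""
    trials = [
        (s, role, TRIAL_TYPE[role])
        for s in range(n_sets)
        for role in ROLES
        for _ in range(reps)
    ]
    # No blocking: the whole list is shuffled once per participant.
    random.Random(seed).shuffle(trials)
    return trials

trials = build_trials(seed=1)
print(len(trials))  # 29 sets x 4 targets x 5 repetitions = 580
```

Because the full list is shuffled at once, repetitions of a given word can occur before the other words in its set have been heard, which is the property the unblocked design relies on.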

2.1.4 Stimuli

Auditory stimuli were the same as Farris-Trimble et al. (2014). Stimuli were recorded in a sound attenuated room by a female native English speaker with a Midwestern dialect. Recordings were made with a Kay CSL 4300B A/D board at a sampling rate of 44.1 kHz. Each word was recorded in a carrier phrase (He said X) with a pause between said and the stimulus. This carrier phrase ensured consistent prosody across words. Several exemplars of each word were recorded, and the clearest was selected and excised. 100 milliseconds of silence were added to the beginning and end of each word, and stimuli were amplitude normalized.

Visual stimuli consisted of clip art images developed using a standard lab procedure to create the most representative image for each word. For each word, multiple images were downloaded from a clip art database and compiled for review. A focus group of undergraduate and graduate students then selected the most representative image for each word. Next, the images underwent minor editing to remove distracting components, to ensure consistency with other images in the experiment, and to use prototypical orientations and colors. Final images were approved by one of three laboratory members with extensive VWP experience.

2.1.5 Procedure

After consent and assent were obtained, NH participants were given the hearing screening. Participants were then seated at the computer with a desktop mounted Eyelink 1000 eye-tracker in the chin rest configuration. The researcher adjusted the chin rest to a comfortable position and the eye-tracker was calibrated. Next, participants were given written and verbal instructions for the experiment. On each trial, they saw four pictures, one in each corner of a 17″ (1280 × 1024 pixel) computer monitor. Pictures were 300 × 300 pixels and located 50 pixels (horizontally and vertically) from the edge of the monitor. At the onset of the trial, the pictures were displayed with a small red dot in the center. After 500 ms, the dot turned blue and the participant clicked on it to initiate the auditory stimulus. Participants then clicked on the picture matching the word. The experiment was controlled with Experiment Builder (SR Research, Ontario, Canada).

Auditory stimuli were played over two front-mounted Bose speakers, powered by a Sony STRDE197 amplifier/receiver. Volume was initially set to 65 dB, but participants could control the volume during an 8 trial practice sequence to achieve a comfortable listening level.

2.1.6 Eye-movement Recording and Analysis

Eye movements were recorded with a desktop mounted SR Research Eyelink 1000 eye tracker. A standard 9-point calibration procedure was used. Every 29 trials a drift correction was performed to take into account natural drift over time. If the participant failed a drift correction, the eye-tracker was recalibrated. Both pupil and corneal reflections were used to determine the fixation position. Eye-movements were recorded every 4 msec from trial onset until the participant clicked a picture. The eye-movement record was parsed into saccades, fixations, and blinks using default parameters. Saccades and subsequent fixations were grouped into a single unit for analysis (a “look”), which started at saccade onset and ended at fixation offset. When identifying the object being fixated, the boundaries of the screen ports were extended by 100 pixels (horizontally and vertically) to account for noise in the eye-tracker. This did not result in any overlap between images.
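The region-of-interest logic can be checked with a short sketch built from the display geometry given in the Procedure (1280 × 1024 screen, 300 × 300 pictures placed 50 pixels from the edges, regions extended by 100 pixels). The coordinate origin at the top-left corner, the corner labels, and the function names are assumptions for illustration. The actual analysis software is not described in the text.

```python
SCREEN_W, SCREEN_H = 1280, 1024   # monitor resolution in pixels
PIC, MARGIN, PAD = 300, 50, 100   # picture size, distance from edge, region extension

def picture_boxes():
    """Return {(vertical, horizontal): (left, top, right, bottom)} for the four pictures."""
    xs = {"left": MARGIN, "right": SCREEN_W - MARGIN - PIC}
    ys = {"top": MARGIN, "bottom": SCREEN_H - MARGIN - PIC}
    return {
        (v, h): (x, y, x + PIC, y + PIC)
        for v, y in ys.items()
        for h, x in xs.items()
    }

def extend(box, pad=PAD):
    """Grow a box by `pad` pixels on every side."""
    left, top, right, bottom = box
    return (left - pad, top - pad, right + pad, bottom + pad)

def overlaps(a, b):
    """True if two (left, top, right, bottom) boxes share any area."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]

ROIS = {corner: extend(box) for corner, box in picture_boxes().items()}

def object_at(x, y):
    """Return the corner whose extended region contains (x, y), or None."""
    for corner, (left, top, right, bottom) in ROIS.items():
        if left <= x <= right and top <= y <= bottom:
            return corner
    return None

corners = list(ROIS)
any_overlap = any(
    overlaps(ROIS[a], ROIS[b])
    for i, a in enumerate(corners)
    for b in corners[i + 1:]
)
print(any_overlap)  # False: the extended regions stay separate
```

Under these assumptions the extended regions remain 380 pixels apart horizontally and 124 pixels apart vertically, so a fixation at screen center is assigned to no object.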

2.2 Results

All data contributing to the analysis of Experiment 1 are publicly available in an Open Science Framework repository (McMurray, Farris-Trimble, & Rigler, 2017b).

2.2.1 Mouse clicks

To assess accuracy and latency, we first analyzed the mouse click data for each group. Across all trials, CI users selected the target word with 88.5% accuracy (SD = 10.7%; range: 59.7%–98.4%) and NH controls had an average of 99.5% accuracy (SD = 0.3%; range: 98.4%–100%; t(35) = 4.53, p < 0.001). Thus, while they performed well, the CI users were less accurate than NH controls. CI users also responded more slowly than NH listeners. Mean RTs (on correct trials) were 1997 ms (SD=321) for CI users and 1355 ms (SD=135) for NH listeners (t(35)=8.0, p < 0.001).

2.2.2 Eye-movement analysis

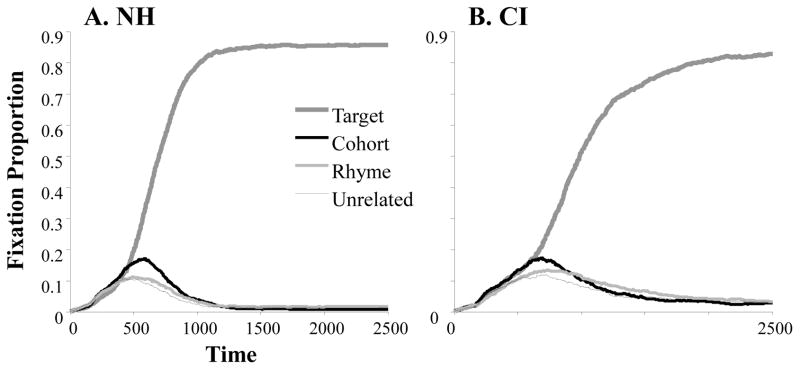

Figure 1 shows the proportion of trials on which participants fixated each competitor every 4 msec for TCRU trials. We eliminated trials in which participants did not ultimately click on the correct object as our goal was not to understand word recognition accuracy. Rather, we asked, given that the participant ultimately reached the right answer, are there differences between listeners in how they did so?

Figure 1.

Proportion of fixation to each word type as a function of time A) for NH listeners; B) for CI users.
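The fixation proportions plotted in Figure 1 can be computed along the following lines. This is a minimal sketch, assuming each trial is stored as a list of looks (saccade onset to fixation offset, in integer msec) already labeled with the object fixated. The data layout, the function name `fixation_proportions`, and the 2000 msec window are hypothetical.

```python
def fixation_proportions(trials, objects, bin_ms=4, max_ms=2000):
    """Proportion of correct trials with a look to each object at each sample.

    trials  -- list of dicts with 'correct' (bool) and 'looks', a list of
               (start_ms, end_ms, object) tuples with integer times
    objects -- labels to tabulate, e.g. target, cohort, rhyme, unrelated
    """
    kept = [t for t in trials if t["correct"]]  # drop incorrect-click trials
    n_bins = max_ms // bin_ms
    counts = {obj: [0] * n_bins for obj in objects}
    for trial in kept:
        for start, end, obj in trial["looks"]:
            if obj not in counts:
                continue
            # Count the samples at i * bin_ms that fall in [start, end).
            first = max(0, -(-start // bin_ms))    # ceiling division
            last = min(n_bins, -(-end // bin_ms))
            for i in range(first, last):
                counts[obj][i] += 1
    n = len(kept)
    return {obj: [c / n if n else 0.0 for c in row] for obj, row in counts.items()}

example = [
    {"correct": True, "looks": [(300, 600, "target")]},
    {"correct": True, "looks": [(300, 500, "cohort"), (500, 900, "target")]},
    {"correct": False, "looks": [(0, 2000, "unrelated")]},  # excluded from analysis
]
props = fixation_proportions(example, ["target", "cohort", "rhyme", "unrelated"])
print(props["target"][400 // 4], props["cohort"][400 // 4])
```

The sketch assumes that looks within a trial do not overlap in time. The third example trial is dropped because the final click was incorrect, mirroring the exclusion described above.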

About 300 msec after auditory stimulus onset, fixations to each object are similar. Given 100 msec of silence before the onset of the auditory stimulus and the approximately 200 msec it takes to plan and launch an eye-movement, this is a time at which no signal-driven information is available to guide fixations. Shortly after 300 ms, fixation curves diverge: targets and cohorts receive more looks than rhymes and unrelateds, but are roughly equal to each other. This is consistent with immediate use of the onset. As more of the word unfolds, listeners make some fixations to the rhyme, reflecting the phonological overlap at the end of the rhyme and the target. By about 1000 ms, the participants converge on the target. This broad description appears similar for CI users (Panel B), even as there are marked overall differences.

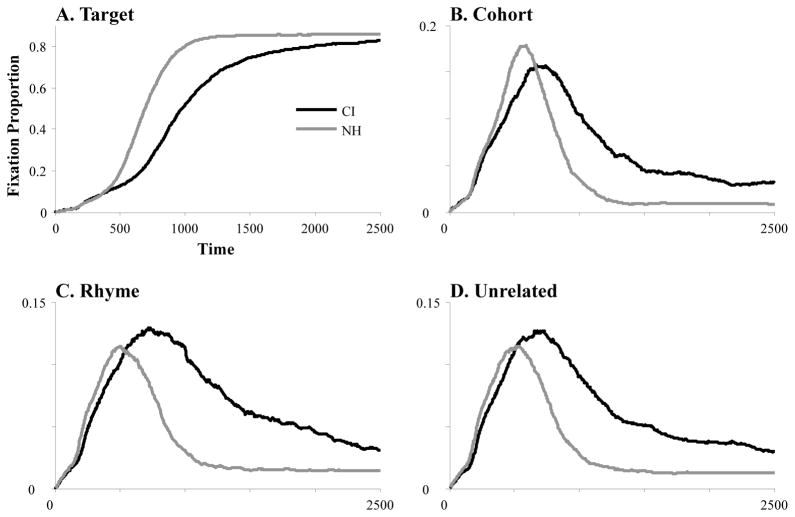

Figure 2 compares CI users and NH participants for each class of lexical competitors separately. Here, CI users’ target looking is delayed and rises more slowly than that of NH listeners (Figure 2A). Unexpectedly, CI users show fewer early looks to the cohort competitor than NH listeners (Figure 2B), suggesting less competition. In contrast, they show increased and longer-lasting looking to the rhyme and unrelated objects.

Figure 2.

Proportion of fixations to each competitor type as a function of time and listener group. A) Target, averaged across TCRU, TCUU and TRUU trials; B) Cohort, averaged across TCRU and TCUU trials; C) Rhyme, averaged across TCRU and TRUU trials.; D) Unrelated, averaged across TCRU, TCUU and TRUU trials.

To quantify these differences we used a non-linear curve fitting technique developed in prior studies (Farris-Trimble & McMurray, 2013; McMurray et al., 2010; Rigler et al., 2015) that estimates the precise shape of the timecourse of looking to each competitor for each participant. Nonlinear functions were fit to each participant’s data, and their parameters were analyzed as descriptors of the timecourse of processing. Data contributing to this analysis used all relevant trial types: the target analysis used TCRU, TCUU, and TRUU trials; the cohort analysis used TCRU and TCUU trials; the rhyme analysis used TCRU and TRUU trials, and the unrelated analysis used TCRU, TCUU and TRUU trials.¹

Target fixations were fit to a four-parameter logistic. The cross-over point (in msec) describes the overall delay or shift of the curve in time, and the slope at the cross-over reflects the speed at which activation builds. The upper asymptote is the degree of final fixations; it can be seen as confidence in the final decision and is reduced in language impairment (McMurray et al., 2010). The lower asymptote is almost always 0 and was not examined. Results are in Table 1. There were large (D>2) and significant (p<.0001) effects on the two timing parameters (crossover and slope), with CI users showing a very large delay of 236 msec in the time to reach the halfway point relative to NH listeners. There was also a moderate (D=.58) but marginally significant effect (p=.079) on the maximum asymptote, with CI listeners showing a lower overall asymptote.
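To make the four parameters concrete, here is a minimal sketch of one common parameterization of a four-parameter logistic in which the slope parameter equals the derivative at the crossover. The exact functional form used for the fits is not given in the text, so this form and the function name `logistic4` are assumptions. The example values are the group means from Table 1, and a curve drawn from mean parameters is only illustrative; it is not the same as an average of the individual fits.

```python
import math

def logistic4(t, lower, upper, crossover, slope):
    """Four-parameter logistic; `slope` is the derivative at `crossover`."""
    span = upper - lower
    return lower + span / (1.0 + math.exp(4.0 * slope * (crossover - t) / span))

# Group-mean parameters from Table 1 (lower asymptote assumed to be 0).
NH = dict(lower=0.0, upper=0.853, crossover=655.2, slope=0.0018)
CI = dict(lower=0.0, upper=0.782, crossover=891.0, slope=0.0010)

# At the crossover, the curve sits halfway between the two asymptotes.
print(logistic4(NH["crossover"], **NH))

# At 700 msec the NH curve is already well ahead of the CI curve.
print(logistic4(700, **NH) > logistic4(700, **CI))
```

With this form, a later crossover shifts the whole curve to the right and a smaller slope makes it rise more gradually, which is the pattern reported for the CI group.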

Table 1.

Results of analysis of logistic curvefits on target fixations.

| Parameter | NH, M (SD) | CI, M (SD) | T(35) | p | D |
|---|---|---|---|---|---|
| Crossover (msec) | 655.2 (37.8) | 891.0 (95.1) | 10.0 | <.0001 | 3.26 |
| Slope | .0018 (.0004) | .0010 (.0003) | 6.5 | <.0001 | 2.17 |
| Max | .853 (.128) | .782 (.111) | 1.81 | .079 | 0.58 |
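As a rough check on how the entries in Table 1 relate to one another, the test statistic and effect size for the crossover row can be approximately recovered from the group means and SDs. The sketch below assumes a pooled-variance independent-samples t-test with group sizes of 19 (NH) and 18 (CI), and an effect size based on the root mean square of the two SDs. The text does not state which formulas were used, so both choices, and the function names, are assumptions.

```python
import math

def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Independent-samples t statistic with a pooled variance estimate."""
    sp2 = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2)
    return (m2 - m1) / math.sqrt(sp2 * (1 / n1 + 1 / n2))

def cohens_d_rms(m1, sd1, m2, sd2):
    """Standardized mean difference using the RMS of the two SDs."""
    return (m2 - m1) / math.sqrt((sd1**2 + sd2**2) / 2)

# Crossover row of Table 1: NH = 655.2 (37.8), CI = 891.0 (95.1).
t = pooled_t(655.2, 37.8, 19, 891.0, 95.1, 18)
d = cohens_d_rms(655.2, 37.8, 891.0, 95.1)
print(round(t, 1), round(d, 2))  # 10.0 3.26
```

Rows with heavily rounded means and SDs (such as the slope) will not reproduce as closely, so small discrepancies there should not be read as errors in the table.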

Competitor fixations were fit to an asymmetric Gaussian (Equation 1). This function is the combination of two Gaussians with the same mean (μ, on the time axis) and peak (p, along the Y axis), but with independent slopes and asymptotes at onset (σ1, b1) and offset (σ2, b2).

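$$
f(t) =
\begin{cases}
(p - b_1)\,\exp\!\left(-\dfrac{(t-\mu)^2}{2\sigma_1^2}\right) + b_1, & t \le \mu \\[2ex]
(p - b_2)\,\exp\!\left(-\dfrac{(t-\mu)^2}{2\sigma_2^2}\right) + b_2, & t > \mu
\end{cases}
\tag{1}
$$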

Here peak height, p, should be interpreted as the maximum amount of early competition, and μ reflects when this is observed. Onset slope (σ1) is the speed at which fixations reach peak and offset slope (σ2) is the speed at which they are reduced. Finally, offset baseline, b2, is the degree to which competitor fixations are fully suppressed.
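The asymmetric Gaussian described above can be written as a short function, which may help in reading the parameters. This is a sketch based on the verbal definition (shared mean and peak, separate widths and baselines before and after the peak). The function name and the example onset baseline of 0 are assumptions, since the onset baseline is not reported. The other example values are the NH group means for the cohort from Table 2.

```python
import math

def asymmetric_gaussian(t, mu, p, sigma1, b1, sigma2, b2):
    """Two half-Gaussians joined at t = mu with a common peak height p."""
    if t <= mu:
        sigma, base = sigma1, b1   # rising side: onset slope and onset baseline
    else:
        sigma, base = sigma2, b2   # falling side: offset slope and offset baseline
    return (p - base) * math.exp(-((t - mu) ** 2) / (2.0 * sigma**2)) + base

# NH cohort means from Table 2; b1 = 0.0 is a placeholder, not a reported value.
nh_cohort = dict(mu=560.5, p=0.183, sigma1=180.2, b1=0.0, sigma2=205.4, b2=0.0124)

for t in (200, 560.5, 1000, 2000):
    print(t, round(asymmetric_gaussian(t, **nh_cohort), 4))
```

Both halves equal p at t = μ, so the curve is continuous at the peak, and well after the peak the curve settles at the offset baseline b2 rather than at zero.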

This analysis revealed many differences between NH and CI users (Table 2). There were strong effects on the time of peak fixations (d>1, p<.0001) for all three competitors with delays of about 200 msec. This reinforces the rather sizeable delay in the timecourse of lexical access by CI users (and is mirrored by the even larger difference in RT). Similarly offset slope showed moderate effects (d>.75, p<.029) for all three competitors, with CI users suppressing the competitors substantially more slowly than NH listeners. Most intriguingly, there was no difference in peak cohort fixations (T<1), suggesting CI users do not show different degrees of competition from onset competitors; while there was a marginal effect for rhymes (p=.075, d=0.6), suggesting increased competition.

Table 2.

Results of analysis of asymmetric Gaussian parameters on competitor fixations.

| Competitor | Parameter | NH, M (SD) | CI, M (SD) | T(35) | p | d |
|---|---|---|---|---|---|---|
| Cohort | Peak Time (msec) | 560.5 (71.2) | 722.1 (147.4) | 4.28 | <.0001 | 1.40 |
| | Peak | 0.183 (0.054) | 0.168 (0.059) | <1 | | 0.26 |
| | Onset Slope | 180.2 (52.4) | 285.1 (149.8) | 2.87 | 0.007 | 0.94 |
| | Offset Slope | 205.4 (53.2) | 326.2 (176.8) | 2.85 | 0.007 | 0.94 |
| | Offset Baseline | 0.0124 (.0061) | 0.0399 (.0232) | 5.00 | <.0001 | 1.64 |
| Rhyme | Peak Time (msec) | 545.1 (132.2) | 772.0 (245.9) | 3.52 | 0.001 | 1.15 |
| | Peak | 0.124 (.050) | 0.153 (.044) | 1.84 | 0.075 | 0.60 |
| | Onset Slope | 215.1 (94.7) | 271.4 (126.1) | 1.54 | 0.13 | 0.51 |
| | Offset Slope¹ | 217.5 (102.5) | 422.9 (377.4) | 2.28 | 0.029 | 0.75 |
| | Offset Baseline | 0.0150 (.039) | 0.0227 (.051) | <1 | | 0.15 |
| Unrelated | Peak Time (msec) | 499.5 (91.8) | 704.8 (219.2) | 3.75 | 0.001 | 1.23 |
| | Peak | 0.126 (.064) | 0.135 (.054) | <1 | | 0.16 |
| | Onset Slope | 172.5 (39.9) | 250.8 (98.2) | 3.21 | 0.003 | 1.05 |
| | Offset Slope¹ | 222.0 (51.2) | 319.3 (176.3) | 2.30 | 0.028 | 0.76 |
| | Offset Baseline | 0.0136 (.009) | 0.0328 (.036) | 2.24 | 0.032 | 0.74 |

¹ One outlier excluded (a CI user).

These suggest large differences in the timing of lexical access between NH listeners and CI users. They also hint at differences in the degree of competition. However, such differences are much more difficult to judge given differences in unrelated fixations: if CI listeners look around more in general, this could confound differences in cohort or rhyme looks that derive from changes in lexical competition. For example, Figure 2 suggests CI users maintain cohort and rhyme fixations after word offset. However, it is not clear if these asymptotic fixations differ from those to an unrelated item. When we compared b2 between cohorts/rhymes and unrelated objects (within subject) there was no difference for either group for either competitor (Cohort: CI: t<1; NH: t(18)=1.05, p=.31; Rhymes: both t<1). Thus, both groups eventually suppress the cohorts and rhymes to the level of unrelated items. However, this approach cannot address differences earlier in the timecourse where differences may be more non-linear.

Thus, to account for differences in unrelated fixation, we subtracted unrelated looks from cohort and rhyme fixations (Figure 3). This revealed a striking cross-over asymmetry. Accounting for unrelated looks, CI users showed markedly reduced cohort effects (Figure 3A) but enhanced rhyme effects (Figure 3B). To examine this statistically, timecourse functions (Figure 3) for each participant were smoothed with a 48 msec (12 frame) triangular window. We then derived three new measures from these difference curves (see Brouwer & Bradlow, 2015; Rigler et al., 2015, for similar analyses). First, we found the maximum of each participant’s function (peak fixations), which can be seen as the overall amount of early competition. Second, we identified the time at which the maximum occurred (peak time). Finally, we examined the duration over which participants fixated the competitor more than the unrelated object (fixation extent, how long competition lasts), by computing the total time during which competitor – unrelated fixations exceeded a threshold of 0.02 (other thresholds were used with similar results). These measures were computed separately for cohorts and rhymes and submitted to a 2×2 ANOVA examining listener group (between-subject) and competitor type (cohort vs. rhyme, within subject).
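The three difference-curve measures can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' analysis code: the 4 msec frame duration is inferred from the 48 msec / 12 frame window, the exact shape and edge handling of the triangular window are assumptions, extent is taken as the total time above threshold, and all function names and the synthetic data are hypothetical.

```python
import math

def triangular_smooth(values, width=12):
    """Smooth with a symmetric triangular window reaching width // 2 frames each way."""
    half = width // 2
    offsets = list(range(-half, half + 1))
    weights = [half + 1 - abs(k) for k in offsets]
    out = []
    for i in range(len(values)):
        num = den = 0.0
        for k, w in zip(offsets, weights):
            j = i + k
            if 0 <= j < len(values):      # renormalize at the edges
                num += w * values[j]
                den += w
        out.append(num / den)
    return out

def competition_measures(competitor, unrelated, frame_ms=4.0, threshold=0.02, width=12):
    """Return (peak, peak time in msec, extent in msec) for competitor minus unrelated."""
    diff = [c - u for c, u in zip(competitor, unrelated)]
    smooth = triangular_smooth(diff, width)
    peak = max(smooth)
    peak_time = smooth.index(peak) * frame_ms
    extent = sum(1 for v in smooth if v > threshold) * frame_ms
    return peak, peak_time, extent

# Synthetic example: a bump of competitor looks centered at 600 msec, no unrelated looks.
competitor = [0.1 * math.exp(-((i - 150) ** 2) / (2.0 * 25.0 ** 2)) for i in range(500)]
unrelated = [0.0] * 500
peak, peak_time, extent = competition_measures(competitor, unrelated)
```

Working on the difference curve means that a participant who simply looks around more (raising both competitor and unrelated fixations) does not register as showing more competition.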

Figure 3.

Competitor minus Unrelated fixations as a function of time and listener group. Note that the data were smoothed with a 48 msec triangular window. A) Cohort; B) Rhymes.

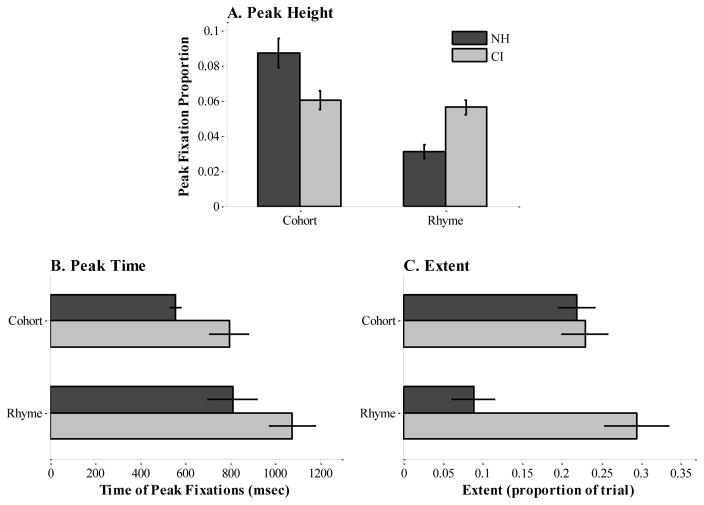

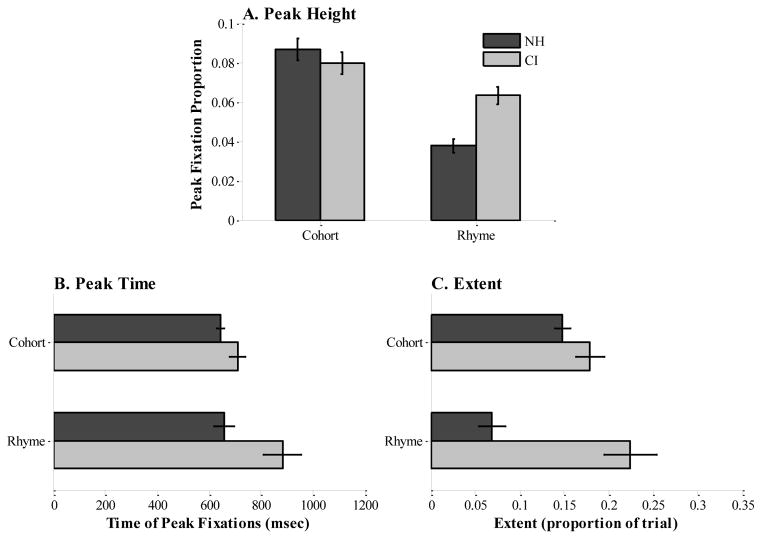

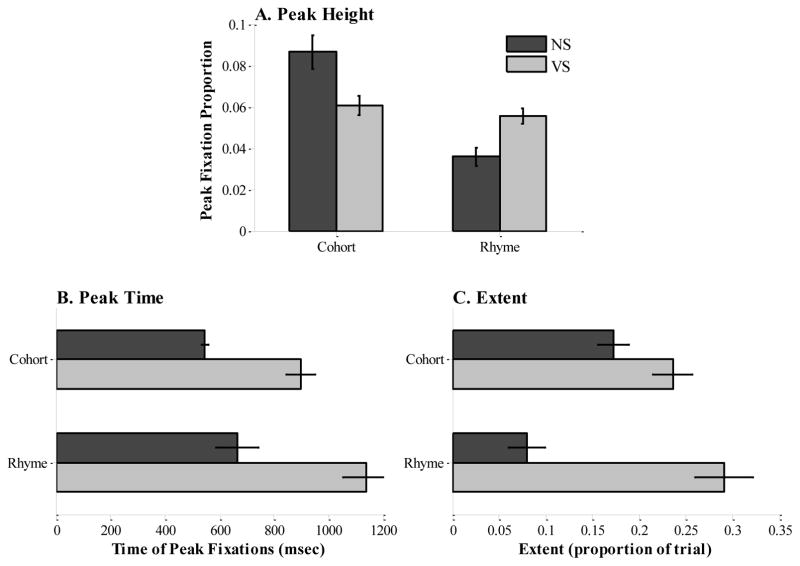

We first examined peak fixations (Figure 4A). There was no main effect of listener-group (F<1), but a large effect of competitor type (F(1,35)=39.8, p<.0001; ηp2=.53), with more fixations at peak for cohorts than rhymes. This was moderated by a cross-over interaction between competitor type and listener group (F(1, 35)=29.5, p<.0001, ηp2=.48). For cohorts, NH listeners showed significantly more fixations at peak than CI users (t(35)=2.69, p=.011), while for rhymes the pattern was reversed (t(35)=4.3, p<.0001).

Figure 4.

Measures of competitor fixations (over and above unrelated fixations) for prelingually deaf CI users. A) The maximum proportion of fixations (e.g., the peak of the functions shown in Figure 3) as a function of competitor type and listener group. B) The time at which that peak was reached; C) The extent (in time) to which the competitor was considered.

Peak time (Figure 4B) showed an additive pattern. There were main effects of both competitor type (F(1, 35)=11.3, p<.0001, ηp2=.25), and listener group (F(1,35)=7.7, p=.009, ηp2=.18). In general, cohorts peaked (M=674 msec) about 250 msec earlier than rhymes (M=940 msec), which is expected given the nature of their overlap with the target words. CI users showed an overall delay of about 250 msec relative to NH listeners in peak timing.

Finally, extent (Figure 4C) showed an interaction. There was no main effect of competitor type (F(1, 35)=1.4, p=.24, ηp2=.04), but a main effect of listener group (F(1, 35)=11.3, p=.002, ηp2=.25), and an interaction (F(1, 35)=12.7, p=.001, ηp2=.27). For cohorts, both NH and CI listeners showed similar extent of fixations (t<1), while for rhymes CI listeners tended to maintain fixations for a much longer period of time (t(35)=4.34, p<.0001).

One concern is that the asymmetric effects of listener-group on cohort and rhymes may derive from differences in the relative overlap of each competitor class with the target. While this was not observed in prior studies of CI users using the same stimuli (Farris-Trimble et al., 2014), nor in studies of listeners with LI with similar stimuli (McMurray et al., 2010), we replicated these analyses with only a subset of items that were maximally overlapping (all but one phoneme) and found the same results (see Supplement S3). In addition, it was possible that the asymmetric effects derive from differences between one and two syllable words; for example, if the reduction in cohort fixations was only observed in shorter words, while the increase in rhyme fixations was only observed in longer words. This too was examined in Supplement S3, finding little evidence for any moderation of these effects by syllable length.

Thus, these analyses suggest that 1) CI users are delayed in activating both competitors; 2) they show dramatically less competition from cohorts though they maintain it for a similar extent; and 3) they show more competition from rhymes, both in magnitude and extent. As we show in Supplement S4, these differences in activation are reflected in what participants ultimately report hearing. An analysis of errors suggests that when listeners are incorrect, CI users are more likely to choose a rhyme (than a cohort) and NH listeners show the reverse.

2.2.4 Comparisons with postlingually deafened Adult CI users

The primary goal of this project was not to directly compare pre- and postlingually deaf CI users. However, the most revealing analysis here (the competitor – unrelated analysis) was not conducted in prior work (Farris-Trimble et al., 2014). Thus it is unclear if postlingually deafened adults would also show this somewhat surprising pattern with a more sensitive analysis. We addressed this by retrospectively analyzing the 33 postlingually deafened adult CI users (and 26 age-matched NH controls) previously tested by Farris-Trimble et al. (2014). This group had input signals that were degraded to a similar degree as those of the present participants (most were implanted with similar CIs by the same team), but they acquired language prior to losing hearing. These participants were run in an identical experiment in the same laboratory. However, we should be cautious in making strong claims about differences between these groups, as there was no attempt to match these participants on demographic or hearing factors (they were independent studies).

A number of key differences in the findings are worth highlighting. First, Farris-Trimble et al. performed a similar curvefitting analysis. Thus, we compared timing parameters across groups to estimate numerical differences in the effects. Table 3 summarizes three relevant timing parameters. In all cases, postlingually deafened adults showed a statistically significant delay relative to NH controls; however, this delay was one half to one third the size of that shown by the prelingually deaf adolescents. Thus, CI use in general delays aspects of lexical access, but developing under such conditions leads to much larger delays. While this may appear to be a quantitative difference, the scale of the difference should not be underestimated: a 200 msec delay constitutes the length of many one syllable words.

Table 3.

Summary of timing results from Farris-Trimble et al and present study. “Post-” refers to postlingually deafened adults studied in Farris-Trimble et al.; “Pre-” refers to prelingually deaf adolescents from the present study

| Parameter | Group | CI, M (msec) | NH, M (msec) | Diff. |
|---|---|---|---|---|
| Target Crossover | Post- | 777 | 703 | 74 |
| | Pre- | 891 | 655 | 236 |
| Cohort μ | Post- | 652 | 603 | 49 |
| | Pre- | 722 | 561 | 161.6 |
| Rhyme μ | Post- | 649 | 563 | 86 |
| | Pre- | 772 | 545 | 226.9 |

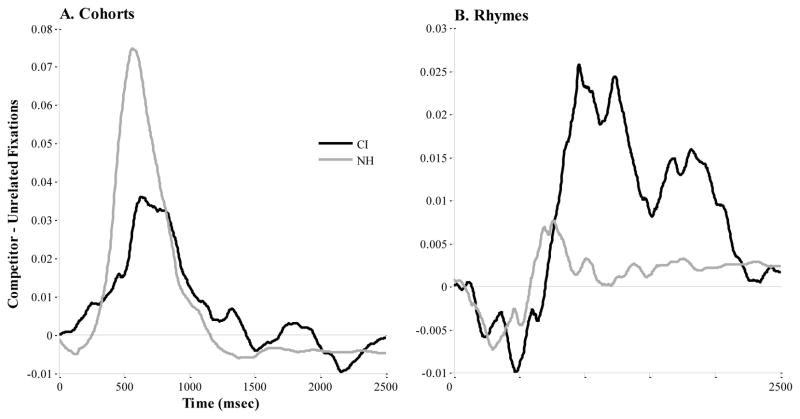

Second, we evaluated the striking asymmetry between cohort and rhyme activation (Figure 3, 4). Farris-Trimble et al. did not examine the competitor – unrelated differences that we report here. Thus we examined these measures for the post-lingually deaf CI users (Figure 5).

Figure 5.

Measures of competitor fixations (over and above unrelated fixations) for postlingually deafened adults in Farris-Trimble et al. (2014). A) The maximum proportion of fixations (e.g., the peak of the functions shown in Figure 3) as a function of competitor type and listener group. B) The time at which that peak was reached; C) The extent (in time) to which the competitor was considered.

For peak fixations (Figure 5A), we found a main effect of competitor type (F(1,68)=54.3, p<.0001, ηp2=.44), a marginal effect of listener type (F(1,68)=3.2, p=.076, ηp2=.05), and an interaction (F(1,68)=13.4, p<.0001, ηp2=.17). Crucially, while prelingually deaf CI users showed a significant reduction in peak fixations to the cohort, this was not so for the postlingually deafened CI users (t<1). Postlingually deafened CI users, however, did show an increase in peak fixations to rhyme (t(68)=4.5, p<.0001), like prelingually deaf CI users.

For peak time there was a main effect of competitor type (F(1,68)=6.8, p=.011, ηp2=.09) and listener type (F(1,68)=9.1, p=.004, ηp2=.12). This time there was an interaction (F(1,68)=5.0, p=.029, ηp2=.07). This was due to the fact that while CI users showed a significant delay for cohorts (t(68)=2.0, p=.048), it was quite a bit smaller than for rhymes (t(68)=2.9, p=.005).

Finally, for fixation extent, there was no main effect of competitor type (F<1), but a main effect of listener group (F(1,68)=24.3, p<.0001, ηp2=.26) and an interaction (F(1,68)=12.5, p=.001, ηp2=.16). Postlingually deafened CI users maintained cohort fixations marginally longer than NH controls (t(68)=1.76, p=.083) (whereas prelingually deaf CI users did not differ from NH). Like prelingually deaf CI users, post-lingually deaf CI users also showed a larger fixation extent for rhymes (t(68)=4.9, p<.001).

To summarize, the pattern of data shown by the present participants and the postlingually deafened adults of Farris-Trimble et al. (2014) differed. Both experiments found that rhymes tend to show higher peak fixations, a delayed peak and a longer fixation extent in CI users relative to NH controls. However, with respect to cohorts (a key marker of immediate speech processing), postlingually deafened adult CI users show a profile much closer to NH listeners: they show similar peak fixations, a much less delayed peak time, and perhaps slightly longer extent, whereas cohort activation for prelingually deaf CI users was both reduced and not longer in extent.

2.2.5 Oculomotor Factors

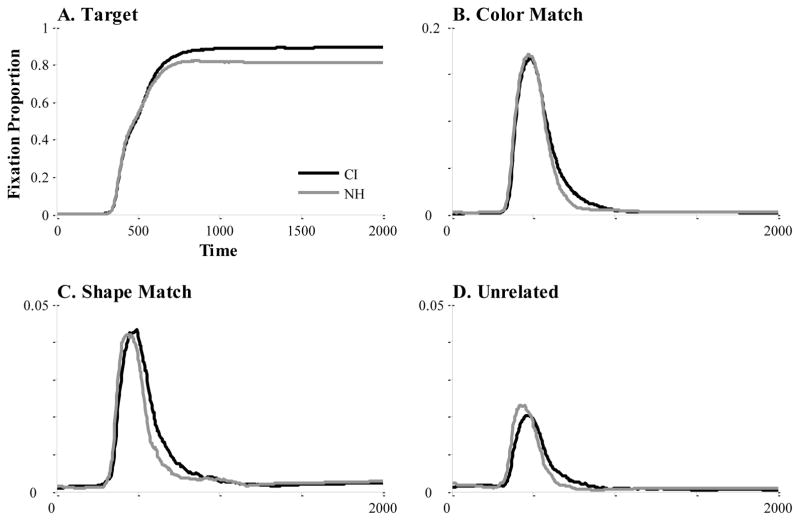

Finally, we were concerned that the differences in unrelated fixations may reflect differences in basic visual, cognitive or oculomotor factors. To that end, a separate group of CI users and NH controls were run in a task that was designed to capture the decision and task demands of this experiment, but with purely visual stimuli (Farris-Trimble & McMurray, 2013) (see McMurray, Farris-Trimble, & Rigler, 2017a, for data). This experiment is fully reported in the Online Supplement S5. On each trial participants saw a briefly presented colored shape, and matched it to one of four shapes in the corners of the screen. One of those shapes was a complete match; one matched on color but with a different shape; one matched on shape but with a different color; and one was unrelated. We monitored fixations to each competitor to estimate unfolding decision dynamics, as in the VWP.

Figure 6 shows the results (complete analysis in Supplement S5). There were two key findings. First, the proportion of unrelated fixations is exceedingly low in both groups (about .02 at peak). Second, there were no significant group differences on any measure. Consequently, there is no evidence that CI users differ from NH controls in these basic visual/cognitive processes. This suggests the increased unrelated looks observed in CI users may have an origin within the language system, reflecting uncertainty about the identity of the target word.

Figure 6.

Results from a visual analogue of the visual world paradigm run on 30 CI users and 20 NH controls. See Supplement S1 for more details.

2.3 Discussion

Prelingually deaf CI users showed extremely delayed lexical access relative to NH peers. This delay is upwards of 200 msec, and it appears in both the target fixations and the peak of the competitor fixations. This is quite sizeable relative to (a) the much smaller delays observed in postlingually deafened adult CI users (~75 msec; Farris-Trimble et al., 2014), (b) the difference between typically developing 9 and 16 year olds (~65 msec; Rigler et al., 2015), and (c) typical word durations, where 200 msec may be most of the length of a one syllable word. As a result of this delay, by the time CI users initiate lexical access they show reduced competition from cohort competitors. While we would not argue that CI users are non-incremental, this pattern is clearly something closer to wait-and-see. However, this delay also appears to lead them to downweight the initial phoneme, resulting in increased competition from offset (rhyme) competitors. This pattern of data is not attributable to oculomotor factors, as a similar group of participants showed no deficits in a purely visual task. Perhaps most importantly, this pattern is also not shown by similar postlingually deafened CI users in an equivalent task (Farris-Trimble et al., 2014).

It is noteworthy that the pattern of competitor fixations mirrored the pattern of errors in the ultimate response (Supplement S3): while CI users showed increased errors to the rhyme, NH listeners showed more errors to the cohort (even though they showed dramatically fewer errors overall). This suggests that this is more than an issue of timing: the delay in accessing the lexicon leads to changes in partial activation of competitors, which ultimately affects (on some proportion of trials) what the listener reports. However, at the same time, this error pattern is consistent with a quantitative rather than qualitative difference. The fact that CI users made more errors to cohort than unrelated options (mirroring the non-zero fixations to cohort competitors) rules out a strong claim that cohorts are not active; rather, cohorts are substantially less active.

As a whole these results suggest that in prelingually deaf CI users, lexical competition dynamics are somewhat consistent with a partial wait-and-see style of processing. Given that this is not shown by postlingually deafened adults, it raises the possibility that this is the unique product of long term language development with a degraded input (the CI). However, it is important to note that the prelingually deaf children here showed lower overall accuracy (M=88.5%; SD = 10.7%) than postlingually deafened adults studied previously (M=94.8%, SD=5.1%). This small numerical difference could be meaningful, suggesting that the prelingually deaf children struggled more with this task than the adults. If this is the case, it raises the possibility that the partial wait-and-see strategy observed here may not be a unique consequence of development, but could appear whenever the input quality is sufficiently poor. To test this, Experiment 2 tested NH adults with severely degraded speech.

3.0 Experiment 2

Experiment 2 utilized noise-vocoded speech to degrade the auditory stimuli in a way that shares features with the output of a CI (Shannon, Zeng, Kamath, & Wygonski, 1995). In this technique, speech is carved into several frequency bands (e.g., 500–1000 Hz), the envelope within each band is extracted, and the spectral content within that band is replaced by noise that conforms to the original envelope in that band. Consequently, vocoded speech contains many of the temporal cues of the original input, but with degraded frequency precision: the thousands of frequencies in natural speech are replaced with a small number of frequency bands.
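The band-splitting, envelope-extraction and noise-replacement steps described above can be sketched with a toy noise vocoder. This is a deliberately simplified illustration and not the processing used in the study: it assumes crude second-order band-pass filters, a one-pole envelope smoother with a hypothetical 160 Hz cutoff, and arbitrary band edges chosen only for the demonstration. All function names are hypothetical.

```python
import math
import random

def bandpass(signal, lo_hz, hi_hz, fs):
    """Second-order band-pass (biquad); real vocoders typically use steeper filters."""
    f0 = math.sqrt(lo_hz * hi_hz)              # geometric center of the band
    q = f0 / (hi_hz - lo_hz)
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * q)
    b0, b2 = alpha, -alpha                     # the middle feedforward term is zero
    a0, a1, a2 = 1.0 + alpha, -2.0 * math.cos(w0), 1.0 - alpha
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in signal:
        y = (b0 * x + b2 * x2 - a1 * y1 - a2 * y2) / a0
        out.append(y)
        x2, x1, y2, y1 = x1, x, y1, y
    return out

def envelope(signal, cutoff_hz, fs):
    """Rectify, then smooth with a one-pole low-pass filter."""
    a = math.exp(-2.0 * math.pi * cutoff_hz / fs)
    out, y = [], 0.0
    for x in signal:
        y = (1.0 - a) * abs(x) + a * y
        out.append(y)
    return out

def vocode(signal, edges_hz, fs, env_cutoff_hz=160.0, seed=0):
    """Replace the content of each band with band-limited noise shaped by that band's envelope."""
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in signal]
    out = [0.0] * len(signal)
    for lo, hi in zip(edges_hz[:-1], edges_hz[1:]):
        env = envelope(bandpass(signal, lo, hi, fs), env_cutoff_hz, fs)
        carrier = bandpass(noise, lo, hi, fs)
        for i in range(len(out)):
            out[i] += env[i] * carrier[i]
    return out

# Demo: a 750 Hz tone followed by silence, passed through 4 illustrative bands.
FS = 16000
EDGES = [200.0, 500.0, 1000.0, 2500.0, 7000.0]   # hypothetical band edges
tone = [math.sin(2.0 * math.pi * 750.0 * n / FS) if n < 800 else 0.0 for n in range(1600)]
vocoded = vocode(tone, EDGES, FS)
```

The output keeps the coarse timing of the input (energy while the tone is on, near silence afterwards) but carries no information about where within each band the original energy fell, which is the sense in which frequency precision is degraded.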

Typically, 8-channel simulation yields accuracy similar to that of postlingually deafened CI users (Friesen, Shannon, Baskent, & Wang, 2001; Shannon et al., 1995). Farris-Trimble et al. (2014) tested NH adults listening to 8-channel simulation with this same paradigm. Listeners’ fixations were consistent with an immediate competition strategy, albeit with a small delay. However, in that study, listeners were also highly accurate (M=98.4%), suggesting 8-channel simulation may not match the limitations of the prelingually deaf participants. We thus piloted a task similar to Experiment 1 with four- to seven-channel simulation to identify the level of degradation that would yield close to 88.5% correct (the accuracy of prelingually deaf CI users). Five channels yielded performance around 91% correct and four channels led to 80%. Thus, we adopted the latter to be conservative. NH adults were then tested in the same paradigm as Experiment 1 using either 4-channel vocoded or unmodified speech (between-subject).

3.1 Methods

3.1.1 Participants

56 NH University of Iowa students were tested. Participants reported normal hearing and passed a hearing screening. Participants provided informed consent and received course credit or $15. Three additional participants were run, but excluded for extremely poor performance (two had accuracies at 25% or lower, a third was 43% correct). Participants were randomly assigned to either vocoded or normal speech. We originally targeted 50 participants (25/group), but due to scheduling issues, six additional participants were run in the vocoded group. None of the data were examined prior to study completion.

3.1.2 Design and Procedure

The design and procedure were identical to Experiment 1.

3.1.3 Stimuli

Stimuli were based on the recordings used in Experiment 1. For participants in the normal speech (NS) group, stimuli were not modified. For participants in the vocoded (VS) group, stimuli were processed with AngelSim Cochlear Implant Simulator (Ver 1.08.01; Fu, 2012). We used a white noise carrier with 4 channels whose centers were at the default frequencies specified by the Greenwood function.
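For readers unfamiliar with the Greenwood function, the sketch below shows how it can be used to space vocoder channels evenly along the cochlea. It uses Greenwood's standard constants for the human cochlea, with position expressed as a proportion of cochlear length. The 200–7000 Hz analysis range and the function names are assumptions made for illustration; the defaults actually applied by AngelSim are not stated here and may differ.

```python
import math

# Greenwood constants for the human cochlea (position x as a proportion of length).
A, ALPHA, K = 165.4, 2.1, 0.88

def freq_to_position(f_hz):
    """Proportional cochlear position (0 = apex) for a frequency in Hz."""
    return math.log10(f_hz / A + K) / ALPHA

def position_to_freq(x):
    """Frequency in Hz at proportional cochlear position x."""
    return A * (10.0 ** (ALPHA * x) - K)

def band_layout(n_channels, lo_hz, hi_hz):
    """Band edges and centers equally spaced in cochlear position."""
    x_lo, x_hi = freq_to_position(lo_hz), freq_to_position(hi_hz)
    step = (x_hi - x_lo) / n_channels
    edges = [position_to_freq(x_lo + i * step) for i in range(n_channels + 1)]
    centers = [position_to_freq(x_lo + (i + 0.5) * step) for i in range(n_channels)]
    return edges, centers

# Assumed analysis range of 200-7000 Hz (hypothetical; not taken from the text).
edges, centers = band_layout(4, 200.0, 7000.0)
```

Equal spacing in cochlear position yields bands that widen with frequency, so with only four channels each band spans a very broad frequency range.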

3.2 Results

All data contributing to the analysis of Experiment 2 are publicly available in an Open Science Framework repository (McMurray, Farris-Trimble, & Rigler, 2017c).

3.2.1 Accuracy

The NS group had an average accuracy of 99.4% (SD=0.6%); the VS group averaged 81.7% (SD=8.2%; t(54)=10.7, p<.0001). The VS group was significantly less accurate than CI users in Experiment 1 (M=88.5%, SD=10.7%, t(47)=2.48, p=.017). RTs in the VS group (M=1961 msec, SD=540 msec) were significantly slower than the NS group (M=1149 msec, SD=135; t(54)=7.34, p<.0001), though they did not differ from the CI users (M=2073 msec, SD=370, t(47)=.78, p=.49). Thus, 4-channel vocoding degraded the speech to the point where accuracy and RT were comparable to or worse than the CI users of Experiment 1.
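As a rough check on how such group comparisons relate to the reported means and standard deviations, the sketch below computes an independent-samples t statistic from summary statistics. It assumes a pooled-variance (Student) t-test and group sizes of 25 (NS) and 31 (VS), which are inferred from the Participants section rather than stated with the test; the function name `pooled_t` is hypothetical. Because the inputs are rounded summary values, the result can only approximate the reported statistic.

```python
import math

def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Student's t from summary statistics, assuming equal variances."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1.0 / n1 + 1.0 / n2))
    return (m1 - m2) / se, df

# Accuracy (%): NS vs. VS; group sizes are assumptions inferred from the text.
t_acc, df_acc = pooled_t(99.4, 0.6, 25, 81.7, 8.2, 31)
```

With these assumed group sizes the degrees of freedom match the reported t(54), and the statistic lands close to the reported value.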

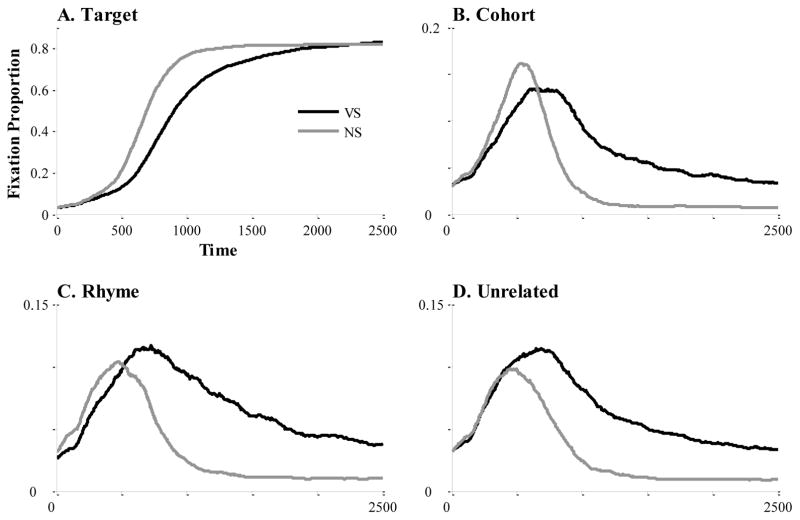

3.2.2 Fixations

Figure 7 shows fixations to each of the four competitors as a function of stimulus type. Results look similar to prelingually deaf CI users. Target fixations (panel A) show an approximately 200 msec delay. All three competitors had delayed peaks, and increased fixations at the end of the timecourse. For statistical analysis we again fit nonlinear functions to the data and analyzed their parameters as a function of stimulus type (Table 4). This generally shows a close match between experiments. As in Experiment 1, we found a significant (and numerically large) delay in target crossover, and a similarly shallower slope. Also as in Experiment 1, the peak time for all three competitors was significantly delayed, and offset slopes were shallower. Thus, the global properties of the fixation record were quite similar.

Figure 7.

Proportion of fixations to each competitor type as a function of time and listener group (normal speech [NS] vs. vocoded [VS]). A) Target, averaged across TCRU, TCUU and TRUU trials; B) Cohort, averaged across TCRU and TCUU trials; C) Rhyme, averaged across TCRU and TRUU trials; D) Unrelated, averaged across TCRU, TCUU and TRUU trials.

Table 4.

Results of analysis of curve fit parameters. The Exp 1 column shows a comparison with the analogous results of Experiment 1. ✓: matching difference in both experiments (significant or non significant difference in both); ✘: significant in one experiment but not the other; -: Marginally significant in one experiment and non-significant in the other.

| Competitor | Parameter | NS, M (SD) | VS, M (SD) | T(53) | p | D | Exp 1 |
|---|---|---|---|---|---|---|---|
| Target | Crossover (msec) | 641.1 (39.9) | 837.9 (136.6) | 6.96 | <0.001 | 1.87 | ✓ |
| | Slope | 0.0017 (.0005) | 0.0011 (.0004) | 4.91 | <0.001 | 1.32 | ✓ |
| | Max | 0.788 (.212) | 0.759 (.075) | 0.73 | | 0.20 | - |
| Cohort¹ | Peak Time (msec) | 573.0 (88.2) | 684.8 (199.2) | 2.60 | 0.012 | 0.70 | ✓ |
| | Peak | 0.172 (.076) | 0.161 (.053) | 0.64 | | 0.17 | ✓ |
| | Onset Slope | 190.6 (96.7) | 208.5 (93.1) | 0.70 | | 0.19 | ✘ |
| | Offset Slope | 170.5 (53.4) | 290.8 (180.3) | 3.22 | 0.002 | 0.87 | ✓ |
| | Offset Baseline | 0.015 (.012) | 0.044 (.024) | 5.54 | <0.001 | 1.50 | ✓ |
| Rhyme² | Peak Time (msec) | 506.9 (139.9) | 780.0 (313.9) | 4.02 | <0.001 | 1.10 | ✓ |
| | Peak | 0.116 (.065) | 0.139 (.058) | 1.43 | 0.16 | 0.39 | - |
| | Onset Slope | 185.7 (112.7) | 245.1 (116.7) | 1.90 | 0.064 | 0.52 | - |
| | Offset Slope¹ | 206.9 (77.3) | 296.4 (180.7) | 2.30 | 0.026 | 0.63 | ✓ |
| | Offset Baseline | 0.017 (.013) | 0.049 (.023) | 6.08 | <0.001 | 1.65 | ✘ |
| Unrelated¹ | Peak Time (msec) | 518.2 (170.6) | 634.5 (236.9) | −2.05 | 0.045 | 0.55 | ✓ |
| | Peak | 0.112 (.057) | 0.133 (.056) | −1.42 | 0.161 | 0.38 | - |
| | Onset Slope | 183.0 (99.5) | 208.2 (121.8) | −0.83 | | 0.22 | ✓ |
| | Offset Slope¹ | 221.3 (97.5) | 342.8 (168.7) | −3.18 | 0.002 | 0.86 | ✓ |
| | Offset Baseline | 0.015 (.011) | 0.044 (.020) | −6.57 | <0.001 | 1.78 | ✘ |

¹ One participant (VS condition) excluded for poor fit.

² Two participants (VS condition) excluded for poor fit.

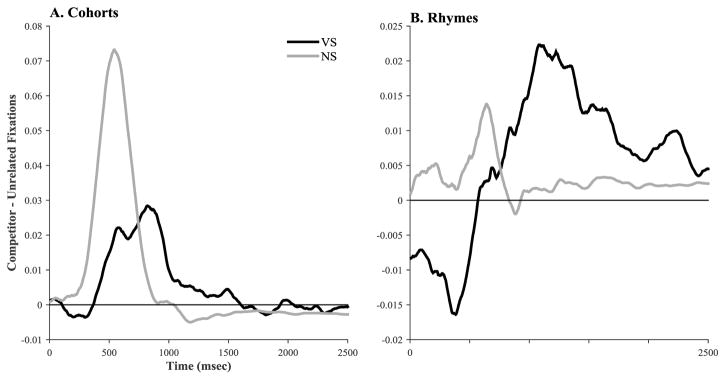

We next examined the proportion of competitor fixations relative to the unrelated objects. Recall that it was this measure that revealed reduced consideration of cohorts by prelingually deaf CI users. Figure 8 shows cohort and rhyme fixations after subtracting unrelated fixations. Again, we see reduced peak fixations to cohorts and increased late fixations to rhymes. We again analyzed peak height, peak time and the extent of these curves (Figure 9). They show a very similar pattern to the prelingually deaf children (but not postlingually deafened adults).

Figure 8.

Competitor minus Unrelated fixations as a function of time for NH listeners hearing either vocoded (VS) or normal (NS) speech. Note that the data were smoothed with a 48 msec triangular window. A) Cohort; B) Rhymes.

Figure 9.

Measures of competitor fixations (over and above unrelated fixations) for NH listeners hearing normal (NS) or vocoded (VS) speech. A) The maximum proportion of fixations (e.g., the peak of the functions shown in Figure 3) as a function of competitor type and listener group. B) The time at which that peak was reached; C) The extent (in time) to which the competitor was considered.

A series of ANOVAs examining speech type (between-subject) and competitor-type (cohort vs. rhyme, within-subject) confirmed this. For peak height (Figure 9A), we found no main effect of speech type (F<1), but a main effect of competitor type (F(1,54)=37.3, p<.0001, ηp2=.41) with more fixations at peak to cohorts than rhymes. Crucially, there was a competitor-type × speech-type interaction (F(1,54)=24.8, p<.0001, ηp2=.31). Listeners showed significantly lower peak fixations to cohorts under VS speech than NS speech (t(54)=2.91, p=.005), but more rhyme fixations for VS speech (t(54)=3.33, p=.002).

Next we examined peak time (Figure 9B). We found main effects of competitor type (F(1,54)=8.1, p=.006, ηp2=.13) and speech-type (F(1,54)=37.3, p<.0001, ηp2=.41), but no interaction (F<1). As in Experiment 1, there was a later peak for rhymes than cohorts, and later peaks for VS than NS.

Finally, we examined extent of fixations (Figure 9C). There was no effect of competitor type (F(1,54)=1.2, p=.28, ηp2=.02). However, there was an effect of speech type (F(1,54)=22.0, p<.0001, ηp2=.29) and an interaction (F(1,54)=18.4, p<.0001, ηp2=.25). Like CI users, rhymes showed much larger extents in VS than NS (t(54)=5.4, p<.0001); however, unlike CI users, there was also a small but significant difference in cohort extent (t(54)=2.3, p=.027).

3.3 Discussion

This experiment showed a strikingly similar pattern to Experiment 1. Even NH adults, when confronted with a substantially degraded signal, show hallmarks of a partial wait-and-see strategy for lexical access. Target fixations were delayed by over 200 msec; cohort competitors received less consideration early in processing and rhymes were more active. As in Experiment 1, these differences in timing also led to differences in what was ultimately selected (Supplement S4): VS listeners were more likely to choose the rhyme than the cohort (on incorrect trials) and NS listeners showed the reverse. These results suggest that this “mode” of lexical access does not uniquely derive from developing speech and lexical skills in the context of a degraded input. Rather, it can also be achieved rather quickly in response to severely degraded input.

4.0 General Discussion

Relative to NH controls, prelingually deaf CI users are delayed by more than 200 msec in accessing lexical candidates; they exhibit less activity for onset (cohort) competitors; and they have more activity for rhyme competitors. Simply put, prelingually deaf CI users do not access words as immediately as typical listeners, and this has ripple effects throughout the dynamics of lexical competition. This is more than a matter of timing—the delay in lexical access cascades to affect how strongly various competitors are considered, and which items are ultimately chosen. This pattern is not shown by adult CI users in a prior study or NH listeners under moderate degradation (Farris-Trimble et al., 2014). However, Experiment 2 demonstrates that NH listeners hearing severely degraded speech (4-channel vocoded speech) exhibit the same strategy.

The results of this study rule out a sort of additive deficits account in which the late competitor activation exhibited by adolescents with weaker language skills compounds with the slight delay exhibited by post-lingually deaf adults who use CIs. This would have predicted heightened competition across the board. Instead, we saw an emergent difference with a large delay in lexical access, reduced cohort activation and increased rhyme activation. Moreover, the fact that this could be observed in NH listeners with degraded input suggests a more complex story.

These results have implications for theories of spoken word recognition, for our understanding of language learning and development, and for work on hearing impairment. Before we discuss these, we briefly discuss several limitations of this study.

4.2 Limitations

One limitation is that this study focused on isolated words. The addition of sentential context most obviously could add semantic expectations that may effectively rule out competitors before the target word is heard (Dahan & Tanenhaus, 2004). As a result, in everyday listening situations, CI users may be able to functionally overcome these bottom up delays, essentially pre-activating (or pre-inhibiting) lexical items on the basis of context.

Even absent any effects of semantic context, running speech also contains information that could improve auditory processing. Such factors—which have been demonstrated to affect lexical access—include prosody (Dilley & Pitt, 2010), indexical cues about the talker (Trude & Brown-Schmidt, 2012), speaking rate (Summerfield, 1981; Toscano & McMurray, 2015), and speaking style (e.g., clear vs. reduced: Brouwer, Mitterer, & Huettig, 2012). It is not clear whether all of this information is available to CI users or to listeners with heavily vocoded speech, but the availability of such factors in a sentence context could improve lexical access. However, at the same time, the kinds of delays observed here—or even the shorter ~75 msec of post-lingually deaf CI users—could pose serious problems in running speech. Speech unfolds at a rate of 3–5 words per second. Consequently, a small delay compounded over many words could lead to significant delays by the end of a sentence.

These are important questions for further research. However, our goal was not to study the dynamics of lexical access in isolated words because of their real-world validity. Rather, our use of isolated words was motivated by 1) a close match to clinically relevant assessments of speech perception outcomes, and 2) the long history of using such materials to isolate and characterize the dynamics of word recognition in typical listeners (Luce & Pisoni, 1998; Magnuson, Dixon, Tanenhaus, & Aslin, 2007; Marslen-Wilson & Zwitserlood, 1989). While isolated words may not generalize to CI users’ everyday life, they reveal evidence for a novel pattern of lexical access that may not have been observed otherwise.

A second limitation is the interpretation of the participants’ ultimate response. Our analysis was restricted to trials in which listeners responded correctly. This was done to emphasize a specific question: given that CI users ultimately accessed the correct word, did they do so via the same dynamics? Moreover, collapsing across all trials may average two distinct types of data. On incorrect trials, competitor (e.g., cohort or rhyme) fixations take a substantially different form (than on correct ones): since the listener is intending to click on a competitor, their fixations will look logistic (like target curves on correct trials). Thus, collapsing correct and incorrect trials averages functions with qualitatively different shapes.

But even with this restriction in place, can we guarantee that CI users ultimately accessed the correct word? Since we only have a single discrete response on each trial, we cannot rule out the possibility that on some proportion of trials, the participant responded correctly by guessing. However, this was likely the case on only a few trials because when erroneous responses were made, they were typically made to competitor objects and not unrelated objects (Supplement S4). If guessing played a major role, one would have expected a roughly equal distribution of responding across the four objects. This suggests a fairly small contribution of outright guessing to the target accuracy. While we cannot, of course, fully rule out this possibility, the available data suggest it is not a major contributor.
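The logic of this argument can be written out as a short calculation. The sketch below assumes that each display contains a single unrelated object and that clicks on it arise only from uniform guessing among the four pictures; both assumptions, the function name, and the example proportions are hypothetical and are not the values reported in Supplement S4.

```python
def guess_contribution(p_correct, p_unrelated, n_objects=4):
    """Estimate the share of correct responses attributable to blind guessing.

    Assumes (hypothetically) that clicks on the single unrelated object
    arise only from uniform guessing among n_objects pictures.
    """
    guess_rate = n_objects * p_unrelated    # proportion of trials that were guesses
    lucky_correct = guess_rate / n_objects  # guesses that happened to hit the target
    return lucky_correct / p_correct


if __name__ == "__main__":
    # Made-up example: 80% correct responses, 2% clicks on the unrelated object.
    share = guess_contribution(p_correct=0.80, p_unrelated=0.02)
    print(f"Estimated share of correct trials due to guessing: {share:.1%}")
```

With these made-up proportions, only about 2.5% of correct responses would reflect lucky guesses, which is the sense in which a low rate of unrelated-object errors bounds the contribution of guessing.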

Moreover, our analysis of only correct trials may have underestimated the degree of competition exhibited by CI users and VS listeners. On some trials, competitor activation may have been too great to overcome, leading listeners to ultimately make the incorrect response. Because these trials were excluded from analysis, the degree of competition would be underestimated. This is supported by the close correspondence between the heightened rhyme fixations (in CI users) and the pattern of ultimate errors (Supplement S3).

A third concern is that CI and NH (or VS and NS) listeners were not balanced on accuracy – perhaps these differences were driving the entire effect? At a trivial level this cannot be true. The accuracy of a given trial comes after the fixations (and the lexical access processes) leading up to it. At a deeper level, however, these issues should not be separated. The point of lexical access is to identify the correct word. Consequently, what our studies demonstrate is that under circumstances in which lexical access is likely to be impaired (e.g., poor peripheral encoding of a CI, poorer lexical templates), the dynamics take a different form.

4.2 Long Term Adaptation?

There were intriguing differences between the pre-lingually deaf CI users studied here and the post-lingually deafened CI users of Farris-Trimble et al. (2014). Whereas prelingually deaf CI users showed a very large delay in lexical access and fewer looks to cohort competitors, post-lingually deafened CI users showed much smaller delays, and a tendency to maintain activation longer for both competitors.

It is tempting to ascribe this to developmental differences: prelingually deaf CI users face the problem of acquiring words and speech perception from a degraded input, while postlingually deafened CI users suffer an imposition of degraded input on a well-developed system. Such conclusions are clearly premature. First, we could not match listeners on factors like language experience or age. Indeed, given the natural distribution of CI users, such matching may not be possible. Second, and more importantly, effects observed in CI users were also seen with NH listeners and vocoded speech. Thus, these effects are not necessarily specific to one hearing configuration/history. NH listeners with well-developed speech and lexical skills can exhibit this pattern in the course of an hour.

At the same time, we would not rule out a developmental component. The fact that this partial wait-and-see pattern was observed under 4-channel (but not 8-channel) simulation suggests that if sensory degradation were the only cause, the prelingually-deafened CI users would have to have had extremely poor peripheral encoding. There is little evidence to support this. All of these CI users had 22-channel electrodes, and as a group were developing language well (average PPVT scores were within one SD of normal). Further, while there have been few psychoacoustic studies of frequency discrimination in prelingually-deafened CI users, the only available study, Jung et al. (2012), shows no statistical difference from post-lingually deafened CI users in broadband frequency discrimination (spectral ripple) or pitch change discrimination². Thus, there is no reason to think that pre-lingually deaf CI users have half of the spectral resolution of post-lingually deafened adults.

Given this, how does one account for the similarity between Experiments 1 and 2? One possibility is that, because words were acquired from degraded input, lexical templates or representations are defined less precisely. These fuzzier representations are not as separable in the mental lexicon, as they can only be defined in the poorer and more overlapping acoustic space of CI input. When combined with a moderately degraded signal, this could lead to similar levels of uncertainty as would a profoundly degraded signal (with good templates). In contrast, for post-lingually deafened CI users, well-defined lexical templates coupled to the same moderately degraded signal lead to less uncertainty, and consequently a different pattern of lexical activation dynamics. While this remains speculative, minimally, the differences between pre- and post-lingually deafened CI users (and between 4- and 8-channel vocoded speech) should be seen as potential variants in the form that lexical access dynamics can take under different conditions of uncertainty.

4.3 Immediate competition and lexical access

Prelingually deaf CI users and others facing severely degraded speech exhibited a dramatic delay in the onset of lexical access. This appeared to have ripple effects on the degree to which various competitors were considered, with less competition from cohort competitors and more from rhymes. The effect of degraded speech on the timing of target fixations alone seems to challenge the notion that lexical access begins immediately at word onset. But what is one to make of the differences in cohort and rhyme activation?

At the outset, one might have predicted an increase in competition more generally as a result of degraded input. Indeed, that is what was observed in adult CI users (Farris-Trimble et al., 2014), as well as in language impairment (Dollaghan, 1998; McMurray et al., 2010) and in younger typical listeners (Rigler et al., 2015; Sekerina & Brooks, 2007). This increase in competition was observed here for rhyme competitors, but cohort competitors showed reduced competition. This is likely a consequence of the extreme delays due to degraded input. CI users are very slow to begin accessing lexical candidates: by some measures, upwards of 250 msec relative to NH peers (and a similar delay was seen with vocoded speech). Thus, by the time they access the lexicon, they have heard enough information to eliminate cohorts, resulting in fewer fixations. However, no matter how long they wait, there will never be any more information to rule out rhymes. In fact, by delaying a commitment, rhymes ultimately may be more active, as they are less inhibited by targets and cohorts (which are not active initially). Thus, the asymmetry in cohort and rhyme activation could derive from this large delay in lexical access. Supporting this, CI users with more target delay (later crossovers) showed fewer peak cohort fixations (R=−.487, p=.002) and stronger peak rhyme fixations (R=.451, p=.005)³. Thus, a large delay affects not only how efficiently listeners process speech, but cascades to affect which words are considered or even recognized (as our analysis of the errors indicates).
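The bottom-up part of this account can be sketched with a toy calculation. It assumes that a candidate's support is simply the proportion of input phonemes heard so far that it matches position by position, and that a delayed listener begins lexical access only after several phonemes have arrived. The scoring rule, the rough ASCII transcriptions, and the function names are illustrative assumptions, not a model fit to these data, and the sketch omits inhibition between candidates.

```python
# Rough ASCII phoneme strings for the example words from the abstract
# (transcriptions are approximate and for illustration only).
LEXICON = {
    "sandal (target)":   ["s", "ae", "n", "d", "ah", "l"],
    "sandwich (cohort)": ["s", "ae", "n", "d", "w", "ih", "ch"],
    "candle (rhyme)":    ["k", "ae", "n", "d", "ah", "l"],
}
TARGET = LEXICON["sandal (target)"]


def match(word, n_heard):
    """Proportion of the first n_heard input phonemes that the word matches
    position by position (a toy stand-in for bottom-up support)."""
    heard = TARGET[:n_heard]
    hits = sum(1 for a, b in zip(heard, word) if a == b)
    return hits / n_heard


def peak_support(word, access_onset):
    """Highest support a word receives once lexical access has begun,
    where access_onset is the number of phonemes heard before it starts."""
    return max(match(word, n) for n in range(access_onset, len(TARGET) + 1))


if __name__ == "__main__":
    for onset in (1, 5):  # immediate vs. delayed lexical access
        for name, phonemes in LEXICON.items():
            print(f"onset={onset}  {name}: {peak_support(phonemes, onset):.2f}")
```

In this toy version, delaying access from the first to the fifth phoneme lowers peak cohort support from 1.0 to 0.8 while leaving rhyme support unchanged. The additional rhyme activation proposed above would require inhibition between candidates, which the sketch does not include.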

This appears to partially challenge a long-held tenet of spoken word recognition: immediate competition (Marslen-Wilson, 1987; McClelland & Elman, 1986). Much of the early evidence for immediacy derives from the parallel consideration of cohort competitors (Marslen-Wilson & Zwitserlood, 1989; Zwitserlood, 1989). This holds across many methods, and a wide variety of populations. Cohort activation is considered strong evidence for immediacy because it derives from the temporary ambiguity at word onset: if people are making immediate lexical commitments, they cannot avoid activating cohorts (since there is no information to rule them out). Here, however, we see a reduction in cohort activation, the key evidence of immediacy. This is not to say that the participants in either experiment exhibit a complete wait-and-see strategy. In both experiments, cohorts and rhymes are fixated more than unrelated objects, and cohort and rhyme competitors clearly display a different timecourse of fixations from each other. If listeners were exhibiting an extreme version of wait-and-see, these competitors should be only minimally active, as by the time lexical access begins there should be little uncertainty as to the correct word. Nonetheless, the reduction in cohort activity and delay in target activity argue that listeners in these experiments are not immediately activating lexical candidates from word onset, a departure from the typical profile. This raises the possibility that immediacy is not an obligatory way to deal with temporally unfolding input.

These results thus offer a friendly challenge to current models of adult lexical processing (which were not intended to account for degraded input). However, it is as yet unclear how they will need to be modified to handle this kind of processing. We see three avenues for potential extension of these models.

First, we must also consider the possibility that such effects are a functional consequence of differences in the reliability of acoustic cues (for a given listener). CIs systematically distort the input, reducing the availability of fine-grained frequency differences, and eliminating the fundamental frequency, while preserving much of the amplitude envelope. Such differences could make particular phoneme contrasts or phonemes in particular positions of a word less reliable for discriminating lexical candidates. Faced with a developmental history of this, children could downweight information at certain points in the word. If they did this at word onset, this would lead to slower initial activation growth (while they wait for relatively more reliable information later).

This is not something we can rule out; at present, however, there is little evidence supporting a reliability account. Our study purposely used a wide range of consonants and vowels in order to isolate higher level differences from particular phonemes; as a result, any effects of cue-reliability would have to be at the level of the position within syllable, and in particular would require less reliable information at word onset. However, there is only mixed evidence that information transmitted by CIs is less reliable for onset consonants than for other positions. Dorman, Dankowski, McCandless, Parkin, and Smith (1991) showed less than 1% difference in accuracy for vowels and word-medial consonants (and Shannon et al. (1995) show similar effects in simulated speech). In contrast, Donaldson and Kreft (2006) show a large 14% difference between onset consonants and vowels, but only a 4% difference between onset and medial consonants. However, both studies were conducted with adults, and neither statistically compared performance as a function of syllable position, in part because chance level performance differs among positions, so it is not known whether these differences are reliable. Moreover, it is unlikely that the effects observed here are solely driven by a greater reliability for vowels, as CI users waited over 200 msec to begin lexical access in this study, well past the vowel and into the later consonants.

The other reason to disfavor a cue reliability account is that these effects were not seen in post-lingually deaf CI users with a highly similar form of degradation (Farris-Trimble et al., 2014). Given that typical listeners can adapt to differences in cue reliability within a few hours or less (Bradlow & Bent, 2008; Clayards, Tanenhaus, Aslin, & Jacobs, 2008), these listeners (with at least two years of CI experience) should have also shown the effect. Yet they appeared to adapt quite differently to their CI, with a shorter delay in processing and heightened competition from both cohort and rhyme competitors. Nonetheless, we cannot entirely rule out a reliability account. Crucially, however, such an account would also require further modification to models of spoken word recognition. Such models do not incorporate the ability to weight information at different positions in the syllable, and this would clearly be needed to account for the results seen here in this framework.

Second, a perhaps more parsimonious account of the effects observed here is that they derive from a tuning of the parameters that govern the dynamics of lexical competition. The fact that these competition processes develop (Rigler et al., 2015; Sekerina & Brooks, 2007) and can be mistuned in impairment (Coady, Evans, Mainela-Arnold, & Kluender, 2007; Dollaghan, 1998; McMurray et al., 2014; McMurray et al., 2010) suggests that lexical activation dynamics do not just passively reflect the input, but are actively tuned to manage temporary ambiguity. Consequently, parameters of this process may be adapted to deal with uncertainty. As we’ve suggested here, this could be done simply by slowing target activation (with consequent changes throughout the process). However, it could also be advantageous to deliberately maintain activation for certain classes of competitors in case of a mistake (Ben-David et al., 2011; Clopper & Walker, in press; McMurray et al., 2009), although this account alone does not predict why this would be the case for rhymes but not cohorts. And recent work suggests that lexical inhibition between words is also plastic and can be adapted within an hour (Kapnoula & McMurray, 2016). Such changes or a constellation of such changes could represent an adaptive response to the extreme uncertainty here, perhaps helping children (or adults faced with high uncertainty) reduce a potentially overwhelming amount of competition.